| repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |

|---|---|---|---|---|---|---|---|---|---|---|---|

Lightning-AI/pytorch-lightning

|

deep-learning

| 19,981

|

[fabric.example.rl] Not support torch.float64 for MPS device

|

### Bug description

I found an error when running the example `pytorch-lightning/examples/fabric/reinforcement_learning` on an M2 Mac (device type = mps).

### Reproduce Error

```
reinforcement_learning git:(master) ✗ fabric run train_fabric.py
W0617 12:53:22.541000 8107367488 torch/distributed/elastic/multiprocessing/redirects.py:27] NOTE: Redirects are currently not supported in Windows or MacOs.
[rank: 0] Seed set to 42
Missing logger folder: logs/fabric_logs/2024-06-17_12-53-24/CartPole-v1_default_42_1718596404
set default torch dtype as torch.float32
Traceback (most recent call last):
  File "/Users/user/workspace/pytorch-lightning/examples/fabric/reinforcement_learning/train_fabric.py", line 215, in <module>
    main(args)
  File "/Users/user/workspace/pytorch-lightning/examples/fabric/reinforcement_learning/train_fabric.py", line 154, in main
    rewards[step] = torch.tensor(reward, device=device).view(-1)
                    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
TypeError: Cannot convert a MPS Tensor to float64 dtype as the MPS framework doesn't support float64. Please use float32 instead.
```

This bug can be fixed by checking `device.type` and casting `reward` to `torch.float32`:

```diff
@@ -146,7 +146,7 @@ def main(args: argparse.Namespace):
             # Single environment step
             next_obs, reward, done, truncated, info = envs.step(action.cpu().numpy())
             done = torch.logical_or(torch.tensor(done), torch.tensor(truncated))
-            rewards[step] = torch.tensor(reward, device=device).view(-1)
+            rewards[step] = torch.tensor(reward, device=device, dtype=torch.float32 if device.type == 'mps' else None).view(-1)
```
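An alternative to branching on `device.type` is to cast the reward to float32 on the NumPy side, before it ever reaches the device. This is a minimal sketch, assuming (as the traceback suggests) that `envs.step` returns `reward` as a float64 NumPy array and that the `rewards` buffer uses the float32 default dtype; `as_float32_rewards` is a hypothetical helper, not part of the example script.

```python
import numpy as np


def as_float32_rewards(reward) -> np.ndarray:
    """Cast vectorized-env rewards to a flat float32 array.

    MPS cannot hold float64 tensors, so converting on the host first lets the
    resulting array be moved to CPU, CUDA or MPS without a dtype error.
    """
    # np.asarray avoids a copy when the input is already float32.
    return np.asarray(reward, dtype=np.float32).reshape(-1)


if __name__ == "__main__":
    step_reward = np.array([1.0, 1.0, 0.0])  # float64, as in the traceback
    flat = as_float32_rewards(step_reward)
    print(flat.dtype, flat.shape)  # float32 (3,)
```

Inside the loop this would become `rewards[step] = torch.as_tensor(as_float32_rewards(reward), device=device)`. It behaves the same on every device, at the cost of always storing rewards in single precision.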

### What version are you seeing the problem on?

master

### Environment

<details><summary>Current environment</summary>

```
#- Lightning Component (e.g. Trainer, LightningModule, LightningApp, LightningWork, LightningFlow):
#- PyTorch Lightning Version (e.g., 1.5.0): 2.3.0
#- Lightning App Version (e.g., 0.5.2): 2.3.0
#- PyTorch Version (e.g., 2.0): 2.3.1
#- Python version (e.g., 3.9): 3.12.3
#- OS (e.g., Linux): Mac
#- CUDA/cuDNN version: MPS
#- GPU models and configuration: M2
#- How you installed Lightning(`conda`, `pip`, source):
#- Running environment of LightningApp (e.g. local, cloud):
```

</details>

|

closed

|

2024-06-17T04:13:05Z

|

2024-06-21T14:36:12Z

|

https://github.com/Lightning-AI/pytorch-lightning/issues/19981

|

[

"bug",

"example",

"ver: 2.2.x"

] |

swyo

| 0

|

ultralytics/yolov5

|

machine-learning

| 13,389

|

how to reduce false positives in yolov5

|

### Search before asking

- [X] I have searched the YOLOv5 [issues](https://github.com/ultralytics/yolov5/issues) and [discussions](https://github.com/ultralytics/yolov5/discussions) and found no similar questions.

### Question

Hello everyone,

I'm currently training YOLOv5s to detect three objects: phone, cigarette, and vape. My original dataset contained 9,000 images, with 3,000 images for each class. After training the model for 100 epochs, I've noticed a high number of false positives.

To address this, I've added 3,000 negative images (images that don't contain any of the target objects) to the dataset. I've also experimented with adjusting the conf_thres and iou_thres settings a bit. I plan to train the model for more epochs in the future.

Are there any additional strategies or techniques you recommend to further reduce the number of false positives? Any insights would be greatly appreciated!

Thanks in advance.

### Additional

Training info:

`--epochs 100 --img-size 640 --batch-size 16 --optimizer SGD --cache ram --hyp /content/yolov5/data/hyps/hyp.scratch-low.yaml`

The `hyp.scratch-low.yaml` file is left at its default values.
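One post-processing option that complements a single global `conf_thres` is a separate confidence threshold per class, so a class that produces many false positives can be filtered more strictly than the others. A minimal sketch, assuming detections in the YOLOv5 `[x1, y1, x2, y2, conf, cls]` row layout (as in `results.xyxy[0]` from a PyTorch Hub model, converted to NumPy) and the class order 0=phone, 1=cigarette, 2=vape; the threshold values are placeholders to tune on a validation set, not recommendations.

```python
import numpy as np

# Hypothetical per-class thresholds: class index -> minimum confidence.
CLASS_CONF = {0: 0.40, 1: 0.55, 2: 0.60}


def filter_by_class_conf(dets: np.ndarray, class_conf: dict, default: float = 0.25) -> np.ndarray:
    """Keep rows of an (N, 6) [x1, y1, x2, y2, conf, cls] array whose confidence
    meets the threshold for their class (falling back to `default`)."""
    if dets.size == 0:
        return dets.reshape(0, 6)
    classes = dets[:, 5].astype(int)
    thresholds = np.array([class_conf.get(c, default) for c in classes])
    return dets[dets[:, 4] >= thresholds]


if __name__ == "__main__":
    dets = np.array([
        [10, 10, 50, 50, 0.45, 0],  # phone: kept (0.45 >= 0.40)
        [20, 20, 60, 60, 0.50, 2],  # vape: dropped (0.50 < 0.60)
        [30, 30, 70, 70, 0.70, 1],  # cigarette: kept
    ])
    print(filter_by_class_conf(dets, CLASS_CONF)[:, 5])  # [0. 1.]
```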

|

open

|

2024-10-28T17:26:18Z

|

2024-11-09T01:07:51Z

|

https://github.com/ultralytics/yolov5/issues/13389

|

[

"question",

"detect"

] |

yAlqubati

| 2

|

zappa/Zappa

|

flask

| 434

|

[Migrated] Deploy and Update from Local and S3-Hosted Zip Files

|

Originally from: https://github.com/Miserlou/Zappa/issues/1128 by [Miserlou](https://github.com/Miserlou)

A few people have requested the ability to do something like:

`zappa deploy dev --zip localfile.zip`

and similarly:

`zappa update dev --zip s3://my_bucket/package.zip`

Could be handy.
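The requested `--zip` flag would need to tell a local archive apart from one already stored in S3. A minimal sketch of that dispatch using only the standard library; `parse_zip_source` and `ZipSource` are hypothetical names, not part of Zappa's CLI.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlparse


@dataclass
class ZipSource:
    kind: str                    # "s3" or "local"
    path: str                    # object key for S3, filesystem path otherwise
    bucket: Optional[str] = None


def parse_zip_source(value: str) -> ZipSource:
    """Classify a --zip argument as an S3 object or a local file."""
    parsed = urlparse(value)
    if parsed.scheme == "s3":
        key = parsed.path.lstrip("/")
        if not parsed.netloc or not key:
            raise ValueError(f"expected s3://bucket/key, got {value!r}")
        return ZipSource(kind="s3", bucket=parsed.netloc, path=key)
    # Anything without an s3:// scheme is treated as a local path.
    return ZipSource(kind="local", path=value)
```

For example, `parse_zip_source("s3://my_bucket/package.zip")` yields bucket `my_bucket` and key `package.zip`, while `parse_zip_source("localfile.zip")` is treated as a local file.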

|

closed

|

2021-02-20T08:32:53Z

|

2024-04-13T16:17:45Z

|

https://github.com/zappa/Zappa/issues/434

|

[

"enhancement",

"help wanted",

"hacktoberfest",

"no-activity",

"auto-closed"

] |

jneves

| 2

|

microsoft/nni

|

deep-learning

| 5,719

|

Enhancement of GBDTSelector inherited from FeatureSelector

|

<!-- Please only use this template for submitting enhancement requests -->

**What would you like to be added**:

The `FeatureSelector` class is written in a preliminary form, as the following referenced code snippet shows:

https://github.com/microsoft/nni/blob/767ed7f22e1e588ce76cbbecb6c6a4a76a309805/nni/feature_engineering/feature_selector.py#L26-L34

I think `GBDTSelector` does not need to inherit from `FeatureSelector`, since it rewrites all of the class methods and does not inherit `FeatureSelector`'s attributes either.

https://github.com/microsoft/nni/blob/767ed7f22e1e588ce76cbbecb6c6a4a76a309805/nni/algorithms/feature_engineering/gbdt_selector/gbdt_selector.py#L35

In addition, `GBDTSelector` uses the `train_test_split` function from scikit-learn, and I was wondering whether a validation set should be used to improve `fit` ([as I did in my own training loop, evaluating on validation data for early stopping](https://github.com/linjing-lab/easy-pytorch/blob/9651774dcc4581104f914980baf2ebc05f96fd85/released_box/perming/_utils.py#L382)):

https://github.com/microsoft/nni/blob/767ed7f22e1e588ce76cbbecb6c6a4a76a309805/nni/algorithms/feature_engineering/gbdt_selector/gbdt_selector.py#L86-L89

**Why is this needed**:

`GBDTSelector` is the subclass that inherits from `FeatureSelector`, but the inheritance is not meaningful because all of the subclass's attributes and methods are overridden.

**Without this feature, how does current nni work**:

`GBDTSelector` works in NNI through its own `fit` method and [`get_selected_features` method](https://github.com/microsoft/nni/blob/767ed7f22e1e588ce76cbbecb6c6a4a76a309805/nni/algorithms/feature_engineering/gbdt_selector/gbdt_selector.py#L102), not through methods provided by the `FeatureSelector` class.

**Components that may involve changes**:

Add `super(GBDTSelector, self).__init__` to the [initialization of GBDTSelector](https://github.com/microsoft/nni/blob/767ed7f22e1e588ce76cbbecb6c6a4a76a309805/nni/algorithms/feature_engineering/gbdt_selector/gbdt_selector.py#L37), or drop the `FeatureSelector` base class if the inherited attributes are not important, given that the subclass rewrites all of the methods instead of extending them.

**Brief description of your proposal if any**:

The attributes and methods defined in `FeatureSelector` may not contribute to the logic of any method in `GBDTSelector`.
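The first option could look roughly like the sketch below. The base class is a simplified stand-in for `FeatureSelector` (its attribute names are assumptions, not copied from NNI), the `topk` keyword is hypothetical, and the hard-coded ranking stands in for the LightGBM importance computation that the real `GBDTSelector.fit` performs. `super().__init__(**kwargs)` is the Python 3 spelling of `super(GBDTSelector, self).__init__(**kwargs)`.

```python
class FeatureSelector:
    """Simplified stand-in for NNI's base class (attribute names assumed)."""

    def __init__(self, **kwargs):
        self.selected_features_ = None
        self.X = None
        self.y = None

    def fit(self, X, y, **kwargs):
        # Shared bookkeeping that subclasses can reuse via super().
        self.X = X
        self.y = y

    def get_selected_features(self):
        return self.selected_features_


class GBDTSelector(FeatureSelector):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)  # inherit the base attributes
        self.feature_importance = None

    def fit(self, X, y, **kwargs):
        super().fit(X, y)  # reuse the base bookkeeping instead of rewriting it
        # Placeholder ranking: pretend earlier columns are more important, so the
        # example stays dependency-free. `topk` is a hypothetical keyword.
        n_features = len(X[0])
        self.feature_importance = list(range(n_features, 0, -1))
        topk = kwargs.get("topk", n_features)
        self.selected_features_ = list(range(topk))
```

Here `get_selected_features` is inherited rather than rewritten, which is the kind of reuse that would make the inheritance meaningful.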

|

open

|

2023-12-04T03:25:52Z

|

2023-12-04T03:27:24Z

|

https://github.com/microsoft/nni/issues/5719

|

[] |

linjing-lab

| 0

|

ultrafunkamsterdam/undetected-chromedriver

|

automation

| 1,191

|

Can't login on my Google account

|

Up to version 3.2.0, undetected_chromedriver works perfectly, but on newer versions it doesn't. This is always in headful mode.

|

open

|

2023-04-12T12:58:31Z

|

2023-05-02T22:01:31Z

|

https://github.com/ultrafunkamsterdam/undetected-chromedriver/issues/1191

|

[] |

FRIKIdelTO

| 6

|

dpgaspar/Flask-AppBuilder

|

rest-api

| 2,010

|

Select2.js auto blink is not working

|

In the Select2 widgets, when I open the drop-down, the cursor does not blink in the search input below it; it only does when I click into the search input manually. The previous version worked fine.

Select2.js

|

closed

|

2023-04-02T14:06:48Z

|

2023-04-09T11:56:48Z

|

https://github.com/dpgaspar/Flask-AppBuilder/issues/2010

|

[] |

Alex-7999

| 1

|

waditu/tushare

|

pandas

| 1,651

|

Financial report data updated incorrectly

|

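Today is May 1, so in principle all first-quarter and annual report data should already have been updated.

Today I fetched Midea Group's income statement with `pro.income(ts_code='000333.SZ', start_date='20210101', end_date='20220430')`.

The 2022 Q1 report is there, but the 2021 annual report cannot be found: in the returned data, `end_date` jumps directly from 20210930 to 20220331, so the 20211231 annual report is missing. Here is the Python output: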

f_ann_date end_date

0 20220430 20220331

1 20211030 20210930

2 20210831 20210630

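However, when I fetch Midea Group's balance sheet and cash flow statement with `pro.balancesheet(ts_code='000333.SZ', start_date='20210101', end_date='20220430')` and `pro.cashflow(ts_code='000333.SZ', start_date='20210101', end_date='20220430')`, the annual report data is present. I also tried several companies that published their annual reports on April 30, and their income statement data is complete as well.

The data must have gotten mixed up.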

<img width="389" alt="tsbug Midea financial report" src="https://user-images.githubusercontent.com/104714818/166139915-0b9da2c4-2730-4062-a38f-8da678a160be.PNG">
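A gap like this can be detected automatically by checking the returned `end_date` values for missing quarter-ends. The sketch is plain Python and independent of tushare; `missing_report_periods` is a hypothetical helper, and it assumes the company reports quarterly with periods ending on 03-31, 06-30, 09-30 and 12-31.

```python
from datetime import date

# Quarter-end (month, day) pairs used as report periods.
QUARTER_ENDS = ((3, 31), (6, 30), (9, 30), (12, 31))


def missing_report_periods(end_dates):
    """Return quarter-end dates (YYYYMMDD) that fall between the earliest and
    latest `end_date` but are absent from the data."""
    present = set(end_dates)  # duplicates (e.g. revised reports) collapse here
    if not present:
        return []
    parsed = sorted(date(int(d[:4]), int(d[4:6]), int(d[6:8])) for d in present)
    first, last = parsed[0], parsed[-1]
    missing = []
    for year in range(first.year, last.year + 1):
        for month, day in QUARTER_ENDS:
            period = date(year, month, day)
            key = period.strftime("%Y%m%d")
            if first <= period <= last and key not in present:
                missing.append(key)
    return missing


if __name__ == "__main__":
    # end_date values from the income statement output above
    print(missing_report_periods(["20220331", "20210930", "20210630"]))  # ['20211231']
```

With tushare, the input would be something like `pro.income(ts_code='000333.SZ', start_date='20210101', end_date='20220430')['end_date'].tolist()`.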

|

open

|

2022-05-01T09:21:54Z

|

2022-05-01T09:41:20Z

|

https://github.com/waditu/tushare/issues/1651

|

[] |

Yellowman9

| 1

|

itamarst/eliot

|

numpy

| 243

|

MemoryLogger.validate mutates the contents of logged dictionaries

|

In particular, serialization does this. Validation really ought to be free of side effects!
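One way to get side-effect-free validation is to serialize a deep copy instead of the logged dictionary itself. This is a sketch of the idea, not Eliot's code: `validate_without_side_effects` and the toy serializer are hypothetical, and deep-copying every message has a cost for large payloads.

```python
import copy


def validate_without_side_effects(message: dict, serialize) -> None:
    """Run a (possibly mutating) serializer on a deep copy of `message`.

    Any exception raised by `serialize` still propagates, so validation fails
    loudly, but the caller's dictionary is left exactly as it was logged.
    """
    serialize(copy.deepcopy(message))


if __name__ == "__main__":
    def mutating_serializer(msg):
        # Stand-in for a serializer that rewrites values in place.
        msg["timestamp"] = str(msg["timestamp"])

    logged = {"timestamp": 1449004459.0, "action_type": "example"}
    validate_without_side_effects(logged, mutating_serializer)
    print(type(logged["timestamp"]).__name__)  # float
```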

|

open

|

2015-12-01T21:14:19Z

|

2018-09-22T20:59:21Z

|

https://github.com/itamarst/eliot/issues/243

|

[] |

itamarst

| 0

|

nonebot/nonebot2

|

fastapi

| 2,549

|

Plugin: BA gacha simulator

|

### PyPI project name

nonebot-plugin-badrawcard

### Plugin import package name

nonebot_plugin_BAdrawcard

### Tags

[]

### Plugin configuration options

_No Response_

|

closed

|

2024-01-26T08:46:31Z

|

2024-01-29T02:39:22Z

|

https://github.com/nonebot/nonebot2/issues/2549

|

[

"Plugin"

] |

lengmianzz

| 6

|

raphaelvallat/pingouin

|

pandas

| 13

|

Add 95% CI for ttest function

|

See the Gitter chat.
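For reference, the usual 95% confidence interval for the difference of two independent means under Student's t-test (pooled variance, α = 0.05) is given below. A Welch variant would replace the pooled standard error and the degrees of freedom with the Welch-Satterthwaite versions; which variant the function should report is left open by the request.

```latex
\mathrm{CI}_{95\%} = (\bar{x}_1 - \bar{x}_2) \pm t_{0.975,\, n_1 + n_2 - 2}\; s_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}},
\qquad
s_p^2 = \frac{(n_1 - 1)\, s_1^2 + (n_2 - 1)\, s_2^2}{n_1 + n_2 - 2}
```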

|

closed

|

2019-04-08T16:09:24Z

|

2019-04-22T16:16:48Z

|

https://github.com/raphaelvallat/pingouin/issues/13

|

[

"feature request :construction:"

] |

raphaelvallat

| 1

|

onnx/onnx

|

scikit-learn

| 6,536

|

[Feature request] operator Conv has no example with a bias in the backend test

|

### System information

All

### What is the problem that this feature solves?

Robustness.

### Alternatives considered

_No response_

### Describe the feature

_No response_

### Will this influence the current api (Y/N)?

No

### Feature Area

_No response_

### Are you willing to contribute it (Y/N)

Yes

### Notes

_No response_
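A backend test case for this would pass the optional third input `B` to `Conv` (for example via `onnx.helper.make_node("Conv", inputs=["x", "W", "B"], outputs=["y"])`) and compare against a reference output. A naive NumPy reference for the simplest configuration is sketched below. It assumes NCHW layout, stride 1, no padding, no dilation and `group=1`, so it does not cover the other `Conv` attributes.

```python
import numpy as np


def conv2d_with_bias(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Reference Conv: NCHW input, (M, C, kH, kW) weights, (M,) bias.

    Assumes stride 1, no padding, no dilation and group=1.
    """
    n, c, h, width = x.shape
    m, c_w, kh, kw = w.shape
    if c != c_w:
        raise ValueError("input channels and weight channels differ")
    out_h, out_w = h - kh + 1, width - kw + 1
    y = np.zeros((n, m, out_h, out_w), dtype=np.result_type(x, w, b))
    for i in range(out_h):
        for j in range(out_w):
            patch = x[:, :, i:i + kh, j:j + kw]  # (N, C, kH, kW)
            # Contract over C, kH, kW -> (N, M)
            y[:, :, i, j] = np.tensordot(patch, w, axes=([1, 2, 3], [1, 2, 3]))
    # Bias is added once per output channel, broadcast over N, H and W.
    return y + b.reshape(1, m, 1, 1)
```

With all-ones input and weights, each output value is the kernel area plus that channel's bias, which makes a bias bug easy to spot.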

|

open

|

2024-11-07T10:22:43Z

|

2024-11-07T10:22:43Z

|

https://github.com/onnx/onnx/issues/6536

|

[

"topic: enhancement"

] |

xadupre

| 0

|

sczhou/CodeFormer

|

pytorch

| 332

|

Video Enhancement, Can it run in parallel?

|

Nice work!

For video enhancement, can the image processing run in parallel?
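Since video enhancement processes frames independently, the frames can in principle be dispatched concurrently as long as the results are reassembled in order. A sketch with a thread pool, where `enhance` is a hypothetical per-frame function (for example a wrapper around the face-restoration call). Whether threads actually help depends on the model releasing the GIL and on GPU memory; a single GPU may end up serializing the work anyway.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, TypeVar

Frame = TypeVar("Frame")


def enhance_frames(frames: Iterable[Frame], enhance: Callable[[Frame], Frame], workers: int = 4) -> List[Frame]:
    """Apply `enhance` to every frame concurrently, returning results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map yields results in the order of the inputs, which keeps
        # the video's frame sequence intact regardless of completion order.
        return list(pool.map(enhance, frames))
```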

|

open

|

2023-12-14T03:17:32Z

|

2023-12-14T03:17:49Z

|

https://github.com/sczhou/CodeFormer/issues/332

|

[] |

jackyin68

| 0

|

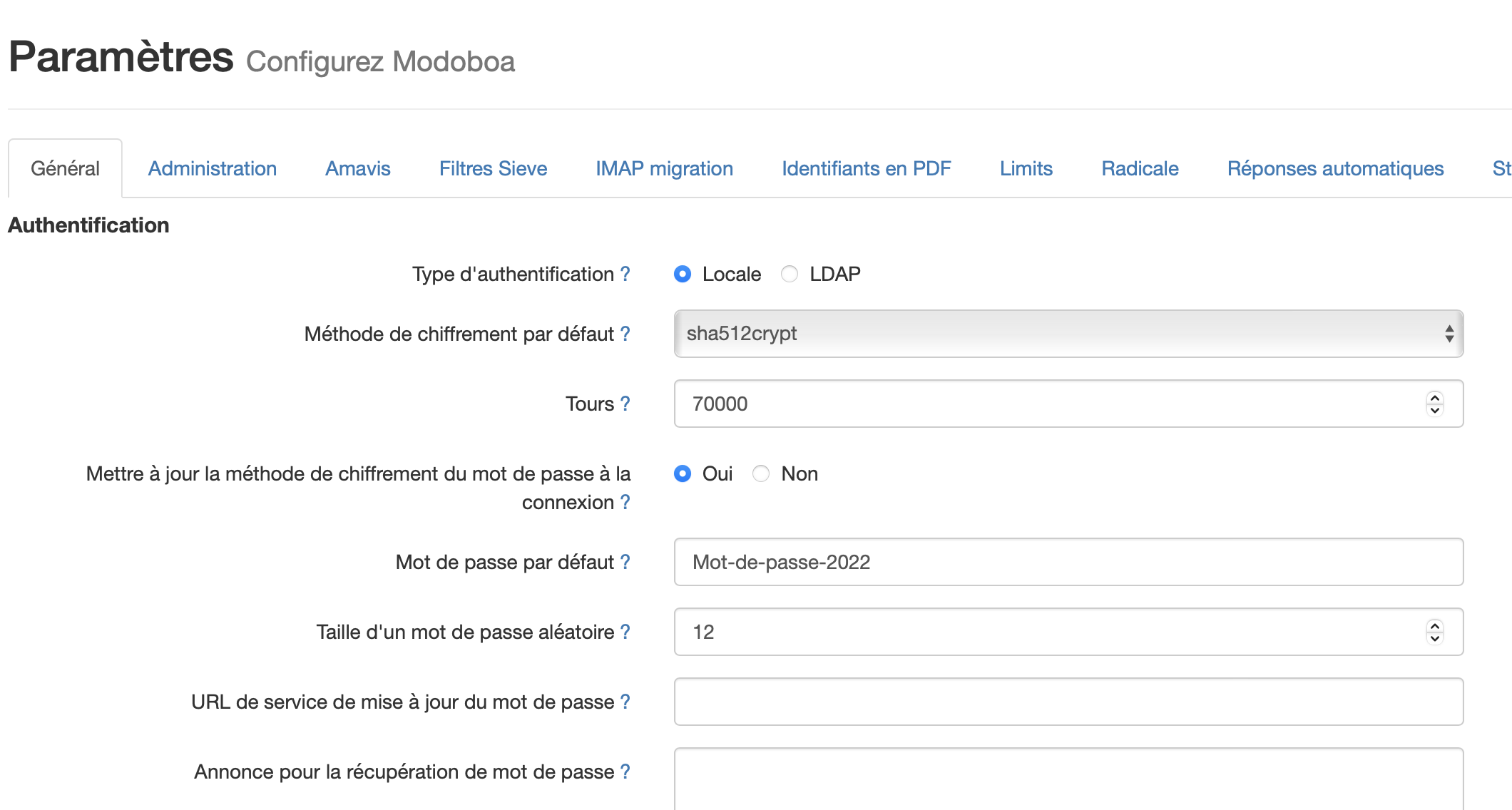

modoboa/modoboa

|

django

| 2,623

|

Admins input fields empty in GUI v2.

|

# Impacted versions

* OS Type: Debian

* OS Version: bullseye

* Database Type: PostgreSQL

* Database version: 13.8-0+deb11u1

* Modoboa: 2.0.2

* installer used: Yes

* Webserver: Nginx

* Navigator: Safari OSX, Chrome OSX

# Current behavior

Hello,

In the v2 interface, all input fields are empty: they are not populated with the values from the database, as they are in the v1 interface.

|

closed

|

2022-10-01T07:17:21Z

|

2023-02-23T15:00:13Z

|

https://github.com/modoboa/modoboa/issues/2623

|

[

"feedback-needed"

] |

stefaweb

| 8

|

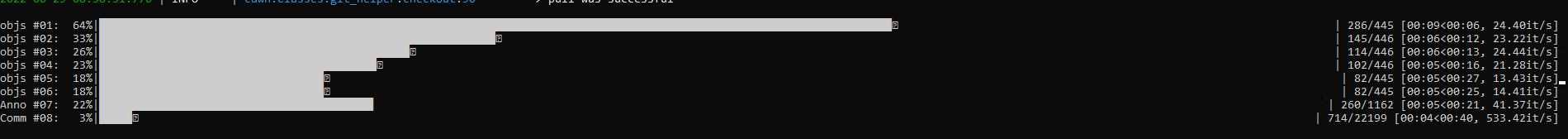

tqdm/tqdm

|

pandas

| 1,336

|

Visual statusbar finish error for multiprocessed tasks

|

I use:

4.64.0 3.9.7 | packaged by conda-forge | (default, Sep 23 2021, 07:24:41) [MSC v.1916 64 bit (AMD64)] win32

While the work is running, everything works:

But then every process that finishes writes its final status bar at the wrong position. This leads to:

As far as I understand, the cause is these lines:

https://github.com/tqdm/tqdm/blob/master/tqdm/std.py#L1315-L1317

It should be:

```python
# leave the finished status bar at the position where it was
self.display(pos=pos)
fp_write('\r')
```

I started with:

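```python
from functools import partial
from multiprocessing import Pool, RLock, freeze_support
from tqdm import tqdm

freeze_support()
tqdm.set_lock(RLock())
with Pool(initializer=tqdm.set_lock, initargs=(tqdm.get_lock(),), processes=8) as p:
    p.map(partial(Historizer._parallel), tasks)
```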

Then I do:

```python
for obj in tqdm(object_set.fetchmany(100_000), desc=f'objs #{identifier:02d}', position=identifier):
    ...  # process obj
```

|

open

|

2022-06-29T07:13:34Z

|

2023-08-25T18:34:59Z

|

https://github.com/tqdm/tqdm/issues/1336

|

[] |

MrJack91

| 2

|

vaexio/vaex

|

data-science

| 1,552

|

[BUG-REPORT] Forbidden symbols in column names should raise warning?

|

Hi,

**Description**

When trying to drop a column that was used in intermediate calculations, I got the error below.

I eventually found that the bug only occurs when the original column name contains certain symbols.

```python
import vaex as vx
import pandas as pd

col = 'init#1'
pdf = pd.DataFrame({col: [2, 8, 20, 3, 17]})
vdf = vx.from_pandas(pdf)

# Temporary column used for intermediate calculations.
vdf['truc'] = vdf[col]*2
vdf[col] = vdf['truc']

# Drop temporary column.
vdf.drop(columns=['truc'], inplace=True)

# Show result.
vdf
```

```
NameError: name 'truc' is not defined

Out[55]:
#    init#1
0    error
1    error
2    error
3    error
4    error
```

But with `col='init_1'`, it works.

I tested all symbols, and only `_` is allowed (does not raise an error).

I propose raising a warning when a symbol is used in a column name, so that the user knows further calculations may not be possible.

The error message is not very clear (when dropping a column). Only after reducing the problem to a minimal example with a short column name did I get rid of the error and realize it was caused by the column name.
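The proposed warning could be approximated by flagging names that are not valid Python identifiers, which matches the observation that `_` is the only symbol that works. This is a hypothetical helper, not a Vaex API; Vaex's real rules for expression-safe names may differ in details, and keywords are flagged here only as a precaution.

```python
import keyword
import warnings


def warn_on_unsafe_column_names(columns):
    """Warn about column names that are not valid Python identifiers.

    Returns the offending names so callers can rename them up front.
    """
    unsafe = [c for c in columns if not c.isidentifier() or keyword.iskeyword(c)]
    for name in unsafe:
        warnings.warn(
            f"column name {name!r} is not a valid identifier; "
            "expressions that reference it may fail",
            UserWarning,
            stacklevel=2,
        )
    return unsafe
```

Calling `warn_on_unsafe_column_names(pdf.columns)` before `vx.from_pandas(pdf)` would flag `'init#1'` in the example above.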

**Software information**

- Vaex version (`import vaex; vaex.__version__`):

{'vaex-core': '4.3.0.post1',

'vaex-viz': '0.5.0',

'vaex-hdf5': '0.8.0',

'vaex-server': '0.5.0',

'vaex-astro': '0.8.2',

'vaex-jupyter': '0.6.0',

'vaex-ml': '0.12.0'}

- Vaex was installed via: conda-forge

- OS: Ubuntu 20.04

|

open

|

2021-08-29T16:46:13Z

|

2021-09-11T18:59:53Z

|

https://github.com/vaexio/vaex/issues/1552

|

[] |

yohplala

| 1

|

cleanlab/cleanlab

|

data-science

| 611

|

Missing link in documentation

|

The documentation page https://docs.cleanlab.ai/stable/index.html has a link for the "label errors" that cleanlab found, but that link points to https://labelerrors.com/, which doesn't seem to be a working site. I'm assuming there is supposed to be a different link here.

|

closed

|

2023-01-30T23:23:28Z

|

2023-03-13T22:04:01Z

|

https://github.com/cleanlab/cleanlab/issues/611

|

[

"needs triage"

] |

jss367

| 2

|

jina-ai/serve

|

machine-learning

| 6,185

|

Jina-ai API unresponsive for Amazon product URLs

|

Jina-ai API is not returning any content when provided with Amazon product URLs, while it works correctly for other websites.

Please advise on potential causes or solutions. Let me know if you need more information.

Returned data:

```

Title: 503 - Service Unavailable Error

URL Source: https://www.amazon.com/Portable-Mechanical-Keyboard-MageGee-Backlit/dp/B098LG3N6R/ref=sr_1_1?_encoding=UTF8&content-id=amzn1.sym.12129333-2117-4490-9c17-6d31baf0582a&dib=eyJ2IjoiMSJ9.xPISJOYMxoc_9dHbx858f1JwKKN8MOEI6pxe0RfkUq5-YoBt2WvHxwQ2JfTjMUHM7KhYH9-CViR_7Mu_sdA9fTtlO6upY81XXLsTCcvQrQd21jMTrrvCPcFNCLu32ovECyUqHJP9do03wDM8Jfj5VMCYBB8Dvkbf3evLyK9vgRnNe1jvnki39RmDw-qvRsAhlUUtDgkkeS6MWfNYIM70Vz83mL8jXD44sShexO4WSU4.znblRHErp_k4_mgDDvhFgjVPe6jUfQ9iO6KvL_-AHyI&dib_tag=se&keywords=gaming+keyboard&pd_rd_r=00494241-850d-40b9-a741-3b34a5027b69&pd_rd_w=zOk93&pd_rd_wg=QZ3N1&pf_rd_p=12129333-2117-4490-9c17-6d31baf0582a&pf_rd_r=0ST68QYVQESTVXXG751C&qid=1723040389&sr=8-1

Markdown Content:

503 - Service Unavailable Error

===============

[](https://www.amazon.com/)

[](https://www.amazon.com/ref=cs_503_link/)

[](https://www.amazon.com/dogsofamazon)

```

|

closed

|

2024-08-07T14:24:21Z

|

2024-11-06T07:11:40Z

|

https://github.com/jina-ai/serve/issues/6185

|

[

"Stale"

] |

suysoftware

| 4

|

taverntesting/tavern

|

pytest

| 26

|

Publishing MQTT message(s) before commencing test

|

How do you think this should work @michaelboulton? Can you come up with something and implement it with some examples in the docs site?

|

closed

|

2018-02-07T08:41:23Z

|

2018-02-22T16:26:46Z

|

https://github.com/taverntesting/tavern/issues/26

|

[

"Type: Enhancement"

] |

benhowes

| 7

|

cvat-ai/cvat

|

tensorflow

| 8,673

|

Keybinds in UI allow drawing disabled shape types

|

### Actions before raising this issue

- [X] I searched the existing issues and did not find anything similar.

- [X] I read/searched [the docs](https://docs.cvat.ai/docs/)

### Steps to Reproduce

1. Create a task with 1 label, points type

2. Open Single Shape mode

3. Open Standard mode

4. Press N - bbox drawing will start

### Expected Behavior

_No response_

### Possible Solution

_No response_

### Context

_No response_

### Environment

_No response_

|

closed

|

2024-11-08T17:34:46Z

|

2024-11-13T12:44:04Z

|

https://github.com/cvat-ai/cvat/issues/8673

|

[

"bug",

"ui/ux"

] |

zhiltsov-max

| 0

|

piskvorky/gensim

|

data-science

| 2,872

|

Broken file link in `run_corpora_and_vector_spaces` tutorial

|

#### Problem description

The `run_corpora_and_vector_spaces.ipynb` tutorial depends on a file on the web, and that file is missing.

#### Steps/code/corpus to reproduce

See https://groups.google.com/g/gensim/c/nX4lc8j0ZO0

#### Versions

Please provide the output of:

```python
import platform; print(platform.platform())
import sys; print("Python", sys.version)
import numpy; print("NumPy", numpy.__version__)
import scipy; print("SciPy", scipy.__version__)
import gensim; print("gensim", gensim.__version__)
from gensim.models import word2vec; print("FAST_VERSION", word2vec.FAST_VERSION)
```

Unknown (probably any).

|

closed

|

2020-07-05T07:24:23Z

|

2021-06-06T13:50:18Z

|

https://github.com/piskvorky/gensim/issues/2872

|

[

"bug",

"documentation",

"difficulty easy"

] |

piskvorky

| 6

|

ultrafunkamsterdam/undetected-chromedriver

|

automation

| 1,691

|

Setting Proxy with Authentication on ChromeDriver in Selenium 4.5

|

Hello,

I am using Selenium version 4.5, and I would like to inquire about setting up a proxy with authentication (username and password) on a custom ChromeDriver using the "undetected-chromedriver" library.

|

open

|

2023-12-07T17:06:04Z

|

2024-02-21T18:58:23Z

|

https://github.com/ultrafunkamsterdam/undetected-chromedriver/issues/1691

|

[] |

behzad-azizan

| 3

|

jschneier/django-storages

|

django

| 692

|

Performance of the url() method

|

Hi there,

I'm wondering if there's a performance opportunity around the `url()` method used in templates, views, etc., to get the full URL of an image stored in, say, Amazon S3. It seems to me that the storage engine does much more than simply put together the file `name` and `MEDIA_URL`.

When I'm dealing with publicly-accessible items on S3, would I be better off simply putting those two values together instead of calling the `url()` method?

I'm using Django REST Framework and need to list the URLs of 100+ images in a single request, which is currently slow via the `url()` method; that was my motivation for trying to access these values directly.

What am I missing?

Many thanks!
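For objects that are publicly readable and served without signed query strings, the URL is just the media base URL plus the percent-encoded object name, which is roughly what the question proposes. A minimal sketch under that assumption; `public_media_url` is hypothetical, and it deliberately skips whatever `url()` may add (signing, custom domains, a `location` prefix), so it is only equivalent when those do not apply. django-storages' S3 backend also has an `AWS_QUERYSTRING_AUTH = False` setting that makes `url()` itself return unsigned URLs.

```python
from urllib.parse import quote


def public_media_url(media_url: str, name: str) -> str:
    """Join a public base URL and a storage name, percent-encoding the name.

    Only valid for publicly readable objects served without signed query strings.
    """
    base = media_url.rstrip("/")
    # quote() leaves "/" unescaped by default, so nested "directories" survive.
    return f"{base}/{quote(name.lstrip('/'))}"
```

In a DRF serializer this would replace `obj.image.url` with `public_media_url(settings.MEDIA_URL, obj.image.name)`.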

|

closed

|

2019-04-22T20:06:22Z

|

2024-08-12T18:12:06Z

|

https://github.com/jschneier/django-storages/issues/692

|

[

"s3boto"

] |

avorio

| 27

|

aimhubio/aim

|

tensorflow

| 2,742

|

Unhandled exception when attempting to retrieve commit.

|

## 🐛 Bug

Hi.

I'm getting an issue with the try block associated with this line (in the version I have installed):

https://github.com/aimhubio/aim/blob/416a599ef1c6e80ec08d046a24f49255aa604e98/aim/ext/utils.py#L59

And in the `main` branch:

https://github.com/aimhubio/aim/blob/f469779312ab0e01c0a9241d2b5cecf55b97910a/src/aim/ext/system_info/utils.py#L59

Essentially, if `'commit'` is not in `results`, the `get()` method returns `None`, which has no `split()` method. An `AttributeError` is therefore raised instead of a `ValueError`; it is not caught and crashes the program.

### To reproduce

It seems that `aim init` does not initialize a `git` repository, so the corresponding subprocess command that retrieves `'commit'` fails.

I'm not sure why a `git` repository is not being initialized in the first place.

However, I can track values and visualize them via the web interface (when using the fix below).

### Expected behavior

The following try block would handle the issue:

```python
try:
    commit_hash, commit_timestamp, commit_author = results['commit'].split('/')
except (KeyError, ValueError):
    # 'commit' missing from results, or not in the expected hash/timestamp/author form
    commit_hash = commit_timestamp = commit_author = None
```

### Environment

- Aim Version: 3.17.4

- Python version: 3.10.8

- pip version: 23.1.2

- OS: Archlinux

|

closed

|

2023-05-12T13:18:07Z

|

2023-11-14T22:37:00Z

|

https://github.com/aimhubio/aim/issues/2742

|

[

"type / bug",

"help wanted",

"phase / shipped",

"area / SDK-storage"

] |

Nuno-Mota

| 4

|

facebookresearch/fairseq

|

pytorch

| 5,092

|

Wav2Vec-U 2.0: could not train with fp16

|

## ❓ Questions and Help

### Before asking:

1. search the issues.

2. search the docs.

<!-- If you still can't find what you need: -->

#### What is your question?

When training Wav2Vec-U 2.0 models with the official configuration, I tried enabling fp16, but it leads to errors: the losses become NaN.

https://github.com/facebookresearch/fairseq/blob/3f6ba43f07a6e9e2acf957fc24e57251a7a3f55c/examples/wav2vec/unsupervised/config/gan/w2vu2.yaml#L3-L10

#### Code

No code needed.

<!-- Please paste a code snippet if your question requires it! -->

#### What have you tried?

I'm stuck debugging this and have no idea what is causing it.

#### What's your environment?

- fairseq Version: '0.12.2'

- PyTorch Version: '1.13.0+cu117'

- OS: Linux avsu-ESC8000-G4 5.15.0-69-generic

- How you installed fairseq (`pip`, source): `pip install -e` (editable install from source)

- Build command you used (if compiling from source):

- Python version: Python 3.8.13

- CUDA/cuDNN version: 11.7

- GPU models and configuration: 4 GeForce RTX 3090

- Any other relevant information:

|

open

|

2023-04-28T02:49:16Z

|

2025-01-28T10:28:42Z

|

https://github.com/facebookresearch/fairseq/issues/5092

|

[

"question",

"needs triage"

] |

xiabingquan

| 3

|

ckan/ckan

|

api

| 8,027

|

datatables_view does not search if value contains Latvian characters

|

## CKAN version 2.10.1

## Describe the bug

A clear and concise description of what the bug is.

Search returns results for the search value "datu sis".

Search returns no results for the search value "datu sist**ē**m".

### Steps to reproduce

Steps to reproduce the behavior:

1. dataset resource CSV

name, number

"Datu Sistēmas ","4003232323"

2. In datatables_view, search the "name" field for "Datu Sis": you get a result.

3. In datatables_view, search the "name" field for "Datu Sistēm": you do **not** get a result.

### Expected behavior

When the search text includes Latvian characters such as ē, ā, ž, ī, ņ, etc., the search works and returns results.
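The issue does not establish where the matching breaks, but one common cause of this symptom is comparing strings that differ in Unicode normalization (a precomposed `ē` versus `e` followed by a combining macron) or in case. A sketch of a normalization-aware, case-insensitive substring match, as a reference for the expected behavior rather than CKAN's actual search code:

```python
import unicodedata


def normalize(text: str) -> str:
    """NFC-normalize and casefold so equivalent spellings compare equal."""
    return unicodedata.normalize("NFC", text).casefold()


def matches(value: str, query: str) -> bool:
    """True if `query` occurs in `value`, ignoring case and normalization form."""
    return normalize(query) in normalize(value)
```

With this, `matches("Datu Sistēmas ", "datu sist\u0065\u0304m")` is true even though the query spells `ē` as two code points.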

|

open

|

2024-01-25T07:47:18Z

|

2024-01-25T14:13:38Z

|

https://github.com/ckan/ckan/issues/8027

|

[] |

gatiszeiris

| 4

|

tflearn/tflearn

|

tensorflow

| 523

|

Data preprocessing and augmentation causes warnings.

|

With TensorFlow 0.12.0rc1, the following warnings occur when using `DataPreprocessing()` and `DataAugmentation()`.

```
WARNING:tensorflow:Error encountered when serializing data_augmentation.
Type is unsupported, or the types of the items don't match field type in CollectionDef.
'ImageAugmentation' object has no attribute 'name'
WARNING:tensorflow:Error encountered when serializing summary_tags.
Type is unsupported, or the types of the items don't match field type in CollectionDef.
'dict' object has no attribute 'name'
WARNING:tensorflow:Error encountered when serializing data_preprocessing.
Type is unsupported, or the types of the items don't match field type in CollectionDef.
'DataPreprocessing' object has no attribute 'name'
```

|

closed

|

2016-12-16T00:28:40Z

|

2016-12-19T21:15:27Z

|

https://github.com/tflearn/tflearn/issues/523

|

[

"review needed"

] |

jadenyjw

| 2

|

recommenders-team/recommenders

|

deep-learning

| 1,519

|

No module named 'recommenders'[ASK]

|

### Description

I am running the Google Colab notebook 00_quick_start/als_movielens.ipynb. I ran `!pip install recommenders`, and `!pip show recommenders` shows that it is installed. But when I run the cell with `from recommenders.utils.timer import Timer`, I get an error message (attached). I am not sure what I am doing wrong. I am using Windows 10 and Python 3.7.11.
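One possible cause of an "installed but not importable" package is `pip` installing into a different interpreter than the one running the notebook kernel. A quick diagnostic sketch using only the standard library; `import_diagnostics` is a hypothetical helper name.

```python
import importlib.util
import sys


def import_diagnostics(module_name: str) -> dict:
    """Report which interpreter is running and whether it can locate `module_name`."""
    spec = importlib.util.find_spec(module_name)
    return {
        "python": sys.executable,
        "version": sys.version.split()[0],
        "found": spec is not None,
        "location": spec.origin if spec is not None else None,
    }
```

Running `import_diagnostics("recommenders")` in the notebook and comparing `"python"` with the path printed by `!pip --version` shows whether both point at the same environment.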

### Other Comments

|

closed

|

2021-09-04T01:02:39Z

|

2023-05-22T14:11:30Z

|

https://github.com/recommenders-team/recommenders/issues/1519

|

[

"help wanted"

] |

dagartga

| 9

|

microsoft/qlib

|

deep-learning

| 1,138

|

How to let code change take effect without re-installation?

|

Since we import the qlib package from its installed location, code changes never take effect unless we reinstall it.

For developers, is there any way to make code changes take effect immediately?
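The usual approach is an editable install (`pip install -e .` from the repository root), after which imports resolve to the checkout itself. The sketch below, with hypothetical helper names, checks which copy of a module is actually imported and uses `importlib.reload` to pick up edits in an already-running interpreter. Note that `reload` only re-executes the one module it is given, not its submodules, and compiled extension modules still need to be rebuilt after their sources change.

```python
import importlib
import types
from pathlib import Path


def is_loaded_from(module: types.ModuleType, source_root: str) -> bool:
    """True if `module` is imported from a file under `source_root`."""
    module_file = getattr(module, "__file__", None)
    if module_file is None:
        return False
    root = Path(source_root).resolve()
    return root in Path(module_file).resolve().parents


def refresh(module: types.ModuleType) -> types.ModuleType:
    """Re-execute a module's code in the current session and return it."""
    return importlib.reload(module)
```

For example, `is_loaded_from(qlib, "/path/to/your/qlib/checkout")` (path hypothetical) should be true after an editable install.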

|

closed

|

2022-06-19T10:20:51Z

|

2022-07-03T12:12:48Z

|

https://github.com/microsoft/qlib/issues/1138

|

[

"question"

] |

jingedawang

| 2

|

feder-cr/Jobs_Applier_AI_Agent_AIHawk

|

automation

| 1,086

|

Generates resume, and then shuts down itself

|

It generates the resume and then shuts itself down instead of applying to jobs.

|

open

|

2025-02-06T21:16:50Z

|

2025-03-03T04:05:56Z

|

https://github.com/feder-cr/Jobs_Applier_AI_Agent_AIHawk/issues/1086

|

[

"bug"

] |

mrtknrt

| 4

|

Lightning-AI/pytorch-lightning

|

machine-learning

| 19,794

|

LOG issue

|

Bug description

As shown in the screenshot below, 710144 is my total number of samples, and 100 is the batch count. Since my batch size is 64, I expect the total to be 710144/64 = 11096, so I think 11096 should appear where 710144 is. Can someone explain this to me? It's a little confusing.

This is the way I log during training step:

`self.log('train_loss', loss, on_step=True, rank_zero_only=True)`
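For reference, the number a per-epoch progress bar would be expected to show is the number of batches per epoch. A sketch of that arithmetic with a hypothetical helper: with the `DataLoader` default `drop_last=False` a final partial batch still counts, and the `world_size` term assumes a distributed sampler that pads so each rank gets `ceil(N / world_size)` samples.

```python
def batches_per_epoch(num_samples: int, batch_size: int, drop_last: bool = False, world_size: int = 1) -> int:
    """Batches each process iterates per epoch (integer arithmetic only)."""
    # Samples per rank, rounded up as a padding distributed sampler would.
    per_rank = -(-num_samples // world_size)
    if drop_last:
        # DataLoader(drop_last=True) discards the final partial batch.
        return per_rank // batch_size
    return -(-per_rank // batch_size)


print(batches_per_epoch(710144, 64))  # 11096
```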

Thanks in advance!

JJ

cc @awaelchli

|

closed

|

2024-04-22T04:10:38Z

|

2024-06-22T03:21:32Z

|

https://github.com/Lightning-AI/pytorch-lightning/issues/19794

|

[

"question",

"progress bar: tqdm",

"ver: 2.1.x"

] |

jzhanghzau

| 2

|

NVIDIA/pix2pixHD

|

computer-vision

| 91

|

How do I increase the number of training iterations for the discriminator?

|

Hi,

I want to increase the training iterations for the discriminator. How do I change the number of D (or even G) iterations?
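Assuming the training loop performs one generator update and one discriminator update per batch (the usual GAN setup), changing the ratio amounts to wrapping each update in its own loop. A framework-agnostic sketch, where `d_step` and `g_step` are hypothetical callables that would each compute the relevant loss, call `backward()` and step the corresponding optimizer; it reuses the same batch for repeated updates, whereas drawing a fresh batch per discriminator step is an equally valid design choice.

```python
from typing import Callable, Iterable


def train_with_ratio(batches: Iterable, d_step: Callable, g_step: Callable,
                     d_steps: int = 1, g_steps: int = 1) -> None:
    """Run `d_steps` discriminator updates, then `g_steps` generator updates, per batch."""
    for batch in batches:
        for _ in range(d_steps):
            d_step(batch)
        for _ in range(g_steps):
            g_step(batch)
```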

|

open

|

2018-12-11T11:32:53Z

|

2019-04-09T08:41:29Z

|

https://github.com/NVIDIA/pix2pixHD/issues/91

|

[] |

gvenkat21

| 5

|

streamlit/streamlit

|

python

| 9,984

|

`st.altair_chart` does not show with a good size if the title is too long

|

### Checklist

- [X] I have searched the [existing issues](https://github.com/streamlit/streamlit/issues) for similar issues.

- [X] I added a very descriptive title to this issue.

- [X] I have provided sufficient information below to help reproduce this issue.

### Summary

`st.altair_chart` fails to properly display an `alt.Chart` whose title exceeds the container width.

When using st.altair_chart, charts with titles that do not fit within the container width are rendered poorly.

In the example below, two `st.altair_chart` instances use the same `alt.Chart` object. The first chart has sufficient space to display the entire title, resulting in a clear presentation. In contrast, the second chart has limited space, causing the title to be truncated and the chart to appear distorted.

<img width="652" alt="image" src="https://github.com/user-attachments/assets/3f520e5e-7677-4d68-a6d0-c7c66ffb05cd">

If the title length is increased to affect the first chart as well, it will also render poorly, indicating that the issue is not related to the `use_container_width` parameter in `st.altair_chart`.

### Reproducible Code Example

[Open in Streamlit Cloud](https://issues.streamlitapp.com/?issue=gh-9984)

```Python
# create a very basic alt.Chart
import streamlit as st
import altair as alt
import pandas as pd

# Create a simple dataframe
df = pd.DataFrame({"x": [1, 2, 3, 4, 5], "y": [10, 20, 30, 40, 50]})

# Create a simple chart
chart = (
    alt.Chart(
        data=df,
        title="Lorem ipsum dolor sit amet, consectetur adipiscing elit. Sed nec purus euismod, ultricies nunc nec, ultricies nunc.",
    )
    .mark_line()
    .encode(x="x", y="y")
)

# Render the chart
st.altair_chart(chart, use_container_width=True)
st.altair_chart(chart, use_container_width=False)
```

### Steps To Reproduce

Run the code above.

### Expected Behavior

The chart should always be displayed with the appropriate width.

If the title exceeds the available space, it should be truncated at the point where it reaches the container's width limit without affecting the chart.

### Current Behavior

If the `alt.Chart` title is too long, the title is truncated (which is good), but the chart is rendered with an incorrect width, leading to a poor visual presentation.
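Until the sizing bug is fixed, one possible workaround is to break the title into several lines yourself. This sketch assumes Altair forwards a list of strings as Vega-Lite's multi-line title text; `wrap_title` is a hypothetical helper, and its `width` counts characters, not pixels, so it only approximates the container width.

```python
import textwrap
from typing import List


def wrap_title(title: str, width: int = 60) -> List[str]:
    """Split a long chart title into lines of at most `width` characters."""
    return textwrap.wrap(title, width=width)
```

Usage would be `alt.Chart(data=df, title=wrap_title(long_title, 50))`. This does not fix the underlying width calculation; it only keeps the title from exceeding the container.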

### Is this a regression?

- [ ] Yes, this used to work in a previous version.

### Debug info

- Streamlit version: 1.40.2

- Python version: 3.10.11

- Operating System: Windows 11

- Browser: Chrome

### Additional Information

|

open

|

2024-12-09T16:37:58Z

|

2024-12-17T22:55:03Z

|

https://github.com/streamlit/streamlit/issues/9984

|

[

"type:bug",

"status:confirmed",

"priority:P3",

"feature:st.altair_chart"

] |

RubenCata

| 2

|

recommenders-team/recommenders

|

deep-learning

| 2,097

|

[FEATURE] Support Python 3.12

|

I know you just added support for Python 3.11, but Manjaro and Ubuntu now use Python 3.12 by default.

|

open

|

2024-05-14T11:47:00Z

|

2024-05-15T05:18:28Z

|

https://github.com/recommenders-team/recommenders/issues/2097

|

[

"enhancement"

] |

daviddavo

| 2

|

mars-project/mars

|

numpy

| 2,414

|

Add support for `label_binarize`.

|

<!--

Thank you for your contribution!

Please review https://github.com/mars-project/mars/blob/master/CONTRIBUTING.rst before opening an issue.

-->

**Is your feature request related to a problem? Please describe.**

Support for `mars.learn.preprocessing.label_binarize` could be added.
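For reference, the behavior such a function would need to reproduce fits in a few lines of NumPy. This is a sketch of scikit-learn's default semantics (`neg_label=0`, `pos_label=1`), not Mars code; a Mars version would have to express the same comparison with Mars tensors so that it runs chunk by chunk.

```python
import numpy as np


def label_binarize(y, classes):
    """One-vs-all 0/1 encoding of `y` against `classes` (NumPy reference).

    With exactly two classes, scikit-learn returns a single column marking
    membership of the second class; that special case is mirrored here.
    """
    y = np.asarray(y)
    classes = np.asarray(classes)
    # Broadcast (n, 1) against (1, k) to get an (n, k) boolean indicator.
    indicator = (y[:, None] == classes[None, :]).astype(int)
    if len(classes) == 2:
        return indicator[:, 1:]
    return indicator
```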

|

closed

|

2021-09-02T10:21:25Z

|

2021-09-02T15:24:02Z

|

https://github.com/mars-project/mars/issues/2414

|

[

"type: feature",

"mod: learn"

] |

qinxuye

| 0

|

Zeyi-Lin/HivisionIDPhotos

|

machine-learning

| 14

|

Can't access the demo Space anymore

|

Hello, I keep getting a message that the demo Space can't be accessed. Has it been shut down?

|

closed

|

2024-08-17T10:07:25Z

|

2024-08-30T07:10:57Z

|

https://github.com/Zeyi-Lin/HivisionIDPhotos/issues/14

|

[] |

hxj0316

| 1

|

allure-framework/allure-python

|

pytest

| 629

|

hooks.py throws exception because of missing listener function

|

https://github.com/allure-framework/allure-python/blob/master/allure-behave/src/hooks.py#L53

`start_feature` was recently removed from listener.py, but hooks.py still calls this function.

|

open

|

2021-10-06T11:36:13Z

|

2023-07-08T22:30:20Z

|

https://github.com/allure-framework/allure-python/issues/629

|

[

"bug",

"theme:behave"

] |

donders

| 1

|

vitalik/django-ninja

|

django

| 1,025

|

Apply decorators to a subset of routes/all paths under a sub-router

|

Recently, we found the need in Django to take an iterable of Django url configs and decorate the functions within them with something (in our case, observability functions to tag logs correctly).

With NinjaAPI, you can do this perfectly well with the .urls property on the top-level API. 🚀

However, if you want to decorate certain subroutes of that main API, it doesn't seem possible to do this.

For example:

```python
from django.urls import path
from ninja import NinjaAPI, Router

api = NinjaAPI()
router_1 = Router()
router_2 = Router()

# Imagine the routers get a load of paths/functions registered to them...

api.add_router("prefix_1/", router_1)
api.add_router("prefix_2/", router_2)

urlpatterns = [
    path("api/", api.urls),
]
```

Given that example, say I wanted to decorate all the functions under `router_1` with `decorator_1` and `router_2` with `decorator_2`. As far as I can tell, I am unable to do that. Instead, I have to decorate all the functions within those routers individually, which is obviously not ideal.

Could this be possible?
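To make the request concrete, here is a plain-Python sketch of the desired behaviour: every view registered on a group is wrapped by that group's decorator. The `Group` class, its `get`/`decorate_all` methods and the `tag_logs` decorator are hypothetical stand-ins. They are not django-ninja APIs.

```python
import functools
from typing import Callable, Dict


def tag_logs(tag: str) -> Callable:
    """Hypothetical observability decorator that records a tag per call."""
    def decorator(view: Callable) -> Callable:
        @functools.wraps(view)
        def wrapper(*args, **kwargs):
            wrapper.calls.append(tag)  # stand-in for tagging a log record
            return view(*args, **kwargs)
        wrapper.calls = []
        return wrapper
    return decorator


class Group:
    """Hypothetical router-like registry mapping path -> view function."""

    def __init__(self) -> None:
        self.views: Dict[str, Callable] = {}

    def get(self, path: str) -> Callable:
        def register(view: Callable) -> Callable:
            self.views[path] = view
            return view
        return register

    def decorate_all(self, decorator: Callable) -> None:
        # Wrap every registered view in place, once per group.
        for path, view in list(self.views.items()):
            self.views[path] = decorator(view)


router_1 = Group()


@router_1.get("/items")
def list_items():
    return ["item"]


router_1.decorate_all(tag_logs("router_1"))
print(router_1.views["/items"]())  # ['item']
```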

|

open

|

2023-12-22T09:40:00Z

|

2023-12-22T11:58:39Z

|

https://github.com/vitalik/django-ninja/issues/1025

|

[] |

JimNero009

| 2

|

ijl/orjson

|

numpy

| 23

|

Is Rust nightly still necessary, or can you use some released version of Rust?

|

I will need to package `orjson` for Conda-Forge if I decide to use it, and I'm not sure if random versions of rust will be available.

|

closed

|

2019-07-16T23:53:40Z

|

2021-03-10T16:10:10Z

|

https://github.com/ijl/orjson/issues/23

|

[] |

itamarst

| 8

|

litestar-org/litestar

|

pydantic

| 3,189

|

Docs: Add guard example to JWT docs

|

### Summary

Currently, the [JWT docs](https://docs.litestar.dev/latest/usage/security/jwt.html) contain a few references to how the 'User' object can be accessed during and after authentication. These boil down to:

a) queried using the token

b) directly from the request (which can be easily assumed to be attached via retrieve_user_handler prior to going to the api path)

However there are other instances where user details need to be extracted from the `connection` object (such as in role-based guards).

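```py
from litestar.connection import ASGIConnection
from litestar.exceptions import NotAuthorizedException
from litestar.handlers.base import BaseRouteHandler


def admin_guard(connection: ASGIConnection, _: BaseRouteHandler) -> None:
    # connection.user is whatever retrieve_user_handler returned
    if not connection.user.is_admin:
        raise NotAuthorizedException()
```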

There is a gap between the JWT page and the guards page: it's not made entirely clear _how_ `user` gets attached to `connection`. I would like to suggest that an example guard be added to the JWT docs, with a comment explaining that the Auth object automatically attaches the user based on the object returned from `retrieve_user_handler`.

It also isn't made abundantly clear that the TypeVar provided to the Auth object corresponds directly to the type returned by `retrieve_user_handler`. For a while, I was actually setting the TypeVar based on my login response and wondering why it wasn't working. A silly mistake in hindsight, but I believe a simple comment could have saved me from it!

|

open

|

2024-03-11T23:43:59Z

|

2025-03-20T15:54:28Z

|

https://github.com/litestar-org/litestar/issues/3189

|

[

"Documentation :books:",

"Help Wanted :sos:",

"Good First Issue"

] |

BVengo

| 2

|

xonsh/xonsh

|

data-science

| 4,796

|

xonsh-in-docker.py: outdated default python version -> parser does not compile

|

``xonsh-in-docker.py`` is broken because Python 3.6 is set as the default version.

|

closed

|

2022-05-05T21:15:45Z

|

2024-06-29T22:29:12Z

|

https://github.com/xonsh/xonsh/issues/4796

|

[

"docker"

] |

dev2718

| 4

|

amdegroot/ssd.pytorch

|

computer-vision

| 16

|

How to train own datasets?

|

I have my own labeled dataset. How can I train on it?

|

open

|

2017-05-02T10:17:37Z

|

2020-11-30T17:39:46Z

|

https://github.com/amdegroot/ssd.pytorch/issues/16

|

[

"enhancement"

] |

zhanghan328

| 26

|

coqui-ai/TTS

|

python

| 3,966

|

[Feature request] Adjust output audio speed in YourTTS

|

Hello,

I have fine-tuned YourTTS on a number of new speakers, and the audio quality and pronunciation are good. However, the audio output is a bit fast. I have tried post-processing such as resampling, but it changes the pitch.

There is a speed feature available in xttsv2. Can we have a similar one for YourTTS or is there any workaround for this?

Any input would be highly appreciated.

Thanks

|

closed

|

2024-08-13T07:14:41Z

|

2025-01-26T08:48:38Z

|

https://github.com/coqui-ai/TTS/issues/3966

|

[

"wontfix",

"feature request"

] |

Rakshith12-pixel

| 9

|

ymcui/Chinese-BERT-wwm

|

tensorflow

| 172

|

Same configuration and code, but different results on repeated runs?

|

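Hello, we are using your BERT-wwm-ext (TF) model for NER and sentiment classification. Two runs with the same configuration and a fixed random seed produce different results. Switching to Google's BERT or RoBERTa does not cause this problem. When we trained on a subset of the data, the results were identical with only a few samples (20), but they started to differ once the training set became larger. We don't know the cause; thank you for your answer.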

|

closed

|

2021-03-09T14:34:34Z

|

2021-03-22T07:35:02Z

|

https://github.com/ymcui/Chinese-BERT-wwm/issues/172

|

[

"stale"

] |

sirlb

| 9

|

onnx/onnx

|

tensorflow

| 6,308

|

Convert a model with custom pytorch CUDA kernel

|

# Ask a Question

### Question

I have implemented a custom PyTorch CUDA kernel (and CPU kernel) for rotated bounding boxes, an operation that PyTorch does not support natively (similar to this example: https://github.com/pytorch/extension-cpp).

For my use case, I need to convert the model to ONNX, but I'm struggling a lot. It seems like I would have to re-implement the custom operator using only ONNX ops, which is too much work. What is a better way to convert the model to ONNX? Any tips or advice would be very much appreciated.

|

open

|

2024-08-20T13:29:42Z

|

2024-08-20T16:18:10Z

|

https://github.com/onnx/onnx/issues/6308

|

[

"question",

"topic: runtime"

] |

davidgill97

| 1

|

keras-team/keras

|

machine-learning

| 20,985

|

Output discrepancy between keras.predict and tf_saved_model

|

Hi,

I am exporting a model like this:

```python
import keras

export_archive = keras.export.ExportArchive()
export_archive.track(model_tra)
export_archive.add_endpoint(
    name="serve",
    fn=lambda x: model_tra.call(x, training=False),
    input_signature=[
        keras.InputSpec(shape=(None, 3, 320, 320, 1), dtype="float64")
    ],
)
export_archive.write_out(
    "/home/edge7/Desktop/projects/ing_edurso/wharfreenet-inference/models/la_ao"
)
```

Then I load it like:

`tf.saved_model.load(os.path.join(MODEL_DIRECTORY, "la_ao"))`

and I use it like:

`model.serve(np.expand_dims(np.array(frame), axis=-1))`

I get slightly different results than when using `model.predict` or `model.predict_on_batch`.

Am I missing something silly in the conversion?

|

open

|

2025-03-05T09:30:06Z

|

2025-03-20T05:07:24Z

|

https://github.com/keras-team/keras/issues/20985

|

[

"type:Bug"

] |

edge7

| 4

|

sammchardy/python-binance

|

api

| 852

|

Get withdraw history failing unexpectedly

|

**Describe the bug**

I am trying to access my withdrawal and deposit histories and receiving the following error:

```

BinanceAPIException Traceback (most recent call last)

<ipython-input-4-e52a5e13df7b> in <module>

----> 1 client.get_withdraw_history()

~/personal/binance/.venv/lib/python3.8/site-packages/binance/client.py in get_withdraw_history(self, **params)

2600

2601 """

-> 2602 return self._request_margin_api('get', 'capital/withdraw/history', True, data=params)

2603

2604 def get_withdraw_history_id(self, withdraw_id, **params):

~/personal/binance/.venv/lib/python3.8/site-packages/binance/client.py in _request_margin_api(self, method, path, signed, **kwargs)

356 uri = self._create_margin_api_uri(path)

357

--> 358 return self._request(method, uri, signed, **kwargs)

359

360 def _request_website(self, method, path, signed=False, **kwargs) -> Dict:

~/personal/binance/.venv/lib/python3.8/site-packages/binance/client.py in _request(self, method, uri, signed, force_params, **kwargs)

307

308 self.response = getattr(self.session, method)(uri, **kwargs)

--> 309 return self._handle_response(self.response)

310

311 @staticmethod

~/personal/binance/.venv/lib/python3.8/site-packages/binance/client.py in _handle_response(response)

316 """

317 if not (200 <= response.status_code < 300):

--> 318 raise BinanceAPIException(response, response.status_code, response.text)

319 try:

320 return response.json()

BinanceAPIException: APIError(code=-1100): Illegal characters found in a parameter.

```

This seems like a weird error to me because I am not passing any parameters. I am also able to hit other endpoints with the same client credentials.

**To Reproduce**

I am simply following the example and running:

`client.get_withdraw_history()`

**Expected behavior**

I expect to receive a JSON response containing withdrawal information.

**Environment (please complete the following information):**

- Python version: 3.8

- Virtual Env: virtualenv

- OS: Ubuntu

- python-binance version: 1.0.10

|

open

|

2021-05-13T19:18:04Z

|

2021-12-23T02:07:21Z

|

https://github.com/sammchardy/python-binance/issues/852

|

[] |

ngriffiths13

| 2

|

2noise/ChatTTS

|

python

| 911

|

E:\\Chat TTS\\output_audio\\segment\\合并\\segment_合并.wav

|

raise LibsndfileError(err, prefix="Error opening {0!r}: ".format(self.name))

soundfile.LibsndfileError: Error opening 'E:\\Chat TTS\\output_audio\\segment\\合并\\segment_合并.wav': System error

Does anyone know why this happens?
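A hedged guess, not a confirmed diagnosis: libsndfile reports a generic "System error" when the operating system refuses to open the file. One common trigger is writing into a folder that does not exist yet, such as `...\segment\合并\`. The minimal stdlib sketch below creates the parent folders before writing. It uses the `wave` module in place of `soundfile`, and `write_silence` is a hypothetical helper.

```python
import wave
from pathlib import Path


def write_silence(path: str, seconds: float = 0.1, rate: int = 24000) -> Path:
    """Write a mono 16-bit WAV of silence, creating parent folders first."""
    out = Path(path)
    # Opening a file inside a missing folder fails at the OS level, so create it.
    out.parent.mkdir(parents=True, exist_ok=True)
    n_frames = int(seconds * rate)
    with wave.open(str(out), "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 16-bit samples
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * n_frames)
    return out
```

If the guess is right, creating the folder before the real `soundfile` write should make the error go away.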

|

closed

|

2025-03-04T07:43:22Z

|

2025-03-12T13:55:46Z

|

https://github.com/2noise/ChatTTS/issues/911

|

[

"wontfix"

] |

paul2264

| 1

|

opengeos/leafmap

|

streamlit

| 483

|

Choropleth map is not working in windows

|


### Environment Information

- leafmap version: 0.22.0

- Python version: 3.9.13

- Operating System: Windows 11 Enterprise

### Description

I'm trying to run the same example as https://colab.research.google.com/github/opengeos/leafmap/blob/master/examples/notebooks/53_choropleth.ipynb#scrollTo=iyOJ30pLR_ur

However, it raises the `TypeError` shown below.

### What I Did

```


---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

in

1 m = leafmap.Map()

----> 2 m.add_data(

3 data, column='POP_EST', scheme='Quantiles', cmap='Blues', legend_title='Population'

4 )

5 m

venv\lib\site-packages\leafmap\leafmap.py in add_data(self, data, column, colors, labels, cmap, scheme, k, add_legend, legend_title, legend_position, legend_kwds, classification_kwds, layer_name, style, hover_style, style_callback, info_mode, encoding, **kwargs)

3687 style_callback = lambda feat: {"fillColor": feat["properties"]["color"]}

3688

-> 3689 self.add_gdf(

3690 gdf,

3691 layer_name=layer_name,

packages\leafmap\leafmap.py in add_gdf(self, gdf, layer_name, style, hover_style, style_callback, fill_colors, info_mode, zoom_to_layer, encoding, **kwargs)

2476 """

2477 for col in gdf.columns:

-> 2478 if gdf[col].dtype in ["datetime64[ns]", "datetime64[ns, UTC]"]:

2479 gdf[col] = gdf[col].astype(str)

2480

TypeError: Invalid datetime unit in metadata string "[ns, UTC]"

```

|

closed

|

2023-06-23T14:16:34Z

|

2023-06-29T19:44:46Z

|

https://github.com/opengeos/leafmap/issues/483

|

[

"bug"

] |

jxlyn

| 4

|

slackapi/python-slack-sdk

|

asyncio

| 736

|

client_msg_id missing from messages with attachments

|

### Description

For all messages without attachments, we see client_msg_id as a root element in the message event data. For messages with attachments, client_msg_id does not appear anywhere. The same happens with both RTMClient and the Events API.

### What type of issue is this? (place an `x` in one of the `[ ]`)

- [x] bug

- [ ] enhancement (feature request)

- [ ] question

- [ ] documentation related

- [ ] testing related

- [ ] discussion

### Requirements (place an `x` in each of the `[ ]`)

* [x] I've read and understood the [Contributing guidelines](https://github.com/slackapi/python-slackclient/blob/master/.github/contributing.md) and have done my best effort to follow them.

* [x] I've read and agree to the [Code of Conduct](https://slackhq.github.io/code-of-conduct).

* [x] I've searched for any related issues and avoided creating a duplicate issue.

---

### Bug Report


#### Reproducible in:

slackclient version: 2.6.1

python version: ~~2.6.9~~ 3.6.9

OS version(s): MacOS, Ubuntu 16.04

#### Steps to reproduce:

1. Send message with an image attachment

2. Observe message event data.

3. Attempting to extract client_msg_id as with messages without attachments will yield a KeyError

#### Expected result:

client_msg_id should be present no matter if the message has an attachment or not

#### Actual result:

client_msg_id is missing
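Until the field is populated consistently, a defensive read avoids the `KeyError` from step 3. Below is a minimal sketch with a hypothetical `get_client_msg_id` helper. The event dicts are hand-written examples, not real Slack payloads.

```python
from typing import Any, Mapping, Optional


def get_client_msg_id(event: Mapping[str, Any]) -> Optional[str]:
    # .get() returns None instead of raising KeyError when the key is absent,
    # as reported above for messages that carry attachments.
    return event.get("client_msg_id")


plain = {"type": "message", "text": "hi", "client_msg_id": "abc-123"}
with_file = {"type": "message", "text": "", "files": [{"id": "F1"}]}

print(get_client_msg_id(plain))      # abc-123
print(get_client_msg_id(with_file))  # None
```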


|

closed

|

2020-06-26T04:22:12Z

|

2021-02-24T01:46:49Z

|

https://github.com/slackapi/python-slack-sdk/issues/736

|

[

"Version: 2x",

"rtm-client",

"web-client",

"server-side-issue"

] |

pbrackin

| 5

|

plotly/dash

|

data-visualization

| 2,315

|

editing dash-renderer/build/dash_renderer.dev.js has no effect during runtime

|

**Describe your context**

linux, installed dash and dash-cytoscape with pip


```

dash 2.7.0

dash-core-components 2.0.0

dash-cytoscape 0.3.0 /home/nmz787/git/dash-cytoscape

dash-dangerously-set-inner-html 0.0.2

dash-flow-example 0.0.5

dash-html-components 2.0.0

dash-table 5.0.0

```


- OS: Windows 10

- Browser - Chrome

- Version - Version 107.0.5304.106 (Official Build) (64-bit)

**Describe the bug**

When I edit the `site-packages` copy of `dash/dash-renderer/build/dash_renderer.dev.js` and hard-refresh my browser, my code changes do not show up.

What is the correct procedure for testing hot-patches like this? If I need to clone this repo and build a local copy after my changes/alterations, where are the build instructions?

**Expected behavior**

I edit the JS file, which is presumably being served when Dash is run, and my browser runs the changed code.

|

closed

|

2022-11-16T23:15:18Z

|

2023-03-10T21:14:45Z

|

https://github.com/plotly/dash/issues/2315

|

[] |

nmz787-intel

| 2

|

jina-ai/serve

|

machine-learning

| 6,100

|

Suggestions For Improving Testing

|

**Describe your proposal/problem**


I have a few suggestions that could improve the development experience, specifically around testing. Forgive me if you've already considered these, but I thought this might be useful feedback.

1. ### Sharing Deployments Across Tests via Fixture Scopes

I realize some tests are going to need custom deployments, but many of them can use a shared deployment/flow that is launched once at the beginning of the session. This means we only have to wait for the app to start up once.

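```python
import pytest
from jina import Deployment


@pytest.fixture(scope="session")
def hub_client(session_mocker):
    # One Deployment is started for the whole test session and shared by all tests.
    # MyExecutor stands for the executor under test.
    with Deployment(
        uses=MyExecutor, protocol="http",
    ) as dep:
        yield dep
```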

2. ### Run CI Locally

We can use Tox, Docker Compose, or some orchestration tool to allow us to reproduce CI locally. (If we already have this, I missed it and maybe I can update the docs to make it more clear)

3. ### Use pytest-xdist to run tests in parallel

I believe there have been some efforts to do this, and it might require some refactoring, but I saw a huge reduction in runtime when using multiple workers.

---


|

closed

|

2023-10-26T14:22:41Z

|

2023-10-30T17:17:07Z

|

https://github.com/jina-ai/serve/issues/6100

|

[] |

NarekA

| 2

|

pydantic/pydantic-ai

|

pydantic

| 671

|

TypeError: Object of type ValueError is not JSON serializable

|

### Description

Sorry if this has already been reported or if there is a solution I'm missing.

I've encountered a sporadic issue while using a Pydantic model with a custom validator (though I suspect the validator itself may not be related). About **2% of the runs** result in the following error:

```

Exception: TypeError: Object of type ValueError is not JSON serializable

```

I haven’t yet found a reliable way to reproduce the issue. Below are a mocked-up code snippet and a stack trace that demonstrate the scenario.

---

### Code Example

```python
from typing import Any

import pydantic_ai
from pydantic import BaseModel, model_validator

VALID_TYPES = {"test": ["testing"]}


class TypeModel(BaseModel):
    type: str

    @model_validator(mode="before")
    @classmethod
    def validate_type(cls, values: dict[str, Any]):
        type_ = values.get("type")
        if type_ not in VALID_TYPES:
            raise ValueError(
                f"Invalid type '{type_}'. Valid types are: {list(VALID_TYPES.keys())}."
            )
        return values


agent = pydantic_ai.Agent(model="openai:gpt-4o", result_type=TypeModel)
agent.run_sync("toast")
```

---

### Stack Trace

```

response = self.agent.run_sync(prompt)

File "/home/site/wwwroot/.python_packages/lib/site-packages/pydantic_ai/agent.py", line 220, in run_sync

return asyncio.run(self.run(user_prompt, message_history=message_history, model=model, deps=deps))

File "/usr/local/lib/python3.10/asyncio/runners.py", line 44, in run

return loop.run_until_complete(main)

File "/usr/local/lib/python3.10/asyncio/base_events.py", line 649, in run_until_complete

return future.result()

File "/home/site/wwwroot/.python_packages/lib/site-packages/pydantic_ai/agent.py", line 172, in run

model_response, request_cost = await agent_model.request(messages)

File "/home/site/wwwroot/.python_packages/lib/site-packages/pydantic_ai/models/openai.py", line 125, in request

response = await self._completions_create(messages, False)

File "/home/site/wwwroot/.python_packages/lib/site-packages/pydantic_ai/models/openai.py", line 155, in _completions_create

openai_messages = [self._map_message(m) for m in messages]

File "/home/site/wwwroot/.python_packages/lib/site-packages/pydantic_ai/models/openai.py", line 155, in <listcomp>

openai_messages = [self._map_message(m) for m in messages]

File "/home/site/wwwroot/.python_packages/lib/site-packages/pydantic_ai/models/openai.py", line 236, in _map_message

content=message.model_response(),

File "/home/site/wwwroot/.python_packages/lib/site-packages/pydantic_ai/messages.py", line 121, in model_response

description = f'{len(self.content)} validation errors: {json.dumps(self.content, indent=2)}'

File "/usr/local/lib/python3.10/json/__init__.py", line 238, in dumps

**kw).encode(obj)

File "/usr/local/lib/python3.10/json/encoder.py", line 201, in encode

chunks = list(chunks)

File "/usr/local/lib/python3.10/json/encoder.py", line 429, in _iterencode

yield from _iterencode_list(o, _current_indent_level)

File "/usr/local/lib/python3.10/json/encoder.py", line 325, in _iterencode_list

yield from chunks

File "/usr/local/lib/python3.10/json/encoder.py", line 405, in _iterencode_dict

yield from chunks

File "/usr/local/lib/python3.10/json/encoder.py", line 405, in _iterencode_dict

yield from chunks

File "/usr/local/lib/python3.10/json/encoder.py", line 438, in _iterencode

o = _default(o)

File "/usr/local/lib/python3.10/json/encoder.py", line 179, in default

raise TypeError(f'Object of type {o.__class__.__name__} '

```
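The traceback ends in `json.dumps(self.content, indent=2)` inside `pydantic_ai/messages.py`. The encoder walks a list, then a dict, then a nested dict before it hits a `ValueError` object. Below is a minimal stdlib reproduction. It assumes the validation-error payload carries the raw exception in a nested `ctx` dict, as pydantic v2 error details can for errors raised inside validators. That assumption would also fit the sporadic pattern: the failure would only appear on runs where the model's output fails validation. Passing `default=str` is one possible workaround, not the library's fix.

```python
import json

# Hypothetical payload shaped like the one in the traceback: a list of error
# dicts whose nested "ctx" dict holds the raw exception raised by the validator.
errors = [
    {
        "type": "value_error",
        "loc": [],
        "msg": "Value error, Invalid type 'toast'.",
        "ctx": {"error": ValueError("Invalid type 'toast'.")},
    }
]


def dump_errors(errs: list) -> str:
    # json calls `default` for objects it cannot encode (here the ValueError)
    # and serializes the returned str() instead of raising TypeError.
    return json.dumps(errs, indent=2, default=str)


try:
    json.dumps(errors, indent=2)
except TypeError as exc:
    print(exc)  # Object of type ValueError is not JSON serializable

print(dump_errors(errors))
```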

|

open

|

2025-01-13T15:37:08Z

|

2025-01-23T08:00:48Z

|

https://github.com/pydantic/pydantic-ai/issues/671

|

[

"bug"

] |

HampB

| 2

|

zama-ai/concrete-ml

|

scikit-learn

| 85

|

WARNING: The shape inference of onnx.brevitas::Quant type is missing?

|

<img width="1494" alt="image" src="https://github.com/zama-ai/concrete-ml/assets/127387074/66030dd8-d210-4df5-9cb4-b180846c9a8f">

|

closed

|

2023-06-11T11:15:23Z

|

2023-06-12T10:09:18Z

|

https://github.com/zama-ai/concrete-ml/issues/85

|

[] |

maxwellgodv

| 1

|

PrefectHQ/prefect

|

automation

| 17,482

|

Flow Run continues to run after cancelled

|

### Bug summary

A Flow Run in the running state can be cancelled (via API call to Prefect) and the Flow Run will continue to run, including new Tasks which start after the cancellation time.

Similar issues:

- #16939: this issue mentions something about failing tasks (details unclear, but in our case there were no failed tasks). In their case the flow run is cancelled via the UI, so possibly a similar root cause?

- #16001 this is for runs stuck in the Cancelling state. In contrast, we saw a run successfully enter the Cancelled state but then continue to run.

### Version info

```Text

Version: 3.1.3

API version: 0.8.4

Python version: 3.10.12

Git commit: 39b6028c

Built: Tue, Nov 19, 2024 3:25 PM

OS/Arch: linux/x86_64

Profile: ephemeral

Server type: cloud

Pydantic version: 2.9.2

Integrations:

prefect-azure: 0.4.2

```

### Additional context

Some additional details:

- In the Prefect UI and logs, we can see a `prefect.flow-run.Cancelled` log at the correct time

- The Start and End time in the UI show the true start of the run and the End time is the cancellation time. Duration is based on start to cancel time.

- New tasks start and end after the cancellation time, as visible in both the logs and the UI.

I will provide screenshots as possible (all from the same Flow Run):

Full run time visualized:

Start and End times agree with the Cancelled log but not with the screenshot above:

The logs for the final Tasks of this Flow Run, which were scheduled to start, started, and ended after the cancel time and entered a completed state:

#### Reproduction steps

I have not identified a reliable way to reproduce this. Any insight appreciated.
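For comparison, the behaviour this report expects can be sketched in plain Python. The runner checks a cancellation flag before starting each task, so nothing new starts once the run is cancelled. This illustration uses hypothetical names (`run_flow`, `cancel_event`) and is not how Prefect implements cancellation.

```python
import threading
from typing import Callable, List


def run_flow(tasks: List[Callable[[], None]], cancel_event: threading.Event) -> List[str]:
    """Run tasks in order, refusing to start any task after cancellation."""
    log = []
    for i, task in enumerate(tasks):
        if cancel_event.is_set():
            log.append(f"task {i} skipped: run cancelled")
            continue
        task()
        log.append(f"task {i} completed")
    return log


cancel = threading.Event()
tasks = [lambda: None, cancel.set, lambda: None]  # task 1 simulates a cancel request
for line in run_flow(tasks, cancel):
    print(line)
# task 0 completed
# task 1 completed
# task 2 skipped: run cancelled
```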

|

open

|

2025-03-14T21:18:13Z

|

2025-03-17T19:46:37Z

|

https://github.com/PrefectHQ/prefect/issues/17482

|

[

"bug"

] |

gscholtes-relativity

| 1

|

gunthercox/ChatterBot

|

machine-learning

| 2,353

|

healthcare chat bot

|

closed

|

2024-03-04T09:19:33Z

|

2025-01-04T19:10:16Z

|

https://github.com/gunthercox/ChatterBot/issues/2353

|

[] |

Stalin1419

| 2

|

|

amisadmin/fastapi-amis-admin

|

sqlalchemy

| 33

|

URL component example does not work properly

|

Following the documentation, I copied the code below:

```python
# adminsite.py
from fastapi_amis_admin.admin import admin
from fastapi_amis_admin.amis import PageSchema


@site.register_admin
class GitHubLinkAdmin(admin.LinkAdmin):
    # Set the page's menu info via the page_schema class attribute;
    # for the properties PageSchema supports, see: https://baidu.gitee.io/amis/zh-CN/components/app
    page_schema = PageSchema(label='AmisLinkAdmin', icon='fa fa-github')
    # Set the link to redirect to
    link = 'https://github.com/amisadmin/fastapi_amis_admin'
```

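An entry appears in the sidebar, but clicking it does not open the link in a new page. Instead, it opens a `frame` inside the current page, and because of the same-origin policy I get `github.com refused our connection request.`.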

The rest of the code is as follows:

```python
from fastapi import FastAPI
from fastapi_amis_admin.admin.settings import Settings
from fastapi_amis_admin.admin.site import AdminSite

from adminsite import site

# Create the FastAPI app
app = FastAPI()

# Mount the admin site
site.mount_app(app)

if __name__ == '__main__':
    import uvicorn

    uvicorn.run('main:app', debug=True, reload=True, workers=1)
```

- fastapi_amis_admin 0.2.0

- python 3.10.5

|

closed

|

2022-07-15T08:48:50Z

|

2022-07-15T08:52:20Z

|

https://github.com/amisadmin/fastapi-amis-admin/issues/33

|

[] |

myuanz

| 0

|

igorbenav/fastcrud

|

pydantic

| 75

|

Multiple nesting for joins.

|

**Is your feature request related to a problem? Please describe.**

I'm wondering whether it's currently possible to have multiple layers of nesting in joined objects.

**Describe the solution you'd like**

The ability to return multi-level nested objects such as:

```
{
    'id': 1,
    'name': 'Jakub',
    'item': {
        'id': 1,
        'price': 20,
        'shop': {
            'id': 1,
            'name': 'Adidas'
        }
    }
}
```
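To illustrate the requested output shape, here is a plain-Python sketch that nests a flat joined row. It assumes, hypothetically, that joined columns carry a `__`-separated prefix such as `item__shop__name`. This is not FastCRUD's current behaviour or API.

```python
from typing import Any, Dict


def nest_row(row: Dict[str, Any], sep: str = "__") -> Dict[str, Any]:
    """Turn {'item__shop__name': 'Adidas'} into {'item': {'shop': {'name': 'Adidas'}}}."""
    nested: Dict[str, Any] = {}
    for key, value in row.items():
        *parents, leaf = key.split(sep)
        target = nested
        for part in parents:
            # Create each intermediate level on first use.
            target = target.setdefault(part, {})
        target[leaf] = value
    return nested


flat = {
    "id": 1,
    "name": "Jakub",
    "item__id": 1,
    "item__price": 20,
    "item__shop__id": 1,
    "item__shop__name": "Adidas",
}
print(nest_row(flat))
```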

|

open

|

2024-05-06T19:55:08Z

|

2024-05-16T01:33:37Z

|

https://github.com/igorbenav/fastcrud/issues/75

|

[

"enhancement",

"FastCRUD Methods"

] |

JakNowy

| 1

|

ultralytics/ultralytics

|

pytorch

| 19,680

|

how can I run model.val() AND get the individual predictions in the results?

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/orgs/ultralytics/discussions) and found no similar questions.

### Question

I'm posting this mostly to get the AI generated response.

This works nicely for validating on a dataset and getting plots:

`results = model.val(data="dataset/data.yaml", split="test")`

However, `results` does not contain any reference to the OBB results or the individual predictions.

### Additional

_No response_

|

closed

|

2025-03-13T15:10:35Z

|

2025-03-13T18:16:49Z

|

https://github.com/ultralytics/ultralytics/issues/19680

|

[

"question"

] |

luizwritescode

| 2

|

gradio-app/gradio

|

data-visualization

| 10,663

|

Search functionality doesn't work for gr.Dataframe

|

### Describe the bug

I noticed another bug in gr.Dataframe: the search and filter functionality doesn't work for text cells.

<img width="1920" alt="Image" src="https://github.com/user-attachments/assets/8f8f180d-451f-4b1c-842d-525cefee8f37" />

<img width="1920" alt="Image" src="https://github.com/user-attachments/assets/89a99660-7b4a-4af3-8cb4-f3e105c37456" />

<img width="1920" alt="Image" src="https://github.com/user-attachments/assets/c3292e86-e5d1-4c7b-8b32-f2ee4f0d66eb" />

### Have you searched existing issues? 🔎

- [x] I have searched and found no existing issues

### Reproduction

```python
import pandas as pd
import gradio as gr


# Creating a sample dataframe
def run():
    df = pd.DataFrame({
        "A": ["Apparel & Accessories", "Home & Garden", "Health & Beauty", "Cameras & Optics", "Apparel & Accessories"],
        "B": [6, 2, 54, 3, 2],
        "C": [3, 20, 7, 3, 8],
        "D": [2, 3, 6, 2, 6],
        "E": [-1, 45, 64, 32, 23],
    })
    df = df.style.map(color_num, subset=["E"])
    return df


# Function to apply text color
def color_num(value: float) -> str:
    color = "red" if value >= 0 else "green"
    color_style = "color: {}".format(color)
    return color_style


# Displaying the styled dataframe in Gradio
with gr.Blocks() as demo:
    gr.Textbox("{}".format(gr.__version__))
    a = gr.DataFrame(show_search="search")
    b = gr.Button("run")
    b.click(run, outputs=a)

demo.launch()
```

### Screenshot

_No response_

### Logs

```shell

```

### System Info

```shell

gradio = 5.17.1

```

### Severity

Blocking usage of gradio

|

closed

|

2025-02-24T02:54:23Z

|

2025-03-10T18:14:59Z

|

https://github.com/gradio-app/gradio/issues/10663

|

[

"bug",

"💾 Dataframe"

] |

jamie0725

| 4

|

pytorch/pytorch

|

python

| 149,281

|

"Significant" Numerical differences for different tensor shapes in CUDA

|

### 🐛 Describe the bug

I get noticeably different results on CUDA depending on the shape of the input tensor (i.e., how the same operation is batched). Below is sample code to reproduce:

```

import torch

import torch.nn as nn

sequence_size = 32

env_size = 64

input_dim = 39

hidden_dim = 64

output_dim = 6

device = "cuda"

torch.backends.cudnn.deterministic = True

torch.backends.cudnn.benchmark = False

batch_input = torch.randn((sequence_size, env_size, input_dim), dtype=torch.float32, device=device)

model = nn.Linear(in_features=input_dim, out_features=output_dim, device=device)

batch_output = model(batch_input)

print("big batch together:", batch_output[0,0])

print("smaller batch:", model(batch_input[0])[0])

```

The output is

The largest difference is around 3e-4, which is much bigger than 1e-6 (roughly the float32 precision).

In my application, I have seen these differences go up to 5e-3, which seems much bigger than in previous issues and affects the overall performance of my algorithm.

Everything works fine when I do the computation on the CPU: no big differences (1e-4 to 1e-3) are noticed.
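Some background: floating-point addition is not associative. Kernels that accumulate a dot product in a different order, which can happen when a different kernel is selected for a different input shape, may therefore return slightly different results. This alone does not account for differences as large as those reported, but it explains why bit-exact agreement across shapes should not be expected. A minimal stdlib illustration in double precision (float32 differs at a coarser scale):

```python
import math

a, b, c = 0.1, 0.2, 0.3

left = (a + b) + c   # 0.6000000000000001
right = a + (b + c)  # 0.6
print(left == right)  # False: same numbers, different summation order
print(abs(left - right))
# Compare with a tolerance instead of exact equality.
print(math.isclose(left, right, rel_tol=1e-9))  # True
```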

### Versions

PyTorch version: 1.11.0

Is debug build: False

CUDA used to build PyTorch: 11.3

ROCM used to build PyTorch: N/A

OS: Ubuntu 22.04.5 LTS (x86_64)

GCC version: (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0

Clang version: Could not collect

CMake version: version 3.30.3

Libc version: glibc-2.35

Python version: 3.8.13 (default, Mar 28 2022, 11:38:47) [GCC 7.5.0] (64-bit runtime)

Python platform: Linux-6.8.0-52-generic-x86_64-with-glibc2.17

Is CUDA available: True

CUDA runtime version: 12.4.99

CUDA_MODULE_LOADING set to:

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 3070 Ti Laptop GPU

Nvidia driver version: 550.120

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 46 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 20

On-line CPU(s) list: 0-19

Vendor ID: GenuineIntel

Model name: 12th Gen Intel(R) Core(TM) i9-12900H

CPU family: 6

Model: 154

Thread(s) per core: 2

Core(s) per socket: 14

Socket(s): 1

Stepping: 3

CPU max MHz: 5000.0000

CPU min MHz: 400.0000

BogoMIPS: 5836.80

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf tsc_known_freq pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb ssbd ibrs ibpb stibp ibrs_enhanced tpr_shadow flexpriority ept vpid ept_ad fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid rdseed adx smap clflushopt clwb intel_pt sha_ni xsaveopt xsavec xgetbv1 xsaves split_lock_detect user_shstk avx_vnni dtherm ida arat pln pts hwp hwp_notify hwp_act_window hwp_epp hwp_pkg_req hfi vnmi umip pku ospke waitpkg gfni vaes vpclmulqdq tme rdpid movdiri movdir64b fsrm md_clear serialize pconfig arch_lbr ibt flush_l1d arch_capabilities

Virtualization: VT-x

L1d cache: 544 KiB (14 instances)

L1i cache: 704 KiB (14 instances)

L2 cache: 11.5 MiB (8 instances)

L3 cache: 24 MiB (1 instance)

NUMA node(s): 1

NUMA node0 CPU(s): 0-19

Vulnerability Gather data sampling: Not affected

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Mmio stale data: Not affected

Vulnerability Reg file data sampling: Mitigation; Clear Register File

Vulnerability Retbleed: Not affected

Vulnerability Spec rstack overflow: Not affected

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Enhanced / Automatic IBRS; IBPB conditional; RSB filling; PBRSB-eIBRS SW sequence; BHI BHI_DIS_S

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Versions of relevant libraries:

[pip3] numpy==1.24.3

[pip3] torch==1.11.0

[pip3] torchvision==0.12.0

[conda] blas 1.0 mkl

[conda] cudatoolkit 11.3.1 h2bc3f7f_2

[conda] ffmpeg 4.3 hf484d3e_0 pytorch

[conda] mkl 2021.4.0 h06a4308_640

[conda] mkl-service 2.4.0 py38h7f8727e_0

[conda] mkl_fft 1.3.1 py38hd3c417c_0

[conda] mkl_random 1.2.2 py38h51133e4_0

[conda] numpy 1.24.3 py38h14f4228_0

[conda] numpy-base 1.24.3 py38h31eccc5_0

[conda] pytorch 1.11.0 py3.8_cuda11.3_cudnn8.2.0_0 pytorch

[conda] pytorch-mutex 1.0 cuda pytorch

[conda] torchvision 0.12.0 py38_cu113 pytorch

|

closed

|

2025-03-16T20:22:24Z

|

2025-03-17T15:45:57Z

|

https://github.com/pytorch/pytorch/issues/149281

|

[

"module: numerical-stability",

"triaged"

] |

HaoxiangYou

| 1

|

huggingface/transformers

|

machine-learning

| 36,816

|

Gemma3 can't be fine-tuned on multi-image examples

|

### System Info

There are more details in here: https://github.com/google-deepmind/gemma/issues/193

In short, it seems that multi-image training is not implemented yet.

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [x] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

The following code works:

```python
import io
from typing import Any, cast

import requests
from transformers import AutoProcessor, Gemma3ForConditionalGeneration
from transformers import TrainingArguments
from trl import SFTTrainer, SFTConfig
from peft import LoraConfig
from datasets import IterableDataset, Features
import datasets
from PIL import Image
import numpy as np

HF_TOKEN = "..."


def load_image(url):
    response = requests.get(url)
    image = Image.open(io.BytesIO(response.content))
    return image


def image_from_bytes(image_bytes):
    return Image.open(io.BytesIO(image_bytes))


def main():
    model_id = "google/gemma-3-4b-it"
    model = Gemma3ForConditionalGeneration.from_pretrained(
        model_id, device_map="auto", token=HF_TOKEN
    )
    model.config.use_cache = False  # Disable caching for training

    processor = AutoProcessor.from_pretrained(model_id, padding_side="right", token=HF_TOKEN)
    processor.tokenizer.pad_token = processor.tokenizer.eos_token  # Use eos token as pad token
    processor.tokenizer.padding_side = "right"

    def train_iterable_gen():
        N_IMAGES = 1
        image = load_image("https://cdn.britannica.com/61/93061-050-99147DCE/Statue-of-Liberty-Island-New-York-Bay.jpg").resize((896, 896))
        images = np.array([image] * N_IMAGES)
        print("IMAGES SHAPE", images.shape)
        yield {
            "images": images,
            "messages": [
                {
                    "role": "user",
                    "content": [{"type": "image"} for _ in range(images.shape[0])]
                },
                {
                    "role": "assistant",
                    "content": [{"type": "text", "text": "duck"}]
                }
            ]
        }

    train_ds = IterableDataset.from_generator(
        train_iterable_gen,
        features=Features({
            'images': [datasets.Image(mode=None, decode=True, id=None)],
            'messages': [{'content': [{'text': datasets.Value(dtype='string', id=None), 'type': datasets.Value(dtype='string', id=None)}], 'role': datasets.Value(dtype='string', id=None)}]
        })
    )

    def collate_fn(examples):
        # Get the texts and images, and apply the chat template
        texts = [processor.apply_chat_template(example["messages"], tokenize=False) for example in examples]
        images = [example["images"] for example in examples]

        # Tokenize the texts and process the images
        batch = processor(text=texts, images=images, return_tensors="pt", padding=True)
        print("collate_fn pixel_values", batch["pixel_values"].shape)
        print("collate_fn input_ids", batch["input_ids"].shape)

        # The labels are the input_ids, and we mask the padding tokens in the loss computation
        labels = batch["input_ids"].clone()
        labels[labels == processor.tokenizer.pad_token_id] = -100
        labels[labels == processor.image_token_id] = -100
        batch["labels"] = labels
        return batch

    # Set up LoRA configuration for causal language modeling
    lora_config = LoraConfig(
        r=8,
        lora_alpha=16,