| repo_name (string, length 9 to 75) | topic (string, 30 classes) | issue_number (int64, 1 to 203k) | title (string, length 1 to 976) | body (string, length 0 to 254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, length 38 to 105) | labels (list, length 0 to 9) | user_login (string, length 1 to 39) | comments_count (int64, 0 to 452) |
|---|---|---|---|---|---|---|---|---|---|---|---|

ymcui/Chinese-LLaMA-Alpaca

|

nlp

| 274

|

Cannot merge weights trained with alpaca-lora, whether the tokenizer comes from llama-7b-hf or is copied over from chinese_llama_plus_lora_7b

|

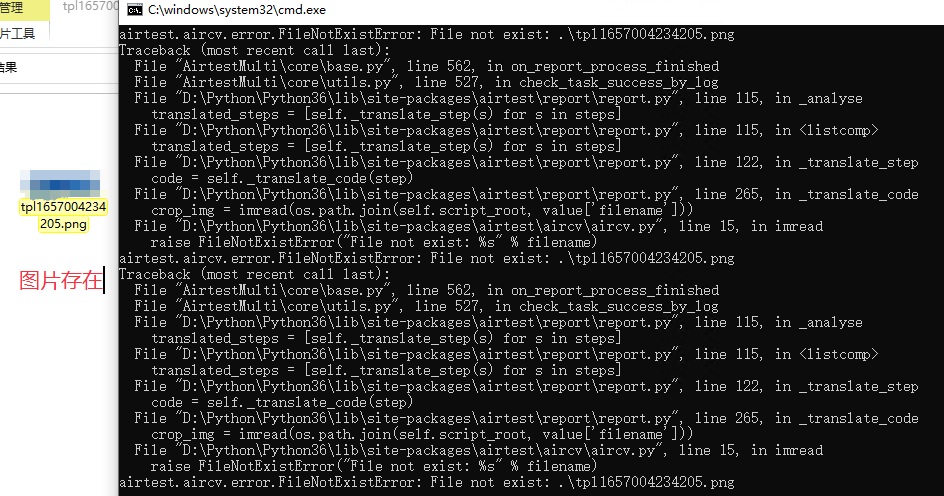

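### Detailed description of the problem

*Cannot merge weights trained with alpaca-lora, whether the tokenizer comes from llama-7b-hf or is copied over from chinese_llama_plus_lora_7b.*

### Screenshots or logs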

<img width="1128" alt="image" src="https://user-images.githubusercontent.com/6939585/236842494-58ecb3f3-63a7-4022-82c2-fb6c29991a77.png">

<img width="349" alt="image" src="https://user-images.githubusercontent.com/6939585/236842585-5b17bdb0-8098-4e9e-8c7b-0d2b188c9114.png">

### Required checks

- [ ] Which model has the problem: LLaMA

- [ ] Issue type:

- Model conversion and merging

|

closed

|

2023-05-08T13:56:23Z

|

2023-05-21T22:02:19Z

|

https://github.com/ymcui/Chinese-LLaMA-Alpaca/issues/274

|

[

"stale"

] |

autoexpect

| 11

|

PaddlePaddle/PaddleHub

|

nlp

| 1,570

|

About the Pneumonia-CT-LKM-PP model: how can I understand its structure and fine-tune it?

|

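Recently I have also been working on the "PaddleHub COVID-19 CT image analysis" project, which uses a prediction model, Pneumonia_CT_LKM_PP, and I have a few questions I would like to ask:

1. I want to understand the structure of this prediction model. How should I go about it?

2. I want to fine-tune this pretrained model on my own dataset to see whether it can detect more lesions. How should I do that?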

|

open

|

2021-08-13T01:46:37Z

|

2021-08-16T01:51:44Z

|

https://github.com/PaddlePaddle/PaddleHub/issues/1570

|

[

"cv"

] |

LCreatorGH

| 1

|

aiortc/aiortc

|

asyncio

| 1,083

|

Bug in filexfer example: filename should be fp?

|

I must admit I didn't try to run the code. Just random stranger on the internet who was reading the example. It appears to me that the 'filename' parameter in run_answer on line 34 should be 'fp'. A) because main() passes the file pointer, not the name, and B) because fp is referenced on line 48, which without having tried it myself I assume will cause an exception.

https://github.com/aiortc/aiortc/blob/e9c13eab915ddc27f365356ed9ded0585b9a1bb7/examples/datachannel-filexfer/filexfer.py#L34C37-L34C45

|

closed

|

2024-04-12T14:02:28Z

|

2024-05-21T06:52:40Z

|

https://github.com/aiortc/aiortc/issues/1083

|

[] |

angererc

| 0

|

InstaPy/InstaPy

|

automation

| 6,332

|

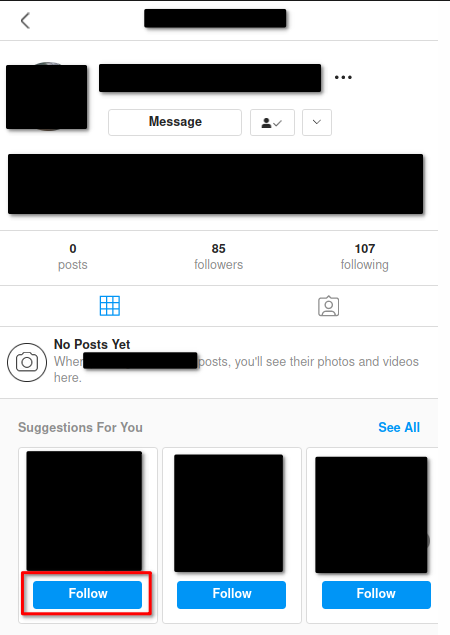

Explanation of the "Unable to unfollow if the user has no posts" issue

|

### Errors related:

`Already unfollowed xxx! or a private user that rejected your req`

Even though the user you are unfollowing is definitely followed by you, the problem only happens if the user does not have any post and instagram will show follow suggestions for you instead.

### Explanation

During unfollowing, Instapy will run get_following_status() to check whether the user profile is followed by you, followed you (Follow back button), not followed by you, etc...

The problem lies in the Follow button XPath (follow_button_XP), which is currently:

```

"follow_button_XP": "//button[text()='Following' or \

text()='Requested' or \

text()='Follow' or \

text()='Follow Back' or \

text()='Unblock' and not(ancestor::*/@role = 'presentation')]"

```

If the user does not have any posts, Instagram shows follow suggestions instead, so the XPath selects the first Follow button under the follow suggestions (highlighted in red).

Hence, get_following_status() will return "Follow" and fall into this elif, which is incorrect.

```

elif following_status in ["Follow", "Follow Back"]:
    logger.info(
        "--> Already unfollowed '{}'! or a private user that "
        "rejected your req".format(person)
    )
    post_unfollow_cleanup(
        ["successful", "uncertain"],
        username,
        person,
        relationship_data,
        person_id,
        logger,
        logfolder,
    )
    return False, "already unfollowed"

```

### Possible Solutions

I'm not very experienced with web automation, but the solution I can think of is to use more specific XPaths for each following_status, with better if/else handling of the different scenarios.

I'm aware that the downside of more specific XPaths is that they would need updating more often.

#### I'm running the latest master of Instapy on Ubuntu 21.04 on Firefox 92

Please correct me if I'm wrong. Thanks

|

open

|

2021-09-29T14:28:40Z

|

2021-10-24T20:29:27Z

|

https://github.com/InstaPy/InstaPy/issues/6332

|

[] |

Benjaminlooi

| 1

|

OpenInterpreter/open-interpreter

|

python

| 1,430

|

import statement missing in a module

|

### Describe the bug

/envs/openinterpreter/lib/python3.9/site-packages/interpreter/terminal_interface/validate_llm_settings.py

In this file, there is a missing import statement.

The line which needs to be added is:

from interpreter import interpreter

And then it runs just fine.

### Reproduce

1. just launch openinterpreter, and it will produce an error

### Expected behavior

To run properly

### Screenshots

_No response_

### Open Interpreter version

0.3.9

### Python version

3.9.19

### Operating System name and version

Debian GNU/Linux 11 (bullseye)

### Additional context

_No response_

|

closed

|

2024-08-27T14:20:59Z

|

2024-08-27T15:13:21Z

|

https://github.com/OpenInterpreter/open-interpreter/issues/1430

|

[] |

S-Vaisanen

| 0

|

jofpin/trape

|

flask

| 50

|

cannot track redirect

|

I have set Google as the attack perimeter. When I open the Google link from there and type any website into the search bar, a prompt pops up asking whether I want to leave or stay, and nothing else happens.

|

closed

|

2018-08-24T05:18:44Z

|

2018-11-24T01:56:07Z

|

https://github.com/jofpin/trape/issues/50

|

[] |

asciiterminal

| 2

|

ipython/ipython

|

data-science

| 14,041

|

Update type(...) to import the Type from its canonical location

|

I missed that in the previous PR but those should likely be :

```

from types import MethodDescriptorType

from types import ModuleType

```
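As a quick illustration of the suggestion above: the standard-library `types` module exports these names directly, so `isinstance` checks can use them instead of deriving the types with `type(...)`. This is only a minimal sketch; the helper names `is_method_descriptor` and `is_module` are made up for the example and are not IPython functions.

```python
import sys
from types import MethodDescriptorType, ModuleType


def is_method_descriptor(obj):
    """True for C-level method descriptors such as str.join."""
    return isinstance(obj, MethodDescriptorType)


def is_module(obj):
    """True for module objects such as sys."""
    return isinstance(obj, ModuleType)


print(is_method_descriptor(str.join))
print(is_module(sys))
```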

_Originally posted by @Carreau in https://github.com/ipython/ipython/pull/14029#discussion_r1180062854_

|

closed

|

2023-04-28T08:00:08Z

|

2023-06-02T08:37:34Z

|

https://github.com/ipython/ipython/issues/14041

|

[

"good first issue"

] |

Carreau

| 0

|

piskvorky/gensim

|

machine-learning

| 3,490

|

Compiled extensions are very slow when built with Cython 3.0.0

|

We should produce a minimum reproducible example and show the Cython guys

|

open

|

2023-08-23T11:24:48Z

|

2024-04-10T15:53:55Z

|

https://github.com/piskvorky/gensim/issues/3490

|

[] |

mpenkov

| 1

|

gunthercox/ChatterBot

|

machine-learning

| 2,256

|

mathparse and mathematical evaluation

|

**Question:** Is the code eighteen hundred and twenty-one?

**Result:** a fatal error

**Explanation:** in "eighteen" (and "sixteen", "seventeen", and so on) ChatterBot sees "eight" first, turning "eighteen" into "8een", which causes the keyword error.

**Solution I found:** in the file `mathwords.py` (of the library `mathparse`), add a space `' '` after each number that causes the problem (four, six, seven, eight, nine) to force ChatterBot to see the difference between eight and eighteen.
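The behaviour described above can be reproduced in isolation. The sketch below assumes the cause is plain substring replacement of number words, which is a guess at the mechanism and not a copy of `mathparse` internals. `NUMBER_WORDS`, `naive_replace` and `whole_word_replace` are hypothetical names used only for illustration. The second function shows one alternative to padding words with spaces: match whole words only, trying longer words first.

```python
import re

# Hypothetical word table, not mathparse's actual data.
NUMBER_WORDS = {"six": 6, "eight": 8, "sixteen": 16, "eighteen": 18}


def naive_replace(text):
    """Replace number words as plain substrings, in table order."""
    for word, value in NUMBER_WORDS.items():
        text = text.replace(word, str(value))
    return text


def whole_word_replace(text):
    """Replace only whole words, trying longer words before shorter ones."""
    words = sorted(NUMBER_WORDS, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, words)) + r")\b")
    return pattern.sub(lambda m: str(NUMBER_WORDS[m.group(1)]), text)


print(naive_replace("eighteen"))       # the short word wins and leaves "een" behind
print(whole_word_replace("eighteen"))  # the whole word is matched
```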

I haven't investigated further for other languages or other mathematical evaluations.

Hope it will help whoever reads this.

Cheers

|

open

|

2022-06-30T14:19:20Z

|

2022-07-01T08:52:35Z

|

https://github.com/gunthercox/ChatterBot/issues/2256

|

[] |

AskellAytch

| 0

|

taverntesting/tavern

|

pytest

| 315

|

Integration in pytest broken

|

I've noticed that with the recent version `v0.26.1` the integration in pytest seems broken. The command line parameters `--tavern*` are no longer present.

When using `v0.22.0` the command line parameters in pytest are present

```

$ ./env/bin/pytest --help | grep tavern

--tavern-global-cfg=TAVERN_GLOBAL_CFG [TAVERN_GLOBAL_CFG ...]

--tavern-http-backend=TAVERN_HTTP_BACKEND

--tavern-mqtt-backend=TAVERN_MQTT_BACKEND

--tavern-strict={body,headers,redirect_query_params} [{body,headers,redirect_query_params} ...]

--tavern-beta-new-traceback

tavern-global-cfg (linelist) One or more global configuration files to include

tavern-http-backend (string) Which http backend to use

tavern-mqtt-backend (string) Which mqtt backend to use

tavern-strict (args) Default response matching strictness

tavern-beta-new-traceback (bool) Use new traceback style (beta)

```

When using `v0.26.1` the command line parameters are no longer present

```

$ ./env/bin/pytest --help | grep tavern

$

```

|

closed

|

2019-03-18T14:10:21Z

|

2019-04-10T16:27:52Z

|

https://github.com/taverntesting/tavern/issues/315

|

[] |

flazzarini

| 2

|

huggingface/text-generation-inference

|

nlp

| 2,263

|

Documentation about default values of model parameters

|

### Feature request

In the documentation, there is not enough information about the default values TGI applies when a client request does not contain parameters such as `temperature`, `top_p`, `presence_frequency`, etc.

For example, what value would TGI use if

- `temperature` = `null`

- `temperature` is not at all present in client request.

This would help users adjust their client code when migrating to or from other serving frameworks.

As far as I could tell from the code base, I was unable to find where this is done.

### Motivation

Documentation for default model parameters

### Your contribution

I can create a PR if someone can point me to the right place in the code base

|

open

|

2024-07-19T16:39:49Z

|

2024-07-30T07:53:15Z

|

https://github.com/huggingface/text-generation-inference/issues/2263

|

[] |

mohittalele

| 3

|

Anjok07/ultimatevocalremovergui

|

pytorch

| 1,426

|

Please Help

|

I just updated my app and I keep getting this error when I try to run this.

Last Error Received:

Process: Ensemble Mode

If this error persists, please contact the developers with the error details.

Raw Error Details:

RuntimeError: "Error opening 'F:\\AI Voice\\Songs\\1_1_[MV] PENTAGON(펜타곤) _ Like This_(Vocals)_(Vocals).wav': System error."

Traceback Error: "

File "UVR.py", line 6654, in process_start

File "UVR.py", line 794, in ensemble_outputs

File "lib_v5\spec_utils.py", line 590, in ensemble_inputs

File "soundfile.py", line 430, in write

File "soundfile.py", line 740, in __init__

File "soundfile.py", line 1264, in _open

File "soundfile.py", line 1455, in _error_check

"

Error Time Stamp [2024-06-26 19:29:18]

Full Application Settings:

vr_model: 6_HP-Karaoke-UVR

aggression_setting: 10

window_size: 512

mdx_segment_size: 256

batch_size: Default

crop_size: 256

is_tta: False

is_output_image: False

is_post_process: False

is_high_end_process: False

post_process_threshold: 0.2

vr_voc_inst_secondary_model: No Model Selected

vr_other_secondary_model: No Model Selected

vr_bass_secondary_model: No Model Selected

vr_drums_secondary_model: No Model Selected

vr_is_secondary_model_activate: False

vr_voc_inst_secondary_model_scale: 0.9

vr_other_secondary_model_scale: 0.7

vr_bass_secondary_model_scale: 0.5

vr_drums_secondary_model_scale: 0.5

demucs_model: Choose Model

segment: Default

overlap: 0.25

overlap_mdx: Default

overlap_mdx23: 8

shifts: 2

chunks_demucs: Auto

margin_demucs: 44100

is_chunk_demucs: False

is_chunk_mdxnet: False

is_primary_stem_only_Demucs: False

is_secondary_stem_only_Demucs: False

is_split_mode: True

is_demucs_combine_stems: True

is_mdx23_combine_stems: True

demucs_voc_inst_secondary_model: No Model Selected

demucs_other_secondary_model: No Model Selected

demucs_bass_secondary_model: No Model Selected

demucs_drums_secondary_model: No Model Selected

demucs_is_secondary_model_activate: False

demucs_voc_inst_secondary_model_scale: 0.9

demucs_other_secondary_model_scale: 0.7

demucs_bass_secondary_model_scale: 0.5

demucs_drums_secondary_model_scale: 0.5

demucs_pre_proc_model: No Model Selected

is_demucs_pre_proc_model_activate: False

is_demucs_pre_proc_model_inst_mix: False

mdx_net_model: UVR-MDX-NET Karaoke 2

chunks: Auto

margin: 44100

compensate: Auto

denoise_option: None

is_match_frequency_pitch: True

phase_option: Automatic

phase_shifts: None

is_save_align: False

is_match_silence: True

is_spec_match: False

is_mdx_c_seg_def: False

is_invert_spec: False

is_deverb_vocals: False

deverb_vocal_opt: Main Vocals Only

voc_split_save_opt: Lead Only

is_mixer_mode: False

mdx_batch_size: Default

mdx_voc_inst_secondary_model: No Model Selected

mdx_other_secondary_model: No Model Selected

mdx_bass_secondary_model: No Model Selected

mdx_drums_secondary_model: No Model Selected

mdx_is_secondary_model_activate: False

mdx_voc_inst_secondary_model_scale: 0.9

mdx_other_secondary_model_scale: 0.7

mdx_bass_secondary_model_scale: 0.5

mdx_drums_secondary_model_scale: 0.5

is_save_all_outputs_ensemble: True

is_append_ensemble_name: False

chosen_audio_tool: Manual Ensemble

choose_algorithm: Min Spec

time_stretch_rate: 2.0

pitch_rate: 2.0

is_time_correction: True

is_gpu_conversion: True

is_primary_stem_only: False

is_secondary_stem_only: False

is_testing_audio: False

is_auto_update_model_params: True

is_add_model_name: False

is_accept_any_input: False

is_task_complete: False

is_normalization: False

is_use_opencl: False

is_wav_ensemble: False

is_create_model_folder: False

mp3_bit_set: 320k

semitone_shift: 0

save_format: WAV

wav_type_set: PCM_16

device_set: Default

help_hints_var: False

set_vocal_splitter: No Model Selected

is_set_vocal_splitter: False

is_save_inst_set_vocal_splitter: False

model_sample_mode: False

model_sample_mode_duration: 30

demucs_stems: All Stems

mdx_stems: All Stems

|

open

|

2024-06-26T23:30:24Z

|

2024-06-26T23:30:24Z

|

https://github.com/Anjok07/ultimatevocalremovergui/issues/1426

|

[] |

hollywoodemma

| 0

|

replicate/cog

|

tensorflow

| 1,790

|

Using the Predictor directly results in FieldInfo errors

|

`cog==0.9.6`

`pydantic==1.10.17`

`python==3.10.0`

Hello,

when writing non end-to-end tests for my cog predictor, in particular tests that instantiate then call the Predictor predict method directly, I ran into errors like

`TypeError: '<' not supported between instances of 'FieldInfo' and 'FieldInfo'`.

This is because unlike using the cog CLI or making a HTTP request to a running cog container, when using the `Predictor.predict` method directly, we're missing the arguments processing layer from cog.

So the default args remain pydantic FieldInfo objects (ie the return type of `cog.Input`) and virtually any basic operation on them will fail.

One possible workaround is the one I wrote below, but is there a better way to achieve this?

Thanks,

```python

import inspect
from typing import Any

from pydantic.fields import FieldInfo

# `Predictor` and `logger` are assumed to come from the project under test.

PREDICTOR_RETURN_TYPE = inspect.signature(Predictor.predict).return_annotation


class TestPredictor(Predictor):
    """A class used only in non end-to-end tests, i.e. those that call directly
    the Predictor. It is required because in the absence of the cog input processing layer
    (that we get using the cog CLI), arguments to `predict()` that are not passed explicitly remain `FieldInfo` objects,
    meaning any basic operation on them will raise an error."""

    def predict(self, **kwargs: Any) -> PREDICTOR_RETURN_TYPE:
        """Processes the input (see main docstring) then call superclass' predict method.

        Returns:
            PREDICTOR_RETURN_TYPE: The output of the superclass `predict` method.
        """
        # replace any FieldInfo passed explicitly with its default value
        for kwarg_name, kwarg in kwargs.items():
            kwargs[kwarg_name] = kwarg.default if isinstance(kwarg, FieldInfo) else kwarg
        # pass explicitly all other params
        all_predict_params = inspect.signature(Predictor.predict).parameters
        for param_name, param in all_predict_params.items():
            if param_name != "self" and param_name not in kwargs:
                kwargs[param_name] = (
                    param.default.default if isinstance(param.default, FieldInfo) else param.default
                )
        logger.info(f"Predicting with {kwargs}")
        return super().predict(**kwargs)

```

|

open

|

2024-07-08T10:01:49Z

|

2024-07-08T17:00:28Z

|

https://github.com/replicate/cog/issues/1790

|

[] |

Clement-Lelievre

| 2

|

huggingface/datasets

|

pandas

| 6,663

|

`write_examples_on_file` and `write_batch` are broken in `ArrowWriter`

|

### Describe the bug

`write_examples_on_file` and `write_batch` are broken in `ArrowWriter` since #6636. The order between the columns and the schema is not preserved anymore. So these functions don't work anymore unless the order happens to align well.

### Steps to reproduce the bug

Try to do `write_batch` with anything that has many columns, and it's likely to break.

### Expected behavior

I expect these functions to work, instead of it trying to cast a column to its incorrect type.
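To illustrate the failure mode with plain Python (no Arrow involved): if columns are paired with schema fields by position and not by name, a batch whose keys arrive in a different order gets matched against the wrong types. The sketch below looks columns up by name before pairing. The function `align_columns` and the sample schema are hypothetical and are not the fix applied in `datasets`.

```python
def align_columns(batch, schema_names):
    """Return the batch's columns in the order given by schema_names."""
    missing = [name for name in schema_names if name not in batch]
    if missing:
        raise KeyError(f"columns missing from batch: {missing}")
    # Look columns up by name so the incoming key order does not matter.
    return {name: batch[name] for name in schema_names}


# Hypothetical schema order and a batch whose keys arrive in another order.
schema_names = ["id", "text", "label"]
batch = {"label": [0, 1], "id": [10, 11], "text": ["a", "b"]}

aligned = align_columns(batch, schema_names)
print(list(aligned))
```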

### Environment info

- `datasets` version: 2.17.0

- Platform: Linux-5.15.0-1040-aws-x86_64-with-glibc2.35

- Python version: 3.10.13

- `huggingface_hub` version: 0.19.4

- PyArrow version: 15.0.0

- Pandas version: 2.2.0

- `fsspec` version: 2023.10.0

|

closed

|

2024-02-15T01:43:27Z

|

2024-02-16T09:25:00Z

|

https://github.com/huggingface/datasets/issues/6663

|

[] |

bryant1410

| 3

|

dfm/corner.py

|

data-visualization

| 159

|

Error on import, pandas incompatibility?

|

Hello,

Today I noticed that I get an error when importing corner:

`import corner`

returns

```python

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/Users/mdecleir/opt/anaconda3/envs/astroconda/lib/python3.7/site-packages/corner/__init__.py", line 6, in <module>

from .corner import corner

File "/Users/mdecleir/opt/anaconda3/envs/astroconda/lib/python3.7/site-packages/corner/corner.py", line 12, in <module>

from .arviz_corner import arviz_corner

File "/Users/mdecleir/opt/anaconda3/envs/astroconda/lib/python3.7/site-packages/corner/arviz_corner.py", line 9, in <module>

from arviz.data import convert_to_dataset

File "/Users/mdecleir/opt/anaconda3/envs/astroconda/lib/python3.7/site-packages/arviz/__init__.py", line 32, in <module>

from .data import *

File "/Users/mdecleir/opt/anaconda3/envs/astroconda/lib/python3.7/site-packages/arviz/data/__init__.py", line 2, in <module>

from .base import CoordSpec, DimSpec, dict_to_dataset, numpy_to_data_array

File "/Users/mdecleir/opt/anaconda3/envs/astroconda/lib/python3.7/site-packages/arviz/data/base.py", line 10, in <module>

import xarray as xr

File "/Users/mdecleir/opt/anaconda3/envs/astroconda/lib/python3.7/site-packages/xarray/__init__.py", line 12, in <module>

from .core.combine import concat, auto_combine

File "/Users/mdecleir/opt/anaconda3/envs/astroconda/lib/python3.7/site-packages/xarray/core/combine.py", line 11, in <module>

from .merge import merge

File "/Users/mdecleir/opt/anaconda3/envs/astroconda/lib/python3.7/site-packages/xarray/core/merge.py", line 10, in <module>

PANDAS_TYPES = (pd.Series, pd.DataFrame, pd.Panel)

File "/Users/mdecleir/opt/anaconda3/envs/astroconda/lib/python3.7/site-packages/pandas/__init__.py", line 244, in __getattr__

raise AttributeError(f"module 'pandas' has no attribute '{name}'")

AttributeError: module 'pandas' has no attribute 'Panel'

```

I did not see this error before, and I am wondering whether a recent update to pandas might cause this problem?

|

closed

|

2021-04-06T16:11:52Z

|

2021-04-06T18:49:19Z

|

https://github.com/dfm/corner.py/issues/159

|

[] |

mdecleir

| 5

|

521xueweihan/HelloGitHub

|

python

| 2,667

|

[Open-source self-recommendation] 🎉 Vue TSX Admin, a new direction for building admin back-office systems

|

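## Recommended project

<!-- This is the entry point for recommending projects to the HelloGitHub monthly. Self-recommendations and recommendations of open-source projects are welcome. The only requirement: please introduce the project following the prompts below. -->

<!-- Click "Preview" above to see the submitted content right away -->

<!-- Only open-source projects on GitHub are accepted; please fill in the GitHub project URL -->

- Project URL: https://github.com/manyuemeiquqi/vue-tsx-admin

<!-- Please choose from (C, C#, C++, CSS, Go, Java, JS, Kotlin, Objective-C, PHP, Python, Ruby, Rust, Swift, Other, Books, Machine Learning) -->

- Category: Vue Admin

<!-- Describe what it does in about 20 characters, like an article title that is clear at a glance -->

- Project title: vue-tsx-admin is a free, open-source admin back-office module that helps you quickly set up an admin front-end project.

<!-- What the project is, what it can be used for, what its features are or which pain points it solves, which scenarios it suits, and what beginners can learn from it. Length: 32-256 characters -->

- Project description: It uses the latest front-end stack and is developed entirely with Vue3 + TSX. It provides an out-of-the-box admin front-end solution with built-in i18n internationalization, configurable layout, theme color customization, and permission checks, and it distills typical business models.

<!-- What makes it stand out? What distinguishes it from similar projects? -->

- Highlights:

1. Developed entirely in JSX, which suits developers who like the JSX style

2. Provides an out-of-the-box admin front-end solution

3. Addresses pain points of template-based admin development, such as redundant custom table columns, the difficulty of building declarative modals in a single file, and having to keep track of both the script and the template when extracting components

4. Few open-source admin templates use this development approach

- Sample code: (optional)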

```js

const handleError = () => {

Modal.error({

title: () => <div>error</div>,

content: () => (

<p>

<span>Message can be error</span>

<IconErro />

</p>

)

})

}

```

- Planned updates:

- Bug fixes; the project only needs to work out of the box, without piling on too many features

|

closed

|

2024-01-02T04:59:11Z

|

2024-01-23T07:47:59Z

|

https://github.com/521xueweihan/HelloGitHub/issues/2667

|

[] |

manyuemeiquqi

| 2

|

junyanz/pytorch-CycleGAN-and-pix2pix

|

computer-vision

| 735

|

optimal parameters for synthetic depth image to real depth image

|

Hi,

I am planning to train a cycleGAN model to translate synthetic depth images to real depth images from my depth-camera. I do not have any idea how to choose the optimal parameters. My synthetic and real images have size 480x640. I am planning to resize them to 512x512 -> load_size=512 and I will use crop_size=512.

I have following questions:

Is it a good idea to use CycleGAN for this problem?

Should I use a different crop_size?

Should I change the network architecture?

Should I change the default parameters for netD and netG?

Here are example synthetic and real depth images from my dataset:

<img width="514" alt="Bildschirmfoto 2019-08-19 um 16 13 58" src="https://user-images.githubusercontent.com/45715708/63272552-8e52f980-c29c-11e9-951c-4c986d76f0c2.png">

best regards

|

open

|

2019-08-19T14:16:42Z

|

2019-09-03T19:13:02Z

|

https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/735

|

[] |

frk1993

| 3

|

graphql-python/graphql-core

|

graphql

| 139

|

Rename project/repository

|

hey there! This is a wonderful repository, and a really great reference implementation!

Here's why I think that this repository should be renamed to `graphql` or `graphql-py` instead of `graphql-core`

The current name sort of gives us a feel that it's not for the end user.

It gives us a feel that this project is only for other graphql libraries to build abstractions on top of.

In the GraphQL ecosystem, you'd generally build out your whole schema with graphql-js; in fact, it was the only way at the start. It was only after the reference implementation that we got Apollo Server and Nexus.

In the Python ecosystem, it seems to be the opposite: graphql-core is relatively newer than graphene and has a lower adoption rate.

Also, I think it would help to rename the PyPI project to `graphql` instead of `graphql-core`.

This is my opinion, what do you think?

|

closed

|

2021-09-08T07:21:04Z

|

2021-09-19T11:03:19Z

|

https://github.com/graphql-python/graphql-core/issues/139

|

[] |

aryaniyaps

| 1

|

HumanSignal/labelImg

|

deep-learning

| 131

|

PyQt4: QImage.fromData(self.imageData) always returns a null image

|

labelImg always reports 'Make sure D:\sleep\r4_hyxx_2016.6.2_yw_6\JPEGImages\000001.jpg is a valid image file.'

I'm sure it's a valid image file.

I tried this

`filename='D:\\sleep\\r4_hyxx_2016.6.2_yw_6\\JPEGImages\\000001.jpg'

f=open(filename,'rb')

imageData=f.read()

fout=open('testout.jpg','wb')

fout.write(imageData)

fout.close()

image= QImage.fromData(imageData)

image.isNull()

`

This shows that the JPG file is read and written correctly: I get a correct 'testout.jpg'.

But image.isNull() always returns true, so I get an empty QImage.

windows10,

python 2.7

sig-4.19.3,

PyQt4-4.11.4

|

closed

|

2017-07-29T07:46:19Z

|

2018-06-23T18:25:57Z

|

https://github.com/HumanSignal/labelImg/issues/131

|

[] |

huqiaoping

| 2

|

Neoteroi/BlackSheep

|

asyncio

| 400

|

TestClient Example from Documentation and from GIT/BlackSheep-Examples fails

|

I reproduced all the steps by copying and pasting from the official documentation at https://www.neoteroi.dev/blacksheep/testing/ and **can't run the test example**. I get various errors. I believe the official documentation is outdated and can't be used for studying.

So I downloaded the **complete example from https://github.com/Neoteroi/BlackSheep-Examples/tree/main/testing-api**

and tried to run it. It **fails too**. Please find the enclosed screenshot. I tried changing the versions of BlackSheep (1.2.18 / 2.0a9) and pydantic (1.10.10 / 2.0.2) in requirements.txt, but it did not help. The tests always fail, and depending on the selected package versions I get additional errors.

**Please check whether the example at https://github.com/Neoteroi/BlackSheep-Examples/tree/main/testing-api can be fixed to work with some version of BlackSheep.**

It would also be great if you could update the official documentation: not only the article about testing but many other pages too, as they can't be followed without getting errors.

Thanx.

pip list

Package Version

------------------ --------

annotated-types 0.5.0

blacksheep 2.0a9

certifi 2023.5.7

charset-normalizer 3.1.0

click 8.1.5

colorama 0.4.6

essentials 1.1.5

essentials-openapi 1.0.7

guardpost 1.0.2

h11 0.14.0

httptools 0.6.0

idna 3.4

iniconfig 2.0.0

itsdangerous 2.1.2

Jinja2 3.1.2

MarkupSafe 2.1.3

packaging 23.1

pip 22.3.1

pluggy 1.2.0

pydantic 2.0.2

pydantic_core 2.1.2

pytest 7.4.0

pytest-asyncio 0.21.1

python-dateutil 2.8.2

PyYAML 6.0

requests 2.31.0

rodi 2.0.2

setuptools 65.5.1

six 1.16.0

typing_extensions 4.7.1

urllib3 2.0.3

uvicorn 0.23.0

wheel 0.38.4

|

closed

|

2023-07-16T13:51:57Z

|

2023-11-26T09:20:37Z

|

https://github.com/Neoteroi/BlackSheep/issues/400

|

[] |

xxxxxxbox

| 1

|

robotframework/robotframework

|

automation

| 5,340

|

BDD prefixes with same beginning are not handled properly

|

**Description**:

When defining custom language prefixes for BDD steps in the Robot Framework (for example, in a French language module), I observed that adding multiple prefixes with overlapping substrings (e.g., "Sachant que" and "Sachant") leads to intermittent failures in matching the intended BDD step.

**Steps to Reproduce:**

Create a custom language (e.g., a French language class) inheriting from Language.

Define given_prefixes with overlapping entries such as:

`given_prefixes = ['Étant donné', 'Soit', 'Sachant que', 'Sachant']`

Run tests that rely on detecting BDD step prefixes.

Occasionally, tests fail with errors indicating that the keyword "Sachant que" cannot be found, even though it is defined.

Expected Behavior:

The regex built from the BDD prefixes should consistently match the longer, more specific prefix ("Sachant que") instead of prematurely matching the shorter prefix ("Sachant").

**Observed Behavior:**

Due to the unordered nature of Python sets, the bdd_prefixes property (which is constructed using a set) produces a regex with the alternatives in an arbitrary order. This sometimes results in the shorter prefix ("Sachant") matching before the longer one ("Sachant que"), causing intermittent failures.

**Potential Impact:**

Unpredictable test failures when multiple BDD prefixes with overlapping substrings are used.

Difficulty in debugging issues due to the non-deterministic nature of the problem.

Suggested Fix:

Sort the BDD prefixes by descending length before constructing the regular expression. For example:

```

@property
def bdd_prefix_regexp(self):
    if not self._bdd_prefix_regexp:
        # Sort prefixes by descending length so that the longest ones are matched first
        prefixes = sorted(self.bdd_prefixes, key=len, reverse=True)
        pattern = '|'.join(prefix.replace(' ', r'\s') for prefix in prefixes).lower()
        self._bdd_prefix_regexp = re.compile(rf'({pattern})\s', re.IGNORECASE)
    return self._bdd_prefix_regexp

```

This change would ensure that longer, more specific prefixes are matched before their shorter substrings, eliminating the intermittent failure.
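The effect of alternation order can be checked outside Robot Framework with a small standalone script. It reuses the pattern construction from the snippet above; `build_regexp` and the sample step text are made up for this demonstration and are not part of Robot Framework.

```python
import re


def build_regexp(prefixes):
    """Build a prefix regex the same way as the suggested fix, in the given order."""
    pattern = "|".join(prefix.replace(" ", r"\s") for prefix in prefixes).lower()
    return re.compile(rf"({pattern})\s", re.IGNORECASE)


step = "Sachant que the user is logged in"

# Shorter prefix listed first: the regex engine takes the first alternative that matches.
short_first = build_regexp(["Sachant", "Sachant que"])

# Prefixes sorted by descending length, as proposed.
long_first = build_regexp(sorted(["Sachant", "Sachant que"], key=len, reverse=True))

for name, regexp in [("short first", short_first), ("long first", long_first)]:
    match = regexp.match(step)
    print(name, "->", repr(match.group(1)), "| rest:", repr(step[match.end():]))
```

With the shorter prefix first, the remainder still starts with "que", so the keyword lookup would be attempted with the wrong name.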

**Environment:**

Robot Framework version: 7.1.1

Python version: 3.11.5

**Additional Notes:**

This bug appears to be caused by the unordered nature of sets in Python, which is used in the bdd_prefixes property to combine all prefixes. The issue might not manifest when there are only three prefixes or when there is no overlapping substring scenario.

I hope this detailed report helps in reproducing and resolving the issue.

|

closed

|

2025-02-16T22:29:14Z

|

2025-03-07T10:16:10Z

|

https://github.com/robotframework/robotframework/issues/5340

|

[

"priority: medium",

"acknowledge",

"effort: small"

] |

orenault

| 1

|

developmentseed/lonboard

|

data-visualization

| 198

|

Clean up memory after closing Map or stopping/restarting the python process

|

When visualizing a large dataset, the browser tab (or GPU memory) can hold a lot of memory, which isn't cleaned up by restarting the python process (testing this in a notebook, and so restarting the kernel).

Also explicitly calling `map.close()` or `map.close_all()` doesn't seem to help. Only actually closing the browser tab does clean up the memory.

Testing this using Firefox.

|

closed

|

2023-11-03T18:14:52Z

|

2024-02-27T20:21:52Z

|

https://github.com/developmentseed/lonboard/issues/198

|

[] |

jorisvandenbossche

| 3

|

tensorlayer/TensorLayer

|

tensorflow

| 1,152

|

Questions about PPO

|

I use PPO to make a car find its way and avoid obstacles automatically, but it did not perform well. Similar examples use a DQN network. Why does DQN work when PPO does not?

|

open

|

2022-01-15T00:57:20Z

|

2022-11-03T10:42:34Z

|

https://github.com/tensorlayer/TensorLayer/issues/1152

|

[] |

imitatorgkw

| 1

|

kornia/kornia

|

computer-vision

| 2,162

|

Add tensor to gradcheck into the base tester

|

Tensor to gradcheck should be also in the base tester

_Originally posted by @edgarriba in https://github.com/kornia/kornia/pull/2152#discussion_r1071806021_

apply to all tensors on the inputs of `self.gradcheck` automatically

|

closed

|

2023-01-20T12:25:46Z

|

2024-01-23T23:22:41Z

|

https://github.com/kornia/kornia/issues/2162

|

[

"enhancement :rocket:",

"help wanted"

] |

johnnv1

| 0

|

nvbn/thefuck

|

python

| 980

|

Give advice on Python version

|

the output of thefuck --version:

`The Fuck 3.29 using Python 3.7.3 and Bash 3.2.57(1)-release`

your system:

`macOS Sierra 10.12.6`

How to reproduce the bug:

I need to run python3 to use Python in the terminal, but when I run python, I get this:

`(base) root:Desktop iuser$ python`

`-bash: /Library/Frameworks/Python.framework/Versions/3.5/bin/python3: No such file or directory`

`(base) root:Desktop iuser$ fuck`

`No fucks given`

I know this can be solved by modifying the configuration file. But could thefuck suggest the correct Python command, such as 'python3'?

My English is poor... Have I made myself clear?

|

open

|

2019-10-16T07:38:25Z

|

2019-10-20T00:22:12Z

|

https://github.com/nvbn/thefuck/issues/980

|

[] |

icditwang

| 3

|

zappa/Zappa

|

django

| 1,343

|

Changing batch size in SQS event does not actually change the batch size of the SQS trigger

|

<!--- Provide a general summary of the issue in the Title above -->

## Context

<!--- Provide a more detailed introduction to the issue itself, and why you consider it to be a bug -->

<!--- Also, please make sure that you are running Zappa _from a virtual environment_ and are using Python 3.8/3.9/3.10/3.11/3.12 -->

I was updating a SQS event batch size with the zappa settings and assumed it had worked and did not even check the console to make sure. Later I was testing another function that invoked the previous one and in doing so I noticed the batch size was not increased.

## Expected Behavior

<!--- Tell us what should happen -->

I changed the batch size in the zappa settings from 1 > 5 and the SQS trigger batch size should change from 1 > 5.

Old Settings:

```

{

"function": "app.lambda_handler",

"event_source": {

"arn": "arn:aws:sqs:us-west-2:1........",

"batch_size": 1,

"enabled": true

}

}

```

New Settings:

```

{

"function": "app.lambda_handler",

"event_source": {

"arn": "arn:aws:sqs:us-west-2:1........",

"batch_size": 5,

"enabled": true

}

}

```

## Actual Behavior

<!--- Tell us what happens instead -->

Nothing happens.

## Steps to Reproduce

<!--- Provide a link to a live example, or an unambiguous set of steps to -->

<!--- reproduce this bug include code to reproduce, if relevant -->

1. Create a deployment with an SQS event with a batch size of 1.

2. Change the batch size to 5 and update the function.

3. Check AWS console to see the batch size has not changed.
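
Step 3 can also be checked from a script instead of the console. The sketch below assumes a boto3 Lambda client is passed in; `list_event_source_mappings` is the Lambda API call that reports each trigger's `BatchSize`. The helper names and the function name in the usage comment are placeholders, and only the first page of results is read.

```python
def sqs_batch_sizes(lambda_client, function_name, queue_arn):
    """Return {mapping UUID: BatchSize} for one function/queue pair.

    Reads a single page of results, which is enough for a function with a
    handful of triggers.
    """
    response = lambda_client.list_event_source_mappings(
        FunctionName=function_name,
        EventSourceArn=queue_arn,
    )
    return {m["UUID"]: m["BatchSize"] for m in response["EventSourceMappings"]}


def batch_size_matches(lambda_client, function_name, queue_arn, expected):
    """True only if at least one mapping exists and all report `expected`."""
    sizes = sqs_batch_sizes(lambda_client, function_name, queue_arn)
    return bool(sizes) and all(size == expected for size in sizes.values())


# Usage (assumes boto3 is installed and AWS credentials are configured;
# the function name below is a placeholder):
#   import boto3
#   client = boto3.client("lambda")
#   batch_size_matches(client, "my-app-dev", "arn:aws:sqs:...", expected=5)
```

If the second helper returns `False` after an update, the deployed trigger still carries the old batch size.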

## Your Environment

<!--- Include as many relevant details about the environment you experienced the bug in -->

* Zappa version used: 0.59.0

* Operating System and Python version: MacOS Python 3.12

|

closed

|

2024-07-26T12:53:29Z

|

2024-11-10T16:40:24Z

|

https://github.com/zappa/Zappa/issues/1343

|

[

"no-activity",

"auto-closed"

] |

lmuther8

| 4

|

python-restx/flask-restx

|

flask

| 633

|

Dead or alive ?

|

Is this project still alive or is it considered dead ?

**Additional context**

I am seeing a lot of open PRs (40+) and close to 300 open issues on this project. Some minor but recent (~3 months ago) changes were made to the code (preparing a version 1.3.1), but I can't see any real progress lately.

That being said, this is just an attempt to understand the project's future - not judging anything.

I've been using this project for some of my stacks so it would be beautiful to see this alive and moving forward.

Best

Mike

|

open

|

2025-02-05T09:17:51Z

|

2025-02-15T15:24:39Z

|

https://github.com/python-restx/flask-restx/issues/633

|

[

"question"

] |

MikeSchiessl

| 0

|

huggingface/datasets

|

computer-vision

| 6,854

|

Wrong example of usage when config name is missing for community script-datasets

|

As reported by @Wauplin, when loading a community dataset with script, there is a bug in the example of usage of the error message if the dataset has multiple configs (and no default config) and the user does not pass any config. For example:

```python

>>> ds = load_dataset("google/fleurs")

ValueError: Config name is missing.

Please pick one among the available configs: ['af_za', 'am_et', 'ar_eg', 'as_in', 'ast_es', 'az_az', 'be_by', 'bg_bg', 'bn_in', 'bs_ba', 'ca_es', 'ceb_ph', 'ckb_iq', 'cmn_hans_cn', 'cs_cz', 'cy_gb', 'da_dk', 'de_de', 'el_gr', 'en_us', 'es_419', 'et_ee', 'fa_ir', 'ff_sn', 'fi_fi', 'fil_ph', 'fr_fr', 'ga_ie', 'gl_es', 'gu_in', 'ha_ng', 'he_il', 'hi_in', 'hr_hr', 'hu_hu', 'hy_am', 'id_id', 'ig_ng', 'is_is', 'it_it', 'ja_jp', 'jv_id', 'ka_ge', 'kam_ke', 'kea_cv', 'kk_kz', 'km_kh', 'kn_in', 'ko_kr', 'ky_kg', 'lb_lu', 'lg_ug', 'ln_cd', 'lo_la', 'lt_lt', 'luo_ke', 'lv_lv', 'mi_nz', 'mk_mk', 'ml_in', 'mn_mn', 'mr_in', 'ms_my', 'mt_mt', 'my_mm', 'nb_no', 'ne_np', 'nl_nl', 'nso_za', 'ny_mw', 'oc_fr', 'om_et', 'or_in', 'pa_in', 'pl_pl', 'ps_af', 'pt_br', 'ro_ro', 'ru_ru', 'sd_in', 'sk_sk', 'sl_si', 'sn_zw', 'so_so', 'sr_rs', 'sv_se', 'sw_ke', 'ta_in', 'te_in', 'tg_tj', 'th_th', 'tr_tr', 'uk_ua', 'umb_ao', 'ur_pk', 'uz_uz', 'vi_vn', 'wo_sn', 'xh_za', 'yo_ng', 'yue_hant_hk', 'zu_za', 'all']

Example of usage:

`load_dataset('fleurs', 'af_za')`

```

Note the example of usage in the error message suggests loading "fleurs" instead of "google/fleurs".

|

closed

|

2024-05-02T06:59:39Z

|

2024-05-03T15:51:59Z

|

https://github.com/huggingface/datasets/issues/6854

|

[

"bug"

] |

albertvillanova

| 0

|

d2l-ai/d2l-en

|

deep-learning

| 2,489

|

installing d2l package on google colab

|

I've tried to run the code on Google Colab.

So I tried `pip install d2l==1.0.0-beta0`, but it didn't work.

So I used `pip install d2l==1.0.0-alpha0` and tried to run the following code.

```python

d2l.set_figsize()

img = d2l.plt.imread('../img/catdog.jpg')

d2l.plt.imshow(img);

```

But it says `FileNotFoundError: [Errno 2] No such file or directory: '../img/catdog.jpg'`

How can I run the code in this book on Google Colab?😭

|

closed

|

2023-05-15T05:50:24Z

|

2023-07-10T13:26:54Z

|

https://github.com/d2l-ai/d2l-en/issues/2489

|

[

"bug"

] |

minjae35

| 1

|

mwaskom/seaborn

|

matplotlib

| 3,382

|

sns.scatterplot

|

Hello, sir!

I have a question. When I customize the color range in seaborn, the plot does not follow my custom colors, and the legend shows the wrong colors.

Code:

merged_df1= pd.read_csv("C:\\Users\\Administrator\\Desktop\\data.csv")

plt.figure(figsize=(8.5, 8))

thresholds = [5,50,100,200]

colors = ['darkslategrey','skyblue', 'deepskyblue', 'white','orange']

legend_patches = [

mpatches.Patch(color=colors[0], label=f'<{thresholds[0]}'),

mpatches.Patch(color=colors[1], label=f'{thresholds[0]} - {thresholds[1]}'),

mpatches.Patch(color=colors[2], label=f'{thresholds[1]}- {thresholds[2]}'),

mpatches.Patch(color=colors[3], label=f'{thresholds[2]}- {thresholds[3]}'),

mpatches.Patch(color=colors[4], label=f'>{thresholds[3]}')

]

conditions = [

(merged_df1['logpvalue'] < thresholds[0]),

(merged_df1['logpvalue'] >= thresholds[0]) & (merged_df1['logpvalue'] < thresholds[1]),

(merged_df1['logpvalue'] >= thresholds[1]) & (merged_df1['logpvalue'] < thresholds[2]),

(merged_df1['logpvalue'] >= thresholds[2]) & (merged_df1['logpvalue'] < thresholds[3]),

(merged_df1['logpvalue'] >= thresholds[3])

]

color_array = np.select(conditions, colors)

cmap=sns.color_palette(colors, as_cmap=True)

sns.scatterplot(x=merged_df1['group'], y=merged_df1['gene'], hue=color_array,size=merged_df1['tpm'], sizes=(5, 250),legend='auto',palette=cmap,edgecolor='black')

plt.title('Bubble Chart')

plt.xlabel('tissue')

plt.ylabel('motif')

sizes = [30, 100, 200]

legend_sizes = [plt.scatter([], [], s=size, color='grey', alpha=0.6) for size in sizes]

legend_labels = [f'TPM: {size}' for size in sizes]

a=plt.legend(loc='upper right', bbox_to_anchor=(1.2,1),prop={'size': 10},handles=legend_patches, title='-log pvalue')

plt.legend(legend_sizes, legend_labels, title='Size', loc='upper right',bbox_to_anchor=(1.2, 0.75),prop={'size': 10})

plt.gca().add_artist(a)
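
One possible reading of the code above is that the hue values are color names, while the palette is handed over as a colormap, so levels may be paired with colors by order rather than by name. The sketch below is a guess at a more explicit mapping, not a confirmed fix: it bins values with `bisect_right` (equivalent to the `>=` / `<` conditions) and builds an identity dict. seaborn's `palette` parameter accepts a dict mapping hue levels to colors, so such a dict could be passed there. Only the standard library is used, and the sample values are made up.

```python
from bisect import bisect_right

thresholds = [5, 50, 100, 200]
colors = ["darkslategrey", "skyblue", "deepskyblue", "white", "orange"]


def color_for(value):
    """Pick the bin color; bisect_right reproduces the >= / < conditions."""
    return colors[bisect_right(thresholds, value)]


# Hue levels are the color names themselves, so the palette is an identity map.
palette = {name: name for name in colors}

values = [1, 5, 49.9, 100, 250]  # made-up sample of 'logpvalue' entries
hue = [color_for(v) for v in values]
print(hue)
```

With this shape, a call such as `sns.scatterplot(..., hue=hue, palette=palette, hue_order=colors)` would tie each level to its own color regardless of the order in which levels appear in the data.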

|

closed

|

2023-06-12T09:33:48Z

|

2023-06-12T12:36:22Z

|

https://github.com/mwaskom/seaborn/issues/3382

|

[] |

gavinjzg

| 2

|

tox-dev/tox

|

automation

| 3,292

|

{env_name} substitution broken since version 4.14.1

|

## Issue

I'm creating a pipeline where a coverage report is generated over multiple test runs (spanning different Python versions). To do this, I want to include the tox environment in the coverage file name, so the artifacts don't overwrite each other in the pipeline job that combines the coverage files.

It's configured as follows (with some sections left out):

```

[testenv]

package = wheel

deps =

coverage[toml] ~= 7.0

commands_pre =

python -m coverage erase

commands =

python -m coverage run --data-file=.coverage.{env_name} --branch --source my.project -m unittest discover {posargs:tests/unit}

```

This worked fine up to tox 4.14.0:

```

py311: commands_pre[0]> python -m coverage erase

py311: commands[0]> python -m coverage run --data-file=.coverage.py311 --branch --source my.project -m unittest discover tests/unit

```

However, from tox 4.14.1 onwards, the `env_name` variable isn't substituted anymore and just comes through as `{env_name}`:

```

py311: commands_pre[0]> python -m coverage erase

py311: commands[0]> python -m coverage run --data-file=.coverage.{env_name} --branch --source myproject -m unittest discover tests/unit

```

## Environment

Provide at least:

- OS: Windows 10

<details open>

<summary>Output of <code>pip list</code> of the host Python, where <code>tox</code> is installed</summary>

```console

Package Version

------------- -------

cachetools 5.3.3

chardet 5.2.0

colorama 0.4.6

distlib 0.3.8

filelock 3.14.0

packaging 24.1

pip 24.0

platformdirs 4.2.2

pluggy 1.5.0

pyproject-api 1.6.1

setuptools 65.5.0

tomli 2.0.1

tox 4.14.1

virtualenv 20.26.2

```

</details>

## Output of running tox

See above.

## Minimal example

I tried to create a minimal example by creating a tox configuration that doesn't build and install a package, but for some reason the substitution in the command did work there. So somehow the package building and installing is important for the issue to occur.

Unfortunately, I can't share my entire tox config, as it's part of a corporate repo.

|

closed

|

2024-06-10T14:48:34Z

|

2024-08-20T13:53:47Z

|

https://github.com/tox-dev/tox/issues/3292

|

[] |

rhabarberbarbara

| 12

|

Nemo2011/bilibili-api

|

api

| 820

|

Publishing a dynamic fails with 'str' object has no attribute 'content'

|

try:

global last_id

dyn_req = dynamic.BuildDynamic.empty()

upload_files = ['A','B','C','D','E']

for item in upload_files:

item_path = f"{BASE_PATH}{item}.JPG"

result = await dynamic.upload_image(image=item_path,credential=credential)

#if debug:print(result)

if 'image_url' in result:

dyn_req.add_image(Picture.from_url(result['image_url']))

await asyncio.sleep(5)

dyn_req.add_plain_text(text=dynamic_title)

#dyn_req.set_options(close_comment=True)

result = await dynamic.send_dynamic(dyn_req,credential)

# if debug:print(result)

if result: last_id = result

except Exception as e:

print(f"Failed to publish dynamic, {e}")

The output is: Failed to publish dynamic, 'str' object has no attribute 'content'

|

closed

|

2024-10-06T08:33:08Z

|

2024-10-07T03:37:54Z

|

https://github.com/Nemo2011/bilibili-api/issues/820

|

[

"question"

] |

farjar

| 1

|

tiangolo/uvicorn-gunicorn-fastapi-docker

|

pydantic

| 84

|

How to pass "live auto-reload option" with docker-compose

|

hello there,

- Is it possible to pass live auto-reload option (/start-reload.sh) to our docker-compose.yml file?

- Is it possible to pass live auto-reload option (/start-reload.sh) to the docker-compose up -d command?

many thanks

|

closed

|

2021-04-22T08:56:25Z

|

2021-04-22T12:07:19Z

|

https://github.com/tiangolo/uvicorn-gunicorn-fastapi-docker/issues/84

|

[] |

ghost

| 1

|

clovaai/donut

|

computer-vision

| 30

|

donut processing on PDF Documents

|

Hello,

I have a few certificate documents in PDF format. I want to extract metadata from those documents, as you suggested.

Could you please clarify the points below?

1. Can I use your model directly without pretraining on the certificate data?

2. How do I train your model on my certificates, given that they are confidential, and what folder structure do you expect for the training data?

3. How do I convert my dataset into your format (synthdog)? It was not very clear to me.

Thank you and looking forward to your response.

Best Regards,

Arun

|

closed

|

2022-08-19T11:53:41Z

|

2022-08-24T02:48:54Z

|

https://github.com/clovaai/donut/issues/30

|

[] |

Arun4GS

| 4

|

keras-team/keras

|

data-science

| 20,169

|

How to make dynamic assertions in Keras v3?

|

In TensorFlow with Keras v2 I have two common cases for dynamic assertions:

1. Dynamic shape assertion

```python

x = <some input tensor in NHWC format>

min_size = tf.reduce_min(tf.shape(x)[1:3])

assert_size = tf.assert_greater(min_size, 2)

with tf.control_dependencies([assert_size]):

<apply some ops that work correctly only with inputs of minimum size = 2>

```

2. Dynamic value assertion

```python

x = <some input tensor>

max_val = <some input value>

max_delta = ops.max(x) - ops.min(x)

assert_delta = tf.assert_less(max_delta, max_val + backend.epsilon())

with tf.control_dependencies([assert_delta]):

<apply some ops that work correctly only with inputs if assertion success>

```

How can I make such assertions in Keras v3? I couldn't find any examples in the sources.

|

closed

|

2024-08-27T06:13:22Z

|

2024-09-13T12:02:21Z

|

https://github.com/keras-team/keras/issues/20169

|

[

"type:support",

"stat:awaiting response from contributor",

"stale"

] |

shkarupa-alex

| 3

|

deepset-ai/haystack

|

machine-learning

| 9,088

|

Docs for HierarchicalDocumentSplitter

|

Added in #9067

|

open

|

2025-03-21T14:03:46Z

|

2025-03-24T09:09:56Z

|

https://github.com/deepset-ai/haystack/issues/9088

|

[

"type:documentation",

"P1"

] |

dfokina

| 0

|

neuml/txtai

|

nlp

| 65

|

Add summary pipeline

|

Use Hugging Face's summary pipeline to summarize text.

|

closed

|

2021-03-17T18:52:12Z

|

2021-05-13T15:07:26Z

|

https://github.com/neuml/txtai/issues/65

|

[] |

davidmezzetti

| 0

|

iterative/dvc

|

machine-learning

| 10,572

|

get / import: No storage files available

|

# Bug Report

## Description

I have tracked files in `repo-a` under `data`. `dvc import` and `dvc get` both fail when trying to get files from `repo-a` in `repo-b`.

### Reproduce

I cloned my own repo (`repo-a`) under `/tmp` to test whether `dvc pull` works. It does. Then I checked status and remote:

```

[/tmp/repo-a] [master *]

-> % uv run dvc status -c

Cache and remote 'azure-blob' are in sync.

[/tmp/repo-a] [master *]

-> % uv run dvc list --dvc-only .

data

```

So that is all correct.

Then I go to my `repo-b`. I configured the remote to be the same as the one in `repo-a`. Here is the check:

```

[repo-b] [master *]

-> % diff .dvc/config.local /tmp/repo-a/.dvc/config.local | wc -l

0

```

Then I try to get the data from `repo-a`. It fails

```

[repo-b] [master *]

-> % uv run dvc list "git@gitlab.com:<org>/repo-a.git" --dvc-only

data

[repo-b] [master *]

-> % uv run dvc get "git@gitlab.com:<org>/repo-a.git" "data" -v

2024-09-30 13:41:19,905 DEBUG: v3.55.2 (pip), CPython 3.10.14 on Linux-6.8.0-45-generic-x86_64-with-glibc2.35

2024-09-30 13:41:19,906 DEBUG: command: /.../repo-b/.venv/bin/dvc get git@gitlab.com:<org>/repo-a.git data -v

2024-09-30 13:41:19,985 DEBUG: Creating external repo git@gitlab.com:<org>/repo-a.git@None

2024-09-30 13:41:19,985 DEBUG: erepo: git clone 'git@gitlab.com:<org>/repo-a.git' to a temporary dir

2024-09-30 13:41:42,394 DEBUG: failed to load ('data', 'cvat', 'datumaro-dataset') from storage local (/tmp/tmpsuoa_qcgdvc-cache/files/md5) - [Errno 2] No such file or directory: '/tmp/tmpsuoa_qcgdvc-cache/files/md5/8a/6de34918ed22935e97644bf465f920.dir'

Traceback (most recent call last):

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_data/index/index.py", line 611, in _load_from_storage

_load_from_object_storage(trie, entry, storage)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_data/index/index.py", line 547, in _load_from_object_storage

obj = Tree.load(storage.odb, root_entry.hash_info, hash_name=storage.odb.hash_name)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_data/hashfile/tree.py", line 193, in load

with obj.fs.open(obj.path, "r") as fobj:

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_objects/fs/base.py", line 324, in open

return self.fs.open(path, mode=mode, **kwargs)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_objects/fs/local.py", line 131, in open

return open(path, mode=mode, encoding=encoding) # noqa: SIM115

FileNotFoundError: [Errno 2] No such file or directory: '/tmp/tmpsuoa_qcgdvc-cache/files/md5/8a/6de34918ed22935e97644bf465f920.dir'

2024-09-30 13:41:42,401 ERROR: unexpected error - failed to load directory ('data', 'cvat', 'datumaro-dataset'): [Errno 2] No such file or directory: '/tmp/tmpsuoa_qcgdvc-cache/files/md5/8a/6de34918ed22935e97644bf465f920.dir'

Traceback (most recent call last):

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_data/index/index.py", line 611, in _load_from_storage

_load_from_object_storage(trie, entry, storage)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_data/index/index.py", line 547, in _load_from_object_storage

obj = Tree.load(storage.odb, root_entry.hash_info, hash_name=storage.odb.hash_name)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_data/hashfile/tree.py", line 193, in load

with obj.fs.open(obj.path, "r") as fobj:

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_objects/fs/base.py", line 324, in open

return self.fs.open(path, mode=mode, **kwargs)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_objects/fs/local.py", line 131, in open

return open(path, mode=mode, encoding=encoding) # noqa: SIM115

FileNotFoundError: [Errno 2] No such file or directory: '/tmp/tmpsuoa_qcgdvc-cache/files/md5/8a/6de34918ed22935e97644bf465f920.dir'

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc/cli/__init__.py", line 211, in main

ret = cmd.do_run()

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc/cli/command.py", line 41, in do_run

return self.run()

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc/commands/get.py", line 30, in run

return self._get_file_from_repo()

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc/commands/get.py", line 37, in _get_file_from_repo

Repo.get(

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc/repo/get.py", line 64, in get

download(fs, fs_path, os.path.abspath(out), jobs=jobs)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc/fs/__init__.py", line 67, in download

return fs._get(fs_path, to, batch_size=jobs, callback=cb)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc/fs/dvc.py", line 692, in _get

return self.fs._get(

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc/fs/dvc.py", line 543, in _get

for root, dirs, files in self.walk(rpath, maxdepth=maxdepth, detail=True):

File "/.../repo-b/.venv/lib/python3.10/site-packages/fsspec/spec.py", line 468, in walk

yield from self.walk(

File "/.../repo-b/.venv/lib/python3.10/site-packages/fsspec/spec.py", line 468, in walk

yield from self.walk(

File "/.../repo-b/.venv/lib/python3.10/site-packages/fsspec/spec.py", line 427, in walk

listing = self.ls(path, detail=True, **kwargs)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc/fs/dvc.py", line 382, in ls

for info in dvc_fs.ls(dvc_path, detail=True):

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_objects/fs/base.py", line 519, in ls

return self.fs.ls(path, detail=detail, **kwargs)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_data/fs.py", line 164, in ls

for key, info in self.index.ls(root_key, detail=True):

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_data/index/index.py", line 764, in ls

self._ensure_loaded(root_key)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_data/index/index.py", line 761, in _ensure_loaded

self._load(prefix, entry)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_data/index/index.py", line 710, in _load

self.onerror(entry, exc)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_data/index/index.py", line 638, in _onerror

raise exc

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_data/index/index.py", line 708, in _load

_load_from_storage(self._trie, entry, storage_info)

File "/.../repo-b/.venv/lib/python3.10/site-packages/dvc_data/index/index.py", line 626, in _load_from_storage

raise DataIndexDirError(f"failed to load directory {entry.key}") from last_exc

dvc_data.index.index.DataIndexDirError: failed to load directory ('data', 'cvat', 'datumaro-dataset')

2024-09-30 13:41:42,432 DEBUG: Version info for developers:

DVC version: 3.55.2 (pip)

-------------------------

Platform: Python 3.10.14 on Linux-6.8.0-45-generic-x86_64-with-glibc2.35

Subprojects:

dvc_data = 3.16.6

dvc_objects = 5.1.0

dvc_render = 1.0.2

dvc_task = 0.4.0

scmrepo = 3.3.8

Supports:

azure (adlfs = 2024.7.0, knack = 0.12.0, azure-identity = 1.18.0),

http (aiohttp = 3.10.8, aiohttp-retry = 2.8.3),

https (aiohttp = 3.10.8, aiohttp-retry = 2.8.3)

Config:

Global: /.../.config/dvc

System: /.../.config/kdedefaults/dvc

Cache types: <https://error.dvc.org/no-dvc-cache>

Caches: local

Remotes: azure

Workspace directory: ext4 on /dev/mapper/vgkubuntu-root

Repo: dvc, git

Repo.site_cache_dir: /var/tmp/dvc/repo/bdf5f37be5108aada94933a567e64744

Having any troubles? Hit us up at https://dvc.org/support, we are always happy to help!

2024-09-30 13:41:42,433 DEBUG: Analytics is enabled.

2024-09-30 13:41:42,458 DEBUG: Trying to spawn ['daemon', 'analytics', '/tmp/tmpwllf0ijo', '-v']

2024-09-30 13:41:42,465 DEBUG: Spawned ['daemon', 'analytics', '/tmp/tmpwllf0ijo', '-v'] with pid 111408

2024-09-30 13:41:42,466 DEBUG: Removing '/tmp/tmp5s8bt4cedvc-clone'

2024-09-30 13:41:42,495 DEBUG: Removing '/tmp/tmpsuoa_qcgdvc-cache'

```

Then I checked whether I can push from `repo-b`. I can.

```

[repo-b] [master *]

-> % touch test

-> % uv run dvc push

Collecting

|1.00 [00:00, 234entry/s]

Pushing

1 file pushed

```

Same problem when I target a specific file:

```

[repo-b] [master *]

-> % uv run dvc get "git@gitlab.com:<org>/repo-a.git" "data/master-table.csv"

ERROR: unexpected error - [Errno 2] No storage files available: 'data/master-table.csv'

```

But the file IS on the remote. I can pull it in the cloned `repo-a`.

Also, see this:

```

-> % uv run dvc get git@gitlab.com:<org>/repo-a.git data

ERROR: unexpected error - failed to load directory ('data', 'cvat', 'datumaro-dataset'): [Errno 2] No such file or directory: '/tmp/tmp_tgyr2ymdvc-cache/files/md5/8a/6de34918ed22935e97644bf465f920.dir'

```

This file (`files/md5/8a/6de34918ed22935e97644bf465f920.dir`) DOES exist on the remote!

### Environment information

```

-> % uv pip list G dvc

dvc 3.55.2

dvc-data 3.16.5

dvc-http 2.32.0

dvc-objects 5.1.0

dvc-render 1.0.2

dvc-studio-client 0.21.0

dvc-task 0.4.0

-> % uname -a

Linux <name> 6.8.0-45-generic #45~22.04.1-Ubuntu SMP PREEMPT_DYNAMIC Wed Sep 11 15:25:05 UTC 2 x86_64 x86_64 x86_64 GNU/Linux

-> % python --version

Python 3.10.13

```

**Output of `dvc doctor`:**

```console

DVC version: 3.55.2 (pip)

-------------------------

Platform: Python 3.10.14 on Linux-6.8.0-45-generic-x86_64-with-glibc2.35

Subprojects:

dvc_data = 3.16.6

dvc_objects = 5.1.0

dvc_render = 1.0.2

dvc_task = 0.4.0

scmrepo = 3.3.8

Supports:

azure (adlfs = 2024.7.0, knack = 0.12.0, azure-identity = 1.18.0),

http (aiohttp = 3.10.8, aiohttp-retry = 2.8.3),

https (aiohttp = 3.10.8, aiohttp-retry = 2.8.3)

Config:

Global: /home/mbs/.config/dvc

System: /home/mbs/.config/kdedefaults/dvc

Cache types: <https://error.dvc.org/no-dvc-cache>

Caches: local

Remotes: azure

Workspace directory: ext4 on /dev/mapper/vgkubuntu-root

Repo: dvc, git

Repo.site_cache_dir: /var/tmp/dvc/repo/bdf5f37be5108aada94933a567e64744

```

I already deleted `/var/tmp/dvc/`. Did not help.

|

closed

|

2024-09-30T12:26:41Z

|

2024-11-10T01:53:45Z

|

https://github.com/iterative/dvc/issues/10572

|

[

"awaiting response",

"triage"

] |

mbspng

| 10

|

tatsu-lab/stanford_alpaca

|

deep-learning

| 72

|

running the project.

|

So I downloaded the repo and installed the requirements. I noticed that utils.py does not seem to be written in normal Python, or at least I'm getting a syntax error. When I run the code I get this error:

`valiantlynx@DESKTOP-3EGT6DL:~/stanford_alpaca$ /usr/bin/python3 /home/valiantlynx/stanford_alpaca/train.py

/usr/lib/python3/dist-packages/requests/__init__.py:89: RequestsDependencyWarning: urllib3 (1.26.15) or chardet (3.0.4) doesn't match a supported version!

warnings.warn("urllib3 ({}) or chardet ({}) doesn't match a supported "

2023-03-17 01:44:16.830274: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 AVX512F AVX512_VNNI FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2023-03-17 01:44:17.316763: I tensorflow/core/util/port.cc:104] oneDNN custom operations are on. You may see slightly different numerical results due to floating-point round-off errors from different computation orders. To turn them off, set the environment variable `TF_ENABLE_ONEDNN_OPTS=0`.

2023-03-17 01:44:18.295000: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libnvinfer.so.7'; dlerror: libnvinfer.so.7: cannot open shared object file: No such file or directory

2023-03-17 01:44:18.295070: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libnvinfer_plugin.so.7'; dlerror: libnvinfer_plugin.so.7: cannot open shared object file: No such file or directory

2023-03-17 01:44:18.295091: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Cannot dlopen some TensorRT libraries. If you would like to use Nvidia GPU with TensorRT, please make sure the missing libraries mentioned above are installed properly.

Traceback (most recent call last):

File "/home/valiantlynx/stanford_alpaca/train.py", line 25, in <module>

import utils

File "/home/valiantlynx/stanford_alpaca/utils.py", line 40, in <module>

prompts: Union[str, Sequence[str], Sequence[dict[str, str]], dict[str, str]],

TypeError: 'type' object is not subscriptable`

What I ran was train.py. I didn't edit anything. The error comes from this function:

`def openai_completion(

prompts: Union[str, Sequence[str], Sequence[dict[str, str]], dict[str, str]],

decoding_args: OpenAIDecodingArguments,

model_name="text-davinci-003",

sleep_time=2,

batch_size=1,

max_instances=sys.maxsize,

max_batches=sys.maxsize,

return_text=False,

**decoding_kwargs,

) -> Union[Union[StrOrOpenAIObject], Sequence[StrOrOpenAIObject], Sequence[Sequence[StrOrOpenAIObject]],]:

"""Decode with OpenAI API.

Args:

prompts: A string or a list of strings to complete. If it is a chat model the strings should be formatted

as explained here: https://github.com/openai/openai-python/blob/main/chatml.md. If it is a chat model

it can also be a dictionary (or list thereof) as explained here:

https://github.com/openai/openai-cookbook/blob/main/examples/How_to_format_inputs_to_ChatGPT_models.ipynb

decoding_args: Decoding arguments.

model_name: Model name. Can be either in the format of "org/model" or just "model".

sleep_time: Time to sleep once the rate-limit is hit.

batch_size: Number of prompts to send in a single request. Only for non chat model.

max_instances: Maximum number of prompts to decode.

max_batches: Maximum number of batches to decode. This argument will be deprecated in the future.

return_text: If True, return text instead of full completion object (which contains things like logprob).

decoding_kwargs: Additional decoding arguments. Pass in `best_of` and `logit_bias` if you need them.

Returns:

A completion or a list of completions.

Depending on return_text, return_openai_object, and decoding_args.n, the completion type can be one of

- a string (if return_text is True)

- an openai_object.OpenAIObject object (if return_text is False)

- a list of objects of the above types (if decoding_args.n > 1)

"""

is_single_prompt = isinstance(prompts, (str, dict))

if is_single_prompt:

prompts = [prompts]

if max_batches < sys.maxsize:

logging.warning(

"`max_batches` will be deprecated in the future, please use `max_instances` instead."

"Setting `max_instances` to `max_batches * batch_size` for now."

)

max_instances = max_batches * batch_size

prompts = prompts[:max_instances]

num_prompts = len(prompts)

prompt_batches = [

prompts[batch_id * batch_size : (batch_id + 1) * batch_size]

for batch_id in range(int(math.ceil(num_prompts / batch_size)))

]

completions = []

for batch_id, prompt_batch in tqdm.tqdm(

enumerate(prompt_batches),

desc="prompt_batches",

total=len(prompt_batches),

):

batch_decoding_args = copy.deepcopy(decoding_args) # cloning the decoding_args

while True:

try:

shared_kwargs = dict(

model=model_name,

**batch_decoding_args.__dict__,

**decoding_kwargs,

)

completion_batch = openai.Completion.create(prompt=prompt_batch, **shared_kwargs)

choices = completion_batch.choices

for choice in choices:

choice["total_tokens"] = completion_batch.usage.total_tokens

completions.extend(choices)

break

except openai.error.OpenAIError as e:

logging.warning(f"OpenAIError: {e}.")

if "Please reduce your prompt" in str(e):

batch_decoding_args.max_tokens = int(batch_decoding_args.max_tokens * 0.8)

logging.warning(f"Reducing target length to {batch_decoding_args.max_tokens}, Retrying...")

else:

logging.warning("Hit request rate limit; retrying...")

time.sleep(sleep_time) # Annoying rate limit on requests.

if return_text:

completions = [completion.text for completion in completions]

if decoding_args.n > 1:

# make completions a nested list, where each entry is a consecutive decoding_args.n of original entries.

completions = [completions[i : i + decoding_args.n] for i in range(0, len(completions), decoding_args.n)]

if is_single_prompt:

# Return non-tuple if only 1 input and 1 generation.

(completions,) = completions

return completions

`

I'm not the best at Python, but I've not seen this syntax:

`prompts: Union[str, Sequence[str], Sequence[dict[str, str]], dict[str, str]],`

`) -> Union[Union[StrOrOpenAIObject], Sequence[StrOrOpenAIObject], Sequence[Sequence[StrOrOpenAIObject]],]:`

I'm very new to ML, so maybe I'm doing everything wrong.
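
For reference, `TypeError: 'type' object is not subscriptable` on `dict[str, str]` is what Python versions before 3.9 raise, because subscripting the built-in `dict` only became valid with Python 3.9. The traceback does not state the interpreter version, so this is an assumption about the cause. A minimal sketch of the same kind of annotation written with `typing.Dict`, which also works on older interpreters, could look like this; `normalize_prompts` is a hypothetical helper that mirrors the `is_single_prompt` branch, not part of the repository.

```python
from typing import Dict, List, Sequence, Union

# typing.Dict can be subscripted on older Python 3 versions, unlike the
# built-in dict, which only supports dict[str, str] from Python 3.9 onwards.
Prompt = Union[str, Dict[str, str]]
Prompts = Union[Prompt, Sequence[Prompt]]


def normalize_prompts(prompts: Prompts) -> List[Prompt]:
    """Hypothetical helper: wrap a single prompt in a list, as the
    `is_single_prompt` branch of `openai_completion` does."""
    if isinstance(prompts, (str, dict)):
        return [prompts]
    return list(prompts)


print(normalize_prompts("hello"))
print(normalize_prompts([{"role": "user", "content": "hi"}]))
```

Running the original file with Python 3.9 or newer would be the other way around the error, under the same assumption.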

|

closed

|

2023-03-17T00:47:40Z

|

2023-09-05T20:26:07Z

|

https://github.com/tatsu-lab/stanford_alpaca/issues/72

|

[] |

valiantlynx

| 6

|

keras-team/keras

|

tensorflow

| 20,463

|

BackupAndRestore callback sometimes can't load checkpoint

|

When training is interrupted, the model sometimes can't restore its weights with the BackupAndRestore callback.

```python

Traceback (most recent call last):

File "/home/alex/jupyter/lab/model_fba.py", line 150, in <module>

model.fit(train_dataset, callbacks=callbacks, epochs=NUM_EPOCHS, steps_per_epoch=STEPS_PER_EPOCH, verbose=2)

File "/home/alex/.local/lib/python3.10/site-packages/keras/src/utils/traceback_utils.py", line 113, in error_handler

return fn(*args, **kwargs)

File "/home/alex/.local/lib/python3.10/site-packages/keras/src/backend/tensorflow/trainer.py", line 311, in fit

callbacks.on_train_begin()

File "/home/alex/.local/lib/python3.10/site-packages/keras/src/callbacks/callback_list.py", line 218, in on_train_begin

callback.on_train_begin(logs)

File "/home/alex/.local/lib/python3.10/site-packages/keras/src/callbacks/backup_and_restore.py", line 116, in on_train_begin

self.model.load_weights(self._weights_path)

File "/home/alex/.local/lib/python3.10/site-packages/keras/src/utils/traceback_utils.py", line 113, in error_handler

return fn(*args, **kwargs)

File "/home/alex/.local/lib/python3.10/site-packages/keras/src/models/model.py", line 353, in load_weights

saving_api.load_weights(

File "/home/alex/.local/lib/python3.10/site-packages/keras/src/saving/saving_api.py", line 251, in load_weights

saving_lib.load_weights_only(

File "/home/alex/.local/lib/python3.10/site-packages/keras/src/saving/saving_lib.py", line 550, in load_weights_only

weights_store = H5IOStore(filepath, mode="r")

File "/home/alex/.local/lib/python3.10/site-packages/keras/src/saving/saving_lib.py", line 931, in __init__

self.h5_file = h5py.File(root_path, mode=self.mode)

File "/home/alex/.local/lib/python3.10/site-packages/h5py/_hl/files.py", line 561, in __init__

fid = make_fid(name, mode, userblock_size, fapl, fcpl, swmr=swmr)

File "/home/alex/.local/lib/python3.10/site-packages/h5py/_hl/files.py", line 235, in make_fid

fid = h5f.open(name, flags, fapl=fapl)

File "h5py/_objects.pyx", line 54, in h5py._objects.with_phil.wrapper

File "h5py/_objects.pyx", line 55, in h5py._objects.with_phil.wrapper

File "h5py/h5f.pyx", line 102, in h5py.h5f.open

OSError: Unable to synchronously open file (bad object header version number)

```

|

closed

|

2024-11-07T05:57:29Z

|

2024-11-11T16:49:36Z

|

https://github.com/keras-team/keras/issues/20463

|

[

"type:Bug"

] |

shkarupa-alex

| 1

|

ShishirPatil/gorilla

|

api

| 45

|

[bug] Hosted Gorilla: <Issue>

|

Exception: Error communicating with OpenAI: HTTPConnectionPool(host='34.132.127.197', port=8000): Max retries exceeded with url: /v1/chat/completions (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7fa8bdf53c40>: Failed to establish a new connection: [Errno 111] Connection refused'))

Failed model: gorilla-7b-hf-v0, for prompt: I would like to translate from English to Chinese

|

closed

|

2023-06-09T04:12:38Z

|

2023-06-09T05:11:29Z

|

https://github.com/ShishirPatil/gorilla/issues/45

|

[

"hosted-gorilla"

] |

luismanriqueruiz

| 1

|

indico/indico

|

sqlalchemy

| 6,127

|

Add per-keyword coloring and legend in event calendar view

|

### Is your feature request related to a problem?

With #6105 and #6106 it will be possible to color events in the event calendar view, and to build its legend, based on category and location. It would be useful to also color events and build the legend based on event keywords.

### Describe the solution you'd like

Add "keyword" to the legend dropdown, allowing users to color events in the calendar by keyword. Since an event can have zero, one, or several keywords, the legend should include two special items:

- [No keyword]

- [Multiple keywords]

In order to distinguish these special items, we could:

1. Display them always on top of the list.

2. Render text differently (e.g. italic?).
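A minimal sketch of the legend logic described above, assuming each event carries a plain list of keyword strings. The names `legend_key` and `build_legend` and the sample events are hypothetical and are not part of Indico's code base:

```python
# Hypothetical illustration only; not Indico's actual API.
NO_KEYWORD = "[No keyword]"
MULTIPLE_KEYWORDS = "[Multiple keywords]"
SPECIAL_ITEMS = (NO_KEYWORD, MULTIPLE_KEYWORDS)


def legend_key(keywords):
    """Return the legend item that an event with these keywords falls under."""
    unique = set(keywords)
    if not unique:
        return NO_KEYWORD
    if len(unique) > 1:
        return MULTIPLE_KEYWORDS
    return next(iter(unique))


def build_legend(events):
    """Return legend items: special items first, then keywords alphabetically."""
    keys = {legend_key(keywords) for keywords in events.values()}
    special = [item for item in SPECIAL_ITEMS if item in keys]
    regular = sorted(keys - set(SPECIAL_ITEMS), key=str.casefold)
    return special + regular


# Sample data (made up): event title -> list of keywords.
events = {
    "Colloquium": ["physics"],
    "Workshop": ["physics", "outreach"],
    "Social hour": [],
    "Seminar": ["cosmology"],
}
legend = build_legend(events)
```

Keeping the two special items in a fixed tuple places them at the top of the list regardless of how the regular keywords sort.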

### Describe alternatives you've considered

One problem with this feature is that event keywords can be arbitrary. The list, hence, can grow pretty large. Based on my discussion with @ThiefMaster last Wednesday, this feature should:

1. Be implemented alongside the possibility to define a list of curated event keywords in the admin settings, perhaps in the `/admin/event-labels/` view.

2. Allow choosing "keyword" in the event calendar legend only when the list of keywords is curated.

Some thoughts for point `1.`:

- Clarify the difference between event keywords and event labels in both the `/admin/event-labels/` view and the `/event/<event_id>/manage/` view.

- Once there is a curated list of keywords, the widget in the `/event/<event_id>/manage/` view should be a dropdown. This dropdown could be the same as the one used for choosing labels in event registrations.

**Additional context**

The feature is a stepping stone for filtering events in the calendar by location.

This feature request is inspired by [Perimeter Institute](https://perimeterinstitute.ca/)'s in-house developed calendar view:

<img width="1272" alt="image" src="https://github.com/indico/indico/assets/716307/1cca5b7b-da74-47a4-91ed-cdc514ede47e">

|

closed

|

2024-01-12T16:51:16Z

|

2024-03-05T17:14:02Z

|

https://github.com/indico/indico/issues/6127

|

[

"enhancement"

] |

OmeGak

| 0

|

microsoft/MMdnn

|

tensorflow

| 584

|

tf->caffe error Tensorflow has not supported operator [Softmax] with name [eval_prediction]

|

Platform (ubuntu 16.04):

Python version:3.6

Source framework with version (tensorflow-gpu 1.12.0):

Destination framework with version (caffe-gpu 1.0 ):

(py3-mmdnn) root@ubuntu:/home/j00465446/test/ckpt_model# mmconvert -sf tensorflow -in my_model.ckpt.meta -iw my_model.ckpt --dstNode eval_prediction -df caffe -om outmodel

Parse file [my_model.ckpt.meta] with binary format successfully.

Tensorflow model file [my_model.ckpt.meta] loaded successfully.

Tensorflow checkpoint file [my_model.ckpt] loaded successfully. [27] variables loaded.

**Tensorflow has not supported operator [Softmax] with name [eval_prediction].**

IR network structure is saved as [2d6f6575a1414bd1a89c5db0b01b93b8.json].

IR network structure is saved as [2d6f6575a1414bd1a89c5db0b01b93b8.pb].

IR weights are saved as [2d6f6575a1414bd1a89c5db0b01b93b8.npy].

Parse file [2d6f6575a1414bd1a89c5db0b01b93b8.pb] with binary format successfully.

Target network code snippet is saved as [2d6f6575a1414bd1a89c5db0b01b93b8.py].

Target weights are saved as [2d6f6575a1414bd1a89c5db0b01b93b8.npy].

**WARNING: Logging before InitGoogleLogging() is written to STDERR

E0213 15:17:43.837237 49862 common.cpp:114] Cannot create Cublas handle. Cublas won't be available.

E0213 15:17:43.837512 49862 common.cpp:121] Cannot create Curand generator. Curand won't be available.**

I0213 15:17:43.837596 49862 net.cpp:51] Initializing net from parameters:

state {

phase: TRAIN

level: 0

}

layer {

name: "eval_data"

type: "Input"

top: "eval_data"

input_param {

shape {

dim: 1

dim: 4

dim: 1000

dim: 1

}

}

}

layer {

name: "Conv2D"

type: "Convolution"

bottom: "eval_data"

top: "Conv2D"

convolution_param {

num_output: 16

bias_term: true

group: 1

stride: 1

pad_h: 0

pad_w: 0

kernel_h: 5

kernel_w: 1

}

}

layer {

name: "Relu"

type: "ReLU"

bottom: "Conv2D"

top: "Conv2D"

}

layer {

name: "MaxPool"

type: "Pooling"

bottom: "Conv2D"

top: "MaxPool"

pooling_param {

pool: MAX

kernel_size: 2

stride: 2

pad_h: 0

pad_w: 0

}

}

layer {

name: "Conv2D_1"

type: "Convolution"

bottom: "MaxPool"

top: "Conv2D_1"

convolution_param {

num_output: 16

bias_term: true

group: 1

stride: 1

pad_h: 0