| repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |
|---|---|---|---|---|---|---|---|---|---|---|---|

Gozargah/Marzban

|

api

| 1,320

|

Errors in latest dev version

|

When I search for a user in the panel and there are multiple matches, only 10 of them are shown and I get this error in the logs.

This error also appears in the logs when some user (I do not know which) updates their subscription.

|

closed

|

2024-09-17T12:24:24Z

|

2024-09-19T13:00:28Z

|

https://github.com/Gozargah/Marzban/issues/1320

|

[

"Question"

] |

mhmdh94

| 1

|

biolab/orange3

|

data-visualization

| 6,837

|

qt.svg: Cannot open file '<local path>/canvas_icons:/Dropdown.svg'

|

When trying to run the env with "python -m Orange.canvas", this alert arises:

qt.svg: Cannot open file '<local path>/canvas_icons:/Dropdown.svg', because: La sintassi del nome del file, della directory o del volume non è corretta.

(In English: the file name, directory name, or volume label syntax is incorrect.)

<!--

Thanks for taking the time to report a bug!

If you're raising an issue about an add-on (i.e., installed via Options > Add-ons), raise an issue in the relevant add-on's issue tracker instead. See: https://github.com/biolab?q=orange3

To fix the bug, we need to be able to reproduce it. Please answer the following questions to the best of your ability.

-->

**What's wrong?**

<!-- Be specific, clear, and concise. Include screenshots if relevant. -->

<!-- If you're getting an error message, copy it, and enclose it with three backticks (```). -->

**How can we reproduce the problem?**

<!-- Upload a zip with the .ows file and data. -->

<!-- Describe the steps (open this widget, click there, then add this...) -->

**What's your environment?**

<!-- To find your Orange version, see "Help → About → Version" or `Orange.version.full_version` in code -->

- Operating system:

- Orange version:

- How you installed Orange:

|

closed

|

2024-06-17T09:18:54Z

|

2024-08-30T08:02:45Z

|

https://github.com/biolab/orange3/issues/6837

|

[

"bug"

] |

Alex72RM

| 3

|

satwikkansal/wtfpython

|

python

| 348

|

Generate Jupyter notebooks for all translations

|

After #343 is done and translations are synced with the base repo, translation maintainers shall generate `Jupyter` notebooks.

|

open

|

2024-10-16T08:04:27Z

|

2024-10-16T08:04:27Z

|

https://github.com/satwikkansal/wtfpython/issues/348

|

[] |

nifadyev

| 0

|

huggingface/transformers

|

pytorch

| 36,290

|

past_key_value(s) name inconsistency causing problems

|

### System Info

- `transformers` version: 4.50.0.dev0

- Platform: Linux-6.4.3-0_fbk14_zion_2601_gcd42476b84e9-x86_64-with-glibc2.34

- Python version: 3.12.9

- Huggingface_hub version: 0.28.1

- Safetensors version: 0.5.2

- Accelerate version: 1.4.0

- Accelerate config: not found

- DeepSpeed version: not installed

- PyTorch version (GPU?): 2.6.0.dev20241112+cu121 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using distributed or parallel set-up in script?: no

- Using GPU in script?: yes

- GPU type: NVIDIA H100

### Who can help?

@ArthurZucker probably others

### Information

- [x] The official example scripts

- [ ] My own modified scripts

### Tasks

- [x] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

run https://huggingface.co/docs/transformers/main/en/quantization/torchao

### Expected behavior

no error

____

this error is related to https://github.com/huggingface/transformers/pull/36289

A bunch of models use `past_key_value` and `past_key_values` interchangeably. This causes problems because the kwarg names to skip are hardcoded in the `_skip_keys_device_placement` attribute, and it shows up any time `torch.compile` is used with an affected model.

The above PR fixes the issue for llama but other models like src/transformers/models/moonshine/modeling_moonshine.py

src/transformers/models/mistral/modeling_mistral.py

src/transformers/models/emu3/modeling_emu3.py

...etc, have the same issue which is actually breaking CI for that PR.

this is also the cause of https://github.com/pytorch/ao/issues/1705 which is where this was first surfaced.
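The naming mismatch described above can be illustrated with a small, self-contained sketch. It does not use the `transformers` or `accelerate` code: `move_kwargs` is a hypothetical stand-in for a device-placement hook that leaves the listed kwarg names untouched, and the string values are placeholders for real tensors and caches.

```python
def move_kwargs(kwargs, skip_keys, move):
    """Apply `move` to every kwarg whose name is not listed in `skip_keys`.

    Hypothetical helper for illustration only, not a library function.
    """
    return {
        name: (value if name in skip_keys else move(value))
        for name, value in kwargs.items()
    }


def fake_move(value):
    # Placeholder for moving a value to another device.
    return f"moved({value})"


call_kwargs = {"past_key_value": "cache", "hidden_states": "tensor"}

# Only the plural name is listed, so the singular kwarg is not skipped.
plural_only = move_kwargs(call_kwargs, ["past_key_values"], fake_move)

# Listing both spellings skips the cache regardless of which name a layer uses.
both_names = move_kwargs(
    call_kwargs, ["past_key_value", "past_key_values"], fake_move
)

print(plural_only["past_key_value"])
print(both_names["past_key_value"])
```

In this toy version the first call moves the cache object even though it was meant to be skipped, while the second leaves it alone.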

is there a reason for these two names to be used instead of just one? If not it seems like they should be entirely consolidated to avoid such issues, if so, then _skip_keys_device_placement needs to include both across all models or

|

open

|

2025-02-19T22:03:42Z

|

2025-03-22T08:03:05Z

|

https://github.com/huggingface/transformers/issues/36290

|

[

"bug"

] |

HDCharles

| 1

|

akfamily/akshare

|

data-science

| 5,941

|

AKShare interface issue report - stock_board_industry_index_ths

|

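Already upgraded to the latest AKShare version: akshare 1.16.58

Python version: Python 3.12.8

Operating system: Mac 14.6.1

Failing interface: stock_board_industry_index_ths

Problem description:

I get the Tonghuashun (THS) concept board list via stock_board_concept_name_ths,

then fetch the board index via stock_board_industry_index_ths, and find that most boards cannot be retrieved.

The code is as follows: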

```

import akshare as ak

sklist = ak.stock_board_concept_name_ths()

print(sklist)

stock_board_industry_index_ths_df = ak.stock_board_industry_index_ths(symbol="自由贸易港", start_date="20250301", end_date="20250305")

print(stock_board_industry_index_ths_df)

```

Console output:

```

name code

0 阿尔茨海默概念 308614

1 AI PC 309121

2 AI手机 309120

3 AI语料 309126

4 阿里巴巴概念 301558

.. ... ...

356 租售同权 302034

357 自由贸易港 306398

358 3D打印 300127

359 5G 300843

360 6G概念 309055

[361 rows x 2 columns]

Traceback (most recent call last):

File "/1.py", line 6, in <module>

stock_board_industry_index_ths_df = ak.stock_board_industry_index_ths(symbol="阿里巴巴概念", start_date="20250301", end_date="20250305")

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/Users/lord/.pyenv/versions/3.12.8/lib/python3.12/site-packages/akshare/stock_feature/stock_board_industry_ths.py", line 139, in stock_board_industry_index_ths

symbol_code = code_map[symbol]

~~~~~~~~^^^^^^^^

KeyError: '阿里巴巴概念'

```

So far I have tried 阿尔茨海默概念, AI PC, AI手机, 阿里巴巴概念, 租售同权, 自由贸易港 and others; none of them return data correctly.

|

closed

|

2025-03-21T01:26:21Z

|

2025-03-21T10:07:47Z

|

https://github.com/akfamily/akshare/issues/5941

|

[

"bug"

] |

1eez

| 2

|

HumanSignal/labelImg

|

deep-learning

| 261

|

Licensing?

|

While working on a feature for https://github.com/wkentaro/labelme, I (accidentally) copied a line of code from that project into a Google Search. It seems that there's quite some overlap between the code of wkentaro's labelme project and the code in this project.

After some more reading, I found that wkentaro's labelme project put [an acknowledgement](https://github.com/wkentaro/labelme#acknowledgement) to https://github.com/mpitid/pylabelme. That project was last touched in 2011 and again has large parts of code that are exactly the same. It seems that mpitid's pylabelme project is not only the origin of wkentaro's labelme project, but also this project. Is that true?

|

open

|

2018-03-24T22:37:35Z

|

2018-03-24T22:37:35Z

|

https://github.com/HumanSignal/labelImg/issues/261

|

[] |

mbuijs

| 0

|

kaliiiiiiiiii/Selenium-Driverless

|

web-scraping

| 132

|

(selenium-driverless 1.7)await driver.find_element(By.XPATH,'xxx') : Unable to find element

|

closed

|

2023-12-16T17:57:03Z

|

2023-12-16T18:01:28Z

|

https://github.com/kaliiiiiiiiii/Selenium-Driverless/issues/132

|

[] |

User-Clb

| 1

|

|

numba/numba

|

numpy

| 9,886

|

numba jit: "Type of variable <varname>.2 cannot be determined" for a fully specified variable.

|

# Bug report

### Bug description:

I have several functions that work fine with jit and njit. All variables are explicitly typed, such as

```python
def afun(parm1:int, parm2:int)->list[int]:
    varname:int=3
    listname:list[int]=[0]
```

In one function, the jit complains about a variable as having an undeterminable type, even though it has been explicitly typed:

```Type of variable 'artp.2' cannot be determined, operation: call $1078load_global.2($binop_add1104.10, func=$1078load_global.2, args=[Var($binop_add1104.10, primes2025a.py:240)], kws=(), vararg=None, varkwarg=None, target=None), location: /home/dakra/./primes2025a.py (240)```

where the code looks like:

```python
from numba import njit
from numba import jit

@jit
def sieve12(upToNumm: int=100000000, pnprimorial:int=3) -> list[int]:
    # early in the function similar to:
    C0:int=0
    modprimorialdo:list[int]=[1,5]
    lmodprimorialdo:int=len(modprimorialdo)
    ddocol:int=C0
    fdorowcol:list[int]=[C0,C0]
    artp:int=C0
    # and later:
    # there are assignment statements for useful values for these variables, and then:
    artp:int=int(ddocol+fdorowcol[C0]*lmodprimorialdo)
```

The full error report is :

```

Traceback (most recent call last):

File "/home/dakra/./primes2025a.py", line 810, in <module>

print("sieve12",n,pr,len(primesl:=sieve12(n,pr)), primesl[:10], primesl[-10:])

^^^^^^^^^^^^^

File "/home/dakra/.local/lib/python3.12/site-packages/numba/core/dispatcher.py", line 423, in _compile_for_args

error_rewrite(e, 'typing')

File "/home/dakra/.local/lib/python3.12/site-packages/numba/core/dispatcher.py", line 364, in error_rewrite

raise e.with_traceback(None)

numba.core.errors.TypingError: Failed in nopython mode pipeline (step: nopython frontend)

Type of variable 'artp.2' cannot be determined, operation: call $1078load_global.2($binop_add1104.10, func=$1078load_global.2, args=[Var($binop_add1104.10, primes2025a.py:240)], kws=(), vararg=None, varkwarg=None, target=None), location: /home/dakra/./primes2025a.py (240)

File "primes2025a.py", line 240:

def sieve12(upToNumm: int=100000000, pnprimorial:int=3)->list[int]:

<source elided>

artp:int=int(ddocol+fdorowcol[C0]*lmodprimorialdo)

^

```

In another case, the jit processing complained about one of the parameters.

What does it take to get the jit to recognize the explicit type specifications?

### CPython versions tested on:

3.12

### Operating systems tested on:

Linux

|

open

|

2025-01-06T15:58:31Z

|

2025-02-14T01:57:27Z

|

https://github.com/numba/numba/issues/9886

|

[

"more info needed",

"stale"

] |

dakra137

| 3

|

pydantic/pydantic

|

pydantic

| 10,443

|

Schema generation error when serialization schema holds a reference

|

### Initial Checks

- [X] I confirm that I'm using Pydantic V2

### Description

The following code currently raises:

```python
from typing import Annotated

from pydantic import BaseModel, PlainSerializer


class Sub(BaseModel):
    pass


class Model(BaseModel):
    sub: Annotated[
        Sub,
        PlainSerializer(lambda v: v, return_type=Sub),
    ]

# pydantic_core._pydantic_core.SchemaError: Definitions error: definition `__main__.Sub:97954749220704` was never filled
```

The reason is that, before schema cleaning, the core schema looks like:

```python

{

│ 'type': 'definitions',

│ 'schema': {'type': 'definition-ref', 'schema_ref': '__main__.Model:107773130038816'},

│ 'definitions': [

│ │ {

│ │ │ 'type': 'model',

│ │ │ 'cls': <class '__main__.Sub'>,

│ │ │ 'schema': {'type': 'model-fields', 'fields': {}, 'model_name': 'Sub', 'computed_fields': []},

│ │ │ 'config': {'title': 'Sub'},

│ │ │ 'ref': '__main__.Sub:107773128271744',

│ │ │ 'metadata': {'<stripped>'}

│ │ },

│ │ {

│ │ │ 'type': 'model',

│ │ │ 'cls': <class '__main__.Model'>,

│ │ │ 'schema': {

│ │ │ │ 'type': 'model-fields',

│ │ │ │ 'fields': {

│ │ │ │ │ 'sub': {

│ │ │ │ │ │ 'type': 'model-field',

│ │ │ │ │ │ 'schema': {

│ │ │ │ │ │ │ 'type': 'definition-ref',

│ │ │ │ │ │ │ 'schema_ref': '__main__.Sub:107773128271744',

│ │ │ │ │ │ │ 'serialization': {

│ │ │ │ │ │ │ │ 'type': 'function-plain',

│ │ │ │ │ │ │ │ 'function': <function Model.<lambda> at 0x7ae519b4f060>,

│ │ │ │ │ │ │ │ 'info_arg': False,

│ │ │ │ │ │ │ │ 'return_schema': {'type': 'definition-ref', 'schema_ref': '__main__.Sub:107773128271744'}

│ │ │ │ │ │ │ }

│ │ │ │ │ │ },

│ │ │ │ │ │ 'metadata': {'<stripped>'}

│ │ │ │ │ }

│ │ │ │ },

│ │ │ │ 'model_name': 'Model',

│ │ │ │ 'computed_fields': []

│ │ │ },

│ │ │ 'config': {'title': 'Model'},

│ │ │ 'metadata': {'<stripped>'},

│ │ │ 'ref': '__main__.Model:107773130038816'

│ │ }

│ ]

}

```

Which is quite unusual: the `'definition-ref'` schema to `Sub` has a `serialization` key. Pydantic does not expect this to happen, especially during schema simplification (when counting refs):

https://github.com/pydantic/pydantic/blob/01daafaab0aae71d2fb57c42461b3d021a3c56d4/pydantic/_internal/_core_utils.py#L447-L463

At some point, `count_refs` is called with the following schema:

```python

{

│ 'type': 'definition-ref',

│ 'schema_ref': '__main__.Sub:106278832701040',

│ 'serialization': {

│ │ 'type': 'function-plain',

│ │ 'function': <function Model.<lambda> at 0x7aed4dc920c0>,

│ │ 'info_arg': False,

│ │ 'return_schema': {'type': 'definition-ref', 'schema_ref': '__main__.Sub:106278832701040'}

│ }

}

```

The "top level" `__main__.Sub` ref is properly counted, but we skip recursing into the `serialization` schema. In the end, the ref count for `__main__.Sub` is incorrect, and the definition ends up being incorrectly inlined, which results in the schema validation error.
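The undercount described above can be reproduced on plain dictionaries. The sketch below is a toy reference counter, not Pydantic's actual `count_refs` implementation; the `follow_serialization` flag and the shortened `"Sub"` ref name are assumptions made for illustration.

```python
from collections import Counter


def count_refs(schema, counts=None, follow_serialization=True):
    """Count 'definition-ref' nodes in a nested dict/list schema (toy version)."""
    if counts is None:
        counts = Counter()
    if isinstance(schema, dict):
        if schema.get("type") == "definition-ref":
            counts[schema["schema_ref"]] += 1
        for key, value in schema.items():
            # Skipping this key mimics the behaviour described in the issue.
            if key == "serialization" and not follow_serialization:
                continue
            count_refs(value, counts, follow_serialization)
    elif isinstance(schema, list):
        for item in schema:
            count_refs(item, counts, follow_serialization)
    return counts


# Same shape as the schema passed to `count_refs` in the issue, with a short ref name.
field_schema = {
    "type": "definition-ref",
    "schema_ref": "Sub",
    "serialization": {
        "type": "function-plain",
        "info_arg": False,
        "return_schema": {"type": "definition-ref", "schema_ref": "Sub"},
    },
}

skipped = count_refs(field_schema, follow_serialization=False)["Sub"]
followed = count_refs(field_schema, follow_serialization=True)["Sub"]
print(skipped, followed)
```

With the `serialization` key skipped the counter sees one reference to `Sub`; when it recurses into that key it sees two, which is the difference that decides whether a definition looks safe to inline.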

### Example Code

_No response_

### Python, Pydantic & OS Version

```Text

2.9

```

|

closed

|

2024-09-19T07:32:28Z

|

2024-09-19T13:42:42Z

|

https://github.com/pydantic/pydantic/issues/10443

|

[

"bug V2"

] |

Viicos

| 0

|

microsoft/nni

|

deep-learning

| 5,224

|

AttributeError: 'torch._C.Node' object has no attribute 'schema'

|

I am using the tool to try to prune my model, following (https://github.com/microsoft/nni/blob/dab51f799f77aa72c18774faffaedf8d0ee2c977/examples/model_compress/pruning/admm_pruning_torch.py)

I only changed the model (to a ResNet) and the dataloader.

But now I get an error when I use ModelSpeedup:

File "<ipython-input-7-25297990bbbb>", line 1, in <module>

ModelSpeedup(model, torch.randn([1, 2, 224, 224]).to(device), masks).speedup_model()

File "D:\anaconda3\lib\site-packages\nni\compression\pytorch\speedup\compressor.py", line 543, in speedup_model

self.infer_modules_masks()

File "D:\anaconda3\lib\site-packages\nni\compression\pytorch\speedup\compressor.py", line 380, in infer_modules_masks

self.update_direct_sparsity(curnode)

File "D:\anaconda3\lib\site-packages\nni\compression\pytorch\speedup\compressor.py", line 228, in update_direct_sparsity

func = jit_to_python_function(node, self)

File "D:\anaconda3\lib\site-packages\nni\compression\pytorch\speedup\jit_translate.py", line 554, in jit_to_python_function

return trans_func_dict[node.op_type](node, speedup)

File "D:\anaconda3\lib\site-packages\nni\compression\pytorch\speedup\jit_translate.py", line 488, in generate_aten_to_python

schema = c_node.schema()

AttributeError: 'torch._C.Node' object has no attribute 'schema'

|

closed

|

2022-11-11T14:10:09Z

|

2022-12-05T02:38:01Z

|

https://github.com/microsoft/nni/issues/5224

|

[] |

sunpeil

| 3

|

huggingface/transformers

|

pytorch

| 36,025

|

HIGGS Quantization not working properly

|

### System Info

**Environment**

```

- `transformers` version: 4.48.2

- Platform: Linux-5.4.210-39.1.pagevecsize-x86_64-with-glibc2.27

- Python version: 3.11.10

- Huggingface_hub version: 0.26.2

- Safetensors version: 0.4.5

- Accelerate version: 1.1.1

- Accelerate config: not found

- PyTorch version (GPU?): 2.4.0+cu121 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using distributed or parallel set-up in script?: <fill in>

- Using GPU in script?: <fill in>

- GPU type: NVIDIA A100-SXM4-80GB

- fast_hadamard_transform 1.0.4.post1

```

### Who can help?

@BlackSamorez

@SunMarc

@ArthurZucker

### Information

- [ ] The official example scripts

- [x] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

Recently, in the [PR](https://github.com/huggingface/transformers/pull/34997) HIGGS quantization from the paper [Pushing the Limits of Large Language Model Quantization via the Linearity Theorem](https://arxiv.org/abs/2411.17525) was introduced.

But when attempting to load the quantized `Llama-3.1-8B-Instruct` model in this format as follows:

```python
import torch
from transformers import AutoModelForCausalLM, HiggsConfig

model_name = "meta-llama/Llama-3.1-8B-Instruct"
quantization_config = HiggsConfig(bits=4, p=2)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    device_map="auto",
    torch_dtype=torch.bfloat16,
    low_cpu_mem_usage=True,
    quantization_config=quantization_config
)
model.config.use_cache = False
```

And doing a forward pass with dummy inputs:

```python
inputs = torch.randint(0, model.config.vocab_size, device="cuda", size=(8,))
with torch.no_grad():
    outputs = model(inputs)
```

I get the following error in the RoPE:

```bash

File ~/miniconda3/envs/llm/lib/python3.11/site-packages/transformers/models/llama/modeling_llama.py:271, in LlamaAttention.forward(self, hidden_states, position_embeddings, attention_mask, past_key_value, cache_position, **kwargs)
    268 value_states = self.v_proj(hidden_states).view(hidden_shape).transpose(1, 2)
    270 cos, sin = position_embeddings
--> 271 query_states, key_states = apply_rotary_pos_emb(query_states, key_states, cos, sin)
    273 if past_key_value is not None:
    274     # sin and cos are specific to RoPE models; cache_position needed for the static cache
    275     cache_kwargs = {"sin": sin, "cos": cos, "cache_position": cache_position}

File ~/miniconda3/envs/llm/lib/python3.11/site-packages/transformers/models/llama/modeling_llama.py:169, in apply_rotary_pos_emb(q, k, cos, sin, position_ids, unsqueeze_dim)
    167 cos = cos.unsqueeze(unsqueeze_dim)
    168 sin = sin.unsqueeze(unsqueeze_dim)
--> 169 q_embed = (q * cos) + (rotate_half(q) * sin)
    170 k_embed = (k * cos) + (rotate_half(k) * sin)
    171 return q_embed, k_embed

RuntimeError: The size of tensor a (32) must match the size of tensor b (128) at non-singleton dimension 3

```

### Expected behavior

I would expect successful forward pass through the quantized model.

|

closed

|

2025-02-04T08:55:00Z

|

2025-02-19T05:35:52Z

|

https://github.com/huggingface/transformers/issues/36025

|

[

"bug"

] |

Godofnothing

| 3

|

ipython/ipython

|

jupyter

| 14,377

|

Not properly detecting IPython usage inside virtualenvs created via `uv venv`

|

I used the [`uv`](https://pypi.org/project/uv/) tool to create my venv and also to install ipython into it (via `uv pip install ipython`). When starting ipython I see the following warning:

```

C:\Users\jburnett1\Code\coordinates data analysis\.venv\Lib\site-packages\IPython\core\interactiveshell.py:937: UserWarning: Attempting to work in a virtualenv. If you encounter problems, please install IPython inside the virtualenv.

warn(

```

But if I examine the location of the IPython module it's definitely inside the currently activated venv:

```

import IPython

IPython

Out[4]: <module 'IPython' from 'C:\\Users\\jburnett1\\Code\\coordinates data analysis\\.venv\\Lib\\site-packages\\IPython\\__init__.py'>

import sys

sys.executable

Out[6]: 'C:\\Users\\jburnett1\\Code\\coordinates data analysis\\.venv\\Scripts\\python.exe'

```

I did find [this very old issue](https://github.com/ipython/ipython/issues/10955) which seemed to have a similar root cause, but the fix doesn't apparently work with the way that `uv` creates venvs & installs packages into them.

My version info:

```

sys.version

Out[7]: '3.12.1 (tags/v3.12.1:2305ca5, Dec 7 2023, 22:03:25) [MSC v.1937 64 bit (AMD64)]'

IPython.__version__

Out[8]: '8.22.2'

```

This is w/ uv version 0.1.6.
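For comparison, here is a minimal standard-library sketch of how one might inspect the interpreter's own view of the environment. This is not the check IPython performs in `interactiveshell.py`; `venv_status` is a hypothetical helper, and the `sys.prefix != sys.base_prefix` comparison is the general test for running inside a venv.

```python
import os
import sys


def venv_status():
    """Collect the values commonly used to decide whether a venv is active."""
    return {
        # True when the interpreter was started from a virtual environment.
        "in_venv": sys.prefix != sys.base_prefix,
        "prefix": sys.prefix,
        "base_prefix": sys.base_prefix,
        # Set by activation scripts; may be missing if the venv was not activated.
        "VIRTUAL_ENV": os.environ.get("VIRTUAL_ENV"),
        "executable": sys.executable,
    }


status = venv_status()
for key, value in status.items():
    print(f"{key}: {value}")
```

Running this inside the same session that prints the warning shows whether `VIRTUAL_ENV` and `sys.prefix` agree, which may help narrow down why the warning is triggered.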

|

open

|

2024-03-28T14:18:37Z

|

2024-07-26T07:26:44Z

|

https://github.com/ipython/ipython/issues/14377

|

[] |

joshburnett

| 2

|

coqui-ai/TTS

|

deep-learning

| 2,649

|

[Bug]

|

### Describe the bug

Cannot find speaker file when using local nl models

### To Reproduce

1. download the nl model files

2. Run the code:

```
tts = TTS(model_path = <"model_path">,
          config_path =<"config_path">,
          vocoder_path = None,
          vocoder_config_path = None,
          progress_bar = False,
          gpu = False)
```

### Expected behavior

The same normal behaviour as running `TTS('tts_models/nl/css10/vits', progress_bar=False, gpu=False)`

### Logs

```shell

FileNotFoundError: [Errno 2] No such file or directory: '/root/.cache/huggingface/hub/models--neongeckocom--tts-vits-css10-nl/snapshots/be7a7c7bee463588626b10777d7fc14ed8c07a3e/speaker_ids.json'

```

### Environment

```shell

TTS==0.13.3

```

### Additional context

working on a docker container

|

closed

|

2023-06-02T15:26:29Z

|

2023-06-05T08:00:42Z

|

https://github.com/coqui-ai/TTS/issues/2649

|

[

"bug"

] |

SophieDC98

| 1

|

pallets/flask

|

flask

| 5,004

|

Flask routes to return domain/sub-domains information

|

Currently, **flask routes** lists all routes, but **there is no way to see which routes are assigned to which subdomain**.

**Default server name:**

SERVER_NAME: 'test.local'

**Domains (sub-domains):**

test.test.local

admin.test.local

test.local

**Adding blueprints:**

app.register_blueprint(admin_blueprint,url_prefix='',subdomain='admin')

app.register_blueprint(test_subdomain_blueprint,url_prefix='',subdomain='test')

```

$ flask routes

* Tip: There are .env or .flaskenv files present. Do "pip install python-dotenv" to use them.

Endpoint Methods Rule

------------------------------------------------------- --------- ------------------------------------------------

admin_blueprint.home GET /home

test_subdomain_blueprint.home GET /home

static GET /static/<path:filename>

...

```

**Feature request**

It would be good to see something like the output below. That would make it clearer which route belongs to which subdomain; right now I need to go and check the configuration.

**If it is not possible to change `flask routes`**, can you add or point to the method(s) that should be used to get the information below from Flask?

```

$ flask routes

* Tip: There are .env or .flaskenv files present. Do "pip install python-dotenv" to use them.

Domain Endpoint Methods Rule

----------------- ---------------------------------------------------- ---------- ------------------------------------------------

admin.test.local admin_blueprint.home GET /home

test.test.local test_subdomain_blueprint.home GET /home

test.local static GET /static/<path:filename>

...

```

|

closed

|

2023-02-26T17:25:08Z

|

2023-04-29T00:05:02Z

|

https://github.com/pallets/flask/issues/5004

|

[

"cli"

] |

rimvislt

| 0

|

gradio-app/gradio

|

python

| 9,959

|

gr.File should add .html extension to URLs if possible

|

### Describe the bug

When using gr.File, the file uploaded keeps its extension. However, when using a URL like `https://liquipedia.net/starcraft2/Adept`, the file saved in the cache has no extension. This is technically correct. However, since it's HTML being downloaded, it should really save with the `.html` extension (equivalent to right-click and Save As in the browser). This makes it easier for a user to identify where it came from and also makes it easier for file type detection (avoiding the need for things like libmagic). Maybe it could just be a "default_web_extension" option that the user sets in the control.

The data source is lost when the upload happens (probably for security reasons?), so once a file has been downloaded to cache, you can't tell what it was originally or where it came from. The workaround would be to assume no extension means HTML, but that's a little error prone too.
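The suggested behaviour can be sketched with the standard library alone. This is not Gradio's code: `cache_name_for_url` is a hypothetical helper, `default_web_extension` is the option name proposed above rather than an existing parameter, and the `content_type` argument assumes the caller has the response's `Content-Type` header available.

```python
import posixpath
from urllib.parse import urlparse


def cache_name_for_url(url, content_type=None, default_web_extension=".html"):
    """Pick a cache file name for a URL, adding an extension when the path has none."""
    path = urlparse(url).path
    name = posixpath.basename(path.rstrip("/")) or "index"
    _, ext = posixpath.splitext(name)
    if ext:
        # The URL already carries an extension, keep it as-is.
        return name
    # Fall back to the default only when the response is HTML or its type is unknown.
    media_type = (content_type or "text/html").split(";")[0].strip().lower()
    if media_type == "text/html":
        return name + default_web_extension
    return name


print(cache_name_for_url("https://liquipedia.net/starcraft2/Adept",
                         "text/html; charset=UTF-8"))
```

Under these assumptions the example URL would be cached as `Adept.html`, while a URL that already ends in an extension, or a response with a non-HTML content type, keeps its original name.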

### Have you searched existing issues? 🔎

- [X] I have searched and found no existing issues

### Reproduction

Just use gr.File to upload a URL by pasting a URL into the File Explorer pop-up that appears when clicking Upload.

### Screenshot

_No response_

### Logs

_No response_

### System Info

```shell

gradio==5.4.0

```

### Severity

I can work around it

|

closed

|

2024-11-14T14:10:07Z

|

2024-12-25T20:46:28Z

|

https://github.com/gradio-app/gradio/issues/9959

|

[

"enhancement",

"needs designing"

] |

JohnDuncanScott

| 2

|

CorentinJ/Real-Time-Voice-Cloning

|

deep-learning

| 747

|

PATH ISSUE module not recognized after installation

|

Hello, the installation has gone well so far, but when I finally try to start it, I receive this error:

D:\Real Time Voice Cloning - Copy\Real-Time-Voice-Cloning-master>python demo_toolbox.py

Traceback (most recent call last):

File "demo_toolbox.py", line 2, in <module>

from toolbox import Toolbox

File "D:\Real Time Voice Cloning - Copy\Real-Time-Voice-Cloning-master\toolbox\__init__.py", line 1, in <module>

from toolbox.ui import UI

File "D:\Real Time Voice Cloning - Copy\Real-Time-Voice-Cloning-master\toolbox\ui.py", line 6, in <module>

from encoder.inference import plot_embedding_as_heatmap

File "D:\Real Time Voice Cloning - Copy\Real-Time-Voice-Cloning-master\encoder\inference.py", line 3, in <module>

from encoder.audio import preprocess_wav # We want to expose this function from here

File "D:\Real Time Voice Cloning - Copy\Real-Time-Voice-Cloning-master\encoder\audio.py", line 7, in <module>

import librosa

ModuleNotFoundError: No module named 'librosa'

The strange thing is that when I check for librosa or try to install it (specifically with pip install), librosa is already installed. I believe this is simply a path issue because I have a dual hard drive setup. How exactly do I set the path so that the demo toolbox can find librosa? If possible, could you include images, since I don't want to demand too much of your time, especially since you developed this amazing piece of software. Thank you for your diligence as well as your pro-level programming.
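One way to check whether the interpreter that runs `demo_toolbox.py` is the same one that pip installed into is to ask Python directly. The sketch below uses only the standard library; `where_is` is a hypothetical helper name.

```python
import importlib.util
import sys


def where_is(module_name):
    """Return the file a top-level module would be imported from, or None if not found."""
    spec = importlib.util.find_spec(module_name)
    return None if spec is None else spec.origin


# The interpreter actually running this script.
print("interpreter:", sys.executable)
# None here means this interpreter cannot see librosa, wherever pip put it.
print("librosa:", where_is("librosa"))
```

If this prints `None` for librosa, running `python -m pip --version` with the same `python` command shows which interpreter and site-packages directory that pip belongs to, which can be compared against the path printed above.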

|

closed

|

2021-04-28T21:53:03Z

|

2021-05-04T17:20:36Z

|

https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/747

|

[] |

PsychoJack88

| 1

|

miguelgrinberg/python-socketio

|

asyncio

| 558

|

unable to stream saved videos from client to server

|

I can send my live video feed from client to server but when I am streaming the saved video stream I am unable to send it. It shows a segmentation fault.

Given below is my code

```python
from flask import Flask, render_template, request
import socketio
from time import sleep
import cv2
import json
import base64

cap=cv2.VideoCapture('768x576.avi')
sio = socketio.Client(engineio_logger=True)

@sio.event
def connect():
    print("CONNECTED")

@sio.event
def send_data():
    i=0
    while(1):
        ret,img=cap.read()
        if ret:
            img = cv2.resize(img, (0,0), fx=0.3, fy=0.3)
            frame = cv2.imencode('.jpg', img)[1].tobytes()
            frame = base64.encodebytes(frame).decode("utf-8")
            message(frame)
        else:
            break
        sleep(0.1)

def message(json):
    print("/////////////////////////////500")
    sio.emit('send',json)

@sio.event
def disconnect():
    print("DISCONNECTED")

if __name__ == '__main__':
    sio.connect('http://localhost:5000')
    sio.wait()
```

Thanks in advance

|

closed

|

2020-11-02T13:14:02Z

|

2020-11-03T05:19:26Z

|

https://github.com/miguelgrinberg/python-socketio/issues/558

|

[

"question"

] |

AhmedBhati

| 2

|

dmlc/gluon-nlp

|

numpy

| 1,094

|

Add length normalized loss metrics in API

|

## Description

In typical machine translation tasks, training/validation metric loss is computed using some loss function normalized by the length of target sequence. For example, in `train_gnmt.py`, the metric is computed with the following code:

```python

loss = loss_function(out, tgt_seq[:, 1:], tgt_valid_length - 1).mean()

loss = loss * (tgt_seq.shape[1] - 1) / (tgt_valid_length - 1).mean()

```

The current MXNet `metric.loss` does not support length normalization. It would be great to add a length-normalized metric to the API.
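A minimal sketch of what such a metric could look like, written in plain Python rather than against the MXNet metric API. `LengthNormalizedLoss` and its `update`/`get` methods are hypothetical names, and the per-token loss lists stand in for the arrays a real loss function would return.

```python
class LengthNormalizedLoss:
    """Running average of loss per valid target token (illustrative only)."""

    def __init__(self):
        self.total_loss = 0.0
        self.total_tokens = 0

    def update(self, token_losses, valid_lengths):
        """Accumulate one batch.

        token_losses: one list of per-token losses per sample (padded positions included).
        valid_lengths: number of real (non-padding) tokens in each sample.
        """
        for losses, length in zip(token_losses, valid_lengths):
            # Ignore padded positions beyond the valid length.
            self.total_loss += sum(losses[:length])
            self.total_tokens += length

    def get(self):
        if self.total_tokens == 0:
            return float("nan")
        return self.total_loss / self.total_tokens


metric = LengthNormalizedLoss()
# Two samples padded to length 3, with 2 and 1 valid tokens respectively.
metric.update([[1.0, 2.0, 0.0], [3.0, 0.0, 0.0]], [2, 1])
print(metric.get())
```

Accumulating the summed loss and the token count separately means batches with different amounts of padding are weighted by their real token counts, instead of averaging per-batch means.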

## References

- https://github.com/apache/incubator-mxnet/blob/master/python/mxnet/metric.py#L1661

- https://github.com/dmlc/gluon-nlp/blob/master/scripts/machine_translation/train_gnmt.py

|

closed

|

2020-01-06T07:18:17Z

|

2020-01-22T06:55:49Z

|

https://github.com/dmlc/gluon-nlp/issues/1094

|

[

"enhancement"

] |

liuzh47

| 0

|

napari/napari

|

numpy

| 7,329

|

napari freeze - QXcbIntegration: Cannot create platform OpenGL context, neither GLX nor EGL are enabled

|

### 🐛 Bug Report

When starting napari, I get a frozen napari window displaying the terminal, and the following error:

```

(napari) biop@c79840740ddb:~$ napari

WARNING: QStandardPaths: XDG_RUNTIME_DIR not set, defaulting to '/tmp/runtime-biop'

08:49:29 : WARNING : MainThread : QStandardPaths: XDG_RUNTIME_DIR not set, defaulting to '/tmp/runtime-biop'

WARNING: QXcbIntegration: Cannot create platform OpenGL context, neither GLX nor EGL are enabled

08:49:29 : WARNING : MainThread : QXcbIntegration: Cannot create platform OpenGL context, neither GLX nor EGL are enabled

WARNING: QXcbIntegration: Cannot create platform offscreen surface, neither GLX nor EGL are enabled

08:49:29 : WARNING : MainThread : QXcbIntegration: Cannot create platform offscreen surface, neither GLX nor EGL are enabled

WARNING: QXcbIntegration: Cannot create platform OpenGL context, neither GLX nor EGL are enabled

08:49:32 : WARNING : MainThread : QXcbIntegration: Cannot create platform OpenGL context, neither GLX nor EGL are enabled

WARNING: QXcbIntegration: Cannot create platform OpenGL context, neither GLX nor EGL are enabled

08:49:32 : WARNING : MainThread : QXcbIntegration: Cannot create platform OpenGL context, neither GLX nor EGL are enabled

WARNING: composeAndFlush: QOpenGLContext creation failed

```

### 💡 Steps to Reproduce

1. build a Docker image with the following Dockerfile:

```dockerfile

ARG ALIAS=biop/

ARG BASE_IMAGE=0.1.0

FROM ${ALIAS}biop-vnc-base:${BASE_IMAGE}

USER root

# create napari env

RUN conda create --name napari napari=0.5.4 pyqt -c conda-forge -y

RUN chown -R biop:biop /home/biop/ \

&& chmod -R a+rwx /home/biop/

#################################################################

# Container start

USER biop

WORKDIR /home/biop

ENTRYPOINT ["/usr/local/bin/jupyter"]

CMD ["lab", "--allow-root", "--ip=*", "--port=8888", "--no-browser", "--NotebookApp.token=''", "--NotebookApp.allow_origin='*'", "--notebook-dir=/home/biop"]

```

2. start the image, follow the link to http://localhost:8888/lab

3. click on VNC icon , to start the desktop

4. start a terminal

5. type `source activate napari`

6. type napari

7. get the error

### 💡 Expected Behavior

napari window should pop-up normally

### 🌎 Environment

not working env : [napari_notworking.txt](https://github.com/user-attachments/files/17343548/napari_notworking.txt)

working env, with an old version (0.4.18) of napari and devbio-napari: [devbio_working.txt](https://github.com/user-attachments/files/17343551/devbio_working.txt)

### 💡 Additional Context

Thank you for any suggestion you might have

Cheers,

Romain

|

closed

|

2024-10-11T13:55:28Z

|

2025-03-10T09:54:12Z

|

https://github.com/napari/napari/issues/7329

|

[

"bug"

] |

romainGuiet

| 10

|

sunscrapers/djoser

|

rest-api

| 243

|

Changing Email Templates and Functionality

|

I was unable to change the email templates for the Activation, Confirmation, or PasswordReset emails. I wanted to add more to the context, such as a URL with a custom URL scheme for an app (not just http:// or https://).

I created pull request #242, but if there is another way to do this currently, it would be much appreciated. I prefer to stay on the release branch because #242 may be rejected or take a while to make it into a release.

|

closed

|

2017-11-01T13:13:22Z

|

2017-11-05T01:41:30Z

|

https://github.com/sunscrapers/djoser/issues/243

|

[] |

hammadzz

| 4

|

globaleaks/globaleaks-whistleblowing-software

|

sqlalchemy

| 3,185

|

Add support for Ubuntu Jammy Jellyfish (22.04)

|

This ticket is to extend support to [Ubuntu Jammy Jellyfish (22.04)](https://ubuntu.com/about/release-cycle), which is now in beta and is due for release on April 21, 2022.

|

closed

|

2022-02-28T14:43:44Z

|

2022-08-24T07:27:05Z

|

https://github.com/globaleaks/globaleaks-whistleblowing-software/issues/3185

|

[

"C: Packaging"

] |

evilaliv3

| 0

|

encode/uvicorn

|

asyncio

| 1,851

|

Route identification problem with pyinstaller

|

fastapi 0.89.1

uvicorn[standard] 0.20.0 (it did not happen on 0.17.6)

python 3.11.1

pyinstaller 5.7.0

This problem only happens when I package the program with PyInstaller using uvicorn 0.20.0; when I downgrade to 0.17.6, it is fixed.

When I use two routes like this, the route whose name ends with "s" does not get the state.

The route config/{name} can get the state, but /configs cannot; when I rename /configs to /configlist, it works.

|

closed

|

2023-01-28T14:40:42Z

|

2023-01-28T21:05:42Z

|

https://github.com/encode/uvicorn/issues/1851

|

[] |

wxh0402

| 0

|

akfamily/akshare

|

data-science

| 5,620

|

AKShare Interface Issue Report

|

> Welcome to join the "Data Science in Practice" Knowledge Community for discussions on financial data and quantitative investment.

>

> For detailed information, please visit: https://akshare.akfamily.xyz/learn.html

## Prerequisites

Before reporting any issues, please upgrade your AKShare to the **latest version** using the following command:

```
pip install akshare --upgrade # requires Python 3.8 or later
```

## How to Submit an Issue

Please provide the following information when submitting an issue for more accurate problem resolution.

**Issues that don't follow these guidelines will be closed!**

**Detailed Problem Description**

1. Please read the documentation thoroughly for the relevant interface: https://akshare.akfamily.xyz

2. Operating system version (only 64-bit operating systems are currently supported)

3. Python version (only 3.8 or above is currently supported)

4. AKShare version (please upgrade to the latest version)

5. Interface name and corresponding calling code

6. Screenshot or description of the error

7. Expected correct results

|

closed

|

2025-02-16T05:43:44Z

|

2025-02-16T09:32:27Z

|

https://github.com/akfamily/akshare/issues/5620

|

[

"bug"

] |

cnfaxian

| 1

|

vimalloc/flask-jwt-extended

|

flask

| 280

|

flask_restplus and setting cookies

|

I'm using Flask-RESTPlus and I'm trying to set JWT cookies, but it looks like `flask_restplus` doesn't allow returning a `jsonify` object, so I can't set the cookies in the response.

```

@api.route('/sign-in')
class SignInResource(Resource):
    def post(self):
        username_or_email = request.json.get('username_or_email', None)
        password = request.json.get('password', None)

        # Reject the request if either credential does not match
        if username_or_email != "test" or password != "test":
            return {"error": "bad credentials"}

        # Note: `user` is not defined in this snippet
        access_token = create_access_token(identity=user.id)
        refresh_token = create_refresh_token(identity=user.id)

        ret = {'sign_in': True}
        resp = jsonify(ret)
        set_access_cookies(resp, access_token)
        set_refresh_cookies(resp, refresh_token)

        return resp, 200 # DONT ALLOW return JSONIFY Object

```

Is there a workaround for this?

|

closed

|

2019-10-17T07:59:22Z

|

2019-10-17T14:52:17Z

|

https://github.com/vimalloc/flask-jwt-extended/issues/280

|

[] |

psdon

| 2

|

zappa/Zappa

|

django

| 928

|

[Migrated] Is it possible to not include the slug in the function name?

|

Originally from: https://github.com/Miserlou/Zappa/issues/2194 by [buckmaxwell](https://github.com/buckmaxwell)

<!--- Provide a general summary of the issue in the Title above -->

## Context

Is it possible to not include the slug in the function name? I.e., change the default function name from `<project-name>-<stage-name>` to just `<project-name>`.

I'd like to be able to deploy / update an existing Lambda by supplying an ARN or something. My Lambda was created by Terraform.

|

closed

|

2021-02-20T13:24:39Z

|

2024-04-13T19:36:45Z

|

https://github.com/zappa/Zappa/issues/928

|

[

"no-activity",

"auto-closed"

] |

jneves

| 2

|

yihong0618/running_page

|

data-visualization

| 558

|

`gen_svg.py` raises an error after setting `IGNORE_BEFORE_SAVING=1`

|

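Looking at the code, the problem appears to be in the `load_from_db` function in `run_page/gpxtrackposter/track.py`. According to git blame, it was introduced in this [commit](https://github.com/yihong0618/running_page/commit/f17a3e694a2ba102a218efc16e21a8cf83f0053b#diff-ea1ab7ec71fbeb34ad5d08163ac064c11b28e4ccbe97f75b1a876015aac51a8e).

When the environment variable is not set, the bug is not triggered. When it is set, `summary_polyline` is referenced before it is defined.

As I understand it, the code should be changed to look like this: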

```python

if IGNORE_BEFORE_SAVING:
    summary_polyline = filter_out(activity.summary_polyline)
else:
    summary_polyline = activity.summary_polyline

```
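
The failure mode can be reproduced in isolation. The sketch below is only a guess at the shape of the bug, not the project's actual code: `load_polyline_buggy`, `load_polyline_fixed`, and the body of `filter_out` are hypothetical stand-ins.

```python
def filter_out(polyline):
    # Hypothetical stand-in for the project's filter; here it just trims whitespace.
    return polyline.strip()


def load_polyline_buggy(raw, ignore_before_saving):
    # Only one branch binds the name, so the other path reads an unbound local.
    if not ignore_before_saving:
        summary_polyline = raw
    return summary_polyline


def load_polyline_fixed(raw, ignore_before_saving):
    # Both branches bind the name before it is used.
    if ignore_before_saving:
        summary_polyline = filter_out(raw)
    else:
        summary_polyline = raw
    return summary_polyline


try:
    load_polyline_buggy(" abc ", True)
    buggy_failed = False
except UnboundLocalError:
    buggy_failed = True

fixed_result = load_polyline_fixed(" abc ", True)
```

With the flag unset, both versions behave the same, which matches the observation that the bug only appears once the variable is set.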

|

closed

|

2023-12-02T13:31:06Z

|

2023-12-02T14:18:51Z

|

https://github.com/yihong0618/running_page/issues/558

|

[] |

conanyangqun

| 4

|

ivy-llc/ivy

|

pytorch

| 28,627

|

Fix Frontend Failing Test: tensorflow - activations.tensorflow.keras.activations.relu

|

To-do List: https://github.com/unifyai/ivy/issues/27499

|

closed

|

2024-03-17T23:57:00Z

|

2024-03-25T12:47:11Z

|

https://github.com/ivy-llc/ivy/issues/28627

|

[

"Sub Task"

] |

ZJay07

| 0

|

kizniche/Mycodo

|

automation

| 950

|

Python 3 code input not storing measurements after upgrading to 8.9.1

|

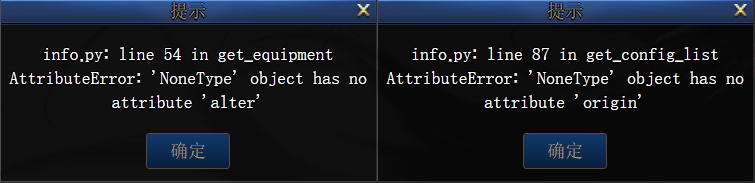

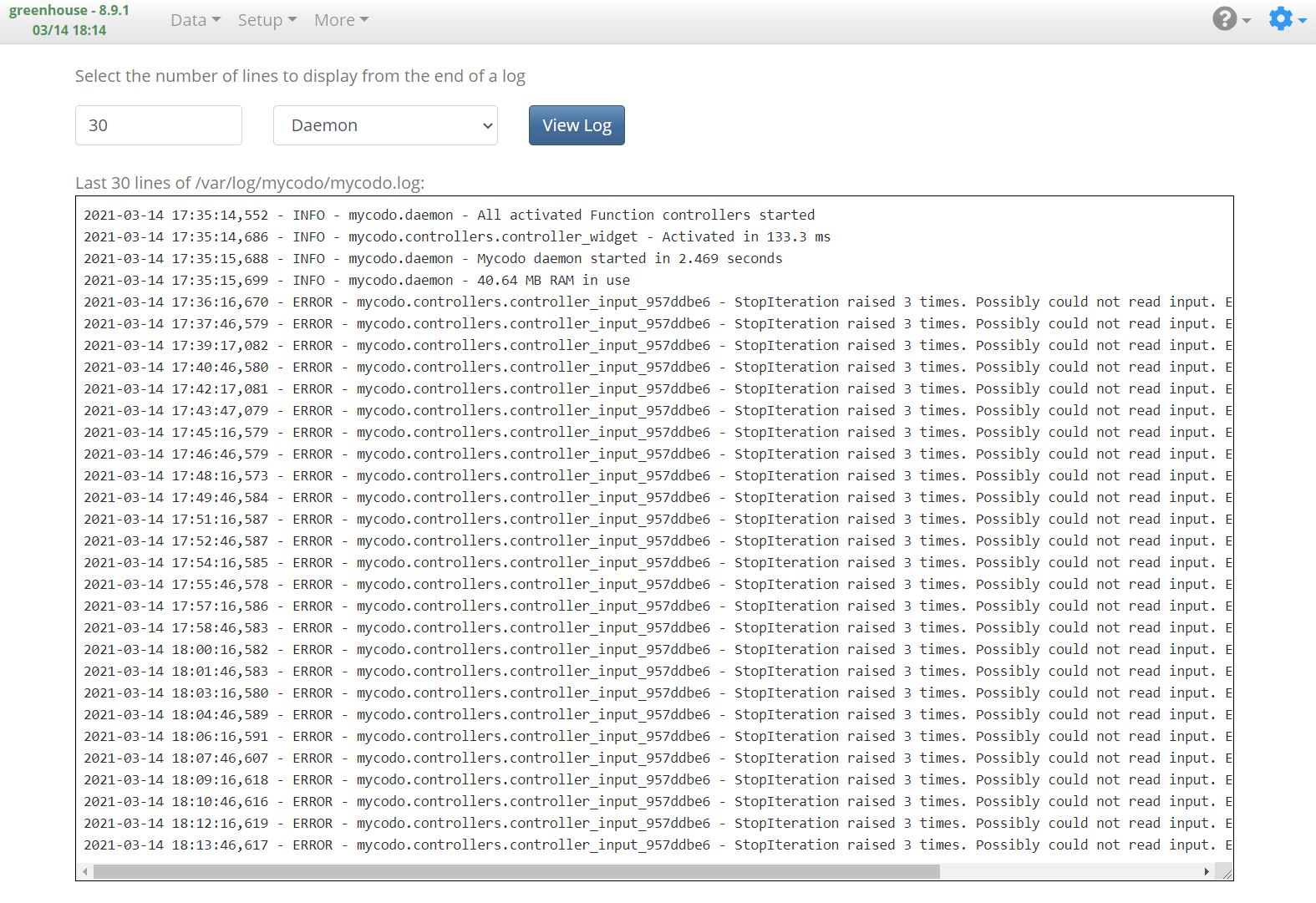

### Issue Summary

A Python 3 code input that was successfully running in Mycodo 8.8.8 without issues is now not storing measurements to the database. Mycodo is logging many of these errors:

`ERROR - mycodo.controllers.controller_input_957ddbe6 - StopIteration raised 3 times. Possibly could not read input. Ensure it's connected properly and detected.`

The code DOES successfully return the expected value, it just doesn't get stored. I can see the returned value in the Data -> Live view, and also on any of the dashboard widgets that use the live value. I can NOT view historical data (e.g. on either of the graph types) as I could on 8.8.8.

This is on a fresh install of Mycodo 8.9.1 with a single Python 3 input configured in the same manner (with the same code) that it was running successfully on 8.8.8.

### Versions:

- Mycodo Version: 8.9.1

- Raspberry Pi Version: 4

- Raspbian OS Version: Buster Lite 2020-02-13

### Reproducibility

The Python 3 code manipulates a JSN-SR04T sensor, so you'd need one to test. On a side note, I see that the latest version of Mycodo supports this sensor natively, but my environment has enough background noise that the sensor results are far too inconsistent to use without some manipulation, which I accomplish via code.

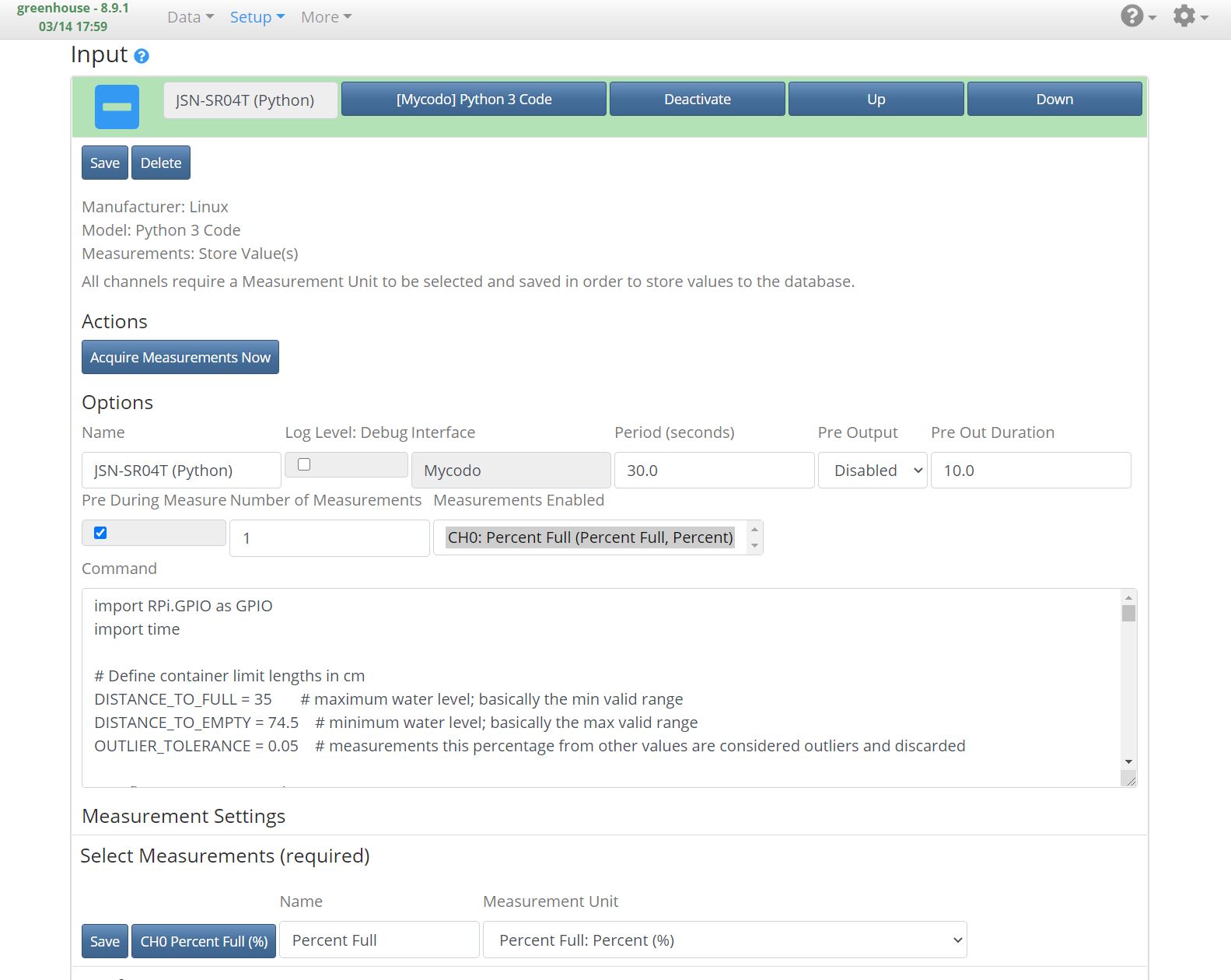

1. Go to 'Setup' -> 'Input'

2. Click on 'Linux: Python 3 Code: Store Value(s) [Mycodo]'

3. Configure as shown in this screenshot:

The full Python 3 code:

```

import RPi.GPIO as GPIO
import time

# Define container limit lengths in cm
DISTANCE_TO_FULL = 35 # maximum water level; basically the min valid range
DISTANCE_TO_EMPTY = 74.5 # minimum water level; basically the max valid range
OUTLIER_TOLERANCE = 0.05 # measurements this percentage from other values are considered outliers and discarded

# Define GPIO to use on Pi
GPIO_TRIGGER = 23
GPIO_ECHO = 18

TRIGGER_TIME = 0.00001
MAX_TIME = 0.1 # max time waiting for response in case something is missed
TIME_BETWEEN_MEASUREMENTS = 0.5 # time to sleep between each measurement

GPIO.setmode(GPIO.BCM)
GPIO.setwarnings(False)
GPIO.setup(GPIO_TRIGGER, GPIO.OUT) # Trigger
GPIO.setup(GPIO_ECHO, GPIO.IN, pull_up_down=GPIO.PUD_UP) # Echo
GPIO.output(GPIO_TRIGGER, False)

# This function measures a distance
def measure():
    # Pulse the trigger/echo line to initiate a measurement
    GPIO.output(GPIO_TRIGGER, True)
    time.sleep(TRIGGER_TIME)
    GPIO.output(GPIO_TRIGGER, False)

    # ensure start time is set in case of very quick return
    start = time.time()
    timeout = start + MAX_TIME

    # set line to input to check for start of echo response
    while GPIO.input(GPIO_ECHO) == 0 and start <= timeout:
        start = time.time()

    if(start > timeout):
        return -1

    stop = time.time()
    timeout = stop + MAX_TIME

    # Wait for end of echo response
    while GPIO.input(GPIO_ECHO) == 1 and stop <= timeout:
        stop = time.time()

    if(stop <= timeout):
        elapsed = stop-start
        distance = float(elapsed * 34300)/2.0
    else:
        return -1

    return distance

# take 3 measurements, discard outliers, return average result
def sample():
    measurements = []
    attempts = 0
    while len(measurements) < 3 and attempts < 15:
        if attempts > 0:
            time.sleep(TIME_BETWEEN_MEASUREMENTS)
        distance = measure()
        attempts += 1
        if(distance >= DISTANCE_TO_FULL and distance <= DISTANCE_TO_EMPTY):
            measurements.append(distance)

    if len(measurements) == 3:
        # check for and remove outlier measurements
        for i in range(len(measurements)):
            if is_outlier(measurements, i):
                measurements.pop(i)

        # if there are at least 2 good data points remaining, take the average and return it
        if len(measurements) >= 2:
            measure_sum = 0
            for i in range(len(measurements)):
                measure_sum += measurements[i]
            measure_avg = measure_sum/len(measurements)
            return (measure_avg)
        else:
            # we didn't have at least 2 good data points
            return -2
    else:
        return -1

# run the sample function twice, discard result if both values not within OUTLIER_TOLERANCE of each other
def double_sample():
    trials = [-1, -1]

    # run the two trials
    for i in range(len(trials)):
        attempt = 0
        while attempt < 6 and trials[i] < 0:
            attempt += 1
            trials[i] = sample()

    # compare the two results
    if is_outlier(trials, 0):
        # the two values aren't consistent
        return -1
    else:
        trial_avg = (trials[0] + trials[1])/2
        return trial_avg

# compare the value of the list item at the given index to every other item in the list
# if the given item varies by more than OUTLIER_TOLERANCE compared to the other items, it's an outlier
def is_outlier(list, index):
    if index > len(list)-1:
        return False
    else:
        outlier_compares = 0
        outlier_true = 0
        value = list[index]
        for i in range(len(list)):
            if i != index:
                outlier_compares += 1
                diff = abs(list[i] - value)
                diff_percent = diff/list[i]
                if diff_percent > OUTLIER_TOLERANCE:
                    outlier_true += 1
                else:
                    break

        if outlier_compares == outlier_true:
            # every other item has a value that is off by at least OUTLIER_TOLERANCE, this is an outlier
            return True
        else:
            # this item is within OUTLIER_TOLERANCE of every other item
            return False

# take the measurement
attempts = 0
percent_full = -1
while attempts < 5:
    attempts += 1
    result = double_sample()
    if result >= 0:
        percent_full = (1-(result/DISTANCE_TO_EMPTY)) * 100
        break

GPIO.cleanup()

# Store measurements in database (must specify the channel and measurement)
self.store_measurement(channel=0, measurement=percent_full)

```

4. Save/activate the input
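
For readers who only want the outlier-removal idea from the input code, here is a hardware-free sketch of it. It is a simplified restatement under a few assumptions, not the exact logic of the code in this report: `drop_outliers` is a hypothetical helper that builds a new list instead of popping items while iterating, readings are assumed to be positive distances, and a value with no other readings to compare against is treated as not being an outlier.

```python
OUTLIER_TOLERANCE = 0.05  # relative difference above which two readings disagree


def is_outlier(values, index):
    """Return True when values[index] disagrees with every other reading."""
    value = values[index]
    others = [v for i, v in enumerate(values) if i != index]
    if not others:
        return False
    return all(abs(other - value) / other > OUTLIER_TOLERANCE for other in others)


def drop_outliers(values):
    """Return a new list containing only the readings that are not outliers."""
    return [v for i, v in enumerate(values) if not is_outlier(values, i)]


readings = [50.0, 50.5, 70.0]
kept = drop_outliers(readings)
average = sum(kept) / len(kept)
```

Building a new list avoids the index shifting that happens when items are removed from a list that is being iterated by index.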

### Expected behavior

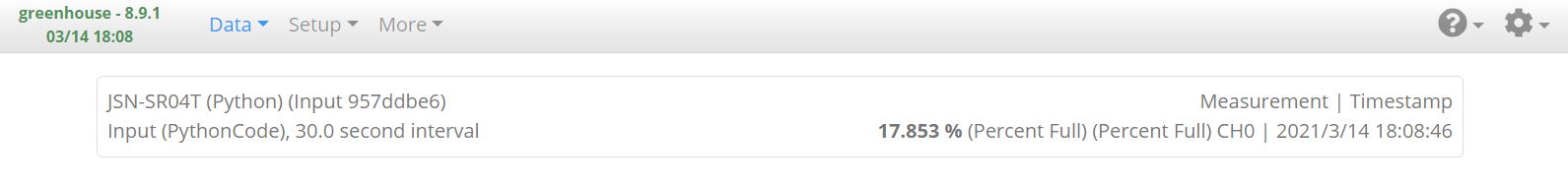

From here, I can navigate to Data -> Live and see the latest measurement as expected:

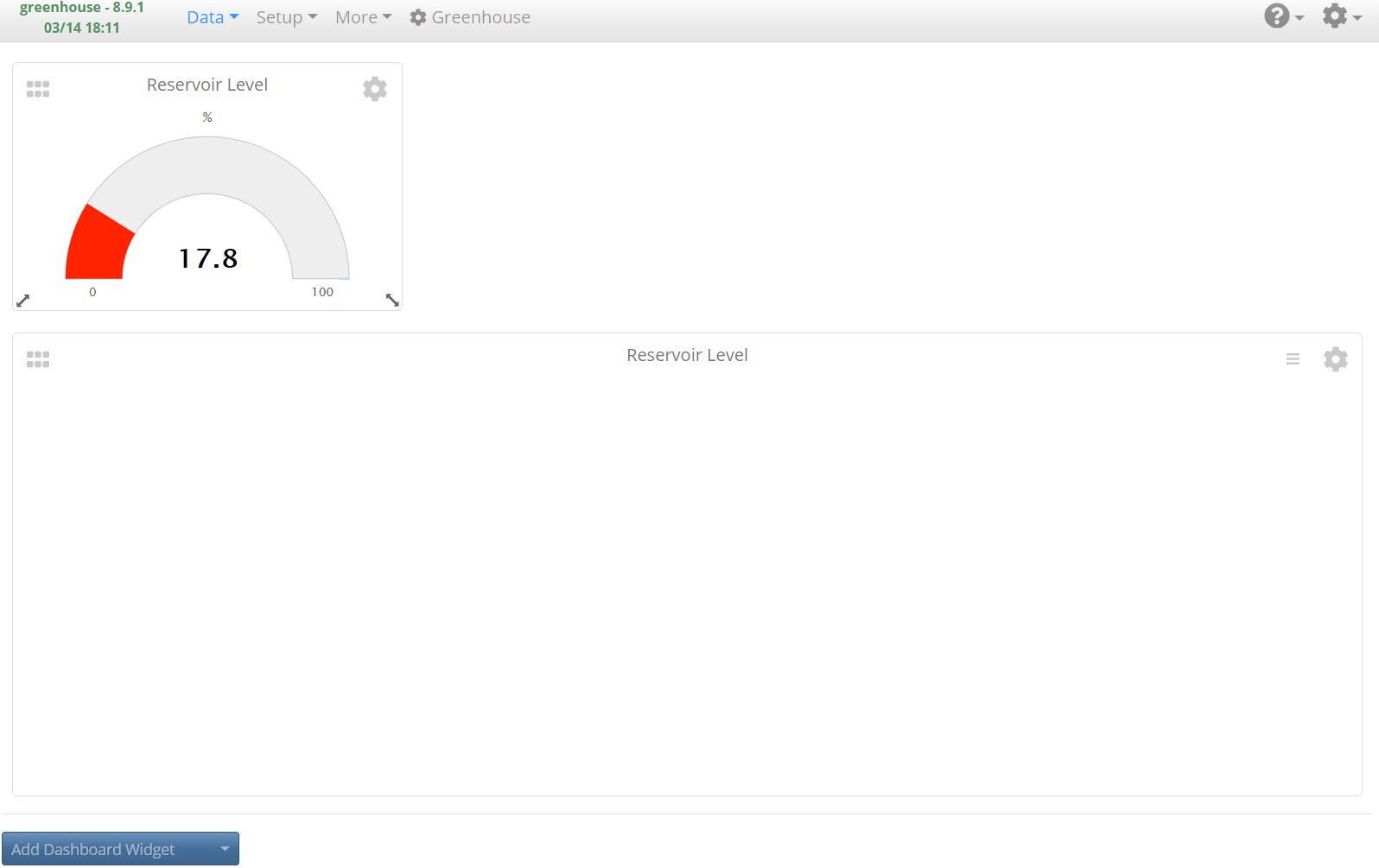

But if I try to create a graph widget, or view async graph data, they're just blank - presumably because historical data isn't being saved to the database properly:

Viewing the log, I see that a bunch of StopIteration errors are being thrown on many (but not all, judging by the timestamps) executions:

### Additional context

This code has been successfully running on a Mycodo 8.8.8 install for a couple weeks without issues. I attempted to use Mycodo's upgrade function to move to 8.9.1, but that broke my install (HTTP 500 errors on most pages, presumably from a dropped custom measurement I had created - I already created a [separate issue](https://github.com/kizniche/Mycodo/issues/949) for that). So I moved my Mycodo installation to a backup directory and installed 8.9.1 from scratch. Just mentioning in case that might play a part in the issue.

|

closed

|

2021-03-14T22:25:22Z

|

2021-03-18T17:01:36Z

|

https://github.com/kizniche/Mycodo/issues/950

|

[

"bug",

"Fixed and Committed"

] |

rbbrdckybk

| 6

|

jonaswinkler/paperless-ng

|

django

| 140

|

Show matching documents when clicking on document count

|

Hi :wave:,

I would like to make the following feature request proposal:

```bash

When I click on Tags in the Manage Menu

And I click on the document count of a specific tag

Then I see all associated documents in the document view using the tag as a filter

When I click on Correspondents in the Manage Menu

And I click on the document count of a specific correspondent

Then I see all associated documents in the document view using the correspondent as a filter

When I click on Document types in the Manage Menu

And I click on the document count of a specific document type

Then I see all associated documents in the document view using the document type as a filter

```

* Maybe this is obsolete with the new filter redesign, but I find it useful to reach the documents within these views

* I don't mind if the link is from document count, tag name, or else

|

closed

|

2020-12-15T16:30:54Z

|

2022-06-25T12:58:23Z

|

https://github.com/jonaswinkler/paperless-ng/issues/140

|

[

"feature request"

] |

Tooa

| 4

|

graphql-python/graphql-core

|

graphql

| 72

|

Exception instance returned from resolver is raised within executor instead of being passed down for further resolution.

|

Today, when experimenting with a third-party library for data validation (https://github.com/samuelcolvin/pydantic), I noticed that when I take the map of validation errors from it (a dict of lists of `ValueError` and `TypeError` subclass instances), I need to implement an extra step to convert those errors to something else, because the query executor includes a check for `isinstance(result, Exception)`. This check makes it raise the returned exception instance, effectively short-circuiting further resolution:

https://github.com/graphql-python/graphql-core-next/blob/master/src/graphql/execution/execute.py#L731

The fix was fairly simple to come up with: just write a util that converts those errors to a dict, as they are included in my result's `validation_errors` key. Still, I feel such boilerplate should be unnecessary:

```

try:
    ...  # run pydantic validation here
except (PydanticTypeError, PydanticValueError) as error:
    return {"validation_errors": flatten_validation_error(error)}

```
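
For illustration, a conversion util of this kind might look like the sketch below. `flatten_validation_errors` is a hypothetical helper written for this example (it is not part of pydantic or graphql-core), and it assumes the input is a dict mapping field names to lists of exception instances, as described above.

```python
def flatten_validation_errors(errors_by_field):
    """Convert {field: [exception, ...]} into {field: [message, ...]}."""
    return {
        field: [str(error) for error in errors]
        for field, errors in errors_by_field.items()
    }


raw_errors = {
    "age": [ValueError("must be positive")],
    "name": [TypeError("str expected"), ValueError("too short")],
}
flat = flatten_validation_errors(raw_errors)
```

The resulting dict contains only strings, so a resolver can return it without the executor treating any value as an exception to raise.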

Is this implementation a result of something in the spec, or a mechanism used to keep the code of other features (e.g. error propagation) simple? I think we should consider supporting this use case. A considerable number of libraries use exceptions for messaging (e.g. Django with its `ValidationError` and a bunch of `core.exceptions.*`).

|

closed

|

2019-12-14T22:35:16Z

|

2020-01-06T13:07:34Z

|

https://github.com/graphql-python/graphql-core/issues/72

|

[] |

rafalp

| 1

|

numpy/numpy

|

numpy

| 28,365

|

BUG: duplication in `ufunc.types`

|

### Describe the issue:

I'm working on type-test code generation, and I assumed that the `ufunc.types` entries would be unique. But as it turns out, that's not the case.

Specifically, these are all the duplicate `types` values in the public `numpy` namespace:

```

acos: e->e f->f d->d

acosh: e->e f->f d->d

asin: e->e f->f d->d

asinh: e->e f->f d->d

atan: e->e f->f d->d

atanh: e->e f->f d->d

cbrt: e->e f->f d->d

ceil: f->f d->d

cosh: e->e f->f d->d

exp: f->f d->d

exp2: e->e f->f d->d

expm1: e->e f->f d->d

floor: f->f d->d

log: f->f d->d

log10: e->e f->f d->d

log1p: e->e f->f d->d

log2: e->e f->f d->d

pow: ee->e ff->f dd->d

rint: f->f d->d

sinh: e->e f->f d->d

sqrt: f->f d->d

tan: e->e f->f d->d

tanh: e->e f->f d->d

trunc: f->f d->d

```

I'm guessing it's related to the e.g. `single` vs `float64` stuff.

In my use-case, it's not difficult to work around. But I thought this could maybe cause some performance issues or something 🤷🏻.
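
One possible workaround is an order-preserving de-duplication of the signature list. The sketch below uses a hard-coded sample list in the same `in->out` form as the entries above, as an assumption for illustration; it is not the actual value of any ufunc's `types`, and `unique_signatures` is a hypothetical helper name.

```python
def unique_signatures(types):
    """Drop repeated signatures while keeping first-seen order."""
    # dict preserves insertion order, so fromkeys() keeps the first occurrence.
    return list(dict.fromkeys(types))


sample_types = ["e->e", "f->f", "d->d", "f->f", "d->d", "g->g"]
deduped = unique_signatures(sample_types)
```

Using `dict.fromkeys` rather than `set` keeps the original ordering, which matters if the order of `types` is meaningful to the generated tests.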

### Reproduce the code example:

```python

import numpy as np

assert len(np.log.types) == len(set(np.log.types))

```

### Error message:

```shell

Traceback (most recent call last):

File "stop_eating_that_clay__son.py", line 3, in <module>

assert len(set(np.log.types)) == len(np.log.types)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

AssertionError

```

### Python and NumPy Versions:

```

2.2.3

3.13.2 (main, Feb 7 2025, 04:13:54) [GCC 11.4.0]

```

### Runtime Environment:

```

[{'numpy_version': '2.2.3',

'python': '3.13.2 (main, Feb 7 2025, 04:13:54) [GCC 11.4.0]',

'uname': uname_result(system='Linux', node='pop-os', release='6.9.3-76060903-generic', version='#202405300957~1738770968~22.04~d5f7c84 SMP PREEMPT_DYNAMIC Wed F', machine='x86_64')},

{'simd_extensions': {'baseline': ['SSE', 'SSE2', 'SSE3'],

'found': ['SSSE3',

'SSE41',

'POPCNT',

'SSE42',

'AVX',

'F16C',

'FMA3',

'AVX2'],

'not_found': ['AVX512F',

'AVX512CD',

'AVX512_KNL',

'AVX512_KNM',

'AVX512_SKX',

'AVX512_CLX',

'AVX512_CNL',

'AVX512_ICL']}},

{'architecture': 'Haswell',

'filepath': '/home/joren/.pyenv/versions/3.13.2/lib/python3.13/site-packages/numpy.libs/libscipy_openblas64_-6bb31eeb.so',

'internal_api': 'openblas',

'num_threads': 32,

'prefix': 'libscipy_openblas',

'threading_layer': 'pthreads',

'user_api': 'blas',

'version': '0.3.28'},

{'architecture': 'Haswell',

'filepath': '/home/joren/.pyenv/versions/3.13.2/lib/python3.13/site-packages/scipy.libs/libscipy_openblas-68440149.so',

'internal_api': 'openblas',

'num_threads': 32,

'prefix': 'libscipy_openblas',

'threading_layer': 'pthreads',

'user_api': 'blas',

'version': '0.3.28'}]

```

### Context for the issue:

_No response_

|

open

|

2025-02-20T18:39:58Z

|

2025-02-25T11:06:53Z

|

https://github.com/numpy/numpy/issues/28365

|

[

"00 - Bug"

] |

jorenham

| 1

|

MaartenGr/BERTopic

|

nlp

| 1,767

|

How to get more than 3 representative docs per topic via get_topic_info()?

|

Hey, thank you so much for making this library! Super awesome.

I've seen a bunch of issues here requesting this but haven't found a straightforward easy way to specify it via get_topic_info() as it contains a lot of the information I need. I wish there was a parameter in there like get_topic_info(number_of_representative_documents=3) that I could modify.

I'm not sure that `_extract_representative_docs` will work in my context as I'm using umap, hdbscan, and gpt for topic labels, no tfidf or anything, which seems to be a required parameter

|

open

|

2024-01-22T22:44:49Z

|

2024-03-20T09:09:16Z

|

https://github.com/MaartenGr/BERTopic/issues/1767

|

[] |

youssefabdelm

| 3

|

python-gitlab/python-gitlab

|

api

| 2,732

|

first run in jenkins always fails with GitlabHttpError: 403: insufficient_scope

|

## Description of the problem, including code/CLI snippet

We use Jenkins for our builds and use semantic versioning. Usually, when someone pushes something to master/main, Jenkins creates a new version, tags it in GitLab and builds the distribution. Unfortunately, for the past few weeks (not sure about that) we have seen very weird behaviour: the first run fails.

## Expected Behavior

a regular jenkins build with tags pushed to gitlab and no error

## Actual Behavior

We get the error `GitlabHttpError: 403: insufficient_scope` when Jenkins runs for the first time on that branch; when I then start it manually, it works. Even weirder: the tag is created!

```

(.pyenv-python) C:\Users\jenkinsSA\AppData\Local\Jenkins\.jenkins\workspace\usermgmtautomation_master>

git checkout master

Switched to branch 'master'

[Pipeline] bat

(.pyenv-python) C:\Users\jenkinsSA\AppData\Local\Jenkins\.jenkins\workspace\usermgmtautomation_master>

semantic-release version 1>version.txt

The next version is: 0.7.3! \U0001f680

No build command specified, skipping

[08:54:15] ERROR [semantic_release.cli.commands.version] version.py:553

ERROR version.version: 403:

insufficient_scope

+--- Traceback (most recent call last) ----+

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\gi |

| tlab\exceptions.py:336 in wrapped_f |

| |

| 333 @functools.wraps(f) |

| 334 def wrapped_f(*args: Any, |

| 335 try: |

| > 336 return f(*args, ** |

| 337 except GitlabHttpError |

| 338 raise error(e.erro |

| 339 |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\gi |

| tlab\mixins.py:300 in create |

| |

| 297 |

| 298 # Handle specific URL for |

| 299 path = kwargs.pop("path", |

| > 300 server_data = self.gitlab. |

| 301 if TYPE_CHECKING: |

| 302 assert not isinstance( |

| 303 assert self._obj_cls i |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\gi |

| tlab\client.py:1021 in http_post |

| |

| 1018 query_data = query_data o |

| 1019 post_data = post_data or |

| 1020 |

| > 1021 result = self.http_reques |

| 1022 "post", |

| 1023 path, |

| 1024 query_data=query_data |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\gi |

| tlab\client.py:794 in http_request |

| |

| 791 response_body |

| 792 ) |

| 793 |

| > 794 raise gitlab.exceptio |

| 795 response_code=res |

| 796 error_message=err |

| 797 response_body=res |

+------------------------------------------+

GitlabHttpError: 403: insufficient_scope

The above exception was the direct cause of

the following exception:

+--- Traceback (most recent call last) ----+

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\se |

| mantic_release\hvcs\gitlab.py:131 in |

| create_or_update_release |

| |

| 128 self, tag: str, release_no |

| 129 ) -> str: |

| 130 try: |

| > 131 return self.create_rel |

| 132 tag=tag, release_n |

| 133 ) |

| 134 except gitlab.GitlabCreate |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\se |

| mantic_release\helpers.py:52 in _wrapper |

| |

| 49 ) |

| 50 |

| 51 # Call function |

| > 52 result = func(*args, * |

| 53 |

| 54 # Log result |

| 55 logger.debug("%s -> %s |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\se |

| mantic_release\hvcs\gitlab.py:97 in |

| create_release |

| |

| 94 client.auth() |

| 95 log.info("Creating release |

| 96 # ref: https://docs.gitlab/ |

| > 97 client.projects.get(self.o |

| 98 { |

| 99 "name": "Release " |

| 100 "tag_name": tag, |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\gi |

| tlab\exceptions.py:338 in wrapped_f |

| |

| 335 try: |

| 336 return f(*args, ** |

| 337 except GitlabHttpError |

| > 338 raise error(e.erro |

| 339 |

| 340 return cast(__F, wrapped_f |

| 341 |

+------------------------------------------+

GitlabCreateError: 403: insufficient_scope

During handling of the above exception,

another exception occurred:

+--- Traceback (most recent call last) ----+

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\gi |

| tlab\exceptions.py:336 in wrapped_f |

| |

| 333 @functools.wraps(f) |

| 334 def wrapped_f(*args: Any, |

| 335 try: |

| > 336 return f(*args, ** |

| 337 except GitlabHttpError |

| 338 raise error(e.erro |

| 339 |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\gi |

| tlab\mixins.py:368 in update |

| |

| 365 ) |

| 366 |

| 367 http_method = self._get_up |

| > 368 result = http_method(path, |

| 369 if TYPE_CHECKING: |

| 370 assert not isinstance( |

| 371 return result |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\gi |

| tlab\client.py:1075 in http_put |

| |

| 1072 query_data = query_data o |

| 1073 post_data = post_data or |

| 1074 |

| > 1075 result = self.http_reques |

| 1076 "put", |

| 1077 path, |

| 1078 query_data=query_data |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\gi |

| tlab\client.py:794 in http_request |

| |

| 791 response_body |

| 792 ) |

| 793 |

| > 794 raise gitlab.exceptio |

| 795 response_code=res |

| 796 error_message=err |

| 797 response_body=res |

+------------------------------------------+

GitlabHttpError: 403: insufficient_scope

The above exception was the direct cause of

the following exception:

+--- Traceback (most recent call last) ----+

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\se |

| mantic_release\cli\commands\version.py:5 |

| 47 in version |

| |

| 544 noop_report(f"would ha |

| 545 else: |

| 546 try: |

| > 547 release_id = hvcs_ |

| 548 tag=new_versio |

| 549 release_notes= |

| 550 prerelease=new |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\se |

| mantic_release\helpers.py:52 in _wrapper |

| |

| 49 ) |

| 50 |

| 51 # Call function |

| > 52 result = func(*args, * |

| 53 |

| 54 # Log result |

| 55 logger.debug("%s -> %s |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\se |

| mantic_release\hvcs\gitlab.py:141 in |

| create_or_update_release |

| |

| 138 self.owner, |

| 139 self.repo_name, |

| 140 ) |

| > 141 return self.edit_relea |

| 142 |

| 143 def compare_url(self, from_rev |

| 144 return |

| f"https://{self.hvcs_domain}/{self |

| v}" |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\se |

| mantic_release\helpers.py:52 in _wrapper |

| |

| 49 ) |

| 50 |

| 51 # Call function |

| > 52 result = func(*args, * |

| 53 |

| 54 # Log result |

| 55 logger.debug("%s -> %s |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\se |

| mantic_release\hvcs\gitlab.py:118 in |

| edit_release_notes |

| |

| 115 client.auth() |

| 116 log.info("Updating release |

| 117 |

| > 118 client.projects.get(self.o |

| 119 release_id, |

| 120 { |

| 121 "description": rel |

| |

| C:\Users\jenkinsSA\AppData\Local\Jenkins |

| \.jenkins\workspace\usermgmtautomation_m |

| aster\.pyenv-python\Lib\site-packages\gi |

| tlab\exceptions.py:338 in wrapped_f |

| |

| 335 try: |

| 336 return f(*args, ** |

| 337 except GitlabHttpError |

| > 338 raise error(e.erro |

| 339 |

| 340 return cast(__F, wrapped_f |

| 341 |

+------------------------------------------+

GitlabUpdateError: 403: insufficient_scope

Usage: semantic-release version [OPTIONS]

Try 'semantic-release version -h' for help.

Error: 403: insufficient_scope

```

## Specifications

- python-gitlab version: python_gitlab-3.15.0-py3-none-any.whl

- API version you are using (v3/v4):

- Gitlab server version (or gitlab.com): gitlab.com

|

closed

|

2023-11-27T08:12:16Z

|

2024-12-02T01:54:49Z

|

https://github.com/python-gitlab/python-gitlab/issues/2732

|

[

"support"

] |

damnmso

| 3

|

indico/indico

|

sqlalchemy

| 6,602

|

Peer-review module: having a synthetic table of the paper status

|

**Is your feature request related to a problem? Please describe.**

For the Light Peer Review of the IPAC conferences, we use the Indico Peer-Review module. We have more than 1000 papers submitted to the conference and more than 100 submitted to light peer review.

In the judging area we get a list of all papers, dominated by the "not submitted" papers.

Having a synthetic table giving the number of papers with each status would help.

**Describe the solution you'd like**

Have a place somewhere in the peer-review module that shows the number of papers with each status, with a link to see all the papers with that status.

**Describe alternatives you've considered**

For IPAC'23 I made a script that downloaded the page https://indico.jacow.org/event/37/papers/judging/ and counted the number of papers with each status.
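
The counting step of such a script can be sketched as follows. This is not the script used for IPAC'23: it assumes the status of each paper has already been extracted from the page into a list of strings, and the status names other than "not submitted" are made up for the example.

```python
from collections import Counter


def count_by_status(statuses):
    """Return a Counter mapping each status to its number of papers."""
    return Counter(statuses)


# Hypothetical statuses, one per paper, as they might be scraped from the list
statuses = [
    "not submitted",
    "submitted",
    "not submitted",
    "accepted",
    "not submitted",
]
counts = count_by_status(statuses)
most_common_status, most_common_count = counts.most_common(1)[0]
```

A table like the one requested would simply render `counts` with one row per status.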

**Additional context**

This is the list I am referring to. The requested page would count the number of papers with each "state" value.

<img width="654" alt="copie_ecran 2024-11-06 à 15 20 01" src="https://github.com/user-attachments/assets/ea635556-e0df-438c-8c8d-2a121cff459b">

|

open

|

2024-11-06T14:51:38Z

|

2024-11-06T14:51:38Z

|

https://github.com/indico/indico/issues/6602

|

[

"enhancement"

] |

NicolasDelerueLAL

| 0

|

iterative/dvc

|

machine-learning

| 10,612

|

"appending" dvc.log.lock file type idea for exps

|

I've been using dvc for a while and I understand the architecture and philosophy. I also understand the decisions behind `dvc exp` (experiments), where the challenge is aligning git's linear notion of development with the more "parallel" nature of experiments.

I think having to go through dvc exp is too "heavy". Now, if you think of using special git refs as "backend" storage for experiments, alternatively you can achieve the same with dvc lock files that append (instead of overwrite) runs. This way you can keep original (simple) dvc behavior of just dealing with lock files while also capturing the same information for parameterized runs (exps).

So, I should be able to "checkout" a particular run from the dvc.log.lock file instead of having to deal with dvc exp (parameterized or not).

|

closed

|

2024-11-05T17:01:04Z

|

2024-11-05T19:47:28Z

|

https://github.com/iterative/dvc/issues/10612

|

[] |

majidaldo

| 0

|

scikit-learn/scikit-learn

|

data-science

| 30,744

|

Unexpected <class 'AttributeError'>. 'LinearRegression' object has no attribute 'positive

|

My team changed to scikit-learn v1.6.1 this week. We had v1.5.1 before. Our code crashes in this exact line with the error "Unexpected <class 'AttributeError'>. 'LinearRegression' object has no attribute 'positive'".

We cannot deploy in production because of this. I am desperate enough to come here to ask for help. I do not understand why it would complain that the attribute does not exist given that we were using v1.5.1 before and the attribute has existed for 4 years now. My only guess is if we are loading a very old pickled model that does not have the attribute, so it crashes here. Unfortunately I cannot share any pieces of code as it is proprietary.
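To illustrate the guess about an old pickled model, here is a minimal sketch using a plain stand-in class, not scikit-learn itself. It relies on the fact that default unpickling restores the saved `__dict__` without calling `__init__`, so an attribute added to the class after the object was saved is simply absent. The class `Model`, its attributes, and the default value `False` are assumptions made for illustration only.

```python
class Model:
    """Stand-in for an estimator whose newer version gained a `positive` option."""

    def __init__(self, positive=False):
        self.fit_intercept = True
        self.positive = positive


# Mimic what default unpickling does: create the instance without calling
# __init__, then restore the state that was saved by the older version.
old_state = {"fit_intercept": True}  # saved before `positive` existed
restored = Model.__new__(Model)
restored.__dict__.update(old_state)

had_attribute_before = hasattr(restored, "positive")

try:
    restored.positive
except AttributeError as exc:
    print(f"AttributeError: {exc}")

# One possible workaround (an assumption, not an official fix): fill in the
# missing attribute with the value a fresh instance would get by default.
if not hasattr(restored, "positive"):
    restored.positive = False
```

If this is the cause, re-fitting and re-saving the model with the new version avoids patching attributes by hand.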

_Originally posted by @ItsIronOxide in https://github.com/scikit-learn/scikit-learn/pull/30187#discussion_r1937427235_

|

open

|

2025-01-31T15:08:46Z

|

2025-02-04T06:58:02Z

|

https://github.com/scikit-learn/scikit-learn/issues/30744

|

[

"Needs Reproducible Code"

] |

ItsIronOxide

| 2

|

replicate/cog

|

tensorflow

| 2,035

|

replecate.helpers.fileoutput

|

replecate.helpers.fileoutput

I got this error when trying to use Fooocus AI from the Replicate API, but the image finished processing on the server.

|

closed

|

2024-10-30T13:09:22Z

|

2024-11-04T13:28:48Z

|

https://github.com/replicate/cog/issues/2035

|

[] |

uptimeai11062024

| 1

|

InstaPy/InstaPy

|

automation

| 6,826

|

Instagram

|

<!-- Did you know that we have a Discord channel ? Join us: https://discord.gg/FDETsht -->

<!-- Is this a Feature Request ? Please, check out our Wiki first https://github.com/timgrossmann/InstaPy/wiki -->

## Expected Behavior

## Current Behavior

## Possible Solution (optional)

## InstaPy

> **configuration**

|

open

|

2024-09-20T20:04:52Z

|

2024-09-20T20:04:52Z

|

https://github.com/InstaPy/InstaPy/issues/6826

|

[] |

R299489

| 0

|

explosion/spaCy

|

machine-learning

| 13,635

|

Still encountering "TypeError: issubclass() arg 1 must be a class" problem with pydantic == 2.9.2 and spacy == 3.7.6

|

### Discussed in https://github.com/explosion/spaCy/discussions/13634

<div type='discussions-op-text'>

<sup>Originally posted by **LuoXiaoxi-cxq** September 26, 2024</sup>

I was running the training part of the [self-attentive-parser](https://github.com/nikitakit/self-attentive-parser), where I hit this error (probably caused by version problems of `spacy` and `pydantic`):

```

Traceback (most recent call last):

File "D:\postgraduate\research\parsing\self-attentive-parser\src\main.py", line 11, in <module>

from benepar import char_lstm

File "D:\postgraduate\research\parsing\self-attentive-parser\src\benepar\__init__.py", line 20, in <module>

from .integrations.spacy_plugin import BeneparComponent, NonConstituentException

File "D:\postgraduate\research\parsing\self-attentive-parser\src\benepar\integrations\spacy_plugin.py", line 5, in <module>

from .spacy_extensions import ConstituentData, NonConstituentException

File "D:\postgraduate\research\parsing\self-attentive-parser\src\benepar\integrations\spacy_extensions.py", line 177, in <module>

install_spacy_extensions()

File "D:\postgraduate\research\parsing\self-attentive-parser\src\benepar\integrations\spacy_extensions.py", line 153, in install_spacy_extensions

from spacy.tokens import Doc, Span, Token

File "D:\anaconda\lib\site-packages\spacy\__init__.py", line 14, in <module>

from . import pipeline # noqa: F401

File "D:\anaconda\lib\site-packages\spacy\pipeline\__init__.py", line 1, in <module>

from .attributeruler import AttributeRuler

File "D:\anaconda\lib\site-packages\spacy\pipeline\attributeruler.py", line 6, in <module>

from .pipe import Pipe

File "spacy\pipeline\pipe.pyx", line 8, in init spacy.pipeline.pipe