| repo_name (string, length 9-75) | topic (string, 30 classes) | issue_number (int64, 1-203k) | title (string, length 1-976) | body (string, length 0-254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, length 38-105) | labels (list, length 0-9) | user_login (string, length 1-39) | comments_count (int64, 0-452) |

|---|---|---|---|---|---|---|---|---|---|---|---|

koxudaxi/datamodel-code-generator

|

fastapi

| 1,496

|

Optional not generated for nullable array items defined according to OpenAPI 3.0 spec, despite using --strict-nullable

|

Items in an array are not generated as `Optional`, despite adding the `nullable: true` property.

**To Reproduce**

Example schema:

```yaml
# test.yml
properties:
  list1:
    type: array
    items:
      type: string
      nullable: true
required:
  - list1
```

Used commandline:

```

datamodel-codegen --input test.yml --output model.py --strict-nullable

```

This generates a datamodel in which the list items are not nullable:

```py
class Model(BaseModel):
    list1: List[str]
```

**Expected behavior**

The definition for a nullable string is correct according to the OpenAPI 3.0 spec.

```yaml

type: string

nullable: true

```

So I would expect Optional array items to be generated:

```py
class Model(BaseModel):
    list1: List[Optional[str]]
```

**Version:**

- OS: macOS 13.4.1

- Python version: 3.11

- datamodel-code-generator version: 0.21.4

|

closed

|

2023-08-18T05:50:09Z

|

2023-12-04T15:11:57Z

|

https://github.com/koxudaxi/datamodel-code-generator/issues/1496

|

[

"bug"

] |

tfausten

| 1

|

huggingface/transformers

|

pytorch

| 36,109

|

Wrong corners format of bboxes in function center_to_corners_format (image_transforms.py)

|

In [image_transforms.py](https://github.com/huggingface/transformers/blob/main/src/transformers/image_transforms.py), function `center_to_corners_format` describes the corners format as "_corners format: contains the coordinates for the top-left and bottom-right corners of the box (top_left_x, top_left_y, bottom_right_x, bottom_right_y)_". All subsequent functions such as `_corners_to_center_format_torch` compute the center format in the following way:

```
def _corners_to_center_format_torch(bboxes_corners: "torch.Tensor") -> "torch.Tensor":
    top_left_x, top_left_y, bottom_right_x, bottom_right_y = bboxes_corners.unbind(-1)
    b = [
        (top_left_x + bottom_right_x) / 2,  # center x
        (top_left_y + bottom_right_y) / 2,  # center y
        (bottom_right_x - top_left_x),  # width
        (bottom_right_y - top_left_y),  # height
    ]
    return torch.stack(b, dim=-1)
```

The problem is that the height of the bounding box would have a negative value. This code computes the center format from the corners format (bottom_left_x, bottom_left_y, top_right_x, top_right_y). The expected format or the code should be changed.
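Whether the height comes out negative depends on which way the y axis points. The sketch below repeats the same arithmetic in plain Python (no torch) for two conventions. The function name `corners_to_center` and the sample boxes are illustrative assumptions, not part of the library.

```python
def corners_to_center(box):
    """Convert (x0, y0, x1, y1) corners to (center_x, center_y, width, height)."""
    top_left_x, top_left_y, bottom_right_x, bottom_right_y = box
    return (
        (top_left_x + bottom_right_x) / 2,  # center x
        (top_left_y + bottom_right_y) / 2,  # center y
        bottom_right_x - top_left_x,        # width
        bottom_right_y - top_left_y,        # height
    )


# Assumed image convention: origin at the top-left, y grows downward,
# so the top-left corner has the smaller y value.
image_box = (10, 20, 50, 80)
print(corners_to_center(image_box))      # (30.0, 50.0, 40, 60)

# Assumed Cartesian convention: y grows upward, so the top-left corner
# has the larger y value and the same formula yields a negative height.
cartesian_box = (10, 80, 50, 20)
print(corners_to_center(cartesian_box))  # (30.0, 50.0, 40, -60)
```

Under the image convention the formula gives a positive height, so the negative value only appears if the boxes are expressed with y pointing upward.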

|

closed

|

2025-02-10T09:30:54Z

|

2025-02-12T14:31:14Z

|

https://github.com/huggingface/transformers/issues/36109

|

[] |

majakolar

| 3

|

davidteather/TikTok-Api

|

api

| 479

|

How to run the API in FastAPI / in a testing environment

|

Hi there.

We are trying to add some testing around the API interactions. Namely `getUser`.

We are doing something like this within a FastAPI endpoint.

```python

tik_tok_api = TikTokApi.get_instance()

return tik_tok_api.getUser(handle)

```

This works when the endpoint is called with the `requests` library. However, when it is called from the FastAPI docs page or the FastAPI test client, the response is `Can only run one Playwright at a time.`

Any ideas?

|

closed

|

2021-01-26T13:28:29Z

|

2022-02-14T03:08:27Z

|

https://github.com/davidteather/TikTok-Api/issues/479

|

[

"bug"

] |

jaoxford

| 4

|

FactoryBoy/factory_boy

|

sqlalchemy

| 635

|

Mutually dependent django model fields, with defaults

|

#### The problem

Suppose I have a model with two fields that need to be set according to some relationship between them. For example (contrived), I have a product_type field and a size field. I'd like to be able to provide either, neither, or both fields. If I provide, say, product_type, I'd like the size field to be set accordingly (e.g. if product_type is "book", I'd like something like "299 pages"). And if the size is provided as "299 pages", I'd like the product type to be set to something for which that is a valid size (i.e. "book").

#### Proposed solution

What I've tried so far is having both fields as lazy_attributes, but I get a "Cyclic lazy attribute definition" error if I don't supply either of the dependent fields. I don't really have a proposed solution in terms of coding it, but I'd like my factory to be able to provide a default pair of field values if neither is provided by the factory caller (eg. product_type='shoes', size='42'). Or maybe there's a way of doing this already that I haven't found?
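The either/neither/both behaviour described above can be sketched in plain Python, independent of factory_boy. The lookup tables, the default pair, and the name `resolve_product` are hypothetical and only illustrate the resolution order. This is not factory_boy's API.

```python
# Hypothetical relationship between the two fields (illustration only).
SIZE_FOR_TYPE = {"book": "299 pages", "shoes": "42"}
TYPE_FOR_SIZE = {size: ptype for ptype, size in SIZE_FOR_TYPE.items()}
DEFAULT_PAIR = ("shoes", "42")


def resolve_product(product_type=None, size=None):
    """Fill in whichever of the two fields the caller left out."""
    if product_type is None and size is None:
        return DEFAULT_PAIR                  # neither given: use the default pair
    if product_type is None:
        product_type = TYPE_FOR_SIZE[size]   # derive the type from the size
    elif size is None:
        size = SIZE_FOR_TYPE[product_type]   # derive the size from the type
    return product_type, size                # both given: keep the caller's values


print(resolve_product())                     # ('shoes', '42')
print(resolve_product(product_type="book"))  # ('book', '299 pages')
print(resolve_product(size="299 pages"))     # ('book', '299 pages')
```

Resolving both fields in one place avoids the cycle that arises when each field is computed lazily from the other. A value missing from the tables raises `KeyError` in this sketch.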

#### Extra notes

Just for context, I'm currently trying to replace a lot of django_any factories in a large django codebase, and there are a fair number of places where something like this would be very useful. Otherwise we have to write a bunch of things like "ProductGivenSizeFactory", which is much less convenient than having a single factory with the proper behaviour.

|

closed

|

2019-08-15T13:09:19Z

|

2019-08-22T16:28:43Z

|

https://github.com/FactoryBoy/factory_boy/issues/635

|

[] |

Joeboy

| 2

|

laughingman7743/PyAthena

|

sqlalchemy

| 13

|

jpype._jexception.OutOfMemoryErrorPyRaisable: java.lang.OutOfMemoryError: GC overhead limit exceeded

|

Hi,

I am running into this error after running several big queries and reusing the connection:

```

jpype._jexception.OutOfMemoryErrorPyRaisable: java.lang.OutOfMemoryError: GC overhead limit exceeded

```

Any ideas? Any way to pass the JVM args to JPype to increase the mem given to the JVM on start?

Thanks!

|

closed

|

2017-06-19T19:12:23Z

|

2017-06-22T12:51:19Z

|

https://github.com/laughingman7743/PyAthena/issues/13

|

[] |

arnaudsj

| 2

|

psf/requests

|

python

| 6,223

|

requests.package.chardet* references not assigned correctly?

|

I found that packages.py assigns sys.modules['requests.packages.chardet'] over and over with different modules. Is this intentional or a bug?

I looked at the code, and the `target` variable confuses me: it is reassigned inside the loop and replaced with itself (so completely?), which looks like a name conflict to me. The code below is from the installed version 2.28.1.

```
target = chardet.__name__
for mod in list(sys.modules):
    if mod == target or mod.startswith(f"{target}."):
        target = target.replace(target, "chardet")
        sys.modules[f"requests.packages.{target}"] = sys.modules[mod]
```

## Expected Result

Every chardet.* module maps to its own requests.packages.chardet.* entry.

## Actual Result

In the end, only requests.packages.chardet is assigned.

## Reproduction Steps

import requests

import sys

print([m for m in sys.modules if m.startswith('requests.packages.chardet')])
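The expected mapping can be illustrated without touching the real `sys.modules`. The sketch below works on a plain dictionary and derives the alias from each module name instead of overwriting `target`. The helper `alias_modules` and the fake module table are hypothetical, not code from requests.

```python
def alias_modules(modules, target, alias="chardet", prefix="requests.packages"):
    """Return alias entries for `target` and each of its submodules."""
    aliases = {}
    for name in list(modules):
        if name == target or name.startswith(f"{target}."):
            # Rename per module; `target` itself is never reassigned.
            aliased = alias + name[len(target):]
            aliases[f"{prefix}.{aliased}"] = modules[name]
    return aliases


# Stand-in for sys.modules; the values are placeholders for module objects.
fake_modules = {
    "chardet": "package",
    "chardet.universaldetector": "detector",
    "chardetx": "unrelated",
    "os": "os",
}

result = alias_modules(fake_modules, "chardet")
print(sorted(result))
# ['requests.packages.chardet', 'requests.packages.chardet.universaldetector']
```

Each matching module gets its own key, so later matches no longer overwrite the single `requests.packages.chardet` entry.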

|

closed

|

2022-08-31T10:56:22Z

|

2024-05-14T22:53:44Z

|

https://github.com/psf/requests/issues/6223

|

[] |

babyhoo976

| 2

|

HIT-SCIR/ltp

|

nlp

| 506

|

ltp.seg: illegal instruction(core dumped) when using machine with ubuntu 14

|

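Machine: Ubuntu 14

Initialization: ltp = LTP(path=model_path), where model_path is the local path of the small model

Call: seg, hidden = ltp.seg([text]) => fails with an illegal instruction (core dumped) error.

Deployment: Docker

I did not hit this problem on Ubuntu 16.04 before. Since I deploy with Docker, in theory there should be no coupling with the host system. Does LTP word segmentation depend on the host operating system version or on low-level libraries?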

|

closed

|

2021-04-22T06:13:20Z

|

2021-04-22T08:04:31Z

|

https://github.com/HIT-SCIR/ltp/issues/506

|

[] |

cl011

| 1

|

prisma-labs/python-graphql-client

|

graphql

| 3

|

Is there any way to specify an authorization path, a token for example?

|

closed

|

2017-10-09T21:33:01Z

|

2017-12-08T21:32:58Z

|

https://github.com/prisma-labs/python-graphql-client/issues/3

|

[] |

eamigo86

| 3

|

|

521xueweihan/HelloGitHub

|

python

| 2,514

|

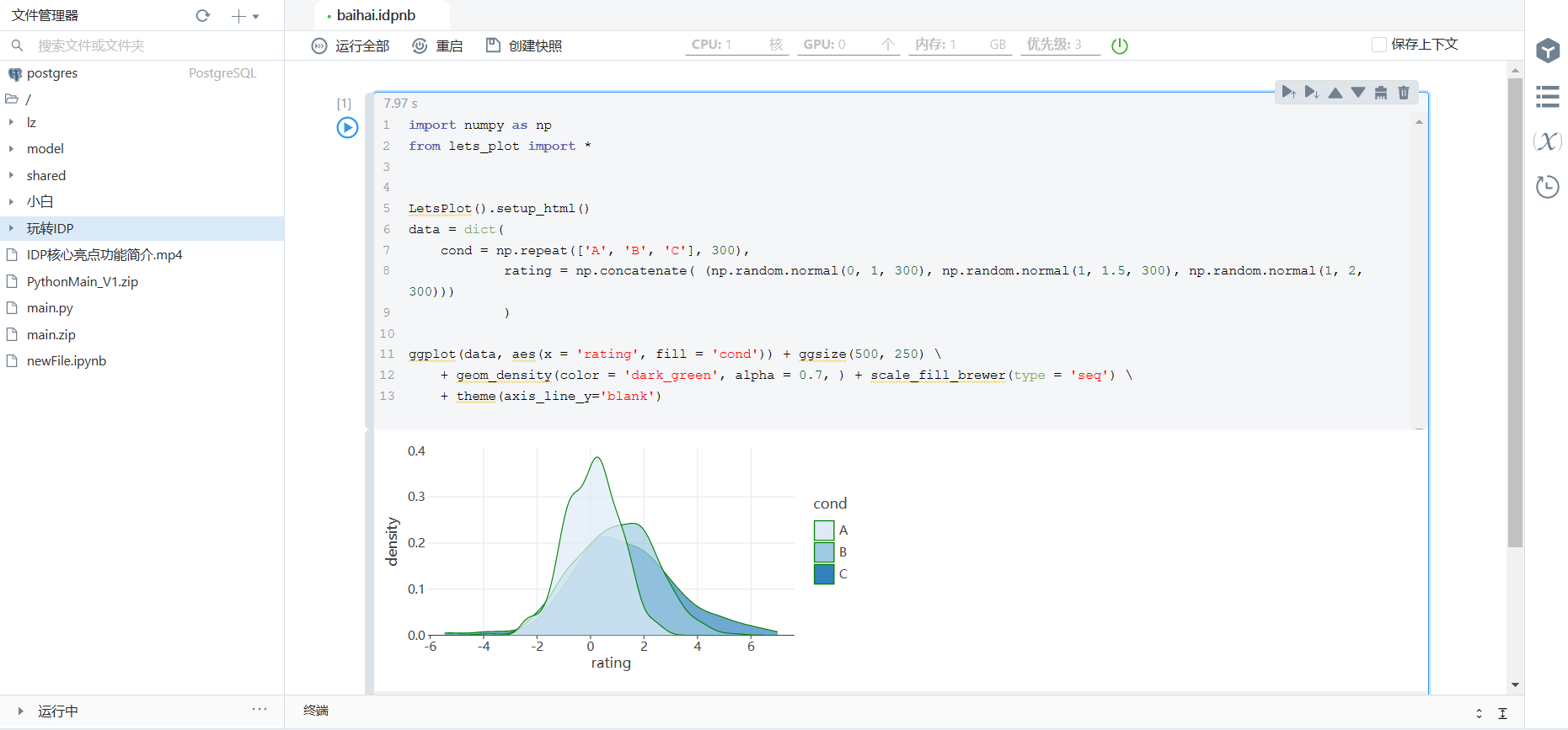

[Open-source self-recommendation] An AI IDE built for data scientists and algorithm engineers

|

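## Recommended project

<!-- This is the entry point for project recommendations for the HelloGitHub monthly. Self-recommendations and recommendations of open-source projects are welcome. The only requirement: please introduce the project by following the prompts below. -->

<!-- Click "Preview" above to see the submitted content right away -->

<!-- Only open-source projects on GitHub are accepted; please fill in the project's GitHub URL -->

- Project URL: https://github.com/BaihaiAI/IDP

<!-- Please choose from (C, C#, C++, CSS, Go, Java, JS, Kotlin, Objective-C, PHP, Python, Ruby, Rust, Swift, Other, Books, Machine Learning) -->

- Category: TypeScript, JavaScript, Python, Rust

<!-- Describe what it does in about 20 characters, like an article title that is clear at a glance -->

- Project title: An easy-to-use AI IDE built for data scientists and algorithm engineers

<!-- What is this project, what can it be used for, what are its features or which pain points does it solve, which scenarios does it suit, and what can beginners learn from it? Length: 32-256 characters -->

- Project description: IDP is a self-developed AI IDE (artificial intelligence integrated development environment) with native support for Python and SQL, the two most widely used languages in data science and AI. IDP is built for AI and data science developers (such as data scientists and algorithm engineers). Tailored to their working habits, it ships with built-in productivity tools such as version management and environment management, helping them maximize AI development efficiency.

<!-- What makes it stand out? How does it compare with similar projects? -->

- Highlights:

- Easy to use and to get started with; provides version management, environment management and cloning, variable management, preset code snippets, intelligent code assistance, and more, helping data scientists and algorithm engineers work more efficiently

- The backend is written in Rust, for better runtime performance.

- Independently developed and independently controllable

- Screenshot:

- Planned updates:

IDP uses a plugin architecture, which makes it easy to integrate the plugins needed across the whole AI development workflow, such as data annotation and hyperparameter optimization plugins. Going forward, we will:

- Build a plugin library; interested developers are welcome to help build plugins

- Improve existing features and make them easier to use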

|

open

|

2023-03-04T02:53:54Z

|

2023-03-04T02:58:35Z

|

https://github.com/521xueweihan/HelloGitHub/issues/2514

|

[] |

liminniu

| 0

|

Evil0ctal/Douyin_TikTok_Download_API

|

web-scraping

| 540

|

[BUG] Douyin: fetching reply data for a given video's comments returns 400

|

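Hello, after pulling the project I tested "Douyin: fetch reply data for a given video's comments" and it returned 400.

I then read the documentation carefully, and the same test against your online API also returns 400.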

https://douyin.wtf/docs#/Douyin-Web-API/fetch_video_comments_reply_api_douyin_web_fetch_video_comment_replies_get

|

closed

|

2025-01-17T09:47:12Z

|

2025-02-14T09:04:35Z

|

https://github.com/Evil0ctal/Douyin_TikTok_Download_API/issues/540

|

[

"BUG"

] |

yumingzhu

| 4

|

ray-project/ray

|

pytorch

| 50,917

|

[Data] Timestamptz type loses its time zone after map transforming.

|

### What happened + What you expected to happen

Timestamptz type loses its time zone after map transforming.

### Versions / Dependencies

```

In [45]: ray.__version__

Out[45]: '2.42.1'

```

### Reproduction script

```
import ray
import pyarrow as pa

ray.init()

table = pa.table({
    "ts": pa.array([1735689600, 1735689600, 1735689600], type=pa.timestamp("s", tz='UTC')),
    "id": [1, 2, 3]
})

ds = ray.data.from_arrow(table)
print(ds.schema())
#Column  Type
#------  ----
#ts      timestamp[s, tz=UTC]
#id      int64

ds2 = ds.map_batches(lambda batch: batch)
print(ds2.schema())
#Column  Type
#------  ----
#ts      timestamp[s]
#id      int64
```

### Issue Severity

None

|

closed

|

2025-02-26T11:37:27Z

|

2025-03-03T11:28:21Z

|

https://github.com/ray-project/ray/issues/50917

|

[

"bug",

"triage",

"data"

] |

sharkdtu

| 1

|

plotly/dash-table

|

plotly

| 591

|

row/column selectable single applies to all tables on the page

|

Make 2 tables with row or column selectable=single - you can only select one row and one column on the page, should be one per table.

reported in https://community.plot.ly/t/multiple-dash-tables-selection-error/28802

```
import dash
import dash_html_components as html
import dash_table

keys = ['a', 'b', 'c']
data = [{'a': 'a', 'b': 'b', 'c': 'c'}, {'a': 'aa', 'b': 'bb', 'c': 'cc'}]

app = dash.Dash(__name__)

app.layout = html.Div([
    dash_table.DataTable(
        id='a',
        columns=[{"name": i, "id": i, "selectable": True} for i in keys],
        data=data,
        column_selectable='single',
        row_selectable='single'
    ),
    dash_table.DataTable(
        id='b',
        columns=[{"name": i, "id": i, "selectable": True} for i in keys],
        data=data,
        column_selectable='single',
        row_selectable='single'
    )
])

if __name__ == '__main__':
    app.run_server(debug=True)
```

|

closed

|

2019-09-19T05:03:09Z

|

2019-09-19T20:41:36Z

|

https://github.com/plotly/dash-table/issues/591

|

[

"dash-type-bug",

"size: 0.5"

] |

alexcjohnson

| 3

|

gradio-app/gradio

|

python

| 10,864

|

Cannot Upgrade Gradio - rvc_pipe Is Missing, Cannot Be Installed

|

### Describe the bug

When attempting to upgrade Gradio, it requires music_tag; after that is installed, rvc_pipe is required.

C:\Users\Mehdi\Downloads\ai-voice-cloning-3.0\src>pip install rvc_pipe

Defaulting to user installation because normal site-packages is not writeable

ERROR: Could not find a version that satisfies the requirement rvc_pipe (from versions: none)

ERROR: No matching distribution found for rvc_pipe

I cannot find it online.

### Have you searched existing issues? 🔎

- [x] I have searched and found no existing issues

### Reproduction

```python

import gradio as gr

```

### Screenshot

_No response_

### Logs

```shell

```

### System Info

```shell

It cannot be updated!

```

### Severity

I can work around it

|

closed

|

2025-03-22T17:06:19Z

|

2025-03-23T21:31:56Z

|

https://github.com/gradio-app/gradio/issues/10864

|

[

"bug"

] |

gyprosetti

| 1

|

wkentaro/labelme

|

computer-vision

| 976

|

[Feature] Create specific-sized box

|

I want to create a box with (256x256).

How can I do with labelme?

|

closed

|

2022-01-14T03:54:12Z

|

2022-06-25T04:30:44Z

|

https://github.com/wkentaro/labelme/issues/976

|

[] |

alicera

| 6

|

tableau/server-client-python

|

rest-api

| 973

|

Create/Update site missing support for newer settings

|

The server side API does not appear to support interacting with the following settings:

- Schedule linked tasks

- Mobile offline favorites

- Flow web authoring enable/disable

- Mobile app lock

- Tag limit

- Cross database join toggle

- Toggle personal space

- Personal space storage limits

|

open

|

2022-01-13T14:51:43Z

|

2022-01-25T22:08:21Z

|

https://github.com/tableau/server-client-python/issues/973

|

[

"gap"

] |

jorwoods

| 0

|

ydataai/ydata-profiling

|

jupyter

| 1,129

|

Feature Request: support for Polars

|

### Missing functionality

Polars integration ? https://www.pola.rs/

### Proposed feature

Use polars dataframe as a compute backend.

Or let the user give a polars dataframe to the ProfileReport.

### Alternatives considered

Spark integration.

### Additional context

Polars helps optimize queries and reduce the memory footprint.

Could it be used to analyze big dataframes and speed up computation?

|

open

|

2022-10-25T20:11:49Z

|

2025-03-24T01:52:27Z

|

https://github.com/ydataai/ydata-profiling/issues/1129

|

[

"needs-triage"

] |

PierreSnell

| 11

|

TheKevJames/coveralls-python

|

pytest

| 1

|

Remove 3d-party modules from coverage report

|

I added HTTPretty to my module, and coveralls-python [added HTTPretty to the coverage report](https://coveralls.io/builds/5097).

It would be good if python-coverage didn't include third-party modules in the coverage report.

|

closed

|

2013-02-26T17:16:10Z

|

2013-02-26T18:43:34Z

|

https://github.com/TheKevJames/coveralls-python/issues/1

|

[] |

tyrannosaurus

| 2

|

matterport/Mask_RCNN

|

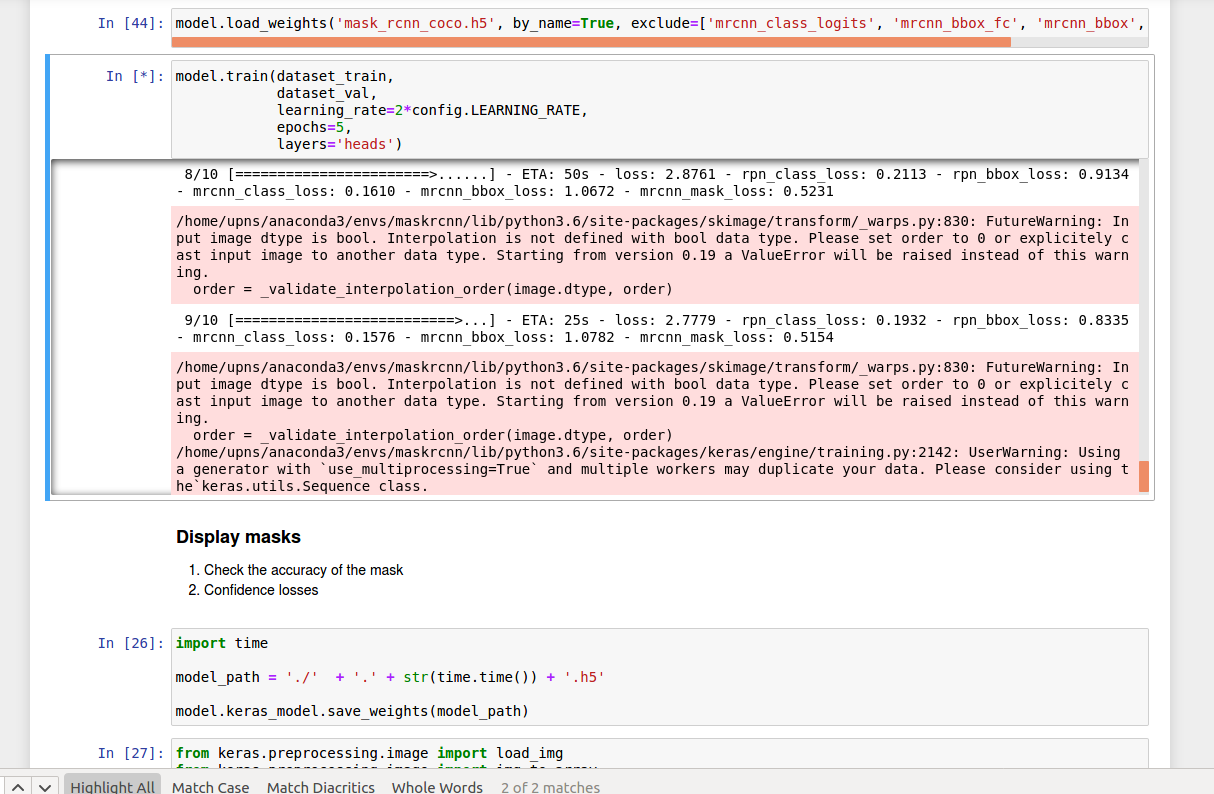

tensorflow

| 2,339

|

Training doesn't go beyond 1 epoch and no masks, rois produced

|

Hello guys,

I have been training on the custom dataset.

I have changed a few parameters such as:

```

STEPS_PER_EPOCH = 10

epochs = 5

```

The training starts well but it is stuck after almost completing the first epoch. You can see below in the image

I would also like to add that I have waited for around 2 hrs after first epoch to post this issue.

I am using CPU.

The time taken for the training is not an issue. It's just that it is stuck.

Also,

When I only train for 1 epoch then I do not see the rois or the masks in the test image which I test the model on. Any idea why this is also happening?

Can someone please help me out?

Regards,

Yash.

|

closed

|

2020-08-25T09:10:04Z

|

2020-08-27T07:33:41Z

|

https://github.com/matterport/Mask_RCNN/issues/2339

|

[] |

YashRunwal

| 1

|

littlecodersh/ItChat

|

api

| 727

|

Error when creating a group chat

|

Creating a group chat fails. The information returned by the create call is printed below:

```

{'BaseResponse': {'ErrMsg': '返回值不带BaseResponse', 'Ret': -1000, 'RawMsg': 'no BaseResponse in raw response'}}

Start auto replying.

```

Your itchat version: `1.2.32`; Python version: 3.7

The code is as follows:

```

itchat.auto_login(hotReload=True,enableCmdQR=2)

memberList = itchat.get_friends()[1:5]

# Create a group chat; the topic argument is the group chat name

chatroomUserName = itchat.create_chatroom(memberList, 'test chatroom')

print(chatroomUserName)

itchat.run(debug=True)

```

[document]: http://itchat.readthedocs.io/zh/latest/

[issues]: https://github.com/littlecodersh/itchat/issues

[itchatmp]: https://github.com/littlecodersh/itchatmp

|

closed

|

2018-09-10T12:34:59Z

|

2018-10-15T02:00:01Z

|

https://github.com/littlecodersh/ItChat/issues/727

|

[

"duplicate"

] |

nenuwangd

| 1

|

OpenInterpreter/open-interpreter

|

python

| 912

|

OpenAI API key required?

|

### Describe the bug

The project README states that you are not affiliated with OpenAI and do not serve their product, but once launched it requires you to enter an OpenAI API key...

`OpenAI API key not found

To use GPT-4 (highly recommended) please provide an OpenAI API key. `

I thought you had created your own model and shared it publicly with the world, so why does it now want an OpenAI subscription?

### Reproduce

try running interpreter post installation.

### Expected behavior

expected to work without OpenAI API key

### Screenshots

### Open Interpreter version

latest

### Python version

3.11.7

### Operating System name and version

linux mint

### Additional context

_No response_

|

closed

|

2024-01-13T09:10:55Z

|

2024-03-19T21:03:52Z

|

https://github.com/OpenInterpreter/open-interpreter/issues/912

|

[

"Documentation"

] |

andreylisovskiy

| 9

|

keras-team/keras

|

deep-learning

| 20,627

|

GlobalAveragePooling1D data_format Question

|

My rig

- Ubuntu 24.04 VM , RTX3060Ti with driver nvidia 535

- tensorflow-2.14-gpu/tensorflow-2.18 , both pull from docker

- Nvidia Container Toolkit if running in gpu version

About[ this example](https://keras.io/examples/timeseries/timeseries_classification_transformer/)

The transformer blocks in this example contain two Conv1D layers, so the input matrix has to be reshaped to add a channel dimension at the end.

There is a GlobalAveragePooling1D layer after the transformer blocks:

x = layers.GlobalAveragePooling1D(data_format="channels_last")(x)

which should be correct, since the channel dimension is added last.

However, when running this example, the third-to-last line of the summary does not show 64,128 params:

dense (Dense) │ (None, 128) │ 64,128 │ global_average_pool…

Instead, it shows just 256 params, which makes the total param count far lower, and the model only reaches an accuracy of ~50%.

This happens whether I run tensorflow-2.14-gpu or the CPU version of tensorflow-2.18.

However, after changing to data_format="channels_first", everything is fine. The Dense layer after GlobalAveragePooling1D then has 64,128 params, the total params match, and the training accuracy exceeds 90%.

I discovered this when I found a very similar model [here](https://github.com/mxochicale/intro-to-transformers/blob/main/tutorials/time-series-classification/timeseries_transformer_classification.ipynb).

The only difference is the data_format.

But isn't data_format="channels_last" the right choice?

So what is wrong?
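The two parameter counts quoted above are consistent with the Dense layer receiving a different number of input features in each case. A Dense layer with bias has (inputs + 1) × units parameters, and the sketch below only does that arithmetic. The input sizes 1 and 500 are inferred from the reported counts and are not stated in the issue. The name `dense_params` is illustrative.

```python
def dense_params(in_features, units):
    """Parameter count of a Dense layer with bias: weights plus one bias per unit."""
    return (in_features + 1) * units


# Pooling over the time axis leaves a single channel as input to Dense(128).
print(dense_params(1, 128))    # 256

# Pooling over the channel axis leaves one feature per time step.
print(dense_params(500, 128))  # 64128
```

So 256 params corresponds to a pooled output of width 1, and 64,128 params to a pooled output of width 500.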

|

open

|

2024-12-11T05:51:31Z

|

2024-12-13T06:28:57Z

|

https://github.com/keras-team/keras/issues/20627

|

[

"type:Bug"

] |

cptang2007

| 0

|

huggingface/transformers

|

tensorflow

| 36,848

|

GPT2 repetition of words in output

|

### System Info

- `transformers` version: 4.45.2

- Platform: Linux-5.15.0-122-generic-x86_64-with-glibc2.35

- Python version: 3.10.12

- Huggingface_hub version: 0.28.1

- Safetensors version: 0.5.2

- Accelerate version: 1.2.1

- Accelerate config: not found

- PyTorch version (GPU?): 2.4.1+cpu (False)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using distributed or parallel set-up in script?: NO

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

```python
import pytest
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer


@pytest.mark.parametrize("dtype", [torch.float16, torch.float32])
def test_gpt2_cpu_inductor(dtype):
    tokenizer = AutoTokenizer.from_pretrained("gpt2")
    model = AutoModelForCausalLM.from_pretrained("gpt2").to(dtype)
    prompt1 = "GPT2 is model developed by OpenAI"

    # run on CPU
    input = tokenizer(prompt1, return_tensors="pt")
    input_ids1 = input.input_ids
    attention_mask = input.attention_mask
    gen_tokens1 = model.generate(
        input_ids1,
        attention_mask = attention_mask,
        max_new_tokens=30,
        do_sample=False,
        pad_token_id=tokenizer.eos_token_id,
    )
    gen_text1 = tokenizer.batch_decode(gen_tokens1)[0]
    print(gen_text1)

    import torch._inductor.config as inductor_config
    inductor_config.inplace_buffers = False

    # compile only the token embedding forward pass with the inductor backend
    model.transformer.wte.forward = torch.compile(
        model.transformer.wte.forward, backend="inductor", fullgraph=False
    )
    gen_tokens_cpu1 = model.generate(
        input_ids1,
        attention_mask = attention_mask,
        max_new_tokens=30,
        do_sample=False,
        pad_token_id=tokenizer.eos_token_id,
    )
    gen_text_cpu1 = tokenizer.batch_decode(gen_tokens_cpu1)[0]
    assert gen_text1 == gen_text_cpu1
```

### Expected behavior

For above test I see output as

`GPT2 is model developed by OpenAI and is based on the OpenAI-based OpenAI-based OpenAI-based OpenAI-based OpenAI-based OpenAI-based Open`

is this expected behavior?

@ArthurZucker could you please explain why the output is like this?

|

closed

|

2025-03-20T10:55:28Z

|

2025-03-20T13:05:33Z

|

https://github.com/huggingface/transformers/issues/36848

|

[

"bug"

] |

vpandya-quic

| 1

|

keras-rl/keras-rl

|

tensorflow

| 192

|

Continue training using saved weights

|

My question basically is:

Is there a way to save the weights/memory and use them after for more training? In my environment I want to train some model and afterwards continue this training changing some features, in order to adapt it to each situation. I think it will be accomplished maybe by saving the memory (e.g. Sequential Memory) into disk, and then load it again to call the agent with this loaded memory, and train it again.

|

closed

|

2018-04-05T11:15:58Z

|

2019-01-12T16:20:27Z

|

https://github.com/keras-rl/keras-rl/issues/192

|

[

"wontfix"

] |

ghub-c

| 4

|

aleju/imgaug

|

machine-learning

| 706

|

how to know which augmenters are used?

|

hi~,

When I use `SomeOf`, how can I find out which augmenters were applied?

Thank you very much!

I also ran into a problem that looks like this:

error: OpenCV(4.1.1) C:\projects\opencv-python\opencv\modules\core\src\alloc.app:72:error(-4:Insufficient memory) Failed to allocate ***** bytes in function 'cv::OutofMemoryError'

|

closed

|

2020-07-30T08:33:55Z

|

2020-08-03T04:16:53Z

|

https://github.com/aleju/imgaug/issues/706

|

[] |

TyrionChou

| 2

|

aiogram/aiogram

|

asyncio

| 1,457

|

Invalid webhook response given with handle_in_background=False

|

### Checklist

- [X] I am sure the error is coming from aiogram code

- [X] I have searched in the issue tracker for similar bug reports, including closed ones

### Operating system

mac os x

### Python version

3.12.2

### aiogram version

3.4.1

### Expected behavior

Bot handles incoming requests and replies with a proper status (200 on success)

### Current behavior

Bot handles requests successfully but does not reply properly

### Steps to reproduce

1. create SimpleRequestHandler

> SimpleRequestHandler(

> dispatcher,

> bot,

> handle_in_background=False

> ).register(app, path=WEBHOOK_PATH)

2. Create an empty command handler

> @router.message(CommandStart())

> async def message_handler(message: types.Message, state: FSMContext):

> pass

3. Run

### Code example

```python3
import logging
import os

import aiohttp.web_app
from aiogram import Dispatcher, Bot, types
from aiogram.client.default import DefaultBotProperties
from aiogram.client.session.aiohttp import AiohttpSession
from aiogram.client.telegram import TelegramAPIServer
from aiogram.enums import ParseMode
from aiogram.filters import CommandStart
from aiogram.fsm.context import FSMContext
from aiogram.webhook.aiohttp_server import setup_application, SimpleRequestHandler
from aiohttp import web

logging.basicConfig(
    level=logging.DEBUG,
    format='%(asctime)s - %(name)s - %(levelname)s - %(message)s'
)
logger = logging.getLogger()

BOT_TOKEN = os.environ["BOT_TOKEN"]
BOT_API_BASE_URL = os.getenv('BOT_API_BASE_URL')
WEBAPP_HOST = os.getenv('WEBAPP_HOST', 'localhost')
WEBAPP_PORT = int(os.getenv('WEBAPP_PORT', 8080))
BASE_WEBHOOK_URL = os.getenv('BASE_WEBHOOK_URL', f"http://{WEBAPP_HOST}:{WEBAPP_PORT}")
WEBHOOK_PATH = os.getenv('WEBHOOK_PATH', '/webhook')


async def on_startup(bot: Bot) -> None:
    logger.info("Setting main bot webhook")
    await bot.set_webhook(f"{BASE_WEBHOOK_URL}{WEBHOOK_PATH}")


async def on_shutdown(bot: Bot) -> None:
    logger.info("Deleting main bot webhook")
    await bot.delete_webhook()


def main():
    logger.info('BOT_API_BASE_URL=%s', BOT_API_BASE_URL)
    logger.info('WEBAPP_HOST=%s', WEBAPP_HOST)
    logger.info('WEBAPP_PORT=%s', WEBAPP_PORT)
    logger.info('BASE_WEBHOOK_URL=%s', BASE_WEBHOOK_URL)
    logger.info('WEBHOOK_PATH=%s', WEBHOOK_PATH)

    if BOT_API_BASE_URL is not None:
        session = AiohttpSession(
            api=TelegramAPIServer.from_base(BOT_API_BASE_URL)
        )
    else:
        session = None

    bot = Bot(
        token=BOT_TOKEN, session=session,
        default=DefaultBotProperties(
            parse_mode=ParseMode.MARKDOWN_V2,
            link_preview_is_disabled=True
        )
    )

    app = aiohttp.web_app.Application()
    dispatcher = Dispatcher()
    dispatcher['bot'] = bot
    dispatcher['bot_api_session'] = session
    dispatcher.startup.register(on_startup)
    dispatcher.shutdown.register(on_shutdown)

    @dispatcher.message(CommandStart())
    async def message_handler(message: types.Message, state: FSMContext):
        pass

    SimpleRequestHandler(
        dispatcher,
        bot,
        handle_in_background=False
    ).register(app, path=WEBHOOK_PATH)
    setup_application(app, dispatcher)

    # Start web-application.
    web.run_app(app, host=WEBAPP_HOST, port=WEBAPP_PORT)


if __name__ == '__main__':
    main()
```

### Logs

```sh

2024-04-11 09:28:12,870 - aiogram.event - INFO - Update id=209665694 is handled. Duration 0 ms by bot id=7052315372

2024-04-11 09:28:12,871 - aiohttp.access - INFO - 127.0.0.1 [11/Apr/2024:09:28:08 +0300] "POST /webhook HTTP/1.1" 200 237 "-" "-"

2024-04-11 09:28:12,872 - aiohttp.server - ERROR - Error handling request

Traceback (most recent call last):

File "/Users/ignz/Library/Caches/pypoetry/virtualenvs/tg-feedback-bot-dfXuSdBg-py3.12/lib/python3.12/site-packages/aiohttp/web_protocol.py", line 350, in data_received

messages, upgraded, tail = self._request_parser.feed_data(data)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "aiohttp/_http_parser.pyx", line 557, in aiohttp._http_parser.HttpParser.feed_data

aiohttp.http_exceptions.BadStatusLine: 400, message:

Invalid method encountered:

b'HTTP/1.1 400 Bad Request'

^

2024-04-11 09:28:12,873 - aiohttp.access - INFO - 127.0.0.1 [11/Apr/2024:09:28:12 +0300] "UNKNOWN / HTTP/1.0" 400 0 "-" "-"

```

### Additional information

_No response_

|

open

|

2024-04-11T06:28:58Z

|

2024-04-11T06:28:58Z

|

https://github.com/aiogram/aiogram/issues/1457

|

[

"bug"

] |

unintended

| 0

|

hankcs/HanLP

|

nlp

| 1,356

|

How to persist CoreStopWordDictionary.add()

|

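<!--

The notes and version number are mandatory; issues without them will not be answered. For a quick reply, please fill in the template carefully. Thank you for your cooperation.

-->

## Notes

Please confirm the following:

* I have carefully read the documents below and found no answer:

- [Home page documentation](https://github.com/hankcs/HanLP)

- [wiki](https://github.com/hankcs/HanLP/wiki)

- [FAQ](https://github.com/hankcs/HanLP/wiki/FAQ)

* I have searched for my problem via [Google](https://www.google.com/#newwindow=1&q=HanLP) and the [issue search](https://github.com/hankcs/HanLP/issues) and found no answer.

* I understand that the open-source community is a free community formed out of shared interest and bears no responsibility or obligation. I will be polite and thank everyone who helps me.

* [x] I type x in these brackets to confirm the items above.

## Version

<!-- For releases, give the jar file name without the extension; for the GitHub repository, state whether it is the master or portable branch -->

The latest version is: 1.7.5

The version I am using is: 1.7.4

<!-- The above is mandatory; the rest is free-form -->

## My question

How can CoreStopWordDictionary.add() write a stop word to stopwords.txt?

Or is the only way to edit stopword.txt manually or to use a file stream?

## Reproducing the problem

<!-- What did you do that caused the problem? For example, did you modify the code? The dictionary or the model? -->

### Steps

1. First...

2. Then...

3. Next...

### Triggering code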

```
public class DemoStopWord
{
    public static void main(String[] args) throws Exception {
        System.out.println(CoreStopWordDictionary.contains("一起"));
        stopwords();
        add();
        stopwords();
    }

    public static void add() {
        boolean add = CoreStopWordDictionary.add("一起");
        CoreStopWordDictionary.reload();
        System.out.println("add = " + add);
    }

    public static void stopwords() throws Exception {
        String con = "我们一起去逛超市,我们先去城西银泰,我们再去城南银泰。然后我们再一起回家";
        List<String> strings = HanLP.extractKeyword(con, 5);
        System.out.println("strings = " + strings);
    }
}
```

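### Expected output

<!-- What correct result do you expect? -->

I expect that after calling CoreStopWordDictionary.add("一起"); the stop word "一起" is added to the stop-word dictionary, and that calling CoreStopWordDictionary.reload(); then reloads the dictionary and cache, so that the stop-word dictionary can be modified dynamically.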

```

false

strings = [一起, 银泰, 城, 再, 西]

add = true

strings = [城, 西, 银泰, 先, 再]

```

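### Actual output

<!-- What did HanLP actually output? What was the effect? What is wrong? -->

CoreStopWordDictionary.add("一起"); does not write "一起" to the stop-word dictionary file; it only exists in memory. After calling CoreStopWordDictionary.reload(); the stop-word dictionary does not contain "一起".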

```

false

strings = [一起, 银泰, 城, 再, 西]

add = true

strings = [一起, 银泰, 城, 再, 西]

```

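## Other information

<!-- Any information that might be useful, including screenshots, logs, configuration files, related issues, and so on. -->

So for now I still have to edit stopword.txt manually or modify it through a file stream, is that right? Then how should the two methods CoreStopWordDictionary.add() and CoreStopWordDictionary.remove() be used?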

|

closed

|

2019-12-17T06:54:56Z

|

2019-12-20T02:06:11Z

|

https://github.com/hankcs/HanLP/issues/1356

|

[

"question"

] |

xiuqiang1995

| 2

|

OFA-Sys/Chinese-CLIP

|

computer-vision

| 358

|

Saved fine-tuned model performs worse than during fine-tuning

|

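During fine-tuning, both text-to-image and image-to-text reached above 90%. I saved the .pt file from that run and, based on the code in extract features, built a demo that matches descriptions to images, but the probability computed when matching the answer is only around 40-50%.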

|

open

|

2024-09-12T07:14:45Z

|

2024-09-12T07:14:45Z

|

https://github.com/OFA-Sys/Chinese-CLIP/issues/358

|

[] |

wangly1998

| 0

|

vaexio/vaex

|

data-science

| 1,836

|

[FEATURE-REQUEST]: Remove the df.*func* when using a function after "register_function" + "auto add".

|

**Description**

When registering a new function `foo()` with `@vaex.register_function()`, you also need to add it to the dataframe with `df.add_function("foo", foo)`, or it won't be saved in the state.

Later you can use it with `df.func.foo()`.

1. It would be very cool to call it without the `func` accessor: `df.func.foo()` → `df.foo()`.

2. It would be very cool if it were added automatically behind the scenes, without the `add_function` call.

|

open

|

2022-01-17T15:11:41Z

|

2022-01-17T15:12:40Z

|

https://github.com/vaexio/vaex/issues/1836

|

[] |

xdssio

| 0

|

ets-labs/python-dependency-injector

|

asyncio

| 393

|

A service becomes a _asyncio.Future if it has an asynchronous dependency

|

di version: 4.20.2

python: 3.9.1

os: linux

```python
from dependency_injector import containers, providers


async def init_resource():
    yield ...


class Service:
    def __init__(self, res):
        ...


class AppContainer(containers.DeclarativeContainer):
    res = providers.Resource(init_resource)
    foo = providers.Singleton(Service, res)
    bar = providers.Singleton(Service, None)


container = AppContainer()

foo = container.foo()
print(type(foo))  # <class '_asyncio.Future'> <-- why not a <class '__main__.Service'> instance?

bar = container.bar()
print(type(bar))  # <class '__main__.Service'>
```

Is this a bug or a feature? Maybe I missed something in the documentation?

|

closed

|

2021-02-09T18:23:13Z

|

2021-02-09T19:27:17Z

|

https://github.com/ets-labs/python-dependency-injector/issues/393

|

[

"question"

] |

gtors

| 3

|

fastapi-admin/fastapi-admin

|

fastapi

| 61

|

NoSQL db support

|

Hello, I am using both PostgreSQL and MongoDB on my fastapi project.

Is there any possibility to add Mongodb collections to admin dashboard and enable CRUD operations for them as well?

|

closed

|

2021-07-29T21:31:27Z

|

2021-07-30T01:44:18Z

|

https://github.com/fastapi-admin/fastapi-admin/issues/61

|

[] |

royalwood

| 1

|

hindupuravinash/the-gan-zoo

|

machine-learning

| 54

|

AttGAN code

|

AttGAN code has been released recently. https://github.com/LynnHo/AttGAN-Tensorflow

|

closed

|

2018-05-08T02:00:22Z

|

2018-05-10T16:31:33Z

|

https://github.com/hindupuravinash/the-gan-zoo/issues/54

|

[] |

LynnHo

| 1

|

pydata/xarray

|

pandas

| 9,129

|

scatter plot is slow

|

### What happened?

scatter plot is slow when the dataset has large (length) coordinates even though those coordinates are not involved in the scatter plot.

### What did you expect to happen?

scatter plot speed does not depend on coordinates that are not involved in the scatter plot, which was the case at some point in the past

### Minimal Complete Verifiable Example

```Python

import numpy as np

import xarray as xr

from matplotlib import pyplot as plt

%config InlineBackend.figure_format = 'retina'

%matplotlib inline

# Define coordinates

month = np.arange(1, 13, dtype=np.int64)

L = np.arange(1, 13, dtype=np.int64)

# Create random values for the variables SP and SE

np.random.seed(0) # For reproducibility

SP_values = np.random.rand(len(L), len(month))

SE_values = SP_values + np.random.rand(len(L), len(month))

# Create the dataset

ds = xr.Dataset(

{

"SP": (["L", "month"], SP_values),

"SE": (["L", "month"], SE_values)

},

coords={

"L": L,

"month": month,

"S": np.arange(250),

"model": np.arange(7),

"M": np.arange(30)

}

)

# slow

ds.plot.scatter(x='SP', y='SE')

ds = xr.Dataset(

{

"SP": (["L", "month"], SP_values),

"SE": (["L", "month"], SE_values)

},

coords={

"L": L,

"month": month

}

)

# fast

ds.plot.scatter(x='SP', y='SE')

```

### MVCE confirmation

- [X] Minimal example — the example is as focused as reasonably possible to demonstrate the underlying issue in xarray.

- [X] Complete example — the example is self-contained, including all data and the text of any traceback.

- [X] Verifiable example — the example copy & pastes into an IPython prompt or [Binder notebook](https://mybinder.org/v2/gh/pydata/xarray/main?urlpath=lab/tree/doc/examples/blank_template.ipynb), returning the result.

- [X] New issue — a search of GitHub Issues suggests this is not a duplicate.

- [X] Recent environment — the issue occurs with the latest version of xarray and its dependencies.

### Relevant log output

_No response_

### Anything else we need to know?

For me, slow = 25 seconds and fast = instantaneous

### Environment

<details>

INSTALLED VERSIONS

------------------

commit: None

python: 3.11.9 | packaged by conda-forge | (main, Apr 19 2024, 18:45:13) [Clang 16.0.6 ]

python-bits: 64

OS: Darwin

OS-release: 23.5.0

machine: x86_64

processor: i386

byteorder: little

LC_ALL: None

LANG: en_US.UTF-8

LOCALE: ('en_US', 'UTF-8')

libhdf5: 1.14.3

libnetcdf: 4.9.2

xarray: 2024.6.0

pandas: 2.2.2

numpy: 1.26.4

scipy: 1.13.1

netCDF4: 1.6.5

pydap: installed

h5netcdf: 1.3.0

h5py: 3.11.0

zarr: 2.18.2

cftime: 1.6.4

nc_time_axis: 1.4.1

iris: None

bottleneck: 1.3.8

dask: 2024.6.0

distributed: 2024.6.0

matplotlib: 3.8.4

cartopy: 0.23.0

seaborn: 0.13.2

numbagg: 0.8.1

fsspec: 2024.6.0

cupy: None

pint: 0.24

sparse: 0.15.4

flox: 0.9.8

numpy_groupies: 0.11.1

setuptools: 70.0.0

pip: 24.0

conda: None

pytest: 8.2.2

mypy: None

IPython: 8.17.2

sphinx: None</details>

|

closed

|

2024-06-16T21:11:31Z

|

2024-07-09T07:09:19Z

|

https://github.com/pydata/xarray/issues/9129

|

[

"bug",

"topic-plotting",

"topic-performance"

] |

mktippett

| 1

|

flasgger/flasgger

|

flask

| 611

|

flask-marshmallow - generating Marshmallow schemas from SQLAlchemy models seemingly breaks flasgger

|

I have a SQLAlchemy model from which I derive a flask-marshmallow schema:

```python

class Customer(db.Model):

__tablename__ = 'customer'

customer_id: Mapped[int] = mapped_column('pk_customer_id', primary_key=True)

first_name: Mapped[str] = mapped_column(String(100))

last_name: Mapped[str] = mapped_column(String(100))

customer_number: Mapped[int]

class CustomerSchema(ma.SQLAlchemyAutoSchema):

class Meta:

model = Customer

```

I then add the Schema to my definitions with `@swag_from`:

```python

@customers_bp.route('/', methods=['GET'])

@swag_from({

'definitions': {

'Customer': CustomerSchema

}

})

def get_customers():

"""

Return a list of customers.

---

responses:

200:

description: A list of customers

schema:

type: object

properties:

customers:

type: array

items:

$ref: '#/definitions/Customer'

"""

...

```

When I head to `/apidocs`, I am greeted with the following error:

```

Traceback (most recent call last):

File "/usr/local/lib/python3.9/site-packages/flasgger/base.py", line 164, in get

return jsonify(self.loader())

File "/usr/local/lib/python3.9/site-packages/flask/json/__init__.py", line 170, in jsonify

return current_app.json.response(*args, **kwargs) # type: ignore[return-value]

File "/usr/local/lib/python3.9/site-packages/flask/json/provider.py", line 214, in response

f"{self.dumps(obj, **dump_args)}\n", mimetype=self.mimetype

File "/usr/local/lib/python3.9/site-packages/flask/json/provider.py", line 179, in dumps

return json.dumps(obj, **kwargs)

File "/usr/local/lib/python3.9/json/__init__.py", line 234, in dumps

return cls(

File "/usr/local/lib/python3.9/json/encoder.py", line 201, in encode

chunks = list(chunks)

File "/usr/local/lib/python3.9/json/encoder.py", line 431, in _iterencode

yield from _iterencode_dict(o, _current_indent_level)

File "/usr/local/lib/python3.9/json/encoder.py", line 405, in _iterencode_dict

yield from chunks

File "/usr/local/lib/python3.9/json/encoder.py", line 405, in _iterencode_dict

yield from chunks

File "/usr/local/lib/python3.9/json/encoder.py", line 438, in _iterencode

o = _default(o)

File "/usr/local/lib/python3.9/site-packages/flask/json/provider.py", line 121, in _default

raise TypeError(f"Object of type {type(o).__name__} is not JSON serializable")

TypeError: Object of type SQLAlchemyAutoSchemaMeta is not JSON serializable

```

Seemingly, the `Meta` object containing options for schema generation causes problems when attempting to include it in the flasgger config.
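The traceback can be reproduced without Flask or marshmallow. The sketch below uses only the standard library; `CustomerSchema` here is a plain placeholder class, not the real schema. It shows that `json.dumps` fails as soon as a class object (rather than a plain dict) sits inside the definitions mapping, which suggests the schema class itself, not its inner `Meta`, may be what reaches the encoder.

```python
import json


class CustomerSchema:
    """Placeholder for the schema class; any class object behaves the same."""


spec_with_class = {"definitions": {"Customer": CustomerSchema}}

try:
    json.dumps(spec_with_class)
    error = None
except TypeError as exc:
    error = str(exc)

print(error)

# A definition written as plain dicts and strings serializes without issue.
plain_spec = {
    "definitions": {
        "Customer": {
            "type": "object",
            "properties": {"first_name": {"type": "string"}},
        }
    }
}
encoded = json.dumps(plain_spec)
```

Whether flasgger is expected to convert schema classes placed under `definitions` automatically is not something this sketch can answer; it only narrows down where the `TypeError` comes from.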

|

open

|

2024-02-29T11:37:39Z

|

2024-03-07T20:49:01Z

|

https://github.com/flasgger/flasgger/issues/611

|

[] |

snctfd

| 1

|

jmcnamara/XlsxWriter

|

pandas

| 254

|

Unable to set x axis intervals to floating point number

|

Hi,

My requirement is to set the x axis between 1.045 and 1.21 with intervals of 0.055. Thus, the four points for x-axis are: 1.045, 1.1, 1.155, 1.21. I am unable to achieve this in xlsxwriter. I have tried the below code which failed:

``` python

chart.set_x_axis({'min': 1.045, 'max': 1.21})

chart.set_x_axis({'interval_unit': 0.055})

```

Kindly suggest a solution.
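For reference, the requested tick positions can be computed as in the sketch below. `axis_ticks` is a hypothetical helper written for this illustration. The options dict at the end assumes that `major_unit` is the option that controls tick spacing on a value axis, and that `interval_unit` applies to category axes; both are assumptions to verify against the XlsxWriter documentation.

```python
def axis_ticks(minimum, maximum, step, digits=3):
    """Return evenly spaced tick values from minimum to maximum inclusive."""
    count = round((maximum - minimum) / step)
    # Round each value so float noise such as 1.1549999999 becomes 1.155.
    return [round(minimum + i * step, digits) for i in range(count + 1)]


ticks = axis_ticks(1.045, 1.21, 0.055)
print(ticks)

# Assumed shape of a single options dict for chart.set_x_axis().
x_axis_options = {"min": ticks[0], "max": ticks[-1], "major_unit": 0.055}
```

Passing all options in one `set_x_axis` call avoids having to reason about how two separate calls interact.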

Thanks and regards,

Amitra

|

closed

|

2015-05-14T07:01:58Z

|

2015-05-14T07:45:01Z

|

https://github.com/jmcnamara/XlsxWriter/issues/254

|

[

"question"

] |

amitrasudan

| 1

|

seleniumbase/SeleniumBase

|

web-scraping

| 2,879

|

Add support for `uc_gui_handle_cf()` with `Driver()` and `DriverContext()` formats

|

### Add support for `uc_gui_handle_cf()` with `Driver()` and `DriverContext()` formats

Currently, if running this code:

```python

from seleniumbase import DriverContext

with DriverContext(uc=True) as driver:

url = "https://www.virtualmanager.com/en/login"

driver.uc_open_with_reconnect(url, 4)

driver.uc_gui_handle_cf() # Ready if needed!

driver.assert_element('input[name*="email"]')

driver.assert_element('input[name*="login"]')

```

That leads to this stack trace:

```python

File "/Users/michael/github/SeleniumBase/seleniumbase/core/browser_launcher.py", line 4025, in <lambda>

lambda *args, **kwargs: uc_gui_handle_cf(

^^^^^^^^^^^^^^^^^

File "/Users/michael/github/SeleniumBase/seleniumbase/core/browser_launcher.py", line 651, in uc_gui_handle_cf

install_pyautogui_if_missing()

File "/Users/michael/github/SeleniumBase/seleniumbase/core/browser_launcher.py", line 559, in install_pyautogui_if_missing

verify_pyautogui_has_a_headed_browser()

File "/Users/michael/github/SeleniumBase/seleniumbase/core/browser_launcher.py", line 552, in verify_pyautogui_has_a_headed_browser

if sb_config.headless or sb_config.headless2:

^^^^^^^^^^^^^^^^^^

AttributeError: module 'seleniumbase.config' has no attribute 'headless'

```

Here's the workaround for now using `SB()`: (Which includes the virtual display needed on Linux)

```python

from seleniumbase import SB

with SB(uc=True) as sb:

url = "https://www.virtualmanager.com/en/login"

sb.uc_open_with_reconnect(url, 4)

sb.uc_gui_handle_cf() # Ready if needed!

sb.assert_element('input[name*="email"]')

sb.assert_element('input[name*="login"]')

```

Once this ticket is resolved, Linux users who use `Driver()` or `DriverContext` formats in UC Mode will still need to set `pyautogui._pyautogui_x11._display` to `Xlib.display.Display(os.environ['DISPLAY'])` on Linux in order to sync up `pyautogui` with the `X11` virtual display after calling `sbvirtualdisplay.Display(visible=True, size=(1366, 768), backend="xvfb", use_xauth=True).start()`. (For `Xlib`, use `import Xlib.display` after `pip install python-xlib`.)

|

closed

|

2024-06-27T15:20:13Z

|

2024-07-02T14:29:16Z

|

https://github.com/seleniumbase/SeleniumBase/issues/2879

|

[

"enhancement",

"UC Mode / CDP Mode"

] |

mdmintz

| 7

|

Sanster/IOPaint

|

pytorch

| 50

|

How can we use our own trained model checkpoints and model config for testing?

|

For example, we have trained a LaMa inpainting model on our own datasets, and now I want to run inference using our trained model config and checkpoints.

Alternatively, could you provide the code to produce a mask image by using the cursor to select an area in a given image?

Thank you in advance.

|

closed

|

2022-05-24T07:50:25Z

|

2022-05-24T08:38:20Z

|

https://github.com/Sanster/IOPaint/issues/50

|

[] |

ram-parvesh

| 0

|

graphql-python/graphene

|

graphql

| 1,452

|

Confusing/incorrect DataLoader example in docs

|

Hi all,

I'm very much new to Graphene, so please excuse me if this is incorrect, but it seems to me the code in the docs (screenshot below) will in fact make 4 round trips to the backend?

<img width="901" alt="image" src="https://user-images.githubusercontent.com/1187758/186154207-041da5ed-9341-4c75-bc10-73d3e950ef9a.png">

I don't see how it would be possible otherwise. Looks like these docs were inspired by the aiodataloader docs (screenshot below). It's reasonable to see how these would be able to bring it down to 2 requests.

<img width="770" alt="image" src="https://user-images.githubusercontent.com/1187758/186154951-5f5fdcb1-be64-4d68-8994-82b02a5fd4a3.png">

|

closed

|

2022-08-23T12:13:54Z

|

2022-08-25T03:22:23Z

|

https://github.com/graphql-python/graphene/issues/1452

|

[

"🐛 bug"

] |

lopatin

| 0

|

keras-team/keras

|

deep-learning

| 20,278

|

Incompatibility of compute_dtype with complex-valued inputs

|

Hi,

In #19872, you introduced the possibility for layers with complex-valued inputs.

It then seems that this statement of the API Documentation is now wrong:

When I feed a complex-valued input tensor into a layer (as in this [unit test](https://github.com/keras-team/keras/commit/076ab315a7d1939d2ec965dc097946c53ef1d539#diff-94db6e94fea3334a876a0c3c02a897c1a99e91398dff51987a786b58d52cc0d1)), it is not cast to the `compute_dtype`, but rather kept as it is. I would somehow expect that the `compute_dtype` becomes complex in this case as well.

|

open

|

2024-09-23T11:48:24Z

|

2024-09-25T19:31:05Z

|

https://github.com/keras-team/keras/issues/20278

|

[

"type:feature"

] |

jhoydis

| 1

|

deepspeedai/DeepSpeed

|

deep-learning

| 6,906

|

[BUG] RuntimeError: Unable to JIT load the fp_quantizer op due to it not being compatible due to hardware/software issue. FP Quantizer is using an untested triton version (3.1.0), only 2.3.(0, 1) and 3.0.0 are known to be compatible with these kernels

|

**Describe the bug**

I am out of my depth here but I'll try.

Installed deepspeed on vllm/vllm-openai docker via pip install deepspeed. Install went fine but when I tried to do an FP6 quant inflight on a model I got the error in the subject line. Noodling around, I see op_builder/fp_quantizer.py is checking the Triton version and presumably blocking it? I tried downgrading triton from 3.1.0 to 3.0.0 and caused a cascading array of interdependency issues. I would like to lift the version check and see if it works but I am not a coder and wouldn't know what to do.
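A version gate of the kind described might look roughly like the sketch below. This is not DeepSpeed's code: `KNOWN_GOOD`, `parse_version`, `is_tested`, and the `allow_untested` switch are all hypothetical names, and the version list is copied from the warning text in this report.

```python
# Versions named as compatible in the warning message.
KNOWN_GOOD = {(2, 3, 0), (2, 3, 1), (3, 0, 0)}


def parse_version(text):
    """Turn a plain 'major.minor.patch' string into a tuple of ints."""
    return tuple(int(part) for part in text.split(".")[:3])


def is_tested(version_text, allow_untested=False):
    """Return True if the version is on the allow-list or the gate is lifted."""
    return allow_untested or parse_version(version_text) in KNOWN_GOOD


print(is_tested("3.1.0"))                       # installed version in this report
print(is_tested("3.1.0", allow_untested=True))  # gate lifted at the user's own risk
```

Lifting such a gate only skips the check. It does not make the kernels compatible with an untested Triton release.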

**To Reproduce**

Steps to reproduce the behavior:

1. load vllm/vllm-openai:latest docker

2. install latest deepspeed

3. attempt to load model vllm serve (model_id) with parameter --quantization deepspeedfp (need configuration.json file)

4. See error

**Expected behavior**

**ds_report output**

[2024-12-23 14:25:46,009] [INFO] [real_accelerator.py:222:get_accelerator] Setting ds_accelerator to cuda (auto detect)

--------------------------------------------------

DeepSpeed C++/CUDA extension op report

--------------------------------------------------

NOTE: Ops not installed will be just-in-time (JIT) compiled at

runtime if needed. Op compatibility means that your system

meet the required dependencies to JIT install the op.

--------------------------------------------------

JIT compiled ops requires ninja

ninja .................. [OKAY]

--------------------------------------------------

op name ................ installed .. compatible

--------------------------------------------------

[WARNING] async_io requires the dev libaio .so object and headers but these were not found.

[WARNING] async_io: please install the libaio-dev package with apt

[WARNING] If libaio is already installed (perhaps from source), try setting the CFLAGS and LDFLAGS environment variables to where it can be found.

async_io ............... [NO] ....... [NO]

fused_adam ............. [NO] ....... [OKAY]

cpu_adam ............... [NO] ....... [OKAY]

cpu_adagrad ............ [NO] ....... [OKAY]

cpu_lion ............... [NO] ....... [OKAY]

[WARNING] Please specify the CUTLASS repo directory as environment variable $CUTLASS_PATH

evoformer_attn ......... [NO] ....... [NO]

[WARNING] FP Quantizer is using an untested triton version (3.1.0), only 2.3.(0, 1) and 3.0.0 are known to be compatible with these kernels

fp_quantizer ........... [NO] ....... [NO]

fused_lamb ............. [NO] ....... [OKAY]

fused_lion ............. [NO] ....... [OKAY]

/usr/bin/ld: cannot find -lcufile: No such file or directory

collect2: error: ld returned 1 exit status

gds .................... [NO] ....... [NO]

transformer_inference .. [NO] ....... [OKAY]

inference_core_ops ..... [NO] ....... [OKAY]

cutlass_ops ............ [NO] ....... [OKAY]

quantizer .............. [NO] ....... [OKAY]

ragged_device_ops ...... [NO] ....... [OKAY]

ragged_ops ............. [NO] ....... [OKAY]

random_ltd ............. [NO] ....... [OKAY]

[WARNING] sparse_attn requires a torch version >= 1.5 and < 2.0 but detected 2.5

[WARNING] using untested triton version (3.1.0), only 1.0.0 is known to be compatible

sparse_attn ............ [NO] ....... [NO]

spatial_inference ...... [NO] ....... [OKAY]

transformer ............ [NO] ....... [OKAY]

stochastic_transformer . [NO] ....... [OKAY]

--------------------------------------------------

DeepSpeed general environment info:

torch install path ............... ['/usr/local/lib/python3.12/dist-packages/torch']

torch version .................... 2.5.1+cu124

deepspeed install path ........... ['/usr/local/lib/python3.12/dist-packages/deepspeed']

deepspeed info ................... 0.16.2, unknown, unknown

torch cuda version ............... 12.4

torch hip version ................ None

nvcc version ..................... 12.1

deepspeed wheel compiled w. ...... torch 2.5, cuda 12.4

shared memory (/dev/shm) size .... 46.57 GB

**Screenshots**

**System info (please complete the following information):**

- OS: Ubuntu 22.04

- GPU=2 A40

**Launcher context**

**Docker context**

vllm/vllm-openai:latest (0.65)

**Additional context**

|

closed

|

2024-12-23T22:27:19Z

|

2025-01-13T17:26:35Z

|

https://github.com/deepspeedai/DeepSpeed/issues/6906

|

[

"bug",

"compression"

] |

GHBigD

| 3

|

babysor/MockingBird

|

pytorch

| 511

|

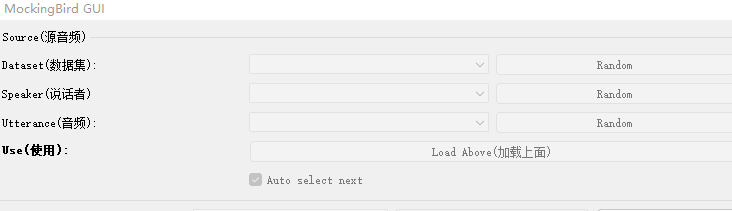

请教作者及各位大佬,模型声音机械怎么办

|

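Complete beginner here, asking for help.

1. I continued training from the author's 75K checkpoint up to 200K steps, with the loss around 02 (as written; possibly 0.2). The voice sounds a bit odd and, above all, robotic. What is going on, and what should I do?

2. Is the training still insufficient? Should I keep training, or do something else?

3. How do I load the area at the top of the software? It is the one shown in the image below, which is grayed out.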

|

open

|

2022-04-20T14:24:24Z

|

2022-04-22T14:04:29Z

|

https://github.com/babysor/MockingBird/issues/511

|

[] |

1239hy

| 2

|

Aeternalis-Ingenium/FastAPI-Backend-Template

|

sqlalchemy

| 33

|

Running pytests

|

Hi.

In root dir README it says:

Make sure that you are in the backend/ directory

In backend/README.md:

INFO: For running the test, make sure you are in the root directory and NOT in the backend/ directory!

I tried both ways, but I am not able to run the tests:

```

from src.main import backend_app

E ModuleNotFoundError: No module named 'src'

```

|

closed

|

2023-12-19T07:41:34Z

|

2023-12-19T08:10:03Z

|

https://github.com/Aeternalis-Ingenium/FastAPI-Backend-Template/issues/33

|

[] |

djo10

| 0

|

tensorflow/datasets

|

numpy

| 5,263

|

[data request] <dataset name>

|

* Name of dataset: NYU Depth Dataset V2

* URL of dataset: http://horatio.cs.nyu.edu/mit/silberman/nyu_depth_v2/bathrooms_part1.zip

I am not able to download this dataset now (which I could years ago). It says the site can't be reached. Any help would be appreciated. Thanks.

|

closed

|

2024-01-29T15:37:50Z

|

2024-02-14T11:47:34Z

|

https://github.com/tensorflow/datasets/issues/5263

|

[

"dataset request"

] |

Moushumi9medhi

| 2

|

strawberry-graphql/strawberry

|

graphql

| 3,631

|

strawberry.ext.mypy_plugin Pydantic 2.9.0 PydanticModelField.to_argument error missing 'model_strict' and 'is_root_model_root'

|

Hello!

It seems the Pydantic 2.9.0 version introduced a breaking change on PydanticModelField.to_argument adding two new arguments:

https://github.com/pydantic/pydantic/commit/d6df62aaa34c21272cb5fcbcbe3a8b88474732f8

and

https://github.com/pydantic/pydantic/commit/93ced97b00491da4778e0608f2a3be62e64437a8

## Describe the Bug

This is the mypy trace

```

./my-file.py:132: error: INTERNAL ERROR -- Please try using mypy master on GitHub:

https://mypy.readthedocs.io/en/stable/common_issues.html#using-a-development-mypy-build

Please report a bug at https://github.com/python/mypy/issues

version: 1.11.2

Traceback (most recent call last):

File "mypy/semanal.py", line 7087, in accept

File "mypy/nodes.py", line 1183, in accept

File "mypy/semanal.py", line 1700, in visit_class_def

File "mypy/semanal.py", line 1891, in analyze_class

File "mypy/semanal.py", line 1925, in analyze_class_body_common

File "mypy/semanal.py", line 1996, in apply_class_plugin_hooks

File "/Users/victorbarroncas/code/boostsec-asset-management/.venv/lib/python3.12/site-packages/strawberry/ext/mypy_plugin.py", line 489, in strawberry_pydantic_class_callback

f.to_argument(

TypeError: PydanticModelField.to_argument() missing 2 required positional arguments: 'model_strict' and 'is_root_model_root'

./my-file.py:132: : note: use --pdb to drop into pdb

```
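One generic way for a plugin to tolerate a method that gains parameters between releases is to inspect the signature and pass only what it accepts. The sketch below is an illustration under stated assumptions, not strawberry's or pydantic's code: `to_argument_old` and `to_argument_new` are hypothetical stand-ins with simplified parameter lists, and only the names `model_strict` and `is_root_model_root` are taken from the error message above.

```python
import inspect


def call_with_supported_kwargs(func, **candidates):
    """Call `func` with only the keyword arguments its signature accepts."""
    params = inspect.signature(func).parameters
    if any(p.kind is inspect.Parameter.VAR_KEYWORD for p in params.values()):
        return func(**candidates)
    accepted = {k: v for k, v in candidates.items() if k in params}
    return func(**accepted)


# Hypothetical stand-ins for the older and newer method signatures.
def to_argument_old(typed, use_alias):
    return ("old", typed, use_alias)


def to_argument_new(typed, use_alias, model_strict, is_root_model_root):
    return ("new", typed, use_alias, model_strict, is_root_model_root)


options = dict(typed=True, use_alias=False, model_strict=False, is_root_model_root=False)
old_result = call_with_supported_kwargs(to_argument_old, **options)
new_result = call_with_supported_kwargs(to_argument_new, **options)
print(old_result, new_result)
```

The trade-off is that the caller has to choose sensible defaults for the new parameters, which requires knowing what they mean in the newer release.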

## System Information

- Operating system: OSX

- strawberry-graphql 0.240.3

- pydantic 2.9.1

- pydantic-core 2.23.3

- mypy 1.11.2

- mypy-extensions 1.0.0

## Additional Context

Similar issue:

https://github.com/strawberry-graphql/strawberry/issues/3560

|

open

|

2024-09-13T12:02:43Z

|

2025-03-20T15:56:52Z

|

https://github.com/strawberry-graphql/strawberry/issues/3631

|

[

"bug"

] |

victor-nb

| 0

|

Lightning-AI/pytorch-lightning

|

data-science

| 19,768

|

Script freezes when Trainer is instantiated

|

### Bug description

I can run a training script with pytorch-lightning once. However, after the training finishes, if I try to run it again, the code freezes when the `L.Trainer` is instantiated. There are no error messages.

Only if I shut down and restart can I run it once again, but then the problem persists for the next run.

This happens to me with different codes, even in the "lightning in 15 minutes" example.

### What version are you seeing the problem on?

v2.2

### How to reproduce the bug

```python

# Based on https://lightning.ai/docs/pytorch/stable/starter/introduction.html

import os

import torch

from torch import optim, nn, utils

from torchvision.datasets import MNIST

from torchvision.transforms import ToTensor

import pytorch_lightning as L

# define any number of nn.Modules (or use your current ones)

encoder = nn.Sequential(nn.Linear(28 * 28, 64), nn.ReLU(), nn.Linear(64, 3))

decoder = nn.Sequential(nn.Linear(3, 64), nn.ReLU(), nn.Linear(64, 28 * 28))

# define the LightningModule

class LitAutoEncoder(L.LightningModule):
    def __init__(self, encoder, decoder):
        super().__init__()
        self.encoder = encoder
        self.decoder = decoder

    def training_step(self, batch, batch_idx):
        # training_step defines the train loop.
        # it is independent of forward
        x, y = batch
        x = x.view(x.size(0), -1)
        # encode, then decode to reconstruct the input
        z = self.encoder(x)
        x_hat = self.decoder(z)
        loss = nn.functional.mse_loss(x_hat, x)
        # Logging to TensorBoard (if installed) by default
        self.log("train_loss", loss)
        return loss

    def configure_optimizers(self):
        optimizer = optim.Adam(self.parameters(), lr=1e-3)
        return optimizer

# init the autoencoder

autoencoder = LitAutoEncoder(encoder, decoder)

# setup data

dataset = MNIST(os.getcwd(), download=True, train=True, transform=ToTensor())

# use 20% of training data for validation

train_set_size = int(len(dataset) * 0.8)

valid_set_size = len(dataset) - train_set_size

seed = torch.Generator().manual_seed(42)

train_set, val_set = utils.data.random_split(dataset, [train_set_size, valid_set_size], generator=seed)

train_loader = utils.data.DataLoader(train_set, num_workers=15)

valid_loader = utils.data.DataLoader(val_set, num_workers=15)

print("Before instantiate Trainer")

# train the model (hint: here are some helpful Trainer arguments for rapid idea iteration)

trainer = L.Trainer(limit_train_batches=100, max_epochs=10, check_val_every_n_epoch=10, accelerator="gpu")

print("After instantiate Trainer")

```

### Error messages and logs

There are no error messages

### Environment

<details>

<summary>Current environment</summary>

* CUDA:

- GPU:

- NVIDIA GeForce RTX 3080 Laptop GPU

- available: True

- version: 12.1

* Lightning:

- denoising-diffusion-pytorch: 1.5.4

- ema-pytorch: 0.2.1

- lightning-utilities: 0.11.2

- pytorch-fid: 0.3.0

- pytorch-lightning: 2.2.2

- torch: 2.2.2

- torchaudio: 2.2.2

- torchmetrics: 1.0.0

- torchvision: 0.17.2

* Packages:

- absl-py: 1.4.0

- accelerate: 0.17.1

- addict: 2.4.0

- aiohttp: 3.8.3

- aiosignal: 1.2.0

- antlr4-python3-runtime: 4.9.3

- anyio: 3.6.1

- appdirs: 1.4.4

- argon2-cffi: 21.3.0

- argon2-cffi-bindings: 21.2.0

- array-record: 0.4.0

- arrow: 1.2.3

- astropy: 5.2.1

- asttokens: 2.0.8

- astunparse: 1.6.3

- async-timeout: 4.0.2

- attrs: 23.1.0

- auditwheel: 5.4.0

- babel: 2.10.3

- backcall: 0.2.0

- beautifulsoup4: 4.11.1

- bleach: 5.0.1

- blinker: 1.6.2

- bqplot: 0.12.40

- branca: 0.6.0

- build: 1.2.1

- cachetools: 5.2.0

- carla: 0.9.14

- certifi: 2024.2.2

- cffi: 1.15.1

- chardet: 5.1.0

- charset-normalizer: 2.1.1

- click: 8.1.3

- click-plugins: 1.1.1

- cligj: 0.7.2

- cloudpickle: 3.0.0

- cmake: 3.26.1

- colossus: 1.3.1

- colour: 0.1.5

- contourpy: 1.0.7

- cycler: 0.11.0

- cython: 0.29.32

- dacite: 1.8.1

- dask: 2023.3.1

- dataclass-array: 1.4.1

- debugpy: 1.6.3

- decorator: 4.4.2

- deepspeed: 0.7.2

- defusedxml: 0.7.1

- denoising-diffusion-pytorch: 1.5.4

- deprecation: 2.1.0

- dill: 0.3.6

- distlib: 0.3.6

- dm-tree: 0.1.8

- docker-pycreds: 0.4.0

- docstring-parser: 0.15

- einops: 0.6.0

- einsum: 0.3.0

- ema-pytorch: 0.2.1

- etils: 1.3.0

- exceptiongroup: 1.2.0

- executing: 1.0.0

- farama-notifications: 0.0.4

- fastjsonschema: 2.16.1

- filelock: 3.8.0

- fiona: 1.9.3

- flask: 2.3.3

- flatbuffers: 24.3.25

- folium: 0.14.0

- fonttools: 4.37.1

- frozenlist: 1.3.1

- fsspec: 2022.8.2

- future: 1.0.0

- fvcore: 0.1.5.post20221221

- gast: 0.4.0

- gdown: 4.7.1

- geojson: 3.0.1

- geopandas: 0.12.2

- gitdb: 4.0.11

- gitpython: 3.1.43

- google-auth: 2.16.2

- google-auth-oauthlib: 0.4.6

- google-pasta: 0.2.0

- googleapis-common-protos: 1.63.0

- googledrivedownloader: 0.4

- gputil: 1.4.0

- gpxpy: 1.5.0

- grpcio: 1.62.1

- gunicorn: 20.0.4

- gym: 0.26.2

- gym-notices: 0.0.8

- gymnasium: 0.28.1

- h5py: 3.7.0

- haversine: 2.8.0

- hdf5plugin: 4.1.1

- hjson: 3.1.0

- humanfriendly: 10.0

- idna: 3.6

- imageio: 2.31.3

- imageio-ffmpeg: 0.4.7

- immutabledict: 2.2.0

- importlib-metadata: 4.12.0

- importlib-resources: 6.1.0

- imutils: 0.5.4

- invertedai: 0.0.8.post1

- iopath: 0.1.10

- ipyevents: 2.0.2

- ipyfilechooser: 0.6.0

- ipykernel: 6.15.3

- ipyleaflet: 0.17.4

- ipython: 8.5.0

- ipython-genutils: 0.2.0

- ipytree: 0.2.2

- ipywidgets: 8.0.2

- itsdangerous: 2.1.2

- jax-jumpy: 1.0.0

- jedi: 0.18.1

- jinja2: 3.1.2

- joblib: 1.4.0

- jplephem: 2.19

- json5: 0.9.10

- jsonargparse: 4.15.0

- jsonschema: 4.19.1

- jsonschema-specifications: 2023.7.1

- jstyleson: 0.0.2

- julia: 0.6.1

- jupyter: 1.0.0

- jupyter-client: 7.3.5

- jupyter-console: 6.4.4

- jupyter-core: 4.11.1

- jupyter-packaging: 0.12.3

- jupyter-server: 1.18.1

- jupyterlab: 3.4.7

- jupyterlab-pygments: 0.2.2

- jupyterlab-server: 2.15.1

- jupyterlab-widgets: 3.0.3

- keras: 2.11.0

- kiwisolver: 1.4.4

- lanelet2: 1.2.1

- lark: 1.1.9

- lazy-loader: 0.2

- leafmap: 0.27.0

- libclang: 14.0.6

- lightning-utilities: 0.11.2

- lit: 16.0.0

- llvmlite: 0.39.1

- locket: 1.0.0

- lunarsky: 0.2.1

- lxml: 4.9.1

- lz4: 4.3.3

- markdown: 3.4.1

- markdown-it-py: 2.2.0

- markupsafe: 2.1.1

- matplotlib: 3.6.1

- matplotlib-inline: 0.1.6

- mdurl: 0.1.2

- mistune: 2.0.4

- moviepy: 1.0.3

- mpi4py: 3.1.3

- mpmath: 1.3.0

- msgpack: 1.0.8

- multidict: 6.0.2

- munch: 2.5.0

- natsort: 8.2.0

- nbclassic: 0.4.3

- nbclient: 0.6.8

- nbconvert: 7.0.0

- nbformat: 5.5.0

- nest-asyncio: 1.5.5

- networkx: 2.8.6

- ninja: 1.10.2.3

- notebook: 6.4.12

- notebook-shim: 0.1.0

- numba: 0.56.4

- numpy: 1.24.4

- nvidia-cublas-cu11: 11.10.3.66

- nvidia-cublas-cu12: 12.1.3.1

- nvidia-cuda-cupti-cu11: 11.7.101

- nvidia-cuda-cupti-cu12: 12.1.105

- nvidia-cuda-nvrtc-cu11: 11.7.99

- nvidia-cuda-nvrtc-cu12: 12.1.105

- nvidia-cuda-runtime-cu11: 11.7.99

- nvidia-cuda-runtime-cu12: 12.1.105

- nvidia-cudnn-cu11: 8.5.0.96

- nvidia-cudnn-cu12: 8.9.2.26

- nvidia-cufft-cu11: 10.9.0.58

- nvidia-cufft-cu12: 11.0.2.54

- nvidia-curand-cu11: 10.2.10.91

- nvidia-curand-cu12: 10.3.2.106

- nvidia-cusolver-cu11: 11.4.0.1

- nvidia-cusolver-cu12: 11.4.5.107

- nvidia-cusparse-cu11: 11.7.4.91

- nvidia-cusparse-cu12: 12.1.0.106

- nvidia-nccl-cu11: 2.14.3

- nvidia-nccl-cu12: 2.19.3

- nvidia-nvjitlink-cu12: 12.4.127

- nvidia-nvtx-cu11: 11.7.91

- nvidia-nvtx-cu12: 12.1.105

- oauthlib: 3.2.2

- omegaconf: 2.3.0

- open-humans-api: 0.2.9

- opencv-python: 4.6.0.66

- openexr: 1.3.9

- opt-einsum: 3.3.0

- osmnx: 1.2.2

- p5py: 1.0.0

- packaging: 21.3

- pandas: 1.5.3

- pandocfilters: 1.5.0

- parso: 0.8.3

- partd: 1.4.1

- pep517: 0.13.0

- pickleshare: 0.7.5

- pillow: 9.2.0

- pint: 0.21.1

- pip: 24.0

- pkgconfig: 1.5.5

- pkgutil-resolve-name: 1.3.10

- platformdirs: 2.5.2

- plotly: 5.13.1

- plyfile: 0.8.1

- portalocker: 2.8.2

- powerbox: 0.7.1

- prettymapp: 0.1.0

- proglog: 0.1.10

- prometheus-client: 0.14.1

- promise: 2.3

- prompt-toolkit: 3.0.31

- protobuf: 3.19.6

- psutil: 5.9.2

- ptyprocess: 0.7.0

- pure-eval: 0.2.2

- py-cpuinfo: 8.0.0

- pyarrow: 10.0.0

- pyasn1: 0.4.8

- pyasn1-modules: 0.2.8

- pycocotools: 2.0

- pycosat: 0.6.3

- pycparser: 2.21

- pydantic: 1.10.9

- pydeprecate: 0.3.1

- pydub: 0.25.1

- pyelftools: 0.30

- pyerfa: 2.0.0.1

- pyfftw: 0.13.1

- pygame: 2.1.2

- pygments: 2.13.0

- pylians: 0.7

- pyparsing: 3.0.9

- pyproj: 3.5.0

- pyproject-hooks: 1.0.0

- pyquaternion: 0.9.9

- pyrsistent: 0.18.1

- pyshp: 2.3.1

- pysocks: 1.7.1

- pysr: 0.16.3

- pystac: 1.8.4

- pystac-client: 0.7.5

- python-box: 7.1.1

- python-dateutil: 2.8.2

- pytorch-fid: 0.3.0

- pytorch-lightning: 2.2.2

- pytz: 2022.2.1

- pywavelets: 1.4.1

- pyyaml: 6.0

- pyzmq: 23.2.1

- qtconsole: 5.3.2

- qtpy: 2.2.0

- ray: 2.10.0

- referencing: 0.30.2

- requests: 2.31.0

- requests-oauthlib: 1.3.1

- rich: 13.3.4

- rpds-py: 0.10.3

- rsa: 4.9

- rtree: 1.0.1

- ruamel.yaml: 0.17.21

- ruamel.yaml.clib: 0.2.7

- scikit-build-core: 0.8.2

- scikit-image: 0.20.0

- scikit-learn: 1.2.2

- scipy: 1.8.1

- scooby: 0.7.4

- seaborn: 0.12.2

- send2trash: 1.8.0

- sentry-sdk: 1.44.1

- setproctitle: 1.3.3

- setuptools: 67.6.0

- shapely: 1.8.0

- shellingham: 1.5.4

- six: 1.16.0

- sklearn: 0.0.post1

- smmap: 5.0.1

- sniffio: 1.3.0

- soupsieve: 2.3.2.post1

- spiceypy: 6.0.0

- stack-data: 0.5.0

- stravalib: 1.4

- swagger-client: 1.0.0

- sympy: 1.11.1

- tabulate: 0.9.0

- taichi: 1.5.0

- tenacity: 8.2.3

- tensorboard: 2.11.2

- tensorboard-data-server: 0.6.1

- tensorboard-plugin-wit: 1.8.1

- tensorboardx: 2.6.2.2

- tensorflow: 2.11.0

- tensorflow-addons: 0.21.0

- tensorflow-datasets: 4.9.0

- tensorflow-estimator: 2.11.0

- tensorflow-graphics: 2021.12.3

- tensorflow-io-gcs-filesystem: 0.29.0

- tensorflow-metadata: 1.13.0

- tensorflow-probability: 0.19.0

- termcolor: 2.1.1

- terminado: 0.15.0

- threadpoolctl: 3.1.0

- tifffile: 2023.3.21

- timm: 0.4.12

- tinycss2: 1.1.1

- toml: 0.10.2

- tomli: 2.0.1

- tomlkit: 0.11.4

- toolz: 0.12.1

- torch: 2.2.2

- torchaudio: 2.2.2

- torchmetrics: 1.0.0

- torchvision: 0.17.2

- tornado: 6.2

- tqdm: 4.66.2

- tr: 1.0.0.2

- trafficgen: 0.0.0

- traitlets: 5.4.0

- traittypes: 0.2.1

- trimesh: 4.3.0

- triton: 2.2.0

- typeguard: 2.13.3

- typer: 0.12.2

- typing-extensions: 4.11.0

- urllib3: 1.26.15

- virtualenv: 20.16.5

- visu3d: 1.5.1

- wandb: 0.16.5

- waymo-open-dataset-tf-2-11-0: 1.6.1

- wcwidth: 0.2.5

- webencodings: 0.5.1

- websocket-client: 1.4.1

- werkzeug: 2.3.7

- wheel: 0.37.1

- whitebox: 2.3.1

- whiteboxgui: 2.3.0

- widgetsnbextension: 4.0.3

- wrapt: 1.14.1

- xyzservices: 2023.7.0

- yacs: 0.1.8

- yapf: 0.30.0

- yarl: 1.8.1

- zipp: 3.8.1

* System:

- OS: Linux

- architecture:

- 64bit

- ELF

- processor: x86_64

- python: 3.8.19

- release: 5.15.0-102-generic

- version: #112~20.04.1-Ubuntu SMP Thu Mar 14 14:28:24 UTC 2024

</details>

### More info

_No response_

|

closed

|

2024-04-12T14:11:34Z

|

2024-06-22T22:46:07Z

|

https://github.com/Lightning-AI/pytorch-lightning/issues/19768

|

[

"question"

] |

PabloVD

| 5

|

cvat-ai/cvat

|

tensorflow

| 8,764

|

Can access CVAT over LAN but not Internet

|

Hi, I did everything described in https://github.com/cvat-ai/cvat/issues/1095, but http://localhost:7070/auth/login does not log in and shows the message "Could not check authentication on the server Open the Browser Console to get details", whereas http://localhost:8080/auth/login works without problems. Over WAN, at http://myip:7060/, I see the same behavior. Can you help me?

|

closed

|

2024-12-02T12:04:18Z

|

2024-12-10T17:54:18Z

|

https://github.com/cvat-ai/cvat/issues/8764

|

[

"need info"

] |

alirezajafarishahedan

| 1

|

microsoft/MMdnn

|

tensorflow

| 378

|

load pytorch vgg16 converted from tensorflow : ImportError: No module named 'MainModel'

|

Platform (like ubuntu 16.04/win10): ubuntu16

Python version: 3.6

Source framework with version (like Tensorflow 1.4.1 with GPU): 1.3

Destination framework with version (like CNTK 2.3 with GPU):

Pre-trained model path (webpath or webdisk path):

Running scripts:

When calling `torch.load` on the converted pretrained model:

ImportError: No module named 'MainModel'
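The mechanism behind this kind of error can be shown with the standard library alone, on the assumption that the model file was saved as a whole pickled object: unpickling needs the module that defines the class to be importable under the same name. In the sketch below the in-memory `MainModel` module and the `KitModel` class are placeholders created for the demonstration.

```python
import pickle
import sys
import types

# Build a throwaway module named "MainModel" that defines one class.
module = types.ModuleType("MainModel")
exec("class KitModel:\n    pass\n", module.__dict__)
sys.modules["MainModel"] = module

payload = pickle.dumps(module.KitModel())

# Without the defining module, loading fails with an import error.
del sys.modules["MainModel"]
try:
    pickle.loads(payload)
    failed_without_module = False
except ImportError:
    failed_without_module = True

# With the module importable again, the same payload loads fine.
sys.modules["MainModel"] = module
restored = pickle.loads(payload)
print(failed_without_module, type(restored).__name__)
```

By analogy, making the generated model definition file importable as `MainModel` before calling `torch.load` may resolve the error; that is a suggestion to try, not a confirmed fix.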

|

closed

|

2018-08-27T04:56:18Z

|

2019-03-21T01:26:58Z

|

https://github.com/microsoft/MMdnn/issues/378

|

[] |

Proxiaowen

| 8

|

healthchecks/healthchecks

|

django

| 549

|

log entries: keep last n failure entries

|

Hello,

when the log entries hit the maximum, old messages are removed.

Especially with higher frequency intervals, keeping a few of those "failure" events (which may contain important debug information in the body) would be useful, as opposed to removing log entries solely based on the timestamp. Positive log entries are often only useful for their timestamp.

It can happen that I have 100 positive log entries but lack the last 2 - 3 negative log entries with debug information in the body, and I'm really interested in the failures.

I'm not sure how this could be structured clearly without over-complicating the UI, maybe always keep the last 3 negative entries in the log?
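To make the proposal concrete, here is a rough sketch of such a retention rule. It is not how healthchecks actually stores or prunes its log; `LogEntry`, `prune`, `max_entries`, and `keep_failures` are hypothetical names, and the sketch assumes `keep_failures` does not exceed `max_entries`:

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LogEntry:
    timestamp: int      # e.g. seconds since epoch
    is_failure: bool    # True for a "failure" event


def prune(entries: List[LogEntry], max_entries: int, keep_failures: int) -> List[LogEntry]:
    """Keep at most max_entries entries, always retaining the newest
    keep_failures failure entries regardless of their age."""
    ordered = sorted(entries, key=lambda e: e.timestamp)

    # Indices of the most recent failures are protected from removal.
    failure_idx = [i for i, e in enumerate(ordered) if e.is_failure]
    kept = set(failure_idx[-keep_failures:]) if keep_failures > 0 else set()

    # Fill the remaining slots with the newest entries, newest first.
    for i in range(len(ordered) - 1, -1, -1):
        if len(kept) >= max_entries:
            break
        kept.add(i)

    return [ordered[i] for i in sorted(kept)]


if __name__ == "__main__":
    log = [LogEntry(1, True), LogEntry(2, True)]
    log += [LogEntry(t, False) for t in range(3, 13)]
    for entry in prune(log, max_entries=5, keep_failures=2):
        print(entry)
```

With `keep_failures=0` the function reduces to plain timestamp-based pruning, which is the current behaviour described above.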

|

closed

|

2021-08-06T12:07:32Z

|

2022-06-19T13:57:26Z

|

https://github.com/healthchecks/healthchecks/issues/549

|

[

"feature"

] |

lukastribus

| 6

|

dpgaspar/Flask-AppBuilder

|

flask

| 1,516

|

How to use OR operation in Rison

|

In the documentation, I found that we can use `AND` operations like this: `(filters:!((col:name,opr:sw,value:a),(col:name,opr:ew,value:z)))`, but how do we use an `OR` operation?

|

closed

|

2020-11-11T00:18:15Z

|

2021-06-29T00:56:11Z

|

https://github.com/dpgaspar/Flask-AppBuilder/issues/1516

|

[

"question",

"stale"

] |

HugoPu

| 2

|

biolab/orange3

|

data-visualization

| 6,892

|

Problem with SVM widget (degree must be int but only allows float)

|

<!--

Thanks for taking the time to report a bug!

If you're raising an issue about an add-on (i.e., installed via Options > Add-ons), raise an issue in the relevant add-on's issue tracker instead. See: https://github.com/biolab?q=orange3

To fix the bug, we need to be able to reproduce it. Please answer the following questions to the best of your ability.

-->

**What's wrong?**

<!-- Be specific, clear, and concise. Include screenshots if relevant. -->

<!-- If you're getting an error message, copy it, and enclose it with three backticks (```). -->

I got this error when trying to use the SVM widget:

Fitting failed.

The 'degree' parameter of SVC must be an int in the range [0, inf). Got 3.0 instead.