| repo_name (string, length 9-75) | topic (string, 30 classes) | issue_number (int64, 1 to 203k) | title (string, length 1-976) | body (string, length 0-254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, length 38-105) | labels (list, length 0-9) | user_login (string, length 1-39) | comments_count (int64, 0-452) |
|---|---|---|---|---|---|---|---|---|---|---|---|

pydantic/pydantic

|

pydantic

| 11,590

|

Mypy error when using `@model_validator(mode="after")` and `@final`

|

### Initial Checks

- [x] I confirm that I'm using Pydantic V2

### Description

This is very similar to https://github.com/pydantic/pydantic/issues/6709, but occurs when the model class is decorated with `@typing.final`.

```console
$ mypy --cache-dir=/dev/null --strict bug.py
bug.py:10: error: Cannot infer function type argument [misc]
Found 1 error in 1 file (checked 1 source file)
```

This error seems to occur irrespective of whether the `pydantic.mypy` plugin is configured or not. I wondered if this might be an issue in `mypy`, but if I switch out `@pydantic.model_validator` for another decorator, e.g. `@typing_extensions.deprecated`, then the error isn't raised.

### Example Code

```Python
from __future__ import annotations

from typing import Self, final

import pydantic


@final
class MyModel(pydantic.BaseModel):
    @pydantic.model_validator(mode="after")
    def my_validator(self) -> Self:
        return self
```

### Python, Pydantic & OS Version

```Text
pydantic version: 2.10.6
pydantic-core version: 2.27.2
pydantic-core build: profile=release pgo=false
install path: /home/nick/Sources/kraken-lexicon-gbr/.venv/lib/python3.12/site-packages/pydantic
python version: 3.12.9 (main, Feb 5 2025, 19:10:45) [Clang 19.1.6 ]
platform: Linux-6.13.4-arch1-1-x86_64-with-glibc2.41
related packages: typing_extensions-4.12.2 mypy-1.15.0
commit: unknown
```

|

closed

|

2025-03-20T11:55:22Z

|

2025-03-21T09:32:48Z

|

https://github.com/pydantic/pydantic/issues/11590

|

[

"bug V2",

"pending"

] |

ngnpope

| 1

|

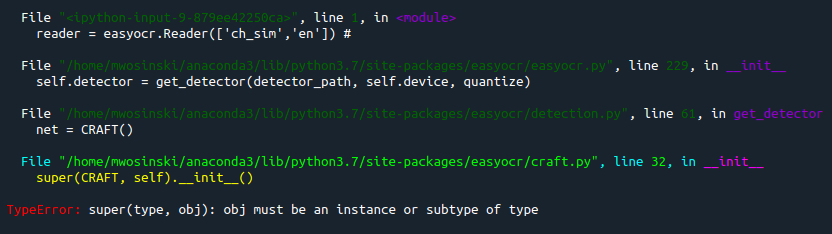

JaidedAI/EasyOCR

|

deep-learning

| 957

|

Image paths containing Chinese characters are not supported

|

EasyOCR fails when `readtext` is given an image whose path contains Chinese characters, because it loads the file with OpenCV (`cv2.imread`).

So I added some code to utils.py to solve this problem. Now the OCR system runs smoothly.

    def is_chinese(string):
        """
        Check whether the string contains any Chinese characters.

        :param string: the string to check
        :return: bool

        Reference: https://blog.csdn.net/wenqiwenqi123/article/details/122258804
        """
        for ch in string:
            if u'\u4e00' <= ch <= u'\u9fff':
                return True
        return False  # no CJK Unified Ideograph found

    def reformat_input(image):
        if type(image) == str:
            if image.startswith('http://') or image.startswith('https://'):
                tmp, _ = urlretrieve(image, reporthook=printProgressBar(prefix='Progress:', suffix='Complete', length=50))
                img_cv_grey = cv2.imread(tmp, cv2.IMREAD_GRAYSCALE)
                os.remove(tmp)
            else:
                if is_chinese(image):
                    # cv2.imread fails on such paths, so read the raw bytes with NumPy and decode them
                    img_cv_grey = cv2.imdecode(np.fromfile(image, dtype=np.uint8), cv2.IMREAD_GRAYSCALE)
                else:
                    img_cv_grey = cv2.imread(image, cv2.IMREAD_GRAYSCALE)
                image = os.path.expanduser(image)
            img = loadImage(image)  # can accept URL
            ........
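The CJK range check above covers only one class of problem path. The following standard-library sketch tests for any non-ASCII character and reads the file bytes with Python's own file handling, which accepts Unicode paths. It assumes that OpenCV's path-based loading can break on non-ASCII paths in general (for example, accented or Cyrillic names on Windows). That assumption was not tested in this report. The helper names `needs_byte_decoding` and `read_image_bytes` are hypothetical.

```python
from pathlib import Path


def needs_byte_decoding(path: str) -> bool:
    """Return True if the path contains any non-ASCII character.

    Assumption: these are the paths OpenCV's path-based loader may mishandle,
    so they should be read by Python and decoded from bytes instead.
    """
    return not path.isascii()


def read_image_bytes(path: str) -> bytes:
    """Read the raw file contents; pathlib/open() accept Unicode paths."""
    return Path(path).expanduser().read_bytes()
```

In `reformat_input`, the returned bytes could then be decoded with `cv2.imdecode(np.frombuffer(data, dtype=np.uint8), cv2.IMREAD_GRAYSCALE)`, which mirrors the `np.fromfile` approach above.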

|

open

|

2023-03-01T02:31:50Z

|

2023-03-01T02:31:50Z

|

https://github.com/JaidedAI/EasyOCR/issues/957

|

[] |

drrobincroft

| 0

|

onnx/onnx

|

pytorch

| 5,988

|

Version converter: No Adapter From Version 16 for Identity

|

# Ask a Question

### Question

I encountered the following error while trying to convert an ONNX model from opset 16 to opset 15:

"**adapter_lookup: Assertion `false` failed: No Adapter From Version $16 for Identity**"

I need to use opset 15, but the relevant ONNX resources are currently only available as opset 16. Do you have any suggestions?

|

open

|

2024-03-02T13:51:14Z

|

2024-03-06T19:14:23Z

|

https://github.com/onnx/onnx/issues/5988

|

[

"question"

] |

lsp2

| 4

|

joke2k/django-environ

|

django

| 410

|

Default for keys in dictionary

|

With `env.dict` we can set defaults:

```python
MYVAR = env.dict(
    "MYVAR",
    {
        'value': bool,
        'cast': {
            'ACTIVE': bool,
            'URL': str,
        }
    },
    default={
        'ACTIVE': False,
        'URL': "http://example.com",
    })
```

Currently, the defaults are used only if the entry `MYVAR` is not present in the `.env` file.

If I have an entry like `MYVAR=ACTIVE:True;`, the result is the dictionary `{"ACTIVE":"True"}`.

Wouldn't it be better to apply the default to every key that is not specified in the environment? In my example, I would then get

`{"ACTIVE":"True", "URL": "http://example.com"}`
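Until django-environ supports this, one workaround is to merge the parsed dictionary over the defaults yourself. The following plain-Python sketch does not call django-environ. `parse_with_defaults` and `to_bool` are hypothetical helpers, and the `KEY=value;KEY2=value2` syntax is an assumption modelled on django-environ's dict-with-casts format.

```python
from __future__ import annotations

from typing import Any, Callable, Mapping


def to_bool(value: str) -> bool:
    """Interpret common truthy strings (bool("False") would be True, so avoid bool)."""
    return value.strip().lower() in {"1", "true", "yes", "on"}


def parse_with_defaults(
    raw: str,
    casts: Mapping[str, Callable[[str], Any]],
    defaults: Mapping[str, Any],
) -> dict[str, Any]:
    """Parse 'KEY=value;KEY2=value2' and fill every missing key from `defaults`.

    Hypothetical helper: the ';' / '=' syntax is an assumption, not a call
    into django-environ.
    """
    parsed: dict[str, Any] = {}
    for pair in raw.split(";"):
        if not pair:
            continue  # tolerate a trailing ';'
        key, _, value = pair.partition("=")  # split on the first '=' only
        parsed[key] = casts.get(key, str)(value)
    # Keys present in the environment win; all other keys come from the defaults.
    return {**defaults, **parsed}
```

The design choice is simply `{**defaults, **parsed}`: later keys override earlier ones, so any key set in the environment replaces its default, and the rest survive.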

|

open

|

2022-07-21T08:59:32Z

|

2024-04-30T17:12:38Z

|

https://github.com/joke2k/django-environ/issues/410

|

[] |

esseti

| 1

|

pennersr/django-allauth

|

django

| 3,914

|

Adding/changing email address with MFA enabled

|

It seems that it's impossible to add or change an email address while having MFA enabled, judging by this commit:

https://github.com/pennersr/django-allauth/pull/3383/commits/7bf4d5e6e4b188b7e0738652f2606b98804374ab

What is the logic behind this? What is the expected flow when a user needs to change an email address?

|

closed

|

2024-06-22T18:05:12Z

|

2024-06-22T19:17:58Z

|

https://github.com/pennersr/django-allauth/issues/3914

|

[] |

eyalch

| 2

|

lukasmasuch/streamlit-pydantic

|

streamlit

| 74

|

List of datatypes or Nested Models Not Displaying Correctly in `pydantic_input` form

|

### Checklist

- [x] I have searched the [existing issues](https://github.com/lukasmasuch/streamlit-pydantic/issues) for similar issues.

- [x] I added a very descriptive title to this issue.

- [x] I have provided sufficient information below to help reproduce this issue.

### Summary

### **Description:**

When using `pydantic_input` with lists (e.g., `List[str]`) or lists of models, the form does not render correctly.

This issue occurs despite following the usage patterns from the [official examples](https://st-pydantic.streamlit.app/), specifically the **Complex Instance Model** example. This behavior is observed for:

- Simple lists like `List[str]`, `List[int]`

- List of Pydantic models

```python
# Nested Pydantic Model
class NestedModel(BaseModel):
    id: int = Field(..., description="ID of the nested object")
    name: str = Field(..., description="Name of the nested object")


# Main ConfigModel
class ConfigModel(BaseModel):
    keys: List[str] = Field(..., description="List of keys used for lookup")
    value: str = Field(..., description="Value associated with the keys")
    nested_items: List[NestedModel] = Field(..., description="List of nested model instances")
```

The models above produce the following form when used with the `pydantic_input` method:

**Expected Behaviour**

### Reproducible Code Example

```Python
import streamlit as st
from pydantic import BaseModel, Field
from typing import List, Dict, Any
import streamlit_pydantic as sp


# Nested Pydantic Model
class NestedModel(BaseModel):
    id: int = Field(..., description="ID of the nested object")
    name: str = Field(..., description="Name of the nested object")


# Main ConfigModel
class ConfigModel(BaseModel):
    keys: List[str] = Field(..., description="List of keys used for lookup")
    value: str = Field(..., description="Value associated with the keys")
    nested_items: List[NestedModel] = Field(..., description="List of nested model instances")


# -------------------------
# Streamlit UI
# -------------------------
st.set_page_config(layout="wide")
st.title("ConfigModel Input Form")

# Pre-filled data (Optional)
data = {
    "keys": ["id1", "id2"],
    "value": "example_value",
    # "metadata" is not a ConfigModel field; Pydantic ignores extra keys by default
    "metadata": [{"key": "meta1", "value": 100}, {"key": "meta2", "value": 200}],
    "nested_items": [{"id": 1, "name": "Item 1"}, {"id": 2, "name": "Item 2"}]
}
model_instance = ConfigModel(**data)

# Render the form using streamlit_pydantic
with st.form("config_model_form"):
    form_result = sp.pydantic_input(key="config_model", model=model_instance)
    submit_button = st.form_submit_button("Submit")

# Handle form submission
if submit_button:
    if form_result:
        try:
            # model_dump() replaces the deprecated .dict() in Pydantic v2
            validated_data = ConfigModel.model_validate(form_result).model_dump()
            st.success("Form submitted successfully!")
            st.json(validated_data, expanded=True)
        except Exception as e:
            st.error(f"Validation error: {str(e)}")
    else:
        st.warning("Form submission failed. Please check the inputs.")
```

### Steps To Reproduce

1. Install dependencies from the provided `requirements.txt`.

2. Save the code as `app.py`.

3. Run the code using:

```bash

streamlit run app.py

```

**Dependencies:**

```plaintext

pydantic-core==2.27.2

pydantic==2.10.6

streamlit==1.42.1

streamlit-pydantic==0.6.1-rc.2

pydantic-extra-types==2.10.2

pydantic-settings==2.7.1

```

### Expected Behavior

- The `keys` field should display as a list input with pre-filled values `"id1"`, `"id2"`.

- The `nested_items` field should render forms for each instance of the `NestedModel` within a list form.

### Current Behavior

- The list input does not appear or behaves incorrectly.

- List of models within `nested_items` are not displayed as expected, and editing or adding new instances is not possible.

### Is this a regression?

- [ ] Yes, this used to work in a previous version.

### Debug info

- **OS:** Ubuntu 22.04

- **Python:** 3.9, 3.11

- **Streamlit:** 1.42.1

- **Pydantic:** 2.10.6

- **streamlit-pydantic:** 0.6.1-rc.2, 0.6.1-rc.3

### Additional Information

**Please advise on whether this is a compatibility issue or requires additional configuration.** 😊

|

open

|

2025-02-21T20:00:22Z

|

2025-02-25T13:33:12Z

|

https://github.com/lukasmasuch/streamlit-pydantic/issues/74

|

[

"type:bug",

"status:needs-triage"

] |

gaurav-brandscapes

| 0

|

influxdata/influxdb-client-python

|

jupyter

| 31

|

Add support for /delete metrics endpoint

|

closed

|

2019-11-01T15:09:05Z

|

2019-11-04T09:32:01Z

|

https://github.com/influxdata/influxdb-client-python/issues/31

|

[] |

rhajek

| 0

|

|

pydantic/FastUI

|

pydantic

| 253

|

Cities demo: clicking page "1" does not respond

|

Is this a component bug?

|

closed

|

2024-03-21T13:13:59Z

|

2024-03-21T13:18:26Z

|

https://github.com/pydantic/FastUI/issues/253

|

[] |

qq727127158

| 1

|

pallets-eco/flask-sqlalchemy

|

flask

| 486

|

Connecting multiple pre-existing databases via binds

|

Hi. I'm new to Flask-SQLAlchemy. I have multiple pre-existing MySQL databases (for this example, two is enough). I have created a minimal example of what I'm trying to do below. I can successfully connect to one database using `SQLALCHEMY_DATABASE_URI`. For the second database, I'm trying to use `SQLALCHEMY_BINDS`, but I am unable to access it. I haven't been able to find an example that uses binds with pre-existing databases. Any help would be appreciated.

```python
from flask import Flask
from flask_sqlalchemy import SQLAlchemy

app = Flask(__name__)

# db1 - works ok.
app.config['SQLALCHEMY_DATABASE_URI'] = 'mysql://user:password@localhost/SiPMCalibration'

# db2 - not working.
app.config['SQLALCHEMY_BINDS'] = {
    'db2': 'mysql://user:password@localhost/Run8Chan'
}

db = SQLAlchemy(app)
# db.engine is the default engine (db1), so only db1's tables are reflected here.
db.Model.metadata.reflect(db.engine)


# Setup the model for the pre-existing table.
class sipm_calibration(db.Model):
    __table__ = db.Model.metadata.tables['Calibration']


# Some code to retrieve the most recent 100 entries and print their headers (i.e. keys)
query = db.session.query(sipm_calibration).order_by(sipm_calibration.ID.desc()).limit(100)
print(db.session.execute(query).keys())  # Works fine.

# ---------------------
# All ok so far - try again for the second database via binding.
class run_entry(db.Model):
    __bind_key__ = 'db2'
    __table__ = db.Model.metadata.tables['Runs']


query = db.session.query(run_entry).order_by(run_entry.ID.desc()).limit(100)
print(db.session.execute(query).keys())
```

|

closed

|

2017-03-20T14:19:26Z

|

2020-12-05T20:46:27Z

|

https://github.com/pallets-eco/flask-sqlalchemy/issues/486

|

[] |

daniel-saunders

| 3

|

plotly/dash-table

|

plotly

| 448

|

filtering not working in python or R

|

@rpkyle @Marc-Andre-Rivet Data Table filtering does not work when running the first example of the [docs](https://dash.plot.ly/datatable/interactivity) locally:

The gif above is from running:

```python
import dash
from dash.dependencies import Input, Output
import dash_table
import dash_core_components as dcc
import dash_html_components as html
import pandas as pd

df = pd.read_csv('https://raw.githubusercontent.com/plotly/datasets/master/gapminder2007.csv')

app = dash.Dash(__name__)

app.layout = html.Div([
    dash_table.DataTable(
        id='datatable-interactivity',
        columns=[
            {"name": i, "id": i, "deletable": True} for i in df.columns
        ],
        data=df.to_dict('records'),
        editable=True,
        filtering=True,
        sorting=True,
        sorting_type="multi",
        row_selectable="multi",
        row_deletable=True,
        selected_rows=[],
        pagination_mode="fe",
        pagination_settings={
            "current_page": 0,
            "page_size": 10,
        },
    ),
    html.Div(id='datatable-interactivity-container')
])


@app.callback(
    Output('datatable-interactivity-container', "children"),
    [Input('datatable-interactivity', "derived_virtual_data"),
     Input('datatable-interactivity', "derived_virtual_selected_rows")])
def update_graphs(rows, derived_virtual_selected_rows):
    if derived_virtual_selected_rows is None:
        derived_virtual_selected_rows = []

    dff = df if rows is None else pd.DataFrame(rows)

    colors = ['#7FDBFF' if i in derived_virtual_selected_rows else '#0074D9'
              for i in range(len(dff))]

    return [
        dcc.Graph(
            id=column,
            figure={
                "data": [
                    {
                        "x": dff["country"],
                        "y": dff[column],
                        "type": "bar",
                        "marker": {"color": colors},
                    }
                ],
                "layout": {
                    "xaxis": {"automargin": True},
                    "yaxis": {
                        "automargin": True,
                        "title": {"text": column}
                    },
                    "height": 250,
                    "margin": {"t": 10, "l": 10, "r": 10},
                },
            },
        )
        for column in ["pop", "lifeExp", "gdpPercap"] if column in dff
    ]


if __name__ == '__main__':
    app.run_server(debug=True)
```

The same behaviour occurs when running a simple datatable example in either python or R:

`Python` minimal example:

```python
import dash
import dash_table
import pandas as pd

df = pd.read_csv('https://raw.githubusercontent.com/plotly/datasets/master/solar.csv')

app = dash.Dash(__name__)


def generateDataTable(DT, type):
    return (
        dash_table.DataTable(
            id="cost-stats-table" if type == "cost" else "procedure-stats-table",
            columns=[{"name": i, "id": i} for i in DT.columns],
            data=DT.to_dict("records"),
            filtering=True,
            sorting=True if type == "cost" else False,
            sorting_type="multi",
            pagination_mode="fe",
            pagination_settings={
                "displayed_pages": 1,
                "current_page": 0,
                "page_size": 5
            },
            navigation="page",
            style_cell={
                "background-color": "#171b26",
                "color": "#7b7d8d",
                "textOverflow": "ellipsis"
            },
            style_filter={
                "background-color": "#171b26",
                "color": "#7b7d8d"
            }
        )
    )


app.layout = generateDataTable(df, "cost")

if __name__ == '__main__':
    app.run_server(debug=True)
```

`R` minimal example:

```r
library(dashR)
library(dashCoreComponents)
library(dashHtmlComponents)
library(dashTable)

df <- read.csv(
  url(
    'https://raw.githubusercontent.com/plotly/datasets/master/solar.csv'
  ),
  check.names=FALSE,
  stringsAsFactors=FALSE
)

generateDataTable <- function(DT, type = c("procedure", "cost")){
  dashDataTable(
    id = ifelse(
      type == "cost",
      "cost-stats-table",
      "procedure-stats-table"
    ),
    columns = lapply(
      colnames(DT),
      function(x){
        list(name = x, id = x)
      }
    ),
    data = dashTable:::df_to_list(DT),
    filtering = TRUE,
    sorting = ifelse(
      type == "cost",
      FALSE,
      TRUE
    ),
    sorting_type = "multi",
    pagination_mode = "fe",
    pagination_settings = list(
      displayed_pages = 1, current_page = 0, page_size = 5
    ),
    navigation = "page",
    style_cell = list(
      backgroundColor = "#171b26",
      color = "#7b7d8d",
      textOverflow = "ellipsis"
    ),
    style_filter = list(
      backgroundColor = "#171b26",
      color = "#7b7d8d"
    )
  )
}

app <- Dash$new()
app$layout(generateDataTable(df))
app$run_server(debug = TRUE)
```

Package versions:

```

Python 3.7.2

dash==0.41.0

dash-core-components==0.46.0

dash-html-components==0.15.0

dash-renderer==0.22.0

dash-table==3.6.0

```

This occurs in Firefox 67.0 and Chrome 74.0.3729.169.

|

closed

|

2019-05-29T14:18:57Z

|

2019-06-04T18:07:21Z

|

https://github.com/plotly/dash-table/issues/448

|

[] |

sacul-git

| 2

|

dpgaspar/Flask-AppBuilder

|

rest-api

| 1,574

|

Invalid link in "about" of GitHub repo

|

Currently the "about" section has an invalid link to http://flaskappbuilder.pythonanywhere.com/:

Let's fix this and link to the docs instead: https://flask-appbuilder.readthedocs.io/en/latest/

@dpgaspar

|

closed

|

2021-02-25T22:55:30Z

|

2021-04-09T13:31:32Z

|

https://github.com/dpgaspar/Flask-AppBuilder/issues/1574

|

[] |

thesuperzapper

| 3

|

dpgaspar/Flask-AppBuilder

|

rest-api

| 1,559

|

export only selected data using the @action method.

|

Hello, I'm desperately trying to export only selected data using the @action method.

The code actually works quite well, but only for one query.

    @action("export", "Export", "Select Export?", "fa-file-excel-o", single=False)
    def export(self, items):
        if isinstance(items, list):
            urltools.get_filter_args(self._filters)
            order_column, order_direction = self.base_order
            count, lst = self.datamodel.query(self._filters, order_column, order_direction)
        csv = ""
        for item in self.datamodel.get_values(items, self.list_columns):
            csv += str(item) + '\n'
        response = make_response(csv)
        cd = 'attachment; filename=mycsv.csv'
        response.headers['Content-Disposition'] = cd
        response.mimetype = 'text/csv'
        return response

I don't know what to do next. I would like to export all of the selected data.
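Building the CSV with `str(item)` writes each row as a Python dict literal rather than as comma-separated values. Suppose `get_values` yields one dict per selected item, keyed by column name. That is an assumption worth checking against your Flask-AppBuilder version. If it holds, the standard-library `csv` module can write a header plus one row per selected record. The resulting string could then go to `make_response` as in the action above. `rows_to_csv` is a hypothetical helper.

```python
import csv
import io
from typing import Iterable, Mapping, Sequence


def rows_to_csv(rows: Iterable[Mapping[str, object]], columns: Sequence[str]) -> str:
    """Serialise dict-like rows to CSV text with a header line.

    Keys not listed in `columns` are ignored; missing keys become empty cells.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(columns), extrasaction="ignore")
    writer.writeheader()
    for row in rows:
        writer.writerow(row)  # one CSV line per selected item
    return buffer.getvalue()
```

Inside the action, this might be used as `rows_to_csv(self.datamodel.get_values(items, self.list_columns), self.list_columns)`. Values containing commas or quotes are escaped by the `csv` module, which plain string concatenation would not do.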

|

closed

|

2021-02-07T17:47:31Z

|

2021-02-12T08:25:50Z

|

https://github.com/dpgaspar/Flask-AppBuilder/issues/1559

|

[] |

pUC19

| 0

|

proplot-dev/proplot

|

matplotlib

| 20

|

eps figures save massive object outside of figure

|

I'm not really sure how to debug this, but something in your figure saving creates vector objects that extend seemingly infinitely far off the page.

You can see this in my Illustrator screenshot. Illustrator can't really modify the bar object because it reports that transforming it would make it too large. The blue outline extends downward indefinitely. If I save an EPS from plain `matplotlib`, the object is only the size of the bar.

<img width="1366" alt="Screen Shot 2019-06-12 at 11 25 21 AM" src="https://user-images.githubusercontent.com/8881170/59372567-0b924900-8d05-11e9-97c6-d32a6b434adc.png">

|

closed

|

2019-06-12T17:28:14Z

|

2020-05-19T16:52:26Z

|

https://github.com/proplot-dev/proplot/issues/20

|

[

"bug"

] |

bradyrx

| 4

|

pyg-team/pytorch_geometric

|

pytorch

| 9,769

|

Slack link no longer works

|

### 📚 Describe the documentation issue

Slack link seems to be no longer active @rus

### Suggest a potential alternative/fix

_No response_

|

closed

|

2024-11-09T01:47:55Z

|

2024-11-13T03:03:56Z

|

https://github.com/pyg-team/pytorch_geometric/issues/9769

|

[

"documentation"

] |

chiggly007

| 1

|

graphistry/pygraphistry

|

jupyter

| 363

|

[FEA] API personal key support

|

Need support for new api service keys:

- [x] `register(personal_key=...)`, used for files + datasets endpoints

- [x] add to `README.md`

+ testing uploads

|

open

|

2022-06-10T23:24:15Z

|

2023-02-10T09:12:05Z

|

https://github.com/graphistry/pygraphistry/issues/363

|

[

"enhancement",

"p2"

] |

lmeyerov

| 1

|

statsmodels/statsmodels

|

data-science

| 8,947

|

How to completely remove training data from model.

|

Dear developers of statsmodels,

First of all, thank you so much for creating such an amazing package.

I've recently used statsmodels (0.14.0) to make a GLM model and I would like to share it with others so that they can input their own data and make predictions.

However, I am prohibited from sharing the data I have used for training.

Therefore, I tried to remove the training data by calling `.save()` with `remove_data=True`, but when I checked the `.model.data.frame` attribute of the fitted model object, it still contained the training data.

To the best of my knowledge, the data is also stored in `model.data.orig_exog` and `model.data.orig_endog`, as mentioned in [issue7494](https://github.com/statsmodels/statsmodels/issues/7494), but I am not sure where else the training data may be held.

If you could please enlighten me on how to completely remove the training data from the fitted model object, that would be great.

Many thanks in advance.

### Reproducible code

```python
import numpy as np
import statsmodels.api as sm
import statsmodels.formula.api as smf

# load "growth curves of pigs" dataset
my_data = sm.datasets.get_rdataset("dietox", "geepack").data

# make GLM model (Identity is the non-deprecated spelling of the identity link)
my_formula = "Weight ~ Time"
my_family = sm.families.Gaussian(link=sm.families.links.Identity())
model = smf.glm(formula=my_formula, data=my_data, family=my_family)
result = model.fit()

# save model with "remove_data=True"
result.save('test_model.pickle', remove_data=True)
new_result = sm.load('test_model.pickle')
print(new_result.model.data.frame)
```
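One way to find where else the training data may be held is to walk the loaded object's attributes and list every path whose value looks like data. The following audit helper is a hypothetical, standard-library-only sketch and not a statsmodels feature. The predicate you pass decides what counts as data.

```python
from typing import Any, Callable, List, Set


def find_attribute_paths(
    obj: Any,
    predicate: Callable[[Any], bool],
    prefix: str = "result",
    max_depth: int = 5,
) -> List[str]:
    """Return dotted attribute paths below `obj` whose values satisfy `predicate`.

    Only instance attributes stored in `__dict__` are followed (properties and
    the contents of lists/dicts are not inspected), and each intermediate
    object is visited once, so reference cycles cannot recurse forever.
    """
    found: List[str] = []
    seen: Set[int] = set()

    def walk(current: Any, path: str, depth: int) -> None:
        if depth > max_depth or id(current) in seen:
            return
        seen.add(id(current))
        attrs = getattr(current, "__dict__", None)
        if not isinstance(attrs, dict):
            return  # ints, tuples, classes (their __dict__ is a mappingproxy), ...
        for name, value in attrs.items():
            child_path = f"{path}.{name}"
            if predicate(value):
                found.append(child_path)
            else:
                walk(value, child_path, depth + 1)

    walk(obj, prefix, 0)
    return found
```

For the example above, a predicate such as `lambda v: isinstance(v, (pd.DataFrame, pd.Series, np.ndarray))` would list candidate locations. It also flags arrays that are not training data (fitted parameters, for instance), so the output needs manual review.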

|

open

|

2023-07-03T15:24:21Z

|

2023-07-11T06:45:17Z

|

https://github.com/statsmodels/statsmodels/issues/8947

|

[] |

hiroki32

| 1

|

plotly/dash-table

|

plotly

| 716

|

Cell selection does not restore previous cell background after selection

|

I'm using a Dash table component with a custom background color (grey) defined with `style_data_conditional`. When I select any cell of my table, its background color changes to the selection color (hot pink). When I then select another cell, the previously selected cell becomes white instead of the color I defined (grey).

The grey color is restored only if the cell is redrawn, either by a refresh or by a callback that updates the table data (an independent interaction).

I tried this in Firefox and Chrome, using Dash 1.9.0.

I think this is a bug and hope it can be fixed, because it wipes out the custom table style.

Alternatively, it would also be good to have an option to disable the cell selection mechanism.

Thanks

|

open

|

2020-03-06T12:04:25Z

|

2020-03-06T12:04:25Z

|

https://github.com/plotly/dash-table/issues/716

|

[] |

claudioiac

| 0

|

Urinx/WeixinBot

|

api

| 259

|

How is the welcome message for new group members implemented?

|

closed

|

2018-07-11T05:04:37Z

|

2018-07-13T07:44:32Z

|

https://github.com/Urinx/WeixinBot/issues/259

|

[] |

WellJay

| 1

|

|

litestar-org/litestar

|

pydantic

| 3,752

|

Enhancement: Allow multiple lifecycle hooks of each type to be run on any given request

|

### Summary

Only having one lifecycle hook per request makes it impossible to use multiple plugins which both set the same hook, or makes it easy to inadvertently break plugins by overriding a hook that they install and depend on (whether at the root application or a lower layer).

It also places in application code the responsibility of being aware of hooks installed by outer layers, and ensuring that any hooks at inner layers take responsibility for those outer hooks' functionality.

The simplest approach to addressing this is to allow each layer to define a list of hooks, and run all of them for each request (halting when any hook reaches a terminal state, for those hooks where this applies).

### Basic Example

Just as guards are defined as a list (where this list is merged between layers to assemble a final list of guards), under this proposal the same would be true of lifecycle hooks.
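The proposed semantics can be sketched in plain Python. Each layer contributes a list of hooks. The lists are concatenated from outermost to innermost layer, run in order, and stop at the first terminal result. Everything here is hypothetical: `Hook`, `merge_layer_hooks`, and `run_hooks` are illustrative names, not Litestar APIs. "Terminal" is modelled as a hook returning something other than `None`, which is assumed to match how a `before_request` hook that returns a response short-circuits the handler.

```python
from typing import Any, Callable, Iterable, List, Optional, Sequence

# A hook receives the request and returns None to continue, or a value to stop.
Hook = Callable[[Any], Optional[Any]]


def merge_layer_hooks(layers: Sequence[Sequence[Hook]]) -> List[Hook]:
    """Concatenate hook lists from the outermost layer to the innermost."""
    merged: List[Hook] = []
    for layer_hooks in layers:
        merged.extend(layer_hooks)
    return merged


def run_hooks(hooks: Iterable[Hook], request: Any) -> Optional[Any]:
    """Run every hook in order; stop at the first one returning a non-None value."""
    for hook in hooks:
        result = hook(request)
        if result is not None:
            return result  # terminal: the remaining hooks are skipped
    return None
```

Because plugins append to the list rather than replace a single slot, a hook installed at the application layer keeps running even when a route adds its own.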

### Drawbacks and Impact

This is a breaking change from an API perspective: Documentation and test cases would need to be updated, plugins or other code assigning lifecycle hooks would need to be modified.

### Unresolved questions

Compared to the proposal embodied in #3748, this provides less flexibility -- hooks following that proposal can decide to invoke their parents either before or after themselves, or can modify results returned by parent hooks if they so choose.

_However_, with this reduction in flexibility there is also a substantive reduction in responsibility: using that proposal, a hook could inadvertently prevent other hooks from running, most concerningly by way of not simply implementing the newer interface.

|

open

|

2024-09-21T17:50:29Z

|

2025-03-20T15:54:55Z

|

https://github.com/litestar-org/litestar/issues/3752

|

[

"Enhancement"

] |

charles-dyfis-net

| 2

|

pytorch/pytorch

|

deep-learning

| 148,883

|

Pytorch2.7+ROCm6.3 is 34.55% slower than Pytorch2.6+ROCm6.2.4

|

The hardware and software environment is the same; only the PyTorch + ROCm versions differ.

Using ComfyUI to run Hunyuan text-to-video:

- ComfyUI: v0.3.24
- ComfyUI plugin: teacache
- 49 frames
- 480x960
- 20 steps
- CPU: i5-7500
- GPU: AMD 7900XT 20GB
- RAM: 32GB

PyTorch 2.6 + ROCm 6.2.4: time taken 348 seconds, 14.7 s/it

The VAE Decode Tiled node (parameters: 128 64 32 8) takes 55 seconds.

PyTorch 2.7 + ROCm 6.3: time taken 387 seconds **(11.21% slower)**, 15.66 s/it

The VAE Decode Tiled node (parameters: 128 64 32 8) takes 74 seconds **(34.55% slower)**.

In addition, if the VAE node parameters are set to 256 64 64 8 (the default parameters for NVIDIA graphics cards), it takes a very long time and appears to be stuck, but the program does not crash. The same happens with both PyTorch 2.6 and 2.7.
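The percentages above are relative slowdowns against the PyTorch 2.6 + ROCm 6.2.4 baseline, i.e. (new - old) / old x 100. A quick check using only the timings reported in this issue:

```python
def slowdown_percent(old_seconds: float, new_seconds: float) -> float:
    """Relative slowdown of `new_seconds` versus the `old_seconds` baseline, in percent."""
    return (new_seconds - old_seconds) / old_seconds * 100


# Timings from this report (PyTorch 2.6 + ROCm 6.2.4 vs PyTorch 2.7 + ROCm 6.3).
total = slowdown_percent(348, 387)             # whole run
vae_decode = slowdown_percent(55, 74)          # VAE Decode Tiled node
per_iteration = slowdown_percent(14.7, 15.66)  # sampling speed in s/it

print(f"total: {total:.2f}%, VAE decode: {vae_decode:.2f}%, per iteration: {per_iteration:.2f}%")
# prints: total: 11.21%, VAE decode: 34.55%, per iteration: 6.53%
```

This suggests that most of the regression comes from the VAE decode step rather than from the sampling iterations themselves.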

Sorry, I don't know what error message to submit for this discrepancy, but I can help with testing and upload any requested information.

Thank you.

[ComfyUI_running_.json](https://github.com/user-attachments/files/19162936/ComfyUI_running_.json)

cc @jeffdaily @sunway513 @jithunnair-amd @pruthvistony @ROCmSupport @dllehr-amd @jataylo @hongxiayang @naromero77amd

|

open

|

2025-03-10T12:56:34Z

|

2025-03-19T14:14:52Z

|

https://github.com/pytorch/pytorch/issues/148883

|

[

"module: rocm",

"triaged"

] |

testbug5577

| 6

|

igorbenav/fastcrud

|

sqlalchemy

| 15

|

Add Automatic Filters for Auto Generated Endpoints

|

**Is your feature request related to a problem? Please describe.**

I would like to be able to filter the `get_multi` endpoint.

**Describe the solution you'd like**

I would like filtering and searching in the `get_multi` endpoint. It is such a common feature in CRUD systems that I consider it relevant. It is also available in flask-muck.

**Describe alternatives you've considered**

Some out-of-the-box solutions already exist:

https://github.com/arthurio/fastapi-filter (SQLAlchemy + MongoDB)

https://github.com/OleksandrZhydyk/FastAPI-SQLAlchemy-Filters (SQLAlchemy-specific)

**Additional context**


If this will not be implemented, could an example be provided?

|

closed

|

2024-02-04T13:19:43Z

|

2024-05-21T04:14:34Z

|

https://github.com/igorbenav/fastcrud/issues/15

|

[

"enhancement",

"Automatic Endpoint"

] |

AndreGuerra123

| 15

|

pydantic/FastUI

|

fastapi

| 32

|

Toggle switches

|

Similar to [this](https://getbootstrap.com/docs/5.1/forms/checks-radios/#switches), we should allow them as well as checkboxes in forms.

|

closed

|

2023-12-01T19:07:40Z

|

2023-12-04T13:07:19Z

|

https://github.com/pydantic/FastUI/issues/32

|

[

"good first issue",

"New Component"

] |

samuelcolvin

| 2

|

pytest-dev/pytest-html

|

pytest

| 7

|

AttributeError: 'TestReport' object has no attribute 'extra'

|

I tried adding the code below, but got the error `AttributeError: 'TestReport' object has no attribute 'extra'`.

    import pytest
    from pytest_html import extras


    @pytest.hookimpl(hookwrapper=True)
    def pytest_runtest_makereport(item, call):
        # Let pytest build the report first (this replaces the removed __multicall__ API).
        outcome = yield
        report = outcome.get_result()
        # A fresh report has no `extra` attribute, so collect into a local list
        # (pytest-html 4.x prefers `report.extras` over `report.extra`).
        extra = getattr(report, 'extra', [])
        if report.when == 'call':
            xfail = hasattr(report, 'wasxfail')
            if (report.skipped and xfail) or (report.failed and not xfail):
                # TestSetup.selenium is the reporter's own Selenium driver holder
                url = TestSetup.selenium.current_url
                extra.append(extras.url(url))
                screenshot = TestSetup.selenium.get_screenshot_as_base64()
                extra.append(extras.image(screenshot, 'Screenshot'))
                page_source = TestSetup.selenium.page_source
                extra.append(extras.text(page_source, 'HTML'))
                extra.append(extras.html('<div>Additional HTML</div>'))
            report.extra = extra

    INTERNALERROR> Traceback (most recent call last):
    INTERNALERROR>   File "D:\Python27\lib\site-packages\_pytest\main.py", line 84, in wrap_session
    INTERNALERROR>     doit(config, session)
    INTERNALERROR>   File "D:\Python27\lib\site-packages\_pytest\main.py", line 122, in _main
    INTERNALERROR>     config.hook.pytest_runtestloop(session=session)
    ...........................
    pytest_runtest_makereport
    INTERNALERROR>     report.extra.append(extra.text(item._obj.__doc__.strip(), 'HTML'))
    INTERNALERROR> AttributeError: 'TestReport' object has no attribute 'extra'

|

closed

|

2015-05-26T14:27:45Z

|

2015-05-26T15:27:00Z

|

https://github.com/pytest-dev/pytest-html/issues/7

|

[] |

reddypdl

| 1

|

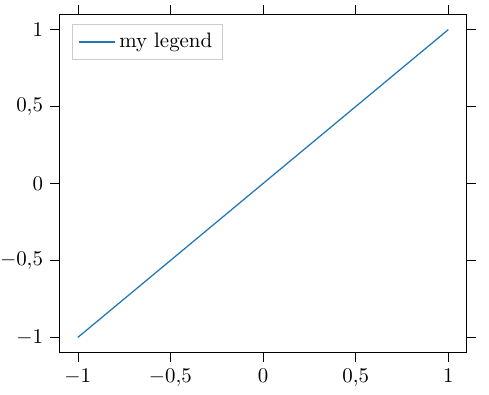

nschloe/tikzplotlib

|

matplotlib

| 401

|

Legend title is not converted to tikz

|

When creating a legend with a title, the title is not converted to TikZ code.

Python code:

```

import matplotlib.pyplot as plt

from tikzplotlib import save as tikz_save

x=[-1,1]

y=[-1,1]

plt.figure()

plt.plot(x,y,label='my legend')

#plt.xlim([1e-3,5])

#plt.ylim([1e-3,1e2])

plt.legend(title='my title')

#plt.savefig("test.png")

tikz_save("test.tikz",encoding ='utf-8')

```

Tikz output:

```

% This file was created by tikzplotlib v0.9.1.

\begin{tikzpicture}

\definecolor{color0}{rgb}{0.12156862745098,0.466666666666667,0.705882352941177}

\begin{axis}[

legend cell align={left},

legend style={fill opacity=0.8, draw opacity=1, text opacity=1, at={(0.03,0.97)}, anchor=north west, draw=white!80!black},

tick align=outside,

tick pos=both,

x grid style={white!69.0196078431373!black},

xmin=-1.1, xmax=1.1,

xtick style={color=black},

y grid style={white!69.0196078431373!black},

ymin=-1.1, ymax=1.1,

ytick style={color=black}

]

\addplot [semithick, color0]

table {%

-1 -1

1 1

};

\addlegendentry{my legend}

\end{axis}

\end{tikzpicture}

```

Expected output:

Obtained output:

|

open

|

2020-04-12T11:30:57Z

|

2023-07-25T12:12:37Z

|

https://github.com/nschloe/tikzplotlib/issues/401

|

[] |

Mpedrosab

| 2

|

kennethreitz/responder

|

graphql

| 323

|

dealing with index.html location not in static directory

|

Responder is a great package, loving it so far!

Wanted to share some feedback on trouble I ran into while trying to serve an SPA, specifically a React SPA bootstrapped with Create React App. The default webpack build scripts put index.html in the parent folder of the static content.

```
/build
|-- index.html
|-- /static
    |-- css/
    |-- js/
    |-- media/
```

Because of this deployed layout, I could not figure out how to point to both the index.html file and the static directory. Is there a way to specify the static directory and index.html separately for the `add_route` method?

For now, my workaround is to move the index.html file into the static folder, but perhaps there is a better way?

Here is my code for responder with the directory arguments:

```python
import responder

api = responder.API(static_dir="../build/static", static_route="/static", templates_dir="../build")
api.add_route("/", static=True)

if __name__ == '__main__':
    api.run(port=3001)
```
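Framework aside, the Create React App layout needs two routing rules. Requests under `/static/` map into `build/static/`, and every other path falls back to `build/index.html` so the client-side router can take over. The following standard-library sketch resolves a URL path to a file under those assumptions. `resolve_spa_path` is hypothetical and not a responder API. It also rejects paths that try to escape the static directory.

```python
from pathlib import Path, PurePosixPath


def resolve_spa_path(url_path: str, build_dir: Path) -> Path:
    """Map an absolute request path to a file in a Create React App `build/` directory.

    /static/... is served from build/static; any other path gets index.html.
    """
    build_dir = build_dir.resolve()
    parts = PurePosixPath(url_path).parts  # e.g. ('/', 'static', 'js', 'main.js')
    if len(parts) > 2 and parts[1] == "static":
        candidate = build_dir.joinpath(*parts[1:]).resolve()
        # Reject traversal such as /static/../../etc/passwd.
        if build_dir / "static" in candidate.parents:
            return candidate
        raise PermissionError(url_path)
    return build_dir / "index.html"
```

Keeping `index.html` where the build puts it and resolving it explicitly avoids having to move files after each build.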

Thanks!

|

closed

|

2019-02-28T23:55:16Z

|

2019-03-10T14:44:13Z

|

https://github.com/kennethreitz/responder/issues/323

|

[

"discussion"

] |

KenanHArik

| 4

|

explosion/spaCy

|

deep-learning

| 12,807

|

Importing spacy (or thinc) breaks dot product

|

A very annoying bug that it took me forever to track down ... I'm at a loss for what might be going on here.

## How to reproduce the behaviour

```

import numpy as np

# works

small = np.random.randn(5, 6)

small.T @ small

# works

larger = np.random.randn(100, 110)

larger.T @ larger

import spacy # or import thinc

# works

small.T @ small

# works

larger + larger

# hangs forever, max CPU usage

larger.T @ larger # larger.T.dot(larger) also hangs

```

## Your Environment

- **spaCy version:** 3.5.3 (also occurs under 3.6.0)

- **Platform:** macOS-13.4.1-x86_64-i386-64bit

- **Python version:** 3.11.4

- **Pipelines:** de_core_news_md (3.5.0), en_core_web_trf (3.5.0), en_core_web_sm (3.5.0), en_core_web_md (3.5.0)

I tried the same thing on another computer (Raspberry Pi) and it worked flawlessly, but on my Macbook, it hangs.

I can move this to the thinc github if you prefer.
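Because the hang locks up the interpreter, one way to bisect which import triggers it is to run each reproduction attempt in a child process with a timeout. The sketch below is a hypothetical diagnostic harness, not something from spaCy or Thinc; `run_matmul` and its return values are illustrative names, and it only assumes NumPy is installed for the interpreter running it.

```python
import subprocess
import sys

# Child script: optionally import a suspect package, then run the matmul that hangs.
CHILD = """
import numpy as np
{pre_import}
larger = np.random.randn(100, 110)
larger.T @ larger
print("done")
"""


def run_matmul(pre_import: str = "", timeout: float = 60.0) -> str:
    """Return 'ok', 'timeout', or 'error' for one isolated reproduction attempt."""
    code = CHILD.format(pre_import=pre_import)
    try:
        result = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child before re-raising, so nothing keeps spinning.
        return "timeout"
    return "ok" if result.returncode == 0 and "done" in result.stdout else "error"
```

Calling `run_matmul()`, `run_matmul("import thinc")` and `run_matmul("import spacy")` in turn would show whether the matmul only hangs after a particular import, without having to kill the main Python session.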

|

closed

|

2023-07-08T22:05:46Z

|

2023-08-19T00:02:07Z

|

https://github.com/explosion/spaCy/issues/12807

|

[

"third-party"

] |

jona-sassenhagen

| 17

|

freqtrade/freqtrade

|

python

| 11,053

|

freqtrade 2024.10: backtesting short trades does not work?

|

```

{

"$schema": "https://schema.freqtrade.io/schema.json",

"max_open_trades": 100,

"stake_currency": "USDT",

"stake_amount": "unlimited",

"tradable_balance_ratio": 0.99,

"fiat_display_currency": "USD",

"dry_run": true,

"dry_run_wallet": 1000,

"cancel_open_orders_on_exit": false,

"trading_mode": "futures",

"margin_mode": "isolated",

"unfilledtimeout": {

"entry": 10,

"exit": 10,

"exit_timeout_count": 0,

"unit": "minutes"

},

"entry_pricing": {

"price_side": "same",

"use_order_book": true,

"order_book_top": 1,

"price_last_balance": 0.0,

"check_depth_of_market": {

"enabled": false,

"bids_to_ask_delta": 1

}

},

"exit_pricing": {

"price_side": "same",

"use_order_book": true,

"order_book_top": 1

},

"exchange": {

"name": "binance",

"key": "",

"secret": "",

"ccxt_config": {

"httpsProxy": "http://127.0.0.1:7890",

"wsProxy": "http://127.0.0.1:7890"

},

"ccxt_async_config": {

"httpsProxy": "http://127.0.0.1:7890",

"wsProxy": "http://127.0.0.1:7890"

},

"pair_whitelist": [

"BTC/USDT:USDT"

],

"pair_blacklist": [

"BNB/.*"

]

},

"pairlists": [

{

"method": "StaticPairList"

}

],

"api_server": {

"enabled": true,

"listen_ip_address": "127.0.0.1",

"listen_port": 8080,

"verbosity": "error",

"enable_openapi": false,

"jwt_secret_key": "cea5c90043a8d876068a86d813c0f510a8cbf28ef6924fae640265f988ebe153",

"ws_token": "NvP7a0z1y3dFAWxVeZqqpIZrTucdG39oiw",

"CORS_origins": [],

"username": "freqtrader",

"password": "1"

},

"bot_name": "freqtrade",

"initial_state": "running",

"force_entry_enable": false,

"internals": {

"process_throttle_secs": 5

}

}

```

```python
from freqtrade.strategy.interface import IStrategy
from pandas import DataFrame
import talib.abstract as ta


class SimpleShortStrategy(IStrategy):
    timeframe = "5m"

    # Indicator parameters
    rsi_period = 14
    cci_period = 20
    short_sma_period = 50
    long_sma_period = 200

    stoploss = -0.05  # Maximum stoploss of 5%

    minimal_roi = {
        "0": 0.10  # Minimum profit target of 10%
    }

    can_short = True

    def populate_indicators(self, dataframe: DataFrame, metadata: dict) -> DataFrame:
        """
        Populate indicators for the strategy.
        """
        # RSI indicator
        dataframe['rsi'] = ta.RSI(dataframe, timeperiod=self.rsi_period)
        # CCI indicator
        dataframe['cci'] = ta.CCI(dataframe, timeperiod=self.cci_period)
        # Short- and long-term moving averages
        dataframe['sma_short'] = ta.SMA(dataframe, timeperiod=self.short_sma_period)
        dataframe['sma_long'] = ta.SMA(dataframe, timeperiod=self.long_sma_period)
        return dataframe

    def populate_entry_trend(self, dataframe: DataFrame, metadata: dict) -> DataFrame:
        """
        Generate entry signals for short trades.
        """
        dataframe.loc[
            (
                (dataframe['sma_short'] < dataframe['sma_long'])
                # (dataframe['rsi'] > 70) &  # RSI overbought signal
                # (dataframe['cci'] > 100)  # CCI suggests the market may reverse
            ),
            'enter_short'
        ] = 1  # Generate short entry signal
        return dataframe

    def populate_exit_trend(self, dataframe: DataFrame, metadata: dict) -> DataFrame:
        """
        Generate exit signals for short trades.
        """
        dataframe.loc[
            (
                (dataframe['sma_short'] > dataframe['sma_long']) |  # Short SMA crosses above long SMA (trend reversal)
                (dataframe['rsi'] < 50)  # RSI returns to a neutral level
            ),
            'exit_short'
        ] = 1  # Generate exit signal
        return dataframe
```

```

freqtrade backtesting -c user_data/hyperopt-futures-5m-btc.json --strategy SimpleShortStrategy --strategy-path user_data/strategies_short/5m --timeframe 5m --timerange 20210301-20230301 --eps

2024-12-07 20:42:21,656 - freqtrade - INFO - freqtrade 2024.10

2024-12-07 20:42:21,884 - numexpr.utils - INFO - NumExpr defaulting to 12 threads.

2024-12-07 20:42:24,538 - freqtrade.configuration.load_config - INFO - Using config: user_data/hyperopt-futures-5m-btc.json ...

2024-12-07 20:42:24,538 - freqtrade.loggers - INFO - Verbosity set to 0

2024-12-07 20:42:24,539 - freqtrade.configuration.configuration - INFO - Using additional Strategy lookup path: user_data/strategies_short/5m

2024-12-07 20:42:24,539 - freqtrade.configuration.configuration - INFO - Parameter -i/--timeframe detected ... Using timeframe: 5m ...

2024-12-07 20:42:24,539 - freqtrade.configuration.configuration - INFO - Parameter --enable-position-stacking detected ...

2024-12-07 20:42:24,539 - freqtrade.configuration.configuration - INFO - Using max_open_trades: 100 ...

2024-12-07 20:42:24,539 - freqtrade.configuration.configuration - INFO - Parameter --timerange detected: 20210301-20230301 ...

2024-12-07 20:42:24,540 - freqtrade.configuration.configuration - INFO - Using user-data directory: E:\others\freqtrade\user_data ...

2024-12-07 20:42:24,541 - freqtrade.configuration.configuration - INFO - Using data directory: E:\others\freqtrade\user_data\data\binance ...

2024-12-07 20:42:24,541 - freqtrade.configuration.configuration - INFO - Overriding timeframe with Command line argument

2024-12-07 20:42:24,541 - freqtrade.configuration.configuration - INFO - Parameter --cache=day detected ...

2024-12-07 20:42:24,541 - freqtrade.configuration.configuration - INFO - Filter trades by timerange: 20210301-20230301

2024-12-07 20:42:24,542 - freqtrade.exchange.check_exchange - INFO - Checking exchange...

2024-12-07 20:42:24,553 - freqtrade.exchange.check_exchange - INFO - Exchange "binance" is officially supported by the Freqtrade development team.

2024-12-07 20:42:24,553 - freqtrade.configuration.configuration - INFO - Using pairlist from configuration.

2024-12-07 20:42:24,553 - freqtrade.configuration.config_validation - INFO - Validating configuration ...

2024-12-07 20:42:24,556 - freqtrade.commands.optimize_commands - INFO - Starting freqtrade in Backtesting mode

2024-12-07 20:42:24,556 - freqtrade.exchange.exchange - INFO - Instance is running with dry_run enabled

2024-12-07 20:42:24,556 - freqtrade.exchange.exchange - INFO - Using CCXT 4.4.24

2024-12-07 20:42:24,557 - freqtrade.exchange.exchange - INFO - Applying additional ccxt config: {'options': {'defaultType': 'swap'}, 'httpsProxy': 'http://127.0.0.1:7890', 'wsProxy': 'http://127.0.0.1:7890'}

2024-12-07 20:42:24,566 - freqtrade.exchange.exchange - INFO - Applying additional ccxt config: {'options': {'defaultType': 'swap'}, 'httpsProxy': 'http://127.0.0.1:7890', 'wsProxy': 'http://127.0.0.1:7890'}

2024-12-07 20:42:24,578 - freqtrade.exchange.exchange - INFO - Using Exchange "Binance"

2024-12-07 20:42:26,831 - freqtrade.resolvers.exchange_resolver - INFO - Using resolved exchange 'Binance'...

2024-12-07 20:42:27,446 - freqtrade.resolvers.iresolver - INFO - Using resolved strategy SimpleShortStrategy from 'E:\others\freqtrade\user_data\strategies_short\5m\SimpleShortStrategy.py'...

2024-12-07 20:42:27,446 - freqtrade.strategy.hyper - INFO - Found no parameter file.

2024-12-07 20:42:27,446 - freqtrade.resolvers.strategy_resolver - INFO - Override strategy 'timeframe' with value in config file: 5m.

2024-12-07 20:42:27,446 - freqtrade.resolvers.strategy_resolver - INFO - Override strategy 'stake_currency' with value in config file: USDT.

2024-12-07 20:42:27,447 - freqtrade.resolvers.strategy_resolver - INFO - Override strategy 'stake_amount' with value in config file: unlimited.

2024-12-07 20:42:27,447 - freqtrade.resolvers.strategy_resolver - INFO - Override strategy 'unfilledtimeout' with value in config file: {'entry': 10, 'exit': 10, 'exit_timeout_count': 0, 'unit': 'minutes'}.

2024-12-07 20:42:27,447 - freqtrade.resolvers.strategy_resolver - INFO - Override strategy 'max_open_trades' with value in config file: 100.

2024-12-07 20:42:27,447 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using minimal_roi: {'0': 0.1}

2024-12-07 20:42:27,447 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using timeframe: 5m

2024-12-07 20:42:27,447 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using stoploss: -0.05

2024-12-07 20:42:27,448 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using trailing_stop: False

2024-12-07 20:42:27,448 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using trailing_stop_positive_offset: 0.0

2024-12-07 20:42:27,448 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using trailing_only_offset_is_reached: False

2024-12-07 20:42:27,448 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using use_custom_stoploss: False

2024-12-07 20:42:27,448 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using process_only_new_candles: True

2024-12-07 20:42:27,448 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using order_types: {'entry': 'limit', 'exit': 'limit', 'stoploss': 'limit', 'stoploss_on_exchange': False, 'stoploss_on_exchange_interval': 60}

2024-12-07 20:42:27,449 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using order_time_in_force: {'entry': 'GTC', 'exit': 'GTC'}

2024-12-07 20:42:27,449 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using stake_currency: USDT

2024-12-07 20:42:27,449 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using stake_amount: unlimited

2024-12-07 20:42:27,449 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using startup_candle_count: 0

2024-12-07 20:42:27,449 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using unfilledtimeout: {'entry': 10, 'exit': 10, 'exit_timeout_count': 0, 'unit': 'minutes'}

2024-12-07 20:42:27,449 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using use_exit_signal: True

2024-12-07 20:42:27,449 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using exit_profit_only: False

2024-12-07 20:42:27,449 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using ignore_roi_if_entry_signal: False

2024-12-07 20:42:27,449 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using exit_profit_offset: 0.0

2024-12-07 20:42:27,449 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using disable_dataframe_checks: False

2024-12-07 20:42:27,449 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using ignore_buying_expired_candle_after: 0

2024-12-07 20:42:27,450 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using position_adjustment_enable: False

2024-12-07 20:42:27,450 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using max_entry_position_adjustment: -1

2024-12-07 20:42:27,450 - freqtrade.resolvers.strategy_resolver - INFO - Strategy using max_open_trades: 100

2024-12-07 20:42:27,450 - freqtrade.configuration.config_validation - INFO - Validating configuration ...

2024-12-07 20:42:27,465 - freqtrade.resolvers.iresolver - INFO - Using resolved pairlist StaticPairList from 'E:\others\freqtrade\freqtrade\plugins\pairlist\StaticPairList.py'...

2024-12-07 20:42:27,473 - freqtrade.optimize.backtesting - INFO - Using fee 0.0500% - worst case fee from exchange (lowest tier).

2024-12-07 20:42:27,660 - freqtrade.optimize.backtesting - INFO - Loading data from 2021-03-01 00:00:00 up to 2023-03-01 00:00:00 (730 days).

2024-12-07 20:42:27,689 - freqtrade.optimize.backtesting - INFO - Dataload complete. Calculating indicators

2024-12-07 20:42:27,691 - freqtrade.data.btanalysis - INFO - Loading backtest result from E:\others\freqtrade\user_data\backtest_results\backtest-result-2024-12-07_20-28-13.json

2024-12-07 20:42:27,692 - freqtrade.optimize.backtesting - INFO - Reusing result of previous backtest for SimpleShortStrategy

Result for strategy SimpleShortStrategy

BACKTESTING REPORT

┏━━━━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━┓

┃ Pair ┃ Trades ┃ Avg Profit % ┃ Tot Profit USDT ┃ Tot Profit % ┃ Avg Duration ┃ Win Draw Loss Win% ┃

┡━━━━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━┩

│ BTC/USDT:USDT │ 0 │ 0.0 │ 0.000 │ 0.0 │ 0:00 │ 0 0 0 0 │

│ TOTAL │ 0 │ 0.0 │ 0.000 │ 0.0 │ 0:00 │ 0 0 0 0 │

└───────────────┴────────┴──────────────┴─────────────────┴──────────────┴──────────────┴────────────────────────┘

LEFT OPEN TRADES REPORT

┏━━━━━━━┳━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━┓

┃ Pair ┃ Trades ┃ Avg Profit % ┃ Tot Profit USDT ┃ Tot Profit % ┃ Avg Duration ┃ Win Draw Loss Win% ┃

┡━━━━━━━╇━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━┩

│ TOTAL │ 0 │ 0.0 │ 0.000 │ 0.0 │ 0:00 │ 0 0 0 0 │

└───────┴────────┴──────────────┴─────────────────┴──────────────┴──────────────┴────────────────────────┘

ENTER TAG STATS

┏━━━━━━━━━━━┳━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━┓

┃ Enter Tag ┃ Entries ┃ Avg Profit % ┃ Tot Profit USDT ┃ Tot Profit % ┃ Avg Duration ┃ Win Draw Loss Win% ┃

┡━━━━━━━━━━━╇━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━┩

│ TOTAL │ 0 │ 0.0 │ 0.000 │ 0.0 │ 0:00 │ 0 0 0 0 │

└───────────┴─────────┴──────────────┴─────────────────┴──────────────┴──────────────┴────────────────────────┘

EXIT REASON STATS

┏━━━━━━━━━━━━━┳━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━┓

┃ Exit Reason ┃ Exits ┃ Avg Profit % ┃ Tot Profit USDT ┃ Tot Profit % ┃ Avg Duration ┃ Win Draw Loss Win% ┃

┡━━━━━━━━━━━━━╇━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━┩

│ TOTAL │ 0 │ 0.0 │ 0.000 │ 0.0 │ 0:00 │ 0 0 0 0 │

└─────────────┴───────┴──────────────┴─────────────────┴──────────────┴──────────────┴────────────────────────┘

MIXED TAG STATS

┏━━━━━━━━━━━┳━━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━┓

┃ Enter Tag ┃ Exit Reason ┃ Trades ┃ Avg Profit % ┃ Tot Profit USDT ┃ Tot Profit % ┃ Avg Duration ┃ Win Draw Loss Win% ┃

┡━━━━━━━━━━━╇━━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━┩

│ TOTAL │ │ 0 │ 0.0 │ 0.000 │ 0.0 │ 0:00 │ 0 0 0 0 │

└───────────┴─────────────┴────────┴──────────────┴─────────────────┴──────────────┴──────────────┴────────────────────────┘

No trades made. Your starting balance was 1000 USDT, and your stake was unlimited.

Backtested 2021-03-01 00:00:00 -> 2023-03-01 00:00:00 | Max open trades : 1

STRATEGY SUMMARY

┏━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━┓

┃ Strategy ┃ Trades ┃ Avg Profit % ┃ Tot Profit USDT ┃ Tot Profit % ┃ Avg Duration ┃ Win Draw Loss Win% ┃ Drawdown ┃

┡━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━┩

│ SimpleShortStrategy │ 0 │ 0.00 │ 0.000 │ 0.0 │ 0:00 │ 0 0 0 0 │ 0 USDT 0.00% │

└─────────────────────┴────────┴──────────────┴─────────────────┴──────────────┴──────────────┴────────────────────────┴───────────────┘

```
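To check whether the entry condition can fire at all, independently of freqtrade, the same SMA comparison can be computed with plain pandas. This is a sketch under stated assumptions: `pandas.Series.rolling(...).mean()` stands in for `ta.SMA` (both are simple moving averages), the price series is synthetic, and `short_entry_signal` is a hypothetical helper. It also shows why the first 199 candles can never signal, which is relevant because the log reports `startup_candle_count: 0` while the long SMA needs 200 candles.

```python
import numpy as np
import pandas as pd


def short_entry_signal(close: pd.Series, short: int = 50, long: int = 200) -> pd.Series:
    """Mark candles where the short SMA is below the long SMA (1 = enter short)."""
    sma_short = close.rolling(short).mean()
    sma_long = close.rolling(long).mean()
    # Until `long` candles are available, sma_long is NaN and the comparison is False,
    # so the first `long - 1` candles can never produce a signal.
    return (sma_short < sma_long).astype(int)


# Synthetic, steadily falling close prices: a downtrend should trigger short entries.
close = pd.Series(np.linspace(300.0, 1.0, 500))
signal = short_entry_signal(close)
print(signal.sum())  # 301
print(signal.iloc[:199].sum())  # 0
```

The log above also says `Reusing result of previous backtest for SimpleShortStrategy`, which comes from the `--cache` setting (`--cache=day` was detected); rerunning with `--cache none` would rule out a stale cached result.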

|

closed

|

2024-12-07T12:46:38Z

|

2024-12-10T03:21:43Z

|

https://github.com/freqtrade/freqtrade/issues/11053

|

[

"Question"

] |

Toney

| 2

|

taverntesting/tavern

|

pytest

| 791

|

How to include a saved value in the next stage's request URL

|

We have a service with the URL https://www.domain.com/parallel/tests/uuid

I would like to run the test stage (name: Verify tests get API) with the saved value `newtest_uuid`.

How can I use `newtest_uuid` in the second stage?

```
includes:
  - !include includes.yaml

stages:
  - name: Verify tests post API
    request:
      url: "{HOST:s}/parallel/tests/"
      method: POST
      json:
        name: 'co-test-9'
        apptype: 'parallel'
        user: 'wangxi'
        product: 'centos'
        build: 'ob-123'
        resolution: '1600x1200'
        start_url: 'https://www.github.com/'
        locales: "['192.168.0.1']"
    response:
      strict:
        - json:off
      status_code: 200
      save:
        json:
          newtest_uuid: "testcase.uuid"

  - name: Verify tests get API
    request:
      url: "{HOST:s}/parallel/tests/{newtest_uuid}"
      method: GET
    response:
      strict:
        - json:off
      status_code: 200
```

I tried the following, but it doesn't work and produces errors:

```
- name: Verify tests get API
  request:
    url: !force_format_include"{HOST}/parallel/tests/{newtest_uuid}"
    method: GET
  response:
    strict:
      - json:off
    status_code: 200
```

How can I use the saved `newtest_uuid` in the second stage?

Thanks
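As I understand Tavern's documentation, request fields are formatted with Python's `str.format` syntax (hence `{HOST:s}`), using variables from included files and from earlier `save` blocks. The plain-Python sketch below only models that substitution step with a dict; it is not Tavern's implementation, and the host and UUID values are placeholders.

```python
# Variables visible to the second stage: HOST from includes.yaml and
# newtest_uuid saved by the first stage. Both values are placeholders.
variables = {"HOST": "https://www.domain.com", "newtest_uuid": "0f1e2d3c"}

url_template = "{HOST:s}/parallel/tests/{newtest_uuid}"
print(url_template.format(**variables))  # https://www.domain.com/parallel/tests/0f1e2d3c

# If the first stage never actually saved the key (for example because the
# JSON path did not match the response), formatting fails on the missing name.
try:
    url_template.format(HOST="https://www.domain.com")
except KeyError as missing:
    print("missing variable:", missing)
```

In other words, the plain `"{HOST:s}/parallel/tests/{newtest_uuid}"` form from the first attempt is the shape the substitution expects; if it fails, checking that the `save` path really matches the first response is a reasonable next step.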

|

closed

|

2022-06-15T09:19:00Z

|

2022-06-16T03:09:09Z

|

https://github.com/taverntesting/tavern/issues/791

|

[] |

colinshin

| 2

|

python-restx/flask-restx

|

api

| 399

|

Opening the Swagger page for the first time is very slow

|

After updating to Flask 2.0.1 and flask-restx 0.5, the first time the Swagger page is opened after `app.run()` it is very slow: it takes anywhere from a few tens of seconds to a few minutes to load. The problem seems to be caused by the large number of registered routes; when I register only a few routes, it does not occur. Why is there such a big difference between versions 0.5 and 0.3? Version 0.3 does not have this problem. What exactly causes it? Can anyone tell me?

|

open

|

2021-12-27T10:18:46Z

|

2021-12-27T10:18:46Z

|

https://github.com/python-restx/flask-restx/issues/399

|

[

"question"

] |

sbigtree

| 0

|

AUTOMATIC1111/stable-diffusion-webui

|

pytorch

| 16,031

|

[Bug]: cannot load SD2 checkpoint after performance update in dev branch

|

After the large performance update in the dev branch, it is no longer possible to load an SD2 model, while it still works on the master branch:

```

RuntimeError: mat1 and mat2 must have the same dtype, but got Half and Float

```

```

Loading weights [3f067a1b94] from /home/user/ai-apps/stable-diffusion-webui/models/Stable-diffusion/sd_v2-1_turbo.safetensors

loading stable diffusion model: RuntimeError

Traceback (most recent call last):

File "/usr/lib/python3.11/threading.py", line 1002, in _bootstrap

self._bootstrap_inner()

File "/usr/lib/python3.11/threading.py", line 1045, in _bootstrap_inner

self.run()

File "/usr/lib/python3.11/threading.py", line 982, in run

self._target(*self._args, **self._kwargs)

File "/home/user/ai-apps/stable-diffusion-webui/modules/initialize.py", line 149, in load_model

shared.sd_model # noqa: B018

File "/home/user/ai-apps/stable-diffusion-webui/modules/shared_items.py", line 175, in sd_model

return modules.sd_models.model_data.get_sd_model()

File "/home/user/ai-apps/stable-diffusion-webui/modules/sd_models.py", line 648, in get_sd_model

load_model()

File "/home/user/ai-apps/stable-diffusion-webui/modules/sd_models.py", line 736, in load_model

checkpoint_config = sd_models_config.find_checkpoint_config(state_dict, checkpoint_info)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/modules/sd_models_config.py", line 119, in find_checkpoint_config

return guess_model_config_from_state_dict(state_dict, info.filename)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/modules/sd_models_config.py", line 91, in guess_model_config_from_state_dict

elif is_using_v_parameterization_for_sd2(sd):

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/modules/sd_models_config.py", line 64, in is_using_v_parameterization_for_sd2

out = (unet(x_test, torch.asarray([999], device=device), context=test_cond) - x_test).mean().item()

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1518, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1527, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/modules/sd_unet.py", line 91, in UNetModel_forward

return original_forward(self, x, timesteps, context, *args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/repositories/stable-diffusion-stability-ai/ldm/modules/diffusionmodules/openaimodel.py", line 789, in forward

emb = self.time_embed(t_emb)

^^^^^^^^^^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1518, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1527, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/venv/lib/python3.11/site-packages/torch/nn/modules/container.py", line 215, in forward

input = module(input)

^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1518, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/venv/lib/python3.11/site-packages/torch/nn/modules/module.py", line 1527, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/extensions-builtin/Lora/networks.py", line 527, in network_Linear_forward

return originals.Linear_forward(self, input)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/user/ai-apps/stable-diffusion-webui/venv/lib/python3.11/site-packages/torch/nn/modules/linear.py", line 114, in forward

return F.linear(input, self.weight, self.bias)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

RuntimeError: mat1 and mat2 must have the same dtype, but got Half and Float

Stable diffusion model failed to load

```

|

open

|

2024-06-16T15:03:44Z

|

2024-06-23T15:23:07Z

|

https://github.com/AUTOMATIC1111/stable-diffusion-webui/issues/16031

|

[

"bug-report"

] |

light-and-ray

| 5

|

dask/dask

|

scikit-learn

| 10,949

|

Issue repartitioning a time series by frequency when loaded from parquet file

|

**Describe the issue**:

When loading a parquet file that has a datetime index, I can't repartition based on frequency, getting the following error:

```

Traceback (most recent call last):

File "/Users/.../gitrepos/dask-exp/test_dask_issue.py", line 19, in <module>

df2 = df2.repartition(freq="1D")

File "/Users/.../miniconda3/envs/dask/lib/python3.10/site-packages/dask_expr/_collection.py", line 1184, in repartition

raise TypeError("Can only repartition on frequency for timeseries")

TypeError: Can only repartition on frequency for timeseries

```

This happens even though the index of the dataframe loaded from the parquet file has `datetime64[ns]` dtype.

Note that the dataframe produced by the time series generator can be repartitioned by frequency.

**Minimal Complete Verifiable Example**:

```python

import dask

dask.config.set({'dataframe.query-planning': True})

import dask.dataframe as dd

df1 = dask.datasets.timeseries(

start="2000",

end="2001",

freq="1h",

seed=1,

)

df1 = df1.repartition(freq="1ME")

df1.to_parquet("test")

df2 = dd.read_parquet(

"test/*parquet",

index="timestamp",

columns=["x", "y"]

)

print(df2.index.dtype)

df2 = df2.repartition(freq="1D")

print(df2.compute())

```

**Anything else we need to know?**:

I want to repartition data loaded from parquet for efficient time-series-based queries. The default partitioning uses more memory than needed.

**Environment**:

- Dask version: 2024.2.1

- Python version: 3.10.13

- Operating System: Mac OSX

- Install method (conda, pip, source): pip
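One plausible explanation (an assumption; the issue does not confirm it) is that `dd.read_parquet` returns unknown divisions unless asked to compute them, for example with its `calculate_divisions=True` option, while frequency-based repartitioning needs known timestamp divisions to work from. The pandas-only sketch below is a simplified model of that step, loosely based on how the legacy `dask.dataframe` code derived frequency boundaries; `freq_divisions` is a hypothetical helper, not a dask function.

```python
import pandas as pd


def freq_divisions(divisions, freq="1D"):
    """Hypothetical helper: new partition boundaries at a fixed frequency.

    `divisions` follows dask's convention of sorted index boundaries; unknown
    divisions are represented as a tuple of None values.
    """
    first, last = divisions[0], divisions[-1]
    if not isinstance(first, pd.Timestamp):
        # Unknown (None) divisions end up here, mirroring the reported error.
        raise TypeError("Can only repartition on frequency for timeseries")
    new = list(pd.date_range(start=first.ceil(freq), end=last, freq=freq))
    # Keep the original endpoints so every row still falls inside some partition.
    if not new or new[0] != first:
        new.insert(0, first)
    if new[-1] != last:
        new.append(last)
    return new


known = (pd.Timestamp("2000-01-01 00:00"), pd.Timestamp("2000-01-03 12:00"))
print(freq_divisions(known))  # boundaries at 01-01, 01-02, 01-03 and the 12:00 endpoint

try:
    freq_divisions((None, None))
except TypeError as err:
    print(err)
```

If this is the cause, passing `calculate_divisions=True` to `dd.read_parquet` (and checking `df2.known_divisions`) before calling `repartition(freq="1D")` would be the first thing to try.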

|

open

|

2024-02-24T18:30:24Z

|

2024-04-04T12:56:22Z

|

https://github.com/dask/dask/issues/10949

|

[

"dataframe"

] |

pvaezi

| 5

|

grillazz/fastapi-sqlalchemy-asyncpg

|

pydantic

| 98

|

SQLAlchemy Engine disposal

|

I've come across your project and I really appreciate what you've done.

I have a question that has bugged me for a long time, and I have never seen any project address it, including yours.

When you create an `AsyncEngine`, you are supposed to [dispose](https://docs.sqlalchemy.org/en/13/core/connections.html#engine-disposal) of it, ideally in the `shutdown` event. However, I never see anyone doing this in practice. Is it because you rely on Python's garbage collector to clean it up for you?
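For reference, FastAPI accepts a `lifespan` async context manager (`FastAPI(lifespan=lifespan)`) whose code after `yield` runs at shutdown, and SQLAlchemy's `AsyncEngine.dispose()` is a coroutine that closes the pooled connections. To keep the sketch runnable without FastAPI, SQLAlchemy, or a database, `StubAsyncEngine` is a hypothetical stand-in and `simulate_app_run` imitates what the framework does around the app's lifetime; this is not code from this project.

```python
import asyncio
from contextlib import asynccontextmanager


class StubAsyncEngine:
    """Hypothetical stand-in for sqlalchemy.ext.asyncio.AsyncEngine (no database needed)."""

    def __init__(self) -> None:
        self.disposed = False

    async def dispose(self) -> None:
        # The real AsyncEngine.dispose() closes the connections held by the pool.
        self.disposed = True


engine = StubAsyncEngine()  # in a real app: create_async_engine(<database URL>)


@asynccontextmanager
async def lifespan(app):
    # Startup work (if any) goes before the yield.
    yield
    # Shutdown: release pooled connections explicitly instead of relying on the GC.
    await engine.dispose()


async def simulate_app_run() -> None:
    # A framework such as FastAPI enters and exits this context around the app's lifetime.
    async with lifespan(app=None):
        pass


asyncio.run(simulate_app_run())
print(engine.disposed)  # True
```

With the older event-handler style the issue mentions, the equivalent would be awaiting `engine.dispose()` inside a `shutdown` handler.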

|

closed

|

2023-07-13T19:17:29Z

|

2023-07-20T08:25:35Z

|

https://github.com/grillazz/fastapi-sqlalchemy-asyncpg/issues/98

|

[] |

hmbui-noze

| 1

|

pydantic/pydantic

|

pydantic

| 11,335

|

Unable to pip install pydantic

|

Hi,

I was trying to set up a repository from source that requires `pydantic >= 2.9.0`, but I keep getting the following error:

<img width="917" alt="Image" src="https://github.com/user-attachments/assets/e0410efc-5fb7-494e-80e4-96231fff1489" />

I checked my internet connection a couple of times just before posting this issue. Can you check if the new release was deployed correctly? Or is anyone else facing this issue?

|

closed

|

2025-01-24T04:27:27Z

|

2025-01-24T04:51:19Z

|

https://github.com/pydantic/pydantic/issues/11335

|

[] |

whiz-Tuhin

| 1

|

allenai/allennlp

|

data-science

| 4,855

|

Models: missing None check in PrecoReader's text_to_instance method.

|

<!--

Please fill this template entirely and do not erase any of it.

We reserve the right to close without a response bug reports which are incomplete.

If you have a question rather than a bug, please ask on [Stack Overflow](https://stackoverflow.com/questions/tagged/allennlp) rather than posting an issue here.

-->

## Checklist

<!-- To check an item on the list replace [ ] with [x]. -->

- [x] I have verified that the issue exists against the `master` branch of AllenNLP.

- [x] I have read the relevant section in the [contribution guide](https://github.com/allenai/allennlp/blob/master/CONTRIBUTING.md#bug-fixes-and-new-features) on reporting bugs.

- [x] I have checked the [issues list](https://github.com/allenai/allennlp/issues) for similar or identical bug reports.

- [x] I have checked the [pull requests list](https://github.com/allenai/allennlp/pulls) for existing proposed fixes.

- [x] I have checked the [CHANGELOG](https://github.com/allenai/allennlp/blob/master/CHANGELOG.md) and the [commit log](https://github.com/allenai/allennlp/commits/master) to find out if the bug was already fixed in the master branch.

- [x] I have included in the "Description" section below a traceback from any exceptions related to this bug.

- [x] I have included in the "Related issues or possible duplicates" section below all related issues and possible duplicate issues (If there are none, check this box anyway).

- [x] I have included in the "Environment" section below the name of the operating system and Python version that I was using when I discovered this bug.

- [x] I have included in the "Environment" section below the output of `pip freeze`.

- [x] I have included in the "Steps to reproduce" section below a minimally reproducible example.

## Description

Hi,

I think a `None` check is missing at that [line](https://github.com/allenai/allennlp-models/blob/ea1f71c79c329db1b66d9db79f0eaa39d2fd2857/allennlp_models/coref/dataset_readers/preco.py#L94) in `PrecoReader`.

According to the function argument list, and the subsequent call to `make_coref_instance`, `clusters` should be allowed to be `None`.

A typical use case is inference, where no information about the clusters is available.
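The guard being asked for can be illustrated with a standalone sketch. `clusters_to_document_spans` is a hypothetical function, not the actual `PrecoReader.text_to_instance` code; the `(sentence index, start, end)` mention layout is an assumption, and the sketch does not adjust inclusive or exclusive span ends.

```python
from typing import List, Optional, Sequence, Tuple

# Assumed mention layout: (sentence index, start token, end token) within that sentence.
Mention = Tuple[int, int, int]


def clusters_to_document_spans(
    sentences: Sequence[Sequence[str]],
    gold_clusters: Optional[Sequence[Sequence[Mention]]] = None,
) -> Optional[List[List[Tuple[int, int]]]]:
    """Hypothetical helper: convert per-sentence mentions to document-level token spans."""
    if gold_clusters is None:
        # Inference: no gold clusters, so there is nothing to convert.
        return None
    # Token offset at which each sentence starts in the flattened document.
    offsets = [0]
    for sentence in sentences[:-1]:
        offsets.append(offsets[-1] + len(sentence))
    return [
        [(offsets[sent] + start, offsets[sent] + end) for sent, start, end in cluster]
        for cluster in gold_clusters
    ]


sentences = [["Night", "you", "."], ["Subdue", "creepeth", "cattle"]]
print(clusters_to_document_spans(sentences))  # None
print(clusters_to_document_spans(sentences, [[(0, 0, 1), (1, 2, 3)]]))  # [[(0, 1), (5, 6)]]
```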

<details>

<summary><b>Python traceback:

</b></summary>

<p>

<!-- Paste the traceback from any exception (if there was one) in between the next two lines below -->

```

Traceback (most recent call last):

File "/home/fco/coreference/bug.py", line 15, in <module>

instance = reader.text_to_instance(sentences)

File "/home/fco/anaconda3/envs/coref/lib/python3.8/site-packages/allennlp_models/coref/dataset_readers/preco.py", line 94, in text_to_instance

for cluster in gold_clusters:

TypeError: 'NoneType' object is not iterable

```

</p>

</details>

## Related issues or possible duplicates

- None

## Environment

<!-- Provide the name of operating system below (e.g. OS X, Linux) -->

OS: Ubuntu 18.04.3 LTS

<!-- Provide the Python version you were using (e.g. 3.7.1) -->

Python version: 3.8.5

<details>

<summary><b>Output of <code>pip freeze</code>:</b></summary>

<p>

<!-- Paste the output of `pip freeze` in between the next two lines below -->

```

absl-py==0.11.0

allennlp==1.2.0

allennlp-models==1.2.0

attrs==20.3.0

blis==0.4.1

boto3==1.16.14

botocore==1.19.14

cachetools==4.1.1

catalogue==1.0.0

certifi==2020.6.20

chardet==3.0.4

click==7.1.2

conllu==4.2.1

cymem==2.0.4

en-core-web-sm @ https://github.com/explosion/spacy-models/releases/download/en_core_web_sm-2.3.1/en_core_web_sm-2.3.1.tar.gz

filelock==3.0.12

ftfy==5.8

future==0.18.2

google-auth==1.23.0

google-auth-oauthlib==0.4.2

grpcio==1.33.2

h5py==3.1.0

idna==2.10

importlib-metadata==2.0.0

iniconfig==1.1.1

jmespath==0.10.0

joblib==0.17.0

jsonnet==0.16.0

jsonpickle==1.4.1

Markdown==3.3.3

murmurhash==1.0.4

nltk==3.5

numpy==1.19.4

oauthlib==3.1.0

overrides==3.1.0

packaging==20.4

pandas==1.1.4

plac==1.1.3

pluggy==0.13.1

preshed==3.0.4

protobuf==3.13.0

py==1.9.0

py-rouge==1.1

pyasn1==0.4.8

pyasn1-modules==0.2.8

pyconll==2.3.3

pyparsing==2.4.7

PySocks==1.7.1

pytest==6.1.2

python-dateutil==2.8.1

pytz==2020.4

regex==2020.10.28

requests==2.24.0

requests-oauthlib==1.3.0

rsa==4.6

s3transfer==0.3.3

sacremoses==0.0.43

scikit-learn==0.23.2

scipy==1.5.4

sentencepiece==0.1.94

six==1.15.0

spacy==2.3.2

srsly==1.0.3

tensorboard==2.4.0

tensorboard-plugin-wit==1.7.0

tensorboardX==2.1

thinc==7.4.1

threadpoolctl==2.1.0

tokenizers==0.9.2

toml==0.10.2

torch==1.7.0

tqdm==4.51.0

transformers==3.4.0

tweepy==3.9.0

typing==3.7.4.3

typing-extensions==3.7.4.3

urllib3==1.25.11

wasabi==0.8.0

wcwidth==0.2.5

Werkzeug==1.0.1

word2number==1.1

zipp==3.4.0

```

</p>

</details>

## Steps to reproduce

<details>

<summary><b>Example source:</b></summary>

<p>

<!-- Add a fully runnable example in between the next two lines below that will reproduce the bug -->

```python

import spacy

from allennlp.data.token_indexers import SingleIdTokenIndexer, TokenCharactersIndexer

from allennlp_models.coref import PrecoReader

my_text = "Night you. Subdue creepeth cattle creeping living lesser."

sp = spacy.load("en_core_web_sm")

doc = sp(my_text)

sentences = [[token.text for token in sent] for sent in doc.sents]

reader = PrecoReader(max_span_width=10, token_indexers={"tokens": SingleIdTokenIndexer(),

"token_characters": TokenCharactersIndexer()})

instance = reader.text_to_instance(sentences)

```

</p>

</details>

|

closed

|

2020-12-09T14:16:36Z

|

2020-12-10T20:30:06Z

|

https://github.com/allenai/allennlp/issues/4855

|

[

"bug"

] |

frcnt

| 0

|

jumpserver/jumpserver

|

django

| 14,140

|

[Feature] LDAP login when the initial password must be changed

|

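### Product version

v3.10.9

### Edition

- [ ] Community Edition

- [X] Enterprise Edition

- [ ] Enterprise Trial

### Installation method

- [ ] Online installation (one-command install)

- [ ] Offline package installation

- [ ] All-in-One

- [ ] 1Panel

- [ ] Kubernetes

- [ ] Installation from source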

### ⭐️ 需求描述

ldap认证如果域控账号设置了初始密码,登录之后需要修改初始密码,我登录其他用域控密码的系统都会提示修改初始密码,同时弹出个修改密码的框,可是我登录堡垒机的时候没提示我修改初始密码,就直接提示密码错误,这个能不能改一下,让堡垒机登录域控账号的时候提示修改初始密码,同时弹出修改密码的框

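### Proposed solution

For LDAP integrations where the initial password must be changed, could a password-change entry point be provided? Otherwise authentication fails and the user cannot log in.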
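Active Directory reports why a bind failed through a hex sub-code in the diagnostic message of an `invalidCredentials` (49) result, for example `data 773` when the user must change the password at next logon, `data 532` when it has expired, and `data 52e` for a genuinely wrong password. A minimal sketch of how a login flow could tell these cases apart instead of always reporting "wrong password", assuming the raw AD diagnostic string is available (how JumpServer surfaces it is not covered here); the function names and labels are hypothetical, not JumpServer code:

```python
import re
from typing import Optional

# Well-known Active Directory bind sub-codes (hex) mapped to illustrative
# labels; the labels are hypothetical, not JumpServer identifiers.
AD_BIND_SUBCODES = {
    "525": "user_not_found",
    "52e": "invalid_credentials",
    "530": "logon_time_restricted",
    "531": "workstation_restricted",
    "532": "password_expired",
    "533": "account_disabled",
    "701": "account_expired",
    "773": "must_change_password",
    "775": "account_locked",
}

# AD embeds the sub-code as "..., data 773, ..." in the diagnostic message.
_SUBCODE_RE = re.compile(r"\bdata ([0-9a-fA-F]+)\b")


def classify_ad_bind_error(diagnostic: str) -> Optional[str]:
    """Return a label for an AD bind failure message, or None if unrecognised."""
    match = _SUBCODE_RE.search(diagnostic)
    if match is None:
        return None
    return AD_BIND_SUBCODES.get(match.group(1).lower())


def should_prompt_password_change(diagnostic: str) -> bool:
    # Both "must change at next logon" and "expired" call for a
    # change-password dialog rather than a plain "wrong password" error.
    return classify_ad_bind_error(diagnostic) in {"must_change_password", "password_expired"}


if __name__ == "__main__":
    msg = ("80090308: LdapErr: DSID-0C09044E, comment: AcceptSecurityContext "
           "error, data 773, v4563")
    print(classify_ad_bind_error(msg))         # must_change_password
    print(should_prompt_password_change(msg))  # True
```

Detecting the condition is only the first half; actually performing the password change against the directory is a separate step not shown here.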

### Additional information

_No response_

|

closed

|

2024-09-13T06:36:14Z

|

2024-10-10T05:56:26Z

|

https://github.com/jumpserver/jumpserver/issues/14140

|

[

"⭐️ Feature Request"

] |

guoheng888

| 1

|

microsoft/unilm

|

nlp

| 1,523

|

All download links fail: pre-trained vqkd_encoder weights for beit2

|

Please refer to https://github.com/microsoft/unilm/blob/master/beit2/TOKENIZER.md

|

closed

|

2024-04-12T13:40:18Z

|

2024-04-12T18:08:14Z

|

https://github.com/microsoft/unilm/issues/1523

|

[] |

JoshuaChou2018

| 2

|

NVIDIA/pix2pixHD

|

computer-vision

| 206

|

THCTensorScatterGather.cu line=380 error=59 : device-side assert triggered when using labels and nyuv2 dataset

|

Hello,

I trained a model on RGB images using the train folders train_A and train_B without any issues.

Now I want to use the NYUv2 dataset, with the label maps as input and the RGB images as output.

I extracted the label images so that each pixel holds its class label, for a total of 985 classes (including 0 for "no class").

I set up the images as `datasets/nyuv2/train_img/0.png` and `datasets/nyuv2/train_label/0.png`.

However, training fails: I keep getting an error when the labels are one-hot encoded. I run the following command:

`CUDA_LAUNCH_BLOCKING=1 python train.py --label_nc 895 --name nyuv2 --dataroot ./datasets/nyuv2 --save_epoch_freq 5 --loadSize 640 --instance_feat --netG global`

And the error:

    /pytorch/aten/src/THC/THCTensorScatterGather.cu:188: void THCudaTensor_scatterFillKernel(TensorInfo<Real, IndexType>, TensorInfo<long, IndexType>, Real, int, IndexType) [with IndexType = unsigned int, Real = float, Dims = -1]: block: [22,0,0], thread: [127,0,0] Assertion `indexValue >= 0 && indexValue < tensor.sizes[dim]` failed.
    THCudaCheck FAIL file=/pytorch/aten/src/THC/generic/THCTensorScatterGather.cu line=380 error=59 : device-side assert triggered
    Traceback (most recent call last):
      File "train.py", line 71, in <module>
        Variable(data['image']), Variable(data['feat']), infer=save_fake)
      File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 547, in __call__
        result = self.forward(*input, **kwargs)
      File "/opt/conda/lib/python3.7/site-packages/torch/nn/parallel/data_parallel.py", line 150, in forward
        return self.module(*inputs[0], **kwargs[0])
      File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 547, in __call__
        result = self.forward(*input, **kwargs)
      File "/home/juancevedo/pix2pixHD/models/pix2pixHD_model.py", line 158, in forward
        input_label, inst_map, real_image, feat_map = self.encode_input(label, inst, image, feat)
      File "/home/juancevedo/pix2pixHD/models/pix2pixHD_model.py", line 122, in encode_input
        input_label = input_label.scatter_(1, label_map.data.long().cuda(), 1.0)
    RuntimeError: cuda runtime error (59) : device-side assert triggered at /pytorch/aten/src/THC/generic/THCTensorScatterGather.cu:380

Here are two sample images:

Label image:

Train image:

I suspect something changed in newer PyTorch versions, but I don't know how to fix it.

Any help is appreciated. Thank you.
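The failed assertion says that an index passed to `scatter_` along dimension 1 fell outside `[0, size)`. For a one-hot encoding of a label map, that means some pixel value is greater than or equal to the number of one-hot channels. Assuming, as the `scatter_(1, label_map..., 1.0)` call in the traceback suggests, that the channel dimension has `--label_nc` entries, the label maps can be checked on the CPU before training. Note that the text mentions 985 classes while the command passes `--label_nc 895`; if the maps really contain ids up to 984, ids 895 to 984 would fail exactly this check. A minimal pure-Python sketch, per image and ignoring the batch dimension, with label maps as nested lists of ints (in practice the values would be read from the label PNGs); the function names are hypothetical:

```python
from typing import List, Sequence

# A label map is an H x W grid of integer class ids (a stand-in for the
# pixel values of datasets/nyuv2/train_label/*.png).
LabelMap = Sequence[Sequence[int]]


def out_of_range_labels(label_map: LabelMap, label_nc: int) -> List[int]:
    """Return the sorted distinct label values that are not valid channel indices."""
    return sorted({v for row in label_map for v in row if v < 0 or v >= label_nc})


def one_hot(label_map: LabelMap, label_nc: int) -> List[List[List[float]]]:
    """One-hot encode an H x W label map into a label_nc x H x W grid.

    Mirrors scatter_(1, labels, 1.0) on a zero tensor, but raises a readable
    ValueError on the CPU instead of a CUDA device-side assert.
    """
    bad = out_of_range_labels(label_map, label_nc)
    if bad:
        raise ValueError(f"label values {bad} are outside [0, {label_nc})")
    height, width = len(label_map), len(label_map[0])
    encoded = [[[0.0] * width for _ in range(height)] for _ in range(label_nc)]
    for y, row in enumerate(label_map):
        for x, v in enumerate(row):
            encoded[v][y][x] = 1.0  # set channel v at pixel (y, x)
    return encoded


if __name__ == "__main__":
    # A class id of 984 cannot be encoded with 895 channels.
    print(out_of_range_labels([[0, 3], [984, 2]], label_nc=895))  # [984]
```

Running `out_of_range_labels` over every training label map with the intended `label_nc` gives a quick yes/no answer before any GPU work starts.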

|

open

|

2020-07-01T21:55:43Z

|

2020-07-26T19:35:14Z

|

https://github.com/NVIDIA/pix2pixHD/issues/206

|

[] |

entrpn

| 4

|

waditu/tushare

|

pandas

| 1,515

|

hk_hold: problem with the interface's call-rate limit

|

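With 120 points I get the error below, and after raising my points to 620 I still get the same error. My code already waits 32 seconds between calls!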

    for day in days:
        print(day)
        df = pro.hk_hold(trade_date=day.replace('-', ''))
        if not df.empty:
            df.to_csv(filename, mode='a', index=False, header=h_flag)

            if h_flag:
                h_flag = False
        if day != days[-1]:
            time.sleep(32)  # the API allows only 2 calls per minute

Exception: Sorry, you can access this interface at most 2 times per minute. For details on permissions, see: https://tushare.pro/document/1?doc_id=108.
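Sleeping a fixed 32 seconds after each call only bounds the gap between consecutive calls in this loop. If the server counts calls in a sliding 60-second window (its exact accounting is not documented in this issue, so this is an assumption), any other call to the same interface inside that window, or a little clock skew, can still push a request over the limit. A minimal sketch of a client-side sliding-window limiter with a safety margin; `SlidingWindowLimiter` is a hypothetical helper, not part of tushare, and the clock and sleep functions are injectable so it can be tested without waiting:

```python
import time
from collections import deque
from typing import Callable, Deque


class SlidingWindowLimiter:
    """Allow at most max_calls within any (window + margin)-second span."""

    def __init__(self, max_calls: int, window: float, margin: float = 1.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.max_calls = max_calls
        self.window = window + margin  # extra slack for latency and clock skew
        self.clock = clock
        self.sleep = sleep
        self.calls: Deque[float] = deque()  # timestamps of recent calls

    def wait(self) -> None:
        """Block until one more call fits in the window, then record it."""
        now = self.clock()
        # Forget calls that have already left the window.
        while self.calls and now - self.calls[0] >= self.window:
            self.calls.popleft()
        if len(self.calls) >= self.max_calls:
            # Sleep until the oldest recorded call leaves the window.
            self.sleep(self.window - (now - self.calls[0]))
            now = self.clock()
            self.calls.popleft()
        self.calls.append(now)
```

In the loop from the issue, one `limiter = SlidingWindowLimiter(2, 60.0)` would be created before the loop and `limiter.wait()` called immediately before each `pro.hk_hold(...)` call, in place of the fixed `time.sleep(32)`.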

|

open

|

2021-02-17T14:59:40Z

|

2021-02-17T15:00:29Z

|

https://github.com/waditu/tushare/issues/1515

|

[] |

sq2309

| 1

|

adamerose/PandasGUI

|

pandas

| 114

|

Scatter size should handle null values

|

Using a column that contains null values (e.g. `penguins.body_mass` from the demo data) as the size of a scatter plot produces this error:

```

ValueError:
    Invalid element(s) received for the 'size' property of scatter.marker
        Invalid elements include: [nan]

    The 'size' property is a number and may be specified as:
      - An int or float in the interval [0, inf]
      - A tuple, list, or one-dimensional numpy array of the above

```
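Plotly accepts only numbers in `[0, inf]` for `marker.size`, so NaN entries have to be dealt with before the column is passed on. One option, sketched here in plain Python under the assumption that points with a missing size should be drawn at a fixed fallback size (dropping those rows would be an equally valid choice), is to replace them; `clean_marker_sizes` is a hypothetical helper, not PandasGUI code. With pandas, `Series.fillna(fill)` performs the same replacement on a column.

```python
import math
from typing import Iterable, List, Optional


def clean_marker_sizes(values: Iterable[Optional[float]], fill: float = 0.0) -> List[float]:
    """Replace None/NaN with `fill` so every size is a number >= 0."""
    if math.isnan(fill) or fill < 0:
        raise ValueError("fill must be a non-negative number")
    cleaned: List[float] = []
    for v in values:
        if v is None or (isinstance(v, float) and math.isnan(v)):
            cleaned.append(fill)  # missing value -> fallback size
        elif v < 0:
            raise ValueError(f"negative size {v!r} is not allowed")
        else:
            cleaned.append(float(v))
    return cleaned


if __name__ == "__main__":
    print(clean_marker_sizes([3750.0, float("nan"), None, 3250]))
    # [3750.0, 0.0, 0.0, 3250.0]
```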