repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |

|---|---|---|---|---|---|---|---|---|---|---|---|

modin-project/modin

|

data-science

| 6,849

|

Possible to remove to_pandas calls in merge and join operations

|

In `query_compiler.merge` and `query_compiler.join` we use `to_pandas()`, e.g. [right_pandas = right.to_pandas()](https://github.com/modin-project/modin/blob/7ef544f4467ddea18cfbb51ad2a6fcbbb12c0db3/modin/core/storage_formats/pandas/query_compiler.py#L525). This operation blocks the main thread, which could be expensive if the right dataframe is large.

It is possible to remove the `to_pandas` calls by replacing `apply_full_axis` with broadcast_apply_full_axis and pass the `right` dataframe to `broadcast_apply_full_axis` as _Originally suggested by @YarShev in https://github.com/modin-project/modin/issues/5524#issuecomment-1880982441_ and

|

closed

|

2024-01-10T14:04:22Z

|

2024-01-11T16:18:29Z

|

https://github.com/modin-project/modin/issues/6849

|

[] |

arunjose696

| 1

|

aiortc/aiortc

|

asyncio

| 201

|

how can i get a certain channel and sample_rate like `channel=1 sample_rate=16000` when i use `MediaRecorder, MediaPlayer or AudioTransformTrack` to get audio frame ?

|

Hi, I use MediaPlayer to read a WAV file, and it does not work when I change the `options` parameters. To recover the original data, I have to save it with channels=2 and sample_rate=48000.

Do the options work? Please help me.

```python
import os
import wave

import pyaudio
from aiortc.contrib.media import MediaPlayer


async def save_wav(fine_name):
    # player = MediaPlayer(fine_name, options={'channels': '1', 'sample_rate': '16000'})
    # Print the properties of the source file.
    with wave.open(fine_name, 'rb') as rf:
        print("rf.getnchannels():", rf.getnchannels())
        print("rf.getframerate():", rf.getframerate())
        print("rf.getsampwidth():", rf.getsampwidth())
    player = MediaPlayer(fine_name)
    frames = []
    frame = None
    try:
        frame = await player.audio.recv()
    except Exception as e:
        print("error 1:")
        print("type(e):", type(e))
        print(e)
    # Collect the raw bytes of every plane until recv() fails.
    while frame is not None:
        for p in frame.planes:
            data = p.to_bytes()
            # print("p.buffer_size):", p.buffer_size)
            # print("len(data):", len(data))
            frames.append(data)
        try:
            frame = await player.audio.recv()
        except Exception as e:
            print("error 2:")
            print("type(e):", type(e))
            print(e)
            frame = None
    # file_path is defined elsewhere in the original script.
    wave_save_path = os.path.join(file_path, "save.wav")
    if wave_save_path is not None:
        p = pyaudio.PyAudio()
        CHANNELS = 2
        FORMAT = pyaudio.paInt16
        RATE = 48000
        print("p.get_sample_size(FORMAT):", p.get_sample_size(FORMAT))
        wf = wave.open(wave_save_path, 'wb')
        wf.setnchannels(CHANNELS)
        wf.setsampwidth(p.get_sample_size(FORMAT))
        wf.setframerate(RATE)
        wf.writeframes(b''.join(frames))
        wf.close()
        # Print the properties of the saved file.
        with wave.open(wave_save_path, 'rb') as rf:
            print("rf.getnchannels():", rf.getnchannels())
            print("rf.getframerate():", rf.getframerate())
            print("rf.getsampwidth():", rf.getsampwidth())
```

```

2019-08-27 16:02:14,128 asyncio-DEBUG __init__:65 Using selector: EpollSelector

rf.getnchannels(): 1

rf.getframerate(): 16000

rf.getsampwidth(): 2

...

ALSA lib pcm.c:2495:(snd_pcm_open_noupdate) Unknown PCM cards.pcm.rear

ALSA lib pcm.c:2495:(snd_pcm_open_noupdate) Unknown PCM cards.pcm.center_lfe

ALSA lib pcm.c:2495:(snd_pcm_open_noupdate) Unknown PCM cards.pcm.side

ALSA lib pcm_route.c:867:(find_matching_chmap) Found no matching channel map

ALSA lib pcm_route.c:867:(find_matching_chmap) Found no matching channel map

ALSA lib pcm_route.c:867:(find_matching_chmap) Found no matching channel map

ALSA lib pcm_route.c:867:(find_matching_chmap) Found no matching channel map

p.get_sample_size(FORMAT): 2

rf.getnchannels(): 2

rf.getframerate(): 48000

rf.getsampwidth(): 2

```

|

closed

|

2019-08-27T04:16:09Z

|

2023-12-10T16:30:53Z

|

https://github.com/aiortc/aiortc/issues/201

|

[] |

supermanhuyu

| 5

|

jadore801120/attention-is-all-you-need-pytorch

|

nlp

| 79

|

Question about beamsearch

|

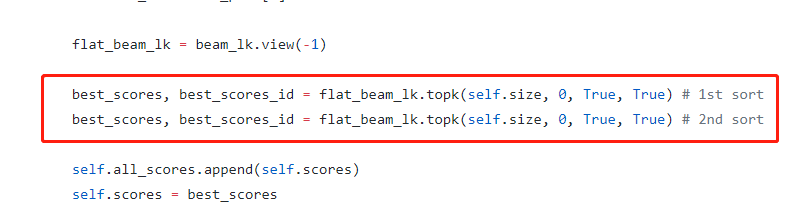

hello,

Your code is very clear! However, I have a question about the beam search in your code. Why do you perform two sorting operations in transformer/beam.py? Is there a special purpose?

Thanks a lot!

|

closed

|

2018-12-27T12:32:34Z

|

2019-12-08T10:28:40Z

|

https://github.com/jadore801120/attention-is-all-you-need-pytorch/issues/79

|

[] |

ZhengkunTian

| 2

|

hzwer/ECCV2022-RIFE

|

computer-vision

| 142

|

About the newly added VGG model

|

Hello, while trying to train RIFE recently, I found that RIFE added new code for a VGG loss function, but the related code in rife.py is commented out. Simply uncommenting and modifying the relevant code does not let training start. I would like to ask whether this part of the work is complete. Many thanks.

Like this:

```

self.vgg = VGGPerceptualLoss().to(device)

# loss_G = loss_l1 + loss_cons + loss_ter

loss_G = self.vgg(pred, gt) + loss_cons + loss_ter

```

|

closed

|

2021-04-19T01:05:32Z

|

2021-04-21T09:03:28Z

|

https://github.com/hzwer/ECCV2022-RIFE/issues/142

|

[] |

98mxr

| 2

|

miguelgrinberg/python-socketio

|

asyncio

| 455

|

restricting access to socketio/client files with flask-socketio?

|

Hi,

I am having a problem where I am able to download certain Socket.IO files from my Flask application.

Sending a request to https://localhost:port/socket.io/ downloads a file with 'sid' and other information.

Similarly, I am able to download other Socket.IO files, such as socket.io.tar.gz, socket.io.arj, and other compressed Socket.IO files, by appending their names to the path. For example, https://localhost:port/socket.io/socket.io.tar.gz gives me the file.

After searching a lot about this issue, one thing I think could help is setting serveClient=false, as is recommended for production environments. However, I am unable to find any information on how and where to set this parameter with Python Flask-SocketIO.

So my question is: how can we restrict access to these files? Is there some Socket.IO-side configuration or some Content-Security-Policy I am missing?

I'll be grateful if someone can help with this issue.

Thanks

|

closed

|

2020-04-01T10:39:12Z

|

2020-04-02T11:25:44Z

|

https://github.com/miguelgrinberg/python-socketio/issues/455

|

[

"question"

] |

raheel-ahmad

| 4

|

strawberry-graphql/strawberry

|

django

| 3,583

|

pyinstrument extension doesn't seem to give detail breakdown

|

I'm using strawberry with FastAPI, running everything in Docker. I've tried using the pyinstrument extension as described in the docs, but I am not getting a breakdown of the execution.

## Describe the Bug

When using the pyinstrument extension, there is no breakdown, only a [Self] category.

## System Information

- Operating system: MacOS

- Strawberry version (if applicable):

## Additional Context

<!-- Add any other relevant information about the problem here. -->

<img width="1369" alt="image" src="https://github.com/user-attachments/assets/7d23a35a-9005-48bc-9bf1-ef37fd08d9ec">

|

open

|

2024-07-27T16:32:23Z

|

2025-03-20T15:56:48Z

|

https://github.com/strawberry-graphql/strawberry/issues/3583

|

[

"bug"

] |

Vincent-liuwingsang

| 0

|

deepspeedai/DeepSpeed

|

machine-learning

| 6,692

|

Installing DeepSpeed in WSL.

|

I am using Windows 11. I have Windows Subsystem for Linux activated (Ubuntu) as well as installed CUDA, and Visual Studio C++ Build tools. I am trying to install deepspeed. However, I am getting the following 2 errors. Could anybody please help resolve this?

|

closed

|

2024-10-30T20:17:24Z

|

2024-11-08T22:11:37Z

|

https://github.com/deepspeedai/DeepSpeed/issues/6692

|

[

"install",

"windows"

] |

anonymous-user803

| 5

|

chezou/tabula-py

|

pandas

| 156

|

tabula merges column in HDFC bank statement

|

Tabula merges columns on the last page of an HDFC bank statement. Also, if a field is empty on any page, tabula does not detect that field and writes the next column's output in its place.

tabula.read_pdf(pdfpath,pages='all',columns=['68.0,272.0,357.5,397.0,474.5,553.0'])

I even tried passing the column coordinates, but tabula does not pick them up the way Camelot did.

Camelot separates columns when coordinates are provided, but tabula doesn't.

|

closed

|

2019-06-26T13:17:36Z

|

2019-06-27T01:54:53Z

|

https://github.com/chezou/tabula-py/issues/156

|

[] |

ayubansal1998

| 1

|

modin-project/modin

|

pandas

| 6,849

|

Possible to remove to_pandas calls in merge and join operations

|

In `query_compiler.merge` and `query_compiler.join` we use `to_pandas()`, e.g. [right_pandas = right.to_pandas()](https://github.com/modin-project/modin/blob/7ef544f4467ddea18cfbb51ad2a6fcbbb12c0db3/modin/core/storage_formats/pandas/query_compiler.py#L525). This operation blocks the main thread, which could be expensive if the right dataframe is large.

It is possible to remove the `to_pandas` calls by replacing `apply_full_axis` with broadcast_apply_full_axis and pass the `right` dataframe to `broadcast_apply_full_axis` as _Originally suggested by @YarShev in https://github.com/modin-project/modin/issues/5524#issuecomment-1880982441_ and

|

closed

|

2024-01-10T14:04:22Z

|

2024-01-11T16:18:29Z

|

https://github.com/modin-project/modin/issues/6849

|

[] |

arunjose696

| 1

|

mwaskom/seaborn

|

data-visualization

| 3,219

|

A violinplot in a FacetGrid can ignore the `split` argument

|

I try to produce a FacetGrid containing violin plots with the argument `split=True` but the violins are not split. See the following example:

```python
import numpy as np
import pandas as pd
import seaborn as sns

np.random.seed(42)

df = pd.DataFrame(
    {
        "value": np.random.rand(20),
        "condition": ["true", "false"]*10,
        "category": ["right"]*10 + ["wrong"]*10,
    }
)

g = sns.FacetGrid(data=df, col="category", hue="condition", col_wrap=5)
g.map(
    sns.violinplot,
    "value",
    split=True,  # it is irrelevant if this is present or not the violin plots are never split.
);
```

Plots can be seen here: https://stackoverflow.com/q/74617402/6018688

|

closed

|

2023-01-10T15:20:58Z

|

2023-01-10T23:40:53Z

|

https://github.com/mwaskom/seaborn/issues/3219

|

[] |

fabianegli

| 4

|

matplotlib/mplfinance

|

matplotlib

| 619

|

PnF charts using just close.

|

I have a dataset with only 1 column that represents close price. I am unable to generate a PnF chart as it appears that PnF expects date, open, high, low & close.

PnF charts are drawn using either High/Low or just considering close.

Is there a way to generate a PnF with just close?

|

closed

|

2023-05-29T16:10:02Z

|

2023-05-30T10:22:51Z

|

https://github.com/matplotlib/mplfinance/issues/619

|

[

"question"

] |

boomkap

| 4

|

NVlabs/neuralangelo

|

computer-vision

| 28

|

Cuda out of memory. Anyway to run training on 8GB GPU

|

Is there any way to run training on an NVIDIA GPU with only 8GB of memory, no matter how much time it takes?

I can't train on my NVIDIA 3070 GPU with 8GB.

What parameters can I edit to solve this issue?

|

closed

|

2023-08-16T15:09:57Z

|

2023-08-25T06:24:26Z

|

https://github.com/NVlabs/neuralangelo/issues/28

|

[] |

parzoe

| 14

|

ghtmtt/DataPlotly

|

plotly

| 202

|

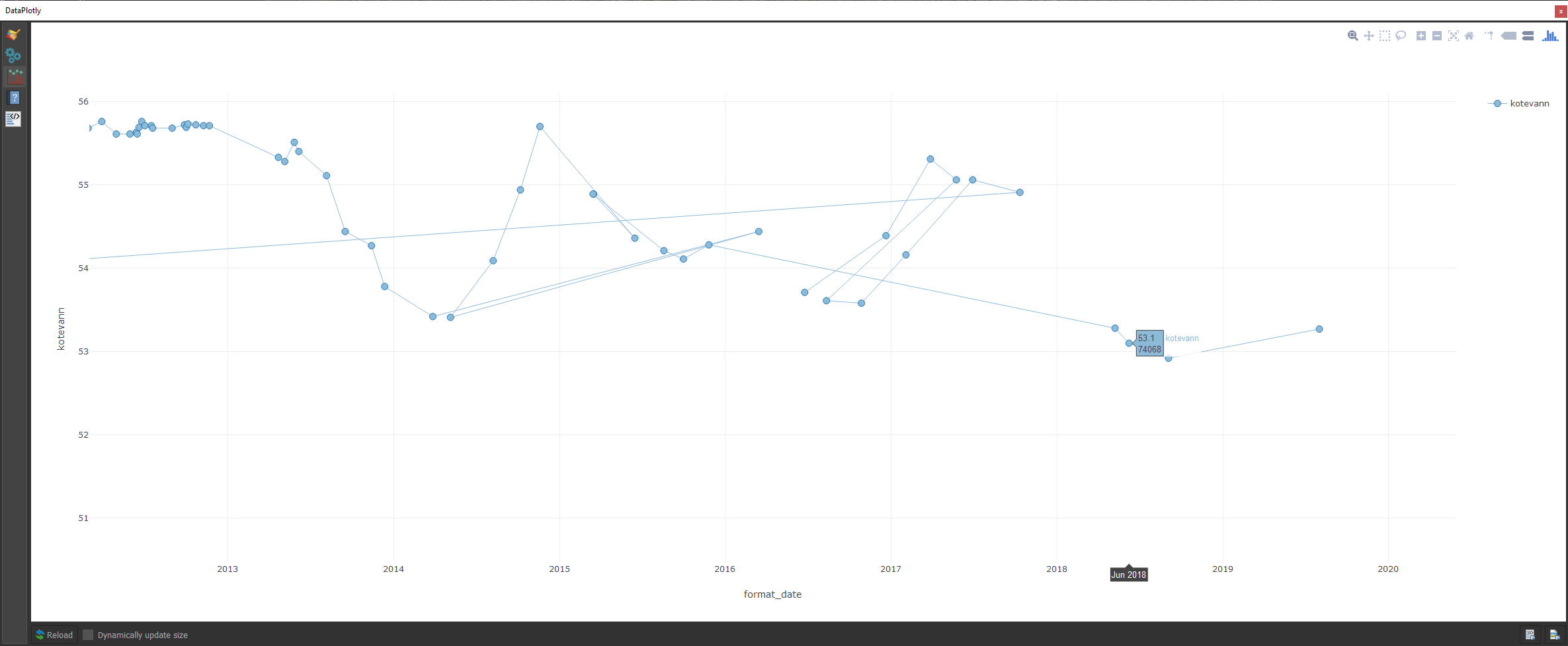

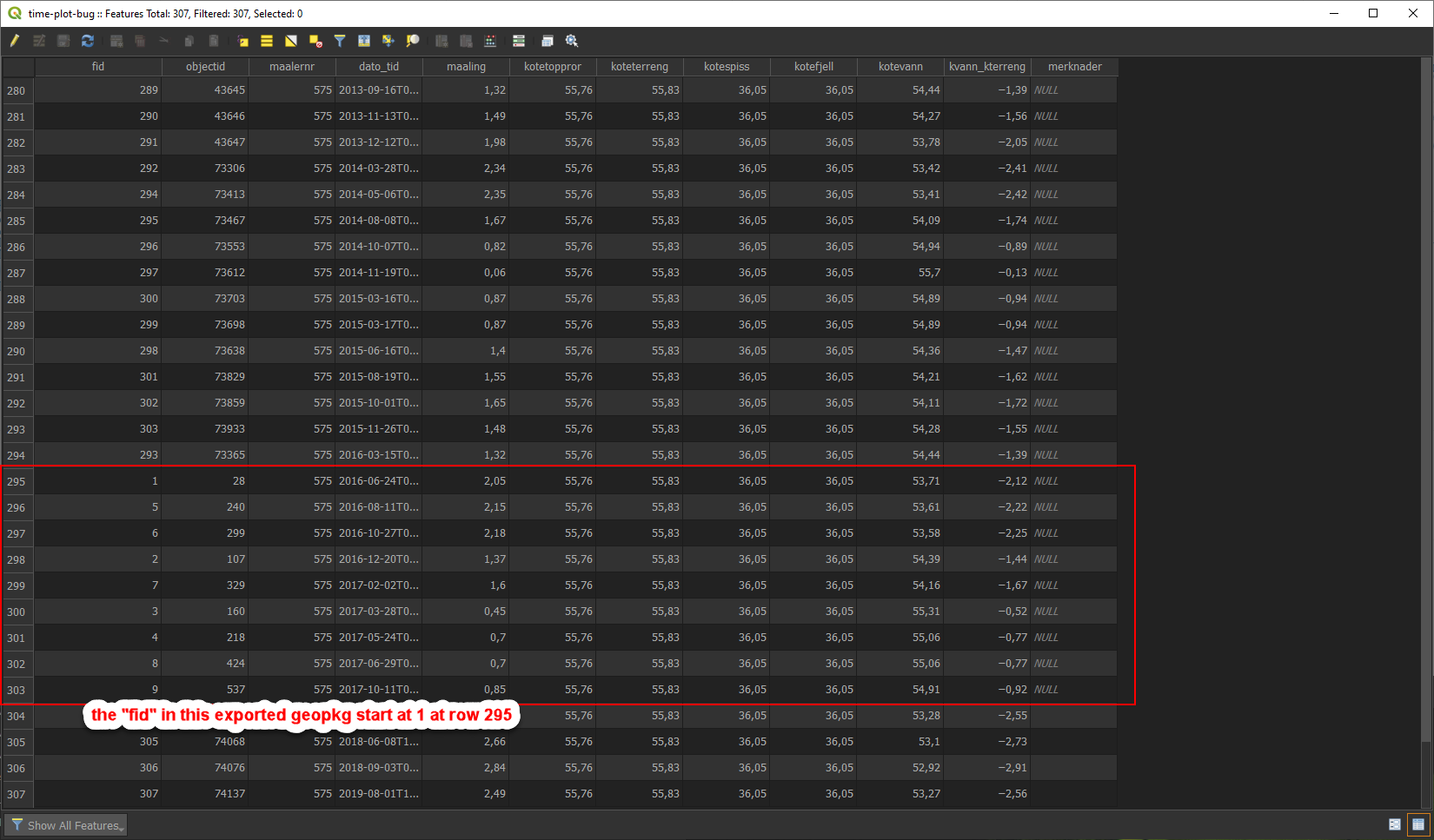

scatterplot of time-data cross-connects

|

**Describe the bug**

a bug plotting time-plots. the "line cross connects" a few places and runs back to start.

**To Reproduce**

1. plot this data geopkg provided as a scatter plot

- [time-plot-bug.zip](https://github.com/ghtmtt/DataPlotly/files/4298541/time-plot-bug.zip)

2. use x-field: format_date( "dato_tid",'yyyy-MM-dd hh:mm:ss')

3. use y-field: kotevann

4. check results in screenshot below

**Screenshots**

**Desktop (please complete the following information):**

- OS: win10

- QGIS release: 3.12.0

- DataPlotly release: 3.3

**Additional context**

I wonder if it has something to do with the feature id? When I delete the "fid" (in the screenshot below) it works.

|

closed

|

2020-03-06T13:38:54Z

|

2020-03-11T12:03:49Z

|

https://github.com/ghtmtt/DataPlotly/issues/202

|

[

"bug"

] |

danpejobo

| 5

|

mwaskom/seaborn

|

data-visualization

| 3,542

|

[BUG] Edge color with `catplot` with `kind=bar`

|

Hello,

When passing `edgecolor` to catplot for a bar, the argument doesn't reach the underlying `p.plot_bars` to generate the required output.

Currently, the result of the line

`edgecolor = p._complement_color(kwargs.pop("edgecolor", default), color, p._hue_map)`

is _not_ passed into the block `elif kind=="bar"`. A local "hack" I implemented is to add `kwargs["edgecolor"] = edgecolor` before the `p.plot_bars` call. Let me know if I should provide more details.

This is on version `0.13.0`.

|

closed

|

2023-10-27T07:33:09Z

|

2023-11-04T16:09:47Z

|

https://github.com/mwaskom/seaborn/issues/3542

|

[

"bug",

"mod:categorical"

] |

prabhuteja12

| 5

|

onnx/onnx

|

deep-learning

| 5,853

|

Request for Swish Op

|

# Swish/SiLU

Do you have any plans to implement the Swish Op in ONNX?

### Describe the operator

Swish is a popular activation function. Its mathematical definition can be found at https://en.wikipedia.org/wiki/Swish_function

TensorFlow has https://www.tensorflow.org/api_docs/python/tf/nn/silu

Keras has https://keras.io/api/layers/activations/ (also in https://www.tensorflow.org/api_docs/python/tf/keras/activations/swish)

PyTorch has https://pytorch.org/docs/stable/generated/torch.nn.SiLU.html

### Can this operator be constructed using existing onnx operators?

Yes, it could be implemented as a combination of Mul and Sigmoid Ops:

x * Sigmoid (beta * x)
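The composition above can be sketched in plain Python. This is only a reference sketch of the formula, not an ONNX implementation; the function names `sigmoid` and `swish` and the default `beta=1.0` (which corresponds to SiLU) are illustrative assumptions.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def swish(x: float, beta: float = 1.0) -> float:
    """Swish activation x * sigmoid(beta * x); beta = 1 is SiLU."""
    return x * sigmoid(beta * x)


if __name__ == "__main__":
    for value in (-2.0, 0.0, 2.0):
        print(value, swish(value))
```

In ONNX terms, this corresponds to one Mul for `beta * x`, a Sigmoid, and a second Mul with `x`.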

### Is this operator used by any model currently? Which one?

Yes. Modern Yolo series like yolov5, yolov7, yolov8, yolop and EfficientNet all have such Swish Ops.

Yolov5: https://github.com/ultralytics/yolov5/blob/master/models/tf.py#L224

EfficientNet:

https://paperswithcode.com/method/efficientnet which has Swish in https://github.com/lukemelas/EfficientNet-PyTorch/blob/2eb7a7d264344ddf15d0a06ee99b0dca524c6a07/efficientnet_pytorch/model.py#L294

### Are you willing to contribute it? (Y/N)

Possibly Yes.

### Notes

|

open

|

2024-01-11T08:18:22Z

|

2025-02-01T06:43:04Z

|

https://github.com/onnx/onnx/issues/5853

|

[

"topic: operator",

"stale",

"contributions welcome"

] |

vera121

| 7

|

sqlalchemy/sqlalchemy

|

sqlalchemy

| 11,994

|

missing type cast for jsonb in values for postgres?

|

### Discussed in https://github.com/sqlalchemy/sqlalchemy/discussions/11990

<div type='discussions-op-text'>

<sup>Originally posted by **JabberWocky-22** October 12, 2024</sup>

I'm using `values` to update multiple rows in a single execution in Postgres.

The SQL fails due to a type mismatch on the jsonb column, since it is rendered without a type cast and falls back to text.

Currently I can add a type cast in the update statement, but it would be nice to add it when rendering, just like for the uuid column.

To Reproduce:

```python
import sqlalchemy as sa
from sqlalchemy.dialects.postgresql import JSONB

table = sa.Table(
    "test",
    sa.MetaData(),
    sa.Column("id", sa.Integer),
    sa.Column("uuid", sa.Uuid, unique=True),
    sa.Column("extra", JSONB),
)

engine = sa.create_engine("postgresql://xxx:xxx@localhost:1234/db")

with engine.begin() as conn:
    table.create(conn, checkfirst=True)
    conn.execute(
        table.insert().values(
            [
                {"id": 1, "uuid": "d24587a1-06d9-41df-b1c3-3f423b97a755"},
                {"id": 2, "uuid": "4b07e1c8-d60c-4ea8-9d01-d7cd01362224"},
            ]
        )
    )
    value = sa.values(
        sa.Column("id", sa.Integer),
        sa.Column("uuid", sa.Uuid),
        sa.Column("extra", JSONB),
        name="update_data"
    ).data(
        [
            (
                1,
                "8b6ec1ec-b979-4d0b-b2ce-9acc6e4c2943",
                {"foo": 1},
            ),
            (
                2,
                "a2123bcb-7ea3-420a-8284-1db4b2759d79",
                {"bar": 2},
            ),
        ]
    )
    conn.execute(
        table.update()
        .values(uuid=value.c.uuid, extra=value.c.extra)
        .where(table.c.id == value.c.id)
    )
```

traceback:

```bash

sqlalchemy.exc.ProgrammingError: (psycopg2.errors.DatatypeMismatch) column "extra" is of type jsonb but expression is of type text

LINE 1: UPDATE test SET uuid=update_data.uuid, extra=update_data.ext...

^

HINT: You will need to rewrite or cast the expression.

[SQL: UPDATE test SET uuid=update_data.uuid, extra=update_data.extra FROM (VALUES (%(param_1)s, %(param_2)s::UUID, %(param_3)s), (%(param_4)s, %(param_5)s::UUID, %(param_6)s)) AS update_data (id, uuid, extra) WHERE test.id = update_data.id]

[parameters: {'param_1': 1, 'param_2': '8b6ec1ec-b979-4d0b-b2ce-9acc6e4c2943', 'param_3': '{"foo": 1}', 'param_4': 2, 'param_5': 'a2123bcb-7ea3-420a-8284-1db4b2759d79', 'param_6': '{"bar": 2}'}]

```

Versions:

SQLAlchemy: 2.0.35

psycopg2-binary: 2.9.3

db: PostgreSQL 15.2

Have a nice day</div>

|

closed

|

2024-10-13T09:00:38Z

|

2024-12-03T08:43:54Z

|

https://github.com/sqlalchemy/sqlalchemy/issues/11994

|

[

"postgresql",

"schema",

"use case"

] |

CaselIT

| 6

|

ageitgey/face_recognition

|

python

| 968

|

Crop image to preferred region to speed up detection

|

Hi everyone.

My problem is that I cannot resize the input image to a smaller resolution before applying CNN detection, because the faces then become too small to be detected. In my application, faces only appear in a specific region of the frame. Is it okay to keep the image at its original size, crop only the region where faces are most likely to appear, apply face detection to that cropped region, and then use it for recognition without resizing anything? If anyone has tried this, please give me some advice. Thanks a lot!
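One detail of the cropping approach described above is that boxes detected in the crop are relative to the crop, so they need to be shifted back before being used on the original frame. A minimal sketch, assuming boxes in `(top, right, bottom, left)` order (the order `face_recognition.face_locations` returns) and a hypothetical helper name `to_full_frame`; the detector call itself is omitted.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (top, right, bottom, left)


def to_full_frame(boxes: List[Box], crop_top: int, crop_left: int) -> List[Box]:
    """Shift boxes found in a cropped region back to full-frame coordinates."""
    return [
        (top + crop_top, right + crop_left, bottom + crop_top, left + crop_left)
        for top, right, bottom, left in boxes
    ]


if __name__ == "__main__":
    # A box found at rows 10..50, columns 20..60 of a crop whose top-left
    # corner sits at row 100, column 200 of the full frame.
    print(to_full_frame([(10, 60, 50, 20)], crop_top=100, crop_left=200))
```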

|

closed

|

2019-11-05T03:56:06Z

|

2019-11-05T07:42:59Z

|

https://github.com/ageitgey/face_recognition/issues/968

|

[] |

congphase

| 2

|

KevinMusgrave/pytorch-metric-learning

|

computer-vision

| 300

|

Error when using a Miner with ArcFaceLoss when training with Mixed Precision

|

I'm training my model with pytorch-lightning, using its mixed precision. When I tried to add a miner with the ArcFaceLoss, I got the following error:

```

File "/opt/conda/lib/python3.7/site-packages/pytorch_metric_learning/losses/base_metric_loss_function.py", line 34, in forward

loss_dict = self.compute_loss(embeddings, labels, indices_tuple)

File "/opt/conda/lib/python3.7/site-packages/pytorch_metric_learning/losses/large_margin_softmax_loss.py", line 105, in compute_loss

miner_weights = lmu.convert_to_weights(indices_tuple, labels, dtype=dtype)

File "/opt/conda/lib/python3.7/site-packages/pytorch_metric_learning/utils/loss_and_miner_utils.py", line 211, in convert_to_weights

weights[indices] = counts / torch.max(counts)

RuntimeError: Index put requires the source and destination dtypes match, got Half for the destination and Float for the source.

```

I guess the output of my model (which is a convolutional model) had `torch.float16` as its type and when converting the mined indices to weights, it ended up trying to put a `torch.float32` into the weights (which were created with the same dtype of my embedding).

I can see that the code actually tries to convert the `counts` to the same dtype:

```

counts = c_f.to_dtype(counts, dtype=dtype) / torch.sum(counts)

weights[indices] = counts / torch.max(counts)

```

However, when debugging, I noticed that it doesn't have any effect because `torch.is_autocast_enabled()` returns `True`.

|

closed

|

2021-04-08T11:01:39Z

|

2021-05-10T02:54:30Z

|

https://github.com/KevinMusgrave/pytorch-metric-learning/issues/300

|

[

"bug",

"fixed in dev branch"

] |

fernandocamargoai

| 2

|

quokkaproject/quokka

|

flask

| 97

|

Release PyPI package and change the core architecture

|

closed

|

2013-11-22T12:14:54Z

|

2015-07-16T02:56:42Z

|

https://github.com/quokkaproject/quokka/issues/97

|

[] |

rochacbruno

| 6

|

|

deeppavlov/DeepPavlov

|

nlp

| 1,628

|

Predictions NER_Ontonotes_BERT_Mult for entities with interpunction

|

DeepPavlov version: 1.0.2

Python version: 3.8

Operating system: Ubuntu

**Issue**:

I am using the `ner_ontonotes_bert_mult` model to predict entities for text. For sentences with interpunction in the entities, this gives unexpected results. Before the 1.0.0 release, I used the [Deeppavlov docker image](https://hub.docker.com/r/deeppavlov/base-cpu) with the `ner_ontonotes_bert_mult` config as well. I didn't encounter these issues with the older version of Deeppavlov.

**Content or a name of a configuration file**:

```

[ner_ontonotes_bert_mult](https://github.com/deeppavlov/DeepPavlov/blob/1.0.2/deeppavlov/configs/ner/ner_ontonotes_bert_mult.json)

```

**Command that led to the unexpected results**:

```python
from deeppavlov import build_model

deeppavlov_model = build_model(
    "ner_ontonotes_bert_mult",
    install=True,
    download=True)

sentence = 'Today at 13:10 we had a meeting'
output = deeppavlov_model([sentence])

print(output[0])
# [['Today', 'at', '13', ':', '10', 'we', 'had', 'a', 'meeting']]
print(output[1])
# [['O', 'O', 'B-TIME', 'O', 'B-TIME', 'O', 'O', 'O', 'O']]
```

As you can see, `13:10` is not recognized as a single time entity: `13` is tagged `B-TIME`, `:` is tagged `O`, and `10` is tagged `B-TIME`. The same happens for names with interpunction, such as `E.A. Jones`. What can I do to solve this issue? Is it possible to fine-tune this model on such examples?

|

closed

|

2023-02-15T15:17:20Z

|

2023-03-16T08:22:50Z

|

https://github.com/deeppavlov/DeepPavlov/issues/1628

|

[

"bug"

] |

ronaldvelzen

| 3

|

marcomusy/vedo

|

numpy

| 882

|

Slice error

|

Examples with `msh.intersect_with_plane` (tried torus and bunny from `Mesh.slice`) produce the same error : AttributeError: module 'vedo.vtkclasses' has no attribute 'vtkPolyDataPlaneCutter'

|

closed

|

2023-06-14T17:07:00Z

|

2023-10-18T13:09:54Z

|

https://github.com/marcomusy/vedo/issues/882

|

[] |

mAxGarak

| 5

|

Evil0ctal/Douyin_TikTok_Download_API

|

fastapi

| 176

|

[BUG] Cannot read property 'JS_MD5_NO_COMMON_JS' of null

|

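***Platform where the error occurred?***

Douyin

***Endpoint where the error occurred?***

API-V1

http://127.0.0.1:8000/api?url=

***Submitted input value?***

https://www.douyin.com/video/7153585499477757192

***Did you try again?***

Yes, the error still exists X time after it first occurred.

***Have you read this project's README or API documentation?***

Yes, and I am quite sure the problem is caused by the program. I also tried the API on the official site, and it returns a 500 error.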

|

closed

|

2023-03-14T03:23:14Z

|

2023-03-14T20:08:00Z

|

https://github.com/Evil0ctal/Douyin_TikTok_Download_API/issues/176

|

[

"BUG"

] |

WeiLi1201

| 3

|

pytest-dev/pytest-django

|

pytest

| 790

|

Tests do not run without django-configuration installed.

|

In my project I do not use django-configuration as I don't really need it.

When installing `pytest-django` I try to run my tests, here's what I've got:

```

pytest

Traceback (most recent call last):

File "/home/jakub/.virtualenvs/mobigol/bin/pytest", line 8, in <module>

sys.exit(main())

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/_pytest/config/__init__.py", line 72, in main

config = _prepareconfig(args, plugins)

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/_pytest/config/__init__.py", line 223, in _prepareconfig

pluginmanager=pluginmanager, args=args

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/pluggy/hooks.py", line 286, in __call__

return self._hookexec(self, self.get_hookimpls(), kwargs)

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/pluggy/manager.py", line 93, in _hookexec

return self._inner_hookexec(hook, methods, kwargs)

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/pluggy/manager.py", line 87, in <lambda>

firstresult=hook.spec.opts.get("firstresult") if hook.spec else False,

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/pluggy/callers.py", line 203, in _multicall

gen.send(outcome)

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/_pytest/helpconfig.py", line 89, in pytest_cmdline_parse

config = outcome.get_result()

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/pluggy/callers.py", line 80, in get_result

raise ex[1].with_traceback(ex[2])

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/pluggy/callers.py", line 187, in _multicall

res = hook_impl.function(*args)

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/_pytest/config/__init__.py", line 742, in pytest_cmdline_parse

self.parse(args)

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/_pytest/config/__init__.py", line 948, in parse

self._preparse(args, addopts=addopts)

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/_pytest/config/__init__.py", line 906, in _preparse

early_config=self, args=args, parser=self._parser

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/pluggy/hooks.py", line 286, in __call__

return self._hookexec(self, self.get_hookimpls(), kwargs)

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/pluggy/manager.py", line 93, in _hookexec

return self._inner_hookexec(hook, methods, kwargs)

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/pluggy/manager.py", line 87, in <lambda>

firstresult=hook.spec.opts.get("firstresult") if hook.spec else False,

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/pluggy/callers.py", line 208, in _multicall

return outcome.get_result()

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/pluggy/callers.py", line 80, in get_result

raise ex[1].with_traceback(ex[2])

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/pluggy/callers.py", line 187, in _multicall

res = hook_impl.function(*args)

File "/home/jakub/.virtualenvs/mobigol/lib/python3.6/site-packages/pytest_django/plugin.py", line 239, in pytest_load_initial_conftests

import configurations.importer

ModuleNotFoundError: No module named 'configurations'

```

Also my package versions:

```

pytest==5.3.1

pytest-django==3.3.0

pytest-factoryboy==2.0.3

```

Is `django-configuration` required? I couldn't find any information about it in the documentation.

|

closed

|

2020-01-04T17:42:33Z

|

2025-01-31T08:23:15Z

|

https://github.com/pytest-dev/pytest-django/issues/790

|

[

"bug"

] |

jakubjanuzik

| 6

|

ranaroussi/yfinance

|

pandas

| 2,155

|

Wrong time stamp for 1h time frame for versions after 0.2.44

|

### Describe bug

Whenever I download stock data with the 1h interval, the timestamps come out in the wrong time zone. This problem was not present in 0.2.44 and earlier versions. It was easier to use when the output was in the local time zone.

### Simple code that reproduces your problem

import yfinance as yf

print("\nyfinance version:", yf.__version__)

ticker = 'AAPL'

interval = '1h'

data = yf.download(ticker, period='1mo', interval=interval)

print("\nLast 7 Close Prices:")

print(data['Close'].tail(7))

### Debug log

I downloaded stock data of AAPL in 0.2.44 version this is the print out.

Last 7 Close Prices:

Datetime

2024-11-25 11:30:00-05:00 230.206802

2024-11-25 12:30:00-05:00 230.414993

2024-11-25 13:30:00-05:00 230.684998

2024-11-25 14:30:00-05:00 230.520004

2024-11-25 15:30:00-05:00 232.880005

2024-11-26 09:30:00-05:00 234.360001

2024-11-26 10:30:00-05:00 235.139999

But when I downloaded stock data of AAPL in 0.2.50 version this is the print out.

Ticker AAPL

Datetime

2024-11-25 16:30:00+00:00 230.206802

2024-11-25 17:30:00+00:00 230.414993

2024-11-25 18:30:00+00:00 230.684998

2024-11-25 19:30:00+00:00 230.520004

2024-11-25 20:30:00+00:00 232.880005

2024-11-26 14:30:00+00:00 234.360001

2024-11-26 15:30:00+00:00 235.313507
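The timestamps in the two printouts refer to the same instants: the 0.2.50 index carries a `+00:00` offset, while the 0.2.44 index carries `-05:00`. A minimal standard-library sketch of the conversion, assuming a fixed `-05:00` offset that only holds for these late-November rows (daylight saving time is ignored); `to_local` is an illustrative helper name, not part of yfinance.

```python
from datetime import datetime, timedelta, timezone

# Assumption: fixed UTC-05:00 offset, valid for the late-November rows above.
LOCAL_OFFSET = timezone(timedelta(hours=-5))


def to_local(stamp: str) -> str:
    """Convert an ISO 8601 timestamp with a UTC offset to the -05:00 offset."""
    return datetime.fromisoformat(stamp).astimezone(LOCAL_OFFSET).isoformat(sep=" ")


if __name__ == "__main__":
    print(to_local("2024-11-25 16:30:00+00:00"))
```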

### Bad data proof

### `yfinance` version

0.2.50

### Python version

3.10

### Operating system

_No response_

|

open

|

2024-11-26T16:10:00Z

|

2025-02-16T20:12:37Z

|

https://github.com/ranaroussi/yfinance/issues/2155

|

[] |

indra5534

| 10

|

nonebot/nonebot2

|

fastapi

| 2,924

|

Plugin: LLOneBot-Master

|

### PyPI project name

nonebot-plugin-llob-master

### Plugin import package name

nonebot_plugin_llob_master

### Tags

[{"label":"LLOneBot","color":"#e3e9e9"},{"label":"Windows","color":"#1da6eb"}]

### Plugin configuration options

_No response_

|

closed

|

2024-08-25T08:15:27Z

|

2024-08-27T13:20:46Z

|

https://github.com/nonebot/nonebot2/issues/2924

|

[

"Plugin"

] |

kanbereina

| 5

|

smarie/python-pytest-cases

|

pytest

| 91

|

Enforce file naming pattern: automatically get cases from file named `test_xxx_cases.py`

|

We suggest this pattern in the doc, we could make it a default.

|

closed

|

2020-06-02T12:57:26Z

|

2020-07-09T09:18:12Z

|

https://github.com/smarie/python-pytest-cases/issues/91

|

[

"enhancement"

] |

smarie

| 2

|

browser-use/browser-use

|

python

| 668

|

Unable to submit vulnerability report

|

### Type of Documentation Issue

Incorrect documentation

### Documentation Page

https://github.com/browser-use/browser-use/security/policy

### Issue Description

The documentation states that security issues should be reported by creating a report at https://github.com/browser-use/browser-use/security/advisories/new. However, accessing this link results in a 404 error.

It seems that the repository's private vulnerability reporting setting is not enabled.

ref: https://docs.github.com/en/code-security/security-advisories/working-with-repository-security-advisories/configuring-private-vulnerability-reporting-for-a-repository

Could you update the settings to allow vulnerability reports to be submitted?

### Suggested Changes

There is no need to update the document itself.

|

closed

|

2025-02-11T14:05:47Z

|

2025-02-22T23:31:32Z

|

https://github.com/browser-use/browser-use/issues/668

|

[

"documentation"

] |

melonattacker

| 5

|

jowilf/starlette-admin

|

sqlalchemy

| 477

|

Enhancement: Register Page

|

What about an out-of-the-box register page? We have a login page, why not have a register page? We can use `Fields` system to make the registration form more dynamic.

|

open

|

2024-01-17T12:20:00Z

|

2024-01-27T20:55:33Z

|

https://github.com/jowilf/starlette-admin/issues/477

|

[

"enhancement"

] |

hasansezertasan

| 0

|

snarfed/granary

|

rest-api

| 122

|

json feed: handle displayName as well as title

|

e.g. @aaronpk's articles feed: https://granary.io/url?input=html&output=jsonfeed&reader=false&url=http://aaronparecki.com/articles

|

closed

|

2017-12-05T20:59:31Z

|

2017-12-06T05:10:46Z

|

https://github.com/snarfed/granary/issues/122

|

[] |

snarfed

| 0

|

eriklindernoren/ML-From-Scratch

|

deep-learning

| 55

|

Moore-Penrose pseudo-inverse in linear regression

|

Hi, I am reimplementing ML algorithms based on yours, but I am a little confused about how the Moore-Penrose pseudo-inverse is calculated in linear regression.

https://github.com/eriklindernoren/ML-From-Scratch/blob/40b52e4edf9485c4e479568f5a41501914fdc55c/mlfromscratch/supervised_learning/regression.py#L111-L114

Why not use `np.linalg.pinv(X)`? According to its docstring, it computes the Moore-Penrose pseudo-inverse directly.
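For reference, the least-squares weights can be written with the pseudo-inverse in either of two equivalent forms. This is a standard identity, stated here with $X$ as the design matrix and $y$ as the targets; it is not a claim about what the linked lines do.

```latex
w = X^{+} y = (X^{\top} X)^{+} X^{\top} y
```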

Thanks!

|

open

|

2019-06-21T03:27:28Z

|

2019-11-20T16:09:03Z

|

https://github.com/eriklindernoren/ML-From-Scratch/issues/55

|

[] |

liadbiz

| 3

|

fastapi/sqlmodel

|

pydantic

| 126

|

max_length does not work in Fields

|

### First Check

- [X] I added a very descriptive title to this issue.

- [X] I used the GitHub search to find a similar issue and didn't find it.

- [X] I searched the SQLModel documentation, with the integrated search.

- [X] I already searched in Google "How to X in SQLModel" and didn't find any information.

- [X] I already read and followed all the tutorial in the docs and didn't find an answer.

- [X] I already checked if it is not related to SQLModel but to [Pydantic](https://github.com/samuelcolvin/pydantic).

- [X] I already checked if it is not related to SQLModel but to [SQLAlchemy](https://github.com/sqlalchemy/sqlalchemy).

### Commit to Help

- [X] I commit to help with one of those options 👆

### Example Code

```python
from sqlmodel import SQLModel, Field

class Locations(SQLModel, table=True):
    LocationName: str = Field(max_length=255)
```

### Description

When running the above code, I get:

`ValueError: On field "LocationName" the following field constraints are set but not enforced: max_length`

I also followed pydantic's documentation and changed the `str` annotation to `constr(max_length=255)`, to no avail.

### Operating System

Windows

### Operating System Details

_No response_

### SQLModel Version

0.0.4

### Python Version

3.9.4

### Additional Context

_No response_

|

closed

|

2021-10-08T13:35:17Z

|

2024-04-03T16:05:03Z

|

https://github.com/fastapi/sqlmodel/issues/126

|

[

"question"

] |

yudjinn

| 7

|

ading2210/poe-api

|

graphql

| 170

|

KeyError: 'payload'

|

Getting the following error

```

INFO:root:Setting up session...

INFO:root:Downloading next_data...

Traceback (most recent call last):

File "/home/shubharthak/Desktop/apsaraAI/ChatGPT.py", line 10, in <module>

client = poe.Client(api)

File "/home/shubharthak/miniconda3/lib/python3.10/site-packages/poe.py", line 123, in __init__

self.connect_ws()

File "/home/shubharthak/miniconda3/lib/python3.10/site-packages/poe.py", line 366, in connect_ws

self.setup_connection()

File "/home/shubharthak/miniconda3/lib/python3.10/site-packages/poe.py", line 149, in setup_connection

self.next_data = self.get_next_data(overwrite_vars=True)

File "/home/shubharthak/miniconda3/lib/python3.10/site-packages/poe.py", line 198, in get_next_data

self.viewer = next_data["props"]["pageProps"]["payload"]["viewer"]

KeyError: 'payload'

```
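The traceback only says that `'payload'` is missing, not what the response contained instead. As a minimal sketch (not part of `poe.py`), a nested lookup like the one on the failing line can be made to report where it stopped and which keys were present. The helper name `dig` and the sample `next_data` shape are hypothetical and invented for illustration.

```python
from typing import Any


def dig(data: dict, *keys: str) -> Any:
    """Walk nested dicts, reporting which key is missing and what was available."""
    current: Any = data
    for depth, key in enumerate(keys):
        if not isinstance(current, dict) or key not in current:
            path = ".".join(keys[:depth]) or "<root>"
            available = sorted(current) if isinstance(current, dict) else []
            raise KeyError(f"missing {key!r} under {path}; available keys: {available}")
        current = current[key]
    return current


# Hypothetical response shape, invented for illustration only.
next_data = {"props": {"pageProps": {"data": {}}}}

try:
    viewer = dig(next_data, "props", "pageProps", "payload", "viewer")
except KeyError as exc:
    message = exc.args[0]
    print(message)
```

This does not address why the key is absent; it only makes the failure easier to diagnose.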

Code:-

```
import poe
import sys
import logging
import os

api = os.environ.get('poe')

from IPython.display import display, Markdown, Latex

poe.logger.setLevel(logging.INFO)
client = poe.Client(api)

def say(msg):
    ans = ''
    for chunk in client.send_message('chinchilla', msg, with_chat_break=False):
        # print(not chunk['text_new'].find("'''"))
        # if not chunk['text_new'].find("'''"):
        #     print(chunk['text_new'], end=' ', flush=True)
        ans += chunk['text_new']
        #yield(chunk['text_new'])
        print(chunk['text_new'], end = '', flush=True)
    # return ans
    #
    # res = Markdown(ans + ' ')
    # display(res)
    # print(display(res), end=' ')

if __name__ == '__main__':
    while True:
        msg = input('> ')
        if 'bye' in msg or 'exit' in msg:
            break
        say(msg)
        print()
    print('Thank you for using me')
```

|

closed

|

2023-07-17T19:29:04Z

|

2023-07-17T19:38:47Z

|

https://github.com/ading2210/poe-api/issues/170

|

[] |

shubharthaksangharsha

| 0

|

OWASP/Nettacker

|

automation

| 171

|

Issue in getting results via discovery function in service scanner

|

I was trying to perform the same operation on my localhost, and the results were different every time.

```python

In [1]: from lib.payload.scanner.service.engine import discovery

In [2]: discovery("127.0.0.1")

Out[2]: {443: 'UNKNOWN', 3306: 'UNKNOWN'}

In [3]: discovery("127.0.0.1")

Out[3]:

{80: 'http',

443: 'UNKNOWN',

631: 'UNKNOWN',

3306: 'UNKNOWN',

5432: 'UNKNOWN',

8002: 'http'}

In [4]: discovery("127.0.0.1")

Out[4]:

{80: 'http',

139: 'UNKNOWN',

443: 'UNKNOWN',

445: 'UNKNOWN',

631: 'UNKNOWN',

3306: 'UNKNOWN',

5432: 'UNKNOWN',

8001: 'UNKNOWN',

8002: 'http'}

In [5]: discovery("127.0.0.1")

Out[5]:

{80: 'http',

139: 'UNKNOWN',

443: 'UNKNOWN',

445: 'UNKNOWN',

631: 'UNKNOWN',

3306: 'UNKNOWN',

5432: 'UNKNOWN',

8001: 'UNKNOWN',

8002: 'http'}

```

Am I doing something wrong, or is there a problem with the module? A port scan, however, works fine for me.
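To make the run-to-run differences easier to see, the outputs above can be compared directly. The following is a small sketch that uses only the dictionaries copied from the session above; the helper name `split_stable_and_flaky` is hypothetical and not part of Nettacker.

```python
# Results copied from the session above (Out[2] to Out[4]).
runs = [
    {443: "UNKNOWN", 3306: "UNKNOWN"},
    {80: "http", 443: "UNKNOWN", 631: "UNKNOWN", 3306: "UNKNOWN",
     5432: "UNKNOWN", 8002: "http"},
    {80: "http", 139: "UNKNOWN", 443: "UNKNOWN", 445: "UNKNOWN", 631: "UNKNOWN",
     3306: "UNKNOWN", 5432: "UNKNOWN", 8001: "UNKNOWN", 8002: "http"},
]


def split_stable_and_flaky(results):
    """Return ports found in every run and ports found in only some runs."""
    port_sets = [set(result) for result in results]
    stable = set.intersection(*port_sets)
    seen = set.union(*port_sets)
    return sorted(stable), sorted(seen - stable)


stable_ports, flaky_ports = split_stable_and_flaky(runs)
print("found in every run:", stable_ports)
print("found in some runs:", flaky_ports)
```

Only ports 443 and 3306 appear in all three runs, so the remaining ports are the ones that were missed at least once.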

_________________

**OS**: `Ubuntu`

**OS Version**: `16.04`

**Python Version**: `2.7.12`

|

closed

|

2018-06-26T01:49:22Z

|

2021-02-02T20:28:14Z

|

https://github.com/OWASP/Nettacker/issues/171

|

[

"enhancement",

"possible bug"

] |

shaddygarg

| 8

|

newpanjing/simpleui

|

django

| 512

|

Multiple stylesheet files, including but not limited to base.less, depend on the user model name

|

base.less, line 273:

```

#user_form{

background-color: white;

margin: 10px;

padding: 10px;

//color: #5a9cf8;

}

```

Once the `User` model has been swapped, its name is no longer `user`, so this selector stops matching and the styling looks somewhat odd.

|

open

|

2025-02-05T12:22:14Z

|

2025-02-05T12:22:14Z

|

https://github.com/newpanjing/simpleui/issues/512

|

[] |

WangQixuan

| 0

|

mljar/mljar-supervised

|

scikit-learn

| 447

|

Where can I find model details?

|

I want to see the best model's parameters, preprocessing, etc., so that I can reproduce it later or retrain the model with the same parameters.

Thanks,

|

closed

|

2021-07-30T19:37:58Z

|

2021-08-28T19:56:47Z

|

https://github.com/mljar/mljar-supervised/issues/447

|

[] |

abdulwaheedsoudagar

| 1

|

fohrloop/dash-uploader

|

dash

| 73

|

Uploading multiple files shows wrong total file count in case of errors

|

Affected version: f033683 (flow-dev branch)

Steps to reproduce

1. Use `max_file_size` for `du.Upload`

2. Upload multiple files (a folder of files) where some of the files (e.g. 2 files) are below `max_file_size` and some (e.g. 2 files) are above `max_file_size`

3. The total file count in the upload text reflects the *original* number of selected files, even though some of the files were dropped because of `max_file_size`. (In the figure: `Uploading (64.00 Mb, File 1/4)` should be `Uploading (64.00 Mb, File 1/2)`)
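The expected behavior can be sketched as follows. This is not dash-uploader's actual code (the function name `upload_status` and the file sizes are hypothetical); it only illustrates computing the total from the files that pass the size filter rather than from the original selection.

```python
def upload_status(file_sizes_mb, max_file_size_mb, current=1):
    """Build the status text from the files that survive the size filter."""
    accepted = [size for size in file_sizes_mb if size <= max_file_size_mb]
    if not accepted:
        return "No files to upload"
    size = accepted[current - 1]
    # The denominator is the number of accepted files, not of selected files.
    return f"Uploading ({size:.2f} Mb, File {current}/{len(accepted)})"


# Four selected files, two of which exceed the limit (sizes are made up).
selected = [64.0, 128.0, 2048.0, 4096.0]
status = upload_status(selected, max_file_size_mb=1024)
print(status)
```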

|

closed

|

2022-02-24T19:23:56Z

|

2022-02-24T19:29:21Z

|

https://github.com/fohrloop/dash-uploader/issues/73

|

[

"bug"

] |

fohrloop

| 2

|

HumanSignal/labelImg

|

deep-learning

| 748

|

No module named 'libs.resources'

|

(ve) user1@comp1:~/path/to/labelImg$ python labelImg.py

Traceback (most recent call last):

File "labelImg.py", line 33, in <module>

from libs.resources import *

ModuleNotFoundError: No module named 'libs.resources'

Ubuntu

- **PyQt version:** 5

|

closed

|

2021-05-16T06:52:54Z

|

2021-05-17T05:00:50Z

|

https://github.com/HumanSignal/labelImg/issues/748

|

[] |

waynemystir

| 1

|

huggingface/diffusers

|

deep-learning

| 10,080

|

Proposal to add sigmas option to FluxPipeline

|

**Is your feature request related to a problem? Please describe.**

Flux's image generation is great, but it seems to have a tendency to remove too much detail.

**Describe the solution you'd like.**

Add the `sigmas` option to `FluxPipeline` to enable adjustment of the degree of noise removal.

**Additional context.**

I propose adding the `sigmas` option to `FluxPipeline`.

The details are as follows.

[Yntec](https://huggingface.co/Yntec) picked up a FLUX modification proposal from [Reddit](https://www.reddit.com/r/comfyui/comments/1g9wfbq/simple_way_to_increase_detail_in_flux_and_remove/), and [r3gm](https://huggingface.co/r3gm), the author of [stablepy](https://github.com/R3gm/stablepy), wrote the code for the logic part of the FLUX pipeline modification, and I made [a demo](https://huggingface.co/spaces/John6666/flux-sigmas-test) by making [a test commit on github](https://github.com/huggingface/diffusers/commit/ad3344e2be033887d854d2731757db8b80dcfb06), and it turned out that it was working as expected.

This time, we only modified the pipeline for T2I for testing. During discussions with r3gm, it was discovered that [the `sigmas` option, which exists in StableDiffusionXLPipeline](https://github.com/huggingface/diffusers/blob/c96bfa5c80eca798d555a79a491043c311d0f608/src/diffusers/pipelines/stable_diffusion_xl/pipeline_stable_diffusion_xl.py#L841), does not exist in `FluxPipeline`, so the actual implementation was switched to porting the `sigmas` option.

Also, in the current `FluxPipeline`, I also found a bug where specifying `timesteps` would probably result in an error because `sigmas` are hard-coded, even though the SDXL pipeline code is reused, so I fixed it while I was at it.

If you want to use Reddit's suggested **0.95**, specify it as follows.

```py
import numpy as np

# `pipe`, `prompt`, and the other arguments are assumed to be defined earlier.
factor = 0.95
sigmas = np.linspace(1.0, 1 / num_inference_steps, num_inference_steps)
sigmas = sigmas * factor

image_sigmas = pipe(
    prompt=prompt,
    guidance_scale=guidance_scale,
    num_inference_steps=num_inference_steps,
    width=width,
    height=height,
    generator=generator,
    output_type="pil",
    sigmas=sigmas
).images[0]
```
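To see what the scaled schedule looks like without loading a pipeline, here is a small pure-Python sketch of the same arithmetic. The helper name `scaled_sigmas` is hypothetical; it is meant to mirror `np.linspace(1.0, 1 / n, n) * factor` from the snippet above and is not part of diffusers.

```python
def scaled_sigmas(num_inference_steps, factor=0.95):
    """Evenly spaced values from 1.0 down to 1/n, each multiplied by `factor`."""
    if num_inference_steps < 1:
        raise ValueError("num_inference_steps must be at least 1")
    if num_inference_steps == 1:
        return [1.0 * factor]
    start, stop = 1.0, 1.0 / num_inference_steps
    step = (stop - start) / (num_inference_steps - 1)
    return [(start + i * step) * factor for i in range(num_inference_steps)]


schedule = scaled_sigmas(4)
print([round(s, 4) for s in schedule])
```

With four steps the unscaled values are 1.0, 0.75, 0.5, and 0.25, so a factor of 0.95 lowers every sigma by 5 percent.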

I will post some samples that were actually generated in the demo.

Prompt: anthropomorphic pig Programmer with laptop, colorfull, funny / Seed: 9119

Prompt: A painting by Picasso of Hatsune Miku in an office. Desk, window, books. / Seed: 9119

Prompt: 80s cinematic colored sitcom screenshot. young husband with wife. festive scene at a copper brewery with a wooden keg of enjoying burrito juice in the center. sitting cute little daughter. Display mugs of dark beer. Closeup. beautiful eyes. accompanied by halloween Shirley ingredients. portrait smile / Seed: 9119

|

closed

|

2024-12-02T11:14:40Z

|

2024-12-02T18:16:49Z

|

https://github.com/huggingface/diffusers/issues/10080

|

[] |

John6666cat

| 3

|

AUTOMATIC1111/stable-diffusion-webui

|

deep-learning

| 15,773

|

[Bug]: CUDA error: an illegal instruction was encountered

|

### Checklist

- [ ] The issue exists after disabling all extensions

- [X] The issue exists on a clean installation of webui

- [ ] The issue is caused by an extension, but I believe it is caused by a bug in the webui

- [ ] The issue exists in the current version of the webui

- [ ] The issue has not been reported before recently

- [ ] The issue has been reported before but has not been fixed yet

### What happened?

Generation stops and displays this error:

CUDA error: an illegal instruction was encountered

The complete log is given below.

### Steps to reproduce the problem

Occurs while generating the image after clicking the generate button.

### What should have happened?

It should have generated the output. It gets up to about 10 percent and then quits.

### What browsers do you use to access the UI ?

_No response_

### Sysinfo

[sysinfo-2024-05-13-05-11.json](https://github.com/AUTOMATIC1111/stable-diffusion-webui/files/15289638/sysinfo-2024-05-13-05-11.json)

### Console logs

```Shell

venv "D:\AI\stable-diffusion-webui\venv\Scripts\Python.exe"

Python 3.10.11 (tags/v3.10.11:7d4cc5a, Apr 5 2023, 00:38:17) [MSC v.1929 64 bit (AMD64)]

Version: v1.9.3

Commit hash: 1c0a0c4c26f78c32095ebc7f8af82f5c04fca8c0

Launching Web UI with arguments: --lowvram --precision full --no-half --skip-torch-cuda-test

no module 'xformers'. Processing without...

no module 'xformers'. Processing without...

No module 'xformers'. Proceeding without it.

Loading weights [6ce0161689] from D:\AI\stable-diffusion-webui\models\Stable-diffusion\v1-5-pruned-emaonly.safetensors

Creating model from config: D:\AI\stable-diffusion-webui\configs\v1-inference.yaml

Running on local URL: http://127.0.0.1:7860

To create a public link, set `share=True` in `launch()`.

D:\AI\stable-diffusion-webui\venv\lib\site-packages\huggingface_hub\file_download.py:1132: FutureWarning: `resume_download` is deprecated and will be removed in version 1.0.0. Downloads always resume when possible. If you want to force a new download, use `force_download=True`.

warnings.warn(

Startup time: 11.7s (prepare environment: 0.3s, import torch: 4.6s, import gradio: 1.3s, setup paths: 1.4s, initialize shared: 0.5s, other imports: 0.7s, load scripts: 1.1s, create ui: 0.9s, gradio launch: 0.7s).

Applying attention optimization: Doggettx... done.

Model loaded in 5.5s (load weights from disk: 1.1s, create model: 0.6s, apply weights to model: 3.3s, calculate empty prompt: 0.4s).

10%|████████▎ | 2/20 [00:06<00:58, 3.22s/it]Exception in thread MemMon:█▋ | 2/20 [00:02<00:23, 1.28s/it]

Traceback (most recent call last):

File "C:\Program Files\WindowsApps\PythonSoftwareFoundation.Python.3.10_3.10.3056.0_x64__qbz5n2kfra8p0\lib\threading.py", line 1016, in _bootstrap_inner

self.run()

File "D:\AI\stable-diffusion-webui\modules\memmon.py", line 53, in run

free, total = self.cuda_mem_get_info()

File "D:\AI\stable-diffusion-webui\modules\memmon.py", line 34, in cuda_mem_get_info

return torch.cuda.mem_get_info(index)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\cuda\memory.py", line 663, in mem_get_info

return torch.cuda.cudart().cudaMemGetInfo(device)

RuntimeError: CUDA error: an illegal instruction was encountered

CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

Compile with `TORCH_USE_CUDA_DSA` to enable device-side assertions.

*** Error completing request

*** Arguments: ('task(rxe7j3hmflc31l0)', <gradio.routes.Request object at 0x000002B1642C5A20>, 'hello', '', [], 1, 1, 7, 512, 512, False, 0.7, 2, 'Latent', 0, 0, 0, 'Use same checkpoint', 'Use same sampler', 'Use same scheduler', '', '', [], 0, 20, 'DPM++ 2M', 'Automatic', False, '', 0.8, -1, False, -1, 0, 0, 0, False, False, 'positive', 'comma', 0, False, False, 'start', '', 1, '', [], 0, '', [], 0, '', [], True, False, False, False, False, False, False, 0, False) {}

Traceback (most recent call last):

File "D:\AI\stable-diffusion-webui\modules\call_queue.py", line 57, in f

res = list(func(*args, **kwargs))

File "D:\AI\stable-diffusion-webui\modules\call_queue.py", line 36, in f

res = func(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\modules\txt2img.py", line 109, in txt2img

processed = processing.process_images(p)

File "D:\AI\stable-diffusion-webui\modules\processing.py", line 845, in process_images

res = process_images_inner(p)

File "D:\AI\stable-diffusion-webui\modules\processing.py", line 981, in process_images_inner

samples_ddim = p.sample(conditioning=p.c, unconditional_conditioning=p.uc, seeds=p.seeds, subseeds=p.subseeds, subseed_strength=p.subseed_strength, prompts=p.prompts)

File "D:\AI\stable-diffusion-webui\modules\processing.py", line 1328, in sample

samples = self.sampler.sample(self, x, conditioning, unconditional_conditioning, image_conditioning=self.txt2img_image_conditioning(x))

File "D:\AI\stable-diffusion-webui\modules\sd_samplers_kdiffusion.py", line 218, in sample

samples = self.launch_sampling(steps, lambda: self.func(self.model_wrap_cfg, x, extra_args=self.sampler_extra_args, disable=False, callback=self.callback_state, **extra_params_kwargs))

File "D:\AI\stable-diffusion-webui\modules\sd_samplers_common.py", line 272, in launch_sampling

return func()

File "D:\AI\stable-diffusion-webui\modules\sd_samplers_kdiffusion.py", line 218, in <lambda>

samples = self.launch_sampling(steps, lambda: self.func(self.model_wrap_cfg, x, extra_args=self.sampler_extra_args, disable=False, callback=self.callback_state, **extra_params_kwargs))

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\utils\_contextlib.py", line 115, in decorate_context

return func(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\repositories\k-diffusion\k_diffusion\sampling.py", line 594, in sample_dpmpp_2m

denoised = model(x, sigmas[i] * s_in, **extra_args)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1518, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1527, in _call_impl

return forward_call(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\modules\sd_samplers_cfg_denoiser.py", line 237, in forward

x_out = self.inner_model(x_in, sigma_in, cond=make_condition_dict(cond_in, image_cond_in))

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1518, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1527, in _call_impl

return forward_call(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\repositories\k-diffusion\k_diffusion\external.py", line 112, in forward

eps = self.get_eps(input * c_in, self.sigma_to_t(sigma), **kwargs)

File "D:\AI\stable-diffusion-webui\repositories\k-diffusion\k_diffusion\external.py", line 138, in get_eps

return self.inner_model.apply_model(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\modules\sd_hijack_utils.py", line 18, in <lambda>

setattr(resolved_obj, func_path[-1], lambda *args, **kwargs: self(*args, **kwargs))

File "D:\AI\stable-diffusion-webui\modules\sd_hijack_utils.py", line 32, in __call__

return self.__orig_func(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\repositories\stable-diffusion-stability-ai\ldm\models\diffusion\ddpm.py", line 858, in apply_model

x_recon = self.model(x_noisy, t, **cond)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1518, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1527, in _call_impl

return forward_call(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\repositories\stable-diffusion-stability-ai\ldm\models\diffusion\ddpm.py", line 1335, in forward

out = self.diffusion_model(x, t, context=cc)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1518, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1527, in _call_impl

return forward_call(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\modules\sd_unet.py", line 91, in UNetModel_forward

return original_forward(self, x, timesteps, context, *args, **kwargs)

File "D:\AI\stable-diffusion-webui\repositories\stable-diffusion-stability-ai\ldm\modules\diffusionmodules\openaimodel.py", line 797, in forward

h = module(h, emb, context)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1518, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1568, in _call_impl

result = forward_call(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\repositories\stable-diffusion-stability-ai\ldm\modules\diffusionmodules\openaimodel.py", line 84, in forward

x = layer(x, context)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1518, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1527, in _call_impl

return forward_call(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\repositories\stable-diffusion-stability-ai\ldm\modules\attention.py", line 334, in forward

x = block(x, context=context[i])

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1518, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1527, in _call_impl

return forward_call(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\repositories\stable-diffusion-stability-ai\ldm\modules\attention.py", line 269, in forward

return checkpoint(self._forward, (x, context), self.parameters(), self.checkpoint)

File "D:\AI\stable-diffusion-webui\repositories\stable-diffusion-stability-ai\ldm\modules\diffusionmodules\util.py", line 121, in checkpoint

return CheckpointFunction.apply(func, len(inputs), *args)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\autograd\function.py", line 539, in apply

return super().apply(*args, **kwargs) # type: ignore[misc]

File "D:\AI\stable-diffusion-webui\repositories\stable-diffusion-stability-ai\ldm\modules\diffusionmodules\util.py", line 136, in forward

output_tensors = ctx.run_function(*ctx.input_tensors)

File "D:\AI\stable-diffusion-webui\repositories\stable-diffusion-stability-ai\ldm\modules\attention.py", line 273, in _forward

x = self.attn2(self.norm2(x), context=context) + x

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1518, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py", line 1527, in _call_impl

return forward_call(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\modules\sd_hijack_optimizations.py", line 240, in split_cross_attention_forward

q, k, v = (rearrange(t, 'b n (h d) -> (b h) n d', h=h) for t in (q_in, k_in, v_in))

File "D:\AI\stable-diffusion-webui\modules\sd_hijack_optimizations.py", line 240, in <genexpr>

q, k, v = (rearrange(t, 'b n (h d) -> (b h) n d', h=h) for t in (q_in, k_in, v_in))

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\einops\einops.py", line 487, in rearrange

return reduce(tensor, pattern, reduction='rearrange', **axes_lengths)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\einops\einops.py", line 410, in reduce

return _apply_recipe(recipe, tensor, reduction_type=reduction)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\einops\einops.py", line 239, in _apply_recipe

return backend.reshape(tensor, final_shapes)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\einops\_backends.py", line 84, in reshape

return x.reshape(shape)

RuntimeError: CUDA error: an illegal instruction was encountered

CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

Compile with `TORCH_USE_CUDA_DSA` to enable device-side assertions.

---

Traceback (most recent call last):

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\gradio\routes.py", line 488, in run_predict

output = await app.get_blocks().process_api(

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\gradio\blocks.py", line 1431, in process_api

result = await self.call_function(

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\gradio\blocks.py", line 1103, in call_function

prediction = await anyio.to_thread.run_sync(

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\anyio\to_thread.py", line 33, in run_sync

return await get_asynclib().run_sync_in_worker_thread(

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\anyio\_backends\_asyncio.py", line 877, in run_sync_in_worker_thread

return await future

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\anyio\_backends\_asyncio.py", line 807, in run

result = context.run(func, *args)

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\gradio\utils.py", line 707, in wrapper

response = f(*args, **kwargs)

File "D:\AI\stable-diffusion-webui\modules\call_queue.py", line 77, in f

devices.torch_gc()

File "D:\AI\stable-diffusion-webui\modules\devices.py", line 81, in torch_gc

torch.cuda.empty_cache()

File "D:\AI\stable-diffusion-webui\venv\lib\site-packages\torch\cuda\memory.py", line 159, in empty_cache

torch._C._cuda_emptyCache()

RuntimeError: CUDA error: an illegal instruction was encountered

CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

Compile with `TORCH_USE_CUDA_DSA` to enable device-side assertions.

```

### Additional information

I updated to the latest NVIDIA driver.

I tried installing Fooocus, but only because 1111 stopped working.

I have oobabooga installed on my system. Maybe the CUDA version there is different?

Could it possibly clash with 1111?

|

open

|

2024-05-13T05:14:24Z

|

2024-10-18T10:23:20Z

|

https://github.com/AUTOMATIC1111/stable-diffusion-webui/issues/15773

|

[

"bug-report"

] |

ClaudeRobbinCR

| 1

|

tflearn/tflearn

|

data-science

| 578

|

possible typo line 584

|

currently:

self.train_var = to_list(self.train_vars)

should it be this?

self.train_vars = to_list(self.train_vars)

|

open

|

2017-01-29T07:18:39Z

|

2017-01-29T07:18:39Z

|

https://github.com/tflearn/tflearn/issues/578

|

[] |

ecohen1

| 0

|

pydantic/FastUI

|

pydantic

| 165

|

Proxy Support

|

I am running my FastAPI app behind a proxy. The issue I am running into is as follows.

Assume my path is `https://example.com/proxy/8989/`.

1. The root path is invoked.

2. `HTMLResponse(prebuilt_html(title='FastUI Demo'))` is sent.

3. The following content is run:

```

<!doctype html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

<title>FastUI Demo</title>

<script type="module" crossorigin src="https://cdn.jsdelivr.net/npm/@pydantic/fastui-prebuilt@0.0.15/dist/assets/index.js"></script>

<link rel="stylesheet" crossorigin href="https://cdn.jsdelivr.net/npm/@pydantic/fastui-prebuilt@0.0.15/dist/assets/index.css">

</head>

<body>

<div id="root"></div>

</body>

</html>

```

4. The index.js is loaded.

5. The loaded React JS then makes a fetch call to `https://example.com/api/proxy/8989/` instead of `https://example.com/proxy/8989/api/`.

Is this expected? Any plans on fixing it?
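The two URLs differ in where the `api` segment is attached. The sketch below does not show FastUI's actual code; it only illustrates, under the assumption that the request URL is built by prefixing `/api` to the page path, how the observed URL could arise and how a relative reference would keep the proxy prefix.

```python
from urllib.parse import urljoin, urlsplit

page_url = "https://example.com/proxy/8989/"

# Assumption: "/api" is prefixed to the page path, so the proxy prefix
# ends up after the API segment.
observed = urljoin(page_url, "/api" + urlsplit(page_url).path)

# A relative reference is resolved against the proxied page, so the
# proxy prefix is kept in front of the API segment.
expected = urljoin(page_url, "api/")

print(observed)
print(expected)
```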

|

closed

|

2024-01-25T17:36:33Z

|

2024-02-09T07:04:48Z

|

https://github.com/pydantic/FastUI/issues/165

|

[] |

stikkireddy

| 1

|

facebookresearch/fairseq

|

pytorch

| 5,244

|

facebook/mbart-large-50 VS facebook/mbart-large-50-many-to-many-mmt

|

Hi!!

I am conducting some NMT experiments on low-resource languages. For this purpose I am fine-tuning mBART in a number of directions: English to Sinhala, English to Tamil, and Sinhala to Tamil. I am using the Hugging Face platform for this fine-tuning. While selecting the model I have two questions, and I would highly appreciate it if someone could help me.

1. What is the difference between mbart-large-50 and mbart-large-50-many-to-many? I have been using mbart-large-50 for fine-tuning since it supports the above-mentioned languages. Am I using the correct model for this purpose, or should I go with many-to-many?

2. I want to confirm the model size as well: is it 610M?

Thank you in advance!

|

open

|

2023-07-09T11:23:31Z

|

2023-07-09T11:23:31Z

|

https://github.com/facebookresearch/fairseq/issues/5244

|

[

"question",

"needs triage"

] |

vmenan

| 0

|

pytest-dev/pytest-django

|

pytest

| 964

|

Drop support for unsupported Python and Django versions

|

closed

|

2021-11-22T11:05:01Z

|

2021-12-01T19:45:55Z

|

https://github.com/pytest-dev/pytest-django/issues/964

|

[] |

pauloxnet

| 2

|

|

QingdaoU/OnlineJudge

|

django

| 51

|

Bulk-creating users

|

Is there a way to create users in bulk?

|

closed

|

2016-06-24T15:18:56Z

|

2016-06-28T07:02:55Z

|

https://github.com/QingdaoU/OnlineJudge/issues/51

|

[] |

kevin50406418

| 1

|

littlecodersh/ItChat

|

api

| 101

|

How can I get the actual binary image data of a group member's avatar?

|

For example, I get:

``` python

"HeadImgUrl": "/cgi-bin/mmwebwx-bin/webwxgetheadimg?seq=642242818&sername=@@21ec4b514edf3e7cb867e0512fb85f3a5e6deb657f4e8573d656bcd4558e3594&skey=",

```

But how do I get the avatar itself? What domain should be put in front of this path?

|

closed

|

2016-10-16T20:31:54Z

|

2017-02-02T14:45:39Z

|

https://github.com/littlecodersh/ItChat/issues/101

|

[

"question"

] |

9cat

| 4

|

graphql-python/graphene-mongo

|

graphql

| 220

|

Releases v0.2.16 and v0.3.0 missing on PyPI

|

Thanks for the work on this project, happy user here!

I noticed that there are releases on https://github.com/graphql-python/graphene-mongo/releases that are not on https://pypi.org/project/graphene-mongo/#history. Is this an oversight or is there something preventing you from releasing on PyPI?

In the meantime I am installing v0.3.0 directly from github, but it would be nice if it were on PyPI instead.

|

open

|

2023-05-02T14:55:36Z

|

2023-07-31T07:20:21Z

|

https://github.com/graphql-python/graphene-mongo/issues/220

|

[] |

mathiasose

| 4

|

Lightning-AI/pytorch-lightning

|

data-science

| 20,391

|

Error if SLURM_NTASKS != SLURM_NTASKS_PER_NODE

|

### Bug description

Would it be possible for Lightning to raise an error if `SLURM_NTASKS != SLURM_NTASKS_PER_NODE` in case both are set?

With a single node the current behavior is:

* `SLURM_NTASKS == SLURM_NTASKS_PER_NODE`: Everything is fine

* `SLURM_NTASKS > SLURM_NTASKS_PER_NODE`: Slurm doesn't let you schedule the job and raises an error

* `SLURM_NTASKS < SLURM_NTASKS_PER_NODE`: Lightning thinks there are `SLURM_NTASKS_PER_NODE` devices but the job only runs on `SLURM_NTASKS` devices.

Example scripts:

```

#!/bin/bash

#SBATCH --ntasks=1

#SBATCH --nodes=1

#SBATCH --gres=gpu:2

#SBATCH --ntasks-per-node=2

#SBATCH --cpus-per-task=3

source .venv/bin/activate

srun python train_lightning.py

```

And `train_lightning.py`:

```
from pytorch_lightning.demos.boring_classes import BoringModel, BoringDataModule
from pytorch_lightning import Trainer
import os

def main():
    print(
        f"LOCAL_RANK={os.environ.get('LOCAL_RANK', 0)}, SLURM_NTASKS={os.environ.get('SLURM_NTASKS')}, SLURM_NTASKS_PER_NODE={os.environ.get('SLURM_NTASKS_PER_NODE')}"
    )
    model = BoringModel()
    datamodule = BoringDataModule()
    trainer = Trainer(max_epochs=100)
    print(f"trainer.num_devices: {trainer.num_devices}")
    trainer.fit(model, datamodule)

if __name__ == "__main__":
    main()
```

This generates the following output:

```

GPU available: True (cuda), used: True

TPU available: False, using: 0 TPU cores

HPU available: False, using: 0 HPUs

Initializing distributed: GLOBAL_RANK: 0, MEMBER: 1/1

----------------------------------------------------------------------------------------------------

distributed_backend=nccl

All distributed processes registered. Starting with 1 processes

----------------------------------------------------------------------------------------------------

LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [1,2]

| Name | Type | Params | Mode

-----------------------------------------

0 | layer | Linear | 66 | train

-----------------------------------------

66 Trainable params

0 Non-trainable params

66 Total params

0.000 Total estimated model params size (MB)

1 Modules in train mode

0 Modules in eval mode

SLURM auto-requeueing enabled. Setting signal handlers.

LOCAL_RANK=0, SLURM_NTASKS=1, SLURM_NTASKS_PER_NODE=2

trainer.num_devices: 2

```

`MEMBER: 1/1` indicates that only 1 GPU is used but `trainer.num_devices` returns 2. `nvidia-smi` also indicates that only a single device is used.

Not sure if there is a valid use case for `SLURM_NTASKS < SLURM_NTASKS_PER_NODE`. But if there is not it would be awesome if Lightning could raise an error in this scenario.

The same error also happens if `--ntasks-per-node` is not set. In this case Lightning assumes 2 devices (I guess based on `CUDA_VISIBLE_DEVICES`) but in reality only a single one is used.
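The requested check could look roughly like the sketch below. It is not Lightning's implementation: the function name `check_slurm_task_counts` is hypothetical, and multiplying by `SLURM_NNODES` to cover multi-node jobs is an assumption that goes beyond the single-node case described above.

```python
import os


def check_slurm_task_counts(env=None):
    """Raise if SLURM_NTASKS disagrees with SLURM_NTASKS_PER_NODE times the node count."""
    env = os.environ if env is None else env
    ntasks = env.get("SLURM_NTASKS")
    ntasks_per_node = env.get("SLURM_NTASKS_PER_NODE")
    if ntasks is None or ntasks_per_node is None:
        return  # Nothing to compare unless both variables are set.
    # Assumption: default to a single node when SLURM_NNODES is not set.
    num_nodes = int(env.get("SLURM_NNODES", "1"))
    expected = int(ntasks_per_node) * num_nodes
    if int(ntasks) != expected:
        raise RuntimeError(
            f"SLURM_NTASKS={ntasks} does not match "
            f"SLURM_NTASKS_PER_NODE={ntasks_per_node} on {num_nodes} node(s); "
            f"expected {expected} tasks."
        )


# The configuration from the example script: --ntasks=1 with --ntasks-per-node=2.
try:
    check_slurm_task_counts({"SLURM_NTASKS": "1", "SLURM_NTASKS_PER_NODE": "2"})
    mismatch_detected = False
except RuntimeError as exc:
    mismatch_detected = True
    print(exc)
```

Passing the environment as a plain dictionary keeps the check testable outside of a Slurm job.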

### What version are you seeing the problem on?

v2.4

### How to reproduce the bug

_No response_

### Error messages and logs

```

# Error messages and logs here please

```

### Environment

<details>

<summary>Current environment</summary>

* CUDA:

- GPU:

- NVIDIA GeForce RTX 4090

- NVIDIA GeForce RTX 4090

- NVIDIA GeForce RTX 4090

- NVIDIA GeForce RTX 4090

- available: True

- version: 12.4

* Lightning:

- lightning-utilities: 0.11.8

- pytorch-lightning: 2.4.0

- torch: 2.5.1

- torchmetrics: 1.4.3

- torchvision: 0.20.1

* Packages:

- aenum: 3.1.15

- aiohappyeyeballs: 2.4.3

- aiohttp: 3.10.10

- aiosignal: 1.3.1

- annotated-types: 0.7.0

- antlr4-python3-runtime: 4.9.3

- attrs: 24.2.0

- autocommand: 2.2.2

- backports.tarfile: 1.2.0

- certifi: 2024.8.30

- charset-normalizer: 3.4.0

- eval-type-backport: 0.2.0

- filelock: 3.16.1

- frozenlist: 1.5.0

- fsspec: 2024.10.0

- hydra-core: 1.3.2

- idna: 3.10

- importlib-metadata: 8.0.0

- importlib-resources: 6.4.0

- inflect: 7.3.1

- jaraco.collections: 5.1.0

- jaraco.context: 5.3.0

- jaraco.functools: 4.0.1

- jaraco.text: 3.12.1

- jinja2: 3.1.4

- lightly: 1.5.13

- lightning-utilities: 0.11.8

- markupsafe: 3.0.2

- more-itertools: 10.3.0

- mpmath: 1.3.0

- multidict: 6.1.0

- networkx: 3.4.2

- numpy: 2.1.3

- nvidia-cublas-cu12: 12.4.5.8

- nvidia-cuda-cupti-cu12: 12.4.127

- nvidia-cuda-nvrtc-cu12: 12.4.127

- nvidia-cuda-runtime-cu12: 12.4.127

- nvidia-cudnn-cu12: 9.1.0.70

- nvidia-cufft-cu12: 11.2.1.3

- nvidia-curand-cu12: 10.3.5.147

- nvidia-cusolver-cu12: 11.6.1.9

- nvidia-cusparse-cu12: 12.3.1.170

- nvidia-nccl-cu12: 2.21.5

- nvidia-nvjitlink-cu12: 12.4.127

- nvidia-nvtx-cu12: 12.4.127

- omegaconf: 2.3.0

- packaging: 24.1

- pillow: 11.0.0

- platformdirs: 4.2.2

- propcache: 0.2.0

- psutil: 6.1.0

- pyarrow: 18.0.0

- pydantic: 2.9.2

- pydantic-core: 2.23.4

- python-dateutil: 2.9.0.post0

- pytorch-lightning: 2.4.0

- pytz: 2024.2

- pyyaml: 6.0.2

- requests: 2.32.3

- setuptools: 75.3.0

- six: 1.16.0

- sympy: 1.13.1

- tomli: 2.0.1

- torch: 2.5.1

- torchmetrics: 1.4.3

- torchvision: 0.20.1

- tqdm: 4.66.6

- triton: 3.1.0

- typeguard: 4.3.0

- typing-extensions: 4.12.2

- urllib3: 2.2.3

- wheel: 0.43.0

- yarl: 1.17.1

- zipp: 3.19.2

* System:
    - OS: Linux
    - architecture:
        - 64bit
        - ELF
    - processor: x86_64
    - python: 3.12.3
    - release: 6.8.0-38-generic
    - version: #38-Ubuntu SMP PREEMPT_DYNAMIC Fri Jun 7 15:25:01 UTC 2024

</details>

### More info

_No response_

|

open

|

2024-11-04T16:19:56Z

|

2024-11-19T00:18:48Z

|

https://github.com/Lightning-AI/pytorch-lightning/issues/20391

|

[

"working as intended",

"ver: 2.4.x"

] |

guarin

| 1

|

taverntesting/tavern

|

pytest

| 24

|

Getting requests.exceptions.InvalidHeader:

|

In my `test.tavern.yaml` file I have:

```

- name: Make sure signature is returned
  request:
    url: "{signature_url:s}"
    method: PUT
    headers:
      content-type: application/json
      content: {"ppddCode": "11","LIN": "123456789","correlationID":"{correlationId:s}","bodyTypeCode":"utv"}
      token: "{sessionToken:s}"
  response:
    status_code: 200
    save:
      body:
        signature: signature

```

I get the following error response:

```

E requests.exceptions.InvalidHeader: Value for header {content: {'ppddCode': '11', 'LIN': '123456789', 'correlationID': '99019c36-4f49-4be8-815c-9ff5cbcc14ff', 'bodyTypeCode': 'utv'}} must be of type str or bytes, not <class 'dict'>

venv/lib/python3.6/site-packages/requests/utils.py:872: InvalidHeader

```

How can I send the 'content' value in my header when it is a dict? Or is there any other solution?
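The error above comes from `requests`, which only accepts `str` or `bytes` as header values. One possible workaround, sketched here in plain Python, is to JSON-encode any non-string header value before sending it. The helper name `stringify_header_values` is hypothetical and is not part of Tavern or `requests`.

```python
import json


def stringify_header_values(headers):
    """Return a copy of `headers` with non-str/bytes values JSON-encoded.

    Hypothetical helper for illustration: str and bytes values are kept
    unchanged, anything else (such as a dict) is serialized with json.dumps.
    """
    return {
        name: value if isinstance(value, (str, bytes)) else json.dumps(value)
        for name, value in headers.items()
    }


headers = {
    "content-type": "application/json",
    "content": {"ppddCode": "11", "LIN": "123456789", "bodyTypeCode": "utv"},
}
safe_headers = stringify_header_values(headers)
```

In the YAML file, the equivalent would be to quote the `content` value so that it is parsed as a string rather than a mapping. If the payload is really meant to be the request body, placing it under the request's `json` key instead of under `headers` may be the simpler fix.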

|

closed

|

2018-02-06T21:58:48Z

|

2018-02-07T17:22:38Z

|

https://github.com/taverntesting/tavern/issues/24

|

[] |

sridharaiyer

| 2

|

ansible/awx

|

django

| 15,775

|

How Do I Fail an Ansible Playbook Run or AWX Job when Hosts are Skipped?

|

### Please confirm the following

- [x] I agree to follow this project's [code of conduct](https://docs.ansible.com/ansible/latest/community/code_of_conduct.html).

- [x] I have checked the [current issues](https://github.com/ansible/awx/issues) for duplicates.

- [x] I understand that AWX is open source software provided for free and that I might not receive a timely response.

### Feature type

New Feature

### Feature Summary

Hi

How Do I Fail an Ansible Playbook Run or AWX Job when Hosts are Skipped?

Currently my AWX job is reported as "Successful" even though nothing ran.

Thanks

### Select the relevant components

- [x] UI

- [ ] API

- [x] Docs

- [ ] Collection

- [ ] CLI

- [ ] Other

### Steps to reproduce

Run a job against an offline host. The job finishes with a successful status and the output "skipping: no hosts matched".

### Current results

Job is currently "Successful" even if hosts are skipped:

skipping: no hosts matched

For Job:

Status: Successful

### Suggested feature result

The job should fail when hosts are not known, i.e. when the output contains:

"skipping: no hosts matched"
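As an illustration of the requested behavior only, a wrapper script could inspect the captured playbook output and turn the "no hosts matched" message into a failing exit code. This is a sketch under assumptions, not an AWX or Ansible feature: the function name `exit_code_for_output` is hypothetical, and it assumes the output text has already been captured as a string.

```python
NO_HOSTS_MARKER = "skipping: no hosts matched"


def exit_code_for_output(output, original_rc=0):
    """Return a non-zero exit code if the run failed or matched no hosts.

    Hypothetical helper: `output` is the captured playbook output and
    `original_rc` is the exit code the run itself reported.
    """
    # A run that already failed keeps its own exit code.
    if original_rc != 0:
        return original_rc
    # Treat "no hosts matched" as a failure even though the run reported success.
    return 1 if NO_HOSTS_MARKER in output else 0
```

A wrapper could pass this value to `sys.exit()` so that the surrounding job is marked as failed whenever no hosts were matched.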

### Additional information

_No response_

|

closed

|

2025-01-27T08:36:31Z

|

2025-02-05T18:11:39Z

|