| repo_name (string, lengths 9-75) | topic (string, 30 classes) | issue_number (int64, 1-203k) | title (string, lengths 1-976) | body (string, lengths 0-254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, lengths 38-105) | labels (list, lengths 0-9) | user_login (string, lengths 1-39) | comments_count (int64, 0-452) |
|---|---|---|---|---|---|---|---|---|---|---|---|

FactoryBoy/factory_boy

|

django

| 136

|

Default to null for nullable OneToOneField in Django

|

I can't figure out from the docs how to achieve this.

I have a model with a nullable one-to-one field. If I don't declare the relation on the factory, I can't create the related model instance using the `__` syntax. But if I do declare it, the related model appears to be created no matter what.

How can I have the factory create the related model only when I pass arguments for it, and leave the field null otherwise?

|

closed

|

2014-02-28T02:46:51Z

|

2014-09-03T21:44:34Z

|

https://github.com/FactoryBoy/factory_boy/issues/136

|

[] |

funkybob

| 1

|

pandas-dev/pandas

|

pandas

| 60,305

|

API: how to check for "logical" equality of dtypes?

|

Assume you have a series, which has a certain dtype. In the case that this dtype is an instance of potentially multiple variants of a logical dtype (for example, string backed by python or backed by pyarrow), how do you check for the "logical" equality of such dtypes?

To check the logical dtype of one series, you can compare the dtype with the generic string alias (which returns True for any variant of it), or check the dtype with `isinstance` or one of the `is_..._dtype` functions (although we have deprecated some of those). Using the string dtype as the example:

```python

ser.dtype == "string"

# or

isinstance(ser.dtype, pd.StringDtype)

pd.api.types.is_string_dtype(ser.dtype)

```

When you want to check whether two Series have the same dtype, `==` checks for exact equality (in the string dtype example, the comparison below can evaluate to False even if both are a StringDtype but with different storage):

```python

ser1.dtype == ser2.dtype

```

**But how do you check this logical equality for two dtypes?** In the example, how do you know that both dtypes represent the same logical dtype (i.e. both are a StringDtype instance), without necessarily checking the exact type (i.e. the user doesn't necessarily know they are string dtypes, and just wants to check whether they are logically the same)?

```python

# this might work?

type(ser1.dtype) == type(ser2.dtype)

```

Do we want some other API here that is a bit more user friendly? (just brainstorming, something like `dtype1.is_same_type(dtype2)`, or a function, ..)
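
To make the distinction concrete, here is a minimal sketch that uses toy stand-in classes rather than pandas itself. `ToyStringDtype`, `ToyInt64Dtype` and `is_same_logical_type` are hypothetical names invented for illustration; the helper simply wraps the `type(...) == type(...)` idea from above and is not an existing or proposed pandas API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToyStringDtype:
    """Toy stand-in for a string dtype with a storage backend (not pandas.StringDtype)."""
    storage: str


@dataclass(frozen=True)
class ToyInt64Dtype:
    """Toy stand-in for an unrelated logical dtype."""


def is_same_logical_type(left, right) -> bool:
    # Hypothetical helper: compare only the dtype class, ignoring parameters
    # such as the storage backend.
    return type(left) is type(right)


python_backed = ToyStringDtype("python")
arrow_backed = ToyStringDtype("pyarrow")

# Exact equality also compares the storage, so the two variants differ.
print(python_backed == arrow_backed)
# Logical equality only looks at the class.
print(is_same_logical_type(python_backed, arrow_backed))
print(is_same_logical_type(python_backed, ToyInt64Dtype()))
```

A class-based check like this would presumably stop working if one logical dtype were ever implemented by several classes, which may be one reason to prefer a dedicated method or function.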

---

This is important in the discussion around logical dtypes (https://github.com/pandas-dev/pandas/pull/58455), but it is already an issue for the new string dtype in pandas 3.0 as well.

cc @WillAyd @Dr-Irv @jbrockmendel (tagging some people that were most active in the logical dtypes discussion)

|

open

|

2024-11-13T18:00:22Z

|

2024-12-25T16:19:32Z

|

https://github.com/pandas-dev/pandas/issues/60305

|

[

"API Design"

] |

jorisvandenbossche

| 11

|

pytorch/pytorch

|

machine-learning

| 148,939

|

Whether the transposed tensor is contiguous affects the results of the subsequent Linear layer.

|

### 🐛 Describe the bug

I found that whether the transposed tensor is contiguous affects the results of the subsequent Linear layer. Is this a bug or not?

```
import torch
from torch import nn

x = torch.randn(3, 4).transpose(0, 1)  # non-contiguous tensor (after transpose)
linear = nn.Linear(3, 2)
y1 = linear(x)  # non-contiguous input
y2 = linear(x.contiguous())  # contiguous input
print(torch.allclose(y1, y2))  # True

x = torch.randn(2, 226, 1024).transpose(0, 1)  # non-contiguous tensor (after transpose)
linear = nn.Linear(1024, 64)
y1 = linear(x)  # non-contiguous input
y2 = linear(x.contiguous())  # contiguous input
print(torch.allclose(y1, y2))  # False ???
```
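
One possible explanation, which is an assumption and not something confirmed in this report, is that the two memory layouts lead to a different accumulation order inside the matrix multiplication. Floating-point addition is not associative, so a different order can change the last bits of a result, and `torch.allclose` uses fairly tight default tolerances (`rtol=1e-05`, `atol=1e-08`). The pure-Python sketch below only illustrates the non-associativity; it does not reproduce the PyTorch behaviour.

```python
import math

values = [0.1, 0.2, 0.3]

# The same three numbers, summed with two different groupings.
left_to_right = (values[0] + values[1]) + values[2]
right_to_left = values[0] + (values[1] + values[2])

# Bitwise equality fails even though the inputs are identical.
print(left_to_right == right_to_left)

# A tolerance-based comparison treats the two results as equal.
print(math.isclose(left_to_right, right_to_left, rel_tol=1e-9))
```

If this is the cause, comparing with a looser tolerance or inspecting `(y1 - y2).abs().max()` would show whether the difference is at the level of rounding noise.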

### Versions

PyTorch version: 2.5.0+cu124

Is debug build: False

CUDA used to build PyTorch: 12.4

ROCM used to build PyTorch: N/A

OS: Ubuntu 22.04.4 LTS (x86_64)

GCC version: (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0

Clang version: Could not collect

CMake version: version 3.30.5

Libc version: glibc-2.35

Python version: 3.11.10 | packaged by conda-forge | (main, Oct 16 2024, 01:27:36) [GCC 13.3.0] (64-bit runtime)

Python platform: Linux-5.15.0-91-generic-x86_64-with-glibc2.35

Is CUDA available: True

CUDA runtime version: 12.4.131

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration:

GPU 0: NVIDIA L20

GPU 1: NVIDIA L20

Nvidia driver version: 570.86.15

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 52 bits physical, 57 bits virtual

Byte Order: Little Endian

CPU(s): 44

On-line CPU(s) list: 0-43

Vendor ID: GenuineIntel

Model name: Intel(R) Xeon(R) Platinum 8457C

CPU family: 6

Model: 143

Thread(s) per core: 2

Core(s) per socket: 22

Socket(s): 1

Stepping: 8

BogoMIPS: 5200.00

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology nonstop_tsc cpuid tsc_known_freq pni pclmulqdq monitor ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch cpuid_fault invpcid_single ssbd ibrs ibpb stibp ibrs_enhanced fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid avx512f avx512dq rdseed adx smap avx512ifma clflushopt clwb avx512cd sha_ni avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves avx_vnni avx512_bf16 wbnoinvd arat avx512vbmi umip pku ospke waitpkg avx512_vbmi2 gfni vaes vpclmulqdq avx512_vnni avx512_bitalg avx512_vpopcntdq la57 rdpid cldemote movdiri movdir64b fsrm md_clear serialize tsxldtrk arch_lbr amx_bf16 avx512_fp16 amx_tile amx_int8 arch_capabilities

Hypervisor vendor: KVM

Virtualization type: full

L1d cache: 1 MiB (22 instances)

L1i cache: 704 KiB (22 instances)

L2 cache: 44 MiB (22 instances)

L3 cache: 97.5 MiB (1 instance)

NUMA node(s): 1

NUMA node0 CPU(s): 0-43

Vulnerability Gather data sampling: Not affected

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Mmio stale data: Unknown: No mitigations

Vulnerability Retbleed: Not affected

Vulnerability Spec rstack overflow: Not affected

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl and seccomp

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Enhanced IBRS, IBPB conditional, RSB filling, PBRSB-eIBRS SW sequence

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Mitigation; TSX disabled

Versions of relevant libraries:

[pip3] numpy==2.1.2

[pip3] nvidia-cublas-cu12==12.4.5.8

[pip3] nvidia-cuda-cupti-cu12==12.4.127

[pip3] nvidia-cuda-nvrtc-cu12==12.4.127

[pip3] nvidia-cuda-runtime-cu12==12.4.127

[pip3] nvidia-cudnn-cu12==9.1.0.70

[pip3] nvidia-cufft-cu12==11.2.1.3

[pip3] nvidia-curand-cu12==10.3.5.147

[pip3] nvidia-cusolver-cu12==11.6.1.9

[pip3] nvidia-cusparse-cu12==12.3.1.170

[pip3] nvidia-nccl-cu12==2.21.5

[pip3] nvidia-nvjitlink-cu12==12.4.127

[pip3] nvidia-nvtx-cu12==12.4.127

[pip3] onnx==1.17.0

[pip3] optree==0.13.0

[pip3] pytorch-fid==0.3.0

[pip3] torch==2.5.0+cu124

[pip3] torch-fidelity==0.3.0

[pip3] torchao==0.9.0

[pip3] torchaudio==2.5.0+cu124

[pip3] torchelastic==0.2.2

[pip3] torchmetrics==1.6.1

[pip3] torchvision==0.20.0+cu124

[pip3] triton==3.1.0

[conda] numpy 2.1.2 py311h71ddf71_0 conda-forge

[conda] nvidia-cublas-cu12 12.4.5.8 pypi_0 pypi

[conda] nvidia-cuda-cupti-cu12 12.4.127 pypi_0 pypi

[conda] nvidia-cuda-nvrtc-cu12 12.4.127 pypi_0 pypi

[conda] nvidia-cuda-runtime-cu12 12.4.127 pypi_0 pypi

[conda] nvidia-cudnn-cu12 9.1.0.70 pypi_0 pypi

[conda] nvidia-cufft-cu12 11.2.1.3 pypi_0 pypi

[conda] nvidia-curand-cu12 10.3.5.147 pypi_0 pypi

[conda] nvidia-cusolver-cu12 11.6.1.9 pypi_0 pypi

[conda] nvidia-cusparse-cu12 12.3.1.170 pypi_0 pypi

[conda] nvidia-nccl-cu12 2.21.5 pypi_0 pypi

[conda] nvidia-nvjitlink-cu12 12.4.127 pypi_0 pypi

[conda] nvidia-nvtx-cu12 12.4.127 pypi_0 pypi

[conda] optree 0.13.0 pypi_0 pypi

[conda] pytorch-fid 0.3.0 pypi_0 pypi

[conda] torch 2.5.0+cu124 pypi_0 pypi

[conda] torch-fidelity 0.3.0 pypi_0 pypi

[conda] torchao 0.9.0 pypi_0 pypi

[conda] torchaudio 2.5.0+cu124 pypi_0 pypi

[conda] torchelastic 0.2.2 pypi_0 pypi

[conda] torchmetrics 1.6.1 pypi_0 pypi

[conda] torchvision 0.20.0+cu124 pypi_0 pypi

[conda] triton 3.1.0 pypi_0 pypi

cc @albanD @mruberry @jbschlosser @walterddr @mikaylagawarecki @frank-wei @jgong5 @mingfeima @XiaobingSuper @sanchitintel @ashokei @jingxu10

|

open

|

2025-03-11T02:39:40Z

|

2025-03-18T02:47:42Z

|

https://github.com/pytorch/pytorch/issues/148939

|

[

"needs reproduction",

"module: nn",

"triaged",

"module: intel"

] |

pikerbright

| 3

|

nerfstudio-project/nerfstudio

|

computer-vision

| 2,863

|

Splatfacto new POV image quality

|

Hi,

This is not really a bug, but more a question about splatfacto image quality and parameters. If this is not the right place, just let me know.

I am working on reconstructing a driving scene from PandaSet. The camera poses lie along the car's trajectory. When I render an image from this trajectory (train or eval set), the quality is very good.

Below is an example of one image from the eval set:

But if I move the target vehicle and the ego camera slightly (1 m to the left and 0.5 m up), the camera direction stays very close to the original one.

The quality decreases very quickly; it is very sensitive to direction.

I tried reducing the SH degree from 3 to 1 to avoid too much overfitting, with no real improvement.

Granted, the images of a driving scene cover it less densely than a video sequence circling a single object. But a few months ago I did the same with nerfacto: the quality from the initial poses was lower, but it was far less sensitive to new POVs. Below is a short video of a nerfacto reconstruction from 5 cameras (front, side, and front-side) on the vehicle:

https://github.com/nerfstudio-project/nerfstudio/assets/42007976/8b347e4e-325c-401e-bed8-27896f160cc1

I improved my nerfacto results by using camera pose optimization, so I tried to do the same for splatfacto by cherry-picking the viewmat gradient backward pass from the gsplat branch https://github.com/nerfstudio-project/gsplat/tree/vickie/camera-grads. So far, it hasn't improved the results.

Similarly, when I try with multiple cameras (or just a side camera in place of the front one), the quality is much less impressive. Below is an example with 5 cameras and camera optimization (which does not seem to have a big influence).

Left view:

Front view:

Do you have any idea what I should try, first to improve new-POV synthesis and second to improve multi-camera reconstruction?

Has anyone worked on camera pose optimization for splatfacto?

And just for fun, below is an example of what we can do with objects and multiple splatfacto instances:

https://github.com/nerfstudio-project/nerfstudio/assets/42007976/8f85ddef-bb3c-481f-b0f5-8490ae53603a

Thanks for your input. I will implement a depth loss from lidar to see if it helps.

|

open

|

2024-02-01T18:17:45Z

|

2024-06-28T20:02:04Z

|

https://github.com/nerfstudio-project/nerfstudio/issues/2863

|

[] |

pierremerriaux-leddartech

| 17

|

nl8590687/ASRT_SpeechRecognition

|

tensorflow

| 109

|

Why was 32 chosen as the number of convolution kernels in the first layer?

|

closed

|

2019-04-26T08:54:29Z

|

2020-05-11T05:48:04Z

|

https://github.com/nl8590687/ASRT_SpeechRecognition/issues/109

|

[] |

myrainbowandsky

| 0

|

|

GibbsConsulting/django-plotly-dash

|

plotly

| 292

|

"find apps" in admin creates almost duplicate app in django_plotly_dash_statelessapp

|

I have created the example app SimpleExample.

When running the django project for the first time, the table `django_plotly_dash_statelessapp` is empty.

When I first run the Dash app, the table contains a new row with `name="SimpleExample"` and `slug="simpleexample"`.

However, when I run the "find apps" command in the admin panel, I get a second app with `name="simpleexample"` and `slug="simpleexample2"`. This is due to the `name = slugify(name)` found at https://github.com/GibbsConsulting/django-plotly-dash/blob/master/django_plotly_dash/dash_wrapper.py#L105 and https://github.com/GibbsConsulting/django-plotly-dash/blob/master/django_plotly_dash/dash_wrapper.py#L123.

I guess this is not expected (there should be only one row in this table for the SimpleExample app).

As there is already a `slug` field for the slugified version of the app name, I suspect the two lines should be commented out. If I do so, "find apps" does not create a new row in the table.

Maybe linked to issue #263 ?
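
The mechanism described above can be sketched with a toy in-memory table. This is a simplified model under my own assumptions, not the django-plotly-dash code: `slugify` here is a tiny stand-in, and `find_or_create` is a hypothetical helper that looks rows up by exact name and picks a unique slug when it has to create one.

```python
def slugify(name: str) -> str:
    """Tiny stand-in for a slugify helper: lowercase, spaces become hyphens."""
    return name.strip().lower().replace(" ", "-")


def find_or_create(rows: list, name: str) -> dict:
    """Return the row with this exact name, creating it with a unique slug if absent."""
    for row in rows:
        if row["name"] == name:
            return row
    base_slug = slugify(name)
    existing_slugs = {row["slug"] for row in rows}
    slug, suffix = base_slug, 1
    while slug in existing_slugs:
        suffix += 1
        slug = f"{base_slug}{suffix}"
    row = {"name": name, "slug": slug}
    rows.append(row)
    return row


rows = []
# First run of the app registers it under its original name.
find_or_create(rows, "SimpleExample")
# A later lookup with an already-slugified name misses the existing row.
find_or_create(rows, slugify("SimpleExample"))
print(rows)
```

In this model the second lookup fails only because the name was slugified before the comparison, which matches the suspicion that dropping `name = slugify(name)` avoids the extra row.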

|

closed

|

2020-11-24T05:27:33Z

|

2021-02-03T05:17:08Z

|

https://github.com/GibbsConsulting/django-plotly-dash/issues/292

|

[] |

sdementen

| 1

|

plotly/dash

|

plotly

| 2,744

|

[BUG] Duplicate callback outputs

|

Hello:

I have two callbacks that use the same `Input` but differ in their `Output`s, and Dash tells me that I need to add `allow_duplicate=True`.

example dash:

```python
from dash import Dash, html, callback, Output, Input, no_update

app = Dash(__name__)

app.layout = html.Div([
    html.Div(children='output1', id="output1"),
    html.Div(children='output2', id="output2"),
    html.Button("button", id="button"),
])


@callback(
    Output("output1", "children", allow_duplicate=True),
    Input("button", "n_clicks"),
    prevent_initial_call=True,
)
def out1(n_clicks):
    return no_update


@callback(
    Output("output1", "children", allow_duplicate=True),
    Output("output2", "children"),
    Input("button", "n_clicks"),
    prevent_initial_call=True,
)
def out2(n_clicks):
    return no_update, no_update


if __name__ == '__main__':
    app.run(debug=True)
```

If I change one of the `Output` parameters to `allow_duplicate=False`, no error is reported.

Thanks.

|

closed

|

2024-02-05T06:24:51Z

|

2024-02-29T15:01:55Z

|

https://github.com/plotly/dash/issues/2744

|

[] |

Liripo

| 4

|

jina-ai/serve

|

deep-learning

| 5,422

|

`metadata.uid` field doesn't exist in generated k8s YAMLs

|

**Describe the bug**

<!-- A clear and concise description of what the bug is. -->

In the [generated YAML file](https://github.com/jina-ai/jina/blob/master/jina/resources/k8s/template/deployment-executor.yml), there is a reference to `metadata.uid`: `fieldPath: metadata.uid`. But it looks like there is no `uid` in `metadata`:

```
metadata:
  name: {name}
  namespace: {namespace}
```

This sometimes causes problems for our Operator use case; we had to manually remove the `POD_UID` block generated by `jina`. Are we going to address this?

**Describe how you solve it**

<!-- copy past your code/pull request link -->

---

<!-- Optional, but really help us locate the problem faster -->

**Environment**

<!-- Run `jina --version-full` and copy paste the output here -->

**Screenshots**

<!-- If applicable, add screenshots to help explain your problem. -->

|

closed

|

2022-11-22T02:22:01Z

|

2022-12-01T08:33:06Z

|

https://github.com/jina-ai/serve/issues/5422

|

[] |

zac-li

| 0

|

BeanieODM/beanie

|

asyncio

| 246

|

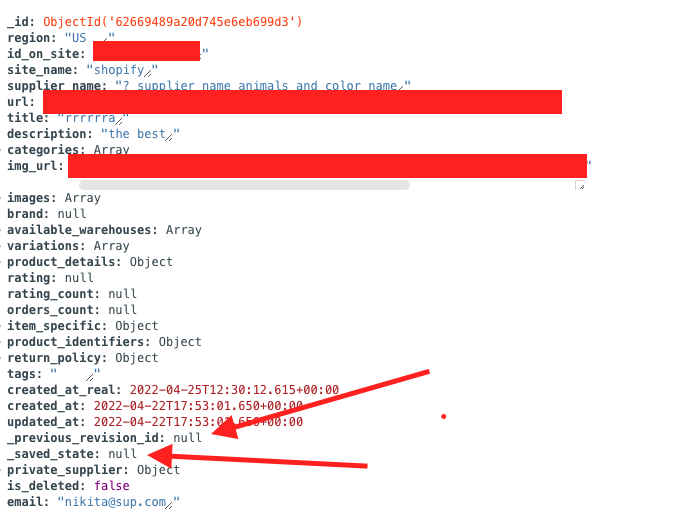

_saved_state and _previous_revision_id saved in DB

|

These fields should not be stored in the database

|

closed

|

2022-04-25T12:41:42Z

|

2023-05-23T18:41:53Z

|

https://github.com/BeanieODM/beanie/issues/246

|

[

"snippet requested"

] |

nichitag

| 3

|

PaddlePaddle/ERNIE

|

nlp

| 76

|

Error when adding labels for MSRA

|

Classification error. Where can I change the number of classes?

|

closed

|

2019-04-04T07:24:54Z

|

2019-04-17T16:16:49Z

|

https://github.com/PaddlePaddle/ERNIE/issues/76

|

[] |

jtyoui

| 1

|

sinaptik-ai/pandas-ai

|

data-visualization

| 1,455

|

failed to solve: process "/bin/sh -c npm run build" did not complete successfully: exit code: 1

|


------

> [client 6/6] RUN npm run build:

0.585

0.585 > client@0.1.0 build

0.585 > next build

0.585

1.266 Attention: Next.js now collects completely anonymous telemetry regarding usage.

1.266 This information is used to shape Next.js' roadmap and prioritize features.

1.267 You can learn more, including how to opt-out if you'd not like to participate in this anonymous program, by visiting the following URL:

1.267 https://nextjs.org/telemetry

1.267

1.336 ▲ Next.js 14.2.3

1.336 - Environments: .env

1.336

1.406 Creating an optimized production build ...

41.70 ✓ Compiled successfully

41.70 Skipping linting

41.70 Checking validity of types ...

46.59 Failed to compile.

46.59

46.59 ./app/(ee)/settings/layout.tsx:9:11

46.59 Type error: 'SettingsLayout' cannot be used as a JSX component.

46.59 Its type '({ children, }: { children: ReactNode; }) => Promise<Element>' is not a valid JSX element type.

46.59 Type '({ children, }: { children: ReactNode; }) => Promise<Element>' is not assignable to type '(props: any, deprecatedLegacyContext?: any) => ReactNode'.

46.59 Type 'Promise<Element>' is not assignable to type 'ReactNode'.

46.59

46.59 7 | children: React.ReactNode;

46.59 8 | }) {

46.59 > 9 | return <SettingsLayout>{children}</SettingsLayout>;

46.59 | ^

46.59 10 | }

46.59 11 |

46.59 12 |

------

failed to solve: process "/bin/sh -c npm run build" did not complete successfully: exit code: 1

|

closed

|

2024-12-07T08:09:21Z

|

2024-12-16T11:48:47Z

|

https://github.com/sinaptik-ai/pandas-ai/issues/1455

|

[] |

shivbhor

| 2

|

recommenders-team/recommenders

|

machine-learning

| 1,614

|

[BUG] Error on als_movielens.ipynb

|

### Description

<!--- Describe your issue/bug/request in detail -->

Hello :)

I found **two** bugs on [ALS Tutorial](https://github.com/microsoft/recommenders/blob/main/examples/00_quick_start/als_movielens.ipynb).

### 1. Error at `data = movielens.load_spark_df(spark, size=MOVIELENS_DATA_SIZE, schema=schema)`

This occurs when I use pyspark==2.3.1.

I used pyspark==2.3.1 because the [tutorial](https://github.com/microsoft/recommenders/blob/main/examples/00_quick_start/als_movielens.ipynb) was based on this version, but it fails.

When I use pyspark==3.2.0, it works.

### 2. Join operation fails at

```
dfs_pred_exclude_train = dfs_pred.alias("pred").join(
    train.alias("train"),
    (dfs_pred[COL_USER] == train[COL_USER]) & (dfs_pred[COL_ITEM] == train[COL_ITEM]),
    how='outer'
)
```

As version 2.3.1 fails, I tried this with pyspark==3.2.0.

However, `AnalysisException` occurs.

I think this is also a version issue.

### In which platform does it happen?

<!--- Describe the platform where the issue is happening (use a list if needed) -->

<!--- For example: -->

<!--- * Azure Data Science Virtual Machine. -->

<!--- * Azure Databricks. -->

<!--- * Other platforms. -->

- Google Colab

- System version: 3.7.12 (GCC 7.5.0)

- Spark version: 2.3.1, 3.2.0

### How do we replicate the issue?

<!--- Please be specific as possible (use a list if needed). -->

<!--- For example: -->

<!--- * Create a conda environment for pyspark -->

<!--- * Run unit test `test_sar_pyspark.py` with `pytest -m 'spark'` -->

<!--- * ... -->

Run https://github.com/microsoft/recommenders/blob/main/examples/00_quick_start/als_movielens.ipynb on Colab

### Expected behavior (i.e. solution)

<!--- For example: -->

<!--- * The tests for SAR PySpark should pass successfully. -->

Version issues should be resolved.

Join operations should be done without error.

### Other Comments

Thank you in advance! :)

|

closed

|

2022-01-19T13:36:18Z

|

2022-01-20T02:44:10Z

|

https://github.com/recommenders-team/recommenders/issues/1614

|

[

"bug"

] |

Seyoung9304

| 2

|

plotly/dash

|

data-visualization

| 2,916

|

[BUG] TypeError: Cannot read properties of undefined (reading 'concat') in getInputHistoryState

|

**Context**

The following code is a simplified example of the real application. The layout initially contains a root div and inputs. By using **pattern matching** and **set_props**, the callback function can be set up in a general manner. When the callback is triggered by a button, the root div is populated with other content, such as another div and more inputs. The newly created inputs then trigger the same callback and the layout is updated further, producing a nested structure.

The ID looks like this: `{'type': 'ELEMENT_TYPE', 'eid': 'ELEMENT_ID', 'target': 'TARGET_ID'}`

- type: The element type (Div, Button, Input, ...)

- eid: Unique element id

- target: If the element is an input, this is the id of the target element (optional)

The following example works like this:

1. Button1 is clicked

2. The callback `update` is triggered

3. The `target` key is checked

4. `set_props` is run on the element with eid = target and the desired content

***failing example:***

This works for Button1 as expected. But when clicking Button2 (which was created by Button1), the mentioned TypeError occurs. Inspecting the console shows that the itempath is undefined.

```
from dash import Dash, html, dcc, ALL, callback_context, exceptions, set_props, callback
import dash_bootstrap_components as dbc
from dash.dependencies import Input, Output, State

# app
app = Dash(__name__)

# layout
app.layout = html.Div([
    html.H4(['Title'], id={'type': 'H4','eid': 'title', 'target': ''})
    ,dbc.Button(['BTN1 (create new div)'], id={'type': 'Button','eid': 'btn1', 'target': 'content1'})
    ,html.Div([], id={'type': 'Div','eid': 'content1', 'target': ''})
    ,html.Div([], id={'type': 'Div','eid': 'content3', 'target': ''})
], id={'type': 'Div','eid': 'body', 'target': ''})

#callback
@callback(
    Output({'type': 'H4', 'eid': 'title', 'target': ''}, 'children')
    ,State({'type': 'Div', 'eid': 'body', 'target': ''}, 'children')
    ,Input({'type': 'Button', 'eid': ALL, 'target': ALL}, 'n_clicks')
    ,Input({'type': 'Input', 'eid': ALL, 'target': ALL}, 'value')
    ,prevent_initial_call=True
)
def update(
    H4__children
    ,Button__n_clicks
    ,Input__value
):
    if callback_context.triggered_id is not None:
        id_changed = callback_context.triggered_id
    else:
        raise exceptions.PreventUpdate

    if id_changed['target'] == 'content1':
        set_props(
            {'type': 'Div', 'eid': id_changed.target, 'target': ''}
            ,{'children': [
                dbc.Button(['BTN2 (write OUTPUT to new div)'], id={'type': 'Button','eid': 'btn2', 'target': 'content2'})
                #dbc.Button(['BTN2 (write OUTPUT to new div)'], id={'type': 'Button','eid': 'btn2', 'target': 'content3'})
                ,html.Div(['div content2 from button1'], id={'type': 'Div','eid': 'content2', 'target': ''})
            ]}
        )
    elif id_changed['target'] == 'content2':
        set_props({'type': 'Div', 'eid': id_changed.target, 'target': ''}, {'children': 'OUTPUT for content2 from button2'})
    elif id_changed['target'] == 'content3':
        set_props({'type': 'Div', 'eid': id_changed.target, 'target': ''}, {'children': 'OUTPUT for content3 from button2'})

    return 'Title'

# main
if __name__ == '__main__':
    app.run(debug=True, dev_tools_hot_reload=True, dev_tools_ui=True)
```

Changing the target of Button2 to content3 works (just replace the `dbc.Button` line within the callback with the commented-out one). In that case the itempath for Button2 is found and the callback for the new input works.

***working example:***

`pip list | grep dash`

```

dash 2.17.1

dash_ag_grid 31.2.0

dash-bootstrap-components 1.6.0

dash-core-components 2.0.0

dash-html-components 2.0.0

dash-table 5.0.0

```

- OS: Win11

- Browser chrome

- Version 126

**Describe the bug**

The itempath for content2 Div is undefined and concat in getInputHistoryState fails. The itempath is undefined because the component is not present within the store. So it looks like set_props does not update the store?

```

reducer.js:57 Uncaught (in promise)

TypeError: Cannot read properties of undefined (reading 'concat')

at getInputHistoryState (reducer.js:57:36)

at reducer.js:82:34

at reducer.js:121:16

at dispatch (redux.js:288:1)

at index.js:20:1

at dispatch (redux.js:691:1)

at callbacks.ts:214:9

at index.js:16:1

at dispatch (redux.js:691:1)

at callbacks.ts:255:13

```

**Expected behavior**

The message 'OUTPUT for content2 from button2' should appear in Div with id {'type': 'Div','eid': 'content2', 'target': ''}.

|

closed

|

2024-07-08T17:25:20Z

|

2024-07-26T13:16:01Z

|

https://github.com/plotly/dash/issues/2916

|

[] |

wKollendorf

| 2

|

robotframework/robotframework

|

automation

| 5,146

|

Warning with page screen shot

|

I am seeing the warning below in console and in html report while executing robot script.

Any way to hide or disable warning?

[ WARN ] Keyword 'Capture Page Screenshot' could not be run on failure: WebDriverException: Message: [Exception... "Data conversion failed because significant data would be lost" nsresult: "0x80460003 (NS_ERROR_LOSS_OF_SIGNIFICANT_DATA)" location: "<unknown>" data: no]

I use robotframework 6.1.1 with seleniumLibrary 5.1.3

|

closed

|

2024-06-09T11:33:15Z

|

2024-06-11T16:35:16Z

|

https://github.com/robotframework/robotframework/issues/5146

|

[] |

aya-spec

| 1

|

Asabeneh/30-Days-Of-Python

|

matplotlib

| 565

|

Duplicated exercises in Day 4

|

There are some duplicated exercises in Day 4 ([30-Days-Of-Python](https://github.com/Asabeneh/30-Days-Of-Python/tree/master)/[04_Day_Strings](https://github.com/Asabeneh/30-Days-Of-Python/tree/master/04_Day_Strings)/04_strings.md).

1. I believe exercise 23 and exercise 26 are nearly the same.

> 23. Use index or find to **find the position of the first occurrence of the word 'because' in the following sentence: 'You cannot end a sentence with because because because is a conjunction'**

> 26. **Find the position of the first occurrence of the word 'because' in the following sentence: 'You cannot end a sentence with because because because is a conjunction'**

2. Exercise 25 and 27 are the same.
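
As a quick check of why exercises 23 and 26 overlap, the sketch below solves the quoted task with both `str.find` and `str.index`. It is only an illustration of the standard string methods, not part of the course material.

```python
sentence = "You cannot end a sentence with because because because is a conjunction"

# Both methods return the position of the first occurrence.
position_with_find = sentence.find("because")
position_with_index = sentence.index("because")
print(position_with_find, position_with_index)

# They differ only when the word is missing: find returns -1,
# while index raises ValueError.
print(sentence.find("zebra"))
```

Since both calls give the same position for this sentence, an exercise that allows either method covers the same ground as one that names no method.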

|

open

|

2024-07-23T08:25:04Z

|

2024-07-24T07:00:01Z

|

https://github.com/Asabeneh/30-Days-Of-Python/issues/565

|

[] |

chienchuanw

| 1

|

s3rius/FastAPI-template

|

fastapi

| 69

|

Add psycopg support.

|

It would be super nice to have the ability to generate a project without an ORM, since that is really useful for high-load applications.

|

closed

|

2022-04-12T22:16:11Z

|

2022-04-15T20:41:15Z

|

https://github.com/s3rius/FastAPI-template/issues/69

|

[] |

s3rius

| 1

|

huggingface/datasets

|

deep-learning

| 6,912

|

Add MedImg for streaming

|

### Feature request

Host the MedImg dataset (similar to Imagenet but for biomedical images).

### Motivation

There is a clear need for biomedical image foundation models and large scale biomedical datasets that are easily streamable. This would be an excellent tool for the biomedical community.

### Your contribution

MedImg can be found [here](https://www.cuilab.cn/medimg/#).

|

open

|

2024-05-22T00:55:30Z

|

2024-09-05T16:53:54Z

|

https://github.com/huggingface/datasets/issues/6912

|

[

"dataset request"

] |

lhallee

| 8

|

FactoryBoy/factory_boy

|

sqlalchemy

| 400

|

'PostGenerationContext' object has no attribute 'items'

|

In https://github.com/FactoryBoy/factory_boy/commit/8dadbe20e845ae7e311edf2cefc4ce9e24c25370

PostGenerationContext was changed to a NamedTuple. This causes a crash in utils.log_pprint:110

AttributeError: 'PostGenerationContext' object has no attribute 'items'

PR with a quick fix here: https://github.com/FactoryBoy/factory_boy/pull/399
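
The failure mode can be reproduced with any named tuple. In the sketch below, `ToyContext` and its field names are illustrative stand-ins, and converting with `_asdict()` is just one way to get dict-style iteration; it is not claimed to be what the linked PR does.

```python
from collections import namedtuple

# Illustrative stand-in for a context object that used to be a dict.
ToyContext = namedtuple("ToyContext", ["value_provided", "value", "extra"])

ctx = ToyContext(value_provided=True, value=3, extra={"flag": 1})

# A named tuple has no dict API, so code calling ctx.items() raises AttributeError.
print(hasattr(ctx, "items"))

# _asdict() returns a mapping of field names to values, which restores .items().
pairs = list(ctx._asdict().items())
print(pairs)
```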

|

closed

|

2017-07-31T18:31:48Z

|

2017-07-31T18:34:36Z

|

https://github.com/FactoryBoy/factory_boy/issues/400

|

[] |

Fingel

| 1

|

miguelgrinberg/Flask-SocketIO

|

flask

| 1,185

|

Not working as WebSocket on Windows 7 Ultimate

|

**Your question**

I'm trying to start a WebSocket server with Flask and Flask-SocketIO. It works in AJAX polling mode (not WebSocket mode), but it stopped working after I installed the eventlet module (for WebSocket support).

**Logs**

Server initialized for eventlet.

Then nothing :(

|

closed

|

2020-02-11T07:13:40Z

|

2020-02-11T08:35:40Z

|

https://github.com/miguelgrinberg/Flask-SocketIO/issues/1185

|

[

"question"

] |

fred913

| 2

|

streamlit/streamlit

|

data-visualization

| 10,026

|

Suppress multiselect "Remove an option first". Message displays immediately upon reaching max selection.

|

### Checklist

- [X] I have searched the [existing issues](https://github.com/streamlit/streamlit/issues) for similar feature requests.

- [X] I added a descriptive title and summary to this issue.

### Summary

st.multiselect displays this message immediately upon reaching n = max_selections:

For example, if max_selection = 2, the user will make 1 selection, and then a 2nd selection - this message will pop up immediately upon making the 2nd selection.

### Why?

The current state of the function makes the user feel as though their 2nd selection is raising an error.

### How?

The message should only appear if the user is attempting to make n > max_selections.
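
The requested rule can be written as a small predicate. This is a sketch of the proposed behaviour only; `should_show_limit_message` and its parameters are hypothetical names and do not correspond to anything in the Streamlit code base.

```python
def should_show_limit_message(selected_count: int, max_selections: int, is_adding: bool) -> bool:
    """Show the message only when the user tries to add an option beyond the limit.

    selected_count is the number of options already selected before the attempt.
    """
    return is_adding and selected_count >= max_selections


# With max_selections = 2: making the 2nd selection is still allowed.
print(should_show_limit_message(selected_count=1, max_selections=2, is_adding=True))
# Trying to make a 3rd selection is what should trigger the message.
print(should_show_limit_message(selected_count=2, max_selections=2, is_adding=True))
```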

### Additional Context

_No response_

|

open

|

2024-12-16T04:00:23Z

|

2024-12-17T03:42:44Z

|

https://github.com/streamlit/streamlit/issues/10026

|

[

"type:enhancement",

"feature:st.multiselect"

] |

LarryLoveIV

| 3

|

aleju/imgaug

|

deep-learning

| 760

|

Normalizing points for polygon intersection testing.

|

According to https://github.com/ideasman42/isect_segments-bentley_ottmann/issues/4 , their implementation is best tested on points normalized between -1 and 1. I ran into an issue similar to the issue I linked, running imgaug:

```
Traceback (most recent call last):
  File "/usr/lib/python3.9/site-packages/imgaug/external/poly_point_isect_py2py3.py", line 351, in remove
    self._events_current_sweep.remove(event)
  File "/usr/lib/python3.9/site-packages/imgaug/external/poly_point_isect_py2py3.py", line 1302, in remove
    raise KeyError(str(key))
KeyError: 'Event(0x7f4978101400, s0=(780.1091, 495.0222), s1=(781.7717, 493.7595), p=(780.1091, 495.0222), type=2, slope=-0.7594731143991321)'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/lib/python3.9/site-packages/imgaug/augmentables/polys.py", line 2465, in _generate_intersection_points
    intersections = isect_segments_include_segments(segments)
  File "/usr/lib/python3.9/site-packages/imgaug/external/poly_point_isect_py2py3.py", line 621, in isect_segments_include_segments
    return isect_segments_impl(segments, include_segments=True)
  File "/usr/lib/python3.9/site-packages/imgaug/external/poly_point_isect_py2py3.py", line 590, in isect_segments_impl
    sweep_line.handle(p, events_current)
  File "/usr/lib/python3.9/site-packages/imgaug/external/poly_point_isect_py2py3.py", line 398, in handle
    self.handle_event(e)
  File "/usr/lib/python3.9/site-packages/imgaug/external/poly_point_isect_py2py3.py", line 438, in handle_event
    if self.remove(e):
  File "/usr/lib/python3.9/site-packages/imgaug/external/poly_point_isect_py2py3.py", line 360, in remove
    assert(event.in_sweep == False)
AssertionError
```

Diving deeper into the issue, I found a polygon with two points that were nearly identical (`722.90222168` vs `722.71356201` and `381.67370605` vs `380.05108643`). Removing one of these points prevented the code from crashing, but maybe it is better to normalize the points to the range `[-1, 1]` before using the intersection checking algorithm. I haven't tested whether this also solves my problem.

Additional motivation: I assume this will also fix [this](https://github.com/aleju/imgaug/blob/master/imgaug/external/poly_point_isect_py2py3.py#L53-L55) issue.
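
A minimal sketch of the proposed normalization is shown below. `normalize_points` is a hypothetical helper, not an imgaug function; it assumes a uniform scale for both axes so that the polygon's shape is preserved, and, like the proposal itself, it has not been tested against the crash above.

```python
def normalize_points(points):
    """Map (x, y) points into [-1, 1] using one uniform scale for both axes."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    # Center of the bounding box.
    center_x = (min(xs) + max(xs)) / 2.0
    center_y = (min(ys) + max(ys)) / 2.0
    # Half of the larger bounding-box side, so the longer axis spans exactly [-1, 1].
    half_extent = max(max(xs) - min(xs), max(ys) - min(ys)) / 2.0
    if half_extent == 0.0:
        # All points coincide; map them to the origin.
        return [(0.0, 0.0) for _ in points]
    return [((x - center_x) / half_extent, (y - center_y) / half_extent) for x, y in points]


normalized = normalize_points([(0.0, 0.0), (10.0, 0.0), (10.0, 5.0)])
print(normalized)
```

Because the transform is a translation followed by a uniform scale, whether two segments intersect is unchanged; any intersection coordinates found on the normalized points would need to be mapped back with the inverse transform.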

|

open

|

2021-04-14T14:55:33Z

|

2021-04-14T14:55:33Z

|

https://github.com/aleju/imgaug/issues/760

|

[] |

hgaiser

| 0

|

allenai/allennlp

|

pytorch

| 5,171

|

No module named 'allennlp.data.tokenizers.word_splitter'

|

I'm using Python 3.7 in Google Colab. I installed allennlp=2.4.0 and allennlp-models.

When I run my code:

from allennlp.data.tokenizers.word_splitter import SpacyWordSplitter

I get this error:

ModuleNotFoundError: No module named 'allennlp.data.tokenizers.word_splitter'

help me please.

|

closed

|

2021-04-30T17:11:44Z

|

2021-05-17T16:10:36Z

|

https://github.com/allenai/allennlp/issues/5171

|

[

"question",

"stale"

] |

mitra8814

| 2

|

encode/httpx

|

asyncio

| 2,660

|

The `get_environment_proxies` function in _utils.py does not support IPv4, IPv6 correctly

|

Hi, I encountered an error when my environment's `no_proxy` includes an IPv6 address like `::1`. It is wrongly transformed into `all://*::1`, which causes a urlparse error because _urlparse.py parses the `:1` as a port.

The `get_environment_proxies` function in **_utils.py** is responsible for parsing and mounting proxy info from system environment.

https://github.com/encode/httpx/blob/4b5a92e88e03443c2619f0905d756b159f9f0222/httpx/_utils.py#L229-L264

For env `no_proxy`, according to [CURLOPT_NOPROXY explained](https://curl.se/libcurl/c/CURLOPT_NOPROXY.html), it should support domains, IPv4, IPv6 and `localhost`. However, the current `get_environment_proxies` implementation only supports domains correctly, as it always adds the wildcard `*` in front of the `hostname`.

To fix this issue, I looked into this repo and suggest handling the `no_proxy` hostnames as domains, IPv4, IPv6 and `localhost` separately. I have updated the `get_environment_proxies` function in **_utils.py** and tested it.

Refer to the PR: #2659

Replies and discussions are welcomed!
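
To illustrate the idea, here is a minimal sketch of classifying a single `no_proxy` entry. It is not the code from the PR: `no_proxy_pattern` is a hypothetical helper, and the bracketed `all://[::1]` form for IPv6 is my assumption about what a parseable pattern would look like.

```python
import ipaddress


def no_proxy_pattern(hostname: str) -> str:
    """Map one no_proxy entry to a pattern string (hypothetical helper)."""
    hostname = hostname.strip()
    if hostname.lower() == "localhost":
        # localhost is matched literally, without a wildcard
        return "all://localhost"
    try:
        ip = ipaddress.ip_address(hostname)
    except ValueError:
        # Not an IP literal: treat it as a domain and keep the wildcard prefix
        return f"all://*{hostname}"
    if ip.version == 6:
        # Bracket IPv6 literals so ':1' is not read as a port (assumption)
        return f"all://[{hostname}]"
    return f"all://{hostname}"
```

With this split, `::1` is never prefixed with `*`, so the URL parser no longer sees `:1` as a port.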

|

closed

|

2023-04-14T09:44:37Z

|

2023-04-19T12:14:39Z

|

https://github.com/encode/httpx/issues/2660

|

[] |

HearyShen

| 0

|

ivy-llc/ivy

|

numpy

| 27,862

|

Fix Frontend Failing Test: paddle - tensor.torch.Tensor.__mul__

|

closed

|

2024-01-07T22:57:02Z

|

2024-01-07T23:36:03Z

|

https://github.com/ivy-llc/ivy/issues/27862

|

[

"Sub Task"

] |

NripeshN

| 0

|

|

junyanz/pytorch-CycleGAN-and-pix2pix

|

pytorch

| 914

|

CPU training time

|

Training takes a lot of time on CPU. Is it possible to reduce the CPU training time? How long does it take to train using a CPU?

|

closed

|

2020-02-07T04:55:41Z

|

2020-02-08T12:33:48Z

|

https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/914

|

[] |

manvirvirk

| 3

|

CorentinJ/Real-Time-Voice-Cloning

|

python

| 852

|

mat1 and mat2 shapes cannot be multiplied (1440x512 and 320x128) TRAINING SYNTHETIZER

|

Can someone help me with this issue?

Traceback (most recent call last):

File "D:\PycharmProjects\Realtime\synthesizer_train.py", line 35, in <module>

train(**vars(args))

File "D:\PycharmProjects\Realtime\synthesizer\train.py", line 178, in train

m1_hat, m2_hat, attention, stop_pred = model(texts, mels, embeds)

File "D:\PycharmProjects\Realtime\venv\lib\site-packages\torch\nn\modules\module.py", line 1051, in _call_impl

return forward_call(*input, **kwargs)

File "D:\PycharmProjects\Realtime\synthesizer\models\tacotron.py", line 387, in forward

encoder_seq_proj = self.encoder_proj(encoder_seq)

File "D:\PycharmProjects\Realtime\venv\lib\site-packages\torch\nn\modules\module.py", line 1051, in _call_impl

return forward_call(*input, **kwargs)

File "D:\PycharmProjects\Realtime\venv\lib\site-packages\torch\nn\modules\linear.py", line 96, in forward

return F.linear(input, self.weight, self.bias)

File "D:\PycharmProjects\Realtime\venv\lib\site-packages\torch\nn\functional.py", line 1847, in linear

return torch._C._nn.linear(input, weight, bias)

RuntimeError: mat1 and mat2 shapes cannot be multiplied (1440x512 and 320x128)

|

closed

|

2021-09-25T14:39:20Z

|

2021-09-27T18:22:49Z

|

https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/852

|

[] |

ireneb612

| 3

|

ShishirPatil/gorilla

|

api

| 88

|

[bug] Hosted Gorilla: UnicodeDecodeError: 'utf-8' codec can't decode byte 0x8a in position 9: invalid start byte

|

Hello,

I get this error when launching Gorilla on a Windows 10 PC:

`UnicodeDecodeError: 'utf-8' codec can't decode byte 0x8a in position 9: invalid start byte`

It seems like the output works though.

Thank you.

|

open

|

2023-08-07T16:46:52Z

|

2023-08-08T09:43:49Z

|

https://github.com/ShishirPatil/gorilla/issues/88

|

[

"hosted-gorilla"

] |

giuliastro

| 4

|

tqdm/tqdm

|

pandas

| 1,432

|

Multi processing with args

|

- [x] I have marked all applicable categories:

+ [x] exception-raising bug

+ [x] visual output bug

- [x] I have visited the [source website], and in particular

read the [known issues]

- [x] I have searched through the [issue tracker] for duplicates

- [x] I have mentioned version numbers, operating system and

environment, where applicable:

```python

import tqdm, sys

print(tqdm.__version__, sys.version, sys.platform)

4.64.1 3.10.6 (main, Nov 14 2022, 16:10:14) [GCC 11.3.0] linux

```

Multi process

So I have a task where I use tqdm to show a progress bar. It works fine, but it takes a long time:

```python

for item in tqdm(self.items,

desc=f"Import progression for {self.__filename} {self.name}",

unit=" Item",

colour="green"):

self.db.insert_into(self.__filename, self.name, item)

```

So I want to parallelize it with multiprocessing (using a single progress bar for all the work, i.e. global progress rather than per-process progress).

I took a look at `process_map()`.

Example from Stack Overflow:

```python

from tqdm.contrib.concurrent import process_map # or thread_map

import time

def _foo(my_number):

square = my_number * my_number

time.sleep(1)

return square

if __name__ == '__main__':

r = process_map(_foo, range(0, 30), max_workers=2)

```

I'm not sure how to apply this solution to my situation, since my function takes more arguments than just the iterable item.

If someone could help me put this in place, it would be appreciated.
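
One possible approach, sketched under assumptions: bind the fixed arguments with `functools.partial` so that `process_map` only has to supply the item. Here `insert_item` is a hypothetical stand-in for `self.db.insert_into`, and the file and table names are made up. A real database connection usually cannot be pickled, so each worker would probably need to open its own.

```python
from functools import partial

from tqdm.contrib.concurrent import process_map


def insert_item(filename, name, item):
    """Hypothetical stand-in for self.db.insert_into(filename, name, item)."""
    return f"{filename}:{name}:{item}"


def main():
    items = list(range(20))
    # Bind the fixed arguments; the remaining positional argument is the item
    worker = partial(insert_item, "data.csv", "my_table")
    # One shared progress bar for all items, across both worker processes
    return process_map(worker, items, max_workers=2,
                       desc="Import progression", unit=" Item")


if __name__ == "__main__":
    main()
```

The worker has to be a module-level function (not a lambda or a bound method holding unpicklable state) so that it can be sent to the worker processes.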

[source website]: https://github.com/tqdm/tqdm/

[known issues]: https://github.com/tqdm/tqdm/#faq-and-known-issues

[issue tracker]: https://github.com/tqdm/tqdm/issues?q=

|

open

|

2023-02-20T14:21:24Z

|

2023-03-07T18:02:40Z

|

https://github.com/tqdm/tqdm/issues/1432

|

[] |

arist0v

| 2

|

eriklindernoren/ML-From-Scratch

|

deep-learning

| 108

|

Naive Bayes

|

Shouldn't the coefficient be

coeff = 1.0 / math.pi * math.sqrt(2.0 * math.pi) + eps

In the equation of the normal distribution, the pi is outside of the sqrt.
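
As a quick sanity check, here is a small sketch that compares a hand-written Gaussian density against Python's standard library. In the textbook form used here the coefficient is `1 / sqrt(2 * pi * var)`, with pi inside the square root. `gaussian_pdf` is a hypothetical helper for this check, not the repository's function, and it leaves out the `eps` term.

```python
import math
from statistics import NormalDist


def gaussian_pdf(x, mean, var):
    """Gaussian density with the normalisation 1 / sqrt(2 * pi * var)."""
    coeff = 1.0 / math.sqrt(2.0 * math.pi * var)
    exponent = math.exp(-((x - mean) ** 2) / (2.0 * var))
    return coeff * exponent


# Compare with the standard library (NormalDist takes the standard deviation)
reference = NormalDist(mu=0.0, sigma=math.sqrt(2.0)).pdf(0.5)
print(math.isclose(gaussian_pdf(0.5, 0.0, 2.0), reference))
```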

|

open

|

2024-03-24T10:33:59Z

|

2024-05-12T20:33:13Z

|

https://github.com/eriklindernoren/ML-From-Scratch/issues/108

|

[] |

StevenSopilidis

| 2

|

miguelgrinberg/flasky

|

flask

| 106

|

How to apply login requirement in posts, users and comments api.

|

As you mentioned in the book, **@auth.login_required** can be used to protect any resource. However, the GitHub repo does not show it being applied to the resources in the posts, comments and users APIs in api_v1_0.

When I try `from .authentication import auth` and use `@auth.login_required`, it does not work. Could you please help me resolve the issue?

|

closed

|

2016-01-16T15:20:36Z

|

2016-01-16T15:51:11Z

|

https://github.com/miguelgrinberg/flasky/issues/106

|

[] |

ibbad

| 1

|

cvat-ai/cvat

|

tensorflow

| 8,886

|

"docker-compose up" got error...

|

### Actions before raising this issue

- [X] I searched the existing issues and did not find anything similar.

- [X] I read/searched [the docs](https://docs.cvat.ai/docs/)

### Steps to Reproduce

1. git clone http://192.168.1.122:5000/polonii/cvat

### Expected Behavior

_No response_

### Possible Solution

_No response_

### Context

_No response_

### Environment

_No response_

|

closed

|

2024-12-28T20:54:39Z

|

2024-12-28T20:55:02Z

|

https://github.com/cvat-ai/cvat/issues/8886

|

[

"bug"

] |

PospelovDaniil

| 0

|

rasbt/watermark

|

jupyter

| 15

|

Wrong package versions within virtualenv

|

I'm not sure how widespread this issue is, or if it's something particular to my setup, but when I use watermark to report package information (using -p) within a jupyter notebook that is running in a virtualenv, version information about system level packages is reported rather than about the packages installed within my environment. If a package is installed in my virtualenv and not installed at the system level, then I end up getting `DistributionNotFound` exceptions when I try to report the version number using watermark.

The problem stems from the call to `pkg_resources.get_distribution`. If I use `get_distribution` directly from within my notebook to report the version of e.g. `numpy` it shows my system level numpy info (v 1.11.0) rather than info about numpy installed within my virtualenv (v 1.11.1). For example:

> `pkg_resources.get_distribution('numpy')`

> `numpy 1.11.0 (/usr/local/lib/python2.7/site-packages)`

Similarly

> `%watermark -p numpy`

> `numpy 1.11.0`

But when I check the version of what is imported:

> `import numpy as np`

> `np.__version__`

> `'1.11.1'`

If I use `pkg_resources` from a python console or script running within a virtualenv, it reports the proper information about packages. But if I use it from an ipython console or jupyter notebook it reports system level information.

If this is a widespread issue, should `watermark` find another more robust solution to getting package information other than using `pkg_resources`?

|

closed

|

2016-08-03T17:35:17Z

|

2016-08-16T22:54:58Z

|

https://github.com/rasbt/watermark/issues/15

|

[

"bug",

"help wanted"

] |

mrbell

| 17

|

Gozargah/Marzban

|

api

| 1,544

|

Can't get config via cli

|

I'm trying to get myself a config to paste it into FoxRay, and getting the following error:

|

open

|

2024-12-28T18:54:50Z

|

2024-12-29T17:31:17Z

|

https://github.com/Gozargah/Marzban/issues/1544

|

[] |

subzero911

| 3

|

aleju/imgaug

|

machine-learning

| 178

|

Does this code use the gpu ?

|

Hi. I just found out that opencv-python doesn't actually use the gpu, even when properly compiled with cuda support on.

I'm currently actively looking for a package that does so. Does this repo provide code that runs augmentations on the GPU?

|

open

|

2018-09-10T15:10:03Z

|

2018-10-30T14:50:22Z

|

https://github.com/aleju/imgaug/issues/178

|

[] |

dmenig

| 2

|

ultralytics/ultralytics

|

computer-vision

| 19,547

|

onnx-inference-for-segment-predict

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/orgs/ultralytics/discussions) and found no similar questions.

### Question

Hello, when I use the https://github.com/ultralytics/ultralytics/tree/main/examples/YOLOv8-Segmentation-ONNXRuntime-Python demo to run prediction for my paper-segmentation task, I found that rotated instances may be unsupported. When I set the parameter `rotated = False`, the result looks like this, but if I set it to `True`, it becomes

What confuses me is that if I use the .pt model in Python to predict, the result is

correct. Could anyone help me resolve this?

### Additional

_No response_

|

closed

|

2025-03-06T08:16:34Z

|

2025-03-15T03:40:42Z

|

https://github.com/ultralytics/ultralytics/issues/19547

|

[

"question",

"fixed",

"segment",

"exports"

] |

Keven-Don

| 29

|

sammchardy/python-binance

|

api

| 1,002

|

ModuleNotFoundError: No module named 'binance.websockets'

|

Whenever I try to run the pumpbot from the terminal, I get this error.

Does anyone know how I can fix it?

|

open

|

2021-08-29T21:12:03Z

|

2022-08-05T17:28:29Z

|

https://github.com/sammchardy/python-binance/issues/1002

|

[] |

safwaanfazz

| 1

|

TheKevJames/coveralls-python

|

pytest

| 55

|

LICENSE not included in source package

|

When running ./setup.py sdist, the LICENSE file is not included. I suggest adding it to MANIFEST.in.

|

closed

|

2015-02-07T09:10:31Z

|

2015-02-19T06:09:56Z

|

https://github.com/TheKevJames/coveralls-python/issues/55

|

[] |

joachimmetz

| 5

|

WZMIAOMIAO/deep-learning-for-image-processing

|

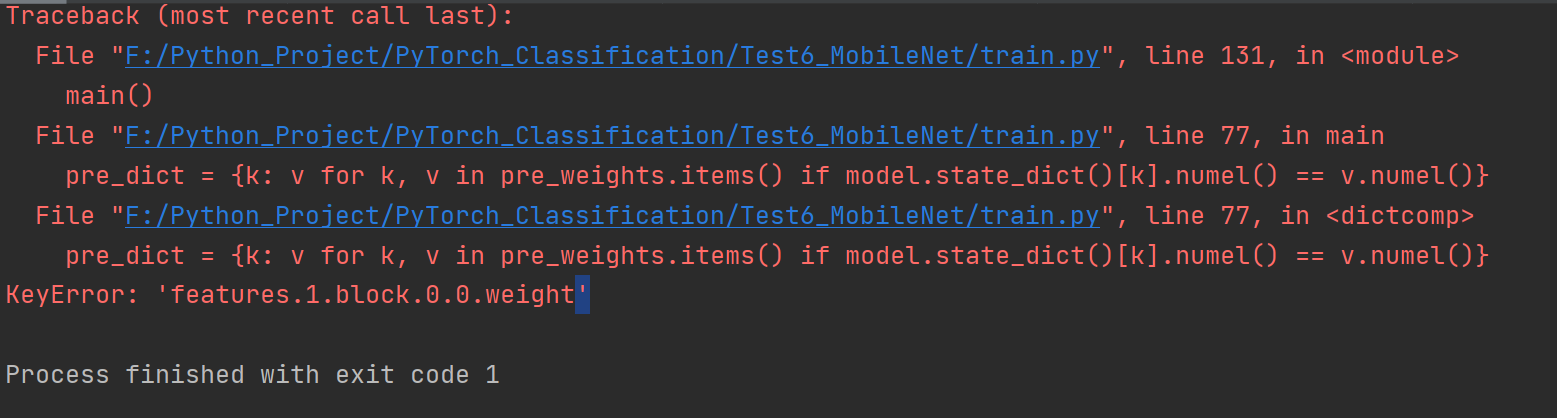

deep-learning

| 185

|

What is this problem?

|

|

closed

|

2021-03-18T08:38:23Z

|

2021-03-20T07:09:50Z

|

https://github.com/WZMIAOMIAO/deep-learning-for-image-processing/issues/185

|

[] |

HuKai97

| 2

|

wagtail/wagtail

|

django

| 12,062

|

StructBlock missing a joining space when displaying multiple error messages

|

### Issue Summary

When multiple errors are raised for a `StructBlock`, the error messages get joined with no separating space.

For example, given these error messages: `["one.", "two."]`, this would be displayed as follows:

* For a model field: `one. two.` (good 👍🏻)

* For a StructBlock: `one.two.` (not good 👎🏻)

### Steps to Reproduce

Raise multiple `ValidationError`s for a `StructBlock`.

- I have confirmed that this issue can be reproduced as described on a fresh Wagtail project: yes

### Technical details

- Python version: Not relevant for this issue.

- Django version: Not relevant for this issue.

- Wagtail version: 6.1.2

- Browser version: Not relevant for this issue.

|

closed

|

2024-06-18T14:56:13Z

|

2024-06-20T18:30:59Z

|

https://github.com/wagtail/wagtail/issues/12062

|

[

"type:Bug",

"status:Unconfirmed"

] |

kbayliss

| 0

|

scikit-learn/scikit-learn

|

machine-learning

| 30,525

|

OPTICS.fit leaks memory when called under VS Code's built-in debugger

|

### Describe the bug

Running a clustering algorithm with the n_jobs parameter set to more than 1 causes a memory leak each time the algorithm is run.

This simple code leaks additional memory on each loop cycle. The issue does not occur if I replace the manifold reduction algorithm with precomputed features.

### Steps/Code to Reproduce

```python

import gc

import numpy as np

from sklearn.manifold import TSNE

from sklearn.cluster import OPTICS

import psutil

process = psutil.Process()

def main():

data = np.random.random((100, 100))

for _i in range(1, 50):

points = TSNE().fit_transform(data)

prediction = OPTICS(n_jobs=2).fit_predict(points) # n_jobs!=1

points = None

prediction = None

del prediction

del points

gc.collect()

print(f"{process.memory_info().rss / 1e6:.1f} MB")

main()

```

### Expected Results

Program's memory usage nearly constant between loop cycles

### Actual Results

Program's memory usage increases infinitely

### Versions

```shell

System:

python: 3.10.6 (tags/v3.10.6:9c7b4bd, Aug 1 2022, 21:53:49) [MSC v.1932 64 bit (AMD64)]

executable: .venv\Scripts\python.exe

machine: Windows-10-10.0.26100-SP0

Python dependencies:

sklearn: 1.6.0

pip: 24.3.1

setuptools: 63.2.0

numpy: 1.25.2

scipy: 1.14.1

Cython: None

pandas: 2.2.3

matplotlib: 3.10.0

joblib: 1.4.2

threadpoolctl: 3.5.0

Built with OpenMP: True

threadpoolctl info:

user_api: openmp

internal_api: openmp

num_threads: 16

prefix: vcomp

filepath: .venv\Lib\site-packages\sklearn\.libs\vcomp140.dll

version: None

user_api: blas

internal_api: openblas

num_threads: 16

prefix: libopenblas

filepath: .venv\Lib\site-packages\numpy\.libs\libopenblas64__v0.3.23-246-g3d31191b-gcc_10_3_0.dll

version: 0.3.23.dev

threading_layer: pthreads

architecture: Cooperlake

user_api: blas

internal_api: openblas

num_threads: 16

prefix: libscipy_openblas

filepath: .venv\Lib\site-packages\scipy.libs\libscipy_openblas-5b1ec8b915dfb81d11cebc0788069d2d.dll

version: 0.3.27.dev

threading_layer: pthreads

architecture: Cooperlake

```

|

open

|

2024-12-21T15:50:53Z

|

2024-12-31T14:12:54Z

|

https://github.com/scikit-learn/scikit-learn/issues/30525

|

[

"Bug",

"Performance",

"Needs Investigation"

] |

Probelp

| 18

|

flairNLP/flair

|

pytorch

| 3,036

|

Support for Vietnamese

|

Hi, I am looking through Flair and wondering whether it supports Vietnamese. If not, will it in the future? Thank you!

_Originally posted by @longsc2603 in https://github.com/flairNLP/flair/issues/2#issuecomment-1354413764_

|

closed

|

2022-12-21T01:37:57Z

|

2023-06-11T11:25:47Z

|

https://github.com/flairNLP/flair/issues/3036

|

[

"wontfix"

] |

longsc2603

| 1

|

Zeyi-Lin/HivisionIDPhotos

|

machine-learning

| 211

|

onnxruntime error

|

Hi, I am using slurm and I get the following issue:

```

[E:onnxruntime:Default, env.cc:234 ThreadMain] pthread_setaffinity_np failed for thread: 3131773, index: 22, mask: {23, }, error code: 22 error msg: Invalid argument. Specify the number of threads explicitly so the affinity is not set.

2024-11-22 13:59:58.924148953

```

What can I do?

Thanks in advance.

|

open

|

2024-11-22T13:36:11Z

|

2024-11-22T13:36:11Z

|

https://github.com/Zeyi-Lin/HivisionIDPhotos/issues/211

|

[] |

gebaltso

| 0

|

babysor/MockingBird

|

deep-learning

| 616

|

Error on MacBook when running python demo_toolbox.py -d .\samples

|

I actually do have PyQt5 installed; according to what I found online, PyQt@5 is the same as PyQt5.

<img width="491" alt="图片" src="https://user-images.githubusercontent.com/22427032/173240261-25972477-abf0-4017-a8bc-f40b8c7b423e.png">

<img width="491" alt="图片" src="https://user-images.githubusercontent.com/22427032/173240295-61d2ec48-a9a2-4fca-a336-3acf2b58df98.png">

|

open

|

2022-06-12T15:24:45Z

|

2022-07-16T10:56:35Z

|

https://github.com/babysor/MockingBird/issues/616

|

[] |

iOS-Kel

| 3

|

hootnot/oanda-api-v20

|

rest-api

| 45

|

Version 0.2.1

|

- [x] fix missing requirement

- [x] fix examples: candle-data.py

|

closed

|

2016-11-15T18:44:19Z

|

2016-11-15T19:03:01Z

|

https://github.com/hootnot/oanda-api-v20/issues/45

|

[

"Release"

] |

hootnot

| 0

|

polakowo/vectorbt

|

data-visualization

| 478

|

Unable to move stop-loss in profit

|

Hi!

I'm using `Portfolio.from_signals` and trying to set up a moving stop-loss using supertrend. So for a long position I want my stop-loss to move up with supertrend. I use `adjust_sl_func_nb` for that and everything works great until the stop-loss moves into profit. There's a condition in `get_stop_price_nb` which does not allow a stop-loss in profit for some reason.

Just for the sake of experiment I commented it out and the strategy worked like a charm. So why is that check in there? And how can I move my SL in profit with `adjust_sl_func_nb`?

Thanks!

|

open

|

2022-07-31T22:00:57Z

|

2022-10-01T08:24:33Z

|

https://github.com/polakowo/vectorbt/issues/478

|

[] |

tossha

| 1

|

reloadware/reloadium

|

django

| 183

|

pydevd_process_net_command.py this file frequently wrong as UnicodeDecodeError

|

def _on_run(self):

read_buffer = ""

try:

while not self.killReceived:

try:

r = self.sock.recv(1024)

except:

if not self.killReceived:

traceback.print_exc()

self.handle_except()

return #Finished communication.

#Note: the java backend is always expected to pass utf-8 encoded strings. We now work with unicode

#internally and thus, we may need to convert to the actual encoding where needed (i.e.: filenames

#on python 2 may need to be converted to the filesystem encoding).

if hasattr(r, 'decode'):

r = r.decode('utf-8')

read_buffer += r

if DebugInfoHolder.DEBUG_RECORD_SOCKET_READS:

sys.stderr.write(u'debugger: received >>%s<<\n' % (read_buffer,))

sys.stderr.flush()

if len(read_buffer) == 0:

self.handle_except()

break

while read_buffer.find(u'\n') != -1:

command, read_buffer = read_buffer.split(u'\n', 1)

args = command.split(u'\t', 2)

try:

cmd_id = int(args[0])

pydev_log.debug('Received command: %s %s\n' % (ID_TO_MEANING.get(str(cmd_id), '???'), command,))

self.process_command(cmd_id, int(args[1]), args[2])

except:

traceback.print_exc()

sys.stderr.write("Can't process net command: %s\n" % command)

sys.stderr.flush()

except:

traceback.print_exc()

self.handle_except()

When starting debugging, this error frequently appears:

r = r.decode('utf-8')

^^^^^^^^^^^^^^^^^

UnicodeDecodeError: 'utf-8' codec can't decode bytes in position 1022-1023: unexpected end of data
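
My guess is that `recv(1024)` sometimes returns a chunk that ends in the middle of a multi-byte UTF-8 character, which would match positions 1022-1023 in the error. Below is a minimal sketch of decoding chunk by chunk with the standard library's incremental decoder. `decode_chunks` is a hypothetical helper for illustration, not pydevd code.

```python
import codecs


def decode_chunks(chunks):
    """Decode byte chunks that may split a multi-byte UTF-8 character."""
    decoder = codecs.getincrementaldecoder("utf-8")()
    parts = []
    for chunk in chunks:
        # Incomplete trailing bytes are buffered until the next chunk arrives
        parts.append(decoder.decode(chunk))
    # final=True raises if the stream really ends with a truncated character
    parts.append(decoder.decode(b"", final=True))
    return "".join(parts)


# 'é' is two bytes in UTF-8 (0xC3 0xA9) and is split across the chunks here
print(decode_chunks([b"a\xc3", b"\xa9b"]))
```

Calling `.decode('utf-8')` on the first chunk alone fails with the same "unexpected end of data" message.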

|

open

|

2024-02-26T17:26:45Z

|

2024-02-26T17:26:45Z

|

https://github.com/reloadware/reloadium/issues/183

|

[] |

fangplu

| 0

|

httpie/cli

|

python

| 1,567

|

Online doc error

|

https://httpie.io/docs/cli/non-string-json-fields

> hobbies:='["http", "pies"]' \ # Raw JSON — Array

In my test (PyPI ver.), it should be

```

hobbies='["http", "pies"]'

```

instead of

```

hobbies:='["http", "pies"]'

```

|

closed

|

2024-03-05T03:45:23Z

|

2024-03-06T06:08:44Z

|

https://github.com/httpie/cli/issues/1567

|

[

"new"

] |

XizumiK

| 3

|

pyeventsourcing/eventsourcing

|

sqlalchemy

| 163

|

Question: inject services into aggregates.

|

Hello

I am new to your library.

I want to create event sourced aggregate with service injected into the constructor of aggregates.

Say I want to create an aggregate that handles a command that contains a password.

I need to hash the password before constructing the event.

Something like this:

```python

class Registration(AggregateRoot):

def __init__(self, *args, **kwargs):

# injected

self._id = kwargs.pop('id')

self._hash_password = kwargs.pop('hash_password')

super().__init__(*args, **kwargs)

# aggregate state

self._hashed_password = None

def set_password(self, **kwargs):

if self._hashed_password:

raise InvalidOperation

self.__trigger_event__(

PasswordSet,

**PasswordSet.validate({

'id': self._id,

'hashed_password': self._hash_password(kwargs.pop('password')),

})

)

def on_event(self, event):

if isinstance(event, PasswordSet):

self._hashed_password = event.hashed_password

```

as you can see here I inject "hash_password" service which is actually a python method.

However, when I try to construct the aggregate.

```python

test_registration_aggregate = Registration.__create__(

id='abcd',

hash_password=lambda x: x,

)

```

I got the error

```

Traceback (most recent call last):

File "/home/runner/app/user_registrations/tests/practice.py", line 20, in setUp

hash_password=lambda: 'new_password',

File "/home/runner/.local/share/virtualenvs/app-i5Lb5gVx/lib/python3.7/site-packages/eventsourcing/domain/model/entity.py", line 229, in __create__

return super(EntityWithHashchain, cls).__create__(*args, **kwargs)

File "/home/runner/.local/share/virtualenvs/app-i5Lb5gVx/lib/python3.7/site-packages/eventsourcing/domain/model/entity.py", line 63, in __create__

**kwargs

File "/home/runner/.local/share/virtualenvs/app-i5Lb5gVx/lib/python3.7/site-packages/eventsourcing/domain/model/events.py", line 167, in __init__

self.__dict__['__event_hash__'] = self.__hash_object__(self.__dict__)

File "/home/runner/.local/share/virtualenvs/app-i5Lb5gVx/lib/python3.7/site-packages/eventsourcing/domain/model/events.py", line 145, in __hash_object__

return hash_object(cls.__json_encoder_class__, obj)

File "/home/runner/.local/share/virtualenvs/app-i5Lb5gVx/lib/python3.7/site-packages/eventsourcing/utils/hashing.py", line 12, in hash_object

cls=json_encoder_class,

File "/home/runner/.local/share/virtualenvs/app-i5Lb5gVx/lib/python3.7/site-packages/eventsourcing/utils/transcoding.py", line 133, in json_dumps

cls=cls,

File "/usr/local/lib/python3.7/json/__init__.py", line 238, in dumps

**kw).encode(obj)

File "/usr/local/lib/python3.7/json/encoder.py", line 199, in encode

chunks = self.iterencode(o, _one_shot=True)

File "/home/runner/.local/share/virtualenvs/app-i5Lb5gVx/lib/python3.7/site-packages/eventsourcing/utils/transcoding.py", line 23, in iterencode

return super(ObjectJSONEncoder, self).iterencode(o, _one_shot=_one_shot)

File "/usr/local/lib/python3.7/json/encoder.py", line 257, in iterencode

return _iterencode(o, 0)

File "/home/runner/.local/share/virtualenvs/app-i5Lb5gVx/lib/python3.7/site-packages/eventsourcing/utils/transcoding.py", line 65, in default

return JSONEncoder.default(self, obj)

File "/usr/local/lib/python3.7/json/encoder.py", line 179, in default

raise TypeError(f'Object of type {o.__class__.__name__} '

TypeError: Object of type builtin_function_or_method is not JSON serializable

```

So I assume that whatever is passed into `__create__` has to be serializable.

But how else can I pass domain services into aggregates?

|

closed

|

2018-10-17T07:57:03Z

|

2018-11-01T02:01:01Z

|

https://github.com/pyeventsourcing/eventsourcing/issues/163

|

[] |

midnight-wonderer

| 4

|

thp/urlwatch

|

automation

| 58

|

Automatically cleaning up cached content

|

Does urlwatch automatically clean up its cache? i.e. keep only the latest version of a page and delete any old versions.

|

closed

|

2016-03-11T11:39:19Z

|

2016-03-12T12:34:41Z

|

https://github.com/thp/urlwatch/issues/58

|

[] |

Immortalin

| 7

|

polakowo/vectorbt

|

data-visualization

| 313

|

Internal numba list grows with each iteration by ~10mb

|

Hi,

I have noticed that if I call `from_signals`/`from_random_signals` inside a loop, some internal numba list grows with each iteration by ~10mb. If you have a large loop, then this fills up your memory quite quickly.

Here is an example which shows this issue:

```Python

import vectorbt as vbt

import numpy as np

from pympler import muppy, summary

import gc

price = vbt.YFData.download('BTC-USD').get('Close')

symbols = ["BTC-USD", "ETH-USD"]

price = vbt.YFData.download(symbols, missing_index='drop').get('Close')

for i in range(10):

n = np.random.randint(10, 101, size=1000).tolist()

portfolio = vbt.Portfolio.from_random_signals(price, n=n, init_cash=100, seed=42)

del n, portfolio

gc.collect()

all_objects = muppy.get_objects()

sum1 = summary.summarize(all_objects)

summary.print_(sum1)

# uncomment if you want to see the content of that list

#list_obj = [ao for ao in all_objects if isinstance(ao, list)]

#

#for d in list_obj:

# print(d)

# print(len(d))

```

You will see in the summary which is printed in each iteration, that there is an object of type `list` which grows with each iteration.

Any idea how to solve that issue?

Thank you very much! And nice project btw!

|

closed

|

2021-12-30T11:09:28Z

|

2022-01-02T13:55:29Z

|

https://github.com/polakowo/vectorbt/issues/313

|

[] |

FalsePositiv3

| 11

|

abhiTronix/vidgear

|

dash

| 137

|

Framerate < 1 fps, display of out of date frames, in example code "Using NetGear_Async with Variable Parameters"

|

## Description

The client for the example [Using NetGear_Async with Variable Parameters](https://abhitronix.github.io/vidgear/gears/netgear_async/usage/) does:

1. display the first frame from the server on connection rather than the most recent

2. display frames updating at a frame rate < 1 per second

### Acknowledgment

<!--- By posting an issue you acknowledge the following: (Put an `x` in all the boxes that apply(important)) -->

- [x] I have searched the [issues](https://github.com/abhiTronix/vidgear/issues) for my issue and found nothing related or helpful.

- [x] I have read the [Documentation](https://abhitronix.github.io/vidgear).

- [x] I have read the [Issue Guidelines](https://abhitronix.github.io/contribution/issue/).

### Environment

Server:

Python 3.8

Raspberry Pi 4 4Gb

Current Raspbian

Current test branch of vidgear installed today (however issue occurred with regular pip3 branch too)

Running on a local network, (other video-streaming approaches do work at expected frame rates, seemingly implying it's not the network)

Client:

Mac OS Catalina

Python 3.8

Current pip3 install vidgear[asyncio]

### Expected Behavior

Whether the server or the client starts first, the client will display the latest frame from the server with reasonable frame rate (eg, > 5 frames per second).

### Actual Behavior

When the server starts first, then when the client connects, it starts showing frames one by one from when the server started at an extremely low rate (frame rate < 1 per second).

When the client starts first, and then the server starts, the client does start with the servers first (current) frame, but the frame rate on the client is so slow as to quickly get behind (< 1 frame per second)

### Possible Fix

Maybe there is config required to make the client/server ignore past frames, and start from the most recent frame when they connect?

Also, this warning prints out when the server starts:

`CamGear :: WARNING :: Threaded Queue Mode is disabled for the current video source!`

If I wave my arms like a madman while starting the server, and then start the client, I can see my arms waving in super super slow motion. That indicates to me that the server is in fact taking frames at the right frame rate (or else the arm wave would be jerkier), and the issue may be more with the client side, or in the transport of frames.

### Steps to reproduce

1. On the server, make sure to get the test branch of vidgear[asyncio] as described here: https://abhitronix.github.io/vidgear/installation/source_install/#installation

2. On the server, setup the example code "Using NetGear_Async with Variable Parameters" and run it:

```

# import libraries

from vidgear.gears.asyncio import NetGear_Async

import asyncio

#initialize Server with suitable source

server=NetGear_Async(source=0, address='192.168.1.14', port='5454', protocol='tcp', pattern=3, logging=True).launch()

if __name__ == '__main__':

#set event loop

asyncio.set_event_loop(server.loop)

try:

#run your main function task until it is complete

server.loop.run_until_complete(server.task)

except KeyboardInterrupt:

#wait for keyboard interrupt

pass

finally:

# finally close the server

server.close()

```

3. On the client, setup the code from the same example and run it:

```

# import libraries

from vidgear.gears.asyncio import NetGear_Async

import cv2, asyncio

#define and launch Client with `receive_mode=True`. #change following IP address '192.168.x.xxx' with yours

client=NetGear_Async(address='192.168.1.14', port='5454', protocol='tcp', pattern=3, receive_mode=True, logging=True).launch()

#Create a async function where you want to show/manipulate your received frames

async def main():

# loop over Client's Asynchronous Frame Generator

async for frame in client.recv_generator():

# do something with received frames here

# Show output window

cv2.imshow("Output Frame", frame)

key=cv2.waitKey(1) & 0xFF

#await before continuing

await asyncio.sleep(0.00001)

if __name__ == '__main__':

#Set event loop to client's

asyncio.set_event_loop(client.loop)

try:

#run your main function task until it is complete

client.loop.run_until_complete(main())

except KeyboardInterrupt:

#wait for keyboard interrupt

pass

# close all output window

cv2.destroyAllWindows()

# safely close client

client.close()

```

|

closed

|

2020-06-13T19:10:22Z

|

2020-06-25T00:54:05Z

|

https://github.com/abhiTronix/vidgear/issues/137

|

[

"QUESTION :question:",

"SOLVED :checkered_flag:"

] |

whogben

| 11

|

InstaPy/InstaPy

|

automation

| 5,842

|

Index Error

|

When I try to run my code it seems to go fine for a while (around 40mins), and then I get this error:

`Traceback (most recent call last):

File "/Users/rafael/Documents/Projects/InstaPySimon/InstagramSimonSetup.py", line 92, in <module>

session.unfollow_users(amount=500, instapy_followed_enabled=True, instapy_followed_param="all", style="RANDOM", unfollow_after=12 * 60 * 60, sleep_delay=501)

File "/Users/rafael/.pyenv/versions/3.8.1/lib/python3.8/site-packages/instapy/instapy.py", line 2001, in like_by_tags

success = process_comments(

File "/Users/rafael/.pyenv/versions/3.8.1/lib/python3.8/site-packages/instapy/comment_util.py", line 456, in process_comments

comment_state, msg = comment_image(

File "/Users/rafael/.pyenv/versions/3.8.1/lib/python3.8/site-packages/instapy/comment_util.py", line 67, in comment_image

rand_comment = random.choice(comments).format(username)

IndexError: Replacement index 1 out of range for positional args tuple`

## InstaPy configuration

` # FOLLOW

session.set_do_follow(enabled=True, percentage=80, times=1)

session.unfollow_users(amount=500, instapy_followed_enabled=True, instapy_followed_param="all", style="RANDOM", unfollow_after=12 * 60 * 60, sleep_delay=501)

`

I tried RANDOM and FIFO but it produces the same error...
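
For reference, the `IndexError` in the last traceback frame can be reproduced without InstaPy. This is a minimal sketch, assuming one of the configured comments contains more `{}` placeholders than the single `username` argument passed to `str.format`. The comment strings and the `render` helper below are hypothetical and not taken from this setup:

```python
# Hypothetical comment templates; the second has two positional placeholders.
comments = ["Nice shot @{}!", "Great work @{}, love it {}"]
username = "some_user"


def render(template: str, name: str) -> str:
    """Format a template the way the traceback shows: template.format(name)."""
    return template.format(name)


# One placeholder, one argument: formats fine.
print(render(comments[0], username))

try:
    # Two placeholders, one argument: str.format raises IndexError.
    render(comments[1], username)
except IndexError as exc:
    print(f"IndexError: {exc}")
```

If that is the cause, it could also explain why the run only fails after a while: `random.choice` picks the offending comment only occasionally.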

|

closed

|

2020-10-23T11:02:15Z

|

2020-12-09T00:54:45Z

|

https://github.com/InstaPy/InstaPy/issues/5842

|

[

"wontfix"

] |

rafo

| 2

|

erdewit/ib_insync

|

asyncio

| 610

|

ib.portfolio() request for specific subaccount fails?

|

Thanks for the great library @erdewit.

I am trying to load the portfolio for a specific sub-account.

I tried using:

`ib.portfolio()`

It returns `[]` because the first portfolio is empty.

I checked the source for `ib.portfolio()` and modified it to take an account argument.

```

def portfolio(self, account) -> List[PortfolioItem]:

    """List of portfolio items of the default account."""

    #account = self.wrapper.accounts[0]

    return [v for v in self.wrapper.portfolio[account].values()]

```

this didn't work either.

The positions() code works with a subaccount specified. Am I overlooking something here?

I needed to update ib_insync. It works now.

Please close.

|

closed

|

2023-06-29T12:26:44Z

|

2023-06-29T13:30:16Z

|

https://github.com/erdewit/ib_insync/issues/610

|

[] |

SidGoy

| 0

|

plotly/dash

|

dash

| 3,062

|

Improve Dependency Management by removing packages not needed at runtime

|

Dash runtime requirements include some packages that are not needed at runtime.

See [requirements/install.txt](https://github.com/plotly/dash/blob/0d9cd2c2a611e1b8cce21d1d46b69234c20bdb11/requirements/install.txt)

**Is your feature request related to a problem? Please describe.**

Working in an enterprise setting, there are strict requirements regarding deploying secure software. Reducing the attack surface by installing only essential packages is key. As of now, dash requires some packages to be installed in the runtime environment that are either not needed to run the app at all or not needed on particular (newer) Python versions.

**Describe the solution you'd like**

1. Leverage PEP 518, which allows removing `setuptools` as a runtime dependency and adding it as a build-time dependency.

2. `importlib_metadata` is sparsely used. Depending on the Python version and the features needed from this package, it is not required at all and can be replaced with `importlib.metadata`, which is included in the Python standard library (at least for >3.8). Require it only for older Python versions. You can choose between the standard-library version and the installed package by checking the Python version when the packages are imported. Add e.g. `importlib-metadata ; python_version < "3.9"` to the respective requirements file.

```python

import sys

if sys.version_info >= (3, 8):

    from importlib.metadata import ...

else:

    from importlib_metadata import ...

```

3. I am pretty sure that the `typing_extensions` package is not needed for newer python versions (>=3.10). If you do not leverage runtime type checking you can make it optional. For newer python versions the types can be imported from the `typing` package. Additionally, you can leverage the `typing.TYPE_CHECKING` constant. Again, require it only for older python versions and check the python version before importing the package.
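
Points 2 and 3 can be sketched together. This is a minimal illustration under stated assumptions, not Dash's actual code: the imported names (`version`, `Literal`, `TypeAlias`), the `Mode` alias, and the `installed_version` helper are chosen only for the example.

```python
import sys
from typing import TYPE_CHECKING, Optional

# Point 2: prefer the standard-library module where it exists (Python 3.8+).
if sys.version_info >= (3, 8):
    from importlib.metadata import PackageNotFoundError, version
else:  # the backport is only needed on older interpreters
    from importlib_metadata import PackageNotFoundError, version

# Point 3: take typing names from the standard library when available
# (Literal is in `typing` from Python 3.8 on).
if sys.version_info >= (3, 8):
    from typing import Literal
else:
    from typing_extensions import Literal

if TYPE_CHECKING:
    # Seen only by static type checkers, so never imported at runtime.
    from typing_extensions import TypeAlias

# The quoted annotation is stored as a plain string and not evaluated at runtime.
Mode: "TypeAlias" = Literal["debug", "production"]


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


print(installed_version("surely-not-an-installed-package"))
```

With gates like these in place, the requirements file can carry matching environment markers so that neither backport is installed on newer interpreters.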

**Describe alternatives you've considered**

No

|

open

|

2024-11-06T07:36:03Z

|

2024-11-11T14:44:18Z

|

https://github.com/plotly/dash/issues/3062

|

[

"feature",

"P2"

] |

waldemarmeier

| 1

|

keras-team/autokeras

|

tensorflow

| 928

|

Loss starts from initial value every epoch for structured classifier

|

### Bug Description

Loss starts from initial value every epoch for structured classifier:

> Train for 16 steps, validate for 4 steps

> 1/16 [>.............................] - ETA: 1s - loss: 2.3992 - accuracy: 0.8438

> 4/16 [======>.......................] - ETA: 0s - loss: 2.6361 - accuracy: 0.8281

> 7/16 [============>.................] - ETA: 0s - loss: 2.6707 - accuracy: 0.8259

> 10/16 [=================>............] - ETA: 0s - loss: 2.4452 - accuracy: 0.8406

> 13/16 [=======================>......] - ETA: 0s - loss: 2.5466 - accuracy: 0.8341

> 16/16 [==============================] - 1s 36ms/step - loss: 2.5186 - accuracy: 0.8347 - val_loss: 2.2202 - val_accuracy: 0.8560