| repo_name (string, length 9-75) | topic (string, 30 classes) | issue_number (int64, 1 to 203k) | title (string, length 1-976) | body (string, length 0-254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, length 38-105) | labels (list, length 0-9) | user_login (string, length 1-39) | comments_count (int64, 0 to 452) |

|---|---|---|---|---|---|---|---|---|---|---|---|

| pywinauto/pywinauto | automation | 887 | Error after 'from pywinauto.application import Application' |

## Expected Behavior

```
from pywinauto.application import Application
```

## Actual Behavior

```
Traceback (most recent call last):
  File "d:\Devel\python\lib\ctypes\__init__.py", line 121, in WINFUNCTYPE
    return _win_functype_cache[(restype, argtypes, flags)]
KeyError: (<class 'ctypes.HRESULT'>, (<class 'ctypes.c_long'>, <class 'comtypes.automation.tagVARIANT'>, <class 'comtypes.LP_POINTER(IUIAutomationCondition)'>), 0)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "d:\Devel\python\lib\site-packages\pywinauto\__init__.py", line 89, in <module>
    from . import findwindows
  File "d:\Devel\python\lib\site-packages\pywinauto\findwindows.py", line 42, in <module>
    from . import controls
  File "d:\Devel\python\lib\site-packages\pywinauto\controls\__init__.py", line 36, in <module>
    from . import uiawrapper # register "uia" back-end (at the end of uiawrapper module)
  File "d:\Devel\python\lib\site-packages\pywinauto\controls\uiawrapper.py", line 47, in <module>
    from ..uia_defines import IUIA
  File "d:\Devel\python\lib\site-packages\pywinauto\uia_defines.py", line 181, in <module>
    pattern_ids = _build_pattern_ids_dic()
  File "d:\Devel\python\lib\site-packages\pywinauto\uia_defines.py", line 169, in _build_pattern_ids_dic
    if hasattr(IUIA().ui_automation_client, cls_name):
  File "d:\Devel\python\lib\site-packages\pywinauto\uia_defines.py", line 50, in __call__
    cls._instances[cls] = super(_Singleton, cls).__call__(*args, **kwargs)
  File "d:\Devel\python\lib\site-packages\pywinauto\uia_defines.py", line 60, in __init__
    self.UIA_dll = comtypes.client.GetModule('UIAutomationCore.dll')
  File "d:\Devel\python\lib\site-packages\comtypes\client\_generate.py", line 110, in GetModule
    mod = _CreateWrapper(tlib, pathname)
  File "d:\Devel\python\lib\site-packages\comtypes\client\_generate.py", line 184, in _CreateWrapper
    mod = _my_import(fullname)
  File "d:\Devel\python\lib\site-packages\comtypes\client\_generate.py", line 24, in _my_import
    return __import__(fullname, globals(), locals(), ['DUMMY'])
  File "d:\Devel\python\lib\site-packages\comtypes\gen\_944DE083_8FB8_45CF_BCB7_C477ACB2F897_0_1_0.py", line 1870, in <module>
    ( ['out', 'retval'], POINTER(POINTER(IUIAutomationElement)), 'element' )),
  File "d:\Devel\python\lib\site-packages\comtypes\__init__.py", line 329, in __setattr__
    self._make_methods(value)
  File "d:\Devel\python\lib\site-packages\comtypes\__init__.py", line 698, in _make_methods
    prototype = WINFUNCTYPE(restype, *argtypes)
  File "d:\Devel\python\lib\ctypes\__init__.py", line 123, in WINFUNCTYPE
    class WinFunctionType(_CFuncPtr):
TypeError: item 2 in _argtypes_ passes a union by value, which is unsupported.
```

## Steps to Reproduce the Problem

## Short Example of Code to Demonstrate the Problem

## Specifications

- Pywinauto version: comtypes-1.1.7 pywin32-227 pywinauto-0.6.8 six-1.14.0

- Python version and bitness: Python 3.7.6, 32bit

- Platform and OS: Win 10

| closed | 2020-02-03T15:30:10Z | 2020-02-13T17:17:44Z | https://github.com/pywinauto/pywinauto/issues/887 | ["duplicate", "3rd-party issue"] | arozehnal | 5 |

| scikit-optimize/scikit-optimize | scikit-learn | 1,076 | ImportError when using skopt with scikit-learn 1.0 |

When importing scikit-optimize, the following ImportError is raised:

    Traceback (most recent call last):
      File "<stdin>", line 1, in <module>
      File "/usr/local/lib/python3.7/dist-packages/skopt/__init__.py", line 55, in <module>
        from .searchcv import BayesSearchCV
      File "/usr/local/lib/python3.7/dist-packages/skopt/searchcv.py", line 16, in <module>
        from sklearn.utils.fixes import MaskedArray
    ImportError: cannot import name 'MaskedArray' from 'sklearn.utils.fixes' (/usr/local/lib/python3.7/dist-packages/sklearn/utils/fixes.py)

This issue started occurring after upgrading scikit-learn from 0.24.2 to 1.0.

System dependencies:

- Python 3.7.12
- scikit-image 0.18.3
- scikit-learn 1.0
- scikit-optimize 0.8.1
- sklearn 0.0
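As far as I can tell, `sklearn.utils.fixes.MaskedArray` was a deprecated wrapper around NumPy's `numpy.ma.MaskedArray` and was removed in scikit-learn 1.0, which matches the traceback above. A hedged workaround sketch for code that has to run against both versions: look the name up in the old location first and fall back to NumPy. The helper `import_first` is hypothetical (it is not part of scikit-optimize or scikit-learn); with it, the failing line would become `MaskedArray = import_first("MaskedArray", "sklearn.utils.fixes", "numpy.ma")`.

```python
import importlib


def import_first(attr_name, *module_names):
    """Return ``attr_name`` from the first module in ``module_names`` that defines it.

    Hypothetical helper: modules that cannot be imported, or that no longer
    define the attribute (as with ``sklearn.utils.fixes.MaskedArray`` after
    scikit-learn 1.0), are skipped in order.
    """
    for module_name in module_names:
        try:
            module = importlib.import_module(module_name)
        except ImportError:
            continue  # module missing entirely: try the next candidate
        if hasattr(module, attr_name):
            return getattr(module, attr_name)
    raise ImportError(
        f"cannot import name {attr_name!r} from any of: {', '.join(module_names)}"
    )
```

Upgrading scikit-optimize to a release that no longer imports `MaskedArray` from scikit-learn, if one is available for your setup, avoids the need for a shim altogether.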

| closed | 2021-10-05T12:30:14Z | 2021-10-12T14:41:36Z | https://github.com/scikit-optimize/scikit-optimize/issues/1076 | [] | SenneDeproost | 3 |

| s3rius/FastAPI-template | fastapi | 96 | Request object isn't passed as argument |

Thanks for this package. I created a GraphQL app using the template, but I'm getting the error below. It seems FastAPI doesn't pass the request object.

```log
ERROR: Exception in ASGI application
Traceback (most recent call last):
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/uvicorn/protocols/websockets/websockets_impl.py", line 184, in run_asgi
    result = await self.app(self.scope, self.asgi_receive, self.asgi_send)
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/uvicorn/middleware/proxy_headers.py", line 75, in __call__
    return await self.app(scope, receive, send)
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/fastapi/applications.py", line 261, in __call__
    await super().__call__(scope, receive, send)
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/starlette/applications.py", line 112, in __call__
    await self.middleware_stack(scope, receive, send)
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/starlette/middleware/errors.py", line 146, in __call__
    await self.app(scope, receive, send)
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/starlette/exceptions.py", line 58, in __call__
    await self.app(scope, receive, send)
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/fastapi/middleware/asyncexitstack.py", line 21, in __call__
    raise e
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/fastapi/middleware/asyncexitstack.py", line 18, in __call__
    await self.app(scope, receive, send)
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/starlette/routing.py", line 656, in __call__
    await route.handle(scope, receive, send)
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/starlette/routing.py", line 315, in handle
    await self.app(scope, receive, send)
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/starlette/routing.py", line 77, in app
    await func(session)
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/fastapi/routing.py", line 264, in app
    solved_result = await solve_dependencies(
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/fastapi/dependencies/utils.py", line 498, in solve_dependencies
    solved_result = await solve_dependencies(
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/fastapi/dependencies/utils.py", line 498, in solve_dependencies
    solved_result = await solve_dependencies(
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/fastapi/dependencies/utils.py", line 498, in solve_dependencies
    solved_result = await solve_dependencies(
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/fastapi/dependencies/utils.py", line 523, in solve_dependencies
    solved = await solve_generator(
  File "/Users/test/Library/Caches/pypoetry/virtualenvs/fastapi-graphql-practice-1UuEp-7G-py3.10/lib/python3.10/site-packages/fastapi/dependencies/utils.py", line 443, in solve_generator
    cm = asynccontextmanager(call)(**sub_values)
  File "/Users/test/.pyenv/versions/3.10.2/lib/python3.10/contextlib.py", line 314, in helper
    return _AsyncGeneratorContextManager(func, args, kwds)
  File "/Users/test/.pyenv/versions/3.10.2/lib/python3.10/contextlib.py", line 103, in __init__
    self.gen = func(*args, **kwds)
TypeError: get_db_session() missing 1 required positional argument: 'request'
INFO: connection open
INFO: connection closed
```

| closed | 2022-07-05T07:01:34Z | 2022-10-13T21:26:26Z | https://github.com/s3rius/FastAPI-template/issues/96 | [] | devNaresh | 16 |

| unionai-oss/pandera | pandas | 1,261 | Fix to_script description |

#### Location of the documentation

[DataFrameSchema.to_script](https://github.com/unionai-oss/pandera/blob/62bc4840508ff1ac0df595b57b2152737a1228a2/pandera/api/pandas/container.py#L1251)

#### Documentation problem

This method has the description for `from_yaml`.

#### Suggested fix for documentation

Something like "Write `DataFrameSchema` to script".

| closed | 2023-07-15T19:25:28Z | 2023-07-17T17:47:29Z | https://github.com/unionai-oss/pandera/issues/1261 | ["docs"] | tmcclintock | 1 |

| onnx/onnx | pytorch | 5,926 | Add TopK node to a pretrained Brevitas model |

We are working with FINN-ONNX, and we want the pretrained Brevitas models that classify MNIST images to output the index (class) instead of a 1x10 probability tensor. To our knowledge, the node responsible for this is TopK.

Where do we have to add this layer, and what function can we add so that `export_qonnx` recognizes it as a TopK node?
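For reference, the ONNX `TopK` operator produces two outputs, the top-k values and their indices along an axis; with `k = 1` on the last axis of a 1x10 probability tensor, the indices output is the predicted class. The plain-Python sketch below only illustrates those semantics for a single row (largest values first, lower index first on ties, as the operator specification describes for the default settings). The function name `top_k` is illustrative; this is not the Brevitas, QONNX, or `export_qonnx` API, and inserting the node into the exported graph is left to those tools.

```python
def top_k(scores, k=1):
    """Illustrate ONNX TopK on one row: return (values, indices) of the k largest.

    Sorting is stable, so with reverse=True equal scores keep their original
    order, i.e. the lower index comes first on ties.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    indices = order[:k]
    values = [scores[i] for i in indices]
    return values, indices
```

For a classifier, only the second element of the result (the index) is kept as the class label.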

The desired block is in the following image:

| open | 2024-02-09T17:21:55Z | 2024-02-13T10:04:09Z | https://github.com/onnx/onnx/issues/5926 | ["question"] | abedbaltaji | 1 |

| flasgger/flasgger | flask | 443 | Compatibility Proposal for OpenAPI 3 |

This issue is for discussing OpenAPI 3 compatibility in Flasgger. Currently, the code distinguishes between the two specifications at runtime and mixes up the processing of both. In the long term, I believe this will lower code quality and make the code harder to maintain. Please share any suggestions or plans to make Flasgger work better with OpenAPI 3 and 2 at the same time.
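One possible way to keep the two specifications apart, sketched under the assumption that the spec is available as a plain dict: detect the version once from the top-level field (OpenAPI 3 documents declare `openapi: "3.x.y"`, Swagger/OpenAPI 2 documents declare `swagger: "2.0"`) and hand the document to a version-specific processor. All function names here are hypothetical placeholders, not Flasgger's API; the processor bodies only show where `definitions` (2.0) versus `components.schemas` (3.x) would be handled.

```python
def spec_major_version(spec):
    """Return 2 or 3 based on the spec's top-level version field."""
    if "openapi" in spec:
        # OpenAPI 3.x documents declare e.g. {"openapi": "3.0.3"}
        return int(str(spec["openapi"]).split(".")[0])
    if str(spec.get("swagger", "")).startswith("2"):
        # Swagger / OpenAPI 2.0 documents declare {"swagger": "2.0"}
        return 2
    raise ValueError("spec declares neither 'openapi' nor 'swagger'")


def process_v2(spec):
    # Hypothetical 2.0-only logic: schemas live under "definitions"
    return sorted(spec.get("definitions", {}))


def process_v3(spec):
    # Hypothetical 3.x-only logic: schemas live under "components" -> "schemas"
    return sorted(spec.get("components", {}).get("schemas", {}))


PROCESSORS = {2: process_v2, 3: process_v3}


def process_spec(spec):
    """Branch on the version once, then never mix 2.0 and 3.x handling."""
    return PROCESSORS[spec_major_version(spec)](spec)
```

The design choice is that version checks happen in one place, so each processor can assume a single specification.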

| open | 2020-11-21T18:15:27Z | 2021-11-14T08:53:02Z | https://github.com/flasgger/flasgger/issues/443 | [] | billyrrr | 3 |

| nteract/papermill | jupyter | 575 | Some weird error messages when executing a notebook involving pytorch |

I have a notebook for training a model using PyTorch. The notebook runs fine when I run it from the browser, but I ran into the following problem when executing it via papermill:

```
Generating grammar tables from /home/ubuntu/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/blib2to3/Grammar.txt
Writing grammar tables to /home/ubuntu/.cache/black/20.8b1/Grammar3.6.10.final.0.pickle
Writing failed: [Errno 2] No such file or directory: '/home/ubuntu/.cache/black/20.8b1/tmp0twtlmvs'
Generating grammar tables from /home/ubuntu/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/blib2to3/PatternGrammar.txt
Writing grammar tables to /home/ubuntu/.cache/black/20.8b1/PatternGrammar3.6.10.final.0.pickle
Writing failed: [Errno 2] No such file or directory: '/home/ubuntu/.cache/black/20.8b1/tmp2_jvdud_'
Executing: 0%| | 0/23 [00:00<?, ?cell/s]Executing notebook with kernel: pytorch_p36
Executing: 22%|████████████████▎ | 5/23 [00:03<00:13, 1.38cell/s]
Traceback (most recent call last):
  File "/home/ubuntu/anaconda3/envs/pytorch_p36/bin/papermill", line 8, in <module>
    sys.exit(papermill())
  File "/home/ubuntu/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/click/core.py", line 764, in __call__
    return self.main(*args, **kwargs)
  File "/home/ubuntu/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/click/core.py", line 717, in main
    rv = self.invoke(ctx)
  File "/home/ubuntu/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/click/core.py", line 956, in invoke
    return ctx.invoke(self.callback, **ctx.params)
  File "/home/ubuntu/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/click/core.py", line 555, in invoke
    return callback(*args, **kwargs)
  File "/home/ubuntu/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/click/decorators.py", line 17, in new_func
    return f(get_current_context(), *args, **kwargs)
  File "/home/ubuntu/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/papermill/cli.py", line 256, in papermill
    execution_timeout=execution_timeout,
  File "/home/ubuntu/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/papermill/execute.py", line 118, in execute_notebook
    raise_for_execution_errors(nb, output_path)
  File "/home/ubuntu/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/papermill/execute.py", line 230, in raise_for_execution_errors
    raise error
papermill.exceptions.PapermillExecutionError:
---------------------------------------------------------------------------
Exception encountered at "In [2]":
---------------------------------------------------------------------------
RuntimeError                              Traceback (most recent call last)
<ipython-input-2-3916aaf64ab2> in <module>
----> 1 from torchvision import datasets, transforms
      2
      3 datasets.MNIST('data', download=True, transform=transforms.Compose([
      4     transforms.ToTensor(),
      5     transforms.Normalize((0.1307,), (0.3081,))

~/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/torchvision/__init__.py in <module>
      1 import warnings
      2
----> 3 from torchvision import models
      4 from torchvision import datasets
      5 from torchvision import ops

~/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/torchvision/models/__init__.py in <module>
     10 from .shufflenetv2 import *
     11 from . import segmentation
---> 12 from . import detection
     13 from . import video
     14 from . import quantization

~/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/torchvision/models/detection/__init__.py in <module>
----> 1 from .faster_rcnn import *
      2 from .mask_rcnn import *
      3 from .keypoint_rcnn import *

~/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/torchvision/models/detection/faster_rcnn.py in <module>
     11
     12 from .generalized_rcnn import GeneralizedRCNN
---> 13 from .rpn import AnchorGenerator, RPNHead, RegionProposalNetwork
     14 from .roi_heads import RoIHeads
     15 from .transform import GeneralizedRCNNTransform

~/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/torchvision/models/detection/rpn.py in <module>
      9 from torchvision.ops import boxes as box_ops
     10
---> 11 from . import _utils as det_utils
     12 from .image_list import ImageList
     13

~/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/torchvision/models/detection/_utils.py in <module>
     17
     18 @torch.jit.script
---> 19 class BalancedPositiveNegativeSampler(object):
     20     """
     21     This class samples batches, ensuring that they contain a fixed proportion of positives

~/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/torch/jit/__init__.py in script(obj, optimize, _frames_up, _rcb)
   1217         if _rcb is None:
   1218             _rcb = _jit_internal.createResolutionCallback(_frames_up + 1)
-> 1219         _compile_and_register_class(obj, _rcb, qualified_name)
   1220         return obj
   1221     else:

~/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/torch/jit/__init__.py in _compile_and_register_class(obj, rcb, qualified_name)
   1074 def _compile_and_register_class(obj, rcb, qualified_name):
   1075     ast = get_jit_class_def(obj, obj.__name__)
-> 1076     _jit_script_class_compile(qualified_name, ast, rcb)
   1077     _add_script_class(obj, qualified_name)
   1078

~/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/torch/jit/_recursive.py in try_compile_fn(fn, loc)
    220     # object
    221     rcb = _jit_internal.createResolutionCallbackFromClosure(fn)
--> 222     return torch.jit.script(fn, _rcb=rcb)
    223
    224

~/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/torch/jit/__init__.py in script(obj, optimize, _frames_up, _rcb)
   1224         if _rcb is None:
   1225             _rcb = _gen_rcb(obj, _frames_up)
-> 1226         fn = torch._C._jit_script_compile(qualified_name, ast, _rcb, get_default_args(obj))
   1227         # Forward docstrings
   1228         fn.__doc__ = obj.__doc__

RuntimeError:
builtin cannot be used as a value:
at /home/ubuntu/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/torchvision/models/detection/_utils.py:14:56
def zeros_like(tensor, dtype):
    # type: (Tensor, int) -> Tensor
    return torch.zeros_like(tensor, dtype=dtype, layout=tensor.layout,
                                                        ~~~~~~~~~~~~~ <--- HERE
                            device=tensor.device, pin_memory=tensor.is_pinned())
'zeros_like' is being compiled since it was called from '__torch__.torchvision.models.detection._utils.BalancedPositiveNegativeSampler.__call__'
at /home/ubuntu/anaconda3/envs/pytorch_p36/lib/python3.6/site-packages/torchvision/models/detection/_utils.py:72:12
            # randomly select positive and negative examples
            perm1 = torch.randperm(positive.numel(), device=positive.device)[:num_pos]
            perm2 = torch.randperm(negative.numel(), device=negative.device)[:num_neg]
            pos_idx_per_image = positive[perm1]
            neg_idx_per_image = negative[perm2]
            # create binary mask from indices
            pos_idx_per_image_mask = zeros_like(
            ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~... <--- HERE
                matched_idxs_per_image, dtype=torch.uint8
            )
            neg_idx_per_image_mask = zeros_like(
                matched_idxs_per_image, dtype=torch.uint8
            )
            pos_idx_per_image_mask[pos_idx_per_image] = torch.tensor(1, dtype=torch.uint8)
            neg_idx_per_image_mask[neg_idx_per_image] = torch.tensor(1, dtype=torch.uint8)
```
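Because the notebook works in the browser but fails under papermill, it may be worth confirming that both runs resolve the same interpreter and package versions. A TorchScript compile failure inside torchvision's detection utilities is the kind of error that can appear when the installed `torch` and `torchvision` versions do not match, although the traceback alone does not prove that. A minimal diagnostic cell, as a sketch: the function name `environment_report` is hypothetical, and it relies on `importlib.metadata`, which needs Python 3.8+ (on the Python 3.6 environment shown here, the `importlib_metadata` backport would be an assumed substitute).

```python
import sys
from importlib import metadata  # standard library since Python 3.8


def environment_report(packages=("torch", "torchvision")):
    """Return the kernel's interpreter path, Python version, and package versions."""
    report = {"python": sys.executable, "version": sys.version.split()[0]}
    for name in packages:
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = None  # not installed in this kernel's environment
    return report


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```

Running this once in the browser and once through papermill, then comparing the output, would show whether the two kernels actually differ.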

| closed | 2021-01-26T23:16:54Z | 2021-02-04T17:06:27Z | https://github.com/nteract/papermill/issues/575 | ["question"] | hongshanli23 | 12 |

| twopirllc/pandas-ta | pandas | 807 | SyntaxWarning: invalid escape sequence '\g' return re_.sub("([a-z])([A-Z])","\g<1> \g<2>", x).title() |

I got this warning after upgrading Python from 3.11 to 3.12:

    pandas_ta\utils\_core.py:14: SyntaxWarning: invalid escape sequence '\g'
      return re_.sub("([a-z])([A-Z])","\g<1> \g<2>", x).title()

- Python 3.12.4
- pandas_ta 0.3.14b

Do I need to downgrade to Python 3.11 for now?
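Since Python 3.12, an unrecognized escape sequence such as `\g` in a normal string literal triggers a `SyntaxWarning` at compile time (earlier versions emitted a `DeprecationWarning` instead). The string's value is unchanged, so the substitution itself still works; the conventional fix is to make the replacement a raw string so `re.sub` receives the `\g<1>` group reference verbatim. A minimal sketch of the corrected line, with the function name `camel_to_title` chosen for illustration (pandas-ta's own module imports `re` under the alias `re_`):

```python
import re


def camel_to_title(x):
    """Insert a space between a lowercase and an uppercase letter, then title-case."""
    # Raw strings: the backslashes in \g<1> and \g<2> reach re.sub untouched,
    # so no invalid-escape SyntaxWarning is raised when the module is compiled.
    return re.sub(r"([a-z])([A-Z])", r"\g<1> \g<2>", x).title()
```

For example, `camel_to_title("camelCaseString")` gives `"Camel Case String"`.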

| closed | 2024-07-02T05:57:48Z | 2024-12-01T22:06:59Z | https://github.com/twopirllc/pandas-ta/issues/807 | ["bug"] | kopes18 | 1 |

| deepfakes/faceswap | deep-learning | 1,356 | Training collapse |

*Note: For general usage questions and help, please use either our [FaceSwap Forum](https://faceswap.dev/forum) or [FaceSwap Discord server](https://discord.gg/FC54sYg). General usage questions are liable to be closed without response.*

**Crash reports MUST be included when reporting bugs.**

**Describe the bug**

Please take a screenshot

**To Reproduce**

Steps to reproduce the behavior:

1. input A

2. input B

3. input model

4. click train

**Expected behavior**

normal operation

**Screenshots**

**Desktop (please complete the following information):**

- OS: Windows 11 23H2 22635.2486

- Python Version [e.g. 3.5, 3.6]

- Conda Version [e.g. 4.5.12]

- Commit ID [e.g. e83819f]

-

**Additional context**

============ System Information ============

backend: nvidia

encoding: cp936

git_branch: master

git_commits: 8e6c6c3 patch writer: Sort the json file by key

gpu_cuda: 12.3

gpu_cudnn: No global version found. Check Conda packages for Conda cuDNN

gpu_devices: GPU_0: NVIDIA GeForce RTX 3050 Laptop GPU

gpu_devices_active: GPU_0

gpu_driver: 545.84

gpu_vram: GPU_0: 4096MB (3977MB free)

os_machine: AMD64

os_platform: Windows-10-10.0.22635-SP0

os_release: 10

py_command: d:\faceswap/faceswap.py gui

py_conda_version: conda 23.9.0

py_implementation: CPython

py_version: 3.10.13

py_virtual_env: True

sys_cores: 20

sys_processor: Intel64 Family 6 Model 154 Stepping 3, GenuineIntel

sys_ram: Total: 16076MB, Available: 4159MB, Used: 11916MB, Free: 4159MB

=============== Pip Packages ===============

absl-py==2.0.0

astunparse==1.6.3

cachetools==5.3.1

certifi==2023.7.22

charset-normalizer==3.3.0

colorama @ file:///C:/b/abs_a9ozq0l032/croot/colorama_1672387194846/work

contourpy @ file:///C:/b/abs_d5rpy288vc/croots/recipe/contourpy_1663827418189/work

cycler @ file:///tmp/build/80754af9/cycler_1637851556182/work

decorator @ file:///opt/conda/conda-bld/decorator_1643638310831/work

fastcluster @ file:///D:/bld/fastcluster_1695650232190/work

ffmpy @ file:///home/conda/feedstock_root/build_artifacts/ffmpy_1659474992694/work

flatbuffers==23.5.26

fonttools==4.25.0

gast==0.4.0

google-auth==2.23.3

google-auth-oauthlib==0.4.6

google-pasta==0.2.0

grpcio==1.59.0

h5py==3.10.0

idna==3.4

imageio @ file:///C:/b/abs_3eijmwdodc/croot/imageio_1695996500830/work

imageio-ffmpeg @ file:///home/conda/feedstock_root/build_artifacts/imageio-ffmpeg_1694632425602/work

joblib @ file:///C:/b/abs_1anqjntpan/croot/joblib_1685113317150/work

keras==2.10.0

Keras-Preprocessing==1.1.2

kiwisolver @ file:///C:/b/abs_88mdhvtahm/croot/kiwisolver_1672387921783/work

libclang==16.0.6

Markdown==3.5

MarkupSafe==2.1.3

matplotlib @ file:///C:/b/abs_085jhivdha/croot/matplotlib-suite_1693812524572/work

mkl-fft @ file:///C:/b/abs_19i1y8ykas/croot/mkl_fft_1695058226480/work

mkl-random @ file:///C:/b/abs_edwkj1_o69/croot/mkl_random_1695059866750/work

mkl-service==2.4.0

munkres==1.1.4

numexpr @ file:///C:/b/abs_5fucrty5dc/croot/numexpr_1696515448831/work

numpy @ file:///C:/b/abs_9fu2cs2527/croot/numpy_and_numpy_base_1695830496596/work/dist/numpy-1.26.0-cp310-cp310-win_amd64.whl#sha256=11367989d61b64039738e0c68c95c6b797a41c4c75ec2147c0541b21163786eb

nvidia-ml-py @ file:///home/conda/feedstock_root/build_artifacts/nvidia-ml-py_1693425331741/work

oauthlib==3.2.2

opencv-python==4.8.1.78

opt-einsum==3.3.0

packaging @ file:///C:/b/abs_28t5mcoltc/croot/packaging_1693575224052/work

Pillow @ file:///C:/b/abs_153xikw91n/croot/pillow_1695134603563/work

ply==3.11

protobuf==3.19.6

psutil @ file:///C:/Windows/Temp/abs_b2c2fd7f-9fd5-4756-95ea-8aed74d0039flsd9qufz/croots/recipe/psutil_1656431277748/work

pyasn1==0.5.0

pyasn1-modules==0.3.0

pyparsing @ file:///C:/Users/BUILDE~1/AppData/Local/Temp/abs_7f_7lba6rl/croots/recipe/pyparsing_1661452540662/work

PyQt5==5.15.7

PyQt5-sip @ file:///C:/Windows/Temp/abs_d7gmd2jg8i/croots/recipe/pyqt-split_1659273064801/work/pyqt_sip

python-dateutil @ file:///tmp/build/80754af9/python-dateutil_1626374649649/work

pywin32==305.1

pywinpty @ file:///C:/ci_310/pywinpty_1644230983541/work/target/wheels/pywinpty-2.0.2-cp310-none-win_amd64.whl

requests==2.31.0

requests-oauthlib==1.3.1

rsa==4.9

scikit-learn @ file:///C:/b/abs_55olq_4gzc/croot/scikit-learn_1690978955123/work

scipy==1.11.3

sip @ file:///C:/Windows/Temp/abs_b8fxd17m2u/croots/recipe/sip_1659012372737/work

six @ file:///tmp/build/80754af9/six_1644875935023/work

tensorboard==2.10.1

tensorboard-data-server==0.6.1

tensorboard-plugin-wit==1.8.1

tensorflow==2.10.1

tensorflow-estimator==2.10.0

tensorflow-io-gcs-filesystem==0.31.0

termcolor==2.3.0

threadpoolctl @ file:///Users/ktietz/demo/mc3/conda-bld/threadpoolctl_1629802263681/work

toml @ file:///tmp/build/80754af9/toml_1616166611790/work

tornado @ file:///C:/b/abs_0cbrstidzg/croot/tornado_1696937003724/work

tqdm @ file:///C:/b/abs_f76j9hg7pv/croot/tqdm_1679561871187/work

typing_extensions==4.8.0

urllib3==2.0.7

Werkzeug==3.0.0

wrapt==1.15.0

============== Conda Packages ==============

# packages in environment at C:\Users\cui19\MiniConda3\envs\faceswap:

#

# Name Version Build Channel

absl-py 2.0.0 pypi_0 pypi

astunparse 1.6.3 pypi_0 pypi

blas 1.0 mkl

brotli 1.0.9 h2bbff1b_7

brotli-bin 1.0.9 h2bbff1b_7

bzip2 1.0.8 he774522_0

ca-certificates 2023.08.22 haa95532_0

cachetools 5.3.1 pypi_0 pypi

certifi 2023.7.22 pypi_0 pypi

charset-normalizer 3.3.0 pypi_0 pypi

colorama 0.4.6 py310haa95532_0

contourpy 1.0.5 py310h59b6b97_0

cudatoolkit 11.8.0 hd77b12b_0

cudnn 8.9.2.26 cuda11_0

cycler 0.11.0 pyhd3eb1b0_0

decorator 5.1.1 pyhd3eb1b0_0

fastcluster 1.2.6 py310hecd3228_3 conda-forge

ffmpeg 4.3.1 ha925a31_0 conda-forge

ffmpy 0.3.0 pyhb6f538c_0 conda-forge

flatbuffers 23.5.26 pypi_0 pypi

fonttools 4.25.0 pyhd3eb1b0_0

freetype 2.12.1 ha860e81_0

gast 0.4.0 pypi_0 pypi

giflib 5.2.1 h8cc25b3_3

git 2.40.1 haa95532_1

glib 2.69.1 h5dc1a3c_2

google-auth 2.23.3 pypi_0 pypi

google-auth-oauthlib 0.4.6 pypi_0 pypi

google-pasta 0.2.0 pypi_0 pypi

grpcio 1.59.0 pypi_0 pypi

h5py 3.10.0 pypi_0 pypi

icc_rt 2022.1.0 h6049295_2

icu 58.2 ha925a31_3

idna 3.4 pypi_0 pypi

imageio 2.31.4 py310haa95532_0

imageio-ffmpeg 0.4.9 pyhd8ed1ab_0 conda-forge

intel-openmp 2023.1.0 h59b6b97_46319

joblib 1.2.0 py310haa95532_0

jpeg 9e h2bbff1b_1

keras 2.10.0 pypi_0 pypi

keras-preprocessing 1.1.2 pypi_0 pypi

kiwisolver 1.4.4 py310hd77b12b_0

krb5 1.20.1 h5b6d351_0

lerc 3.0 hd77b12b_0

libbrotlicommon 1.0.9 h2bbff1b_7

libbrotlidec 1.0.9 h2bbff1b_7

libbrotlienc 1.0.9 h2bbff1b_7

libclang 16.0.6 pypi_0 pypi

libclang13 14.0.6 default_h8e68704_1

libdeflate 1.17 h2bbff1b_1

libffi 3.4.4 hd77b12b_0

libiconv 1.16 h2bbff1b_2

libpng 1.6.39 h8cc25b3_0

libpq 12.15 h906ac69_1

libtiff 4.5.1 hd77b12b_0

libwebp 1.3.2 hbc33d0d_0

libwebp-base 1.3.2 h2bbff1b_0

libxml2 2.10.4 h0ad7f3c_1

libxslt 1.1.37 h2bbff1b_1

libzlib 1.2.13 hcfcfb64_5 conda-forge

libzlib-wapi 1.2.13 hcfcfb64_5 conda-forge

lz4-c 1.9.4 h2bbff1b_0

markdown 3.5 pypi_0 pypi

markupsafe 2.1.3 pypi_0 pypi

matplotlib 3.7.2 py310haa95532_0

matplotlib-base 3.7.2 py310h4ed8f06_0

mkl 2023.1.0 h6b88ed4_46357

mkl-service 2.4.0 py310h2bbff1b_1

mkl_fft 1.3.8 py310h2bbff1b_0

mkl_random 1.2.4 py310h59b6b97_0

munkres 1.1.4 py_0

numexpr 2.8.7 py310h2cd9be0_0

numpy 1.26.0 py310h055cbcc_0

numpy-base 1.26.0 py310h65a83cf_0

nvidia-ml-py 12.535.108 pyhd8ed1ab_0 conda-forge

oauthlib 3.2.2 pypi_0 pypi

opencv-python 4.8.1.78 pypi_0 pypi

openssl 3.0.11 h2bbff1b_2

opt-einsum 3.3.0 pypi_0 pypi

packaging 23.1 py310haa95532_0

pcre 8.45 hd77b12b_0

pillow 9.4.0 py310hd77b12b_1

pip 23.3 py310haa95532_0

ply 3.11 py310haa95532_0

protobuf 3.19.6 pypi_0 pypi

psutil 5.9.0 py310h2bbff1b_0

pyasn1 0.5.0 pypi_0 pypi

pyasn1-modules 0.3.0 pypi_0 pypi

pyparsing 3.0.9 py310haa95532_0

pyqt 5.15.7 py310hd77b12b_0

pyqt5-sip 12.11.0 py310hd77b12b_0

python 3.10.13 he1021f5_0

python-dateutil 2.8.2 pyhd3eb1b0_0

python_abi 3.10 2_cp310 conda-forge

pywin32 305 py310h2bbff1b_0

pywinpty 2.0.2 py310h5da7b33_0

qt-main 5.15.2 h879a1e9_9

qt-webengine 5.15.9 h5bd16bc_7

qtwebkit 5.212 h2bbfb41_5

requests 2.31.0 pypi_0 pypi

requests-oauthlib 1.3.1 pypi_0 pypi

rsa 4.9 pypi_0 pypi

scikit-learn 1.3.0 py310h4ed8f06_0

scipy 1.11.3 py310h309d312_0

setuptools 68.0.0 py310haa95532_0

sip 6.6.2 py310hd77b12b_0

six 1.16.0 pyhd3eb1b0_1

sqlite 3.41.2 h2bbff1b_0

tbb 2021.8.0 h59b6b97_0

tensorboard 2.10.1 pypi_0 pypi

tensorboard-data-server 0.6.1 pypi_0 pypi

tensorboard-plugin-wit 1.8.1 pypi_0 pypi

tensorflow 2.10.1 pypi_0 pypi

tensorflow-estimator 2.10.0 pypi_0 pypi

tensorflow-io-gcs-filesystem 0.31.0 pypi_0 pypi

termcolor 2.3.0 pypi_0 pypi

threadpoolctl 2.2.0 pyh0d69192_0

tk 8.6.12 h2bbff1b_0

toml 0.10.2 pyhd3eb1b0_0

tornado 6.3.3 py310h2bbff1b_0

tqdm 4.65.0 py310h9909e9c_0

typing-extensions 4.8.0 pypi_0 pypi

tzdata 2023c h04d1e81_0

ucrt 10.0.22621.0 h57928b3_0 conda-forge

urllib3 2.0.7 pypi_0 pypi

vc 14.2 h21ff451_1

vc14_runtime 14.36.32532 hdcecf7f_17 conda-forge

vs2015_runtime 14.36.32532 h05e6639_17 conda-forge

werkzeug 3.0.0 pypi_0 pypi

wheel 0.41.2 py310haa95532_0

winpty 0.4.3 4

wrapt 1.15.0 pypi_0 pypi

xz 5.4.2 h8cc25b3_0

zlib 1.2.13 hcfcfb64_5 conda-forge

zlib-wapi 1.2.13 hcfcfb64_5 conda-forge

zstd 1.5.5 hd43e919_0

================= Configs ==================

--------- .faceswap ---------

backend: nvidia

--------- convert.ini ---------

[color.color_transfer]

clip: True

preserve_paper: True

[color.manual_balance]

colorspace: HSV

balance_1: 0.0

balance_2: 0.0

balance_3: 0.0

contrast: 0.0

brightness: 0.0

[color.match_hist]

threshold: 99.0

[mask.mask_blend]

type: normalized

kernel_size: 3

passes: 4

threshold: 4

erosion: 0.0

erosion_top: 0.0

erosion_bottom: 0.0

erosion_left: 0.0

erosion_right: 0.0

[scaling.sharpen]

method: none

amount: 150

radius: 0.3

threshold: 5.0

[writer.ffmpeg]

container: mp4

codec: libx264

crf: 23

preset: medium

tune: none

profile: auto

level: auto

skip_mux: False

[writer.gif]

fps: 25

loop: 0

palettesize: 256

subrectangles: False

[writer.opencv]

format: png

draw_transparent: False

separate_mask: False

jpg_quality: 75

png_compress_level: 3

[writer.patch]

start_index: 0

index_offset: 0

number_padding: 6

include_filename: True

face_index_location: before

origin: bottom-left

empty_frames: blank

json_output: False

separate_mask: False

bit_depth: 16

format: png

png_compress_level: 3

tiff_compression_method: lzw

[writer.pillow]

format: png

draw_transparent: False

separate_mask: False

optimize: False

gif_interlace: True

jpg_quality: 75

png_compress_level: 3

tif_compression: tiff_deflate

--------- extract.ini ---------

[global]

allow_growth: False

aligner_min_scale: 0.07

aligner_max_scale: 2.0

aligner_distance: 22.5

aligner_roll: 45.0

aligner_features: True

filter_refeed: True

save_filtered: False

realign_refeeds: True

filter_realign: True

[align.fan]

batch-size: 12

[detect.cv2_dnn]

confidence: 50

[detect.mtcnn]

minsize: 20

scalefactor: 0.709

batch-size: 8

cpu: True

threshold_1: 0.6

threshold_2: 0.7

threshold_3: 0.7

[detect.s3fd]

confidence: 70

batch-size: 4

[mask.bisenet_fp]

batch-size: 8

cpu: False

weights: faceswap

include_ears: False

include_hair: False

include_glasses: True

[mask.custom]

batch-size: 8

centering: face

fill: False

[mask.unet_dfl]

batch-size: 8

[mask.vgg_clear]

batch-size: 6

[mask.vgg_obstructed]

batch-size: 2

[recognition.vgg_face2]

batch-size: 16

cpu: False

--------- gui.ini ---------

[global]

fullscreen: False

tab: extract

options_panel_width: 30

console_panel_height: 20

icon_size: 14

font: default

font_size: 9

autosave_last_session: prompt

timeout: 120

auto_load_model_stats: True

--------- train.ini ---------

[global]

centering: face

coverage: 87.5

icnr_init: False

conv_aware_init: False

optimizer: adam

learning_rate: 5e-05

epsilon_exponent: -7

save_optimizer: exit

lr_finder_iterations: 1000

lr_finder_mode: set

lr_finder_strength: default

autoclip: False

reflect_padding: False

allow_growth: False

mixed_precision: True

nan_protection: True

convert_batchsize: 16

[global.loss]

loss_function: ssim

loss_function_2: mse

loss_weight_2: 100

loss_function_3: None

loss_weight_3: 0

loss_function_4: None

loss_weight_4: 0

mask_loss_function: mse

eye_multiplier: 3

mouth_multiplier: 2

penalized_mask_loss: True

mask_type: extended

mask_blur_kernel: 3

mask_threshold: 4

learn_mask: False

[model.dfaker]

output_size: 128

[model.dfl_h128]

lowmem: False

[model.dfl_sae]

input_size: 128

architecture: df

autoencoder_dims: 0

encoder_dims: 42

decoder_dims: 21

multiscale_decoder: False

[model.dlight]

features: best

details: good

output_size: 256

[model.original]

lowmem: False

[model.phaze_a]

output_size: 128

shared_fc: None

enable_gblock: True

split_fc: True

split_gblock: False

split_decoders: False

enc_architecture: fs_original

enc_scaling: 7

enc_load_weights: True

bottleneck_type: dense

bottleneck_norm: None

bottleneck_size: 1024

bottleneck_in_encoder: True

fc_depth: 1

fc_min_filters: 1024

fc_max_filters: 1024

fc_dimensions: 4

fc_filter_slope: -0.5

fc_dropout: 0.0

fc_upsampler: upsample2d

fc_upsamples: 1

fc_upsample_filters: 512

fc_gblock_depth: 3

fc_gblock_min_nodes: 512

fc_gblock_max_nodes: 512

fc_gblock_filter_slope: -0.5

fc_gblock_dropout: 0.0

dec_upscale_method: subpixel

dec_upscales_in_fc: 0

dec_norm: None

dec_min_filters: 64

dec_max_filters: 512

dec_slope_mode: full

dec_filter_slope: -0.45

dec_res_blocks: 1

dec_output_kernel: 5

dec_gaussian: True

dec_skip_last_residual: True

freeze_layers: keras_encoder

load_layers: encoder

fs_original_depth: 4

fs_original_min_filters: 128

fs_original_max_filters: 1024

fs_original_use_alt: False

mobilenet_width: 1.0

mobilenet_depth: 1

mobilenet_dropout: 0.001

mobilenet_minimalistic: False

[model.realface]

input_size: 64

output_size: 128

dense_nodes: 1536

complexity_encoder: 128

complexity_decoder: 512

[model.unbalanced]

input_size: 128

lowmem: False

nodes: 1024

complexity_encoder: 128

complexity_decoder_a: 384

complexity_decoder_b: 512

[model.villain]

lowmem: False

[trainer.original]

preview_images: 14

mask_opacity: 30

mask_color: #ff0000

zoom_amount: 5

rotation_range: 10

shift_range: 5

flip_chance: 50

color_lightness: 30

color_ab: 8

color_clahe_chance: 50

color_clahe_max_size: 4

**Crash Report**

The crash report generated in the root of your Faceswap folder

|

closed

|

2023-10-22T05:54:22Z

|

2023-10-23T00:03:58Z

|

https://github.com/deepfakes/faceswap/issues/1356

|

[] |

Cashew-wood

| 1

|

alteryx/featuretools

|

scikit-learn

| 2,156

|

release Featuretools v1.11.0

|

- We can release **once these are merged in**:
    - https://github.com/alteryx/featuretools/pull/2136
    - https://github.com/alteryx/featuretools/pull/2157

- The instructions for releasing:
    - https://github.com/alteryx/featuretools/blob/main/release.md

|

closed

|

2022-06-29T15:46:14Z

|

2022-06-30T23:07:11Z

|

https://github.com/alteryx/featuretools/issues/2156

|

[] |

gsheni

| 0

|

iperov/DeepFaceLab

|

machine-learning

| 826

|

Save ERROR

|

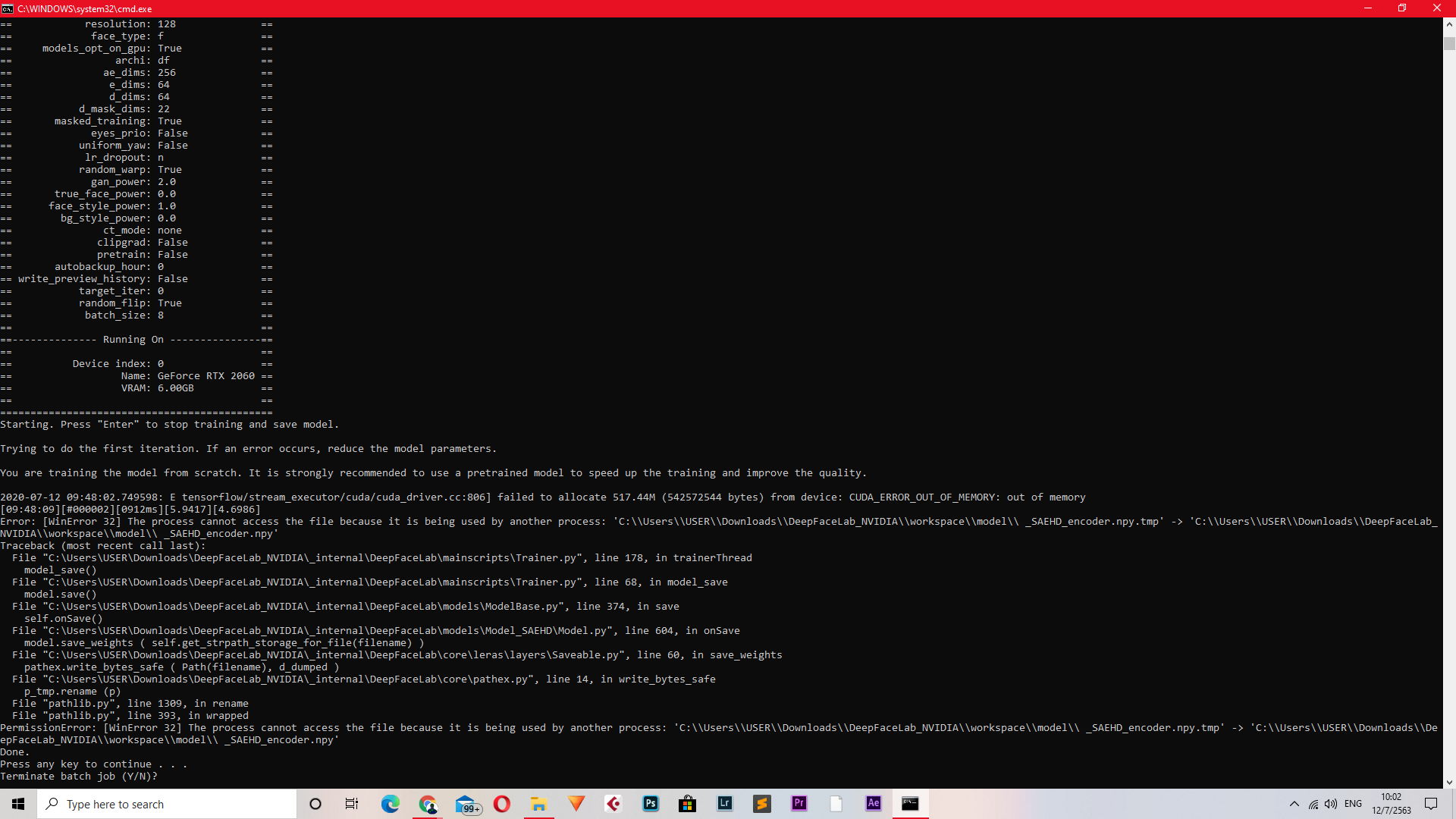

SAEHD and Quick96 training run as expected; however, training crashes every time I press save, which makes all of the previous progress useless. This error message pops up:

```
2020-07-12 09:48:02.749598: E tensorflow/stream_executor/cuda/cuda_driver.cc:806] failed to allocate 517.44M (542572544 bytes) from device: CUDA_ERROR_OUT_OF_MEMORY: out of memory
[09:48:09][#000002][0912ms][5.9417][4.6986]
Error: [WinError 32] The process cannot access the file because it is being used by another process: 'C:\\Users\\USER\\Downloads\\DeepFaceLab_NVIDIA\\workspace\\model\\ _SAEHD_encoder.npy.tmp' -> 'C:\\Users\\USER\\Downloads\\DeepFaceLab_NVIDIA\\workspace\\model\\ _SAEHD_encoder.npy'
Traceback (most recent call last):
  File "C:\Users\USER\Downloads\DeepFaceLab_NVIDIA\_internal\DeepFaceLab\mainscripts\Trainer.py", line 178, in trainerThread
    model_save()
  File "C:\Users\USER\Downloads\DeepFaceLab_NVIDIA\_internal\DeepFaceLab\mainscripts\Trainer.py", line 68, in model_save
    model.save()
  File "C:\Users\USER\Downloads\DeepFaceLab_NVIDIA\_internal\DeepFaceLab\models\ModelBase.py", line 374, in save
    self.onSave()
  File "C:\Users\USER\Downloads\DeepFaceLab_NVIDIA\_internal\DeepFaceLab\models\Model_SAEHD\Model.py", line 604, in onSave
    model.save_weights ( self.get_strpath_storage_for_file(filename) )
  File "C:\Users\USER\Downloads\DeepFaceLab_NVIDIA\_internal\DeepFaceLab\core\leras\layers\Saveable.py", line 60, in save_weights
    pathex.write_bytes_safe ( Path(filename), d_dumped )
  File "C:\Users\USER\Downloads\DeepFaceLab_NVIDIA\_internal\DeepFaceLab\core\pathex.py", line 14, in write_bytes_safe
    p_tmp.rename (p)
  File "pathlib.py", line 1309, in rename
  File "pathlib.py", line 393, in wrapped
PermissionError: [WinError 32] The process cannot access the file because it is being used by another process: 'C:\\Users\\USER\\Downloads\\DeepFaceLab_NVIDIA\\workspace\\model\\ _SAEHD_encoder.npy.tmp' -> 'C:\\Users\\USER\\Downloads\\DeepFaceLab_NVIDIA\\workspace\\model\\ _SAEHD_encoder.npy'
```

This did not happen with last year's version, so I tried the previous version (06_27_2020 instead of 07_04_2020), but that did not fix the problem. I also tried deleting new _SAEHD_encoder.npy.tmp, but it was recreated every time I pressed save. I then tried removing the permission to manage files from new_SAEHD_encoder.npy.tmp, but when I saved, an error said that new_SAEHD_encoder.npy.tmp doesn't have permission, so I don't know what to do with the file.
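Per the traceback, DeepFaceLab's `pathex.write_bytes_safe` writes a `.tmp` file and then calls `Path.rename` on it; WinError 32 means another process held a handle on one of those files at that moment (on Windows this is often an antivirus scanner, indexer, or sync client, though that is an assumption here). A minimal sketch of a more tolerant variant follows; the `write_bytes_safe` shown, and its `retries`/`delay` parameters, are hypothetical and not DeepFaceLab's code. It swaps the file in with `os.replace` and retries briefly before giving up:

```python
import os
import time
from pathlib import Path


def write_bytes_safe(path: Path, data: bytes, retries: int = 5, delay: float = 0.5) -> None:
    """Hypothetical helper: write to `<name>.tmp`, then move it over `path`.

    Retries the final move if another process briefly holds a handle on
    either file (PermissionError / WinError 32 on Windows).
    """
    tmp = path.with_name(path.name + ".tmp")
    tmp.write_bytes(data)
    for attempt in range(retries):
        try:
            # os.replace overwrites an existing target on both Windows and POSIX
            os.replace(tmp, path)
            return
        except PermissionError:
            if attempt == retries - 1:
                raise  # still locked after every retry; surface the error
            time.sleep(delay)
```

Retrying only helps with short-lived locks; if something holds the file open permanently (for example a second training process on the same model folder), the final `raise` will still fire.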

|

closed

|

2020-07-12T03:05:47Z

|

2020-07-12T05:39:00Z

|

https://github.com/iperov/DeepFaceLab/issues/826

|

[] |

THE-MATT-222

| 1

|

dynaconf/dynaconf

|

flask

| 993

|

[bug] Default value on empty string

|

## Problem

I have a nested setting whose value I need to set to a specific string when an empty string or `None` is provided.

Take the following for example:

```python
from dynaconf import Dynaconf, Validator

settings = Dynaconf(
    settings_files=[
        'config.toml',
        '.secrets.toml'
    ],
    merge_enabled=True,  # Merge all found files into one configuration.
    validators=[  # Custom validators.
        Validator(
            "files.output.kml",
            default="output.kml",
            apply_default_on_none=True,
        ),
    ],
    environments=False,  # Disable environments support.
    apply_default_on_none=True  # Apply default values when a value is None.
)

print(f"KML FILE: '{settings.files.output.kml}'")
```

With the following configuration file (saved as `config.toml`):

```toml

[files.output]

kml = ""

```

- When the `kml` key is **not present** in the config file, the default is applied to the setting as expected.

- When the `kml` key is set to an **empty string**, the default is completely ignored, even though I passed `apply_default_on_none=True`; I would expect it to print `output.kml`.

This is somewhat related to #973: if that issue were solved, I could simply pass a `condition=lambda value: value is not None and value.strip() != ""` parameter to the `Validator` and then rely on the default value, since a `ValidationError` would occur.

## What I expected

From the [documentation](https://www.dynaconf.com/validation/#default-values):

> Warning
>
> YAML reads empty keys as `None` and in that case defaults are not applied, if you want to change it set `apply_default_on_none=True` either globally to `Dynaconf` class or individually on a `Validator`.

Reading this, I expected the default value to kick in for empty strings too if I set `apply_default_on_none=True` on the `Validator` or on the `Dynaconf` class (I tried both and got the same result).

## Workaround

To work around this issue I had to check manually if the setting was still empty:

```python
from dynaconf import Dynaconf, Validator

settings = Dynaconf(
    settings_files=[
        'config.toml',
        '.secrets.toml'
    ],
    merge_enabled=True,  # Merge all found files into one configuration.
    validators=[  # Custom validators.
        Validator(
            "files.output.kml",
            default="output.kml",
            apply_default_on_none=True,
        ),
    ],
    environments=False,  # Disable environments support.
    apply_default_on_none=True  # Apply default values when a value is None.
)

# Setup default values for missing settings
if str(settings.files.output.kml.strip()) == "":
    settings.files.output.kml = "output.kml"

print(f"KML FILE: '{settings.files.output.kml}'")  # Correctly prints `KML FILE: 'output.kml'`
```

I had to do the same for every other key that must be set.
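The per-key check above can be generalized. A minimal sketch, assuming the settings are available as a plain nested `dict` (the helper `apply_blank_defaults` is hypothetical and is not part of Dynaconf), that treats a missing key, `None`, and a whitespace-only string all as "unset":

```python
from typing import Any, Dict


def apply_blank_defaults(config: Dict[str, Any], defaults: Dict[str, Any]) -> Dict[str, Any]:
    """Fill dotted-path keys whose value is missing, None, or a blank string.

    Assumes every intermediate key (e.g. "files", "output") is a dict/table.
    """
    for dotted, default in defaults.items():
        *parents, leaf = dotted.split(".")
        node = config
        for key in parents:
            # Create intermediate tables when they are absent
            node = node.setdefault(key, {})
        value = node.get(leaf)
        if value is None or (isinstance(value, str) and value.strip() == ""):
            node[leaf] = default
    return config
```

Unlike calling `.strip()` directly on the setting, this also copes with `None`, where `.strip()` would raise `AttributeError`.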

|

open

|

2023-09-04T17:18:12Z

|

2023-11-04T00:47:48Z

|

https://github.com/dynaconf/dynaconf/issues/993

|

[

"Docs"

] |

LukeSavefrogs

| 9

|

kizniche/Mycodo

|

automation

| 867

|

compatible with DFRobot sensor/controller or other Chinese made sensors

|

**Is your feature request related to a problem? Please describe.**

Atlas Scientific is too costly, even though it is very accurate and stable, so I prefer cheaper brands like DFRobot.

**Describe the solution you'd like**

Will Mycodo work with products from DFRobot or other Chinese brands? I am not sure whether they all have standardised outputs.

|

closed

|

2020-10-18T09:32:21Z

|

2020-11-17T03:11:01Z

|

https://github.com/kizniche/Mycodo/issues/867

|

[

"question"

] |

garudaonekh

| 9

|

aimhubio/aim

|

data-visualization

| 2,658

|

Show the number of metrics (and system metrics) tracked on the Run page

|

## 🚀 Feature

Add the number of tracked metrics on the Run metrics page.

### Motivation

It would be great to see how many metrics have been tracked.

A couple of use-cases:

- make sure the intended number of metrics is shown

- when many metrics are tracked and they take time to load, the count could help shed some light on the wait

<img width="1365" alt="Screenshot 2023-04-17 at 16 27 53" src="https://user-images.githubusercontent.com/3179216/232632082-36e9dfe0-266d-4edb-a49d-c1c03fc67fe1.png">

For instance, the Hyperparameters tab does a good job of showing the number of items.

<img width="555" alt="Screenshot 2023-04-17 at 16 26 09" src="https://user-images.githubusercontent.com/3179216/232632103-c8d4bf6d-cce9-438c-9338-bb949af17b19.png">

### Pitch

Show the number of metrics on the Run page.

|

open

|

2023-04-17T23:34:35Z

|

2023-04-17T23:34:35Z

|

https://github.com/aimhubio/aim/issues/2658

|

[

"type / enhancement"

] |

SGevorg

| 0

|

ipython/ipython

|

data-science

| 14,540

|

add support for capturing entire ipython interaction [enhancement]

|

It would be good if IPython can support running exported Jupyter python notebooks in batch mode in a way that better matches the Jupyter output. In particular, being able to see the expressions that are evaluated along with the output would be beneficial, even when the output is not explicit. For example, there could be a command-line option like -capture-session, so that the complete interaction of the REPL is captured in the output.

For example, the following code evaluates an expression and then re-outputs the result.

```

(2 + 2)

_

```

Ideally the session output when running it in batch mode would be like the following:

```

In [1]: (2 + 2)

Out [1]: 4

In [2]: _

Out [2]: 4

```

The closest IPython currently seems to come to this is running the script from stdin:

```

In [1]: Out[1]: 4

In [2]: Out[2]: 4

```

Unfortunately, the input expression is not shown.

An additional complication with running scripts from stdin is that indentation issues can arise. See the attached file for a script that runs into an `IndentationError` due to an empty line in a function definition. I tried all four combinations of stdin vs. file and interactive vs. non-interactive, hoping to find an approximate solution.

[interaction_quirk.py.txt](https://github.com/user-attachments/files/17358257/interaction_quirk.py.txt)

I'm not sure if modern interactive languages support this, but Lisp supports it via its "dribble" mechanism. After a call to dribble, both the input and output of the REPL are saved in the specified log. This is analogous to running the Unix script command.
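A rough approximation of such a dribble can be built outside IPython with the standard `ast` module: parse the whole file first (so a blank line inside a function body is harmless), then run it one top-level statement at a time, echoing each statement as `In [n]:` and each non-`None` expression value as `Out[n]:`, bound to `_`. This is only a sketch: `run_transcript` is a hypothetical function, not an IPython API, and it ignores magics and rich display.

```python
import ast


def run_transcript(source: str) -> str:
    """Run `source` statement by statement and return an In/Out transcript."""
    namespace: dict = {}
    lines = []
    tree = ast.parse(source)  # parsing the whole file avoids stdin indentation quirks
    for n, node in enumerate(tree.body, start=1):
        lines.append(f"In [{n}]: {ast.get_source_segment(source, node)}")
        if isinstance(node, ast.Expr):
            # Evaluate bare expressions so their value can be echoed like Out[n]
            code = compile(ast.Expression(node.value), "<transcript>", "eval")
            value = eval(code, namespace)
            if value is not None:
                namespace["_"] = value
                lines.append(f"Out[{n}]: {value!r}")
        else:
            module = ast.Module(body=[node], type_ignores=[])
            exec(compile(module, "<transcript>", "exec"), namespace)
    return "\n".join(lines)
```

Because the output is plain text, a test can compare it against an expected transcript, which is the use case described below.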

The motivation for all this comes in the context of testing. With more development being done via Jupyter notebooks, it becomes harder to develop automated tests because the notebooks tend to be opaque and monolithic. If the notebook can be evaluated in batch mode, then automated tests can be written checking for expected output. For this to be effective, all output from the notebook should be included, not just output from explicit calls to print or write. In addition, the output should include the evaluated expressions to allow for more precise tests.

|

open

|

2024-10-14T02:18:47Z

|

2024-10-18T02:18:05Z

|

https://github.com/ipython/ipython/issues/14540

|

[] |

thomas-paul-ohara

| 2

|

samuelcolvin/watchfiles

|

asyncio

| 56

|

[FEATURE] add ‘closed’ as change type

|

I have a server for file uploads. With low latency, I need to trigger some Python code that reads the incoming files and...

I have an issue right now, though. Sometimes the files are empty, and I think it's because the upload is not done yet. How can I determine whether a file is done being written to?

I think inotify has this functionality, but I agree with you that it is nice to have it platform-independent.

Do you have a proposal for how to handle this?
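Until a dedicated "closed" change type exists, one common platform-independent heuristic is to treat a file as complete once its size and modification time stop changing for a few consecutive polls. A minimal sketch using only the standard library (`wait_until_stable` and its parameters are hypothetical and not part of watchfiles), which could be called from whatever handler receives the change notification:

```python
import os
import time


def wait_until_stable(path: str, interval: float = 0.5, checks: int = 3,
                      timeout: float = 30.0) -> bool:
    """Return True once size and mtime are unchanged for `checks` consecutive
    polls; return False if `timeout` seconds pass first."""
    deadline = time.monotonic() + timeout
    last = None
    stable = 0
    while time.monotonic() < deadline:
        try:
            st = os.stat(path)
            current = (st.st_size, st.st_mtime_ns)
        except FileNotFoundError:
            current = None  # not created yet, or already moved away
        if current is not None and current == last:
            stable += 1
            if stable >= checks:
                return True
        else:
            stable = 0
        last = current
        time.sleep(interval)
    return False
```

This is a heuristic, not a guarantee: an uploader that pauses for longer than `interval * checks` would be mistaken for a finished one, whereas inotify's close-after-write event reports the actual close.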

|

closed

|

2020-03-30T21:33:04Z

|

2020-05-22T11:44:58Z

|

https://github.com/samuelcolvin/watchfiles/issues/56

|

[] |

NixBiks

| 1

|

unionai-oss/pandera

|

pandas

| 1,059

|

Getting "TypeError: type of out argument not recognized: <class 'str'>" when using class function with Pandera decorator

|

Hi. I am having trouble getting Pandera to work with classes.

First I create schemas:

```
import pandera as pa
from pandera import Column, Check
import yaml

in_ = pa.DataFrameSchema(
    {
        "Name": Column(object, nullable=True),
        "Height": Column(object, nullable=True),
    })

with open("./in_.yml", "w") as file:
    yaml.dump(in_, file)

out_ = pa.DataFrameSchema(
    {
        "Name": Column(object, nullable=True),
        "Height": Column(object, nullable=True),
    })

with open("./out_.yml", "w") as file:
    yaml.dump(out_, file)
```

Next, I create a `test.py` file with a class:

```
from pandera import check_io
import pandas as pd


class TransformClass():
    with open("./in_.yml", "r") as file:
        in_ = file.read()

    with open("./out_.yml", "r") as file:
        out_ = file.read()

    @staticmethod
    @check_io(df=in_, out=out_)
    def func(df: pd.DataFrame) -> pd.DataFrame:
        return df
```

Finally, I import this class:

```
import numpy as np
import pandas as pd

from test import TransformClass

data = {'Name': [np.nan, 'Princi', 'Gaurav', 'Anuj'],
        'Height': [5.1, 6.2, 5.1, 5.2],
        'Qualification': ['Msc', 'MA', 'Msc', 'Msc']}
df = pd.DataFrame(data)
TransformClass.func(df)
```

I am getting:

```
File C:\Anaconda3\envs\py310\lib\site-packages\pandera\decorators.py:464, in check_io.<locals>._wrapper(fn, instance, args, kwargs)
    462     out_schemas = []
    463 else:
--> 464     raise TypeError(
    465         f"type of out argument not recognized: {type(out)}"
    466     )
    468 wrapped_fn = fn
    469 for input_getter, input_schema in inputs.items():
    470     # pylint: disable=no-value-for-parameter

TypeError: type of out argument not recognized: <class 'str'>
```

Any help would be much appreciated.

|

closed

|

2022-12-19T08:55:30Z

|

2022-12-19T19:55:28Z

|

https://github.com/unionai-oss/pandera/issues/1059

|

[

"question"

] |

al-yakubovich

| 1

|

pyeve/eve

|

flask

| 964

|

PyMongo 3.4.0 support

|

closed

|

2017-01-15T16:54:21Z

|

2017-01-15T16:58:25Z

|

https://github.com/pyeve/eve/issues/964

|

[

"enhancement"

] |

nicolaiarocci

| 0

|

|

gradio-app/gradio

|

python

| 10,850

|

Could not create share link. Please check your internet connection or our status page: https://status.gradio.app.

|

### Describe the bug

Hi, I am using the latest version of Gradio, but I encounter this problem:

Do you know how I can solve this problem? Thank you very much!

### Have you searched existing issues? 🔎

- [x] I have searched and found no existing issues

### Reproduction

```python

import gradio as gr

gr.Interface(lambda x: x, "text", "text").launch(share=True)

```

### Screenshot

_No response_

### Logs

```shell

```

### System Info

```shell

Gradio Environment Information:

------------------------------

Operating System: Linux

gradio version: 5.22.0

gradio_client version: 1.8.0

------------------------------------------------

gradio dependencies in your environment:

aiofiles: 23.2.1

anyio: 4.9.0

audioop-lts is not installed.

fastapi: 0.115.11

ffmpy: 0.5.0

gradio-client==1.8.0 is not installed.

groovy: 0.1.2

httpx: 0.28.1

huggingface-hub: 0.29.3

jinja2: 3.1.4

markupsafe: 2.1.5

numpy: 1.24.4

orjson: 3.10.15

packaging: 24.2

pandas: 2.2.3

pillow: 11.0.0

pydantic: 2.10.6

pydub: 0.25.1

python-multipart: 0.0.20

pyyaml: 6.0.2

ruff: 0.11.1

safehttpx: 0.1.6

semantic-version: 2.10.0

starlette: 0.46.1

tomlkit: 0.13.2

typer: 0.15.2

typing-extensions: 4.12.2

urllib3: 2.3.0

uvicorn: 0.34.0

authlib; extra == 'oauth' is not installed.

itsdangerous; extra == 'oauth' is not installed.

gradio_client dependencies in your environment:

fsspec: 2024.6.1

httpx: 0.28.1

huggingface-hub: 0.29.3

packaging: 24.2

typing-extensions: 4.12.2

websockets: 14.2

```

### Severity

Blocking usage of gradio

|

closed

|

2025-03-21T04:00:17Z

|

2025-03-22T22:36:48Z

|

https://github.com/gradio-app/gradio/issues/10850

|

[

"bug"

] |

Allen-Zhou729

| 7

|

ultralytics/ultralytics

|

machine-learning

| 19,781

|

High CPU Usage with OpenVINO YOLOv8n on Integrated GPU – How Can I Reduce It?

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/orgs/ultralytics/discussions) and found no similar questions.

### Question

Hi everyone,

I'm running inference using an OpenVINO-converted YOLOv8n model on an integrated GPU (IGPU), but I'm noticing that the CPU usage stays around 90% while the GPU is only at about 50%. I’ve tried configuring various GPU-specific properties to reduce the CPU load, yet the high CPU usage persists.

```python
import collections
import time

import openvino as ov
import openvino.properties as properties
import openvino.properties.device as device
import openvino.properties.hint as hints
import openvino.properties.streams as streams
import openvino.properties.intel_auto as intel_auto
import cv2
from ultralytics import YOLO
import torch


def open_video_stream():
    return cv2.VideoCapture(0)


model_path = r"\yolov8n_openvino_model\yolov8n.xml"
core = ov.Core()

# Read the quantized model
print("Loading OpenVINO model...")
ov_model = core.read_model(str(model_path))

# Reshape the input for GPU
ov_model.reshape({0: [1, 3, 640, 640]})

gpu_config = {
    hints.inference_precision: "FP16",  # Alternatively, use ov.Type.f16 if available in your API
    hints.execution_mode: "PERFORMANCE",
    "ENABLE_CPU_PINNING": "NO",
    "NUM_STREAMS": "1",
    "ENABLE_CPU_PINNING": "NO",
    "COMPILATION_NUM_THREADS": "2",
    "GPU_DISABLE_WINOGRAD_CONVOLUTION": "YES",
    "GPU_QUEUE_THROTTLE": hints.Priority.LOW,
    "GPU_HOST_TASK_PRIORITY": hints.Priority.LOW,
}

# Compile the model for GPU
print(f"Compiling model for {device}...")
compiled_model = core.compile_model(ov_model, "GPU", gpu_config)

det_model = YOLO("yolov8n.pt")
label_map = det_model.model.names  # Extract class names
test_img_path = "coco_bike.jpg"

# Test inference
try:
    test_results = det_model(test_img_path)
    print(f"Test inference successful! Found {len(test_results[0].boxes)} objects")
except Exception as e:
    print(f"Warning: Test inference failed: {e}")
    print("Error details:", e)
    print("Continuing anyway...")


def infer(*args):
    result = compiled_model(args)
    return torch.from_numpy(result[0])


det_model.predictor.inference = infer
det_model.predictor.model.pt = False  # Indicate PyTorch model is not used


def run_object_detection():
    print("Opening video stream...")
    cap = open_video_stream()
    if not cap.isOpened():
        print("Error: Could not open RTSP stream.")
        return

    print("Starting object detection loop...")
    processing_times = collections.deque()
    while True:
        ret, frame = cap.read()
        if not ret:
            print("Failed to get frame from stream. Retrying...")
            # Try to reopen the stream if it's dropped
            cap.release()
            time.sleep(1)  # Wait a bit before reconnecting
            cap = open_video_stream()
            continue

        frame = cv2.cvtColor(frame, cv2.COLOR_YUV2BGR_NV12)

        # Optionally, resize frame for faster processing if it's too large
        scale = 1280 / max(frame.shape)
        if scale < 1:
            frame = cv2.resize(frame, None, fx=scale, fy=scale, interpolation=cv2.INTER_AREA)

        try:
            # Run inference on the frame
            results = det_model(frame, verbose=False)
            if len(processing_times) > 200:
                processing_times.popleft()
            # Overlay inference time and FPS on the output frame
            output_frame = results[0].plot()
            cv2.imshow("annotated frame", output_frame)
        except Exception as e:
            print(f"Error during inference: {e}")
            # Show the original frame if inference fails
            cv2.putText(frame, "Inference Error", (20, 40),
                        cv2.FONT_HERSHEY_COMPLEX, 1, (0, 0, 255), 2, cv2.LINE_AA)
            cv2.imshow("annotated frame", frame)

        if cv2.waitKey(1) == 27:  # Exit if ESC key is pressed
            break

    print("Cleaning up...")
    cap.release()
    cv2.destroyAllWindows()


if __name__ == "__main__":
    print("Starting application...")
    run_object_detection()
```

### Additional

_No response_

|

open

|

2025-03-19T10:56:05Z

|

2025-03-20T01:34:16Z

|

https://github.com/ultralytics/ultralytics/issues/19781

|

[

"question",

"detect",

"exports"

] |

AlaaArboun

| 2

|

facebookresearch/fairseq

|

pytorch

| 4,754

|

Forced decoding for translation

|

Hello,

Is there a flag in fairseq-cli to specify a prefix token for forced decoding? The [fairseq-generate](https://fairseq.readthedocs.io/en/latest/command_line_tools.html#fairseq-generate) documentation shows a *prefix-size* flag that sets the size of the prefix, but I haven't found how to specify what the prefix token(s) are. Looking at [sequence-generator.py](https://github.com/facebookresearch/fairseq/blob/main/fairseq/sequence_generator.py), there is code for handling prefix tokens, but I haven't seen how to specify them, either in the code or via the fairseq-generate CLI.
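For context, forced decoding with a prefix means the first k output positions are fixed to given tokens, and the search only starts choosing freely afterwards. As far as I can tell, `fairseq-generate` takes those k tokens from the reference target side of the test data, which would explain why only a size is exposed; treat that as an assumption to check against `fairseq_cli/generate.py`. A toy sketch of the idea with greedy search (`greedy_decode_with_prefix` and the `step_scores` callback are hypothetical and are not fairseq's `SequenceGenerator` API):

```python
from typing import Callable, List, Sequence


def greedy_decode_with_prefix(
    step_scores: Callable[[List[int]], Sequence[float]],
    prefix: Sequence[int],
    eos: int,
    max_len: int,
) -> List[int]:
    """Greedy decoding in which the first len(prefix) tokens are forced."""
    out: List[int] = []
    for t in range(max_len):
        if t < len(prefix):
            tok = prefix[t]  # forced: the model's scores are ignored at this step
        else:
            scores = step_scores(out)
            tok = max(range(len(scores)), key=scores.__getitem__)  # argmax
        out.append(tok)
        if tok == eos and t >= len(prefix):
            break  # stop at a freely chosen end-of-sentence token
    return out
```

A beam search would apply the same forcing to every hypothesis in the beam before scoring continues.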

Thanks

|

open

|

2022-10-03T23:15:45Z

|

2022-10-03T23:15:45Z

|

https://github.com/facebookresearch/fairseq/issues/4754

|

[

"question",

"needs triage"

] |

Pogayo

| 0

|

nalepae/pandarallel

|

pandas

| 244

|

Some workers stuck while others finish 100%

|

## General

- **Operating System**:

Centos 7

- **Python version**:

3.8

- **Pandas version**:

2.0.1

- **Pandarallel version**:

1.6.4

## Acknowledgement

- [x] My issue is **NOT** present when using `pandas` alone (without `pandarallel`)

- [ ] If I am on **Windows**, I read the [Troubleshooting page](https://nalepae.github.io/pandarallel/troubleshooting/) before writing a new bug report

## Bug description

<img width="809" alt="image" src="https://github.com/nalepae/pandarallel/assets/12313888/4e12c9ea-a95b-4b55-b136-39d890a71058">

I started a `parallel_apply` job with 80 workers to decode and clean a large amount of data (about 50 GB). After nearly 8 minutes, most workers had reached 100%, but some got stuck. After 20 minutes, the progress bar is still frozen.

```

pandarallel.initialize(nb_workers=os.cpu_count(), progress_bar=True)

df["text"] = df["text"].parallel_apply(decode_clean)

```

### Observed behavior

The progress bar freezes and CPU usage is 0%.

### Expected behavior

Progress should be nearly linear; according to the progress bar, the program should finish after around 10 minutes.

## Minimal but working code sample to ease bug fix for `pandarallel` team

_Write here the minimal code sample to ease bug fix for `pandarallel` team_

|

closed

|

2023-06-12T13:36:37Z

|

2023-06-28T08:41:51Z

|

https://github.com/nalepae/pandarallel/issues/244

|

[] |

SysuJayce

| 5

|

KevinMusgrave/pytorch-metric-learning

|

computer-vision

| 531

|

DistributedLossWrapper always requires labels

|

It shouldn't require labels if `indices_tuple` is provided.

|

closed

|

2022-09-29T13:42:08Z

|

2023-01-17T01:26:39Z

|

https://github.com/KevinMusgrave/pytorch-metric-learning/issues/531

|

[

"bug"

] |

KevinMusgrave

| 1

|

tatsu-lab/stanford_alpaca

|

deep-learning

| 210

|

Does this code still work when fine-tune with encoder-decoder (BLOOMZ or mT0) ?

|

I'm worried that this code doesn't run with pre-trained BLOOMZ or mT0 [https://github.com/bigscience-workshop/xmtf].

Has anyone fine-tuned these models?

|

open

|

2023-04-14T02:30:27Z

|

2023-04-14T02:30:27Z

|

https://github.com/tatsu-lab/stanford_alpaca/issues/210

|

[] |

nqchieutb01

| 0

|

iMerica/dj-rest-auth

|

rest-api

| 542

|

Get JWT secret used for encoding

|

How can I get the secret the library uses to encode JWT tokens?

|

closed

|

2023-09-01T13:15:25Z

|

2023-09-01T13:19:25Z

|

https://github.com/iMerica/dj-rest-auth/issues/542

|

[] |

legalimpurity

| 0

|

horovod/horovod

|

tensorflow

| 3,707

|

Reducescatter: Support ncclAvg op for averaging

|

The Reducescatter equivalent of #3646 for Allreduce.

|

open

|

2022-09-20T12:17:35Z

|

2022-09-20T12:17:35Z

|

https://github.com/horovod/horovod/issues/3707

|

[

"enhancement"

] |

maxhgerlach

| 0

|

mars-project/mars

|

numpy

| 2,645

|

[BUG] Groupby().agg() returned a DataFrame with index even as_index=False

|

<!--

Thank you for your contribution!

Please review https://github.com/mars-project/mars/blob/master/CONTRIBUTING.rst before opening an issue.

-->

**Describe the bug**

Groupby().agg() returned a DataFrame with index even as_index=False.

**To Reproduce**

To help us reproduce this bug, please provide the information below:

1. Your Python version

2. The version of Mars you use

3. Versions of crucial packages, such as numpy, scipy and pandas

4. Full stack of the error.

5. Minimized code to reproduce the error.

```
In [10]: def g(x):
    ...:     return (x == '1').sum()
    ...:

In [11]: df = md.DataFrame({'a': ['1', '2', '3'], 'b': ['a1', 'a2', 'a1']})

In [12]: df.groupby('b', as_index=False)['a'].agg((g,)).execute()
/Users/qinxuye/Workspace/mars/mars/deploy/oscar/session.py:1932: UserWarning:
Out[12]:
    g
b
a1  1
a2  0
```

|

closed

|

2022-01-21T07:58:47Z

|

2022-01-21T09:26:23Z

|

https://github.com/mars-project/mars/issues/2645

|

[

"type: bug",

"reso: invalid",

"mod: dataframe"

] |

qinxuye

| 1

|

graphql-python/graphene-mongo

|

graphql

| 24

|

Types are unaware of parent class attributes defined on child model

|

First of all, thank you for writing this library. I've been wanting to try GraphQL out with my current project but didn't want to have to create an entire new backend application from scratch. I can reuse my existing models thanks to this library, way cool 👍 🥇

Now for my issue...

I have a parent/child relationship defined like this:

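```
from mongoengine import Document, StringField


class Parent(Document):
    bar = StringField()
    # mongoengine only allows subclassing a Document when inheritance is enabled
    meta = {"allow_inheritance": True}


class Child(Parent):
    baz = StringField()
```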

When I define my schema and attempt to query against the `Child` model, it says `Unknown argument "bar" on field "child" of type "Query"`.

My query:

```
{
  child(bar: "a valid value") {
    edges {
      node {
        bar
        baz
      }
    }
  }
}
```

```
import graphene
from graphene.relay import Node
from graphene_mongo import MongoengineConnectionField, MongoengineObjectType

from app.models import Child as ChildModel


class Child(MongoengineObjectType):
    class Meta:
        model = ChildModel
        interfaces = (Node,)


class Query(graphene.ObjectType):
    node = Node.Field()
    child = MongoengineConnectionField(Child)


schema = graphene.Schema(query=Query, types=[Child])
```

I may just be misusing the library, or perhaps this is a feature that isn't implemented yet. If the feature hasn't been implemented yet I am up for taking a stab at it. Is there a way for my schema to infer the parent's attributes based on how I define them like the above example? Thank you again!

|

closed

|

2018-04-01T13:42:14Z

|

2018-04-02T13:59:45Z

|

https://github.com/graphql-python/graphene-mongo/issues/24

|

[] |

msholty-fd

| 1

|

iterative/dvc

|

machine-learning

| 10,064

|

dvc pull: failed to load directory when first failed s3 connection

|

# Bug Report

<!--

## Issue name

Issue names must follow the pattern `command: description` where the command is the dvc command that you are trying to run. The description should describe the consequence of the bug.

Example: `repro: doesn't detect input changes`

-->

## Description

<!--

A clear and concise description of what the bug is.

-->

The command `dvc pull` consistently fails with the error message "failed to load directory" when there was a previous occurrence of "failed to connect to s3". This issue persists even after fixing the s3 credentials.

### Reproduce

<!--

Step list of how to reproduce the bug

-->

#### Reset DVC at the initial step.

```bash

$ rm -rf .dvc

$ git checkout .

Updated 2 paths from the index

```

#### Move credentials to provoke failed s3 connection

```bash

$ mv ~/.aws/credentials{,.tmp}

$ dvc pull

Collecting |25.0 [00:00, 36.8entry/s]

ERROR: failed to connect to s3 (XXX/files/md5) - The config profile (YYY) could not be found

ERROR: failed to pull data from the cloud - 25 files failed to download

```

#### Restore credentials to resolve s3 connection

```bash

$ mv ~/.aws/credentials{.tmp,}

```

#### Reproduce the Bug

```bash

$ dvc pull

Collecting |0.00 [00:00, ?entry/s]

Fetching

ERROR: unexpected error - failed to load directory ('d6', '38d9367bc2b169fb89b59f19e2844f.dir'): [Errno 2] No such file or directory: '/ZZZ/.dvc/cache/files/md5/d6/38d9367bc2b169fb89b59f19e2844f.dir'

```

#### Workaround

```bash

$ rm -rf .dvc/tmp

$ dvc pull

Collecting |1.56k [00:07, 221entry/s]

Fetching

|Fetching from s3 63/130 [00:01<00:00, 78.26file/s]

```

<!--

Example:

1. dvc init

2. Copy dataset.zip to the directory

3. dvc add dataset.zip

4. dvc run -d dataset.zip -o model ./train.sh

5. modify dataset.zip

6. dvc repro

-->

### Expected

<!--

A clear and concise description of what you expect to happen.

-->

`dvc pull` should work without removing `.dvc/tmp`!

### Environment information

<!--

This is required to ensure that we can reproduce the bug.

-->

**Output of `dvc doctor`:**

```console

DVC version: 3.28.0 (pip)

-------------------------

Platform: Python 3.10.9 on Linux-4.18.0-372.70.1.1.el8_6.x86_64-x86_64-with-glibc2.28

Subprojects:

dvc_data = 2.20.0

dvc_objects = 1.1.0

dvc_render = 0.5.3

dvc_task = 0.3.0

scmrepo = 1.4.0

Supports:

http (aiohttp = 3.8.4, aiohttp-retry = 2.8.3),

https (aiohttp = 3.8.4, aiohttp-retry = 2.8.3),

s3 (s3fs = 2023.6.0, boto3 = 1.26.76)

Config:

Global: /YYY/.config/dvc

System: /etc/xdg/dvc

Cache types: hardlink, symlink

Cache directory: lustre on XXX

Caches: local

Remotes: s3

Workspace directory: lustre on XXX

Repo: dvc, git

Repo.site_cache_dir: /var/tmp/dvc/repo/4372a7cb7af0fda33045046f65b86013

```

## Notes

Maybe related to #10030?

<!--

Please check https://github.com/iterative/dvc/wiki/Debugging-DVC on ways to gather more information regarding the issue.

If applicable, please also provide a `--verbose` output of the command, eg: `dvc add --verbose`.

If the issue is regarding the performance, please attach the profiling information and the benchmark comparisons.

-->

|

closed

|

2023-11-03T10:33:57Z

|

2023-12-15T13:36:31Z

|

https://github.com/iterative/dvc/issues/10064

|

[

"awaiting response"

] |

fguiotte

| 3

|

man-group/notebooker

|

jupyter

| 134

|

Add option to pass scheduled cron time to the notebook

|

Being able to read the scheduled cron time from within the notebook would improve the use of notebooker as a tool for generating periodic reports. That time might also need to be preserved if the same report is re-run.

|

open

|

2023-02-02T20:41:31Z

|

2023-10-11T15:34:27Z

|

https://github.com/man-group/notebooker/issues/134

|

[] |

marcinapostoluk

| 1

|

ivy-llc/ivy

|

numpy

| 28,764

|

Fix Frontend Failing Test: tensorflow - pooling_functions.torch.nn.functional.max_pool2d

|

closed

|

2024-06-15T20:44:58Z

|

2024-07-15T02:29:34Z

|

https://github.com/ivy-llc/ivy/issues/28764

|

[

"Sub Task"

] |

nicolasb0

| 0

|

|

waditu/tushare

|

pandas

| 1,526

|

The stock list API does not state the number of points required to make requests

|

https://tushare.pro/document/2?doc_id=94

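Requests to the stock list endpoint return a "no permission" message, but the documentation does not clearly state the specific number of points required.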

|

open

|

2021-03-23T06:04:20Z

|

2021-03-23T06:04:20Z

|

https://github.com/waditu/tushare/issues/1526

|

[] |

mestarshine

| 0

|

microsoft/nni

|

pytorch

| 5,309

|

inputs is empty!

|

**Describe the bug**:

`inputs` is empty! As shown below:

```
node-----> name: .aten::mul.146, type: func, op_type: aten::mul, sub_nodes: ['_aten::mul'], inputs: ['logvar'], outputs: ['809'], aux: None
node-----> name: .aten::exp.147, type: func, op_type: aten::exp, sub_nodes: ['_aten::exp'], inputs: ['809'], outputs: ['std'], aux: None
node-----> name: .aten::randn_like.148, type: func, op_type: aten::randn_like, sub_nodes: ['_aten::randn_like'], inputs: ['mu'], outputs: ['eps'], aux: None
node-----> name: .aten::mul.149, type: func, op_type: aten::mul, sub_nodes: ['_aten::mul'], inputs: ['eps', 'std'], outputs: ['817'], aux: None
node-----> name: .aten::add.150, type: func, op_type: aten::add, sub_nodes: ['_aten::add'], inputs: ['817', 'mu'], outputs: ['819'], aux: None
node-----> name: .aten::unsqueeze.151, type: func, op_type: aten::unsqueeze, sub_nodes: ['_aten::unsqueeze'], inputs: ['819'], outputs: ['821'], aux: None
node-----> name: .aten::unsqueeze.152, type: func, op_type: aten::unsqueeze, sub_nodes: ['_aten::unsqueeze'], inputs: ['821'], outputs: ['z.1'], aux: None
node-----> name: .aten::repeat.153, type: func, op_type: aten::repeat, sub_nodes: ['_aten::repeat', '_prim::ListConstruct'], inputs: ['z.1'], outputs: ['z'], aux: None
node-----> name: .aten::cat.154, type: func, op_type: aten::cat, sub_nodes: ['_aten::cat', '_prim::ListConstruct'], inputs: ['z', 'x_stereo'], outputs: ['input.9'], aux: {'out_shape': [2, 322, 276, 513], 'cat_dim': 1, 'in_order': ['.aten::repeat.153', '.aten::transpose.115'], 'in_shape': [[2, 320, 276, 513], [2, 2, 276, 513]]}
node-----> name: .aten::relu.155, type: func, op_type: aten::relu, sub_nodes: ['_aten::relu'], inputs: ['input.82'], outputs: ['input_tensor'], aux: None
node-----> name: .aten::size.156, type: func, op_type: aten::size, sub_nodes: ['_aten::size'], inputs: ['input_tensor'], outputs: ['1812'], aux: None
node-----> name: .aten::Int.157, type: func, op_type: aten::Int, sub_nodes: ['_aten::Int', '_prim::NumToTensor'], inputs: ['1812'], outputs: ['2374'], aux: None
node-----> name: .aten::size.158, type: func, op_type: aten::size, sub_nodes: ['_aten::size'], inputs: ['input_tensor'], outputs: ['1818'], aux: None
node-----> name: .aten::Int.159, type: func, op_type: aten::Int, sub_nodes: ['_aten::Int', '_prim::NumToTensor'], inputs: ['1818'], outputs: ['2125'], aux: None
node-----> name: .aten::Int.160, type: func, op_type: aten::Int, sub_nodes: ['_aten::Int', '_prim::NumToTensor'], inputs: ['1818'], outputs: ['2119'], aux: None
node-----> name: .aten::size.161, type: func, op_type: aten::size, sub_nodes: ['_aten::size'], inputs: ['input_tensor'], outputs: ['1821'], aux: None
node-----> name: .aten::Int.162, type: func, op_type: aten::Int, sub_nodes: ['_aten::Int', '_prim::NumToTensor'], inputs: ['1821'], outputs: ['2126'], aux: None
node-----> name: .aten::Int.163, type: func, op_type: aten::Int, sub_nodes: ['_aten::Int', '_prim::NumToTensor'], inputs: ['1821'], outputs: ['2120'], aux: None
node-----> name: .aten::slice.164, type: func, op_type: aten::slice, sub_nodes: ['_aten::slice'], inputs: ['input_tensor'], outputs: ['1827'], aux: None
node-----> name: .aten::slice.166, type: func, op_type: aten::slice, sub_nodes: ['_aten::slice'], inputs: ['1827'], outputs: ['1839'], aux: None
node-----> name: .aten::slice.167, type: func, op_type: aten::slice, sub_nodes: ['_aten::slice'], inputs: ['1839'], outputs: ['1844'], aux: None
```

**Environment**:

- NNI version: 2.10

- Training service (local|remote|pai|aml|etc): remote

- Python version: 3.8.13

- PyTorch version: 1.8.0

- Cpu or cuda version: cuda111

**Reproduce the problem**

- Code|Example:

Based on where I traced it, the error appears to originate here:

def feature_maps_to_wav(
        self,
        input_tensor: torch.Tensor,
        cos_in: torch.tensor,
        sin_in: torch.tensor,
        cos_c: torch.tensor,
        sin_c: torch.tensor,
        audio_length: int,
) -> torch.Tensor:
    r"""Convert feature maps to waveform.

    Outputs:
        waveform: (batch_size, output_channels, segment_samples)
    """
    batch_size, _, time_steps, freq_bins = input_tensor.shape

    l_mag = input_tensor[:, [0], :, :]
    r_mag = input_tensor[:, [1], :, :]
    c_mag = input_tensor[:, [2], :, :]
    lfe_mag = input_tensor[:, [3], :, :]
    ls_mag = input_tensor[:, [4], :, :]
    rs_mag = input_tensor[:, [5], :, :]

    lls_cos_in = cos_in[:, 0:1, :, :]
    rrs_cos_in = cos_in[:, 1:2, :, :]
    lls_sin_in = sin_in[:, 0:1, :, :]