| repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |

|---|---|---|---|---|---|---|---|---|---|---|---|

recommenders-team/recommenders

|

data-science

| 1,969

|

[BUG] Wide and Deep model raise error --- no attribute 'NanLossDuringTrainingError'

|

### Description

<!--- Describe your issue/bug/request in detail -->

### In which platform does it happen?

<!--- Describe the platform where the issue is happening (use a list if needed) -->

<!--- For example: -->

<!--- * Azure Data Science Virtual Machine. -->

<!--- * Azure Databricks. -->

<!--- * Other platforms. -->

https://github.com/microsoft/recommenders/blob/main/examples/00_quick_start/wide_deep_movielens.ipynb

I followed the notebook linked above but swapped in my own dataset, and it runs into this error:

```

AttributeError: module 'tensorflow._api.v2.train' has no attribute 'NanLossDuringTrainingError'

```

### How do we replicate the issue?

<!--- Please be specific as possible (use a list if needed). -->

<!--- For example: -->

<!--- * Create a conda environment for pyspark -->

<!--- * Run unit test `test_sar_pyspark.py` with `pytest -m 'spark'` -->

<!--- * ... -->

My dataset is very similar to the one in the notebook, so I am wondering how a different dataset could cause this problem.

### Expected behavior (i.e. solution)

<!--- For example: -->

<!--- * The tests for SAR PySpark should pass successfully. -->

### Other Comments

|

closed

|

2023-08-16T16:20:12Z

|

2023-08-17T18:17:00Z

|

https://github.com/recommenders-team/recommenders/issues/1969

|

[

"bug"

] |

Lulu20220

| 1

|

xlwings/xlwings

|

automation

| 1,727

|

executing python code

|

#### OS (Windows 10)

#### Versions of xlwings: 0.24.9

#### Describe your issue (incl. Traceback!)

```python

# Your traceback here

```

#### Include a minimal code sample to reproduce the issue (and attach a sample workbook if required!)

```python

import xlwings as xw

# def world():

# wb = xw.Book.caller()

# wb.sheets[0].range('A11').value = 'Hello World!'

x=2

y=45*x

wb = xw.Book.caller()

wb.sheets[0].range('A13').value = y

```

This is a very basic question, so apologies if it doesn't make sense. Following your example, I can run the Python function `world()` using `RunPython "import hello; hello.world()"`. I have a Python script that performs some actions when it is executed (such as turning on an instrument in the lab). How can I run this script directly from Excel VBA with xlwings? For example, my code above does not define the function `world()`. Can I run this script from Excel VBA using xlwings to get a value of 90 in range `A13`?
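One possible arrangement, sketched under the assumption that the script is saved as `hello.py` next to the workbook: wrap the top-level statements in a function so that `RunPython` has something to call. The names `compute_value` and `main` are illustrative and not part of xlwings.

```python
# hello.py (hypothetical file name, matching the RunPython call quoted above)


def compute_value(x=2):
    """The script's former top-level logic, moved into a function."""
    return 45 * x


def main():
    # Imported here so the module can also be imported without Excel running.
    import xlwings as xw

    wb = xw.Book.caller()  # the workbook that triggered RunPython
    wb.sheets[0].range('A13').value = compute_value()
```

From VBA this would be invoked with `RunPython "import hello; hello.main()"`, mirroring the `hello.world()` call from the example. Keeping the calculation in its own function also lets it be checked without Excel.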

|

closed

|

2021-10-05T01:54:49Z

|

2022-02-05T20:08:30Z

|

https://github.com/xlwings/xlwings/issues/1727

|

[] |

leyojoseph

| 1

|

raphaelvallat/pingouin

|

pandas

| 266

|

python3.7 unable to install

|

[lazy_loader](https://pypi.org/project/lazy_loader/)

[pingouin](https://pypi.org/project/pingouin/)

`lazy_loader` requires Python >=3.8

Reason: `lazy_loader` does not support Python 3.7, so pingouin cannot be installed there.

|

closed

|

2022-05-15T04:54:38Z

|

2022-12-17T23:34:40Z

|

https://github.com/raphaelvallat/pingouin/issues/266

|

[

"invalid :triangular_flag_on_post:"

] |

mochazi

| 1

|

autokey/autokey

|

automation

| 720

|

All custom defined keybindings stopped working in "sticky keys" mode.

|

### Has this issue already been reported?

- [X] I have searched through the existing issues.

### Is this a question rather than an issue?

- [X] This is not a question.

### What type of issue is this?

_No response_

### Which Linux distribution did you use?

Debian.

### Which AutoKey GUI did you use?

_No response_

### Which AutoKey version did you use?

AutoKey-gtk

### How did you install AutoKey?

apt install autokey-gtk

### Can you briefly describe the issue?

All custom-defined keybindings stopped working in "sticky keys" mode. For example, I have defined control+f to be right_arrow, but it stops working once I am in "sticky keys" mode, that is, after activating "Treat a sequence of modifier keys as a combination" in Debian's "Accessibility" -> "Typing assistance" tab.

### Can the issue be reproduced?

Always

### What are the steps to reproduce the issue?

Enable "sticky keys:Treat a sequence of modifier keys as a combination" in Debian's "Accessibility" -> "Typing assistance" tab.

Type any keybinding defined in AutoKey.

### What should have happened?

The keybinding should remain the same.

### What actually happened?

Nothing happens, as if the keybinding were not triggered.

### Do you have screenshots?

_No response_

### Can you provide the output of the AutoKey command?

_No response_

### Anything else?

_No response_

|

closed

|

2022-08-04T04:02:09Z

|

2022-08-05T20:54:47Z

|

https://github.com/autokey/autokey/issues/720

|

[

"autokey triggers",

"invalid"

] |

genehwung

| 3

|

AutoGPTQ/AutoGPTQ

|

nlp

| 176

|

[BUG] list index out of range for arch_list when building AutoGPTQ

|

**Describe the bug**

The int4 quantization implementation of [baichuan-7B-GPTQ](https://huggingface.co/TheBloke/baichuan-7B-GPTQ) is based on this project. In an attempt to test that LLM, I tried building a custom container for that purpose, but `AutoGPTQ` failed to compile.

The `Dockerfile`

```

FROM pytorch/pytorch:1.13.1-cuda11.6-cudnn8-devel

RUN apt update && apt install -y build-essential git && rm -rf /var/lib/apt/lists/*

WORKDIR /build

RUN git clone https://github.com/PanQiWei/AutoGPTQ

WORKDIR /build/AutoGPTQ

# RUN GITHUB_ACTIONS=true pip3 install .

RUN GITHUB_ACTIONS=true pip3 install -i https://pypi.tuna.tsinghua.edu.cn/simple .

# RUN pip3 install transformers

WORKDIR /workspace

```

**Hardware details**

Information about CPU and GPU, such as RAM, number, etc.

**Software version**

Ubuntu 22.04 on WSL2

Docker Desktop 4.20.1

Version of relevant software such as operation system, cuda toolkit, python, auto-gptq, pytorch, transformers, accelerate, etc.

**To Reproduce**

Steps to reproduce the behavior:

1. build the image with `Dockerfile` above: `docker build -t autogptq:latest .`

Full log:

```

#0 16.59 Building wheels for collected packages: auto-gptq

#0 16.59 Building wheel for auto-gptq (setup.py): started

#0 41.98 Building wheel for auto-gptq (setup.py): finished with status 'error'

#0 41.99 error: subprocess-exited-with-error

#0 41.99

#0 41.99 × python setup.py bdist_wheel did not run successfully.

#0 41.99 │ exit code: 1

#0 41.99 ╰─> [123 lines of output]

#0 41.99 No CUDA runtime is found, using CUDA_HOME='/opt/conda'

#0 41.99 running bdist_wheel

#0 41.99 /opt/conda/lib/python3.10/site-packages/torch/utils/cpp_extension.py:476: UserWarning: Attempted to use ninja as the BuildExtension backend but we could not find ninja.. Falling back to using the slow distutils backend.

#0 41.99 warnings.warn(msg.format('we could not find ninja.'))

#0 41.99 running build

#0 41.99 running build_py

#0 41.99 creating build

#0 41.99 creating build/lib.linux-x86_64-cpython-310

#0 41.99 creating build/lib.linux-x86_64-cpython-310/auto_gptq

#0 41.99 copying auto_gptq/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq

#0 41.99 creating build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks

#0 41.99 copying auto_gptq/eval_tasks/text_summarization_task.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks

#0 41.99 copying auto_gptq/eval_tasks/sequence_classification_task.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks

#0 41.99 copying auto_gptq/eval_tasks/_base.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks

#0 41.99 copying auto_gptq/eval_tasks/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks

#0 41.99 copying auto_gptq/eval_tasks/language_modeling_task.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks

#0 41.99 creating build/lib.linux-x86_64-cpython-310/auto_gptq/quantization

#0 41.99 copying auto_gptq/quantization/quantizer.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/quantization

#0 41.99 copying auto_gptq/quantization/gptq.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/quantization

#0 41.99 copying auto_gptq/quantization/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/quantization

#0 41.99 creating build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules

#0 41.99 copying auto_gptq/nn_modules/fused_llama_mlp.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules

#0 41.99 copying auto_gptq/nn_modules/fused_llama_attn.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules

#0 41.99 copying auto_gptq/nn_modules/fused_gptj_attn.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules

#0 41.99 copying auto_gptq/nn_modules/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules

#0 41.99 copying auto_gptq/nn_modules/_fused_base.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules

#0 41.99 creating build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/moss.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/gpt_neox.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/gptj.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/baichuan.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/_utils.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/gpt2.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/auto.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/_const.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/codegen.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/opt.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/gpt_bigcode.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/rw.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/_base.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/llama.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 copying auto_gptq/modeling/bloom.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 41.99 creating build/lib.linux-x86_64-cpython-310/auto_gptq/utils

#0 41.99 copying auto_gptq/utils/peft_utils.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/utils

#0 41.99 copying auto_gptq/utils/import_utils.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/utils

#0 41.99 copying auto_gptq/utils/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/utils

#0 41.99 copying auto_gptq/utils/data_utils.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/utils

#0 41.99 creating build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks/_utils

#0 41.99 copying auto_gptq/eval_tasks/_utils/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks/_utils

#0 41.99 copying auto_gptq/eval_tasks/_utils/classification_utils.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks/_utils

#0 41.99 copying auto_gptq/eval_tasks/_utils/generation_utils.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks/_utils

#0 41.99 creating build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/qlinear

#0 41.99 copying auto_gptq/nn_modules/qlinear/qlinear_triton.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/qlinear

#0 41.99 copying auto_gptq/nn_modules/qlinear/qlinear_cuda_old.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/qlinear

#0 41.99 copying auto_gptq/nn_modules/qlinear/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/qlinear

#0 41.99 copying auto_gptq/nn_modules/qlinear/qlinear_cuda.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/qlinear

#0 41.99 creating build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/triton_utils

#0 41.99 copying auto_gptq/nn_modules/triton_utils/custom_autotune.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/triton_utils

#0 41.99 copying auto_gptq/nn_modules/triton_utils/mixin.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/triton_utils

#0 41.99 copying auto_gptq/nn_modules/triton_utils/kernels.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/triton_utils

#0 41.99 copying auto_gptq/nn_modules/triton_utils/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/triton_utils

#0 41.99 running build_ext

#0 41.99 building 'autogptq_cuda_64' extension

#0 41.99 creating build/temp.linux-x86_64-cpython-310

#0 41.99 creating build/temp.linux-x86_64-cpython-310/autogptq_cuda

#0 41.99 gcc -pthread -B /opt/conda/compiler_compat -Wno-unused-result -Wsign-compare -DNDEBUG -fwrapv -O2 -Wall -fPIC -O2 -isystem /opt/conda/include -fPIC -O2 -isystem /opt/conda/include -fPIC -I/opt/conda/lib/python3.10/site-packages/torch/include -I/opt/conda/lib/python3.10/site-packages/torch/include/torch/csrc/api/include -I/opt/conda/lib/python3.10/site-packages/torch/include/TH -I/opt/conda/lib/python3.10/site-packages/torch/include/THC -I/opt/conda/include -Iautogptq_cuda -I/opt/conda/include/python3.10 -c autogptq_cuda/autogptq_cuda_64.cpp -o build/temp.linux-x86_64-cpython-310/autogptq_cuda/autogptq_cuda_64.o -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE=\"_gcc\" -DPYBIND11_STDLIB=\"_libstdcpp\" -DPYBIND11_BUILD_ABI=\"_cxxabi1011\" -DTORCH_EXTENSION_NAME=autogptq_cuda_64 -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

#0 41.99 Traceback (most recent call last):

#0 41.99 File "<string>", line 2, in <module>

#0 41.99 File "<pip-setuptools-caller>", line 34, in <module>

#0 41.99 File "/build/AutoGPTQ/setup.py", line 98, in <module>

#0 41.99 setup(

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/__init__.py", line 87, in setup

#0 41.99 return distutils.core.setup(**attrs)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/core.py", line 185, in setup

#0 41.99 return run_commands(dist)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/core.py", line 201, in run_commands

#0 41.99 dist.run_commands()

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/dist.py", line 968, in run_commands

#0 41.99 self.run_command(cmd)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/dist.py", line 1217, in run_command

#0 41.99 super().run_command(command)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/dist.py", line 987, in run_command

#0 41.99 cmd_obj.run()

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/wheel/bdist_wheel.py", line 299, in run

#0 41.99 self.run_command('build')

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/cmd.py", line 319, in run_command

#0 41.99 self.distribution.run_command(command)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/dist.py", line 1217, in run_command

#0 41.99 super().run_command(command)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/dist.py", line 987, in run_command

#0 41.99 cmd_obj.run()

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/command/build.py", line 132, in run

#0 41.99 self.run_command(cmd_name)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/cmd.py", line 319, in run_command

#0 41.99 self.distribution.run_command(command)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/dist.py", line 1217, in run_command

#0 41.99 super().run_command(command)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/dist.py", line 987, in run_command

#0 41.99 cmd_obj.run()

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/command/build_ext.py", line 84, in run

#0 41.99 _build_ext.run(self)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/command/build_ext.py", line 346, in run

#0 41.99 self.build_extensions()

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/torch/utils/cpp_extension.py", line 843, in build_extensions

#0 41.99 build_ext.build_extensions(self)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/command/build_ext.py", line 466, in build_extensions

#0 41.99 self._build_extensions_serial()

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/command/build_ext.py", line 492, in _build_extensions_serial

#0 41.99 self.build_extension(ext)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/command/build_ext.py", line 246, in build_extension

#0 41.99 _build_ext.build_extension(self, ext)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/command/build_ext.py", line 547, in build_extension

#0 41.99 objects = self.compiler.compile(

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/ccompiler.py", line 599, in compile

#0 41.99 self._compile(obj, src, ext, cc_args, extra_postargs, pp_opts)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/torch/utils/cpp_extension.py", line 581, in unix_wrap_single_compile

#0 41.99 cflags = unix_cuda_flags(cflags)

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/torch/utils/cpp_extension.py", line 548, in unix_cuda_flags

#0 41.99 cflags + _get_cuda_arch_flags(cflags))

#0 41.99 File "/opt/conda/lib/python3.10/site-packages/torch/utils/cpp_extension.py", line 1780, in _get_cuda_arch_flags

#0 41.99 arch_list[-1] += '+PTX'

#0 41.99 IndexError: list index out of range

#0 41.99 [end of output]

#0 41.99

#0 41.99 note: This error originates from a subprocess, and is likely not a problem with pip.

#0 41.99 ERROR: Failed building wheel for auto-gptq

#0 41.99 Running setup.py clean for auto-gptq

#0 43.35 Failed to build auto-gptq

#0 44.28 Installing collected packages: tokenizers, safetensors, xxhash, tzdata, rouge, regex, python-dateutil, pyarrow, packaging, multidict, fsspec, frozenlist, dill, async-timeout, yarl, pandas, multiprocess, huggingface-hub, aiosignal, accelerate, transformers, aiohttp, peft, datasets, auto-gptq

#0 52.98 Running setup.py install for auto-gptq: started

#0 74.51 Running setup.py install for auto-gptq: finished with status 'error'

#0 74.52 error: subprocess-exited-with-error

#0 74.52

#0 74.52 × Running setup.py install for auto-gptq did not run successfully.

#0 74.52 │ exit code: 1

#0 74.52 ╰─> [127 lines of output]

#0 74.52 No CUDA runtime is found, using CUDA_HOME='/opt/conda'

#0 74.52 running install

#0 74.52 /opt/conda/lib/python3.10/site-packages/setuptools/command/install.py:34: SetuptoolsDeprecationWarning: setup.py install is deprecated. Use build and pip and other standards-based tools.

#0 74.52 warnings.warn(

#0 74.52 running build

#0 74.52 running build_py

#0 74.52 creating build

#0 74.52 creating build/lib.linux-x86_64-cpython-310

#0 74.52 creating build/lib.linux-x86_64-cpython-310/auto_gptq

#0 74.52 copying auto_gptq/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq

#0 74.52 creating build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks

#0 74.52 copying auto_gptq/eval_tasks/text_summarization_task.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks

#0 74.52 copying auto_gptq/eval_tasks/sequence_classification_task.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks

#0 74.52 copying auto_gptq/eval_tasks/_base.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks

#0 74.52 copying auto_gptq/eval_tasks/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks

#0 74.52 copying auto_gptq/eval_tasks/language_modeling_task.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks

#0 74.52 creating build/lib.linux-x86_64-cpython-310/auto_gptq/quantization

#0 74.52 copying auto_gptq/quantization/quantizer.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/quantization

#0 74.52 copying auto_gptq/quantization/gptq.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/quantization

#0 74.52 copying auto_gptq/quantization/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/quantization

#0 74.52 creating build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules

#0 74.52 copying auto_gptq/nn_modules/fused_llama_mlp.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules

#0 74.52 copying auto_gptq/nn_modules/fused_llama_attn.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules

#0 74.52 copying auto_gptq/nn_modules/fused_gptj_attn.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules

#0 74.52 copying auto_gptq/nn_modules/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules

#0 74.52 copying auto_gptq/nn_modules/_fused_base.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules

#0 74.52 creating build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/moss.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/gpt_neox.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/gptj.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/baichuan.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/_utils.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/gpt2.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/auto.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/_const.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/codegen.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/opt.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/gpt_bigcode.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/rw.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/_base.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/llama.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 copying auto_gptq/modeling/bloom.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/modeling

#0 74.52 creating build/lib.linux-x86_64-cpython-310/auto_gptq/utils

#0 74.52 copying auto_gptq/utils/peft_utils.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/utils

#0 74.52 copying auto_gptq/utils/import_utils.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/utils

#0 74.52 copying auto_gptq/utils/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/utils

#0 74.52 copying auto_gptq/utils/data_utils.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/utils

#0 74.52 creating build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks/_utils

#0 74.52 copying auto_gptq/eval_tasks/_utils/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks/_utils

#0 74.52 copying auto_gptq/eval_tasks/_utils/classification_utils.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks/_utils

#0 74.52 copying auto_gptq/eval_tasks/_utils/generation_utils.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/eval_tasks/_utils

#0 74.52 creating build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/qlinear

#0 74.52 copying auto_gptq/nn_modules/qlinear/qlinear_triton.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/qlinear

#0 74.52 copying auto_gptq/nn_modules/qlinear/qlinear_cuda_old.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/qlinear

#0 74.52 copying auto_gptq/nn_modules/qlinear/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/qlinear

#0 74.52 copying auto_gptq/nn_modules/qlinear/qlinear_cuda.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/qlinear

#0 74.52 creating build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/triton_utils

#0 74.52 copying auto_gptq/nn_modules/triton_utils/custom_autotune.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/triton_utils

#0 74.52 copying auto_gptq/nn_modules/triton_utils/mixin.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/triton_utils

#0 74.52 copying auto_gptq/nn_modules/triton_utils/kernels.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/triton_utils

#0 74.52 copying auto_gptq/nn_modules/triton_utils/__init__.py -> build/lib.linux-x86_64-cpython-310/auto_gptq/nn_modules/triton_utils

#0 74.52 running build_ext

#0 74.52 /opt/conda/lib/python3.10/site-packages/torch/utils/cpp_extension.py:476: UserWarning: Attempted to use ninja as the BuildExtension backend but we could not find ninja.. Falling back to using the slow distutils backend.

#0 74.52 warnings.warn(msg.format('we could not find ninja.'))

#0 74.52 building 'autogptq_cuda_64' extension

#0 74.52 creating build/temp.linux-x86_64-cpython-310

#0 74.52 creating build/temp.linux-x86_64-cpython-310/autogptq_cuda

#0 74.52 gcc -pthread -B /opt/conda/compiler_compat -Wno-unused-result -Wsign-compare -DNDEBUG -fwrapv -O2 -Wall -fPIC -O2 -isystem /opt/conda/include -fPIC -O2 -isystem /opt/conda/include -fPIC -I/opt/conda/lib/python3.10/site-packages/torch/include -I/opt/conda/lib/python3.10/site-packages/torch/include/torch/csrc/api/include -I/opt/conda/lib/python3.10/site-packages/torch/include/TH -I/opt/conda/lib/python3.10/site-packages/torch/include/THC -I/opt/conda/include -Iautogptq_cuda -I/opt/conda/include/python3.10 -c autogptq_cuda/autogptq_cuda_64.cpp -o build/temp.linux-x86_64-cpython-310/autogptq_cuda/autogptq_cuda_64.o -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE=\"_gcc\" -DPYBIND11_STDLIB=\"_libstdcpp\" -DPYBIND11_BUILD_ABI=\"_cxxabi1011\" -DTORCH_EXTENSION_NAME=autogptq_cuda_64 -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14

#0 74.52 Traceback (most recent call last):

#0 74.52 File "<string>", line 2, in <module>

#0 74.52 File "<pip-setuptools-caller>", line 34, in <module>

#0 74.52 File "/build/AutoGPTQ/setup.py", line 98, in <module>

#0 74.52 setup(

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/__init__.py", line 87, in setup

#0 74.52 return distutils.core.setup(**attrs)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/core.py", line 185, in setup

#0 74.52 return run_commands(dist)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/core.py", line 201, in run_commands

#0 74.52 dist.run_commands()

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/dist.py", line 968, in run_commands

#0 74.52 self.run_command(cmd)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/dist.py", line 1217, in run_command

#0 74.52 super().run_command(command)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/dist.py", line 987, in run_command

#0 74.52 cmd_obj.run()

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/command/install.py", line 68, in run

#0 74.52 return orig.install.run(self)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/command/install.py", line 698, in run

#0 74.52 self.run_command('build')

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/cmd.py", line 319, in run_command

#0 74.52 self.distribution.run_command(command)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/dist.py", line 1217, in run_command

#0 74.52 super().run_command(command)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/dist.py", line 987, in run_command

#0 74.52 cmd_obj.run()

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/command/build.py", line 132, in run

#0 74.52 self.run_command(cmd_name)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/cmd.py", line 319, in run_command

#0 74.52 self.distribution.run_command(command)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/dist.py", line 1217, in run_command

#0 74.52 super().run_command(command)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/dist.py", line 987, in run_command

#0 74.52 cmd_obj.run()

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/command/build_ext.py", line 84, in run

#0 74.52 _build_ext.run(self)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/command/build_ext.py", line 346, in run

#0 74.52 self.build_extensions()

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/torch/utils/cpp_extension.py", line 843, in build_extensions

#0 74.52 build_ext.build_extensions(self)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/command/build_ext.py", line 466, in build_extensions

#0 74.52 self._build_extensions_serial()

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/command/build_ext.py", line 492, in _build_extensions_serial

#0 74.52 self.build_extension(ext)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/command/build_ext.py", line 246, in build_extension

#0 74.52 _build_ext.build_extension(self, ext)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/command/build_ext.py", line 547, in build_extension

#0 74.52 objects = self.compiler.compile(

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/setuptools/_distutils/ccompiler.py", line 599, in compile

#0 74.52 self._compile(obj, src, ext, cc_args, extra_postargs, pp_opts)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/torch/utils/cpp_extension.py", line 581, in unix_wrap_single_compile

#0 74.52 cflags = unix_cuda_flags(cflags)

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/torch/utils/cpp_extension.py", line 548, in unix_cuda_flags

#0 74.52 cflags + _get_cuda_arch_flags(cflags))

#0 74.52 File "/opt/conda/lib/python3.10/site-packages/torch/utils/cpp_extension.py", line 1780, in _get_cuda_arch_flags

#0 74.52 arch_list[-1] += '+PTX'

#0 74.52 IndexError: list index out of range

#0 74.52 [end of output]

#0 74.52

#0 74.52 note: This error originates from a subprocess, and is likely not a problem with pip.

#0 74.52 error: legacy-install-failure

#0 74.52

#0 74.52 × Encountered error while trying to install package.

#0 74.52 ╰─> auto-gptq

#0 74.52

#0 74.52 note: This is an issue with the package mentioned above, not pip.

#0 74.52 hint: See above for output from the failure.

------

Dockerfile:10

--------------------

8 | WORKDIR /build/AutoGPTQ

9 | # RUN GITHUB_ACTIONS=true pip3 install .

10 | >>> RUN GITHUB_ACTIONS=true pip3 install -i https://pypi.tuna.tsinghua.edu.cn/simple .

11 | # RUN pip3 install transformers

12 | WORKDIR /workspace

--------------------

ERROR: failed to solve: process "/bin/sh -c GITHUB_ACTIONS=true pip3 install -i https://pypi.tuna.tsinghua.edu.cn/simple ." did not complete successfully: exit code: 1

```
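Both tracebacks end at `arch_list[-1] += '+PTX'`, and the log starts with "No CUDA runtime is found". A plausible reading is that no GPU is visible during `docker build`, so the list of detected architectures is empty. The sketch below is a simplified model of that logic and not PyTorch's actual code; the function name `cuda_arch_list` and its parameters are made up for illustration. `TORCH_CUDA_ARCH_LIST` is the environment variable that PyTorch's extension builder reads.

```python
import os


def cuda_arch_list(visible_capabilities, env=None):
    """Simplified model: derive the arch list from the environment or from GPUs.

    visible_capabilities: list of (major, minor) tuples for the visible GPUs.
    env: mapping used instead of os.environ (handy for experiments).
    """
    env = os.environ if env is None else env
    requested = env.get("TORCH_CUDA_ARCH_LIST")
    if requested:
        # An explicit list wins, so no GPU needs to be visible at build time.
        return requested.replace(" ", ";").split(";")

    arch_list = sorted({f"{major}.{minor}" for major, minor in visible_capabilities})
    # With no visible GPU the list is empty and this line raises IndexError.
    arch_list[-1] += "+PTX"
    return arch_list
```

Under this reading, setting the variable in the `Dockerfile` before the `pip3 install` step (for example to the compute capability of the target GPU) would avoid the empty list. The right value depends on the hardware, which the report does not state.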

**Expected behavior**

A clear and concise description of what you expected to happen.

**Screenshots**

If applicable, add screenshots to help explain your problem.

**Additional context**

Add any other context about the problem here.

|

closed

|

2023-06-25T11:30:38Z

|

2023-06-26T08:19:30Z

|

https://github.com/AutoGPTQ/AutoGPTQ/issues/176

|

[

"bug"

] |

BorisPolonsky

| 1

|

MaartenGr/BERTopic

|

nlp

| 1,290

|

TypeError: cannot unpack non-iterable BERTopic object

|

Greetings MaartenGr,

could you please explain this type of error to me?

TypeError: cannot unpack non-iterable BERTopic object

Thanks in advance.
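
One common way to hit this message (an assumption about the cause, since the issue includes no code) is unpacking the return value of `fit`, which returns the model itself, instead of `fit_transform`, which returns a `(topics, probabilities)` pair. The sketch below reproduces the same kind of error with a small stand-in class, `TinyTopicModel`, which is hypothetical and only mimics that return convention.

```python
class TinyTopicModel:
    """Hypothetical stand-in that mimics the fit / fit_transform return convention."""

    def fit(self, docs):
        # Like scikit-learn style estimators, fit() returns the model itself.
        self.topics_ = [len(d.split()) % 2 for d in docs]
        return self

    def fit_transform(self, docs):
        # fit_transform() returns a pair that can be unpacked.
        self.fit(docs)
        probs = [1.0 for _ in docs]
        return self.topics_, probs


docs = ["first short document", "another one"]

try:
    # Wrong: fit() returns a single object, so unpacking into two names fails.
    topics, probs = TinyTopicModel().fit(docs)
except TypeError as exc:
    error_message = str(exc)

# Right: fit_transform() returns two values.
topics, probs = TinyTopicModel().fit_transform(docs)
```

If the failing line has the shape `topics, probs = model.fit(docs)`, switching to `fit_transform` is the likely fix.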

|

closed

|

2023-05-22T22:35:35Z

|

2023-09-27T08:59:37Z

|

https://github.com/MaartenGr/BERTopic/issues/1290

|

[] |

Keamww2021

| 2

|

RobertCraigie/prisma-client-py

|

pydantic

| 19

|

Add support for selecting fields

|

## Problem

A crucial part of modern, performant ORMs is the ability to choose which fields are returned. Prisma Client Python is currently missing this feature.

## Mypy solution

As we have a mypy plugin, we can dynamically modify types on the fly. This means we would be able to make use of a more ergonomic solution.

```py
class Model(BaseModel):
    id: str
    name: str
    points: Optional[int]

class SelectedModel(BaseModel):
    id: Optional[str]
    name: Optional[str]
    points: Optional[int]

ModelSelect = Iterable[Literal['id', 'name', 'points']]

@overload
def action(
    ...
) -> Model:
    ...

@overload
def action(
    ...
    select: ModelSelect
) -> SelectedModel:
    ...

model = action(select={'id', 'name'})
```

The mypy plugin would then dynamically remove the `Optional` from the model for every field that is selected. We might also be able to remove the fields that aren't selected, although I don't know if this is possible.

The downside to a solution like this is that unreachable code will not trigger an error when type checking with a type checker other than mypy, e.g.

```py
user = await client.user.find_first(select={'name'})
if user.id is not None:
    print(user.id)
```

This will pass type checks even though the `if` block will never run.

EDIT: A potential solution for the above would be to not use optional and instead use our own custom type, e.g. maybe something like `PrismaMaybeUnset`. This has its own downsides though.

EDIT: I think we may also want to support setting a "default include" value so that relations will always be fetched unless explicitly given `False`. This will not change the generated types and they will still be `Optional[T]`.

## Type checker agnostic solution

After #59 is implemented the query builder should only select the fields that are present on the given `BaseModel`.

This would mean that users could generate partial types and then easily use them to select certain fields.

```py

User.create_partial('UserOnlyName', include={'name'})

```

```py

from prisma.partials import UserOnlyName

user = await UserOnlyName.prisma().find_unique(where={'id': 'abc'})

```

Or define the models by hand:

```py
class User(BaseUser):
    name: str

user = await User.prisma().find_unique(where={'id': 'abc'})
```

This will make typing generic functions that process models more difficult. For example, the following function would not accept custom models:

```py
def process_user(user: User) -> None:
    ...
```

It could however be modified to accept objects with the correct properties by using a `Protocol`.

```py
class UserWithID(Protocol):
    id: str

def process_user(user: UserWithID):
    ...
```
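
To make the `Protocol` idea concrete, here is a self-contained sketch. `FullUser` and `UserOnlyID` are hypothetical stand-ins for a generated model and a partial model; they are plain dataclasses rather than Prisma models, so this only illustrates the structural typing, not the query builder.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


class UserWithID(Protocol):
    id: str


@dataclass
class FullUser:
    # Hypothetical stand-in for a full generated model.
    id: str
    name: str
    points: Optional[int] = None


@dataclass
class UserOnlyID:
    # Hypothetical stand-in for a partial model that only selects `id`.
    id: str


def process_user(user: UserWithID) -> str:
    # Only the `id` attribute is required, so either model is accepted.
    return f"processing {user.id}"


results = [
    process_user(FullUser(id="abc", name="Robert")),
    process_user(UserOnlyID(id="xyz")),
]
```

Neither class inherits from `UserWithID`; a type checker accepts both because they have a matching `id: str` attribute.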

|

closed

|

2021-06-14T18:59:57Z

|

2023-03-08T12:41:27Z

|

https://github.com/RobertCraigie/prisma-client-py/issues/19

|

[

"kind/feature",

"level/advanced",

"priority/medium"

] |

RobertCraigie

| 4

|

K3D-tools/K3D-jupyter

|

jupyter

| 17

|

Plot objects persist

|

If one has a plot with, e.g., a single object, then that object cannot be removed:

from k3d import K3D

plot = K3D()

plot += K3D.text("HEY",(1,1,1))

print("Expect one object",plot.objects )

plot = K3D()

print("Should be empty list:",plot.objects)

|

closed

|

2017-05-04T10:18:59Z

|

2017-05-07T06:44:28Z

|

https://github.com/K3D-tools/K3D-jupyter/issues/17

|

[] |

marcinofulus

| 0

|

nalepae/pandarallel

|

pandas

| 97

|

Bug with the progressbar

|

Hi!

Thanks for the nice tool! :)

I have an error only if I use the progress bar

`TypeError: ("argument of type 'int' is not iterable", 'occurred at index M05218:191:000000000-D7R5H:1:1102:14482:19336')`

It works well without the bar...

Any idea?

Cheers,

Mathieu

|

closed

|

2020-06-10T12:22:35Z

|

2022-03-14T20:36:46Z

|

https://github.com/nalepae/pandarallel/issues/97

|

[] |

mbahin

| 1

|

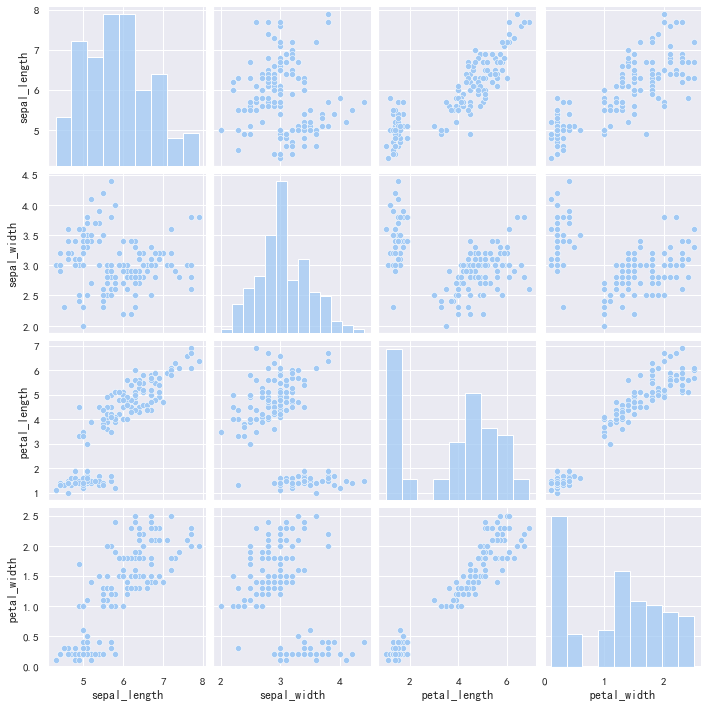

mwaskom/seaborn

|

data-visualization

| 3,268

|

Wrong ylim using pairplot

|

`pairplot` does not seem to support `sharey=True` like `FacetGrid` does. This may cause unnecessary problems if I have to draw a plot with different ylims using `pairplot`. Take the iris dataset as an example.

```python

import matplotlib.pyplot as plt

import seaborn as sns

iris = sns.load_dataset('iris')

# pairplot() example

g = sns.pairplot(iris, kind='scatter', diag_kind='hist', grid_kws=dict('sepal_length'))

plt.show()

```

Despite passing `'sepal_length'` to the function, the ylim of the histogram is not the value I want. If I use `sns.histplot` to draw the histogram of sepal_length in the iris dataset, the ylim is (0, 25).

```python

sns.histplot(iris,x='sepal_length')

```

|

closed

|

2023-02-19T05:34:46Z

|

2023-02-19T17:31:01Z

|

https://github.com/mwaskom/seaborn/issues/3268

|

[] |

kebuAAA

| 3

|

ymcui/Chinese-LLaMA-Alpaca

|

nlp

| 451

|

Based on the LLaMA base model, how is adapter_config produced during pre-training?

|

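*Tip: put an x inside [ ] to tick a box. Delete this line when asking your question. Keep only the options that apply.*

### Detailed description of the problem

1. The base model is llama-7b.

2. The tokenizer is produced by merge_tokenizers (base model + Chinese sp).

3. I trained with the pre-training script, and the training output is in the output directory.

4. [wiki](https://github.com/ymcui/Chinese-LLaMA-Alpaca/wiki/%E9%A2%84%E8%AE%AD%E7%BB%83%E8%84%9A%E6%9C%AC#%E8%AE%AD%E7%BB%83%E5%90%8E%E6%96%87%E4%BB%B6%E6%95%B4%E7%90%86) In the post-training file organization step described here, where is adapter_config generated from?

### Screenshots or logs

### Required checklist (for the first three items, keep only the ones you are asking about)

- [x ] **Base model**: LLaMA / Alpaca / LLaMA-Plus / Alpaca-Plus

- [ ] **Operating system**: Windows / MacOS / Linux

- [ ] **Issue category**: Download issues / Model conversion and merging / Model training and fine-tuning / Model inference issues (🤗 transformers) / Model quantization and deployment issues (llama.cpp, text-generation-webui, LlamaChat) / Output quality issues / Other issues

- [x ] (Required) Because the related dependencies are updated frequently, please make sure you follow the relevant steps in the [Wiki](https://github.com/ymcui/Chinese-LLaMA-Alpaca/wiki)

- [ ] (Required) I have read the [FAQ section](https://github.com/ymcui/Chinese-LLaMA-Alpaca/wiki/常见问题) and searched the issues for this problem, and found no similar problem or solution

- [ ] (Required) Third-party plugin issues: for example [llama.cpp](https://github.com/ggerganov/llama.cpp), [text-generation-webui](https://github.com/oobabooga/text-generation-webui), [LlamaChat](https://github.com/alexrozanski/LlamaChat), etc.; it is also recommended to look for solutions in the corresponding project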

|

closed

|

2023-05-29T09:20:06Z

|

2023-05-29T10:39:35Z

|

https://github.com/ymcui/Chinese-LLaMA-Alpaca/issues/451

|

[] |

richardkelly2014

| 5

|

miguelgrinberg/microblog

|

flask

| 228

|

Problem in Chapter 3 using flask-wtf

|

Hi, This is not really an issue but FYI, in working through chapter 3, I had trouble using the current version of flask-wtf or, technically, werkzeug. There was apparently a change to the way werkzeug handled url encoding as documented in [this link](https://github.com/pallets/flask/issues/3481). The solution (albeit temporary I guess) is to downgrade to a prior version of werkzeug as noted in that link.

Here is my stack trace:

[werkzeug_error.txt](https://github.com/miguelgrinberg/microblog/files/4536379/werkzeug_error.txt)

|

closed

|

2020-04-26T20:07:39Z

|

2020-06-30T22:48:50Z

|

https://github.com/miguelgrinberg/microblog/issues/228

|

[

"question"

] |

TriumphTodd

| 1

|

plotly/dash-core-components

|

dash

| 756

|

Mapbox Graph wrapped in dcc.Loading lags one update behind

|

Hi there,

when I wrap a Mapbox scatter plot in a dcc.Loading component, updates to this figure seem to be delayed by one update. The same logic with normal scatter plots works fine. I could reproduce this behavior with Dash Core Components 1.8.0 and previous versions.

Here's a demo:

and here's the code:

```python
import dash
import dash_html_components as html
import dash_core_components as dcc
import plotly.graph_objects as go
from dash.dependencies import Output, Input

app = dash.Dash(__name__)

lat = [10, 10, 10, 10]
lon = [10, 20, 30, 40]

app.layout = html.Div([
    html.Div('Select a point on the map:'),
    dcc.Slider(min=0, max=3, step=1, value=0, marks=[0, 1, 2, 3], id='slider'),
    dcc.Loading(dcc.Graph(id='graphContainer'))
])

@app.callback(Output('graphContainer', 'figure'),
              [Input('slider', 'value')])
def UpdateGraph(value):
    return {'data': [go.Scattermapbox(lat=lat, lon=lon, selectedpoints=[value])],
            'layout': go.Layout(mapbox_style='carto-positron')}

app.run_server()
```

|

closed

|

2020-02-15T14:19:45Z

|

2020-05-12T20:57:30Z

|

https://github.com/plotly/dash-core-components/issues/756

|

[] |

ghost

| 6

|

qubvel-org/segmentation_models.pytorch

|

computer-vision

| 550

|

Custom weights

|

Is there a way to pass custom weights for models other than the ones mentioned in the table?

|

closed

|

2022-01-31T05:38:49Z

|

2022-01-31T08:26:31Z

|

https://github.com/qubvel-org/segmentation_models.pytorch/issues/550

|

[] |

pranavsinghps1

| 2

|

aidlearning/AidLearning-FrameWork

|

jupyter

| 204

|

Considerations on network security

|

The cloud_ip feature is extremely useful, but it transmits passwords in plaintext over HTTP on the LAN, which is very unsafe on a large LAN such as a campus network. Others can capture packets to obtain your password (especially a weak one) and gain access to the personal data on your phone. My suggestion is to add an IP access whitelist mechanism that temporarily authorizes each requesting IP. Is this suggestion feasible or practical? If there is a better approach, please let me know.
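
A minimal sketch of the temporary whitelist idea, assuming an in-memory table that maps each IP address to an expiry time. The class `TemporaryAllowlist` and its methods are hypothetical and are not part of AidLearning. Such a list only limits who may connect; it does not encrypt the password in transit.

```python
import ipaddress
import time


class TemporaryAllowlist:
    """Hypothetical in-memory allowlist: each IP is authorized until its expiry time."""

    def __init__(self):
        self._expiry = {}  # ip address -> expiry timestamp (monotonic clock)

    def grant(self, ip, ttl_seconds, now=None):
        # Authorize `ip` for `ttl_seconds` starting from `now`.
        now = time.monotonic() if now is None else now
        self._expiry[ipaddress.ip_address(ip)] = now + ttl_seconds

    def is_allowed(self, ip, now=None):
        # An IP is allowed only if it was granted and the grant has not expired.
        now = time.monotonic() if now is None else now
        expiry = self._expiry.get(ipaddress.ip_address(ip))
        return expiry is not None and now < expiry


allowlist = TemporaryAllowlist()
allowlist.grant("192.168.1.20", ttl_seconds=600, now=0.0)
```

The `now` parameter is there so the expiry logic can be checked without waiting; a server would normally omit it and rely on the clock.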

|

closed

|

2022-01-04T02:59:38Z

|

2022-12-05T12:23:46Z

|

https://github.com/aidlearning/AidLearning-FrameWork/issues/204

|

[] |

LY1806620741

| 5

|

ResidentMario/missingno

|

data-visualization

| 2

|

Option to remove the sparkline

|

Hi,

Many thanks for the awesome work! When the number of rows is large, the sparkline becomes less useful: it is harder to tell how many features are available just by looking at it. I wonder whether an option to toggle the sparkline off could be added.

|

closed

|

2016-03-30T05:46:47Z

|

2016-04-08T05:29:41Z

|

https://github.com/ResidentMario/missingno/issues/2

|

[

"enhancement"

] |

nipunbatra

| 3

|

andrew-hossack/dash-tools

|

plotly

| 105

|

https://github.com/badges/shields/issues/8671

|

closed

|

2023-02-02T15:08:15Z

|

2023-10-17T15:51:56Z

|

https://github.com/andrew-hossack/dash-tools/issues/105

|

[] |

andrew-hossack

| 0

|

|

apache/airflow

|

data-science

| 48,026

|

KPO mapped task failing

|

### Apache Airflow version

3.0.0

### If "Other Airflow 2 version" selected, which one?

_No response_

### What happened?

KPO mapped task failing

**Error**

```

scheduler [2025-03-20T17:28:28.774+0000] {dagrun.py:994} INFO - Marking run <DagRun kpo_override_resource_negative_case @ 2025-03-20 17:15:52.320478+00:00: scheduled__2025-03-20T17:15:52.320478+00:00, state:running, queued_at: 2025-03-20 17:15:57.320782+00:00. run_type: scheduled> successful

scheduler Dag run in success state

scheduler Dag run start:2025-03-20 17:15:57.356238+00:00 end:2025-03-20 17:28:28.775063+00:00

scheduler [2025-03-20T17:28:28.780+0000] {dagrun.py:1041} INFO - DagRun Finished: dag_id=kpo_override_resource_negative_case, logical_date=2025-03-20 17:15:52.320478+00:00, run_id=scheduled__2025-03-20T17:15:52.320478+00:00, run_start_date=2025-03-20 17:15:57.356238+00:00, run_end_date=2025-03-20 17:28:28.775063+00:00, run_duration=751.418825, state=success, run_type=scheduled, data_interval_start=2025-03-20 17:15:52.320478+00:00, data_interval_end=2025-03-20 17:15:52.320478+00:00,

scheduler [2025-03-20T17:28:28.792+0000] {adapter.py:412} WARNING - Failed to emit DAG success event:

scheduler Traceback (most recent call last):

scheduler File "/usr/local/lib/python3.12/site-packages/sqlalchemy/engine/base.py", line 1910, in _execute_context

scheduler self.dialect.do_execute(

scheduler File "/usr/local/lib/python3.12/site-packages/sqlalchemy/engine/default.py", line 736, in do_execute

scheduler cursor.execute(statement, parameters)

scheduler psycopg2.OperationalError: lost synchronization with server: got message type "r", length 1919509605

scheduler

scheduler

scheduler The above exception was the direct cause of the following exception:

scheduler

scheduler Traceback (most recent call last):

scheduler File "/usr/local/lib/python3.12/site-packages/airflow/providers/openlineage/plugins/adapter.py", line 397, in dag_success

scheduler **get_airflow_state_run_facet(dag_id, run_id, task_ids, dag_run_state),

scheduler ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

scheduler File "/usr/local/lib/python3.12/site-packages/airflow/providers/openlineage/utils/utils.py", line 561, in get_airflow_state_run_facet

scheduler tis = DagRun.fetch_task_instances(dag_id=dag_id, run_id=run_id, task_ids=task_ids)

scheduler ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

scheduler File "/usr/local/lib/python3.12/site-packages/airflow/utils/session.py", line 101, in wrapper

scheduler return func(*args, session=session, **kwargs)

scheduler ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

scheduler File "/usr/local/lib/python3.12/site-packages/airflow/models/dagrun.py", line 708, in fetch_task_instances

scheduler return session.scalars(tis).all()

scheduler ^^^^^^^^^^^^^^^^^^^^

scheduler File "/usr/local/lib/python3.12/site-packages/sqlalchemy/orm/session.py", line 1778, in scalars

scheduler return self.execute(

scheduler ^^^^^^^^^^^^^

scheduler File "/usr/local/lib/python3.12/site-packages/sqlalchemy/orm/session.py", line 1717, in execute

scheduler result = conn._execute_20(statement, params or {}, execution_options)

scheduler ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

scheduler File "/usr/local/lib/python3.12/site-packages/sqlalchemy/engine/base.py", line 1710, in _execute_20

scheduler return meth(self, args_10style, kwargs_10style, execution_options)

scheduler ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

scheduler File "/usr/local/lib/python3.12/site-packages/sqlalchemy/sql/elements.py", line 334, in _execute_on_connection

scheduler return connection._execute_clauseelement(

scheduler ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

scheduler File "/usr/local/lib/python3.12/site-packages/sqlalchemy/engine/base.py", line 1577, in _execute_clauseelement

scheduler ret = self._execute_context(

scheduler ^^^^^^^^^^^^^^^^^^^^^^

scheduler File "/usr/local/lib/python3.12/site-packages/sqlalchemy/engine/base.py", line 1953, in _execute_context

scheduler self._handle_dbapi_exception(

scheduler File "/usr/local/lib/python3.12/site-packages/sqlalchemy/engine/base.py", line 2134, in _handle_dbapi_exception

scheduler util.raise_(

scheduler File "/usr/local/lib/python3.12/site-packages/sqlalchemy/util/compat.py", line 211, in raise_

scheduler raise exception

scheduler File "/usr/local/lib/python3.12/site-packages/sqlalchemy/engine/base.py", line 1910, in _execute_context

scheduler self.dialect.do_execute(

scheduler File "/usr/local/lib/python3.12/site-packages/sqlalchemy/engine/default.py", line 736, in do_execute

scheduler cursor.execute(statement, parameters)

scheduler sqlalchemy.exc.OperationalError: (psycopg2.OperationalError) lost synchronization with server: got message type "r", length 1919509605

scheduler

scheduler [SQL: SELECT task_instance.rendered_map_index, task_instance.task_display_name, task_instance.id, task_instance.task_id, task_instance.dag_id, task_instance.run_id, task_instance.map_index, task_instance.start_date, task_instance.end_date, task_instance.duration, task_instance.state, task_instance.try_id, task_instance.try_number, task_instance.max_tries, task_instance.hostname, task_instance.unixname, task_instance.pool, task_instance.pool_slots, task_instance.queue, task_instance.priority_weight, task_instance.operator, task_instance.custom_operator_name, task_instance.queued_dttm, task_instance.scheduled_dttm, task_instance.queued_by_job_id, task_instance.last_heartbeat_at, task_instance.pid, task_instance.executor, task_instance.executor_config, task_instance.updated_at, task_instance.external_executor_id, task_instance.trigger_id, task_instance.trigger_timeout, task_instance.next_method, task_instance.next_kwargs, task_instance.dag_version_id, dag_run_1.state AS state_1, dag_run_1.id AS id_1, dag_run_1.dag_id AS dag_id_1, dag_run_1.queued_at, dag_run_1.logical_date, dag_run_1.start_date AS start_date_1, dag_run_1.end_date AS end_date_1, dag_run_1.run_id AS run_id_1, dag_run_1.creating_job_id, dag_run_1.run_type, dag_run_1.triggered_by, dag_run_1.conf, dag_run_1.data_interval_start, dag_run_1.data_interval_end, dag_run_1.run_after, dag_run_1.last_scheduling_decision, dag_run_1.log_template_id, dag_run_1.updated_at AS updated_at_1, dag_run_1.clear_number, dag_run_1.backfill_id, dag_run_1.bundle_version

scheduler FROM task_instance JOIN dag_run AS dag_run_1 ON dag_run_1.dag_id = task_instance.dag_id AND dag_run_1.run_id = task_instance.run_id

scheduler WHERE task_instance.dag_id = %(dag_id_2)s AND task_instance.run_id = %(run_id_2)s]

scheduler [parameters: {'dag_id_2': 'kpo_override_resource_negative_case', 'run_id_2': 'scheduled__2025-03-20T17:15:52.320478+00:00'}]

scheduler (Background on this error at: https://sqlalche.me/e/14/e3q8)

scheduler

scheduler-gc Trimming airflow logs to 1 days.

scheduler-gc Trimming airflow logs to 1 days.

scheduler-gc Trimming airflow logs to 1 days.

scheduler-gc Trimming airflow logs to 1 days.

scheduler-gc Trimming airflow logs to 1 days.

```

### What you think should happen instead?

_No response_

### How to reproduce

Try running the DAG below

```
from datetime import datetime

from airflow import DAG
from airflow.providers.cncf.kubernetes.operators.pod import (
    KubernetesPodOperator,
)
from airflow.configuration import conf

namespace = conf.get("kubernetes_executor", "NAMESPACE")

with DAG(
    dag_id="kpo_mapped",
    start_date=datetime(1970, 1, 1),
    schedule=None,
    tags=["taskmap"]
    # render_template_as_native_obj=True,
) as dag:
    KubernetesPodOperator(
        task_id="cowsay_static",
        name="cowsay_statc",
        namespace=namespace,
        image="docker.io/rancher/cowsay",
        cmds=["cowsay"],
        arguments=["moo"],
        log_events_on_failure=True,
    )

    KubernetesPodOperator.partial(
        task_id="cowsay_mapped",
        name="cowsay_mapped",
        namespace=namespace,
        image="docker.io/rancher/cowsay",
        cmds=["cowsay"],
        log_events_on_failure=True,
    ).expand(arguments=[["mooooove"], ["cow"], ["get out the way"]])
```

### Operating System

linux

### Versions of Apache Airflow Providers

_No response_

### Deployment

Official Apache Airflow Helm Chart

### Deployment details

_No response_

### Anything else?

_No response_

### Are you willing to submit PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [x] I agree to follow this project's [Code of Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md)

|

open

|

2025-03-20T17:41:56Z

|

2025-03-24T13:06:03Z

|

https://github.com/apache/airflow/issues/48026

|

[

"kind:bug",

"priority:high",

"area:core",

"area:dynamic-task-mapping",

"provider:openlineage",

"affected_version:3.0.0beta"

] |

vatsrahul1001

| 0

|

blacklanternsecurity/bbot

|

automation

| 1,953

|

Is this a fork of SpiderFoot?

|

**Question**

Question: Is this a fork of SpiderFoot?

|

closed

|

2024-11-11T12:25:48Z

|

2024-11-12T02:34:56Z

|

https://github.com/blacklanternsecurity/bbot/issues/1953

|

[

"enhancement"

] |

izdrail

| 5

|

521xueweihan/HelloGitHub

|

python

| 2,718

|

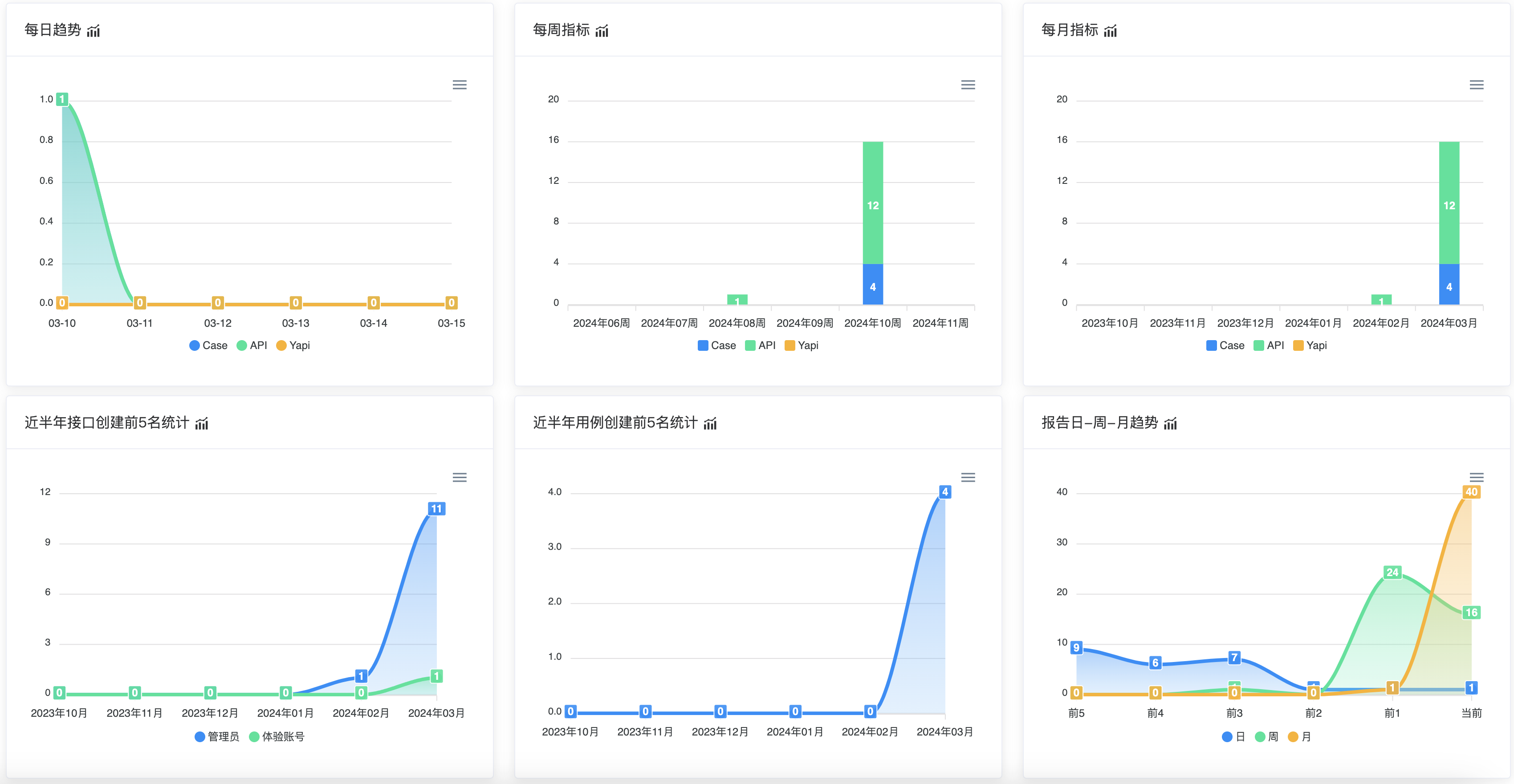

[Open-source self-recommendation] LunarLink - an API automation testing platform that helps test engineers quickly write automated API test cases

|

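- Project URL: https://github.com/tahitimoon/LunarLink

- Category: Python

- Project title: A web-based API automation testing platform for quickly writing and running automated API test cases

- Project description: An API automation testing platform based on HttpRunner + Django + Vue + Element UI, ready for production use.

- Project documentation: https://lunar-link-docs.fun

- Highlights:

- [x] Supports syncing from YAPI (indirectly supporting Swagger, Postman, and HAR), so APIs do not need to be entered manually

- [x] Inherits all features of [Requests](https://requests.readthedocs.io/projects/cn/zh_CN/latest/index.html), making it easy to meet all kinds of HTTP(S) testing needs

- [x] With driver code (debugtalk.py), test scripts can easily sign request parameters, encrypt requests, decrypt responses, and so on

- [x] Supports a complete hook mechanism; pre-request and post-request functions handle token dependencies for a single API and parameter passing across multiple APIs

- [x] Supports recording HTTP(S) requests, so test cases can be generated with a few simple steps

- [x] Crontab-like scheduled tasks, with no extra learning cost

- [x] Test cases support parameterization and data-driven mechanisms

- [x] Test result reports are concise and clear, with detailed statistics and logs

- [x] Test reports can be pushed to Feishu, DingTalk, WeCom, etc.

- Screenshots:

- Planned updates:

Add operation logs, improve the API debugging page, improve the interaction for running test cases in batches, etc.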

|

open

|

2024-03-29T01:53:22Z

|

2024-04-24T12:08:18Z

|

https://github.com/521xueweihan/HelloGitHub/issues/2718

|

[

"Python 项目"

] |

tahitimoon

| 0

|

vimalloc/flask-jwt-extended

|

flask

| 281

|

How to call @admin_required like @jwt_required

|

I followed the docs on [custom_decorators](https://flask-jwt-extended.readthedocs.io/en/latest/custom_decorators.html)

I have a question: how can I use the @admin_required decorator in another file, namespace, etc., the same way as @jwt_required?

Something like this:

```

from flask_jwt_extended import (jwt_required, admin_required)

```

Thanks!

|

closed

|

2019-10-18T10:47:46Z

|

2019-10-18T14:33:34Z

|

https://github.com/vimalloc/flask-jwt-extended/issues/281

|

[] |

tatdatpham

| 1

|

deeppavlov/DeepPavlov

|

nlp

| 1,194

|

Multi-Lingual Sentence Embedding

|

Can someone help me to get an example of multi-lingual sentence embedding? I want to extract the sentence embedding for Hindi using the Multi-lingual sentence embedding model.

Thanks.

|

closed

|

2020-04-30T12:35:52Z

|

2020-05-14T05:07:47Z

|

https://github.com/deeppavlov/DeepPavlov/issues/1194

|

[] |

ashispapu

| 3

|

s3rius/FastAPI-template

|

asyncio

| 172

|

How to use DAO in a websocket router?

|

I have a websocket router like this:

`@router.websocket(
    path="/ws",
)
async def websocket(
    websocket: WebSocket,
):
    await websocket.accept()
    ...`

and I want to use a DAO to save the messages received over the websocket, but if I use

`async def websocket(
    websocket: WebSocket,
    dao: MessageDAO = Depends(),
):`

then when a client connects to the websocket, I get an error:

File "/Users/xxls/Desktop/Project/db/dependencies.py", line 17, in get_db_session

session: AsyncSession = request.app.state.db_session_factory()

└ <taskiq_dependencies.dependency.Dependency object at 0x111e2bd10>

AttributeError: 'Dependency' object has no attribute 'app'

|

closed

|

2023-06-19T15:56:52Z

|

2024-02-08T17:25:19Z

|

https://github.com/s3rius/FastAPI-template/issues/172

|

[] |

eggb4by

| 15

|

ading2210/poe-api

|

graphql

| 35

|

Using custom bots

|

Can it be implemented?

|

closed

|

2023-04-11T12:09:54Z

|

2023-04-12T00:40:45Z

|

https://github.com/ading2210/poe-api/issues/35

|

[

"invalid"

] |

ghost

| 2

|

zappa/Zappa

|

django

| 509

|

[Migrated] Enhancement request: async execution for a non-defined function

|

Originally from: https://github.com/Miserlou/Zappa/issues/1332 by [michelorengo](https://github.com/michelorengo)

## Context

My use case is to be able to execute a function ("task") using the async execution in a different lambda. That lambda has a different code base than the calling lambda. In other words, the function ("task") to be executed is not defined in the calling lambda.

The async execution lets you specify a remote lambda and remote region but the function (to be executed) has to be defined in the code. The request is to be able to simply provide a function name as a string in the form of <module_name>.<function_name>.

This obviously does not work for the decorator. It works only using "zappa.async.run".

## Expected Behavior

The below should work:

`

from zappa.async import run

run(func="my_module.my_function", remote_aws_lambda_function_name="my_remote_lambda", remote_aws_region='us-east-1', kwargs=kwargs)

`

## Actual Behavior

The function/task path is retrieved via inspection (hence requires a function type) by "get_func_task_path"

## Possible Fix

This is a bit hackish but is the least intrusive. I'll make a PR but I'm thinking of:

`
def get_func_task_path(func):
    """
    Format the modular task path for a function via inspection if param is
    a function. If the param is of type string, it will simply return it.
    """
    if isinstance(func, (str, unicode)):
        return func
    module_path = inspect.getmodule(func).__name__
    task_path = '{module_path}.{func_name}'.format(
        module_path=module_path,
        func_name=func.__name__
    )
    return task_path
`
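
The proposed fix refers to `unicode`, which exists only in Python 2. As a rough sketch of the same idea on Python 3 (an illustration under that assumption, not Zappa's actual implementation), a plain `str` check is enough:

```python
import inspect


def get_func_task_path(func):
    """Return 'module.function' for a callable, or the string unchanged."""
    if isinstance(func, str):
        # Already a dotted path such as "my_module.my_function".
        return func
    module_path = inspect.getmodule(func).__name__
    return "{}.{}".format(module_path, func.__name__)


# A string is passed through as-is; a function is resolved by inspection.
path_from_string = get_func_task_path("my_module.my_function")
path_from_function = get_func_task_path(inspect.getmodule)
```

With the string form, the calling lambda never needs to import the target function; only the remote lambda has to be able to resolve the dotted path.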

|

closed

|

2021-02-20T09:43:41Z

|

2024-04-13T16:36:46Z

|

https://github.com/zappa/Zappa/issues/509

|

[

"enhancement",

"feature-request",

"good-idea",

"has-pr",

"no-activity",

"auto-closed"

] |

jneves

| 2

|

AutoViML/AutoViz

|

scikit-learn

| 101

|

ValueError

|

Hi,

I stumbled upon autoviz and failed to run even a minimal example.

1) I had to change 'seaborn' to 'seaborn-v0_8' in AutoViz_Class.py and AutoViz_Utils.py.

2) After that I got the error below, and I don't know how to proceed.

Any suggestions or fixes?

Thanks in advance.

FYI: Here I used Python 3.11.6. I had the same error in Python 3.11.4. I could not even install autoviz in Python 3.12.

`---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

Cell In[10], line 2

1 import pandas as pd

----> 2 from autoviz.AutoViz_Class import AutoViz_Class

4 get_ipython().run_line_magic('matplotlib', 'inline')

File ~\AppData\Roaming\Python\Python311\site-packages\autoviz\__init__.py:3

1 name = "autoviz"

2 from .__version__ import __version__, __holo_version__

----> 3 from .AutoViz_Class import AutoViz_Class

4 from .AutoViz_Class import data_cleaning_suggestions

5 from .AutoViz_Class import FixDQ

File ~\AppData\Roaming\Python\Python311\site-packages\autoviz\AutoViz_Class.py:61

59 from sklearn.model_selection import train_test_split

60 ##########################################################################################

---> 61 from autoviz.AutoViz_Holo import AutoViz_Holo

62 from autoviz.AutoViz_Utils import save_image_data, save_html_data, analyze_problem_type, draw_pivot_tables, draw_scatters

63 from autoviz.AutoViz_Utils import draw_pair_scatters, plot_fast_average_num_by_cat, draw_barplots, draw_heatmap

File ~\AppData\Roaming\Python\Python311\site-packages\autoviz\AutoViz_Holo.py:5

3 import pandas as pd

4 ############# Import from autoviz.AutoViz_Class the following libraries #######

----> 5 from autoviz.AutoViz_Utils import *

6 ############## make sure you use: conda install -c pyviz hvplot ###############

7 import hvplot.pandas # noqa

File ~\AppData\Roaming\Python\Python311\site-packages\autoviz\AutoViz_Utils.py:61

59 from sklearn.model_selection import train_test_split

60 ######## This is where we import HoloViews related libraries #########

---> 61 import hvplot.pandas

62 import holoviews as hv

63 from holoviews import opts

File ~\AppData\Roaming\Python\Python311\site-packages\hvplot\__init__.py:12

8 import holoviews as _hv

10 from holoviews import Store

---> 12 from .converter import HoloViewsConverter

13 from .util import get_ipy

14 from .utilities import save, show # noqa

File ~\AppData\Roaming\Python\Python311\site-packages\hvplot\converter.py:25

18 from holoviews.core.util import max_range, basestring

19 from holoviews.element import (

20 Curve, Scatter, Area, Bars, BoxWhisker, Dataset, Distribution,

21 Table, HeatMap, Image, HexTiles, QuadMesh, Bivariate, Histogram,

22 Violin, Contours, Polygons, Points, Path, Labels, RGB, ErrorBars,

23 VectorField, Rectangles, Segments

24 )

---> 25 from holoviews.plotting.bokeh import OverlayPlot, colormap_generator

26 from holoviews.plotting.util import process_cmap

27 from holoviews.operation import histogram

File ~\AppData\Roaming\Python\Python311\site-packages\holoviews\plotting\bokeh\__init__.py:40

38 from .graphs import GraphPlot, NodePlot, TriMeshPlot, ChordPlot

39 from .heatmap import HeatMapPlot, RadialHeatMapPlot

---> 40 from .hex_tiles import HexTilesPlot

41 from .path import PathPlot, PolygonPlot, ContourPlot

42 from .plot import GridPlot, LayoutPlot, AdjointLayoutPlot

File ~\AppData\Roaming\Python\Python311\site-packages\holoviews\plotting\bokeh\hex_tiles.py:22

18 from .selection import BokehOverlaySelectionDisplay

19 from .styles import base_properties, line_properties, fill_properties

---> 22 class hex_binning(Operation):

23 """

24 Applies hex binning by computing aggregates on a hexagonal grid.

25

26 Should not be user facing as the returned element is not directly

27 useable.

28 """

30 aggregator = param.ClassSelector(

31 default=np.size, class_=(types.FunctionType, tuple), doc="""

32 Aggregation function or dimension transform used to compute bin

33 values. Defaults to np.size to count the number of values

34 in each bin.""")

File ~\AppData\Roaming\Python\Python311\site-packages\holoviews\plotting\bokeh\hex_tiles.py:30, in hex_binning()

22 class hex_binning(Operation):

23 """

24 Applies hex binning by computing aggregates on a hexagonal grid.

25

26 Should not be user facing as the returned element is not directly

27 useable.

28 """

---> 30 aggregator = param.ClassSelector(

31 default=np.size, class_=(types.FunctionType, tuple), doc="""

32 Aggregation function or dimension transform used to compute bin

33 values. Defaults to np.size to count the number of values

34 in each bin.""")

36 gridsize = param.ClassSelector(default=50, class_=(int, tuple))

38 invert_axes = param.Boolean(default=False)

File ~\AppData\Roaming\Python\Python311\site-packages\param\__init__.py:1367, in ClassSelector.__init__(self, class_, default, instantiate, is_instance, **params)

1365 self.is_instance = is_instance

1366 super(ClassSelector,self).__init__(default=default,instantiate=instantiate,**params)

-> 1367 self._validate(default)

File ~\AppData\Roaming\Python\Python311\site-packages\param\__init__.py:1371, in ClassSelector._validate(self, val)

1369 def _validate(self, val):

1370 super(ClassSelector, self)._validate(val)

-> 1371 self._validate_class_(val, self.class_, self.is_instance)

File ~\AppData\Roaming\Python\Python311\site-packages\param\__init__.py:1383, in ClassSelector._validate_class_(self, val, class_, is_instance)

1381 if is_instance:

1382 if not (isinstance(val, class_)):

-> 1383 raise ValueError(

1384 "%s parameter %r value must be an instance of %s, not %r." %

1385 (param_cls, self.name, class_name, val))

1386 else:

1387 if not (issubclass(val, class_)):

**ValueError: ClassSelector parameter None value must be an instance of (function, tuple), not <function size at 0x0000019E7F3B3DF0>**.`

|

closed

|

2023-11-11T12:10:51Z

|

2023-12-24T12:14:44Z

|

https://github.com/AutoViML/AutoViz/issues/101

|

[] |

DirtyStreetCoder

| 5

|

sqlalchemy/alembic

|

sqlalchemy

| 611

|

Allow to run statement before creating `alembic_version` table in Postgres

|

I need to run `SET ROLE writer_role;` before creating a table in Postgres, because the user role I use to login does not have `CREATE` permission in the schema. And it is not possible to login with `writer_role`.

This causes the autogeneration of the initial revision (`alembic revision --autogenerate -m "initial"`) to fail when it tries to create the `alembic_version` table, and the initial migration file is not generated.

The output of the command mentioned above is:

```

INFO [alembic.runtime.migration] Context impl PostgresqlImpl.

INFO [alembic.runtime.migration] Will assume transactional DDL.

Traceback (most recent call last):

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/engine/base.py", line 1249, in _execute_context

cursor, statement, parameters, context

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/engine/default.py", line 580, in do_execute

cursor.execute(statement, parameters)

psycopg2.ProgrammingError: permission denied for schema public

LINE 2: CREATE TABLE alembic_version (

^

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/usr/local/bin/alembic", line 11, in <module>

sys.exit(main())

File "/usr/local/lib/python3.6/dist-packages/alembic/config.py", line 573, in main

CommandLine(prog=prog).main(argv=argv)

File "/usr/local/lib/python3.6/dist-packages/alembic/config.py", line 567, in main

self.run_cmd(cfg, options)

File "/usr/local/lib/python3.6/dist-packages/alembic/config.py", line 547, in run_cmd

**dict((k, getattr(options, k, None)) for k in kwarg)

File "/usr/local/lib/python3.6/dist-packages/alembic/command.py", line 214, in revision

script_directory.run_env()

File "/usr/local/lib/python3.6/dist-packages/alembic/script/base.py", line 489, in run_env

util.load_python_file(self.dir, "env.py")

File "/usr/local/lib/python3.6/dist-packages/alembic/util/pyfiles.py", line 98, in load_python_file

module = load_module_py(module_id, path)

File "/usr/local/lib/python3.6/dist-packages/alembic/util/compat.py", line 173, in load_module_py

spec.loader.exec_module(module)

File "<frozen importlib._bootstrap_external>", line 678, in exec_module

File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed

File "alembic/env.py", line 89, in <module>

run_migrations_online()

File "alembic/env.py", line 83, in run_migrations_online

context.run_migrations()

File "<string>", line 8, in run_migrations

File "/usr/local/lib/python3.6/dist-packages/alembic/runtime/environment.py", line 846, in run_migrations

self.get_context().run_migrations(**kw)

File "/usr/local/lib/python3.6/dist-packages/alembic/runtime/migration.py", line 499, in run_migrations

self._ensure_version_table()

File "/usr/local/lib/python3.6/dist-packages/alembic/runtime/migration.py", line 440, in _ensure_version_table

self._version.create(self.connection, checkfirst=True)

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/sql/schema.py", line 870, in create

bind._run_visitor(ddl.SchemaGenerator, self, checkfirst=checkfirst)

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/engine/base.py", line 1615, in _run_visitor

visitorcallable(self.dialect, self, **kwargs).traverse_single(element)

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/sql/visitors.py", line 138, in traverse_single

return meth(obj, **kw)

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/sql/ddl.py", line 826, in visit_table

include_foreign_key_constraints,

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/engine/base.py", line 988, in execute

return meth(self, multiparams, params)

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/sql/ddl.py", line 72, in _execute_on_connection

return connection._execute_ddl(self, multiparams, params)

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/engine/base.py", line 1050, in _execute_ddl

compiled,

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/engine/base.py", line 1253, in _execute_context

e, statement, parameters, cursor, context

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/engine/base.py", line 1473, in _handle_dbapi_exception

util.raise_from_cause(sqlalchemy_exception, exc_info)

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/util/compat.py", line 398, in raise_from_cause

reraise(type(exception), exception, tb=exc_tb, cause=cause)

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/util/compat.py", line 152, in reraise

raise value.with_traceback(tb)

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/engine/base.py", line 1249, in _execute_context

cursor, statement, parameters, context

File "/usr/local/lib/python3.6/dist-packages/sqlalchemy/engine/default.py", line 580, in do_execute

cursor.execute(statement, parameters)

sqlalchemy.exc.ProgrammingError: (psycopg2.ProgrammingError) permission denied for schema public

LINE 2: CREATE TABLE alembic_version (

^

[SQL:

CREATE TABLE alembic_version (

version_num VARCHAR(32) NOT NULL,

CONSTRAINT alembic_version_pkc PRIMARY KEY (version_num)

)

]

(Background on this error at: http://sqlalche.me/e/f405)

```
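
One possible workaround, sketched under assumptions rather than confirmed by Alembic itself: issue the `SET ROLE` statement on the migration connection inside `env.py` before Alembic configures its context, so the role is already active when `alembic_version` is created. The helper names `set_role_statement` and `run_migrations_with_role` are hypothetical. `connection` is assumed to be a SQLAlchemy `Connection` (1.4 or newer, where `exec_driver_sql` exists; on 1.3, as in the traceback above, `connection.execute("SET ROLE ...")` would be the equivalent), and `context` is assumed to be `alembic.context` as imported in `env.py`.

```python
import re

# Only plain identifiers are accepted, because the role name is
# interpolated into the SQL text rather than bound as a parameter.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def set_role_statement(role):
    """Build a SET ROLE statement for a plain role identifier."""
    if not _IDENTIFIER.match(role):
        raise ValueError("unsafe role name: %r" % (role,))
    return "SET ROLE %s" % role


def run_migrations_with_role(connection, context, role, target_metadata=None):
    """Switch role on the connection, then run migrations on that same connection.

    Hypothetical helper meant to be called from run_migrations_online()
    in env.py, in place of the usual configure/run sequence.
    """
    # Runs before Alembic touches the database, so the version table
    # is created under the switched role.
    connection.exec_driver_sql(set_role_statement(role))

    context.configure(connection=connection, target_metadata=target_metadata)
    with context.begin_transaction():
        context.run_migrations()
```

In `env.py` this would be called as `run_migrations_with_role(connection, context, "writer_role", target_metadata)` inside the `with connectable.connect() as connection:` block. Whether the role switch persists for the whole run depends on the connection and transaction setup, so this is a starting point to verify, not a guaranteed fix.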

|

closed

|

2019-10-22T11:14:58Z

|

2023-10-18T17:58:59Z

|

https://github.com/sqlalchemy/alembic/issues/611

|

[

"question"

] |

notrev

| 7

|

PrefectHQ/prefect

|

automation

| 16,810

|

Automation not working with basic auth enabled

|

### Bug summary

I have set up automation for canceling long-running jobs and sending out notifications.

I noticed that they no longer work as expected.

When a condition for cancellation is met (a flow run running for over 5 minutes), the flow is not cancelled, and I see an error message in the log:

`| WARNING | prefect.server.events.actions - Action failed: "Unexpected status from 'cancel-flow-run' action: 403"`

When I then cancel the flow manually, it should trigger the notification automation. This doesn't work either.

The following message is shown:

`| WARNING | prefect.server.events.actions - Action failed: "Unexpected status from 'send-notification' action: 401"`

I traced this error back to when I enabled basic authentication, so I assume it has something to do with that.

### Version info

```Text

Version: 3.1.13

API version: 0.8.4

Python version: 3.12.8

Git commit: 16e85ce3

Built: Fri, Jan 17, 2025 8:46 AM

OS/Arch: linux/x86_64

Profile: ephemeral

Server type: server

Pydantic version: 2.10.5

```

### Additional context

This is the only line logged on the server even with DEBUG_MODE enabled.

The server is hosted on Azure in a Docker container.

|

closed

|

2025-01-22T10:28:16Z

|

2025-01-23T19:13:51Z

|

https://github.com/PrefectHQ/prefect/issues/16810

|

[

"bug"

] |

dominik-eai

| 1

|

seleniumbase/SeleniumBase

|

web-scraping

| 3,434

|

Headless Mode refactoring for the removal of old Headless Mode

|

## Headless Mode refactoring for the removal of old Headless Mode

As detailed in https://developer.chrome.com/blog/removing-headless-old-from-chrome, Chrome's old headless mode has been removed in Chrome 132. The SeleniumBase `headless1` option mapped to it (`--headless=old`). Before Chrome's newer headless mode appeared, it was just `headless`. After the newer `--headless=new` option appeared, the SeleniumBase `headless2` option mapped to it.

Now that the old headless mode is gone, a few changes will occur to maintain backwards compatibility:

* If the Chrome version is 132 (or newer), `headless1` will automatically remap to `--headless` / `--headless=new`.

* If the Chrome version is less than 132, then `headless1` will continue mapping to `--headless=old`.

So in summary, on Chrome 132 or newer (once this ticket is complete), `headless1`, `headless`, and `headless2` are all equivalent: each maps to the same (new) headless mode on Chrome.
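
The remapping rules above can be sketched as a small pure function. This is an illustration only, not SeleniumBase's actual implementation: the function name `chrome_headless_arg` is hypothetical, and returning `--headless=new` (rather than plain `--headless`) for the remapped `headless1` case is an assumption, since the text allows either.

```python
# Chrome major version in which the old headless mode was removed.
OLD_HEADLESS_REMOVED_IN = 132


def chrome_headless_arg(option, chrome_major):
    """Return the Chrome argument for a SeleniumBase-style headless option.

    Hypothetical helper: `option` is one of "headless", "headless1",
    or "headless2"; `chrome_major` is the browser's major version.
    """
    if option not in ("headless", "headless1", "headless2"):
        raise ValueError("unknown headless option: %r" % (option,))

    if option == "headless1":
        # Old mode still exists before Chrome 132; afterwards it is
        # remapped to the new mode for backwards compatibility.
        if chrome_major < OLD_HEADLESS_REMOVED_IN:
            return "--headless=old"
        return "--headless=new"

    if option == "headless2":
        return "--headless=new"

    # Plain "headless": let Chrome pick its default headless mode.
    return "--headless"
```

For example, `chrome_headless_arg("headless1", 131)` yields `--headless=old`, while the same call with `132` yields `--headless=new`.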

In the meantime, attempting to use the old headless mode on Chrome 132 (or newer) causes the following error to occur:

``selenium.common.exceptions.SessionNotCreatedException: Message: session not created: probably user data directory is already in use, please specify a unique value for --user-data-dir argument, or don't use --user-data-dir``