| repo_name (string, length 9 to 75) | topic (string, 30 classes) | issue_number (int64, 1 to 203k) | title (string, length 1 to 976) | body (string, length 0 to 254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, length 38 to 105) | labels (list, length 0 to 9) | user_login (string, length 1 to 39) | comments_count (int64, 0 to 452) |
|---|---|---|---|---|---|---|---|---|---|---|---|

ckan/ckan

|

api

| 8,333

|

Internal Server Error sometimes occurs on the CKAN UI while adding a resource

|

## CKAN version

2.10.1

I set up CKAN with Docker by following https://github.com/ckan/ckan-docker

## Describe the bug

While adding resources through the CKAN UI, a 500 Internal Server Error is sometimes displayed.

### Steps to reproduce

1. Log in to the CKAN UI.

2. Add an organization and a dataset.

3. Try to add a resource file (CSV) to the created dataset multiple times.

### Error in CKAN Logs

```
Traceback (most recent call last):
  File "/usr/lib/python3.10/site-packages/requests/adapters.py", line 489, in send
    resp = conn.urlopen(
  File "/usr/lib/python3.10/site-packages/urllib3/connectionpool.py", line 785, in urlopen
    retries = retries.increment(
  File "/usr/lib/python3.10/site-packages/urllib3/util/retry.py", line 550, in increment
    raise six.reraise(type(error), error, _stacktrace)
  File "/usr/lib/python3.10/site-packages/urllib3/packages/six.py", line 770, in reraise
    raise value
  File "/usr/lib/python3.10/site-packages/urllib3/connectionpool.py", line 703, in urlopen
    httplib_response = self._make_request(
  File "/usr/lib/python3.10/site-packages/urllib3/connectionpool.py", line 451, in _make_request
    self._raise_timeout(err=e, url=url, timeout_value=read_timeout)
  File "/usr/lib/python3.10/site-packages/urllib3/connectionpool.py", line 340, in _raise_timeout
    raise ReadTimeoutError(
urllib3.exceptions.ReadTimeoutError: HTTPConnectionPool(host='datapusher', port=8800): Read timed out. (read timeout=5)
```

### Error in Datapusher Logs

```

Tue Jul 9 07:54:32 2024 - uwsgi_response_writev_headers_and_body_do(): Broken pipe [core/writer.c line 306] during POST /job (192.168.0.3)

OSError: write error

```

### Expected behavior

The 500 Internal Server Error should not be displayed on the UI, and the connection read timeout should not occur.

### Additional details

I have observed a read timeout error in the CKAN logs while the job is being submitted to DataPusher. I tried increasing the `ckan.request.timeout` value in the ckan.ini file from 5 to 10 and then to 15. As a result, the issue occurs less frequently.

Does this issue still exist, or is there a solution for it?
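
The logs suggest that CKAN gives up on the `POST /job` request to DataPusher once the read timeout expires, which is consistent with DataPusher then hitting a broken pipe when it tries to answer; raising the timeout only makes this less likely. If you control the code that makes such a call, one common mitigation is to retry on read timeouts with a growing timeout. The sketch below is a generic, stdlib-only illustration under that assumption, not CKAN's datapusher code; `send` is a hypothetical callable that performs the request with the given timeout.

```python
import socket
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def call_with_retries(
    send: Callable[[float], T],
    timeout: float = 5.0,
    attempts: int = 3,
    backoff: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call send(timeout); on a read timeout, wait and retry with a longer timeout.

    `send` is a hypothetical callable (e.g. wrapping the POST /job request)
    that raises TimeoutError / socket.timeout when the read times out.
    """
    last_error: Optional[BaseException] = None
    for attempt in range(attempts):
        try:
            return send(timeout)
        except (TimeoutError, socket.timeout) as exc:
            last_error = exc
            if attempt < attempts - 1:
                sleep(backoff ** attempt)  # wait 1 s, 2 s, 4 s, ... between tries
                timeout *= backoff  # give the slow service more time next try
    if last_error is None:
        raise ValueError("attempts must be at least 1")
    raise last_error
```

With `requests`, the exception to catch would be `requests.exceptions.ReadTimeout` instead. Note that retrying a non-idempotent POST can submit the same job twice if the first request was in fact processed, so the receiving side should tolerate duplicates.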

|

open

|

2024-07-09T09:12:47Z

|

2024-07-10T11:52:37Z

|

https://github.com/ckan/ckan/issues/8333

|

[] |

kumardeepak5

| 2

|

deepspeedai/DeepSpeed

|

deep-learning

| 6,747

|

Model Checkpoint docs are incorrectly rendered on deepspeed.readthedocs.io

|

The top Google search hit for most DeepSpeed documentation searches is https://deepspeed.readthedocs.io/ or one of its sub-pages. I assume this export is maintained, or at least enabled, by the DeepSpeed team, hence this bug report.

The page on model checkpointing at https://deepspeed.readthedocs.io/en/latest/model-checkpointing.html#model-checkpointing shows empty sections for "Loading Training Checkpoints" and "Saving Training Checkpoints", which I have found confusing on a few occasions. The `.rst` file in the repo, however, has an `autofunction` declaration inside these sections, e.g. https://github.com/microsoft/DeepSpeed/blob/b692cdea479fba8201584054d654f639e925a265/docs/code-docs/source/model-checkpointing.rst

Evidently, the `autofunction` directives are not being rendered correctly by the docs export.
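
Sphinx's autodoc has to import each documented object while the docs are built; when that import fails, it logs a warning and the directive renders nothing, which would match the empty sections. That this is the cause here is an assumption, not something the issue confirms. A minimal stdlib sketch for checking locally whether a dotted target resolves (`resolve_dotted` is a hypothetical helper; take the real dotted path from the `.rst` file):

```python
import importlib
from typing import Any, Optional


def resolve_dotted(path: str) -> Optional[Any]:
    """Import the longest importable module prefix of `path`, then walk attributes.

    Returns None if no prefix imports or an attribute is missing, roughly the
    situations in which autodoc would have nothing to render.
    """
    parts = path.split(".")
    for i in range(len(parts), 0, -1):
        module_name = ".".join(parts[:i])
        try:
            obj = importlib.import_module(module_name)
        except ImportError:  # includes ModuleNotFoundError
            continue
        try:
            for attr in parts[i:]:
                obj = getattr(obj, attr)
        except AttributeError:
            return None
        return obj
    return None
```

Running this in the same environment the docs build uses (rather than a full development install) is what would reveal a missing dependency.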

|

open

|

2024-11-12T19:52:24Z

|

2024-11-22T21:18:29Z

|

https://github.com/deepspeedai/DeepSpeed/issues/6747

|

[

"bug",

"documentation"

] |

akeshet

| 3

|

holoviz/colorcet

|

matplotlib

| 3

|

license of color scales

|

Hi, I think these color scales are great and would love to have them available to the Julia community. What license are they released under? I would like to copy the scales to the Julia package PlotUtils, which is under the MIT license. A reference to this repo would appear in the source file and in the documentation (at juliaplots.github.io).

Thanks!

|

closed

|

2017-03-13T11:56:14Z

|

2018-08-24T13:40:02Z

|

https://github.com/holoviz/colorcet/issues/3

|

[] |

mkborregaard

| 3

|

huggingface/datasets

|

pytorch

| 7,213

|

Add with_rank to Dataset.from_generator

|

### Feature request

Add `with_rank` to `Dataset.from_generator` similar to `Dataset.map` and `Dataset.filter`.

### Motivation

As with `Dataset.map` and `Dataset.filter`, this is useful when creating cache files using multiple GPUs, where the rank can be used to select GPU IDs. For now, the rank can be passed in the `gen_kwargs` argument; however, this in turn includes the rank when computing the fingerprint.

### Your contribution

Added #7199, which passes the rank based on the `job_id` set by `num_proc`.
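
A stdlib-only sketch of the requested behaviour, under stated assumptions: list-valued kwargs are split into `num_proc` contiguous shards, each shard's generator receives `rank` equal to its job index, and the fingerprint is computed from the user-facing `gen_kwargs` only. `shard_gen_kwargs`, `fingerprint` and `run_with_rank` are hypothetical helpers, not the `datasets` implementation, and the shards run sequentially here instead of in worker processes.

```python
import hashlib
import json
from typing import Any, Callable, Dict, Iterable, List, Tuple


def shard_gen_kwargs(gen_kwargs: Dict[str, Any], num_proc: int) -> List[Dict[str, Any]]:
    """Split every list-valued kwarg into num_proc contiguous shards."""
    lists = {k: v for k, v in gen_kwargs.items() if isinstance(v, list)}
    if not lists:
        raise ValueError("at least one list-valued gen_kwarg is needed to shard")
    n = len(next(iter(lists.values())))
    if any(len(v) != n for v in lists.values()):
        raise ValueError("all list-valued gen_kwargs must have the same length")
    shards = []
    for rank in range(num_proc):
        start, end = rank * n // num_proc, (rank + 1) * n // num_proc
        shard = dict(gen_kwargs)
        for key, values in lists.items():
            shard[key] = values[start:end]
        shards.append(shard)
    return shards


def fingerprint(gen_kwargs: Dict[str, Any]) -> str:
    """Hash the user-facing kwargs only; the rank is deliberately left out."""
    payload = json.dumps(gen_kwargs, sort_keys=True, default=repr)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def run_with_rank(
    generator: Callable[..., Iterable[Any]], gen_kwargs: Dict[str, Any], num_proc: int
) -> Tuple[str, List[Any]]:
    """Run the generator once per shard, passing rank=<job index>."""
    fp = fingerprint(gen_kwargs)
    rows: List[Any] = []
    for rank, shard in enumerate(shard_gen_kwargs(gen_kwargs, num_proc)):
        rows.extend(generator(**shard, rank=rank))
    return fp, rows
```

Because the rank is injected after fingerprinting, the same `gen_kwargs` yield the same cache key regardless of how many GPUs are used.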

|

open

|

2024-10-10T12:15:29Z

|

2024-10-10T12:17:11Z

|

https://github.com/huggingface/datasets/issues/7213

|

[

"enhancement"

] |

muthissar

| 0

|

ClimbsRocks/auto_ml

|

scikit-learn

| 438

|

[Parallel(n_jobs=-1)]: Using backend LokyBackend with 2 concurrent workers. BrokenProcessPool: A task has failed to un-serialize. Please ensure that the arguments of the function are all picklable.

|

open

|

2021-02-11T07:48:07Z

|

2023-10-13T02:28:14Z

|

https://github.com/ClimbsRocks/auto_ml/issues/438

|

[] |

elnurmmmdv

| 1

|

|

horovod/horovod

|

pytorch

| 3,354

|

Trying to get in touch regarding a security issue

|

Hey there!

I belong to an open source security research community, and a member (@srikanthprathi) has found an issue, but doesn’t know the best way to disclose it.

If not a hassle, might you kindly add a `SECURITY.md` file with an email, or another contact method? GitHub [recommends](https://docs.github.com/en/code-security/getting-started/adding-a-security-policy-to-your-repository) this best practice to ensure security issues are responsibly disclosed, and it would serve as a simple instruction for security researchers in the future.

Thank you for your consideration, and I look forward to hearing from you!

(cc @huntr-helper)

|

closed

|

2022-01-09T00:13:04Z

|

2022-01-19T19:25:36Z

|

https://github.com/horovod/horovod/issues/3354

|

[] |

JamieSlome

| 3

|

coqui-ai/TTS

|

python

| 2,744

|

[Bug] Config for bark cannot be found

|

### Describe the bug

Attempting to use Bark through the TTS API (`tts = TTS("tts_models/multilingual/multi-dataset/bark")`) throws the following error:

```
Traceback (most recent call last):
  File "c:\Users\timeb\Desktop\BarkAI\Clone and Product TTS.py", line 7, in <module>
    tts = TTS("tts_models/multilingual/multi-dataset/bark")
  File "C:\Users\timeb\AppData\Local\Programs\Python\Python39\lib\site-packages\TTS\api.py", line 289, in __init__
    self.load_tts_model_by_name(model_name, gpu)
  File "C:\Users\timeb\AppData\Local\Programs\Python\Python39\lib\site-packages\TTS\api.py", line 391, in load_tts_model_by_name
    self.synthesizer = Synthesizer(
  File "C:\Users\timeb\AppData\Local\Programs\Python\Python39\lib\site-packages\TTS\utils\synthesizer.py", line 107, in __init__
    self._load_tts_from_dir(model_dir, use_cuda)
  File "C:\Users\timeb\AppData\Local\Programs\Python\Python39\lib\site-packages\TTS\utils\synthesizer.py", line 159, in _load_tts_from_dir
    config = load_config(os.path.join(model_dir, "config.json"))
  File "C:\Users\timeb\AppData\Local\Programs\Python\Python39\lib\site-packages\TTS\config\__init__.py", line 94, in load_config
    config_class = register_config(model_name.lower())
  File "C:\Users\timeb\AppData\Local\Programs\Python\Python39\lib\site-packages\TTS\config\__init__.py", line 47, in register_config
    raise ModuleNotFoundError(f" [!] Config for {model_name} cannot be found.")
ModuleNotFoundError: [!] Config for bark cannot be found.
```

I know of one other bug report on this issue, #2722. However, that was supposedly fixed in 0.15.2.
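
The last frames of the traceback show `load_config` passing the model name from `config.json` to `register_config`, which raises when it finds no matching config. A hypothetical stdlib sketch of that kind of name-based lookup, useful for checking whether the installed package actually ships a config module for `bark` (the package list and the `_config` suffix are assumptions, not verified TTS internals):

```python
import importlib.util
from typing import Iterable


def find_config_module(model_name: str, packages: Iterable[str], suffix: str = "_config") -> str:
    """Return the first existing '<package>.<model_name><suffix>' module path.

    Raises ModuleNotFoundError when no candidate exists, mirroring the
    message seen in the traceback.
    """
    module_name = model_name.lower() + suffix
    for package in packages:
        candidate = f"{package}.{module_name}"
        try:
            if importlib.util.find_spec(candidate) is not None:
                return candidate
        except ModuleNotFoundError:
            continue  # the parent package itself is not installed
    raise ModuleNotFoundError(f" [!] Config for {model_name} cannot be found.")
```

For example, `find_config_module("bark", ["TTS.tts.configs"])` (package path assumed) raising here would point to a stale or incomplete TTS install rather than to the calling code.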

### To Reproduce

Run the following example code:

```python
from TTS.api import TTS

# Load the model to GPU
# Bark is really slow on CPU, so we recommend using GPU.
tts = TTS("tts_models/multilingual/multi-dataset/bark")

# Cloning a new speaker
# This expects to find a mp3 or wav file like `bark_voices/new_speaker/speaker.wav`
# It computes the cloning values and stores in `bark_voices/new_speaker/speaker.npz`
tts.tts_to_file(text="Hello, how are you?",
                file_path="output.wav",
                voice_dir="bark_voices/",
                speaker="anna")

# When you run it again it uses the stored values to generate the voice.
tts.tts_to_file(text="Hello, how are you?",
                file_path="output.wav",
                voice_dir="bark_voices/",
                speaker="anna")
```

### Expected behavior

Produce output.wav using Bark.

### Logs

_No response_

### Environment

```shell
- OS: Windows
- Python Version: 3.9.13
- TTS Version: 0.15.5
- Using CPU Only
```

### Additional context

_No response_

|

closed

|

2023-07-06T23:15:51Z

|

2023-07-11T19:21:48Z

|

https://github.com/coqui-ai/TTS/issues/2744

|

[

"bug"

] |

JLPiper

| 5

|

fastapi/sqlmodel

|

fastapi

| 346

|

SQLAlchemy-Continuum compatibility

|

### First Check

- [X] I added a very descriptive title to this issue.

- [X] I used the GitHub search to find a similar issue and didn't find it.

- [X] I searched the SQLModel documentation, with the integrated search.

- [X] I already searched in Google "How to X in SQLModel" and didn't find any information.

- [X] I already read and followed all the tutorial in the docs and didn't find an answer.

- [X] I already checked if it is not related to SQLModel but to [Pydantic](https://github.com/samuelcolvin/pydantic).

- [X] I already checked if it is not related to SQLModel but to [SQLAlchemy](https://github.com/sqlalchemy/sqlalchemy).

### Commit to Help

- [X] I commit to help with one of those options 👆

### Example Code

```python
from typing import Optional

from sqlalchemy_continuum import make_versioned
from sqlmodel import Field, Session, SQLModel, create_engine
from sqlmodel.main import default_registry

# setattr(SQLModel, 'registry', default_registry)

make_versioned(user_cls=None)


class Hero(SQLModel, table=True):
    __versioned__ = {}

    id: Optional[int] = Field(default=None, primary_key=True)
    name: str
    secret_name: str
    age: Optional[int] = None


hero_1 = Hero(name="Deadpond", secret_name="Dive Wilson")

engine = create_engine("sqlite:///database.db")

SQLModel.metadata.create_all(engine)

with Session(engine) as session:
    session.add(hero_1)
    session.commit()
    session.refresh(hero_1)
    print(hero_1)
```

### Description

A very [basic setup](https://sqlalchemy-continuum.readthedocs.io/en/latest/intro.html#installation) of SQLAlchemy-Continuum doesn't work with SQLModel. The following error is raised (see [here](https://github.com/kvesteri/sqlalchemy-continuum/blob/1.3.12/sqlalchemy_continuum/factory.py#L10-L13)):

```

AttributeError: type object 'SQLModel' has no attribute '_decl_class_registry'

```

The `SQLModel` class lacks an attribute that is expected on SQLAlchemy's declarative `Base` (`registry` for SQLAlchemy >= 1.4). For debugging purposes, the attribute can be populated manually (uncomment `# setattr(SQLModel, 'registry', default_registry)` in the example code), which gets past this error to the next one:

```
<skipped>
  File ".../.venv/lib/python3.10/site-packages/sqlmodel/main.py", line 277, in __new__
    new_cls = super().__new__(cls, name, bases, dict_used, **config_kwargs)
  File "pydantic/main.py", line 228, in pydantic.main.ModelMetaclass.__new__
  File "pydantic/fields.py", line 488, in pydantic.fields.ModelField.infer
  File "pydantic/fields.py", line 419, in pydantic.fields.ModelField.__init__
  File "pydantic/fields.py", line 528, in pydantic.fields.ModelField.prepare
  File "pydantic/fields.py", line 552, in pydantic.fields.ModelField._set_default_and_type
  File "pydantic/fields.py", line 422, in pydantic.fields.ModelField.get_default
  File "pydantic/utils.py", line 652, in pydantic.utils.smart_deepcopy
  File ".../.venv/lib/python3.10/site-packages/sqlalchemy/sql/elements.py", line 582, in __bool__
    raise TypeError("Boolean value of this clause is not defined")
TypeError: Boolean value of this clause is not defined
```

There seems to be an incompatibility with SQLAlchemy's expected behaviour, because this error is raised right after SQLModel's `__new__` method is called.
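
The second traceback ends with `pydantic.utils.smart_deepcopy` reaching SQLAlchemy's `__bool__`, which means something evaluated the truthiness of a clause element while a field default was being copied. The stdlib-only sketch below reproduces that mechanism with a hypothetical `ClauseLike` stand-in; `copy_default_truthy` and `copy_default_safe` are illustrative names, not pydantic's code, and which attribute ends up holding a clause as its default is not established here.

```python
import copy
from typing import Any


class ClauseLike:
    """Stand-in for a SQLAlchemy clause element: its truthiness is undefined."""

    def __bool__(self) -> bool:
        raise TypeError("Boolean value of this clause is not defined")


def copy_default_truthy(obj: Any) -> Any:
    # `not obj` calls obj.__bool__(), which is what fails for clause elements.
    if not obj:
        return obj
    return copy.deepcopy(obj)


def copy_default_safe(obj: Any) -> Any:
    # Identity and type checks never call __bool__, so clause-like objects pass.
    if obj is None or isinstance(obj, (bool, int, float, str, bytes)):
        return obj
    return copy.deepcopy(obj)
```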

### Operating System

macOS

### Operating System Details

_No response_

### SQLModel Version

0.0.6

### Python Version

3.10.2

### Additional Context

_No response_

|

open

|

2022-05-19T13:34:36Z

|

2024-12-05T19:03:11Z

|

https://github.com/fastapi/sqlmodel/issues/346

|

[

"question"

] |

petyunchik

| 9

|

flasgger/flasgger

|

flask

| 35

|

Change "no content" response from 204 status

|

Hi,

Is it possible to change the response body when the status code is 204?

The only way I found was to change the JavaScript code.

|

closed

|

2016-10-14T19:27:35Z

|

2017-03-24T20:19:49Z

|

https://github.com/flasgger/flasgger/issues/35

|

[

"enhancement",

"help wanted"

] |

andryw

| 1

|

paulbrodersen/netgraph

|

matplotlib

| 56

|

Hyperlink or selectable text from annotations?

|

Hey,

I'm currently using the library on a project and have a use case for linking to a URL based on node attributes. I have the annotation showing on node click, but realized that the link is neither selectable nor clickable. Would it be possible to support either of those (hyperlinks would be preferred, but selectable text is good too)?

Thanks

|

closed

|

2022-12-16T04:13:00Z

|

2022-12-23T20:43:33Z

|

https://github.com/paulbrodersen/netgraph/issues/56

|

[] |

a-arbabian

| 6

|

marshmallow-code/flask-smorest

|

rest-api

| 491

|

grafana + Prometheus + smorest integration

|

How could we integrate Grafana and Prometheus with flask-smorest? Is there an easy solution for it, or is there something else to monitor flask-smorest? Prometheus seems to be well integrated with Flask-RESTful.
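
Since flask-smorest apps are Flask (WSGI) apps, one framework-agnostic option is a WSGI middleware that counts responses and serves them in the Prometheus text exposition format for Prometheus to scrape and Grafana to chart. The stdlib-only sketch below illustrates the idea; `RequestCounterMiddleware` and the `http_requests_total` metric name are hypothetical, and in production a maintained client such as the official `prometheus_client` library is the safer choice.

```python
from collections import Counter
from typing import Any, Callable, Dict, Iterable, List, Tuple


class RequestCounterMiddleware:
    """Count responses per (method, path, status) and expose them at
    `metrics_path` in the Prometheus text exposition format."""

    def __init__(self, app: Callable, metrics_path: str = "/metrics") -> None:
        self.app = app
        self.metrics_path = metrics_path
        self.counts: Counter = Counter()

    def __call__(self, environ: Dict[str, Any], start_response: Callable) -> Iterable[bytes]:
        path = environ.get("PATH_INFO", "")
        if path == self.metrics_path:
            body = self.render().encode("utf-8")
            start_response("200 OK", [
                ("Content-Type", "text/plain; version=0.0.4; charset=utf-8"),
                ("Content-Length", str(len(body))),
            ])
            return [body]
        method = environ.get("REQUEST_METHOD", "GET")

        def counting_start_response(status: str, headers: List[Tuple[str, str]], exc_info: Any = None):
            # status looks like "200 OK"; keep only the numeric code as a label
            self.counts[(method, path, status.split(" ", 1)[0])] += 1
            return start_response(status, headers, exc_info)

        return self.app(environ, counting_start_response)

    def render(self) -> str:
        lines = [
            "# HELP http_requests_total Total HTTP requests handled.",
            "# TYPE http_requests_total counter",
        ]
        for (method, path, status), count in sorted(self.counts.items()):
            lines.append(f'http_requests_total{{method="{method}",path="{path}",status="{status}"}} {count}')
        return "\n".join(lines) + "\n"
```

It would be attached with `app.wsgi_app = RequestCounterMiddleware(app.wsgi_app)`. Counters live per process, so with several server workers each keeps its own numbers, and using raw paths as labels can create many time series for URLs with IDs in them.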

|

closed

|

2023-04-16T21:46:46Z

|

2023-08-18T15:58:55Z

|

https://github.com/marshmallow-code/flask-smorest/issues/491

|

[

"question"

] |

justfortest-sketch

| 2

|

dpgaspar/Flask-AppBuilder

|

rest-api

| 1,741

|

update docs website for Flask-AppBuilder `3.4.0`

|

Flask-AppBuilder 3.4.0 was [released 2 days ago](https://github.com/dpgaspar/Flask-AppBuilder/releases/tag/v3.4.0), but the https://flask-appbuilder.readthedocs.io/en/latest/index.html website still only has `3.3.0` visible under the `latest` tag.

|

closed

|

2021-11-12T02:54:43Z

|

2021-11-14T17:46:25Z

|

https://github.com/dpgaspar/Flask-AppBuilder/issues/1741

|

[] |

thesuperzapper

| 2

|

reloadware/reloadium

|

pandas

| 189

|

Hot reloading of HTML files not working with reloadium (works fine with just webpack-dev-server and python manage.py runserver)

|

## Describe the bug

I have a Django project that uses webpack to serve some frontend assets and to hot-reload JS/CSS/HTML files.

Hot reloading of HTML files works great when I run Django normally.

However, when I run the project using the Reloadium PyCharm add-on, hot reloading of HTML files doesn't work.

The page refreshes when I save, but the changes aren't displayed until I manually refresh the page.

Changes made to Python, CSS and JS files are still picked up correctly.

## To Reproduce

Steps to reproduce the behavior:

- Set up a django project that uses webpack with hot-reloading enabled

(It would be hard for me to describe the process step by step. I can post the full config files if asked; below are some bits that seem relevant.)

- Run django project using Reloadium addon

## Expected behavior

When I change the content of my HTML file and save it while using Reloadium, the change should be displayed on my webpage.

## Screenshots

None

## Desktop or remote (please complete the following information):

- OS: Windows

- OS version: 10

- M1 chip: No

- Reloadium package version: 1.4.0 (latest addon version)

- PyCharm plugin version: 1.4.0 (latest addon version)

- Editor: PyCharm

- Python Version: 3.11.8

- Python Architecture: 64bit

- Run mode: Run & Debug (both modes cause issue)

## Additional context

Some extracts of my config files that may be relevant:

Start command used in package.json:

```json
"start": "npx webpack-dev-server --config webpack/webpack.config.dev.js"
```

webpack.config.common.js:

```js
const Path = require('path');
const { CleanWebpackPlugin } = require('clean-webpack-plugin');
const CopyWebpackPlugin = require('copy-webpack-plugin');
const HtmlWebpackPlugin = require('html-webpack-plugin');
var BundleTracker = require('webpack-bundle-tracker');

module.exports = {
  entry: {
    base: Path.resolve(__dirname, '../src/scripts/base.js'),
    contact_form: Path.resolve(__dirname, '../src/scripts/contact_form.js'),
  },
  output: {
    path: Path.join(__dirname, '../build'),
    filename: 'js/[name].js',
    publicPath: '/static/',
  },
  optimization: {
    splitChunks: {
      chunks: 'all',
      name: false,//'vendors',//false,
    },
  },
  plugins: [
    new BundleTracker({
      //path: path.join(__dirname, 'frontend/'),
      filename: 'webpack-stats.json',
    }),
    new CleanWebpackPlugin(),
    new CopyWebpackPlugin({
      patterns: [{ from: Path.resolve(__dirname, '../public'), to: 'public' }],
    }),
    /*new HtmlWebpackPlugin({
      template: Path.resolve(__dirname, '../src/index.html'),
      //inject: false,
    }),*/
  ],
  resolve: {
    alias: {
      '~': Path.resolve(__dirname, '../src'),
    },
  },
  module: {
    rules: [
      {
        test: /\.mjs$/,
        include: /node_modules/,
        type: 'javascript/auto',
      },
      {
        test: /\.html$/i,
        loader: 'html-loader',
      },
      {
        test: /\.(ico|jpg|jpeg|png|gif|eot|otf|webp|svg|ttf|woff|woff2)(\?.*)?$/,
        type: 'asset'
      },
    ],
  },
};
```

webpack.config.dev.js:

```js
const Path = require('path');
const Webpack = require('webpack');
const { merge } = require('webpack-merge');
const ESLintPlugin = require('eslint-webpack-plugin');
const StylelintPlugin = require('stylelint-webpack-plugin');
const common = require('./webpack.common.js');

module.exports = merge(common, {
  target: 'web',
  mode: 'development',
  devtool: 'eval-cheap-source-map',
  output: {
    chunkFilename: 'js/[name].chunk.js',
    publicPath: 'http://localhost:9091/static/',
  },
  devServer: {
    client: {
      logging: 'error',
    },
    static: [
      { directory: Path.join(__dirname, "../public") },
      { directory: Path.join(__dirname, "../src/images") },
      { directory: Path.join(__dirname, "../../accueil/templates/accueil") },
      { directory: Path.join(__dirname, "../../templates") },
    ],
    compress: true,
    watchFiles: [
      //Path.join(__dirname, '../../**/*.py'),
      Path.join(__dirname, '../src/**/*.js'),
      Path.join(__dirname, '../src/**/*.scss'),
      Path.join(__dirname, '../../templates/**/*.html'),
      Path.join(__dirname, '../../accueil/templates/accueil/*.html'),
    ],
    devMiddleware: {
      writeToDisk: true,
    },
    port: 9091,
    headers: {
      "Access-Control-Allow-Origin": "*",
    }
  },
  plugins: [
    new Webpack.DefinePlugin({
      'process.env.NODE_ENV': JSON.stringify('development'),
    }),
    new ESLintPlugin({
      extensions: 'js',
      emitWarning: true,
      files: Path.resolve(__dirname, '../src'),
      fix: true,
    }),
    new StylelintPlugin({
      files: Path.join('src', '**/*.s?(a|c)ss'),
      fix: true,
    }),
  ],
  module: {
    rules: [
      /*{ //Added from default
        test: /\.html$/i,
        loader: "html-loader",
      },*/
      {
        test: /\.js$/,
        include: Path.resolve(__dirname, '../src'),
        loader: 'babel-loader',
      },
      {
        test: /\.s?css$/i,
        include: Path.resolve(__dirname, '../src'),
        use: [
          'style-loader',
          {
            loader: 'css-loader',
            options: {
              sourceMap: true,
            },
          },
          'postcss-loader',
          'sass-loader',
        ],
      },
    ],
  },
});
```

|

closed

|

2024-04-03T17:38:26Z

|

2024-05-20T23:42:57Z

|

https://github.com/reloadware/reloadium/issues/189

|

[] |

antoineprobst

| 2

|

quantmind/pulsar

|

asyncio

| 15

|

Profiling task

|

Add functionality to profile a task run using the Python profiler.
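
A minimal sketch using the standard-library `cProfile` and `pstats` modules, assuming a task is simply a callable; `profile_task` is a hypothetical helper, not part of pulsar's API.

```python
import cProfile
import io
import pstats
from typing import Any, Callable, Tuple


def profile_task(
    task: Callable[..., Any], *args: Any, sort: str = "cumulative", limit: int = 10, **kwargs: Any
) -> Tuple[Any, str]:
    """Run task(*args, **kwargs) under cProfile; return (result, text report)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(task, *args, **kwargs)
    buffer = io.StringIO()
    # Sort by cumulative time and keep only the top `limit` entries.
    pstats.Stats(profiler, stream=buffer).sort_stats(sort).print_stats(limit)
    return result, buffer.getvalue()
```

For a coroutine-based task, passing `asyncio.run` as `task` and the coroutine as its argument profiles the whole event-loop run, at the cost of including loop overhead in the report.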

|

closed

|

2012-02-10T12:06:14Z

|

2012-12-13T22:06:20Z

|

https://github.com/quantmind/pulsar/issues/15

|

[

"taskqueue"

] |

lsbardel

| 1

|

PeterL1n/RobustVideoMatting

|

computer-vision

| 67

|

Foreground prediction

|

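During training, have you ever seen the stage-1 foreground predictions come out with an overall red or green tint? Is there any way to fix this?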

|

closed

|

2021-10-08T04:45:12Z

|

2021-12-16T01:24:48Z

|

https://github.com/PeterL1n/RobustVideoMatting/issues/67

|

[] |

lyc6749

| 10

|

chaos-genius/chaos_genius

|

data-visualization

| 1,007

|

[BUG] Dependency conflict for PyArrow

|

## Describe the bug

PyAthena installs a version of PyArrow that is incompatible with Snowflake's PyArrow requirement.

## Explain the environment

- **Chaos Genius version**: 0.9.0 development build

- **OS Version / Instance**: any

- **Deployment type**: any

## Current behavior

A warning is emitted during `pip install`.

## Expected behavior

`pip install` should not emit this warning.

## Screenshots

## Additional context

N/A

## Logs

```
/home/samyak/work/ChaosGenius/chaos_genius/.direnv/python-3.8.13/lib64/python3.8/site-packages/snowflake/connector/options.py:96: UserWarning: You have an incompatible version of 'pyarrow' installed (8.0.0), please install a version that adheres to: 'pyarrow<6.1.0,>=6.0.0; extra == "pandas"'
  warn_incompatible_dep(
```

|

closed

|

2022-06-27T11:16:43Z

|

2022-06-29T12:39:08Z

|

https://github.com/chaos-genius/chaos_genius/issues/1007

|

[] |

rjdp

| 0

|

explosion/spaCy

|

nlp

| 13,712

|

thinc conflicting versions

|

## How to reproduce the behaviour

I ran into an issue with thinc after updating numpy:

`thinc 8.3.2 has requirement numpy<2.1.0,>=2.0.0; python_version >= "3.9", but you have numpy 2.1.3.`

So I updated thinc to its latest version, `9.1.1`, but now I have a conflict with spaCy:

`spacy 3.8.2 depends on thinc<8.4.0 and >=8.3.0`

## Your Environment


* Operating System: WSL Ubuntu 20.04.6

* Python Version Used: Python 3.11

* spaCy Version Used: spacy 3.8.2
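
The two messages together pin down a compatible set: spaCy 3.8.2 needs thinc `>=8.3.0,<8.4.0`, and thinc 8.3.2 needs numpy `>=2.0.0,<2.1.0`, so upgrading thinc to 9.x cannot satisfy spaCy. A stdlib-only sketch that checks the versions from this report against those ranges; `satisfies` and `newest_satisfying` are hypothetical helpers that handle plain numeric versions only (real tooling should use `packaging.specifiers.SpecifierSet`), and the candidate list in the usage line is illustrative.

```python
import operator
from typing import Callable, Dict, Iterable, Optional, Tuple

# Two-character operators are listed first so ">=" is not mistaken for ">".
_OPS: Dict[str, Callable[[Tuple[int, ...], Tuple[int, ...]], bool]] = {
    ">=": operator.ge, "<=": operator.le, "==": operator.eq,
    "!=": operator.ne, ">": operator.gt, "<": operator.lt,
}


def parse(version: str) -> Tuple[int, ...]:
    parts = tuple(int(p) for p in version.split("."))
    return parts + (0,) * (3 - len(parts))  # pad "8.4" to (8, 4, 0)


def satisfies(version: str, spec: str) -> bool:
    """True if `version` meets every comma-separated clause in `spec`."""
    v = parse(version)
    for clause in spec.split(","):
        clause = clause.strip()
        for op, compare in _OPS.items():
            if clause.startswith(op):
                if not compare(v, parse(clause[len(op):].strip())):
                    return False
                break
        else:
            raise ValueError(f"unsupported clause: {clause!r}")
    return True


def newest_satisfying(candidates: Iterable[str], spec: str) -> Optional[str]:
    ok = [c for c in candidates if satisfies(c, spec)]
    return max(ok, key=parse) if ok else None
```

`satisfies("2.1.3", ">=2.0.0,<2.1.0")` and `satisfies("9.1.1", ">=8.3.0,<8.4.0")` are both false, so keeping thinc 8.3.x and installing numpy within `>=2.0.0,<2.1.0` (e.g. `pip install "numpy>=2.0.0,<2.1.0"`) is the consistent combination.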

|

open

|

2024-12-09T16:43:01Z

|

2024-12-10T16:43:18Z

|

https://github.com/explosion/spaCy/issues/13712

|

[] |

kimlizette

| 1

|

KevinMusgrave/pytorch-metric-learning

|

computer-vision

| 176

|

Add a way of computing logits easily during inference

|

Right now you can only compute the loss, but there are cases where people want to do classification using the logits (see https://github.com/KevinMusgrave/pytorch-metric-learning/issues/175)
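
For losses that learn one weight vector per class (CosFace- and ArcFace-style losses, for example), inference logits are commonly taken as the scaled cosine similarity between an embedding and each class weight, with the training margin dropped. A stdlib sketch under that assumption; `cosine_logits` and `predict` are hypothetical helpers, and how the class weight matrix is read out of a trained loss object depends on the loss and is not assumed here.

```python
import math
from typing import List, Sequence


def cosine_logits(
    embedding: Sequence[float], class_weights: Sequence[Sequence[float]], scale: float = 1.0
) -> List[float]:
    """Return scale * cos(angle) between the embedding and each class weight."""

    def norm(v: Sequence[float]) -> float:
        return math.sqrt(sum(x * x for x in v))

    e_norm = norm(embedding)
    if e_norm == 0:
        raise ValueError("embedding must be non-zero")
    logits = []
    for w in class_weights:
        dot = sum(a * b for a, b in zip(embedding, w))
        logits.append(scale * dot / (e_norm * norm(w)))
    return logits


def predict(embedding: Sequence[float], class_weights: Sequence[Sequence[float]]) -> int:
    """Index of the class with the largest logit."""
    logits = cosine_logits(embedding, class_weights)
    return max(range(len(logits)), key=logits.__getitem__)
```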

|

closed

|

2020-08-11T04:18:20Z

|

2020-09-14T09:56:47Z

|

https://github.com/KevinMusgrave/pytorch-metric-learning/issues/176

|

[

"enhancement",

"fixed in dev branch"

] |

KevinMusgrave

| 0

|

xuebinqin/U-2-Net

|

computer-vision

| 149

|

Taking Too Much CPU and Memory (RAM) on CPU

|

@NathanUA Thank you very much for such a good contribution. I am using the u2netp model (4.7 MB) for inference on a webcam video feed. I am running it on a CPU, but it uses too much memory and processing power, and my device slows down while testing. Can you help me make it more memory-efficient so I can use it on a CPU machine? Thank you.

|

open

|

2021-01-20T16:38:19Z

|

2021-03-17T09:36:08Z

|

https://github.com/xuebinqin/U-2-Net/issues/149

|

[] |

NaeemKhan333

| 1

|

graphistry/pygraphistry

|

jupyter

| 50

|

FAQ / guide on exporting

|

cc @thibaudh @padentomasello @briantrice

|

closed

|

2016-01-11T21:50:51Z

|

2020-06-10T06:46:52Z

|

https://github.com/graphistry/pygraphistry/issues/50

|

[

"enhancement"

] |

lmeyerov

| 0

|

pydata/xarray

|

pandas

| 10,166

|

Updating zarr causes errors when saving to zarr.

|

### What is your issue?

xarray version 2024.9.0

zarr version 3.0.5

When I try to save to Zarr, I get the error below. I can save the same file to Zarr without problems using zarr version 2.18.4. I've checked, and the same thing happens for a wide range of files. Resetting the encoding has no effect.

[tas_AUST-04_ERA5_historical_hres_BOM_BARRA-C2_v1_mon_197901-197901(1).zip](https://github.com/user-attachments/files/19414274/tas_AUST-04_ERA5_historical_hres_BOM_BARRA-C2_v1_mon_197901-197901.1.zip)

```python
import xarray as xr

ds = xr.open_dataset('/scratch/nhat_drf_data/zarr_sandbox/tas_AUST-04_ERA5_historical_hres_BOM_BARRA-C2_v1_mon_197901-197901(1).nc')
ds.to_zarr('/scratch/nhat_drf_data/zarr_sandbox/test.zarr')
```

gives the error

```

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/zarr/core/common.py:139, in parse_shapelike(data)

138 try:

--> 139 data_tuple = tuple(data)

140 except TypeError as e:

TypeError: 'NoneType' object is not iterable

The above exception was the direct cause of the following exception:

TypeError Traceback (most recent call last)

Cell In[3], line 1

----> 1 ds.to_zarr('/scratch/nhat_drf_data/zarr_sandbox/test.zarr')

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/xarray/core/dataset.py:2562, in Dataset.to_zarr(self, store, chunk_store, mode, synchronizer, group, encoding, compute, consolidated, append_dim, region, safe_chunks, storage_options, zarr_version, write_empty_chunks, chunkmanager_store_kwargs)

2415 """Write dataset contents to a zarr group.

2416

2417 Zarr chunks are determined in the following way:

(...) 2558 The I/O user guide, with more details and examples.

2559 """

2560 from xarray.backends.api import to_zarr

-> 2562 return to_zarr( # type: ignore[call-overload,misc]

2563 self,

2564 store=store,

2565 chunk_store=chunk_store,

2566 storage_options=storage_options,

2567 mode=mode,

2568 synchronizer=synchronizer,

2569 group=group,

2570 encoding=encoding,

2571 compute=compute,

2572 consolidated=consolidated,

2573 append_dim=append_dim,

2574 region=region,

2575 safe_chunks=safe_chunks,

2576 zarr_version=zarr_version,

2577 write_empty_chunks=write_empty_chunks,

2578 chunkmanager_store_kwargs=chunkmanager_store_kwargs,

2579 )

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/xarray/backends/api.py:1784, in to_zarr(dataset, store, chunk_store, mode, synchronizer, group, encoding, compute, consolidated, append_dim, region, safe_chunks, storage_options, zarr_version, write_empty_chunks, chunkmanager_store_kwargs)

1782 writer = ArrayWriter()

1783 # TODO: figure out how to properly handle unlimited_dims

-> 1784 dump_to_store(dataset, zstore, writer, encoding=encoding)

1785 writes = writer.sync(

1786 compute=compute, chunkmanager_store_kwargs=chunkmanager_store_kwargs

1787 )

1789 if compute:

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/xarray/backends/api.py:1467, in dump_to_store(dataset, store, writer, encoder, encoding, unlimited_dims)

1464 if encoder:

1465 variables, attrs = encoder(variables, attrs)

-> 1467 store.store(variables, attrs, check_encoding, writer, unlimited_dims=unlimited_dims)

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/xarray/backends/zarr.py:720, in ZarrStore.store(self, variables, attributes, check_encoding_set, writer, unlimited_dims)

717 else:

718 variables_to_set = variables_encoded

--> 720 self.set_variables(

721 variables_to_set, check_encoding_set, writer, unlimited_dims=unlimited_dims

722 )

723 if self._consolidate_on_close:

724 zarr.consolidate_metadata(self.zarr_group.store)

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/xarray/backends/zarr.py:824, in ZarrStore.set_variables(self, variables, check_encoding_set, writer, unlimited_dims)

821 else:

822 encoding["write_empty_chunks"] = self._write_empty

--> 824 zarr_array = self.zarr_group.create(

825 name,

826 shape=shape,

827 dtype=dtype,

828 fill_value=fill_value,

829 **encoding,

830 )

831 zarr_array = _put_attrs(zarr_array, encoded_attrs)

833 write_region = self._write_region if self._write_region is not None else {}

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/zarr/core/group.py:2354, in Group.create(self, *args, **kwargs)

2352 def create(self, *args: Any, **kwargs: Any) -> Array:

2353 # Backwards compatibility for 2.x

-> 2354 return self.create_array(*args, **kwargs)

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/zarr/_compat.py:43, in _deprecate_positional_args.<locals>._inner_deprecate_positional_args.<locals>.inner_f(*args, **kwargs)

41 extra_args = len(args) - len(all_args)

42 if extra_args <= 0:

---> 43 return f(*args, **kwargs)

45 # extra_args > 0

46 args_msg = [

47 f"{name}={arg}"

48 for name, arg in zip(kwonly_args[:extra_args], args[-extra_args:], strict=False)

49 ]

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/zarr/core/group.py:2473, in Group.create_array(self, name, shape, dtype, chunks, shards, filters, compressors, compressor, serializer, fill_value, order, attributes, chunk_key_encoding, dimension_names, storage_options, overwrite, config)

2378 """Create an array within this group.

2379

2380 This method lightly wraps :func:`zarr.core.array.create_array`.

(...) 2467 AsyncArray

2468 """

2469 compressors = _parse_deprecated_compressor(

2470 compressor, compressors, zarr_format=self.metadata.zarr_format

2471 )

2472 return Array(

-> 2473 self._sync(

2474 self._async_group.create_array(

2475 name=name,

2476 shape=shape,

2477 dtype=dtype,

2478 chunks=chunks,

2479 shards=shards,

2480 fill_value=fill_value,

2481 attributes=attributes,

2482 chunk_key_encoding=chunk_key_encoding,

2483 compressors=compressors,

2484 serializer=serializer,

2485 dimension_names=dimension_names,

2486 order=order,

2487 filters=filters,

2488 overwrite=overwrite,

2489 storage_options=storage_options,

2490 config=config,

2491 )

2492 )

2493 )

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/zarr/core/sync.py:208, in SyncMixin._sync(self, coroutine)

205 def _sync(self, coroutine: Coroutine[Any, Any, T]) -> T:

206 # TODO: refactor this to to take *args and **kwargs and pass those to the method

207 # this should allow us to better type the sync wrapper

--> 208 return sync(

209 coroutine,

210 timeout=config.get("async.timeout"),

211 )

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/zarr/core/sync.py:163, in sync(coro, loop, timeout)

160 return_result = next(iter(finished)).result()

162 if isinstance(return_result, BaseException):

--> 163 raise return_result

164 else:

165 return return_result

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/zarr/core/sync.py:119, in _runner(coro)

114 """

115 Await a coroutine and return the result of running it. If awaiting the coroutine raises an

116 exception, the exception will be returned.

117 """

118 try:

--> 119 return await coro

120 except Exception as ex:

121 return ex

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/zarr/core/group.py:1102, in AsyncGroup.create_array(self, name, shape, dtype, chunks, shards, filters, compressors, compressor, serializer, fill_value, order, attributes, chunk_key_encoding, dimension_names, storage_options, overwrite, config)

1007 """Create an array within this group.

1008

1009 This method lightly wraps :func:`zarr.core.array.create_array`.

(...) 1097

1098 """

1099 compressors = _parse_deprecated_compressor(

1100 compressor, compressors, zarr_format=self.metadata.zarr_format

1101 )

-> 1102 return await create_array(

1103 store=self.store_path,

1104 name=name,

1105 shape=shape,

1106 dtype=dtype,

1107 chunks=chunks,

1108 shards=shards,

1109 filters=filters,

1110 compressors=compressors,

1111 serializer=serializer,

1112 fill_value=fill_value,

1113 order=order,

1114 zarr_format=self.metadata.zarr_format,

1115 attributes=attributes,

1116 chunk_key_encoding=chunk_key_encoding,

1117 dimension_names=dimension_names,

1118 storage_options=storage_options,

1119 overwrite=overwrite,

1120 config=config,

1121 )

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/zarr/core/array.py:4146, in create_array(store, name, shape, dtype, data, chunks, shards, filters, compressors, serializer, fill_value, order, zarr_format, attributes, chunk_key_encoding, dimension_names, storage_options, overwrite, config, write_data)

4141 store_path = await make_store_path(store, path=name, mode=mode, storage_options=storage_options)

4143 data_parsed, shape_parsed, dtype_parsed = _parse_data_params(

4144 data=data, shape=shape, dtype=dtype

4145 )

-> 4146 result = await init_array(

4147 store_path=store_path,

4148 shape=shape_parsed,

4149 dtype=dtype_parsed,

4150 chunks=chunks,

4151 shards=shards,

4152 filters=filters,

4153 compressors=compressors,

4154 serializer=serializer,

4155 fill_value=fill_value,

4156 order=order,

4157 zarr_format=zarr_format,

4158 attributes=attributes,

4159 chunk_key_encoding=chunk_key_encoding,

4160 dimension_names=dimension_names,

4161 overwrite=overwrite,

4162 config=config,

4163 )

4165 if write_data is True and data_parsed is not None:

4166 await result._set_selection(

4167 BasicIndexer(..., shape=result.shape, chunk_grid=result.metadata.chunk_grid),

4168 data_parsed,

4169 prototype=default_buffer_prototype(),

4170 )

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/zarr/core/array.py:3989, in init_array(store_path, shape, dtype, chunks, shards, filters, compressors, serializer, fill_value, order, zarr_format, attributes, chunk_key_encoding, dimension_names, overwrite, config)

3986 chunks_out = chunk_shape_parsed

3987 codecs_out = sub_codecs

-> 3989 meta = AsyncArray._create_metadata_v3(

3990 shape=shape_parsed,

3991 dtype=dtype_parsed,

3992 fill_value=fill_value,

3993 chunk_shape=chunks_out,

3994 chunk_key_encoding=chunk_key_encoding_parsed,

3995 codecs=codecs_out,

3996 dimension_names=dimension_names,

3997 attributes=attributes,

3998 )

4000 arr = AsyncArray(metadata=meta, store_path=store_path, config=config)

4001 await arr._save_metadata(meta, ensure_parents=True)

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/zarr/core/array.py:694, in AsyncArray._create_metadata_v3(shape, dtype, chunk_shape, fill_value, chunk_key_encoding, codecs, dimension_names, attributes)

687 if dtype.kind in "UTS":

688 warn(

689 f"The dtype `{dtype}` is currently not part in the Zarr format 3 specification. It "

690 "may not be supported by other zarr implementations and may change in the future.",

691 category=UserWarning,

692 stacklevel=2,

693 )

--> 694 chunk_grid_parsed = RegularChunkGrid(chunk_shape=chunk_shape)

695 return ArrayV3Metadata(

696 shape=shape,

697 data_type=dtype,

(...) 703 attributes=attributes or {},

704 )

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/zarr/core/chunk_grids.py:176, in RegularChunkGrid.__init__(self, chunk_shape)

175 def __init__(self, *, chunk_shape: ChunkCoordsLike) -> None:

--> 176 chunk_shape_parsed = parse_shapelike(chunk_shape)

178 object.__setattr__(self, "chunk_shape", chunk_shape_parsed)

File /opt/anaconda3/envs/nhat_eval/lib/python3.12/site-packages/zarr/core/common.py:142, in parse_shapelike(data)

140 except TypeError as e:

141 msg = f"Expected an integer or an iterable of integers. Got {data} instead."

--> 142 raise TypeError(msg) from e

144 if not all(isinstance(v, int) for v in data_tuple):

145 msg = f"Expected an iterable of integers. Got {data} instead."

TypeError: Expected an integer or an iterable of integers. Got None instead.

[tas_AUST-04_ERA5_historical_hres_BOM_BARRA-C2_v1_mon_197901-197901(1).zip](https://github.com/user-attachments/files/19414266/tas_AUST-04_ERA5_historical_hres_BOM_BARRA-C2_v1_mon_197901-197901.1.zip)

```

|

closed

|

2025-03-24T04:27:09Z

|

2025-03-24T04:32:47Z

|

https://github.com/pydata/xarray/issues/10166

|

[

"needs triage"

] |

bweeding

| 1

|

klen/mixer

|

sqlalchemy

| 37

|

Create a way to define custom Types and Generators and error descriptively when Types are not recognized.

|

I defined a couple custom SQLAlchemy types with [`sqlalchemy.types.TypeDecorator`](http://docs.sqlalchemy.org/en/rel_0_9/core/custom_types.html#augmenting-existing-types), and immediately Mixer choked on them. I had to reverse-engineer the system and monkey-patch my custom types in order to get it working.

Here's a link to my [Stack Overflow post](http://stackoverflow.com/questions/26416307/missing-parameter-in-function/28362205#28362205) about this, including the hacky solution I implemented, and here's the traceback I got:

```

mixer: ERROR: Traceback (most recent call last):

File "/usr/local/lib/python2.7/site-packages/mixer/main.py", line 576, in blend

return type_mixer.blend(**values)

File "/usr/local/lib/python2.7/site-packages/mixer/main.py", line 125, in blend

for name, value in defaults.items()

File "/usr/local/lib/python2.7/site-packages/mixer/main.py", line 125, in <genexpr>

for name, value in defaults.items()

File "/usr/local/lib/python2.7/site-packages/mixer/mix_types.py", line 220, in gen_value

return type_mixer.gen_field(field)

File "/usr/local/lib/python2.7/site-packages/mixer/main.py", line 202, in gen_field

return self.gen_value(field.name, field, unique=unique)

File "/usr/local/lib/python2.7/site-packages/mixer/main.py", line 245, in gen_value

fab = self.get_fabric(field, field_name, fake=fake)

File "/usr/local/lib/python2.7/site-packages/mixer/main.py", line 290, in get_fabric

self.__fabrics[key] = self.make_fabric(field.scheme, field_name, fake)

File "/usr/local/lib/python2.7/site-packages/mixer/backend/sqlalchemy.py", line 178, in make_fabric

stype, field_name=field_name, fake=fake, kwargs=kwargs)

File "/usr/local/lib/python2.7/site-packages/mixer/main.py", line 306, in make_fabric

fab = self.__factory.get_fabric(scheme, field_name, fake)

File "/usr/local/lib/python2.7/site-packages/mixer/factory.py", line 158, in get_fabric

if not func and fcls.__bases__:

AttributeError: Mixer (myproject.models.MyModel): 'NoneType' object has no attribute '__bases__'

```
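The traceback suggests the factory walks a type's `__bases__` to find a generator and fails with an unhelpful `AttributeError` once the lookup yields `None`. Below is a minimal sketch of the requested behavior, assuming a simple registry keyed by Python type. It resolves generators through the MRO, so a subclass such as a custom `TypeDecorator` falls back to its nearest registered base, and it raises a descriptive error when nothing matches. `GeneratorRegistry` and `UnknownTypeError` are hypothetical names, not Mixer's API.

```python
from typing import Any, Callable, Dict


class UnknownTypeError(TypeError):
    """Raised when no generator is registered for a type or any of its bases."""


class GeneratorRegistry:
    """Hypothetical registry mapping column types to value generators."""

    def __init__(self) -> None:
        self._generators: Dict[type, Callable[[], Any]] = {}

    def register(self, type_: type, generator: Callable[[], Any]) -> None:
        # Users can plug in generators for their own custom types.
        self._generators[type_] = generator

    def get(self, type_: type) -> Callable[[], Any]:
        # Walk the MRO so a subclass falls back to its nearest registered base.
        for klass in type_.__mro__:
            if klass in self._generators:
                return self._generators[klass]
        raise UnknownTypeError(
            f"No generator registered for type {type_.__qualname__!r}; "
            "register one with GeneratorRegistry.register()."
        )
```

For example, after `registry.register(str, lambda: "text")`, a `class Slug(str)` resolves to the `str` generator, and an unregistered type produces an error that names the offending type.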

Anyway, thanks for making Mixer! It's a really powerful tool, and I love anything that makes testing easier.

|

closed

|

2015-02-06T09:29:40Z

|

2015-08-12T15:01:27Z

|

https://github.com/klen/mixer/issues/37

|

[] |

wolverdude

| 1

|

jschneier/django-storages

|

django

| 1,129

|

Empty `SpooledTemporaryFile` created when `closed` property is used multiple times

|

When `GoogleCloudFile`'s `closed` property is accessed multiple times, the spooled temporary `_file` is re-created even though it was previously closed. This is because https://github.com/django/django/blob/6f453cd2981525b33925faaadc7a6e51fa90df5c/django/core/files/utils.py#L53 uses `self.file`, which triggers the creation of `_file` regardless. As a result, when `.close()` is called afterwards, an empty file is uploaded to GS.
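Here is a minimal pure-Python sketch of the failure mode and one possible guard. It uses a hypothetical `LazyRemoteFile` in place of `GoogleCloudFile`. `closed_buggy` roughly mirrors the `not self.file or self.file.closed` check in Django's `FileProxyMixin`, which re-creates the buffer through the lazy `file` property. `closed` instead inspects `_file` directly. The `uploads` counter is a stand-in for the real upload performed on `close()`.

```python
import tempfile
from typing import Optional


class LazyRemoteFile:
    """Hypothetical stand-in for a storage-backed file with a lazily created buffer."""

    def __init__(self) -> None:
        self._file: Optional[tempfile.SpooledTemporaryFile] = None
        self.uploads = 0  # counts how many buffers close() would have uploaded

    @property
    def file(self):
        # Accessing .file creates the spooled buffer if it does not exist yet.
        if self._file is None:
            self._file = tempfile.SpooledTemporaryFile(max_size=1024 * 1024)
        return self._file

    @property
    def closed_buggy(self) -> bool:
        # Roughly mirrors FileProxyMixin.closed: touching self.file re-creates _file.
        return not self.file or self.file.closed

    @property
    def closed(self) -> bool:
        # Guarded version: look at _file directly so no new buffer is created.
        return self._file is None or self._file.closed

    def close(self) -> None:
        if self._file is not None:
            self.uploads += 1  # stand-in for uploading the buffer contents
            self._file.close()
            self._file = None
```

After `close()`, reading `closed_buggy` reports `False` and leaves a fresh, empty buffer behind, so the next `close()` "uploads" again. Reading `closed` reports `True` and has no side effects.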

|

open

|

2022-04-22T07:17:38Z

|

2022-04-22T07:17:38Z

|

https://github.com/jschneier/django-storages/issues/1129

|

[] |

skylander86

| 0

|

encode/apistar

|

api

| 543

|

is this a bug?

|

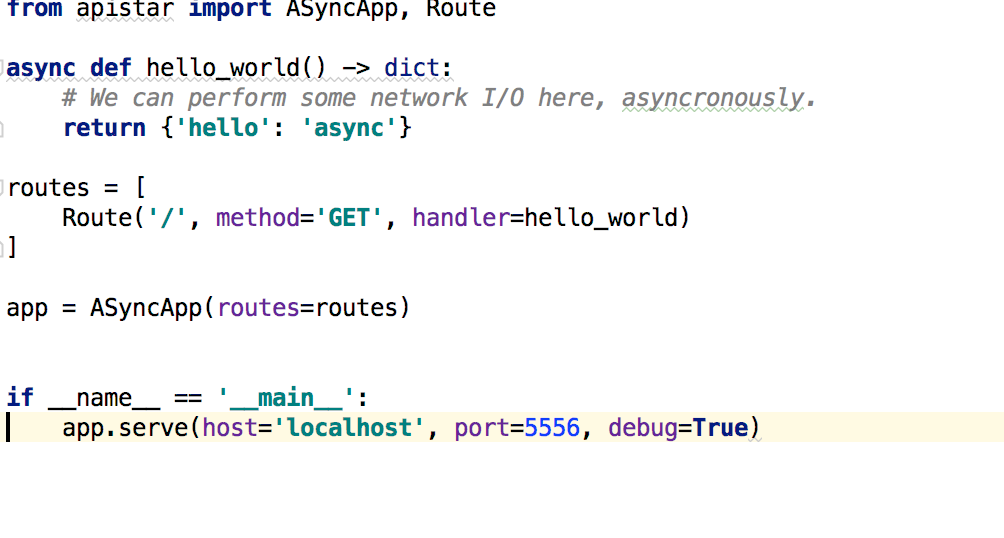

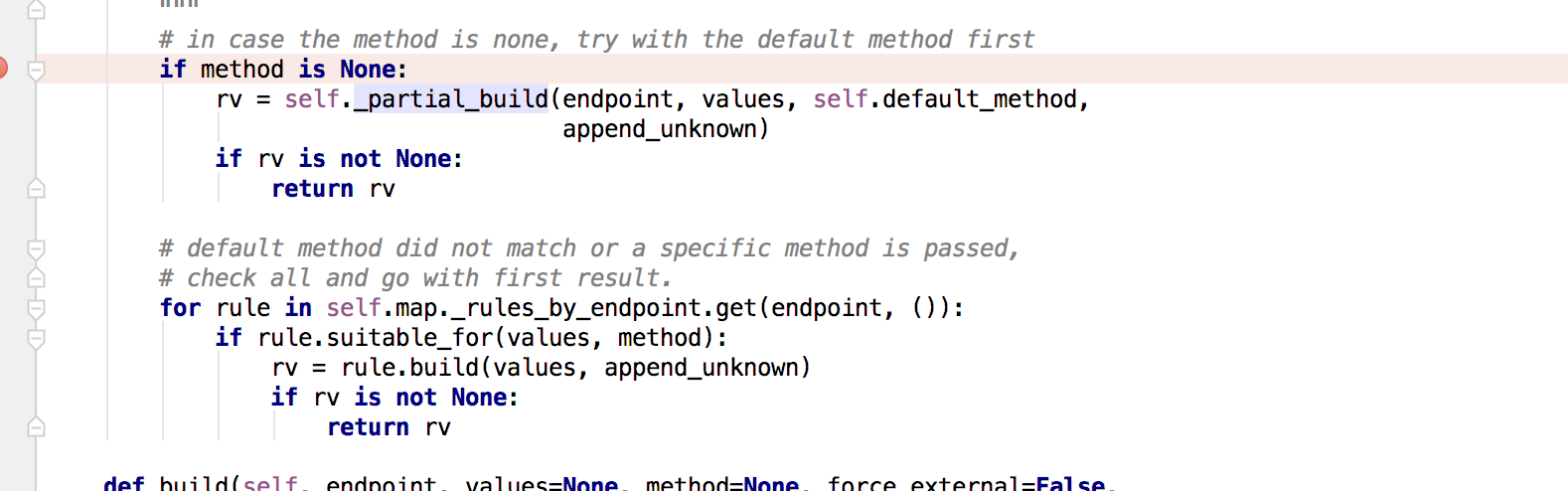

I got an error when visiting localhost:5556/docs:

Could not build url for endpoint 'static' with values ['filename']. Did you mean 'serve_static_asgi' instead?

**I found that `self.map._rules_by_endpoint` does not contain the key `static`.**

|

closed

|

2018-05-15T10:49:54Z

|

2018-05-18T02:40:17Z

|

https://github.com/encode/apistar/issues/543

|

[] |

goushicui

| 4

|

apify/crawlee-python

|

web-scraping

| 403

|

Evaluate the efficiency of opening new Playwright tabs versus windows

|

Experiment with [PlaywrightBrowserController](https://github.com/apify/crawlee-python/blob/master/src/crawlee/browsers/playwright_browser_controller.py) to determine whether opening new Playwright pages in tabs performs better than opening them in separate windows (the current behavior).

|

open

|

2024-08-06T07:47:10Z

|

2024-08-06T08:31:05Z

|

https://github.com/apify/crawlee-python/issues/403

|

[

"t-tooling",

"solutioning"

] |

vdusek

| 1

|

ansible/awx

|

django

| 14,918

|

variables and source_vars expect dictionary but apply as yaml (multiple modules)

|

### Please confirm the following

- [X] I agree to follow this project's [code of conduct](https://docs.ansible.com/ansible/latest/community/code_of_conduct.html).

- [X] I have checked the [current issues](https://github.com/ansible/awx/issues) for duplicates.

- [X] I understand that AWX is open source software provided for free and that I might not receive a timely response.

- [X] I am **NOT** reporting a (potential) security vulnerability. (These should be emailed to `security@ansible.com` instead.)

### Bug Summary

Variables passed as a dictionary appear to be stored as JSON text instead of being rendered as YAML. This affects:

- inventories: variables

- hosts: variables

- groups: variables

- inventory sources: source_vars

Also, see https://github.com/ansible/awx/issues/14842

### AWX version

23.2.0

### Select the relevant components

- [ ] UI

- [ ] UI (tech preview)

- [ ] API

- [ ] Docs

- [X] Collection

- [ ] CLI

- [ ] Other

### Installation method

docker development environment

### Modifications

no

### Ansible version

2.14.2

### Operating system

Red Hat Enterprise Linux release 9.1 (Plow)

### Web browser

Chrome

### Steps to reproduce

```
- ansible.controller.inventory:
    name: "Dynamic A"
    organization: "A"
    variables: {tt: tt}
- ansible.controller.host:
    name: "Host A"
    inventory: "Static A"
    variables: {tt: tt}
- ansible.controller.group:
    name: "Group A"
    inventory: "Static A"
    variables: {tt: tt}
- ansible.controller.inventory_source:
    name: "A"
    inventory: "Dynamic A"
    source_vars: {tt: tt}
```

### Expected results

```
YAML:
---
tt: tt
```

### Actual results

```
YAML:
{"tt": "tt"}
```

### Additional information

_No response_

|

open

|

2024-02-25T08:27:40Z

|

2024-02-25T08:32:12Z

|

https://github.com/ansible/awx/issues/14918

|

[

"type:bug",

"component:awx_collection",

"needs_triage",

"community"

] |

kk-at-redhat

| 0

|

microsoft/MMdnn

|

tensorflow

| 832

|

Convert trained yolov3 Darknet-53 custom model to tensorflow model

|

Platform: ubuntu 16.04

Python version: 3.6

Source framework: mxnet (gluon-cv)

Destination framework: Tensorflow

Model Type: Object detection

Pre-trained model path: https://gluon-cv.mxnet.io/build/examples_detection/demo_yolo.html#sphx-glr-build-examples-detection-demo-yolo-py

I have trained a custom YOLOv3 Darknet-53 model (yolo3_darknet53_custom.params) using gluon-cv (mxnet). I need to convert the yolo3_darknet53_custom.params (mxnet) model to yolo3_darknet53_custom.pb (tensorflow).

Also, I see on https://pypi.org/project/mmdnn/ that object detection is listed under on-going models.

Queries:

Does MMdnn support converting YOLO (object detection) models trained with gluon-cv (mxnet) in general?

Is there any way or workaround by which I can convert YOLO models?

Any leads would be great!

Thank you

|

open

|

2020-05-07T14:13:04Z

|

2020-05-08T18:15:42Z

|

https://github.com/microsoft/MMdnn/issues/832

|

[] |

analyticalrohit

| 1

|

chainer/chainer

|

numpy

| 8,199

|

flaky test: `chainerx_tests/unit_tests/routines_tests/test_normalization.py::test_BatchNorm`

| https://travis-ci.org/chainer/chainer/jobs/591364861

`FAIL tests/chainerx_tests/unit_tests/routines_tests/test_normalization.py::test_BatchNorm_param_0_{contiguous=None}_param_0_{decay=None}_param_0_{eps=2e-05}_param_0_{param_dtype='float16'}_param_0_{x_dtype='float16'}_param_3_{axis=None, reduced_shape=(3, 4, 5, 2), x_shape=(2, 3, 4, 5, 2)}[native:0]`

```
[2019-09-30 08:05:44] E chainer.testing.function_link.FunctionTestError: Outputs do not match the expected values.
[2019-09-30 08:05:44] E Indices of outputs that do not match: 0
[2019-09-30 08:05:44] E Expected shapes and dtypes: (2, 3, 4, 5, 2):float16
[2019-09-30 08:05:44] E Actual shapes and dtypes: (2, 3, 4, 5, 2):float16
[2019-09-30 08:05:44] E
[2019-09-30 08:05:44] E
[2019-09-30 08:05:44] E Error details of output [0]:
[2019-09-30 08:05:44] E
[2019-09-30 08:05:44] E Not equal to tolerance rtol=0.1, atol=0.1
[2019-09-30 08:05:44] E
[2019-09-30 08:05:44] E (mismatch 0.4166666666666714%)
[2019-09-30 08:05:44] E x: array([-0.1726 , 0.3286 , 0.4915 , 1.105 , 0.2152 , 0.587 ,
[2019-09-30 08:05:44] E -0.2905 , -0.012695, 0.554 , -1.178 , -0.3076 , -0.4988 ,
[2019-09-30 08:05:44] E -0.3975 , 0.4343 , 0.813 , 1.951 , 0.2693 , 0.758 ,...
[2019-09-30 08:05:44] E y: array([-0.1726 , 0.3286 , 0.4915 , 1.105 , 0.2155 , 0.587 ,
[2019-09-30 08:05:44] E -0.2905 , -0.012695, 0.554 , -1.178 , -0.3071 , -0.4988 ,
[2019-09-30 08:05:44] E -0.3975 , 0.4343 , 0.813 , 1.951 , 0.269 , 0.758 ,...
[2019-09-30 08:05:44] E
[2019-09-30 08:05:44] E assert_allclose failed:
[2019-09-30 08:05:44] E shape: (2, 3, 4, 5, 2) (2, 3, 4, 5, 2)
[2019-09-30 08:05:44] E dtype: float16 float16
[2019-09-30 08:05:44] E i: (0, 2, 0, 4, 1)
[2019-09-30 08:05:44] E x[i]: 0.070556640625
[2019-09-30 08:05:44] E y[i]: -0.03399658203125
[2019-09-30 08:05:44] E relative error[i]: 3.076171875
[2019-09-30 08:05:44] E absolute error[i]: 0.10455322265625
```
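This check assumes that `chainer.testing.assert_allclose` applies NumPy's criterion `|x - y| <= atol + rtol * |y|`, with `y` as the expected value. Under that assumption, the reported element misses the tolerance only narrowly, which fits a float16 precision flake better than a logic error. The check below uses the values from the failing element in the log:

```python
def within_tolerance(actual: float, desired: float, rtol: float, atol: float) -> bool:
    """NumPy-style allclose test for a single element."""
    return abs(actual - desired) <= atol + rtol * abs(desired)


# Values copied from the failing element i = (0, 2, 0, 4, 1) in the log.
x_i = 0.070556640625
y_i = -0.03399658203125
rtol = atol = 0.1

abs_err = abs(x_i - y_i)            # 0.10455322265625, matches the log
threshold = atol + rtol * abs(y_i)  # about 0.1034
print(abs_err, threshold, within_tolerance(x_i, y_i, rtol, atol))
```

The element exceeds the threshold by only about 0.0012, so a slightly different float16 rounding in the batch statistics is enough to flip the result.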

|

closed

|

2019-09-30T08:25:24Z

|

2019-10-29T04:56:41Z

|

https://github.com/chainer/chainer/issues/8199

|

[

"cat:test",

"prio:high"

] |

niboshi

| 1

|

mwaskom/seaborn

|

matplotlib

| 2,861

|

Legends don't represent additional visual properties

|

This is a meta issue replacing the following (exhaustive) list of reports:

- #2852

- #2005

- #1763

- #940

The basic issue is that artists in seaborn legends typically do not look exactly like artists in the plot in cases where additional matplotlib keyword arguments have been passed. This is semi-intentional (more like, it was an intentional decision to avoid the complexity of making this work) but is a source of confusion.

This does work as expected in the new objects interface, so I would expect it to eventually be resolved in the function interface once the functions are refactored to use that interface behind the scenes. That said, the legend code in the objects interface is complex and probably a little buggy at the moment. I don't know whether there will be any effort to improve this aspect of legends in the plotting functions without larger changes to the codebase; legends are hard (#2231).

|

closed

|

2022-06-15T02:53:19Z

|

2023-09-11T01:18:03Z

|

https://github.com/mwaskom/seaborn/issues/2861

|

[

"rough-edge",

"plots"

] |

mwaskom

| 0

|

seleniumbase/SeleniumBase

|

web-scraping

| 3,266

|

Add a full range of scroll methods for CDP Mode

|

### Add a full range of scroll methods for CDP Mode

----

Once this task is completed, we should expect to see all these methods:

```python
sb.cdp.scroll_into_view(selector)  # Scroll to the element
sb.cdp.scroll_to_y(y)  # Scroll to the y-position
sb.cdp.scroll_to_top()  # Scroll to the top
sb.cdp.scroll_to_bottom()  # Scroll to the bottom
sb.cdp.scroll_up(amount=25)  # Relative scroll by amount
sb.cdp.scroll_down(amount=25)  # Relative scroll by amount
```

|

closed

|

2024-11-14T19:25:54Z

|

2024-11-14T21:45:09Z

|

https://github.com/seleniumbase/SeleniumBase/issues/3266

|

[

"enhancement",

"UC Mode / CDP Mode"

] |

mdmintz

| 1

|

django-oscar/django-oscar

|

django

| 4,225

|

Sandbox site is down

|

The [Sandbox](http://latest.oscarcommerce.com/) is down. The sandbox site was deployed on Heroku. I assume it was using Heroku's free tier, and either Heroku changed its policy a while ago or the credits have run out.

[Removal of Heroku Free Product Plans FAQ](https://help.heroku.com/RSBRUH58/removal-of-heroku-free-product-plans-faq)

|

open

|

2024-01-14T19:44:28Z

|

2024-02-29T12:20:45Z

|

https://github.com/django-oscar/django-oscar/issues/4225

|

[] |

Hisham-Pak

| 1

|

google-research/bert

|

tensorflow

| 920

|

How to print learning_rate?

|

How can I print `learning_rate` so that I can see whether it decays?

Thank you!
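One way to see whether the learning rate decays, without touching the TensorFlow graph, is to recompute the schedule in plain Python. This sketch assumes the schedule used in BERT's `optimization.py`: linear warmup to `init_lr`, followed by polynomial decay with `power=1.0` down to `0.0` over `num_train_steps`. The function name and arguments are illustrative. Inside the graph itself, the learning-rate tensor could instead be logged with `tf.summary.scalar`; exactly where to hook that in is an assumption.

```python
def bert_learning_rate(step: int, init_lr: float, num_train_steps: int,
                       num_warmup_steps: int, end_lr: float = 0.0,
                       power: float = 1.0) -> float:
    """Learning rate at `step`, assuming BERT-style warmup + polynomial decay."""
    # Polynomial decay; the step is clamped so the rate never drops below end_lr.
    decay_step = min(step, num_train_steps)
    decayed = (init_lr - end_lr) * (1 - decay_step / num_train_steps) ** power + end_lr
    # Linear warmup overrides the decayed value during the first num_warmup_steps.
    if num_warmup_steps and step < num_warmup_steps:
        return init_lr * step / num_warmup_steps
    return decayed


# Print the schedule at a few steps to see the warmup and then the decay.
for step in (0, 500, 1000, 5000, 10000):
    print(step, bert_learning_rate(step, 5e-5, 10000, 1000))
```

With these example values, the rate climbs linearly for the first 1,000 steps and then falls linearly to zero at step 10,000.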

|

closed

|

2019-11-15T07:21:51Z

|

2021-03-12T03:35:37Z

|

https://github.com/google-research/bert/issues/920

|

[] |

guotong1988

| 2

|

tqdm/tqdm

|

jupyter

| 1,523

|

AttributeError Exception under a console-less PyInstaller build

|

- [x] I have marked all applicable categories:

+ [x] exception-raising bug

+ [ ] visual output bug

- [x] I have visited the [source website], and in particular

read the [known issues]

- [x] I have searched through the [issue tracker] for duplicates

- [x] I have mentioned version numbers, operating system and

environment, where applicable:

```python
import tqdm, sys
print(tqdm.__version__, sys.version, sys.platform)
```

[source website]: https://github.com/tqdm/tqdm/

[known issues]: https://github.com/tqdm/tqdm/#faq-and-known-issues

[issue tracker]: https://github.com/tqdm/tqdm/issues?q=

* * *

```

Environment: 4.66.1 3.11.6 (tags/v3.11.6:8b6ee5b, Oct 2 2023, 14:57:12) [MSC v.1935 64 bit (AMD64)] win32

```

It seems tqdm does not work in a console-less (windowed) PyInstaller build (`-w` flag). It works if PyInstaller is allowed to include and show the console (`-c` flag). This may or may not be exclusive to PyInstaller 6.x, but that's what I'm currently using.

To reproduce take the following script:

```python
from tqdm import tqdm

t = tqdm(total=100)
t.update(100)
print("Finished")
```

It runs fine in a normal Python interpreter. However, if you freeze it with PyInstaller using the `-w` flag (windowed, no console), it fails. If you freeze it with the `-c` flag (console included), it works.

```

File "tqdm\std.py", line 1099, in __init__

File "tqdm\std.py", line 1348, in refresh

File "tqdm\std.py", line 1496, in display

File "tqdm\std.py", line 462, in print_status

File "tqdm\std.py", line 455, in fp_write

File "tqdm\utils.py", line 139, in __getattr__

AttributeError: 'NoneType' object has no attribute 'write'

```

The bug seems to occur when tqdm tries to write the status to `fp`, which is `None` at this point:

https://github.com/tqdm/tqdm/blob/4c956c20b83be4312460fc0c4812eeb3fef5e7df/tqdm/std.py#L455
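This workaround assumes the root cause is that a windowed (`-w`) PyInstaller build leaves `sys.stderr` set to `None`, since tqdm writes to `sys.stderr` by default. The fix is to hand tqdm an explicit stream that always exists. `safe_stream` is a hypothetical helper. `file=` and `disable=` are existing `tqdm` parameters.

```python
import os
import sys


def safe_stream():
    """Return sys.stderr, or a writable null stream when there is no console."""
    if sys.stderr is not None:
        return sys.stderr
    # In a console-less build there is no stderr, so write to the null device instead.
    return open(os.devnull, "w")
```

Usage would then be `t = tqdm(total=100, file=safe_stream())`. Alternatively, `tqdm(total=100, disable=sys.stderr is None)` turns the bar off entirely when no console is attached.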

|

open

|

2023-10-13T19:42:40Z

|

2023-10-13T19:42:40Z

|

https://github.com/tqdm/tqdm/issues/1523

|

[] |

rlaphoenix

| 0

|

iperov/DeepFaceLab

|

machine-learning

| 5,613

|

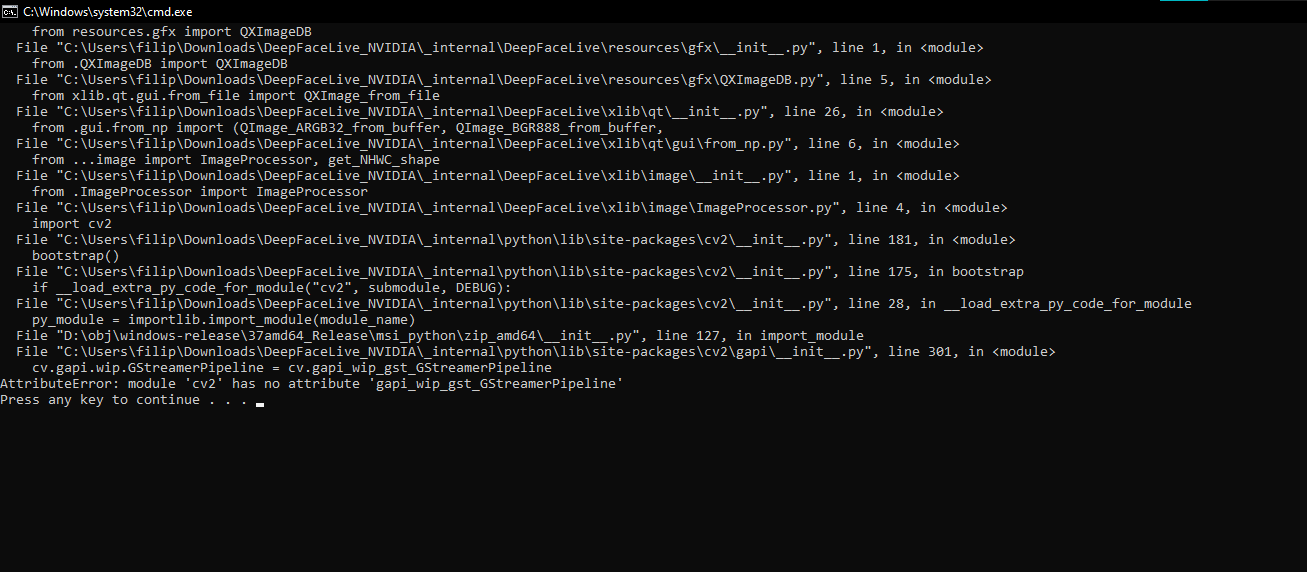

Attribute Error Message Upon Installation

|

I'm trying to re-download DeepFaceLive and DeepFaceLab, and every time I try to run the batch file after unzipping, it gives me this error message: “AttributeError: module 'cv2' has no attribute 'gapi'

Press any key to continue”

I've tried deleting and reinstalling it, but the same thing happens. I've also tried restarting my computer. What could be the issue?

|

open

|

2023-01-23T11:41:45Z

|

2023-06-08T23:19:26Z

|

https://github.com/iperov/DeepFaceLab/issues/5613

|

[] |

heyxur

| 1

|

bigscience-workshop/petals

|

nlp

| 250

|

Inference timeout on larger input prompts

|

### Currently using the chatbot example where session id is saved and inference occurs one token at a time:

```python
with models[model_name][1].inference_session(max_length=512) as sess:
    print(f"Thread Start -> {threading.get_ident()}")
    output[model_name] = ""
    inputs = models[model_name][0](prompt, return_tensors="pt")["input_ids"].to(DEVICE)
    n_input_tokens = inputs.shape[1]
    done = False
    while not done and not kill.is_set():
        outputs = models[model_name][1].generate(
            inputs,
            max_new_tokens=1,
            do_sample=True,
            top_p=top_p,
            temperature=temperature,
            repetition_penalty=repetition_penalty,
            session=sess
        )
        output[model_name] += models[model_name][0].decode(outputs[0, n_input_tokens:])
        token_cnt += 1
        print("\n[" + str(threading.get_ident()) + "]" + output[model_name], end="", flush=True)
        for stop_word in stop:
            stop_word = codecs.getdecoder("unicode_escape")(stop_word)[0]
            if stop_word != '' and stop_word in output[model_name]:
                print(f"\nDONE (stop) -> {threading.get_ident()}")
                done = True
        if flag or (token_cnt >= max_tokens):
            print(f"\nDONE (max tokens) -> {threading.get_ident()}")
            done = True
        inputs = None  # Prefix is passed only for the 1st token of the bot's response
        n_input_tokens = 0
```

### When I pass in a small prompt, inference works:

**PROMPT**

> Please answer the following question:

> Question: What is the capital of Germany?

> Answer:

```

Berlin, Germany

```

### A slightly larger prompt always results in timeout errors:

**PROMPT**

> Given a pair of sentences, choose whether the two sentences agree (entailment)/disagree (contradiction) with each other.

> Possible labels: 1. entailment 2. contradiction

> Sentence 1: The skier was on the edge of the ramp. Sentence 2: The skier was dressed in winter clothes.

> Label: entailment

> Sentence 1: The boy skated down the staircase railing. Sentence 2: The boy is a newbie skater.

> Label: contradiction

> Sentence 1: Two middle-aged people stand by a golf hole. Sentence 2: A couple riding in a golf cart.

> Label:

```

Feb 03 16:16:37.377 [INFO] Peer 12D3KooWJALV7xRuHLzJHAftZhmSeqz68hywh1oK8oYmW844vWHt did not respond, banning it temporarily

Feb 03 16:16:37.377 [WARN] [/home/gene/dockerx/temp/petals/src/petals/client/inference_session.py.step:311] Caught exception when running inference from block 16 (retry in 0 sec): TimeoutError()

Feb 03 16:16:37.378 [WARN] [/home/gene/dockerx/temp/petals/src/petals/client/routing/sequence_manager.py.make_sequence:109] Remote SequenceManager is still searching for routes, waiting for it to become ready

Feb 03 16:17:10.908 [INFO] Peer 12D3KooWJALV7xRuHLzJHAftZhmSeqz68hywh1oK8oYmW844vWHt did not respond, banning it temporarily

Feb 03 16:17:10.908 [WARN] [/home/gene/dockerx/temp/petals/src/petals/client/inference_session.py.step:311] Caught exception when running inference from block 16 (retry in 1 sec): TimeoutError()

Feb 03 16:17:11.909 [WARN] [/home/gene/dockerx/temp/petals/src/petals/client/routing/sequence_manager.py.make_sequence:109] Remote SequenceManager is still searching for routes, waiting for it to become ready

```

|

closed

|

2023-02-04T00:22:26Z

|

2023-02-27T14:25:54Z

|

https://github.com/bigscience-workshop/petals/issues/250

|

[] |

gururise

| 6

|

ultralytics/yolov5

|

pytorch

| 12,468

|

How to analyze remote machine training results with Comet?

|

### Search before asking

- [X] I have searched the YOLOv5 [issues](https://github.com/ultralytics/yolov5/issues) and [discussions](https://github.com/ultralytics/yolov5/discussions) and found no similar questions.

### Question

I'm trying to use Comet to analyze a YOLOv5 training run that was performed on an HPC machine. I have generated the API key and the configuration file, but from the Comet UI I don't understand how to send the results from the remote machine so they can be analyzed.

Could you help me? Thanks.

### Additional

_No response_

|

closed

|

2023-12-05T10:14:54Z

|

2024-01-16T00:21:24Z

|

https://github.com/ultralytics/yolov5/issues/12468

|

[

"question",

"Stale"

] |

unrue

| 4

|

lukasmasuch/streamlit-pydantic

|

streamlit

| 68

|

The type of the following property is currently not supported: Multi Selection

|

### Checklist

- [X] I have searched the [existing issues](https://github.com/lukasmasuch/streamlit-pydantic/issues) for similar issues.

- [X] I added a very descriptive title to this issue.

- [X] I have provided sufficient information below to help reproduce this issue.

### Summary

One of the examples at https://st-pydantic.streamlit.app/ is `Complex Default`. When I run that code on my own system, I get the following error:

The type of the following property is currently not supported: Multi Selection

What should I do?

### Reproducible Code Example

```Python
from enum import Enum
from typing import Set

import streamlit as st
from pydantic import BaseModel, Field

import streamlit_pydantic as sp


class OtherData(BaseModel):
    text: str
    integer: int


class SelectionValue(str, Enum):
    FOO = "foo"
    BAR = "bar"


class ExampleModel(BaseModel):
    long_text: str = Field(
        ..., format="multi-line", description="Unlimited text property"
    )
    integer_in_range: int = Field(
        20,
        ge=10,
        le=30,
        multiple_of=2,
        description="Number property with a limited range.",
    )
    single_selection: SelectionValue = Field(
        ..., description="Only select a single item from a set."
    )
    multi_selection: Set[SelectionValue] = Field(
        ..., description="Allows multiple items from a set."
    )
    read_only_text: str = Field(
        "Lorem ipsum dolor sit amet",
        description="This is a ready only text.",
        readOnly=True,
    )
    single_object: OtherData = Field(
        ...,
        description="Another object embedded into this model.",
    )


data = sp.pydantic_form(key="my_form", model=ExampleModel)
if data:
    st.json(data.model_dump_json())
```

### Steps To Reproduce

Run the code above with `streamlit run`.

### Expected Behavior

The ability to select multiple values to create a set.

### Current Behavior

```

The type of the following property is currently not supported: Multi Selection

```

### Is this a regression?

- [ ] Yes, this used to work in a previous version.

### Debug info

- streamlit-pydantic version: 0.6.0
- Python version: 3.10.12
- Related packages: pydantic==2.10.5, pydantic-settings==2.7.1, pydantic_core==2.27.2, streamlit-pydantic==0.6.0

### Additional Information

Thank you in advance

|

open

|

2025-01-10T18:34:15Z

|

2025-02-14T05:03:56Z

|

https://github.com/lukasmasuch/streamlit-pydantic/issues/68

|

[

"type:bug",

"status:needs-triage"

] |

alikaz3mi

| 1

|

ultralytics/yolov5

|

pytorch

| 12,990

|

No detections on custom data training

|

### Search before asking

- [ ] I have searched the YOLOv5 [issues](https://github.com/ultralytics/yolov5/issues) and [discussions](https://github.com/ultralytics/yolov5/discussions) and found no similar questions.

### Question

I'm trying to train YOLOv5 on custom data, using very few images just to test the pipeline. I have 2 classes and 6 images (3 per class), which I know is WAY too few, but as I said, it's only for testing. Training completes without errors, but when I run detection on one of the images that was used for training, nothing is detected. Shouldn't the model be able to detect the images it was trained on, even with so few of them?

Command for training:

```
python train.py --img 2048 --batch 16 --epochs 5 --data test.yaml --weights yolov5s.pt --nosave --cache
```

Command for testing:

```
python detect.py --weights runs/train/exp11/weights/last.pt --source data/cartes_mini/images/2-C_jpg.rf.3ad5f752441ac8389c42afec2b5ecc10.jpg
```

I've also tried with another dataset that contained 1 class and around 20 training images, but got the same result.

Thank you!

### Additional

_No response_

|

closed

|

2024-05-08T16:47:38Z

|

2024-10-20T19:45:27Z

|

https://github.com/ultralytics/yolov5/issues/12990

|

[

"question",

"Stale"

] |

Just1813

| 3

|

sammchardy/python-binance

|

api

| 786

|

Support coin-margined futures operations

|

Currently, all futures operations are limited to USD-margined futures, which are served under /fapi/v1/. Coin-margined futures operations are served under /dapi/v1/.
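For reference, the two product lines differ in host as well as path prefix: USD-M futures use `https://fapi.binance.com/fapi/v1/` and COIN-M futures use `https://dapi.binance.com/dapi/v1/`. Below is a minimal sketch of how a client could route requests by margin type. `futures_url` and the `"usd"`/`"coin"` values are hypothetical and not part of python-binance.

```python
from urllib.parse import urljoin

# Base URLs for Binance futures REST APIs (USD-margined vs coin-margined).
FUTURES_BASES = {
    "usd": "https://fapi.binance.com/fapi/v1/",
    "coin": "https://dapi.binance.com/dapi/v1/",
}


def futures_url(margin: str, endpoint: str) -> str:
    """Build a futures REST URL for the given margin type ("usd" or "coin")."""
    try:
        base = FUTURES_BASES[margin]
    except KeyError:
        raise ValueError(
            f"unknown margin type {margin!r}; expected 'usd' or 'coin'"
        ) from None
    # Strip a leading slash so "/ping" and "ping" both resolve under the versioned prefix.
    return urljoin(base, endpoint.lstrip("/"))
```

For example, `futures_url("coin", "exchangeInfo")` targets the COIN-M exchange-info endpoint, while the same call with `"usd"` targets the USD-M one. Keeping the margin type as a single switch lets every existing futures method be reused for both product lines.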

|

open

|

2021-04-23T07:16:59Z

|

2021-04-23T07:16:59Z

|

https://github.com/sammchardy/python-binance/issues/786

|

[] |

ZhiminHeGit

| 0

|

pytest-dev/pytest-xdist

|

pytest

| 515

|

INTERNALERROR> TypeError: unhashable type: 'ExceptionChainRepr'

|

Running pytest with `-n4` and `--doctest-modules` together with import errors leads to this misleading error which hides what the actual error is.

```

(pytest-debug) :~/PycharmProjects/scratch$ py.test --pyargs test123 --doctest-modules -n4

=================================================== test session starts ====================================================

platform linux -- Python 3.7.6, pytest-5.4.1.dev27+g3b48fce, py-1.8.1, pluggy-0.13.0

rootdir: ~/PycharmProjects/scratch

plugins: forked-1.1.3, xdist-1.31.1.dev1+g6fd5b56

gw0 ok / gw1 ok / gw2 C / gw3 CINTERNALERROR> Traceback (most recent call last):

INTERNALERROR> File "site-packages/pytest-5.4.1.dev27+g3b48fce-py3.7.egg/_pytest/main.py", line 191, in wrap_session

INTERNALERROR> session.exitstatus = doit(config, session) or 0

INTERNALERROR> File "site-packages/pytest-5.4.1.dev27+g3b48fce-py3.7.egg/_pytest/main.py", line 247, in _main

INTERNALERROR> config.hook.pytest_runtestloop(session=session)

INTERNALERROR> File "site-packages/pluggy/hooks.py", line 286, in __call__

INTERNALERROR> return self._hookexec(self, self.get_hookimpls(), kwargs)

INTERNALERROR> File "site-packages/pluggy/manager.py", line 92, in _hookexec

INTERNALERROR> return self._inner_hookexec(hook, methods, kwargs)

INTERNALERROR> File "site-packages/pluggy/manager.py", line 86, in <lambda>

INTERNALERROR> firstresult=hook.spec.opts.get("firstresult") if hook.spec else False,

INTERNALERROR> File "site-packages/pluggy/callers.py", line 208, in _multicall

INTERNALERROR> return outcome.get_result()

INTERNALERROR> File "site-packages/pluggy/callers.py", line 80, in get_result

INTERNALERROR> raise ex[1].with_traceback(ex[2])

INTERNALERROR> File "site-packages/pluggy/callers.py", line 187, in _multicall

INTERNALERROR> res = hook_impl.function(*args)

INTERNALERROR> File "site-packages/pytest_xdist-1.31.1.dev1+g6fd5b56-py3.7.egg/xdist/dsession.py", line 112, in pytest_runtestloop

INTERNALERROR> self.loop_once()

INTERNALERROR> File "site-packages/pytest_xdist-1.31.1.dev1+g6fd5b56-py3.7.egg/xdist/dsession.py", line 135, in loop_once

INTERNALERROR> call(**kwargs)

INTERNALERROR> File "site-packages/pytest_xdist-1.31.1.dev1+g6fd5b56-py3.7.egg/xdist/dsession.py", line 272, in worker_collectreport

INTERNALERROR> self._failed_worker_collectreport(node, rep)

INTERNALERROR> File "site-packages/pytest_xdist-1.31.1.dev1+g6fd5b56-py3.7.egg/xdist/dsession.py", line 302, in _failed_worker_collectreport

INTERNALERROR> if rep.longrepr not in self._failed_collection_errors:

INTERNALERROR> TypeError: unhashable type: 'ExceptionChainRepr'

```
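The last frame tests `rep.longrepr not in self._failed_collection_errors`, a membership check that requires `longrepr` to be hashable. The stdlib reproduction below assumes the report class defines equality without a hash, which makes Python set `__hash__` to `None`. `FakeChainRepr` and `is_new_error` are hypothetical stand-ins. Keying on the string form is shown as one possible way to deduplicate errors without hashing the object itself, not as xdist's actual fix.

```python
from dataclasses import dataclass


@dataclass  # eq=True without frozen=True sets __hash__ to None, so instances are unhashable
class FakeChainRepr:
    text: str

    def __str__(self) -> str:
        return self.text


def is_new_error(longrepr, seen: dict) -> bool:
    """Record a collection error keyed by its string form; return True if it is new."""
    key = str(longrepr)  # strings are hashable even when the repr object is not
    if key in seen:
        return False
    seen[key] = longrepr
    return True


seen: dict = {}
err = FakeChainRepr("ModuleNotFoundError: No module named 'foo'")
try:
    _ = err in seen  # same kind of check as in dsession.py
except TypeError as exc:
    print("direct lookup fails:", exc)
print(is_new_error(err, seen), is_new_error(err, seen))
```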

When run with only one of `-n4` or `--doctest-modules`, it works as expected:

```

(pytest-debug) :~/PycharmProjects/scratch$ py.test --pyargs test123 -n4

=================================================== test session starts ====================================================

platform linux -- Python 3.7.6, pytest-5.4.1.dev27+g3b48fce, py-1.8.1, pluggy-0.13.0

rootdir: ~/PycharmProjects/scratch

plugins: forked-1.1.3, xdist-1.31.1.dev1+g6fd5b56

gw0 [0] / gw1 [0] / gw2 [0] / gw3 [0]

========================================================== ERRORS ==========================================================

_____________________________________ ERROR collecting test123/test/test_something.py ______________________________________

ImportError while importing test module '~/PycharmProjects/scratch/test123/test/test_something.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

test123/test/test_something.py:1: in <module>

import foo

E ModuleNotFoundError: No module named 'foo'

================================================= short test summary info ==================================================

ERROR test123/test/test_something.py

=

```

or

```

(pytest-debug) :~/PycharmProjects/scratch$ py.test --pyargs test123 --doctest-modules
=================================================== test session starts ====================================================
platform linux -- Python 3.7.6, pytest-5.4.1.dev27+g3b48fce, py-1.8.1, pluggy-0.13.0
rootdir: ~/PycharmProjects/scratch
plugins: forked-1.1.3, xdist-1.31.1.dev1+g6fd5b56
collected 0 items / 2 errors
========================================================== ERRORS ==========================================================
_____________________________________ ERROR collecting test123/test/test_something.py ______________________________________
test123/test/test_something.py:1: in <module>
import foo
E ModuleNotFoundError: No module named 'foo'
_____________________________________ ERROR collecting test123/test/test_something.py ______________________________________
ImportError while importing test module '~/PycharmProjects/scratch/test123/test/test_something.py'.
Hint: make sure your test modules/packages have valid Python names.
Traceback:
test123/test/test_something.py:1: in <module>
import foo
E ModuleNotFoundError: No module named 'foo'
================================================= short test summary info ==================================================
ERROR test123/test/test_something.py - ModuleNotFoundError: No module named 'foo'
ERROR test123/test/test_something.py
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! Interrupted: 2 errors during collection !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
==================================================== 2 errors in 0.06s =====================================================

```

The project is a minimal set of test files:

```

~/PycharmProjects/scratch$ find test123 | grep -v __pycache__
test123
test123/test
test123/test/test_something.py
test123/__init__.py

```

and the package versions are:

```

# packages in environment at /..../pytest-debug:
#
# Name Version Build Channel
_libgcc_mutex 0.1 conda_forge conda-forge
_openmp_mutex 4.5 0_gnu conda-forge
apipkg 1.5 pypi_0 pypi
attrs 19.3.0 py_0 conda-forge
ca-certificates 2019.11.28 hecc5488_0 conda-forge
certifi 2019.11.28 py37hc8dfbb8_1 conda-forge
execnet 1.7.1 pypi_0 pypi
importlib-metadata 1.5.2 py37hc8dfbb8_0 conda-forge
importlib_metadata 1.5.2 0 conda-forge
ld_impl_linux-64 2.34 h53a641e_0 conda-forge
libffi 3.2.1 he1b5a44_1007 conda-forge
libgcc-ng 9.2.0 h24d8f2e_2 conda-forge
libgomp 9.2.0 h24d8f2e_2 conda-forge
libstdcxx-ng 9.2.0 hdf63c60_2 conda-forge
more-itertools 8.2.0 py_0 conda-forge
ncurses 6.1 hf484d3e_1002 conda-forge
openssl 1.1.1e h516909a_0 conda-forge
packaging 20.1 py_0 conda-forge
pip 20.0.2 py_2 conda-forge
pluggy 0.13.0 py37_0 conda-forge
py 1.8.1 py_0 conda-forge
py-cpuinfo 5.0.0 py_0 conda-forge
pyparsing 2.4.6 py_0 conda-forge
pytest 5.4.1.dev27+g3b48fce pypi_0 pypi
pytest-forked 1.1.3 pypi_0 pypi
pytest-xdist 1.31.1.dev1+g6fd5b56.d20200326 pypi_0 pypi
python 3.7.6 h8356626_5_cpython conda-forge
python_abi 3.7 1_cp37m conda-forge
readline 8.0 hf8c457e_0 conda-forge
ripgrep 11.0.2 h516909a_3 conda-forge
setuptools 46.1.3 py37hc8dfbb8_0 conda-forge
six 1.14.0 py_1 conda-forge
sqlite 3.30.1 hcee41ef_0 conda-forge
tk 8.6.10 hed695b0_0 conda-forge
wcwidth 0.1.8 pyh9f0ad1d_1 conda-forge
wheel 0.34.2 py_1 conda-forge
xz 5.2.4 h516909a_1002 conda-forge
zipp 3.1.0 py_0 conda-forge
zlib 1.2.11 h516909a_1006 conda-forge

```

|

closed

|

2020-03-26T14:07:31Z

|

2020-05-13T13:32:39Z

|

https://github.com/pytest-dev/pytest-xdist/issues/515

|

[] |

lusewell

| 2

|

OthersideAI/self-operating-computer

|

automation

| 151

|

[BUG] Fedora system, can't use it

|

Found a bug? Please fill out the sections below. 👍

My system: Fedora 39

### Describe the bug

I can't use it. Package details:

Name: self-operating-computer

Version: 1.2.8

Summary:

Home-page:

Author:

Author-email:

License:

Location: /home/linlori/self-operating-computer/venv/lib64/python3.12/site-packages

Requires: aiohttp, annotated-types, anyio, certifi, charset-normalizer, colorama, contourpy, cycler, distro, easyocr, EasyProcess, entrypoint2, exceptiongroup, fonttools, google-generativeai, h11, httpcore, httpx, idna, importlib-resources, kiwisolver, matplotlib, MouseInfo, mss, numpy, openai, packaging, Pillow, prompt-toolkit, PyAutoGUI, pydantic, pydantic-core, PyGetWindow, PyMsgBox, pyparsing, pyperclip, PyRect, pyscreenshot, PyScreeze, python-dateutil, python-dotenv, python3-xlib, pytweening, requests, rubicon-objc, six, sniffio, tqdm, typing-extensions, ultralytics, urllib3, wcwidth, zipp

Required-by:

### Steps to Reproduce

1. Tried to "open the browser".

2. Got the following errors:

[Self-Operating Computer][Error] Something went wrong. Trying another method X get_image failed: error 8 (73, 0, 1028)

[Self-Operating Computer][Error] Something went wrong. Trying again X get_image failed: error 8 (73, 0, 1028)

[Self-Operating Computer][Error] -> cannot access local variable 'content' where it is not associated with a value

3. It does not work.
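The last error line is the message recent Python 3 versions attach to `UnboundLocalError` (the venv here runs Python 3.12). A minimal sketch of how it typically arises, assuming the tool assigns `content` inside a `try` block whose `except` branch only logs the failure: when the screenshot step fails, as the `X get_image failed` lines suggest, `content` is never bound. `capture_unsafe`, `capture_or_none`, and the `capture` callable are hypothetical names for illustration, not the project's actual code.

```python
def capture_unsafe(capture):
    # Hypothetical: `content` is bound only if capture() succeeds.
    try:
        content = capture()
    except Exception as exc:
        print(f"[Error] Something went wrong. {exc}")
    return content  # raises UnboundLocalError when capture() failed


def capture_or_none(capture):
    # Binding the name up front means every code path defines it.
    content = None
    try:
        content = capture()
    except Exception as exc:
        print(f"[Error] Something went wrong. {exc}")
    return content
```

With `capture_or_none`, a failed capture yields `None`, which the caller can check and report, instead of a confusing secondary `UnboundLocalError` that hides the original screenshot failure.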

### Expected Behavior

A brief description of what you expected to happen.

### Actual Behavior:

what actually happened.

### Environment

- OS: Fedora 39

- GPT-4v:

- Framework Version (optional):

### Screenshots

If applicable, add screenshots to help explain your problem.

### Additional context

Add any other context about the problem here.

|

closed

|

2024-01-30T20:42:58Z

|

2024-03-25T15:51:44Z

|

https://github.com/OthersideAI/self-operating-computer/issues/151

|

[

"bug"

] |

Lori-Lin7