| repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |

|---|---|---|---|---|---|---|---|---|---|---|---|

jupyter-incubator/sparkmagic

|

jupyter

| 738

|

[qn] Equivalent to `%run -i localscript.py` that runs a script on the cluster

|

[qn] Is there an equivalent to `%run -i localscript.py` that runs a script on the cluster?

E.g. In the local notebook, I have

```py

x=1

y=2

```

in `localscript.py`, and I would like to run it on the cluster itself.

Currently, `%run` runs the script locally in the notebook instead of on the cluster.

|

closed

|

2021-10-29T09:57:40Z

|

2021-11-12T04:37:41Z

|

https://github.com/jupyter-incubator/sparkmagic/issues/738

|

[] |

shern2

| 2

|

Yorko/mlcourse.ai

|

scikit-learn

| 624

|

A2 demo - strict inequalities in problem statement but >= and <= in solution

|

question 1.7:

>height is strictly less than 2.5%-percentile

[https://en.wikipedia.org/wiki/Inequality_(mathematics)](https://en.wikipedia.org/wiki/Inequality_(mathematics))

|

closed

|

2019-09-24T16:31:09Z

|

2019-09-29T20:56:01Z

|

https://github.com/Yorko/mlcourse.ai/issues/624

|

[] |

flinge

| 1

|

pyeve/eve

|

flask

| 580

|

retrieving sub resources returns empty

|

With a sub resource configured as below:

invoices = {

'url': 'people/<regex("[a-f0-9]{24}"):contact_id>/invoices'

POST to people/<contact_id>/invoices was successful. The document was created in Mongo collection "invoices". However, GET people/<contact_id>/invoices returns empty.

```

...

```

|

closed

|

2015-03-24T14:17:06Z

|

2015-03-27T17:36:10Z

|

https://github.com/pyeve/eve/issues/580

|

[] |

guonsoft

| 5

|

AUTOMATIC1111/stable-diffusion-webui

|

deep-learning

| 16,681

|

[Bug]: Getting an error: runtimeerror: no hip gpus are available

|

### Checklist

- [ ] The issue exists after disabling all extensions

- [X] The issue exists on a clean installation of webui

- [ ] The issue is caused by an extension, but I believe it is caused by a bug in the webui

- [ ] The issue exists in the current version of the webui

- [ ] The issue has not been reported before recently

- [ ] The issue has been reported before but has not been fixed yet

### What happened?

I'm on a completely fresh install of Ubuntu 22.04.2. I followed the steps under Automatic Installation on Linux. The WebUI opens, but it gives me an error: `RuntimeError: No HIP GPUs are available`. What am I doing wrong? What do I need to get this installed and working? My system has an Intel 9900K, 32 GB of RAM, and a Radeon 6700 XT. I am new to this and don't know half of what I'm doing, so please be patient with me.

### Steps to reproduce the problem

1. Open a terminal in the folder where I want to install WebUI.

2. Paste this into the terminal:

sudo apt install git python3.10-venv -y

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui && cd stable-diffusion-webui

python3.10 -m venv venv

3. Then run it with:

./webui.sh --upcast-sampling --skip-torch-cuda-test

4. WebUI opens, and when I try to generate an image it reports:

error: runtimeerror: no hip gpus are available

### What should have happened?

It should open the WebUI and use my GPU to generate images...

### What browsers do you use to access the UI ?

Mozilla Firefox

### Sysinfo

[sysinfo-2024-11-25-14-11.json](https://github.com/user-attachments/files/17904136/sysinfo-2024-11-25-14-11.json)

### Console logs

```Shell

serwu@serwu-Z390-AORUS-MASTER:~/Desktop/Ai/stable-diffusion-webui$ ./webui.sh --upcast-sampling --skip-torch-cuda-test

################################################################

Install script for stable-diffusion + Web UI

Tested on Debian 11 (Bullseye), Fedora 34+ and openSUSE Leap 15.4 or newer.

################################################################

################################################################

Running on serwu user

################################################################

################################################################

Repo already cloned, using it as install directory

################################################################

################################################################

Create and activate python venv

################################################################

################################################################

Launching launch.py...

################################################################

glibc version is 2.35

Cannot locate TCMalloc. Do you have tcmalloc or google-perftool installed on your system? (improves CPU memory usage)

Python 3.10.12 (main, Nov 6 2024, 20:22:13) [GCC 11.4.0]

Version: v1.10.1

Commit hash: 82a973c04367123ae98bd9abdf80d9eda9b910e2

Launching Web UI with arguments: --upcast-sampling --skip-torch-cuda-test

/home/serwu/Desktop/Ai/stable-diffusion-webui/venv/lib/python3.10/site-packages/timm/models/layers/__init__.py:48: FutureWarning: Importing from timm.models.layers is deprecated, please import via timm.layers

warnings.warn(f"Importing from {__name__} is deprecated, please import via timm.layers", FutureWarning)

no module 'xformers'. Processing without...

no module 'xformers'. Processing without...

No module 'xformers'. Proceeding without it.

Warning: caught exception 'No HIP GPUs are available', memory monitor disabled

Loading weights [6ce0161689] from /home/serwu/Desktop/Ai/stable-diffusion-webui/models/Stable-diffusion/v1-5-pruned-emaonly.safetensors

Running on local URL: http://127.0.0.1:7860

To create a public link, set `share=True` in `launch()`.

Creating model from config: /home/serwu/Desktop/Ai/stable-diffusion-webui/configs/v1-inference.yaml

/home/serwu/Desktop/Ai/stable-diffusion-webui/venv/lib/python3.10/site-packages/huggingface_hub/file_download.py:797: FutureWarning: `resume_download` is deprecated and will be removed in version 1.0.0. Downloads always resume when possible. If you want to force a new download, use `force_download=True`.

warnings.warn(

Startup time: 4.8s (import torch: 2.3s, import gradio: 0.5s, setup paths: 0.5s, other imports: 0.2s, load scripts: 0.3s, create ui: 0.3s, gradio launch: 0.5s).

Applying attention optimization: InvokeAI... done.

loading stable diffusion model: RuntimeError

Traceback (most recent call last):

File "/usr/lib/python3.10/threading.py", line 973, in _bootstrap

self._bootstrap_inner()

File "/usr/lib/python3.10/threading.py", line 1016, in _bootstrap_inner

self.run()

File "/usr/lib/python3.10/threading.py", line 953, in run

self._target(*self._args, **self._kwargs)

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/initialize.py", line 149, in load_model

shared.sd_model # noqa: B018

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/shared_items.py", line 175, in sd_model

return modules.sd_models.model_data.get_sd_model()

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/sd_models.py", line 693, in get_sd_model

load_model()

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/sd_models.py", line 868, in load_model

with devices.autocast(), torch.no_grad():

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/devices.py", line 228, in autocast

if has_xpu() or has_mps() or cuda_no_autocast():

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/devices.py", line 28, in cuda_no_autocast

device_id = get_cuda_device_id()

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/devices.py", line 40, in get_cuda_device_id

) or torch.cuda.current_device()

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/venv/lib/python3.10/site-packages/torch/cuda/__init__.py", line 778, in current_device

_lazy_init()

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/venv/lib/python3.10/site-packages/torch/cuda/__init__.py", line 293, in _lazy_init

torch._C._cuda_init()

RuntimeError: No HIP GPUs are available

Stable diffusion model failed to load

Using already loaded model v1-5-pruned-emaonly.safetensors [6ce0161689]: done in 0.0s

*** Error completing request

*** Arguments: ('task(y5cdfr3bjrgz0kp)', <gradio.routes.Request object at 0x7ff2024c1480>, 'woman', '', [], 1, 1, 7, 512, 512, False, 0.7, 2, 'Latent', 0, 0, 0, 'Use same checkpoint', 'Use same sampler', 'Use same scheduler', '', '', [], 0, 20, 'DPM++ 2M', 'Automatic', False, '', 0.8, -1, False, -1, 0, 0, 0, False, False, 'positive', 'comma', 0, False, False, 'start', '', 1, '', [], 0, '', [], 0, '', [], True, False, False, False, False, False, False, 0, False) {}

Traceback (most recent call last):

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/call_queue.py", line 74, in f

res = list(func(*args, **kwargs))

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/call_queue.py", line 53, in f

res = func(*args, **kwargs)

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/call_queue.py", line 37, in f

res = func(*args, **kwargs)

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/txt2img.py", line 109, in txt2img

processed = processing.process_images(p)

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/processing.py", line 847, in process_images

res = process_images_inner(p)

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/processing.py", line 920, in process_images_inner

with devices.autocast():

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/devices.py", line 228, in autocast

if has_xpu() or has_mps() or cuda_no_autocast():

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/devices.py", line 28, in cuda_no_autocast

device_id = get_cuda_device_id()

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/modules/devices.py", line 40, in get_cuda_device_id

) or torch.cuda.current_device()

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/venv/lib/python3.10/site-packages/torch/cuda/__init__.py", line 778, in current_device

_lazy_init()

File "/home/serwu/Desktop/Ai/stable-diffusion-webui/venv/lib/python3.10/site-packages/torch/cuda/__init__.py", line 293, in _lazy_init

torch._C._cuda_init()

RuntimeError: No HIP GPUs are available

---

```

### Additional information

_No response_

|

open

|

2024-11-25T14:13:29Z

|

2024-11-25T14:13:29Z

|

https://github.com/AUTOMATIC1111/stable-diffusion-webui/issues/16681

|

[

"bug-report"

] |

Bassoopioka

| 0

|

babysor/MockingBird

|

deep-learning

| 455

|

Help needed: preprocessing failed, please take a look

|

D:\Aria2\Sound_File_Processing-master\Sound_File_Processing-master>python pre.py D:\Aria2\aidatatang_200zh -d aidatatang_200zh -n 10

python: can't open file 'D:\Aria2\Sound_File_Processing-master\Sound_File_Processing-master\pre.py': [Errno 2] No such file or directory

|

closed

|

2022-03-15T07:27:09Z

|

2022-03-15T09:15:57Z

|

https://github.com/babysor/MockingBird/issues/455

|

[] |

tsyj9850

| 0

|

IvanIsCoding/ResuLLMe

|

streamlit

| 15

|

App not working

|

closed

|

2023-06-12T05:31:03Z

|

2023-06-13T23:43:45Z

|

https://github.com/IvanIsCoding/ResuLLMe/issues/15

|

[] |

Amjad-AbuRmileh

| 3

|

|

explosion/spaCy

|

data-science

| 12,946

|

lookups in Language.vocab do not reproduce

|

## How to reproduce the behaviour:

With this function:

```

import spacy
import numpy as np

nlp = spacy.load("en_core_web_md")

def get_similar_words(aword, top_k=4):
    word = nlp.vocab[str(aword)]
    others = (w for w in nlp.vocab if np.count_nonzero(w.vector))
    similarities = ((w.text, w.similarity(word)) for w in others)
    return sorted(similarities, key=lambda x: x[1], reverse=True)[:top_k]

```

First calls to the function reproduce:

```

# starting 'blank', results reproduce

print(get_similar_words('cat'))

print(get_similar_words('cat'))  # calling twice to show reproducibility

```

Results in:

```

[('cat', 1.0), ("'Cause", 0.2827487885951996), ('Ol', 0.2824869751930237), ('you', 0.27984926104545593)]

[('cat', 1.0), ("'Cause", 0.2827487885951996), ('Ol', 0.2824869751930237), ('you', 0.27984926104545593)]

```

So far, so good. But after performing some lookup in the vocab, the results become different:

```

# Outcome changes if before the call, a (related) word is looked up in the dictionary

_ = nlp.vocab['dog'] # some lookup

print(get_similar_words('cat')) # same call as before

```

Results in:

```

[('cat', 1.0), ('dog', 0.8220816850662231), ("'Cause", 0.2827487885951996), ('Ol', 0.2824869751930237)]

```

which is different from before ('dog' wasn't there). If you do another lookup on vocab (e.g. 'kitten') and repeat the call to the function,

the result also contains the last looked up word:

```

_ = nlp.vocab['kitten']

print(get_similar_words('cat'))

```

Result:

```

[('cat', 1.0), ('kitten', 0.9999999403953552), ('dog', 0.8220816850662231), ("'Cause", 0.2827487885951996)]

```

Very strange. I would have expected the words that I looked up myself to be part of the initial results, but it seems those words are not yet present, or not fully initialized with a vector, until they are explicitly looked up.
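The behaviour described above is consistent with a vocabulary that creates entries lazily on first lookup, so that iterating over it only visits words that have already been touched. The following is a toy model of that idea in plain Python, not spaCy's actual implementation; `LazyVocab`, `VECTOR_TABLE`, `cosine` and `most_similar` are hypothetical names, and the vectors are made up for illustration.

```python
import math

# Hypothetical vector table: every word that has a vector,
# whether or not it has been looked up yet.
VECTOR_TABLE = {
    "cat": (1.0, 0.0),
    "dog": (0.8, 0.6),
    "you": (0.0, 1.0),
}


class LazyVocab:
    """Toy vocabulary that only materialises entries on first lookup."""

    def __init__(self, preloaded):
        self._entries = {w: VECTOR_TABLE[w] for w in preloaded}

    def __getitem__(self, word):
        # Looking a word up adds it to the cache as a side effect.
        if word not in self._entries:
            self._entries[word] = VECTOR_TABLE[word]
        return self._entries[word]

    def __iter__(self):
        # Iteration only sees words that are already cached.
        return iter(list(self._entries))


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def most_similar(vocab, word, top_k=2):
    target = vocab[word]
    scored = [(w, cosine(vocab[w], target)) for w in vocab]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


vocab = LazyVocab(preloaded=["you"])
before = [w for w, _ in most_similar(vocab, "cat")]
_ = vocab["dog"]  # a lookup that changes what later iteration sees
after = [w for w, _ in most_similar(vocab, "cat")]
```

In this toy model, ranking against the full vector table rather than the lazily filled cache would make the result independent of earlier lookups.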

## Your Environment

<!-- Include details of your environment. You can also type `python -m spacy info --markdown` and copy-paste the result here.-->

* Operating System: Ubuntu 22.04.2 LTS

* Python Version Used: 3.10.12

* spaCy Version Used: 3.5.3

* Environment Information: in Jupyter notebook

|

closed

|

2023-08-31T18:21:01Z

|

2023-09-01T16:23:33Z

|

https://github.com/explosion/spaCy/issues/12946

|

[

"feat / vectors"

] |

mpjanus

| 1

|

jina-ai/clip-as-service

|

pytorch

| 195

|

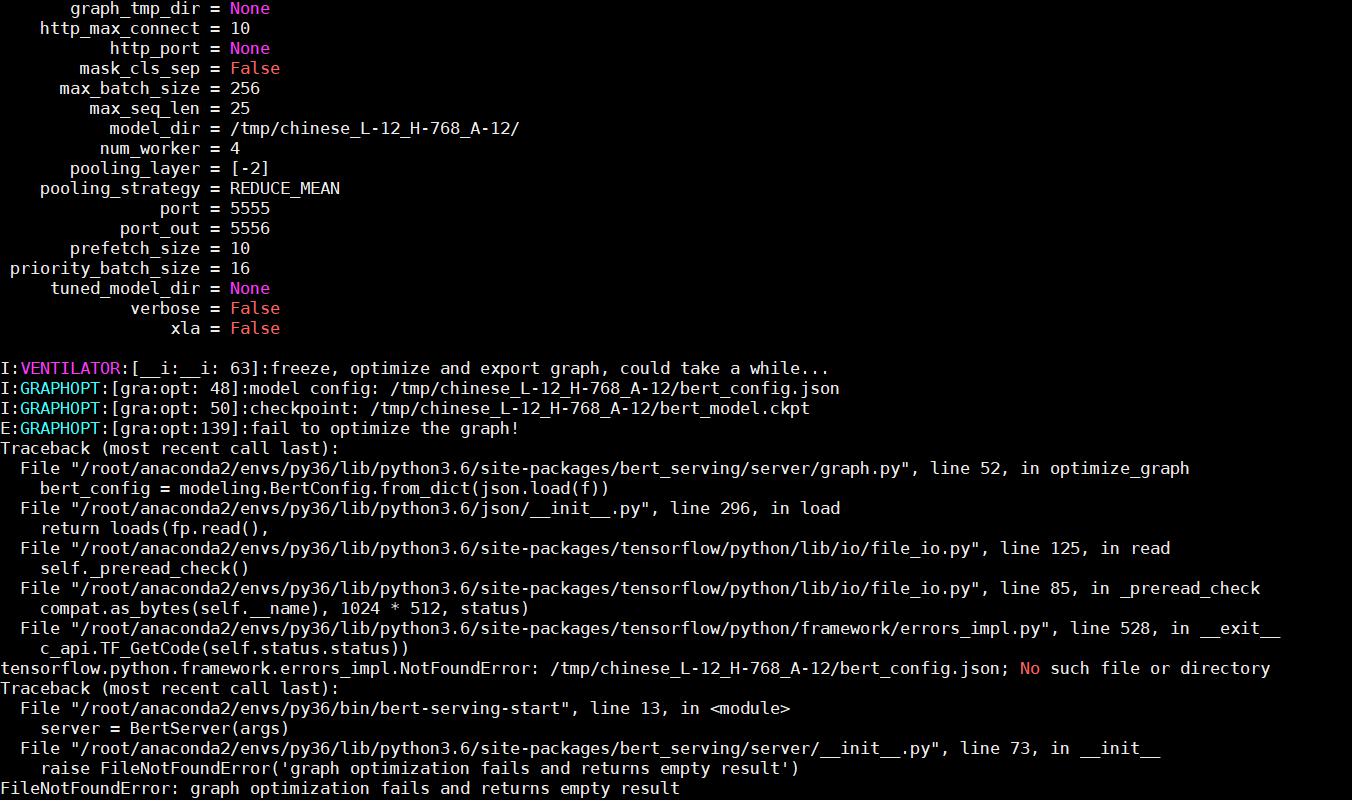

E:GRAPHOPT:[gra:opt:139]:fail to optimize the graph!

|

[**Prerequisites**]

> Please fill in by replacing `[ ]` with `[x]`.

* [√ ] Are you running the latest `bert-as-service`?

* [√ ] Did you follow [the installation](https://github.com/hanxiao/bert-as-service#install) and [the usage](https://github.com/hanxiao/bert-as-service#usage) instructions in `README.md`?

* [ √] Did you check the [FAQ list in `README.md`](https://github.com/hanxiao/bert-as-service#speech_balloon-faq)?

* [√ ] Did you perform [a cursory search on existing issues](https://github.com/hanxiao/bert-as-service/issues)?

**System information**

> Some of this information can be collected via [this script](https://github.com/tensorflow/tensorflow/tree/master/tools/tf_env_collect.sh).

- OS Platform and Distribution :Linux mobaXterm

- TensorFlow installed from (source or binary):pip install tensorflow-gpu & pip install --upgrade tensorflow-gpu

- TensorFlow version:1.12

- Python version:3.6

- `bert-as-service` version: pip install -U

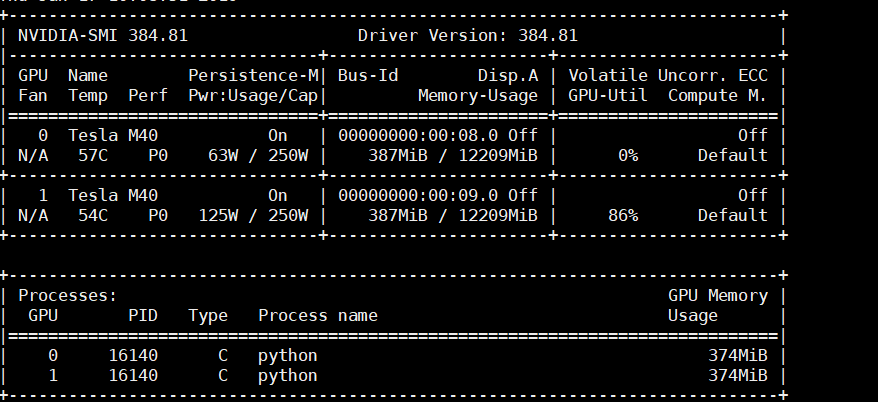

- GPU model and memory:

- CPU model and memory:

---

### Description

> Please replace `YOUR_SERVER_ARGS` and `YOUR_CLIENT_ARGS` accordingly. You can also write your own description for reproducing the issue.

I'm using this command to start the server:

```bash

bert-serving-start YOUR_SERVER_ARGS

```

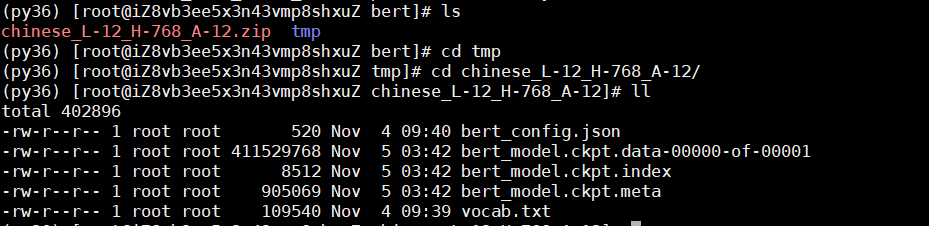

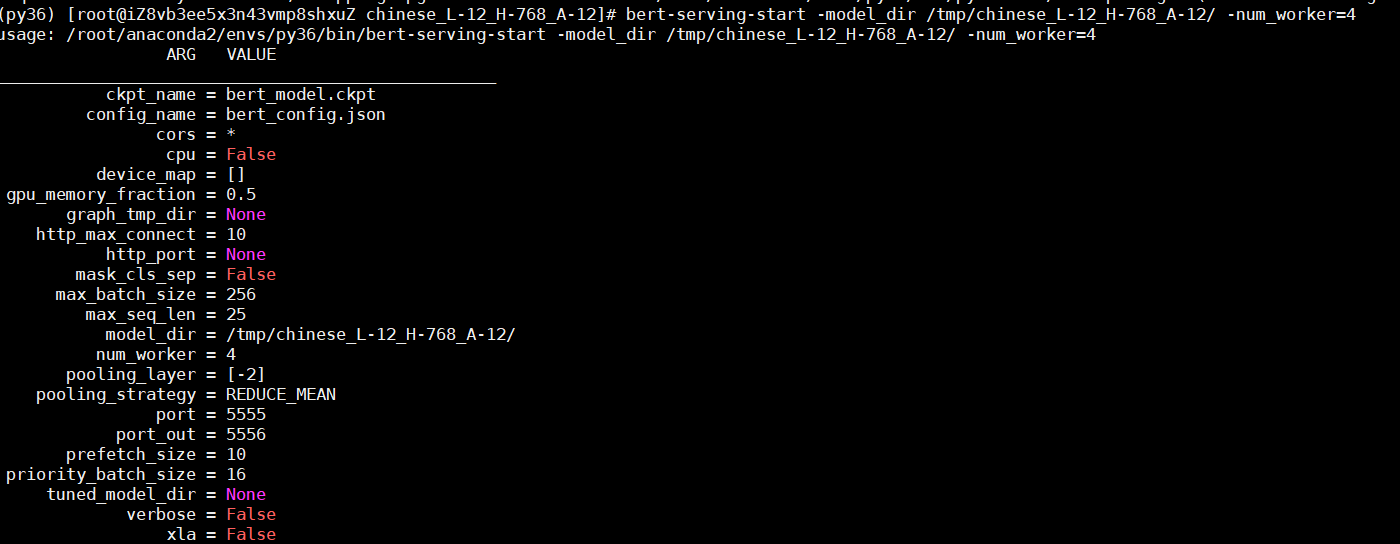

bert-serving-start -model_dir /tmp/chinese_L-12_H-768_A-12/ -num_worker=4

Then this issue shows up:

...

|

closed

|

2019-01-17T02:22:22Z

|

2019-01-17T04:15:20Z

|

https://github.com/jina-ai/clip-as-service/issues/195

|

[] |

HannahXu

| 10

|

coqui-ai/TTS

|

python

| 3,029

|

[Feature request]

|

<!-- Welcome to the 🐸TTS project!

We are excited to see your interest, and appreciate your support! --->

**🚀 Feature Description**

<!--A clear and concise description of what the problem is. Ex. I'm always frustrated when [...] -->

**Solution**

<!-- A clear and concise description of what you want to happen. -->

**Alternative Solutions**

<!-- A clear and concise description of any alternative solutions or features you've considered. -->

**Additional context**

<!-- Add any other context or screenshots about the feature request here. -->

|

closed

|

2023-10-03T18:29:37Z

|

2023-10-03T18:29:53Z

|

https://github.com/coqui-ai/TTS/issues/3029

|

[

"feature request"

] |

Parvezkhan0

| 0

|

widgetti/solara

|

jupyter

| 791

|

AppBar does not play nicely with themes

|

Hi,

I noticed that whenever `solara.AppBar()` is used in an app (or a page of an app), it somehow messes up the theme.

[Example of of things going wrong](https://py.cafe/snippet/solara/v1#c=H4sIALjw6WYAA5VW227bOBD9FUN9SApEgiU7thNAwLaLLvq42C12H5piQUm0xVoiGZJKohb59z0zkhUnvSR1gACc-wzPHOprVJpKRpeRaq1xYeZNI5y40o-OSSOKK32lfxuPpYFWSx2udCW3sz_FTp6-viSLGX63KtQHxzfWvhWOlaSi30PI5EMtW_nB7HaNPJVaFI38T3TB5H-IxsvXDy7HEdlPFP5RzB8YnZ68N608eWp5VMUHeRdOT_6G1aw0OqClE-T91vxFFfy0knd3orWNjNPvlvPdsiaX-Ki2X06Y_XrC7DjhC6b8pjAdbJ8b82j2goC_I70oXxByMrzS0Vnk5HWnHDClgwekD1iGJvSWQD5IcBbW_qPkbXS5JaidRbJS4R0jMLoMroPE9qE2Gj62N5WqZHwzT7LzJIVzI3o0El1-jW6k84qsMiQ3JvxlEPLrIZvD6Swqa9VUTsLo46QJgI8MUN6qKtTRZXo-P4tapf8djovh9F6qXY08dFQV3LaqkW8R1UvHjSst3Q8ykGlcDLYwsYLiRtH9p_uzb6v4QYkPfhhXYvvH3i_o7vmOxgqf6-bQyPfLr8eo6fr8JTmDdBCL5rmkBzvKSn_3ZzxVIOvjJ4DAlHs6UhTgDfavZrXw9eWsmq82c5ldpMUyS7eiWBVVutik8-Vyk23O19srLXSvTJ4vk2Uyx8ntjMbKbbcqz7NFkj4RxoXSldI7D22aZMl89mrWIjsZYS55niYLdvEhmL3UZIfIKSQhODqNMQtR7kvRNHkOKLNAgm7Vtmu86eySCkqzJIO8kaKs83yVzMmsxEEGYxrEOkcuRC6lgyPVO0f0dZLBa2ggTVJsCU61cLieWBvXikZ9kS7PF3Amw0aV-zzfoKo1TqZth4qgqmTR7WxPYaCeGq1kaZwIBjEQnMLj3em8rO5a7mZNInknS7Sjd9Q_N7zFSD57oz3qbwWJM26I7o3ujypCtUtIritNSbn0Ok0paMq3U3et0Cifp8PeqtKItSA3Zfu9dFqiiBWxAzqAiIiD21tRtFEQ76RGdTTDcfpQ3HSIi364peGMsXL7Yy7bgyJ2MsCN5nUOkTdVh2GAd2j85AzDz2AwLvqCT0p_Fhm3RzXRDKxRACpfAkc-ngs6WycLyDrUKl2MCwKkKSO6OhIbh3LP6bofhPKG2JZSr45DYFtBjnwTSDdd5GN1fFgx9ge0JgO8BLHtd8zkpGOAH-mmoVA3Kfxa4faVucVIAMeHY6wC4kxNk7izXmzRB2GEHYNtTGhUESvdgA54ihwDY2Z08Qxb5UNHWgqFsy7KRnivSr6soxZJMYwPnpwVEqPRLkQA6oqAo4st7QUkQNycJdKHWPhel0QNKaZJnibIwhgAdYXxHOUY5bGv1bg8FKJrh9XJOIdBSoeXC1Na484QzgpdmRLoxxQhHeFusahIOcfGYR5W3llZorBlAihNGS1WFtyHpeYBrQmJVjUN8w-65PCNCNRWpSj6ctho6_BxFWrZ-QlWKHewh8qGmJhlryCnyS4xeOtpUWg0F8nmoQLQsjOl9AyIoZ_OSQBQjIxG1fcltTMgL6PmJhDhvnkfbd8yMvD9AK7EFtEk5skFaYaXvg7BDhHZHE-8J4of14uz8EZXIsih0gyzo14HOa1W3OCzdigDxZKqF8RVRKkc9Ut7DSUo5mjIDrQbaK2J_ZCHvmcACy5-wWvutuVisbiI0bNCeiJEwidumzQXm9VTDXI5RVyeLhCTIuBEN0Hsd0hjK89bAgfYTOV4qassODxqkykYvbPjU7AiQkLXXt3xDjBivVZ4BhjB_FAMX1x8ZGYazhM9PBF3_IAcRNhUBXqZnjOPT709TR3xRrqByDUyBAblgml15JSKIY1-4IhHoS-9ByGOTyXGM1jwoqBFFRCFnzewPuLSJwDN5Jt7RmlAb7acb
whdMMN78whKy-Ex6pwCu-FrGr5TWvAJeGYxvU_djQKn4r5ROxq60rcilHVl8II9BgbL-eODcXlMN7clf0OOeEXptxJ00xh--JfDnUMk8c6OHxHEtKgQQo8IeKIPe0l3PFgPCloM5lfIBr7FF8Oh1aFESuh9g3_XjQoSp-j-f9BjlCVTDgAA)

- In dark mode the AppBar tabs are not visible when selected (the tab label color probably coincides with the primary color)

- In light mode the `solara.Text()` elements are not visible even though they are not part of the NavBar component (but they are visible in dark mode).

Here is the same example, but without the NavBar component (commented out)

[Example that "works" without the NavBar component](https://py.cafe/snippet/solara/v1#c=H4sIAHnx6WYAA51WbW_bNhD-K4b6ISkQCZbs2E4AAWuLDv04bMX2oSkGSqIt1hLJkFQStch_33MnWXHatGnnAAHu_YV3z-lLVJpKRpeRaq1xYeZNI5y40o_IpBHFlb7Sv41kaSDVUocrXcnt7A-xk6cvL0ljht-L2a0K9cH0lbWvhWPxIKTfg9vkfS1b-d7sdo08lVoUjfxXdMHkv4vGy5eD0S-qH4dnG1H4KYHvKJyevDOtPDnWOor8Xt6F05O_oDErjQ6o_ASxHqs-G_WH0d_eidY2Mk6_SeHJVCb1-CifXwqU_Vqg7DjQM518VZgOej9q5ajypMbrLgSjT0_eNKrcz_AoZ2h6Y1x-Yp1qheuP7Z5M4A1SFeUzKUxK_z-J6Cxy8rpTDjOpg8cWHdYHktBb2quBA1pY-7eSt9Hllkb1LJKVCm95gqPL4DpwbB9qo2Fje1OpSsY38yQ7T1IYN6JHw6LLL9GNdF6RVobgxoQ_DVx-OURzoM6islZN5SSUPkySgHGUAcJbVYU6ukzP52dRq_Q_A7kYqHdS7WrEIVJVMNuqRr6GVy8dN0xp6b4TgVTjYtCFihXkN4ruP96ffZvFd1J8sEO7Ets_tv6J6p6vaMzwuWoOhTydfj16TdfnPxMzSAe2aJ4LetCjqPR3f8ZdxWR9-IghMOWeSPKCeYP-i1ktfH05q-arzVxmF2mxzNKtKFZFlS426Xy53GSb8_X2SgvdK5Pny2SZzEG5ndFY6e1W5Xm2SNKvmHGhdKX0zkOaJlkyB263iE5K6Euep8mCTTy2ZC816cFzCk4IjqjRZyHKfSmaJs8xysyQgGu17RpvOrukhNIsycBvpCjrPF8lc1IrQchgTANf54gFz6V0MKR85_C-TjJYDQWkSYotAVULh-eJtXGtaNRn6fJ8AWNSpDXO8w2yWoMybTtkBFEli25ne3ID8VRoJUvjRMDGIwFE4FPXeVndtVzNmljyTpYoR--ofi54i5Z88kZ75N8KYmdcEL0bvR9lhGyX4FxXmoJy6nWaktOUX6fuWqGRPneHrVWl4WtBZsr2e-m0RBIrQgdUABYBB5e3Im8jI95Jjeyoh2P3Ibjp4Bf1cEkDjbZy-WMs2wMidjLAjPp1DpY3VYdmAHeo_WQMxU9AME76gimlP4mMy6OcqAfWKAwqPwJ7Pu4LKlsnC_A65CpdjAfCSFNEVHXENg7pntNzPzDlDaEthV4du8C2Ahz5JRBuesjH4viwYmyP0ZoUcD1i2-8YyUnGA34km5pC1aSwwxXYV-YWLcE4PpCxCvAzFU3sznqxRR00I2wYbGNCo4pY6QZwwF1kH2gzTxf3sFU-dCQlV6B1UTbCe1XyYx2VSIKhfbDkqOAYjXLBwqCuaHB0saW9AAcTN2eO9CEWvtclQUOKbpKlCbIwBoO6QnuOYoz82NdqXB5y0bXD6mQcwyCkw-VCl9Z4M7izQlemxPSji-CO426xqAg5x8ahH1beWVkisWWCUZoiWqwssA9LzQ1a0yRa1TSMP6iS3TciUFmVIu_LYaOtw4daqGXnp7FCuoM-RDbEhCx7BT51donGW0-LQq25SDYPGQCWnSml54EY6umcxACKEdEo-76kcobJy6i4aYjw3ryPtm95MvDdAazEFlEn5skFSYZLX4dgB4-sjhPvCeLH9eIovNGVCHLINEPvqNaBT6sVN_gsHtJAsiTqBWEVQSp7_dxeQwiIOWqyA-ziQ2dAP8Sh7xmMBSe_4DV323KxWFzEqFkhPAEizSdemyQXm9XXEsRyirA8XcAneQBFL0HodwhjK89bAgPoTOl4qassOBy1SRWI3tnxFKwIkFC1V3e8AzyxXiucAZ5gPhTDFxeTjEwDPcHDV-yOD8iBhU1VgJfpnHl8Iu6p6_A3wg1YrpEh8FAuGFZH
TKl4pFEPDHEU-tJ7AOJ4KtGeQYMXBSWqAC983oD68EufANSTb94ZqWF6s-V8Q9MFNdybR6O0HI5R5xTQDV_rsJ3CAk-AM4vpPnU3CpiK90buKOhK34pQ1pXBBXs8GMznjw-ey2O4uS35G3KcV6R-KwE3-DimqVkObw6WxJ0dPyIIaZEhmB4ecKIPe0lvPGgPAloMxlfwBrzFF8Oh1CFFCuh9g3_XjQoSVHT_HzXf88fGDgAA)

[Finally, a kind of patch around this is to use `solara.v.AppBar()` instead](https://py.cafe/snippet/solara/v1#c=H4sIAHHz6WYAA6VWbW_bNhD-K4b2ISkQCZbs2E4AAWuLDv04bMX2oSkGWqIt1hLJkFQctch_33MnWXHSZEkxBwjAe33uePdQ36PClDK6jFRjjQsTb2rhxJV-cExqsb7SV_rX4VgYaLXU4UqXcjP5XWzl6ZtLspjg98tkr0J1cH1r7TvhWE3KY9XNYyX97jMmnyrZyE9mu63lqdRiXct_RBtM_puovXxz73Ick_3E2j-I-YzR6clH08iTx5ZHKD7J23B68iesJoXRARWfIO-P5q9C8J9IPtyKxtYyTp-E8ySs0SU-wvbTCbOfT5gdJ3xFl9-uTQvbl9o8mD1r9a4NwejTk_e1KnYTXNwZLqU2Lj-xTjXCdY99nwTzHtBF8Qo4o-H_AxSdRU5et8phlnXw2LTDikETOku710twFtb-peQ-utzQiJ9FslThA09-dBlcC4ntQmU0fGxnSlXK-GaaZOdJCudadGhgdPk9upHOK7LKkNyY8IdByO-HbA6ns6ioVF06CaPPoyZgbGWAcq_KUEWX6fn0LGqU_rs_zvrTR6m2FfLQUZVw26havkNULx03TWnpnslApvG6t4WJFRQ3iu6-3J39iOIZiPd-aFdiu4fer6ju5YoGhC9VcyjkafjVEDVdnr8mZ5AOYlG_lPRgR1np7-6Mu4rJ-vwFQ2CKHR0pCuYN9r9MKuGry0k5XaymMrtI1_Ms3Yj1Yl2ms1U6nc9X2ep8ubnSQnfK5Pk8mSdTnNzWaKz6ZqPyPJsl6SNhvFa6VHrroU2TLJmC-BtkJyP0Jc_TZMYuHluyk5rsEDmFJARHpyHmWhS7QtR1nmOUWSBB82rT1t60dk6A0izJIK-lKKo8XyRTMitwkMGYGrHOkQuRC-ngSHiniL5MMnj1BaRJii3BqRIO1xNr4xpRq2_S5fkMzmRIa5znK6Ba4mSapkcEVSnX7dZ2FAbqsdBSFsaJgI0HAGTg57D1srxtuJolieStLFCO3lL9XPAGLfnqjfbA3wgSZ1wQ3RvdHyEC2jkk16WmpAy9SlMKmvLtVG0jNOBzd9hblRqxZuSmbLeTTkuAWBA7oAKIiDi4vAVFGwTxVmqgox4O3YfipkVc1MMl9We0lcsfctkOFLGVAW7Ur3OIvClbNAO8Q-0nZxh-BYMx6As-Kf1VZFweYaIeWKMwqHwJHPm4L6hsmcwga4FVuhgXhJGmjKjqSGwc4J7Tdd8L5Q2xLaVeHIfAtoIc-SaQbrzIh-r4sGLsj9EaDfCKxLbbMpOTjgf8SDc2hapJ4YdXYFeaPVqCcbw_xiogzlg0iVvrxQZ10IywY7C1CbVax0rXoAPuIsdAm3m6uIeN8qElLYXCWa-LWnivCr6soxJJ0bcPnpwVEqNRLkQY1AUNjl5vaC8gwcRNWSJ9iIXvdEHUkKKb5GmCXBuDQV2gPUc5BnnsKzUsD4Vom351Ms5hkNLh5UKXlrgzhLNCl6bA9KOLkA7jbrGoSDnFxqEfVt5aWQDYPMEojRktVhbch6XmBi1pEq2qa-YfVMnhaxGorFJR9Hm_0dbhoy5UsvXjWAFubw-VDTExy05BTp2do_HW06JQay6S1T0C0LIzhfQ8EH09rZMYQDEwGqHvCiqnn7yMihuHCPfN-2i7hicD3x7gSmwRdWKaXJCmf-mrEGwfkc3xxHui-GG9OAtvdCmC7JFm6B3V2stpteIan9M9DIAlVSeIq4hSOeq35hpKUMxRkx1oFx86PfshD33PYCwY_IzX3G2K2Wx2EaNmhfREiDSfuG3SXKwWjzXI5RRxeTpDTIqAE90Esd8hjS09bwkcYDPC8VKXWXB41EZTMHprh6dgQYSEqr265R3gifVa4RngCeaHov_i4iMzU38e6eGRuOUH5CDCpir
Qy_iceXwm7qjriDfQDUSuliHwUM6YVgdOKXmkUQ8c8Sh0hfcgxOGpRHt6C14UlKgCovDzBtZHXPoEoJ78cM-AhunN5tMVTRfM8N48GKV5_xi1ToHd8BUP3zEt-AQ8Mxvfp_ZGgVNx38COgq70XoSiKg1esIeDwXL--OC5PKabfcHfkMO8Avpegm7wcUxTM-_vHCKJd3b4iCCmBUIIPSLgiT7sJd1xb90raDGYXyHr-RZfDIdSe4iU0Psa_65rFSRO0d2_3WTTx-oOAAA)

However the problem here is that the `theme.js` in [assets](https://solara.dev/documentation/advanced/reference/asset-files) is not respected (I think).

Any advice on this, or is this perhaps a known issue?

Many thanks!

|

open

|

2024-09-17T21:28:30Z

|

2024-09-18T15:05:12Z

|

https://github.com/widgetti/solara/issues/791

|

[] |

JovanVeljanoski

| 2

|

quantmind/pulsar

|

asyncio

| 311

|

passing a TemporaryFile to client.HttpRequest fails

|

* **pulsar version**:

2.0.2

* **python version**:

3.6.5

* **platform**:

Linux/Ubuntu16.04

## Description

Appending a TemporaryFile to a post request fails with this trace:

File "/opt/venv/dc-venv/lib/python3.6/site-packages/pulsar/apps/http/client.py", line 919, in _request

request = HttpRequest(self, url, method, params, **nparams)

File "/opt/venv/dc-venv/lib/python3.6/site-packages/pulsar/apps/http/client.py", line 247, in __init__

self.body = self._encode_body(data, files, json)

File "/opt/venv/dc-venv/lib/python3.6/site-packages/pulsar/apps/http/client.py", line 369, in _encode_body

body, ct = self._encode_files(data, files)

File "/opt/venv/dc-venv/lib/python3.6/site-packages/pulsar/apps/http/client.py", line 407, in _encode_files

fn = guess_filename(v) or k

File "/opt/venv/dc-venv/lib/python3.6/site-packages/pulsar/apps/http/client.py", line 64, in guess_filename

if name and name[0] != '<' and name[-1] != '>':

TypeError: 'int' object is not subscriptable

The problem seems to be that the name of Python's tempfile.TemporaryFile is an int rather than a string.

The documentation of io.FileIO says:

> The name can be one of two things:

>

> a character string or bytes object representing the path to the file which will be opened. In this case closefd must be True (the default) otherwise an error will be raised.

> an integer representing the number of an existing OS-level file descriptor to which the resulting FileIO object will give access. When the FileIO object is closed this fd will be closed as well, unless closefd is set to False.

As a user of pulsar the issue can be prevented by using NamedTemporaryFile instead of TemporaryFile.
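One way a library could guard against this is to inspect the name only when it is a string. The sketch below is an assumption about how such a check might look, not pulsar's actual code; `safe_guess_filename` is a hypothetical helper that mirrors the condition shown in the traceback.

```python
import os
from tempfile import NamedTemporaryFile, TemporaryFile


def safe_guess_filename(obj):
    """Return a basename for a file-like object, or None if it has no usable name."""
    name = getattr(obj, "name", None)
    # TemporaryFile may expose an integer file descriptor as .name,
    # so only string names are inspected.
    if isinstance(name, str) and name and name[0] != "<" and name[-1] != ">":
        return os.path.basename(name)
    return None


with TemporaryFile() as anonymous, NamedTemporaryFile(suffix=".txt") as named:
    # Fall back to the key of the files dict (here "file.txt")
    # when no name can be guessed.
    anonymous_name = safe_guess_filename(anonymous) or "file.txt"
    named_name = safe_guess_filename(named) or "file.txt"
```

With a check like this, an integer name simply falls through to the key of the `files` dict instead of raising a TypeError.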

## Expected behaviour

A TemporaryFile (which has no name) is a file-like object, so it should be possible to pass it to a request via the 'files' parameter.

(This is possible with the 'requests' library.)

<!-- What is the behaviour you expect? -->

## Actual behaviour

guess_filename fails during encoding the file because the filename is an int

<!-- What's actually happening? -->

## Steps to reproduce

```

from pulsar.apps import http
from tempfile import TemporaryFile
import asyncio


async def request():
    with TemporaryFile() as tmpFile:
        tmpFile.write('hello world'.encode())
        tmpFile.seek(0)
        sessions = http.HttpClient()
        await sessions.post(
            'http://google.com',
            files={'file.txt': tmpFile}
        )

asyncio.get_event_loop().run_until_complete(request())

```

|

open

|

2018-08-09T16:38:11Z

|

2018-08-09T16:42:12Z

|

https://github.com/quantmind/pulsar/issues/311

|

[] |

DavHau

| 0

|

taverntesting/tavern

|

pytest

| 404

|

Need support for handling images(jpg/png) in request's data section for REST API

|

Tavern currently does not support placing an image in the data section of a request. According to the source code, it accepts only "json", "yaml", and "yml" files. Could you please let me know how to do this?

**pytest.ini:**

I included the image in the .ini file because I was not able to reference it directly.

`[pytest]

tavern-global-cfg=

metro.jpg`

**Sample Test:**

```

test_name: Verify POST HPS_Insurance_Card API with 200 OK Status and its response parameters

stages:
  - name: Upload file and data
    request:
      url: "http://localhost:5000/card/extract/from_image"
      method: POST
      data:
        card_image: "metro.jpg"
        subscriber_name: "MARY SAMPLE"
    response:
      status_code: 200

```

Executing this test throws the following exception.

```

C:\Venkatesh\TestingMetrics&TestCases\InsuranceCardAPITesting\Aug12>pytest -v

============================= test session starts =============================

platform win32 -- Python 2.7.16, pytest-4.3.1, py-1.8.0, pluggy-0.9.0 -- c:\python27\python.exe

cachedir: .pytest_cache

metadata: {'Python': '2.7.16', 'Platform': 'Windows-10-10.0.16299', 'Packages': {'py': '1.8.0', 'pytest': '4.3.1', 'pluggy': '0.9.0'}, 'JAVA_HOME': 'C:\\Program Files\\Java\\jdk-11.0.1', 'Plugins': {'tavern': '0.26.2', 'html': '1.20.0', 'metadata': '1.8.0'}}

rootdir: C:\Venkatesh\TestingMetrics&TestCases\InsuranceCardAPITesting\Aug12, inifile: pytest.ini

plugins: tavern-0.26.2, metadata-1.8.0, html-1.20.0

collected 1 item

test_hps_analytic_cloud_api_knowledge_base_concepts.tavern.yaml::Verify POST HPS_Insurance_Card API with 200 OK Status and its response parameters FAILED [100%]

================================== FAILURES ===================================

_ C:\Venkatesh\TestingMetrics&TestCases\InsuranceCardAPITesting\Aug12\test_hps_analytic_cloud_api_knowledge_base_concepts.tavern.yaml::Verify POST HPS_Insurance_Card API with 200 OK Status and its response parameters _

cls = <class '_pytest.runner.CallInfo'>

func = <function <lambda> at 0x0000000007AB00B8>, when = 'call'

reraise = (<class '_pytest.outcomes.Exit'>, <type 'exceptions.KeyboardInterrupt'>)

@classmethod

def from_call(cls, func, when, reraise=None):

#: context of invocation: one of "setup", "call",

#: "teardown", "memocollect"

start = time()

excinfo = None

try:

> result = func()

c:\python27\lib\site-packages\_pytest\runner.py:226:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

> lambda: ihook(item=item, **kwds), when=when, reraise=reraise

)

c:\python27\lib\site-packages\_pytest\runner.py:198:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <_HookCaller 'pytest_runtest_call'>, args = ()

kwargs = {'item': <YamlItem Verify POST HPS_Insurance_Card API with 200 OK Status and its response parameters>}

notincall = set([])

def __call__(self, *args, **kwargs):

if args:

raise TypeError("hook calling supports only keyword arguments")

assert not self.is_historic()

if self.spec and self.spec.argnames:

notincall = (

set(self.spec.argnames) - set(["__multicall__"]) - set(kwargs.keys())

)

if notincall:

warnings.warn(

"Argument(s) {} which are declared in the hookspec "

"can not be found in this hook call".format(tuple(notincall)),

stacklevel=2,

)

> return self._hookexec(self, self.get_hookimpls(), kwargs)

c:\python27\lib\site-packages\pluggy\hooks.py:289:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <_pytest.config.PytestPluginManager object at 0x00000000059C4B38>

hook = <_HookCaller 'pytest_runtest_call'>

methods = [<HookImpl plugin_name='runner', plugin=<module '_pytest.runner' from 'c:\python27\lib\site-packages\_pytest\runner.py...9EAC88>>, <HookImpl plugin_name='logging-plugin', plugin=<_pytest.logging.LoggingPlugin object at 0x0000000007A84518>>]

kwargs = {'item': <YamlItem Verify POST HPS_Insurance_Card API with 200 OK Status and its response parameters>}

def _hookexec(self, hook, methods, kwargs):

# called from all hookcaller instances.

# enable_tracing will set its own wrapping function at self._inner_hookexec

> return self._inner_hookexec(hook, methods, kwargs)

c:\python27\lib\site-packages\pluggy\manager.py:68:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

hook = <_HookCaller 'pytest_runtest_call'>

methods = [<HookImpl plugin_name='runner', plugin=<module '_pytest.runner' from 'c:\python27\lib\site-packages\_pytest\runner.py...9EAC88>>, <HookImpl plugin_name='logging-plugin', plugin=<_pytest.logging.LoggingPlugin object at 0x0000000007A84518>>]

kwargs = {'item': <YamlItem Verify POST HPS_Insurance_Card API with 200 OK Status and its response parameters>}

self._inner_hookexec = lambda hook, methods, kwargs: hook.multicall(

methods,

kwargs,

> firstresult=hook.spec.opts.get("firstresult") if hook.spec else False,

)

c:\python27\lib\site-packages\pluggy\manager.py:62:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

hook_impls = [<HookImpl plugin_name='runner', plugin=<module '_pytest.runner' from 'c:\python27\lib\site-packages\_pytest\runner.py...9EAC88>>, <HookImpl plugin_name='logging-plugin', plugin=<_pytest.logging.LoggingPlugin object at 0x0000000007A84518>>]

caller_kwargs = {'item': <YamlItem Verify POST HPS_Insurance_Card API with 200 OK Status and its response parameters>}

firstresult = False

def _multicall(hook_impls, caller_kwargs, firstresult=False):

"""Execute a call into multiple python functions/methods and return the

result(s).

``caller_kwargs`` comes from _HookCaller.__call__().

"""

__tracebackhide__ = True

results = []

excinfo = None

try: # run impl and wrapper setup functions in a loop

teardowns = []

try:

for hook_impl in reversed(hook_impls):

try:

args = [caller_kwargs[argname] for argname in hook_impl.argnames]

except KeyError:

for argname in hook_impl.argnames:

if argname not in caller_kwargs:

raise HookCallError(

"hook call must provide argument %r" % (argname,)

)

if hook_impl.hookwrapper:

try:

gen = hook_impl.function(*args)

next(gen) # first yield

teardowns.append(gen)

except StopIteration:

_raise_wrapfail(gen, "did not yield")

else:

res = hook_impl.function(*args)

if res is not None:

results.append(res)

if firstresult: # halt further impl calls

break

except BaseException:

excinfo = sys.exc_info()

finally:

if firstresult: # first result hooks return a single value

outcome = _Result(results[0] if results else None, excinfo)

else:

outcome = _Result(results, excinfo)

# run all wrapper post-yield blocks

for gen in reversed(teardowns):

try:

gen.send(outcome)

_raise_wrapfail(gen, "has second yield")

except StopIteration:

pass

> return outcome.get_result()

c:\python27\lib\site-packages\pluggy\callers.py:208:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <pluggy.callers._Result object at 0x0000000007AB3EF0>

def get_result(self):

"""Get the result(s) for this hook call.

If the hook was marked as a ``firstresult`` only a single value

will be returned otherwise a list of results.

"""

__tracebackhide__ = True

if self._excinfo is None:

return self._result

else:

ex = self._excinfo

if _py3:

raise ex[1].with_traceback(ex[2])

> _reraise(*ex) # noqa

c:\python27\lib\site-packages\pluggy\callers.py:81:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

hook_impls = [<HookImpl plugin_name='runner', plugin=<module '_pytest.runner' from 'c:\python27\lib\site-packages\_pytest\runner.py...9EAC88>>, <HookImpl plugin_name='logging-plugin', plugin=<_pytest.logging.LoggingPlugin object at 0x0000000007A84518>>]

caller_kwargs = {'item': <YamlItem Verify POST HPS_Insurance_Card API with 200 OK Status and its response parameters>}

firstresult = False

def _multicall(hook_impls, caller_kwargs, firstresult=False):

"""Execute a call into multiple python functions/methods and return the

result(s).

``caller_kwargs`` comes from _HookCaller.__call__().

"""

__tracebackhide__ = True

results = []

excinfo = None

try: # run impl and wrapper setup functions in a loop

teardowns = []

try:

for hook_impl in reversed(hook_impls):

try:

args = [caller_kwargs[argname] for argname in hook_impl.argnames]

except KeyError:

for argname in hook_impl.argnames:

if argname not in caller_kwargs:

raise HookCallError(

"hook call must provide argument %r" % (argname,)

)

if hook_impl.hookwrapper:

try:

gen = hook_impl.function(*args)

next(gen) # first yield

teardowns.append(gen)

except StopIteration:

_raise_wrapfail(gen, "did not yield")

else:

> res = hook_impl.function(*args)

c:\python27\lib\site-packages\pluggy\callers.py:187:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

item = <YamlItem Verify POST HPS_Insurance_Card API with 200 OK Status and its response parameters>

def pytest_runtest_call(item):

_update_current_test_var(item, "call")

sys.last_type, sys.last_value, sys.last_traceback = (None, None, None)

try:

> item.runtest()

c:\python27\lib\site-packages\_pytest\runner.py:123:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <YamlItem Verify POST HPS_Insurance_Card API with 200 OK Status and its response parameters>

def runtest(self):

> self.global_cfg = load_global_cfg(self.config)

c:\python27\lib\site-packages\tavern\testutils\pytesthook\item.py:136:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

args = (<_pytest.config.Config object at 0x0000000006B3F390>,), kwds = {}

key = (<_pytest.config.Config object at 0x0000000006B3F390>,), link = None

def wrapper(*args, **kwds):

# size limited caching that tracks accesses by recency

key = make_key(args, kwds, typed) if kwds or typed else args

with lock:

link = cache_get(key)

if link is not None:

# record recent use of the key by moving it to the front of the list

root, = nonlocal_root

link_prev, link_next, key, result = link

link_prev[NEXT] = link_next

link_next[PREV] = link_prev

last = root[PREV]

last[NEXT] = root[PREV] = link

link[PREV] = last

link[NEXT] = root

stats[HITS] += 1

return result

> result = user_function(*args, **kwds)

c:\python27\lib\site-packages\backports\functools_lru_cache.py:137:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

pytest_config = <_pytest.config.Config object at 0x0000000006B3F390>

@lru_cache()

def load_global_cfg(pytest_config):

"""Load globally included config files from cmdline/cfg file arguments

Args:

pytest_config (pytest.Config): Pytest config object

Returns:

dict: variables/stages/etc from global config files

Raises:

exceptions.UnexpectedKeysError: Invalid settings in one or more config

files detected

"""

# Load ini first

ini_global_cfg_paths = pytest_config.getini("tavern-global-cfg") or []

# THEN load command line, to allow overwriting of values

cmdline_global_cfg_paths = pytest_config.getoption("tavern_global_cfg") or []

all_paths = ini_global_cfg_paths + cmdline_global_cfg_paths

> global_cfg = load_global_config(all_paths)

c:\python27\lib\site-packages\tavern\testutils\pytesthook\util.py:73:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

global_cfg_paths = ['metroplus.jpg']

def load_global_config(global_cfg_paths):

"""Given a list of file paths to global config files, load each of them and

return the joined dictionary.

This does a deep dict merge.

Args:

global_cfg_paths (list(str)): List of filenames to load from

Returns:

dict: joined global configs

"""

global_cfg = {}

if global_cfg_paths:

logger.debug("Loading global config from %s", global_cfg_paths)

for filename in global_cfg_paths:

with open(filename, "r") as gfileobj:

> contents = yaml.load(gfileobj, Loader=IncludeLoader)

c:\python27\lib\site-packages\tavern\util\general.py:28:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

stream = <closed file 'metroplus.jpg', mode 'r' at 0x000000000799C8A0>

Loader = <class 'tavern.util.loader.IncludeLoader'>

def load(stream, Loader=None):

"""

Parse the first YAML document in a stream

and produce the corresponding Python object.

"""

if Loader is None:

load_warning('load')

Loader = FullLoader

> loader = Loader(stream)

c:\python27\lib\site-packages\yaml\__init__.py:112:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <tavern.util.loader.IncludeLoader object at 0x0000000007AB3F60>

stream = <closed file 'metroplus.jpg', mode 'r' at 0x000000000799C8A0>

def __init__(self, stream):

"""Initialise Loader."""

try:

self._root = os.path.split(stream.name)[0]

except AttributeError:

self._root = os.path.curdir

> Reader.__init__(self, stream)

c:\python27\lib\site-packages\tavern\util\loader.py:116:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <tavern.util.loader.IncludeLoader object at 0x0000000007AB3F60>

stream = <closed file 'metroplus.jpg', mode 'r' at 0x000000000799C8A0>

def __init__(self, stream):

self.name = None

self.stream = None

self.stream_pointer = 0

self.eof = True

self.buffer = u''

self.pointer = 0

self.raw_buffer = None

self.raw_decode = None

self.encoding = None

self.index = 0

self.line = 0

self.column = 0

if isinstance(stream, unicode):

self.name = "<unicode string>"

self.check_printable(stream)

self.buffer = stream+u'\0'

elif isinstance(stream, str):

self.name = "<string>"

self.raw_buffer = stream

self.determine_encoding()

else:

self.stream = stream

self.name = getattr(stream, 'name', "<file>")

self.eof = False

self.raw_buffer = ''

> self.determine_encoding()

c:\python27\lib\site-packages\yaml\reader.py:87:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <tavern.util.loader.IncludeLoader object at 0x0000000007AB3F60>

def determine_encoding(self):

while not self.eof and len(self.raw_buffer) < 2:

self.update_raw()

if not isinstance(self.raw_buffer, unicode):

if self.raw_buffer.startswith(codecs.BOM_UTF16_LE):

self.raw_decode = codecs.utf_16_le_decode

self.encoding = 'utf-16-le'

elif self.raw_buffer.startswith(codecs.BOM_UTF16_BE):

self.raw_decode = codecs.utf_16_be_decode

self.encoding = 'utf-16-be'

else:

self.raw_decode = codecs.utf_8_decode

self.encoding = 'utf-8'

> self.update(1)

c:\python27\lib\site-packages\yaml\reader.py:137:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <tavern.util.loader.IncludeLoader object at 0x0000000007AB3F60>

length = 1

def update(self, length):

if self.raw_buffer is None:

return

self.buffer = self.buffer[self.pointer:]

self.pointer = 0

while len(self.buffer) < length:

if not self.eof:

self.update_raw()

if self.raw_decode is not None:

try:

data, converted = self.raw_decode(self.raw_buffer,

'strict', self.eof)

except UnicodeDecodeError, exc:

character = exc.object[exc.start]

if self.stream is not None:

position = self.stream_pointer-len(self.raw_buffer)+exc.start

else:

position = exc.start

raise ReaderError(self.name, position, character,

> exc.encoding, exc.reason)

E ReaderError: 'utf8' codec can't decode byte #xff: invalid start byte

E in "metroplus.jpg", position 0

c:\python27\lib\site-packages\yaml\reader.py:170: ReaderError

============================== warnings summary ===============================

c:\python27\lib\site-packages\tavern\testutils\pytesthook\item.py:47

c:\python27\lib\site-packages\tavern\testutils\pytesthook\item.py:47: FutureWarning: Tavern will drop support for Python 2 in a future release, please switch to using Python 3 (see https://docs.pytest.org/en/latest/py27-py34-deprecation.html)

FutureWarning,

-- Docs: https://docs.pytest.org/en/latest/warnings.html

==================== 1 failed, 1 warnings in 1.95 seconds =====================

```
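The traceback shows `global_cfg_paths = ['metroplus.jpg']`, so a JPEG file was handed to the YAML loader as a global config file, and the reader failed on its very first byte (`#xff`). A minimal sketch of a pre-check that separates YAML-looking paths from everything else before loading; `split_config_paths` and the accepted suffix set are hypothetical and not part of Tavern:

```python
from pathlib import Path

# Assumption: global config files are YAML; this suffix set is illustrative only.
YAML_SUFFIXES = {".yaml", ".yml"}


def split_config_paths(paths):
    """Separate paths that look like YAML config files from everything else."""
    accepted, rejected = [], []
    for raw in paths:
        suffix = Path(raw).suffix.lower()
        (accepted if suffix in YAML_SUFFIXES else rejected).append(raw)
    return accepted, rejected


accepted, rejected = split_config_paths(["common.yaml", "metroplus.jpg"])
print("load:", accepted)
print("skip:", rejected)
```

A check like this would only surface the misconfiguration earlier; the underlying fix is presumably to stop passing the image path as a global config file.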

|

closed

|

2019-08-12T11:33:53Z

|

2019-08-30T14:58:40Z

|

https://github.com/taverntesting/tavern/issues/404

|

[] |

kvenkat88

| 2

|

youfou/wxpy

|

api

| 442

|

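Unable to receive group messages

|

After logging in with the WeChat bot, I found that I can receive messages from friends and official accounts, but not group messages. However, when I log in to the WeChat web version in a browser, I can receive group messages, so I would like to ask whether something is wrong with the API.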

|

open

|

2020-02-26T04:14:27Z

|

2020-02-26T04:14:27Z

|

https://github.com/youfou/wxpy/issues/442

|

[] |

ROC-D

| 0

|

chatanywhere/GPT_API_free

|

api

| 131

|

[Enhancement] Please support calling the new gpt-3.5-turbo (gpt-3.5-turbo-1106) with the free key

|

Please support calling the new gpt-3.5-turbo (gpt-3.5-turbo-1106) with the free key

|

closed

|

2023-11-09T07:53:53Z

|

2023-11-09T08:47:06Z

|

https://github.com/chatanywhere/GPT_API_free/issues/131

|

[] |

kiritoko1029

| 0

|

huggingface/datasets

|

deep-learning

| 6,481

|

using torchrun, save_to_disk suddenly shows SIGTERM

|

### Describe the bug

When I run my code with the "torchrun" command and execution reaches the "save_to_disk" call, I suddenly get the warning and error messages below.

Because the dataset is very large, the "save_to_disk" function splits it into 70 shards for saving. The error occurs when it reaches the 14th shard.

WARNING: torch.distributed.elastic.multiprocessing.api: Sending process 2224968 closing signal SIGTERM

ERROR: torch.distributed.elastic.multiprocessing.api: failed (exitcode: -7). traceback: Signal 7 (SIGBUS) received by PID 2224967.

### Steps to reproduce the bug

ds_shard = ds_shard.map(map_fn, *args, **kwargs)

ds_shard.save_to_disk(ds_shard_filepaths[rank])

Saving the dataset (14/70 shards): 20%|██ | 875350/4376702 [00:19<01:53, 30863.15 examples/s]

WARNING:torch.distributed.elastic.multiprocessing.api:Sending process 2224968 closing signal SIGTERM

ERROR:torch.distributed.elastic.multiprocessing.api:failed (exitcode: -7) local_rank: 0 (pid: 2224967) of binary: /home/bingxing2/home/scx6964/.conda/envs/ariya235/bin/python

Traceback (most recent call last):

File "/home/bingxing2/home/scx6964/.conda/envs/ariya235/bin/torchrun", line 8, in <module>

sys.exit(main())

File "/home/bingxing2/home/scx6964/.conda/envs/ariya235/lib/python3.10/site-packages/torch/distributed/elastic/multiprocessing/errors/__init__.py", line 346, in wrapper

return f(*args, **kwargs)

File "/home/bingxing2/home/scx6964/.conda/envs/ariya235/lib/python3.10/site-packages/torch/distributed/run.py", line 794, in main

run(args)

File "/home/bingxing2/home/scx6964/.conda/envs/ariya235/lib/python3.10/site-packages/torch/distributed/run.py", line 785, in run

elastic_launch(

File "/home/bingxing2/home/scx6964/.conda/envs/ariya235/lib/python3.10/site-packages/torch/distributed/launcher/api.py", line 134, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "/home/bingxing2/home/scx6964/.conda/envs/ariya235/lib/python3.10/site-packages/torch/distributed/launcher/api.py", line 250, in launch_agent

raise ChildFailedError(

torch.distributed.elastic.multiprocessing.errors.ChildFailedError:

==========================================================

run.py FAILED

----------------------------------------------------------

Failures:

<NO_OTHER_FAILURES>

----------------------------------------------------------

Root Cause (first observed failure):

[0]:

time : 2023-12-08_20:09:04

rank : 0 (local_rank: 0)

exitcode : -7 (pid: 2224967)

error_file: <N/A>

traceback : Signal 7 (SIGBUS) received by PID 2224967
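The launcher reports `exitcode: -7`. Under the convention used by Python's `subprocess` module, a negative exit code `-N` means the child was terminated by signal `N`, which matches the "Signal 7 (SIGBUS)" line in the log. A small sketch for decoding such codes; `describe_exit_code` is a hypothetical helper, not part of torch or `datasets`, and signal numbers differ between platforms:

```python
import signal


def describe_exit_code(code):
    """Return a readable description of a process exit code.

    Negative values follow the subprocess convention: -N means the child
    was terminated by signal N.
    """
    if code >= 0:
        return f"exited with status {code}"
    try:
        name = signal.Signals(-code).name
    except ValueError:
        # The number does not correspond to a signal known on this platform.
        name = f"unknown signal {-code}"
    return f"killed by {name}"


print(describe_exit_code(-int(signal.SIGTERM)))
```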

### Expected behavior

I hope it can save successfully without any issues, but it seems there is a problem.

### Environment info

`datasets` version: 2.14.6

- Platform: Linux-4.19.90-24.4.v2101.ky10.aarch64-aarch64-with-glibc2.28

- Python version: 3.10.11

- Huggingface_hub version: 0.17.3

- PyArrow version: 14.0.0

- Pandas version: 2.1.2

|

open

|

2023-12-08T13:22:03Z

|

2023-12-08T13:22:03Z

|

https://github.com/huggingface/datasets/issues/6481

|

[] |

Ariya12138

| 0

|

GibbsConsulting/django-plotly-dash

|

plotly

| 205

|

Handling dash.exceptions.PreventUpdate

|

Dash allows the use of this special exception, `dash.exceptions.PreventUpdate`, when no components need updating, even though a callback is triggered.

Because callbacks are triggered on page load, I use this `PreventUpdate` feature quite a bit.

They are raised during this line in `dash_wrapper.py`:

```

res = self.callback_map[target_id]['callback'](*args, **argMap)

```

and never handled. Because they aren't handled, I get a 500, traceback and a bad console log, even though everything is pretty much behaving as expected. It would be nice if you could catch this specific error and return without raising anything, as whenever this is used, it is meant to be there.

I had a look at your code, but I'm not sure where exactly I'd best catch the error, and what I'd return to basically say 'do nothing here, but everything is OK'.

Complete traceback example:

```

Internal Server Error: /resources/app/buzzword/_dash-update-component

Traceback (most recent call last):

File "/home/danny/venv/py3.7/lib/python3.7/site-packages/django/core/handlers/exception.py", line 34, in inner

response = get_response(request)

File "/home/danny/venv/py3.7/lib/python3.7/site-packages/django/core/handlers/base.py", line 115, in _get_response

response = self.process_exception_by_middleware(e, request)

File "/home/danny/venv/py3.7/lib/python3.7/site-packages/django/core/handlers/base.py", line 113, in _get_response

response = wrapped_callback(request, *callback_args, **callback_kwargs)

File "/home/danny/venv/py3.7/lib/python3.7/site-packages/django/views/decorators/csrf.py", line 54, in wrapped_view

return view_func(*args, **kwargs)

File "/home/danny/venv/py3.7/lib/python3.7/site-packages/django_plotly_dash/views.py", line 93, in update

resp = app.dispatch_with_args(request_body, arg_map)

File "/home/danny/venv/py3.7/lib/python3.7/site-packages/django_plotly_dash/dash_wrapper.py", line 562, in dispatch_with_args

res = self.callback_map[target_id]['callback'](*args, **argMap)

File "/home/danny/venv/py3.7/lib/python3.7/site-packages/dash/dash.py", line 1366, in add_context

raise exceptions.PreventUpdate

dash.exceptions.PreventUpdate

[03/Dec/2019 14:59:44] "POST /resources/app/buzzword/_dash-update-component HTTP/1.1" 500 136760

```
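One way the request above could be handled is to catch the exception around the callback call and return an empty "nothing to update" result. A minimal sketch with stand-ins: the local `PreventUpdate` class substitutes for `dash.exceptions.PreventUpdate`, and `run_callback` with its `(body, status)` tuple is hypothetical, not django-plotly-dash's API. The 204 status is an assumption modelled on how plain Dash appears to report a prevented update:

```python
class PreventUpdate(Exception):
    """Stand-in for dash.exceptions.PreventUpdate so the sketch runs alone."""


def run_callback(callback, *args, **kwargs):
    """Call a callback and translate PreventUpdate into an empty 204 result."""
    try:
        return callback(*args, **kwargs), 200
    except PreventUpdate:
        # Nothing to update: report success with no body instead of a 500.
        return None, 204


def noisy_on_page_load(value):
    """Example callback that declines to update when it has no input yet."""
    if value is None:
        raise PreventUpdate
    return {"children": value}


print(run_callback(noisy_on_page_load, None))
print(run_callback(noisy_on_page_load, "hello"))
```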

|

closed

|

2019-12-03T15:26:59Z

|

2019-12-04T16:15:01Z

|

https://github.com/GibbsConsulting/django-plotly-dash/issues/205

|

[] |

interrogator

| 3

|

christabor/flask_jsondash

|

flask

| 85

|

Clear api results field on modal popup

|

Just to prevent any confusion as to what is loaded there.

|

closed

|

2017-03-01T19:04:52Z

|

2017-03-02T20:28:18Z

|

https://github.com/christabor/flask_jsondash/issues/85

|

[

"enhancement"

] |

christabor

| 0

|

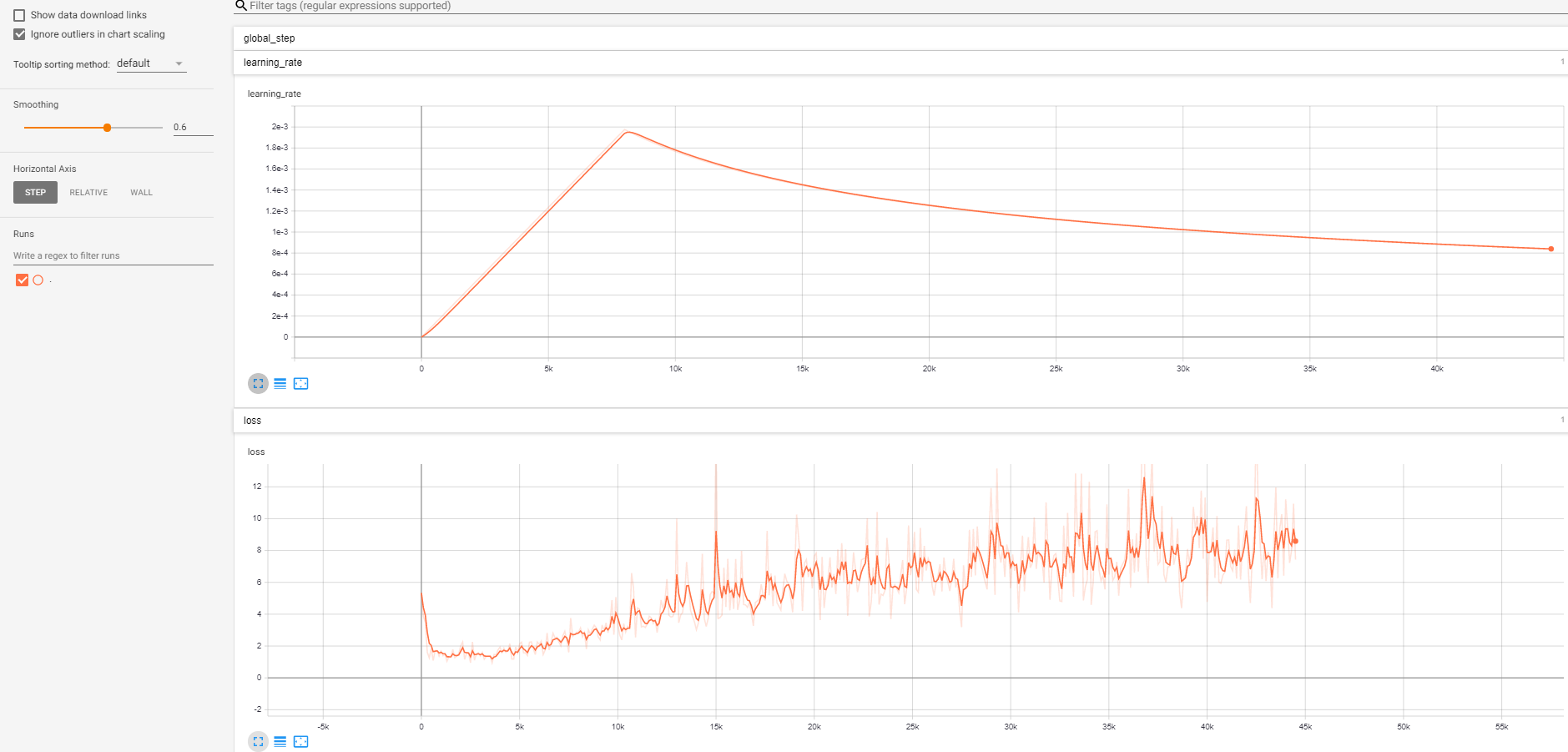

tensorlayer/TensorLayer

|

tensorflow

| 643

|

Tensorboard No scalar data was found and No graph definition files were found

|

### Issue Description

I can't generate graph or scalar data in TensorBoard.

I have written code for a CNN, but I can't understand why it doesn't generate graph or scalar data.

The other error is:

InvalidArgumentError: You must feed a value for placeholder tensor 'y' with dtype float and shape [?,10]

[[Node: y = Placeholder[dtype=DT_FLOAT, shape=[?,10], _device="/job:localhost/replica:0/task:0/device:CPU:0"]()]]

When I take out the expression that creates the table of contents, the placeholder error disappears, but then I cannot create any data for TensorBoard. I cannot find the error in the definition of `y` for the placeholder.

Can someone help me? Thanks in advance.

### Reproducible Code

```python

import numpy as np

import matplotlib.pyplot as plt

import h5py

import os

import tensorflow as tf

from scipy.io import loadmat

from skimage import color

from skimage import io

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import OneHotEncoder

# Set the threshold for which messages are logged.

tf.logging.set_verbosity(tf.logging.INFO)

#%matplotlib inline

# Set the default figure size

plt.rcParams['figure.figsize'] = (16.0, 4.0)

"""Building the CNN model for the SVHN image set"""

TENSORBOARD_SUMMARIES_DIR = '/ArqAna/svhn_classifier_logs'

"""Loading the data"""

# Open the file

anadate = h5py.File('SVHN_dados.h5', 'r')

# Load the training, test and validation sets

X_treino = anadate['X_treino'][:]

y_treino = anadate['y_treino'][:]

X_teste = anadate['X_teste'][:]

y_teste = anadate['y_teste'][:]

X_val = anadate['X_val'][:]

y_val = anadate['y_val'][:]

# Close the file

anadate.close()

print('Conjunto de treinamento', X_treino.shape, y_treino.shape)

print('Conjunto de validação', X_val.shape, y_val.shape)

print('Conjunto de teste', X_teste.shape, y_teste.shape)

def prepare_log_dir():

'''Clears the log files and creates new directories to hold

the TensorBoard log file.'''

if tf.gfile.Exists(TENSORBOARD_SUMMARIES_DIR):

tf.gfile.DeleteRecursively(TENSORBOARD_SUMMARIES_DIR)

tf.gfile.MakeDirs(TENSORBOARD_SUMMARIES_DIR)

# Using placeholders

comp = 32*32

x = tf.placeholder(tf.float32, shape = [None, 32, 32, 1], name='Input_Data')

y = tf.placeholder(tf.float32, shape = [None, 10], name='Input_Labels')

y_cls = tf.argmax(y, 1)

discard_rate = tf.placeholder(tf.float32, name='Discard_rate')

os.environ['TF_CPP_MIN_LOG_LEVEL']='2'

def cnn_model_fn(features):

"""Model function for the CNN."""

# Input layer

# SVHN images are 32x32 pixels with 1 color channel

input_layer = tf.reshape(features, [-1, 32, 32, 1])

# Convolutional layer #1

# Uses 32 filters extracting 5x5-pixel regions with ReLU activation

# With padding to preserve width and height (so the output does not "shrink").

# Input Tensor Shape: [batch_size, 32, 32, 1]

# Output Tensor Shape: [batch_size, 32, 32, 32]

conv1 = tf.layers.conv2d(

inputs=input_layer,

filters=32,

kernel_size=[5, 5],

padding="same",

activation=tf.nn.relu)

# Pooling layer #1

# First max pooling layer with a 2x2 filter and a stride of 2

# Input Tensor Shape: [batch_size, 32, 32, 32]

# Output Tensor Shape: [batch_size, 16, 16, 32]

pool1 = tf.layers.max_pooling2d(inputs=conv1, pool_size=[2, 2], strides=2)

# Convolutional layer #2

# Computes 64 features using a 5x5 filter

# With padding to preserve width and height (so the output does not "shrink").

# Input Tensor Shape: [batch_size, 16, 16, 32]

# Output Tensor Shape: [batch_size, 16, 16, 64]

conv2 = tf.layers.conv2d(

inputs=pool1,

filters=64,

kernel_size=[5, 5],

padding="same",

activation=tf.nn.relu)

# Pooling layer #2

# Second max pooling layer with a 2x2 filter and a stride of 2

# Input Tensor Shape: [batch_size, 16, 16, 64]

# Output Tensor Shape: [batch_size, 8, 8, 64]

pool2 = tf.layers.max_pooling2d(inputs=conv2, pool_size=[2, 2], strides=2)

# Flatten the tensor into a batch of vectors

# Input Tensor Shape: [batch_size, 8, 8, 64]

# Output Tensor Shape: [batch_size, 8 * 8 * 64]

pool2_flat = tf.reshape(pool2, [-1, 8 * 8 * 64])

# Dense layer

# Densely connected layer with 1024 neurons

# Input Tensor Shape: [batch_size, 8 * 8 * 64]

# Output Tensor Shape: [batch_size, 1024]

dense = tf.layers.dense(inputs=pool2_flat, units=1024, activation=tf.nn.relu)

# Add dropout operation; 0.6 probability that the element will be kept

dropout = tf.layers.dropout(

inputs=dense, rate=discard_rate)

# Logits layer

# Input Tensor Shape: [batch_size, 1024]

# Output Tensor Shape: [batch_size, 10]

logits = tf.layers.dense(inputs=dropout, units=10)

return logits

max_epochs = 1

num_examples = X_treino.shape[0]

# Computing the prediction, optimization and accuracy

with tf.name_scope('Model_Prediction'):

prediction = cnn_model_fn(x)

tf.summary.scalar('Model_Prediction', prediction)

prediction_cls = tf.argmax(prediction, 1)

with tf.name_scope('loss'):

loss = tf.reduce_mean(tf.losses.softmax_cross_entropy(

onehot_labels=y, logits=prediction))

tf.summary.scalar('loss', loss)

with tf.name_scope('Adam_optimizer'):

optimizer = tf.train.AdamOptimizer().minimize(loss)

# Check whether the predicted class equals the true class of each image

correct_prediction = tf.equal(prediction_cls, y_cls)

# Cast the prediction to float and compute the mean

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

t_summary = tf.summary.merge_all()

# Open the TensorFlow session and save the file

sess = tf.Session()

summary_writer = tf.summary.FileWriter('/ArqAna/summary', sess.graph)

sess.run(tf.global_variables_initializer())

saver = tf.train.Saver()

pasta = 'ArqAna/'

if not os.path.exists(pasta):

os.makedirs(pasta)

diret = os.path.join(pasta, 'ana_svhn')

val_summary = sess.run(t_summary)

summary_writer.add_summary(val_summary)

summary_writer = tf.train.SummaryWriter('/ArqAna/svhn_classifier_logs',sess.graph)

#saver.restore(sess=session, save_path=diret)

# Initializing

## Number of examples in each batch used to update the weights

batch_size = 100

# Fraction of neurons to discard in training mode

discard_per = 0.7

#with tf.Session() as sess:

# sess.run(tf.global_variables_initializer())

def get_batch(X, y, batch_size=100):

for i in np.arange(0, y.shape[0], batch_size):

end = min(X.shape[0], i + batch_size)

yield(X[i:end],y[i:end])

# Running the training

treino_loss = []

valid_loss = []

for epoch in range(max_epochs):

print ('Treinando a rede')

epoch_loss = 0

print ()

print ('Epoca ', epoch+1 , ': \n')

step = 0

## Training over the epochs

for (epoch_x , epoch_y) in get_batch(X_treino, y_treino, batch_size):

_, treino_accu, c = sess.run([optimizer, accuracy, loss], feed_dict={x: epoch_x, y: epoch_y, discard_rate: discard_per})

treino_loss.append(c)

if(step%40 == 0):

print ("Passo:", step, "\n", "\nMini-Batch Loss : ", c)

print('Mini-Batch Acuracia :' , treino_accu*100.0, '%')

## Validating the prediction and the summaries

accu = 0.0

for (epoch_x , epoch_y) in get_batch(X_val, y_val, 100):

correct, _c = sess.run([correct_prediction, loss], feed_dict={x: epoch_x, y: epoch_y, discard_rate: 0.0})

valid_loss.append(_c)

accu+= np.sum(correct[correct == True])

print('Validação Acuracia :' , accu*100.0/y_val.shape[0], '%')

print ()

step = step + 1

print ('Epoca', epoch+1, 'completado em', max_epochs)

## Testing the prediction and the summaries

accu = 0.0

for (epoch_x , epoch_y) in get_batch(X_teste, y_teste, 100):

correct = sess.run([correct_prediction], feed_dict={x: epoch_x, y: epoch_y, discard_rate: 0.0})

accu+= np.sum(correct[correct == True])

print('Teste Acuracia :' , accu*100.0/y_teste.shape[0], '%')

print ()

# Define a function to plot random images from the image set

def plot_images(images, nrows, ncols, cls_true, cls_pred=None):

# Initialize the subplot grid

fig, axes = plt.subplots(nrows, ncols)

# Randomly select nrows * ncols images

rs = np.random.choice(images.shape[0], nrows*ncols)

# For each axes object in the grid

for i, ax in zip(rs, axes.flat):

# No predictions were passed

if cls_pred is None:

title = "True: {0}".format(np.argmax(cls_true[i]))

# When predictions are passed, show labels + predictions

else:

title = "True: {0}, Pred: {1}".format(np.argmax(cls_true[i]), cls_pred[i])

# Show the image

ax.imshow(images[i,:,:,0], cmap='binary')

# Annotate the image

ax.set_title(title)

# Do not overwrite the grid

ax.set_xticks([])

ax.set_yticks([])

# Plotting the training images

plot_images(X_treino, 2, 4, y_treino);

# Evaluate the test data

teste_pred = []

for (epoch_x , epoch_y) in get_batch(X_teste, y_teste, 100):

correct = sess.run([prediction_cls], feed_dict={x: epoch_x, y: epoch_y, discard_rate: 0.0})

teste_pred.append((np.asarray(correct, dtype=int)).T)

# Convert the list of lists into a NumPy array

def flatten(lists):

results = []

for numbers in lists:

for x in numbers:

results.append(x)

return np.asarray(results)

flat_array = flatten(teste_pred)

flat_array = (flat_array.T)

flat_array = flat_array[0]

flat_array.shape

# Plotting the misclassified results

incorrect = flat_array != np.argmax(y_teste, axis=1)

images = X_teste[incorrect]

cls_true = y_teste[incorrect]

cls_pred = flat_array[incorrect]

plot_images(images, 2, 6, cls_true, cls_pred);

# Plotting correctly classified results from a random sample of the test set

correct = np.invert(incorrect)

images = X_teste[correct]

cls_true = y_teste[correct]

cls_pred = flat_array[correct]

plot_images(images, 2, 6, cls_true, cls_pred);

# Plotting the training and validation loss

import matplotlib.pyplot as plt

plt.plot(treino_loss ,'r')

plt.plot(valid_loss, 'g')

prepare_log_dir()

# Saving the ArqAna file

saver.save(sess = sess, save_path = diret)

```

|

closed

|

2018-05-21T11:17:54Z

|

2018-05-21T20:39:38Z

|

https://github.com/tensorlayer/TensorLayer/issues/643

|

[] |

AnaValeriaS

| 1

|

modelscope/data-juicer

|

data-visualization

| 175

|

[MM] audio duration filter

|

1. Support audio reading better.

2. Add a Filter based on the duration of audios.

|

closed

|

2024-01-12T07:09:42Z

|

2024-01-17T09:57:16Z

|

https://github.com/modelscope/data-juicer/issues/175

|

[

"enhancement",

"dj:multimodal",

"dj:op"

] |

HYLcool

| 0

|

LAION-AI/Open-Assistant

|

python

| 3,707

|

Bug chat

|

Hi, I cannot write a chat in Open Assistant. Is this normal?

|

closed

|

2023-10-04T19:20:18Z

|

2023-11-28T07:34:09Z

|

https://github.com/LAION-AI/Open-Assistant/issues/3707

|

[] |

NeWZekh

| 3

|

aio-libs/aiomysql

|

sqlalchemy

| 92

|

How to get the log for sql

|

How to get the log for sql statement

|

closed

|

2016-08-03T14:57:52Z

|

2017-04-17T10:33:46Z

|

https://github.com/aio-libs/aiomysql/issues/92

|

[] |

631068264

| 5

|

pydata/xarray

|

pandas

| 9,539

|

Creating DataTree from DataArrays

|

### What is your issue?

Original issue can be found here: https://github.com/xarray-contrib/datatree/issues/320

## Creating DataTree from DataArrays

What are the best ways to create a DataTree from DataArrays?

I don't usually work with Dataset objects, but rather with DataArray objects. I want the option to re-use the (physics-motivated) arithmetic throughout my code, but manipulating multiple DataArrays at once. I initially thought about combining those DataArrays into a Dataset, but then learned that is not really a sufficient solution (more details [here](https://github.com/pydata/xarray/issues/8787)). DataTree seems like a better solution!

The only issue I am having right now is that DataTree seems to be adding more complexity than I need, because it is converting everything into Datasets, instead of allowing me to have a tree of DataArrays. This is not a deal-breaker for me, however it definitely increases the mental load of using the DataTree code. One thing that would help _significantly_ would be an easy way to create a DataTree from a list or iterable of arrays.

Here are my suggestions. Please let me know if there are already easy ways to do this which I just didn't find yet!

## 1 - Improve DataTree.from_dict

There is currently `DataTree.from_dict`, however this fails for unnamed DataArrays (when using `datatree.__version__=='0.0.14'`). For example:

```python

import numpy as np

import xarray as xr

import datatree as xrd

# generate some 3D data

nx, ny, nz = (32, 32, 32)

dx, dy, dz = (0.1, 0.1, 0.1)

coords=dict(x=np.arange(nx)*dx, y=np.arange(ny)*dy, z=np.arange(nz)*dz)

array = xr.DataArray(np.random.random((nx,ny,nz)), coords=coords)

# take some 2D slices of the 3D data

arrx = array.isel(x=0)

arry = array.isel(y=0)

arrz = array.isel(z=0)

# combine those slices into a single object so we can apply operations to all arrays at once,

# using the same interface & code for applying operations to a single DataArray!

tree = xrd.DataTree.from_dict(dict(x0=arrx, y0=arry, z0=arrz))

```

This fails, with: `ValueError: unable to convert unnamed DataArray to a Dataset without providing an explicit name`

However, it will instead succeed if I give names to the arrays:

```python

arrx.name = 'x0'

arry.name = 'y0'

arrz.name = 'z0'

tree = xrd.DataTree.from_dict(dict(x0=arrx, y0=arry, z0=arrz))

```

I suggest improving the `DataTree.from_dict` method so that it succeeds when given unnamed DataArrays. In that case it should construct the Dataset from each DataArray, using the key provided to from_dict as the DataArray.name for any DataArray without a name.
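The suggested behaviour can be sketched independently of DataTree: fill in a missing name from the dictionary key before the conversion to a Dataset. `name_unnamed` is hypothetical; it relies only on each array having a `name` attribute and a `rename(new_name)` method, which `xarray.DataArray` provides. A tiny stand-in class (`FakeArray`, also hypothetical) is used so the sketch runs without xarray installed:

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class FakeArray:
    """Stand-in exposing only the two DataArray features the sketch needs."""
    name: Optional[str] = None

    def rename(self, new_name):
        # Like DataArray.rename, return a new object instead of mutating.
        return replace(self, name=new_name)


def name_unnamed(arrays):
    """Return a new mapping where unnamed arrays take their key as name."""
    return {
        key: arr if arr.name is not None else arr.rename(key)
        for key, arr in arrays.items()
    }


named = name_unnamed({"x0": FakeArray(), "y0": FakeArray(name="temperature")})
print({key: arr.name for key, arr in named.items()})
```

Arrays that already carry a name keep it, so only the unnamed case changes behaviour.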

## 2 - Add a DataTree.from_arrays method

Ideally, I would like to be able to do something like (using arrays from the example above):

```python

tree = xrd.DataTree.from_arrays([arrx, arry, arrz])

```

This should work for any list of named arrays, and can use the array names to infer keys for the resulting DataTree. It will probably be easy to implement, I am thinking something like this:

```python

@classmethod
def from_arrays(cls, arrays):
    # getattr takes the attribute name as a string
    if any(getattr(arr, "name", None) is None for arr in arrays):
        raise Exception('from_arrays requires all arrays to have names!')
    # use each array's own name as its key in the tree
    d = {arr.name: arr for arr in arrays}
    return cls.from_dict(d)

```

## 3 - Allow DataTree data to be DataArrays instead of requiring that they are Datasets

This would be excellent for me as an end-user, however I am guessing it would be really difficult to implement. I would understand if this is not feasible!

|

open

|

2024-09-23T19:00:26Z

|

2024-09-23T19:28:14Z

|

https://github.com/pydata/xarray/issues/9539

|

[

"design question",

"topic-DataTree"

] |

eni-awowale

| 1

|

microsoft/JARVIS

|

deep-learning

| 48

|

Error when start web server.

|

```

(jarvis) m200@DESKTOP-K0LFJU8:~/workdir/JARVIS/web$ npm run dev

> vue3-ts-vite-router-tailwindcss@0.0.0 dev

> vite

file:///home/m200/workdir/JARVIS/web/node_modules/vite/bin/vite.js:7

await import('source-map-support').then((r) => r.default.install())

^^^^^

SyntaxError: Unexpected reserved word

at Loader.moduleStrategy (internal/modules/esm/translators.js:133:18)

at async link (internal/modules/esm/module_job.js:42:21)

```

Any idea?

|

closed

|

2023-04-05T16:56:29Z

|

2023-04-07T06:18:28Z

|

https://github.com/microsoft/JARVIS/issues/48

|

[] |

lychees

| 2

|

graphql-python/graphene-django

|

graphql

| 943

|

Date type not accepting ISO DateTime string.

|

This causes error

But this works fine

Is it a bug or feature?

|

closed

|

2020-04-21T05:46:15Z

|

2020-04-25T13:13:35Z

|

https://github.com/graphql-python/graphene-django/issues/943

|

[] |

a-c-sreedhar-reddy

| 1

|

redis/redis-om-python

|

pydantic

| 656

|

Unable to declare a Vector-Field for a JsonModel

|

## The Bug

I'm trying to declare a Field as a Vector-Field by setting the `vector_options` on the `Field`.

Pydantic is then forcing me to annotate the field with a proper type.

But with any possible type for a vector, I always get errors.

The one that I think should definitely work is `list[float]`, but this results in

`AttributeError: type object 'float' has no attribute '__origin__'`.

### Example

```python

class Group(JsonModel):

articles: List[Article]

tender_text: str = Field(index=False)

tender_embedding: list[float] = Field(

index=True,

vector_options=VectorFieldOptions(

algorithm=VectorFieldOptions.ALGORITHM.FLAT,

type=VectorFieldOptions.TYPE.FLOAT32,

dimension=3,

distance_metric=VectorFieldOptions.DISTANCE_METRIC.COSINE

))

```

## No Documentation for Vector-Fields?

There seems to be no documentation about this feature.

I just found a merge-request which describes some features a bit, but there is no example, test or any documentation about this.

|

open

|

2024-09-17T09:58:41Z

|

2024-10-05T14:59:26Z

|

https://github.com/redis/redis-om-python/issues/656

|

[] |

MarkusPorti

| 2

|

sqlalchemy/sqlalchemy

|

sqlalchemy

| 10,888

|

OrderingList typing incorrect / incomplete and implementation doesn't accept non-int indices

|

### Describe the bug

There are a number of issues with the sqlalchemy.ext.orderinglist:OrderingList type hints and implementation:

1. The ordering function is documented as being allowed to return any type of object, and the tests demonstrate this by returning strings. The type annotation, however, requires it to return integers only.

2. The `OrderingList` signature marks the `ordering_attr` as optional, but accepting `None` here would lead to a type error when `getattr(entity, self.ordering_attr)` is called.

3. List objects accept any `SupportsIndex` type object, one that implements the [`.__index__()` method](https://docs.python.org/3/reference/datamodel.html#object.__index__). The codebase assumes it is always an integer (`count_from_1()` and `count_from_n_factory()` use addition with the value, which `SupportsIndex` objects don't need to support). It's probably best to use `operator.index()` on the value before passing it to the ordering function.

4. The `ordering_list()` function ignores the `_T` type variable in the `OrderingFunc` and `OrderingList` signatures.

5. The `OrderingFunc.ordering_func` annotation ignores the `_T` type variable in the `OrderingFunc` signature.

6. List objects accept slice assignment from any iterable, e.g. `listobj[start:stop] = values_generator()`. `OrderingList.__setitem__` with a slice object instead assumes the value is a sequence (supporting indexing), does not adjust the list length when the slice is assigned fewer or more elements, and does not handle slices with a stride other than 1. This issue is mostly mitigated by the SQLAlchemy collections instrumentation, which wraps `OrderingList.__setitem__` with an implementation that handles slices *almost* correctly, only assuming a sequence when slices with a stride are used. However, there may be situations where `OrderingList` is used without this instrumentation. The internal implementation of `list.__setitem__()` converts the passed-in object to a list first, consuming the iterable; `OrderingList.__setitem__()` should do the same, and it should also handle slice strides.

7. There are no type annotations for the `count_from_*` utility functions or the overridden list methods.
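To make points 3 and 6 concrete, here is a minimal sketch of the behavior described. It is not the actual `OrderingList` code and not the planned PR. `SketchOrderingList` and `one_based` are names invented for this example, and the ordering function takes only the index, which is a simplification. The idea is to normalize single indices with `operator.index()` before doing arithmetic on them, and to consume the iterable into a list before a slice assignment so that `list.__setitem__` can handle length changes and strides.

```python
import operator
from typing import Any, Callable


def one_based(index: int) -> int:
    """Hypothetical ordering function that needs integer arithmetic."""
    return index + 1


class SketchOrderingList(list):
    """Illustrative stand-in only, not SQLAlchemy's OrderingList."""

    def __init__(
        self,
        ordering_attr: str,
        ordering_func: Callable[[int], Any] = one_based,
    ) -> None:
        super().__init__()
        self.ordering_attr = ordering_attr
        self.ordering_func = ordering_func

    def _renumber(self) -> None:
        # Reassign the ordering attribute of every entity from its position.
        for position, entity in enumerate(self):
            setattr(entity, self.ordering_attr, self.ordering_func(position))

    def __setitem__(self, index, entity):
        if isinstance(index, slice):
            # Consume the iterable once, as list does internally; the base
            # class then handles grown or shrunk slices and strides.
            super().__setitem__(index, list(entity))
            self._renumber()
        else:
            # Accept any SupportsIndex object, but work with a plain int.
            position = operator.index(index)
            super().__setitem__(position, entity)
            if position < 0:
                position += len(self)
            setattr(entity, self.ordering_attr, self.ordering_func(position))
```

With this sketch, the slice assignments from the reproduction below (`ol[:] = (Item() for _ in range(3))`, `ol[:0] = [Item()]`, `ol[:] = []`) behave like they do on a plain list.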

### SQLAlchemy Version in Use

2.0.25

### Python Version

3.11

### Operating system

Linux

### To Reproduce

```python

# point 2, ordering_attr is not optional

from sqlalchemy.ext.orderinglist import OrderingList

class Item:
    position = None

ol = OrderingList()

ol.append(Item())

"""

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File ".../sqlalchemy/lib/sqlalchemy/ext/orderinglist.py", line 339, in append

self._order_entity(len(self) - 1, entity, self.reorder_on_append)

File "...//sqlalchemy/lib/sqlalchemy/ext/orderinglist.py", line 327, in _order_entity

have = self._get_order_value(entity)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "...//sqlalchemy/lib/sqlalchemy/ext/orderinglist.py", line 308, in _get_order_value

return getattr(entity, self.ordering_attr)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

TypeError: attribute name must be string, not 'NoneType'

"""

# point 6, slice assignment of iterable items

ol = OrderingList('position')

ol[:] = (Item() for _ in range(3))

"""

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "...//sqlalchemy/lib/sqlalchemy/ext/orderinglist.py", line 375, in __setitem__

self.__setitem__(i, entity[i])

~~~~~~^^^

TypeError: 'generator' object is not subscriptable

"""

# point 6, slice assignment length not matching item length

ol = OrderingList('position')

ol.append(Item())

# assign 1 item to a slice of length 0

ol[:0] = [Item()]

assert len(ol) == 2 # assertion error, length is 1

# assign 0 items to a slice of length 1

ol[:] = []

"""

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File ".../sqlalchemy/lib/sqlalchemy/ext/orderinglist.py", line 375, in __setitem__

self.__setitem__(i, entity[i])

~~~~~~^^^

IndexError: list index out of range

"""

```

### Additional context

I'll be providing a PR that addresses all these issues.