| repo_name (string, length 9 to 75) | topic (string, 30 classes) | issue_number (int64, 1 to 203k) | title (string, length 1 to 976) | body (string, length 0 to 254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, length 38 to 105) | labels (list, length 0 to 9) | user_login (string, length 1 to 39) | comments_count (int64, 0 to 452) |
|---|---|---|---|---|---|---|---|---|---|---|---|

gradio-app/gradio

|

python

| 9,942

|

Component refresh log flooding issue

|

### Describe the bug

When I use `gr.Textbox` in Gradio with `every=1`, the console keeps printing refresh logs. This floods the logs, so no other useful messages can be seen. I want to keep `every=1` on the component without it writing INFO logs, but I couldn't find a solution. Could you help me solve it?

### Have you searched existing issues? 🔎

- [X] I have searched and found no existing issues

### Reproduction

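```python
import gradio as gr


def func():
    # Hypothetical placeholder: return the latest server log text
    return "log output..."


with gr.Blocks() as demo:
    with gr.Row():
        with gr.Column():
            server_output = gr.Textbox(
                label="",
                lines=20,
                value=func,
                show_copy_button=True,
                elem_classes="log-container",
                every=1,  # Refresh every 1 second
                autoscroll=True,
            )

demo.launch()
```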
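The issue doesn't say which logger prints the per-second lines. A minimal sketch, assuming they are INFO records from a named Python logger (`httpx` and `uvicorn.access` below are guesses; check the logger name in your own console output), is to raise only that logger's level before launching the app:

```python
import logging

# Assumption: these are the loggers printing the per-second lines.
# Replace them with the logger name shown in your own console output.
NOISY_LOGGERS = ("httpx", "uvicorn.access")


def silence_info_logs(logger_names=NOISY_LOGGERS, level=logging.WARNING):
    """Drop records below `level` (e.g. INFO) from the named loggers only."""
    for name in logger_names:
        logging.getLogger(name).setLevel(level)


silence_info_logs()  # call this before demo.launch()
```

Other loggers keep their levels, so your own INFO messages still appear. If the lines persist, the library may reconfigure its logger when the server starts, or the messages may come from a different logger.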

### Screenshot

_No response_

### Logs

_No response_

### System Info

```shell

Yes, I have used the update command to update Gradio

```

### Severity

I can work around it

|

open

|

2024-11-12T07:30:38Z

|

2024-11-14T22:27:44Z

|

https://github.com/gradio-app/gradio/issues/9942

|

[

"bug"

] |

diya-he

| 3

|

onnx/onnx

|

deep-learning

| 5,783

|

[Resize Spec] roi is not used to compute the output shape

|

# Bug Report

### Describe the bug

In the spec of [resize](https://onnx.ai/onnx/operators/onnx__Resize.html#resize-19), each dimension value of the output tensor is:

`output_dimension = floor(input_dimension * (roi_end - roi_start) * scale)`

However, the actual [shape inference code](https://github.com/onnx/onnx/blob/5be7f3164ba0b2c323813264ceb0ae7e929d2350/onnx/defs/tensor/utils.cc#L336C18-L336C18) doesn't reference the ROI:

`input_shape.dim(i).dim_value()) * scales_data[i])`

Likewise, none of the execution providers (EPs) in ONNX Runtime appear to use `roi` when computing the output shape.
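A numeric example makes the discrepancy concrete. This is a minimal plain-Python sketch. It assumes `roi` uses the ONNX layout `[start_1, ..., start_N, end_1, ..., end_N]` with normalized coordinates. Per the operator docs, `roi` only takes effect with `coordinate_transformation_mode="tf_crop_and_resize"`. `inferred_output_shape` only approximates the linked C++ line, which floors `dim * scale`:

```python
import math
from typing import List, Optional, Sequence


def spec_output_shape(input_shape: Sequence[int], scales: Sequence[float],
                      roi: Optional[Sequence[float]] = None) -> List[int]:
    """floor(input_dimension * (roi_end - roi_start) * scale), per the spec text.

    roi=None means the full range (start 0, end 1) on every axis.
    """
    n = len(input_shape)
    starts, ends = (roi[:n], roi[n:]) if roi is not None else ([0.0] * n, [1.0] * n)
    return [math.floor(d * (e - s) * sc)
            for d, s, e, sc in zip(input_shape, starts, ends, scales)]


def inferred_output_shape(input_shape: Sequence[int], scales: Sequence[float]) -> List[int]:
    """Roughly what the shape-inference line computes: roi is ignored."""
    return [math.floor(d * sc) for d, sc in zip(input_shape, scales)]


shape, scales = [1, 3, 10, 10], [1.0, 1.0, 2.0, 2.0]
roi = [0, 0, 0.25, 0.25, 1, 1, 0.75, 0.75]  # crop the central half of H and W
print(spec_output_shape(shape, scales, roi))  # [1, 3, 10, 10]
print(inferred_output_shape(shape, scales))   # [1, 3, 20, 20]
```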

|

closed

|

2023-11-30T21:12:37Z

|

2025-01-29T06:43:46Z

|

https://github.com/onnx/onnx/issues/5783

|

[

"bug",

"module: shape inference",

"stale"

] |

zhangxiang1993

| 1

|

aleju/imgaug

|

deep-learning

| 48

|

OSError: [Errno 2] No such file or directory

|

I ran `sudo -H python -m pip install imgaug-0.2.4.tar.gz` to install imgaug with the pip of my Homebrew Python (in the Cellar folder), and got the following error.

I also tried `pip install imgaug-0.2.4.tar.gz`, but it seems to use the Python 2.7.10 that ships with macOS. However, my OpenCV is installed for the Python 2.7.11 in the Cellar folder.

How can I solve this problem?

----

```
Processing ./imgaug-0.2.4.tar.gz
Error [Errno 2] No such file or directory while executing command python setup.py egg_info
Exception:
Traceback (most recent call last):
  File "/usr/local/lib/python2.7/site-packages/pip/basecommand.py", line 215, in main
    status = self.run(options, args)
  File "/usr/local/lib/python2.7/site-packages/pip/commands/install.py", line 335, in run
    wb.build(autobuilding=True)
  File "/usr/local/lib/python2.7/site-packages/pip/wheel.py", line 749, in build
    self.requirement_set.prepare_files(self.finder)
  File "/usr/local/lib/python2.7/site-packages/pip/req/req_set.py", line 380, in prepare_files
    ignore_dependencies=self.ignore_dependencies))
  File "/usr/local/lib/python2.7/site-packages/pip/req/req_set.py", line 634, in _prepare_file
    abstract_dist.prep_for_dist()
  File "/usr/local/lib/python2.7/site-packages/pip/req/req_set.py", line 129, in prep_for_dist
    self.req_to_install.run_egg_info()
  File "/usr/local/lib/python2.7/site-packages/pip/req/req_install.py", line 439, in run_egg_info
    command_desc='python setup.py egg_info')
  File "/usr/local/lib/python2.7/site-packages/pip/utils/__init__.py", line 667, in call_subprocess
    cwd=cwd, env=env)
  File "/usr/local/Cellar/python/2.7.11/Frameworks/Python.framework/Versions/2.7/lib/python2.7/subprocess.py", line 710, in __init__
    errread, errwrite)
  File "/usr/local/Cellar/python/2.7.11/Frameworks/Python.framework/Versions/2.7/lib/python2.7/subprocess.py", line 1335, in _execute_child
    raise child_exception
OSError: [Errno 2] No such file or directory
```
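The traceback shows pip from `/usr/local/lib/python2.7/site-packages` spawning a subprocess through the Cellar 2.7.11 framework, so more than one interpreter is involved. One way to keep pip, imgaug and OpenCV on the same interpreter is to run pip as a module of that exact interpreter. This is a hedged suggestion. `pip_install_command` is a hypothetical helper. Run the script with the Homebrew Python that has OpenCV; which binary that is on your machine is an assumption you should verify:

```python
import sys


def pip_install_command(package, interpreter=sys.executable):
    """Build a pip command bound to one specific Python interpreter."""
    return [interpreter, "-m", "pip", "install", package]


print(pip_install_command("imgaug-0.2.4.tar.gz"))
```

Pass the list to `subprocess.check_call` to run it. The shell equivalent is `/path/to/that/python -m pip install imgaug-0.2.4.tar.gz`.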

|

open

|

2017-07-24T04:01:03Z

|

2017-07-24T16:44:17Z

|

https://github.com/aleju/imgaug/issues/48

|

[] |

universewill

| 1

|

iusztinpaul/energy-forecasting

|

streamlit

| 22

|

[TypeError] Error while running Feature-pipeline with Airflow

|

Hi guys, I'm getting an error while running the feature pipeline on Airflow. I followed the instructions at https://github.com/iusztinpaul/energy-forecasting#run and got stuck here. The logs look like this:

[2023-10-26, 21:48:13 +07] {process_utils.py:182} INFO - Executing cmd: /tmp/venvyb53_u_a/bin/python /tmp/venvyb53_u_a/script.py /tmp/venvyb53_u_a/script.in /tmp/venvyb53_u_a/script.out /tmp/venvyb53_u_a/string_args.txt /tmp/venvyb53_u_a/termination.log

[2023-10-26, 21:48:13 +07] {process_utils.py:186} INFO - Output:

[2023-10-26, 21:48:18 +07] {process_utils.py:190} INFO - INFO:__main__:export_end_datetime = 2023-10-26 14:46:59

[2023-10-26, 21:48:18 +07] {process_utils.py:190} INFO - INFO:__main__:days_delay = 15

[2023-10-26, 21:48:18 +07] {process_utils.py:190} INFO - INFO:__main__:days_export = 30

[2023-10-26, 21:48:18 +07] {process_utils.py:190} INFO - INFO:__main__:url = https://drive.google.com/uc?export=download&id=1y48YeDymLurOTUO-GeFOUXVNc9MCApG5

[2023-10-26, 21:48:18 +07] {process_utils.py:190} INFO - INFO:__main__:feature_group_version = 1

[2023-10-26, 21:48:18 +07] {process_utils.py:190} INFO - INFO:feature_pipeline.pipeline:Extracting data from API.

[2023-10-26, 21:48:18 +07] {process_utils.py:190} INFO - WARNING:feature_pipeline.etl.extract:We clapped 'export_end_reference_datetime' to 'datetime(2023, 6, 30) + datetime.timedelta(days=days_delay)' as the dataset will not be updated starting from July 2023. The dataset will expire during 2023. Check out the following link for more information: https://www.energidataservice.dk/tso-electricity/ConsumptionDE35Hour

[2023-10-26, 21:48:18 +07] {process_utils.py:190} INFO - INFO:feature_pipeline.etl.extract:Data already downloaded at: /opt/***/dags/output/data/ConsumptionDE35Hour.csv

[2023-10-26, 21:48:19 +07] {process_utils.py:190} INFO - INFO:feature_pipeline.pipeline:Successfully extracted data from API.

[2023-10-26, 21:48:19 +07] {process_utils.py:190} INFO - INFO:feature_pipeline.pipeline:Transforming data.

[2023-10-26, 21:48:19 +07] {process_utils.py:190} INFO - INFO:feature_pipeline.pipeline:Successfully transformed data.

[2023-10-26, 21:48:19 +07] {process_utils.py:190} INFO - INFO:feature_pipeline.pipeline:Building validation expectation suite.

[2023-10-26, 21:48:19 +07] {process_utils.py:190} INFO - INFO:feature_pipeline.pipeline:Successfully built validation expectation suite.

[2023-10-26, 21:48:19 +07] {process_utils.py:190} INFO - INFO:feature_pipeline.pipeline:Validating data and loading it to the feature store.

[2023-10-26, 21:48:19 +07] {process_utils.py:190} INFO - Connected. Call `.close()` to terminate connection gracefully.

[2023-10-26, 21:48:21 +07] {process_utils.py:190} INFO -

[2023-10-26, 21:48:21 +07] {process_utils.py:190} INFO -

[2023-10-26, 21:48:21 +07] {process_utils.py:190} INFO - UserWarning: The installed hopsworks client version 3.2.0 may not be compatible with the connected Hopsworks backend version 3.4.1.

[2023-10-26, 21:48:21 +07] {process_utils.py:190} INFO - To ensure compatibility please install the latest bug fix release matching the minor version of your backend (3.4) by running 'pip install hopsworks==3.4.*'

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO -

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - Logged in to project, explore it here https://c.app.hopsworks.ai:443/p/140438

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - Connected. Call `.close()` to terminate connection gracefully.

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - Traceback (most recent call last):

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - File "/tmp/venvyb53_u_a/script.py", line 90, in <module>

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - res = run_feature_pipeline(*arg_dict["args"], **arg_dict["kwargs"])

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - File "/tmp/venvyb53_u_a/script.py", line 81, in run_feature_pipeline

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - return pipeline.run(

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - File "/tmp/venvyb53_u_a/lib/python3.9/site-packages/feature_pipeline/pipeline.py", line 61, in run

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - load.to_feature_store(

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - File "/tmp/venvyb53_u_a/lib/python3.9/site-packages/feature_pipeline/etl/load.py", line 24, in to_feature_store

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - feature_store = project.get_feature_store()

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - File "/tmp/venvyb53_u_a/lib/python3.9/site-packages/hopsworks/project.py", line 111, in get_feature_store

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - return connection(

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - File "/tmp/venvyb53_u_a/lib/python3.9/site-packages/hsfs/decorators.py", line 35, in if_connected

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - return fn(inst, *args, **kwargs)

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - File "/tmp/venvyb53_u_a/lib/python3.9/site-packages/hsfs/connection.py", line 178, in get_feature_store

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - return self._feature_store_api.get(util.rewrite_feature_store_name(name))

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - File "/tmp/venvyb53_u_a/lib/python3.9/site-packages/hsfs/core/feature_store_api.py", line 35, in get

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - return FeatureStore.from_response_json(

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - File "/tmp/venvyb53_u_a/lib/python3.9/site-packages/hsfs/feature_store.py", line 109, in from_response_json

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - return cls(**json_decamelized)

[2023-10-26, 21:48:23 +07] {process_utils.py:190} INFO - TypeError: __init__() missing 3 required positional arguments: 'hdfs_store_path', 'featurestore_description', and 'inode_id'

[2023-10-26, 21:48:26 +07] {taskinstance.py:1937} ERROR - Task failed with exception

Traceback (most recent call last):

File "/home/airflow/.local/lib/python3.8/site-packages/airflow/decorators/base.py", line 221, in execute

return_value = super().execute(context)

File "/home/airflow/.local/lib/python3.8/site-packages/airflow/operators/python.py", line 395, in execute

return super().execute(context=serializable_context)

File "/home/airflow/.local/lib/python3.8/site-packages/airflow/operators/python.py", line 192, in execute

return_value = self.execute_callable()

File "/home/airflow/.local/lib/python3.8/site-packages/airflow/operators/python.py", line 609, in execute_callable

result = self._execute_python_callable_in_subprocess(python_path, tmp_path)

File "/home/airflow/.local/lib/python3.8/site-packages/airflow/operators/python.py", line 463, in _execute_python_callable_in_subprocess

raise AirflowException(error_msg) from None

airflow.exceptions.AirflowException: Process returned non-zero exit status 1.

__init__() missing 3 required positional arguments: 'hdfs_store_path', 'featurestore_description', and 'inode_id'

[2023-10-26, 21:48:26 +07] {taskinstance.py:1400} INFO - Marking task as FAILED. dag_id=ml_pipeline, task_id=run_feature_pipeline, execution_date=20231026T144659, start_date=20231026T144705, end_date=20231026T144826

[2023-10-26, 21:48:26 +07] {standard_task_runner.py:104} ERROR - Failed to execute job 31 for task run_feature_pipeline (Process returned non-zero exit status 1.

__init__() missing 3 required positional arguments: 'hdfs_store_path', 'featurestore_description', and 'inode_id'; 3001)

[2023-10-26, 21:48:26 +07] {local_task_job_runner.py:228} INFO - Task exited with return code 1

[2023-10-26, 21:48:26 +07] {taskinstance.py:2778} INFO - 0 downstream tasks scheduled from follow-on schedule check
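The UserWarning in the log reports hopsworks client 3.2.0 connecting to backend 3.4.1. The `TypeError` from `FeatureStore.__init__` plausibly comes from that mismatch, but this is an assumption the issue doesn't confirm. The small check below uses only `importlib.metadata`. `same_minor_version` and `check_hopsworks_client` are hypothetical helpers:

```python
from importlib.metadata import PackageNotFoundError, version


def same_minor_version(client: str, backend: str) -> bool:
    """True when major.minor match, e.g. '3.4.2' vs '3.4.1'."""
    return client.split(".")[:2] == backend.split(".")[:2]


def check_hopsworks_client(backend_version: str) -> None:
    """Print a hint when the installed hopsworks client targets another minor version."""
    try:
        installed = version("hopsworks")
    except PackageNotFoundError:
        print("hopsworks is not installed in this environment")
        return
    if same_minor_version(installed, backend_version):
        print(f"hopsworks {installed} matches backend {backend_version}")
    else:
        minor = ".".join(backend_version.split(".")[:2])
        print(f"hopsworks {installed} vs backend {backend_version}: "
              f"try pip install 'hopsworks=={minor}.*'")


check_hopsworks_client("3.4.1")  # backend version taken from the log above
```

Run it inside the environment the Airflow task uses, since the task builds its own virtualenv.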

|

closed

|

2023-10-26T15:00:50Z

|

2023-10-27T03:24:48Z

|

https://github.com/iusztinpaul/energy-forecasting/issues/22

|

[] |

minhct13

| 1

|

ranaroussi/yfinance

|

pandas

| 1,508

|

Regular market price for indices

|

If I use either

```python
import yfinance as yf

stock = yf.Ticker("^DJI")
# or
stock = yf.Ticker("DJIA")
```

there is no "regular market price" anymore, although there used to be one. What happened?
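Yahoo's response fields change over time, so code that indexes `info["regularMarketPrice"]` directly breaks when the key disappears. Below is a defensive sketch. The fallback keys are assumptions, and which of them are present for `^DJI` is not confirmed here:

```python
from typing import Any, Mapping, Optional, Sequence

# Assumption: candidate keys in order of preference; Yahoo may drop or add fields.
PRICE_KEYS = ("regularMarketPrice", "currentPrice", "previousClose")


def first_available(info: Mapping[str, Any], keys: Sequence[str] = PRICE_KEYS) -> Optional[Any]:
    """Return the value of the first key that is present and not None."""
    for key in keys:
        value = info.get(key)
        if value is not None:
            return value
    return None
```

Usage: `first_available(yf.Ticker("^DJI").info)` returns `None` instead of raising when none of the keys exist.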

|

closed

|

2023-04-26T19:03:25Z

|

2023-09-09T17:30:14Z

|

https://github.com/ranaroussi/yfinance/issues/1508

|

[] |

tenaciouslyantediluvian

| 1

|

mlfoundations/open_clip

|

computer-vision

| 245

|

Fix stochasticity in tests

|

Some tests seem to pass/fail arbitrarily. Inference test seems to be the culprit:

```

=================================== FAILURES ===================================

_________ test_inference_with_data[timm-swin_base_patch4_window7_224] __________

```
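This applies only if the flakiness comes from unseeded randomness. That is an assumption: it could also be numerical tolerance on the timm Swin model. Under that assumption, seeding every RNG before each test makes runs repeatable. `seed_everything` is a hypothetical helper:

```python
import random


def seed_everything(seed: int = 0) -> None:
    """Seed the RNGs an inference test might touch (stdlib, NumPy, PyTorch)."""
    random.seed(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass
    try:
        import torch
        torch.manual_seed(seed)  # seeds the CPU and all CUDA generators
    except ImportError:
        pass
```

In pytest, this would typically be called from an `autouse=True` fixture in `conftest.py`. Seeding does not remove nondeterminism from GPU kernels, so tolerance-based comparisons may still be needed.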

|

closed

|

2022-11-23T04:35:22Z

|

2022-12-09T00:40:10Z

|

https://github.com/mlfoundations/open_clip/issues/245

|

[

"bug"

] |

iejMac

| 8

|

graphdeco-inria/gaussian-splatting

|

computer-vision

| 910

|

Could not show results with SIBR_gaussian_viewer

|

I have trained the model on the provided truck scene on my Ubuntu server, which has no GUI.

As suggested, I use xvfb-run as follows:

```shell

xvfb-run -s "-screen 0 1400x900x24" SIBR_viewers/install/bin/SIBR_gaussianViewer_app -m /root/onethingai-tmp/3dgs/output/truck/

```

But it reports some errors and then gets stuck, as follows:

How can I fix the error and show the 3D scene?

|

open

|

2024-07-28T11:06:13Z

|

2024-07-28T19:42:48Z

|

https://github.com/graphdeco-inria/gaussian-splatting/issues/910

|

[] |

JackeyLee007

| 1

|

computationalmodelling/nbval

|

pytest

| 204

|

`--sanitize-with` option seems to be behaving weirdly with "newly computed (test) output"

|

We have the failure below, and we cannot understand why our `output-sanitize.cfg` regex file does not cover the diff.

Why does the "**newly computed (test) output**" contain the "**Text(0.5, 91.20243008191655, 'Longitude')**" part?

_ pavics-sdi-fix_nbs_jupyter_alpha/docs/source/notebooks/regridding.ipynb::Cell 27 _

Notebook cell execution failed

Cell 27: Cell outputs differ

Input:

# Now we can plot easily the results as a choropleth map!

ax = shapes_data.plot(

"tasmin", legend=True, legend_kwds={"label": "Minimal temperature 1993-05-20 [K]"}

)

ax.set_ylabel("Latitude")

ax.set_xlabel("Longitude");

Traceback:

dissimilar number of outputs for key "text/plain"<<<<<<<<<<<< Reference outputs from ipynb file:

<Figure size LENGTHxWIDTH with N Axes>

============ disagrees with newly computed (test) output:

Text(0.5, 91.20243008191655, 'Longitude')

<Figure size LENGTHxWIDTH with N Axes>

>>>>>>>>>>>>

`output-sanitize.cfg`:

```

[finch-figure-size]

regex: <Figure size \d+x\d+\swith\s\d\sAxes>

replace: <Figure size LENGTHxWIDTH with N Axes>

```

Full `output-sanitize.cfg` file: https://github.com/Ouranosinc/PAVICS-e2e-workflow-tests/blob/a4592eab55ad177b00cba77f126be56ff6566287/notebooks/output-sanitize.cfg#L92-L94
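The sanitizer rule only rewrites the figure line. The quick check below uses Python's `re` and assumes nbval applies each regex/replace pair much like `re.sub`. It shows that the `Text(...)` line survives untouched, so this rule alone cannot reconcile the two outputs. Also note that `\d` before `Axes` matches a single digit, so a figure with 10 or more axes would not be normalized. The `640x480 with 2 Axes` sample string is illustrative, not taken from the failing run:

```python
import re

# Rule copied from the [finch-figure-size] section of output-sanitize.cfg
FIGURE_RULE = (r"<Figure size \d+x\d+\swith\s\d\sAxes>",
               "<Figure size LENGTHxWIDTH with N Axes>")


def sanitize(text: str, rules=(FIGURE_RULE,)) -> str:
    """Apply each (regex, replacement) pair in order."""
    for pattern, replacement in rules:
        text = re.sub(pattern, replacement, text)
    return text


print(sanitize("Text(0.5, 91.20243008191655, 'Longitude')\n<Figure size 640x480 with 2 Axes>"))
```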

Notebook: https://github.com/Ouranosinc/pavics-sdi/blob/4ffec2df463b413c78991e9481fbe182537b3a65/docs/source/notebooks/regridding.ipynb

command:

py.test --nbval pavics-sdi-fix_nbs_jupyter_alpha_refresh_output/docs/source/notebooks/regridding.ipynb --sanitize-with notebooks/output-sanitize.cfg --dist=loadscope --numprocesses=0

============================= test session starts ==============================

platform linux -- Python 3.10.13, pytest-8.1.1, pluggy-1.4.0

rootdir: /home/jenkins/agent/workspace/_workflow-tests_new-docker-build

plugins: anyio-4.3.0, dash-2.16.1, nbval-0.11.0, tornasync-0.6.0.post2, xdist-3.5.0

|

open

|

2024-03-23T19:21:11Z

|

2024-03-23T19:33:05Z

|

https://github.com/computationalmodelling/nbval/issues/204

|

[] |

tlvu

| 0

|

xlwings/xlwings

|

automation

| 1,802

|

add .clear_formats() to Sheet & Range

|

Hi Felix,

`clear()` and `clear_contents()` are already available, but `.clear_formats()` is not.

It could be useful to add it (today, I monkey-patch xlwings when needed).

Tell me if you would like a PR (the changes are trivial ;-))
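Below is a minimal sketch of the kind of monkey patch described. It assumes Windows, where `.api` exposes Excel's COM object model (`Range.ClearFormats()`; for a sheet, the call goes through its `Cells` range). `add_clear_formats` is a hypothetical helper, not part of xlwings:

```python
def add_clear_formats(range_cls, sheet_cls):
    """Attach clear_formats() to xlwings-like Range and Sheet classes.

    Assumes `.api` is the Windows COM object exposing Excel's ClearFormats().
    """
    def range_clear_formats(self):
        self.api.ClearFormats()

    def sheet_clear_formats(self):
        self.api.Cells.ClearFormats()

    range_cls.clear_formats = range_clear_formats
    sheet_cls.clear_formats = sheet_clear_formats
```

Usage: `import xlwings as xw`, then `add_clear_formats(xw.Range, xw.Sheet)`. On macOS, `.api` is an appscript object, and the call would differ.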

|

closed

|

2022-01-27T09:28:30Z

|

2022-02-06T08:16:40Z

|

https://github.com/xlwings/xlwings/issues/1802

|

[

"enhancement"

] |

sdementen

| 1

|

amidaware/tacticalrmm

|

django

| 1,124

|

NAT Service not working outside of local network.

|

Port 4222/TCP is not reachable from outside my local network. Telnet works locally but not from outside the network. iptables is currently accepting incoming and outgoing connections, and ufw is disabled. I don't know what the issue is.
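To separate a service problem from a network-path problem, you can script the same TCP connect that telnet performs. Below is a minimal sketch; `port_reachable` is a hypothetical helper. Run it from a machine outside the LAN against the server's public address:

```python
import socket


def port_reachable(host: str, port: int = 4222, timeout: float = 3.0) -> bool:
    """Return True if a plain TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

Suppose it succeeds inside the LAN but fails from outside. Then the block is likely upstream of the host's iptables, for example router port forwarding or a provider firewall. That is a guess, not something the issue confirms.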

|

closed

|

2022-05-11T18:53:50Z

|

2022-05-11T18:54:24Z

|

https://github.com/amidaware/tacticalrmm/issues/1124

|

[] |

ashermyers

| 0

|

getsentry/sentry

|

python

| 86,994

|

Include additional User Feedback details when creating a Jira Server Issue from Sentry User Feedback

|

### Problem Statement

When creating an issue in Jira Server from User Feedback in Sentry, the issue is successfully created, however, the description currently only includes a URL link to Sentry.

I would like the Jira Server issue description to include more detailed information from the User Feedback, such as the user's username, their message, and any attached screenshots.

Reported [via this ZD ticket](https://sentry.zendesk.com/agent/tickets/147414).

### Solution Brainstorm

_No response_

### Product Area

User Feedback

|

open

|

2025-03-13T15:45:14Z

|

2025-03-13T20:06:41Z

|

https://github.com/getsentry/sentry/issues/86994

|

[

"Product Area: User Feedback"

] |

kerenkhatiwada

| 3

|

autokey/autokey

|

automation

| 584

|

Insert phrase using abbreviation and tab not working as expected

|

## Classification: Bug

## Reproducibility: Always (?)

## Version

AutoKey version: 0.96.0-beta.5

Used GUI (Gtk, Qt, or both): GTK

Installed via: (PPA, pip3, …).

Linux Distribution: Linux Mint 20.2 Uma

## Summary

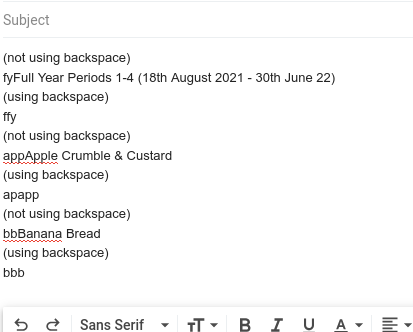

In LibreOffice Writer, using Backspace to delete the tab after the phrase is inserted deletes everything except the first few replaced characters. So far, the number of remaining characters does not seem to depend on the length of the inserted phrase.

In Sticky Notes, Backspace deletes everything: it acts as undo and leaves just the abbreviation.

In Google Mail, the phrase is inserted but the abbreviation isn't removed, and using Backspace to delete the tab leaves the abbreviation and random characters.

## Steps to Reproduce (if applicable)

- Set up an abbreviation that enters a phrase, using Tab as the trigger, as in this example (edited: I had uploaded the wrong screenshot).

- Press Backspace to get rid of the tab.

- e.g. the example in the screenshot gives 'ful' in LibreOffice

- another example: 'apple crumble & custard' with the trigger abbreviation 'app' gives 'Appl'

- in Sticky Notes, the original abbreviation

- in Google Mail

## Expected Results

Just the phrase remains.

## Actual Results

It varies depending on the application used, as described in the summary.


## Notes

Workaround: press Enter or click elsewhere, and then the tab can be deleted.

|

open

|

2021-07-19T22:55:19Z

|

2025-02-27T09:01:31Z

|

https://github.com/autokey/autokey/issues/584

|

[

"bug",

"autokey triggers",

"user support"

] |

unlucky67

| 9

|

pydantic/FastUI

|

fastapi

| 341

|

could this project be simplified/refactored with FastHTML?

|

https://github.com/AnswerDotAI/fasthtml

Maybe all the complications with npm, React, and bundling, which are unfamiliar to Python users like myself, could be removed?

|

closed

|

2024-08-09T18:03:57Z

|

2024-10-10T07:19:18Z

|

https://github.com/pydantic/FastUI/issues/341

|

[] |

rbavery

| 6

|

scanapi/scanapi

|

rest-api

| 362

|

Remove changelog entries that shouldn't be there

|

## Description

Following our [changelog guide](https://github.com/scanapi/scanapi/wiki/Changelog#what-warrants-a-changelog-entry) there are some changelog entries that should not be there in the `Unreleased` section. We need to clean it up before a release.

|

closed

|

2021-04-22T14:34:15Z

|

2021-04-22T14:47:16Z

|

https://github.com/scanapi/scanapi/issues/362

|

[

"Refactor"

] |

camilamaia

| 0

|

ets-labs/python-dependency-injector

|

flask

| 105

|

Rename AbstractCatalog to DeclarativeCatalog (with backward compatibility)

|

closed

|

2015-11-09T22:04:08Z

|

2015-11-10T08:42:55Z

|

https://github.com/ets-labs/python-dependency-injector/issues/105

|

[

"feature",

"refactoring"

] |

rmk135

| 0

|

|

roboflow/supervision

|

pytorch

| 844

|

[PolygonZone] - allow `triggering_position` to be `Iterable[Position]`

|

### Description

Update the [`PolygonZone`](https://github.com/roboflow/supervision/blob/87a4927d03b6d8ec57208e8e3d01094135f9c829/supervision/detection/tools/polygon_zone.py#L15) logic so that the zone is triggered when multiple anchors, not just one, are inside the zone. This type of logic is already implemented in [`LineZone`](https://github.com/roboflow/supervision/blob/87a4927d03b6d8ec57208e8e3d01094135f9c829/supervision/detection/line_counter.py#L12).

- Rename `triggering_position` to `triggering_anchors` to be consistent with the `LineZone` naming convention.

- Update the argument type from `Position` to `Iterable[Position]`.

- Maintain the default behavior. The zone should, by default, be triggered by `Position.BOTTOM_CENTER`.

### API

```python
from typing import Iterable, Tuple

import numpy as np
from supervision import Detections, Position


class PolygonZone:
    def __init__(
        self,
        polygon: np.ndarray,
        frame_resolution_wh: Tuple[int, int],
        triggering_anchors: Iterable[Position] = (Position.BOTTOM_CENTER,),
    ):
        pass

    def trigger(self, detections: Detections) -> np.ndarray:
        pass
```
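You can sketch the proposed multi-anchor rule without supervision itself. Assumptions:

- Boxes are an `(N, 4)` `xyxy` array.
- Anchors are plain strings standing in for `Position` members.
- The polygon is an `(M, 2)` vertex array.
- Point-in-polygon uses even-odd ray casting, not the mask-based test `PolygonZone` may use internally.

A detection triggers only when all requested anchors are inside, mirroring the `LineZone` behaviour referenced above. `anchor_points`, `points_in_polygon` and `trigger` are hypothetical helpers:

```python
import numpy as np


def anchor_points(xyxy: np.ndarray, anchor: str) -> np.ndarray:
    """(N, 2) anchor coordinates; names mimic Position members (assumption)."""
    x1, y1, x2, y2 = xyxy.T
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    table = {
        "CENTER": (cx, cy), "BOTTOM_CENTER": (cx, y2), "TOP_CENTER": (cx, y1),
        "TOP_LEFT": (x1, y1), "TOP_RIGHT": (x2, y1),
        "BOTTOM_LEFT": (x1, y2), "BOTTOM_RIGHT": (x2, y2),
    }
    xs, ys = table[anchor]
    return np.stack([xs, ys], axis=1)


def points_in_polygon(points: np.ndarray, polygon: np.ndarray) -> np.ndarray:
    """Even-odd ray casting; points exactly on an edge may go either way."""
    x, y = points[:, :1], points[:, 1:]                      # (N, 1)
    x1, y1 = polygon[None, :, 0], polygon[None, :, 1]        # (1, M)
    x2 = np.roll(polygon[:, 0], -1)[None, :]                 # next vertex
    y2 = np.roll(polygon[:, 1], -1)[None, :]
    crosses = (y1 > y) != (y2 > y)  # edge spans the horizontal ray's height
    with np.errstate(divide="ignore", invalid="ignore"):
        x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
        hits = crosses & (x < x_cross)
    return np.count_nonzero(hits, axis=1) % 2 == 1


def trigger(xyxy, polygon, triggering_anchors=("BOTTOM_CENTER",)) -> np.ndarray:
    """True per detection when every requested anchor lies inside the polygon."""
    masks = [points_in_polygon(anchor_points(xyxy, a), polygon) for a in triggering_anchors]
    return np.logical_and.reduce(masks)
```

With the default `("BOTTOM_CENTER",)`, this reduces to the current single-anchor behaviour.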

### Additional

- Note: Please share a Google Colab with minimal code to test the new feature. We know it's additional work, but it will speed up the review process. The reviewer must test each change. Setting up a local environment to do this is time-consuming. Please ensure that Google Colab can be accessed without any issues (make it public). Thank you! 🙏🏻

|

closed

|

2024-02-02T17:51:07Z

|

2024-12-12T09:37:48Z

|

https://github.com/roboflow/supervision/issues/844

|

[

"enhancement",

"Q1.2024",

"api:polygonzone"

] |

SkalskiP

| 8

|

pytorch/vision

|

computer-vision

| 8,450

|

Let `v2.functional.gaussian_blur` backprop through `sigma` parameter

|

The v1 version of `gaussian_blur` allows backpropagating through `sigma`, on both CPU and GPU (GPU after https://github.com/pytorch/vision/pull/8426). Example taken from https://github.com/pytorch/vision/issues/8401:

```python
import torch
from torchvision.transforms.functional import gaussian_blur

device = "cpu"  # or "cuda"
k = 15
s = torch.tensor(0.3 * ((5 - 1) * 0.5 - 1) + 0.8, requires_grad=True, device=device)
blurred = gaussian_blur(torch.randn(1, 3, 256, 256, device=device), k, [s])
blurred.mean().backward()
print(s.grad)
```

However, the v2 version fails with

```

RuntimeError: element 0 of tensors does not require grad and does not have a grad_fn

```

The support in v1 is sort of undocumented and probably just works out of luck (sigma is typically expected to be a list of floats rather than a tensor). So while it works, it's not 100% clear to me whether this is a feature we absolutely want. I guess we can implement it if it doesn't make the code much more complex or slower.
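Is a gradient through `sigma` meaningful at all? This can be checked on paper. Assume the kernel is a sampled Gaussian over `K` taps, normalized to sum to one. That description of the internals is my reading of v1, not something the issue confirms. Under it, each tap is a smooth function of σ:

```math
x_i = i - \frac{K-1}{2}, \qquad
g_i(\sigma) = \exp\!\left(-\frac{x_i^2}{2\sigma^2}\right), \qquad
k_i(\sigma) = \frac{g_i(\sigma)}{\sum_{j=0}^{K-1} g_j(\sigma)}

\frac{\partial g_i}{\partial \sigma} = \frac{x_i^2}{\sigma^3}\, g_i
\quad\Longrightarrow\quad
\frac{\partial k_i}{\partial \sigma} = \frac{k_i}{\sigma^3}\left(x_i^2 - \sum_{j=0}^{K-1} k_j x_j^2\right)
```

For K ≥ 3 (K = 15 in the example), the $x_i^2$ are not all equal, so the derivative is nonzero for the outer taps. Supporting this in v2 would then mainly require keeping σ as a tensor through kernel construction. The `does not require grad` error hints that v2 converts σ to Python floats, but that is a guess.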

|

closed

|

2024-05-29T12:45:21Z

|

2024-07-29T15:45:14Z

|

https://github.com/pytorch/vision/issues/8450

|

[] |

NicolasHug

| 3

|

yvann-ba/Robby-chatbot

|

streamlit

| 48

|

streamlit cloud server

|

Hello @yvann-hub,

Could you please write the steps for running this on Streamlit Cloud?

It only works locally.

Many thanks!

|

closed

|

2023-06-12T10:51:26Z

|

2023-06-15T09:34:37Z

|

https://github.com/yvann-ba/Robby-chatbot/issues/48

|

[] |

AhmedEwis

| 4

|

pytorch/pytorch

|

python

| 149,138

|

FSDP with AveragedModel

|

I am trying to use FSDP with `torch.optim.swa_utils.AveragedModel`, but I am getting the error

```

File "/scratch/nikolay_nikolov/.cache/bazel/_bazel_nikolay_nikolov/79bf5e678fbb2019f1e30944a206f079/external/python_runtime_x86_64-unknown-linux-gnu/lib/python3.10/copy.py", line 161, in deepcopy

rv = reductor(4)

TypeError: cannot pickle 'module' object

```

This happens at `copy.deepcopy` inside `torch.optim.swa_utils.AveragedModel.__init__`, and `module` seems to refer to `<module 'torch.cuda' from '/scratch/nikolay_nikolov/.cache/bazel/_bazel_nikolay_nikolov/79bf5e678fbb2019f1e30944a206f079/execroot/barrel/bazel-out/k8-opt/bin/barrel/pipes/vlams/train.runfiles/pip-core_torch/site-packages/torch/cuda/__init__.py'>`

1. Is FSDP supposed to work with `torch.optim.swa_utils.AveragedModel`?

2. If not, how can one implement it? My plan to avoid `deepcopy` was to instead use a sharded state dict and compute the average separately on each rank to save memory. However, I can't find an easy way to convert the sharded state dict back to a full state dict offloaded to CPU when I need to save the state dict. Any tips on that?
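For the averaging itself, deepcopying the wrapped module is not required: you can keep a running average as a plain dict keyed like the state dict. Below is a minimal sketch of that update. It uses the same equal-weight rule AveragedModel applies by default. It assumes the values support `+`, `-` and `/`: floats here for testing, tensors (or, presumably, each rank's local shards) in practice. `update_running_average` is a hypothetical helper:

```python
from typing import Any, Dict


def update_running_average(avg_state: Dict[str, Any], new_state: Dict[str, Any],
                           num_averaged: int) -> Dict[str, Any]:
    """Key-by-key equal-weight running average.

    Same rule as AveragedModel's default: avg + (new - avg) / (num_averaged + 1),
    where num_averaged counts the snapshots already folded into avg_state.
    """
    return {
        key: avg + (new_state[key] - avg) / (num_averaged + 1)
        for key, avg in avg_state.items()
    }
```

For tensors, call it under `torch.no_grad()`, starting from a detached copy of the first state dict. This sketch does not address gathering the result into a full CPU state dict.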

cc @H-Huang @awgu @kwen2501 @wanchaol @fegin @fduwjj @wz337 @wconstab @d4l3k @c-p-i-o @zhaojuanmao @mrshenli @rohan-varma @chauhang

|

open

|

2025-03-13T17:46:52Z

|

2025-03-21T14:55:27Z

|

https://github.com/pytorch/pytorch/issues/149138

|

[

"oncall: distributed",

"module: fsdp"

] |

nikonikolov

| 2

|

ultralytics/yolov5

|

pytorch

| 12,534

|

When running the validation set to save labels, there is no labels folder in the expX folder.

|

### Search before asking

- [X] I have searched the YOLOv5 [issues](https://github.com/ultralytics/yolov5/issues) and found no similar bug report.

### YOLOv5 Component

_No response_

### Bug

When running validation with label saving enabled, there is no labels folder in the expX folder, so line 261 of val.py raises an error. Should I add the code shown under "Minimal Reproducible Example" at that line?

### Environment

_No response_

### Minimal Reproducible Example

```python
if save_txt:
    labels_dir = save_dir / 'labels'
    labels_dir.mkdir(parents=True, exist_ok=True)  # create expX/labels if missing
    save_one_txt(predn, save_conf, shape, file=labels_dir / f'{path.stem}.txt')
```

### Additional

_No response_

### Are you willing to submit a PR?

- [x] Yes I'd like to help by submitting a PR!

|

closed

|

2023-12-21T11:49:49Z

|

2024-10-20T19:35:04Z

|

https://github.com/ultralytics/yolov5/issues/12534

|

[

"bug"

] |

Gary55555

| 2

|

WeblateOrg/weblate

|

django

| 13,762

|

String translation history is deleted

|

### Describe the issue

After a string is changed in the remote repository, its translations are deleted along with their history. We have disabled "Use fuzzy matching" in 400 files because it inserted incorrect translations, several thousand of them. The problem has existed since we started using Weblate.

To work around this issue, we generate one file containing all the changes, with "fuzzy matching" enabled, and add it to the project. We translate this changes file using automatic translation. Automatic translation produces many false translations because it does not match by string ID. After the changes file is updated with "fuzzy matching" enabled, the translations are not removed.

### I already tried

- [x] I've read and searched [the documentation](https://docs.weblate.org/).

- [x] I've searched for similar filed issues in this repository.

### Steps to reproduce the behavior

1) File settings:

2) Changing the string in the remote repository:

3) A changed string that still has the same ID is treated as a new string.

The string translation has been deleted, and the previous translation history has also been deleted:

4) The automatic suggestions are also empty because they do not reach the threshold:

### Expected behavior

The history of previous translations is not deleted.

Additionally, it would be useful to know that the string had a translation but was removed by the changes.

### Screenshots

_No response_

### Exception traceback

```pytb

```

### How do you run Weblate?

Docker container

### Weblate versions

5.10-dev — [0656bbd123047d8b44d77cb7f982093df625dd7a](https://github.com/WeblateOrg/weblate/commits/0656bbd123047d8b44d77cb7f982093df625dd7a)

### Weblate deploy checks

```shell

```

### Additional context

_No response_

|

open

|

2025-02-06T07:05:30Z

|

2025-02-28T08:05:59Z

|

https://github.com/WeblateOrg/weblate/issues/13762

|

[] |

tomkolp

| 8

|

encode/httpx

|

asyncio

| 2,169

|

RFE: provide support for `rfc3986` 2.0.0

|

Currently `rfc3986` 2.0.0 is not supported.

https://github.com/encode/httpx/blob/3af5146788f2945c806d4225cd588a2aa8073b90/setup.py#L58-L62

Do you have any plans to provide such support?

|

closed

|

2022-04-07T08:06:04Z

|

2023-01-10T10:36:17Z

|

https://github.com/encode/httpx/issues/2169

|

[

"external"

] |

kloczek

| 1

|

davidteather/TikTok-Api

|

api

| 182

|

[BUG] - 'browser' object has no attribute 'signature'

|

**Describe the bug**

I think this may be a similar issue to the 'verifyFp' problem. It happens occasionally, even though my proxy server is in the US.

```

app/worker.14 [2020-07-14 16:38:17,509: WARNING/ForkPoolWorker-1] 'browser' object has no attribute 'signature'

app/worker.14 save_tiktoks_for_user(record_id, username)

app/worker.14 File "/app/tasks.py", line 29

app/worker.14 tiktoks = api.byUsername(tiktok_username, count=250)

app/worker.14 File "/app/.heroku/python/lib/python3.7/site-packages/TikTokApi/tiktok.py", line 147, in byUsername

app/worker.14 return self.getUser(username, language, proxy=proxy)['userInfo']['user']

app/worker.14 "&_signature=" + b.signature

```
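Since the error is intermittent, one stopgap is to retry the call with backoff; this treats the symptom, not the cause. Below is a minimal sketch. `call_with_retries` is a hypothetical helper, and retrying on `AttributeError` is an assumption based on the log above:

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(fn: Callable[[], T], attempts: int = 3, base_delay: float = 1.0,
                      retry_on: Tuple[Type[BaseException], ...] = (AttributeError,)) -> T:
    """Call fn(), retrying with exponential backoff on the listed exceptions."""
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the original error
            time.sleep(base_delay * 2 ** attempt)
    raise RuntimeError("attempts must be at least 1")
```

Usage: `tiktoks = call_with_retries(lambda: api.byUsername(tiktok_username, count=250))`.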

|

closed

|

2020-07-14T16:41:02Z

|

2020-08-19T21:32:33Z

|

https://github.com/davidteather/TikTok-Api/issues/182

|

[

"bug"

] |

kbyatnal

| 14

|

pydata/xarray

|

pandas

| 9,951

|

⚠️ Nightly upstream-dev CI failed ⚠️

|

[Workflow Run URL](https://github.com/pydata/xarray/actions/runs/12819944199)

<details><summary>Python 3.12 Test Summary</summary>

```

xarray/tests/test_coding_times.py::test_encode_cf_timedelta_casting_value_error[False]: ValueError: output array is read-only

xarray/tests/test_variable.py::TestVariable::test_index_0d_datetime: AssertionError: assert dtype('<M8[us]') == 'datetime64[ns]'

+ where dtype('<M8[us]') = np.datetime64('2000-01-01T00:00:00.000000').dtype

xarray/tests/test_variable.py::TestVariable::test_datetime64_conversion[values5-ns]: AssertionError: assert dtype('<M8[us]') == dtype('<M8[ns]')

+ where dtype('<M8[us]') = <xarray.Variable (t: 3)> Size: 24B\narray(['1970-01-01T00:00:00.000000', '1970-01-02T00:00:00.000000',\n '1970-01-03T00:00:00.000000'], dtype='datetime64[us]').dtype

+ and dtype('<M8[ns]') = <class 'numpy.dtype'>('datetime64[ns]')

+ where <class 'numpy.dtype'> = np.dtype

xarray/tests/test_variable.py::TestVariable::test_datetime64_conversion_scalar[values1-ns]: AssertionError: assert dtype('<M8[s]') == dtype('<M8[ns]')

+ where dtype('<M8[s]') = <xarray.Variable ()> Size: 8B\narray('2000-01-01T00:00:00', dtype='datetime64[s]').dtype

+ and dtype('<M8[ns]') = <class 'numpy.dtype'>('datetime64[ns]')

+ where <class 'numpy.dtype'> = np.dtype

xarray/tests/test_variable.py::TestVariable::test_datetime64_conversion_scalar[values2-ns]: AssertionError: assert dtype('<M8[us]') == dtype('<M8[ns]')

+ where dtype('<M8[us]') = <xarray.Variable ()> Size: 8B\narray('2000-01-01T00:00:00.000000', dtype='datetime64[us]').dtype

+ and dtype('<M8[ns]') = <class 'numpy.dtype'>('datetime64[ns]')

+ where <class 'numpy.dtype'> = np.dtype

xarray/tests/test_variable.py::TestVariable::test_0d_datetime: AssertionError: assert dtype('<M8[s]') == dtype('<M8[ns]')

+ where dtype('<M8[s]') = <xarray.Variable ()> Size: 8B\narray('2000-01-01T00:00:00', dtype='datetime64[s]').dtype

+ and dtype('<M8[ns]') = <class 'numpy.dtype'>('datetime64[ns]')

+ where <class 'numpy.dtype'> = np.dtype

xarray/tests/test_variable.py::TestVariableWithDask::test_index_0d_datetime: AssertionError: assert dtype('<M8[us]') == 'datetime64[ns]'

+ where dtype('<M8[us]') = np.datetime64('2000-01-01T00:00:00.000000').dtype

xarray/tests/test_variable.py::TestVariableWithDask::test_datetime64_conversion[values5-ns]: AssertionError: assert dtype('<M8[us]') == dtype('<M8[ns]')

+ where dtype('<M8[us]') = <xarray.Variable (t: 3)> Size: 24B\ndask.array<array, shape=(3,), dtype=datetime64[us], chunksize=(3,), chunktype=numpy.ndarray>.dtype

+ and dtype('<M8[ns]') = <class 'numpy.dtype'>('datetime64[ns]')

+ where <class 'numpy.dtype'> = np.dtype

xarray/tests/test_variable.py::TestIndexVariable::test_index_0d_datetime: AssertionError: assert dtype('<M8[us]') == 'datetime64[ns]'

+ where dtype('<M8[us]') = np.datetime64('2000-01-01T00:00:00.000000').dtype

xarray/tests/test_variable.py::TestIndexVariable::test_datetime64_conversion[values5-ns]: AssertionError: assert dtype('<M8[us]') == dtype('<M8[ns]')

+ where dtype('<M8[us]') = <xarray.IndexVariable 't' (t: 3)> Size: 24B\narray(['1970-01-01T00:00:00.000000', '1970-01-02T00:00:00.000000',\n '1970-01-03T00:00:00.000000'], dtype='datetime64[us]').dtype

+ and dtype('<M8[ns]') = <class 'numpy.dtype'>('datetime64[ns]')

+ where <class 'numpy.dtype'> = np.dtype

xarray/tests/test_variable.py::TestAsCompatibleData::test_datetime: AssertionError: assert dtype('<M8[ns]') == dtype('<M8[us]')

+ where dtype('<M8[ns]') = <class 'numpy.dtype'>('datetime64[ns]')

+ where <class 'numpy.dtype'> = np.dtype

+ and dtype('<M8[us]') = array('2000-01-01T00:00:00.000000', dtype='datetime64[us]').dtype

xarray/tests/test_variable.py::test_datetime_conversion[2000-01-01 00:00:00-ns]: AssertionError: assert dtype('<M8[us]') == dtype('<M8[ns]')

+ where dtype('<M8[us]') = <xarray.Variable ()> Size: 8B\narray('2000-01-01T00:00:00.000000', dtype='datetime64[us]').dtype

+ and dtype('<M8[ns]') = <class 'numpy.dtype'>('datetime64[ns]')

+ where <class 'numpy.dtype'> = np.dtype

xarray/tests/test_variable.py::test_datetime_conversion[[datetime.datetime(2000, 1, 1, 0, 0)]-ns]: AssertionError: assert dtype('<M8[us]') == dtype('<M8[ns]')

+ where dtype('<M8[us]') = <xarray.Variable (time: 1)> Size: 8B\narray(['2000-01-01T00:00:00.000000'], dtype='datetime64[us]').dtype

+ and dtype('<M8[ns]') = <class 'numpy.dtype'>('datetime64[ns]')

+ where <class 'numpy.dtype'> = np.dtype

```

</details>
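The failing assertions compare against nanosecond resolution (`datetime64[ns]`), while the values carry the unit NumPy infers from the input (`us` or `s` above). As a NumPy-only sketch of that inference and of an explicit cast (it does not reproduce xarray's or pandas' own conversion logic):

```python
import datetime

import numpy as np

# NumPy picks the datetime64 unit from the precision of the input.
from_us_string = np.datetime64("2000-01-01T00:00:00.000000")  # six fractional digits -> us
from_s_string = np.datetime64("2000-01-01T00:00:00")          # whole seconds -> s
from_datetime = np.datetime64(datetime.datetime(2000, 1, 1))  # datetime.datetime -> us

for value in (from_us_string, from_s_string, from_datetime):
    print(value.dtype)

# Dtype comparison is unit-sensitive, which is what the failing assertions check.
print(from_us_string.dtype == np.dtype("datetime64[ns]"))  # False

# Casting explicitly yields nanosecond resolution without changing the instant.
as_ns = from_us_string.astype("datetime64[ns]")
print(as_ns.dtype, as_ns == from_us_string)
```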

|

closed

|

2025-01-16T00:27:03Z

|

2025-01-17T13:16:48Z

|

https://github.com/pydata/xarray/issues/9951

|

[

"CI"

] |

github-actions[bot]

| 0

|

nteract/testbook

|

pytest

| 137

|

testbook loads wrong signature for function

|

Hello,

I have the following test:

```python
import testbook
import numpy as np
from assertpy import assert_that
from pytest import fixture


@fixture(scope='module')
def tb():
    with testbook.testbook('h2_2.ipynb', execute=True) as tb:
        yield tb


def test_some_small_function(tb):
    some_function = tb.ref("some_function")
    test_w_1_0 = np.array([1])
    test_no_bias = np.array([0])
    test_w_2_1 = np.array([1])
    print(locals())
    assert_that(some_function(0, test_w_1_0, test_no_bias, test_w_2_1, np.sign)).contains([0, 1, -1])
```

with the following in the notebook:

```python
def some_function(input_var: float, first: np.ndarray, bias: np.ndarray, second: np.ndarray, the_transfer_function):
    h: np.ndarray = input_var * first - bias
    return np.dot(second, the_transfer_function(h))
```

and I get the following TypeError:

> E TypeError Traceback (most recent call last)

E /var/folders/jk/bq46f4ys107bjb6jwvn0yhqr0000gp/T/ipykernel_52132/3127954361.py in <module>

E ----> 1 some_function(*(0, "[1]", "[0]", "[1]", "<ufunc 'sign'>", ), **{})

E

E /var/folders/jk/bq46f4ys107bjb6jwvn0yhqr0000gp/T/ipykernel_52132/179938020.py in some_function(input_var, first, bias, second, the_transfer_function)

E 1 def some_function(input_var: float, first: np.ndarray, bias: np.ndarray, second: np.ndarray, the_transfer_function):

E ----> 2 h: np.ndarray = input_var * first - bias

E 3 return np.dot(second, the_transfer_function(h))

E

E TypeError: unsupported operand type(s) for -: 'str' and 'str'

E TypeError: unsupported operand type(s) for -: 'str' and 'str'

I have added extra type hints to make the intent of the code clear.

Tests inside the notebook run successfully.

> test_h2.py::test_some_small_function /Users/1000ber-5078/PycharmProjects/machine-intelligence/venv/lib/python3.8/site-packages/debugpy/_vendored/force_pydevd.py:20: UserWarning: incompatible copy of pydevd already imported:

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/__init__.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/_pydev_calltip_util.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/_pydev_completer.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/_pydev_filesystem_encoding.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/_pydev_imports_tipper.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/_pydev_tipper_common.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/fix_getpass.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/pydev_code_executor.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/pydev_console_types.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/pydev_imports.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/pydev_ipython_code_executor.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/pydev_ipython_console_011.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/pydev_is_thread_alive.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/pydev_log.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/pydev_monkey.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/pydev_override.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_bundle/pydev_stdin.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_imps/__init__.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_imps/_pydev_execfile.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydev_imps/_pydev_saved_modules.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/__init__.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_additional_thread_info.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_additional_thread_info_regular.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_breakpointhook.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_breakpoints.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_bytecode_utils.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_collect_try_except_info.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_comm.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_comm_constants.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_command_line_handling.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_console.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_console_integration.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_console_pytest.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_constants.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_custom_frames.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_cython_darwin_38_64.cpython-38-darwin.so

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_cython_darwin_38_64.cpython-38-darwin.so

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_cython_wrapper.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_dont_trace.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_dont_trace_files.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_exec2.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_extension_api.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_extension_utils.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_frame.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_frame_utils.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_import_class.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_io.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_kill_all_pydevd_threads.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_plugin_utils.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_process_net_command.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_resolver.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_save_locals.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_signature.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_tables.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_trace_api.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_trace_dispatch.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_traceproperty.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_utils.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_vars.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_vm_type.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_bundle/pydevd_xml.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_frame_eval/__init__.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/_pydevd_frame_eval/pydevd_frame_eval_main.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydev_ipython/__init__.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydev_ipython/inputhook.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydev_ipython/matplotlibtools.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd_concurrency_analyser/__init__.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd_concurrency_analyser/pydevd_concurrency_logger.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd_concurrency_analyser/pydevd_thread_wrappers.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd_file_utils.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd_plugins/__init__.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd_plugins/django_debug.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd_plugins/extensions/__init__.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd_plugins/extensions/types/__init__.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd_plugins/extensions/types/pydevd_helpers.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd_plugins/extensions/types/pydevd_plugin_numpy_types.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd_plugins/extensions/types/pydevd_plugins_django_form_str.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd_plugins/jinja2_debug.py

/Applications/PyCharm.app/Contents/plugins/python/helpers/pydev/pydevd_tracing.py

warnings.warn(msg + ':\n {}'.format('\n '.join(_unvendored)))

[IPKernelApp] WARNING | debugpy_stream undefined, debugging will not be enabled

FAILED [100%]{'tb': <testbook.client.TestbookNotebookClient object at 0x7f9ef824d2e0>, 'some_function': '<function some_function at 0x7fb2b2e70c10>', 'test_w_1_0': array([1]), 'test_no_bias': array([0]), 'test_w_2_1': array([1])}

test_h2.py:12 (test_some_small_function)

self = <testbook.client.TestbookNotebookClient object at 0x7f9ef824d2e0>

cell = [8], kwargs = {}, cell_indexes = [8], executed_cells = [], idx = 8

def execute_cell(self, cell, **kwargs) -> Union[Dict, List[Dict]]:

"""

Executes a cell or list of cells

"""

if isinstance(cell, slice):

start, stop = self._cell_index(cell.start), self._cell_index(cell.stop)

if cell.step is not None:

raise TestbookError('testbook does not support step argument')

cell = range(start, stop + 1)

elif isinstance(cell, str) or isinstance(cell, int):

cell = [cell]

cell_indexes = cell

if all(isinstance(x, str) for x in cell):

cell_indexes = [self._cell_index(tag) for tag in cell]

executed_cells = []

for idx in cell_indexes:

try:

> cell = super().execute_cell(self.nb['cells'][idx], idx, **kwargs)

../venv/lib/python3.8/site-packages/testbook/client.py:133:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

args = (<testbook.client.TestbookNotebookClient object at 0x7f9ef824d2e0>, {'id': '6ac327d5', 'cell_type': 'code', 'metadata'...0m\x1b[0;34m\x1b[0m\x1b[0m\n', "\x1b[0;31mTypeError\x1b[0m: unsupported operand type(s) for -: 'str' and 'str'"]}]}, 8)

kwargs = {}

def wrapped(*args, **kwargs):

> return just_run(coro(*args, **kwargs))

../venv/lib/python3.8/site-packages/nbclient/util.py:78:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

coro = <coroutine object NotebookClient.async_execute_cell at 0x7f9ef81fea40>

def just_run(coro: Awaitable) -> Any:

"""Make the coroutine run, even if there is an event loop running (using nest_asyncio)"""

# original from vaex/asyncio.py

loop = asyncio._get_running_loop()

if loop is None:

had_running_loop = False

try:

loop = asyncio.get_event_loop()

except RuntimeError:

# we can still get 'There is no current event loop in ...'

loop = asyncio.new_event_loop()

asyncio.set_event_loop(loop)

else:

had_running_loop = True

if had_running_loop:

# if there is a running loop, we patch using nest_asyncio

# to have reentrant event loops

check_ipython()

import nest_asyncio

nest_asyncio.apply()

check_patch_tornado()

> return loop.run_until_complete(coro)

../venv/lib/python3.8/site-packages/nbclient/util.py:57:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <_UnixSelectorEventLoop running=False closed=False debug=False>

future = <Task finished name='Task-48' coro=<NotebookClient.async_execute_cell() done, defined at /Users/1000ber-5078/PycharmPr...rted operand type(s) for -: \'str\' and \'str\'\nTypeError: unsupported operand type(s) for -: \'str\' and \'str\'\n')>

def run_until_complete(self, future):

"""Run until the Future is done.

If the argument is a coroutine, it is wrapped in a Task.

WARNING: It would be disastrous to call run_until_complete()

with the same coroutine twice -- it would wrap it in two

different Tasks and that can't be good.

Return the Future's result, or raise its exception.

"""

self._check_closed()

self._check_running()

new_task = not futures.isfuture(future)

future = tasks.ensure_future(future, loop=self)

if new_task:

# An exception is raised if the future didn't complete, so there

# is no need to log the "destroy pending task" message

future._log_destroy_pending = False

future.add_done_callback(_run_until_complete_cb)

try:

self.run_forever()

except:

if new_task and future.done() and not future.cancelled():

# The coroutine raised a BaseException. Consume the exception

# to not log a warning, the caller doesn't have access to the

# local task.

future.exception()

raise

finally:

future.remove_done_callback(_run_until_complete_cb)

if not future.done():

raise RuntimeError('Event loop stopped before Future completed.')

> return future.result()

/Library/Frameworks/Python.framework/Versions/3.8/lib/python3.8/asyncio/base_events.py:616:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <testbook.client.TestbookNotebookClient object at 0x7f9ef824d2e0>

cell = {'id': '6ac327d5', 'cell_type': 'code', 'metadata': {'execution': {'iopub.status.busy': '2021-10-23T15:57:12.875064Z',...x1b[0m\x1b[0;34m\x1b[0m\x1b[0m\n', "\x1b[0;31mTypeError\x1b[0m: unsupported operand type(s) for -: 'str' and 'str'"]}]}

cell_index = 8, execution_count = None, store_history = True

async def async_execute_cell(

self,

cell: NotebookNode,

cell_index: int,

execution_count: t.Optional[int] = None,

store_history: bool = True) -> NotebookNode:

"""

Executes a single code cell.

To execute all cells see :meth:`execute`.

Parameters

----------

cell : nbformat.NotebookNode

The cell which is currently being processed.

cell_index : int

The position of the cell within the notebook object.

execution_count : int

The execution count to be assigned to the cell (default: Use kernel response)

store_history : bool

Determines if history should be stored in the kernel (default: False).

Specific to ipython kernels, which can store command histories.

Returns

-------

output : dict

The execution output payload (or None for no output).

Raises

------

CellExecutionError

If execution failed and should raise an exception, this will be raised

with defaults about the failure.

Returns

-------

cell : NotebookNode

The cell which was just processed.

"""

assert self.kc is not None

if cell.cell_type != 'code' or not cell.source.strip():

self.log.debug("Skipping non-executing cell %s", cell_index)

return cell

if self.record_timing and 'execution' not in cell['metadata']:

cell['metadata']['execution'] = {}

self.log.debug("Executing cell:\n%s", cell.source)

cell_allows_errors = (not self.force_raise_errors) and (

self.allow_errors

or "raises-exception" in cell.metadata.get("tags", []))

parent_msg_id = await ensure_async(

self.kc.execute(

cell.source,

store_history=store_history,

stop_on_error=not cell_allows_errors

)

)

# We launched a code cell to execute

self.code_cells_executed += 1

exec_timeout = self._get_timeout(cell)

cell.outputs = []

self.clear_before_next_output = False

task_poll_kernel_alive = asyncio.ensure_future(

self._async_poll_kernel_alive()

)

task_poll_output_msg = asyncio.ensure_future(

self._async_poll_output_msg(parent_msg_id, cell, cell_index)

)

self.task_poll_for_reply = asyncio.ensure_future(

self._async_poll_for_reply(

parent_msg_id, cell, exec_timeout, task_poll_output_msg, task_poll_kernel_alive

)

)

try:

exec_reply = await self.task_poll_for_reply

except asyncio.CancelledError:

# can only be cancelled by task_poll_kernel_alive when the kernel is dead

task_poll_output_msg.cancel()

raise DeadKernelError("Kernel died")

except Exception as e:

# Best effort to cancel request if it hasn't been resolved

try:

# Check if the task_poll_output is doing the raising for us

if not isinstance(e, CellControlSignal):

task_poll_output_msg.cancel()

finally:

raise

if execution_count:

cell['execution_count'] = execution_count

> self._check_raise_for_error(cell, exec_reply)

../venv/lib/python3.8/site-packages/nbclient/client.py:862:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <testbook.client.TestbookNotebookClient object at 0x7f9ef824d2e0>

cell = {'id': '6ac327d5', 'cell_type': 'code', 'metadata': {'execution': {'iopub.status.busy': '2021-10-23T15:57:12.875064Z',...x1b[0m\x1b[0;34m\x1b[0m\x1b[0m\n', "\x1b[0;31mTypeError\x1b[0m: unsupported operand type(s) for -: 'str' and 'str'"]}]}

exec_reply = {'buffers': [], 'content': {'ename': 'TypeError', 'engine_info': {'engine_id': -1, 'engine_uuid': '7b8e70ee-5b55-4029-...e, 'engine': '7b8e70ee-5b55-4029-888b-a23a76f683ca', 'started': '2021-10-23T15:57:12.869603Z', 'status': 'error'}, ...}

def _check_raise_for_error(

self,

cell: NotebookNode,

exec_reply: t.Optional[t.Dict]) -> None:

if exec_reply is None:

return None

exec_reply_content = exec_reply['content']

if exec_reply_content['status'] != 'error':

return None

cell_allows_errors = (not self.force_raise_errors) and (

self.allow_errors

or exec_reply_content.get('ename') in self.allow_error_names

or "raises-exception" in cell.metadata.get("tags", []))

if not cell_allows_errors:

> raise CellExecutionError.from_cell_and_msg(cell, exec_reply_content)

E nbclient.exceptions.CellExecutionError: An error occurred while executing the following cell:

E ------------------

E

E some_function(*(0, "[1]", "[0]", "[1]", "<ufunc 'sign'>", ), **{})

E

E ------------------

E

E ---------------------------------------------------------------------------

E TypeError Traceback (most recent call last)

E /var/folders/jk/bq46f4ys107bjb6jwvn0yhqr0000gp/T/ipykernel_52132/3127954361.py in <module>

E ----> 1 some_function(*(0, "[1]", "[0]", "[1]", "<ufunc 'sign'>", ), **{})

E

E /var/folders/jk/bq46f4ys107bjb6jwvn0yhqr0000gp/T/ipykernel_52132/179938020.py in some_function(input_var, first, bias, second, the_transfer_function)

E 1 def some_function(input_var: float, first: np.ndarray, bias: np.ndarray, second: np.ndarray, the_transfer_function):

E ----> 2 h: np.ndarray = input_var * first - bias

E 3 return np.dot(second, the_transfer_function(h))

E

E TypeError: unsupported operand type(s) for -: 'str' and 'str'

E TypeError: unsupported operand type(s) for -: 'str' and 'str'

../venv/lib/python3.8/site-packages/nbclient/client.py:765: CellExecutionError

During handling of the above exception, another exception occurred:

tb = <testbook.client.TestbookNotebookClient object at 0x7f9ef824d2e0>

def test_some_small_function(tb):

some_function = tb.ref("some_function")

test_w_1_0 = np.array([1])

test_no_bias = np.array([0])

test_w_2_1 = np.array([1])

print(locals())

> assert_that(some_function(0, test_w_1_0, test_no_bias, test_w_2_1, np.sign)).contains([0, 1, -1])

test_h2.py:19:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

../venv/lib/python3.8/site-packages/testbook/reference.py:85: in __call__

return self.tb.value(code)

../venv/lib/python3.8/site-packages/testbook/client.py:273: in value

result = self.inject(code, pop=True)

../venv/lib/python3.8/site-packages/testbook/client.py:237: in inject

cell = TestbookNode(self.execute_cell(inject_idx)) if run else TestbookNode(code_cell)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <testbook.client.TestbookNotebookClient object at 0x7f9ef824d2e0>

cell = [8], kwargs = {}, cell_indexes = [8], executed_cells = [], idx = 8

def execute_cell(self, cell, **kwargs) -> Union[Dict, List[Dict]]:

"""

Executes a cell or list of cells

"""

if isinstance(cell, slice):

start, stop = self._cell_index(cell.start), self._cell_index(cell.stop)

if cell.step is not None:

raise TestbookError('testbook does not support step argument')

cell = range(start, stop + 1)

elif isinstance(cell, str) or isinstance(cell, int):

cell = [cell]

cell_indexes = cell

if all(isinstance(x, str) for x in cell):

cell_indexes = [self._cell_index(tag) for tag in cell]

executed_cells = []

for idx in cell_indexes:

try:

cell = super().execute_cell(self.nb['cells'][idx], idx, **kwargs)

except CellExecutionError as ce:

> raise TestbookRuntimeError(ce.evalue, ce, self._get_error_class(ce.ename))

E testbook.exceptions.TestbookRuntimeError: An error occurred while executing the following cell:

E ------------------

E

E some_function(*(0, "[1]", "[0]", "[1]", "<ufunc 'sign'>", ), **{})

E

E ------------------

E

E ---------------------------------------------------------------------------

E TypeError Traceback (most recent call last)

E /var/folders/jk/bq46f4ys107bjb6jwvn0yhqr0000gp/T/ipykernel_52132/3127954361.py in <module>

E ----> 1 some_function(*(0, "[1]", "[0]", "[1]", "<ufunc 'sign'>", ), **{})

E

E /var/folders/jk/bq46f4ys107bjb6jwvn0yhqr0000gp/T/ipykernel_52132/179938020.py in some_function(input_var, first, bias, second, the_transfer_function)

E 1 def some_function(input_var: float, first: np.ndarray, bias: np.ndarray, second: np.ndarray, the_transfer_function):

E ----> 2 h: np.ndarray = input_var * first - bias

E 3 return np.dot(second, the_transfer_function(h))

E

E TypeError: unsupported operand type(s) for -: 'str' and 'str'

E TypeError: unsupported operand type(s) for -: 'str' and 'str'

../venv/lib/python3.8/site-packages/testbook/client.py:135: TestbookRuntimeError

======================== 1 failed, 12 warnings in 2.97s ========================

Process finished with exit code 1
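The generated cell in the traceback passes the NumPy arrays and `np.sign` as quoted strings (`"[1]"`, `"<ufunc 'sign'>"`), so inside the notebook `0 * "[1]"` evaluates to `""` and the subtraction fails. The sketch below reproduces that call string without testbook; `quoted_literal` is a hypothetical stand-in reconstructed from the log, not testbook's actual serializer. It suggests that only plain literals reach the kernel intact when calling through `tb.ref`.

```python
import numpy as np


def some_function(input_var, first, bias, second, the_transfer_function):
    # Same body as the notebook function (type hints omitted).
    h = input_var * first - bias
    return np.dot(second, the_transfer_function(h))


def quoted_literal(value):
    # Hypothetical stand-in: numbers stay literal, anything else becomes quoted str() text,
    # matching what the generated cell in the traceback shows.
    if isinstance(value, (int, float)):
        return repr(value)
    return '"{}"'.format(str(value))


args = (0, np.array([1]), np.array([0]), np.array([1]), np.sign)
call_code = "some_function(*({}, ), **{{}})".format(", ".join(quoted_literal(a) for a in args))
print(call_code)

# Evaluating the generated call reproduces the error: 0 * "[1]" is "", then "" - "[0]" fails.
try:
    eval(call_code, {"some_function": some_function})
except TypeError as exc:
    print("TypeError:", exc)

# Called directly with the real objects, the function runs without error.
print(some_function(0, np.array([1]), np.array([0]), np.array([1]), np.sign))
```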

|

open

|

2021-10-23T16:00:15Z

|

2021-10-30T19:20:55Z

|

https://github.com/nteract/testbook/issues/137

|

[] |

midumitrescu

| 1

|

kubeflow/katib

|

scikit-learn

| 1,789

|

Display more detailed information and logs of experiment on the user interface

|

/kind feature

**Describe the solution you'd like**


We often run into situations where everything looks OK in the UI and the experiment and its trials remain in the running state, but something has actually already gone wrong with them.

To determine whether everything is OK, we have to use commands like `kubectl logs` and `kubectl describe` to check the logs and events of almost every resource associated with a Katib experiment. This is very unfriendly for data scientists, who want to focus on machine learning problems.

**Therefore, I suggest displaying more detailed information and logs of the experiment in the user interface, so that we can easily tell from the UI whether the experiment and trials are actually running well.**


|

closed

|

2022-01-24T09:24:50Z

|

2022-02-11T18:13:12Z

|

https://github.com/kubeflow/katib/issues/1789

|

[

"kind/feature"

] |

javen218

| 4

|

tqdm/tqdm

|

pandas

| 1,574

|

Add wrapper for functions that are called repeatedly

|

## Proposed enhancement

Add a decorator that wraps a function in a progress bar when you expect a specific number of calls to that function.

## Use case

As a specific example, consider creating animations with matplotlib. The workflow is roughly to create a figure and then provide a method that updates the figure for each frame. When creating the animation, matplotlib repeatedly calls the update method. As far as I know, matplotlib does not support a progress bar; however, if we wrap the update method ourselves, we can still give the user feedback on the progress of their animation.

## Proposed implementation

```python
import functools

from tqdm import tqdm


def progress_bar_decorator(*tqdm_args, **tqdm_kwargs):
    # A single bar, shared by every call of the decorated function.
    progress_bar = tqdm(*tqdm_args, **tqdm_kwargs)

    def decorator(f):
        @functools.wraps(f)
        def wrapper(*args, **kwargs):
            result = f(*args, **kwargs)
            progress_bar.update()  # advance the bar by one per call
            return result

        return wrapper

    return decorator
```

In the example of the matplotlib animation it can then be used as such:

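```python
import matplotlib.pyplot as plt
import numpy as np
from matplotlib.animation import FuncAnimation

# X and Y: placeholder per-frame data arrays (not defined here)
figure, axes = plt.subplots(1, 1)
scatter = axes.scatter(X[0], Y[0])

@progress_bar_decorator(total=len(X), desc="Generating animation")
def update(frame):
    # set_offsets expects a single (N, 2) array of x/y positions
    scatter.set_offsets(np.column_stack([X[frame], Y[frame]]))
    return scatter

FuncAnimation(figure, update, frames=len(X), ...).save("file.mp4")
```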

Matplotlib will call `update` exactly `len(X)` times, causing `tqdm` to update the progress bar accordingly. In this example, `X` and `Y` are placeholder data arrays.
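Because the usage above depends on matplotlib and placeholder data, here is a self-contained sketch of the same idea exercised with a plain loop. It makes two assumed design choices that differ from the proposal: the bar is created inside `decorator`, so each decorated function gets its own bar, and the bar is exposed through a hypothetical `wrapper.progress_bar` attribute so the caller can close it. `progress_bar_decorator` is not part of tqdm; only `tqdm`, `update()`, `close()` and the `n` counter are existing tqdm features.

```python
import functools

from tqdm import tqdm


def progress_bar_decorator(*tqdm_args, **tqdm_kwargs):
    """Advance a tqdm bar by one each time the decorated function is called."""

    def decorator(f):
        # One bar per decorated function, created when the decorator is applied.
        progress_bar = tqdm(*tqdm_args, **tqdm_kwargs)

        @functools.wraps(f)
        def wrapper(*args, **kwargs):
            result = f(*args, **kwargs)
            progress_bar.update()
            return result

        # Hypothetical convenience attribute: lets the caller inspect or close the bar.
        wrapper.progress_bar = progress_bar
        return wrapper

    return decorator


@progress_bar_decorator(total=5, desc="Squaring")
def square(x):
    return x * x


results = [square(i) for i in range(5)]
square.progress_bar.close()  # finalize the bar once the expected calls are done
print(results)
```

Attaching the bar to the wrapper keeps the decorator's call signature unchanged while still letting the caller close the bar when the work is done.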

|

open

|

2024-04-26T10:44:18Z

|

2024-04-26T10:44:18Z

|

https://github.com/tqdm/tqdm/issues/1574

|

[] |

jvdoorn

| 0

|

junyanz/pytorch-CycleGAN-and-pix2pix

|

deep-learning

| 996

|

Need some clarification

|

I am using both pix2pix and CycleGAN models for my thesis research. I have a custom dataset with 417 image pairs and have run a lot of experiments using your repository. I have a few questions, some of which are generic. I use two GPUs with 8 GB of memory each, so 16 GB of GPU memory in total.

1) I understand that batch size is a hyperparameter. Currently I use a batch size of 4 for my experiments. Apart from increasing training speed, what is the importance of batch size?

2) What does normalization do? I use grayscale images during training. What is the difference between batch and instance normalization? And why is it not possible to use batch normalization in multi-GPU training?

3) Use of the dropout and eval flags: when I train pix2pix I never use the --dropout flag during testing, but for CycleGAN I do. Why is that? And when should I use the --eval flag?

4) I use the resnet9block generator since I train on rectangular images. Is there any advantage of using the U-Net architecture over ResNet? I did not use U-Net since my images are 600x400.

5) A few days ago I read a commit comment about the semialigned dataset. Could you briefly explain it and how to use it? Can it be used for both pix2pix and CycleGAN models?

6) Is it possible to save the training graphs from the Visdom server?

@junyanz, sorry for asking so many questions :) Thanks for such a great repository.
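For reference on question 2, the core difference between the two normalizations is which axes the statistics are averaged over. A minimal NumPy sketch, assuming `(N, C, H, W)` tensors and omitting the learnable scale/shift and running averages that real layers keep; it does not address the multi-GPU part of the question:

```python
import numpy as np


def batch_norm(x, eps=1e-5):
    # Statistics per channel, pooled over the batch and spatial axes.
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def instance_norm(x, eps=1e-5):
    # Statistics per sample and per channel, pooled over spatial axes only.
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 1, 8, 8))  # batch of 4 single-channel (grayscale) 8x8 images
x[0] += 5.0                        # make one image much brighter than the others

bn = batch_norm(x)
inorm = instance_norm(x)

# Per-image means after each normalization.
print("batch norm:   ", bn.mean(axis=(1, 2, 3)).round(2))
print("instance norm:", inorm.mean(axis=(1, 2, 3)).round(2))
```

With one image shifted, instance normalization centers every image on its own statistics, while batch normalization keeps the brighter image offset relative to the rest of the batch, because its statistics depend on which other images share the batch.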

|

closed

|

2020-04-17T08:25:25Z

|

2020-04-22T10:27:45Z

|

https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/996

|

[] |

kalai2033

| 3

|

ultralytics/ultralytics

|

deep-learning

| 19,614

|

jetpack=5.1.3, pt export engine OOM

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and found no similar bug report.

### Ultralytics YOLO Component

_No response_

### Bug

When I exported the `.pt` model to a TensorRT engine file, the process ran out of memory (OOM) and was killed.

### Environment

Jetson Nano

Jetpack: 5.1.3

TensorRT: 8.5.2.2

CUDA: 11.4.315

Python: 3.8.10

torch 2.1.0a0+41361538.nv23.6

torchvision 0.16.0

ultralytics 8.2.35

onnxruntime-gpu 1.17.0

onnx 1.17.0

### Minimal Reproducible Example

Here's my code:

```python

from ultralytics import YOLO

model_path = 'best.pt'

model = YOLO(model_path)

model.export(format='engine')

# load

trt_model = YOLO('best.engine', task='detect')

```

### Additional

Log:

```txt

(.perf) nvidia@nvidia:~/work$ python3 pt_to_engine.py

WARNING ⚠️ TensorRT requires GPU export, automatically assigning device=0

Ultralytics YOLOv8.2.35 🚀 Python-3.8.10 torch-2.1.0a0+41361538.nv23.06 CUDA:0 (Orin, 3426MiB)

Model summary (fused): 168 layers, 3006623 parameters, 0 gradients, 8.1 GFLOPs

PyTorch: starting from 'best.pt' with input shape (1, 3, 416, 416) BCHW and output shape(s) (1, 9, 3549) (5.9 MB)

ONNX: starting export with onnx 1.17.0 opset 17...

====== Diagnostic Run torch.onnx.export version 2.1.0a0+41361538.nv23.06 =======

verbose: False, log level: Level.ERROR

======================= 0 NONE 0 NOTE 0 WARNING 0 ERROR ========================

ONNX: export success ✅ 1.5s, saved as 'best.onnx' (11.6 MB)

TensorRT: starting export with TensorRT 8.5.2.2...

[03/10/2025-18:09:44] [TRT] [I] [MemUsageChange] Init CUDA: CPU +215, GPU +0, now: CPU 1834, GPU 3324 (MiB)

Killed

```

### Are you willing to submit a PR?

- [ ] Yes I'd like to help by submitting a PR!

|

open

|

2025-03-10T10:10:19Z

|

2025-03-11T04:02:10Z

|

https://github.com/ultralytics/ultralytics/issues/19614

|

[

"bug",

"embedded",

"exports"

] |

feiniua

| 4

|

thunlp/OpenPrompt

|

nlp

| 25

|

Test performance mismatch between training and testing phases

|

I used the default settings in the `experiments` directory for classification and found that the performance reported during training is **inconsistent** with the performance reported during testing.

Here are the commands to reproduce the issue.

- training:

`python cli.py --config_yaml classification_softprompt.yaml`

- test:

`python cli.py --config_yaml classification_softprompt.yaml --test --resume`

I appended the following lines to the YAML file to load the trained model:

> logging:
>   path: logs/agnews_bert-base-cased_soft_manual_template_manual_verbalizer_211023110855

The training log shows the following performance on the test set:

> trainer.evaluate Test Performance: micro-f1: 0.7927631578947368

However, the testing log says:

> trainer.evaluate Test Performance: micro-f1: 0.8102631578947368

|

closed

|

2021-10-23T03:26:28Z

|

2021-11-06T15:36:01Z

|

https://github.com/thunlp/OpenPrompt/issues/25

|

[] |

huchinlp

| 1

|

identixone/fastapi_contrib

|

pydantic

| 166

|

Allow overriding settings from fastAPI app

|

* FastAPI Contrib version: 0.2.9

* FastAPI version: 0.52.0

* Python version: 3.8.2

* Operating System: Linux

### Description

Currently, the only way to override the settings with values from the FastAPI app is through environment variables (see the [Todo](https://github.com/identixone/fastapi_contrib/blob/081670603917b1b7e9646c75fba5614b09823a3e/fastapi_contrib/conf.py#L72)).

Can we implement this functionality directly, so that we do not have to go through environment variables?
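One way the requested precedence could work is sketched below in plain Python. This is not fastapi_contrib's API: the `Settings` fields, the `CONTRIB_` environment prefix and the `load_settings` function are all hypothetical, chosen only to show defaults being overridden by environment variables, which are in turn overridden by values passed explicitly from the app.

```python
import os
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class Settings:
    # Hypothetical settings; the field names are illustrative only.
    debug: bool = False
    log_level: str = "INFO"


def load_settings(overrides=None, environ=None):
    """Build settings from defaults, then env vars, then explicit overrides.

    Explicit overrides (e.g. passed in by the FastAPI app at startup) win over
    environment variables, which win over the dataclass defaults.
    """
    environ = os.environ if environ is None else environ
    settings = Settings()
    # Layer 1: environment variables, using a hypothetical CONTRIB_ prefix.
    for f in fields(Settings):
        key = f"CONTRIB_{f.name.upper()}"
        if key in environ:
            raw = environ[key]
            value = raw.lower() in ("1", "true", "yes") if f.type is bool else raw
            settings = replace(settings, **{f.name: value})
    # Layer 2: explicit overrides from the application, validated by name.
    if overrides:
        unknown = set(overrides) - {f.name for f in fields(Settings)}
        if unknown:
            raise KeyError(f"unknown settings: {sorted(unknown)}")
        settings = replace(settings, **overrides)
    return settings
```

For example, `load_settings({"log_level": "DEBUG"}, environ={"CONTRIB_DEBUG": "true"})` keeps `debug=True` from the environment but takes `log_level` from the app. Keeping the settings object frozen means the app configures it once at startup rather than mutating it later.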

|

closed

|

2021-02-11T08:37:00Z

|

2021-03-17T08:42:11Z

|

https://github.com/identixone/fastapi_contrib/issues/166

|

[] |

pheanex

| 2

|

SALib/SALib

|

numpy

| 541

|

SALib.sample.sobol.sample raises ValueError with "skip_values" set to zero.

|

Using SALib 1.4.6 with Python 3.10.6, the following code reproduces the error:

```python
from SALib.sample.sobol import sample
from SALib.test_functions import Ishigami
import numpy as np

problem = {
    'num_vars': 3,
    'names': ['x1', 'x2', 'x3'],
    'bounds': [[-3.14159265359, 3.14159265359],
               [-3.14159265359, 3.14159265359],
               [-3.14159265359, 3.14159265359]]
}

param_values = sample(problem, 1024, skip_values=0)
```

Error message is as follows:

```

ValueError Traceback (most recent call last)

Cell In [22], line 13

4 import numpy as np

6 problem = {

7 'num_vars': 3,

8 'names': ['x1', 'x2', 'x3'],

(...)

11 [-3.14159265359, 3.14159265359]]

12 }

---> 13 param_values = sample(problem, 1024, skip_values=0)

File ~/opt/anaconda3/envs/sensitivity/lib/python3.10/site-packages/SALib/sample/sobol.py:129, in sample(problem, N, calc_second_order, scramble, skip_values, seed)

127 qrng.fast_forward(M)

128 elif skip_values < 0 or isinstance(skip_values, int):

--> 129 raise ValueError("`skip_values` must be a positive integer.")

131 # sample Sobol' sequence

132 base_sequence = qrng.random(N)

ValueError: `skip_values` must be a positive integer.

```
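The quoted check on line 128, `skip_values < 0 or isinstance(skip_values, int)`, is true for any integer, including 0, because the `isinstance` test is not negated. The sketch below shows the validation that line appears to intend; `resolve_skip_values` is a hypothetical helper, not SALib's actual fix. It accepts any non-negative int (0 meaning "skip nothing") and rejects negatives and non-integers.

```python
def resolve_skip_values(skip_values):
    """Return how many Sobol' points to fast-forward past (hypothetical helper).

    Accepts any non-negative int; 0 means no points are skipped.
    Rejects negative values and non-integers.
    """
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(skip_values, bool) or not isinstance(skip_values, int):
        raise ValueError("`skip_values` must be a non-negative integer.")
    if skip_values < 0:
        raise ValueError("`skip_values` must be a non-negative integer.")
    return skip_values
```

A caller would then only fast-forward when the result is positive, e.g. `if resolve_skip_values(skip_values) > 0: qrng.fast_forward(skip_values)`, so `skip_values=0` simply draws from the start of the sequence.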

|

closed

|

2022-10-17T16:57:57Z

|

2022-10-17T22:57:56Z

|

https://github.com/SALib/SALib/issues/541

|

[] |

ddebnath-nrel

| 1

|

httpie/cli

|

python

| 1,043

|

Consider joining efforts with xh in porting HTTPie to Rust

|

HTTPie is one of a few tools that I recommend to everyone. However, because it is written in Python, it can suffer from slow startup and occasional installation problems.

Some lovely contributors and I have been working on [porting HTTPie to Rust](https://github.com/ducaale/xh). Given that an [official Rust version of HTTPie](https://crates.io/crates/httpie) is now being planned, we would love to help in any way we can.

|

closed

|

2021-02-28T21:04:18Z

|

2021-04-02T15:18:36Z

|

https://github.com/httpie/cli/issues/1043

|

[] |

ducaale

| 2

|

pyro-ppl/numpyro

|

numpy

| 1,694

|

bug in NeuTraReparam

|

Minimal example:

```python

import jax
import jax.numpy as jnp
from jax.random import PRNGKey
import numpyro
import numpyro.distributions as dist
from numpyro.infer import MCMC, NUTS, Trace_ELBO, SVI
from numpyro.infer.reparam import NeuTraReparam
from numpyro.infer.autoguide import AutoBNAFNormal

n = 100
p = 10  # n_dim x
q = 5  # n_dim y
k = min(3, p, q)  # n_dim latent

X = dist.MultivariateNormal(jnp.zeros(p), jnp.eye(p, p)).sample(PRNGKey(0), (n,))
Y = dist.MultivariateNormal(jnp.zeros(q), jnp.eye(q, q)).sample(PRNGKey(1), (n,))

def model(X, Y=None):
    with numpyro.plate('_k', k):
        P_cov = numpyro.sample('P_cov', dist.InverseGamma(3, 1))
    with numpyro.plate('_q', q):
        Q_cov = numpyro.sample('Q_cov', dist.InverseGamma(3, 1))
    P_cov = P_cov * jnp.eye(k, k)
    Q_cov = Q_cov * jnp.eye(q, q)
    with numpyro.plate('p', p):
        P = numpyro.sample('P', dist.MultivariateNormal(jnp.zeros(k), P_cov))
    with numpyro.plate('k', k):