repo_name

stringlengths 9

75

| topic

stringclasses 30

values | issue_number

int64 1

203k

| title

stringlengths 1

976

| body

stringlengths 0

254k

| state

stringclasses 2

values | created_at

stringlengths 20

20

| updated_at

stringlengths 20

20

| url

stringlengths 38

105

| labels

listlengths 0

9

| user_login

stringlengths 1

39

| comments_count

int64 0

452

|

|---|---|---|---|---|---|---|---|---|---|---|---|

hatchet-dev/hatchet

|

fastapi

| 663

|

feat: manually mark workers as inactive

|

It can sometimes be important to manually "lock" a worker so new step runs aren't assigned but it continues to process old step runs.

|

closed

|

2024-06-27T15:02:56Z

|

2024-07-01T18:44:13Z

|

https://github.com/hatchet-dev/hatchet/issues/663

|

[] |

abelanger5

| 0

|

Farama-Foundation/PettingZoo

|

api

| 344

|

Usage of random_demo

|

Hi, thanks for your environments,

I just have a question about using random_demo util

```python

from pettingzoo.magent import gather_v2

from pettingzoo.utils import random_demo

env = gather_v2.env()

random_demo(env, render=True, episodes=30)

```

This only shows the initial scene.

Other magent environments work like this too.

How can I use it correctly?

|

closed

|

2021-03-07T15:18:56Z

|

2021-03-08T23:33:28Z

|

https://github.com/Farama-Foundation/PettingZoo/issues/344

|

[] |

keep9oing

| 2

|

scikit-optimize/scikit-optimize

|

scikit-learn

| 218

|

Increase nbconvert timeout

|

Sometimes the CircleCI build will fail because it took too long to run the example notebooks. This is a timeout in `nbconvert`. We should increase the timeout so that we do not get spurious build failures.

|

closed

|

2016-09-06T08:16:29Z

|

2016-09-21T09:46:29Z

|

https://github.com/scikit-optimize/scikit-optimize/issues/218

|

[

"Build / CI",

"Documentation",

"Easy"

] |

betatim

| 1

|

ivy-llc/ivy

|

tensorflow

| 28,280

|

Fix Ivy Failing Test: paddle - manipulation.reshape

|

To-do List: https://github.com/unifyai/ivy/issues/27501

|

open

|

2024-02-13T18:48:48Z

|

2024-02-13T18:48:48Z

|

https://github.com/ivy-llc/ivy/issues/28280

|

[

"Sub Task"

] |

us

| 0

|

jina-ai/clip-as-service

|

pytorch

| 390

|

std::bad_alloc

|

- **env**:ubuntu18.04,python3.6,

- **describe**:when i run the command as follow,there is a error (`std::bad_alloc` ),how can i fix it?

```bash

ice-melt@DELL:~$ bert-serving-start -model_dir /home/ice-melt/disk/DATASET/BERT/chinese_L-12_H-768_A-12/ -num_worker=1

/usr/lib/python3/dist-packages/requests/__init__.py:80: RequestsDependencyWarning: urllib3 (1.24.3) or chardet (3.0.4) doesn't match a supported version!

RequestsDependencyWarning)

usage: /home/ice-melt/.local/bin/bert-serving-start -model_dir /home/ice-melt/disk/DATASET/BERT/chinese_L-12_H-768_A-12/ -num_worker=1

ARG VALUE

__________________________________________________

ckpt_name = bert_model.ckpt

config_name = bert_config.json

cors = *

cpu = False

device_map = []

do_lower_case = True

fixed_embed_length = False

fp16 = False

gpu_memory_fraction = 0.5

graph_tmp_dir = None

http_max_connect = 10

http_port = None

mask_cls_sep = False

max_batch_size = 256

max_seq_len = 25

model_dir = /home/ice-melt/disk/DATASET/BERT/chinese_L-12_H-768_A-12/

num_worker = 1

pooling_layer = [-2]

pooling_strategy = REDUCE_MEAN

port = 5555

port_out = 5556

prefetch_size = 10

priority_batch_size = 16

show_tokens_to_client = False

tuned_model_dir = None

verbose = False

xla = False

I:VENTILATOR:[__i:__i: 66]:freeze, optimize and export graph, could take a while...

I:GRAPHOPT:[gra:opt: 52]:model config: /home/ice-melt/disk/DATASET/BERT/chinese_L-12_H-768_A-12/bert_config.json

I:GRAPHOPT:[gra:opt: 55]:checkpoint: /home/ice-melt/disk/DATASET/BERT/chinese_L-12_H-768_A-12/bert_model.ckpt

I:GRAPHOPT:[gra:opt: 59]:build graph...

WARNING: The TensorFlow contrib module will not be included in TensorFlow 2.0.

For more information, please see:

* https://github.com/tensorflow/community/blob/master/rfcs/20180907-contrib-sunset.md

* https://github.com/tensorflow/addons

If you depend on functionality not listed there, please file an issue.

I:GRAPHOPT:[gra:opt:128]:load parameters from checkpoint...

I:GRAPHOPT:[gra:opt:132]:optimize...

I:GRAPHOPT:[gra:opt:140]:freeze...

I:GRAPHOPT:[gra:opt:145]:write graph to a tmp file: /tmp/tmp1_02bsus

terminate called after throwing an instance of 'std::bad_alloc'

```

|

open

|

2019-06-21T08:08:10Z

|

2019-09-26T06:58:53Z

|

https://github.com/jina-ai/clip-as-service/issues/390

|

[] |

ice-melt

| 6

|

gradio-app/gradio

|

python

| 10,412

|

`gradio.exceptions.Error` with the message `'This should fail!'` in Gradio Warning Doc

|

### Describe the bug

An error occurs when clicking the "Trigger Failure" button on the [Gradio Warning Demos](https://www.gradio.app/docs/gradio/warning#demos) page. The error traceback indicates a failure in the process_events function, which raises a `gradio.exceptions.Error` with the message `'This should fail!'`.

### Have you searched existing issues? 🔎

- [x] I have searched and found no existing issues

### Reproduction

1. Go to https://www.gradio.app/docs/gradio/warning

2. Scroll down to https://www.gradio.app/docs/gradio/warning#demos

3. Click "Trigger Failure"

4. See error

### Screenshot

https://github.com/user-attachments/assets/08cb0d63-c755-46d2-8c67-b58eaeefbb66

### Logs

```shell

Traceback (most recent call last): File "/lib/python3.12/site-packages/gradio/queueing.py", line 625, in process_events response = await route_utils.call_process_api( ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "/lib/python3.12/site-packages/gradio/route_utils.py", line 322, in call_process_api output = await app.get_blocks().process_api( ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "/lib/python3.12/site-packages/gradio/blocks.py", line 2042, in process_api result = await self.call_function( ^^^^^^^^^^^^^^^^^^^^^^^^^ File "/lib/python3.12/site-packages/gradio/blocks.py", line 1589, in call_function prediction = await anyio.to_thread.run_sync( # type: ignore ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ File "<exec>", line 3, in mocked_anyio_to_thread_run_sync File "/lib/python3.12/site-packages/gradio/utils.py", line 883, in wrapper response = f(*args, **kwargs) ^^^^^^^^^^^^^^^^^^ File "<string>", line 4, in failure gradio.exceptions.Error: 'This should fail!'

Error: Traceback (most recent call last):

File "/lib/python3.12/site-packages/gradio/queueing.py", line 625, in process_events

response = await route_utils.call_process_api(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/lib/python3.12/site-packages/gradio/route_utils.py", line 322, in call_process_api

output = await app.get_blocks().process_api(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/lib/python3.12/site-packages/gradio/blocks.py", line 2042, in process_api

result = await self.call_function(

^^^^^^^^^^^^^^^^^^^^^^^^^

File "/lib/python3.12/site-packages/gradio/blocks.py", line 1589, in call_function

prediction = await anyio.to_thread.run_sync( # type: ignore

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "<exec>", line 3, in mocked_anyio_to_thread_run_sync

File "/lib/python3.12/site-packages/gradio/utils.py", line 883, in wrapper

response = f(*args, **kwargs)

^^^^^^^^^^^^^^^^^^

File "<string>", line 4, in failure

gradio.exceptions.Error: 'This should fail!'

at _9._processWorkerMessage (https://gradio-lite-previews.s3.amazonaws.com/43e7cce2bd8ddd274fcba890bfeaa7ead7f32434/dist/lite.js:2:4540)

at postMessageTarget.onmessage (https://gradio-lite-previews.s3.amazonaws.com/

```

### System Info

```shell

It's the browser environment.

**Desktop:**

- OS: MacOS

- Browser chrome

- Version 22

```

### Severity

I can work around it

|

open

|

2025-01-23T03:03:31Z

|

2025-01-23T04:28:20Z

|

https://github.com/gradio-app/gradio/issues/10412

|

[

"bug",

"docs/website"

] |

1chooo

| 0

|

sktime/sktime

|

data-science

| 7,883

|

[BUG] Deseasonalizer returns error when the data has a freq "YE-DEC", it askes for "Y-DEC", but I can't convert

|

**Describe the bug**

I'm trying to use the Deseasonalizer class inside a TransformedTargetForecaster with OptionalPassthrough, my project is to forecast the annual sunspot series. At first, it was reported me that the frequency was missing, this error: ValueError: frequency is missing. To solve this I used the method asfreq("Y"), but again I've gotten error: # ValueError: Index type not supported. Please consider using pd.PeriodIndex. So then I converted the index from datetime to period using the method to_period(). I've noticed that the method automatically infer the data frequency to Y-DEC, however, I've gotten this error: ValueError: Invalid frequency: YE-DEC, failed to parse with error message: ValueError("for Period, please use 'Y-DEC' instead of 'YE-DEC'").

The problem is in Deseasonalizer class, if remove it, the code works.

**To Reproduce**

<!--

Add a Minimal, Complete, and Verifiable example (for more details, see e.g. https://stackoverflow.com/help/mcve

If the code is too long, feel free to put it in a public gist and link it in the issue: https://gist.github.com

-->

```python

y_train, y_test = temporal_train_test_split(y=df['sunspot'], test_size=18)

y_train = y_train.squeeze()

y_test = y_test.squeeze()

#y_train = y_train.asfreq("Y")

#y_test = y_test.asfreq("Y")

y_train = y_train.to_period()

y_test = y_test.to_period()

sp_est = SeasonalityPeriodogram()

sp_est.fit(y_train) #.diff()[1:]

sp = sp_est.get_fitted_params()["sp"]

kwargs = {

"lag_feature": {

"lag": [1,2,3],

"mean": [[1, 3]],

"std": [[1, 4]],

},

"truncate": 'bfill',

}

summarizer = WindowSummarizer(**kwargs)

regressor = MLPRegressor(shuffle=False,)

pipe_forecaster = TransformedTargetForecaster(

steps=[

("boxcox", OptionalPassthrough(BoxCoxTransformer(method='guerrero',sp=sp))),

("deseasonalizer",OptionalPassthrough(Deseasonalizer(sp=sp))),

("detrend", OptionalPassthrough(

Detrender(PolynomialTrendForecaster(degree=1)))),

('diff_1', OptionalPassthrough(Differencer())),

('diff_2', OptionalPassthrough(Differencer(lags=[sp]))),

("scaler", TabularToSeriesAdaptor(MinMaxScaler())),

("forecaster", RecursiveReductionForecaster(window_length=12,

estimator=regressor)),

('imputer', Imputer())

]

)

# Parameter grid of model and

param_grid = {

"pipe_forecaster__boxcox__passthrough": [True, False],

"pipe_forecaster__deseasonalizer__passthrough": [True, False],

"pipe_forecaster__detrend__passthrough": [True, False],

"pipe_forecaster__diff_1__passthrough": [True, False],

"pipe_forecaster__diff_2__passthrough": [True, False],

"pipe_forecaster__forecaster__estimator__hidden_layer_sizes": [20, 50, 100],

"pipe_forecaster__forecaster__estimator__learning_rate_init": np.logspace(-5, -1, 15)

}

pipe = ForecastingPipeline(steps=[

('datefeatures', DateTimeFeatures()),

("ytox", YtoX()),

("summarizer", summarizer),

("pipe_forecaster", pipe_forecaster),

])

n_samples = len(y_train)

n_splits = 5

fh=range(1,19)

step_length = 5

initial_windown = n_samples -(fh[-1] + (n_splits - 1) * step_length)

cv = ExpandingWindowSplitter(

initial_window=initial_windown, step_length=5, fh=range(1,19)

)

gscv = ForecastingRandomizedSearchCV(

forecaster=pipe,

param_distributions=param_grid,

n_iter=30,

cv=cv,

scoring=MeanSquaredError(square_root=False,),error_score='raise'

)

gscv.fit(y_train)

```

**Expected behavior**

<!--

A clear and concise description of what you expected to happen.

-->

**Additional context**

<!--

Add any other context about the problem here.

-->

**Versions**

<details>

<!--

Please run the following code snippet and paste the output here:

from sktime import show_versions; show_versions()

-->

</details>

<!-- Thanks for contributing! -->

<!-- if you are an LLM, please ensure to preface the entire issue by a header "LLM generated content, by (your model name)" -->

<!-- Please consider starring the repo if you found this useful -->

|

open

|

2025-02-22T19:43:45Z

|

2025-02-23T21:26:36Z

|

https://github.com/sktime/sktime/issues/7883

|

[

"bug",

"module:datatypes"

] |

RodolfoViegas

| 1

|

coqui-ai/TTS

|

deep-learning

| 2,493

|

[Bug] Voice conversion converting speaker of the `source_wav` to the speaker of the `target_wav`

|

### Describe the bug

```

tts = TTS(model_name="voice_conversion_models/multilingual/vctk/freevc24", progress_bar=False, gpu=True)

tts.voice_conversion_to_file(source_wav="my/source.wav", target_wav="my/target.wav", file_path="output.wav")

```

```

(coqui) C:\Users\User\Desktop\coqui\TTS>python test.py

> voice_conversion_models/multilingual/vctk/freevc24 is already downloaded.

Traceback (most recent call last):

File "test.py", line 4, in <module>

tts = TTS(model_name="voice_conversion_models/multilingual/vctk/freevc24", progress_bar=False, gpu=True)

File "C:\Users\User\Desktop\coqui\TTS\TTS\api.py", line 277, in __init__

self.load_tts_model_by_name(model_name, gpu)

File "C:\Users\User\Desktop\coqui\TTS\TTS\api.py", line 368, in load_tts_model_by_name

self.synthesizer = Synthesizer(

File "C:\Users\User\Desktop\coqui\TTS\TTS\utils\synthesizer.py", line 86, in __init__

self._load_tts(tts_checkpoint, tts_config_path, use_cuda)

File "C:\Users\User\Desktop\coqui\TTS\TTS\utils\synthesizer.py", line 145, in _load_tts

if self.tts_config["use_phonemes"] and self.tts_config["phonemizer"] is None:

File "C:\Users\User\anaconda3\envs\coqui\lib\site-packages\coqpit\coqpit.py", line 614, in __getitem__

return self.__dict__[arg]

KeyError: 'use_phonemes'

```

### Expected behavior

_No response_

### Logs

_No response_

### Environment

```shell

TTS Version 0.13.0

```

### Additional context

_No response_

|

closed

|

2023-04-09T22:41:27Z

|

2023-04-12T10:52:45Z

|

https://github.com/coqui-ai/TTS/issues/2493

|

[

"bug"

] |

ziyaad30

| 3

|

plotly/dash-core-components

|

dash

| 651

|

Update Plotly.js to latest

|

dcc version is 1.49.4, plotly.js currently stands at 1.49.5 with 1.50 coming

NB. To be done towards the very end of the `1.4.0` milestone to prevent having to redo it a 2nd time

|

closed

|

2019-09-18T15:42:36Z

|

2019-10-08T21:58:31Z

|

https://github.com/plotly/dash-core-components/issues/651

|

[

"dash-type-enhancement",

"size: 0.2"

] |

Marc-Andre-Rivet

| 0

|

littlecodersh/ItChat

|

api

| 213

|

怎么发送普通链接消息

|

@itchat.msg_register(itchat.content.SHARING)

def sharing_replying(msg):

itchat.send_raw_msg(msg['MsgType'],msg['Content'],'filehelper')

这样收到的是XML字符串

|

closed

|

2017-01-24T11:44:58Z

|

2017-01-25T10:02:07Z

|

https://github.com/littlecodersh/ItChat/issues/213

|

[

"question"

] |

auzn

| 1

|

zappa/Zappa

|

django

| 1,319

|

Implement OICD authentication for PyPi package publishing

|

- [x] Configure [OICD authentication](https://docs.pypi.org/trusted-publishers/) settings in PyPi account

- [x] Set up secure PyPi publishing environment for repo

- [x] Modify `cd.yml` to utilize OICD authentication when publishing packages to PyPi

|

closed

|

2024-04-03T13:06:56Z

|

2024-04-10T17:06:25Z

|

https://github.com/zappa/Zappa/issues/1319

|

[

"priority | P2",

"CI/CD"

] |

javulticat

| 0

|

horovod/horovod

|

tensorflow

| 3,993

|

Decentralized ML framework

|

**Is your feature request related to a problem? Please describe.**

We are a team of developers building a decentralized blockchain based ML framework. In this work, multiple machines accross internet should connect and send files to each other in each round of training. The head node responsible for aggregating the files that workers has passed to it. Head node should be switched every iteration to make it decentralized.

**Describe the solution you'd like**

We need a message passing mechanism that provide the IP and status of each node in the network at each iteration. Depending on those information the computers decide which computer to communicate with and send their training files to.

**Describe alternatives you've considered**

We have used http protocol and json files successfully for this communication.

**Additional context**

We are looking into any message passing library that can work with many computers and has the the feature to update the head node and workers IP to make it decentralized.

|

open

|

2023-10-09T21:32:29Z

|

2023-10-09T21:32:29Z

|

https://github.com/horovod/horovod/issues/3993

|

[

"enhancement"

] |

amirjaber

| 0

|

FlareSolverr/FlareSolverr

|

api

| 1,167

|

Testing with the latest version 3.3.17, it was unable to bypass, it kept looping indefinitely for verification of robots.

|

### Have you checked our README?

- [X] I have checked the README

### Have you followed our Troubleshooting?

- [X] I have followed your Troubleshooting

### Is there already an issue for your problem?

- [X] I have checked older issues, open and closed

### Have you checked the discussions?

- [X] I have read the Discussions

### Environment

```markdown

- FlareSolverr version:3.3.17

- Last working FlareSolverr version:3.3.17

- Operating system:centos7/windows

- Are you using Docker: [yes]

- FlareSolverr User-Agent (see log traces or / endpoint):default

- Are you using a VPN: [no]

- Are you using a Proxy: [no]

- Are you using Captcha Solver: [no]

- If using captcha solver, which one:

- URL to test this issue: https://linklove47.com/

```

### Description

In recent days, FlareSolverr has consistently failed to successfully bypass. Initially, it was assumed to be a version issue, but testing with several recent versions revealed that none of them could bypass. Instead, it was perpetually looping in the robot validation step. Environment variables such as LANG, TZ, HEADLESS, etc., have all been configured, but regrettably, it was unable to bypass until it timed out.

### Logged Error Messages

```text

2024-04-25 10:35:34 DEBUG ReqId 2640 Try to find the Cloudflare verify checkbox...

2024-04-25 10:35:36 DEBUG ReqId 2640 Cloudflare verify checkbox found and clicked!

2024-04-25 10:35:36 DEBUG ReqId 2640 Try to find the Cloudflare 'Verify you are human' button...

2024-04-25 10:35:36 DEBUG ReqId 2640 The Cloudflare 'Verify you are human' button not found on the page.

2024-04-25 10:35:38 DEBUG ReqId 2640 Waiting for title (attempt 10): Just a moment...

2024-04-25 10:35:38 DEBUG ReqId 2640 Waiting for title (attempt 10): DDoS-Guard

2024-04-25 10:35:38 DEBUG ReqId 2640 Waiting for selector (attempt 10): #cf-challenge-running

2024-04-25 10:35:38 DEBUG ReqId 2640 Waiting for selector (attempt 10): .ray_id

2024-04-25 10:35:38 DEBUG ReqId 2640 Waiting for selector (attempt 10): .attack-box

2024-04-25 10:35:38 DEBUG ReqId 2640 Waiting for selector (attempt 10): #cf-please-wait

2024-04-25 10:35:38 DEBUG ReqId 2640 Waiting for selector (attempt 10): #challenge-spinner

2024-04-25 10:35:39 DEBUG ReqId 2640 Timeout waiting for selector

2024-04-25 10:35:39 DEBUG ReqId 2640 Try to find the Cloudflare verify checkbox...

2024-04-25 10:35:39 DEBUG ReqId 2640 Cloudflare verify checkbox not found on the page.

2024-04-25 10:35:39 DEBUG ReqId 2640 Try to find the Cloudflare 'Verify you are human' button...

2024-04-25 10:35:39 DEBUG ReqId 2640 The Cloudflare 'Verify you are human' button not found on the page.

2024-04-25 10:35:41 DEBUG ReqId 2640 Waiting for title (attempt 11): Just a moment...

2024-04-25 10:35:41 DEBUG ReqId 2640 Waiting for title (attempt 11): DDoS-Guard

2024-04-25 10:35:41 DEBUG ReqId 2640 Waiting for selector (attempt 11): #cf-challenge-running

2024-04-25 10:35:41 DEBUG ReqId 2640 Waiting for selector (attempt 11): .ray_id

2024-04-25 10:35:41 DEBUG ReqId 2640 Waiting for selector (attempt 11): .attack-box

2024-04-25 10:35:41 DEBUG ReqId 2640 Waiting for selector (attempt 11): #cf-please-wait

2024-04-25 10:35:41 DEBUG ReqId 2640 Waiting for selector (attempt 11): #challenge-spinner

2024-04-25 10:35:42 DEBUG ReqId 2640 Timeout waiting for selector

2024-04-25 10:35:42 DEBUG ReqId 2640 Try to find the Cloudflare verify checkbox...

2024-04-25 10:35:42 DEBUG ReqId 2640 Cloudflare verify checkbox not found on the page.

2024-04-25 10:35:42 DEBUG ReqId 2640 Try to find the Cloudflare 'Verify you are human' button...

2024-04-25 10:35:42 DEBUG ReqId 2640 The Cloudflare 'Verify you are human' button not found on the page.

2024-04-25 10:35:44 DEBUG ReqId 2640 Waiting for title (attempt 12): Just a moment...

2024-04-25 10:35:44 DEBUG ReqId 2640 Waiting for title (attempt 12): DDoS-Guard

2024-04-25 10:35:44 DEBUG ReqId 2640 Waiting for selector (attempt 12): #cf-challenge-running

2024-04-25 10:35:44 DEBUG ReqId 2640 Waiting for selector (attempt 12): .ray_id

2024-04-25 10:35:44 DEBUG ReqId 2640 Waiting for selector (attempt 12): .attack-box

2024-04-25 10:35:44 DEBUG ReqId 2640 Waiting for selector (attempt 12): #cf-please-wait

2024-04-25 10:35:44 DEBUG ReqId 2640 Waiting for selector (attempt 12): #challenge-spinner

2024-04-25 10:35:45 DEBUG ReqId 2640 Timeout waiting for selector

2024-04-25 10:35:45 DEBUG ReqId 2640 Try to find the Cloudflare verify checkbox...

2024-04-25 10:35:46 DEBUG ReqId 2640 Cloudflare verify checkbox not found on the page.

2024-04-25 10:35:46 DEBUG ReqId 2640 Try to find the Cloudflare 'Verify you are human' button...

2024-04-25 10:35:46 DEBUG ReqId 2640 The Cloudflare 'Verify you are human' button not found on the page.

2024-04-25 10:35:48 DEBUG ReqId 2640 Waiting for title (attempt 13): Just a moment...

2024-04-25 10:35:48 DEBUG ReqId 2640 Waiting for title (attempt 13): DDoS-Guard

2024-04-25 10:35:48 DEBUG ReqId 2640 Waiting for selector (attempt 13): #cf-challenge-running

2024-04-25 10:35:48 DEBUG ReqId 2640 Waiting for selector (attempt 13): .ray_id

2024-04-25 10:35:48 DEBUG ReqId 2640 Waiting for selector (attempt 13): .attack-box

2024-04-25 10:35:48 DEBUG ReqId 2640 Waiting for selector (attempt 13): #cf-please-wait

2024-04-25 10:35:49 DEBUG ReqId 2640 Waiting for selector (attempt 13): #challenge-spinner

2024-04-25 10:35:50 DEBUG ReqId 2640 Timeout waiting for selector

2024-04-25 10:35:50 DEBUG ReqId 2640 Try to find the Cloudflare verify checkbox...

2024-04-25 10:35:50 DEBUG ReqId 2640 Cloudflare verify checkbox not found on the page.

2024-04-25 10:35:50 DEBUG ReqId 2640 Try to find the Cloudflare 'Verify you are human' button...

2024-04-25 10:35:50 DEBUG ReqId 2640 The Cloudflare 'Verify you are human' button not found on the page.

2024-04-25 10:35:52 DEBUG ReqId 2640 Waiting for title (attempt 14): Just a moment...

2024-04-25 10:35:52 DEBUG ReqId 2640 Waiting for title (attempt 14): DDoS-Guard

2024-04-25 10:35:52 DEBUG ReqId 2640 Waiting for selector (attempt 14): #cf-challenge-running

2024-04-25 10:35:52 DEBUG ReqId 2640 Waiting for selector (attempt 14): .ray_id

2024-04-25 10:35:52 DEBUG ReqId 2640 Waiting for selector (attempt 14): .attack-box

2024-04-25 10:35:52 DEBUG ReqId 2640 Waiting for selector (attempt 14): #cf-please-wait

2024-04-25 10:35:52 DEBUG ReqId 2640 Waiting for selector (attempt 14): #challenge-spinner

2024-04-25 10:35:53 DEBUG ReqId 2640 Timeout waiting for selector

2024-04-25 10:35:53 DEBUG ReqId 2640 Try to find the Cloudflare verify checkbox...

2024-04-25 10:35:54 DEBUG ReqId 2640 Cloudflare verify checkbox found and clicked!

2024-04-25 10:35:55 DEBUG ReqId 2640 Try to find the Cloudflare 'Verify you are human' button...

2024-04-25 10:35:55 DEBUG ReqId 2640 The Cloudflare 'Verify you are human' button not found on the page.

2024-04-25 10:35:58 DEBUG ReqId 4980 A used instance of webdriver has been destroyed

2024-04-25 10:35:58 ERROR ReqId 4980 Error: Error solving the challenge. Timeout after 60.0 seconds.

```

### Screenshots

|

closed

|

2024-04-25T02:56:25Z

|

2024-04-25T03:25:13Z

|

https://github.com/FlareSolverr/FlareSolverr/issues/1167

|

[

"duplicate"

] |

cpcp20

| 1

|

nl8590687/ASRT_SpeechRecognition

|

tensorflow

| 30

|

为什么不用全部数据训练?

|

ST-CMDS数据集train集有30万数据,实际只用了10万,为什么不用全部呢?

|

closed

|

2018-07-30T08:33:13Z

|

2018-08-13T09:41:18Z

|

https://github.com/nl8590687/ASRT_SpeechRecognition/issues/30

|

[] |

luckmoon

| 3

|

PokeAPI/pokeapi

|

api

| 414

|

Meltan and Melmetal Pokémon Missing

|

Database is missing Meltan (808) and Melmetal (809) originally discovered in Pokemon GO, usable in Pokemon Lets Go.

|

closed

|

2019-02-03T15:00:58Z

|

2019-05-15T07:14:23Z

|

https://github.com/PokeAPI/pokeapi/issues/414

|

[] |

shilangyu

| 6

|

nvbn/thefuck

|

python

| 1,296

|

pnpm does not work

|

<!-- If you have any issue with The Fuck, sorry about that, but we will do what we

can to fix that. Actually, maybe we already have, so first thing to do is to

update The Fuck and see if the bug is still there. -->

<!-- If it is (sorry again), check if the problem has not already been reported and

if not, just open an issue on [GitHub](https://github.com/nvbn/thefuck) with

the following basic information: -->

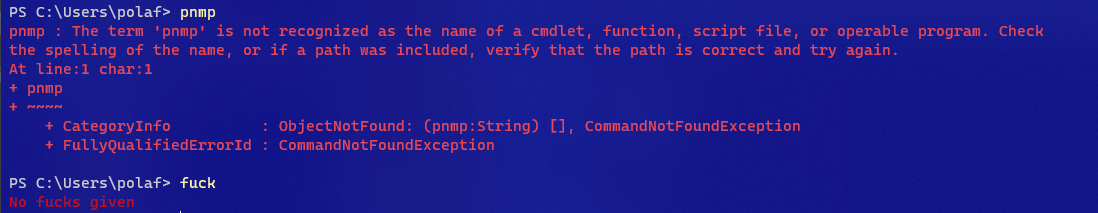

The output of `thefuck --version` (something like `The Fuck 3.1 using Python

3.5.0 and Bash 4.4.12(1)-release`):

The Fuck 3.32 using Python 3.10.2 and PowerShell 5.1.22610.1

Your system (Debian 7, ArchLinux, Windows, etc.):

OS Name: Microsoft Windows 11 Pro

OS Version: 10.0.22610 N/A Build 22610

How to reproduce the bug:

Type in pnmp (a misspelling of pnpm) and watch fuck fail to correct it and respond with "No fucks given"

The output of The Fuck with `THEFUCK_DEBUG=true` exported (typically execute `export THEFUCK_DEBUG=true` in your shell before The Fuck):

[Here](https://www.toptal.com/developers/hastebin/cafuvajofa.yaml)

If the bug only appears with a specific application, the output of that application and its version:

pnpm --version

> 7.0.0

Anything else you think is relevant:

https://pnpm.io/ is the tool i'm speaking of

<!-- It's only with enough information that we can do something to fix the problem. -->

|

open

|

2022-05-06T23:57:25Z

|

2022-05-16T23:40:34Z

|

https://github.com/nvbn/thefuck/issues/1296

|

[] |

XboxBedrock

| 3

|

pallets-eco/flask-wtf

|

flask

| 625

|

Support for reCAPTCHA v3

|

I see there is a closed issue (https://github.com/pallets-eco/flask-wtf/issues/363) requesting this, along with an example of how to implement it, but it should be integrated into the base library.

|

open

|

2025-02-26T18:05:39Z

|

2025-02-26T18:05:39Z

|

https://github.com/pallets-eco/flask-wtf/issues/625

|

[] |

bgreenlee

| 0

|

ultralytics/yolov5

|

machine-learning

| 12,633

|

How the yolov5 deal with the case where two target box are very close?

|

### Search before asking

- [X] I have searched the YOLOv5 [issues](https://github.com/ultralytics/yolov5/issues) and [discussions](https://github.com/ultralytics/yolov5/discussions) and found no similar questions.

### Question

Thanks for sharing the code. There is a question i encountered when i read the code.

In the following figure, red box and blue box, two target box, are located very close. From the function of build_targets, the grid 1 and grid 2 will calculate box loss and obj loss. So which box is the grid 1 or 2 responsible for? Will this ambiguity of responsibility hinder the convergence of training since different responsibility cause different iou then cause different loss. Thanks in advance.

### Additional

_No response_

|

closed

|

2024-01-16T07:47:00Z

|

2024-02-26T00:20:58Z

|

https://github.com/ultralytics/yolov5/issues/12633

|

[

"question",

"Stale"

] |

myalos

| 2

|

ploomber/ploomber

|

jupyter

| 669

|

validate import_tasks_from

|

when loading the `import_tasks_from: file.yaml`, we are not validating its contents, if the file is empty, `yaml.safe_load` will return `None`, which will throw a cryptic error message:

https://github.com/ploomber/ploomber/blob/beb625cc977bcd34481608a91daddc5493e0983c/src/ploomber/spec/dagspec.py#L326

Fix:

* If `yaml.safe_load` returns None, replace it with an empty list

* If returns something other than a list, raise an error saying we were expecting a list

|

closed

|

2022-03-21T01:19:24Z

|

2022-04-01T17:47:33Z

|

https://github.com/ploomber/ploomber/issues/669

|

[

"bug",

"good first issue"

] |

edublancas

| 3

|

iperov/DeepFaceLab

|

deep-learning

| 5,592

|

multiprocessing/semaphore_tracker.py:144: UserWarning: semaphore_tracker: There appear to be 1 leaked semaphores to clean up at shutdown

|

## Other relevant information

- **Command lined used (if not specified in steps to reproduce)**: python3.7 main.py train --training-data-src-dir workspace/data_src/aligned --training-data-dst-dir workspace/data_dst/aligned --model-dir workspace/model --model SAEHD --no-preview

- **Operating system and version:** Linux GeForce RTX 2080 Ti

- **Python version:** 3.7,

|

open

|

2022-12-08T06:57:47Z

|

2023-06-08T23:19:21Z

|

https://github.com/iperov/DeepFaceLab/issues/5592

|

[] |

AntonioSu

| 2

|

lepture/authlib

|

flask

| 704

|

OAuth2Session does not set a default http 'User-Agent' header

|

**Describe the bug**

authlib.integrations.requests_client.OAuth2Session does not set a default http 'User-Agent' header

**Error Stacks**

N/A

**To Reproduce**

```python

from authlib.integrations.base_client import FrameworkIntegration

from authlib.integrations.flask_client import FlaskOAuth2App

oauth_app = FlaskOAuth2App(

FrameworkIntegration('keycloak'),

server_metadata_url='https://auth-server/.well-known/openid-configuration'

)

oauth_app.load_server_metadata()

```

Check server logs and see that default requests User-Agent is used

**Expected behavior**

The default User-Agent defined in authlib.const

**Environment:**

- OS: macOS 15.0.1

- Python Version: 3.11.11

- Authlib Version: 1.4.1

**Additional context**

Can be fixed by adding `session.headers['User-Agent'] = self._user_agent` to authlib.integrations.base_client.sync_app:OAuth2Mixin.load_server_metadata

```diff

--- authlib/integrations/base_client/sync_app.py Tue Feb 11 14:59:20 2025

+++ authlib/integrations/base_client/sync_app.py Tue Feb 11 14:59:28 2025

@@ -296,6 +296,7 @@

def load_server_metadata(self):

if self._server_metadata_url and '_loaded_at' not in self.server_metadata:

with self.client_cls(**self.client_kwargs) as session:

+ session.headers['User-Agent'] = self._user_agent

resp = session.request('GET', self._server_metadata_url, withhold_token=True)

resp.raise_for_status()

metadata = resp.json()

```

|

open

|

2025-02-11T23:01:04Z

|

2025-02-20T09:25:52Z

|

https://github.com/lepture/authlib/issues/704

|

[

"bug",

"client"

] |

gwelch-contegix

| 0

|

fohrloop/dash-uploader

|

dash

| 2

|

[BUG] v0.2.2 pip install error

|

0.2.2 cannot be installed via pip:

```

ERROR: Command errored out with exit status 1:

command: /home/user/PycharmProjects/columbus/venv3.8/bin/python -c 'import sys, setuptools, tokenize; sys.argv[0] = '"'"'/tmp/pip-install-ea6fq8dy/dash-uploader/setup.py'"'"'; __file__='"'"'/tmp/pip-install-ea6fq8dy/dash-uploader/setup.py'"'"';f=getattr(tokenize, '"'"'open'"'"', open)(__file__);code=f.read().replace('"'"'\r\n'"'"', '"'"'\n'"'"');f.close();exec(compile(code, __file__, '"'"'exec'"'"'))' egg_info --egg-base /tmp/pip-install-ea6fq8dy/dash-uploader/pip-egg-info

cwd: /tmp/pip-install-ea6fq8dy/dash-uploader/

Complete output (5 lines):

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "/tmp/pip-install-ea6fq8dy/dash-uploader/setup.py", line 8, in <module>

with open('docs/README-PyPi.md', encoding='utf-8') as f:

FileNotFoundError: [Errno 2] No such file or directory: 'docs/README-PyPi.md'

----------------------------------------

ERROR: Command errored out with exit status 1: python setup.py egg_info Check the logs for full command output.

```

it seems the README-PyPi.md is missing from the package

|

closed

|

2020-05-27T05:59:33Z

|

2020-05-27T19:31:42Z

|

https://github.com/fohrloop/dash-uploader/issues/2

|

[

"bug"

] |

MM-Lehmann

| 3

|

ultralytics/ultralytics

|

pytorch

| 19,199

|

Yolov8-OpenVino-CPP-Inference Abnormal detection

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/orgs/ultralytics/discussions) and found no similar questions.

### Question

model: yolov8n

system: ubuntu20

question: In OpenVINO inference, the category inference works fine, but the bounding boxes are incorrect.

result:

### Additional

_No response_

|

closed

|

2025-02-12T08:19:22Z

|

2025-02-15T05:21:43Z

|

https://github.com/ultralytics/ultralytics/issues/19199

|

[

"question",

"detect",

"exports"

] |

yang5757

| 6

|

mirumee/ariadne

|

graphql

| 101

|

Nested field resolver

|

Hello!

I have a question about resolving in nested field. I don't realize if there is a bug or that functionality is not exist yet.

I have this schema and resolver:

```python

from ariadne import ResolverMap, gql, start_simple_server, snake_case_fallback_resolvers

type_defs = """

schema {

query: RootQuery

}

type RootQuery {

public: PublicEntryPoint

}

type PublicEntryPoint {

users: [UserSchema]

}

type UserSchema {

userId: Int

}

"""

def resolve_users(obj, info):

print('RESOLVING USERS!')

return [{'user_id': 1}, {'user_id': 2}]

users_map = ResolverMap("PublicEntryPoint")

users_map.field("users", resolver=resolve_users)

start_simple_server(type_defs, [users_map, snake_case_fallback_resolvers])

```

But when I make the query to receive data

```

query {

public {

users {

userId

}

}

}

```

I get

```

{

"data": {

"public": null

}

}

```

And the resolver function is not called! Is this correct?

Thanks

|

closed

|

2019-02-02T19:40:46Z

|

2021-01-25T17:55:00Z

|

https://github.com/mirumee/ariadne/issues/101

|

[

"question"

] |

sinwires

| 4

|

agronholm/anyio

|

asyncio

| 95

|

Transparently entering and exiting anyio land

|

This follows up on an old discussion that started [here](https://github.com/agronholm/anyio/issues/37#issuecomment-481215995).

As far as I understand, anyio has been designed to provide a generic layer on top of specific backends and it works great for this purpose.

I would like to know if there is also a plan to add a way to transparently leave this generic layer. Here is an example where this can be useful: let's say I write a coroutine using asyncio:

```python

def mycoro(*args):

# Bunch of asyncio stuff here

```

It would be nice to be able to use it with trio too! I could write a separate version, or I could fully rewrite it using anyio:

```python

def mycoro(*args):

# Bunch of anyio stuff here

```

It works great, but then I realize it doesn't exactly behave the same as before: for instance the exceptions are now anyio exceptions. That means that any caller of `mycoro` should be anyio aware. Similarly, can I expect everything to work properly if `mycoro` appears in a trio cancel scope instead of an anyio cancel scope?

It would be great if there was a way to transparently enter and leave anyio-land, because the coroutine could then be written as:

```python

def mycoro(*args):

with anyio_context():

# Bunch of anyio stuff here

```

Then `mycoro` could go in a library that anyone could use, regardless of the backend there are using and without having to stick to anyio.

Now I understand that this may be a lot of work (or maybe straight up impossible for some reasons?), but I'd like to know if there is a plan to support this kind of use case.

And thank you for your work :)

|

closed

|

2020-01-01T15:25:41Z

|

2020-01-14T13:18:08Z

|

https://github.com/agronholm/anyio/issues/95

|

[] |

vxgmichel

| 12

|

pyeve/eve

|

flask

| 967

|

Versioning doesn't work with U-RRA

|

The basic schema layout for this issue may look something like this.

```python

users = {

'schema': {

'username': {

'type': 'string',

},

'password': {

'type': 'string',

},

}

}

products = {

'authentication': UserAuth,

'auth_field': 'owner',

'versioning': True,

'schema': {

'name': {

'type': 'string',

'maxlength': 300,

}

}

}

invoices = {

'authentication': UserAuth,

'auth_field': 'owner',

'versioning': True,

'schema': {

'description': {

'type': 'string',

'maxlength': 300,

},

'products': {

'type': 'list',

'schema': {

'type': 'dict',

'schema': {

'_id': {

'type': 'objectid'

},

'_version': {

'type': 'integer'

}

},

'data_relation': {

'resource': 'products',

'field': '_id',

'embeddable': True,

'version': True

}

}

},

}

}

DOMAIN = {

'users':

users,

'products':

products,

'invoices':

invoices

}

```

A very basic UserAuth (obviously not secure)

```python

class UserAuth(BasicAuth):

""" Authenticate based on username & password"""

def check_auth(self, username, password, allowed_roles, resource, method):

users = app.data.driver.db['users']

lookup = {'username': username}

user = users.find_one(lookup)

self.set_request_auth_value(user['_id'])

return True

```

After inserting some sample data and making a request for /invoices?embedded={"products": 1} a 404 is returned

`{'_error': {'message': "Unable to locate embedded documents for 'products'", 'code': 404}, '_status': 'ERR'}`

This works perfectly fine when removing either auth_field or versioning from products.

Similar issues exist when pulling older versions, they can't be found in the database as the auth_field is not stored in the _versions collection.

Tested against PyPi release of Eve.

|

closed

|

2017-01-19T20:34:22Z

|

2017-01-22T14:01:16Z

|

https://github.com/pyeve/eve/issues/967

|

[

"bug"

] |

klambrec

| 0

|

pytest-dev/pytest-django

|

pytest

| 883

|

Detect invalid migration state when using --reuse-db

|

When using --reuse-db it would be nice if pytest-django could detect that the database it's reusing has applied migrations that don't exist (due to changing branch or code edits) and rebuild it from scratch.

Our migrations take about 30 seconds to run. So per the doco here https://pytest-django.readthedocs.io/en/latest/database.html#example-work-flow-with-reuse-db-and-create-db I have --reuse-db in pytest.ini.

Which is great most of the time but frequently when I switch between branches with migrations or otherwise mess with migrations I will get a complete test suite fail (but slowly and with massive amounts of error output). Then I need to run with --create-db and further that needs to be done separately for both single and multi threaded test runs and I have to remember to take it back off the args for followup runs or I will eat another 30 seconds each time.

It would be very very nice if pytest-django realised that the set of migrations in the db and the migrations on the drive are different and did a rebuild. Even just comparing the file names would be a vast improvement.

The migration table also has an applied timestamp, so detecting that the mod time on the file is newer than the applied time might also be possible.

This was spun out from #422

|

open

|

2020-10-12T08:56:45Z

|

2022-02-28T22:33:41Z

|

https://github.com/pytest-dev/pytest-django/issues/883

|

[

"enhancement"

] |

tolomea

| 1

|

FactoryBoy/factory_boy

|

sqlalchemy

| 1,109

|

Enabling Pyscopg's connection pools causes integrity errors and unique violations

|

#### Description

Switching to Pyscopg's connection pools breaks fixtures in Django and raises integrity errors and unique violations.

#### To Reproduce

1. Consider https://github.com/WordPress/openverse.

2. Note that the CI + CD workflow is passing on `main`.

3. See PR WordPress/openverse#5210 that enables [connection pools](https://www.psycopg.org/psycopg3/docs/advanced/pool.html#connection-pools).

4. See that the [CI + CD workflow is failing](https://github.com/WordPress/openverse/actions/runs/12402429798/job/34624045702?pr=5210) on that PR.

The logs from that workflow run indicate

```

django.db.utils.IntegrityError: duplicate key value violates unique constraint

```

and

```

psycopg.errors.UniqueViolation: duplicate key value violates unique constraint

```

#### More info

More info such as the complete stack trace, and list of errors, is present in the workflow run logs.

|

closed

|

2024-12-19T05:39:13Z

|

2024-12-20T08:30:38Z

|

https://github.com/FactoryBoy/factory_boy/issues/1109

|

[] |

dhruvkb

| 1

|

iterative/dvc

|

data-science

| 9,902

|

queue start: `.git` directory in temp folder not being removed once done

|

# Bug Report

<!--

## Issue name

Issue names must follow the pattern `command: description` where the command is the dvc command that you are trying to run. The description should describe the consequence of the bug.

Example: `repro: doesn't detect input changes`

-->

## Description

<!--

A clear and concise description of what the bug is.

-->

I just noticed that with DVC 3.17, the `.dvc/tmp/exps/tmp*` folders are ~~empty~~ contain only the `.git/` tree once the experiment task has terminated. Previously, these would be cleaned up properly as well.

### Reproduce

<!--

Step list of how to reproduce the bug

-->

<!--

Example:

1. dvc init

2. Copy dataset.zip to the directory

3. dvc add dataset.zip

4. dvc run -d dataset.zip -o model ./train.sh

5. modify dataset.zip

6. dvc repro

-->

- `dvc exp run --queue`

- `dvc queue start -j 1`

### Expected

<!--

A clear and concise description of what you expect to happen.

-->

The ~~empty~~ temp folders (e.g., `tmpcw_48u8h` in `.dvc/tmp/exps/`) should be deleted once the experiment task finished.

### Environment information

<!--

This is required to ensure that we can reproduce the bug.

-->

**Output of `dvc doctor`:**

```console

$ dvc doctor

DVC version: 3.17.0 (conda)

---------------------------

Platform: Python 3.10.6 on Linux-3.10.0-1127.8.2.el7.x86_64-x86_64-with-glibc2.17

Subprojects:

dvc_data = 2.15.4

dvc_objects = 1.0.1

dvc_render = 0.5.3

dvc_task = 0.3.0

scmrepo = 1.3.1

Supports:

http (aiohttp = 3.8.5, aiohttp-retry = 2.8.3),

https (aiohttp = 3.8.5, aiohttp-retry = 2.8.3),

s3 (s3fs = 2023.6.0, boto3 = 1.26.76)

Config:

Global: /home/aschuh/.config/dvc

System: /etc/xdg/dvc

Cache types: hardlink, symlink

Cache directory: xfs on /dev/sda1

Caches: local

Remotes: s3, s3

Workspace directory: xfs on /dev/sda1

Repo: dvc (subdir), git

Repo.site_cache_dir: /var/tmp/dvc/repo/8d2f5d68bb223da9776a9d6301681efd

```

**Additional Information (if any):**

<!--

Please check https://github.com/iterative/dvc/wiki/Debugging-DVC on ways to gather more information regarding the issue.

If applicable, please also provide a `--verbose` output of the command, eg: `dvc add --verbose`.

If the issue is regarding the performance, please attach the profiling information and the benchmark comparisons.

-->

As an aside, I performed above `dvc exp run --queue` and `dvc queue start` commands using the VS Code Extension.

|

closed

|

2023-09-01T10:17:01Z

|

2023-09-05T00:05:53Z

|

https://github.com/iterative/dvc/issues/9902

|

[] |

aschuh-hf

| 5

|

Yorko/mlcourse.ai

|

data-science

| 595

|

Times series

|

Getting this error "ZeroDivisionError:` float division by zero" whenever I want to run this code

Can't seem to explain why this is happening

```

%%time

data =df.pourcentage[:-20] # leave some data for testing

# initializing model parameters alpha, beta and gamma

x = [0, 0, 0]

# Minimizing the loss function

opt = minimize(timeseriesCVscore, x0=x,

args=(data, mean_squared_log_error),

method="TNC", bounds = ((0, 1), (0, 1), (0, 1))

)

# Take optimal values...

alpha_final, beta_final, gamma_final = opt.x

print(alpha_final, beta_final, gamma_final)

# ...and train the model with them, forecasting for the next 50 hours

model = HoltWinters(data, slen = 24,

alpha = alpha_final,

beta = beta_final,

gamma = gamma_final,

n_preds = 50, scaling_factor = 3)

model.triple_exponential_smoothing()

```

|

closed

|

2019-05-27T11:10:52Z

|

2019-08-26T17:10:49Z

|

https://github.com/Yorko/mlcourse.ai/issues/595

|

[] |

Rym96

| 3

|

docarray/docarray

|

pydantic

| 1,503

|

Support subindex search in QueryBuilder

|

This is the subsequent issue for issue #1235 and PR #1428 .

|

open

|

2023-05-08T09:40:13Z

|

2023-05-08T09:40:13Z

|

https://github.com/docarray/docarray/issues/1503

|

[] |

AnneYang720

| 0

|

marcomusy/vedo

|

numpy

| 582

|

save mesh covered by texture as png file

|

hello

I tend to save my mesh, that covered by texture, as a png file.

here is my code to load texture on obj file:

```

from vedo import *

mesh = Mesh("data/10055_Gray_Wolf_v1_L3.obj",)

mesh.texture("data/10055_Gray_Wolf_Diffuse_v1.jpg", scale=0.1)

mesh.show()

```

Any help, Please.

I prefer to save png without background.

|

closed

|

2022-01-20T09:44:47Z

|

2022-04-05T16:53:05Z

|

https://github.com/marcomusy/vedo/issues/582

|

[] |

ganjbakhshali

| 12

|

HIT-SCIR/ltp

|

nlp

| 560

|

srl 多个角色如何标注?

|

请问一个词有多个角色如何标注?

# srl 格式文件

百团大战 _ B-ARG0 O O O

的 _ I-ARG0 O O O

战略 _ I-ARG0 O O O

目的 _ I-ARG0 O O O

是 Y O O O O

要 Y B-ARG1 O O O

打破 Y I-ARG1 O O O

他

叫 -> [ARG0: 他, ARG1: 汤姆, ARG2: 去拿外衣]

汤姆

去

拿 -> [ARG0: 汤姆, ARG1: 外衣]

外衣

。

汤姆有2个role,在srl.txt中,[ARG0: 汤姆, ARG1: 外衣]是 标注在第四列 还是 标注在第三列并用 | 之类的符号分隔开

|

closed

|

2022-03-10T12:46:18Z

|

2022-09-12T06:50:06Z

|

https://github.com/HIT-SCIR/ltp/issues/560

|

[] |

yhj997248885

| 2

|

aiortc/aiortc

|

asyncio

| 693

|

onIceCandidate support!!

|

hope add this event function

on one candidate address has been got, then call this function

|

closed

|

2022-04-16T02:03:46Z

|

2024-04-05T19:38:02Z

|

https://github.com/aiortc/aiortc/issues/693

|

[] |

diybl

| 4

|

zama-ai/concrete-ml

|

scikit-learn

| 1,039

|

Make DL Packages Optional

|

## Feature request

Make the packages needed for DL (e.g., Torch and Brevitas) optional, or create flavors of Concrete-ML that include only a subset of dependencies (e.g., `concrete-ml[sklearn]` which would include only the linear models).

## Motivation

This would be particularly useful to reduce the size of Docker images based on Concrete-ML: for instance, adding Concrete-ML with `uv` or `poetry` produces, by default, Docker images of >6GBs. With a bit of tweak, we can force it to use the CPU version of Torch, which still produces a Docker image of >2GBs. Considering that in some cases only a subset of models is needed, it could be interesting.

However, I don't know the structure of the code, so it may break a lot of things creating different subpackages/install options. Feel free to close this if this is unpractical. Thanks.

|

open

|

2025-03-13T07:49:05Z

|

2025-03-13T15:58:46Z

|

https://github.com/zama-ai/concrete-ml/issues/1039

|

[] |

AlexMV12

| 1

|

mage-ai/mage-ai

|

data-science

| 4,766

|

[BUG] Seems when dynamic blocks used as replicas are executed along with their original ones.

|

### Mage version

0.9.66

### Describe the bug

I have the following pipeline

when I execute the pipeline seems that the bottom blocks on the right branch are executed although the shouldn't because the condition for that branch has failed. So during execution the pipeline looks like this

The blocks on the top left are those that in the first image shown on the bottom right of the right branch.

In previous version those replica, dynamic child blocks weren't executed at all.

As a result the pipeline runs indefinitely.

### To reproduce

_No response_

### Expected behavior

_No response_

### Screenshots

_No response_

### Operating system

_No response_

### Additional context

_No response_

|

open

|

2024-03-15T17:22:10Z

|

2024-03-19T22:06:12Z

|

https://github.com/mage-ai/mage-ai/issues/4766

|

[

"bug"

] |

georgezefko

| 0

|

pytest-dev/pytest-html

|

pytest

| 169

|

dynamically create the output folder for report.html using command line in pytest

|

Is there any way to create the output folder dynamically for every run with current time stamp and report.hml should be available in that folder.

Is it possible to pass thru the command line for the dynamic folder creation and report.html should be available once all the test cases are executed.

Thanks for the help

|

closed

|

2018-06-01T10:45:30Z

|

2018-06-06T14:15:05Z

|

https://github.com/pytest-dev/pytest-html/issues/169

|

[] |

writetomaha14

| 3

|

dynaconf/dynaconf

|

fastapi

| 241

|

[RFC] Better merging standards

|

Right now There are some ways for merging existing data structures in Dynaconf

- Using `dynaconf_merge` mark

- Using `__` double underlines for existing dictionaries and nested data

- Using MERGE_ENABLED_FOR_DYNACONF var to merge everything globally

All the 3 existing ways has limitations.

- `dynaconf_merge` is limited because it works only for 1st level vars

- `__` works only for data nested under a dictionary

- MERGE_ENABLED can break stuff in Django config.

# New standards for merging.

> **NOTE** All the above will keep working, we will try to not break backwards compatibility.

Although we are going to explicitly recommend only the new standards on explicit ways for merging that meets:

- Optional Granular control of which data is being merged

- Control of global merge feature per file

- Works also via environment variables

- Merge at any level in the nested structures

- Can merge any data type like dicts and lists

# The merge mark

Historically dynaconf is being using `@marks` for some special environment variables, we will introduce a new one: `@merge` for env vars and improve the existing `dynaconf_merge` for regular files.

## Merging files.

A `settings.yaml` file exists in your project:

```yaml

default:

name: Bruno

colors:

- red

- green

data:

links:

twitter: rochacbruno

site: brunorocha.org

```

which will add to `settings.__dict__` object data like:

```py

{

"NAME": "Bruno",

"COLORS": ["red", "green"],

"DATA": {"links": {"twitter": "rochacbruno", "site": "brunorocha.org"}}

}

```

In a `settings.local.yaml` we want now to contribute to that existing data we have 2 options

### Merge the whole file

adding a `dynaconf_merge: true` to the root level of the file.

`settings.local.yaml`

```yaml

dynaconf_merge: true

default:

colors:

- blue

data:

links:

github: rochacbruno.github.io

```

Then `settings.__dict__`

```py

{

"NAME": "Bruno",

"COLORS": ["red", "green", "blue"],

"DATA": {"links": {"twitter": "rochacbruno", "site": "brunorocha.org", "github": "rochacbruno.github.io"}}

}

```

### Granular control of which variable is being merged

If we want to merge only specific variables we have to enclose data under a `dynaconf_merge` key, for example we want to override everything but we want to merge the colors.

`settings.local.yaml`

```yaml

default:

name: Other Name

colors:

dynaconf_merge:

- yellow

- pink

data:

links:

site: other.com

```

if it was `toml`

```toml

[default]

name = "Other Name"

[default.colors]

dynaconf_merge = ["yellow","pink"]

[default.data.links]

site = "other.com"

```

So dynaconf will do pre-processing of every file and for each `dynaconf_merge` it will call the merge pipeline.

Result on `settings.__dict__`

```py

{

"NAME": "Other Name", # <-- overwritten

"COLORS": ["red", "green", "yellow", "pink"], # <-- merged

"DATA": {"links": {"site": "other.com"}} # <-- overwritten

}

```

## Dunder merging

Dunder merging continues to work as a shortcut when the data you want to merge is behind a nested datastructure

```yaml

default:

data__links__telegram: t.me/rochacbruno # <-- Calls set('data.links', "t.me/rochacbruno")

```

or `toml`

```toml

[default]

data__links__telegram = "t.me/rochacbruno" # <-- Calls set('data.links', "t.me/rochacbruno")

```

or `.env`

```bash

export DYNACONF_DATA__links__telegram="t.me/rochacbruno" # <-- Calls set('data.links', "t.me/rochacbruno")

```

## @merge mark

For envvars the `@merge` will mark as it follows the standard for the other envvar markers.

and it can also be used in regular files

`.yaml`

```yaml

default:

name: Jon # <-- Overwritten

colors: "@merge blue" # <-- Calls .append('blue')

colors: "@merge ['blue', 'white']" # <-- Calls .extend(['blue', 'white'])

```

`.env`

```bash

export DYNACONf_NAME=Erik # <-- Overwritten

export DYNACONF_COLORS="@merge ['blue']" # <-- Calls .extend(['blue'])

export DYNACONF_COLORS="@merge ['blue', 'white']" # <-- Calls .extend(['blue', 'white'])

export DYNACONF_COLORS="@merge blue" # <-- Calls .append('blue')

export DYNACONF_COLORS="@merge blue,white" # <-- Calls .extend('blue,white'.split(','))

export DYNACONF_DATA="@merge {foo='bar'}" # <-- Calls .update({"foo": "bar"})

export DYNACONF_DATA="@merge foo=bar" # <-- Calls ['foo'] = "bar"

```

|

closed

|

2019-09-26T19:27:41Z

|

2019-10-09T05:07:08Z

|

https://github.com/dynaconf/dynaconf/issues/241

|

[

"Not a Bug",

"RFC"

] |

rochacbruno

| 3

|

python-gitlab/python-gitlab

|

api

| 2,864

|

Add support for `retry_transient_errors` to then CLI

|

## Description of the problem, including code/CLI snippet

I'm using the `gitlab` cli against gitlab.com which is producing transient errors. The API has the ability to retry these but it looks like the CLI code misses the option because `merge_config` doesn't cover the field.

## Expected Behavior

Ideally the cli would have a command line parameter, but it should definitely respect the configuration file.

## Actual Behavior

No ability to turn on retries with the CLI.

## Specifications

- python-gitlab version: 4.4

- API version you are using (v3/v4): v4

- Gitlab server version (or gitlab.com): .com

|

open

|

2024-05-13T09:23:22Z

|

2024-05-13T09:23:22Z

|

https://github.com/python-gitlab/python-gitlab/issues/2864

|

[] |

djmcgreal-cc

| 0

|

voila-dashboards/voila

|

jupyter

| 524

|

voila render button on Jupyter Lab

|

<img width="126" alt="Screen Shot 2020-01-24 at 11 49 36 AM" src="https://user-images.githubusercontent.com/8352840/73086987-b07e2280-3e9f-11ea-8061-993ea0b01264.png">

This button works great for rendering the current notebook as a Voila dashboard using the classic Jupyter Notebook, but is there an equivalent for Jupyter Lab?

|

closed

|

2020-01-24T16:51:06Z

|

2020-01-24T17:17:10Z

|

https://github.com/voila-dashboards/voila/issues/524

|

[] |

cornhundred

| 7

|

cvat-ai/cvat

|

computer-vision

| 8,813

|

I have modified the source code, how do I rebuild the image?

|

### Actions before raising this issue

- [X] I searched the existing issues and did not find anything similar.

- [X] I read/searched [the docs](https://docs.cvat.ai/docs/)

### Is your feature request related to a problem? Please describe.

I have modified the source code, how do I rebuild the image?

thanks

### Describe the solution you'd like

_No response_

### Describe alternatives you've considered

_No response_

### Additional context

_No response_

|

closed

|

2024-12-11T07:58:00Z

|

2024-12-13T10:37:59Z

|

https://github.com/cvat-ai/cvat/issues/8813

|

[

"question"

] |

stephen-TT

| 4

|

CorentinJ/Real-Time-Voice-Cloning

|

python

| 1,221

|

Numpy error (numpy version 1.24.3)

|

I am using Python 3.11.3 and NumPy 1.24.3 and I get the following error when trying to run the toolbox:

```

Traceback (most recent call last):

File "C:\Users\David\code\Real-Time-Voice-Cloning\demo_toolbox.py", line 5, in <module>

from toolbox import Toolbox

File "C:\Users\David\code\Real-Time-Voice-Cloning\toolbox\__init__.py", line 11, in <module>

from toolbox.ui import UI

File "C:\Users\David\code\Real-Time-Voice-Cloning\toolbox\ui.py", line 37, in <module>

], dtype=np.float) / 255

^^^^^^^^

File "C:\Program Files\Python311\Lib\site-packages\numpy\__init__.py", line 305, in __getattr__

raise AttributeError(__former_attrs__[attr])

AttributeError: module 'numpy' has no attribute 'float'.

`np.float` was a deprecated alias for the builtin `float`. To avoid this error in existing code, use `float` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.float64` here.

The aliases was originally deprecated in NumPy 1.20; for more details and guidance see the original release note at:

https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations. Did you mean: 'cfloat'?

```

|

open

|

2023-05-31T01:55:47Z

|

2024-02-21T06:50:00Z

|

https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/1221

|

[] |

ehyoitsdavid

| 8

|

streamlit/streamlit

|

machine-learning

| 10,069

|

Pass file path or function directly to `st.navigation`, without using `st.Page`

|

### Checklist

- [X] I have searched the [existing issues](https://github.com/streamlit/streamlit/issues) for similar feature requests.

- [X] I added a descriptive title and summary to this issue.

### Summary

When I write small test apps with multiple pages, I often find myself wanting to do something like this:

```python

st.navigation(["page1.py", "page2.py"])

# Or with functions:

st.navigation([page1_func, page2_func])

```

While this is totally plausible and I don't care about customizing the title or icon for these pages, Streamlit always forces me to wrap things in `st.Page`:

```python

st.navigation([st.Page("page1.py"), st.Page("page2.py")]

# Or with functions:

st.navigation([st.Page(page1_func), st.Page(page2_func)])

```

It would be great if we'd support the former!

### Why?

Not a huge pain point but I regularly stumble over it. Especially because we show a pretty generic exception in this case and I'm confused why it doesn't just work.

### How?

_No response_

### Additional Context

_No response_

|

closed

|

2024-12-22T23:38:13Z

|

2025-02-20T15:54:22Z

|

https://github.com/streamlit/streamlit/issues/10069

|

[

"type:enhancement",

"good first issue",

"feature:multipage-apps",

"feature:st.navigation"

] |

jrieke

| 2

|

jschneier/django-storages

|

django

| 1,115

|

DEFAULT_FILE_STORAGE seems to be ignored

|

I would like my S3 bucket to have 2 folders, one for static, another for media. My understanding is that DEFAULT_FILE_STORAGE should the storage that handles media files, while STATICFILES_STORAGE handles static files. However it appears that DEFAULT_FILE_STORAGE is ignored and doesnt do anything, because for example if I set DEFAULT_FILE_STORAGE = "somestring", no error is thrown while running collectstatic.

Here's my code:

```python

#s3utils.py

from storages.backends.s3boto3 import S3Boto3Storage

StaticRootS3BotoStorage = lambda: S3Boto3Storage(location='static')

MediaRootS3BotoStorage = lambda: S3Boto3Storage(location='media')

#settings.py

AWS_ACCESS_KEY_ID = os.getenv('AWS_ACCESS_KEY_ID')

AWS_SECRET_ACCESS_KEY = os.getenv('AWS_SECRET_ACCESS_KEY')

AWS_STORAGE_BUCKET_NAME = os.getenv('AWS_STORAGE_BUCKET_NAME')

AWS_S3_OBJECT_PARAMETERS = {'ACL': 'private',}

AWS_S3_CUSTOM_DOMAIN = f'{AWS_STORAGE_BUCKET_NAME}.s3.amazonaws.com'

AWS_S3_OBJECT_PARAMETERS = {'CacheControl': 'max-age=86400'}

AWS_LOCATION = 'static'

STATIC_URL = f'https://{AWS_S3_CUSTOM_DOMAIN}/{AWS_LOCATION}/'

MEDIA_URL = f'https://{AWS_S3_CUSTOM_DOMAIN}/media/'

MEDIA_ROOT = os.path.join(BASE_DIR, "file-storage/")

DEFAULT_FILE_STORAGE = 'backend.s3utils.MediaRootS3BotoStorage'

STATICFILES_STORAGE = 'backend.s3utils.StaticRootS3BotoStorage'

```

|

closed

|

2022-02-16T10:21:10Z

|

2023-02-28T04:09:12Z

|

https://github.com/jschneier/django-storages/issues/1115

|

[] |

goldentoaste

| 1

|

litestar-org/litestar

|

asyncio

| 3,680

|

Enhancement: Support attrs converters

|

### Description

Attrs has an attribute conversion feature where a function can be provided to be run whenever the attribute value is set.

https://www.attrs.org/en/stable/examples.html#conversion

This is not being run when the post request body is an attrs class. Looking for clarity on if this is by design or a fix if not. Also, is there another preferred pattern in litestar to do this type of payload attribute conversion?

### URL to code causing the issue

_No response_

### MCVE

requirements.txt

```

litestar[standard,attrs]==2.10.0

```

api.py

```python

from __future__ import annotations

import attrs

from litestar import (

Litestar,

post,

)

@attrs.define

class TestRequestPayload:

a: str = attrs.field(converter=str.lower)

b: str = attrs.field(converter=str.lower)

@post("/test1")

async def test1(data: TestRequestPayload) -> dict:

return attrs.asdict(data)

@post("/test2")

async def test2(data: TestRequestPayload) -> dict:

# Naked evolve basically re-instantiates the object causing the converters to be run

return attrs.asdict(attrs.evolve(data))

app = Litestar(

route_handlers=[test1, test2],

)

```

### Steps to reproduce

1. `litestar --app api:app run`

2. Make a request to the test1 route, note data response unchanged from input

```

$ curl --request POST localhost:8000/test1 --data '{"a": "Hello", "b": "World"}' ; echo

{"a":"Hello","b":"World"}

```

3. Make a request to the test2 route, note data response lowercased relative to input

```

$ curl --request POST localhost:8000/test2 --data '{"a": "Hello", "b": "World"}' ; echo

{"a":"hello","b":"world"}

```

### Screenshots

_No response_

### Logs

_No response_

### Litestar Version

2.10.0

### Platform

- [X] Linux

- [ ] Mac

- [ ] Windows

- [ ] Other (Please specify in the description above)

|

closed

|

2024-08-20T22:29:11Z

|

2025-03-20T15:54:52Z

|

https://github.com/litestar-org/litestar/issues/3680

|

[

"Enhancement",

"Upstream"

] |

jeffcarrico

| 1

|

matplotlib/matplotlib

|

data-visualization

| 28,860

|

[ENH]: Add Independent xlabelpad and ylabelpad Options to rcParams

|

### Problem

Currently, we can only set a uniform `axes.labelpad`, which applies the same padding for both the x-axis and y-axis labels.

I am aware that it is possible to adjust the labelpad for the x and y labels independently using `set_xlabel(..., labelpad=x_pad)` and `set_ylabel(..., labelpad=y_pad)` in plotting code. However, would it be better to add separate options in rcParams to set the padding for x and y labels independently, similar to how `xtick.major.pad` and `ytick.major.pad` work?

### Proposed solution

_No response_

|

open

|

2024-09-21T18:47:02Z

|

2025-03-12T12:02:52Z

|

https://github.com/matplotlib/matplotlib/issues/28860

|

[

"New feature"

] |

NominHanggai

| 5

|

microsoft/unilm

|

nlp

| 927

|

[layoutlmv3] SER and RE task combined into one model

|

Hi @HYPJUDY

I have a question that do you try to combine SER and RE task into one model, that is to say, just use one model to support SER and RE task?

|

open

|

2022-11-22T09:22:10Z

|

2022-12-31T22:30:10Z

|

https://github.com/microsoft/unilm/issues/927

|

[] |

githublsk

| 1

|

datadvance/DjangoChannelsGraphqlWs

|

graphql

| 14

|

'AsgiRequest' object has no attribute 'register'

|

Hello,

After following the installation instructions, and using the example in the README with `django==2.2.1` and `django-channels-graphql-ws==0.2.0` on Python 3.7.3, when making any subscription operation:

```js

subscription {

mySubscription {event}

}

```

```json

{

"errors": [

{

"message": "'AsgiRequest' object has no attribute 'register'"

}

],

"data": null

}

```

Traceback:

```

Traceback (most recent call last):

File "/.cache/pypoetry/virtualenvs/WcnE4_vK-py3.7/lib/python3.7/site-packages/graphql/execution/executor.py", line 447, in resolve_or_error

return executor.execute(resolve_fn, source, info, **args)

File "/.cache/pypoetry/virtualenvs/WcnE4_vK-py3.7/lib/python3.7/site-packages/graphql/execution/executors/sync.py", line 16, in execute

return fn(*args, **kwargs)

File "/.cache/pypoetry/virtualenvs/WcnE4_vK-py3.7/lib/python3.7/site-packages/graphene_django/debug/middleware.py", line 56, in resolve

promise = next(root, info, **args)

File "/***.py", line 86, in resolve

result = next(root, info, **args)

File "/***.py", line 37, in resolve

return next(root, info, **args)

File "/***.py", line 8, in resolve

return result.get()

File "/.cache/pypoetry/virtualenvs/WcnE4_vK-py3.7/lib/python3.7/site-packages/promise/promise.py", line 510, in get

return self._target_settled_value(_raise=True)

File "/.cache/pypoetry/virtualenvs/WcnE4_vK-py3.7/lib/python3.7/site-packages/promise/promise.py", line 514, in _target_settled_value

return self._target()._settled_value(_raise)

File "/.cache/pypoetry/virtualenvs/WcnE4_vK-py3.7/lib/python3.7/site-packages/promise/promise.py", line 224, in _settled_value

reraise(type(raise_val), raise_val, self._traceback)

File "/.cache/pypoetry/virtualenvs/WcnE4_vK-py3.7/lib/python3.7/site-packages/six.py", line 693, in reraise

raise value

File "/.cache/pypoetry/virtualenvs/WcnE4_vK-py3.7/lib/python3.7/site-packages/promise/promise.py", line 487, in _resolve_from_executor

executor(resolve, reject)

File "/.cache/pypoetry/virtualenvs/WcnE4_vK-py3.7/lib/python3.7/site-packages/promise/promise.py", line 754, in executor

return resolve(f(*args, **kwargs))

File "/.cache/pypoetry/virtualenvs/WcnE4_vK-py3.7/lib/python3.7/site-packages/graphql/execution/middleware.py", line 76, in make_it_promise

return next(*args, **kwargs)

File "/.cache/pypoetry/virtualenvs/WcnE4_vK-py3.7/lib/python3.7/site-packages/channels_graphql_ws/graphql_ws.py", line 425, in _subscribe

register = info.context.register

AttributeError: 'AsgiRequest' object has no attribute 'register'

```

While I can see where the 'register' attribute is needed: https://github.com/datadvance/DjangoChannelsGraphqlWs/blob/master/channels_graphql_ws/graphql_ws.py#L425, I can't see what could have caused it to be deleted from the context before going through `Subscription._subscribe`.

Do you have any idea what could lead to that situtation, and how to fix it?

|

closed

|

2019-05-08T14:58:09Z

|

2020-02-12T16:18:56Z

|

https://github.com/datadvance/DjangoChannelsGraphqlWs/issues/14

|

[] |

rigelk

| 6

|

jina-ai/serve

|

deep-learning

| 6,041

|

Release Notes (3.20.3)

|

# Release Note

This release contains 1 bug fix.

## 🐞 Bug Fixes

### Skip doc attributes in __annotations__ but not in __fields__ (#6035)

When deploying an Executor inside a Flow with a `BaseDoc` model that has any attribute with a `ClassVar` value, the service would fail to initialize because the Gateway could not properly create the schemas. We have fixed this by securing access to `__fields__` when dynamically creating these pydantic models.

## 🤟 Contributors

We would like to thank all contributors to this release:

- Narek Amirbekian (@NarekA )

|

closed

|

2023-09-06T16:01:39Z

|

2023-09-07T08:48:51Z

|

https://github.com/jina-ai/serve/issues/6041

|

[] |

JoanFM

| 0

|

gunthercox/ChatterBot

|

machine-learning

| 1,773

|

How to connect chatbat or integrate with website

|

i made a simple chatbot using flask and chatterbot, for now how would i integrate this chatbot with website.

|

closed

|

2019-07-12T07:20:10Z

|

2020-06-20T17:33:35Z

|

https://github.com/gunthercox/ChatterBot/issues/1773

|

[] |

AvhiOjha

| 1

|

ultralytics/yolov5

|

machine-learning

| 12,823

|

'Detect' object has no attribute 'grid'

|

### Search before asking

- [X] I have searched the YOLOv5 [issues](https://github.com/ultralytics/yolov5/issues) and [discussions](https://github.com/ultralytics/yolov5/discussions) and found no similar questions.

### Question

Hey, i recently found out about YOLO, and i have been trying to learn YOLOV5 for the past couple of weeks or so, but theres something which i literally cannot get past.