| repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |

|---|---|---|---|---|---|---|---|---|---|---|---|

coqui-ai/TTS

|

deep-learning

| 2,686

|

[Bug] Unable to download models

|

### Describe the bug

When I run the example, the model fails to download.

When I try to manually download the model from

```

https://coqui.gateway.scarf.sh/v0.10.1_models/tts_models--multilingual--multi-dataset--your_tts.zip

```

I get redirected to

```

https://huggingface.co/erogol/v0.10.1_models/resolve/main/tts_models--multilingual--multi-dataset--your_tts.zip

```

which says "Repository not found"

### To Reproduce

Run the basic example for python

code:

``` python

from TTS.api import TTS

# Running a multi-speaker and multi-lingual model

# List available 🐸TTS models and choose the first one

model_name = TTS.list_models()[0]

# Init TTS

tts = TTS(model_name)

# Run TTS

# ❗ Since this model is multi-speaker and multi-lingual, we must set the target speaker and the language

# Text to speech with a numpy output

wav = tts.tts("This is a test! This is also a test!!", speaker=tts.speakers[0], language=tts.languages[0])

```

output:

```

zipfile.BadZipFile: File is not a zip file

```

### Expected behavior

Being able to download the pretrained models

### Logs

```shell

> Downloading model to /home/mb/.local/share/tts/tts_models--multilingual--multi-dataset--your_tts

0%| | 0.00/29.0 [00:00<?, ?iB/s] > Error: Bad zip file - https://coqui.gateway.scarf.sh/v0.10.1_models/tts_models--multilingual--multi-dataset--your_tts.zip

Traceback (most recent call last):

File "/home/mb/Documents/ai/TTS/TTS/utils/manage.py", line 434, in _download_zip_file

with zipfile.ZipFile(temp_zip_name) as z:

File "/usr/lib/python3.10/zipfile.py", line 1267, in __init__

self._RealGetContents()

File "/usr/lib/python3.10/zipfile.py", line 1334, in _RealGetContents

raise BadZipFile("File is not a zip file")

zipfile.BadZipFile: File is not a zip file

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/mb/Documents/ai/tts/main.py", line 8, in <module>

tts = TTS(model_name)

File "/home/mb/Documents/ai/TTS/TTS/api.py", line 289, in __init__

self.load_tts_model_by_name(model_name, gpu)

File "/home/mb/Documents/ai/TTS/TTS/api.py", line 385, in load_tts_model_by_name

model_path, config_path, vocoder_path, vocoder_config_path, model_dir = self.download_model_by_name(

File "/home/mb/Documents/ai/TTS/TTS/api.py", line 348, in download_model_by_name

model_path, config_path, model_item = self.manager.download_model(model_name)

File "/home/mb/Documents/ai/TTS/TTS/utils/manage.py", line 303, in download_model

self._download_zip_file(model_item["github_rls_url"], output_path, self.progress_bar)

File "/home/mb/Documents/ai/TTS/TTS/utils/manage.py", line 439, in _download_zip_file

raise zipfile.BadZipFile # pylint: disable=raise-missing-from

zipfile.BadZipFile

100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 29.0/29.0 [00:00<00:00, 185iB/s]

```
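The `BadZipFile` above is consistent with the download returning something other than an archive: the progress bar reports only 29 bytes. A minimal sketch of checking a downloaded file before extracting it, using only the standard library. The function name `extract_if_zip` and the file names are hypothetical and not part of TTS:

```python
import tempfile
import zipfile
from pathlib import Path


def extract_if_zip(archive_path, output_dir):
    """Extract archive_path into output_dir, or return False if it is not a zip."""
    # zipfile.is_zipfile inspects the file contents, not the extension.
    if not zipfile.is_zipfile(archive_path):
        return False
    with zipfile.ZipFile(archive_path) as z:
        z.extractall(output_dir)
    return True


with tempfile.TemporaryDirectory() as tmp:
    tmp = Path(tmp)

    # A short text payload standing in for an error page saved as ".zip".
    fake = tmp / "model.zip"
    fake.write_text("Repository not found")

    # A real archive for comparison.
    real = tmp / "real.zip"
    with zipfile.ZipFile(real, "w") as z:
        z.writestr("config.json", "{}")

    fake_ok = extract_if_zip(fake, tmp / "fake_out")
    real_ok = extract_if_zip(real, tmp / "real_out")
    extracted = sorted(p.name for p in (tmp / "real_out").iterdir())

print(fake_ok, real_ok, extracted)
```

Checking the file first turns the nested traceback into a single clear failure, which makes it easier to tell a broken download URL from a corrupted archive.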

### Environment

```shell

{

"CUDA": {

"GPU": [],

"available": false,

"version": "11.7"

},

"Packages": {

"PyTorch_debug": false,

"PyTorch_version": "2.0.1+cu117",

"TTS": "0.14.3",

"numpy": "1.23.5"

},

"System": {

"OS": "Linux",

"architecture": [

"64bit",

"ELF"

],

"processor": "x86_64",

"python": "3.10.6",

"version": "#202303130630~1685473338~22.04~995127e SMP PREEMPT_DYNAMIC Tue M"

}

}

```

### Additional context

_No response_

|

closed

|

2023-06-19T13:49:57Z

|

2025-02-28T16:41:31Z

|

https://github.com/coqui-ai/TTS/issues/2686

|

[

"bug"

] |

Maarten-buelens

| 23

|

xuebinqin/U-2-Net

|

computer-vision

| 357

|

Hi, when I run this code on other detection tasks, I get strange errors. The following is the warning shown where the error occurs

|

/pytorch/aten/src/ATen/native/cuda/Loss.cu:115: operator(): block: [290,0,0], thread: [62,0,0] Assertion `input_val >= zero && input_val <= one` failed.

/pytorch/aten/src/ATen/native/cuda/Loss.cu:115: operator(): block: [290,0,0], thread: [63,0,0] Assertion `input_val >= zero && input_val <= one` failed.

[epoch: 3/100, batch: 11/ 0, ite: 517] train loss: 66.000237, tar: 11.000006/lr:0.000100

l0: 1.000000, l1: 1.000016, l2: 1.000000, l3: 1.000000, l4: 1.000000, l5: nan

[epoch: 3/100, batch: 12/ 0, ite: 518] train loss: nan, tar: 12.000006/lr:0.000100

Traceback (most recent call last):

File "train_multiple_loss.py", line 149, in <module>

loss2, loss = muti_bce_loss_fusion(d6, d1, d2, d3, d4, d5, labels_v)

File "train_multiple_loss.py", line 49, in muti_bce_loss_fusion

loss4 = bce_ssim_loss(d4,labels_v)

File "train_multiple_loss.py", line 37, in bce_ssim_loss

iou_out = iou_loss(pred,target)

File "/root/miniconda3/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1102, in _call_impl

return forward_call(*input, **kwargs)

File "/root/autodl-tmp/pytorch_iou/__init__.py", line 28, in forward

return _iou(pred, target, self.size_average)

File "/root/autodl-tmp/pytorch_iou/__init__.py", line 13, in _iou

Ior1 = torch.sum(target[i,:,:,:]) + torch.sum(pred[i,:,:,:])-Iand1

RuntimeError: CUDA error: device-side assert triggered

CUDA kernel errors might be asynchronously reported at some other API call,so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

I think there might be a vanishing gradient happening, but I don't have a solution
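The assertion `input_val >= zero && input_val <= one` comes from the binary cross-entropy CUDA kernel, which requires every prediction to lie in [0, 1]; a NaN prediction also fails that comparison, which matches the `l5: nan` in the log. A pure-Python sketch of the same check follows. The helpers `first_invalid` and `bce` are hypothetical illustrations, not part of U-2-Net or PyTorch:

```python
import math


def first_invalid(values):
    """Return the index of the first value outside [0, 1] (NaN included), or None."""
    for i, v in enumerate(values):
        # NaN compares False with everything, so this test rejects it too.
        if not (0.0 <= v <= 1.0):
            return i
    return None


def bce(pred, target, eps=1e-12):
    """Binary cross-entropy for a single prediction; pred must be in [0, 1]."""
    if not (0.0 <= pred <= 1.0):
        raise ValueError(f"prediction {pred!r} is outside [0, 1]")
    pred = min(max(pred, eps), 1.0 - eps)  # keep log() away from 0
    return -(target * math.log(pred) + (1.0 - target) * math.log(1.0 - pred))


preds = [0.2, 0.9, float("nan"), 1.3]
bad_index = first_invalid(preds)
loss = bce(0.9, 1.0)
print(bad_index, round(loss, 4))
```

Running an equivalent check on each side output before the loss call (on CPU, or with `CUDA_LAUNCH_BLOCKING=1` as the message suggests) should show which output first becomes NaN or leaves the valid range.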

|

open

|

2023-04-24T15:29:11Z

|

2024-04-24T06:03:58Z

|

https://github.com/xuebinqin/U-2-Net/issues/357

|

[] |

1dhuh

| 1

|

slackapi/python-slack-sdk

|

asyncio

| 1,153

|

How to get messages from a personal chat?

|

I want to be able to grab a chat log using the api

```python

conversation_id = "D0....."

result = client.conversations_history(channel=conversation_id)

```

When I send this request I always get `channel_not_found`, even though the bot is added to the DM.

It also has the correct scopes.

<img width="391" alt="Screen Shot 2021-12-14 at 11 51 26 AM" src="https://user-images.githubusercontent.com/17206638/146042742-9c846457-479b-4d46-8422-8e290be8f474.png">

|

closed

|

2021-12-14T16:52:08Z

|

2022-01-31T00:02:31Z

|

https://github.com/slackapi/python-slack-sdk/issues/1153

|

[

"question",

"needs info",

"auto-triage-stale"

] |

jeremiahlukus

| 3

|

huggingface/datasets

|

computer-vision

| 6,460

|

jsonlines files don't load with `load_dataset`

|

### Describe the bug

While [the docs](https://huggingface.co/docs/datasets/upload_dataset#upload-dataset) seem to state that `.jsonl` is a supported extension for `datasets`, loading the dataset results in a `JSONDecodeError`.

### Steps to reproduce the bug

Code:

```

from datasets import load_dataset

dset = load_dataset('slotreck/pickle')

```

Traceback:

```

Downloading readme: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 925/925 [00:00<00:00, 3.11MB/s]

Downloading and preparing dataset json/slotreck--pickle to /mnt/home/lotrecks/.cache/huggingface/datasets/slotreck___json/slotreck--pickle-0c311f36ed032b04/0.0.0/8bb11242116d547c741b2e8a1f18598ffdd40a1d4f2a2872c7a28b697434bc96...

Downloading data: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 589k/589k [00:00<00:00, 18.9MB/s]

Downloading data: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 104k/104k [00:00<00:00, 4.61MB/s]

Downloading data: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 170k/170k [00:00<00:00, 7.71MB/s]

Downloading data files: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 3/3 [00:00<00:00, 3.77it/s]

Extracting data files: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 3/3 [00:00<00:00, 523.92it/s]

Generating train split: 0 examples [00:00, ? examples/s]Failed to read file '/mnt/home/lotrecks/.cache/huggingface/datasets/downloads/6ec07bb2f279c9377036af6948532513fa8f48244c672d2644a2d7018ee5c9cb' with error <class 'pyarrow.lib.ArrowInvalid'>: JSON parse error: Column(/ner/[]/[]/[]) changed from number to string in row 0

Traceback (most recent call last):

File "/mnt/home/lotrecks/anaconda3/envs/pickle/lib/python3.7/site-packages/datasets/packaged_modules/json/json.py", line 144, in _generate_tables

dataset = json.load(f)

File "/mnt/home/lotrecks/anaconda3/envs/pickle/lib/python3.7/json/__init__.py", line 296, in load

parse_constant=parse_constant, object_pairs_hook=object_pairs_hook, **kw)

File "/mnt/home/lotrecks/anaconda3/envs/pickle/lib/python3.7/json/__init__.py", line 348, in loads

return _default_decoder.decode(s)

File "/mnt/home/lotrecks/anaconda3/envs/pickle/lib/python3.7/json/decoder.py", line 340, in decode

raise JSONDecodeError("Extra data", s, end)

json.decoder.JSONDecodeError: Extra data: line 2 column 1 (char 3086)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/mnt/home/lotrecks/anaconda3/envs/pickle/lib/python3.7/site-packages/datasets/builder.py", line 1879, in _prepare_split_single

for _, table in generator:

File "/mnt/home/lotrecks/anaconda3/envs/pickle/lib/python3.7/site-packages/datasets/packaged_modules/json/json.py", line 147, in _generate_tables

raise e

File "/mnt/home/lotrecks/anaconda3/envs/pickle/lib/python3.7/site-packages/datasets/packaged_modules/json/json.py", line 122, in _generate_tables

io.BytesIO(batch), read_options=paj.ReadOptions(block_size=block_size)

File "pyarrow/_json.pyx", line 259, in pyarrow._json.read_json

File "pyarrow/error.pxi", line 144, in pyarrow.lib.pyarrow_internal_check_status

File "pyarrow/error.pxi", line 100, in pyarrow.lib.check_status

pyarrow.lib.ArrowInvalid: JSON parse error: Column(/ner/[]/[]/[]) changed from number to string in row 0

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/mnt/home/lotrecks/anaconda3/envs/pickle/lib/python3.7/site-packages/datasets/load.py", line 1815, in load_dataset

storage_options=storage_options,

File "/mnt/home/lotrecks/anaconda3/envs/pickle/lib/python3.7/site-packages/datasets/builder.py", line 913, in download_and_prepare

**download_and_prepare_kwargs,

File "/mnt/home/lotrecks/anaconda3/envs/pickle/lib/python3.7/site-packages/datasets/builder.py", line 1004, in _download_and_prepare

self._prepare_split(split_generator, **prepare_split_kwargs)

File "/mnt/home/lotrecks/anaconda3/envs/pickle/lib/python3.7/site-packages/datasets/builder.py", line 1768, in _prepare_split

gen_kwargs=gen_kwargs, job_id=job_id, **_prepare_split_args

File "/mnt/home/lotrecks/anaconda3/envs/pickle/lib/python3.7/site-packages/datasets/builder.py", line 1912, in _prepare_split_single

raise DatasetGenerationError("An error occurred while generating the dataset") from e

datasets.builder.DatasetGenerationError: An error occurred while generating the dataset

```
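The first traceback (`Extra data: line 2 column 1`) is what the `json` module reports when a file holds one JSON document per line rather than a single document. A standard-library sketch of the difference, using made-up records rather than the real dataset. It also lists the leaf types inside a nested field, since the `ArrowInvalid` message points at a column that mixes numbers and strings:

```python
import json

# Two made-up JSON Lines records; each "ner" entry mixes int and str.
jsonl_text = (
    '{"doc_key": "a", "ner": [[[0, 1, "Gene"]]]}\n'
    '{"doc_key": "b", "ner": [[[2, 3, "Protein"]]]}\n'
)

# Parsing the whole text as one document fails with "Extra data".
try:
    json.loads(jsonl_text)
    whole_file_error = None
except json.JSONDecodeError as exc:
    whole_file_error = exc.msg

# Parsing line by line works.
records = [json.loads(line) for line in jsonl_text.splitlines() if line.strip()]


def leaf_types(value):
    """Collect the Python type names of all non-list leaves in nested lists."""
    if isinstance(value, list):
        found = set()
        for item in value:
            found |= leaf_types(item)
        return found
    return {type(value).__name__}


mixed = {rec["doc_key"]: sorted(leaf_types(rec["ner"])) for rec in records}
print(whole_file_error)
print(mixed)
```

If the innermost lists report more than one type, as here, that is a likely reason a columnar reader cannot infer a single type for the column.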

### Expected behavior

For the dataset to be loaded without error.

### Environment info

- `datasets` version: 2.13.1

- Platform: Linux-3.10.0-1160.80.1.el7.x86_64-x86_64-with-centos-7.9.2009-Core

- Python version: 3.7.12

- Huggingface_hub version: 0.15.1

- PyArrow version: 8.0.0

- Pandas version: 1.3.5

|

closed

|

2023-11-29T21:20:11Z

|

2023-12-29T02:58:29Z

|

https://github.com/huggingface/datasets/issues/6460

|

[] |

serenalotreck

| 4

|

graphql-python/graphene

|

graphql

| 1,041

|

Problem with Implementing Custom Directives

|

Hi,

I have read all the issues in your repo on this topic.

I've also checked your source code and the graphql-core source code.

And still I can't find a satisfying answer to my problem.

I have to implement custom directives like the ones implemented in this package (https://github.com/ekampf/graphene-custom-directives).

The question is: since graphql-core apparently only supports the logic for the skip and include directives, and that support seems hardcoded (it strictly supports only those two), what is the correct way to implement custom directives?

Is the approach used in the package I mentioned the only way to achieve my goal?

Thanks

|

closed

|

2019-07-22T13:40:35Z

|

2019-09-20T14:46:54Z

|

https://github.com/graphql-python/graphene/issues/1041

|

[] |

frank2411

| 2

|

AirtestProject/Airtest

|

automation

| 342

|

Importing the third-party module bs4 from the local environment always fails, while importing it from a Python IDE works

|

Remove any following parts if does not have details about

**Describe the bug**

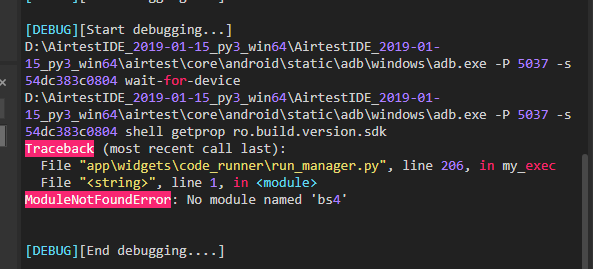

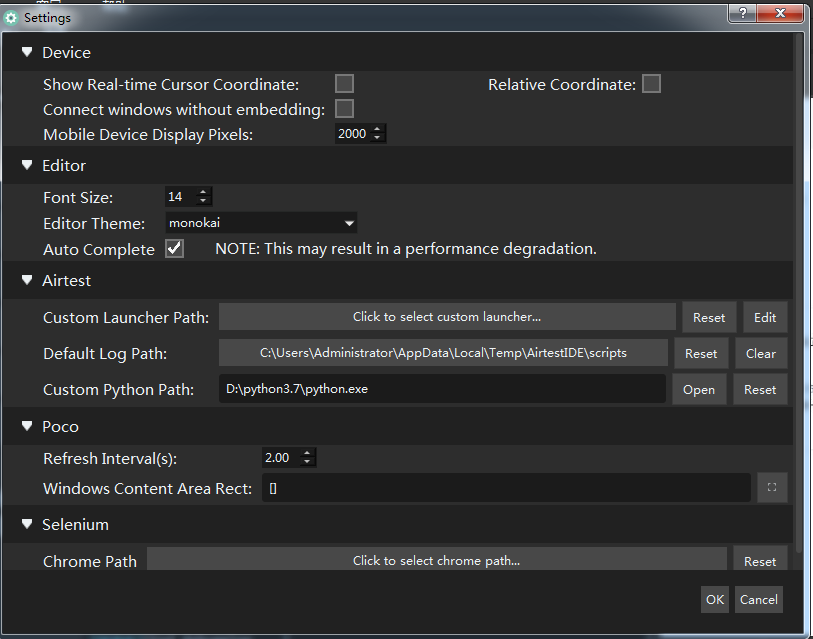

[DEBUG][Start debugging...]

D:\AirtestIDE_2019-01-15_py3_win64\AirtestIDE_2019-01-15_py3_win64\airtest\core\android\static\adb\windows\adb.exe -P 5037 -s 54dc383c0804 wait-for-device

D:\AirtestIDE_2019-01-15_py3_win64\AirtestIDE_2019-01-15_py3_win64\airtest\core\android\static\adb\windows\adb.exe -P 5037 -s 54dc383c0804 shell getprop ro.build.version.sdk

Traceback (most recent call last):

File "app\widgets\code_runner\run_manager.py", line 206, in my_exec

File "<string>", line 1, in <module>

ModuleNotFoundError: No module named 'bs4'

The local Python environment is 3.7, and the python.exe path has already been entered in the settings.

**To Reproduce**

Steps to reproduce the behavior:

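1. Install bs4 for Python 3.7 (importing bs4 from a Python IDE works).

2. Set the python.exe path in the Airtest settings and save. Restart Airtest.

3. Select `from bs4 import BeautifulSoup` and run only the selected code.

4. The error says bs4 does not exist.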

**Expected behavior**

A clear and concise description of what you expected to happen.

**Screenshots**

**python version:** `python3.7`

**airtest version:** `1.2.0`

> You can get airtest version via `pip freeze` command.

**Smartphone (please complete the following information):**

- Device: Xiaomi 5plus

- OS: [e.g. Android 7.0]

- more information if have

**Additional context**

I tried two different computers; with the same environment, both give the same error.

|

closed

|

2019-04-01T12:57:51Z

|

2019-04-01T14:05:41Z

|

https://github.com/AirtestProject/Airtest/issues/342

|

[] |

hoangwork

| 9

|

CorentinJ/Real-Time-Voice-Cloning

|

python

| 946

|

Getting stuck on "Loading the encoder" bit (not responding)

|

Hey, I just installed everything. I'm having trouble where whenever I try to input anything it loads up successfully and then gets caught on "Loading the encoder" and then says it's not responding. I have an RTX 3080. I've tried redownloading and using different pretrained.pt files for the encoder thing it's trying to load.

|

open

|

2021-12-10T10:32:13Z

|

2022-01-10T12:11:13Z

|

https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/946

|

[] |

HirabayashiCallie

| 2

|

Asabeneh/30-Days-Of-Python

|

numpy

| 383

|

Python

|

closed

|

2023-04-18T01:52:32Z

|

2023-07-08T21:56:11Z

|

https://github.com/Asabeneh/30-Days-Of-Python/issues/383

|

[] |

YeudielVai

| 1

|

|

KaiyangZhou/deep-person-reid

|

computer-vision

| 550

|

cmc curve graph

|

How do I get the cmc graph after training? Thank you for your answer.

|

open

|

2023-07-14T09:10:43Z

|

2023-07-14T09:10:43Z

|

https://github.com/KaiyangZhou/deep-person-reid/issues/550

|

[] |

xiaboAAA

| 0

|

ets-labs/python-dependency-injector

|

flask

| 28

|

Make Objects compatible with Python 2.6

|

Acceptance criteria:

- Tests on Python 2.6 passed.

- Badge with supported version added to README.md

|

closed

|

2015-03-17T12:59:09Z

|

2015-03-23T14:49:02Z

|

https://github.com/ets-labs/python-dependency-injector/issues/28

|

[

"enhancement"

] |

rmk135

| 0

|

sktime/pytorch-forecasting

|

pandas

| 1,354

|

Issue with TFT generating empty features in torch/nn/modules/linear.py

|

- PyTorch-Forecasting version: 1.0.0

- PyTorch version: 2.0.1+cu117

- Python version: 3.8.10

- Operating System: Ubuntu 20.04.6 LTS

### Expected behavior

Code would build a working TFT model from TimeSeriesDataSet data.

### Actual behavior

The code reported an error and crashed:

### Code to reproduce the problem

This stripped down code produces the error reliably either for a single target (no parameter) or for 2 targets (any parameter):

`import os

import sys

import logging

import pandas as pd

import numpy as np

import torch

import pytorch_lightning as pl

import pytorch_forecasting as pf

from pytorch_lightning import Trainer

from pytorch_lightning.callbacks import EarlyStopping,LearningRateMonitor

from pytorch_lightning.loggers import TensorBoardLogger

from pytorch_forecasting import Baseline,TimeSeriesDataSet

from pytorch_forecasting.models import TemporalFusionTransformer as TFT

from pytorch_forecasting.data import MultiNormalizer,TorchNormalizer

print("python version",sys.version)

print("pandas version",pd.__version__)

print("numpy version",np.__version__)

print("torch version",torch.__version__)

print("pytorch_forecasting version",pf.__version__)

print("pytorch_lightning version",pl.__version__)

os.environ["CUDA_VISIBLE_DEVICES"] = ""

logging.getLogger("pytorch_lightning").setLevel(logging.WARNING)

Train=pd.read_csv("ShortTrain2.csv")

Train[Train.select_dtypes(include=['float64']).columns] = Train[Train.select_dtypes(include=['float64']).columns].astype('float32')

print(Train.dtypes)

predictors=1

if len(sys.argv) > 1:

    predictors = 2

xtarget,xtarget_normalizer,xtime_varying_known_reals,xtime_varying_unknown_reals=None,None,None,None

if predictors == 1:

    print("Using a single predictor v1_R")

    # single predictor

    xtarget=['v1_R']

    xtarget_normalizer=MultiNormalizer([TorchNormalizer()])

    xtime_varying_known_reals=['v1_Act']

    xtime_varying_unknown_reals=['v1_C','v1_D','v1_V','v1_S','v1_H','v1_L','v1_R_lag10','v1_R_lag20']

else:

    print("Using 2 predictors v1_R and v2_R")

    # 2 predictors

    xtarget=['v1_R','v2_R']

    xtarget_normalizer=MultiNormalizer([TorchNormalizer(),TorchNormalizer()])

    xtime_varying_known_reals=['v1_Act','v2_Act']

    xtime_varying_unknown_reals=['v1_C','v1_D','v1_V','v1_S','v1_H','v1_L','v1_R_lag10','v1_R_lag20','v2_C','v2_D','v2_V','v2_S','v2_H','v2_L','v2_R_lag10','v2_R_lag20']

print(xtarget)

print(xtarget_normalizer)

print(xtime_varying_known_reals)

print(xtime_varying_unknown_reals)

training = TimeSeriesDataSet(

data=Train,

add_encoder_length=True,

add_relative_time_idx=True,

add_target_scales=True,

allow_missing_timesteps=True,

group_ids=['Group'],

max_encoder_length=10,

max_prediction_length=20,

min_encoder_length=5,

min_prediction_length=1,

# time_idx='unique',

time_idx='time_idx',

static_categoricals=[], # Categorical features that do not change over time - list

static_reals=[], # Continuous features that do not change over time - list

time_varying_known_categoricals=[], # Known in the future - list

time_varying_unknown_categoricals=[],

target=xtarget,

target_normalizer=xtarget_normalizer,

time_varying_known_reals=xtime_varying_known_reals,

time_varying_unknown_reals=xtime_varying_unknown_reals

)

validation = TimeSeriesDataSet.from_dataset(training, Train, predict=False, stop_randomization=True)

print("Passed validation")

train_dataloader = training.to_dataloader(train=True, batch_size=16, num_workers=32,shuffle=False)

print("Created dataloaders - show first batch")

#warnings.filterwarnings("ignore")

print("Start train_dataloader comparison with baseline")

device = torch.device("cpu")

print("actuals calculated")

Baseline().to(device)

baseline_predictions = Baseline().predict(train_dataloader)

baseline_predictions = torch.cat([b.clone().detach() for b in baseline_predictions])

print("baseline_predictions calculated")

val_loss=1e6

early_stop_callback = EarlyStopping(monitor="val_loss", min_delta=1e-4, patience=10, verbose=False, mode="min")

lr_logger = LearningRateMonitor() # log the learning rate

logger = TensorBoardLogger("lightning_logs") # logging results to a tensorboard

trainer = Trainer(

callbacks=[lr_logger, early_stop_callback],

enable_model_summary=True,

gradient_clip_val=0.1,

limit_train_batches=10, # comment in for training, running validation every 30 batches

logger=logger

)

model = TFT(

training,

attention_head_size=1,

dropout=0.1,

hidden_continuous_size=8,

learning_rate=0.03, # 1

log_interval=10,

lstm_layers=1, # could be interesting

output_size=1, # number of predictors

reduce_on_plateau_patience=4,

)

print(f"\nNumber of parameters in network: {model.size()/1e3:.1f}k\n")

`

Paste the command(s) you ran and the output. Including a link to a colab notebook will speed up issue resolution.

`python3 filename.py` (with no parameter it prepares and tries to build a univariate model; with any parameter, a 2-variate model):

[ShortTrain2.txt](https://github.com/jdb78/pytorch-forecasting/files/12270065/ShortTrain2.txt)

If there was a crash, please include the traceback here.

Traceback (most recent call last):

File "ShortTrain2.py", line 94, in <module>

model = TFT(

File "/home/jl/.local/lib/python3.8/site-packages/pytorch_forecasting/models/temporal_fusion_transformer/__init__.py", line 248, in __init__

self.static_context_variable_selection = GatedResidualNetwork(

File "/home/jl/.local/lib/python3.8/site-packages/pytorch_forecasting/models/temporal_fusion_transformer/sub_modules.py", line 204, in __init__

self.fc1 = nn.Linear(self.input_size, self.hidden_size)

File "/home/jl/.local/lib/python3.8/site-packages/torch/nn/modules/linear.py", line 97, in __init__

self.weight = Parameter(torch.empty((out_features, in_features), **factory_kwargs))

TypeError: empty() received an invalid combination of arguments - got (tuple, dtype=NoneType, device=NoneType), but expected one of:

* (tuple of ints size, *, tuple of names names, torch.memory_format memory_format, torch.dtype dtype, torch.layout layout, torch.device device, bool pin_memory, bool requires_grad)

* (tuple of ints size, *, torch.memory_format memory_format, Tensor out, torch.dtype dtype, torch.layout layout, torch.device device, bool pin_memory, bool requires_grad)

The code used to initialize the TimeSeriesDataSet and model should be also included.

TSDS preparation is included in the code, which just reads a short example from a file called ShortTrain2.csv (640 lines long):

[ShortTrain2.csv](https://github.com/jdb78/pytorch-forecasting/files/12230525/ShortTrain2.csv)

|

closed

|

2023-08-01T14:38:59Z

|

2023-08-31T19:16:43Z

|

https://github.com/sktime/pytorch-forecasting/issues/1354

|

[] |

Loggy48

| 2

|

miguelgrinberg/Flask-Migrate

|

flask

| 208

|

changing log level in configuration callback

|

Following the docs, I am trying to alter the log level in the configuration to turn off logging:

```python

@migrate.configure

def configure_alembic(config):

    config.set_section('logger_alembic', 'level', 'WARN')

    return config

```

I've confirmed that making this same change in `alembic.ini` turns off logging successfully - but I don't want to change the "default" level as pulled from that file.

Unfortunately, this does not seem to work. I have tried outputting the level from the logger (in various locations) and every time it reports it as `WARN` but still outputs logs at `INFO` level.

Any help or suggestions would be gratefully received.
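One thing worth checking, offered as an assumption rather than a diagnosis: in Python's `logging`, a logger that reports `WARN` can still have child loggers with their own explicit level, and those children decide for themselves whether INFO records are emitted. The sketch below uses only the standard library; the logger name `alembic.runtime.migration` is used for illustration and its actual configuration in this project is not known from the report:

```python
import logging

parent = logging.getLogger("alembic")
child = logging.getLogger("alembic.runtime.migration")

# With only the parent configured, the child inherits its effective level.
parent.setLevel(logging.WARNING)
inherited_level = child.getEffectiveLevel()
info_enabled_before = child.isEnabledFor(logging.INFO)

# An explicit level on the child overrides the parent's setting.
child.setLevel(logging.INFO)
info_enabled_after = child.isEnabledFor(logging.INFO)

print(logging.getLevelName(inherited_level), info_enabled_before, info_enabled_after)
```

Printing `getEffectiveLevel()` for the specific logger named in the unwanted log lines, rather than for `alembic` itself, can show whether this is what is happening.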

|

closed

|

2018-06-08T12:33:11Z

|

2018-06-13T10:05:16Z

|

https://github.com/miguelgrinberg/Flask-Migrate/issues/208

|

[

"question"

] |

bugsduggan

| 2

|

scikit-tda/kepler-mapper

|

data-visualization

| 251

|

Overlapping bins in the HTML visualization.

|

**Description**

There is overlap in the member distribution histogram when the number of visualized bins is greater than 10.

**To Reproduce**

```python

import kmapper as km

from sklearn import datasets

data, labels = datasets.make_circles(n_samples=5000, noise=0.03, factor=0.3)

mapper = km.KeplerMapper(verbose=1)

projected_data = mapper.fit_transform(data, projection=[0,1]) # X-Y axis

graph = mapper.map(projected_data, data, cover=km.Cover(n_cubes=10))

NBINS = 20

mapper.visualize(graph, path_html="make_circles_keplermapper_output_5.html",

nbins = NBINS,

title="make_circles(n_samples=5000, noise=0.03, factor=0.3)")

```

With *kepler-mapper* version `2.0.1`.

**Expected behavior**

It would be great if all the histogram bars would adjust their size to accommodate all the desired bins.

**Screenshots**

The above code generates the following:

But I have some internal code that generates this, where the overlap is more evident:

**Additional context**

I was digging around and found that the code that generates the histogram is in the function `set_histogram`

https://github.com/scikit-tda/kepler-mapper/blob/ece5d47f7d65654c588431dd2275197502740066/kmapper/static/kmapper.js#L187-L202

I was thinking it would be possible to modify the width of the bars (just like the height is), however I can't find a clean way to get the parent's width.

I am happy to open a PR with my current, not-so-great solution, but I was wondering if you had any guidance on how to do that.

|

open

|

2023-11-27T10:22:44Z

|

2023-11-27T10:22:44Z

|

https://github.com/scikit-tda/kepler-mapper/issues/251

|

[] |

fferegrino

| 0

|

keras-team/keras

|

python

| 20,402

|

`EpochIterator` initialized with a generator cannot be reset

|

`EpochIterator` initialized with a generator cannot be reset, consequently the following bug might occur: https://github.com/keras-team/keras/issues/20394

Another consequence is that running prediction on an unbuilt model discards samples:

```python

import os

import numpy as np

os.environ["KERAS_BACKEND"] = "jax"

import keras

set_size = 4

def generator():

    for _ in range(set_size):

        yield np.ones((1, 4)), np.ones((1, 1))

model = keras.models.Sequential([keras.layers.Dense(1, activation="relu")])

model.compile(loss="mse", optimizer="adam")

y = model.predict(generator())

print(f"There should be {set_size} predictions, there are {len(y)}.")

```

```

There should be 4 predictions, there are 2.

```
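The general Python behaviour behind this can be shown without Keras: a generator can be iterated only once, so any item consumed ahead of the main loop is gone unless it is put back. The sketch below illustrates that mechanism with hypothetical names (`generator`, `peek`); it is not a description of how `EpochIterator` is implemented:

```python
import itertools


def generator(n):
    for i in range(n):
        yield i


def peek(iterator, count):
    """Take `count` items for inspection and return them plus a restored iterator."""
    peeked = list(itertools.islice(iterator, count))
    # chain() replays the peeked items before continuing with the rest.
    return peeked, itertools.chain(peeked, iterator)


# Consuming an item up front (for example to infer input shapes) loses it.
gen = generator(4)
_ = next(gen)
remaining_after_peek = list(gen)

# Re-chaining the peeked items preserves the full stream.
gen = generator(4)
peeked, restored = peek(gen, 1)
restored_items = list(restored)

print(remaining_after_peek, restored_items)
```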

|

closed

|

2024-10-24T11:31:37Z

|

2024-10-26T17:33:12Z

|

https://github.com/keras-team/keras/issues/20402

|

[

"stat:awaiting response from contributor",

"type:Bug"

] |

nicolaspi

| 2

|

browser-use/browser-use

|

python

| 890

|

Add Mouse Interaction Functions for Full Web Automation

|

### Problem Description

I would like to request support for mouse actions (such as click, scroll up/down, drag, and hover) in browser-use, in addition to the existing keyboard automation.

**Use Case**

I need to access a remote server through a web portal, and browser-use can successfully recognize and interact with standard webpage elements. However, the portal streams a remote desktop interface inside a <canvas> element, which browser-use does not seem to recognize. This limitation prevents me from interacting with the remote interface as I would with a normal web page.

**Problem**

Currently, browser-use appears to only support keyboard interactions but lacks mouse control. Without mouse support, it’s impossible to interact with elements inside streamed remote sessions or complex web applications that rely on mouse-based interactions.

**Impact**

Adding mouse control would enable seamless automation for remote desktops, cloud-based IDEs, and other streamed environments where UI elements are rendered inside a <canvas>. This would make browser-use much more versatile and applicable to a wider range of use cases.

Thank you for considering this feature request! Looking forward to any updates on this.

### Proposed Solution

• Add support for mouse functions such as:

• Left-click, right-click, double-click

• Scrolling up/down

• Drag-and-drop

• Mouse movement and hovering

This enhancement would significantly improve browser-use for automating interactions with web applications that require both keyboard and mouse input.

### Alternative Solutions

_No response_

### Additional Context

_No response_

|

open

|

2025-02-27T09:10:28Z

|

2025-03-19T12:00:30Z

|

https://github.com/browser-use/browser-use/issues/890

|

[

"enhancement"

] |

JamesChen9415

| 3

|

ultralytics/ultralytics

|

computer-vision

| 18,965

|

benchmark error

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and found no similar bug report.

### Ultralytics YOLO Component

_No response_

### Bug

The script I wrote is

> from ultralytics.utils.benchmarks import benchmark

> benchmark(model="yolo11n.pt", data="coco8.yaml", imgsz=640, format="onnx")

(the same as https://docs.ultralytics.com/zh/modes/benchmark/#usage-examples), but I got this error:

ONNX: starting export with onnx 1.17.0 opset 19...

ONNX: slimming with onnxslim 0.1.48...

ONNX: export success ✅ 0.7s, saved as 'yolo11n.onnx' (10.2 MB)

Export complete (0.9s)

Results saved to /data/code/yolo11/ultralytics

Predict: yolo predict task=detect model=yolo11n.onnx imgsz=640

Validate: yolo val task=detect model=yolo11n.onnx imgsz=640 data=/usr/src/ultralytics/ultralytics/cfg/datasets/coco.yaml

Visualize: https://netron.app

ERROR ❌️ Benchmark failure for ONNX: model='/data/code/yolo11/ultralytics/ultralytics/cfg/datasets/coco.yaml' is not a supported model format. Ultralytics supports: ('PyTorch', 'TorchScript', 'ONNX', 'OpenVINO', 'TensorRT', 'CoreML', 'TensorFlow SavedModel', 'TensorFlow GraphDef', 'TensorFlow Lite', 'TensorFlow Edge TPU', 'TensorFlow.js', 'PaddlePaddle', 'MNN', 'NCNN', 'IMX', 'RKNN')

See https://docs.ultralytics.com/modes/predict for help.

Setup complete ✅ (8 CPUs, 61.4 GB RAM, 461.1/499.8 GB disk)

Traceback (most recent call last):

File "/data/code/yolo11/ultralytics/onnx_run.py", line 7, in <module>

benchmark(model="yolo11n.pt", data="coco8.yaml", imgsz=640, format="onnx")

File "/data/code/yolo11/ultralytics/ultralytics/utils/benchmarks.py", line 183, in benchmark

df = pd.DataFrame(y, columns=["Format", "Status❔", "Size (MB)", key, "Inference time (ms/im)", "FPS"])

UnboundLocalError: local variable 'key' referenced before assignment
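The final `UnboundLocalError` looks like a secondary symptom of the earlier benchmark failure: a name that is assigned only when an iteration succeeds is read after the loop. The sketch below reproduces that pattern in isolation. The functions `summarize` and `summarize_safe` are hypothetical and are not the code in `benchmarks.py`:

```python
def summarize(formats):
    rows = []
    for name in formats:
        try:
            if name != "ok":
                raise ValueError(f"{name} failed")
            key = "metric"  # only bound when an iteration succeeds
            rows.append((name, key))
        except ValueError:
            rows.append((name, None))
    return key, rows  # raises UnboundLocalError if every iteration failed


def summarize_safe(formats, default_key="metric"):
    key = default_key  # bind the name before the loop
    rows = []
    for name in formats:
        try:
            if name != "ok":
                raise ValueError(f"{name} failed")
            rows.append((name, key))
        except ValueError:
            rows.append((name, None))
    return key, rows


try:
    summarize(["bad"])
    error_name = None
except UnboundLocalError as exc:
    error_name = type(exc).__name__

safe_result = summarize_safe(["bad"])
print(error_name, safe_result)
```

In this pattern the error worth investigating is the first one (here, the failed format), since the second disappears once any iteration succeeds.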

### Environment

Ultralytics 8.3.70 🚀 Python-3.10.0 torch-2.6.0+cu124 CUDA:0 (NVIDIA L20, 45589MiB)

Setup complete ✅ (8 CPUs, 61.4 GB RAM, 461.2/499.8 GB disk)

OS Linux-5.15.0-124-generic-x86_64-with-glibc2.35

Environment Linux

Python 3.10.0

Install pip

RAM 61.43 GB

Disk 461.2/499.8 GB

CPU Intel Xeon Gold 6462C

CPU count 8

GPU NVIDIA L20, 45589MiB

GPU count 1

CUDA 12.4

numpy ✅ 2.1.1<=2.1.1,>=1.23.0

matplotlib ✅ 3.10.0>=3.3.0

opencv-python ✅ 4.11.0.86>=4.6.0

pillow ✅ 11.1.0>=7.1.2

pyyaml ✅ 6.0.2>=5.3.1

requests ✅ 2.32.3>=2.23.0

scipy ✅ 1.15.1>=1.4.1

torch ✅ 2.6.0>=1.8.0

torch ✅ 2.6.0!=2.4.0,>=1.8.0; sys_platform == "win32"

torchvision ✅ 0.21.0>=0.9.0

tqdm ✅ 4.67.1>=4.64.0

psutil ✅ 6.1.1

py-cpuinfo ✅ 9.0.0

pandas ✅ 2.2.3>=1.1.4

seaborn ✅ 0.13.2>=0.11.0

ultralytics-thop ✅ 2.0.14>=2.0.0

### Minimal Reproducible Example

from ultralytics.utils.benchmarks import benchmark

benchmark(model="yolo11n.pt", data="coco8.yaml", imgsz=640, format="onnx")

### Additional

_No response_

### Are you willing to submit a PR?

- [ ] Yes I'd like to help by submitting a PR!

|

closed

|

2025-02-01T10:20:21Z

|

2025-02-10T07:03:48Z

|

https://github.com/ultralytics/ultralytics/issues/18965

|

[

"non-reproducible",

"exports"

] |

AlexiAlp

| 14

|

lukas-blecher/LaTeX-OCR

|

pytorch

| 281

|

Train the model with my own data

|

Hi, first of all, thanks for the great work!

I want to train your model with my own data, but it doesn't work and I don't know why.

Training with your data works perfectly, and I have formatted my data like your CROHME and pdf data.

Generating the dataset also works without problems, but when I start the training I get an error telling me the tensors have different sizes. Do you have any tips for solving the problem?

<img width="1200" alt="Bildschirmfoto 2023-06-12 um 13 08 07" src="https://github.com/lukas-blecher/LaTeX-OCR/assets/93684165/95b3fb62-9706-4349-93a5-d1cd5018a643">

|

closed

|

2023-06-12T11:08:32Z

|

2023-06-15T08:59:35Z

|

https://github.com/lukas-blecher/LaTeX-OCR/issues/281

|

[] |

ficht74

| 10

|

CorentinJ/Real-Time-Voice-Cloning

|

tensorflow

| 516

|

ERROR: No matching distribution found for tensorflow==1.15

|

I'm trying to install the prerequisites but am unable to install tensorflow version 1.15. Does anyone have a workaround? Using python3-pip.

|

closed

|

2020-09-01T04:13:46Z

|

2020-09-04T05:12:03Z

|

https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/516

|

[] |

therealjr

| 3

|

aeon-toolkit/aeon

|

scikit-learn

| 1,970

|

[BUG] visualize_best_shapelets_one_class not handling RTSD correctly

|

### Describe the bug

For RDST classifiers this method plots the same shapelet three times at different levels of importance.

RDST transforms the time series space into an array of shape (n_cases, 3*n_shapelets), where three features relate to one shapelet. The visualize_best_shapelets_one_class method ranks the importance of each feature according to the weights from the classifier. In the case of ST this works because each feature is one shapelet. The method calls _get_shp_importance, which handles RDST specially by grouping the three features under one shapelet index; however, this index still appears three times in the returned list. Instead, the position of this index should be handled by either:

- Averaging the positions of the shapelet's three features.

- Returning only the best position out of the three features.
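
The second option could be sketched roughly as follows. This is only an illustration, not the aeon implementation: `rank_shapelets` is a hypothetical helper, and it assumes the three features of shapelet `i` sit in columns `3*i` to `3*i + 2` of the transformed array.

```python
def rank_shapelets(feature_ranking, features_per_shapelet=3):
    """Collapse a ranking of feature indices into a ranking of shapelets.

    feature_ranking lists feature indices from most to least important.
    Each shapelet keeps the position of its best-ranked feature, so it
    appears exactly once in the result.
    """
    seen = set()
    shapelet_ranking = []
    for feature_idx in feature_ranking:
        # Assumption: features of one shapelet are stored contiguously.
        shapelet_idx = feature_idx // features_per_shapelet
        if shapelet_idx not in seen:
            seen.add(shapelet_idx)
            shapelet_ranking.append(shapelet_idx)
    return shapelet_ranking


# Nine features belonging to three shapelets, ordered by importance.
print(rank_shapelets([4, 0, 5, 7, 1, 3, 2, 8, 6]))
```

Averaging the three positions instead would only change how the per-shapelet position is computed; the deduplication step stays the same.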

### Steps/Code to reproduce the bug

_No response_

### Expected results

Each shapelet can only be plotted once.

### Actual results

Each shapelet is plotted three times according to the importance of each of its three features.

### Versions

_No response_

|

closed

|

2024-08-14T06:11:14Z

|

2024-08-17T18:44:00Z

|

https://github.com/aeon-toolkit/aeon/issues/1970

|

[

"bug"

] |

IRKnyazev

| 2

|

dmlc/gluon-cv

|

computer-vision

| 1,651

|

Fine-tuning SOTA video models on your own dataset - Sign Language

|

Sir, I am trying to implement a sign classifier using this API as part of my final year college project.

Data set: http://facundoq.github.io/datasets/lsa64/

I followed the Fine-tuning SOTA video models on your own dataset tutorial and fine-tuned

1. i3d_resnet50_v1_custom

2. slowfast_4x16_resnet50_custom

The plotted graph shows almost 90% accuracy, but when I run inference I get misclassifications, even on the videos I used for training.

I am stuck, so any guidance you could give would be helpful.

Thank you

**My data loader for I3D:**

```

num_gpus = 1
ctx = [mx.gpu(i) for i in range(num_gpus)]
transform_train = video.VideoGroupTrainTransform(size=(224, 224), scale_ratios=[1.0, 0.8], mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
per_device_batch_size = 5
num_workers = 0
batch_size = per_device_batch_size * num_gpus

train_dataset = VideoClsCustom(root=os.path.expanduser('DataSet/train/'),
                               setting=os.path.expanduser('DataSet/train/train.txt'),
                               train=True,
                               new_length=64,
                               new_step=2,
                               video_loader=True,
                               use_decord=True,
                               transform=transform_train)
print('Load %d training samples.' % len(train_dataset))
train_data = gluon.data.DataLoader(train_dataset, batch_size=batch_size,
                                   shuffle=True, num_workers=num_workers)

```

**Inference running:**

```

from gluoncv.utils.filesystem import try_import_decord
decord = try_import_decord()

video_fname = 'DataSet/test/006_001_001.mp4'
vr = decord.VideoReader(video_fname)
frame_id_list = range(0, 64, 2)
video_data = vr.get_batch(frame_id_list).asnumpy()
clip_input = [video_data[vid, :, :, :] for vid, _ in enumerate(frame_id_list)]

transform_fn = video.VideoGroupValTransform(size=(224, 224), mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
clip_input = transform_fn(clip_input)
clip_input = np.stack(clip_input, axis=0)
clip_input = clip_input.reshape((-1,) + (32, 3, 224, 224))
clip_input = np.transpose(clip_input, (0, 2, 1, 3, 4))
print('Video data is read and preprocessed.')

# Running the prediction
pred = net(nd.array(clip_input, ctx = mx.gpu(0)))
topK = 5
ind = nd.topk(pred, k=topK)[0].astype('int')
print('The input video clip is classified to be')
for i in range(topK):
    print('\t[%s], with probability %.3f.'%
          (CLASS_MAP[ind[i].asscalar()], nd.softmax(pred)[0][ind[i]].asscalar()))

```

|

closed

|

2021-04-20T18:39:30Z

|

2021-05-05T19:18:51Z

|

https://github.com/dmlc/gluon-cv/issues/1651

|

[] |

aslam-ep

| 1

|

abhiTronix/vidgear

|

dash

| 426

|

[Question]: How do i directly get the encoded frames???

|

### Issue guidelines

- [X] I've read the [Issue Guidelines](https://abhitronix.github.io/vidgear/latest/contribution/issue/#submitting-an-issue-guidelines) and wholeheartedly agree.

### Issue Checklist

- [X] I have searched open or closed [issues](https://github.com/abhiTronix/vidgear/issues) for my problem and found nothing related or helpful.

- [X] I have read the [Documentation](https://abhitronix.github.io/vidgear/latest) and found nothing related to my problem.

- [X] I have gone through the [Bonus Examples](https://abhitronix.github.io/vidgear/latest/help/get_help/#bonus-examples) and [FAQs](https://abhitronix.github.io/vidgear/latest/help/get_help/#frequently-asked-questions) and found nothing related or helpful.

### Describe your Question

I am able to use vidgear to read frames from a USB camera and encode them to H.264 with FFmpeg. I don't want to write the output to an MP4 file or similar; I just want the encoded frames, as they arrive, in a local variable, which I will then use to stream directly.

### Terminal log output(Optional)

_No response_

### Python Code(Optional)

_No response_

### VidGear Version

0.3.3

### Python version

3.8

### Operating System version

windows

### Any other Relevant Information?

_No response_

|

closed

|

2024-11-12T06:09:49Z

|

2024-11-13T07:39:30Z

|

https://github.com/abhiTronix/vidgear/issues/426

|

[

"QUESTION :question:",

"SOLVED :checkered_flag:"

] |

Praveenstein

| 1

|

gevent/gevent

|

asyncio

| 1,936

|

gevent 1.5.0 specifies a cython file as a prerequisite, causing cython to run only when it was installed after 2020

|

When installing [`spack install py-gevent`](https://github.com/spack/spack) it resolves to

```

py-gevent@1.5.0

py-cffi@1.15.1

libffi@3.4.4

py-pycparser@2.21

py-cython@0.29.33

py-greenlet@1.1.3

py-pip@23.0

py-setuptools@63.0.0

py-wheel@0.37.1

python@3.10.8

...

```

and attempts a build using `pypi` tarballs.

### Description:

`py-gevent` re-cythonizes files because it has a prereq specified on cython files:

```

Compiling src/gevent/resolver/cares.pyx because it depends on /opt/linux-ubuntu20.04-x86_64/gcc-11.1.0/py-cython-0.29.32-wistmswtv7bm55wpadctrr3eqb7jnona/lib/python3.10/site-packages/Cython/Includes/libc/string.pxd.

```

So, depending on what date you've installed cython on your system, it runs cython or not.

It's like writing a makefile that specifies a system library as a prereq by absolute path...
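
To illustrate why the install date matters, here is a minimal sketch of a timestamp-based rebuild check. It assumes the build compares modification times of the generated C file against its dependencies, which is what the log line above suggests; `needs_rebuild` and the file names are hypothetical and not taken from gevent or Cython.

```python
import os
import tempfile


def needs_rebuild(output_path, dependency_paths):
    """Return True if the output is missing or older than any dependency."""
    if not os.path.exists(output_path):
        return True
    output_mtime = os.path.getmtime(output_path)
    return any(os.path.getmtime(dep) > output_mtime for dep in dependency_paths)


with tempfile.TemporaryDirectory() as tmp:
    generated_c = os.path.join(tmp, "cares.c")    # stands in for the shipped C file
    system_pxd = os.path.join(tmp, "string.pxd")  # stands in for Cython's own include
    for path in (generated_c, system_pxd):
        with open(path, "w") as fh:
            fh.write("")

    # The generated file carries a timestamp from 2020.
    os.utime(generated_c, (1_600_000_000, 1_600_000_000))

    # Cython installed before that date: the dependency looks older.
    os.utime(system_pxd, (1_500_000_000, 1_500_000_000))
    print(needs_rebuild(generated_c, [system_pxd]))

    # Cython installed after that date: the dependency looks newer.
    os.utime(system_pxd, (1_700_000_000, 1_700_000_000))
    print(needs_rebuild(generated_c, [system_pxd]))
```

The first check reports that no rebuild is needed and the second reports that one is, even though the package sources are identical in both cases.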

Also, is there a way to tell gevent setup that it should always run cython no matter what?

|

closed

|

2023-03-10T13:38:51Z

|

2023-03-10T16:31:47Z

|

https://github.com/gevent/gevent/issues/1936

|

[] |

haampie

| 5

|

graphql-python/gql

|

graphql

| 179

|

Using a single session with FastAPI

|

I have a Hasura GraphQL engine as a server with a few small services acting like webhooks for business logic and handling database events. One of these services has a REST API and needs to retrieve data from the GraphQL engine or run mutations.

Due to performance concerns I have decided to rewrite one of the services with FastAPI in order to leverage async.

I am quite new to async in Python in general which is why I took my time to go through gql documentation.

It is my understanding that it is ideal to keep a single async client session throughout the life span of the service. It is also my understanding that the only way of getting an async session is using the `async with client as session:` syntax.

That poses the question of how I can wrap the whole app inside of the `async with`. Or perhaps I am missing something and there is a better way of doing this.
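
One possible pattern, sketched here with the standard library only, is to enter the async context manager once at startup and exit it at shutdown through `contextlib.AsyncExitStack`. `FakeClient` and `SessionHolder` are hypothetical stand-ins written for this illustration; they are not part of gql or FastAPI.

```python
import asyncio
from contextlib import AsyncExitStack


class FakeClient:
    """Hypothetical stand-in for a client used as `async with client as session`."""

    def __init__(self):
        self.connected = False

    async def __aenter__(self):
        self.connected = True
        return self  # plays the role of the session

    async def __aexit__(self, exc_type, exc, tb):
        self.connected = False

    async def execute(self, query):
        if not self.connected:
            raise RuntimeError("session is closed")
        return {"echo": query}


class SessionHolder:
    """Opens the session once at startup and closes it at shutdown."""

    def __init__(self, client):
        self._client = client
        self._stack = AsyncExitStack()
        self.session = None

    async def startup(self):
        # Equivalent to entering `async with client as session`, without the block.
        self.session = await self._stack.enter_async_context(self._client)

    async def shutdown(self):
        # Runs the client's __aexit__, closing the session.
        await self._stack.aclose()
        self.session = None


async def main():
    client = FakeClient()
    holder = SessionHolder(client)
    await holder.startup()
    first = await holder.session.execute("{ a }")
    second = await holder.session.execute("{ b }")
    await holder.shutdown()
    return first, second, client.connected


print(asyncio.run(main()))
```

In a FastAPI service, `startup` and `shutdown` could presumably be called from the application's startup and shutdown event handlers, so every request handler reuses `holder.session`.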

|

closed

|

2020-12-07T20:32:48Z

|

2022-07-03T13:54:29Z

|

https://github.com/graphql-python/gql/issues/179

|

[

"type: feature"

] |

antonkravc

| 11

|

K3D-tools/K3D-jupyter

|

jupyter

| 426

|

model_matrix not updated when using manipulators

|

* K3D version: 2.15.2

* Python version: 3.8.13

* Operating System: Ubuntu 20.04 (backend), Windows 10 Pro (frontend)

### Description

When manipulating an object in the plot's "manipulate" mode, the object's model matrix doesn't get updated and the "observe" callback is not fired either.

I found that it's probably because of this commented out code: https://github.com/K3D-tools/K3D-jupyter/blob/main/js/src/providers/threejs/initializers/Manipulate.js#L18

and could probably simply create a PR reversing this, but I guess there is some broader context of this change I don't know :) (https://github.com/K3D-tools/K3D-jupyter/commit/46d508b39255fc16e2ed7704fcadb5dae6d61952#diff-6863658dc28ee81ff40444d18191cad7547592119a5f4a302ecee062b6bb428f).

### Simple example

```python

import k3d

import k3d.platonic

plot = k3d.plot()

cube = k3d.platonic.Cube().mesh

plot += cube

plot

```

```python

plot.mode = "manipulate"

plot.manipulate_mode = "rotate"

cube.observe(print)

```

When manipulating the object the callback is not fired and when checking the model_matrix directly:

```python

cube.model_matrix

```

it's still an identity matrix:

```

array([[1., 0., 0., 0.],

[0., 1., 0., 0.],

[0., 0., 1., 0.],

[0., 0., 0., 1.]], dtype=float32)

```

|

open

|

2023-06-07T07:47:37Z

|

2023-08-16T22:36:44Z

|

https://github.com/K3D-tools/K3D-jupyter/issues/426

|

[] |

wmalara

| 0

|

dynaconf/dynaconf

|

flask

| 331

|

[RFC] Add support for Pydantic BaseSettings

|

Pydantic has a schema class BaseSettings that can be integrated with Dynaconf validators.

|

closed

|

2020-04-29T14:24:51Z

|

2020-09-12T04:20:13Z

|

https://github.com/dynaconf/dynaconf/issues/331

|

[

"Not a Bug",

"RFC"

] |

rochacbruno

| 2

|

Textualize/rich

|

python

| 3,647

|

[BUG] legacy_windows is True when is_terminal is False

|

- [x] I've checked [docs](https://rich.readthedocs.io/en/latest/introduction.html) and [closed issues](https://github.com/Textualize/rich/issues?q=is%3Aissue+is%3Aclosed) for possible solutions.

- [x] I can't find my issue in the [FAQ](https://github.com/Textualize/rich/blob/master/FAQ.md).

**Describe the bug**

The console object's `legacy_windows` is True when `is_terminal` is False. I think this is a bug, because the Windows console API cannot be called successfully in a non-terminal environment.

```python

# foo.py

from rich.console import Console

console = Console()

print(console.legacy_windows)

```

```shell

> python foo.py

False

> python foo.py | echo

True

```

**Platform**

I use terminal in VS Code on Windows 10.

|

open

|

2025-03-02T03:31:34Z

|

2025-03-02T03:31:50Z

|

https://github.com/Textualize/rich/issues/3647

|

[

"Needs triage"

] |

xymy

| 1

|

pytorch/pytorch

|

python

| 149,621

|

`torch.cuda.manual_seed` ignored

|

### 🐛 Describe the bug

When using torch.compile, torch.cuda.manual_seed/torch.cuda.manual_seed_all/torch.cuda.random.manual_seed do not seem to properly enforce reproducibility across multiple calls to a compiled function.

# torch.cuda.manual_seed

Code:

```python

import torch
import torch._inductor.config

torch._inductor.config.fallback_random = True

@torch.compile
def foo():
    # Set the GPU seed
    torch.cuda.manual_seed(3)
    # Create a random tensor on the GPU.
    # If a CUDA device is available, the tensor will be created on CUDA.
    return torch.rand(4, device='cuda' if torch.cuda.is_available() else 'cpu')

# Call the compiled function twice
print("cuda.is_available:", torch.cuda.is_available())
result1 = foo()
result2 = foo()
print(result1)
print(result2)

```

Output:

```

cuda.is_available: True

tensor([0.2501, 0.4582, 0.8599, 0.0313], device='cuda:0')

tensor([0.3795, 0.0543, 0.4973, 0.4942], device='cuda:0')

```

# `torch.cuda.manual_seed_all`

Code:

```

import torch
import torch._inductor.config

torch._inductor.config.fallback_random = True

@torch.compile
def foo():
    # Reset CUDA seeds
    torch.cuda.manual_seed_all(3)
    # Generate a random tensor on the GPU
    return torch.rand(4, device='cuda')

# Call the compiled function twice
result1 = foo()
result2 = foo()
print(result1)
print(result2)

```

Output:

```

tensor([0.0901, 0.8324, 0.4412, 0.2539], device='cuda:0')

tensor([0.5561, 0.6098, 0.8558, 0.1980], device='cuda:0')

```

# torch.cuda.random.manual_seed

Code

```

import torch
import torch._inductor.config

torch._inductor.config.fallback_random = True

# Ensure a CUDA device is available.
if not torch.cuda.is_available():
    print("CUDA is not available on this system.")

@torch.compile
def foo():
    # Reset GPU random seed
    torch.cuda.random.manual_seed(3)
    # Generate a random tensor on GPU
    return torch.rand(4, device='cuda')

# Call the compiled function twice
result1 = foo()
result2 = foo()
print(result1)
print(result2)

```

Output:

```

tensor([8.1055e-01, 4.8494e-01, 8.3937e-01, 6.7405e-04], device='cuda:0')

tensor([0.4365, 0.5669, 0.7746, 0.8702], device='cuda:0')

```

### Versions

torch 2.6.0

cc @pbelevich @chauhang @penguinwu

|

open

|

2025-03-20T12:42:27Z

|

2025-03-24T15:46:40Z

|

https://github.com/pytorch/pytorch/issues/149621

|

[

"triaged",

"module: random",

"oncall: pt2"

] |

vwrewsge

| 3

|

horovod/horovod

|

tensorflow

| 3,088

|

Add terminate_on_nan flag to spark lightning estimator

|

**Is your feature request related to a problem? Please describe.**

PyTorch Lightning's Trainer API has a parameter that controls the behavior when NaN output is encountered. This needs to be added to the estimator's API so that Horovod users can control that behavior.

**Describe the solution you'd like**

Add terminate_on_nan to estimator and pass it down to trainer constructor.

|

closed

|

2021-08-06T17:47:05Z

|

2021-08-09T18:21:13Z

|

https://github.com/horovod/horovod/issues/3088

|

[

"enhancement"

] |

Tixxx

| 1

|

litestar-org/litestar

|

pydantic

| 3,677

|

Enhancement: Allow streaming Templates to serve very large content

|

### Summary

The render() method of Template class generates the entire content of the template at once.

But for large content, this can be inadequate.

For instance, for jinja2 one can use generators and also call the generate() method to yield the contents in a streaming fashion.

### Basic Example

_No response_

### Drawbacks and Impact

_No response_

### Unresolved questions

_No response_

|

open

|

2024-08-20T13:07:38Z

|

2025-03-20T15:54:52Z

|

https://github.com/litestar-org/litestar/issues/3677

|

[

"Enhancement",

"Templating",

"Responses"

] |

ptallada

| 0

|

slackapi/bolt-python

|

fastapi

| 600

|

trigger_exchanged error using AWS Lambda + Workflow Steps

|

Hi,

I created a workflow step at the company where I work to automate the approval of requests without having to open a ticket. Basically I have a workflow where the user fills in some information and after submitting the form, a message arrives for the manager with a button to approve or deny the request.

The issue is that I need to validate the information entered in the workflow and for that I need to connect to other APIs etc, and this can all take up to 10 seconds before validating the data and sending the request.

I believe that, because I can't respond within Slack's 3-second limit, Slack retries the requests, and as a result duplicate request messages are sent.
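
As a rough illustration of the retry behavior, the sketch below detects retried deliveries through the `X-Slack-Retry-Num` header that Slack adds to retried event requests. `is_slack_retry` and `handle_request` are hypothetical helpers, not Bolt APIs, and whether simply skipping retries is acceptable depends on the app.

```python
def is_slack_retry(headers):
    """Return True if the request headers mark a retried delivery."""
    normalized = {name.lower(): value for name, value in headers.items()}
    return "x-slack-retry-num" in normalized


def handle_request(headers, process):
    """Acknowledge retries without repeating the slow work (hypothetical handler)."""
    if is_slack_retry(headers):
        return {"statusCode": 200, "body": "retry ignored"}
    process()
    return {"statusCode": 200, "body": "processed"}


sent = []
print(handle_request({"Content-Type": "application/json"}, lambda: sent.append("request")))
print(handle_request({"X-Slack-Retry-Num": "1"}, lambda: sent.append("request")))
print(sent)
```

Only the first delivery triggers the slow work; the retried delivery is acknowledged and skipped.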

These are the AWS logs where requests are repeated and the error occurs:

If this is really the problem and I can solve it using Lazy listeners, I would like to understand how to use them in workflow steps.

This is the part of my project where I do the validations in the execute method of the workflow:

```

St = SlackTools()

def execute(step, complete, fail):
    inputs = step["inputs"]
    sendername = inputs["senderName"]["value"].strip()
    sendermail = inputs["senderEmail"]["value"].strip()
    sendermention = inputs["senderMention"]["value"].strip()
    senderid = sendermention[2:len(sendermention)-1]
    awsname = inputs["awsName"]["value"].strip()
    awsrole = inputs["awsRole"]["value"].strip()
    squad = inputs["squad"]["value"].strip()
    reason = inputs["reason"]["value"].strip()
    managermail = inputs["managerMail"]["value"].strip()

    outputs = {
        "senderName": sendername,
        "senderEmail": sendermail,
        "senderMention": sendermention,
        "awsName": awsname,
        "awsRole": awsrole,
        "squad": squad,
        "reason": reason,
        "managerMail": managermail
    }

    checkinputname = Ot.check_inputs(inputs, INPUT_AWS_NAME)
    checkinputmanager = Ot.check_inputs(inputs, INPUT_MANAGER_MAIL)

    # Check inputs
    if not checkinputname or not checkinputmanager:
        if not checkinputname:
            errmsg = {"message": f"Acesso {awsname} não encontrado! Olhar no Pin fixado do canal o nome de cada AWS."}
            blocklayout = FAIL_MSG_AWS_NAME
        else:
            errmsg = {"message": f"Gestor {managermail} não encontrado no ambiente!"}
            blocklayout = FAIL_MSG_MANAGER_MAIL
        fail(error=errmsg)
        blockfail = get_workflow_block_fail(sendermention, managermail, awsname, awsrole, blocklayout)
        send_block_fail_message(blockfail, senderid)  # Send fail block message to user
    else:
        try:
            manager = St.app().client.users_lookupByEmail(email=managermail)
            managerid = manager["user"]["id"]
            blocksuccess = get_workflow_block_success(sendermention, awsname)
            send_request_aws_message(sendername, sendermail, sendermention,
                                     awsname, awsrole, squad, reason, managermail, managerid)  # Send block message to manager
        except Exception as e:
            if "users_not_found" in str(e):
                error = {"message": f"Gestor {managermail} não encontrado no ambiente!"}
                fail(error=error)
                blocklayout = FAIL_MSG_MANAGER_MAIL
                blockfail = get_workflow_block_fail(sendermention, managermail, awsname, awsrole, blocklayout)
                send_block_fail_message(blockfail, senderid)  # Send fail block message to user
            else:
                error = {"message": "Just testing step failure! {}".format(e)}
                fail(error=error)
                St.webhook_directly(WEBHOOK_LOGS_URI_FAIL, e, FAIL)
        else:
            St.app().client.chat_postMessage(text="Envio com sucesso de solicitação de acesso AWS", blocks=blocksuccess,
                                             channel=senderid)  # Send success block message to user
            complete(outputs=outputs)

```

|

closed

|

2022-02-24T19:36:40Z

|

2022-02-28T19:35:21Z

|

https://github.com/slackapi/bolt-python/issues/600

|

[

"question"

] |

bsouzagit

| 4

|

mouredev/Hello-Python

|

fastapi

| 80

|

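What should I do if a platform has blacklisted me, I cannot withdraw funds, and the channel always shows as under maintenance?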

|

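If you need help, add the blacklist-recovery consultant on WeChat 《zhkk8683》 or QQ: 1923630145

Being blacklisted by a platform is a real headache, because it means you cannot withdraw the funds you hold on the platform. First, you need to understand why the platform blacklisted you. If you violated the platform's policies, your account may be suspended or restricted. In that case, you need to read the platform's user agreement and contact the platform's customer support team to find out how to resolve the problem.

If you believe your account was blacklisted by mistake, you should contact the platform's customer support team immediately and provide all the necessary information. You may need to provide proof of identity, your transaction history, and other documents that verify your identity. After providing this information, you need to wait patiently for the platform's reply, which usually takes several working days. If you were blacklisted because your transactions are suspected of fraud, you need to provide evidence to prove your innocence.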

|

closed

|

2024-10-07T06:35:45Z

|

2024-10-16T05:27:09Z

|

https://github.com/mouredev/Hello-Python/issues/80

|

[] |

xiao691

| 0

|

JaidedAI/EasyOCR

|

pytorch

| 914

|

Greek Language

|

First of all, thank you so much for this wonderful work. Is the Greek language not supported yet?

If not, please tell me how I can train an EasyOCR model for the Greek language.

|

open

|

2022-12-23T10:09:31Z

|

2023-01-14T04:57:15Z

|

https://github.com/JaidedAI/EasyOCR/issues/914

|

[] |

Ham714

| 1

|

trevorstephens/gplearn

|

scikit-learn

| 123

|

Crossover only results in one offspring

|

Usually crossover produces two individuals by symmetrically replacing the subtrees in each other.

The crossover operator in gplearn however only produces one offspring, is there a reason it was implemented that way?

The ramification of this implementation is that genetic material from the parent is completely eradicated from the gene pool, because it is simply overwritten by genetic material from the donor.

When implemented in a symmetric fashion, the genetic material is swapped and both parts are kept in the gene pool, which may lead to better results.

It would be relatively simple to do it.

The first one to return is as it is in the code:

https://github.com/trevorstephens/gplearn/blob/07b41a150dbf7e16645268b88405514b7f23590a/gplearn/_program.py#L549-L551

The second individual would be:

```

(donor[:donor_start] +
 self.program[start:end] +
 donor[donor_end:])

```

Then a few adjustments would be needed in the function _parallel_evolve in genetic.py (because we would add two individuals instead of one).
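
Put together, a symmetric crossover could look roughly like the sketch below. It assumes programs are flat lists in prefix order and that the subtree boundaries are already known; `symmetric_crossover` is a hypothetical standalone function, not gplearn's method.

```python
def symmetric_crossover(parent, donor, start, end, donor_start, donor_end):
    """Swap parent[start:end] with donor[donor_start:donor_end].

    Returns both offspring, so neither subtree is lost from the gene pool.
    """
    first = parent[:start] + donor[donor_start:donor_end] + parent[end:]
    second = donor[:donor_start] + parent[start:end] + donor[donor_end:]
    return first, second


parent = ["add", "x0", "mul", "x1", "x2"]
donor = ["sub", "div", "x3", "x4", "x5"]

# Swap the parent's "mul x1 x2" subtree with the donor's "div x3 x4" subtree.
first, second = symmetric_crossover(parent, donor, 2, 5, 1, 4)
print(first)
print(second)
```

The first offspring matches what the current code returns; the second one carries the subtree that would otherwise be discarded.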

Evolutionarily speaking, it may even be better that we only add one offspring from the crossover, or perhaps in the end it makes little difference whether we do the operation twice, adding one individual each time, or once, adding two.

Opinions?

|

closed

|

2019-01-11T17:48:48Z

|

2019-02-17T10:21:30Z

|

https://github.com/trevorstephens/gplearn/issues/123

|

[] |

hwulfmeyer

| 3

|

scanapi/scanapi

|

rest-api

| 4

|

Implement Query Params

|

Similar to `headers` implementation

```yaml

api:
  base_url: ${BASE_URL}
  headers:
    Authorization: ${BEARER_TOKEN}
  params:
    per_page: 10

```

|

closed

|

2019-07-21T19:20:54Z

|

2019-08-01T13:08:31Z

|

https://github.com/scanapi/scanapi/issues/4

|

[

"Feature",

"Good First Issue"

] |

camilamaia

| 0

|

miguelgrinberg/python-socketio

|

asyncio

| 195

|

Default ping_timeout is too high for React Native

|

Related issues:

https://github.com/facebook/react-native/issues/12981

https://github.com/socketio/socket.io/issues/3054

Fix:

```py

Socket = socketio.AsyncServer(

ping_timeout=30,

ping_interval=30

)

```

|

closed

|

2018-07-23T15:41:15Z

|

2019-01-27T08:59:21Z

|

https://github.com/miguelgrinberg/python-socketio/issues/195

|

[

"question"

] |

Rybak5611

| 1

|

autogluon/autogluon

|

data-science

| 4,486

|

use in pyspark

|

## Description

from autogluon.tabular import TabularPredictor

Can TabularPredictor use the Spark engine to deal with big data?

## References

|

closed

|

2024-09-23T10:45:40Z

|

2024-09-26T18:12:28Z

|

https://github.com/autogluon/autogluon/issues/4486

|

[

"enhancement",

"module: tabular"

] |

hhk123

| 1

|

modin-project/modin

|

pandas

| 7,139

|

Simplify Modin on Ray installation

|

Details in https://github.com/modin-project/modin/pull/6955#issue-2147677569

|

closed

|

2024-04-02T11:36:26Z

|

2024-05-02T13:32:36Z

|

https://github.com/modin-project/modin/issues/7139

|

[

"dependencies 🔗"

] |

anmyachev

| 0

|

jupyterlab/jupyter-ai

|

jupyter

| 972

|

Create a kernel so you can have an AI notebook with context

|

<!-- Welcome! Thank you for contributing. These HTML comments will not render in the issue, but you can delete them once you've read them if you prefer! -->

<!--

Thanks for thinking of a way to improve JupyterLab. If this solves a problem for you, then it probably solves that problem for lots of people! So the whole community will benefit from this request.

Before creating a new feature request please search the issues for relevant feature requests.

-->

### Problem

I would like to have AI conversations in a notebook file with the file as the context. New Notebook would be a new conversation

### Proposed Solution

Create a kernel rather than magics in another kernel. Use for context

### Additional context

The notebook format is great for "call and response" like a chat. The current abilities do not let you create a notebook where all "code" is evaluated by the AI chat. You could preface every cell with `%%ai` but that is cumbersome. Also doesn't seem to save context (but that may be user setting)

|

open

|

2024-08-29T19:49:28Z

|

2024-08-29T19:49:28Z

|

https://github.com/jupyterlab/jupyter-ai/issues/972

|

[

"enhancement"

] |

Jwink3101

| 0

|

Hironsan/BossSensor

|

computer-vision

| 3

|

memory problem?

|

It seems you read all the images into a cache. Will that be a problem if the number of images is huge, e.g. 100k training images?

I also use Keras, but I use its flow_from_directory method instead.

Thanks for your code.

I got some inspiration from it.

|

open

|

2016-12-24T07:00:16Z

|

2016-12-24T22:19:55Z

|

https://github.com/Hironsan/BossSensor/issues/3

|

[] |

staywithme23

| 0

|

axnsan12/drf-yasg

|

django

| 700

|

How to group swagger API endpoints with drf_yasg

|

My related question on Stack Overflow: https://stackoverflow.com/questions/66001064/how-to-group-swagger-api-endpoints-with-drf-yasg-django

I am not able to group "v2" in Swagger. Is that possible?

path('v2/token_api1', api.token_api1, name='token_api1'),

path('v2/token_api2', api.token_api2, name='token_api2'),

Previously, rest_framework_swagger was able to group them like this: https://i.imgur.com/EJN5o8c.png

Here is the full code (please note I am using @api_view as it was testing for migration work)

https://gist.github.com/axilaris/099393c171f940bd7c127a7d9942a056

|

closed

|

2021-02-05T23:08:40Z

|

2021-02-07T23:49:44Z

|

https://github.com/axnsan12/drf-yasg/issues/700

|

[] |

axilaris

| 1

|

Kludex/mangum

|

fastapi

| 106

|

Refactor tests parameters and fixtures

|

The tests are a bit difficult to understand at the moment. I think the parameterization and fixtures could be modified to make the behaviour clearer; docstrings are probably a good idea too.

|

closed

|

2020-05-09T13:49:54Z

|

2021-03-22T15:08:37Z

|

https://github.com/Kludex/mangum/issues/106

|

[

"improvement",

"chore"

] |

jordaneremieff

| 7

|

pyeve/eve

|

flask

| 669

|

Keep getting exception "DOMAIN dictionary missing or wrong."

|

I have no idea what is causing this. I'm running Ubuntu 14.04, and my virtual host points to a WSGI file in the same folder as the app file. I also have a settings.py file in that same folder. This runs just fine locally on Windows, but on an Ubuntu virtual host with virtualenv it throws this error.

|

closed

|

2015-07-14T01:51:50Z

|

2015-07-14T06:40:16Z

|

https://github.com/pyeve/eve/issues/669

|

[] |

chawk

| 1

|

RomelTorres/alpha_vantage

|

pandas

| 375

|

[3.0.0][regression] can't copy 'alpha_vantage/async_support/sectorperformance.py'

|

Build fails:

```

copying alpha_vantage/async_support/foreignexchange.py -> build/lib/alpha_vantage/async_support

error: can't copy 'alpha_vantage/async_support/sectorperformance.py': doesn't exist or not a regular file

*** Error code 1

```

This file is a broken symbolic link:

```

$ file ./work-py311/alpha_vantage-3.0.0/alpha_vantage/async_support/sectorperformance.py

./work-py311/alpha_vantage-3.0.0/alpha_vantage/async_support/sectorperformance.py: broken symbolic link to ../sectorperformance.py

```

FreeBSD 14.1

|

closed

|

2024-07-18T13:02:16Z

|

2024-07-30T04:56:18Z

|

https://github.com/RomelTorres/alpha_vantage/issues/375

|

[] |

yurivict

| 1

|

lmcgartland/graphene-file-upload

|

graphql

| 21

|

from graphene_file_upload.flask import FileUploadGraphQLView fails with this error:

|

```

Traceback (most recent call last):

File "server.py", line 4, in <module>

import graphene_file_upload.flask

File "~/.local/share/virtualenvs/backend-JODmqDQ7/lib/python2.7/site-packages/graphene_file_upload/flask.py", line 1, in <module>

from flask import request

ImportError: cannot import name request

```

Potentially I am just using this incorrectly?

|

closed

|

2018-11-07T23:12:01Z

|

2018-11-09T21:46:27Z

|

https://github.com/lmcgartland/graphene-file-upload/issues/21

|

[] |

mfix22

| 9

|

dynaconf/dynaconf

|

fastapi

| 371

|

Link from readthedocs to the new websites

|

Colleagues of mine (not really knowing about dynaconf) were kind of confused when I told them about dynaconf 3.0 and they could just find the docs for 2.2.3 on https://dynaconf.readthedocs.io/

Would it be feasible to add a prominent link pointing to https://www.dynaconf.com/?

|

closed

|

2020-07-14T06:00:23Z

|

2020-07-14T21:31:54Z

|

https://github.com/dynaconf/dynaconf/issues/371

|

[

"question"

] |

aberres

| 0

|

PedroBern/django-graphql-auth

|

graphql

| 57

|

Feature / Idea: Use UserModel mixins to add UserStatus directly to a custom User model

|

Thank you for producing a great library with outstanding documentation.

The intention of this "issue" is to propose / discuss a different "design approach" of using mixins on a custom user model rather than the `UserStatus` `OneToOneField`.

This would work by putting all the functionality provided by `UserStatus` into mixins of the form:

```

from django.db import models

class VerifiedUserMixin(models.Model):
    verified = models.BooleanField(default=False)

    class Meta:
        abstract = True

    ...

class SecondaryEmailUserMixin(models.Model):
    secondary_email = models.EmailField(blank=True, null=True)

    class Meta:
        abstract = True

    ...

```

The mixin is then added to the custom user model in the project:

```

from django.contrib.auth.models import AbstractUser
from django.db import models
from graphql_auth.mixins import VerifiedUserMixin

class CustomUser(VerifiedUserMixin, AbstractUser):
    email = models.EmailField(blank=False, max_length=254, verbose_name="email address")
    USERNAME_FIELD = "username"  # e.g: "username", "email"
    EMAIL_FIELD = "email"  # e.g: "email", "primary_email"

```

The main advantages are:

- There is no need for an additional db table

- All functionality is directly available from the `CustomUser` model (rather than via `CustomUser.status`). This reduces any opportunity of additional db queries when status is not `select_related`

- It becomes easy to separate the different functionality and only include what is needed (in the example above I omitted the `SecondaryEmailUserMixin` from my `CustomUser`)

- No need for signals to create the `UserStatus` instance

There is an added setup step of adding the mixins to the custom user model, but I am assuming the library is intended to be used with a custom user model, so this is not a significant barrier.

I realise this is quite a significant change, but in my experience adding functionality directly onto the user model is simpler to work with than related objects.

In principle the library could probably support both the 1-to-1 and mixin approach, but that would fairly significantly increase the maintenance overhead.

|

open

|

2020-07-02T07:58:46Z

|

2020-07-02T17:30:37Z

|

https://github.com/PedroBern/django-graphql-auth/issues/57

|

[

"enhancement"

] |

maxpeterson

| 2

|

piskvorky/gensim

|

machine-learning

| 3,183

|

Doc2Vec loss always showing 0

|

```

from gensim.models.callbacks import CallbackAny2Vec

class MonitorCallback(CallbackAny2Vec):
    def __init__(self, test_cui, test_sec):
        self.test_cui = test_cui
        self.test_sec = test_sec

    def on_epoch_end(self, model):
        print('Model loss:', model.get_latest_training_loss())
        for word in self.test_cui:  # show wv logic changes
            print(word, model.wv.most_similar(word))
        for word in self.test_sec:  # show dv logic changes
            print(word, model.dv.most_similar(word))

```

```

model = Doc2Vec(vector_size=300, min_count=1, epochs=1, window=5, workers=32)
print('Building vocab...')
model.build_vocab(train_corpus)
print(model.corpus_count, model.epochs)
model.train(
    train_corpus, total_examples=model.corpus_count, compute_loss=True, epochs=model.epochs, callbacks=[monitor])
print('Done training...')
model.save('sec2vec.model')

```

Each time the callback prints the loss, it prints 0. The second issue is that after the first epoch, the model seems pretty good according to calls to `most_similar`, yet after the second epoch the results appear random. I have a fairly large dataset, so I don't think dramatic overfitting is happening. Is there a bug after the first epoch, or is the learning rate getting messed up? It's tough to know what's going on because there's no within-epoch logging and the training loss always evaluates to 0.

|

closed

|

2021-06-28T01:33:32Z

|

2021-06-28T21:33:04Z

|

https://github.com/piskvorky/gensim/issues/3183

|

[] |

griff4692

| 2

|

TracecatHQ/tracecat

|

automation

| 116

|

Update docs for Linux installs

|

Docker Engine Community Edition for Linux doesn't ship with `host.docker.internal`. We found a fix by adding:

```yaml
extra_hosts:
  - "host.docker.internal:host-gateway"
```

to each service in the Docker Compose YAML file.

|

closed

|

2024-05-01T02:59:30Z

|

2024-05-01T03:14:33Z

|

https://github.com/TracecatHQ/tracecat/issues/116

|

[] |

daryllimyt

| 0

|

alyssaq/face_morpher

|

numpy

| 26

|

FaceMorph error

|

Hi, I am new to Python. Could you please tell me how to morph images from the command line on Linux?

When I run the command `python facemorpher/morpher.py --src=index.jpg --dest=index3.jpg`, I get this error:

Traceback (most recent call last):

File "facemorpher/morpher.py", line 139, in <module>

verify_args(args)

File "facemorpher/morpher.py", line 42, in verify_args

if args['/home/newter/face_morpher/images'] is None:

KeyError: '/home/newter/face_morpher/images'

thanks in advance
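
A note on the traceback above: the `KeyError` suggests that the lookup key inside `verify_args` was replaced with a directory path. Assuming `args` is a dictionary keyed by option names such as `--images` (an assumption about how the script parses its command line, not confirmed here), the failure can be reproduced in isolation with a hypothetical dictionary:

```python
# Hypothetical stand-in for the parsed command-line arguments.
# The option names used as keys are assumptions made for illustration.
args = {
    '--src': 'index.jpg',
    '--dest': 'index3.jpg',
    '--images': None,
}


def lookup(parsed_args, key):
    """Return a short description of what looking up `key` does."""
    try:
        value = parsed_args[key]
    except KeyError as exc:
        return 'KeyError: ' + str(exc)
    return 'value: ' + repr(value)


# Looking up an option name that exists works, even when its value is None.
by_option_name = lookup(args, '--images')

# Looking up a filesystem path fails, because no key with that name exists.
by_path = lookup(args, '/home/newter/face_morpher/images')

print(by_option_name)
print(by_path)
```

If that is the cause here, the directory would normally be supplied as the value of an option on the command line rather than written into the source as a dictionary key.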

|

open

|

2017-09-09T08:15:19Z

|

2019-01-02T09:50:16Z

|

https://github.com/alyssaq/face_morpher/issues/26

|

[] |

sadhanareddy007

| 5

|

Farama-Foundation/Gymnasium

|

api

| 831

|

[Question] Manually reset vector environment

|

### Question

As far as I know, the gym vector environment auto-resets a sub-environment when it is done. I wonder if there is a way to reset it manually, because I want to exploit the vector environment feature when implementing the vanilla policy gradient algorithm, where the data for every update should consist of one or several complete episodes.
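
To make the requirement concrete, here is a minimal sketch of collecting only complete episodes from sub-environments that reset automatically. It deliberately does not use the Gymnasium API: `ToyEnv` and `collect_complete_episodes` are hypothetical names, and the fixed episode lengths and constant action are assumptions made for illustration.

```python
class ToyEnv:
    """Hypothetical sub-environment: an episode ends after `length` steps."""

    def __init__(self, length):
        self.length = length
        self.t = 0

    def reset(self):
        self.t = 0
        return self.t

    def step(self, action):
        self.t += 1
        done = self.t >= self.length
        reward = 1.0
        return self.t, reward, done


def collect_complete_episodes(envs, num_episodes):
    """Step all sub-environments in lockstep and return only finished episodes."""
    buffers = [[] for _ in envs]  # in-progress transitions per sub-environment
    observations = [env.reset() for env in envs]
    finished = []
    while len(finished) < num_episodes:
        for i, env in enumerate(envs):
            action = 0  # placeholder policy
            next_obs, reward, done = env.step(action)
            buffers[i].append((observations[i], action, reward))
            if done:
                finished.append(buffers[i])  # episode is complete, keep it
                buffers[i] = []
                next_obs = env.reset()  # mimic the automatic reset
            observations[i] = next_obs
    # Partial episodes still sitting in `buffers` are discarded.
    return finished[:num_episodes]


episodes = collect_complete_episodes([ToyEnv(2), ToyEnv(3)], num_episodes=3)
print([len(e) for e in episodes])
```

With per-environment buffers like these, automatic resets do not mix transitions from different episodes, so each update can still be built from whole episodes only.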

|

open

|

2023-12-09T12:00:29Z

|

2024-02-28T14:45:33Z

|

https://github.com/Farama-Foundation/Gymnasium/issues/831

|

[

"question"

] |

zzhixin

| 9

|

donnemartin/system-design-primer

|

python

| 596

|

Notifying the user that the transactions have completed

|

> Notifies the user the transactions have completed through the Notification Service:

Uses a Queue (not pictured) to asynchronously send out notifications

Do we need to notify the user that the transactions have completed? Isn't a notification only needed when the budget is exceeded?

|

open

|

2021-10-12T13:48:40Z

|

2024-10-15T16:06:20Z

|

https://github.com/donnemartin/system-design-primer/issues/596

|

[

"needs-review"

] |

lor-engel

| 1

|

jumpserver/jumpserver

|

django

| 14,541

|

[Feature] Support managing SSH assets that require port knocking

|

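### Product version

v3.10.15

### Edition

- [ ] Community Edition

- [ ] Enterprise Edition

- [X] Enterprise Trial Edition

### Installation method

- [ ] Online installation (one-command install)

- [ ] Offline package installation

- [ ] All-in-One

- [ ] 1Panel

- [X] Kubernetes

- [ ] Source code installation

### ⭐️ Feature description

The SSH port is closed by default. All services are now deployed on k8s, so the specific k8s node that executes the connection cannot be determined in advance.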

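Port knocking is a method of opening firewall ports from the outside by generating connection attempts on a predefined set of closed ports. Once the correct sequence of connection attempts is received, the firewall rules are modified dynamically to allow the host that sent the attempts to connect through a specific port. This technique is commonly used to hide important services (such as SSH) in order to prevent brute-force or other unauthorized attacks.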
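
The sequence check described above can be sketched as a small per-host state machine. This is only an illustration under stated assumptions: `KnockTracker`, the port numbers, and the host addresses are hypothetical, and a real deployment would change firewall rules rather than update an in-memory set.

```python
class KnockTracker:
    """Tracks per-host progress through a secret sequence of port knocks."""

    def __init__(self, sequence):
        self.sequence = list(sequence)
        self.progress = {}    # host -> number of correct knocks so far
        self.allowed = set()  # hosts that completed the sequence

    def knock(self, host, port):
        """Record a connection attempt; return True if the host is now allowed."""
        if host in self.allowed:
            return True
        index = self.progress.get(host, 0)
        if port == self.sequence[index]:
            index += 1
        else:
            # Wrong port: start over, counting this knock if it is the first port.
            index = 1 if port == self.sequence[0] else 0
        if index == len(self.sequence):
            self.allowed.add(host)  # stands in for adding a firewall rule
            self.progress.pop(host, None)
            return True
        self.progress[host] = index
        return False


tracker = KnockTracker([7000, 8000, 9000])
results = [tracker.knock("10.0.0.5", p) for p in (7000, 8000, 9000)]
wrong_order = [tracker.knock("10.0.0.6", p) for p in (8000, 7000, 9000)]
print(results, wrong_order)
```

Only the host that knocks in the correct order is added to the allowed set. A host that knocks on the same ports in the wrong order stays blocked.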

### Proposed solution

None yet

### Additional information

_No response_

|

closed

|

2024-11-27T05:02:41Z

|

2024-12-31T07:27:00Z

|

https://github.com/jumpserver/jumpserver/issues/14541

|

[

"⭐️ Feature Request"

] |

LiaoSirui

| 3

|

allenai/allennlp

|

data-science

| 4,739

|

Potential bug: The maxpool in cnn_encoder can be triggered by pad tokens.

|

## Description