| repo_name (string, 9-75 chars) | topic (string, 30 classes) | issue_number (int64, 1-203k) | title (string, 1-976 chars) | body (string, 0-254k chars) | state (string, 2 classes) | created_at (string, 20 chars) | updated_at (string, 20 chars) | url (string, 38-105 chars) | labels (list, 0-9 items) | user_login (string, 1-39 chars) | comments_count (int64, 0-452) |
|---|---|---|---|---|---|---|---|---|---|---|---|

httpie/cli

|

api

| 813

|

Document JSON-escaping in "=" syntax

|

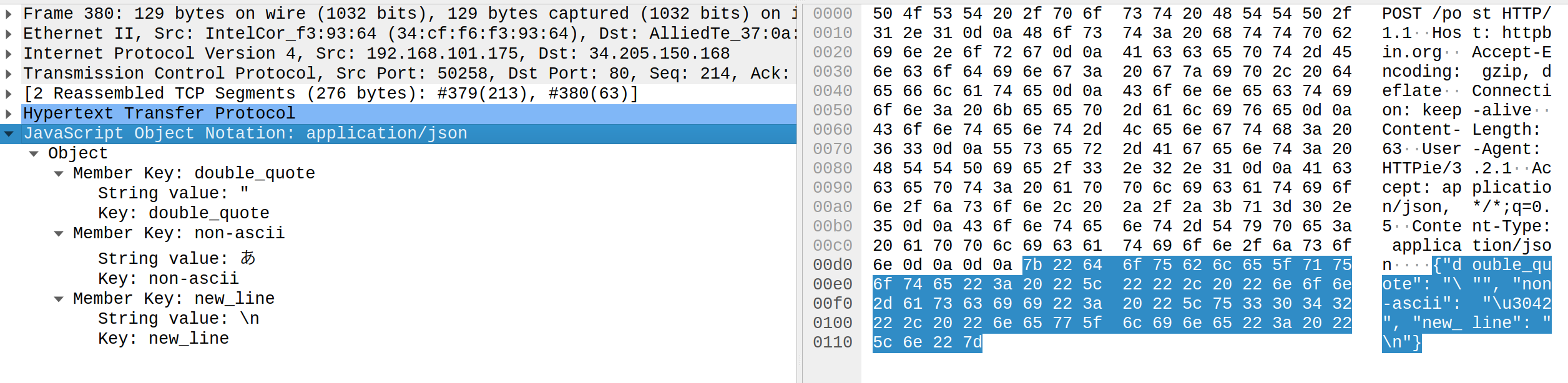

With the `=` syntax, the following characters in a value are escaped:

- characters that cannot appear unescaped in a JSON string, as defined in [RFC 8259](https://www.rfc-editor.org/rfc/rfc8259), section 7 (Strings)

- non-ASCII characters (such as "あ")

With the `:=` syntax, only non-ASCII characters are escaped:

```sh
http httpbin.org/post double_quote='"' non-ascii=あ new_line='
'
# sends: {"double_quote": "\"", "non-ascii": "\u3042", "new_line": "\n"}
```

(The output of `http -v` is somewhat confusing, so I pasted a Wireshark capture. See https://github.com/httpie/httpie/issues/1474)

This behavior should be documented, shouldn't it?
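The escaping shown above matches what Python's standard `json.dumps` produces with its default `ensure_ascii=True`. The sketch below reproduces the request body under the assumption that the `=` fields are gathered into a plain dict and serialized with those defaults. It is an illustration of the behavior, not HTTPie's actual code path.

```python
import json

# Values as they would arrive from the shell for the `=` fields above
# (assumption: they are collected into a plain dict before serialization).
fields = {
    "double_quote": '"',
    "non-ascii": "あ",
    "new_line": "\n",
}

# Default ensure_ascii=True escapes every non-ASCII character as \uXXXX.
escaped_body = json.dumps(fields)

# ensure_ascii=False keeps non-ASCII characters as-is; quotes and control
# characters are still escaped because JSON itself requires it.
unescaped_body = json.dumps(fields, ensure_ascii=False)

print(escaped_body)
print(unescaped_body)
```

Both bodies decode to the same values on the server side; only the wire representation differs.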

|

closed

|

2019-11-02T11:26:48Z

|

2023-01-21T08:15:36Z

|

https://github.com/httpie/cli/issues/813

|

[

"help wanted"

] |

wataash

| 3

|

graphql-python/gql

|

graphql

| 344

|

Managing CSRF cookies with graphene-django

|

Hello,

I'm trying to do a GraphQL mutation between 2 Django projects (different hosts). One project has the `gql` client and the other has the `graphene` and `graphene-django` libraries. Everything works fine when `django.middleware.csrf.CsrfViewMiddleware` is deactivated on the second project, but when it is enabled the server throws an error `Forbidden (CSRF cookie not set.): /graphql`.

On the client side, how can I fetch a CSRF token from the server and include it in the HTTP headers?

This is my code:

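```
from gql import Client, gql
from gql.transport.aiohttp import AIOHTTPTransport

# Transport pointing at the graphene-django endpoint of the second project
transport = AIOHTTPTransport(url="http://x.x.x.x:8000/graphql")
# fetch_schema_from_transport=True makes the client run an introspection query on connect
client = Client(transport=transport, fetch_schema_from_transport=True)

query = gql(
    """
    mutation ...
    """
)

result = client.execute(query)
```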

The complete traceback is below. It also contains a `"Not a JSON answer"` error, presumably because `gql` cannot parse the response from `graphene`.

```

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: Traceback (most recent call last):

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/transport/aiohttp.py", line 316, in execute

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: result = await resp.json(content_type=None)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/aiohttp/client_reqrep.py", line 1119, in json

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return loads(stripped.decode(encoding))

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/usr/lib/python3.7/json/__init__.py", line 348, in loads

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return _default_decoder.decode(s)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/usr/lib/python3.7/json/decoder.py", line 337, in decode

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: obj, end = self.raw_decode(s, idx=_w(s, 0).end())

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/usr/lib/python3.7/json/decoder.py", line 355, in raw_decode

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: raise JSONDecodeError("Expecting value", s, err.value) from None

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: json.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: During handling of the above exception, another exception occurred:

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: Traceback (most recent call last):

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/transport/aiohttp.py", line 304, in raise_response_error

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: resp.raise_for_status()

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/aiohttp/client_reqrep.py", line 1009, in raise_for_status

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: headers=self.headers,

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: aiohttp.client_exceptions.ClientResponseError: 403, message='Forbidden', url=URL('http://y.y.y.y:8000/graphql')

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: The above exception was the direct cause of the following exception:

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: Traceback (most recent call last):

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/asgiref/sync.py", line 482, in thread_handler

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: raise exc_info[1]

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/core/handlers/base.py", line 233, in _get_response_async

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: response = await wrapped_callback(request, *callback_args, **callback_kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/asgiref/sync.py", line 444, in __call__

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: ret = await asyncio.wait_for(future, timeout=None)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/usr/lib/python3.7/asyncio/tasks.py", line 414, in wait_for

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return await fut

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/asgiref/current_thread_executor.py", line 22, in run

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: result = self.fn(*self.args, **self.kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/asgiref/sync.py", line 486, in thread_handler

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return func(*args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/contrib/admin/options.py", line 616, in wrapper

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return self.admin_site.admin_view(view)(*args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/utils/decorators.py", line 130, in _wrapped_view

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: response = view_func(request, *args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/views/decorators/cache.py", line 44, in _wrapped_view_func

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: response = view_func(request, *args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/contrib/admin/sites.py", line 232, in inner

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return view(request, *args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/utils/decorators.py", line 43, in _wrapper

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return bound_method(*args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/utils/decorators.py", line 130, in _wrapped_view

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: response = view_func(request, *args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/contrib/admin/options.py", line 1723, in changelist_view

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: response = self.response_action(request, queryset=cl.get_queryset(request))

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/contrib/admin/options.py", line 1408, in response_action

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: response = func(self, request, queryset)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/var/www/myapp/strategy/admin.py", line 42, in push

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: target.push()

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/aiohttp_csrf/__init__.py", line 102, in wrapped_handler

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return handler(*args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/var/www/myapp/strategy/models.py", line 125, in push

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: result = client.execute(query, variable_values=params)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/client.py", line 396, in execute

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: **kwargs,

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/usr/lib/python3.7/asyncio/base_events.py", line 579, in run_until_complete

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return future.result()

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/client.py", line 284, in execute_async

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: async with self as session:

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/client.py", line 658, in __aenter__

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return await self.connect_async()

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/client.py", line 638, in connect_async

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: await self.session.fetch_schema()

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/client.py", line 1253, in fetch_schema

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: parse(get_introspection_query())

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/transport/aiohttp.py", line 323, in execute

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: await raise_response_error(resp, "Not a JSON answer")

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/transport/aiohttp.py", line 306, in raise_response_error

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: raise TransportServerError(str(e), e.status) from e

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: gql.transport.exceptions.TransportServerError: 403, message='Forbidden', url=URL('http://y.y.y.y:8000/graphql')

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: [error ] request_failed [django_structlog.middlewares.request] code=500 request=<ASGIRequest: POST '/admin/strategy/target/'> user_id=1

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: Internal Server Error: /admin/strategy/target/

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: Traceback (most recent call last):

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/transport/aiohttp.py", line 316, in execute

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: result = await resp.json(content_type=None)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/aiohttp/client_reqrep.py", line 1119, in json

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return loads(stripped.decode(encoding))

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/usr/lib/python3.7/json/__init__.py", line 348, in loads

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return _default_decoder.decode(s)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/usr/lib/python3.7/json/decoder.py", line 337, in decode

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: obj, end = self.raw_decode(s, idx=_w(s, 0).end())

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/usr/lib/python3.7/json/decoder.py", line 355, in raw_decode

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: raise JSONDecodeError("Expecting value", s, err.value) from None

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: json.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: During handling of the above exception, another exception occurred:

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: Traceback (most recent call last):

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/transport/aiohttp.py", line 304, in raise_response_error

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: resp.raise_for_status()

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/aiohttp/client_reqrep.py", line 1009, in raise_for_status

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: headers=self.headers,

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: aiohttp.client_exceptions.ClientResponseError: 403, message='Forbidden', url=URL('http://y.y.y.y:8000/graphql')

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: The above exception was the direct cause of the following exception:

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: Traceback (most recent call last):

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/asgiref/sync.py", line 482, in thread_handler

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: raise exc_info[1]

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/core/handlers/exception.py", line 38, in inner

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: response = await get_response(request)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/core/handlers/base.py", line 233, in _get_response_async

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: response = await wrapped_callback(request, *callback_args, **callback_kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/asgiref/sync.py", line 444, in __call__

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: ret = await asyncio.wait_for(future, timeout=None)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/usr/lib/python3.7/asyncio/tasks.py", line 414, in wait_for

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return await fut

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/asgiref/current_thread_executor.py", line 22, in run

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: result = self.fn(*self.args, **self.kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/asgiref/sync.py", line 486, in thread_handler

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return func(*args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/contrib/admin/options.py", line 616, in wrapper

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return self.admin_site.admin_view(view)(*args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/utils/decorators.py", line 130, in _wrapped_view

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: response = view_func(request, *args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/views/decorators/cache.py", line 44, in _wrapped_view_func

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: response = view_func(request, *args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/contrib/admin/sites.py", line 232, in inner

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return view(request, *args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/utils/decorators.py", line 43, in _wrapper

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return bound_method(*args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/utils/decorators.py", line 130, in _wrapped_view

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: response = view_func(request, *args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/contrib/admin/options.py", line 1723, in changelist_view

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: response = self.response_action(request, queryset=cl.get_queryset(request))

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/django/contrib/admin/options.py", line 1408, in response_action

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: response = func(self, request, queryset)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/var/www/myapp/strategy/admin.py", line 42, in push

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: target.push()

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/aiohttp_csrf/__init__.py", line 102, in wrapped_handler

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return handler(*args, **kwargs)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/var/www/myapp/strategy/models.py", line 125, in push

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: result = client.execute(query, variable_values=params)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/client.py", line 396, in execute

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: **kwargs,

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/usr/lib/python3.7/asyncio/base_events.py", line 579, in run_until_complete

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return future.result()

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/client.py", line 284, in execute_async

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: async with self as session:

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/client.py", line 658, in __aenter__

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: return await self.connect_async()

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/client.py", line 638, in connect_async

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: await self.session.fetch_schema()

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/client.py", line 1253, in fetch_schema

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: parse(get_introspection_query())

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/transport/aiohttp.py", line 323, in execute

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: await raise_response_error(resp, "Not a JSON answer")

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: File "/home/username/.local/lib/python3.7/site-packages/gql/transport/aiohttp.py", line 306, in raise_response_error

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: raise TransportServerError(str(e), e.status) from e

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: gql.transport.exceptions.TransportServerError: 403, message='Forbidden', url=URL('http://y.y.y.y:8000/graphql')

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: Internal Server Error: /admin/strategy/target/ exc_info=(<class 'gql.transport.exceptions.TransportServerError'>, TransportServerError("403, message='Forbidden', url=URL('http://y.y.y.y:8000/graphql')"), <traceback object at 0x7f5d70ac8910>)

Jul 16 08:00:59 ubuntu-2cpu-4gb-de-fra1 uvicorn[129750]: INFO: x.x.x.x:21866 - "POST /admin/strategy/target/ HTTP/1.1" 500 Internal Server Error

```
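For the CSRF question above: by default, Django's `CsrfViewMiddleware` expects a `csrftoken` cookie together with a matching `X-CSRFToken` header on unsafe requests such as POST. The sketch below turns a `Set-Cookie` value into the headers a client would send back. It assumes the server exposes some GET view that actually sets the cookie, and `csrf_headers` is a hypothetical helper name, not part of `gql` or Django.

```python
from http.cookies import SimpleCookie


def csrf_headers(set_cookie_header: str, cookie_name: str = "csrftoken") -> dict:
    """Build request headers from a Set-Cookie value (hypothetical helper)."""
    jar = SimpleCookie()
    jar.load(set_cookie_header)
    if cookie_name not in jar:
        raise KeyError(f"no {cookie_name!r} cookie in response")
    token = jar[cookie_name].value
    # Django compares the header value against the cookie value,
    # so both must be sent on the POST request.
    return {"X-CSRFToken": token, "Cookie": f"{cookie_name}={token}"}


# Example Set-Cookie value; the token here is made up for illustration.
headers = csrf_headers("csrftoken=abc123; Path=/; Max-Age=31449600")
print(headers)
```

The resulting dict could then be passed as `headers=` when constructing `AIOHTTPTransport`. Since the traceback shows the 403 being raised during `fetch_schema`, the headers would need to be in place before the client connects, not only for the mutation.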

|

closed

|

2022-07-16T07:51:39Z

|

2022-07-21T12:16:39Z

|

https://github.com/graphql-python/gql/issues/344

|

[

"type: question or discussion"

] |

Kinzowa

| 1

|

pytorch/vision

|

computer-vision

| 8,048

|

ImageFolder balancer

|

### 🚀 The feature

The new feature impacts the file [torchvision/datasets/folder.py](https://github.com/pytorch/vision/blob/main/torchvision/datasets/folder.py).

The idea is to add to the `make_dataset` function a new optional parameter that allows balancing the dataset folder. The new parameter, `sampling_strategy`, can assume the following values: `None` (default), `"oversample"` and `"undersample"`.

| Value | Description |
|---|---|
| `None` | No operation is performed on the dataset. This is the default. |
| `"oversample"` | The dataset is balanced by adding copies of image paths from minority classes until each matches the size of the majority class. |
| `"undersample"` | The dataset is balanced by removing image paths from majority classes until each matches the size of the minority class. |
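A minimal sketch of the proposed behavior, operating on the list of `(path, class_index)` tuples that a dataset builder would return. The function name `balance_samples` and the `seed` parameter are assumptions made for illustration; this is not torchvision code.

```python
import random
from collections import Counter, defaultdict


def balance_samples(samples, sampling_strategy=None, seed=0):
    """Balance (path, class_index) pairs by over- or undersampling (hypothetical)."""
    if sampling_strategy is None or not samples:
        return list(samples)
    if sampling_strategy not in ("oversample", "undersample"):
        raise ValueError(f"unknown sampling_strategy: {sampling_strategy!r}")

    # Group the samples by class index.
    by_class = defaultdict(list)
    for path, class_index in samples:
        by_class[class_index].append((path, class_index))

    sizes = [len(items) for items in by_class.values()]
    target = max(sizes) if sampling_strategy == "oversample" else min(sizes)

    rng = random.Random(seed)
    balanced = []
    for class_index in sorted(by_class):
        items = by_class[class_index]
        if len(items) >= target:
            # Undersample: keep a random subset of exactly `target` entries.
            balanced.extend(rng.sample(items, target))
        else:
            # Oversample: keep everything and repeat random entries.
            balanced.extend(items + rng.choices(items, k=target - len(items)))
    return balanced


samples = [("a0.png", 0), ("a1.png", 0), ("a2.png", 0), ("b0.png", 1)]
oversampled = balance_samples(samples, "oversample")
undersampled = balance_samples(samples, "undersample")
print(Counter(idx for _, idx in oversampled))
print(Counter(idx for _, idx in undersampled))
```

Only paths are duplicated or dropped, so nothing changes on the filesystem, which matches the runtime balancing described in the proposal.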

### Motivation, pitch

While working with an unbalanced dataset, I find it extremely useful to balance it at runtime instead of copying or removing images in the filesystem.

Once the balanced dataset is defined, you can also apply data augmentation when defining the data loader, so that oversampled entries are not plain image copies, which helps avoid overfitting.

### Alternatives

The implementation can be done in two ways:

1. add the parameter `sampling_strategy` to the `make_dataset` function;

2. define a new class, namely `BalancedImageFolder`, that overrides the `make_dataset` method in order to add the `sampling_strategy` parameter.

We believe the first option is the less invasive one. Given how the code is currently structured, overriding the `make_dataset` method would probably require restructuring the file, because defining a new `make_balanced_dataset` function would mean copying a lot of code from the original `make_dataset` function, which is obviously bad practice.

### Additional context

_No response_

|

closed

|

2023-10-16T09:41:20Z

|

2023-10-17T14:15:24Z

|

https://github.com/pytorch/vision/issues/8048

|

[] |

lorenzomassimiani

| 2

|

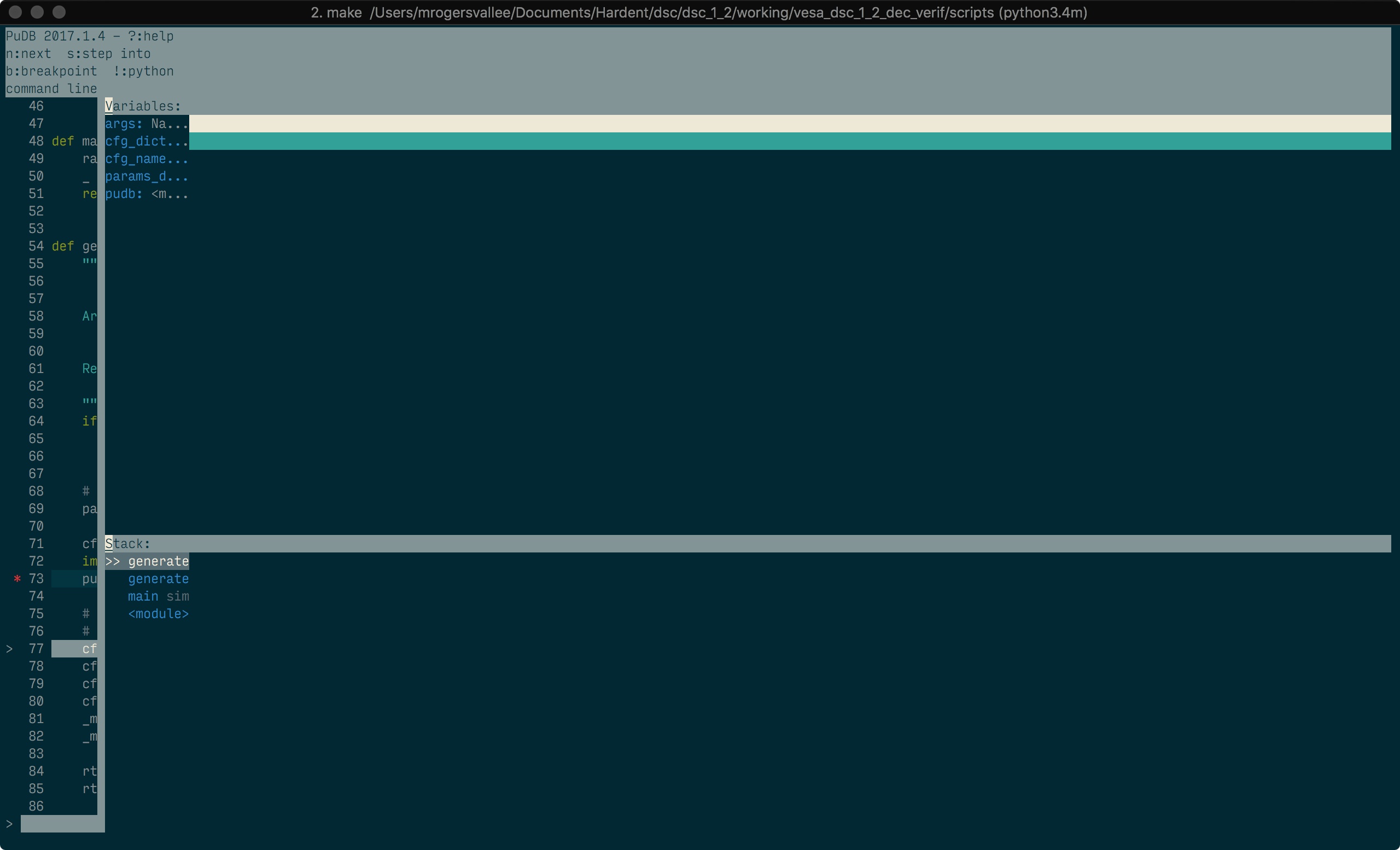

inducer/pudb

|

pytest

| 284

|

Pudb not being drawn at full width of the shell

|

Hi,

I am running pudb 2017.1.4 on macOS High Sierra. When I start pudb from my code (`pudb.set_trace()`), it draws its interface in only the left quarter of my terminal.

I was able to reproduce the behaviour in fish both with my full config and with a bare fish shell, in both iTerm and Terminal.

If I run `pudb3 <my script>` instead, the UI is fine.

pudb config:

```

[pudb]

breakpoints_weight = 1

current_stack_frame = top

custom_shell =

custom_stringifier =

custom_theme =

display = auto

line_numbers = True

prompt_on_quit = True

seen_welcome = e033

shell = internal

sidebar_width = 1

stack_weight = 1

stringifier = type

theme = solarized

variables_weight = 1

wrap_variables = False

```

pip3.4 packages:

```

autopep8 (1.3.3)

flake8 (3.5.0)

mccabe (0.6.1)

pip (9.0.1)

ptvsd (3.2.1)

pudb (2017.1.4)

pycodestyle (2.3.1)

pydocstyle (2.1.1)

pyflakes (1.6.0)

Pygments (2.2.0)

setuptools (38.2.1)

six (1.11.0)

snowballstemmer (1.2.1)

urwid (1.3.1)

wheel (0.30.0)

yapf (0.20.0)

```

pudb.set_trace()

|

closed

|

2017-11-29T02:53:32Z

|

2024-02-02T22:02:31Z

|

https://github.com/inducer/pudb/issues/284

|

[] |

mrvkino

| 5

|

nolar/kopf

|

asyncio

| 707

|

kopf.on.event : how to identify "MODIFIED" event of only the spec change

|

A **patch.status** call also raises a "MODIFIED" event, so I could not find a way to identify only the "MODIFIED" events that result from a **spec change**.

I am doing a **patch.status** update in both the **kopf.on.update** and **kopf.on.event** handlers for event type "MODIFIED". Will that cause any problems?

I think a **patch.status** update conflict is happening somewhere. I cannot see the patch.status update made in the **kopf.on.event** handler.

use case:

-------------

I want to show the state as "Updating" (in `kubectl get mycr`) while the CR is being updated. I was checking `event['type'] == "MODIFIED"` in the kopf.on.event handler, but that does not work because of the issue mentioned above.

Should I use a Kubernetes Python client library to update the status field?

Strange Behavior

---------

I have two **patch.status** updates in the **kopf.on.update** handler: one to change the state to "**Updating**" and another to change it to "**Updated**".

But I see only one "**Patching with**" debug **log message**, and only one patch takes effect. Why?
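One way to tell spec changes apart from status patches, sketched under the assumption that the CRD enables the status subresource: Kubernetes then increments `metadata.generation` on spec changes but not on status updates. The helper below works on the raw watch-event dict (with `type` and `object` keys); `is_spec_change` and the `seen_generations` cache are hypothetical names, not part of kopf.

```python
def is_spec_change(event: dict, seen_generations: dict) -> bool:
    """Return True only for MODIFIED events whose metadata.generation grew."""
    metadata = event["object"]["metadata"]
    uid = metadata["uid"]
    generation = metadata.get("generation", 0)
    previous = seen_generations.get(uid)
    # Remember the latest generation for every event type, including ADDED.
    seen_generations[uid] = generation
    return (
        event.get("type") == "MODIFIED"
        and previous is not None
        and generation > previous
    )


seen = {}
added = {"type": "ADDED", "object": {"metadata": {"uid": "u1", "generation": 1}}}
status_patch = {"type": "MODIFIED", "object": {"metadata": {"uid": "u1", "generation": 1}}}
spec_edit = {"type": "MODIFIED", "object": {"metadata": {"uid": "u1", "generation": 2}}}

results = [is_spec_change(e, seen) for e in (added, status_patch, spec_edit)]
print(results)  # [False, False, True]
```

Without the status subresource, status edits may bump the generation as well, so this check would not be reliable in that setup.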

|

open

|

2021-03-07T14:49:39Z

|

2021-04-06T14:17:31Z

|

https://github.com/nolar/kopf/issues/707

|

[

"question"

] |

sajuptpm

| 4

|

miguelgrinberg/flasky

|

flask

| 273

|

Is the `seed()` call in app/models.py necessary?

|

In chapter 11c, the User and Post models have a `generate_fake(count=100)` function. To generate random data, it imports `random.seed` and `forgery_py`. The function code is:

    from sqlalchemy.exc import IntegrityError
    from random import seed
    import forgery_py

    seed()  # why?
    for i in range(count):
        u = User(email=forgery_py.internet.email_address(),
                 username=forgery_py.internet.user_name(True),
                 password=forgery_py.lorem_ipsum.word(),
                 confirmed=True,
                 name=forgery_py.name.full_name(),
                 location=forgery_py.address.city(),
                 about_me=forgery_py.lorem_ipsum.sentence(),
                 member_since=forgery_py.date.date(True))
        db.session.add(u)
        try:
            db.session.commit()
        except IntegrityError:
            db.session.rollback()

`random.seed()`: initializes the internal state of the random number generator.

Why should `seed()` be called first? What is its effect? Is it necessary?

I tried deleting `seed()`, and random data is still generated normally.

Thanks

@miguelgrinberg
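As background for the question: Python's `random` module seeds its shared generator automatically on import, and `seed()` without arguments reseeds it from OS randomness or the current time. That is consistent with the observation that data is still random when the call is removed. A fixed seed value, by contrast, makes the sequence reproducible. A small sketch (the `sample_numbers` helper is made up for illustration):

```python
import random


def sample_numbers(seed_value=None, count=3):
    """Return `count` pseudo-random integers, optionally reseeding first."""
    if seed_value is not None:
        random.seed(seed_value)
    return [random.randint(0, 10**6) for _ in range(count)]


first = sample_numbers(seed_value=42)
second = sample_numbers(seed_value=42)
unseeded = sample_numbers()  # works without any explicit seed() call

print(first == second)  # True: the same seed gives the same sequence
print(len(unseeded))    # 3
```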

|

closed

|

2017-05-30T13:15:56Z

|

2017-05-31T02:52:44Z

|

https://github.com/miguelgrinberg/flasky/issues/273

|

[

"question"

] |

sandylili

| 2

|

Significant-Gravitas/AutoGPT

|

python

| 8,918

|

Unable to setup locally : docker compose up -d fails at autogpt_platform-market-migrations-1

|

### ⚠️ Search for existing issues first ⚠️

- [X] I have searched the existing issues, and there is no existing issue for my problem

### Which Operating System are you using?

MacOS

### Which version of AutoGPT are you using?

Master (branch)

### What LLM Provider do you use?

Azure

### Which area covers your issue best?

Installation and setup

### What commit or version are you using?

2121ffd06b26a438706bf642372cc46d81c94ddc

### Describe your issue.

I am trying to set up AutoGPT locally. I am following the steps in the video tutorial in the main README.md file.

The Docker setup fails when I run this command:

`docker compose up -d`

It fails with the following status:

` ✘ Container autogpt_platform-market-migrations-1 service "market-migrations" didn't complete successfully: exit 1 `

and then gets stuck at

` Container autogpt_platform-migrate-1 Waiting `

What did I miss?

### Upload Activity Log Content

_No response_

### Upload Error Log Content

_No response_

|

open

|

2024-12-09T12:10:32Z

|

2025-02-14T23:22:07Z

|

https://github.com/Significant-Gravitas/AutoGPT/issues/8918

|

[] |

mali-tintash

| 20

|

healthchecks/healthchecks

|

django

| 712

|

Average execution time

|

I would like to suggest an idea that would be helpful for me:

On the detail page of a check, an option to display the average execution time would be very helpful. Maybe even a graph of execution times? A minimum and maximum value could also be helpful.

This would help especially when adding new features to a script, to check that it is not taking too much time.

Thanks for your consideration and your work!

|

open

|

2022-10-05T12:58:35Z

|

2024-07-08T09:10:17Z

|

https://github.com/healthchecks/healthchecks/issues/712

|

[

"feature"

] |

BeyondVertical

| 1

|

pytorch/pytorch

|

numpy

| 149,551

|

Remove PyTorch conda installation instructions from the documentation and tutorials

|

### 🐛 Describe the bug

Please see: https://github.com/pytorch/pytorch/issues/138506

and https://dev-discuss.pytorch.org/t/pytorch-deprecation-of-conda-nightly-builds/2590

PyTorch has deprecated conda builds as of release 2.6. We need to remove conda package installation instructions from the tutorials and documentation.

Examples:

* https://pytorch.org/tutorials/beginner/introyt/tensorboardyt_tutorial.html#before-you-start

* https://pytorch.org/audio/main/build.linux.html

* https://pytorch.org/audio/main/installation.html

* https://pytorch.org/audio/main/build.windows.html#install-pytorch

* https://pytorch.org/tutorials/recipes/recipes/tensorboard_with_pytorch.html

* https://pytorch.org/tutorials/beginner/introyt/captumyt.html

* https://pytorch.org/vision/main/training_references.html

* https://pytorch.org/tutorials/advanced/torch_script_custom_ops.html#environment-setup

* https://pytorch.org/docs/stable/notes/windows.html#package-not-found-in-win-32-channel

### Versions

2.7.0

cc @svekars @sekyondaMeta @AlannaBurke

|

open

|

2025-03-19T20:18:59Z

|

2025-03-20T16:08:48Z

|

https://github.com/pytorch/pytorch/issues/149551

|

[

"module: docs",

"triaged",

"topic: docs"

] |

atalman

| 1

|

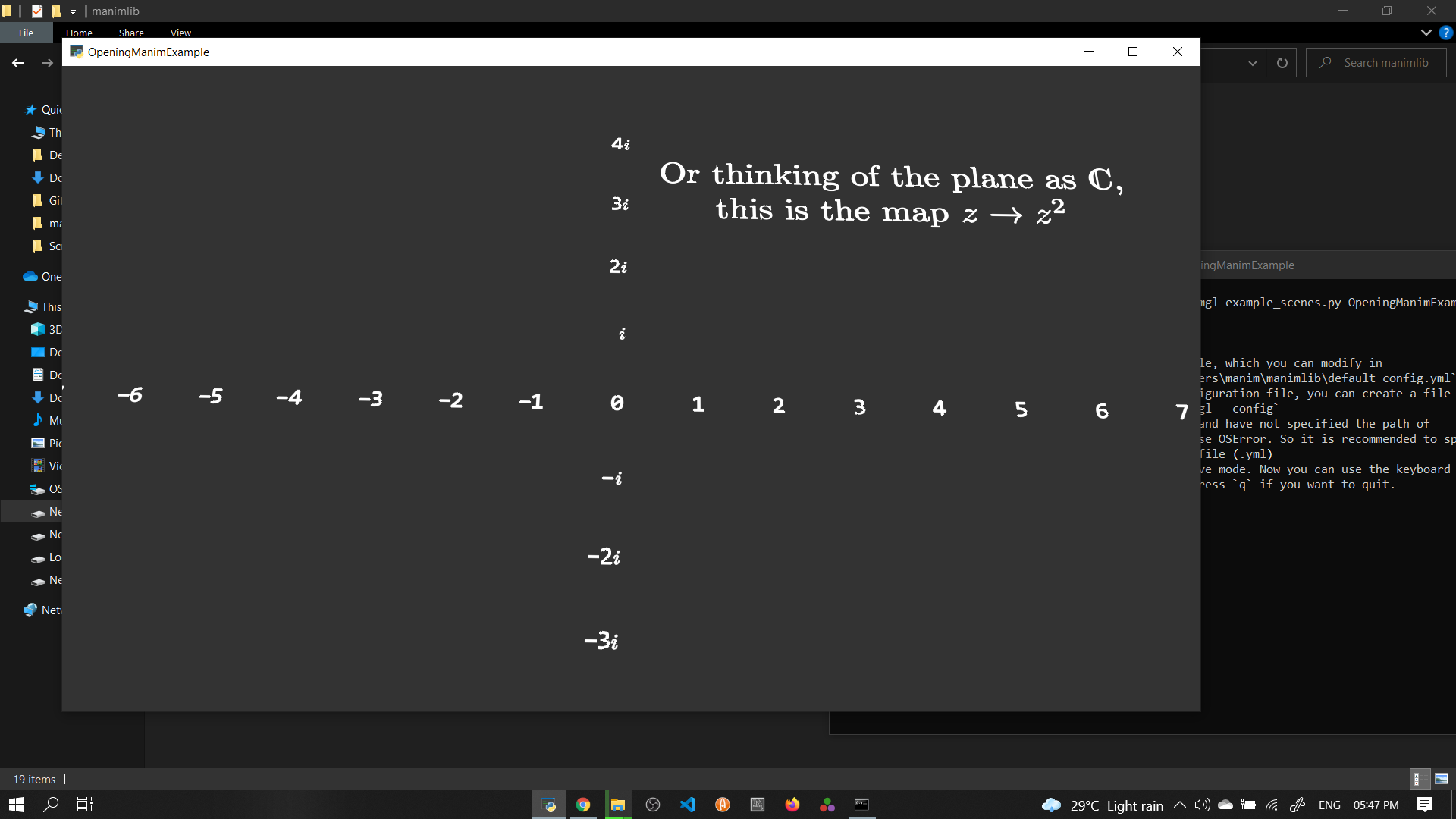

3b1b/manim

|

python

| 1,652

|

NumberPlane not showing its lines

|

### Describe the bug

I have used manimgl before, but after replacing my old version (about 4-5 months old) with the new version, whenever I run the example scene (**OpeningManimExample**), the lines of the **NumberPlane** are not shown.

**Image**

**Version Used**:

Manim - 1.1.0

|

closed

|

2021-10-16T12:51:21Z

|

2021-10-17T03:21:40Z

|

https://github.com/3b1b/manim/issues/1652

|

[

"bug"

] |

aburousan

| 2

|

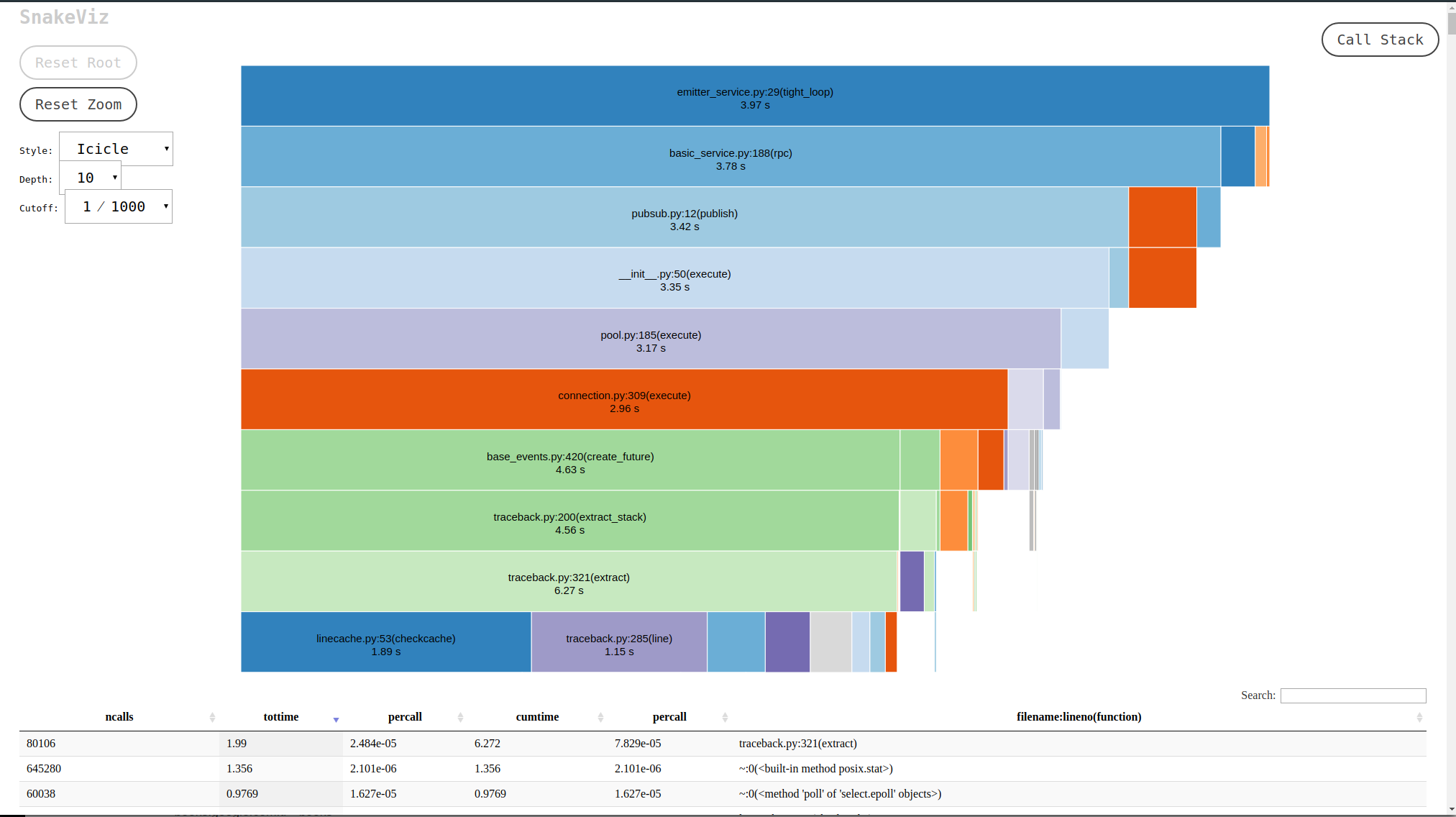

aio-libs-abandoned/aioredis-py

|

asyncio

| 702

|

connection pool publish spending a lot of time in traceback

|

I tried publishing 20k messages and it took about 6 seconds, so I wondered why it was so slow, given that the Redis self-test tells me it can do 200k SET commands with 50 connections in 1.5 seconds, which is pretty good.

I profiled it with snakeviz, and it turns out that when I publish from the pool, the execute function spends a lot of time in traceback.py.

I am not very familiar with Python internals, so I came here to ask: why would the profiler show so much time being spent as if we were catching exceptions and collecting stack traces in a tight loop?
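One possible explanation, offered as a guess rather than a diagnosis: when asyncio runs in debug mode, it records the creation stack of futures and tasks, which shows up in a profile as time spent in `traceback.py`. Debug mode can be switched on by the `PYTHONASYNCIODEBUG` environment variable, by Python's development mode, or by `loop.set_debug(True)`. A quick check of whether the running loop has it enabled (the `debug_enabled` helper is a made-up name):

```python
import asyncio


async def debug_enabled() -> bool:
    """Report whether the currently running event loop is in debug mode."""
    return asyncio.get_running_loop().get_debug()


# asyncio.run() accepts an explicit debug flag; both settings are shown here.
with_debug = asyncio.run(debug_enabled(), debug=True)
without_debug = asyncio.run(debug_enabled(), debug=False)
print(with_debug, without_debug)
```

If the check returns `True` inside the publishing application, rerunning the profile with debug mode off would show whether the `traceback.py` time disappears.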

|

closed

|

2020-02-18T16:54:29Z

|

2020-02-26T10:43:18Z

|

https://github.com/aio-libs-abandoned/aioredis-py/issues/702

|

[] |

nurettin

| 1

|

RobertCraigie/prisma-client-py

|

pydantic

| 1,052

|

Prisma client not generating in production aws

|

### Discussed in https://github.com/RobertCraigie/prisma-client-py/discussions/1051

<div type='discussions-op-text'>

<sup>Originally posted by **ifaronti** December 7, 2024</sup>

My set up is quite simple:

```

generator py {

provider = "prisma-client-py"

recursive_type_depth = "5"

interface = "asyncio"

enable_experimental_decimal = true

}

datasource db {

provider = "postgresql"

url = env("DATABASE_URL")

}

```

However, deploying to both AWS Lambda and Vercel throws the same "Prisma client not generated yet" error, even though everything works fine locally.

My project structure places the prisma folder, which contains the schema.prisma file, in the root folder.

stack trace:

```

" File \"/var/lang/lib/python3.13/importlib/__init__.py\", line 88, in import_module\n return _bootstrap._gcd_import(name[level:], package, level)\n",

" File \"<frozen importlib._bootstrap>\", line 1387, in _gcd_import\n",

" File \"<frozen importlib._bootstrap>\", line 1360, in _find_and_load\n",

" File \"<frozen importlib._bootstrap>\", line 1331, in _find_and_load_unlocked\n",

" File \"<frozen importlib._bootstrap>\", line 935, in _load_unlocked\n",

" File \"<frozen importlib._bootstrap_external>\", line 1022, in exec_module\n",

" File \"<frozen importlib._bootstrap>\", line 488, in _call_with_frames_removed\n",

" File \"/var/task/main.py\", line 2, in <module>\n from app.routers import transactions\n",

" File \"/var/task/app/routers/transactions.py\", line 2, in <module>\n from ..controllers.transactions.get_transactions import get_Transactions\n",

" File \"/var/task/app/controllers/transactions/get_transactions.py\", line 1, in <module>\n from prisma import Prisma\n",

" File \"<frozen importlib._bootstrap>\", line 1412, in _handle_fromlist\n",

" File \"/opt/python/prisma/__init__.py\", line 53, in __getattr__\n raise RuntimeError(\n"

]

```

Note: The transactions controller or route is not the problem. Whichever route I import first in the index file is the one that appears in the stack trace.

Everything is fine locally but production fails. Thanks in advance</div>

|

closed

|

2024-12-07T17:59:55Z

|

2024-12-11T21:40:14Z

|

https://github.com/RobertCraigie/prisma-client-py/issues/1052

|

[] |

ifaronti

| 1

|

cobrateam/splinter

|

automation

| 478

|

Adding cookies to the phantomjs browser raises an error

|

With phantomjs-2.1.1-linux-x86_64.tar.bz2 I get an error,

but phantomjs-1.9.8-linux-x86_64.tar.bz2 works normally.

Code:

`from splinter import Browser

cookie = {'test':'1234'}

current_url = 'http://www.google.com'

browser = Browser('phantomjs')

browser.visit(current_url)

browser.cookies.add(cookie)

source = browser.html

browser.quit()

`

Exception output:

Traceback (most recent call last):

File "dy.py", line 37, in <module>

dy.dynamic_request()

File "dy.py", line 31, in dynamic_request

browser.cookies.add(cookie)

File "/usr/local/lib/python2.7/dist-packages/splinter-0.7.3-py2.7.egg/splinter/driver/webdriver/cookie_manager.py", line 28, in add

self.driver.add_cookie({'name': key, 'value': value})

File "/usr/local/lib/python2.7/dist-packages/selenium-2.53.0-py2.7.egg/selenium/webdriver/remote/webdriver.py", line 666, in add_cookie

self.execute(Command.ADD_COOKIE, {'cookie': cookie_dict})

File "/usr/local/lib/python2.7/dist-packages/selenium-2.53.0-py2.7.egg/selenium/webdriver/remote/webdriver.py", line 233, in execute

self.error_handler.check_response(response)

File "/usr/local/lib/python2.7/dist-packages/selenium-2.53.0-py2.7.egg/selenium/webdriver/remote/errorhandler.py", line 194, in check_response

raise exception_class(message, screen, stacktrace)

selenium.common.exceptions.WebDriverException: Message: {"errorMessage":"Can only set Cookies for the current domain","request":{"headers":{"Accept":"application/json","Accept-Encoding":"identity","Connection":"close","Content-Length":"98","Content-Type":"application/json;charset=UTF-8","Host":"127.0.0.1:55891","User-Agent":"Python-urllib/2.7"},"httpVersion":"1.1","method":"POST","post":"{\"sessionId\": \"7b996980-de36-11e5-8735-cd0a007cdec8\", \"cookie\": {\"name\": \"test\", \"value\": \"1234\"}}","url":"/cookie","urlParsed":{"anchor":"","query":"","file":"cookie","directory":"/","path":"/cookie","relative":"/cookie","port":"","host":"","password":"","user":"","userInfo":"","authority":"","protocol":"","source":"/cookie","queryKey":{},"chunks":["cookie"]},"urlOriginal":"/session/7b996980-de36-11e5-8735-cd0a007cdec8/cookie"}}

Screenshot: available via screen

|

closed

|

2016-03-31T09:23:13Z

|

2018-08-27T01:04:05Z

|

https://github.com/cobrateam/splinter/issues/478

|

[

"bug",

"help wanted"

] |

galaxy6

| 1

|

babysor/MockingBird

|

deep-learning

| 714

|

What to do about capturable=False? A summary of some fixes.

|

① First uninstall PyTorch: in cmd, run pip uninstall torch

② Change to the matching torchvision and torchaudio versions (see the two images below)

③ Install commands: pip install torchvision==0.10.0 and pip install torchaudio==0.9.0

④ Go to https://download.pytorch.org/whl/torch_stable.html, then find and download the file cu111/torchvision-0.9.0%2Bcu111-cp39-cp39-win_amd64.whl.

⑤ Open cmd in the download directory and run the install command: pip install torch-1.9.0+cu111-cp39-cp39-win_amd64.whl

|

open

|

2022-08-18T06:45:47Z

|

2022-09-10T16:10:30Z

|

https://github.com/babysor/MockingBird/issues/714

|

[] |

pzhyyd

| 1

|

tensorlayer/TensorLayer

|

tensorflow

| 1,039

|

BatchNorm1d IndexError: list index out of range

|

My code is as follows:

```python

ni2 = Input(shape=[None, flags.z2_dim])

ni2 = Dense(100, W_init=w_init, b_init=None)(ni2)

ni2 = BatchNorm1d(decay=0.9, act=act, gamma_init=gamma_init)(ni2)

```

I got the following error.

```

File "/home/asus/Workspace/dcgan-disentangle/model.py", line 18, in get_generator

ni2 = BatchNorm1d(decay=0.9, act=act, gamma_init=gamma_init)(ni2)

File "/home/asus/Workspace/dcgan-disentangle/tensorlayer/layers/core.py", line 238, in __call__

self.build(inputs_shape)

File "/home/asus/Workspace/dcgan-disentangle/tensorlayer/layers/normalization.py", line 250, in build

params_shape, self.axes = self._get_param_shape(inputs_shape)

File "/home/asus/Workspace/dcgan-disentangle/tensorlayer/layers/normalization.py", line 311, in _get_param_shape

channels = inputs_shape[axis]

IndexError: list index out of range

```

According to the docs, does `BatchNorm1d` only work for inputs of shape `[batch, xxx, xxx]`? What about `[batch, xxx]`?

```

>>> # in static model, no need to specify num_features

>>> net = tl.layers.Input([None, 50, 32], name='input')

>>> net = tl.layers.BatchNorm1d()(net)

>>> # in dynamic model, build by specifying num_features

>>> conv = tl.layers.Conv1d(32, 5, 1, in_channels=3)

>>> bn = tl.layers.BatchNorm1d(num_features=32)

```

|

closed

|

2019-08-28T07:49:14Z

|

2019-08-31T01:41:21Z

|

https://github.com/tensorlayer/TensorLayer/issues/1039

|

[] |

zsdonghao

| 2

|

frol/flask-restplus-server-example

|

rest-api

| 110

|

Please document in enabled_modules that api must be last

|

This bit me and was hard to debug when I was playing around with enabled modules in my project.

|

closed

|

2018-05-09T14:56:14Z

|

2018-06-30T19:29:45Z

|

https://github.com/frol/flask-restplus-server-example/issues/110

|

[

"bug"

] |

bitfinity

| 6

|

facebookresearch/fairseq

|

pytorch

| 5,293

|

Pretrained models not gzip files?

|

I am trying to load a pretrained model from fairseq following the simple example [here](https://pytorch.org/hub/pytorch_fairseq_roberta/). However, whenever I run the following code I get the following `ReadError`.

#### Code

```py

roberta = torch.hub.load('pytorch/fairseq', 'roberta.base')

ReadError: not a gzip file

```

#### What's your environment?

- PyTorch Version (2.0.1+cu117)

- OS: Linux

- How you installed fairseq: `pip install fairseq`

- Python version: 3.10.9

- CUDA version: 12.2

|

open

|

2023-08-22T17:18:09Z

|

2023-08-30T16:27:11Z

|

https://github.com/facebookresearch/fairseq/issues/5293

|

[

"question",

"needs triage"

] |

cdeterman

| 1

|

xlwings/xlwings

|

automation

| 2,529

|

FileNotFoundError(2, 'No such file or directory') when trying to install

|

#### OS (e.g. Windows 10 or macOS Sierra)

Windows 11 Home

#### Versions of xlwings, Excel and Python (e.g. 0.11.8, Office 365, Python 3.7)

Excel, Office 365

Python 3.12.4

xlwings 0.33.1

#### Describe your issue (incl. Traceback!)

I just got an annoying message from Windows Defender about xlwings being a trojan horse and therefore tried to remove it (xlwings). After some research I'm confident that it was a false positive and now I'm trying to reinstall xlwings, but instead I get an error message saying

> FileNotFoundError(2, 'No such file or directory')

Already tried rebooting (well, it IS Windows, after all...) without any effect. In order to debug further it would help to know what's actually missing?

|

closed

|

2024-10-07T12:52:28Z

|

2024-10-08T09:05:33Z

|

https://github.com/xlwings/xlwings/issues/2529

|

[] |

HansThorsager

| 4

|

InstaPy/InstaPy

|

automation

| 6,381

|

KeyError: shortcode_media not found

|

## Expected Behavior

In like_util.py, the get_additional_data function retrieves post information, one item of which is shortcode_media.

## Current Behavior

The shortcode_media key is not found in post_page (post_page = get_additional_data(browser)).

|

open

|

2021-10-23T06:28:44Z

|

2021-12-25T18:14:48Z

|

https://github.com/InstaPy/InstaPy/issues/6381

|

[] |

kharazian

| 10

|

keras-team/keras

|

tensorflow

| 20,984

|

Is this Bug?

|

Issue Description:

I am running CycleGAN training using Keras v3.8.0 and have observed the following behavior during the initial epoch (0/200):

Discriminator (D):

The D loss starts around 0.70 (e.g., 0.695766 at batch 0) with a very low accuracy (~25%).

In the next few batches, the D loss quickly decreases to approximately 0.47 and the discriminator accuracy increases and stabilizes around 49%.

Generator (G):

The G loss begins at approximately 17.42 and gradually decreases (e.g., reaching around 14.85 by batch 15).

However, the adversarial component of the G loss remains nearly constant at roughly 0.91 throughout these batches.

Both the reconstruction loss and identity loss show a slight downward trend.

Questions/Concerns:

Discriminator Behavior:

The discriminator accuracy quickly climbs to ~49%, which is close to random guessing (50%). Is this the expected behavior in early training stages, or might it indicate an issue with the discriminator setup?

Constant Adversarial Loss:

Despite the overall generator loss decreasing, the adversarial loss remains almost unchanged (~0.91). Should I be concerned that the generator is not improving its ability to fool the discriminator, or is this typical during the initial epochs?

Next Steps:

Would further hyperparameter tuning or changes in the training strategy be recommended at this stage to encourage more effective adversarial learning?

Steps to Reproduce:

Use Keras version 3.8.0.

Train CycleGAN for 200 epochs on the given dataset.

Observe the training logs, especially during the initial epoch (batches 0–15).

Example Log Snippet:

[Epoch 0/200] [Batch 0/3400] [D loss: 0.695766, acc: 25%] [G loss: 17.421459, adv: 0.911943, recon: 0.703973, id: 0.664554] time: 0:00:54.098616

[Epoch 0/200] [Batch 1/3400] [D loss: 0.544706, acc: 41%] [G loss: 17.582617, adv: 0.913612, recon: 0.759879, id: 0.702040] time: 0:00:54.705443

[Epoch 0/200] [Batch 2/3400] [D loss: 0.516353, acc: 44%] [G loss: 17.095995, adv: 0.912766, recon: 0.749940, id: 0.726107] time: 0:00:54.945915

...

[Epoch 0/200] [Batch 15/3400] [D loss: 0.477357, acc: 49%] [G loss: 14.845922, adv: 0.901916, recon: 0.742566, id: 0.698672] time: 0:00:58.317200

Any insights or recommendations would be greatly appreciated. Thank you in advance for your help!

|

open

|

2025-03-05T07:23:22Z

|

2025-03-12T05:04:32Z

|

https://github.com/keras-team/keras/issues/20984

|

[

"type:Bug"

] |

AmadeuSY-labo

| 2

|

matplotlib/matplotlib

|

data-visualization

| 29,380

|

[MNT]: deprecate auto hatch fallback to patch.edgecolor when edgecolor='None'

|

### Summary

Since #28104 now separates out hatchcolor, users should not be allowed to specifically ask to fallback to edgecolor and also explicitly set that edgecolor to none because silently falling back on ```edgecolor="None"``` introduces the problem that:

1. because of eager color resolution and frequently setting "none" by knocking out the alpha, the way to check for none is checking the alpha, which leads to fallback being dependent on the interplay between alpha and edgecolor:

3. fallback to edgecolor rcParams doesn't check if the rcParam is also none so could sink the problem a layer deeper

### Proposed fix

Deprecate this fallback behavior and raise a warning on

``` Rectangle( (0,0), .5, .5, hatchcolor='edge', edgecolor='None')```

The alternatives are:

* set a hatchcolor

* don't set edgecolor at all, i.e. ``` Rectangle( (0,0), .5, .5, hatchcolor='edge')``` falls back to the rcParam in `get_edgecolor`

|

open

|

2024-12-25T00:19:58Z

|

2024-12-26T03:11:59Z

|

https://github.com/matplotlib/matplotlib/issues/29380

|

[

"Maintenance",

"topic: hatch"

] |

story645

| 2

|

neuml/txtai

|

nlp

| 646

|

ImportError: Textractor pipeline is not available - install "pipeline" extra to enable

|

When I run the code below, it raises an error:

`from txtai.pipeline import Textractor`

`textractor = Textractor()`

ImportError: Textractor pipeline is not available - install "pipeline" extra to enable

Note: `pip install txtai[pipeline]` did not work

|

closed

|

2024-01-23T23:14:23Z

|

2024-09-16T06:04:23Z

|

https://github.com/neuml/txtai/issues/646

|

[] |

berkgungor

| 6

|

Kludex/mangum

|

asyncio

| 264

|

Magnum has a huge list of dependencies, many of dubious utility.

|

Currently magnum installs over 150 packages along with it:

[pdm list --graph](https://gist.github.com/Fak3/419769a9fb05ec98a46db4d5a9a21dae)

I wish there was a slimmer version that only installs core dependencies. Currently some deps, like yappi and netifaces, do not provide binary wheels, which makes the magnum installation fail when the Python headers are missing:

<details>

<pre>

Preparing isolated env for PEP 517 build...

Building wheel for https://files.pythonhosted.org/packages/9c/bb/47be36b473e56360d3012bf1b6e405785e42d1f2e91da715964c1a705937/yappi-1.3.5.tar.gz#sha256=f54c25f04aa7c613633b529bffd14e0699a4363f414dc9c065616fd52064a49b (from https://pypi.org/simple/yappi/)

Collecting wheel

Using cached wheel-0.37.1-py2.py3-none-any.whl (35 kB)

Collecting setuptools>=40.8.0

Using cached setuptools-62.6.0-py3-none-any.whl (1.2 MB)

Installing collected packages: wheel, setuptools

Successfully installed setuptools-62.6.0 wheel-0.37.1

/tmp/timer_create3280tt_g.c: In function ‘main’:

/tmp/timer_create3280tt_g.c:2:5: warning: implicit declaration of function ‘timer_create’ [-Wimplicit-function-declaration]

2 | timer_create();

| ^~~~~~~~~~~~

running egg_info

writing yappi/yappi.egg-info/PKG-INFO

writing dependency_links to yappi/yappi.egg-info/dependency_links.txt

writing entry points to yappi/yappi.egg-info/entry_points.txt

writing requirements to yappi/yappi.egg-info/requires.txt

writing top-level names to yappi/yappi.egg-info/top_level.txt

reading manifest file 'yappi/yappi.egg-info/SOURCES.txt'

reading manifest template 'MANIFEST.in'

adding license file 'LICENSE'

writing manifest file 'yappi/yappi.egg-info/SOURCES.txt'

/tmp/timer_create8z57jb1h.c: In function ‘main’:

/tmp/timer_create8z57jb1h.c:2:5: warning: implicit declaration of function ‘timer_create’ [-Wimplicit-function-declaration]

2 | timer_create();

| ^~~~~~~~~~~~

running bdist_wheel

running build

running build_py

creating build

creating build/lib.linux-x86_64-cpython-310

copying yappi/yappi.py -> build/lib.linux-x86_64-cpython-310

running build_ext

building '_yappi' extension

creating build/temp.linux-x86_64-cpython-310

creating build/temp.linux-x86_64-cpython-310/yappi

gcc -Wno-unused-result -Wsign-compare -DNDEBUG -O2 -Wall -U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=3 -fstack-protector-strong -funwind-tables -fasynchronous-unwind-tables -fstack-clash-protection -Werror=return-type -g -DOPENSSL_LOAD_CONF -fwrapv -fno-semantic-interposition -O2 -Wall -U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=3 -fstack-protector-strong -funwind-tables -fasynchronous-unwind-tables -fstack-clash-protection -Werror=return-type -g -IVendor/ -O2 -Wall -U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=3 -fstack-protector-strong -funwind-tables -fasynchronous-unwind-tables -fstack-clash-protection -Werror=return-type -g -IVendor/ -fPIC -DLIB_RT_AVAILABLE=1 -I/usr/include/python3.10 -c yappi/_yappi.c -o build/temp.linux-x86_64-cpython-310/yappi/_yappi.o

In file included from yappi/_yappi.c:10:

yappi/config.h:4:10: fatal error: Python.h: No such file or directory

4 | #include "Python.h"

| ^~~~~~~~~~

compilation terminated.

error: command '/usr/bin/gcc' failed with exit code 1

Error occurs:

Traceback (most recent call last):

File "/usr/lib64/python3.10/concurrent/futures/thread.py", line 58, in run

result = self.fn(*self.args, **self.kwargs)

File "/usr/lib/python3.10/site-packages/pdm/installers/synchronizers.py", line 207, in install_candidate

self.manager.install(can)

File "/usr/lib/python3.10/site-packages/pdm/installers/manager.py", line 39, in install

installer(prepared.build(), self.environment, prepared.direct_url())

File "/usr/lib/python3.10/site-packages/pdm/models/candidates.py", line 331, in build

self.wheel = builder.build(build_dir, metadata_directory=self._metadata_dir)

File "/usr/lib/python3.10/site-packages/pdm/builders/wheel.py", line 28, in build

filename = self._hook.build_wheel(out_dir, config_settings, metadata_directory)

File "/usr/lib/python3.10/site-packages/pep517/wrappers.py", line 208, in build_wheel

return self._call_hook('build_wheel', {

File "/usr/lib/python3.10/site-packages/pep517/wrappers.py", line 322, in _call_hook

self._subprocess_runner(

File "/usr/lib/python3.10/site-packages/pdm/builders/base.py", line 246, in subprocess_runner

return log_subprocessor(cmd, cwd, extra_environ=env)

File "/usr/lib/python3.10/site-packages/pdm/builders/base.py", line 87, in log_subprocessor

raise BuildError(

pdm.exceptions.BuildError: Call command ['/usr/bin/python3.10', '/usr/lib/python3.10/site-packages/pep517/in_process/_in_process.py', 'build_wheel', '/tmp/tmprefrneax'] return non-zero status(1).

Preparing isolated env for PEP 517 build...

Reusing shared build env: /tmp/pdm-build-env-eq22vk28-shared

Building wheel for https://files.pythonhosted.org/packages/9c/bb/47be36b473e56360d3012bf1b6e405785e42d1f2e91da715964c1a705937/yappi-1.3.5.tar.gz#sha256=f54c25f04aa7c613633b529bffd14e0699a4363f414dc9c065616fd52064a49b (from https://pypi.org/simple/yappi/)

/tmp/timer_createh4zqpscq.c: In function ‘main’:

/tmp/timer_createh4zqpscq.c:2:5: warning: implicit declaration of function ‘timer_create’ [-Wimplicit-function-declaration]

2 | timer_create();

| ^~~~~~~~~~~~

running egg_info

writing yappi/yappi.egg-info/PKG-INFO

writing dependency_links to yappi/yappi.egg-info/dependency_links.txt

writing entry points to yappi/yappi.egg-info/entry_points.txt

writing requirements to yappi/yappi.egg-info/requires.txt

writing top-level names to yappi/yappi.egg-info/top_level.txt

reading manifest file 'yappi/yappi.egg-info/SOURCES.txt'

reading manifest template 'MANIFEST.in'

adding license file 'LICENSE'

writing manifest file 'yappi/yappi.egg-info/SOURCES.txt'

/tmp/timer_createnezrnkq7.c: In function ‘main’:

/tmp/timer_createnezrnkq7.c:2:5: warning: implicit declaration of function ‘timer_create’ [-Wimplicit-function-declaration]

2 | timer_create();

| ^~~~~~~~~~~~

running bdist_wheel

running build

running build_py

running build_ext

building '_yappi' extension

gcc -Wno-unused-result -Wsign-compare -DNDEBUG -O2 -Wall -U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=3 -fstack-protector-strong -funwind-tables -fasynchronous-unwind-tables -fstack-clash-protection -Werror=return-type -g -DOPENSSL_LOAD_CONF -fwrapv -fno-semantic-interposition -O2 -Wall -U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=3 -fstack-protector-strong -funwind-tables -fasynchronous-unwind-tables -fstack-clash-protection -Werror=return-type -g -IVendor/ -O2 -Wall -U_FORTIFY_SOURCE -D_FORTIFY_SOURCE=3 -fstack-protector-strong -funwind-tables -fasynchronous-unwind-tables -fstack-clash-protection -Werror=return-type -g -IVendor/ -fPIC -DLIB_RT_AVAILABLE=1 -I/usr/include/python3.10 -c yappi/_yappi.c -o build/temp.linux-x86_64-cpython-310/yappi/_yappi.o

In file included from yappi/_yappi.c:10:

yappi/config.h:4:10: fatal error: Python.h: No such file or directory

4 | #include "Python.h"

| ^~~~~~~~~~

compilation terminated.

error: command '/usr/bin/gcc' failed with exit code 1

Error occurs:

Traceback (most recent call last):

File "/usr/lib64/python3.10/concurrent/futures/thread.py", line 58, in run

result = self.fn(*self.args, **self.kwargs)

File "/usr/lib/python3.10/site-packages/pdm/installers/synchronizers.py", line 207, in install_candidate

self.manager.install(can)

File "/usr/lib/python3.10/site-packages/pdm/installers/manager.py", line 39, in install

installer(prepared.build(), self.environment, prepared.direct_url())

File "/usr/lib/python3.10/site-packages/pdm/models/candidates.py", line 331, in build

self.wheel = builder.build(build_dir, metadata_directory=self._metadata_dir)

File "/usr/lib/python3.10/site-packages/pdm/builders/wheel.py", line 28, in build

filename = self._hook.build_wheel(out_dir, config_settings, metadata_directory)

File "/usr/lib/python3.10/site-packages/pep517/wrappers.py", line 208, in build_wheel

return self._call_hook('build_wheel', {

File "/usr/lib/python3.10/site-packages/pep517/wrappers.py", line 322, in _call_hook

self._subprocess_runner(

File "/usr/lib/python3.10/site-packages/pdm/builders/base.py", line 246, in subprocess_runner

return log_subprocessor(cmd, cwd, extra_environ=env)

File "/usr/lib/python3.10/site-packages/pdm/builders/base.py", line 87, in log_subprocessor

raise BuildError(

pdm.exceptions.BuildError: Call command ['/usr/bin/python3.10', '/usr/lib/python3.10/site-packages/pep517/in_process/_in_process.py', 'build_wheel', '/tmp/tmpga2r2t_o'] return non-zero status(1).

Error occurs

Traceback (most recent call last):

File "/usr/lib/python3.10/site-packages/pdm/termui.py", line 200, in logging

yield logger

File "/usr/lib/python3.10/site-packages/pdm/installers/synchronizers.py", line 374, in synchronize

raise InstallationError("Some package operations are not complete yet")

pdm.exceptions.InstallationError: Some package operations are not complete yet

</pre>

</details>

|

closed

|

2022-06-24T19:09:38Z

|

2022-06-25T07:31:31Z

|

https://github.com/Kludex/mangum/issues/264

|

[

"more info needed"

] |

Fak3

| 2

|

marimo-team/marimo

|

data-visualization

| 4,145

|

[Newbie Q] Cannot add SQLite table

|

New to Marimo, probably doing something simple wrong.

I want to add my SQLite database, which is stored in `my_fair_table.db`.

I copy the full path.

I paste the path into the UI flow of SQLite and click "Add".

Nothing happens. No error. No database.

When running python code, I can successfully connect to the database file.

But with the table view of Marimo or using the SQL cell I can't.

After not figuring it out for 45 min I decided to write this issue.

What might I be doing wrong?

OS: Linux

Python: 3.12

Marimo: 0.11.21
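The "connect from Python" check mentioned above can be sketched as follows. This is a minimal sketch using only the standard-library `sqlite3` module; the helper name `list_tables` is hypothetical, and it says nothing about how Marimo itself opens the file. It opens the database read-only, so a mistyped path raises an error instead of silently creating an empty database.

```python
import sqlite3
from contextlib import closing
from pathlib import Path


def list_tables(db_path: str) -> list[str]:
    """Return the names of the tables stored in a SQLite file (hypothetical helper)."""
    path = Path(db_path).expanduser().resolve()
    if not path.is_file():
        raise FileNotFoundError(f"No SQLite file at {path}")
    # mode=ro opens the file read-only, so nothing is created or modified.
    uri = path.as_uri() + "?mode=ro"
    with closing(sqlite3.connect(uri, uri=True)) as conn:
        rows = conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        ).fetchall()
    return [name for (name,) in rows]
```

If this returns the expected table names for the same absolute path that was pasted into the UI, the file and path are probably fine and the problem more likely lies in the UI flow.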

|

closed

|

2025-03-18T13:26:27Z

|

2025-03-18T15:42:46Z

|

https://github.com/marimo-team/marimo/issues/4145

|

[] |

dentroai

| 3

|

OpenInterpreter/open-interpreter

|

python

| 615

|

Issues with Azure setting

|

### Describe the bug

I am trying to point the application to my Azure keys, but each time I get the following error:

`There might be an issue with your API key(s).

To reset your API key (we'll use OPENAI_API_KEY for this example, but you may need to reset your ANTHROPIC_API_KEY, HUGGINGFACE_API_KEY, etc):

Mac/Linux: 'export OPENAI_API_KEY=your-key-here',

Windows: 'setx OPENAI_API_KEY your-key-here' then restart terminal.`

I have done everything mentioned in the documentation to set up the interpreter with Azure, but it does not work for me. :(

Any help will be appreciated. I am trying to build a simple Streamlit application as a frontend that invokes the interpreter.

I even set the model in the code to reflect Azure.

### Reproduce

1. Configure the interpreter to use azure:

```

interpreter.azure_api_type = "azure"

interpreter.use_azure = True

interpreter.azure_api_base = "https://something.openai.azure.com/"

interpreter.azure_api_version = "2023-05-15"

interpreter.azure_api_key = "my-azure-key"

interpreter.azure_deployment_name="GPT-4"

interpreter.auto_run = True

interpreter.conversation_history = True

```

```
async def get_response(prompt: str, files: Optional[list] = None):
    if files is None:
        files = []
    with st.chat_message("user"):
        st.write(prompt)
    with st.spinner():
        response=''
        for chunk in interpreter.chat(f'content={input_text}, files={file_path}', stream=True):
            if 'message' in chunk:
                response += chunk['message']
        st.write(response)
```

And sometimes I get an SSL-related error, as shown below:

<img width="839" alt="image" src="https://github.com/KillianLucas/open-interpreter/assets/40305398/801bb045-3677-416f-b348-ab91ec584761">

### Expected behavior

The azure specific configurations must be picked up and the interpreter must work as expected.

### Screenshots

<img width="1264" alt="Screenshot 2023-10-10 at 12 16 58" src="https://github.com/KillianLucas/open-interpreter/assets/40305398/c6519d1d-55d6-41bf-80a8-95b7cc6df300">

### Open Interpreter version

0.1.7

### Python version

3.11.3

### Operating System name and version

Windows 11

### Additional context

_No response_

|

closed

|

2023-10-10T07:03:35Z

|

2023-10-27T17:21:59Z

|

https://github.com/OpenInterpreter/open-interpreter/issues/615

|

[

"Bug"

] |

nsvbhat

| 1

|

Johnserf-Seed/TikTokDownload

|

api

| 266

|

[BUG]

|

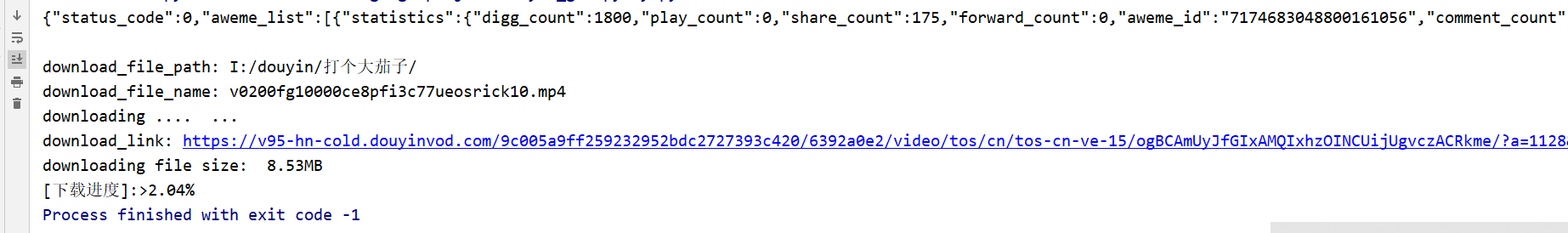

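I copied part of your code, mainly to crawl some Douyin videos that I have liked, but

there is a problem with the API: image galleries have no download link. I captured the data returned by the API, and it contains no video data for the image galleries.

The first two items are image posts, but the API directly returned the data for the third video.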

|

closed

|

2022-12-09T02:11:33Z

|

2023-01-14T12:14:09Z

|

https://github.com/Johnserf-Seed/TikTokDownload/issues/266

|

[

"故障(bug)",

"额外求助(help wanted)",

"无效(invalid)"

] |

zhangsan-feng

| 3

|

koxudaxi/fastapi-code-generator

|

pydantic

| 179

|

support for constraints in Query/Body rather than as "conX

|

open

|

2021-06-28T04:32:27Z

|

2021-07-09T14:48:31Z

|

https://github.com/koxudaxi/fastapi-code-generator/issues/179

|

[

"enhancement"

] |

aeresov

| 1

|

|

google-research/bert

|

nlp

| 1,405

|

MRPC and CoLA Dataset UnicodeDecodeError

|

Error message: UnicodeDecodeError: 'utf-8' codec can't decode byte 0xd5 in position 147: invalid continuation byte

I can't train properly after loading these two datasets. The error is still reported after switching to the "ISO-8859-1" and "latin-1" encodings.

After checking the train.txt file of the MRPC dataset, I found that the offending byte corresponds to the character "é". I modified train.txt and test.txt and preprocessed them again to get train.tsv and test.tsv (I also checked that these files do not contain the character "é"), but training still reported the error.
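One way to confirm which byte is failing, and in which file, is to read the raw bytes and let the decoder report the offset. The following is a minimal sketch, not part of the BERT code base; the helper names `find_invalid_utf8` and `decode_with_fallback` and the sample bytes are made up for illustration. In practice `data` would come from `open(path, "rb").read()` for each file the training script loads.

```python
def find_invalid_utf8(data: bytes, context: int = 20):
    """Return (offset, byte value, nearby bytes) for the first invalid UTF-8 byte, or None."""
    try:
        data.decode("utf-8")
    except UnicodeDecodeError as err:
        start = max(err.start - context, 0)
        return err.start, data[err.start], data[start:err.start + context]
    return None


def decode_with_fallback(data: bytes, fallback: str = "latin-1") -> str:
    """Decode as UTF-8; if that fails, decode with a single-byte codec instead."""
    try:
        return data.decode("utf-8")
    except UnicodeDecodeError:
        return data.decode(fallback)


# Made-up sample: 0xd5 followed by a space is not valid UTF-8.
sample = b"caf\xd5 au lait"
print(find_invalid_utf8(sample))
print(decode_with_fallback(sample))
```

If the error persists after the file has been cleaned, running the first helper over every input file may show that a different file, or a cached copy, still contains the byte.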

|

open

|

2024-04-17T14:33:36Z

|

2024-04-17T14:33:36Z

|

https://github.com/google-research/bert/issues/1405

|

[] |

ZhuHouYi

| 0

|

graphql-python/gql

|

graphql

| 68

|

how to upload file

|

Is there any example to show how to upload a file to the "Input!"?

|

closed

|

2020-03-24T05:27:57Z

|

2021-09-29T12:28:49Z

|

https://github.com/graphql-python/gql/issues/68

|

[

"type: feature"

] |

DogeWatch

| 22

|

unytics/bigfunctions

|

data-visualization

| 6

|

Are you interested in providing RFM clustering?

|

I'm thinking about queries like these ones I've contributed to [my pet Super Store project](https://github.com/mickaelandrieu/dbt-super_store/tree/main/models/marts/core/customers/segmentation).

WDYT?

|

open

|

2022-10-31T21:18:44Z

|

2022-12-06T22:52:24Z

|

https://github.com/unytics/bigfunctions/issues/6

|

[

"new-bigfunction"

] |

mickaelandrieu

| 2

|

littlecodersh/ItChat

|

api

| 728

|

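The documentation says uin can be used as an identifier, but every value I get is 0. Is uin no longer obtainable, or am I retrieving it the wrong way?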

|

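The documentation says uin can be used as an identifier, but every value I get is 0. Is uin no longer obtainable, or am I retrieving it the wrong way?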

|

open

|

2018-09-13T08:47:53Z

|

2018-09-13T08:47:53Z

|

https://github.com/littlecodersh/ItChat/issues/728

|

[] |

yehanliang

| 0

|

ydataai/ydata-profiling

|

data-science

| 1,359

|

Time series mode inactive

|

### Current Behaviour

The following code produces a regular report instead of a time series report as shown in https://ydata.ai/resources/how-to-do-an-eda-for-time-series.

```

from pandas_profiling import ProfileReport

profile = ProfileReport(df, tsmode=True, sortby="datadate", title="Time-Series EDA")

profile.to_file("report_timeseries.html")

```

In addition, the time series example [USA Air Quality](https://github.com/ydataai/ydata-profiling/tree/master/examples/usaairquality) (Time-series air quality dataset EDA example) cannot be found in the git repository.

### Expected Behaviour

A time series report as in https://ydata.ai/resources/how-to-do-an-eda-for-time-series

### Data Description

My dataframe has only two columns "datadate" (pandas datetime) and "value" (float).

### Code that reproduces the bug

_No response_

### pandas-profiling version

v4.2.0

### Dependencies

```Text

python==3.7.1

numpy==1.21.5

pandas==1.3.5

```

### OS

_No response_

### Checklist

- [X] There is not yet another bug report for this issue in the [issue tracker](https://github.com/ydataai/pandas-profiling/issues)

- [X] The problem is reproducible from this bug report. [This guide](http://matthewrocklin.com/blog/work/2018/02/28/minimal-bug-reports) can help to craft a minimal bug report.

- [X] The issue has not been resolved by the entries listed under [Common Issues](https://pandas-profiling.ydata.ai/docs/master/pages/support_contrib/common_issues.html).

|

closed

|

2023-06-08T15:11:17Z

|

2023-08-09T15:41:54Z

|

https://github.com/ydataai/ydata-profiling/issues/1359

|

[

"feature request 💬"

] |

xshi19

| 2

|

iterative/dvc

|

machine-learning

| 10,639

|

`dvc exp remove`: should we make --queue work with -n and other flags ?

|

Currently using `dvc exp remove --queue` clears the whole queue (much like `dvc queue remove`).

`dvc exp remove` has some useful modifiers, such as `-n` to specify how many experiments should be deleted, or a list of the names of the experiments to remove.

These modifiers currently don't affect the behavior of the remove command on queued experiments.

The question is: should they? If so, how would that work, and what would the semantics be when composing multiple modifiers? For example, what exactly would the remove command do if we combined `--rev`, `--queue` and `-n`?

This is an open question initially raised during a previous conversation with @shcheklein; see the full thread here: https://github.com/iterative/dvc/pull/10633#discussion_r1857943029

|

open

|

2024-11-28T19:11:22Z

|

2024-11-28T20:00:44Z

|

https://github.com/iterative/dvc/issues/10639

|

[

"discussion",

"A: experiments"

] |

rmic

| 0

|

yunjey/pytorch-tutorial

|

pytorch

| 126

|

How to configure the Logger to show the structure of neural network in tensorboard?

|

How to configure the Logger to show the structure of neural network? thanks!!!!

|

open

|

2018-07-20T09:06:33Z

|

2018-07-20T09:06:33Z

|

https://github.com/yunjey/pytorch-tutorial/issues/126

|

[] |

mali-nuist

| 0

|

huggingface/datasets

|

pandas

| 7,415

|

Shard Dataset at specific indices

|

I have a dataset of sequences, where each example in the sequence is a separate row in the dataset (similar to LeRobotDataset). When running `Dataset.save_to_disk`, how can I provide the indices at which the dataset may be sharded, so that no episode spans more than one shard? And when I then run `Dataset.load_from_disk`, how can I load just a subset of the shards to save memory and time on different ranks?

I guess an alternative to this would be, given a loaded `Dataset`, how can I run `Dataset.shard` such that sharding doesn't split any episode across shards?
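A minimal sketch of the boundary computation this question is asking about, written in plain Python and not taken from the `datasets` library. The function name `episode_aligned_shards` and the assumption that rows of one episode are contiguous and carry an episode id are both hypothetical. It picks, for each ideal cut point, the nearest row index at which a new episode starts.

```python
from bisect import bisect_left


def episode_aligned_shards(episode_ids, num_shards):
    """Return (start, end) row ranges that never split an episode.

    Assumes rows belonging to the same episode are contiguous.
    May return fewer than num_shards ranges if there are few episodes.
    """
    n = len(episode_ids)
    # Row indices where a new episode begins, plus the end of the data.
    starts = [i for i in range(n) if i == 0 or episode_ids[i] != episode_ids[i - 1]]
    boundaries = starts + [n]

    cuts = [0]
    for k in range(1, num_shards):
        target = k * n / num_shards  # ideal (possibly mid-episode) cut point
        j = bisect_left(boundaries, target)
        candidates = boundaries[max(j - 1, 0): j + 1]
        best = min(candidates, key=lambda b: abs(b - target))
        # Skip cuts that would create an empty shard.
        if cuts[-1] < best < n:
            cuts.append(best)
    cuts.append(n)
    return list(zip(cuts[:-1], cuts[1:]))


# Hypothetical episode column: four episodes of lengths 3, 2, 4 and 1.
ranges = episode_aligned_shards([0, 0, 0, 1, 1, 2, 2, 2, 2, 3], num_shards=3)
print(ranges)
```

Each returned range could then be passed to `Dataset.select(range(start, end))` and saved to its own directory, so that a rank loads only the directories it needs. Whether that layout suits a given setup is a design choice, not something the library prescribes.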

|

open

|

2025-02-20T10:43:10Z

|

2025-02-24T11:06:45Z

|

https://github.com/huggingface/datasets/issues/7415

|

[] |

nikonikolov

| 3

|

vaexio/vaex

|

data-science

| 2,348

|

[BUG-REPORT] AttributeError: module 'vaex.dataset' has no attribute '_parse_f'

|

**Description**

Hi,

I am trying to create a plot2d_contour but it is giving me this error:

```

---------------------------------------------------------------------------

AttributeError Traceback (most recent call last)

Cell In[44], line 2

1 df = vaex.example()

----> 2 df.plot2d_contour(x=df.x,y=df.y)

File ~/opt/anaconda3/envs/agb/lib/python3.10/site-packages/vaex/viz/contour.py:43, in plot2d_contour(self, x, y, what, limits, shape, selection, f, figsize, xlabel, ylabel, aspect, levels, fill, colorbar, colorbar_label, colormap, colors, linewidths, linestyles, vmin, vmax, grid, show, **kwargs)

14 """

15 Plot conting contours on 2D grid.

16

(...)

38 :param show:

39 """

42 # Get the function out of the string

---> 43 f = vaex.dataset._parse_f(f)

45 # Internals on what to bin

46 binby = []

AttributeError: module 'vaex.dataset' has no attribute '_parse_f'

```

Code:

```

import vaex

df = vaex.example()

df.plot2d_contour(x=df.x,y=df.y)

```

I also tried explicitly setting `f="identity"` and `f="log"`, and neither worked.

**Software information**

- Vaex version:

{'vaex': '4.16.0',

'vaex-core': '4.16.1',

'vaex-viz': '0.5.4',

'vaex-hdf5': '0.14.1',

'vaex-server': '0.8.1',

'vaex-astro': '0.9.3',

'vaex-jupyter': '0.8.1',

'vaex-ml': '0.18.1'}

- Vaex was installed via: pip

- OS: macOS 11.6.5

|

open

|

2023-03-01T08:11:57Z

|

2024-04-06T17:01:32Z

|

https://github.com/vaexio/vaex/issues/2348

|

[] |

marixko

| 1

|

fastapiutils/fastapi-utils

|

fastapi

| 357

|

[QUESTION] Is it possible to use repeated tasks with lifetimes? if so how?

|

**Description**

How can I use repeated tasks with lifetimes?

The docs only give examples of how to use these utils with the `app.on_event(...)` API, but FastAPI now recommends using lifespan handlers instead. It's unclear to me whether repeated tasks are supported there and, if they are, how they would be used.
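For illustration, here is a minimal sketch of a repeated task driven by a lifespan-style async context manager. It uses only `asyncio` and `contextlib` and does not use fastapi-utils' own helpers; the names `run_every` and `make_lifespan` are hypothetical. The task starts when the context is entered and is cancelled when it exits.

```python
import asyncio
from contextlib import asynccontextmanager, suppress


async def run_every(seconds, func):
    """Call the async function `func` repeatedly, sleeping between calls."""
    while True:
        await func()
        await asyncio.sleep(seconds)


def make_lifespan(func, seconds):
    """Build a lifespan context manager that runs `func` in the background."""

    @asynccontextmanager
    async def lifespan(app):
        # Startup: schedule the repeated task on the running event loop.
        task = asyncio.create_task(run_every(seconds, func))
        try:
            yield
        finally:
            # Shutdown: cancel the task and wait for it to finish unwinding.
            task.cancel()
            with suppress(asyncio.CancelledError):
                await task

    return lifespan


# With FastAPI this would be wired up roughly as:
#   app = FastAPI(lifespan=make_lifespan(my_async_job, 60))
```

The same pattern works for any ASGI framework that accepts an async context manager for startup and shutdown, since nothing in the sketch depends on the `app` argument.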

|

open

|

2025-01-16T11:12:11Z

|

2025-02-19T00:04:45Z

|

https://github.com/fastapiutils/fastapi-utils/issues/357

|

[

"question"

] |

ollz272

| 3

|

google-deepmind/sonnet

|

tensorflow

| 169

|

Colab mnist example does not work

|

https://colab.research.google.com/github/deepmind/sonnet/blob/v2/examples/mlp_on_mnist.ipynb

---------------------------------------------------------------------------

AssertionError Traceback (most recent call last)

/usr/local/lib/python3.6/dist-packages/tensorflow/python/autograph/impl/api.py in to_graph(entity, recursive, experimental_optional_features)

 661 autograph_module=tf_inspect.getmodule(to_graph))

--> 662 return conversion.convert(entity, program_ctx)

 663 except (ValueError, AttributeError, KeyError, NameError, AssertionError) as e:

26 frames

AssertionError: Bad argument number for Name: 4, expecting 3

During handling of the above exception, another exception occurred:

ConversionError Traceback (most recent call last)

/usr/local/lib/python3.6/dist-packages/tensorflow/python/autograph/impl/api.py in to_graph(entity, recursive, experimental_optional_features)

 664 logging.error(1, 'Error converting %s', entity, exc_info=True)

 665 raise ConversionError('converting {}: {}: {}'.format(

--> 666 entity, e.__class__.__name__, str(e)))

 667

 668

ConversionError: converting <function BaseBatchNorm.__call__ at 0x7f09acc45ae8>: AssertionError: Bad argument number for Name: 4, expecting 3

|

closed

|

2020-04-15T13:56:18Z

|

2020-04-17T08:24:13Z

|

https://github.com/google-deepmind/sonnet/issues/169

|

[

"bug"

] |

VogtAI

| 2

|

opengeos/leafmap

|

plotly

| 464

|

Streamlit RuntimeError with default leafmap module

|

### Environment Information

- leafmap version: 0.21.0

- Python version: 3.10

- Operating System: Mac

### Description

The default `leafmap` module does not work with Streamlit, but `leafmap.foliumap` does.

### What I Did

```

import streamlit as st

import leafmap

#import leafmap.foliumap as leafmap

landsat_url = (

'https://drive.google.com/file/d/1EV38RjNxdwEozjc9m0FcO3LFgAoAX1Uw/view?usp=sharing'

)

leafmap.download_file(landsat_url, 'test.tif', unzip=False, overwrite=True)

m = leafmap.Map()

m.add_raster('test.tif', band=1, colormap='terrain', layer_name='DEM')

col1, col2 = st.columns([7, 3])

with col1:

m.to_streamlit()

```

### Error

```

2023-06-07 20:48:38.297 Uncaught app exception

Traceback (most recent call last):

File "/Users/xinz/miniconda3/envs/streamlit/lib/python3.10/site-packages/streamlit/runtime/scriptrunner/script_runner.py", line 565, in _run_script

exec(code, module.__dict__)

File "/Users/xinz/Documents/github/enmap/app/test/test_raster.py", line 10, in <module>

m.add_raster('test.tif', band=1, colormap='terrain', layer_name='DEM')

File "/Users/xinz/miniconda3/envs/streamlit/lib/python3.10/site-packages/leafmap/leafmap.py", line 1898, in add_raster

self.zoom_to_bounds(bounds)

File "/Users/xinz/miniconda3/envs/streamlit/lib/python3.10/site-packages/leafmap/leafmap.py", line 242, in zoom_to_bounds

self.fit_bounds([[bounds[1], bounds[0]], [bounds[3], bounds[2]]])

File "/Users/xinz/miniconda3/envs/streamlit/lib/python3.10/site-packages/ipyleaflet/leaflet.py", line 2622, in fit_bounds

asyncio.ensure_future(self._fit_bounds(bounds))

File "/Users/xinz/miniconda3/envs/streamlit/lib/python3.10/asyncio/tasks.py", line 615, in ensure_future

return _ensure_future(coro_or_future, loop=loop)

File "/Users/xinz/miniconda3/envs/streamlit/lib/python3.10/asyncio/tasks.py", line 634, in _ensure_future

loop = events._get_event_loop(stacklevel=4)

File "/Users/xinz/miniconda3/envs/streamlit/lib/python3.10/asyncio/events.py", line 656, in get_event_loop

raise RuntimeError('There is no current event loop in thread %r.'

RuntimeError: There is no current event loop in thread 'ScriptRunner.scriptThread'.

```
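The last frames of the traceback can be reproduced with the standard library alone. The sketch below is not leafmap's or ipyleaflet's code, and the helper names are hypothetical. It shows `asyncio.ensure_future` raising `RuntimeError` in a worker thread that has no event loop, and succeeding once a loop is created and set for that thread.

```python
import asyncio
import threading


async def _noop():
    return "done"


def schedule_without_loop(results):
    """Mimics the failing call: no event loop exists in this thread."""
    coro = _noop()
    try:
        asyncio.ensure_future(coro)
    except RuntimeError as exc:
        results["error"] = str(exc)
    finally:
        coro.close()  # avoid a "never awaited" warning


def schedule_with_loop(results):
    """Creates and registers a loop for this thread before scheduling."""
    loop = asyncio.new_event_loop()
    asyncio.set_event_loop(loop)
    try:
        task = asyncio.ensure_future(_noop())
        results["value"] = loop.run_until_complete(task)
    finally:
        asyncio.set_event_loop(None)
        loop.close()


def run_in_thread(target):
    """Run `target(results)` in a fresh thread and return what it recorded."""
    results = {}
    thread = threading.Thread(target=target, args=(results,))
    thread.start()
    thread.join()
    return results


print(run_in_thread(schedule_without_loop))
print(run_in_thread(schedule_with_loop))
```

Whether setting a loop in Streamlit's script thread is an appropriate workaround for this issue is an assumption that would need checking against the library versions involved.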

|

closed

|

2023-06-07T18:51:48Z

|

2023-06-07T19:38:14Z

|

https://github.com/opengeos/leafmap/issues/464

|

[

"bug"

] |

zxdawn

| 4