| repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |
|---|---|---|---|---|---|---|---|---|---|---|---|

mckinsey/vizro

|

plotly

| 123

|

Consider AG Grid as recommended default over Dash data table

|

_Originally posted by @antonymilne in https://github.com/mckinsey/vizro/issues/114#issuecomment-1770347893_

Just from quickly playing around with the example dashboard here, I prefer the AG Grid. I wonder whether we should be recommending that instead of Dash data table as the default table for people to use in the (I guess) most common case that someone just wants to draw a nice table?

* for small screen sizes the table has its own little scroll bar rather than stretching out the whole screen

* I can rearrange column order by drag and drop

* the active row is highlighted

* the style just feels a bit more modern and slicker somehow (though dark theme is obviously not usable right now)

I'd guess some of these you can probably also achieve with Dash data table by using appropriate styling parameters or arguments though?

These are just my impressions from playing around for a few seconds though, so don't take them too seriously - it's the first time I've used both sorts of table, so I don't know what each is capable of or how easily we can get all the features we want out of each. But curious what other people think - should Dash data table or AG Grid be the "default" table that we suggest people to use?

|

closed

|

2023-10-25T05:20:46Z

|

2024-03-07T13:21:49Z

|

https://github.com/mckinsey/vizro/issues/123

|

[] |

antonymilne

| 6

|

aeon-toolkit/aeon

|

scikit-learn

| 1,799

|

[ENH] Add the option to turn off all type checking and conversion and go straight to _fit/_predict/_transform

|

### Describe the feature or idea you want to propose

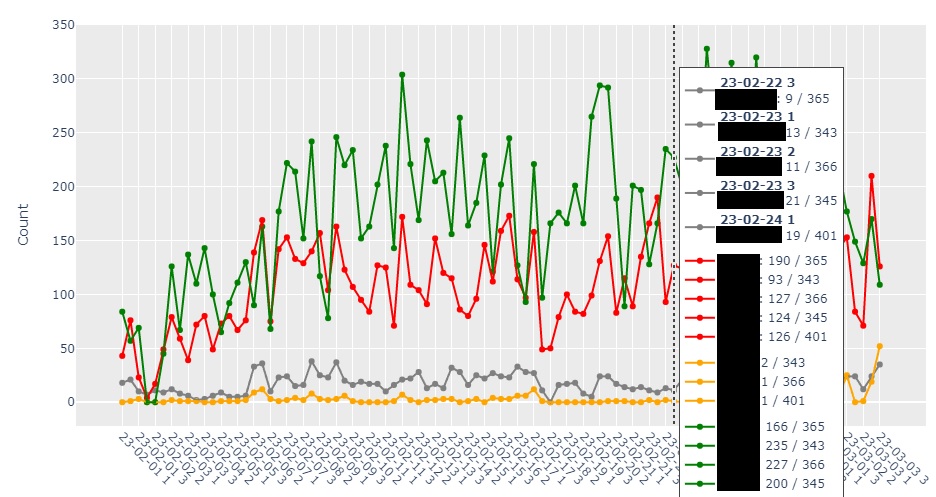

In estimator base classes (I am looking at BaseCollectionTransformer, but the same applies elsewhere) we do checks, get metadata and convert to the inner type. All good, but it does introduce an overhead. For example, this is minirocket with and without the checks:

The checks involve several scans of the data. For larger data it would be good to have an option to go straight to the inner methods and simply fail, without an informative error, if the input is wrong.

This is minirocket in safe and unsafe modes (time in seconds to transform length-500 series, varying the number of cases). Note that the difference is linear in n:

| Cases (n) | With checks (s) | Unsafe (s) | Diff (s) |
| --- | --- | --- | --- |
| 500 | 0.49 | 0.16 | -0.33 |
| 1000 | 0.88 | 0.20 | -0.68 |
| 1500 | 1.30 | 0.26 | -1.04 |
| 2000 | 1.74 | 0.32 | -1.43 |
| 2500 | 2.02 | 0.49 | -1.53 |
| 3000 | 2.46 | 0.44 | -2.02 |
| 3500 | 2.81 | 0.49 | -2.32 |
| 4000 | 3.19 | 0.54 | -2.65 |
| 4500 | 3.66 | 0.60 | -3.06 |
| 5000 | 4.08 | 0.73 | -3.35 |
| 5500 | 4.57 | 0.75 | -3.82 |
| 6000 | 4.75 | 0.83 | -3.92 |
| 6500 | 5.17 | 0.83 | -4.33 |
| 7000 | 5.80 | 0.95 | -4.85 |
| 7500 | 6.14 | 0.99 | -5.15 |
| 8000 | 6.51 | 1.01 | -5.50 |
| 8500 | 6.85 | 1.05 | -5.80 |
| 9000 | 7.33 | 1.13 | -6.21 |
| 9500 | 7.55 | 1.23 | -6.32 |
| 10000 | 7.92 | 1.22 | -6.70 |
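To check the claim that the difference grows linearly in n, an ordinary least-squares fit over the timings in the table above can be computed. This is a minimal plain-Python sketch; the numbers are copied from the table, and the fit is an illustration rather than part of the original benchmark.

```python
# Timings copied from the benchmark table (seconds); n is the number of cases.
ns = list(range(500, 10001, 500))
diffs = [-0.33, -0.68, -1.04, -1.43, -1.53, -2.02, -2.32, -2.65, -3.06, -3.35,
         -3.82, -3.92, -4.33, -4.85, -5.15, -5.50, -5.80, -6.21, -6.32, -6.70]


def linear_fit(xs, ys):
    """Ordinary least squares for y = slope * x + intercept; also returns R^2."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy ** 2 / (sxx * syy)
    return slope, intercept, r_squared


slope, intercept, r2 = linear_fit(ns, diffs)
print(f"slope = {slope * 1000:.3f} s per 1000 cases, R^2 = {r2:.3f}")
```

With these numbers the fit gives a slope of about -0.68 s per 1000 cases and R² of roughly 0.998, which supports the "linear in n" observation.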

### Describe your proposed solution

I would add a constructor parameter, say `unsafe`, that defaults to False, and then just test it in `fit` etc.

```python
def fit(self, X, y=None):
    if self.unsafe:
        # skip all checks and conversion and go straight to the inner method
        self._fit(X, y)
        # mark as fitted so later transform/predict calls are allowed
        self._is_fitted = True
        return self
    if self.get_tag("requires_y"):
        if y is None:
            raise ValueError("Tag requires_y is true, but fit called with y=None")
    # skip the rest if fit_is_empty is True
    if self.get_tag("fit_is_empty"):
        self._is_fitted = True
        return self
    self.reset()
```

etc
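A minimal, self-contained sketch of how such a flag could short-circuit validation. `ToyCollectionTransformer`, `_check_and_convert` and the `n_checks` counter are hypothetical stand-ins for illustration, not aeon's actual `BaseCollectionTransformer` API.

```python
class ToyCollectionTransformer:
    """Hypothetical base class illustrating an opt-out of input validation."""

    def __init__(self, unsafe=False):
        self.unsafe = unsafe
        self._is_fitted = False
        self.n_checks = 0  # counts validation passes, for illustration only

    def _check_and_convert(self, X):
        # Stand-in for the real checks: one full scan over the collection.
        self.n_checks += 1
        if not all(isinstance(series, list) for series in X):
            raise TypeError("X must be a collection of series (lists)")
        return X

    def fit(self, X, y=None):
        if not self.unsafe:
            X = self._check_and_convert(X)
        self._fit(X, y)
        self._is_fitted = True
        return self

    def transform(self, X):
        if not self._is_fitted:
            raise RuntimeError("call fit before transform")
        if not self.unsafe:
            X = self._check_and_convert(X)
        return self._transform(X)

    # Subclasses implement the inner methods.
    def _fit(self, X, y=None):
        pass

    def _transform(self, X):
        # Toy transform: the mean of each series.
        return [[sum(series) / len(series)] for series in X]
```

In unsafe mode, malformed input is not caught up front; it fails (with a less informative error) wherever the inner method first trips over it, which is the trade-off the proposal accepts.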

### Describe alternatives you've considered, if relevant

_No response_

### Additional context

_No response_

|

closed

|

2024-07-14T16:26:50Z

|

2024-07-14T17:11:59Z

|

https://github.com/aeon-toolkit/aeon/issues/1799

|

[

"enhancement"

] |

TonyBagnall

| 1

|

ipython/ipython

|

data-science

| 14,364

|

Documentation about available options and their structural hierarchy

|


Hi, I'm looking for a list of the available options, but I still cannot find one in the latest documentation.

Previous versions of the docs had pages for them:

https://ipython.org/ipython-doc/dev/config/options/index.html

Is there a page that I'm missing, or is there a way to get the equivalent information from the IPython shell?

For example, I found that the following magic command lists the available options for the `TerminalIPythonApp` class, but I would like a comprehensive list of the options for the other classes as well.

```py

%config TerminalIPythonApp

TerminalIPythonApp(BaseIPythonApplication, InteractiveShellApp) options

---------------------------------------------------------------------

TerminalIPythonApp.add_ipython_dir_to_sys_path=<Bool>

...

%config InteractiveShellApp

UsageError: Invalid config statement: 'InteractiveShellApp'

# No information available for the super classes

```

I found a part of the information is described in the latest docs:

https://ipython.readthedocs.io/en/stable/config/intro.html

But I still find it difficult to customize options because:

- There is no list of available options for each class

- The structural hierarchy of the classes is unclear (e.g. I don't know the best place to put option values, because the relationship between similar classes such as `TerminalIPythonApp`, `InteractiveShellApp` and `InteractiveShell` is not explained)

Thank you!

|

open

|

2024-03-06T18:29:10Z

|

2024-03-06T20:36:03Z

|

https://github.com/ipython/ipython/issues/14364

|

[] |

furushchev

| 2

|

home-assistant/core

|

asyncio

| 140,830

|

Transmission integration says 'Failed to connect'

|

### The problem

I can connect to my Transmission daemon 4.0.6 (installed as an OpenWrt 23.05.5 package) normally from desktop and mobile app clients. When I connect via the HA integration setup wizard, it always says "Failed to connect" as soon as I enter the correct user/password (if I enter them wrong, it says the authentication is incorrect). If I start netcat on a different port, I can see that the integration sends the POST request correctly. It only fails immediately when everything is set correctly.

The issue is probably the same reported here: https://community.home-assistant.io/t/transmission-integration-failed-to-connect/402640

### What version of Home Assistant Core has the issue?

core-2025.3.1

### What was the last working version of Home Assistant Core?

_No response_

### What type of installation are you running?

Home Assistant Container

### Integration causing the issue

transmission

### Link to integration documentation on our website

https://www.home-assistant.io/integrations/transmission

### Diagnostics information

HA container runs as host network mode. In many other cases I can connect to other local services for different integrations. Both HA and Transmission daemon share the same LAN IP as host.

I think the actual TCP connection does not fail. I'm not sure what else would make the integration setup wizard report the failure.
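One way to narrow this down outside Home Assistant is to call the daemon's RPC endpoint directly from the HA host. Transmission's RPC protocol answers a request without a valid session id with HTTP 409 and an `X-Transmission-Session-Id` header that must be echoed back on the retry; a client that mishandles that step, or a daemon that rejects the request for another reason (for example an `rpc-whitelist` or `rpc-host-whitelist` setting), can look like a connection failure even though TCP works. The sketch below uses only the standard library; the `/transmission/rpc` path is the daemon's default and is an assumption for this setup, and `transmission_session_get` is a hypothetical helper, not part of the integration.

```python
import base64
import json
import urllib.error
import urllib.request


def transmission_session_get(url, username, password, timeout=10):
    """Call the `session-get` RPC method, handling the 409 session-id handshake."""
    body = json.dumps({"method": "session-get"}).encode("utf-8")
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    headers = {"Content-Type": "application/json", "Authorization": f"Basic {token}"}

    for _ in range(2):  # the first attempt may return 409 with a session id
        request = urllib.request.Request(url, data=body, headers=headers, method="POST")
        try:
            with urllib.request.urlopen(request, timeout=timeout) as response:
                return json.loads(response.read().decode("utf-8"))
        except urllib.error.HTTPError as err:
            session_id = err.headers.get("X-Transmission-Session-Id")
            if err.code == 409 and session_id:
                headers["X-Transmission-Session-Id"] = session_id
                continue
            raise  # e.g. 401 (bad credentials) or 403 (whitelist) surface here
    raise RuntimeError("daemon kept answering 409; session-id handshake failed")


# Example with a hypothetical host and credentials:
# print(transmission_session_get("http://192.168.1.2:9091/transmission/rpc", "user", "pass"))
```

If this call succeeds from the HA host while the wizard still fails, the problem is more likely in the integration than in the network path.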

### Example YAML snippet

```yaml

```

### Anything in the logs that might be useful for us?

```txt

I set Transmission log level to 5 but it does not print anything about the failure.

```

### Additional information

_No response_

|

open

|

2025-03-17T21:52:42Z

|

2025-03-17T21:52:48Z

|

https://github.com/home-assistant/core/issues/140830

|

[

"integration: transmission"

] |

nangirl

| 1

|

Kanaries/pygwalker

|

matplotlib

| 12

|

UnicodeDecodeError: 'charmap' codec can't decode byte 0x8d in position 511737: character maps to <undefined>

|

This is an issue from [reddit](https://www.reddit.com/r/datascience/comments/117bptb/comment/j9cb6wn/?utm_source=share&utm_medium=web2x&context=3)

```bash

Traceback (most recent call last):

File "E:\py\test-pygwalker\main.py", line 15, in <module>

gwalker = pyg.walk(df)

File "E:\py\test-pygwalker\venv\lib\site-packages\pygwalker\gwalker.py", line 91, in walk

js = render_gwalker_js(gid, props)

File "E:\py\test-pygwalker\venv\lib\site-packages\pygwalker\gwalker.py", line 65, in render_gwalker_js

js = gwalker_script() + js

File "E:\py\test-pygwalker\venv\lib\site-packages\pygwalker\base.py", line 15, in gwalker_script

gwalker_js = "const exports={};const process={env:{NODE_ENV:\"production\"} };" + f.read()

File "E:\Python\lib\encodings\cp1252.py", line 23, in decode

return codecs.charmap_decode(input,self.errors,decoding_table)[0]

UnicodeDecodeError: 'charmap' codec can't decode byte 0x8d in position 511737: character maps to <undefined>

```

> even loading df = pd.DataFrame(data={'a':[1]}) causes this problem to appear.
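The traceback shows `f.read()` decoding through `encodings\cp1252.py`, the Windows code page Python uses as the default text encoding on many Western-locale systems when `open()` is called without `encoding=`. Byte `0x8d` is one of the few bytes cp1252 leaves undefined, but it commonly appears inside UTF-8 multi-byte sequences (for example, `č` is `c4 8d` in UTF-8). The sketch below reproduces the failure with a temporary file and shows that an explicit `encoding="utf-8"` avoids it; it assumes the bundled JS file is UTF-8 and is not the actual pygwalker patch.

```python
import os
import tempfile


def read_text(path, encoding):
    with open(path, "r", encoding=encoding) as f:
        return f.read()


# Write a small UTF-8 file containing "č" (bytes c4 8d), like a bundled JS asset might.
with tempfile.NamedTemporaryFile("wb", suffix=".js", delete=False) as tmp:
    tmp.write('const label = "č";'.encode("utf-8"))
    path = tmp.name

try:
    read_text(path, "cp1252")  # what an implicit Windows default can amount to
    cp1252_failed = False
except UnicodeDecodeError:
    cp1252_failed = True

utf8_text = read_text(path, "utf-8")  # explicit encoding reads the file correctly
os.remove(path)
print(cp1252_failed, utf8_text)
```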

|

closed

|

2023-02-21T02:24:51Z

|

2023-02-21T03:15:03Z

|

https://github.com/Kanaries/pygwalker/issues/12

|

[

"bug"

] |

ObservedObserver

| 1

|

TencentARC/GFPGAN

|

pytorch

| 368

|

original or clean?

|

Does anyone know the difference between the clean version and the original version? Which version performs better?

|

open

|

2023-04-18T09:43:29Z

|

2023-04-18T09:43:29Z

|

https://github.com/TencentARC/GFPGAN/issues/368

|

[] |

sunjian2015

| 0

|

iterative/dvc

|

data-science

| 9,924

|

remote: migrate: deduplicate objects between v2 and v3

|

My situation is as follows:

a) `dataFolderA` contains `fileA.bin`, `fileB.bin` and `fileC.bin`; I added it with `dvc add dataFolderA` and pushed it to the remote using DVC 2.0.

b) Then I changed `fileB.bin` and added it with `dvc add dataFolderB` to the remote using DVC 3.0.

When inspecting the remote (and cache), I can see the md5-named files for `fileA.bin` and `fileC.bin` in both `files/md5/<xx>/<xyz>` and `<xx>/<xyz>`.

They have exactly the same md5 hash, so the data for `fileA.bin` and `fileC.bin` is now stored twice in the remote (and cache).

(I am simplifying my case; there are many fileA, fileB and fileC files involved.)

How can I clean up the remote? I know there is a `dvc cache migrate` command (I have not tried it yet, though).
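As a rough way to gauge the duplication, the sketch below walks a local cache directory and lists objects that exist in both layouts described above: the 2.x layout (`<xx>/<rest>`) and the 3.x layout (`files/md5/<xx>/<rest>`). It only reads paths and deletes nothing; it assumes exactly those two layouts, and `find_duplicated_objects` is a hypothetical helper, not a DVC command.

```python
from pathlib import Path


def find_duplicated_objects(cache_root):
    """List object paths present in both the 2.x and the 3.x cache layouts.

    Assumes 2.x objects live at <root>/<xx>/<rest> and 3.x objects at
    <root>/files/md5/<xx>/<rest>, as observed in this issue.
    """
    root = Path(cache_root)
    v3_root = root / "files" / "md5"
    duplicates = []
    for prefix_dir in sorted(root.iterdir()):
        # Legacy prefix directories are two hex characters, e.g. "3f";
        # this also skips "files", "tmp", "runs" and similar entries.
        if not prefix_dir.is_dir() or len(prefix_dir.name) != 2:
            continue
        for obj in sorted(prefix_dir.iterdir()):
            if (v3_root / prefix_dir.name / obj.name).is_file():
                duplicates.append(f"{prefix_dir.name}/{obj.name}")
    return duplicates
```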

Kindest regards

|

closed

|

2023-09-07T13:36:16Z

|

2023-10-13T16:06:27Z

|

https://github.com/iterative/dvc/issues/9924

|

[

"feature request"

] |

12michi34

| 7

|

ets-labs/python-dependency-injector

|

flask

| 664

|

Installation via pip warns about deprecated installation method

|

I see this warning when installing the package using pip 22.3.1

```

Running setup.py install for dependency-injector ... done

DEPRECATION: juice-client-db is being installed using the legacy 'setup.py install' method, because it does not have a 'pyproject.toml' and the 'wheel' package is not installed. pip 23.1 will enforce this behaviour change. A possible replacement is to enable the '--use-pep517' option. Discussion can be found at https://github.com/pypa/pip/issues/8559

```

|

closed

|

2023-01-31T17:17:08Z

|

2024-12-10T14:21:23Z

|

https://github.com/ets-labs/python-dependency-injector/issues/664

|

[] |

chbndrhnns

| 1

|

tensorflow/tensor2tensor

|

deep-learning

| 1,083

|

InvalidArgumentError in Transformer model

|

### Description

I am trying to run the `Transformer` model in training mode. I took the [`asr_transformer` notebook](https://github.com/tensorflow/tensor2tensor/blob/v1.9.0/tensor2tensor/notebooks/asr_transformer.ipynb) as an example and built on it.

> **Note:** For `hparams` the input and target modality is just `'default'`.

### The error

The exception information:

```

tensorflow.python.framework.errors_impl.InvalidArgumentError:

In[0].dim(0) and In[1].dim(0) must be the same:

[100,2,1,192] vs [1,2,229,192] [Op:BatchMatMul]

name: transformer/parallel_0/transformer/transformer/body/decoder/layer_0/encdec_attention/multihead_attention/dot_product_attention/MatMul/

```

### Inspection

I debugged the `transformer` model down to where `dot_product_attention` is called ([common_attention.py#L3470](https://github.com/tensorflow/tensor2tensor/blob/v1.9.0/tensor2tensor/layers/common_attention.py#L3470)). From the docs:

```python
"""Dot-product attention.

Args:
  q: Tensor with shape [..., length_q, depth_k].
  k: Tensor with shape [..., length_kv, depth_k]. Leading dimensions must
    match with q.
  v: Tensor with shape [..., length_kv, depth_v] Leading dimensions must
    match with q.
```
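The error message and the docstring say the same thing: `q` and `k` must agree on every leading dimension before the last two. A tiny shape check makes the mismatch in the reported shapes explicit. `check_attention_shapes` is a hypothetical helper, not part of tensor2tensor.

```python
def check_attention_shapes(q_shape, k_shape):
    """Return (axis, q_dim, k_dim) for every axis where q and k disagree.

    Follows the dot_product_attention docstring: q is [..., length_q, depth_k]
    and k is [..., length_kv, depth_k]; all leading dims must match, and the
    last (depth) dims must match for the q @ k^T product.
    """
    if len(q_shape) != len(k_shape):
        raise ValueError("q and k must have the same rank")
    mismatches = [
        (axis, qd, kd)
        for axis, (qd, kd) in enumerate(zip(q_shape[:-2], k_shape[:-2]))
        if qd != kd
    ]
    if q_shape[-1] != k_shape[-1]:
        mismatches.append((len(q_shape) - 1, q_shape[-1], k_shape[-1]))
    return mismatches


# Shapes taken from the InvalidArgumentError above.
print(check_attention_shapes([100, 2, 1, 192], [1, 2, 229, 192]))
```

Only axis 0 disagrees (100 vs 1). In encoder-decoder attention the queries come from the decoder and the keys from the encoder, so this suggests the decoder side and the encoder side see different batch sizes; the manual `tf.reshape` that prepends `(1,)` to `example['inputs']` may be one place worth inspecting.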

### Environment information

```

OS: Linux everest11 4.15.0-34-generic #37~16.04.1-Ubuntu SMP Tue Aug 28 10:44:06 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

$ pip freeze | grep tensor

tensor2tensor==1.9.0

tensorboard==1.10.0

tensorflow==1.10.1

$ python -V

Python 3.5.6 :: Anaconda, Inc.

```

### Steps to reproduce

```python
import os
import logging.config

import tensorflow as tf

from tensor2tensor import problems
from tensor2tensor import models
from tensor2tensor.utils import metrics
from tensor2tensor.utils import registry
from tensor2tensor.utils import trainer_lib

from asr.util import is_debug_mode

Modes = tf.estimator.ModeKeys
tfe = tf.contrib.eager
tfe.enable_eager_execution()

if __name__ == '__main__':
    problem_name = 'librispeech_clean_small'
    input_dir = os.path.join('datasets', 'input', 'problems', problem_name)  # 'input/ende_wmt_bpe32k'
    data_dir = os.path.join(input_dir, 'data')
    tmp_dir = os.path.join(input_dir, 'tmp')
    tf.gfile.MakeDirs(data_dir)
    tf.gfile.MakeDirs(tmp_dir)

    problem = problems.problem(problem_name)
    problem.generate_data(data_dir, tmp_dir)
    encoders = problem.feature_encoders(None)

    model_name = "transformer"
    hparams_set = "transformer_librispeech_tpu"
    hparams = trainer_lib.create_hparams(hparams_set, data_dir=data_dir, problem_name=problem_name)

    model_class = registry.model(model_name)
    model = model_class(hparams=hparams, mode=Modes.TRAIN)

    # In Eager mode, opt.minimize must be passed a loss function wrapped with
    # implicit_value_and_gradients
    @tfe.implicit_value_and_gradients
    def loss_fn(features):
        _, losses = model(features)
        return losses["training"]

    # Setup the training data
    train_data = problem.dataset(Modes.TRAIN, data_dir)
    optimizer = tf.train.AdamOptimizer()

    # Train
    NUM_STEPS = 100
    for count, example in enumerate(tfe.Iterator(train_data)):
        example['inputs'] = tf.reshape(example['inputs'], (1,) + tuple([d.value for d in example['inputs'].shape]))
        loss, gv = loss_fn(example)
        optimizer.apply_gradients(gv)
```

|

closed

|

2018-09-20T10:45:00Z

|

2018-09-20T12:20:46Z

|

https://github.com/tensorflow/tensor2tensor/issues/1083

|

[] |

stefan-falk

| 1

|

gee-community/geemap

|

streamlit

| 2,022

|

geemap module: extract_values_to_points(filepath) function export bugs

|


### Environment Information

Python version 3.12

geemap version 0.32.1

### Description

I added the Google Earth Engine Landsat data to Geemap, manually plotted some random points of interest on the map interface, and extracted the CSV file for future reference.

The output CSV file has the latitude and longitude mixed up (it seems that the actual longitude values are in the latitude column and the actual latitude values are in the longitude column).

Point I plot:

Output CSV:

### What I Did

```python

import geemap

import ee

Map = geemap.Map(center=[40, -100], zoom=4)

landsat7 = ee.Image('LANDSAT/LE7_TOA_5YEAR/1999_2003').select(

['B1', 'B2', 'B3', 'B4', 'B5', 'B7']

)

landsat_vis = {'bands': ['B3', 'B2', 'B1'], 'gamma': 1.4}

Map.addLayer(landsat7, landsat_vis, "Landsat")

Map.set_plot_options(add_marker_cluster=True, overlay=True)

Map.extract_values_to_points('samples.csv')

```
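Since the map is centered on `[40, -100]`, a longitude of about -100 ending up in a latitude column is easy to detect, because valid latitudes lie in [-90, 90]. The sketch below flags a CSV whose latitude column holds out-of-range values and swaps the two columns as a stopgap. `fix_swapped_coordinates` is a hypothetical helper, and the column names `latitude` and `longitude` are assumptions; check the header that `extract_values_to_points` actually writes.

```python
import csv
import io


def fix_swapped_coordinates(csv_text, lat_col="latitude", lon_col="longitude"):
    """Swap lat/lon columns if any latitude value is outside [-90, 90].

    Returns (fixed_csv_text, swapped_flag). Column names are assumptions.
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return csv_text, False
    swapped = any(abs(float(row[lat_col])) > 90 for row in rows)
    if swapped:
        for row in rows:
            row[lat_col], row[lon_col] = row[lon_col], row[lat_col]
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue(), swapped
```

The heuristic only works when at least one longitude falls outside [-90, 90], which holds for points over the continental US but not near the prime meridian.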

|

closed

|

2024-05-27T22:06:21Z

|

2024-05-27T22:22:12Z

|

https://github.com/gee-community/geemap/issues/2022

|

[

"bug"

] |

zyang91

| 1

|

keras-team/keras

|

deep-learning

| 20,081

|

Loading up Json_files built and trained in Keras 2 for Keras 3

|

Using Keras 3, I am trying to load up a built and trained model from Keras 2 API that is stored in .json with weights stored in .h5. The model file is the following: [cnn_model.json](https://github.com/user-attachments/files/16462021/cnn_model.json). Since model_from_json does not exist in Keras 3, I rewrote the function from the Keras 2 API so that I can load the .json file. With Keras 3 (with torch backend), I am trying to load the model and the weights with the following code

```python
import os

# The backend must be selected before keras is imported.
os.environ["KERAS_BACKEND"] = "torch"

import json

import keras
from keras.saving import deserialize_keras_object


def model_from_json(json_string, custom_objects=None):
    """Parses a JSON model configuration string and returns a model instance.

    Args:
        json_string: JSON string encoding a model configuration.
        custom_objects: Optional dictionary mapping names
            (strings) to custom classes or functions to be
            considered during deserialization.

    Returns:
        A Keras model instance (uncompiled).
    """
    model_config = json.loads(json_string)
    return deserialize_keras_object(model_config, custom_objects=custom_objects)


def model_torch():
    model_name = 'cnn_model'  # model file name
    model_file = model_name + '.json'
    with open(model_file, 'r') as json_file:
        print('USING MODEL:' + model_file)
        loaded_model_json = json_file.read()
    loaded_model = model_from_json(loaded_model_json)
    loaded_model.load_weights(model_name + '.h5')
    loaded_model.compile('sgd', 'mse')


if __name__ == "__main__":
    model_torch()
```

However, when I run this code, I get the error shown below. This leaves me with three questions:

1. How can this error be fixed, given that the model I want to load in Keras 3 was built and trained in tensorflow-keras 2?

2. Is it better to rebuild the model in Keras 3 and load it with the `load_model()` function, and if so, how can the weights in the `.h5` file created with tensorflow-keras 2 be transferred to Keras 3?

3. To rebuild it, how should one translate the JSON dictionary into actual code?

Error I obtain:

`

TypeError: Could not locate class 'Sequential'. Make sure custom classes are decorated with `@keras.saving.register_keras_serializable()`. Full object config: {'class_name': 'Sequential', 'config': {'name': 'sequential', 'layers': [{'class_name': 'Conv2D', 'config': {'name': 'conv2d_20', 'trainable': True, 'batch_input_shape': [None, 50, 50, 1], 'dtype': 'float32', 'filters': 32, 'kernel_size': [3, 3], 'strides': [1, 1], 'padding': 'valid', 'data_format': 'channels_last', 'dilation_rate': [1, 1], 'activation': 'relu', 'use_bias': True, 'kernel_initializer': {'class_name': 'VarianceScaling', 'config': {'scale': 1.0, 'mode': 'fan_avg', 'distribution': 'uniform', 'seed': None, 'dtype': 'float32'}}, 'bias_initializer': {'class_name': 'Zeros', 'config': {'dtype': 'float32'}}, 'kernel_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}}, {'class_name': 'Activation', 'config': {'name': 'activation_13', 'trainable': True, 'dtype': 'float32', 'activation': 'relu'}}, {'class_name': 'Conv2D', 'config': {'name': 'conv2d_21', 'trainable': True, 'dtype': 'float32', 'filters': 32, 'kernel_size': [3, 3], 'strides': [1, 1], 'padding': 'valid', 'data_format': 'channels_last', 'dilation_rate': [1, 1], 'activation': 'linear', 'use_bias': True, 'kernel_initializer': {'class_name': 'VarianceScaling', 'config': {'scale': 1.0, 'mode': 'fan_avg', 'distribution': 'uniform', 'seed': None, 'dtype': 'float32'}}, 'bias_initializer': {'class_name': 'Zeros', 'config': {'dtype': 'float32'}}, 'kernel_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}}, {'class_name': 'Activation', 'config': {'name': 'activation_14', 'trainable': True, 'dtype': 'float32', 'activation': 'relu'}}, {'class_name': 'MaxPooling2D', 'config': {'name': 'max_pooling2d_10', 'trainable': True, 'dtype': 'float32', 'pool_size': [2, 2], 'padding': 'valid', 'strides': [2, 2], 
'data_format': 'channels_last'}}, {'class_name': 'Dropout', 'config': {'name': 'dropout_17', 'trainable': True, 'dtype': 'float32', 'rate': 0.25, 'noise_shape': None, 'seed': None}}, {'class_name': 'Conv2D', 'config': {'name': 'conv2d_22', 'trainable': True, 'dtype': 'float32', 'filters': 64, 'kernel_size': [3, 3], 'strides': [1, 1], 'padding': 'same', 'data_format': 'channels_last', 'dilation_rate': [1, 1], 'activation': 'linear', 'use_bias': True, 'kernel_initializer': {'class_name': 'VarianceScaling', 'config': {'scale': 1.0, 'mode': 'fan_avg', 'distribution': 'uniform', 'seed': None, 'dtype': 'float32'}}, 'bias_initializer': {'class_name': 'Zeros', 'config': {'dtype': 'float32'}}, 'kernel_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}}, {'class_name': 'Activation', 'config': {'name': 'activation_15', 'trainable': True, 'dtype': 'float32', 'activation': 'relu'}}, {'class_name': 'Conv2D', 'config': {'name': 'conv2d_23', 'trainable': True, 'dtype': 'float32', 'filters': 64, 'kernel_size': [3, 3], 'strides': [1, 1], 'padding': 'valid', 'data_format': 'channels_last', 'dilation_rate': [1, 1], 'activation': 'linear', 'use_bias': True, 'kernel_initializer': {'class_name': 'VarianceScaling', 'config': {'scale': 1.0, 'mode': 'fan_avg', 'distribution': 'uniform', 'seed': None, 'dtype': 'float32'}}, 'bias_initializer': {'class_name': 'Zeros', 'config': {'dtype': 'float32'}}, 'kernel_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}}, {'class_name': 'Activation', 'config': {'name': 'activation_16', 'trainable': True, 'dtype': 'float32', 'activation': 'relu'}}, {'class_name': 'MaxPooling2D', 'config': {'name': 'max_pooling2d_11', 'trainable': True, 'dtype': 'float32', 'pool_size': [2, 2], 'padding': 'valid', 'strides': [2, 2], 'data_format': 'channels_last'}}, {'class_name': 'Dropout', 'config': {'name': 'dropout_18', 
'trainable': True, 'dtype': 'float32', 'rate': 0.25, 'noise_shape': None, 'seed': None}}, {'class_name': 'Flatten', 'config': {'name': 'flatten_8', 'trainable': True, 'dtype': 'float32', 'data_format': 'channels_last'}}, {'class_name': 'Dense', 'config': {'name': 'dense_15', 'trainable': True, 'dtype': 'float32', 'units': 512, 'activation': 'linear', 'use_bias': True, 'kernel_initializer': {'class_name': 'VarianceScaling', 'config': {'scale': 1.0, 'mode': 'fan_avg', 'distribution': 'uniform', 'seed': None, 'dtype': 'float32'}}, 'bias_initializer': {'class_name': 'Zeros', 'config': {'dtype': 'float32'}}, 'kernel_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}}, {'class_name': 'Activation', 'config': {'name': 'activation_17', 'trainable': True, 'dtype': 'float32', 'activation': 'relu'}}, {'class_name': 'Dropout', 'config': {'name': 'dropout_19', 'trainable': True, 'dtype': 'float32', 'rate': 0.5, 'noise_shape': None, 'seed': None}}, {'class_name': 'Dense', 'config': {'name': 'dense_16', 'trainable': True, 'dtype': 'float32', 'units': 2, 'activation': 'linear', 'use_bias': True, 'kernel_initializer': {'class_name': 'VarianceScaling', 'config': {'scale': 1.0, 'mode': 'fan_avg', 'distribution': 'uniform', 'seed': None, 'dtype': 'float32'}}, 'bias_initializer': {'class_name': 'Zeros', 'config': {'dtype': 'float32'}}, 'kernel_regularizer': None, 'bias_regularizer': None, 'activity_regularizer': None, 'kernel_constraint': None, 'bias_constraint': None}}, {'class_name': 'Activation', 'config': {'name': 'activation_18', 'trainable': True, 'dtype': 'float32', 'activation': 'softmax'}}]}, 'keras_version': '2.2.4-tf', 'backend': 'tensorflow'}

`

|

closed

|

2024-08-01T21:36:25Z

|

2024-09-07T19:33:52Z

|

https://github.com/keras-team/keras/issues/20081

|

[

"stat:awaiting response from contributor",

"stale",

"type:Bug"

] |

manuelpaeza

| 7

|

vastsa/FileCodeBox

|

fastapi

| 178

|

Files are still saved locally by default after configuring S3 storage, and the #96 problem has reappeared

|

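① As the title says. My environment is Docker.

I believe my settings are correct, and the backend log shows no errors.

② Also, the #96 problem has reappeared. After changing things according to the log it works, but, as mentioned above, files can only be stored locally. The log is as follows: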

```

ERROR: Exception in ASGI application

Traceback (most recent call last):

File "/usr/local/lib/python3.9/site-packages/uvicorn/protocols/http/h11_impl.py", line 408, in run_asgi

result = await app( # type: ignore[func-returns-value]

File "/usr/local/lib/python3.9/site-packages/uvicorn/middleware/proxy_headers.py", line 84, in __call__

return await self.app(scope, receive, send)

File "/usr/local/lib/python3.9/site-packages/fastapi/applications.py", line 289, in __call__

await super().__call__(scope, receive, send)

File "/usr/local/lib/python3.9/site-packages/starlette/applications.py", line 122, in __call__

await self.middleware_stack(scope, receive, send)

File "/usr/local/lib/python3.9/site-packages/starlette/middleware/errors.py", line 184, in __call__

raise exc

File "/usr/local/lib/python3.9/site-packages/starlette/middleware/errors.py", line 162, in __call__

await self.app(scope, receive, _send)

File "/usr/local/lib/python3.9/site-packages/starlette/middleware/cors.py", line 91, in __call__

await self.simple_response(scope, receive, send, request_headers=headers)

File "/usr/local/lib/python3.9/site-packages/starlette/middleware/cors.py", line 146, in simple_response

await self.app(scope, receive, send)

File "/usr/local/lib/python3.9/site-packages/starlette/middleware/exceptions.py", line 79, in __call__

raise exc

File "/usr/local/lib/python3.9/site-packages/starlette/middleware/exceptions.py", line 68, in __call__

await self.app(scope, receive, sender)

File "/usr/local/lib/python3.9/site-packages/fastapi/middleware/asyncexitstack.py", line 20, in __call__

raise e

File "/usr/local/lib/python3.9/site-packages/fastapi/middleware/asyncexitstack.py", line 17, in __call__

await self.app(scope, receive, send)

File "/usr/local/lib/python3.9/site-packages/starlette/routing.py", line 718, in __call__

await route.handle(scope, receive, send)

File "/usr/local/lib/python3.9/site-packages/starlette/routing.py", line 276, in handle

await self.app(scope, receive, send)

File "/usr/local/lib/python3.9/site-packages/starlette/routing.py", line 66, in app

response = await func(request)

File "/usr/local/lib/python3.9/site-packages/fastapi/routing.py", line 273, in app

raw_response = await run_endpoint_function(

File "/usr/local/lib/python3.9/site-packages/fastapi/routing.py", line 190, in run_endpoint_function

return await dependant.call(**values)

File "/app/apps/base/views.py", line 41, in share_file

if file.size > settings.uploadSize:

TypeError: '>' not supported between instances of 'int' and 'str'

```
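The last frame compares an `int` (`file.size`) with a `str` (`settings.uploadSize`), which suggests the upload limit is stored as text, for example after being read from a form or an environment variable. A minimal sketch of coercing the setting before comparing; `parse_size_limit` and `exceeds_limit` are hypothetical helpers, not FileCodeBox code.

```python
def parse_size_limit(value):
    """Coerce an upload-size setting (int or numeric string) to an int number of bytes."""
    if isinstance(value, bool):
        raise TypeError("upload size must be a number, not a bool")
    if isinstance(value, int):
        return value
    if isinstance(value, str) and value.strip().isdecimal():
        return int(value.strip())
    raise ValueError(f"invalid upload size setting: {value!r}")


def exceeds_limit(file_size, upload_size_setting):
    # `int > str` raises TypeError in Python 3, so convert the setting first.
    return file_size > parse_size_limit(upload_size_setting)
```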

Could the author please fix this? Thanks!

|

closed

|

2024-06-18T04:10:20Z

|

2024-06-18T17:24:01Z

|

https://github.com/vastsa/FileCodeBox/issues/178

|

[] |

ChanLicher

| 5

|

clovaai/donut

|

nlp

| 74

|

prediction results

|

The number of predictions I am getting in the inference is limited to 16, while it should be much more than that.

Is there a certain parameter that I need to modify in order to increase this number?

|

closed

|

2022-10-20T12:17:20Z

|

2022-11-01T11:06:54Z

|

https://github.com/clovaai/donut/issues/74

|

[] |

josianem

| 0

|

microsoft/JARVIS

|

pytorch

| 183

|

importError

|

python models_server.py --config configs/config.default.yaml

Traceback (most recent call last):

File "/home/ml/docker/projects/JARVIS/server/models_server.py", line 29, in <module>

from controlnet_aux import OpenposeDetector, MLSDdetector, HEDdetector, CannyDetector, MidasDetector

ImportError: cannot import name 'CannyDetector' from 'controlnet_aux' (/home/ml/miniconda3/lib/python3.10/site-packages/controlnet_aux/__init__.py)

Any debugging suggestions?
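A quick first check is whether the installed `controlnet_aux` version exports the names `models_server.py` imports at all, since names can be added, renamed or removed between package releases. The helpers below are a generic, hypothetical sketch using only `importlib`; they are demonstrated on the standard library so they run anywhere, and the distribution name `controlnet-aux` in the comment is an assumption.

```python
import importlib
from importlib import metadata


def missing_names(module_name, names):
    """Return the subset of `names` that `module_name` does not define."""
    module = importlib.import_module(module_name)
    return [name for name in names if not hasattr(module, name)]


def installed_version(dist_name):
    """Return the installed distribution version, or None if it is not installed."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


# For this issue one would run, for example:
#   missing_names("controlnet_aux", ["OpenposeDetector", "MLSDdetector",
#                 "HEDdetector", "CannyDetector", "MidasDetector"])
#   installed_version("controlnet-aux")
print(missing_names("json", ["loads", "dumps", "NotAThing"]))
```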

|

closed

|

2023-04-24T06:42:37Z

|

2024-08-26T03:28:59Z

|

https://github.com/microsoft/JARVIS/issues/183

|

[] |

birchmi

| 2

|

mljar/mercury

|

jupyter

| 84

|

Sort apps in the mercury gallery

|

Hi

Is there a way to sort apps in the gallery by notebook title, date updated, or any other given condition? Please look at the attached image. It shows the ML notebooks of a course, which has several apps. I want to sort the apps by title so that the notebooks appear in order and users can go through them easily. This is the [link](https://mlnotebooks.herokuapp.com/) to the website whose apps I want to sort.

|

closed

|

2022-04-15T10:12:09Z

|

2023-02-20T08:37:12Z

|

https://github.com/mljar/mercury/issues/84

|

[] |

rajeshai

| 1

|

d2l-ai/d2l-en

|

pytorch

| 2,032

|

Contribution Steps

|

Without guidance on best practices for revising and contributing, contributing to this project is tedious. Could you update the contributing guidelines?

|

closed

|

2022-02-01T07:13:18Z

|

2022-03-21T22:16:50Z

|

https://github.com/d2l-ai/d2l-en/issues/2032

|

[] |

callmekofi

| 1

|

idealo/imagededup

|

computer-vision

| 161

|

Precision, recall is not right

|

The precision and recall scores computed by the `evaluate` function were not what I expected, so I wrote my own function, which gave different results. I double-checked with sklearn, and it returned the same results as my own function. Could you recheck the `evaluate` function?
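When two implementations disagree, the usual culprit is what is being counted. The sketch below computes precision and recall over unordered duplicate pairs, assuming both the ground truth and the retrieved result are dicts mapping each filename to a list of its duplicates. This pair-level definition is one reasonable choice and may differ from how imagededup's `evaluate` aggregates results; `to_pairs` and `pair_precision_recall` are hypothetical helpers.

```python
def to_pairs(mapping):
    """Convert {file: [duplicates]} into a set of unordered pairs."""
    return {
        frozenset((name, dup))
        for name, dups in mapping.items()
        for dup in dups
        if name != dup
    }


def pair_precision_recall(ground_truth, retrieved):
    true_pairs = to_pairs(ground_truth)
    found_pairs = to_pairs(retrieved)
    hits = len(true_pairs & found_pairs)
    # Convention (as in sklearn's default): 0.0 when the denominator is empty.
    precision = hits / len(found_pairs) if found_pairs else 0.0
    recall = hits / len(true_pairs) if true_pairs else 0.0
    return precision, recall


ground_truth = {"a.jpg": ["b.jpg"], "b.jpg": ["a.jpg"], "c.jpg": []}
retrieved = {"a.jpg": ["b.jpg", "c.jpg"], "b.jpg": ["a.jpg"], "c.jpg": ["a.jpg"]}
print(pair_precision_recall(ground_truth, retrieved))  # (0.5, 1.0)
```

Here the one true pair (a, b) is found, plus one false pair (a, c), so precision is 1/2 and recall is 1/1. Counting per file instead of per pair would give different numbers on the same data.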

|

closed

|

2021-11-11T11:12:33Z

|

2021-11-11T13:06:02Z

|

https://github.com/idealo/imagededup/issues/161

|

[] |

yosajka

| 1

|

Avaiga/taipy

|

data-visualization

| 2,236

|

[🐛 BUG] Taipy Studio does not recognize some properties

|

### What went wrong? 🤔

With Taipy 4.0.0 and Taipy Studio 2.0.0, Taipy Studio does not recognize some valid properties, such as `mode` for `text` or `content` for `part`:

```python

page = """

<|{map_title}|text|mode=md|>

<|part|content={folium_map()}|height=600px|>

"""

```

### OS

Windows

### Version of Taipy

4.0.0

### Acceptance Criteria

- [ ] A unit test reproducing the bug is added.

- [ ] Any new code is covered by a unit test.

- [ ] Check code coverage is at least 90%.

- [ ] The bug reporter validated the fix.

- [ ] Related issue(s) in taipy-doc are created for documentation and Release Notes are updated.

### Code of Conduct

- [X] I have checked the [existing issues](https://github.com/Avaiga/taipy/issues?q=is%3Aissue+).

- [ ] I am willing to work on this issue (optional)

|

open

|

2024-11-12T12:54:06Z

|

2025-03-21T13:43:00Z

|

https://github.com/Avaiga/taipy/issues/2236

|

[

"💥Malfunction",

"🟧 Priority: High",

"🔒 Staff only",

"👩💻Studio"

] |

AlexandreSajus

| 0

|

plotly/dash-cytoscape

|

plotly

| 6

|

Can't Change Layout to Preset

|

|

closed

|

2018-08-16T15:54:32Z

|

2018-09-27T17:46:10Z

|

https://github.com/plotly/dash-cytoscape/issues/6

|

[

"react"

] |

xhluca

| 1

|

noirbizarre/flask-restplus

|

api

| 773

|

Parse argument as a list

|

I have the following model and parser:

```python
component = api.model('Component', {
    'location': fields.String,
    'amount': fields.Integer,
})

...

parser = api.parser()
parser.add_argument('presented_argument', type=[component])
args = parser.parse_args()
```

I get an error if I do it this way: "'list' object is not callable"

What is the correct way to parse a list?
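The error message points at the cause: reqparse calls `type` on the incoming value, so `type` must be a callable, and a list literal such as `[component]` is not one. A minimal sketch of a callable item validator follows; `component` and `component_list` are hypothetical names. Passing `type=component` together with `action='append'` and `location='json'` is the usual reqparse pattern for a JSON array of objects, but treat that combination as an assumption to check against your flask-restplus version.

```python
from typing import Any, Dict, List


def component(value: Any) -> Dict[str, Any]:
    """Validate one {'location': str, 'amount': int} item, raising ValueError otherwise."""
    if not isinstance(value, dict):
        raise ValueError("component must be a JSON object")
    location = value.get("location")
    amount = value.get("amount")
    if not isinstance(location, str):
        raise ValueError("'location' must be a string")
    # bool is a subclass of int, so reject it explicitly
    if not isinstance(amount, int) or isinstance(amount, bool):
        raise ValueError("'amount' must be an integer")
    return {"location": location, "amount": amount}


def component_list(value: Any) -> List[Dict[str, Any]]:
    """Validate a whole JSON array of components in one call."""
    if not isinstance(value, list):
        raise ValueError("expected a JSON array of components")
    return [component(item) for item in value]
```

Usage might look like `parser.add_argument('presented_argument', type=component, action='append', location='json')`, where each array element is passed to `component` separately.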

|

closed

|

2020-01-22T16:46:35Z

|

2020-01-27T09:54:20Z

|

https://github.com/noirbizarre/flask-restplus/issues/773

|

[] |

glebmikulko

| 2

|

pydantic/pydantic-ai

|

pydantic

| 93

|

Examples builder

|

We plan to add an examples builder which would take a sequence of things (e.g. pydantic models, dataclasses, dicts etc.) and serialize them.

Usage would be something like:

```py
from pydantic_ai import format_examples

@agent.system_prompt
def foobar():
    return f"the examples are:\n{format_examples(examples, dialect='xml')}"
```

The suggestion is that LLMs find XML particularly easy to read, so we'll offer XML (among other formats) as a way to format the examples.

By default, should it use

```py

"""

<example>

<input>

show me values greater than 5

</input>

<sql>

SELECT * FROM table WHERE value > 5

</sql>

</example>

...

"""

```

or

```py

"""

<example input="show me values greater than 5" sql="SELECT * FROM table WHERE value > 5" />

...

"""

```

?
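For comparison, both candidate layouts can be produced with the standard library. This is a sketch only: `format_examples_nested` and `format_examples_attrs` are hypothetical names (the `format_examples` in this issue is a proposal, not an existing API), and examples are assumed to be dicts, dataclass instances, or pydantic v2 models. Note that a conforming XML serializer escapes `>` as `&gt;` in both layouts, and newlines inside attribute values get escaped too, which favors the nested layout for multi-line values.

```python
import xml.etree.ElementTree as ET
from dataclasses import asdict, is_dataclass
from typing import Any, Dict, Iterable


def _as_dict(example: Any) -> Dict[str, Any]:
    """Normalize a dataclass, pydantic v2 model, or mapping to a plain dict."""
    if is_dataclass(example) and not isinstance(example, type):
        return asdict(example)
    if hasattr(example, "model_dump"):  # pydantic v2 models
        return example.model_dump()
    return dict(example)


def format_examples_nested(examples: Iterable[Any]) -> str:
    """One <example> element per item, one child element per field."""
    parts = []
    for ex in examples:
        el = ET.Element("example")
        for key, value in _as_dict(ex).items():  # keys must be valid XML names
            ET.SubElement(el, key).text = str(value)
        parts.append(ET.tostring(el, encoding="unicode"))
    return "\n".join(parts)


def format_examples_attrs(examples: Iterable[Any]) -> str:
    """One self-closing <example /> element per item, one attribute per field."""
    parts = []
    for ex in examples:
        attrs = {key: str(value) for key, value in _as_dict(ex).items()}
        parts.append(ET.tostring(ET.Element("example", attrs), encoding="unicode"))
    return "\n".join(parts)
```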

|

closed

|

2024-11-25T19:36:36Z

|

2025-01-02T23:00:33Z

|

https://github.com/pydantic/pydantic-ai/issues/93

|

[

"Feature request"

] |

samuelcolvin

| 4

|

albumentations-team/albumentations

|

deep-learning

| 1,508

|

Label additional targets keypoints.

|

## 🐛 Bug

I want to augment an image, a main set of keypoints ('stems') and a varying amount of additional sets of keypoints ('row_center_lines') on it and labels for these keypoints ('row_center_line_classes').

It appears to be working as intended when using [A.ReplayCompose](https://albumentations.ai/docs/examples/replay/) (compare code below).

However, every time I apply the replay, the original image has to be augmented again as well, leading to unnecessary memory use, right? Therefore I thought it would make sense to use `additional_targets` instead. This does indeed work when transforming keypoints only, but after some research and experimenting I haven't been able to get it to work for labels as well. At best it throws a `len(data) needs to be len(labels)` error. Is there a way to label both additional-target keypoints and standard keypoints and transform them, or am I better off sticking with replay for now?

I want to augment the labels as well because the order of the keypoints appears to be swapped in the case of flips or similar augmentations.

## To Reproduce

Steps to reproduce the behavior:

1. Add keypoints additional_targets to A.Compose.

2. Try to somehow add labels for these additional keypoints.

3. Apply the transform created by A.Compose, entering the additional keypoints and somehow the labels.

4. Be disappointed.

Working replay code:

```python
def aug_fn(img, stems, stem_classes, row_center_lines, row_center_line_classes, no_augment):
    """This function takes the input image and stems and returns the augmented image

    :img: input image
    :stems: numpy array of stem positions
    :stem_classes: list of stem classes
    :row_center_lines: numpy array of row center lines
    :row_center_line_classes: list of row center line classes
    """
    # could use tf.numpy_function instead, but that's discouraged
    img = img.numpy()
    stems = stems.numpy()
    stem_classes = stem_classes.numpy()
    # don't remove out of bounds keypoints and their classes
    remove_invisible = False
    label_fields = ['stem_classes']
    # only flip and crop to target size in some cases
    transforms_list = self.limited_transforms_list if no_augment else self.all_transforms_list
    transforms = A.ReplayCompose(
        transforms=transforms_list,
        keypoint_params=A.KeypointParams(format='xy', remove_invisible=remove_invisible,
                                         label_fields=label_fields,
                                         ))  # can add more labels here, but also need to add when using replay
    # Augment the image and stems
    aug_data = transforms(image=img,
                          keypoints=stems,
                          stem_classes=stem_classes,
                          )
    # for testing purposes (only if determinism=true)
    # self.aug_data_list = aug_data['replay']
    stems = tf.convert_to_tensor(aug_data['keypoints'], dtype=tf.float32)
    stem_classes = tf.convert_to_tensor(aug_data['stem_classes'], dtype=tf.string)
    if len(row_center_lines) > 0:
        # augment each row_center_line by replay
        row_center_lines = row_center_lines.numpy().astype(np.float32)
        row_center_line_classes = row_center_line_classes.numpy().astype(str)
        for i, row_center_line in enumerate(row_center_lines):
            # image is augmented again, could enter empty np.empty() if faster
            replay_row_center_lines = A.ReplayCompose.replay(aug_data['replay'],
                                                             image=img,
                                                             keypoints=row_center_line,
                                                             # one label for each of the two row_center_line keypoints
                                                             stem_classes=(row_center_line_classes[i], row_center_line_classes[i]))
            # both classes are the same, so [0] or [1] is fine
            row_center_lines[i] = replay_row_center_lines['keypoints']
            row_center_line_classes[i] = replay_row_center_lines['stem_classes'][0]
    img = aug_data["image"]
    return img, stems, stem_classes, row_center_lines, row_center_line_classes

# 3. Crop and apply augmentations to images and stems (randomcrop only in validation mode)
# tf.numpy_function converts the input tensors to numpy arrays automatically, which are needed for augmentation
img, stem_pos, stem_classes, row_center_lines, row_center_line_classes = tf.py_function(
    func=aug_fn,
    inp=[img, stem_pos, stem_classes, row_center_lines, row_center_line_classes, no_augment],
    Tout=[tf.uint8, tf.float32, tf.string, tf.float32, tf.string],
    name="aug_fn")
```

Faulty `additional_targets` code:

```python
def aug_fn(img, stems, stem_classes, row_center_lines, row_center_line_classes, no_augment):
    """This function takes the input image and stems and returns the augmented image

    :img: input image
    :stems: stem positions
    :stem_classes: stem classes
    :row_center_lines: row center lines
    :row_center_line_classes: row center line classes
    """
    # could use tf.numpy_function instead, but that's discouraged
    img = img.numpy()
    stems = stems.numpy()
    stem_classes = stem_classes.numpy()
    # don't remove out of bounds keypoints and their classes
    remove_invisible = False
    label_fields = [] if len(stems) == 0 else ['stem_classes']
    additional_targets = {}
    additional_target_args = {}
    if len(row_center_lines) > 0:
        row_center_lines = row_center_lines.numpy().astype(np.float32)
        row_center_line_classes = row_center_line_classes.numpy().astype(str)
        for i, row_center_line in enumerate(row_center_lines):
            additional_targets[f'row_center_line_{i}'] = 'keypoints'
            additional_target_args[f'row_center_line_{i}'] = row_center_line
            # additional_targets[f'row_center_line_class_{i}'] = 'classification'
            # additional_target_args[f'row_center_line_class_{i}'] = row_center_line_classes[i]
    # only flip and crop to target size in some cases
    transforms_list = self.limited_transforms_list if no_augment else self.all_transforms_list
    transforms = A.Compose(
        transforms=transforms_list,
        additional_targets=additional_targets,
        keypoint_params=A.KeypointParams(format='xy', remove_invisible=remove_invisible,
                                         label_fields=label_fields,
                                         ))
    # Augment the image and stems
    aug_data = transforms(image=img,
                          keypoints=stems,
                          stem_classes=stem_classes,
                          **additional_target_args
                          )
    for asc, osc in zip(stem_classes, aug_data['stem_classes']):
        print(f'asc: {asc}')
        print(f'osc: {osc}')
        if asc != osc:
            print('wooops')
    img = aug_data["image"]
    stems = tf.convert_to_tensor(aug_data['keypoints'], dtype=tf.float32)
    stem_classes = tf.convert_to_tensor(aug_data['stem_classes'], dtype=tf.string)
    row_center_line_classes_copy = row_center_line_classes.copy()
    if len(row_center_lines) > 0:
        for j, row_center_line in enumerate(row_center_lines):
            row_center_lines[j] = aug_data[f'row_center_line_{j}']
            # row_center_line_classes[j] = aug_data[f'row_center_line_class_{j}']
    return img, stems, stem_classes, row_center_lines, row_center_line_classes

img, stem_pos, stem_classes, row_center_lines, row_center_line_classes = tf.py_function(
    func=aug_fn,
    inp=[img, stem_pos, stem_classes, row_center_lines, row_center_line_classes, no_augment],
    Tout=[tf.uint8, tf.float32, tf.string, tf.float32, tf.string],
    name="aug_fn")
```

## Expected behavior

The labels of all keypoints should be transformed together with the keypoints themselves.

Thank you very much for having a look!

## Environment

- Albumentations version (e.g., 0.1.8): 1.3.1

- Python version (e.g., 3.7): 3.8.10

- OS (e.g., Linux): Linux

- How you installed albumentations (`conda`, `pip`, source): pip install albumentations

- Any other relevant information:

## Additional context

Notes: 'row_center_lines' are pairs of two keypoints forming a line, so a "line transformation" would also work, if something like that existed. Maybe bounding box augmentation could be used for it?
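One workaround that needs neither replay nor `additional_targets` is to put every point (stems and both endpoints of each row center line) into the single `keypoints` target and carry a class label and a group id per point through `label_fields`, which albumentations keeps aligned with each keypoint. This is a sketch under assumptions: the helper names are hypothetical, the `A.Compose` / `A.KeypointParams(label_fields=...)` usage reflects the albumentations 1.x API as I understand it, and it has not been checked against 1.3.1 specifically. With `remove_invisible=False` no point is dropped, so every line keeps both endpoints; with `True`, a line could lose one.

```python
from typing import Any, List, Optional, Tuple

Point = Tuple[float, float]


def pack_keypoints(stems, stem_classes, lines, line_classes):
    """Flatten stems and row center lines into one keypoint list.

    Each keypoint gets a class label and a group id: -1 for a stem,
    i for either endpoint of row center line i.
    """
    keypoints: List[Point] = []
    labels: List[Any] = []
    groups: List[int] = []
    for pt, cls in zip(stems, stem_classes):
        keypoints.append((float(pt[0]), float(pt[1])))
        labels.append(cls)
        groups.append(-1)
    for i, (line, cls) in enumerate(zip(lines, line_classes)):
        for pt in line:  # each line is two (x, y) endpoints
            keypoints.append((float(pt[0]), float(pt[1])))
            labels.append(cls)
            groups.append(i)
    return keypoints, labels, groups


def unpack_keypoints(keypoints, labels, groups, n_lines):
    """Inverse of pack_keypoints, applied to the augmented output."""
    stems: List[Point] = []
    stem_classes: List[Any] = []
    lines: List[List[Point]] = [[] for _ in range(n_lines)]
    line_classes: List[Optional[Any]] = [None] * n_lines
    for pt, cls, group in zip(keypoints, labels, groups):
        xy = (float(pt[0]), float(pt[1]))
        if group == -1:
            stems.append(xy)
            stem_classes.append(cls)
        else:
            lines[group].append(xy)
            line_classes[group] = cls
    return stems, stem_classes, lines, line_classes


def augment(image, stems, stem_classes, lines, line_classes, transforms_list):
    """One Compose call for the image and every keypoint set."""
    import albumentations as A  # imported here so the helpers above work without it

    keypoints, labels, groups = pack_keypoints(stems, stem_classes, lines, line_classes)
    transform = A.Compose(
        transforms_list,
        keypoint_params=A.KeypointParams(
            format="xy",
            label_fields=["kp_labels", "kp_groups"],
            remove_invisible=False,  # keep both endpoints of every line
        ),
    )
    out = transform(image=image, keypoints=keypoints, kp_labels=labels, kp_groups=groups)
    return (out["image"],) + unpack_keypoints(
        out["keypoints"], out["kp_labels"], out["kp_groups"], len(lines)
    )
```

Because the image is augmented only once, this also avoids re-running the pipeline per line as in the replay version.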

|

open

|

2024-01-18T10:12:45Z

|

2024-01-23T10:50:19Z

|

https://github.com/albumentations-team/albumentations/issues/1508

|

[] |

JonathanGehret

| 1

|

holoviz/panel

|

plotly

| 7,580

|

panel oauth-secret not a valid command

|

The section https://panel.holoviz.org/how_to/authentication/configuration.html#encryption mentions you can run `panel oauth-secret`.

But you cannot with panel 1.5.5. Do you mean `panel secret`?
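Until the command name is settled, a key can also be generated without the CLI. This sketch assumes the OAuth encryption key Panel expects is a Fernet key (Panel's encryption relies on the `cryptography` package's Fernet, as far as I know; worth confirming in the linked docs). A Fernet key is 32 random bytes, URL-safe base64-encoded, which is what `cryptography.fernet.Fernet.generate_key()` returns; the function name below is hypothetical.

```python
import base64
import os


def generate_fernet_style_key() -> str:
    """32 random bytes, URL-safe base64-encoded (the Fernet key format)."""
    return base64.urlsafe_b64encode(os.urandom(32)).decode("ascii")
```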

|

closed

|

2025-01-02T12:30:27Z

|

2025-01-17T19:11:57Z

|

https://github.com/holoviz/panel/issues/7580

|

[] |

MarcSkovMadsen

| 0

|

plotly/dash

|

flask

| 2,325

|

[BUG] `pip install dash` is still installing obsolete packages

|

Thank you so much for helping improve the quality of Dash!

We do our best to catch bugs during the release process, but we rely on your help to find the ones that slip through.

**Describe your context**

Please provide us your environment, so we can easily reproduce the issue.

- replace the result of `pip list | grep dash` below

```

dash 2.7.0

dash-core-components 2.0.0

dash-html-components 2.0.0

dash-table 5.0.0

```

**Describe the bug**

`pip install dash` installs component packages that are now incorporated into Dash itself. As far as Dash is concerned, they are unnecessary.

**Expected behavior**

Dash installs without the obsolete PyPI packages.

**After note**

I see that this was already covered in #1944. Apologies for the new issue, I will be patient.

|

closed

|

2022-11-18T00:02:51Z

|

2022-11-18T00:09:25Z

|

https://github.com/plotly/dash/issues/2325

|

[] |

ryanskeith

| 0

|

tableau/server-client-python

|

rest-api

| 734

|

Add documentation for Data Acceleration Report

|

Add docs to match code added in #596

|

open

|

2020-11-19T00:43:18Z

|

2023-03-03T21:42:04Z

|

https://github.com/tableau/server-client-python/issues/734

|

[

"docs"

] |

bcantoni

| 0

|

coqui-ai/TTS

|

deep-learning

| 2,801

|

[Bug] cannot install TTS from pip (Dependency lookup for OpenBLAS with method 'pkgconfig' failed)

|

### Describe the bug

When I run `pip install TTS` (on macOS, in VS Code), building the SciPy dependency from source fails with `ERROR: Dependency lookup for OpenBLAS with method 'pkgconfig' failed: Pkg-config binary for machine 1 not found. Giving up.` (the full build output is under Logs below), and pip then reports:

note: This error originates from a subprocess, and is likely not a problem with pip.

error: metadata-generation-failed

### To Reproduce

pip install TTS

or

git clone https://github.com/coqui-ai/TTS

pip install -e .[all,dev,notebooks] # Select the relevant extras

### Expected behavior

TTS should install successfully.

### Logs

```shell

pip install TTS

Collecting TTS

Using cached TTS-0.15.6.tar.gz (1.5 MB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing metadata (pyproject.toml) ... done

Collecting cython==0.29.30 (from TTS)

Using cached Cython-0.29.30-py2.py3-none-any.whl (985 kB)

Collecting scipy>=1.4.0 (from TTS)

Using cached scipy-1.11.1.tar.gz (56.0 MB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Installing backend dependencies ... done

Preparing metadata (pyproject.toml) ... error

error: subprocess-exited-with-error

× Preparing metadata (pyproject.toml) did not run successfully.

│ exit code: 1

╰─> [44 lines of output]

+ meson setup /private/var/folders/0y/kbqk5xzn5f397bmdxh0jt8h40000gn/T/pip-install-jmtiyxte/scipy_aaa7ee9f969b4a2984a0151a62db7a37 /private/var/folders/0y/kbqk5xzn5f397bmdxh0jt8h40000gn/T/pip-install-jmtiyxte/scipy_aaa7ee9f969b4a2984a0151a62db7a37/.mesonpy-820un46a/build -Dbuildtype=release -Db_ndebug=if-release -Db_vscrt=md --native-file=/private/var/folders/0y/kbqk5xzn5f397bmdxh0jt8h40000gn/T/pip-install-jmtiyxte/scipy_aaa7ee9f969b4a2984a0151a62db7a37/.mesonpy-820un46a/build/meson-python-native-file.ini

The Meson build system

Version: 1.2.0

Source dir: /private/var/folders/0y/kbqk5xzn5f397bmdxh0jt8h40000gn/T/pip-install-jmtiyxte/scipy_aaa7ee9f969b4a2984a0151a62db7a37

Build dir: /private/var/folders/0y/kbqk5xzn5f397bmdxh0jt8h40000gn/T/pip-install-jmtiyxte/scipy_aaa7ee9f969b4a2984a0151a62db7a37/.mesonpy-820un46a/build

Build type: native build

Project name: SciPy

Project version: 1.11.1

C compiler for the host machine: cc (clang 12.0.5 "Apple clang version 12.0.5 (clang-1205.0.22.11)")

C linker for the host machine: cc ld64 650.9

C++ compiler for the host machine: c++ (clang 12.0.5 "Apple clang version 12.0.5 (clang-1205.0.22.11)")

C++ linker for the host machine: c++ ld64 650.9

Cython compiler for the host machine: cython (cython 0.29.36)

Host machine cpu family: aarch64

Host machine cpu: aarch64

Program python found: YES (/Library/Frameworks/Python.framework/Versions/3.10/bin/python3)

Did not find pkg-config by name 'pkg-config'

Found Pkg-config: NO

Run-time dependency python found: YES 3.10

Program cython found: YES (/private/var/folders/0y/kbqk5xzn5f397bmdxh0jt8h40000gn/T/pip-build-env-422m5n95/overlay/bin/cython)

Compiler for C supports arguments -Wno-unused-but-set-variable: NO

Compiler for C supports arguments -Wno-unused-function: YES

Compiler for C supports arguments -Wno-conversion: YES

Compiler for C supports arguments -Wno-misleading-indentation: YES

Library m found: YES

Fortran compiler for the host machine: gfortran (gcc 13.1.0 "GNU Fortran (Homebrew GCC 13.1.0) 13.1.0")

Fortran linker for the host machine: gfortran ld64 650.9

Compiler for Fortran supports arguments -Wno-conversion: YES

Checking if "-Wl,--version-script" : links: NO

Program pythran found: YES (/private/var/folders/0y/kbqk5xzn5f397bmdxh0jt8h40000gn/T/pip-build-env-422m5n95/overlay/bin/pythran)

Did not find CMake 'cmake'

Found CMake: NO

Run-time dependency xsimd found: NO (tried pkgconfig, framework and cmake)

Run-time dependency threads found: YES

Library npymath found: YES

Library npyrandom found: YES

pybind11-config found: YES (/private/var/folders/0y/kbqk5xzn5f397bmdxh0jt8h40000gn/T/pip-build-env-422m5n95/overlay/bin/pybind11-config) 2.10.4

Run-time dependency pybind11 found: YES 2.10.4

Run-time dependency openblas found: NO (tried pkgconfig, framework and cmake)

Run-time dependency openblas found: NO (tried framework)

../../scipy/meson.build:159:9: ERROR: Dependency lookup for OpenBLAS with method 'pkgconfig' failed: Pkg-config binary for machine 1 not found. Giving up.

A full log can be found at /private/var/folders/0y/kbqk5xzn5f397bmdxh0jt8h40000gn/T/pip-install-jmtiyxte/scipy_aaa7ee9f969b4a2984a0151a62db7a37/.mesonpy-820un46a/build/meson-logs/meson-log.txt

[end of output]

```

### Environment

```shell

Python 3.10

```

### Additional context

_No response_

|

closed

|

2023-07-25T14:34:57Z

|

2023-07-31T13:56:25Z

|

https://github.com/coqui-ai/TTS/issues/2801

|

[

"bug"

] |

valenmoore

| 3

|

ultralytics/ultralytics

|

pytorch

| 19,252

|

Yolo11 speed very slow compared to Yolo8

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/orgs/ultralytics/discussions) and found no similar questions.

### Question

Hi,

I tested YOLO11 for the first time today, and its inference speed is really bad; I do not know why.

I tried with a fresh environment and the COCO validation set, using the code below:

```python
from ultralytics import YOLO

# Load a model
# model = YOLO("yolov8l.pt")
model = YOLO("yolo11l.pt")
model.val(data='coco.yaml', batch=32)
```

and the results are the following (times are per image; metrics are for class `all`):

| Model | Preprocess | Inference | Loss | Postprocess | Images | Instances | Box P | R | mAP50 | mAP50-95 |
|---|---|---|---|---|---|---|---|---|---|---|
| YOLO11 | 0.2 ms | 99.2 ms | 0.0 ms | 0.8 ms | 5000 | 36335 | 0.748 | 0.634 | 0.697 | 0.534 |
| YOLOv8 | 0.2 ms | 41.0 ms | 0.0 ms | 0.8 ms | 5000 | 36335 | 0.739 | 0.634 | 0.695 | 0.531 |

Is it normal for YOLO11 to be that slow?

environment:

Ultralytics 8.3.75 🚀 Python-3.12.8 torch-2.5.1+cu124 CUDA:0 (NVIDIA GeForce RTX 3060 Laptop GPU, 6144MiB)

Setup complete ✅ (20 CPUs, 29.4 GB RAM, 226.1/1006.9 GB disk)

OS Linux-5.15.167.4-microsoft-standard-WSL2-x86_64-with-glibc2.35

Environment Linux

Python 3.12.8

Install pip

RAM 29.38 GB

Disk 226.1/1006.9 GB

CPU 12th Gen Intel Core(TM) i7-12700H

CPU count 20

GPU NVIDIA GeForce RTX 3060 Laptop GPU, 6144MiB

GPU count 1

CUDA 12.4

numpy ✅ 2.1.1<=2.1.1,>=1.23.0

matplotlib ✅ 3.10.0>=3.3.0

opencv-python ✅ 4.10.0.84>=4.6.0

pillow ✅ 11.1.0>=7.1.2

pyyaml ✅ 6.0.2>=5.3.1

requests ✅ 2.32.3>=2.23.0

scipy ✅ 1.15.0>=1.4.1

torch ✅ 2.5.1>=1.8.0

torch ✅ 2.5.1!=2.4.0,>=1.8.0; sys_platform == "win32"

torchvision ✅ 0.20.1>=0.9.0

tqdm ✅ 4.67.1>=4.64.0

psutil ✅ 6.1.1

py-cpuinfo ✅ 9.0.0

pandas ✅ 2.2.3>=1.1.4

seaborn ✅ 0.13.2>=0.11.0

ultralytics-thop ✅ 2.0.13>=2.0.0
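In the numbers above, YOLO11 inference is about 2.4× slower (99.2 vs 41.0 ms per image) at nearly identical accuracy. Validation-time speed also includes per-batch overheads, so a model-only latency check with warm-up can help isolate the difference. The helper below is a generic sketch (the name is hypothetical, not an Ultralytics API): it makes untimed warm-up calls, then reports the median wall-clock time per call.

```python
import statistics
import time
from typing import Callable


def median_latency_ms(fn: Callable[[], object], warmup: int = 10, iters: int = 50) -> float:
    """Median wall-clock time of fn() in milliseconds, after `warmup` untimed calls."""
    if iters < 1:
        raise ValueError("iters must be >= 1")
    for _ in range(warmup):
        fn()  # warm-up: lazy initialization, autotuning, caches
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)
```

With Ultralytics, one could time something like `median_latency_ms(lambda: model.predict("image.jpg", verbose=False))` for each model on the same image (the path is a placeholder). When timing raw PyTorch modules instead, CUDA work is asynchronous, so `fn` should call `torch.cuda.synchronize()` before returning.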

### Additional

_No response_

|

closed

|

2025-02-14T16:10:32Z

|

2025-02-19T08:30:37Z

|

https://github.com/ultralytics/ultralytics/issues/19252

|

[

"question",

"detect"

] |

francescobodria

| 10

|

jadore801120/attention-is-all-you-need-pytorch

|

nlp

| 197

|

download dataset error

|

Hello, I want to download WMT'17 with your code, but it failed. Could you tell me how to solve this problem? Thank you so much.

The error is as follows:

Already downloaded and extracted http://data.statmt.org/wmt17/translation-task/training-parallel-nc-v12.tgz.

Already downloaded and extracted http://data.statmt.org/wmt17/translation-task/dev.tgz.

Downloading from http://storage.googleapis.com/tf-perf-public/official_transformer/test_data/newstest2014.tgz to newstest2014.tgz.

newstest2014.tgz: 0.00B [00:00, ?B/s]

Traceback (most recent call last):

File "preprocess.py", line 336, in <module>

main()

File "preprocess.py", line 187, in main

raw_test = get_raw_files(opt.raw_dir, _TEST_DATA_SOURCES)

File "preprocess.py", line 100, in get_raw_files

src_file, trg_file = download_and_extract(raw_dir, d["url"], d["src"], d["trg"])

File "preprocess.py", line 71, in download_and_extract

compressed_file = _download_file(download_dir, url)

File "preprocess.py", line 93, in _download_file

urllib.request.urlretrieve(url, filename=filename, reporthook=t.update_to)

File "/usr/local/lib/python3.7/urllib/request.py", line 247, in urlretrieve

with contextlib.closing(urlopen(url, data)) as fp:

File "/usr/local/lib/python3.7/urllib/request.py", line 222, in urlopen

return opener.open(url, data, timeout)

File "/usr/local/lib/python3.7/urllib/request.py", line 531, in open

response = meth(req, response)

File "/usr/local/lib/python3.7/urllib/request.py", line 641, in http_response

'http', request, response, code, msg, hdrs)

File "/usr/local/lib/python3.7/urllib/request.py", line 569, in error

return self._call_chain(*args)

File "/usr/local/lib/python3.7/urllib/request.py", line 503, in _call_chain

result = func(*args)

File "/usr/local/lib/python3.7/urllib/request.py", line 649, in http_error_default

raise HTTPError(req.full_url, code, msg, hdrs, fp)

urllib.error.HTTPError: HTTP Error 403: Forbidden

|

open

|

2022-04-26T14:10:04Z

|

2023-09-20T03:35:31Z

|

https://github.com/jadore801120/attention-is-all-you-need-pytorch/issues/197

|

[] |

qimg412

| 4

|

davidsandberg/facenet

|

computer-vision

| 602

|

Incorrect labels

|

Just for didactic purposes: the comment here (https://github.com/davidsandberg/facenet/blob/master/src/models/inception_resnet_v1.py#L178 ) is incorrect (as are the following ones on lines 182, 186, 189, ...).

For instance, on L178 it says 149x149x32, but it is supposed to be 79x79x32.
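The 149x149x32 figure matches a 299×299 input (the original Inception-ResNet setting), while 79x79x32 matches a 160×160 input. The check below uses TF-style padding arithmetic: VALID gives floor((n - k) / s) + 1 and SAME gives ceil(n / s). The layer list (names, kernels, strides, padding) is an assumption based on the usual Inception-ResNet-v1 stem and should be verified against the file before correcting the comments.

```python
from typing import List, Tuple


def conv_out_size(n: int, kernel: int, stride: int = 1, padding: str = "VALID") -> int:
    """Spatial output size of a TF-style conv/pool layer on an n x n input."""
    if padding == "VALID":
        return (n - kernel) // stride + 1
    if padding == "SAME":
        return -(-n // stride)  # ceil(n / stride)
    raise ValueError(f"unknown padding {padding!r}")


# Assumed stem layers: (name, kernel, stride, padding).
STEM: List[Tuple[str, int, int, str]] = [
    ("Conv2d_1a_3x3", 3, 2, "VALID"),
    ("Conv2d_2a_3x3", 3, 1, "VALID"),
    ("Conv2d_2b_3x3", 3, 1, "SAME"),
    ("MaxPool_3a_3x3", 3, 2, "VALID"),
    ("Conv2d_3b_1x1", 1, 1, "VALID"),
    ("Conv2d_4a_3x3", 3, 1, "VALID"),
    ("Conv2d_4b_3x3", 3, 2, "VALID"),
]


def stem_sizes(input_size: int) -> List[int]:
    """Output side length after each stem layer, starting from input_size."""
    sizes, n = [], input_size
    for _name, kernel, stride, padding in STEM:
        n = conv_out_size(n, kernel, stride, padding)
        sizes.append(n)
    return sizes
```

Under these assumptions, a 160×160 input gives 79, 77, 77, 38, 38, 36, 17, while 299×299 gives 149, 147, 147, 73, 73, 71, 35.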

Do you accept patches?

Thanks

|

open

|

2018-01-04T09:40:45Z

|

2018-01-04T09:40:45Z

|

https://github.com/davidsandberg/facenet/issues/602

|

[] |

tiagofrepereira2012

| 0

|

sktime/pytorch-forecasting

|

pandas

| 1,360

|

Get an error when creating dataset,how to fix it?

|

@jdb78

I used 150,000 rows of data to create a dataset and added 'series' and 'time_idx' columns like this:

```

.......

data_len=150000

max_encoder_length = 4*96

max_prediction_length = 96

batch_size = 512

data=get_data(data_len)

data['time_idx']= data.index%(96*5) # as time index

data['series']=data.index//(96*5) # as group id

training_cutoff = data["time_idx"].max() - max_prediction_length

context_length = max_encoder_length

prediction_length = max_prediction_length

training = TimeSeriesDataSet(

data[lambda x: x.time_idx <= training_cutoff],

time_idx="time_idx",

target="close",

categorical_encoders={"series":NaNLabelEncoder().fit(data.series)},

group_ids=["series"],

time_varying_unknown_reals=["value"],

max_encoder_length=context_length,

max_prediction_length=prediction_length,

)

.......

```

`TimeSeriesDataSet` gives me an error: 'AssertionError: filters should not remove entries all entries - check encoder/decoder lengths and lags'. I think something is wrong in my definition:

```

max_encoder_length = 4*96

max_prediction_length = 96

batch_size = 512

```

This problem has been bothering me for days. How can I modify these values to fix it? Help, please!
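The arithmetic behind the assertion can be checked directly. With `time_idx = index % (96*5)`, each series spans time_idx 0..479, so `training_cutoff = 479 - 96 = 383` and only 384 rows per series survive the filter, while one sample needs `max_encoder_length + max_prediction_length = 384 + 96 = 480` rows. This sketch assumes `min_encoder_length` and `min_prediction_length` are left at their defaults (equal to the maximum lengths); `check_windows` is a hypothetical helper, not a pytorch-forecasting API.

```python
from typing import Tuple


def check_windows(series_len: int, max_encoder_length: int,
                  max_prediction_length: int) -> Tuple[int, int, bool]:
    """Rows per series left after the training cutoff vs. rows one sample needs.

    Assumes each series has time_idx 0..series_len-1, the cutoff used in the
    issue (max time_idx - max_prediction_length), and min encoder/prediction
    lengths equal to the max ones.
    """
    training_cutoff = (series_len - 1) - max_prediction_length
    rows_kept = training_cutoff + 1  # time_idx 0..training_cutoff survive the filter
    rows_needed = max_encoder_length + max_prediction_length
    return rows_kept, rows_needed, rows_kept >= rows_needed
```

If this assumption holds, every full series is filtered out (the last, partial series has only 240 rows anyway). Options are longer series, a shorter encoder (`3*96` leaves exactly one training window per series), or a smaller `min_encoder_length`.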

|

open

|

2023-08-05T03:49:32Z

|

2023-08-06T00:37:28Z

|

https://github.com/sktime/pytorch-forecasting/issues/1360

|

[] |

Lookforworld

| 4

|

Miksus/rocketry

|

automation

| 192

|

@app.task(daily.between("08:00", "20:00") & every("10 minutes"))

|

**Describe the bug**

Tasks with this condition only run once.

**Expected behavior**

The task should run every day, every 10 minutes between 8am and 8pm.

**Desktop (please complete the following information):**

- OS: Ubuntu 20

- Python 3.8
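The expected behavior can be pinned down as a plain predicate, which makes it easy to compare with what the scheduler does. `should_run` is hypothetical, not rocketry's API, and the window is assumed to include 08:00 and exclude 20:00. As I understand the rocketry docs, `daily.between(...)` means "once per day within the window", which would explain a single run, while `time_of_day.between(...)` expresses "at any time within the window"; this is worth checking against the docs for your version.

```python
from datetime import datetime, time, timedelta
from typing import Optional


def should_run(now: datetime, last_run: Optional[datetime],
               start: time = time(8, 0), end: time = time(20, 0),
               interval: timedelta = timedelta(minutes=10)) -> bool:
    """True if `now` is inside [start, end) and at least `interval` has passed since last_run."""
    in_window = start <= now.time() < end
    due = last_run is None or now - last_run >= interval
    return in_window and due
```

Stepping through one day minute by minute with this predicate yields 72 runs (08:00, 08:10, ..., 19:50).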

|

open

|

2023-02-09T13:47:51Z

|

2023-02-26T17:32:55Z

|

https://github.com/Miksus/rocketry/issues/192

|

[

"bug"

] |

faulander

| 3

|

ivy-llc/ivy

|

tensorflow

| 28,372

|

Fix Frontend Failing Test: tensorflow - mathematical_functions.jax.numpy.minimum

|

closed

|

2024-02-21T17:50:59Z

|

2024-02-21T21:29:19Z

|

https://github.com/ivy-llc/ivy/issues/28372

|

[

"Sub Task"

] |

samthakur587

| 0

|

|

waditu/tushare

|

pandas

| 860

|

fut_holding: field vol_chg is None

|

Data queried via

`ts_pro.query( 'fut_holding',trade_date=date_str,exchange=ec,fields='trade_date,symbol,broker,vol,long_hld,long_chg,short_hld,short_chg,exchange')`

has None for every value of the field vol_chg.

Query dates: 20181203, 20181204, 20181205, 20181206

All products.

My referral link:

https://tushare.pro/register?reg=125923

|

closed

|

2018-12-07T06:59:57Z

|

2018-12-09T01:02:10Z

|

https://github.com/waditu/tushare/issues/860

|

[] |

xiangzhy

| 2

|

pywinauto/pywinauto

|

automation

| 508

|

Examples do not work correctly from python console on windows 10

|

Hello,

I tried examples provided in readme.md using python console. I use Python 3.5.2 (v3.5.2:4def2a2901a5, Jun 25 2016, 22:18:55) [MSC v.1900 64 bit (AMD64)] on win32

The 'Simple' example, run from the console, produced Notepad as in the attached screenshot.

If I understood correctly, the 'About' window should close on executing:

`app.AboutNotepad.OK.click()` - but that did not happen.

But if I run this example as script it works fine.

Second example: 'MS UI Automation Example' failed on

```python

Properties = Desktop(backend='uia').Common_Files_Properties

```

with `NameError: name 'Desktop' is not defined`

But again it works fine as script.

Is this normal behavior, i.e. is this module not intended to work from the console?

|

open

|

2018-06-18T14:24:05Z

|

2019-05-13T12:41:26Z

|

https://github.com/pywinauto/pywinauto/issues/508

|

[

"question"

] |

0de554K

| 1

|

openapi-generators/openapi-python-client

|

rest-api

| 373

|

Enums with default values generate incorrect field type and to_dict implementation

|

**Describe the bug**

We have several Enums in our API spec, and models that include fields of that enum type. Sometimes, we set default values on those enum fields. In those cases, the generated code in `to_dict` is incorrect and cannot be used to POST entities to our API.

**To Reproduce**

Steps to reproduce the behavior:

Add this code to a main.py file

```python
from typing import Optional, Union, Dict

from fastapi import FastAPI
from pydantic import BaseModel
from enum import Enum
from starlette.responses import Response

app = FastAPI()


class ItemType(str, Enum):
    RATIO = "ratio"
    CURRENCY = "currency"


class ItemResource(BaseModel):
    id: int
    optional_id: Optional[int]
    id_with_default: int = 42
    item_type: ItemType
    optional_item_type: Optional[ItemType]
    item_type_with_default: ItemType = ItemType.RATIO


@app.post("/")
def write_item(model: ItemResource):
    return Response(status_code=201)
```

Run the code with `uvicorn main:app &`

Generate an sdk with `openapi-python-client generate --url http://localhost:8000/openapi.json`

Open the generated `item_resource.py`

**Expected behavior**

`item_type_with_default` should look like:

`item_type_with_default: Union[Unset, ItemType] = ItemType.RATIO`

`to_dict` should have code like:

```python
item_type_with_default = self.item_type_with_default
if item_type_with_default is not UNSET:
    field_dict["item_type_with_default"] = item_type_with_default
```

**Actual behavior**

`item_type_with_default` looks like:

`item_type_with_default: Union[Unset, None] = UNSET`

`to_dict` has code like:

```python
item_type_with_default = None
if item_type_with_default is not UNSET:
    field_dict["item_type_with_default"] = item_type_with_default
```

Since `self.item_type_with_default` is never accessed, the caller has no way to actually provide a value to the server.

You can see the code in there for `id_with_default`, an int field with default that does the right thing.
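A self-contained sketch of the expected shape follows, using a dataclass and a stand-in `Unset` sentinel rather than the generated client's actual classes (both are assumptions for illustration). The key point is that `to_dict` reads the attribute instead of hard-coding `None`; this sketch also serializes the enum by its value, and the optional fields are omitted for brevity.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, Dict, Union


class Unset:
    """Stand-in for the generated client's 'value not provided' sentinel type."""


UNSET = Unset()


class ItemType(str, Enum):
    RATIO = "ratio"
    CURRENCY = "currency"


@dataclass
class ItemResource:
    id: int
    item_type: ItemType
    id_with_default: Union[Unset, int] = 42
    item_type_with_default: Union[Unset, ItemType] = ItemType.RATIO

    def to_dict(self) -> Dict[str, Any]:
        field_dict: Dict[str, Any] = {"id": self.id, "item_type": self.item_type.value}
        if not isinstance(self.id_with_default, Unset):
            field_dict["id_with_default"] = self.id_with_default
        # Read the attribute (not a hard-coded None) and send the enum's value.
        item_type_with_default = self.item_type_with_default
        if not isinstance(item_type_with_default, Unset):
            field_dict["item_type_with_default"] = item_type_with_default.value
        return field_dict
```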

**OpenAPI Spec File**

```

{"openapi":"3.0.2","info":{"title":"FastAPI","version":"0.1.0"},"paths":{"/":{"post":{"summary":"Write Item","operationId":"write_item__post","requestBody":{"content":{"application/json":{"schema":{"$ref":"#/components/schemas/ItemResource"}}},"required":true},"responses":{"200":{"description":"Successful Response","content":{"application/json":{"schema":{}}}},"422":{"description":"Validation Error","content":{"application/json":{"schema":{"$ref":"#/components/schemas/HTTPValidationError"}}}}}}}},"components":{"schemas":{"HTTPValidationError":{"title":"HTTPValidationError","type":"object","properties":{"detail":{"title":"Detail","type":"array","items":{"$ref":"#/components/schemas/ValidationError"}}}},"ItemResource":{"title":"ItemResource","required":["id","item_type"],"type":"object","properties":{"id":{"title":"Id","type":"integer"},"optional_id":{"title":"Optional Id","type":"integer"},"id_with_default":{"title":"Id With Default","type":"integer","default":42},"item_type":{"$ref":"#/components/schemas/ItemType"},"optional_item_type":{"$ref":"#/components/schemas/ItemType"},"item_type_with_default":{"allOf":[{"$ref":"#/components/schemas/ItemType"}],"default":"ratio"}}},"ItemType":{"title":"ItemType","enum":["ratio","currency"],"type":"string","description":"An enumeration."},"ValidationError":{"title":"ValidationError","required":["loc","msg","type"],"type":"object","properties":{"loc":{"title":"Location","type":"array","items":{"type":"string"}},"msg":{"title":"Message","type":"string"},"type":{"title":"Error Type","type":"string"}}}}}}

```

**Desktop:**

- OS: macOS 11.2.2

- Python Version: 3.8.6

- openapi-python-client version 0.8.0

- fast-api version 0.62.0

|

closed

|

2021-03-31T17:34:44Z

|

2021-03-31T21:04:45Z

|

https://github.com/openapi-generators/openapi-python-client/issues/373

|

[

"🐞bug"

] |

joshzana

| 2

|

iperov/DeepFaceLab

|

machine-learning

| 5,686

|

The Chinese(ZH-cn) translation.

|

[https://zhuanlan.zhihu.com/p/165589205](https://zhuanlan.zhihu.com/p/165589205)

# How to use it

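Video face swapping has become very popular in recent years, and uploaders on Bilibili often post their own spoof AI face-swap videos. Photoshop retouching has of course always been popular, but Photoshop is usually used to edit a single image. Online, though, you see things like a clip of Dilraba Dilmurat with her face replaced by Lu Han's (no other intent here; such spoofs really exist), realistic enough that the fake passes for real. How is this done? It relies on powerful AI technology: mixing AI with "faking" produces "deepfakes".

Deepfakes, a blend of "deep learning" and "fake", are a synthesis technique in which a person in an existing image or video is replaced with someone else's likeness. Deepfakes use powerful techniques from machine learning and artificial intelligence to generate visual and audio content that is highly deceptive. The main machine learning methods used to create them are based on deep learning and involve training generative neural networks, such as generative adversarial networks (GANs).

According to Wikipedia, the term "deepfakes" originated at the end of 2017, when a Reddit user shared the deepfakes they had created. In January 2018, a desktop application called FakeApp was launched. It lets users easily create and share videos with swapped faces. As of 2019, FakeApp had been superseded by open-source alternatives such as Faceswap and the command-line-based DeepFaceLab. Larger companies have also started to use deepfakes.

This article introduces DeepFaceLab, an open-source product based on Python and TensorFlow.

Note: the content covered in this article must not be used for illegal purposes, for unethical behavior, or for commercial gain; otherwise I bear no responsibility.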

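Before starting, get the download link from [https://github.com/iperov/DeepFaceLab](https://link.zhihu.com/?target=https%3A//github.com/iperov/DeepFaceLab) and install it. (If you want the link to the version used in this article, message me privately; if too many people ask, I may not be able to reply to everyone! Remember to give this a like!)

Note that DeepFaceLab works best on a sufficiently powerful computer, because the AI deep-training process runs on the CPU and GPU: the better the graphics card, the faster the training and the better the result. This is not absolute, though. With enough patience you can still produce a reasonable result, and it's all just for fun anyway. (PS: I wrote this article on a Windows 7 computer that is not high-end; that doesn't matter.)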

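After installation, you will see files like those in the figure below under DeepFaceLab_NVIDIA\:

[Figure: some of the files you will see after installation]

The workspace folder holds our video material and images. Before this, you need to prepare two videos: the source video contains the face you want to swap in (for example, yourself), and the destination video contains the face to be replaced (for example, Stephen Chow). In this article I replace Ng Man-tat's face in the "Are you teaching me how to do things?" clip with Shen Teng's, so the source material is of Shen Teng and the destination video is the "Are you teaching me how to do things?" clip. Rename the source video to data_src.mp4 and the destination video to data_dst.mp4, and place both in workspace. (Make sure the face in the source material is clear, frontal, and expressive, without occlusion or blur; the clip does not need to be long.)

[Figure: the videos placed in workspace]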

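Then double-click 2) extract images from video data_src.bat. This command extracts every frame of the data_src video as an image. Press Enter, then press Enter again to accept the default image format, png.

Waiting for the data_src video to be split into frames

Wait for the command window to finish, then close it; in this step DeepFaceLab uses ffmpeg to extract the frames. In the workspace/data_src folder you will now see one image for each frame of data_src.mp4, as shown:

Every frame extracted from data_src.mp4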
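Since this step is ffmpeg frame extraction, a rough equivalent is sketched below for anyone who wants to run it by hand. The exact options DeepFaceLab's `.bat` scripts pass to ffmpeg are not shown in this article, so treat the command (and the hypothetical `frame_extract_cmd` / `extract_frames` helpers) as an assumption. It writes numbered images such as `00001.png` into the output folder:

```python
import subprocess
from pathlib import Path
from typing import List


def frame_extract_cmd(video: str, out_dir: str, ext: str = "png") -> List[str]:
    """Build an ffmpeg command that writes the frames of `video` as numbered images."""
    pattern = str(Path(out_dir) / f"%05d.{ext}")  # 00001.png, 00002.png, ...
    return ["ffmpeg", "-i", video, pattern]


def extract_frames(video: str, out_dir: str) -> None:
    """Create `out_dir` if needed, then run ffmpeg (which must be on PATH)."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(frame_extract_cmd(video, out_dir), check=True)
```

For example, `extract_frames("workspace/data_src.mp4", "workspace/data_src")` mirrors the data_src step. Keeping the command builder separate from the call to `subprocess.run` makes it easy to print or inspect the command before running it.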

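Delete the images in data_src that don't show Shen Teng, as well as any blurry ones. Next, run 3) extract images from video data_dst FULL FPS.bat. Likewise, this command extracts every frame from data_dst.mp4; when it finishes, you will see the frame images in workspace/data_dst.

Every frame extracted from data_dst.mp4

Run 4) data_src faceset extract.bat, which extracts the faces from every image in data_src. Proceed as follows:

Press Enter. For Face type, choose wf (wf = whole face; choose according to your material, and this tutorial uses it by default). For Max number of faces from image, press Enter to accept the default, which extracts the maximum number. Image Size is the size of the output images; press Enter for the default of 512. Jpeg quality is the image quality, where higher is better; press Enter for the default of 90. For Write debug images to aligned_debug, press Enter to accept the default: we don't need it for data_src, but it is needed when processing data_dst (the program handles this automatically). Then wait for the process to finish; the better your hardware, the faster it runs.

When it finishes, you will see all the extracted faces in data_src/aligned: yes, Shen Teng's headshots.

Face images in data_src/aligned

Again, delete any blurry images here. Since we already removed unsuitable images from data_src/ earlier, there should be no blurry headshots left at this point.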

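Run 5) data_dst faceset extract MANUAL.bat to extract the faces from data_dst. This step is important and tedious. Why? The destination clip I chose for this article features Ng Man-tat and Deanie Ip, so the video contains two different faces. If your destination video shows only one person, you can use data_dst faceset extract.bat to extract the faces quickly and automatically, and the manual selection in this step is unnecessary. Here, though, several faces appear in the same frame, so choose data_dst faceset extract MANUAL.bat. A selection window then pops up and you have to extract the faces manually, as shown below.

This is the first frame. We only want to replace Ng Man-tat's face with Shen Teng's, so we pick it out with the mouse. Move the mouse gently and the program draws an outline for the region under the cursor. Scroll the wheel to zoom in and out, left-click to lock, right-click to unlock, and press Enter to confirm. Every frame needs this, but you can hold down Enter and readjust only when the face moves significantly.

After this step, two directories appear under data_dst: aligned and aligned_debug. The former contains Ng Man-tat's headshots; the latter contains the drawn face outlines.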

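Now for the key step: training the model, in which the AI algorithm learns through repeated iterations.

DeepFaceLab offers two trainers: Quick96 and SAEHD. How do you choose? If your GPU has less than 6 GB of VRAM, use Quick96; SAEHD is a higher-resolution model intended for GPUs with at least 6 GB of VRAM. Both can produce good results, but SAEHD is clearly more capable and is therefore aimed at non-beginners. Why train a model at all? We give the AI this material; its algorithm recognizes the faces of A and B, learns the facial expressions from the features of the first face, and learns how to swap in the other. Training is the process by which the AI learns the two faces.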
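The choice of trainer above boils down to a simple rule of thumb. The helper below restates it; the function name is hypothetical, and the 6 GB threshold and the "non-beginner" note are taken from this article, not from DeepFaceLab's documentation:

```python
def pick_trainer(vram_gb: float, beginner: bool = False) -> str:
    """Return the trainer this article suggests for a GPU with `vram_gb` of VRAM.

    Below 6 GB the article recommends Quick96. SAEHD targets GPUs with at least
    6 GB and is described as aimed at non-beginners, so beginners stay on Quick96.
    """
    if vram_gb < 6 or beginner:
        return "Quick96"
    return "SAEHD"
```

This is why the rest of the tutorial uses Quick96 even though SAEHD is the more capable model.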

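This article uses Quick96. Run 6) train Quick96.bat. On the first run you are asked to name the model, after which training starts.

At the start of training

A preview window then pops up showing five columns of faces. The columns on the left show the model learning Shen Teng's face, the columns on the right Ng Man-tat's, and the last column shows the swapped result. The preview is very blurry at first and gets clearer over time (depending on how long you train and how powerful your computer is). Press p to refresh the preview, s to save the current model progress, and Enter to close. Training takes a long time: even for mostly frontal face footage, it's best not to train for less than 6 hours, and a polished face-swap video depends on your skill and on training time; training for more than 72 hours is perfectly reasonable. Because training can be resumed at any time, you can run train Quick96.bat again after closing it. After training for a while, the preview becomes clear:

After some training

When you feel no further training is needed, you can do the final step. Run 7) merge Quick96.bat to start tuning and merging with your model. When prompted with Use interactive merger, press y to enter the interactive options screen, as shown:

Configuration hints in the interactive window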

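Tab switches between panels (help / current-frame preview); press Tab to switch to the preview window before the keys below take effect. The main controls:

- `+` and `-`: change the window size.
- `c`: change the color transfer mode (how colors are carried over to the face).
- Number keys `1` to `6` (top row): switch the face mode.
- `u` and `j`: adjust the size of the face overlay.
- `n`: change the sharpen mode.
- `y` and `h`: adjust the blur effect.
- `w` and `s`: adjust the extent of the face mask.
- `e` and `d`: adjust how much the mask is blurred.

There are other options as well; mix and adjust them until you get the effect you want, which takes patience and practice. `<` and `>` step to the previous and next frame, and Shift+`<` jumps back to the first frame. Each time you finish the current frame and move to the next, you have to readjust the options to get a suitable result. You are surely wondering how long doing this frame by frame will take. If the face doesn't change much, press `/` to carry the current settings over to the next frame; to apply them to all remaining frames at once, press Shift+`>` to carry them through to the last frame. Once all frames are configured, press Shift+`>` to run the merge, and follow its progress in the console window.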

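Here is roughly what one frame looks like (good enough for the purposes of this article):

A frame being adjusted

The figure below shows the merge in progress. When options change in the interactive screen, the command window updates its option information. If the interactive screen freezes after you press a key, an error has usually occurred, and the Python error log appears in the command window (in that case, close everything and start over).

Running the final merge

When this finishes, two new folders appear under data_dst: merged and merged_mask. The former contains the face-swapped images used to assemble the final video; the latter contains the mask images. Now run the last step of this tutorial, 8) merged to mp4.bat, which produces the final face-swapped video. Once the process finishes, you will find result.mp4 in the workspace directory.

Here is roughly the result after 4 hours of training (not long enough):

Parameter configuration 1

Parameter configuration 2

If your settings aren't good or training was too short, the result will be disappointing; you can repeat step 7 to readjust. Now, let's enjoy the final face-swapped video.

Shen Teng: "Are you teaching me how to do things?"

One more: here is Gao Yuanyuan's Zhou Zhiruo with Zeng Li's face swapped in.

(Frontal-face footage works best and gives very good results.)

[Zeng Li version of Zhou Zhiruo]

Edited 2023-05-07 13:23 · IP location: Fujian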

|

open

|

2023-06-17T16:44:19Z

|

2023-06-17T16:44:19Z

|

https://github.com/iperov/DeepFaceLab/issues/5686

|

[] |

zelihole

| 0

|

pydantic/logfire

|

fastapi

| 477

|

Is there a way to add tags to a span after it is created?

|

### Question

I have additional tags I want to add that come from the result of a function running inside a span, but I'm not able to add them via `span.tags += ["my_tag"]` with the current API.

Is there some other way to do this?

Thanks!

|

closed

|

2024-10-04T17:23:46Z

|

2024-10-23T00:37:36Z

|

https://github.com/pydantic/logfire/issues/477

|

[

"good first issue",

"Feature Request"

] |

ohmeow

| 6

|

gradio-app/gradio

|

python

| 10,772

|

Gradio ChatInterface's bash/cURL API Example Is Incorrect

|

### Describe the bug

The bash/cURL API example provided for ChatInterface does not work. The server returns "Method Not Allowed", and the server log prints an error message.

### Have you searched existing issues? 🔎

- [x] I have searched and found no existing issues

### Reproduction

```python
import gradio as gr

def echo(message, history):
    print(f"cool {message} {history}")
    return message

demo = gr.ChatInterface(fn=echo, type="messages", examples=["hello", "hola", "merhaba"], title="Echo Bot")

demo.launch()
```

### Screenshot

```
ed@banana:/tmp$ curl -X POST http://localhost:7860/gradio_api/call/chat -s -H "Content-Type: application/json" -d '{
  "data": [
    "Hello!!"
  ]}' | awk -F'"' '{ print $4}' | read EVENT_ID; curl -N http://localhost:7860/gradio_api/call/chat/$EVENT_ID
{"detail":"Method Not Allowed"}
```
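For reference, the cURL example is a two-step exchange: the POST to `/gradio_api/call/chat` returns an event ID, which `awk -F'"' '{ print $4}'` cuts out of the JSON body, and a GET to `/gradio_api/call/chat/<event_id>` then streams the result. The hand-off can be sketched in Python as follows, assuming the POST body has the shape `{"event_id": "..."}` (which is what the `awk` field extraction relies on). `event_stream_url` is a hypothetical helper, and it does not address the input-count error shown in the logs:

```python
import json


def event_stream_url(base_url: str, api_name: str, post_response_body: str) -> str:
    """Build the GET URL that streams a result, from the POST /call/<api_name> body.

    Assumes the body is a JSON object like {"event_id": "..."}.
    """
    event_id = json.loads(post_response_body)["event_id"]
    if not event_id:
        # Without an ID the GET would go to .../call/<api_name>/ instead of an event stream.
        raise ValueError("empty event_id")
    return f"{base_url.rstrip('/')}/gradio_api/call/{api_name}/{event_id}"
```

Parsing the body as JSON, rather than splitting on quotes, does not depend on the key order or spacing of the response. In the shell version, note that in bash the last command of a pipeline runs in a subshell unless `shopt -s lastpipe` is in effect, so `read EVENT_ID` may leave the variable empty for the second `curl`; failing on an empty ID, as the sketch does, makes that case visible.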

### Logs

```shell
* Running on local URL: http://127.0.0.1:7860

To create a public link, set `share=True` in `launch()`.
Traceback (most recent call last):
  File "/home/ed/.pyenv/versions/gradio/lib/python3.10/site-packages/gradio/queueing.py", line 625, in process_events
    response = await route_utils.call_process_api(
  File "/home/ed/.pyenv/versions/gradio/lib/python3.10/site-packages/gradio/route_utils.py", line 322, in call_process_api
    output = await app.get_blocks().process_api(
  File "/home/ed/.pyenv/versions/gradio/lib/python3.10/site-packages/gradio/blocks.py", line 2092, in process_api
    inputs = await self.preprocess_data(
  File "/home/ed/.pyenv/versions/gradio/lib/python3.10/site-packages/gradio/blocks.py", line 1761, in preprocess_data
    self.validate_inputs(block_fn, inputs)
  File "/home/ed/.pyenv/versions/gradio/lib/python3.10/site-packages/gradio/blocks.py", line 1743, in validate_inputs
    raise ValueError(
ValueError: An event handler (_submit_fn) didn't receive enough input values (needed: 2, got: 1).
Check if the event handler calls a Javascript function, and make sure its return value is correct.
Wanted inputs:
    [<gradio.components.textbox.Textbox object at 0x7c70f759fa00>, <gradio.components.state.State object at 0x7c70f75bbd90>]
Received inputs:
    ["Hello!!"]
```

### System Info

```shell

Gradio Environment Information:
------------------------------
Operating System: Linux
gradio version: 5.17.1
gradio_client version: 1.7.1

------------------------------------------------