| repo_name (string, 9-75 chars) | topic (string, 30 classes) | issue_number (int64, 1-203k) | title (string, 1-976 chars) | body (string, 0-254k chars) | state (string, 2 classes) | created_at (string, 20 chars) | updated_at (string, 20 chars) | url (string, 38-105 chars) | labels (list, 0-9 items) | user_login (string, 1-39 chars) | comments_count (int64, 0-452) |
|---|---|---|---|---|---|---|---|---|---|---|---|

Gozargah/Marzban

|

api

| 1,086

|

subscription v2ray-json does not support quic

|

Hi, this QUIC inbound works with the v2ray subscription format but not with the v2ray-json format; that is, the subscription cannot be updated.

inbound:

```json
{
  "listen": "0.0.0.0",
  "port": 3636,
  "protocol": "vless",
  "settings": {
    "clients": [],
    "decryption": "none",
    "fallbacks": []
  },
  "sniffing": {
    "destOverride": [
      "tls",
      "quic",
      "fakedns"
    ],
    "enabled": true,
    "metadataOnly": false,
    "routeOnly": false
  },
  "streamSettings": {
    "network": "quic",
    "quicSettings": {
      "header": {
        "type": "none"
      },
      "key": "key",
      "security": "none"
    },
    "security": "none"
  },
  "tag": "inbound-3636"
}
```

marzban logs:

|

closed

|

2024-07-04T23:32:48Z

|

2024-08-13T21:35:09Z

|

https://github.com/Gozargah/Marzban/issues/1086

|

[

"Bug"

] |

m0x61h0x64i

| 2

|

mars-project/mars

|

pandas

| 2,585

|

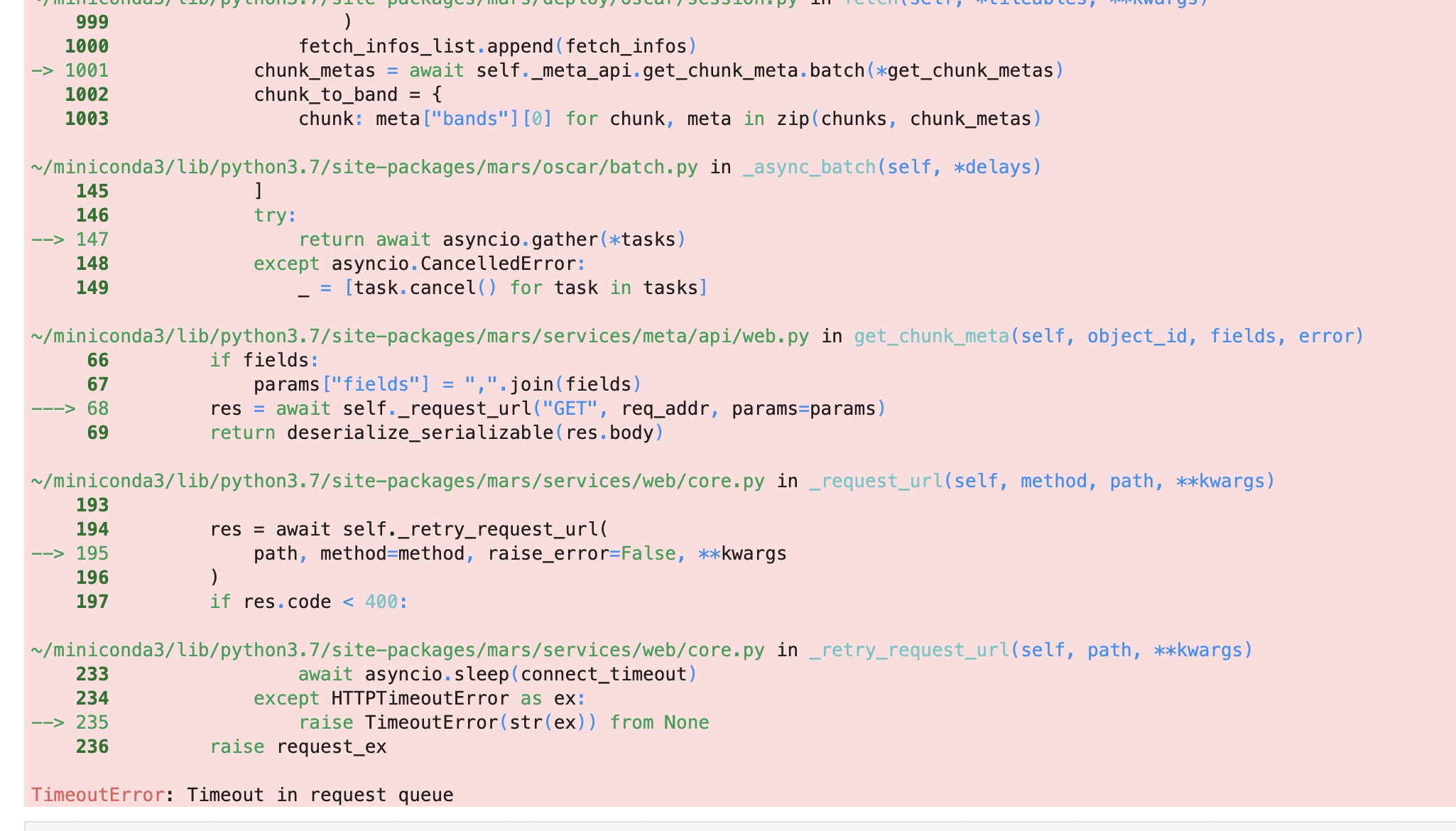

[BUG] TimeoutError: Timeout in request queue

|

When fetching a DataFrame to local, the following errors occur if the number of chunks is greater than 200:

|

open

|

2021-11-25T10:35:52Z

|

2021-11-25T10:35:52Z

|

https://github.com/mars-project/mars/issues/2585

|

[] |

chaokunyang

| 0

|

roboflow/supervision

|

machine-learning

| 1,694

|

Crash when filtering empty detections: xyxy shape (0, 0, 4).

|

Reproduction code:

```python
import supervision as sv
import numpy as np

CLASSES = [0, 1, 2]

prediction = sv.Detections.empty()
prediction = prediction[np.isin(prediction["class_name"], CLASSES)]
```

Error:

```

Traceback (most recent call last):

File "/Users/linasko/.settler_workspace/pr/supervision-fresh/run_detections.py", line 7, in <module>

prediction = prediction[np.isin(prediction["class_name"], CLASSES)]

File "/Users/linasko/.settler_workspace/pr/supervision-fresh/supervision/detection/core.py", line 1206, in __getitem__

return Detections(

File "<string>", line 10, in __init__

File "/Users/linasko/.settler_workspace/pr/supervision-fresh/supervision/detection/core.py", line 144, in __post_init__

validate_detections_fields(

File "/Users/linasko/.settler_workspace/pr/supervision-fresh/supervision/validators/__init__.py", line 120, in validate_detections_fields

validate_xyxy(xyxy)

File "/Users/linasko/.settler_workspace/pr/supervision-fresh/supervision/validators/__init__.py", line 11, in validate_xyxy

raise ValueError(

ValueError: xyxy must be a 2D np.ndarray with shape (_, 4), but got shape (0, 0, 4)

```
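The reported shape `(0, 0, 4)` matches what NumPy does when an array is indexed with a 0-d (scalar) boolean mask: instead of selecting rows, a scalar `False` adds a new axis of length 0. One plausible cause, which has not been verified against supervision's source, is that `prediction["class_name"]` yields no per-detection array for an empty `Detections`, so `np.isin` returns a scalar. The sketch below reproduces the shape with plain NumPy and contrasts it with a 1-D mask that has one entry per detection; the `class_ids` array is an illustrative stand-in, not a supervision field.

```python
import numpy as np

CLASSES = [0, 1, 2]

# An empty set of boxes, as an empty detections object would store it: shape (0, 4).
xyxy = np.empty((0, 4), dtype=np.float32)

# A 0-d boolean mask (what np.isin returns for a scalar input) does not select
# rows; NumPy treats it as a new axis of length 0 (False) or 1 (True).
scalar_mask = np.asarray(False)
scalar_result = xyxy[scalar_mask]
print(scalar_result.shape)  # (0, 0, 4)

# A 1-D mask with one entry per detection keeps the array two-dimensional.
class_ids = np.empty(0, dtype=int)  # hypothetical per-detection class ids
row_mask = np.isin(class_ids, CLASSES)
row_result = xyxy[row_mask]
print(row_result.shape)  # (0, 4)
```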

|

closed

|

2024-11-28T11:31:18Z

|

2024-12-04T10:15:33Z

|

https://github.com/roboflow/supervision/issues/1694

|

[

"bug"

] |

LinasKo

| 0

|

flasgger/flasgger

|

rest-api

| 146

|

OpenAPI 3.0

|

https://www.youtube.com/watch?v=wBDSR0x3GZo

|

open

|

2017-08-10T17:42:13Z

|

2020-07-16T10:23:14Z

|

https://github.com/flasgger/flasgger/issues/146

|

[

"hacktoberfest"

] |

rochacbruno

| 10

|

piskvorky/gensim

|

machine-learning

| 3,536

|

scipy probably not needed in [build-system.requires] table

|

<!--

**IMPORTANT**:

- Use the [Gensim mailing list](https://groups.google.com/g/gensim) to ask general or usage questions. Github issues are only for bug reports.

- Check [Recipes&FAQ](https://github.com/RaRe-Technologies/gensim/wiki/Recipes-&-FAQ) first for common answers.

Github bug reports that do not include relevant information and context will be closed without an answer. Thanks!

-->

#### Problem description

I believe that specifying `scipy` as a build-only dependency is unnecessary.

By default, pip builds the library in an isolated environment, into which it first downloads (or, if necessary, builds) the build-only dependencies. This behaviour creates additional problems for architectures with few _.whl_ distributions, such as **ppc64le**.

#### Steps/code/corpus to reproduce

1) create an environment where there is already an older `scipy` library version installed

2)

```sh

cd gensim/

pip install .

```

3) As there is no `gensim` _.whl_ distribution for **ppc64le**, pip will try building `gensim` in a new isolated environment with build-only dependencies.

As there is no `scipy` _.whl_ distribution for ppc64le either, pip will try to build that as well, even though `scipy` is already installed in the desired version in the target environment.

Not only will there be a `scipy` version mismatch (pip builds the latest version in the isolated environment), but this will also:

* significantly prolong the install phase, as `scipy` takes relatively long to build from source,

* create an additional dependency mess, as the `scipy` build requires multiple other dependencies and system-level libraries.

#### Desired resolution

I've tested locally installing gensim with modified `pyproject.toml` file (deleted `scipy`) and it works as expected.

Is there any other reason that prevents removing `scipy` from the build-only dependencies?

#### Versions

`gensim>4.3.*`

|

closed

|

2024-06-06T13:42:33Z

|

2024-07-18T12:03:09Z

|

https://github.com/piskvorky/gensim/issues/3536

|

[

"awaiting reply"

] |

filip-komarzyniec

| 2

|

albumentations-team/albumentations

|

deep-learning

| 2,097

|

[Add transform] Add RandomJPEG

|

Add `RandomJPEG`, a subclass of `ImageCompression` with the same API as Kornia's:

https://kornia.readthedocs.io/en/latest/augmentation.module.html#kornia.augmentation.RandomJPEG

|

closed

|

2024-11-08T15:50:40Z

|

2024-11-09T00:58:42Z

|

https://github.com/albumentations-team/albumentations/issues/2097

|

[

"enhancement"

] |

ternaus

| 0

|

gyli/PyWaffle

|

data-visualization

| 2

|

width problems with a thousand blocks

|

When plotting a large number of blocks, the width of the white space between them becomes inconsistent:

```python
import matplotlib.pyplot as plt
from pywaffle import Waffle

plt.figure(
    FigureClass=Waffle,
    rows=20,
    columns=80,
    values=[300, 700],
    figsize=(18, 10),
)
plt.savefig('example.png')
```

This is probably outside the original scope of the package and maybe should even be discouraged, but it is sometimes useful for giving the reader the impression of dealing with a large population. Feel free to close this issue.
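One possible explanation, offered as a hypothesis rather than a confirmed diagnosis: with 80 columns on an 18-inch-wide figure saved at Matplotlib's default 100 dpi, each column gets 1800 / 80 = 22.5 pixels, so rasterized block edges must alternate between 22- and 23-pixel steps and the gaps look uneven. The simplified model below ignores figure margins and assumes cell edges are snapped to whole pixels by flooring; the helper name `cell_widths_px` is hypothetical.

```python
import math


def cell_widths_px(width_in: float, dpi: int, columns: int) -> set:
    """Distinct pixel widths of grid cells if edges are floored to whole pixels."""
    step = width_in * dpi / columns  # ideal (possibly fractional) cell width
    edges = [math.floor(i * step) for i in range(columns + 1)]
    return {right - left for left, right in zip(edges, edges[1:])}


print(sorted(cell_widths_px(18, 100, 80)))  # [22, 23]: cells alternate in width
print(sorted(cell_widths_px(18, 160, 80)))  # [36]: every cell has the same width
```

If the hypothesis holds, choosing a `dpi` in `plt.savefig('example.png', dpi=...)` that makes the per-cell width a whole number of pixels might help; real margins shift these numbers, so treat this as something to experiment with.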

|

open

|

2017-11-23T17:27:12Z

|

2019-10-06T22:29:14Z

|

https://github.com/gyli/PyWaffle/issues/2

|

[] |

lincolnfrias

| 2

|

pyjanitor-devs/pyjanitor

|

pandas

| 1,200

|

[BUG] `deprecated_kwargs` (list[str]) in v0.24 raises type object not subscriptable error

|

# Brief Description

The addition of `deprecated_kwargs` in version 0.24 causes a `'type' object is not subscriptable` error.

# System Information

I'm using Python 3.8.12 on a SageMaker instance, and I'm pretty sure that is the cause: my company has us locked to 3.8.12 right now. Pinning v0.23 does solve the problem.

I'm sorry if this isn't enough information at the moment; let me know if you need anything else.

# Error

```

TypeError Traceback (most recent call last)

/tmp/ipykernel_18038/2902872131.py in <cell line: 1>()

----> 1 import janitor

~/anaconda3/envs/python3/lib/python3.8/site-packages/janitor/__init__.py in <module>

7

8 from .accessors import * # noqa: F403, F401

----> 9 from .functions import * # noqa: F403, F401

10 from .io import * # noqa: F403, F401

11 from .math import * # noqa: F403, F401

~/anaconda3/envs/python3/lib/python3.8/site-packages/janitor/functions/__init__.py in <module>

17

18

---> 19 from .add_columns import add_columns

20 from .also import also

21 from .bin_numeric import bin_numeric

~/anaconda3/envs/python3/lib/python3.8/site-packages/janitor/functions/add_columns.py in <module>

1 import pandas_flavor as pf

2

----> 3 from janitor.utils import check, deprecated_alias

4 import pandas as pd

5 from typing import Union, List, Any, Tuple

~/anaconda3/envs/python3/lib/python3.8/site-packages/janitor/utils.py in <module>

214

215 def deprecated_kwargs(

--> 216 *arguments: list[str],

217 message: str = (

218 "The keyword argument '{argument}' of '{func_name}' is deprecated."

TypeError: 'type' object is not subscriptable

```
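The traceback is consistent with PEP 585: built-in collection types such as `list` only became subscriptable at runtime in Python 3.9, so evaluating the annotation `list[str]` on 3.8 raises `TypeError: 'type' object is not subscriptable`. A minimal sketch of two 3.8-compatible spellings follows; the function names are hypothetical and this is not pyjanitor's actual code. Quoting the annotation keeps it from being evaluated when the function is defined, which is what `from __future__ import annotations` (PEP 563) does for a whole module.

```python
import sys
from typing import List


def builtin_generics_supported() -> bool:
    """True if list[str] can be evaluated at runtime (Python 3.9+)."""
    try:
        list[str]  # raises TypeError on Python 3.8 and earlier
    except TypeError:
        return False
    return True


def count_names_typing(*arguments: List[str]) -> int:
    # typing.List has been subscriptable since the typing module appeared (3.5).
    return len(arguments)


def count_names_quoted(*arguments: "list[str]") -> int:
    # A string annotation is stored as-is and never evaluated at definition time.
    return len(arguments)


print(sys.version_info >= (3, 9), builtin_generics_supported())
print(count_names_typing(["a"], ["b"]), count_names_quoted(["a"]))
```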

|

closed

|

2022-11-14T16:11:14Z

|

2022-11-21T06:04:41Z

|

https://github.com/pyjanitor-devs/pyjanitor/issues/1200

|

[

"bug"

] |

zykezero

| 11

|

modelscope/modelscope

|

nlp

| 1,122

|

from modelscope.msdatasets import MsDataset raises an error

|

(Pdb) from modelscope.msdatasets import MsDataset

*** ModuleNotFoundError: No module named 'datasets.download'

(Pdb) import modelscope

(Pdb) modelscope.__version__

'1.17.0'

(Pdb) datasets.__version__

'2.0.0'

Python 3.10.15, Ubuntu 22.04

Which version of `datasets` does the current ModelScope require?

|

closed

|

2024-12-04T10:15:02Z

|

2024-12-19T12:13:28Z

|

https://github.com/modelscope/modelscope/issues/1122

|

[] |

robator0127

| 1

|

microsoft/UFO

|

automation

| 190

|

Batch Mode and Follower Mode raise a "No module named 'ufo.config'; 'ufo' is not a package" exception

|

When following the documented steps for [Batch Mode](https://microsoft.github.io/UFO/advanced_usage/batch_mode/) and [Follower Mode](https://microsoft.github.io/UFO/advanced_usage/follower_mode/), the command throws "ModuleNotFoundError: No module named 'ufo.config'; 'ufo' is not a package" and cannot be executed.

**Here is the repro steps:**

Assume the Plan file is prepared based on the document.

1. Open Command Prompt Window and navigate to the cloned UFO folder.

2. Run `python ufo\ufo.py --task_name testbatchmode --mode batch_normal --plan "parentpath\planfilename.json"`.

**Expected Result:**

The command runs without errors.

**Actual Result:**

It fails with the error below:

```

Traceback (most recent call last):

File "E:\Repos\UFO\ufo\ufo.py", line 7, in <module>

from ufo.config.config import Config

File "E:\Repos\UFO\ufo\ufo.py", line 7, in <module>

from ufo.config.config import Config

ModuleNotFoundError: No module named 'ufo.config'; 'ufo' is not a package

```

**What we have tried:**

We tried using `pip install` to install `ufo` or `ufo.config`, but neither could be found.

We also tried the latest vyokky/dev branch, but the error persists.
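The doubled `ufo.py, line 7` frame in the traceback is the classic symptom of running a file that lives inside a package directly as a script. Python puts the script's own directory (`ufo\`) first on `sys.path`, so `import ufo` resolves to `ufo\ufo.py` itself, a plain module rather than the `ufo` package, and `ufo.config` cannot be found under it. The self-contained reproduction below builds a throwaway layout that only mirrors the file names in the issue (the file contents are hypothetical) and compares running the file as a script with running it as a module via `python -m`.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def build_layout(root: Path) -> None:
    """Create a throwaway package 'ufo' that contains a module also named 'ufo.py'."""
    pkg = root / "ufo"
    (pkg / "config").mkdir(parents=True)
    (pkg / "__init__.py").write_text("")
    (pkg / "config" / "__init__.py").write_text("")
    (pkg / "config" / "config.py").write_text("class Config:\n    pass\n")
    (pkg / "ufo.py").write_text("from ufo.config.config import Config\nprint('ok')\n")


def run_python(root: Path, *args: str) -> subprocess.CompletedProcess:
    """Run the current interpreter with `args`, using `root` as the working directory."""
    return subprocess.run([sys.executable, *args], cwd=root, capture_output=True, text=True)


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    build_layout(root)
    # As a script: sys.path[0] is <root>/ufo, so "import ufo" finds ufo/ufo.py
    # (a plain module) and "ufo.config" cannot be imported from it.
    as_script = run_python(root, str(Path("ufo") / "ufo.py"))
    # As a module: sys.path[0] is the working directory, so "ufo" is the package.
    as_module = run_python(root, "-m", "ufo.ufo")

print(as_script.returncode, "'ufo' is not a package" in as_script.stderr)
print(as_module.returncode, as_module.stdout.strip())
```

Assuming the project is meant to be launched as a package, running it from the repository root with `python -m ...` instead of `python ufo\ufo.py ...` should avoid the shadowing; check the project's documentation for the exact module entry point.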

|

open

|

2025-03-19T06:33:06Z

|

2025-03-19T08:28:24Z

|

https://github.com/microsoft/UFO/issues/190

|

[] |

WeiweiCaiAcpt

| 2

|

automl/auto-sklearn

|

scikit-learn

| 1,573

|

Add pylint linter

|

After we have removed all mypy ignores.

|

open

|

2022-08-22T11:23:17Z

|

2022-08-24T04:04:50Z

|

https://github.com/automl/auto-sklearn/issues/1573

|

[

"maintenance"

] |

mfeurer

| 0

|

RobertCraigie/prisma-client-py

|

pydantic

| 106

|

Experimental support for the Decimal type

|

## Why is this experimental?

Currently Prisma Client Python does not have access to the field metadata containing the precision of `Decimal` fields at the database level. This means that we cannot:

- Raise an error if you attempt to pass a `Decimal` value with a greater precision than the database supports, leading to implicit truncation which may cause confusing errors

- Set the precision level on the returned `decimal.Decimal` objects to match the database level, potentially leading to even more confusing errors.

To mitigate the effects of these errors, you must explicitly acknowledge that support for the `Decimal` type is not up to the standard of the other types. You do this by setting the `enable_experimental_decimal` config flag, e.g.:

```prisma
generator py {
  provider                    = "prisma-client-py"
  enable_experimental_decimal = true
}
```
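The two limitations listed above can be illustrated with Python's standard `decimal` module. The sketch below assumes a hypothetical column that keeps two decimal places and truncates extra digits (real databases may round or reject instead); `exceeds_scale` stands in for the check the client cannot currently perform because it lacks the column's precision metadata. Neither helper is part of Prisma Client Python.

```python
from decimal import ROUND_DOWN, Decimal


def exceeds_scale(value: Decimal, scale: int) -> bool:
    """True if `value` has more digits after the decimal point than `scale` allows."""
    exponent = value.as_tuple().exponent
    # NaN and infinities report a string exponent; they have no fractional digits.
    return isinstance(exponent, int) and -exponent > scale


def simulate_storage(value: Decimal, scale: int) -> Decimal:
    """What a column keeping `scale` decimal places might store, assuming truncation."""
    return value.quantize(Decimal(1).scaleb(-scale), rounding=ROUND_DOWN)


price = Decimal("19.9999")
print(exceeds_scale(price, 2))     # True: an up-front check could raise here
print(simulate_storage(price, 2))  # 19.99: the silently truncated value
```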

|

closed

|

2021-11-07T23:58:44Z

|

2022-03-24T22:03:06Z

|

https://github.com/RobertCraigie/prisma-client-py/issues/106

|

[

"topic: types",

"kind/feature",

"level/advanced",

"priority/medium"

] |

RobertCraigie

| 12

|

graphdeco-inria/gaussian-splatting

|

computer-vision

| 986

|

Error when installing the SIBR viewer on Ubuntu 22.04

|

Hi! I had this error when I ran the installation command

`cmake -Bbuild . -DCMAKE_BUILD_TYPE=Release`

```
There is no provided OpenCV library for your compiler, relying on find_package to find it
-- Found OpenCV: /usr (found suitable version "4.5.4", minimum required is "4.5")
-- Populating library imgui...
-- Populating library nativefiledialog...
-- Checking for module 'gtk+-3.0'
-- Package 'Lerc', required by 'libtiff-4', not found
CMake Error at /usr/share/cmake-3.22/Modules/FindPkgConfig.cmake:603 (message):
A required package was not found
Call Stack (most recent call first):
/usr/share/cmake-3.22/Modules/FindPkgConfig.cmake:825 (_pkg_check_modules_internal)
extlibs/nativefiledialog/nativefiledialog/CMakeLists.txt:20 (pkg_check_modules)
-- Configuring incomplete, errors occurred!
See also "/home/yiduo/projects/code/gs/SIBR_viewers/build/CMakeFiles/CMakeOutput.log".
```

System: Ubuntu 22.04.5 LTS

Cmake version: 3.22.1

This may not be related to the codebase here, but does anyone have an idea how to solve it? Thanks a lot!

|

open

|

2024-09-13T00:09:47Z

|

2024-09-13T00:09:47Z

|

https://github.com/graphdeco-inria/gaussian-splatting/issues/986

|

[] |

yiduohao

| 0

|

koxudaxi/datamodel-code-generator

|

fastapi

| 1,982

|

AttributeError: 'FieldInfo' object has no attribute '<EnumName>'

|

**Describe the bug**

Generating from a schema with an Enum type causes `AttributeError: 'FieldInfo' object has no attribute '<EnumName>'`

**To Reproduce**

File structure after codegen should look like:

```

schemas/

├─ bean.json

├─ bean_type.json

src/

├─ __init__.py

├─ bean.py

├─ bean_type.py

main.py

```

With the schemas defined as follows:

`schemas/bean.json`

```json
{
  "$schema": "https://json-schema.org/draft/2020-12/schema",
  "$id": "bean.json",
  "type": "object",
  "title": "Bean",
  "properties": {
    "beanType": { "$ref": "bean_type.json" },
    "name": { "type": "string" }
  },
  "additionalProperties": false,
  "required": ["beanType", "name"]
}
```

`schemas/bean_type.json`

```json
{
  "$schema": "https://json-schema.org/draft/2020-12/schema",
  "$id": "bean_type.json",
  "title": "BeanType",
  "additionalProperties": false,
  "enum": ["STRING_BEAN", "RUNNER_BEAN", "GREEN_BEAN", "BAKED_BEAN"]
}
```

and

`main.py`

```py
from src.bean import Bean

if __name__ == "__main__":
    pass
```

**Used commandline**

```
$ datamodel-codegen \
    --use-title-as-name \
    --use-standard-collections \
    --snake-case-field \
    --target-python-version 3.12 \
    --input schemas \
    --input-file-type jsonschema \
    --output src
```

**Expected behavior**

Code generation should behave exactly as it did, and we should then be able to import and use the generated classes. Instead, an `AttributeError` is raised.

**Version:**

- OS: MacOS 14.3.1 (23D60)

- Python version: 3.12.3

- Pydantic version: 2.7.2

- datamodel-code-generator version: 0.25.6

**Additional context**

In the generated file `src/bean.py`, if we manually change `from . import bean_type` to `from .bean_type import BeanType` and update the corresponding usage in the `Bean` class definition, the error disappears. This might be related to #1683 / #1684.
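One plausible mechanism, assuming the generated `Bean` declares a snake-case field named `bean_type` whose annotation is `bean_type.BeanType` (the generator's exact output has not been reproduced here): in a class body, an annotated assignment binds the value before the annotation is evaluated, so by the time `bean_type.BeanType` is looked up, the name `bean_type` refers to the field default rather than the imported module. The pure-Python sketch below reproduces the error shape with a hypothetical `FieldInfoStandIn` class in place of Pydantic's `FieldInfo` and a `SimpleNamespace` in place of the `bean_type` module.

```python
import types


class FieldInfoStandIn:
    """Hypothetical stand-in for the object that pydantic.Field(...) returns."""


# Stand-in for the sibling module brought in by "from . import bean_type".
bean_type = types.SimpleNamespace(BeanType=str)
# Stand-in for the manual fix "from .bean_type import BeanType".
BeanType = bean_type.BeanType


def shadowed_annotation_error():
    """Return the error message raised when the field name shadows the module name."""
    try:
        class Bean:
            # The default is bound to "bean_type" first; the annotation is then
            # evaluated and finds that class attribute instead of the module.
            bean_type: bean_type.BeanType = FieldInfoStandIn()

        Bean.__annotations__  # forces evaluation where annotations are lazy
    except AttributeError as exc:
        return str(exc)
    return None


def fixed_annotation():
    """With the class imported under its own name, the annotation no longer clashes."""
    class Bean:
        bean_type: BeanType = FieldInfoStandIn()

    return Bean.__annotations__["bean_type"]


print(shadowed_annotation_error())  # 'FieldInfoStandIn' object has no attribute 'BeanType'
print(fixed_annotation())
```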

|

closed

|

2024-06-02T15:01:30Z

|

2024-06-18T05:14:07Z

|

https://github.com/koxudaxi/datamodel-code-generator/issues/1982

|

[] |

alpoi-x

| 0

|

tensorflow/tensor2tensor

|

machine-learning

| 1,523

|

Is the Evolved Transformer code the final graph, or the whole procedure for finding the best graph?

|

I'm new to neural architecture search. Thank you.

|

open

|

2019-03-25T02:27:41Z

|

2020-11-12T15:56:57Z

|

https://github.com/tensorflow/tensor2tensor/issues/1523

|

[] |

guotong1988

| 6

|

ghtmtt/DataPlotly

|

plotly

| 257

|

Display every record as a line in a scatter plot

|

Hi,

**Short Feature Explanation**

I am wondering if it would be possible to create a scatter plot that displays a line per record instead of a point per record.

This would be done by selecting two columns storing arrays of values for the x and y fields.

For example, with a table Temp(xs int[], ys[]), selecting the xs and ys columns for the x and y fields respectively would create a scatter plot with as many lines as there are records in the table, and as many points per line as there are values in the xs and ys arrays. (Of course, xs and ys must have the same number of elements.)
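The requested behaviour amounts to turning each record's pair of array columns into one line trace. A minimal sketch of that data shaping in plain Python, assuming records arrive as dictionaries with hypothetical keys `name`, `xs`, and `ys`; the output follows Plotly's dictionary figure format (`{"data": [...], "layout": {...}}` with `"scatter"` traces), but it is not DataPlotly's code.

```python
from typing import Any, Dict, List, Sequence


def records_to_line_figure(
    records: Sequence[Dict[str, Any]],
    x_key: str = "xs",
    y_key: str = "ys",
    name_key: str = "name",
) -> Dict[str, Any]:
    """Build a Plotly-style figure dict with one line trace per record."""
    traces: List[Dict[str, Any]] = []
    for record in records:
        xs, ys = list(record[x_key]), list(record[y_key])
        if len(xs) != len(ys):
            raise ValueError(f"record {record[name_key]!r}: {len(xs)} x values vs {len(ys)} y values")
        traces.append({"type": "scatter", "mode": "lines", "name": record[name_key], "x": xs, "y": ys})
    return {"data": traces, "layout": {}}


rows = [
    {"name": "first", "xs": [1, 2, 3], "ys": [4, 2, 5]},
    {"name": "second", "xs": [1, 2], "ys": [3, 3]},
]
figure = records_to_line_figure(rows)
print(len(figure["data"]))  # 2: one line per record
```

With Plotly installed, a dictionary like this can be passed to `plotly.graph_objects.Figure(...)` for rendering.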

**Context**

To explain my problem, I am a developer of [MobilityDB](https://github.com/MobilityDB/MobilityDB), and I am trying to display temporal properties in QGIS using DataPlotly. An example of a table that we want to display would be:

Ports(name text, port geometry(Polygon), shipsInside tint)

Every record thus represents a port and has an attribute storing the number of ships inside this port over time. The ports are represented as polygons on the map, and I would thus like to represent the 'shipsInside' attribute as a line on a scatter plot.

For simplicity, let's assume that this temporal attribute is stored in two columns: one containing an array of timestamps, and one containing an array of values: (this can be done in practice as well)

Ports(name text, port geometry, ts timestamptz[], vals int[])

**Current Workaround**

Currently, I can display a single record of the original table by creating a new table for it:

Ports_temp(name text, port geometry, t timestamptz, val int)

This table contains a record for each pair of (t, val) in the arrays of the original record.

Using this table, I can then create a scatterplot using t and val as the x and y fields respectively.

Of course, this solution is not ideal, since this demands a new table for each record of the original Ports table.

**Conclusion**

I am thus wondering how hard it would be to let scatter plots display records with temporal attributes as lines.

Ideally, this would be done either by selecting two columns that store arrays of values or by selecting a single temporal column (tint, tfloat or tbool).

Best Regards,

Maxime Schoemans

|

open

|

2021-03-15T17:03:19Z

|

2021-03-24T12:58:10Z

|

https://github.com/ghtmtt/DataPlotly/issues/257

|

[

"enhancement"

] |

mschoema

| 6

|

apache/airflow

|

python

| 47,970

|

"consuming_dags" and "producing_tasks" do not correct account for Asset.ref

|

### Body

They are direct SQLAlchemy relationships that only cover concrete references (`DagAssetScheduleReference` and `TaskOutletAssetReference`). I'm not quite sure how to fix this. Maybe they should be plain properties that return a list of a union type instead? We don't really need those relationships.
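A minimal sketch of the "plain property returning a list of a union" idea, using dataclasses instead of SQLAlchemy models; every class and attribute name here is hypothetical and does not correspond to Airflow's actual models.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class ScheduleByAsset:
    """Hypothetical: a DAG scheduled on a concrete asset."""
    dag_id: str


@dataclass
class ScheduleByRef:
    """Hypothetical: a DAG scheduled on an asset reference (e.g. by name or URI)."""
    dag_id: str


@dataclass
class AssetSketch:
    concrete_refs: List[ScheduleByAsset] = field(default_factory=list)
    by_ref: List[ScheduleByRef] = field(default_factory=list)

    @property
    def consuming_dags(self) -> List[Union[ScheduleByAsset, ScheduleByRef]]:
        # A plain property can merge both kinds of reference, which a single
        # relationship to one concrete reference table cannot.
        return [*self.concrete_refs, *self.by_ref]


asset = AssetSketch([ScheduleByAsset("etl")], [ScheduleByRef("report")])
print([ref.dag_id for ref in asset.consuming_dags])
```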

### Committer

- [x] I acknowledge that I am a maintainer/committer of the Apache Airflow project.

|

open

|

2025-03-19T18:23:11Z

|

2025-03-19T18:29:31Z

|

https://github.com/apache/airflow/issues/47970

|

[

"kind:bug",

"area:datasets"

] |

uranusjr

| 1

|

coqui-ai/TTS

|

deep-learning

| 3,177

|

[Bug] Loading XTTS via Xtts.load_checkpoint()

|

### Describe the bug

When loading the model with `Xtts.load_checkpoint`, an exception is raised: `Error(s) in loading state_dict for Xtts`, reporting missing keys for the GPT embedding weights and a size mismatch on the mel embedding. I also tried pointing it at the directory containing the base (v2) model checkpoints and got the same result.
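When `load_state_dict` reports hundreds of missing keys, grouping them by prefix makes the pattern easier to see (in the log for this issue, almost all of them start with `gpt.gpt_inference`). The helper below is a hypothetical utility, not part of Coqui TTS; in PyTorch, `model.load_state_dict(state_dict, strict=False)` returns an object whose `missing_keys` and `unexpected_keys` lists could be fed to it.

```python
from collections import Counter
from typing import Dict, Iterable


def summarize_keys(keys: Iterable[str], depth: int = 2) -> Dict[str, int]:
    """Count state_dict keys by their first `depth` dot-separated components."""
    return dict(Counter(".".join(key.split(".")[:depth]) for key in keys))


missing = [
    "gpt.gpt.wte.weight",
    "gpt.prompt_embedding.weight",
    "gpt.gpt_inference.transformer.h.0.ln_1.weight",
    "gpt.gpt_inference.transformer.h.0.ln_1.bias",
]
print(summarize_keys(missing))
# {'gpt.gpt': 1, 'gpt.prompt_embedding': 1, 'gpt.gpt_inference': 2}
```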

### To Reproduce

```python
import os
import torch
import torchaudio
from TTS.tts.configs.xtts_config import XttsConfig
from TTS.tts.models.xtts import Xtts

print("Loading model...")
config = XttsConfig()
config.load_json("/path/to/xtts/config.json")
model = Xtts.init_from_config(config)
model.load_checkpoint(config, checkpoint_dir="/path/to/xtts/", use_deepspeed=True)
model.cuda()

print("Computing speaker latents...")
gpt_cond_latent, speaker_embedding = model.get_conditioning_latents(audio_path=["reference.wav"])

print("Inference...")
out = model.inference(
    "It took me quite a long time to develop a voice and now that I have it I am not going to be silent.",
    "en",
    gpt_cond_latent,
    speaker_embedding,
    temperature=0.7,  # Add custom parameters here
)
torchaudio.save("xtts.wav", torch.tensor(out["wav"]).unsqueeze(0), 24000)
```

### Expected behavior

Load the checkpoint and run inference without exception.

### Logs

```shell

11-08 22:13:53 [__main__ ] ERROR - Error(s) in loading state_dict for Xtts:

Missing key(s) in state_dict: "gpt.gpt.wte.weight", "gpt.prompt_embedding.weight", "gpt.prompt_pos_embedding.emb.weight", "gpt.gpt_inference.transformer.h.0.ln_1.weight", "gpt.gpt_inference.transformer.h.0.ln_1.bias", "gpt.gpt_inference.transformer.h.0.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.0.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.0.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.0.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.0.ln_2.weight", "gpt.gpt_inference.transformer.h.0.ln_2.bias", "gpt.gpt_inference.transformer.h.0.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.0.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.0.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.0.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.1.ln_1.weight", "gpt.gpt_inference.transformer.h.1.ln_1.bias", "gpt.gpt_inference.transformer.h.1.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.1.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.1.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.1.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.1.ln_2.weight", "gpt.gpt_inference.transformer.h.1.ln_2.bias", "gpt.gpt_inference.transformer.h.1.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.1.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.1.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.1.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.2.ln_1.weight", "gpt.gpt_inference.transformer.h.2.ln_1.bias", "gpt.gpt_inference.transformer.h.2.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.2.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.2.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.2.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.2.ln_2.weight", "gpt.gpt_inference.transformer.h.2.ln_2.bias", "gpt.gpt_inference.transformer.h.2.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.2.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.2.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.2.mlp.c_proj.bias", 
"gpt.gpt_inference.transformer.h.3.ln_1.weight", "gpt.gpt_inference.transformer.h.3.ln_1.bias", "gpt.gpt_inference.transformer.h.3.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.3.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.3.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.3.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.3.ln_2.weight", "gpt.gpt_inference.transformer.h.3.ln_2.bias", "gpt.gpt_inference.transformer.h.3.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.3.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.3.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.3.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.4.ln_1.weight", "gpt.gpt_inference.transformer.h.4.ln_1.bias", "gpt.gpt_inference.transformer.h.4.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.4.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.4.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.4.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.4.ln_2.weight", "gpt.gpt_inference.transformer.h.4.ln_2.bias", "gpt.gpt_inference.transformer.h.4.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.4.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.4.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.4.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.5.ln_1.weight", "gpt.gpt_inference.transformer.h.5.ln_1.bias", "gpt.gpt_inference.transformer.h.5.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.5.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.5.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.5.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.5.ln_2.weight", "gpt.gpt_inference.transformer.h.5.ln_2.bias", "gpt.gpt_inference.transformer.h.5.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.5.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.5.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.5.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.6.ln_1.weight", "gpt.gpt_inference.transformer.h.6.ln_1.bias", 
"gpt.gpt_inference.transformer.h.6.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.6.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.6.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.6.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.6.ln_2.weight", "gpt.gpt_inference.transformer.h.6.ln_2.bias", "gpt.gpt_inference.transformer.h.6.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.6.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.6.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.6.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.7.ln_1.weight", "gpt.gpt_inference.transformer.h.7.ln_1.bias", "gpt.gpt_inference.transformer.h.7.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.7.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.7.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.7.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.7.ln_2.weight", "gpt.gpt_inference.transformer.h.7.ln_2.bias", "gpt.gpt_inference.transformer.h.7.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.7.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.7.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.7.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.8.ln_1.weight", "gpt.gpt_inference.transformer.h.8.ln_1.bias", "gpt.gpt_inference.transformer.h.8.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.8.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.8.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.8.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.8.ln_2.weight", "gpt.gpt_inference.transformer.h.8.ln_2.bias", "gpt.gpt_inference.transformer.h.8.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.8.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.8.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.8.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.9.ln_1.weight", "gpt.gpt_inference.transformer.h.9.ln_1.bias", "gpt.gpt_inference.transformer.h.9.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.9.attn.c_attn.bias", 
"gpt.gpt_inference.transformer.h.9.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.9.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.9.ln_2.weight", "gpt.gpt_inference.transformer.h.9.ln_2.bias", "gpt.gpt_inference.transformer.h.9.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.9.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.9.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.9.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.10.ln_1.weight", "gpt.gpt_inference.transformer.h.10.ln_1.bias", "gpt.gpt_inference.transformer.h.10.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.10.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.10.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.10.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.10.ln_2.weight", "gpt.gpt_inference.transformer.h.10.ln_2.bias", "gpt.gpt_inference.transformer.h.10.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.10.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.10.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.10.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.11.ln_1.weight", "gpt.gpt_inference.transformer.h.11.ln_1.bias", "gpt.gpt_inference.transformer.h.11.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.11.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.11.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.11.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.11.ln_2.weight", "gpt.gpt_inference.transformer.h.11.ln_2.bias", "gpt.gpt_inference.transformer.h.11.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.11.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.11.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.11.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.12.ln_1.weight", "gpt.gpt_inference.transformer.h.12.ln_1.bias", "gpt.gpt_inference.transformer.h.12.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.12.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.12.attn.c_proj.weight", 
"gpt.gpt_inference.transformer.h.12.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.12.ln_2.weight", "gpt.gpt_inference.transformer.h.12.ln_2.bias", "gpt.gpt_inference.transformer.h.12.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.12.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.12.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.12.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.13.ln_1.weight", "gpt.gpt_inference.transformer.h.13.ln_1.bias", "gpt.gpt_inference.transformer.h.13.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.13.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.13.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.13.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.13.ln_2.weight", "gpt.gpt_inference.transformer.h.13.ln_2.bias", "gpt.gpt_inference.transformer.h.13.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.13.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.13.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.13.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.14.ln_1.weight", "gpt.gpt_inference.transformer.h.14.ln_1.bias", "gpt.gpt_inference.transformer.h.14.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.14.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.14.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.14.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.14.ln_2.weight", "gpt.gpt_inference.transformer.h.14.ln_2.bias", "gpt.gpt_inference.transformer.h.14.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.14.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.14.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.14.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.15.ln_1.weight", "gpt.gpt_inference.transformer.h.15.ln_1.bias", "gpt.gpt_inference.transformer.h.15.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.15.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.15.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.15.attn.c_proj.bias", 
"gpt.gpt_inference.transformer.h.15.ln_2.weight", "gpt.gpt_inference.transformer.h.15.ln_2.bias", "gpt.gpt_inference.transformer.h.15.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.15.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.15.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.15.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.16.ln_1.weight", "gpt.gpt_inference.transformer.h.16.ln_1.bias", "gpt.gpt_inference.transformer.h.16.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.16.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.16.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.16.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.16.ln_2.weight", "gpt.gpt_inference.transformer.h.16.ln_2.bias", "gpt.gpt_inference.transformer.h.16.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.16.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.16.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.16.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.17.ln_1.weight", "gpt.gpt_inference.transformer.h.17.ln_1.bias", "gpt.gpt_inference.transformer.h.17.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.17.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.17.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.17.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.17.ln_2.weight", "gpt.gpt_inference.transformer.h.17.ln_2.bias", "gpt.gpt_inference.transformer.h.17.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.17.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.17.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.17.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.18.ln_1.weight", "gpt.gpt_inference.transformer.h.18.ln_1.bias", "gpt.gpt_inference.transformer.h.18.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.18.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.18.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.18.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.18.ln_2.weight", 
"gpt.gpt_inference.transformer.h.18.ln_2.bias", "gpt.gpt_inference.transformer.h.18.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.18.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.18.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.18.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.19.ln_1.weight", "gpt.gpt_inference.transformer.h.19.ln_1.bias", "gpt.gpt_inference.transformer.h.19.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.19.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.19.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.19.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.19.ln_2.weight", "gpt.gpt_inference.transformer.h.19.ln_2.bias", "gpt.gpt_inference.transformer.h.19.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.19.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.19.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.19.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.20.ln_1.weight", "gpt.gpt_inference.transformer.h.20.ln_1.bias", "gpt.gpt_inference.transformer.h.20.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.20.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.20.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.20.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.20.ln_2.weight", "gpt.gpt_inference.transformer.h.20.ln_2.bias", "gpt.gpt_inference.transformer.h.20.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.20.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.20.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.20.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.21.ln_1.weight", "gpt.gpt_inference.transformer.h.21.ln_1.bias", "gpt.gpt_inference.transformer.h.21.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.21.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.21.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.21.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.21.ln_2.weight", "gpt.gpt_inference.transformer.h.21.ln_2.bias", 
"gpt.gpt_inference.transformer.h.21.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.21.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.21.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.21.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.22.ln_1.weight", "gpt.gpt_inference.transformer.h.22.ln_1.bias", "gpt.gpt_inference.transformer.h.22.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.22.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.22.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.22.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.22.ln_2.weight", "gpt.gpt_inference.transformer.h.22.ln_2.bias", "gpt.gpt_inference.transformer.h.22.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.22.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.22.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.22.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.23.ln_1.weight", "gpt.gpt_inference.transformer.h.23.ln_1.bias", "gpt.gpt_inference.transformer.h.23.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.23.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.23.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.23.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.23.ln_2.weight", "gpt.gpt_inference.transformer.h.23.ln_2.bias", "gpt.gpt_inference.transformer.h.23.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.23.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.23.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.23.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.24.ln_1.weight", "gpt.gpt_inference.transformer.h.24.ln_1.bias", "gpt.gpt_inference.transformer.h.24.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.24.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.24.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.24.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.24.ln_2.weight", "gpt.gpt_inference.transformer.h.24.ln_2.bias", "gpt.gpt_inference.transformer.h.24.mlp.c_fc.weight", 
"gpt.gpt_inference.transformer.h.24.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.24.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.24.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.25.ln_1.weight", "gpt.gpt_inference.transformer.h.25.ln_1.bias", "gpt.gpt_inference.transformer.h.25.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.25.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.25.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.25.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.25.ln_2.weight", "gpt.gpt_inference.transformer.h.25.ln_2.bias", "gpt.gpt_inference.transformer.h.25.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.25.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.25.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.25.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.26.ln_1.weight", "gpt.gpt_inference.transformer.h.26.ln_1.bias", "gpt.gpt_inference.transformer.h.26.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.26.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.26.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.26.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.26.ln_2.weight", "gpt.gpt_inference.transformer.h.26.ln_2.bias", "gpt.gpt_inference.transformer.h.26.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.26.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.26.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.26.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.27.ln_1.weight", "gpt.gpt_inference.transformer.h.27.ln_1.bias", "gpt.gpt_inference.transformer.h.27.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.27.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.27.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.27.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.27.ln_2.weight", "gpt.gpt_inference.transformer.h.27.ln_2.bias", "gpt.gpt_inference.transformer.h.27.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.27.mlp.c_fc.bias", 
"gpt.gpt_inference.transformer.h.27.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.27.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.28.ln_1.weight", "gpt.gpt_inference.transformer.h.28.ln_1.bias", "gpt.gpt_inference.transformer.h.28.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.28.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.28.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.28.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.28.ln_2.weight", "gpt.gpt_inference.transformer.h.28.ln_2.bias", "gpt.gpt_inference.transformer.h.28.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.28.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.28.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.28.mlp.c_proj.bias", "gpt.gpt_inference.transformer.h.29.ln_1.weight", "gpt.gpt_inference.transformer.h.29.ln_1.bias", "gpt.gpt_inference.transformer.h.29.attn.c_attn.weight", "gpt.gpt_inference.transformer.h.29.attn.c_attn.bias", "gpt.gpt_inference.transformer.h.29.attn.c_proj.weight", "gpt.gpt_inference.transformer.h.29.attn.c_proj.bias", "gpt.gpt_inference.transformer.h.29.ln_2.weight", "gpt.gpt_inference.transformer.h.29.ln_2.bias", "gpt.gpt_inference.transformer.h.29.mlp.c_fc.weight", "gpt.gpt_inference.transformer.h.29.mlp.c_fc.bias", "gpt.gpt_inference.transformer.h.29.mlp.c_proj.weight", "gpt.gpt_inference.transformer.h.29.mlp.c_proj.bias", "gpt.gpt_inference.transformer.ln_f.weight", "gpt.gpt_inference.transformer.ln_f.bias", "gpt.gpt_inference.transformer.wte.weight", "gpt.gpt_inference.pos_embedding.emb.weight", "gpt.gpt_inference.embeddings.weight", "gpt.gpt_inference.final_norm.weight", "gpt.gpt_inference.final_norm.bias", "gpt.gpt_inference.lm_head.0.weight", "gpt.gpt_inference.lm_head.0.bias", "gpt.gpt_inference.lm_head.1.weight", "gpt.gpt_inference.lm_head.1.bias".

Unexpected key(s) in state_dict: "gpt.conditioning_perceiver.latents", "gpt.conditioning_perceiver.layers.0.0.to_q.weight", "gpt.conditioning_perceiver.layers.0.0.to_kv.weight", "gpt.conditioning_perceiver.layers.0.0.to_out.weight", "gpt.conditioning_perceiver.layers.0.1.0.weight", "gpt.conditioning_perceiver.layers.0.1.0.bias", "gpt.conditioning_perceiver.layers.0.1.2.weight", "gpt.conditioning_perceiver.layers.0.1.2.bias", "gpt.conditioning_perceiver.layers.1.0.to_q.weight", "gpt.conditioning_perceiver.layers.1.0.to_kv.weight", "gpt.conditioning_perceiver.layers.1.0.to_out.weight", "gpt.conditioning_perceiver.layers.1.1.0.weight", "gpt.conditioning_perceiver.layers.1.1.0.bias", "gpt.conditioning_perceiver.layers.1.1.2.weight", "gpt.conditioning_perceiver.layers.1.1.2.bias", "gpt.conditioning_perceiver.norm.gamma".

size mismatch for gpt.mel_embedding.weight: copying a param with shape torch.Size([1026, 1024]) from checkpoint, the shape in current model is torch.Size([8194, 1024]).

size mismatch for gpt.mel_head.weight: copying a param with shape torch.Size([1026, 1024]) from checkpoint, the shape in current model is torch.Size([8194, 1024]).

size mismatch for gpt.mel_head.bias: copying a param with shape torch.Size([1026]) from checkpoint, the shape in current model is torch.Size([8194]).

```

### Environment

```shell
{
    "CUDA": {
        "GPU": ["NVIDIA A100-SXM4-80GB"],
        "available": true,
        "version": "11.8"
    },
    "Packages": {
        "PyTorch_debug": false,
        "PyTorch_version": "2.1.0+cu118",
        "TTS": "0.20.1",
        "numpy": "1.22.0"
    },
    "System": {
        "OS": "Linux",
        "architecture": [
            "64bit",
            "ELF"
        ],
        "processor": "x86_64",
        "python": "3.9.18",
        "version": "#183-Ubuntu SMP Mon Oct 2 11:28:33 UTC 2023"
    }
}
```

### Additional context

_No response_

|

closed

|

2023-11-09T03:28:30Z

|

2024-06-25T12:46:25Z

|

https://github.com/coqui-ai/TTS/issues/3177

|

[

"bug"

] |

caffeinetoomuch

| 12

|

ultralytics/yolov5

|

pytorch

| 13,243

|

Exporting a trained yolov5 model (trained on a custom dataset) to 'saved model' format changes the number of classes and the class names to the default coco128 values

|

### Search before asking

- [X] I have searched the YOLOv5 [issues](https://github.com/ultralytics/yolov5/issues) and found no similar bug report.

### YOLOv5 Component

Export

### Bug

I trained a yolov5s model to detect various logos (Amazon, UPS, FedEx, etc.). The model detects the logos well.

The command used for training is:

```python train.py --weights yolov5s.pt --epoch 100 --data C:\projects\logo_detector\yolov5\datasetv3\data.yaml```

The command used for detecting logos is:

```python detect.py --weights best.pt --source 0```

Screenshot of trained yolov5s model detecting the logos:

When I use export.py to convert the above model to the saved model format, the exported model gives wrong output.

The command used for exporting the model is:

```python export.py --weights best.pt --data C:\projects\logo_detector\yolov5\datasetv3\data.yaml --include saved_model```

The command used for detection of logos using this saved model is:

```python detect.py --weights best_saved_model --source 0```

Screenshot of yolov5s saved model giving wrong output is:

As far as I can tell, the exported model produces output according to the default coco128.yaml file (its class count and class names). I have not specified this file in any of my commands, so I do not understand the reason for this behaviour. Please let me know how to get the correct output.

### Environment

- I have used the default git repository for yolov5

- OS: Windows 10 Pro

- Python: 3.12.3

### Minimal Reproducible Example

_No response_

### Additional

_No response_

### Are you willing to submit a PR?

- [ ] Yes I'd like to help by submitting a PR!

|

open

|

2024-08-05T07:38:31Z

|

2024-10-27T13:30:48Z

|

https://github.com/ultralytics/yolov5/issues/13243

|

[

"bug"

] |

ssingh17j

| 2

|

Lightning-AI/pytorch-lightning

|

deep-learning

| 19,978

|

When running `test` with LightningCLI, the program can quit before the test loop ends

|

### Bug description

Within my `LightningModule`, I used `self.log_dict(metrics, on_step=True, on_epoch=True)` in `test_step`, and ran it with `python main.py test --config config.yaml`, where `main.py` contains only `cli = LightningCLI()` and `config.yaml` provides both the datasets and the model. The `TensorBoardLogger` is used.

However, after the program ends, the epoch-level metrics `epoch`, `test_accuracy_epoch` and `test_loss_epoch` only sometimes appear in the logger file. In most attempts these three metrics are missing, while the step-level metrics are always logged normally.

When the problem occurs, nothing abnormal appears in the command-line output. The program seems to exit normally.

I found a workaround: sleeping for a short while in `main.py` right after `cli = LightningCLI()`. This suggests that a child thread is not joined before the program exits.
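If the reporter's diagnosis is right, the missing epoch-level metrics behave like records that are still queued in a background writer thread when the interpreter exits. The toy class below illustrates this. It is a hypothetical example, not Lightning or TensorBoard code. Closing the writer enqueues a sentinel and then joins the worker thread, which guarantees that every queued record is written. A fixed `sleep(2)` only makes that outcome likely.

```python
import queue
import threading


class BackgroundWriter:
    """Hypothetical asynchronous writer: records are queued and written by a daemon thread."""

    def __init__(self):
        self._queue = queue.Queue()
        self.written = []
        # A daemon thread is killed at interpreter exit, losing anything still queued.
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        while True:
            item = self._queue.get()
            if item is None:  # sentinel: no more records
                break
            self.written.append(item)

    def log(self, item):
        self._queue.put(item)

    def close(self):
        # Enqueue the sentinel, then block until the worker has drained the queue.
        self._queue.put(None)
        self._thread.join()


writer = BackgroundWriter()
for name in ("test_loss_step", "test_loss_epoch"):
    writer.log(name)
writer.close()  # deterministic, unlike sleeping for a fixed time
print(writer.written)
```

In the reporter's setup, the analogous step would be to flush or close the logger's writer before `main.py` exits, rather than to sleep.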

### What version are you seeing the problem on?

v2.2

### How to reproduce the bug

main.py

```python
from lightning.pytorch.cli import LightningCLI
from lightning.pytorch.loggers import TensorBoardLogger
from lightning.pytorch.callbacks import ModelCheckpoint

from model import Model
from datamodule import DataModule


def cli_main():
    cli = LightningCLI()


if __name__ == "__main__":
    cli_main()

    # The problem can be solved by adding sleep.
    from time import sleep

    sleep(2)
```

config.yaml

```yaml
# lightning.pytorch==2.2.5
ckpt_path: null
seed_everything: 0
model:
  class_path: model.Model
  init_args:
    learning_rate: 1e-3
data:
  class_path: datamodule.DataModule
  init_args:
    data_dir: data
trainer:
  accelerator: gpu
  strategy: auto
  devices: 1
  num_nodes: 1
  precision: null
  fast_dev_run: false
  max_epochs: 100
  min_epochs: null
  max_steps: -1
  min_steps: null
  max_time: null
  limit_train_batches: null
  limit_val_batches: 10
  limit_test_batches: null
  limit_predict_batches: null
  logger:
    class_path: lightning.pytorch.loggers.TensorBoardLogger
    init_args:
      save_dir: lightning_logs/resnet50
      name: normalized
  callbacks:
    class_path: lightning.pytorch.callbacks.ModelCheckpoint
    init_args:
      save_top_k: 5
      monitor: valid_loss
      filename: "{epoch}-{step}-{valid_loss:.8f}"
  overfit_batches: 0.0
  val_check_interval: 50
  check_val_every_n_epoch: 1
  num_sanity_val_steps: null
  log_every_n_steps: 50
  enable_checkpointing: null
  enable_progress_bar: null
  enable_model_summary: null
  accumulate_grad_batches: 1
  gradient_clip_val: null
  gradient_clip_algorithm: null
  deterministic: false
  benchmark: null
  inference_mode: true
  use_distributed_sampler: true
  profiler: null
  detect_anomaly: false
  barebones: false
  plugins: null
  sync_batchnorm: true
  reload_dataloaders_every_n_epochs: 0
  default_root_dir: null
```

model.py

```python
import torch
from torch import nn
import torch.nn.functional as F
import lightning as pl
from torchvision.models import resnet50


class Model(pl.LightningModule):
    def __init__(self, learning_rate: float):
        super().__init__()
        self.save_hyperparameters()
        CHARS = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz"
        class_num = len(CHARS)
        self.text_len = 4
        resnet = resnet50()
        # Single-channel (grayscale) input instead of RGB
        resnet.conv1 = nn.Conv2d(
            1, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False
        )
        layers = list(resnet.children())
        # Keep everything up to and including the average pool (drop the fc head)
        self.resnet = nn.Sequential(*layers[:9])
        self.linear = nn.Linear(512, class_num)
        self.softmax = nn.Softmax(2)

    def _calc_softmax(self, x: torch.Tensor) -> torch.Tensor:
        x = self.resnet(x)  # (batch, 2048, 1, 1)
        x = x.reshape(x.shape[0], self.text_len, -1)  # (batch, 4, 512)
        x = self.linear(x)  # (batch, 4, 62)
        x = self.softmax(x)  # (batch, 4, 62)
        return x

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # in lightning, forward defines the prediction/inference actions
        x = self._calc_softmax(x)  # (batch, 4, 62)
        return torch.argmax(x, 2)  # (batch, 4)

    def training_step(self, batch: torch.Tensor, batch_idx: int) -> torch.Tensor:
        # training_step defines the train loop.
        # It is independent of forward
        img, target = batch
        batch_size = img.shape[0]
        pred_softmax = self._calc_softmax(img)  # (batch, 4, 62)
        pred_softmax_permute = pred_softmax.permute((0, 2, 1))  # (batch, 62, 4)
        loss = F.cross_entropy(pred_softmax_permute, target)
        with torch.no_grad():
            pred = torch.argmax(pred_softmax, 2)  # (batch, 4)
            char_correct = (pred == target).sum(1)  # (batch)
            batch_correct = (char_correct == self.text_len).sum()
            batch_accuracy = batch_correct / batch_size
        metrics = {"train_accuracy": batch_accuracy, "train_loss": loss}
        self.log_dict(metrics, prog_bar=True, logger=True, on_step=True, on_epoch=True)
        return loss

    def configure_optimizers(self) -> torch.optim.Optimizer:
        optimizer = torch.optim.Adam(self.parameters(), lr=self.hparams.learning_rate)
        return optimizer

    def validation_step(self, batch: torch.Tensor, batch_idx: int) -> torch.Tensor:
        # validation_step defines the validation loop.
        # It is independent of forward
        img, target = batch
        batch_size = img.shape[0]
        pred_softmax = self._calc_softmax(img)  # (batch, 4, 62)
        pred_softmax_permute = pred_softmax.permute((0, 2, 1))  # (batch, 62, 4)
        loss = F.cross_entropy(pred_softmax_permute, target)
        with torch.no_grad():
            pred = torch.argmax(pred_softmax, 2)  # (batch, 4)
            char_correct = (pred == target).sum(1)  # (batch)
            batch_correct = (char_correct == self.text_len).sum()
            batch_accuracy = batch_correct / batch_size
        metrics = {"valid_accuracy": batch_accuracy, "valid_loss": loss}
        self.log_dict(metrics, prog_bar=True, logger=True, on_step=True, on_epoch=True)
        return loss

    def test_step(self, batch: torch.Tensor, batch_idx: int) -> torch.Tensor:
        # test_step defines the test loop.
        # It is independent of forward
        img, target = batch
        batch_size = img.shape[0]
        pred_softmax = self._calc_softmax(img)  # (batch, 4, 62)
        pred_softmax_permute = pred_softmax.permute((0, 2, 1))  # (batch, 62, 4)
        loss = F.cross_entropy(pred_softmax_permute, target)
        with torch.no_grad():
            pred = torch.argmax(pred_softmax, 2)  # (batch, 4)
            char_correct = (pred == target).sum(1)  # (batch)
            batch_correct = (char_correct == self.text_len).sum()
            batch_accuracy = batch_correct / batch_size
        metrics = {"test_accuracy": batch_accuracy, "test_loss": loss}
        self.log_dict(metrics, prog_bar=True, logger=True, on_step=True, on_epoch=True)
        # The `on_epoch` metrics are logged unreliably, but `test_accuracy_step` always appears.
        # With `on_step=False` and `on_epoch=True`, it works fine for me.
        return loss
```


### Error messages and logs

```

# Error messages and logs here please

```

### Environment

<details>

<summary>Current environment</summary>

```
- PyTorch Lightning Version: 2.2.5
- PyTorch Version: 2.3.1+cu121
- Python version: 3.12.4
- OS: Windows 11
- CUDA/cuDNN version: 12.1
- GPU models and configuration: GTX 1650
- How you installed Lightning: pip
```

</details>

### More info

_No response_

|

open

|

2024-06-15T16:24:26Z

|

2024-06-15T16:36:11Z

|

https://github.com/Lightning-AI/pytorch-lightning/issues/19978

|

[

"bug",

"needs triage"

] |

t4rf9

| 0

|

autogluon/autogluon

|

computer-vision

| 4,792

|

[timeseries] Clarify the documentation for `known_covariates` during `predict()`

|

## Description

We should clarify which values should be provided as `known_covariates` during prediction time.

The [current documentation](https://auto.gluon.ai/stable/tutorials/timeseries/forecasting-indepth.html) says:

"The timestamp index must include the values for prediction_length many time steps into the future from the end of each time series in train_data".

This formulation is ambiguous.

- [ ] improve wording in the documentation

- [ ] add a method `TimeSeriesPredictor.get_forecast_index(data) -> pd.MultiIndex` that returns the `item_id, timestamp` index that should be covered by the `known_covariates` during `predict()`.
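The following is a minimal sketch of what the proposed `get_forecast_index` could compute. It is written as a hypothetical standalone helper (`forecast_index` is not AutoGluon API) that works on a plain pandas DataFrame indexed by (`item_id`, `timestamp`). It assumes every series has a regular frequency `freq` and that each series' last timestamp is aligned to that frequency. The key point is that series can end at different times, so each item gets its own window: the `prediction_length` steps immediately after that item's last observed timestamp.

```python
import pandas as pd


def forecast_index(data: pd.DataFrame, prediction_length: int, freq: str) -> pd.MultiIndex:
    """Hypothetical helper: (item_id, timestamp) pairs that known_covariates must cover."""
    last_timestamps = data.reset_index().groupby("item_id")["timestamp"].max()
    tuples = []
    for item_id, last_ts in last_timestamps.items():
        # Generate prediction_length + 1 points and drop the first (the last observed step).
        future = pd.date_range(start=last_ts, periods=prediction_length + 1, freq=freq)[1:]
        tuples.extend((item_id, ts) for ts in future)
    return pd.MultiIndex.from_tuples(tuples, names=["item_id", "timestamp"])


# Two daily series that end on different dates.
train = pd.DataFrame(
    {"target": [1.0, 2.0, 3.0, 4.0, 5.0]},
    index=pd.MultiIndex.from_tuples(
        [
            ("A", pd.Timestamp("2024-01-01")),
            ("A", pd.Timestamp("2024-01-02")),
            ("A", pd.Timestamp("2024-01-03")),
            ("B", pd.Timestamp("2024-01-01")),
            ("B", pd.Timestamp("2024-01-02")),
        ],
        names=["item_id", "timestamp"],
    ),
)
idx = forecast_index(train, prediction_length=2, freq="D")
print(idx.tolist())
```

Here item `A` needs covariates for 2024-01-04 and 2024-01-05, while item `B` needs them for 2024-01-03 and 2024-01-04.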

|

open

|

2025-01-14T08:03:33Z

|

2025-01-14T08:05:06Z

|

https://github.com/autogluon/autogluon/issues/4792

|

[

"API & Doc",

"enhancement",

"module: timeseries"

] |

shchur

| 0

|

pyeve/eve

|

flask

| 711

|

extra_response_fields should be processed after (not before) any on_inserted hooks on POST

|

Currently, `extra_response_fields` are processed after `on_insert` hooks are complete but before any `on_inserted` hooks.

It would be more intuitive to process `extra_response_fields` after both of these hooks are complete, in case something was changed during `on_inserted`.
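The requested ordering can be sketched with a toy POST handler. This is a hypothetical model, not Eve's implementation, and `handle_post`, `storage`, and the hook functions are illustrative names. Because the response is assembled only after `on_inserted` runs, fields added or changed in that hook appear in the response.

```python
def handle_post(document, extra_response_fields, on_insert, on_inserted, storage):
    """Toy model of the requested POST flow (hypothetical, not Eve's code)."""
    on_insert(document)       # may modify the document before it is stored
    storage.append(document)  # stand-in for the database insert
    on_inserted(document)     # may modify the document after it is stored
    # Requested behaviour: build the response only after on_inserted has run,
    # so changes made there are reflected in the extra response fields.
    return {f: document[f] for f in extra_response_fields if f in document}


def add_slug(doc):
    doc["slug"] = doc["title"].lower()


def add_view_counter(doc):
    doc["views"] = 0


storage = []
resp = handle_post({"title": "Hello"}, ["slug", "views"], add_slug, add_view_counter, storage)
print(resp)
```

With the current ordering, `views` would be missing from the response, because it is only set in `on_inserted`.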

|

closed

|

2015-09-14T05:20:29Z

|

2018-05-18T18:19:30Z

|

https://github.com/pyeve/eve/issues/711

|

[

"enhancement",

"on hold",

"stale"

] |

kenmaca

| 2

|

DistrictDataLabs/yellowbrick

|

scikit-learn

| 949

|

Some plot directive visualizers not rendering in Read the Docs

|

Currently on Read the Docs (develop branch), a few of our visualizers that use the plot directive (#687) are not rendering the plots:

- [Classification Report](http://www.scikit-yb.org/en/develop/api/classifier/classification_report.html)

- [Silhouette Scores](http://www.scikit-yb.org/en/develop/api/cluster/silhouette.html)

- [ScatterPlot](http://www.scikit-yb.org/en/develop/api/contrib/scatter.html)

- [JointPlot](http://www.scikit-yb.org/en/develop/api/features/jointplot.html)

|

closed

|

2019-08-15T20:58:39Z

|

2019-08-29T00:03:24Z

|

https://github.com/DistrictDataLabs/yellowbrick/issues/949

|

[

"type: bug",

"type: documentation"

] |

rebeccabilbro

| 1

|

plotly/dash

|

flask

| 2,754

|

[BUG] Dropdown options not rendering on the UI even though they are generated

|

**Describe your context**

Python Version -> `3.8.18`

`poetry show | grep dash` gives the below packages:

```
dash                       2.7.0  A Python framework for building reac...
dash-bootstrap-components  1.5.0  Bootstrap themed components for use ...
dash-core-components       2.0.0  Core component suite for Dash
dash-html-components       2.0.0  Vanilla HTML components for Dash
dash-prefix                0.0.4  Dash library for managing component IDs
dash-table                 5.0.0  Dash table
```

- if frontend related, tell us your Browser, Version and OS

- OS: MacOSx (Sonoma 14.3)

- Browser: Chrome (also tried on Firefox and Safari)

- Version: 121.0.6167.160 (Official Build) (x86_64)

**Describe the bug**

I have a multi-select dropdown that syncs with the input from a separate tab to pull in the list of regions associated with a country. When one particular country, GB, is selected, the dropdown options are not populated. Printing the generated UI element to stdout lists the options correctly, but they do not render in the UI itself.

The stdout output is as follows:

```
Div([P(children='Group A - (Control)', style={'marginBottom': 5}),
     Dropdown(options=[
         {'label': 'Cheshire', 'value': 'Cheshire'},
         {'label': 'Leicestershire', 'value': 'Leicestershire'},
         {'label': 'Hertfordshire', 'value': 'Hertfordshire'},
         {'label': 'Surrey', 'value': 'Surrey'},
         {'label': 'Lancashire', 'value': 'Lancashire'},
         {'label': 'Warwickshire', 'value': 'Warwickshire'},
         {'label': 'Cumbria', 'value': 'Cumbria'},
         {'label': 'Northamptonshire', 'value': 'Northamptonshire'},
         {'label': 'Dorset', 'value': 'Dorset'},
         {'label': 'Isle of Wight', 'value': 'Isle of Wight'},
         {'label': 'Kent', 'value': 'Kent'},
         {'label': 'Lincolnshire', 'value': 'Lincolnshire'},
         {'label': 'Hampshire', 'value': 'Hampshire'},
         {'label': 'Cornwall', 'value': 'Cornwall'},
         {'label': 'Scotland', 'value': 'Scotland'},
         {'label': 'Berkshire', 'value': 'Berkshire'},
         {'label': 'Gloucestershire, Wiltshire & Bristol', 'value': 'Gloucestershire, Wiltshire & Bristol'},
         {'label': 'Durham', 'value': 'Durham'},
         {'label': 'Rutland', 'value': 'Rutland'},
         {'label': 'Northumberland', 'value': 'Northumberland'},
         {'label': 'West Midlands', 'value': 'West Midlands'},
         {'label': 'Derbyshire', 'value': 'Derbyshire'},
         {'label': 'Merseyside', 'value': 'Merseyside'},
         {'label': 'East Sussex', 'value': 'East Sussex'},
         {'label': 'Northern Ireland', 'value': 'Northern Ireland'},
         {'label': 'Oxfordshire', 'value': 'Oxfordshire'},
         {'label': 'Herefordshire', 'value': 'Herefordshire'},
         {'label': 'Staffordshire', 'value': 'Staffordshire'},
         {'label': 'East Riding of Yorkshire', 'value': 'East Riding of Yorkshire'},
         {'label': 'South Yorkshire', 'value': 'South Yorkshire'},
         {'label': 'West Sussex', 'value': 'West Sussex'},
         {'label': 'Tyne and Wear', 'value': 'Tyne and Wear'},
         {'label': 'Buckinghamshire', 'value': 'Buckinghamshire'},
         {'label': 'West Yorkshire', 'value': 'West Yorkshire'},
         {'label': 'Wales', 'value': 'Wales'},
         {'label': 'Somerset', 'value': 'Somerset'},
         {'label': 'Worcestershire', 'value': 'Worcestershire'},
         {'label': 'North Yorkshire', 'value': 'North Yorkshire'},
         {'label': 'Shropshire', 'value': 'Shropshire'},
         {'label': 'Nottinghamshire', 'value': 'Nottinghamshire'},
         {'label': 'Essex', 'value': 'Essex'},
         {'label': 'Greater London & City of London', 'value': 'Greater London & City of London'},
         {'label': 'Cambridgeshire', 'value': 'Cambridgeshire'},
         {'label': 'Greater Manchester', 'value': 'Greater Manchester'},
         {'label': 'Suffolk', 'value': 'Suffolk'},
         {'label': 'Norfolk', 'value': 'Norfolk'},
         {'label': 'Devon', 'value': 'Devon'},
         {'label': 'Bedfordshire', 'value': 'Bedfordshire'}],
     value=[],
     multi=True,
     id={'role': 'experiment-design-geoassignment-manual-geodropdown', 'group_id': 'Group-ID1234'})])
```

**Expected behavior**

When the country GB is selected, I expect its regions to be populated in the dropdown as selectable options. The code that builds the element is below:

```python
from dash import dcc, html


def get_geos(self, all_geos):
    element = html.Div(
        [
            html.P("TEST", style={"marginBottom": 5}),
            dcc.Dropdown(
                id={"role": self.prefix("dropdown"), "group_id": "1234"},
                multi=True,
                value=[],
                searchable=True,
                options=[{"label": g, "value": g} for g in all_geos],
            ),
        ]
    )
    # The printed output (posted above) shows that the callback works fine,
    # but the options do not render correctly on the front end.
    print(element)
    return element
```

**Screen Recording**

https://github.com/plotly/dash/assets/94958897/13909683-244c-4cbe-853a-be148f3aae1c

|

closed

|

2024-02-08T13:47:01Z

|

2024-05-31T20:12:51Z

|

https://github.com/plotly/dash/issues/2754

|

[] |

malavika-menon

| 2

|

nikitastupin/clairvoyance

|

graphql

| 100

|

500 internal server error

|

Hey, the tool keeps getting a 500 error in a loop. I then intercepted my clairvoyance traffic with Burp:

`clairvoyance -H "Authorization: Bearer" -H "X-api-key:" -x "127.1:8080" -k http://example.com/graphql`

**Body it sends**

`{"query": "query { reporting essential myself tours platform load affiliate labor immediately admin nursing defense machines designated tags heavy covered recovery joe guys integrated configuration merchant comprehensive expert universal protect drop solid cds presentation languages became orange compliance vehicles prevent theme rich im campaign marine improvement vs guitar finding pennsylvania examples ipod saying spirit ar claims challenge motorola acceptance strategies mo seem affairs touch intended towards sa }"}`

**Response**

```
HTTP/2 500 Internal Server Error
Content-Type: application/json; charset=utf-8

{"errors":[{"message":"Too many validation errors, error limit reached. Validation aborted.","extensions":{"code":"INTERNAL_SERVER_ERROR"}}]}
```

But sending this query manually works:

`{"query": "query { along among death writing speed }"}`
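The failing request probes about sixty candidate field names at once, while the working one probes five. The server's message says that it aborts validation once an error limit is reached. The following is a minimal sketch, assuming the fix is simply to probe fewer words per request. The batch size and the helper names `chunked` and `build_probe_queries` are hypothetical and are not clairvoyance options.

```python
def chunked(words, size):
    """Split a wordlist into consecutive batches of at most `size` words."""
    return [words[i:i + size] for i in range(0, len(words), size)]


def build_probe_queries(words, batch_size):
    """Build one probe body per batch, in the same shape as the captured request."""
    return [{"query": "query { " + " ".join(batch) + " }"} for batch in chunked(words, batch_size)]


words = ["along", "among", "death", "writing", "speed", "reporting", "essential"]
queries = build_probe_queries(words, batch_size=5)
for body in queries:
    print(body)
```

Smaller batches mean more requests, but each request produces fewer validation errors, so it stays under the server's limit.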

|

open

|

2024-05-15T15:16:51Z

|

2024-08-27T06:13:53Z

|

https://github.com/nikitastupin/clairvoyance/issues/100

|

[

"bug"

] |

649abhinav

| 1

|

predict-idlab/plotly-resampler

|

plotly

| 341

|

Dash Callback says FigureResampler is not JSON serializable

|

Apologies, this is more of a "this broke and I don't know what went wrong" type of issue. So far, everything in the Dash dashboard I've made works except for the plotting. This is the exception I get:

```
dash.exceptions.InvalidCallbackReturnValue: The callback for `[<Output `data-plot.figure`>, <Output `store.data`>, <Output `status-msg.children`>]`
returned a value having type `FigureResampler`
which is not JSON serializable.
The value in question is either the only value returned,
or is in the top level of the returned list,
and has string representation
`FigureResampler({ 'data': [{'mode': 'lines',
'name': '<b style="color:sandybrown">[R]</b> Category1 <i style="color:#fc9944">~10s</i>',
'type': 'scatter',
'uid': 'c78a3bb2-658c-44d0-b791-dfc0bbe76cd8',
'x': array([datetime.datetime(2025, 1, 22, 18, 38, 21),...
```

The relevant code chunks that could cause this are:

```python
from dash import Dash, html, dcc, Output, Input, State, callback, no_update, ctx
from dash_extensions.enrich import DashProxy, ServersideOutputTransform, Serverside
import dash_bootstrap_components as dbc
import pandas as pd
import plotly.express as px
import plotly.graph_objects as go
from plotly_resampler import FigureResampler

app = DashProxy(
    __name__,
    external_stylesheets=[dbc.themes.LUX],
    transforms=[ServersideOutputTransform()],
)

# assume the app layout is created within a dbc.Container here, including:
dcc.Graph(id="data-plot", figure=go.Figure())


# this is the callback for the function triggering the break:
@callback(
    [Output("data-plot", "figure"),
     Output("store", "data"),  # Cache the figure data
     Output("status-msg", "children")],
    [Input("load-btn", "n_clicks"),
     State("dropdown-1", "value"),
     State("dropdown-2", "value"),
     State("dropdown-3", "value"),
     State("dropdown-4", "value"),
     State("dropdown-5", "value")],
    prevent_initial_call=True,  # Prevents callback from running at startup
)
def load_data(n_clicks, value1, value2, value3, value4, value5):  # placeholder name and arguments
    # this is how I made the figure
    fig = FigureResampler(go.Figure(), default_n_shown_samples=10000)
    # traces are added and the layout is updated here
    return fig, Serverside(fig), "this thing works"


# I also use this callback to update the resampling
@app.callback(
    Output("data-plot", "figure", allow_duplicate=True),
    Input("data-plot", "relayoutData"),
    State("store", "data"),  # The server-side cached FigureResampler per session
    prevent_initial_call=True,
)
def update_fig(relayoutdata: dict, fig: FigureResampler):
    if fig is None:
        return no_update
    return fig.construct_update_data_patch(relayoutdata)
```

From the docs, it looks like you can return plotly-resampler figures as the output for a `dcc.Graph`. What could have gone wrong?

|

closed

|

2025-03-05T20:37:21Z

|

2025-03-06T18:06:15Z

|

https://github.com/predict-idlab/plotly-resampler/issues/341

|

[] |

FDSRashid

| 1

|

matplotlib/matplotlib

|

matplotlib

| 29,799

|

[ENH]: set default color cycle to named color sequence

|

### Problem

It would be great if I could put something like this in my matplotlibrc to use the petroff10 color sequence by default:

```
axes.prop_cycle : cycler('color', 'petroff10')
```

### Proposed solution

Currently, if a single string is supplied, we try to interpret it as a list of single-character colors.

https://github.com/matplotlib/matplotlib/blob/a9dc9acc2dd1bab761b45e48c8d63aa108811a82/lib/matplotlib/rcsetup.py#L105-L108

None of the current built-in color sequences can be interpreted that way, so it would not be ambiguous to try both that interpretation and a lookup in the color sequence registry. However, we may need something to guard against user- or third-party-defined color sequences with a name like "rygbk".
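The proposed lookup order can be sketched at the Python level. `resolve_color_spec` is a hypothetical helper, not Matplotlib API. It relies on `matplotlib.color_sequences` (the color sequence registry) and falls back to the single-character reading. The example uses `tab10` because `petroff10` only exists in recent Matplotlib versions.

```python
import matplotlib as mpl
from cycler import cycler


def resolve_color_spec(spec: str) -> list:
    """Hypothetical rule: a registered sequence name wins; otherwise
    read the string as single-character colors, e.g. "rgb" -> r, g, b."""
    if spec in mpl.color_sequences:
        return list(mpl.color_sequences[spec])
    return list(spec)


# What `axes.prop_cycle : cycler('color', 'tab10')` could resolve to.
mpl.rcParams["axes.prop_cycle"] = cycler(color=resolve_color_spec("tab10"))
```

Checking the registry first is only one way to settle the ambiguity. It means a user-registered sequence named "rygbk" would shadow the single-character reading of that string.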

|

open

|

2025-03-24T16:57:39Z

|

2025-03-24T17:42:14Z

|

https://github.com/matplotlib/matplotlib/issues/29799

|

[

"New feature",

"topic: rcparams",

"topic: color/cycle"

] |

rcomer

| 2

|

horovod/horovod

|

pytorch

| 3,795

|

Seen with tf-head: ModuleNotFoundError: No module named 'keras.optimizers.optimizer_v2'

|

Problem with tf-head / Keras seen in CI, for instance at https://github.com/horovod/horovod/actions/runs/3656223581/jobs/6180240570

```
___________ ERROR collecting test/parallel/test_tensorflow_keras.py ____________
ImportError while importing test module '/horovod/test/parallel/test_tensorflow_keras.py'.
Hint: make sure your test modules/packages have valid Python names.
Traceback:
/usr/lib/python3.8/importlib/__init__.py:127: in import_module
    return _bootstrap._gcd_import(name[level:], package, level)
test_tensorflow_keras.py:36: in <module>
    from keras.optimizers.optimizer_v2 import optimizer_v2
E   ModuleNotFoundError: No module named 'keras.optimizers.optimizer_v2'
```

```

___________ ERROR collecting test/parallel/test_tensorflow2_keras.py ___________

ImportError while importing test module '/horovod/test/parallel/test_tensorflow2_keras.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

test_tensorflow2_keras.py:35: in <module>

from keras.optimizers.optimizer_v2 import optimizer_v2

E ModuleNotFoundError: No module named 'keras.optimizers.optimizer_v2'

```
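A common way to keep test modules importable across Keras layouts is to try several module paths in order. This is a minimal sketch: `import_first` is a hypothetical helper, and the fallback path in the commented usage is an assumption about where newer Keras keeps the legacy optimizer code. Verify it against the installed Keras before relying on it.

```python
import importlib
from types import ModuleType
from typing import Sequence


def import_first(candidates: Sequence[str]) -> ModuleType:
    """Import and return the first module in `candidates` that exists."""
    failures = []
    for name in candidates:
        try:
            return importlib.import_module(name)
        except ModuleNotFoundError as exc:
            # Record why this path failed and move on to the next candidate.
            failures.append(f"{name}: {exc}")
    raise ModuleNotFoundError(
        "none of the candidate modules could be imported:\n" + "\n".join(failures)
    )


# Hypothetical usage in the test modules (second path is an assumption):
# optimizer_v2 = import_first([
#     "keras.optimizers.optimizer_v2.optimizer_v2",
#     "keras.optimizers.legacy.optimizer_v2",
# ])
```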

|

closed

|

2022-12-09T14:25:50Z

|

2022-12-10T09:54:00Z

|

https://github.com/horovod/horovod/issues/3795

|

[

"bug"

] |

maxhgerlach

| 1

|

plotly/dash-table

|

plotly

| 700

|

`Backspace` on cell only reflects deleted content after cell selection changes

|

In the recording below, `backspace` is hit right after the cell is selected, and the displayed cell content only updates after the selected cell changes.

|

open

|

2020-02-20T17:08:28Z

|

2024-01-25T21:34:23Z

|

https://github.com/plotly/dash-table/issues/700

|

[

"dash-type-bug",

"regression"

] |

Marc-Andre-Rivet

| 2

|

AirtestProject/Airtest

|

automation

| 1,205

|

The urllib3 library auto-installed by airtest must be an older version (e.g. 1.26.17) to connect to an iOS phone via uid

|

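**Describe the bug**

The urllib3 library that airtest installs automatically must be an older version (e.g. 1.26.17) to connect to an iOS phone via uuid. Otherwise, airtest reports that WDA is not ready and raises an error after waiting 20 seconds. Downgrading urllib3 to an older version solves the problem. This happens with both macOS and Windows as the controlling machine and with iOS 15/16/17 devices.

**Python version:** `python3.11`

**airtest version:** `1.3.3`

**Device:**

- Phone model: [iphone se2]

- Controlling machine: [mbp m1/windows11]

- Phone OS: [ios15/ios16/ios17]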

|

open

|

2024-04-15T06:33:57Z

|

2024-04-15T06:41:39Z

|

https://github.com/AirtestProject/Airtest/issues/1205

|

[] |

yh1121yh

| 0

|

ultrafunkamsterdam/undetected-chromedriver

|

automation

| 2,155

|

[NODRIVER] Add ability to capture and return screenshot as base64 - changes are ready to PR merge

|

Hello, I would like to create a PR that (as the title suggests) returns screenshots as base64 instead of saving them as local files.

Here is the commit: https://github.com/falmar/nodriver/commit/d903cca8aac2406ff0c4462785b61d5ce474256c

It includes a demo example.

EDIT: I'm unable to create a PR on the nodriver [repository](https://github.com/ultrafunkamsterdam/nodriver).
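The Chrome DevTools Protocol's `Page.captureScreenshot` command already returns the image as a base64-encoded string, so the change mostly amounts to returning that string instead of decoding it and writing it to disk. When only raw image bytes are at hand, the encoding step is short; a minimal sketch (`screenshot_to_base64` is a hypothetical helper, not part of nodriver, and the data-URL branch assumes PNG output):

```python
import base64


def screenshot_to_base64(png_bytes: bytes, as_data_url: bool = False) -> str:
    """Encode raw screenshot bytes as base64 text, optionally as a data URL."""
    encoded = base64.b64encode(png_bytes).decode("ascii")
    if as_data_url:
        # Assumes PNG output; adjust the MIME type for JPEG screenshots.
        return "data:image/png;base64," + encoded
    return encoded
```

Returning a data URL lets the result be embedded directly in HTML or JSON without a separate file.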

|

closed

|

2025-03-07T12:29:58Z

|

2025-03-10T07:48:53Z

|

https://github.com/ultrafunkamsterdam/undetected-chromedriver/issues/2155

|

[] |

falmar

| 2

|

xinntao/Real-ESRGAN

|

pytorch

| 209

|

Request: add a super-resolution algorithm that removes halftone (screen) patterns from scanned images

|

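When running super-resolution on scanned magazines, merchandise, doujinshi, etc., the halftone patterns are also magnified and become very noticeable. Is there a way to remove the halftone patterns first and then run super-resolution?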

|

open

|

2022-01-02T15:53:01Z

|

2022-01-02T15:53:01Z

|

https://github.com/xinntao/Real-ESRGAN/issues/209

|

[] |

sistinafibe

| 0

|

2noise/ChatTTS

|

python

| 3

|

It stops automatically halfway through the run

|

As shown in the screenshot, it stops halfway through the run.

OS: Linux

Python version: 3.12

Also, I suggest adding a requirements file.

|

closed

|

2024-05-28T02:50:19Z

|

2024-05-28T11:29:23Z

|

https://github.com/2noise/ChatTTS/issues/3

|

[] |

luosaidage

| 1

|

kizniche/Mycodo

|

automation

| 442

|

install mycodo error???

|

What happened?! How do I install a Mycodo version lower than 5.6.10? I want to install Mycodo version 5.5.24. How can I do that?

|

closed

|

2018-04-03T07:11:43Z

|

2018-04-06T00:42:52Z

|

https://github.com/kizniche/Mycodo/issues/442

|

[] |

bike2538

| 4

|

InstaPy/InstaPy

|

automation

| 6,296

|

Not posting the comment when mentioning any account.

|

## InstaPy configuration

InstaPy Version: 0.6.14-AS

I am trying to comment on a hashtag while mentioning a page with @....., but whenever I use @...., it doesn't post the comment and skips it instead. Is anyone else facing the same issue? What is the solution?

|

open

|

2021-08-16T09:16:11Z

|

2021-10-10T00:35:52Z

|

https://github.com/InstaPy/InstaPy/issues/6296

|

[] |

moshema10

| 6

|

StackStorm/st2

|

automation

| 6,160

|

Provide support for passing "=" in a string

|

**alias yaml**

````yaml
---
name: "launch_quasar"
action_ref: "quasar.quasar1"
description: "launch a quasar execution"
formats:
  - display: "*<command>* *<payload>*"
    representation:
      - "{{ command }} {{ payload }}"
result:
  format: |
    ```{{ execution.result.result }}```
````

**action yaml**

```yaml
name: quasar1
description: Action that takes an input parameter
runner_type: 'python-script'
entry_point: 'quasar1.py'
enabled: true
parameters:
  command:
    type: string
    description: 'Input parameter'
    required: true
  payload:
    type: string
    description: 'Input parameter'
    required: true
  user:
    type: "string"
    description: "Slack user who triggered the action"
    required: false
    default: "{{action_context.api_user}}"
```

**Representation used:** "{{ command }} {{ payload }}"

**Parameter definition**:

```yaml
payload:
  type: string
  description: 'Input parameter'
  required: true
```

We have a use case where we need to pass a string containing "=" as the payload. For some reason, StackStorm does not allow this: if I pass such a value, it is not taken as a separate string, which causes multiple issues.

The issue does not occur if we use ":" instead of "=", and putting a space after "=" also solves it.

I have tried different things (e.g., using the Jinja template `replace` option to replace "=" with "= "), but I get an internal server error.

If I could get past the YAML validation, I could do my own validation in the action's Python file, but the problem is that execution never even reaches the Python file.

Basically, StackStorm should accept this: **quasar create cluster_name=weekly_nats**

<img width="372" alt="Screenshot 2024-03-04 at 11 35 28 AM" src="https://github.com/StackStorm/st2/assets/41072130/ae316000-c84a-4493-976e-e2e6f5b38fbc">
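A likely cause, stated here as an assumption rather than a description of StackStorm's code: action aliases also accept extra `key=value` pairs typed after the command, so a token such as `cluster_name=weekly_nats` may be consumed as a named parameter instead of staying inside `payload`. The hypothetical parser below (`split_alias_input` is illustrative only, not st2 API) reproduces the three behaviors described above: `=` is split off, while `:` or a space after `=` leaves the token positional.

```python
import shlex


def split_alias_input(text):
    """Hypothetical alias parser: tokens shaped like key=value (both sides
    non-empty) become named parameters; everything else stays positional."""
    positional, named = [], {}
    for token in shlex.split(text):
        key, sep, value = token.partition("=")
        if sep and key and value:
            named[key] = value
        else:
            positional.append(token)
    return positional, named


print(split_alias_input("quasar create cluster_name=weekly_nats"))
# (['quasar', 'create'], {'cluster_name': 'weekly_nats'})
print(split_alias_input("quasar create cluster_name= weekly_nats"))
# (['quasar', 'create', 'cluster_name=', 'weekly_nats'], {})
```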

|

open

|

2024-03-04T06:08:03Z

|

2024-03-04T06:09:32Z

|

https://github.com/StackStorm/st2/issues/6160

|

[] |

sivudu47

| 0

|

assafelovic/gpt-researcher

|

automation

| 645

|

UnicodeEncodeError: 'charmap' codec can't encode character '\U0001f50e' in position 0: character maps to <undefined>

|

I'm testing a simple Next.js/FastAPI app on Windows 11, using the example FastAPI app from https://docs.tavily.com/docs/gpt-researcher/pip-package (by the way, I think this example is missing the query parameter).

It's a very simple report test that passes parameters through this API call/URL:

```

const query = encodeURIComponent("What is 42?");

const type = encodeURIComponent("outline_report");

const URL = `/api/reporttest/${query}/${type}`;

const URL2 = `/api/report/${query}/${type}`;

```

`URL` is a simple parameter test:

```

@app.get("/api/reporttest/{query}/{report_type}")

async def get_report(query: str, report_type: str) -> dict: