| repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |

|---|---|---|---|---|---|---|---|---|---|---|---|

pytorch/vision

|

computer-vision

| 8,730

|

release for python 3.13

|

### 🚀 The feature

Any plans to release for python 3.13?

thanks

### Motivation, pitch

torch is already compatible with 3.13

### Alternatives

_No response_

### Additional context

_No response_

|

closed

|

2024-11-14T08:41:04Z

|

2025-02-27T10:40:56Z

|

https://github.com/pytorch/vision/issues/8730

|

[] |

dpinol

| 6

|

wagtail/wagtail

|

django

| 12,408

|

Streamfield migrations fail on revisions that don't have target field

|

### Issue Summary

`wagtail.blocks.migrations.migrate_operation.MigrateStreamData` does not gracefully handle revisions that do not contain the field that is being operated on. This may occur when running a migration on a model that has revisions from before the creation of the field on the model. We do support limiting which revisions to operate on by date, but we should also gracefully handle this scenario (by doing nothing if the field does not exist in a given revision).

### Impact

Prevents migrations from being successfully run.

### Steps to Reproduce

1. Start a new Wagtail project

2. makemigrations, migrate, createsuperuser

3. Save a revision of the homepage

4. Add a "body" StreamField to the homepage

5. makemigrations

6. Create an empty migration in the home app

7. Add a streamfield data migration operation that operates on the newly added "body" field

```python

# Generated by Django 4.2.16 on 2024-10-12 10:51

from django.db import migrations

from wagtail.blocks.migrations.migrate_operation import MigrateStreamData
from wagtail.blocks.migrations.operations import AlterBlockValueOperation


class Migration(migrations.Migration):

    dependencies = [
        ('home', '0003_homepage_body'),
    ]

    operations = [
        MigrateStreamData(
            "home",
            "homepage",
            "body",
            [
                (AlterBlockValueOperation("Hello world"), "text")
            ]
        )
    ]

```

8. Run the migrations

```sh

gitpod /workspace/wagtail-gitpod (main) $ python manage.py migrate

Operations to perform:

Apply all migrations: admin, auth, contenttypes, home, sessions, taggit, wagtailadmin, wagtailcore, wagtaildocs, wagtailembeds, wagtailforms, wagtailimages, wagtailredirects, wagtailsearch, wagtailusers

Running migrations:

Applying home.0003_homepage_body... OK

Applying home.0004_auto_20241012_1051...Traceback (most recent call last):

File "manage.py", line 10, in <module>

execute_from_command_line(sys.argv)

File "/workspace/.pip-modules/lib/python3.8/site-packages/django/core/management/__init__.py", line 442, in execute_from_command_line

utility.execute()

File "/workspace/.pip-modules/lib/python3.8/site-packages/django/core/management/__init__.py", line 436, in execute

self.fetch_command(subcommand).run_from_argv(self.argv)

File "/workspace/.pip-modules/lib/python3.8/site-packages/django/core/management/base.py", line 412, in run_from_argv

self.execute(*args, **cmd_options)

File "/workspace/.pip-modules/lib/python3.8/site-packages/django/core/management/base.py", line 458, in execute

output = self.handle(*args, **options)

File "/workspace/.pip-modules/lib/python3.8/site-packages/django/core/management/base.py", line 106, in wrapper

res = handle_func(*args, **kwargs)

File "/workspace/.pip-modules/lib/python3.8/site-packages/django/core/management/commands/migrate.py", line 356, in handle

post_migrate_state = executor.migrate(

File "/workspace/.pip-modules/lib/python3.8/site-packages/django/db/migrations/executor.py", line 135, in migrate

state = self._migrate_all_forwards(

File "/workspace/.pip-modules/lib/python3.8/site-packages/django/db/migrations/executor.py", line 167, in _migrate_all_forwards

state = self.apply_migration(

File "/workspace/.pip-modules/lib/python3.8/site-packages/django/db/migrations/executor.py", line 252, in apply_migration

state = migration.apply(state, schema_editor)

File "/workspace/.pip-modules/lib/python3.8/site-packages/django/db/migrations/migration.py", line 132, in apply

operation.database_forwards(

File "/workspace/.pip-modules/lib/python3.8/site-packages/django/db/migrations/operations/special.py", line 193, in database_forwards

self.code(from_state.apps, schema_editor)

File "/workspace/.pip-modules/lib/python3.8/site-packages/wagtail/blocks/migrations/migrate_operation.py", line 159, in migrate_stream_data_forward

raw_data = json.loads(revision.content[self.field_name])

KeyError: 'body'

```

https://github.com/wagtail/wagtail/blob/309e47f0ccb19dba63aaa64d52914e87eef390dc/wagtail/blocks/migrations/migrate_operation.py#L159
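The graceful handling suggested in the summary could look roughly like the sketch below. It does not use Wagtail itself: `revision_content` is a plain dict standing in for `revision.content`, and `load_stream_data` is a hypothetical helper name, not part of Wagtail's API.

```python
import json
from typing import Any, Optional


def load_stream_data(revision_content: dict, field_name: str) -> Optional[Any]:
    """Return the decoded stream data, or None if the revision lacks the field.

    `revision_content` stands in for `revision.content`; this helper is
    hypothetical and only illustrates the "do nothing if missing" behaviour.
    """
    raw = revision_content.get(field_name)
    if raw is None:
        # The revision was saved before the field existed: nothing to migrate.
        return None
    return json.loads(raw)


# Illustrative data: one revision from before the field was added, one after.
old_revision = {"title": "Home"}
new_revision = {"title": "Home", "body": '[{"type": "text", "value": "Hi"}]'}

for content in (old_revision, new_revision):
    data = load_stream_data(content, "body")
    if data is None:
        continue  # skip the revision instead of raising KeyError
    print(data)
```

Using `.get()` rather than indexing keeps the existing date-based filtering useful while making it optional for this case.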

### Technical details

- Python version: 3.8.12

- Django version: 4.2.16

- Wagtail version: 6.2.2

|

open

|

2024-10-12T11:03:21Z

|

2024-12-01T03:58:01Z

|

https://github.com/wagtail/wagtail/issues/12408

|

[

"type:Bug",

"component:Streamfield"

] |

jams2

| 2

|

xinntao/Real-ESRGAN

|

pytorch

| 328

|

Where can I see the generator's network architecture?

|

Hello author, I could not find the generator's arch file in the code, and there is no ESRGAN arch file in basicsr's arch folder either.

Where can I find the ESRGAN arch file?

|

closed

|

2022-05-12T10:41:16Z

|

2023-02-15T07:34:34Z

|

https://github.com/xinntao/Real-ESRGAN/issues/328

|

[] |

EgbertMeow

| 1

|

miguelgrinberg/Flask-Migrate

|

flask

| 73

|

The multidb is not putting changes in the correct database.

|

I am having an issue where my schema changes are not showing up in the correct database. Furthermore, the test_multidb_migrate_upgrade fails when running "python setup.py test".

When I run these commands:

``` sh

cd tests

rm *.db && rm -rf migrations # cleanup

python app_multidb.py db init --multidb

python app_multidb.py db migrate

python app_multidb.py db upgrade

```

I get this version, which is wrong because User should be in upgrade_, not upgrade_db1.

``` python

"""empty message

Revision ID: af440038899
Revises:
Create Date: 2015-08-17 14:24:58.842302

"""

# revision identifiers, used by Alembic.
revision = 'af440038899'
down_revision = None
branch_labels = None
depends_on = None

from alembic import op
import sqlalchemy as sa


def upgrade(engine_name):
    globals()["upgrade_%s" % engine_name]()


def downgrade(engine_name):
    globals()["downgrade_%s" % engine_name]()


def upgrade_():
    pass


def downgrade_():
    pass


def upgrade_db1():
    ### commands auto generated by Alembic - please adjust! ###
    op.create_table('user',
    sa.Column('id', sa.Integer(), nullable=False),
    sa.Column('name', sa.String(length=128), nullable=True),
    sa.PrimaryKeyConstraint('id')
    )
    op.create_table('group',
    sa.Column('id', sa.Integer(), nullable=False),
    sa.Column('name', sa.String(length=128), nullable=True),
    sa.PrimaryKeyConstraint('id')
    )
    ### end Alembic commands ###


def downgrade_db1():
    ### commands auto generated by Alembic - please adjust! ###
    op.drop_table('group')
    op.drop_table('user')
    ### end Alembic commands ###

```

Using the latest libraries, not sure if a recent upgrade broke it:

```

$ pip freeze

Flask==0.10.1

Flask-Migrate==1.5.0

Flask-SQLAlchemy==2.0

Flask-Script==2.0.5

Jinja2==2.8

Mako==1.0.1

MarkupSafe==0.23

SQLAlchemy==1.0.8

Werkzeug==0.10.4

alembic==0.8.0

itsdangerous==0.24

python-editor==0.3

wsgiref==0.1.2

```

For sanity, can you confirm unit tests still pass with alembic 0.8, which I believe was released a few days ago? Thanks!

|

closed

|

2015-08-17T18:38:27Z

|

2015-09-04T17:57:35Z

|

https://github.com/miguelgrinberg/Flask-Migrate/issues/73

|

[

"bug"

] |

espositocode

| 3

|

jina-ai/serve

|

fastapi

| 5,994

|

Dynamic k8s namespace for generating kubernetes yaml

|

**Describe the feature**

The current implementation of the Flow's `to_kubernetes_yaml` [requires `k8s_namespace` to be specified explicitly; otherwise it outputs the `default` namespace](https://github.com/jina-ai/jina/blob/34664ee8db0a0e593a6c71dd6476cbf266a80641/jina/orchestrate/flow/base.py#L2772C69-L2772C69). The namespace need not be set explicitly: it can be injected dynamically through metadata, which makes the generated templates more reusable.

For example:

```shell

kubectl apply -f "file.yaml" --namespace=prod

kubectl apply -f "file.yaml" --namespace=dev

```

**Your proposal**

We may accept that the Optional field can be `None` and propagate that when generating the yaml, in which case the generated yaml will include this for env injection:

```yaml

- name: K8S_NAMESPACE_NAME
  valueFrom:
    fieldRef:
      fieldPath: metadata.namespace

```

And of course we may remove the injection of namespace for all the generated objects in this case.
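A rough sketch of the proposed behaviour, in plain Python rather than Jina's actual code: `namespace_env` and `object_metadata` are hypothetical helper names, and the dict layout simply mirrors the YAML fragment in this proposal.

```python
from typing import Optional


def namespace_env(k8s_namespace: Optional[str]) -> dict:
    """Build the K8S_NAMESPACE_NAME env entry (hypothetical helper)."""
    if k8s_namespace is None:
        # No explicit namespace: let Kubernetes inject it via the downward API.
        return {
            "name": "K8S_NAMESPACE_NAME",
            "valueFrom": {"fieldRef": {"fieldPath": "metadata.namespace"}},
        }
    return {"name": "K8S_NAMESPACE_NAME", "value": k8s_namespace}


def object_metadata(name: str, k8s_namespace: Optional[str]) -> dict:
    """Leave out `namespace` so `kubectl apply --namespace=...` decides it."""
    metadata = {"name": name}
    if k8s_namespace is not None:
        metadata["namespace"] = k8s_namespace
    return metadata


print(namespace_env(None))
print(object_metadata("executor0", None))
print(object_metadata("executor0", "prod"))
```

With `None`, the same generated file can be applied to `prod` and `dev` unchanged; passing a string keeps today's explicit behaviour.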

More info from official docs

- https://kubernetes.io/docs/tasks/inject-data-application/environment-variable-expose-pod-information/

- https://kubernetes.io/docs/tasks/inject-data-application/downward-api-volume-expose-pod-information/

|

closed

|

2023-07-30T11:05:54Z

|

2024-06-06T00:18:51Z

|

https://github.com/jina-ai/serve/issues/5994

|

[

"Stale"

] |

sansmoraxz

| 25

|

suitenumerique/docs

|

django

| 113

|

🐛Editor difference with PDF

|

## Bug Report

Some properties of the editor are not reflected in the PDF (color / bg / alignment)

An issue was opened about it:

- [x] https://github.com/TypeCellOS/BlockNote/issues/893

## Demo

## Code

https://github.com/numerique-gouv/impress/blob/9c19b22a66766018f91262f9d1bd243cdecfa884/src/frontend/apps/impress/src/features/docs/doc-tools/components/ModalPDF.tsx#L88

|

closed

|

2024-07-01T12:50:13Z

|

2024-08-02T15:34:03Z

|

https://github.com/suitenumerique/docs/issues/113

|

[

"bug",

"enhancement",

"frontend"

] |

AntoLC

| 0

|

agronholm/anyio

|

asyncio

| 418

|

'get_coro' doesn't apply to a 'Task' object

|

Don't know if I should file it under `anyio` or `httpx`.

I have a FastAPI web app that makes some external calls with `httpx`. I sometimes (timeout involved? loop terminated elsewhere?) get the following error. I was unsuccessful at reproducing it in a minimal example, so please forgive me for just pasting the traceback.

As you can see in the first block, it is just a GET call with `httpx.AsyncClient`.

using

python 3.9 (dockerized with python:3.9-alpine3.14)

anyio 3.5.0

httpx 0.19.0

EDIT: The problem could be related to the use of nest-asyncio elsewhere in the app.

```

File "/usr/local/lib/python3.9/site-packages/httpx/_client.py", line 1740, in get

return await self.request(

File "/usr/local/lib/python3.9/site-packages/httpx/_client.py", line 1494, in request

response = await self.send(

File "/usr/local/lib/python3.9/site-packages/httpx/_client.py", line 1586, in send

response = await self._send_handling_auth(

File "/usr/local/lib/python3.9/site-packages/httpx/_client.py", line 1616, in _send_handling_auth

response = await self._send_handling_redirects(

File "/usr/local/lib/python3.9/site-packages/httpx/_client.py", line 1655, in _send_handling_redirects

response = await self._send_single_request(request, timeout)

File "/usr/local/lib/python3.9/site-packages/httpx/_client.py", line 1699, in _send_single_request

) = await transport.handle_async_request(

File "/usr/local/lib/python3.9/site-packages/httpx/_transports/default.py", line 281, in handle_async_request

) = await self._pool.handle_async_request(

File "/usr/local/lib/python3.9/site-packages/httpcore/_async/connection_pool.py", line 219, in handle_async_request

async with self._connection_acquiry_lock:

File "/usr/local/lib/python3.9/site-packages/httpcore/_backends/base.py", line 76, in __aenter__

await self.acquire()

File "/usr/local/lib/python3.9/site-packages/httpcore/_backends/anyio.py", line 104, in acquire

await self._lock.acquire()

File "/usr/local/lib/python3.9/site-packages/anyio/_core/_synchronization.py", line 119, in acquire

self.acquire_nowait()

File "/usr/local/lib/python3.9/site-packages/anyio/_core/_synchronization.py", line 150, in acquire_nowait

task = get_current_task()

File "/usr/local/lib/python3.9/site-packages/anyio/_core/_testing.py", line 59, in get_current_task

return get_asynclib().get_current_task()

File "/usr/local/lib/python3.9/site-packages/anyio/_backends/_asyncio.py", line 1850, in get_current_task

return _create_task_info(current_task()) # type: ignore[arg-type]

File "/usr/local/lib/python3.9/site-packages/anyio/_backends/_asyncio.py", line 1846, in _create_task_info

return TaskInfo(id(task), parent_id, name, get_coro(task))

TypeError: descriptor 'get_coro' for '_asyncio.Task' objects doesn't apply to a 'Task' object

```
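The error message is what CPython raises when a method descriptor of one class is called with an instance of an unrelated class. The sketch below reproduces that class of error without `nest-asyncio`. It assumes a CPython build where `asyncio.Task` is the C-accelerated `_asyncio.Task` (on other builds the unbound call may fail differently), and it is only a guess at the mechanism, not a confirmed diagnosis.

```python
import asyncio


async def main():
    task = asyncio.current_task()

    # Calling the method on the instance works for any Task implementation.
    coro = task.get_coro()

    # Calling the unbound descriptor with a foreign object fails; on
    # C-accelerated CPython this is the same TypeError family as above.
    try:
        asyncio.Task.get_coro(object())
        error = None
    except (TypeError, AttributeError) as exc:
        error = exc

    return coro, error


main_coro = main()
returned_coro, error = asyncio.run(main_coro)
print(returned_coro is main_coro)
print(type(error).__name__, error)
```

If something in the app swaps in a different `Task` class, code that calls `asyncio.Task.get_coro(task)` instead of `task.get_coro()` would hit exactly this mismatch.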

|

closed

|

2022-02-10T10:26:31Z

|

2022-05-08T19:08:01Z

|

https://github.com/agronholm/anyio/issues/418

|

[] |

jacopo-exact

| 7

|

pytest-dev/pytest-cov

|

pytest

| 605

|

Maximum coverage in minimal time

|

# Summary

Given a project where tests have been added incrementally over time and there is a significant amount of overlap between tests, I'd like to be able to generate a list of tests that creates maximum coverage in minimal time. Clearly this is a pure coverage approach and doesn't guarantee that functional coverage is maintained, but this could be a good approach to identifying redundant tests.

I have a quick proof-of-concept that's not integrated into pytest that:

* runs all tests with `pytest-cov` and `--durations=0`

* processes `CoverageData` and the output of `--durations=0` to generate a list of arcs/lines that are covered for each context

* reduces the list of subsets using the set cover algorithm

* optionally applies a coverage 'confidence' in the event you want a faster smoke test that has reduced coverage (say 95%).
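The reduction step can be sketched as a greedy approximation of weighted set cover. This is not the proof-of-concept itself: `select_tests`, the `coverage` mapping (test name to covered lines) and the `durations` mapping are assumed shapes for data that would come from `CoverageData` contexts and `--durations=0`.

```python
from typing import Dict, List, Set


def select_tests(
    coverage: Dict[str, Set[str]],
    durations: Dict[str, float],
    confidence: float = 1.0,
) -> List[str]:
    """Greedy weighted set cover: pick tests by new lines covered per second."""
    universe = set().union(*coverage.values()) if coverage else set()
    target = confidence * len(universe)
    covered: Set[str] = set()
    chosen: List[str] = []
    remaining = dict(coverage)

    while len(covered) < target and remaining:
        # Score = newly covered lines per unit of run time.
        def score(test: str) -> float:
            gain = len(remaining[test] - covered)
            return gain / max(durations.get(test, 0.0), 1e-9)

        best = max(remaining, key=score)
        if not remaining[best] - covered:
            break  # no remaining test adds coverage
        chosen.append(best)
        covered |= remaining.pop(best)

    return chosen


# Made-up example data, for illustration only.
coverage = {
    "test_a": {"m.py:1", "m.py:2", "m.py:3"},
    "test_b": {"m.py:1", "m.py:2"},
    "test_c": {"m.py:3", "m.py:4"},
    "test_d": {"m.py:4"},
}
durations = {"test_a": 3.0, "test_b": 1.0, "test_c": 1.5, "test_d": 1.0}

print(select_tests(coverage, durations))
print(select_tests(coverage, durations, confidence=0.5))
```

Greedy selection does not guarantee the optimal subset, but it is simple and usually close. The `confidence` argument stops early once the requested fraction of lines is covered.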

I am happy to work on a PR and include tests, but before I do I wanted to gauge fit to your project's goals and if you'd rather not have this feature, I can always create a separate plugin for people who want it.

|

closed

|

2023-08-10T11:57:49Z

|

2023-12-11T09:26:55Z

|

https://github.com/pytest-dev/pytest-cov/issues/605

|

[] |

masaccio

| 5

|

horovod/horovod

|

deep-learning

| 3,162

|

Spark with Horovod fails with py4j.protocol.Py4JJavaError

|

**Environment:**

1. Framework: TensorFlow, Keras

2. Framework version: tensorflow-2.4.3, keras-2.6.0

3. Horovod version: horovod-0.22.1

4. MPI version:

5. CUDA version:

6. NCCL version:

7. Python version: python-3.6.9

8. Spark / PySpark version: Spark-3.1.2

9. Ray version:

10. OS and version: Ubuntu 18

11. GCC version: gcc-7.5.0

12. CMake version: cmake-3.21.2

When running the sample script keras_spark_rossmann_estimator.py, spark app fails at model training with the following error:

```

Total params: 2,715,603

Trainable params: 2,715,567

Non-trainable params: 36

__________________________________________________________________________________________________

/home/cc/.local/lib/python3.6/site-packages/keras/optimizer_v2/optimizer_v2.py:356: UserWarning: The `lr` argument is deprecated, use `learning_rate` instead.

"The `lr` argument is deprecated, use `learning_rate` instead.")

num_partitions=80

writing dataframes

train_data_path=file:///tmp/intermediate_train_data.0

val_data_path=file:///tmp/intermediate_val_data.0

train_partitions=76===========================================> (15 + 1) / 16]

val_partitions=8

/home/cc/.local/lib/python3.6/site-packages/horovod/spark/common/util.py:479: FutureWarning: The 'field_by_name' method is deprecated, use 'field' instead

metadata, avg_row_size = make_metadata_dictionary(train_data_schema)

train_rows=806871

val_rows=37467

Exception in thread Thread-3: (0 + 8) / 8]

Traceback (most recent call last):

File "/usr/lib/python3.6/threading.py", line 916, in _bootstrap_inner

self.run()

File "/usr/lib/python3.6/threading.py", line 864, in run

self._target(*self._args, **self._kwargs)

File "/home/cc/.local/lib/python3.6/site-packages/horovod/spark/runner.py", line 140, in run_spark

result = procs.mapPartitionsWithIndex(mapper).collect()

File "/usr/local/lib/python3.6/dist-packages/pyspark/rdd.py", line 949, in collect

sock_info = self.ctx._jvm.PythonRDD.collectAndServe(self._jrdd.rdd())

File "/home/cc/.local/lib/python3.6/site-packages/py4j/java_gateway.py", line 1310, in __call__

answer, self.gateway_client, self.target_id, self.name)

File "/usr/local/lib/python3.6/dist-packages/pyspark/sql/utils.py", line 111, in deco

return f(*a, **kw)

File "/home/cc/.local/lib/python3.6/site-packages/py4j/protocol.py", line 328, in get_return_value

format(target_id, ".", name), value)

py4j.protocol.Py4JJavaError: An error occurred while calling z:org.apache.spark.api.python.PythonRDD.collectAndServe.

: org.apache.spark.SparkException: Job 63 cancelled part of cancelled job group horovod.spark.run.0

at org.apache.spark.scheduler.DAGScheduler.failJobAndIndependentStages(DAGScheduler.scala:2258)

at org.apache.spark.scheduler.DAGScheduler.handleJobCancellation(DAGScheduler.scala:2154)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleJobGroupCancelled$4(DAGScheduler.scala:1048)

at scala.runtime.java8.JFunction1$mcVI$sp.apply(JFunction1$mcVI$sp.java:23)

at scala.collection.mutable.HashSet.foreach(HashSet.scala:79)

at org.apache.spark.scheduler.DAGScheduler.handleJobGroupCancelled(DAGScheduler.scala:1047)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2407)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2387)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2376)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:868)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2196)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2217)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2236)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2261)

at org.apache.spark.rdd.RDD.$anonfun$collect$1(RDD.scala:1030)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:414)

at org.apache.spark.rdd.RDD.collect(RDD.scala:1029)

at org.apache.spark.api.python.PythonRDD$.collectAndServe(PythonRDD.scala:180)

at org.apache.spark.api.python.PythonRDD.collectAndServe(PythonRDD.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Thread.java:748)

Traceback (most recent call last):

File "keras_spark_rossmann_estimator.py", line 397, in <module>

keras_model = keras_estimator.fit(train_df).setOutputCols(['Sales_output'])

File "/home/cc/.local/lib/python3.6/site-packages/horovod/spark/common/estimator.py", line 35, in fit

return super(HorovodEstimator, self).fit(df, params)

File "/usr/local/lib/python3.6/dist-packages/pyspark/ml/base.py", line 161, in fit

return self._fit(dataset)

File "/home/cc/.local/lib/python3.6/site-packages/horovod/spark/common/estimator.py", line 81, in _fit

backend, train_rows, val_rows, metadata, avg_row_size, dataset_idx)

File "/home/cc/.local/lib/python3.6/site-packages/horovod/spark/keras/estimator.py", line 317, in _fit_on_prepared_data

env=env)

File "/home/cc/.local/lib/python3.6/site-packages/horovod/spark/common/backend.py", line 85, in run

**self._kwargs)

File "/home/cc/.local/lib/python3.6/site-packages/horovod/spark/runner.py", line 284, in run

_launch_job(use_mpi, use_gloo, settings, driver, env, stdout, stderr)

File "/home/cc/.local/lib/python3.6/site-packages/horovod/spark/runner.py", line 155, in _launch_job

settings.verbose)

File "/home/cc/.local/lib/python3.6/site-packages/horovod/runner/launch.py", line 706, in run_controller

gloo_run()

File "/home/cc/.local/lib/python3.6/site-packages/horovod/spark/runner.py", line 152, in <lambda>

run_controller(use_gloo, lambda: gloo_run(settings, nics, driver, env, stdout, stderr),

File "/home/cc/.local/lib/python3.6/site-packages/horovod/spark/gloo_run.py", line 67, in gloo_run

launch_gloo(command, exec_command, settings, nics, {}, server_ip)

File "/home/cc/.local/lib/python3.6/site-packages/horovod/runner/gloo_run.py", line 271, in launch_gloo

.format(name=name, code=exit_code))

RuntimeError: Horovod detected that one or more processes exited with non-zero status, thus causing the job to be terminated. The first process to do so was:

Process name: 0

Exit code: 255

```

This is followed by the following stack traces:

```

21/09/13 04:12:33 ERROR TransportRequestHandler: Error while invoking RpcHandler#receive() for one-way message.

org.apache.spark.SparkException: Could not find CoarseGrainedScheduler.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:176)

at org.apache.spark.rpc.netty.Dispatcher.postOneWayMessage(Dispatcher.scala:150)

at org.apache.spark.rpc.netty.NettyRpcHandler.receive(NettyRpcEnv.scala:691)

at org.apache.spark.network.server.TransportRequestHandler.processOneWayMessage(TransportRequestHandler.java:255)

at org.apache.spark.network.server.TransportRequestHandler.handle(TransportRequestHandler.java:111)

at org.apache.spark.network.server.TransportChannelHandler.channelRead0(TransportChannelHandler.java:140)

at org.apache.spark.network.server.TransportChannelHandler.channelRead0(TransportChannelHandler.java:53)

at io.netty.channel.SimpleChannelInboundHandler.channelRead(SimpleChannelInboundHandler.java:99)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at io.netty.handler.timeout.IdleStateHandler.channelRead(IdleStateHandler.java:286)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at io.netty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:103)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at org.apache.spark.network.util.TransportFrameDecoder.channelRead(TransportFrameDecoder.java:102)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:163)

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:714)

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:650)

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:576)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:493)

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:989)

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

at java.lang.Thread.run(Thread.java:748)

21/09/13 04:12:33 ERROR TransportRequestHandler: Error while invoking RpcHandler#receive() for one-way message.

org.apache.spark.SparkException: Could not find CoarseGrainedScheduler.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:176)

at org.apache.spark.rpc.netty.Dispatcher.postOneWayMessage(Dispatcher.scala:150)

at org.apache.spark.rpc.netty.NettyRpcHandler.receive(NettyRpcEnv.scala:691)

at org.apache.spark.network.server.TransportRequestHandler.processOneWayMessage(TransportRequestHandler.java:255)

at org.apache.spark.network.server.TransportRequestHandler.handle(TransportRequestHandler.java:111)

at org.apache.spark.network.server.TransportChannelHandler.channelRead0(TransportChannelHandler.java:140)

at org.apache.spark.network.server.TransportChannelHandler.channelRead0(TransportChannelHandler.java:53)

at io.netty.channel.SimpleChannelInboundHandler.channelRead(SimpleChannelInboundHandler.java:99)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at io.netty.handler.timeout.IdleStateHandler.channelRead(IdleStateHandler.java:286)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at io.netty.handler.codec.MessageToMessageDecoder.channelRead(MessageToMessageDecoder.java:103)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at org.apache.spark.network.util.TransportFrameDecoder.channelRead(TransportFrameDecoder.java:102)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:163)

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:714)

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:650)

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:576)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:493)

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:989)

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

at java.lang.Thread.run(Thread.java:748)

```

|

open

|

2021-09-13T05:06:05Z

|

2021-09-14T22:34:34Z

|

https://github.com/horovod/horovod/issues/3162

|

[

"bug"

] |

aakash-sharma

| 2

|

scikit-optimize/scikit-optimize

|

scikit-learn

| 569

|

ImportError: cannot import name MaskedArray

|

I got scikit-optimize from your pypi release [here](https://pypi.python.org/pypi/scikit-optimize), where it says I need scikit-learn >= 0.18. "I'm in luck." thought I, for 0.18 I have. But trying to import skopt I get an error that MaskedArray can not be imported from sklearn.utils.fixes, and trying to import that class from there myself also yields an error. *Does scikit-optimize actually have a dependency on a later version of sklearn?*

|

closed

|

2017-12-11T19:31:36Z

|

2023-06-20T19:06:05Z

|

https://github.com/scikit-optimize/scikit-optimize/issues/569

|

[] |

pavelkomarov

| 25

|

KevinMusgrave/pytorch-metric-learning

|

computer-vision

| 138

|

How to use a queue of negative samples as done in MoCo

|

Hi Kevin,

I wonder if such an extended NT-Xent loss could be implemented?

The NT-Xent implemented in this package can return the pairwise loss when given a mini-batch and a label array. I wonder if for the purpose of increasing negative samples to make the task harder, could we directly use this package?

To be more specific, I use @JohnGiorgi's example:

`import torch`

`from pytorch_metric_learning.losses import NTXentLoss`

`batch_size = 16`

`embedding_dim = 512`

`anchor_embeddings = torch.randn(batch_size, embedding_dim)`

`positive_embeddings = torch.randn(batch_size, embedding_dim)`

`embeddings = torch.cat((anchor_embeddings, positive_embeddings))`

`indices = torch.arange(0, anchor_embeddings.size(0), device=anchor_embeddings.device)`

`labels = torch.cat((indices, indices))`

`loss = NTXentLoss(temperature=0.10)`

`loss(embeddings, labels)`

Assuming I have another list of negative samples with size 224 * 512, how could I use the package?
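One possible formulation of the extended loss, stated here as an assumption rather than as what the package implements: for anchor $a_i$ with positive $p_i$, temperature $\tau$, similarity $s(\cdot,\cdot)$, in-batch negatives $x_j$, and a queue of $K$ extra negatives $q_k$ (here $K = 224$), the queue only adds terms to the denominator:

$$
\ell_i = -\log \frac{\exp\big(s(a_i, p_i)/\tau\big)}{\exp\big(s(a_i, p_i)/\tau\big) + \sum_{j \neq i} \exp\big(s(a_i, x_j)/\tau\big) + \sum_{k=1}^{K} \exp\big(s(a_i, q_k)/\tau\big)}
$$

Setting $K = 0$ recovers the usual in-batch form, so the question is whether the loss can accept the extra $q_k$ as negatives only.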

I would really appreciate it if you could provide this function, since it would be very useful for making the contrastive learning task harder when resources are limited.

|

closed

|

2020-07-14T01:22:43Z

|

2020-07-25T14:21:05Z

|

https://github.com/KevinMusgrave/pytorch-metric-learning/issues/138

|

[

"Frequently Asked Questions",

"question"

] |

CSerxy

| 13

|

inventree/InvenTree

|

django

| 9,196

|

[FR] PUI: Please add IPN to supplier parts table

|

### Please verify that this feature request has NOT been suggested before.

- [x] I checked and didn't find a similar feature request

### Problem statement

With reference to #9179: The IPN is missing in the supplierpart table. In CUI it was combined with the name. An additional column is also fine.

### Suggested solution

Either as in CUI or additional column

### Describe alternatives you've considered

We can stay with CUI

### Examples of other systems

_No response_

### Do you want to develop this?

- [ ] I want to develop this.

|

closed

|

2025-02-27T06:56:01Z

|

2025-02-27T12:18:00Z

|

https://github.com/inventree/InvenTree/issues/9196

|

[

"enhancement",

"User Interface"

] |

SergeoLacruz

| 3

|

graphql-python/graphene-django

|

graphql

| 750

|

Bug: Supposedly wrong types in query with filter_fields since 2.4.0

|

### Problem

When using filter_fields I get an error about using wrong types which started appearing in 2.4.0.

`Variable "startedAtNull" of type "Boolean" used in position expecting type "DateTime".` The error does not occur with graphene-django 2.3.2

### Context

- using django-filter 2.2.0

- django 2.4.0

###

**Schema.py**

```
DATETIME_FILTERS = ['exact', 'isnull', 'lt', 'lte', 'gt', 'gte', 'month', 'year', 'date']

class OrderNode(DjangoObjectType):
    class Meta:
        model = Order
        exclude = ('tenant', )
        filter_fields = {
            'id': ['exact'],
            'start_at': DATETIME_FILTERS,
            'finish_at': DATETIME_FILTERS,
            'finished_at': DATETIME_FILTERS,
            'started_at': DATETIME_FILTERS,
        }
        interfaces = (OrderNodeInterface,)
```

**Query:**

```
ORDERS_QUERY = '''
query order(
  $tenant: String
  $projectId: ID
  $startedAtNull: Boolean
) {
  orders(
    tenant: $tenant
    project_Id: $projectId
    startedAt_Isnull: $startedAtNull
  ) {
    edges {
      node {
        id,
        city
      }
    }
  }
}
'''
```

**Result:**

`Variable "startedAtNull" of type "Boolean" used in position expecting type "DateTime".`

### Solution

I am confident it is related to this PR: https://github.com/graphql-python/graphene-django/pull/682/files . In graphene_django/filter/utils.py, the way the type of a field is retrieved was changed. Or maybe I misunderstood the changelog.
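To make the expected behaviour concrete, here is a minimal sketch of the rule I would expect for `isnull` lookups. It is not the graphene-django implementation; the function name `argument_type_for_lookup` and the use of plain type-name strings are assumptions for illustration only.

```python
def argument_type_for_lookup(field_type, lookup_expr):
    """Return the GraphQL argument type name for a filter lookup.

    Illustrative rule only: `isnull` always takes a Boolean, regardless of
    the type of the model field; every other lookup keeps the field type.
    """
    if lookup_expr == "isnull":
        return "Boolean"
    return field_type


started_at_isnull = argument_type_for_lookup("DateTime", "isnull")
started_at_exact = argument_type_for_lookup("DateTime", "exact")
```

Other transforms such as `year` or `date` may need their own handling; that is outside the scope of this sketch.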

|

closed

|

2019-08-16T09:43:08Z

|

2019-10-10T08:20:16Z

|

https://github.com/graphql-python/graphene-django/issues/750

|

[

"🐛bug"

] |

lassesteffen

| 10

|

plotly/dash-table

|

dash

| 600

|

Incorrect cell validation / coercion

|

1 - Validation default is not applied correctly when its value is `0` (number) -- the value is falsy and trips the default case

2 - Deleting cell content with `backspace` does not run validation

1 - This is simple, update https://github.com/plotly/dash-table/blob/dev/src/dash-table/type/reconcile.ts#L67 to do a `R.isNil` check instead

2 - This is a bit more involved, I suggest that we start using the `on_change` settings applicable to each cell and use the reconciliation result of `null` if the reconciliation is successful. Otherwise, continue using `''` as we do right now.
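As a Python analogue of point 1 (the real code is TypeScript, and the function names below are hypothetical), the difference between a truthiness check and a nil check looks roughly like this:

```python
def resolve_default_truthy(default, fallback):
    # Truthiness check: a configured default of 0 is treated as "not set".
    return default if default else fallback


def resolve_default_nil(default, fallback):
    # Nil check (the analogue of R.isNil): only None counts as "not set".
    return fallback if default is None else default


with_truthy_check = resolve_default_truthy(0, "no default")
with_nil_check = resolve_default_nil(0, "no default")
```

With the truthiness check a default of `0` is silently replaced by the fallback, while the nil check keeps it.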

|

closed

|

2019-09-24T12:34:57Z

|

2019-09-24T16:28:50Z

|

https://github.com/plotly/dash-table/issues/600

|

[

"dash-type-bug",

"size: 0.5"

] |

Marc-Andre-Rivet

| 0

|

stanfordnlp/stanza

|

nlp

| 808

|

Stanza sluggish with multiprocessing

|

Test case below.

Stanza is fast if `parallel == 1`, but becomes sluggish when distributed among processes.

````
import os, multiprocessing, time
import stanza

parallel = os.cpu_count()
language = 'cs'
sentence = 'ponuže dobře al ja nemam i zpětlou vas dbu od policiei teto ty teto věci najitam spravným orbganutyperypřecuji nas praco zdněspotaměcham'

stanza.download( language )

def nlp_stanza( ignore ):
    nlp_pipeline = stanza.Pipeline( language, logging_level='WARN', use_gpu=False )
    for i in range(50):
        s = int(time.process_time()*1000)
        nlp_pipeline( sentence )
        e = int(time.process_time()*1000)
        print( os.getpid(), str(e-s)+'ms:', sentence )

pool = multiprocessing.Pool( processes=parallel )
pool.map( nlp_stanza, range(parallel) )
pool.close()  # join() requires the pool to be closed first
pool.join()
````

|

closed

|

2021-09-16T12:41:13Z

|

2021-09-20T12:35:40Z

|

https://github.com/stanfordnlp/stanza/issues/808

|

[

"bug"

] |

doublex

| 1

|

deepinsight/insightface

|

pytorch

| 2,328

|

batch SimilarityTransform

|

The following code implements face alignment using functions from the `skimage` library. In cases where there are a small number of faces, using this function for face alignment can yield satisfactory results. However, when dealing with a large collection of faces, I'm looking for a method to calculate similarity transformation matrices in batches. I have already achieved batch face alignment using similarity transformation matrices of shape [b, 2, 3] with the `kornia` library. However, I'm struggling to find a way to calculate similarity transformation matrices in batches while maintaining consistent results with the computation performed by `skimage`. Furthermore, I'm hoping to accomplish this using the PyTorch framework. I have attempted to replicate the computation of similarity transformation matrices from `skimage` using PyTorch, but the results do not match. This discrepancy could impact the accuracy of subsequent face recognition tasks. Has anyone successfully implemented this? Any help would be greatly appreciated.

```
from skimage import transform as trans

# src, dst: corresponding landmark coordinates for one face
tform = trans.SimilarityTransform()
tform.estimate(src,dst)
M = tform.params[0:2,:]
```

The results of `trans.SimilarityTransform()` can be stacked into an [n, 2, 3] matrix, which can then be used with `kornia.geometry.transform.warp_affine` along with the corresponding images to perform batch alignment tasks.
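For reference, a minimal pure-Python sketch of a closed-form least-squares 2D similarity estimate (rotation, uniform scale, translation, no reflection). The function names are hypothetical, and this has not been checked against `skimage`; in non-degenerate cases it should minimise the same objective, but that is an assumption to verify.

```python
def estimate_similarity_2d(src, dst):
    """Least-squares 2D similarity transform mapping src points onto dst.

    src, dst: equal-length sequences of (x, y) pairs (at least 2 distinct points).
    Returns a 2x3 matrix [[a, -b, tx], [b, a, ty]] as nested lists.
    """
    n = len(src)
    # Centroids of both point sets.
    mx = sum(p[0] for p in src) / n
    my = sum(p[1] for p in src) / n
    ux = sum(p[0] for p in dst) / n
    uy = sum(p[1] for p in dst) / n
    num_a = num_b = den = 0.0
    for (x, y), (u, v) in zip(src, dst):
        x, y, u, v = x - mx, y - my, u - ux, v - uy
        num_a += x * u + y * v   # contribution to scale * cos(theta)
        num_b += x * v - y * u   # contribution to scale * sin(theta)
        den += x * x + y * y     # variance of the source points
    a, b = num_a / den, num_b / den
    # Translation that maps the source centroid onto the target centroid.
    tx = ux - (a * mx - b * my)
    ty = uy - (b * mx + a * my)
    return [[a, -b, tx], [b, a, ty]]


def estimate_similarity_batch(src_batch, dst_batch):
    """Apply the estimator to every (src, dst) pair; result has shape [n][2][3]."""
    return [estimate_similarity_2d(s, d) for s, d in zip(src_batch, dst_batch)]


# Example: dst is src scaled by 2, rotated by 90 degrees, shifted by (5, -1).
src = [(0, 0), (1, 0), (0, 1), (2, 3)]
dst = [(5, -1), (5, 1), (3, -1), (-1, 3)]
matrices = estimate_similarity_batch([src], [dst])
```

Every quantity here is a sum over points, so the same formulas should carry over to tensor operations with a leading batch dimension.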

|

open

|

2023-06-06T03:29:15Z

|

2023-07-11T02:55:29Z

|

https://github.com/deepinsight/insightface/issues/2328

|

[] |

muqishan

| 1

|

python-visualization/folium

|

data-visualization

| 1,850

|

export / save Folium map as static image (PNG)

|

Code:

```python
colormap = branca.colormap.linear.plasma.scale(vmin, vmax).to_step(100)
r_map = folium.Map(location=[lat, long], tiles='openstreetmap')

for i in range(0, len(df)):
    r_lat = ...
    r_long = ...
    r_score = ...
    Circle(location=[r_lat, r_long], radius=5, color=colormap(r_score)).add_to(r_map)

colormap.add_to(r_map)
r_map
```

This works fine, but there seems to be no way to generate the map, optionally, as a fixed-size, non-zoomable bitmap in a decent format like PNG.

A side-effect of this is - the map does not show in a PDF export of the Jupyter notebook where the map is generated.

It would be nice if `folium.Map()` had a way to generate fixed bitmap output, e.g. like Matplotlib.pyplot.

I've read the documentation, searched the web, there really seems to be no good solution other than a Selenium hack which requires too many moving parts and extra libraries. This should be instead a standard Folium feature.

To be clear, I am running all this code in a Jupyter notebook.

I don't think I have the time to implement a PR myself.

|

closed

|

2023-12-22T21:15:47Z

|

2024-05-25T14:11:19Z

|

https://github.com/python-visualization/folium/issues/1850

|

[] |

FlorinAndrei

| 5

|

tradingstrategy-ai/web3-ethereum-defi

|

pytest

| 125

|

Error when loading last N blocks using JSONRPCReorganisationMonitor

|

How do you load only the last N blocks? I believe this code should load only the last 5 blocks, but it errors when adding new blocks.

```
reorg_mon = JSONRPCReorganisationMonitor(web3, check_depth=30)
reorg_mon.load_initial_block_headers(block_count=5)

while True:
    try:
        # Figure out the next good unscanned block range,
        # and fetch block headers and timestamps for this block range
        chain_reorg_resolution = reorg_mon.update_chain()

        if chain_reorg_resolution.reorg_detected:
            logger.info(f"Chain reorganisation data updated: {chain_reorg_resolution}")

        # Read specified events in block range
        for log_result in read_events(
            web3,
            start_block=chain_reorg_resolution.latest_block_with_good_data + 1,
            end_block=chain_reorg_resolution.last_live_block,
            filter=filter,
            notify=None,
            chunk_size=100,
            context=token_cache,
            extract_timestamps=None,
            reorg_mon=reorg_mon,
        ):
            pass
```

```

INFO:eth_defi.event_reader.reorganisation_monitor:figure_reorganisation_and_new_blocks(), range 17,285,423 - 17,285,443, last block we have is 17,285,443, check depth is 20

ERROR: LoadError: Python: AssertionError: Blocks must be added in order. Last block we have: 17285443, the new record is: BlockHeader(block_number=17285423, block_hash='0x8d481922bd607150c9f3299004a113e44955327770ab04ed10de115e2172d6fe', timestamp=1684400615)

Python stacktrace:

[1] add_block

@ eth_defi.event_reader.reorganisation_monitor ~/Library/Caches/pypoetry/virtualenvs/cryptopy-5siZoxZ4-py3.10/lib/python3.10/site-packages/eth_defi/event_reader/reorganisation_monitor.py:324

[2] figure_reorganisation_and_new_blocks

@ eth_defi.event_reader.reorganisation_monitor ~/Library/Caches/pypoetry/virtualenvs/cryptopy-5siZoxZ4-py3.10/lib/python3.10/site-packages/eth_defi/event_reader/reorganisation_monitor.py:396

[3] update_chain

```

|

closed

|

2023-05-18T09:10:38Z

|

2023-08-08T11:47:19Z

|

https://github.com/tradingstrategy-ai/web3-ethereum-defi/issues/125

|

[] |

bryaan

| 2

|

graphdeco-inria/gaussian-splatting

|

computer-vision

| 850

|

basic question from beginner of 3d reconstruction using 3DGS

|

Hello! I am trying to start 3d reconstruction with your 3DGS software.

I'd like to ask some basic questions.

1. Does 3DGS need the camera intrinsic parameters? Do they just improve 3D quality, or are they a must-have?

2. I am using Colmap to run SfM as a prerequisite before using train.py to make the 3DGS .ply file. I fed in 2800 images (10 fps from a movie) on a basic GPU with 16GB VRAM, and it took nearly 30 hours to make a .ply for 3DGS. Do you know any good way to speed this up, such as another tool?

3. So I should pass the .ply to train.py, right? Or what do you mean by "Colmap dataset" in the readme?

Thank you for your kind advice!

|

open

|

2024-06-15T15:19:30Z

|

2024-06-18T11:58:18Z

|

https://github.com/graphdeco-inria/gaussian-splatting/issues/850

|

[] |

RickMaruyama

| 1

|

hankcs/HanLP

|

nlp

| 1,806

|

File stream not closed properly

|

<!--

Thanks for finding a bug. Please fill in the form below carefully:

-->

**Describe the bug**

A clear and concise description of what the bug is.

The readVectorFile() method of the VectorsReader class does not close the file stream properly, which causes a resource leak.

**Code to reproduce the issue**

Provide a reproducible test case that is the bare minimum necessary to generate the problem.

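Call the train method of Word2VecTrainer, or create a new WordVectorModel object. While the process is running, try to delete the model file (for example model.txt); it cannot be deleted.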

```java

```

**Describe the current behavior**

A clear and concise description of what happened.

**Expected behavior**

A clear and concise description of what you expected to happen.

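After I use this jar to train a word vector model or to convert documents into vectors, the model file can never be deleted, and file resources pile up.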

**System information**

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): occurs on any system

- Python version:

- HanLP version: so far the problem has been found in versions 1.5 through 1.8.3

**Other info / logs**

Include any logs or source code that would be helpful to diagnose the problem. If including tracebacks, please include the full traceback. Large logs and files should be attached.

[VectorsReader.java](https://github.com/hankcs/HanLP/blob/v1.8.3/src/main/java/com/hankcs/hanlp/mining/word2vec/VectorsReader.java)

* [x] I've completed this form and searched the web for solutions.

<!-- ⬆️ Be sure to tick this box, otherwise your issue will be automatically deleted by the bot! -->

|

closed

|

2023-02-24T13:40:24Z

|

2023-02-25T01:02:15Z

|

https://github.com/hankcs/HanLP/issues/1806

|

[

"bug"

] |

zjqer

| 3

|

NullArray/AutoSploit

|

automation

| 406

|

Unhandled Exception (e195f1bf9)

|

Autosploit version: `3.0`

OS information: `Linux-4.18.0-kali2-amd64-x86_64-with-Kali-kali-rolling-kali-rolling`

Running context: `autosploit.py`

Error message: `global name 'Except' is not defined`

Error traceback:

```

Traceback (most recent call):

File "/root/Puffader/Autosploit/autosploit/main.py", line 113, in main

loaded_exploits = load_exploits(EXPLOIT_FILES_PATH)

File "/root/Puffader/Autosploit/lib/jsonize.py", line 61, in load_exploits

except Except:

NameError: global name 'Except' is not defined

```

Metasploit launched: `False`
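The traceback points at an `except Except:` clause. Python only evaluates the expression in an `except` clause when an exception actually reaches it, so a misspelled name like this goes unnoticed until something fails inside the `try` block. A minimal sketch of the corrected pattern, using a hypothetical stand-in for the loader rather than the actual AutoSploit code:

```python
import json


def load_exploits_sketch(text):
    """Hypothetical stand-in for the failing loader: parse JSON, fall back to []."""
    try:
        return json.loads(text)
    except Exception:  # `except Except:` would raise NameError at this point instead
        return []


parsed = load_exploits_sketch('["exploit/a", "exploit/b"]')
fallback = load_exploits_sketch("not valid json")
```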

|

closed

|

2019-01-24T09:40:39Z

|

2019-04-02T20:27:09Z

|

https://github.com/NullArray/AutoSploit/issues/406

|

[] |

AutosploitReporter

| 0

|

piskvorky/gensim

|

machine-learning

| 2,973

|

phrases.export_phrases() doesn't yield all bigrams

|

Hello and thank you for this tool,

phrases.export_phrases() doesn't yield all bigrams when some are part of a bigger n-gram.

If I create a Phrases object with

`phrases = gensim.models.phrases.Phrases(sentences, min_count=1, threshold=10, delimiter=b' ', scoring='default')`

on the following two sentences

New York City has the largest population of all the cities in the United States .

Every year, many travelers come to the United States to visit New York City .

`print(dict(phrases.export_phrases(sentences)))` only returns {b'New York': 11.5, b'United States': 11.5}. It should also return {b'York City': 11.5} however.

Line 187 of phrases.py should probably be changed to `last_uncommon = word`. This fixes the problem on my side and seems to be what the code was intended to do.

Thank you,

Olivier NC

macOS-10.14.5-x86_64-i386-64bit

Python 3.8.5 (v3.8.5:580fbb018f, Jul 20 2020, 12:11:27)

[Clang 6.0 (clang-600.0.57)]

Bits 64

NumPy 1.19.2

SciPy 1.5.2

gensim 3.8.3

FAST_VERSION 0

|

closed

|

2020-10-06T03:28:57Z

|

2020-10-09T00:04:27Z

|

https://github.com/piskvorky/gensim/issues/2973

|

[] |

o-nc

| 3

|

cvat-ai/cvat

|

pytorch

| 8,431

|

Toggle switch for mask point does not work anymore

|

For instance segmentation, I used to draw the first mask. For the second mask, which would always overlap the first mask, I usually held CTRL to make the points of mask 1 appear so that I could annotate a suitable overlap.

Now the function that makes the points of mask 1 appear is gone?! I cannot turn the points on with CTRL, which makes instance segmentation impossible for now. The only way to make the points appear, as shown in figure 1, is to hover over the mask. But this does not work in draw mode. As soon as I enter draw mode the points disappear, as seen in figure 2.

Please have a look at it. I'm sure it's just a small one-liner that stopped the point toggle from working with CTRL.

|

closed

|

2024-09-11T10:24:34Z

|

2024-09-11T11:11:25Z

|

https://github.com/cvat-ai/cvat/issues/8431

|

[] |

hasano20

| 2

|

encode/apistar

|

api

| 71

|

Interactive API Documentation

|

We'll be pulling in REST framework's existing interactive API docs.

It's gonna be ✨fabulous✨.

|

closed

|

2017-04-20T14:07:21Z

|

2017-08-04T15:06:37Z

|

https://github.com/encode/apistar/issues/71

|

[

"Baseline feature"

] |

tomchristie

| 6

|

pytorch/pytorch

|

machine-learning

| 149,516

|

```StateDictOptions``` in combination with ```cpu_offload=True``` and ```strict=False``` not working

|

### 🐛 Describe the bug

When running the following for distributed weight loading:

```
options = StateDictOptions(
    full_state_dict=True,
    broadcast_from_rank0=True,
    strict=False,
    cpu_offload=True,
)
set_model_state_dict(model=model, model_state_dict=weights, options=options)
```

I am getting a `KeyError` for keys that are not in the model.

I believe it has to do with not checking for strict at this point:

https://github.com/pytorch/pytorch/blob/main/torch/distributed/_state_dict_utils.py#L656

Which only appears to be done afterwards.
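To illustrate the behaviour I would expect from `strict=False`, here is a minimal sketch with plain dictionaries. It is not the PyTorch implementation; the helper name `select_loadable_keys` is hypothetical.

```python
def select_loadable_keys(incoming, model_keys, strict):
    """Split an incoming state dict into loadable entries and unexpected keys.

    With strict=True, unexpected keys raise; with strict=False they are
    dropped before any per-key lookup happens.
    """
    unexpected = [key for key in incoming if key not in model_keys]
    if strict and unexpected:
        raise KeyError(f"Unexpected keys: {unexpected}")
    loadable = {key: value for key, value in incoming.items() if key in model_keys}
    return loadable, unexpected


weights_sketch = {"layer.weight": 1.0, "extra.bias": 2.0}
loadable, unexpected = select_loadable_keys(weights_sketch, {"layer.weight"}, strict=False)
```

The point is only the ordering: the strictness check and the filtering happen before anything indexes into the model's own keys.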

### Versions

Current main

cc @LucasLLC @pradeepfn

|

open

|

2025-03-19T14:53:37Z

|

2025-03-20T19:19:49Z

|

https://github.com/pytorch/pytorch/issues/149516

|

[

"oncall: distributed checkpointing"

] |

psinger

| 0

|

getsentry/sentry

|

django

| 86,783

|

Add ttid_contribution_rate() function

|

### Problem Statement

Add ttid_contribution_rate() to eap via rpc

### Solution Brainstorm

_No response_

### Product Area

Unknown

|

closed

|

2025-03-11T13:16:41Z

|

2025-03-11T17:46:36Z

|

https://github.com/getsentry/sentry/issues/86783

|

[] |

DominikB2014

| 0

|

Evil0ctal/Douyin_TikTok_Download_API

|

fastapi

| 98

|

I can't use shortcuts on ios

|

I have updated to the latest version 6.0, but the shortcut still tells me to update, which leaves me unable to download videos from TikTok.

I use iphone X iOS 15.5

|

closed

|

2022-11-08T18:48:19Z

|

2022-11-10T08:00:58Z

|

https://github.com/Evil0ctal/Douyin_TikTok_Download_API/issues/98

|

[

"API Down",

"Fixed"

] |

beelyhot5

| 1

|

timkpaine/lantern

|

plotly

| 169

|

Chop out email to separate jlab plugin

|

closed

|

2018-07-22T17:57:18Z

|

2018-08-10T19:55:22Z

|

https://github.com/timkpaine/lantern/issues/169

|

[

"feature"

] |

timkpaine

| 2

|

|

deepspeedai/DeepSpeed

|

deep-learning

| 6,729

|

GPU mem doesn't release after delete tensors in optimizer.bit16groups

|

I'm developing a peft algorithm, basically it does the following:

Say the training process has 30 steps in total,

1. For global step 0\~9: train `lmhead` + `layer_0`

2. For global step 10\~19: train `lmhead` + `layer_1`

3. For global step 20\~29: train `lmhead` + `layer_0`

The key point is that, after the switch, the states of `lmhead` are expected to be kept, while the states of the body layers should be deleted.

For example, the `step` in `lmhead` state should go from 0 to 29, while `step` for body layers count from 0 after every switch, even if the layer has been selected before.

In this case, the parameter group looks like:

```python
optimizer_grouped_parameters = [
    {
        # this should always be lmhead:
        # `requires_grad` and `not in active_layers_names` rules out all body layers
        # `in decay_parameters` rules out ln
        "params": [
            p for n, p in opt_model.named_parameters() if (
                n not in self.active_layers_names and n in decay_parameters and p.requires_grad)
        ],
        "weight_decay": self.args.weight_decay,
    },
    {
        # this should always be ln (outside of body layers)
        "params": [
            p for n, p in opt_model.named_parameters() if (
                n not in self.active_layers_names and n not in decay_parameters and p.requires_grad)
        ],
        "weight_decay": 0.0,
    },
    {
        # selected body layers with decay
        "params": [
            p for n, p in opt_model.named_parameters() if (
                n in self.active_layers_names and n in decay_parameters and p.requires_grad)
        ],
        "weight_decay": self.args.weight_decay,
    },
    {
        # selected body layers without decay
        "params": [
            p for n, p in opt_model.named_parameters() if (
                n in self.active_layers_names and n not in decay_parameters and p.requires_grad)
        ],
        "weight_decay": 0.0,
    },
]
```

The first two groups represent layers whose states should be kept, while the last two will change.

The approach I came up with is to partially "re-init" the optimizer at the beginning of each step that performs the switch. I modified my huggingface trainer based on the ds optimizer `__init__` method:

```python
def _reinit_deepspeed_zero_optimizer_params(self, optimizer: DeepSpeedZeroOptimizer):
    num_non_lisa_body_layer_pgs = len(self.optimizer.param_groups) - len(LISA_BODY_LAYER_PARAM_GROUPS_IDX)
    objs = [
        optimizer.bit16_groups,
        optimizer.round_robin_bit16_groups,
        optimizer.round_robin_bit16_indices,
        optimizer.round_robin_bit16_meta,
        optimizer.bit16_groups_flat,
        optimizer.groups_padding,
        optimizer.parallel_partitioned_bit16_groups,
        optimizer.single_partition_of_fp32_groups,
        optimizer.partition_size,
        optimizer.params_in_partition,
        optimizer.params_not_in_partition,
        optimizer.first_offset
    ]
    for obj in objs:
        del obj[num_non_lisa_body_layer_pgs:]
    empty_cache()
    torch.cuda.empty_cache()
    gc.collect()

    for i, param_group in enumerate(optimizer.optimizer.param_groups):
        if i in range(num_non_lisa_body_layer_pgs):
            # skip lmhead, ln, etc.
            continue

        ## same as deepspeed/runtime/zero/stage_1_and_2.py DeepSpeedZeroOptimizer.__init__ below
        partition_id = dist.get_rank(group=optimizer.real_dp_process_group[i])

        # push this group to list before modify
        # TODO: Explore simplification that avoids the extra book-keeping by pushing the reordered group
        trainable_parameters = []
        for param in param_group['params']:
            if param.requires_grad:
                param.grad_accum = None
                trainable_parameters.append(param)
        optimizer.bit16_groups.append(trainable_parameters)

        # not sure why apex was cloning the weights before flattening
        # removing cloning here
        see_memory_usage(f"Before moving param group {i} to CPU")
        # move all the parameters to cpu to free up GPU space for creating flat buffer
        # Create temp CPU param copies, free accelerator tensors
        orig_group_numel = 0
        for param in optimizer.bit16_groups[i]:
            orig_group_numel += param.numel()
            param.cpu_data = param.data.cpu()
            param.data = torch.empty(1).to(param.device)

        empty_cache()
        see_memory_usage(f"After moving param group {i} to CPU", force=False)

        # Reorder group parameters for load balancing of gradient partitioning during backward among ranks.
        # This ensures that gradients are reduced in a fashion such that ownership round robins among the ranks.
        # For example, rather than 3 gradients (g_n+2, g_n+1, g_n) that are reduced consecutively belonging
        # to the same rank, instead they will belong to 3 ranks (r_m+2, r_m+1, r_m).
        if optimizer.round_robin_gradients:
            round_robin_tensors, round_robin_indices = optimizer._round_robin_reorder(
                optimizer.bit16_groups[i], dist.get_world_size(group=optimizer.real_dp_process_group[i]))
        else:
            round_robin_tensors = optimizer.bit16_groups[i]
            round_robin_indices = list(range(len(optimizer.bit16_groups[i])))

        optimizer.round_robin_bit16_groups.append(round_robin_tensors)
        optimizer.round_robin_bit16_indices.append(round_robin_indices)

        # Create meta tensors list, ordered according to round_robin_tensors
        meta_tensors = []
        for param in round_robin_tensors:
            meta_tensors.append(torch.zeros_like(param.cpu_data, device="meta"))
        optimizer.round_robin_bit16_meta.append(meta_tensors)

        # create flat buffer in CPU
        flattened_buffer = optimizer.flatten_dense_tensors_aligned(
            optimizer.round_robin_bit16_groups[i],
            optimizer.nccl_start_alignment_factor * dist.get_world_size(group=optimizer.real_dp_process_group[i]),
            use_cpu_data=True)

        # free temp CPU params
        for param in optimizer.bit16_groups[i]:
            del param.cpu_data

        # Move CPU flat tensor to the accelerator memory.
        optimizer.bit16_groups_flat.append(flattened_buffer.to(get_accelerator().current_device_name()))
        del flattened_buffer

        see_memory_usage(f"After flattening and moving param group {i} to GPU", force=False)

        # Record padding required for alignment
        if partition_id == dist.get_world_size(group=optimizer.real_dp_process_group[i]) - 1:
            padding = optimizer.bit16_groups_flat[i].numel() - orig_group_numel
        else:
            padding = 0
        optimizer.groups_padding.append(padding)

        if dist.get_rank(group=optimizer.real_dp_process_group[i]) == 0:
            see_memory_usage(f"After Flattening and after emptying param group {i} cache", force=False)

        # set model bit16 weight to slices of flattened buffer
        optimizer._update_model_bit16_weights(i)

        # divide the flat weights into near equal partition equal to the data parallel degree
        # each process will compute on a different part of the partition
        data_parallel_partitions = optimizer.get_data_parallel_partitions(optimizer.bit16_groups_flat[i], i)
        optimizer.parallel_partitioned_bit16_groups.append(data_parallel_partitions)

        # verify that data partition start locations are 4-byte aligned
        for partitioned_data in data_parallel_partitions:
            assert (partitioned_data.data_ptr() % (2 * optimizer.nccl_start_alignment_factor) == 0)

        # A partition of the fp32 master weights that will be updated by this process.
        # Note that the params in single_partition_of_fp32_groups is cloned and detached
        # from the origin params of the model.
        if not optimizer.fp16_master_weights_and_gradients:
            weights_partition = optimizer.parallel_partitioned_bit16_groups[i][partition_id].to(
                optimizer.device).clone().float().detach()
        else:
            weights_partition = optimizer.parallel_partitioned_bit16_groups[i][partition_id].to(
                optimizer.device).clone().half().detach()

        if optimizer.cpu_offload:
            weights_partition = get_accelerator().pin_memory(weights_partition)

        optimizer.single_partition_of_fp32_groups.append(weights_partition)

        # Set local optimizer to have flat params of its own partition.
        # After this, the local optimizer will only contain its own partition of params.
        # In that case, the local optimizer only saves the states(momentum, variance, etc.) related to its partition's params(zero stage1).
        optimizer.single_partition_of_fp32_groups[
            i].requires_grad = True  # keep this in case internal optimizer uses it
        param_group['params'] = [optimizer.single_partition_of_fp32_groups[i]]

        partition_size = len(optimizer.bit16_groups_flat[i]) / dist.get_world_size(group=optimizer.real_dp_process_group[i])
        params_in_partition, params_not_in_partition, first_offset = optimizer.get_partition_info(
            optimizer.round_robin_bit16_groups[i], partition_size, partition_id)

        optimizer.partition_size.append(partition_size)
        optimizer.params_in_partition.append(params_in_partition)
        optimizer.params_not_in_partition.append(params_not_in_partition)
        optimizer.first_offset.append(first_offset)
```

**However, I found that `del obj` does not free the memory, as the memory profiling result below shows:**

I noticed that the tensors the arrows point at are created at:

```python

# Move CPU flat tensor to the accelerator memory.

optimizer.bit16_groups_flat.append(flattened_buffer.to(get_accelerator().current_device_name()))

```

Are there any insights?
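One general Python behaviour that may be relevant here (a hypothesis, not a confirmed diagnosis): deleting entries from a list only drops the list's reference, so the underlying object stays alive as long as anything else still refers to it. A minimal stdlib sketch, where `FakeBuffer` is a hypothetical stand-in for a large tensor:

```python
import gc
import weakref


class FakeBuffer:
    """Stand-in for a large tensor; plain objects support weak references."""


groups = [FakeBuffer(), FakeBuffer()]
view_holder = groups[1]          # a second reference, e.g. kept by another structure
probe = weakref.ref(groups[1])   # lets us observe whether the object is still alive

del groups[1:]                   # removes the list's reference only
gc.collect()
alive_after_list_delete = probe() is not None

del view_holder                  # drop the last remaining reference
gc.collect()
alive_after_all_refs_dropped = probe() is not None
```

If something similar applies to the flat buffers, any remaining reference to them (or to a view of them) outside the truncated lists would keep the GPU memory allocated.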

|

closed

|

2024-11-08T11:42:49Z

|

2024-12-06T21:59:52Z

|

https://github.com/deepspeedai/DeepSpeed/issues/6729

|

[] |

wheresmyhair

| 2

|

ymcui/Chinese-LLaMA-Alpaca

|

nlp

| 575

|

Running run_pt.sh with --deepspeed ds_zero2_no_offload.json hangs

|

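I am using the specified peft version, 0.3.0dev. When running run_pt.sh, all parameters are at their defaults; I only changed the model and tokenizer paths. After launch the data loads normally, but the run then hangs at the point shown below. I tried several times with the same result. If I remove --deepspeed ${deepspeed_config_file}, it no longer hangs, but it reports other errors instead, so I suspect the problem is in the DeepSpeed script. Here is the log at the point where it hangs: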

Loading checkpoint shards: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 33/33 [00:12<00:00, 2.66it/s]

Loading checkpoint shards: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 33/33 [00:12<00:00, 2.65it/s]

[INFO|modeling_utils.py:3283] 2023-06-12 18:58:17,569 >> All model checkpoint weights were used when initializing LlamaForCausalLM.

[INFO|modeling_utils.py:3291] 2023-06-12 18:58:17,569 >> All the weights of LlamaForCausalLM were initialized from the model checkpoint at /media/zzg/GJ_disk01/pretrained_model/text-generation-webui/models/decapoda-research_llama-7b-hf.

If your task is similar to the task the model of the checkpoint was trained on, you can already use LlamaForCausalLM for predictions without further training.

[INFO|configuration_utils.py:537] 2023-06-12 18:58:17,572 >> loading configuration file /media/zzg/GJ_disk01/pretrained_model/text-generation-webui/models/decapoda-research_llama-7b-hf/generation_config.json

[INFO|configuration_utils.py:577] 2023-06-12 18:58:17,572 >> Generate config GenerationConfig {

"_from_model_config": true,

"bos_token_id": 0,

"eos_token_id": 1,

"pad_token_id": 0,

"transformers_version": "4.30.0.dev0"

}

06/12/2023 18:58:34 - INFO - __main__ - Init new peft model

06/12/2023 18:58:34 - INFO - __main__ - target_modules: ['q_proj', 'v_proj', 'k_proj', 'o_proj', 'gate_proj', 'down_proj', 'up_proj']

06/12/2023 18:58:34 - INFO - __main__ - lora_rank: 8

trainable params: 429203456 || all params: 6905475072 || trainable%: 6.2154080859739

[INFO|trainer.py:594] 2023-06-12 18:59:44,457 >> max_steps is given, it will override any value given in num_train_epochs

/home/zzg/miniconda3/envs/py39_DL_cu118/lib/python3.9/site-packages/transformers/optimization.py:411: FutureWarning: This implementation of AdamW is deprecated and will be removed in a future version. Use the PyTorch implementation torch.optim.AdamW instead, or set `no_deprecation_warning=True` to disable this warning

warnings.warn(

[2023-06-12 18:59:44,476] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed info: version=0.9.4, git-hash=unknown, git-branch=unknown

06/12/2023 18:59:47 - INFO - torch.distributed.distributed_c10d - Added key: store_based_barrier_key:2 to store for rank: 0

trainable params: 429203456 || all params: 6905475072 || trainable%: 6.2154080859739

/home/zzg/miniconda3/envs/py39_DL_cu118/lib/python3.9/site-packages/transformers/optimization.py:411: FutureWarning: This implementation of AdamW is deprecated and will be removed in a future version. Use the PyTorch implementation torch.optim.AdamW instead, or set `no_deprecation_warning=True` to disable this warning

warnings.warn(

06/12/2023 18:59:51 - INFO - torch.distributed.distributed_c10d - Added key: store_based_barrier_key:2 to store for rank: 1

06/12/2023 18:59:51 - INFO - torch.distributed.distributed_c10d - Rank 1: Completed store-based barrier for key:store_based_barrier_key:2 with 2 nodes.

06/12/2023 18:59:51 - INFO - torch.distributed.distributed_c10d - Rank 0: Completed store-based barrier for key:store_based_barrier_key:2 with 2 nodes.

[2023-06-12 18:59:51,724] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed Flops Profiler Enabled: False

[2023-06-12 18:59:51,728] [INFO] [logging.py:96:log_dist] [Rank 0] Removing param_group that has no 'params' in the client Optimizer

[2023-06-12 18:59:51,728] [INFO] [logging.py:96:log_dist] [Rank 0] Using client Optimizer as basic optimizer

[2023-06-12 18:59:51,761] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed Basic Optimizer = AdamW

[2023-06-12 18:59:51,761] [INFO] [utils.py:54:is_zero_supported_optimizer] Checking ZeRO support for optimizer=AdamW type=<class 'transformers.optimization.AdamW'>

[2023-06-12 18:59:51,761] [WARNING] [engine.py:1116:_do_optimizer_sanity_check] **** You are using ZeRO with an untested optimizer, proceed with caution *****

[2023-06-12 18:59:51,761] [INFO] [logging.py:96:log_dist] [Rank 0] Creating torch.float16 ZeRO stage 2 optimizer

[2023-06-12 18:59:51,761] [INFO] [stage_1_and_2.py:133:__init__] Reduce bucket size 100000000

[2023-06-12 18:59:51,761] [INFO] [stage_1_and_2.py:134:__init__] Allgather bucket size 100000000

[2023-06-12 18:59:51,762] [INFO] [stage_1_and_2.py:135:__init__] CPU Offload: False

[2023-06-12 18:59:51,762] [INFO] [stage_1_and_2.py:136:__init__] Round robin gradient partitioning: False

Using /home/zzg/.cache/torch_extensions/py39_cu117 as PyTorch extensions root...

Using /home/zzg/.cache/torch_extensions/py39_cu117 as PyTorch extensions root...

The same thing happens after reinstalling deepspeed. Is there a solution?

|

closed

|

2023-06-12T11:04:47Z

|

2023-06-13T03:51:24Z

|

https://github.com/ymcui/Chinese-LLaMA-Alpaca/issues/575

|

[] |

guijuzhejiang

| 6

|

holoviz/panel

|

plotly

| 7,517

|

JSComponent not working in Jupyter

|

I'm on panel==1.5.4 panel-copy-paste==0.0.4

The `render_fn` cannot be found and the component does not display.

```python

import panel as pn

from panel_copy_paste import PasteToDataFrameButton

import pandas as pd

ACCENT = "#ff4a4a"

pn.extension("tabulator")

```

```python

PasteToDataFrameButton(target=to_table)

```

I'm on a JupyterHub behind a reverse proxy if that matters?

|

open

|

2024-11-25T06:35:13Z

|

2025-01-21T10:50:40Z

|

https://github.com/holoviz/panel/issues/7517

|

[

"more info needed"

] |

MarcSkovMadsen

| 2

|

donnemartin/data-science-ipython-notebooks

|

matplotlib

| 13

|

Command to run mrjob s3 log parser is incorrect

|

Current:

```

python mr-mr_s3_log_parser.py -r emr s3://bucket-source/ --output-dir=s3://bucket-dest/"

```

Should be:

```

python mr_s3_log_parser.py -r emr s3://bucket-source/ --output-dir=s3://bucket-dest/"

```

|

closed

|

2015-07-31T22:52:18Z

|

2015-12-28T13:14:13Z

|

https://github.com/donnemartin/data-science-ipython-notebooks/issues/13

|

[

"bug"

] |

donnemartin

| 1

|

google-research/bert

|

tensorflow

| 782

|

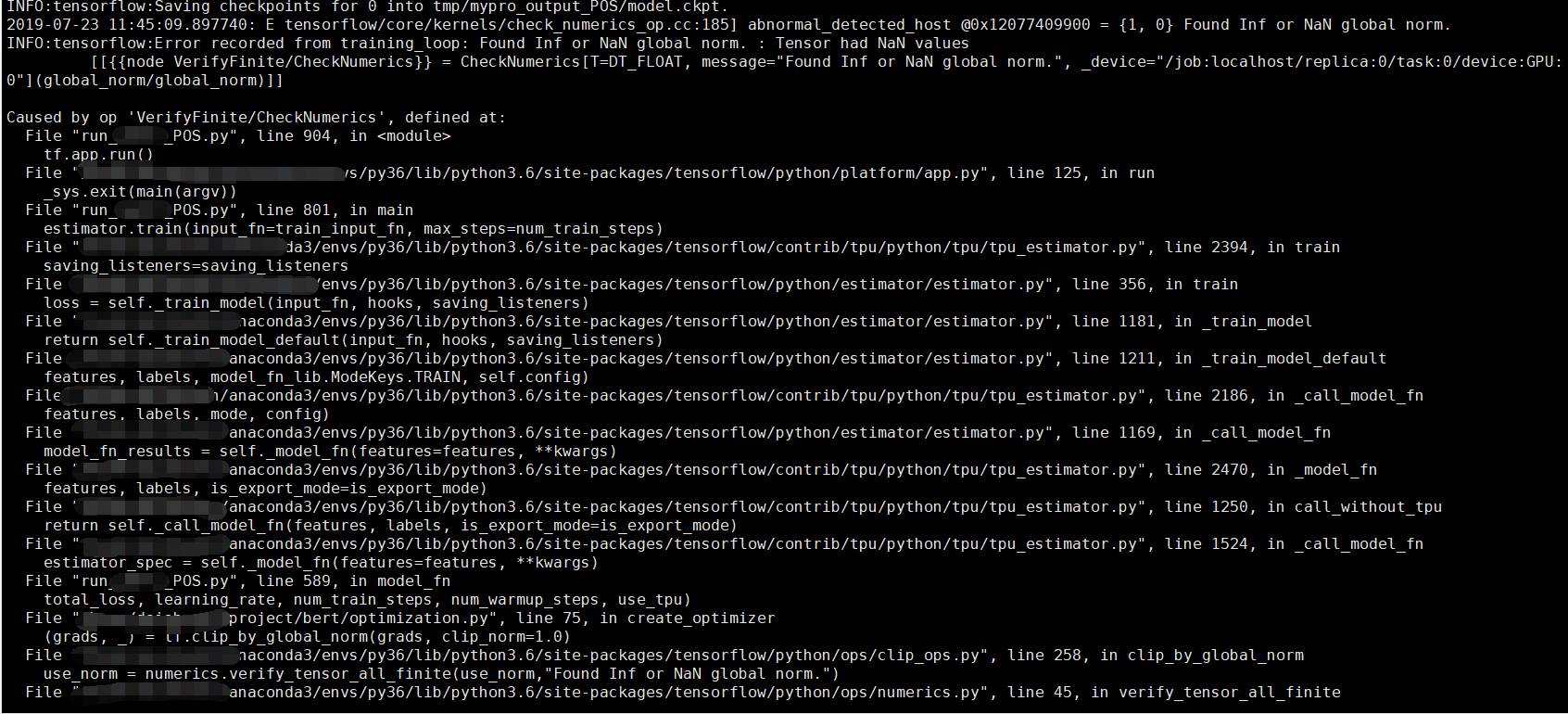

InvalidArgumentError (see above for traceback): Found Inf or NaN global norm. : Tensor had NaN values [[{{node VerifyFinite/CheckNumerics}} = CheckNumerics[T=DT_FLOAT, message="Found Inf or NaN global norm.", _device="/job:localhost/replica:0/task:0/device:GPU:0"](global_norm/global_norm)]]

|

I added a POS tag feature to the BERT model and ran into the following problem. I tried reducing the batch_size, but it did not help.

python run_oqmrc_POS.py --task_name=MyPro --do_train=true --do_eval=true --data_dir=./data --vocab_file=chinese_bert/vocab.txt --pos_tag_vocab_file=pyltp_data/pos_tag_vocab.txt --bert_config_file=chinese_bert/bert_config.json --init_checkpoint=chinese_bert/bert_model.ckpt --max_seq_length=128 --train_batch_size=32 --learning_rate=2e-5 --num_train_epochs=3.0 --output_dir=tmp/mypro_output_POS/

|

open

|

2019-07-23T07:18:52Z

|

2019-07-23T07:18:52Z

|

https://github.com/google-research/bert/issues/782

|

[] |

daishu7

| 0

|

AUTOMATIC1111/stable-diffusion-webui

|

pytorch

| 15,870

|

[Bug]: Stable Diffusion is now very slow and won't work at all

|

### Checklist

- [ ] The issue exists after disabling all extensions

- [ ] The issue exists on a clean installation of webui

- [ ] The issue is caused by an extension, but I believe it is caused by a bug in the webui

- [ ] The issue exists in the current version of the webui

- [ ] The issue has not been reported before recently

- [ ] The issue has been reported before but has not been fixed yet

### What happened?

After switching to the babes 3.1 checkpoint I tried to generate an image, but when I stopped it because I didn't like it, I got the little CUDA message. I tried to generate another image and got the same thing. So I closed Stable Diffusion, went into the files, deleted some of the useless past outputs, and ran it again. Nothing was off about the startup process, but when I tried to reload the last set of prompts, it wouldn't do it. I had to go into the parameters, copy and paste the last prompts, and hit generate, and it wouldn't even load, which I found strange.

So I closed Stable Diffusion and checked whether my NVIDIA card needed updating. It did, so I installed the update and ran Stable Diffusion again; the same thing happened. Then I thought it might be my laptop, since it needed an update, so I updated the laptop and tried to run SD again. Nope, same thing again. Then I realized it's ONLY SD that's taking forever; everything else on my laptop is fine.

At the moment I can't do ANYTHING in SD: I can't check for extension updates, generate anything, switch to previous versions, or switch checkpoints. I was lucky to get the sysinfo. I tried closing out, going into settings, and removing checkpoints until only one was left, my usual default, Anythingv5.0, and it can't even load that one. It has been almost 10 minutes while typing this, and the generation I started before typing HASN'T EVEN STARTED. It's still processing, nothing else in SD is loading, and nothing is preventing it from generating. I honestly don't know what's going on. Can someone please help me?

### Steps to reproduce the problem

No idea

### What should have happened?

It should not be very freaking slow.

### What browsers do you use to access the UI ?

Google Chrome

### Sysinfo

[sysinfo-2024-05-23-05-16.json](https://github.com/AUTOMATIC1111/stable-diffusion-webui/files/15411881/sysinfo-2024-05-23-05-16.json)

### Console logs

```Shell

C:\Users\zach\OneDrive\Desktop\Stable Diffusion\stable-diffusion-webui>git pull

Already up to date.

venv "C:\Users\zach\OneDrive\Desktop\Stable Diffusion\stable-diffusion-webui\venv\Scripts\Python.exe"

Python 3.10.6 (tags/v3.10.6:9c7b4bd, Aug 1 2022, 21:53:49) [MSC v.1932 64 bit (AMD64)]

Version: v1.9.3

Commit hash: 1c0a0c4c26f78c32095ebc7f8af82f5c04fca8c0

#######################################################################################################

Initializing Civitai Link

If submitting an issue on github, please provide the below text for debugging purposes:

Python revision: 3.10.6 (tags/v3.10.6:9c7b4bd, Aug 1 2022, 21:53:49) [MSC v.1932 64 bit (AMD64)]

Civitai Link revision: 115cd9c35b0774c90cb9c397ad60ef6a7dac60de

SD-WebUI revision: 1c0a0c4c26f78c32095ebc7f8af82f5c04fca8c0

Checking Civitai Link requirements...

#######################################################################################################

Launching Web UI with arguments: --precision full --no-half --skip-torch-cuda-test --xformers

ControlNet preprocessor location: C:\Users\zach\OneDrive\Desktop\Stable Diffusion\stable-diffusion-webui\extensions\sd-webui-controlnet\annotator\downloads

2024-05-23 01:04:51,516 - ControlNet - INFO - ControlNet v1.1.448

Civitai: API loaded

Loading weights [90bef92d4f] from C:\Users\zach\OneDrive\Desktop\Stable Diffusion\stable-diffusion-webui\models\Stable-diffusion\babes_31.safetensors

[LyCORIS]-WARNING: LyCORIS legacy extension is now loaded, if you don't expext to see this message, please disable this extension.

2024-05-23 01:04:52,407 - ControlNet - INFO - ControlNet UI callback registered.

Creating model from config: C:\Users\zach\OneDrive\Desktop\Stable Diffusion\stable-diffusion-webui\configs\v1-inference.yaml

Running on local URL: http://127.0.0.1:7860

To create a public link, set `share=True` in `launch()`.

Civitai: Check resources for missing info files

Civitai: Check resources for missing preview images

Startup time: 17.4s (prepare environment: 4.6s, import torch: 5.8s, import gradio: 1.1s, setup paths: 1.2s, initialize shared: 0.3s, other imports: 0.7s, load scripts: 2.5s, create ui: 0.6s, gradio launch: 0.4s).

Civitai: Found 0 resources missing info files

Civitai: No info found on Civitai

Civitai: Found 0 resources missing preview images

Civitai: No preview images found on Civitai

Applying attention optimization: xformers... done.

Model loaded in 4.1s (load weights from disk: 0.9s, create model: 0.7s, apply weights to model: 1.7s, apply float(): 0.3s, move model to device: 0.1s, calculate empty prompt: 0.4s).

```

### Additional information

Everything is updated and caught up; as far as I'm aware, nothing needs updating. Feel free to point out anything I missed.

|

open

|

2024-05-23T05:24:37Z

|

2024-05-28T22:59:12Z

|

https://github.com/AUTOMATIC1111/stable-diffusion-webui/issues/15870

|

[

"bug-report"

] |

MichaelDeathBringer

| 8

|

junyanz/pytorch-CycleGAN-and-pix2pix

|

deep-learning

| 1,122

|

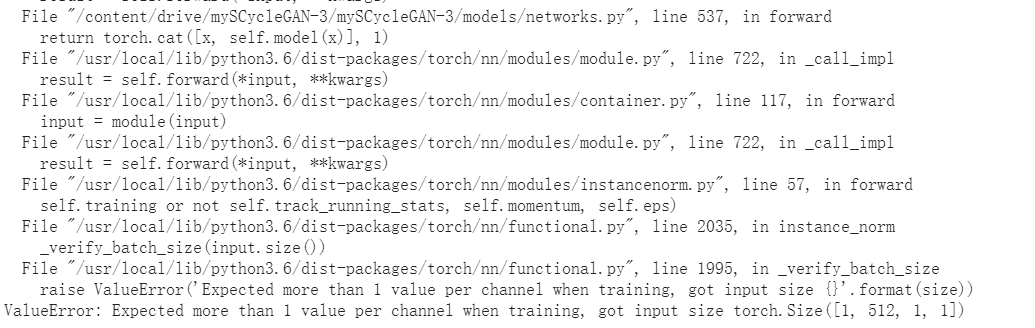

a trouble when testing cyclegan

|

Hi, I appreciate your open-source code; it's really a masterpiece.

I ran into some trouble when running test.py with cyclegan, like this:

My input data are images of shape [256,256,3]. I kept some flags the same as in training, such as netG, norm, and dropout, as shown below: