| repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |

|---|---|---|---|---|---|---|---|---|---|---|---|

paperless-ngx/paperless-ngx

|

django

| 9,386

|

[BUG] OIDC group sync doesn't work on first login.

|

### Description

While testing the excellent new social account features from #9039 (thanks very much for the work on that!), I noticed that on first login with a social account (OIDC in my case) only the default groups set in PAPERLESS_SOCIAL_ACCOUNT_DEFAULT_GROUPS and PAPERLESS_ACCOUNT_DEFAULT_GROUPS are applied.

On second login the group memberships are updated to those provided by OIDC.

### Steps to reproduce

1. Configure OIDC with group sync

2. Create one or more groups in paperless where the names match existing OIDC groups

3. Ensure no accounts matching your OIDC account username already exist

4. Authenticate with OIDC - only the default groups are applied

5. As admin, check the group memberships of the newly created account

6. Log out

7. Log in again with OIDC - now all OIDC groups are applied

8. Verify updated group memberships with admin level account

### Webserver logs

```bash

From first login with OIDC account:

[2025-03-13 11:38:04,402] [DEBUG] [paperless.auth] Adding default social groups to user `dl`: ['test']

From second login with the same OIDC account:

[2025-03-13 11:38:20,498] [DEBUG] [paperless.auth] Syncing groups for user `dl`: ['idm_all_persons@example.com',...

```

### Browser logs

```bash

```

### Paperless-ngx version

dev build 2025-03-12

### Host OS

K8s/amd64

### Installation method

Docker - official image

### System status

```json

```

### Browser

_No response_

### Configuration changes

_No response_

### Please confirm the following

- [x] I believe this issue is a bug that affects all users of Paperless-ngx, not something specific to my installation.

- [x] This issue is not about the OCR or archive creation of a specific file(s). Otherwise, please see above regarding OCR tools.

- [x] I have already searched for relevant existing issues and discussions before opening this report.

- [x] I have updated the title field above with a concise description.

|

closed

|

2025-03-13T12:00:12Z

|

2025-03-13T14:24:07Z

|

https://github.com/paperless-ngx/paperless-ngx/issues/9386

|

[

"backend"

] |

Serbitor

| 0

|

tfranzel/drf-spectacular

|

rest-api

| 988

|

Nested serializer schema not showing a field

|

**Describe the bug**

Very possible that I am misunderstanding how something should work, but in case I am not:

These serializers

```python

class ResponseNodeSerializer(serializers.Serializer):
    type: serializers.ChoiceField(choices=["text"])
    content: serializers.CharField(max_length=5000)


class CreatedResponseSerializer(serializers.Serializer):
    response_body = ResponseNodeSerializer(many=True)

```

On a function view:

```python

@extend_schema(responses=CreatedResponseSerializer)

@api_view(["POST"])

@permission_classes([])

def responses_view(request: Request) -> Response:

```

Gives completely empty example and swagger definition.

Code | Description | Links

-- | -- | --

200 | No response body

**To Reproduce**

Simple example provided

**Expected behavior**

Expected the schema to have

```json

{
    "response_body": [
        {
            "type": "text",
            "content": "string"
        }
    ]
}

```
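
One possible cause, offered as an assumption since the report does not state it: the fields in `ResponseNodeSerializer` are written with `:` (an annotation) rather than `=` (an assignment). In plain Python, an annotation without a value does not create a class attribute, so anything that collects fields from class attributes would find none. The sketch below uses a stand-in `Field` class and a hypothetical `declared_fields` helper, not DRF itself, to show the difference.

```python
class Field:
    """Stand-in for a serializer field; hypothetical, not part of DRF."""

    def __init__(self, **options):
        self.options = options


def declared_fields(cls):
    """Collect the names of class attributes that are Field instances."""
    return sorted(name for name, value in vars(cls).items() if isinstance(value, Field))


class AnnotatedNode:
    # Annotation only: nothing is bound to the names `type` or `content`.
    type: Field(choices=["text"])
    content: Field(max_length=5000)


class AssignedNode:
    # Assignment: the Field instances become class attributes.
    type = Field(choices=["text"])
    content = Field(max_length=5000)


print(declared_fields(AnnotatedNode))  # []
print(declared_fields(AssignedNode))   # ['content', 'type']
```

If that is what happens here, replacing `:` with `=` in the two field declarations would be the first thing to try.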

|

closed

|

2023-05-16T21:53:25Z

|

2023-05-17T13:03:56Z

|

https://github.com/tfranzel/drf-spectacular/issues/988

|

[] |

armanckeser

| 2

|

feature-engine/feature_engine

|

scikit-learn

| 14

|

review tests for categorical encoders

|

Are they working with the latest implementation? Are they testing every aspect of the class?

|

closed

|

2019-09-04T08:15:44Z

|

2020-04-19T09:53:23Z

|

https://github.com/feature-engine/feature_engine/issues/14

|

[] |

solegalli

| 0

|

NullArray/AutoSploit

|

automation

| 593

|

Unhandled Exception (81a4e7399)

|

Autosploit version: `3.0`

OS information: `Linux-4.17.0-kali1-amd64-x86_64-with-Kali-kali-rolling-kali-rolling`

Running context: `autosploit.py`

Error message: `global name 'Except' is not defined`

Error traceback:

```

Traceback (most recent call):

  File "/root/AutoSploit/autosploit/main.py", line 113, in main
    loaded_exploits = load_exploits(EXPLOIT_FILES_PATH)
  File "/root/AutoSploit/lib/jsonize.py", line 61, in load_exploits
    except Except:
NameError: global name 'Except' is not defined

```
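
The traceback points at an `except Except:` clause. `Except` is not a built-in name, so Python raises `NameError` when it evaluates that handler. The sketch below is a hypothetical loader (the real body of `load_exploits` is not shown in this report) that catches the built-in `Exception` instead.

```python
import json


def load_exploits_sketch(raw_text):
    """Hypothetical stand-in for a loader; returns [] when the JSON cannot be parsed."""
    try:
        return json.loads(raw_text)
    except Exception:  # built-in base class; the name `Except` does not exist
        return []


print(load_exploits_sketch('["exploit/a", "exploit/b"]'))
print(load_exploits_sketch("{not valid json"))
```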

Metasploit launched: `False`

|

closed

|

2019-03-25T12:03:01Z

|

2019-04-02T20:24:30Z

|

https://github.com/NullArray/AutoSploit/issues/593

|

[] |

AutosploitReporter

| 0

|

wkentaro/labelme

|

deep-learning

| 699

|

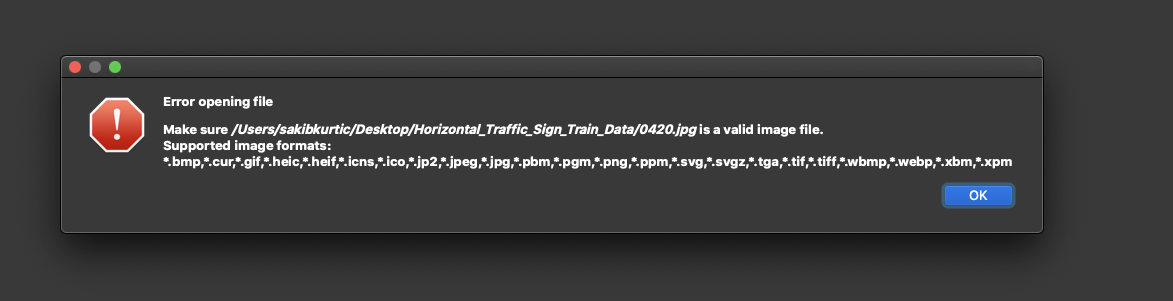

[BUG] Cannot open JPEG

|

**Describe the bug**

Labelme v4.5 does not want to load *.jpg or *.heic. I can see them in the picker view, and it does allow me to select it, but it does not want to load.

**To Reproduce**

Steps to reproduce the behavior:

You will find out that you won't see jpg images.

1. Install Labelme 4.5.0 using pip3 install labelme

2. Click on OpenDir/Open

3. Choose Dir or single image in .jpg or .heic format

4. Observe error

**Expected behavior**

The jpg images must be seen.

**Screenshots**

Here is the folder structure with image names and format.

Here is the error I get

List of applicable image formats

**Desktop (please complete the following information):**

- OS: MacOS Catalina v10.15.5

- Labelme Version [v4.5]

**Additional context**

[ERROR ] label_file:load_image_file:39 - Failed opening image file: /Users/sakibkurtic/Desktop/Horizontal_Traffic_Sign_Train_Data/0420.jpg

This is a detailed console error I got.

|

closed

|

2020-06-25T10:26:29Z

|

2021-09-23T15:20:56Z

|

https://github.com/wkentaro/labelme/issues/699

|

[

"issue::bug"

] |

sakibk

| 2

|

sqlalchemy/alembic

|

sqlalchemy

| 968

|

Alembic keeps detecting JSON change

|

**Describe the bug**

MariaDB's JSON type is an alias for LONGTEXT with a JSON_VALID check. When defining a column of type `sqlalchemy.dialects.mysql.JSON`, Alembic keeps generating a migration of `existing_type=mysql.LONGTEXT(charset='utf8mb4', collation='utf8mb4_bin')` to `type_=mysql.JSON()`.

**Expected behavior**

I would expect Alembic to not detect any changes between LONGTEXT with JSON_VALID check and `sqlalchemy.dialects.mysql.JSON`.

**To Reproduce**

- Add an `sqlalchemy.dialects.mysql.JSON` column

- Create a migration & upgrade

- Create another migration. This will cause another migration with an operation like the one below to be created.

```py

op.alter_column('api_users', 'trusted_ip_networks',
                existing_type=mysql.LONGTEXT(charset='utf8mb4', collation='utf8mb4_bin'),
                type_=mysql.JSON(),
                existing_nullable=False,
                existing_server_default=sa.text("'[]'")
                )

```
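
A possible workaround, sketched under assumptions: Alembic's `context.configure()` accepts a `compare_type` callable that may return `False` to report "no change" or `None` to fall back to the default comparison. The function below treats a reflected `LONGTEXT` as equal to a declared `JSON`. It matches on class names so it runs without a database; the function name and the name-based matching are illustrative choices, not something taken from this report.

```python
def ignore_longtext_vs_json(context, inspected_column, metadata_column,
                            inspected_type, metadata_type):
    """Return False (no change) for LONGTEXT in the database vs JSON in the model."""
    inspected_name = type(inspected_type).__name__.upper()
    metadata_name = type(metadata_type).__name__.upper()
    if inspected_name == "LONGTEXT" and metadata_name == "JSON":
        return False
    # None asks Alembic to use its default type comparison.
    return None
```

In `env.py` this would be passed as `compare_type=ignore_longtext_vs_json` in the call to `context.configure()`. Note that the sketch does not check whether the `JSON_VALID` constraint is actually present on the column.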

**Error**

None.

**Versions.**

- OS: macOS 11.6

- Python: 3.8.9

- Alembic: 1.4.2

- SQLAlchemy: 1.3.16

- Database: MariaDB 10.5.9

- DBAPI: -

**Additional context**

None.

|

closed

|

2021-11-12T22:12:06Z

|

2021-11-18T15:54:45Z

|

https://github.com/sqlalchemy/alembic/issues/968

|

[

"bug",

"autogenerate - detection",

"mysql"

] |

WilliamDEdwards

| 1

|

iperov/DeepFaceLab

|

machine-learning

| 5,201

|

Head extraction size

|

## Expected behavior

Extract head aligned with more tight crop of the head for frontal shots, so we can use lower model resolution and faster training, rather than trying to compensate with higher res.

## Actual behavior

Frontal aligned are extracted at 40% of aligned frame, so there is ~60% of frame resolution wasted.

## Steps to reproduce

extract head (aligned)

|

closed

|

2020-12-15T14:48:11Z

|

2023-06-08T22:39:54Z

|

https://github.com/iperov/DeepFaceLab/issues/5201

|

[] |

zabique

| 5

|

indico/indico

|

sqlalchemy

| 6,731

|

Support text highlighting in Markdown-formatted minutes

|

In Markdown-formatted minutes, it would be very useful to be able to highlight (yellow background) some part of text. For example with `normal and ==highlighted== text` notation (which I understand is not part of the Markdown standard - but is a common Markdown extension).

|

closed

|

2025-02-06T10:50:14Z

|

2025-02-06T14:01:46Z

|

https://github.com/indico/indico/issues/6731

|

[

"enhancement",

"help wanted"

] |

SebastianLopienski

| 0

|

ckan/ckan

|

api

| 7,604

|

Tighten validation for `id` fields

|

Having loose or inconsistent validation on `id` fields can lead to confusing behaviour or security concerns. Let's do a review of the various entities with `id` field in the model and apply the same validation to all of them (if possible). Validators should include:

* "Can only be changed by a sysadmin": custom ids only make sense in the context of specific scenarios like migrations, so it makes sense that only sysadmins (or plugins that ignore auth) touch them

* "Value is a valid UUID". In the past we've allowed different values here to support potential use cases but it's probably safer to enforce UUIDs for all ids and use custom fields if needed

* "Id does not already exist when creating": to prevent taking over other entities

Whenever possible we should look at enforcing those not only with validators but also at the SQLAlchemy level, eg using inserts instead of upserts when creating records.

Some of these are already applied to some entities, but it would be good to be consistent across all models:

* Dataset

* Resource

* Resource View

* Groups (org/groups)

* User

* Activity

* Extras

* Tags (?)

* ...
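
As an illustration of the "Value is a valid UUID" rule, a check could be built on Python's standard `uuid` module. This is a minimal sketch, not CKAN's validator API: the function name `is_valid_uuid` and the choice to accept only the canonical hyphenated form are assumptions.

```python
import uuid


def is_valid_uuid(value):
    """Return True if value is a string in canonical UUID form."""
    if not isinstance(value, str):
        return False
    try:
        parsed = uuid.UUID(value)
    except ValueError:
        return False
    # uuid.UUID also accepts braces, "urn:uuid:" prefixes and missing hyphens;
    # comparing against the canonical form rejects those variants.
    return str(parsed) == value.lower()


print(is_valid_uuid("123e4567-e89b-12d3-a456-426614174000"))
print(is_valid_uuid("my-custom-dataset-id"))
```

Rejecting the non-canonical spellings avoids two different strings being treated as the same id, which matters for the "Id does not already exist" rule.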

|

closed

|

2023-05-25T11:08:20Z

|

2024-05-23T13:42:52Z

|

https://github.com/ckan/ckan/issues/7604

|

[

"Good for Contribution"

] |

amercader

| 2

|

microsoft/MMdnn

|

tensorflow

| 359

|

Incorrect input layer name in code when emitting to MXNet.

|

### Hardware Specifications

Platform (like ubuntu 16.04/win10):

Ubuntu 16.04

**Python version:**

Python 3.5.2

**Source framework with version (like Tensorflow 1.4.1 with GPU):**

Tensorflow 1.9.0 compiled with CUDA 9.0

**Destination framework with version (like CNTK 2.3 with GPU):**

MXNet 1.3.0 with CPU

**Pre-trained model path (webpath or webdisk path):**

I am trying to convert Google's Audioset VGGish network trained on their Audioset data. The architecture is very similar to VGG. The network is located on my disk at the paths `export.ckpt.meta`, `export.ckpt.data-0000-of-00001`, `export.ckpt.index`.

**Running scripts:**

```bash

mmtoir -f tensorflow -n export.ckpt.meta -w export.ckpt --inNodeName vggish/input_features --dstNodeName vggish/embedding --inputShape 96,64 -o exportir

mmtocode -f mxnet -n exportir.pb -w exportir.npy -o exporttest.py -ow exporttest.params

```

### Issue

The mmtoir and mmtocode both return successfully. However, the output MXNet python file has an error when run.

```

ValueError: You created Module with Module(..., data_names=['vggish_input_features']) but input with name 'vggish_input_features' is not found in symbol.list_arguments(). Did you mean one of: vggish/input_features

```

It seems to be because in the autogenerated python MXNet network definition, there is one line

```python

model = mx.mod.Module(symbol = vggish_fc2_Relu, context = mx.cpu(), data_names = ['vggish_input_features'])

```

I was able to get everything to work correctly by replacing `data_names = ['vggish_input_features'])` with `data_names = ['vggish/input_features'])`.

The autogenerated code seems to point to the actual python variable name of the first layer rather than the name given. For reference, the autogenerated network definition has:

```python

vggish_input_features = mx.sym.var('vggish/input_features')

```

This seems to only be a problem when the input layer name has a character that causes the layer python name to be different from the given name.
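
The mismatch can be illustrated with a small sketch. It assumes, without having checked the MMdnn emitter, that layer names are sanitized into Python identifiers by replacing invalid characters with `_`. Both helper functions are hypothetical. The point is that `data_names` should carry the original symbol name, while only the Python variable uses the sanitized one.

```python
def to_python_name(layer_name):
    """Hypothetical sanitizer: replace characters invalid in identifiers with '_'."""
    return "".join(ch if ch.isalnum() or ch == "_" else "_" for ch in layer_name)


def emit_input_lines(layer_name):
    """Emit the variable definition and the data_names argument for one input layer."""
    variable = to_python_name(layer_name)
    definition = f"{variable} = mx.sym.var({layer_name!r})"
    # data_names must use the symbol's own name, not the Python variable name.
    data_names = f"data_names = [{layer_name!r}]"
    return definition, data_names


for line in emit_input_lines("vggish/input_features"):
    print(line)
```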

|

closed

|

2018-08-14T07:49:37Z

|

2018-08-15T01:31:19Z

|

https://github.com/microsoft/MMdnn/issues/359

|

[] |

wfus

| 0

|

pydata/xarray

|

pandas

| 9,573

|

Consider renaming DataTree.map_over_subtree (or revising its API)

|

### What is your issue?

We have a bit of a naming inconsistency in DataTree:

- The `subtrees` property is an iterator over all sub-tree nodes as DataTree objects

- `map_over_subtree` maps a function over all sub-tree nodes as Dataset objects

I think it makes sense for "subtree" to refer strictly to DataTree objects. In that case, perhaps we should rename `map_over_subtree` to `map_over_datasets`?

Alternatively, we could change the interface to iterate over DataTree objects instead, which are easy to convert into datasets (via `.dataset`) and additionally provide the full context of a node's `path` and parents.

|

closed

|

2024-10-03T01:47:53Z

|

2024-10-21T15:55:34Z

|

https://github.com/pydata/xarray/issues/9573

|

[

"topic-DataTree"

] |

shoyer

| 3

|

cobrateam/splinter

|

automation

| 589

|

Is Zope only supported in Python 2?

|

I'm seeing an exception using `Browser('zope.testbrowser')`

```

Traceback (most recent call last):
  File "/d01/sandboxes/jgeorgeson/git/Dev-Engineering/gitlab-scripts/python-gitlab/lib64/python3.6/site-packages/splinter/browser.py", line 60, in Browser
    driver = _DRIVERS[driver_name]
KeyError: 'zope.testbrowser'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "./scripts/import-project.py", line 5, in <module>
    with Browser('zope.testbrowser') as browser:
  File "/d01/sandboxes/jgeorgeson/git/Dev-Engineering/gitlab-scripts/python-gitlab/lib64/python3.6/site-packages/splinter/browser.py", line 62, in Browser
    raise DriverNotFoundError("No driver for %s" % driver_name)
splinter.exceptions.DriverNotFoundError: No driver for zope.testbrowser

```

And see the sys.version_info check [here](https://github.com/cobrateam/splinter/blob/2eec5aa844f743ad3dd4ffc4a17070f5a5b7d9b5/splinter/browser.py#L25)

```

$ python --version

Python 3.6.3

$ pip3 list splinter[zopetestbrowser]

DEPRECATION: The default format will switch to columns in the future. You can use--format=(legacy|columns) (or define a format=(legacy|columns) in your pip.conf under the [list] section) to disable this warning.

beautifulsoup4 (4.6.0)

certifi (2018.1.18)

chardet (3.0.4)

cssselect (1.0.3)

idna (2.6)

lxml (4.1.1)

pip (9.0.1)

python-gitlab (1.2.0)

pytz (2018.3)

PyYAML (3.12)

requests (2.18.4)

selenium (3.9.0)

setuptools (28.8.0)

six (1.11.0)

splinter (0.7.7)

urllib3 (1.22)

waitress (1.1.0)

WebOb (1.7.4)

WebTest (2.0.29)

WSGIProxy2 (0.4.4)

zope.cachedescriptors (4.3.1)

zope.event (4.3.0)

zope.interface (4.4.3)

zope.schema (4.5.0)

zope.testbrowser (5.2.4)

```

|

closed

|

2018-02-26T21:47:11Z

|

2020-04-03T16:44:25Z

|

https://github.com/cobrateam/splinter/issues/589

|

[] |

jgeorgeson

| 4

|

deepset-ai/haystack

|

nlp

| 8,893

|

[Question]: Usage of Graph Algorithms and Any Slowdowns?

|

Hi there,

I'm interested in understanding if `haystack` depends on any graph algorithms from its usage of NetworkX? If so,

- What algorithms are used for what purpose?

- What graph sizes are they being used with?

- Have users experienced any slowdowns or issues with algorithms provided by NetworkX? (Speed, algorithm availability, etc)

Furthermore, would users be interested in accelerated nx algorithms via a GPU backend? This would involve zero code change.

Any insight into this topic would be greatly appreciated! Thank you.

|

closed

|

2025-02-20T17:39:13Z

|

2025-02-21T07:12:32Z

|

https://github.com/deepset-ai/haystack/issues/8893

|

[] |

nv-rliu

| 1

|

Miserlou/Zappa

|

flask

| 1,547

|

zappa package with slim_handler=true doesn't include Werkzeug package (introduced v0.46.0)

|

## Context

I thought I was running into issue #64 but turns out I found a regression introduced in v0.46 related to how the handler_venv is packaged and uploaded when using slim_handler.

I created a small repo to show the problem: https://github.com/sheats/zappa_issue

If you keep `zappa==0.46.0` (or 0.46.1) in `requirements.txt` and deploy the function the only thing that prints in the logs is:

```

Unable to import module 'handler': No module named 'werkzeug'

```

If you change requirements.txt to `zappa==0.45.1` and update the function the problem goes away.

When I unzipped the handler venv zip after running `zappa package` I only see the following:

```

drwx------ 9 user staff 288 Jun 25 06:27 .

drwxr-xr-x 16 user staff 512 Jun 25 06:27 ..

-rwxr-xr-x 1 user staff 0 Jun 25 2018 __init__.py

-rw-------@ 1 user staff 85824 Dec 31 1979 _sqlite3.so

-rw-r--r-- 1 user staff 605 Sep 13 2016 django_zappa_app.py

-rw------- 1 user staff 23461 Dec 31 1979 handler.py

-rw------- 1 user staff 143 Dec 31 1979 package_info.json

drwxr-xr-x 14 user staff 448 Jun 25 06:27 zappa

-rw-r--r-- 1 user staff 467 Jun 25 2018 zappa_settings.py

```

## Expected Behavior

I expect the logs to show other errors or normal successful logs.

## Actual Behavior

handler.py tries to [import werkzeug on line 17](https://github.com/Miserlou/Zappa/blob/master/zappa/handler.py#L17) but fails because Werkzeug wasn't included in the handler_venv zipped package.

## Possible Fix

Don't know the code well enough to come up with a fix strategy yet.

## Steps to Reproduce

```

git clone git@github.com:sheats/zappa_issue.git

make reproduce && make tail

```

## Your Environment

Everything is in the repo I referenced.

|

open

|

2018-06-25T21:09:57Z

|

2018-07-08T16:34:12Z

|

https://github.com/Miserlou/Zappa/issues/1547

|

[] |

sheats

| 8

|

ageitgey/face_recognition

|

python

| 1,152

|

Face recognition problem

|

Hello, I have a problem with face recognition. I ran your code on my laptop and it works perfectly, but when I run the same code on my Raspberry Pi I get this error:

Traceback (most recent call last):

File "2.py", line 73, in <module>

small_frame = cv2.resize(frame, (0, 0), fx=0.25, fy=0.25)

cv2.error: OpenCV(4.1.2) /home/pi/opencv/opencv-4.1.2/modules/imgproc/src/resize.cpp:3720: error: (-215:Assertion failed) !ssize.empty() in function 'resize'
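
This assertion usually means the image passed to `cv2.resize` is empty, which commonly happens when the camera read fails and no frame is returned. That is an assumption here, since the script `2.py` is not shown. A minimal guard, written without OpenCV so that it runs anywhere, might look like this (`frame_is_usable` is a hypothetical helper):

```python
def frame_is_usable(ret, frame):
    """Return True only if the capture succeeded and the frame has pixels."""
    if not ret or frame is None:
        return False
    # NumPy arrays expose .size; an empty frame has size 0.
    return getattr(frame, "size", 0) > 0
```

In a capture loop, the pair returned by the capture object's `read()` call would be checked with this helper before `cv2.resize` is called, so a failed read is skipped or reported instead of crashing.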

* face_recognition version:

* Python version:

* Operating System:

### Description

Describe what you were trying to get done.

Tell us what happened, what went wrong, and what you expected to happen.

IMPORTANT: If your issue is related to a specific picture, include it so others can reproduce the issue.

### What I Did

```

Paste the command(s) you ran and the output.

If there was a crash, please include the traceback here.

```

|

open

|

2020-05-28T22:12:48Z

|

2020-08-03T16:26:07Z

|

https://github.com/ageitgey/face_recognition/issues/1152

|

[] |

rafalrk

| 1

|

sinaptik-ai/pandas-ai

|

data-science

| 1,330

|

Expose pandas API on SmartDataframe

|

### 🚀 The feature

Expose commonly used pandas API on SmartDataframe so that a smart dataframe can be used as if it is a normal pandas dataframe.

### Motivation, pitch

Continuous modification of a pandas dataframe is often needed. Is there support for modifying an existing SmartDataframe after it has been constructed? If not, one way of enabling this is to expose the pandas API on SmartDataframes.

### Alternatives

_No response_

### Additional context

_No response_

|

closed

|

2024-08-19T17:29:23Z

|

2024-11-25T16:07:45Z

|

https://github.com/sinaptik-ai/pandas-ai/issues/1330

|

[

"enhancement"

] |

c3-yiminliu

| 1

|

jwkvam/bowtie

|

plotly

| 35

|

charting component that's faster than plotly for rendering large data

|

- canvasjs

- echarts

ref: http://blog.udacity.com/2016/03/12-best-charting-libraries-for-web-developers.html

|

open

|

2016-11-09T18:28:22Z

|

2018-07-24T01:43:07Z

|

https://github.com/jwkvam/bowtie/issues/35

|

[] |

jwkvam

| 2

|

Lightning-AI/pytorch-lightning

|

data-science

| 19,667

|

test_ddp.py hangs at test_ddp_configure_ddp

|

### Bug description

When I try to run a single test on a multi-GPU device, it hangs at the specific test case `test_ddp_configure_ddp` https://github.com/Lightning-AI/pytorch-lightning/blob/6f6c07dddfd68717f0b765a63d05a937b8508e15/tests/tests_pytorch/strategies/test_ddp.py#L142.

I've found that this case is related to `torch.distributed.init_process_group`. https://github.com/Lightning-AI/pytorch-lightning/blob/6f6c07dddfd68717f0b765a63d05a937b8508e15/src/lightning/fabric/utilities/distributed.py#L258

I have 4 GPUs on my device, so the `world_size` sent to `init_process_group` is 4, but the way I ran the test does not seem to involve multiprocessing, so `init_process_group` just hangs waiting for the other devices to be ready.

When I set `CUDA_VISIBLE_DEVICES` to a single device, it works fine. I'm not sure whether this is a bug or whether I've used the wrong command to run the test. If so, how should I run this test?

### What version are you seeing the problem on?

v2.1

### How to reproduce the bug

```python

On multi-gpu environment:

pytest -v tests/tests_pytorch/strategies/test_ddp.py -k test_ddp_configure_ddp

```

### Error messages and logs

```

# Error messages and logs here please

no Error message, just hang up for a long time(default args: 1800 seconds)

```

### Environment

<details>

<summary>Current environment</summary>

* CUDA:

- GPU:

- Tesla V100-SXM2-16GB

- Tesla V100-SXM2-16GB

- Tesla V100-SXM2-16GB

- Tesla V100-SXM2-16GB

- available: True

- version: 12.2

* Lightning:

- lightning: 2.1.0

- lightning-utilities: 0.10.1

- pytorch-lightning: 2.2.1

- torch: 2.3.0a0+gitbfa71b5

- torchmetrics: 1.3.1

- torchvision: 0.18.0a0+423a1b0

* Packages:

- aiohttp: 3.9.4rc0

- aiosignal: 1.3.1

- annotated-types: 0.6.0

- astunparse: 1.6.3

- async-timeout: 4.0.3

- attrs: 23.2.0

- audioread: 3.0.1

- certifi: 2023.11.17

- cffi: 1.16.0

- charset-normalizer: 3.3.2

- contourpy: 1.2.0

- cycler: 0.12.1

- decorator: 5.1.1

- dllogger: 1.0.0

- exceptiongroup: 1.2.0

- expecttest: 0.2.1

- filelock: 3.13.1

- fonttools: 4.49.0

- frozenlist: 1.4.1

- fsspec: 2023.12.2

- hypothesis: 6.97.3

- idna: 3.6

- inflect: 7.0.0

- iniconfig: 2.0.0

- jinja2: 3.1.3

- joblib: 1.3.2

- kiwisolver: 1.4.5

- librosa: 0.8.1

- lightning: 2.1.0

- lightning-utilities: 0.10.1

- llvmlite: 0.42.0

- markupsafe: 2.1.4

- matplotlib: 3.8.3

- mpmath: 1.3.0

- multidict: 6.0.5

- networkx: 3.2.1

- numba: 0.59.0

- numpy: 1.23.1

- optree: 0.10.0

- packaging: 23.2

- pillow: 10.2.0

- pip: 22.2.2

- platformdirs: 4.2.0

- pluggy: 1.4.0

- pooch: 1.8.1

- psutil: 5.9.8

- pycparser: 2.21

- pydantic: 2.6.3

- pydantic-core: 2.16.3

- pyparsing: 3.1.2

- pytest: 8.1.1

- python-dateutil: 2.9.0.post0

- pytorch-lightning: 2.2.1

- pyyaml: 6.0.1

- requests: 2.31.0

- resampy: 0.4.3

- scikit-learn: 1.4.1.post1

- scipy: 1.12.0

- setuptools: 63.2.0

- six: 1.16.0

- sortedcontainers: 2.4.0

- soundfile: 0.12.1

- sympy: 1.12

- tabulate: 0.9.0

- threadpoolctl: 3.3.0

- tomli: 2.0.1

- torch: 2.3.0a0+gitbfa71b5

- torchmetrics: 1.3.1

- torchvision: 0.18.0a0+423a1b0

- tqdm: 4.66.2

- types-dataclasses: 0.6.6

- typing-extensions: 4.9.0

- unidecode: 1.3.8

- urllib3: 2.2.0

- wheel: 0.42.0

- yarl: 1.9.4

* System:

- OS: Linux

- architecture:

- 64bit

- ELF

- processor: x86_64

- python: 3.10.8

- release: 5.11.0-27-generic

- version: #29~20.04.1-Ubuntu SMP Wed Aug 11 15:58:17 UTC 2021

</details>

### More info

_No response_

|

open

|

2024-03-18T09:54:09Z

|

2024-03-18T09:54:23Z

|

https://github.com/Lightning-AI/pytorch-lightning/issues/19667

|

[

"bug",

"needs triage",

"ver: 2.1.x"

] |

PHLens

| 0

|

plotly/dash

|

jupyter

| 2,262

|

Method for generating component IDs

|

Hi there,

I wanted to know the method used to auto-generate component ids in Dash. Does it use UUIDs or something else?

Thanks

|

closed

|

2022-10-07T07:21:20Z

|

2022-10-11T14:31:19Z

|

https://github.com/plotly/dash/issues/2262

|

[] |

anu0012

| 1

|

axnsan12/drf-yasg

|

rest-api

| 84

|

Problem adding Model to definitions

|

Using a simplified serializer for a list view with a limited set of fields does not get a model added to definitions. We get this error instead.

> Resolver error at paths./customers-search/.get.responses.200.schema.items.$ref

> Could not resolve reference because of: Could not resolve pointer: /definitions/SimpleCustomerSerializerV1 does not exist in document

>

```

@swagger_auto_schema(
    manual_parameters=[search_query_param],
    responses={
        status.HTTP_200_OK: openapi.Response(
            'Customers found.',
            serializers.SimpleCustomerSerializerV1(many=True)),
        status.HTTP_404_NOT_FOUND: 'No matching customers found.',
        status.HTTP_429_TOO_MANY_REQUESTS: 'Rate limit exceeded.',
        status.HTTP_500_INTERNAL_SERVER_ERROR: 'Internal error.',
        status.HTTP_504_GATEWAY_TIMEOUT: 'Request timed out.',
    })
def list(self, request, *args, **kwargs):

```

If we remove the custom response for 200, the default behavior works as expected and does generate the model in definitions. The code is very hard to trace to figure out what is going on here.

|

closed

|

2018-03-12T17:56:39Z

|

2018-03-12T19:32:54Z

|

https://github.com/axnsan12/drf-yasg/issues/84

|

[

"bug"

] |

aarcro

| 2

|

jina-ai/serve

|

fastapi

| 5,545

|

check tests/integration/instrumentation flakyness

|

**Describe the bug**

This test tests/integration/instrumentation looks very flaky and does not let us work with 3.8

|

closed

|

2022-12-21T09:54:06Z

|

2023-01-25T10:42:34Z

|

https://github.com/jina-ai/serve/issues/5545

|

[

"epic/gRPCTransport"

] |

JoanFM

| 10

|

cobrateam/splinter

|

automation

| 783

|

Please release 0.14.0 to PyPI

|

I am unable to run tests without manually patching the fix for browser.py provided in #749

|

closed

|

2020-07-22T04:55:20Z

|

2020-08-19T22:56:32Z

|

https://github.com/cobrateam/splinter/issues/783

|

[] |

JosephKiranBabu

| 2

|

graphql-python/graphene-sqlalchemy

|

sqlalchemy

| 113

|

When is the next release ?

|

@syrusakbary It's been a while since there has been an official release. I'm wondering when the next release will be, mainly because I'm interested in using #78

|

closed

|

2018-02-13T23:26:15Z

|

2023-02-25T00:48:30Z

|

https://github.com/graphql-python/graphene-sqlalchemy/issues/113

|

[] |

kavink

| 3

|

ultralytics/ultralytics

|

python

| 19,369

|

yolo11ndetection evaluation numbers

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/orgs/ultralytics/discussions) and found no similar questions.

### Question

What numbers should be considered optimal to regard a custom object detection model as perfect, both in the graphs and in the post-training results?

### Additional

_No response_

|

open

|

2025-02-22T08:20:56Z

|

2025-03-03T19:17:52Z

|

https://github.com/ultralytics/ultralytics/issues/19369

|

[

"question",

"detect"

] |

shwetakinger37

| 5

|

Zeyi-Lin/HivisionIDPhotos

|

fastapi

| 125

|

GPU memory release problem: when running through the API, GPU memory is not released unless the service is stopped!

|

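When using the API and running as a service on a server, once the birefnet-v1-lite model has been loaded on the GPU, the GPU memory stays occupied and is never released.

Please improve the GPU memory reclamation mechanism, for example by releasing the GPU memory if the GPU-only model has not been called for five or ten minutes!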
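
One way the requested idle-timeout behaviour could be structured is sketched below. It is a hypothetical helper, not code from HivisionIDPhotos: `IdleUnloader`, `load_fn`, and the injected `clock` are assumptions, and the sketch only drops the Python reference to the model. A real service would also need to release the framework's GPU caches at the marked point.

```python
import time


class IdleUnloader:
    """Hold a model and drop it after idle_seconds without use (hypothetical helper)."""

    def __init__(self, load_fn, idle_seconds=300, clock=time.monotonic):
        self._load_fn = load_fn
        self._idle_seconds = idle_seconds
        self._clock = clock
        self._model = None
        self._last_used = None

    def get(self):
        """Return the model, loading it on first use or after an unload."""
        if self._model is None:
            self._model = self._load_fn()
        self._last_used = self._clock()
        return self._model

    def unload_if_idle(self):
        """Drop the model reference if it has been idle long enough."""
        if self._model is None:
            return False
        if self._clock() - self._last_used >= self._idle_seconds:
            self._model = None  # a real service would also free GPU caches here
            return True
        return False
```

A background task in the API service could call `unload_if_idle()` periodically, while request handlers call `get()` so the model is reloaded on the next request after an unload.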

|

open

|

2024-09-14T15:23:57Z

|

2024-10-26T03:02:50Z

|

https://github.com/Zeyi-Lin/HivisionIDPhotos/issues/125

|

[] |

simkinhu

| 3

|

fastapi-admin/fastapi-admin

|

fastapi

| 83

|

Docker config isn't very handy

|

The following improvements:

* Install DB and redis with docker-compose in isolation from the host system

* Mount fastapi-admin and example sources as volume (no image rebuild on src changes)

* Autorestart the example application on source changes

are proposed in the PR:

https://github.com/fastapi-admin/fastapi-admin/pull/82

|

open

|

2021-09-09T06:55:49Z

|

2021-12-17T13:18:29Z

|

https://github.com/fastapi-admin/fastapi-admin/issues/83

|

[] |

radiophysicist

| 1

|

Yorko/mlcourse.ai

|

plotly

| 362

|

Docker image - seaborn

|

Looks like the docker image does not include seaborn 0.9.0; your exercise 2 requires `catplot`.

|

closed

|

2018-10-06T17:36:15Z

|

2018-10-11T13:48:40Z

|

https://github.com/Yorko/mlcourse.ai/issues/362

|

[

"enhancement"

] |

priteshwatch

| 2

|

healthchecks/healthchecks

|

django

| 131

|

Show user-friendly message when Telegram confirmation link is expired

|

... currently we raise a SignatureExpired exception and return 500.

|

closed

|

2017-08-14T12:20:58Z

|

2021-08-27T09:58:30Z

|

https://github.com/healthchecks/healthchecks/issues/131

|

[

"bug"

] |

cuu508

| 1

|

d2l-ai/d2l-en

|

machine-learning

| 2,218

|

Bahdanau attention (attention mechanisms) tensorflow notebook build fails in Turkish version.

|

Currently, PR-63 (Fixing more typos) builds fail only for tensorflow notebook. I could not figure out the reason. @AnirudhDagar , I would be glad if you could take a look.

|

closed

|

2022-07-25T20:59:30Z

|

2022-08-01T05:08:26Z

|

https://github.com/d2l-ai/d2l-en/issues/2218

|

[] |

semercim

| 1

|

TheKevJames/coveralls-python

|

pytest

| 235

|

coveralls --finish not working as expected in Circle-CI

|

Thanks for this nice client.

It looks like the Circle-CI integration has been broken since the GitHub API support was introduced. When I add a run that finishes up a parallel build, the wrong build number is used to notify the service:

```

(venv) circleci@b3cd35dd8048:~/project$ coveralls debug -v --finish

Missing .coveralls.yml file. Using only env variables.

Testing coveralls-python...

{"source_files": [], "git": {"branch": "coveralls", "head": {"id": "fad6a2cc6ab84ef45d06bf2150a4d103c16e1a76", "author_name": "Stephen Roller", "author_email": "roller@fb.com", "committer_name": "Stephen Roller", "committer_email": "roller@fb.com", "message": "Hm."}, "remotes": [{"name": "origin", "url": "git@github.com:facebookresearch/ParlAI.git"}]}, "config_file": ".coveragerc", "parallel": true, "repo_token": "[secure]", "service_name": "circle-ci", "service_job_id": "48529", "service_pull_request": "2950"}

==

Reporting 0 files

==

```

Here you see that the CIRCLE_BUILD_NUM value has been put into the "service_job_id" field of the config.

However, the call to finish uses the "service_number" field instead:

https://github.com/coveralls-clients/coveralls-python/blob/30e4815169b3db2616981939d55d2f4495816821/coveralls/api.py#L217-L218

As such, when you try calling `coveralls --finish` you get the following error:

```

$ coveralls -v --finish

Missing .coveralls.yml file. Using only env variables.

Finishing parallel jobs...

Parallel finish failed: No build matching CI build number found

Traceback (most recent call last):

File "/home/circleci/venv/lib/python3.7/site-packages/coveralls/cli.py", line 80, in main

coverallz.parallel_finish()

File "/home/circleci/venv/lib/python3.7/site-packages/coveralls/api.py", line 236, in parallel_finish

raise CoverallsException('Parallel finish failed: {}'.format(e))

coveralls.exception.CoverallsException: Parallel finish failed: No build matching CI build number found

```

Note that Circle always puts None into the service_number field:

https://github.com/coveralls-clients/coveralls-python/blob/30e4815169b3db2616981939d55d2f4495816821/coveralls/api.py#L87

https://github.com/coveralls-clients/coveralls-python/blob/30e4815169b3db2616981939d55d2f4495816821/coveralls/api.py#L52-L59

|

closed

|

2020-08-07T17:10:05Z

|

2021-01-12T02:50:08Z

|

https://github.com/TheKevJames/coveralls-python/issues/235

|

[] |

stephenroller

| 12

|

keras-team/keras

|

machine-learning

| 20,098

|

module 'keras.utils' has no attribute 'PyDataset'

|

I have correctly installed version 3.0.5 of keras and used pytorch for the backend, but it always reports `module 'keras.utils' has no attribute 'PyDataset'`. How can I solve this problem?

|

closed

|

2024-08-08T08:32:19Z

|

2024-08-13T06:58:42Z

|

https://github.com/keras-team/keras/issues/20098

|

[

"stat:awaiting response from contributor",

"type:Bug"

] |

Sticcolet

| 5

|

allenai/allennlp

|

nlp

| 4,798

|

PretrainedTransformerTokenizer doesn't work with seq2seq dataset reader

|

<!--

Please fill this template entirely and do not erase any of it.

We reserve the right to close without a response bug reports which are incomplete.

If you have a question rather than a bug, please ask on [Stack Overflow](https://stackoverflow.com/questions/tagged/allennlp) rather than posting an issue here.

-->

## Checklist

<!-- To check an item on the list replace [ ] with [x]. -->

- [X] I have verified that the issue exists against the `master` branch of AllenNLP.

- [X] I have read the relevant section in the [contribution guide](https://github.com/allenai/allennlp/blob/master/CONTRIBUTING.md#bug-fixes-and-new-features) on reporting bugs.

- [X] I have checked the [issues list](https://github.com/allenai/allennlp/issues) for similar or identical bug reports.

- [X] I have checked the [pull requests list](https://github.com/allenai/allennlp/pulls) for existing proposed fixes.

- [X] I have checked the [CHANGELOG](https://github.com/allenai/allennlp/blob/master/CHANGELOG.md) and the [commit log](https://github.com/allenai/allennlp/commits/master) to find out if the bug was already fixed in the master branch.

- [X] I have included in the "Description" section below a traceback from any exceptions related to this bug.

- [X] I have included in the "Related issues or possible duplicates" section below all related issues and possible duplicate issues (If there are none, check this box anyway).

- [X] I have included in the "Environment" section below the name of the operating system and Python version that I was using when I discovered this bug.

- [X] I have included in the "Environment" section below the output of `pip freeze`.

- [X] I have included in the "Steps to reproduce" section below a minimally reproducible example.

## Description

<!-- Please provide a clear and concise description of what the bug is here. -->

As far as I can tell, `PretrainedTransformerTokenizer` is not compatible with the `seq2seq` dataset reader of [`allennlp-models`](https://github.com/allenai/allennlp-models) when it is used as the `source_tokenizer`. The same error, contained in this try/except block [here](https://github.com/allenai/allennlp-models/blob/236034ff54ac3197ec4d710438cebdfa919c5a45/allennlp_models/generation/dataset_readers/seq2seq.py#L94-L102) is triggered in multiple cases.

1. When `allennlp.common.util.START_SYMBOL` and `allennlp.common.util.END_SYMBOL` are not in the pretrained transformers vocabulary. I was able to solve this in the config as follows:

```json

"dataset_reader": {

"type": "copynet_seq2seq",

"target_namespace": "target_tokens",

"source_tokenizer": {

"type": "pretrained_transformer",

"model_name": "distilroberta-base",

"tokenizer_kwargs": {

"additional_special_tokens": {

"allennlp_start_symbol": "@start@",

"allennlp_end_symbol": "@end@",

},

}

},

```

2. If `PretrainedTransformerTokenizer.add_special_tokens` is `True` (the default) for wordpiece-based tokenizers.

3. For any BPE-based tokenizer I tried.

In all of the cases listed above, the error arises because the list returned by `self._source_tokenizer.tokenize` in the [try/except block](https://github.com/allenai/allennlp-models/blob/236034ff54ac3197ec4d710438cebdfa919c5a45/allennlp_models/generation/dataset_readers/seq2seq.py#L94-L102) contains more than two tokens:

```python

try:
    self._start_token, self._end_token = self._source_tokenizer.tokenize(
        start_symbol + " " + end_symbol
    )
except ValueError:
    raise ValueError(
        f"Bad start or end symbol ('{start_symbol}', '{end_symbol}') "
        f"for tokenizer {self._source_tokenizer}"
    )

```

<details>

<summary><b>Python traceback:</b></summary>

<p>

<!-- Paste the traceback from any exception (if there was one) in between the next two lines below -->

```

2020-11-16 16:53:24,760 - CRITICAL - root - Uncaught exception

Traceback (most recent call last):

File "/project/6006286/johnmg/allennlp-models/allennlp_models/generation/dataset_readers/seq2seq.py", line 98, in __init__

self._start_token, self._end_token = self._source_tokenizer.tokenize(

ValueError: too many values to unpack (expected 2)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/johnmg/seq2rel/bin/allennlp", line 33, in <module>

sys.exit(load_entry_point('allennlp', 'console_scripts', 'allennlp')())

File "/project/6006286/johnmg/allennlp/allennlp/__main__.py", line 34, in run

main(prog="allennlp")

File "/project/6006286/johnmg/allennlp/allennlp/commands/__init__.py", line 118, in main

args.func(args)

File "/project/6006286/johnmg/allennlp/allennlp/commands/train.py", line 110, in train_model_from_args

train_model_from_file(

File "/project/6006286/johnmg/allennlp/allennlp/commands/train.py", line 170, in train_model_from_file

return train_model(

File "/project/6006286/johnmg/allennlp/allennlp/commands/train.py", line 236, in train_model

model = _train_worker(

File "/project/6006286/johnmg/allennlp/allennlp/commands/train.py", line 453, in _train_worker

train_loop = TrainModel.from_params(

File "/project/6006286/johnmg/allennlp/allennlp/common/from_params.py", line 595, in from_params

return retyped_subclass.from_params(

File "/project/6006286/johnmg/allennlp/allennlp/common/from_params.py", line 627, in from_params

kwargs = create_kwargs(constructor_to_inspect, cls, params, **extras)

File "/project/6006286/johnmg/allennlp/allennlp/common/from_params.py", line 198, in create_kwargs

constructed_arg = pop_and_construct_arg(

File "/project/6006286/johnmg/allennlp/allennlp/common/from_params.py", line 305, in pop_and_construct_arg

return construct_arg(class_name, name, popped_params, annotation, default, **extras)

File "/project/6006286/johnmg/allennlp/allennlp/common/from_params.py", line 339, in construct_arg

return annotation.from_params(params=popped_params, **subextras)

File "/project/6006286/johnmg/allennlp/allennlp/common/from_params.py", line 595, in from_params

return retyped_subclass.from_params(

File "/project/6006286/johnmg/allennlp/allennlp/common/from_params.py", line 629, in from_params

return constructor_to_call(**kwargs) # type: ignore

File "/project/6006286/johnmg/allennlp-models/allennlp_models/generation/dataset_readers/seq2seq.py", line 102, in __init__

raise ValueError(

ValueError: Bad start or end symbol ('@start@', '@end@') for tokenizer <allennlp.data.tokenizers.pretrained_transformer_tokenizer.PretrainedTransformerTokenizer object at 0x2b6503248970>

```

</p>

</details>

## Related issues or possible duplicates

- None

## Environment

<!-- Provide the name of operating system below (e.g. OS X, Linux) -->

OS: Linux

<!-- Provide the Python version you were using (e.g. 3.7.1) -->

Python version: 3.8.0

<details>

<summary><b>Output of <code>pip freeze</code>:</b></summary>

<p>

<!-- Paste the output of `pip freeze` in between the next two lines below -->

```bash

-f /cvmfs/soft.computecanada.ca/custom/python/wheelhouse/nix/avx2

-f /cvmfs/soft.computecanada.ca/custom/python/wheelhouse/nix/generic

-f /cvmfs/soft.computecanada.ca/custom/python/wheelhouse/generic

-e git+https://github.com/allenai/allennlp.git@0d8873cfef628eaf0457bee02422bbf8dae475a2#egg=allennlp

-e git+https://github.com/allenai/allennlp-models.git@236034ff54ac3197ec4d710438cebdfa919c5a45#egg=allennlp_models

attrs==20.2.0

blis==0.4.1

boto3==1.16.2

botocore==1.19.2

certifi==2020.6.20

chardet==3.0.4

click==7.1.2

conllu==4.2.1

cymem==2.0.2

filelock==3.0.12

ftfy==5.5.1

future==0.18.2

h5py==2.10.0

idna==2.10

importlib-metadata==2.0.0

iniconfig==1.0.1

jmespath==0.10.0

joblib==0.17.0

jsonnet==0.14.0

jsonpickle==1.4.1

more-itertools==8.5.0

murmurhash==1.0.2

nltk==3.5

numpy==1.19.1

overrides==3.1.0

packaging==20.4

plac==1.1.3

pluggy==0.13.1

preshed==3.0.2

protobuf==3.13.0

py==1.9.0

py-rouge==1.1

pyparsing==2.4.7

pytest==6.0.1

python-dateutil==2.8.1

regex==2019.11.1

requests==2.24.0

s3transfer==0.3.3

sacremoses==0.0.43

scikit-learn==0.23.0

scipy==1.5.2

sentencepiece==0.1.91

six==1.15.0

spacy==2.2.2

srsly==0.2.0

tensorboardX==2.1

thinc==7.3.1

threadpoolctl==2.1.0

tokenizers==0.9.3

toml==0.10.1

torch==1.7.0

tqdm==4.51.0

transformers==3.5.1

typing-extensions==3.7.4.3

urllib3==1.25.10

wasabi==0.6.0

wcwidth==0.2.5

word2number==1.1

zipp==3.4.0

```

</p>

</details>

## Steps to reproduce

<details>

<summary><b>Example source:</b></summary>

<p>

The proximate cause of the error can be reproduced as follows:

<!-- Add a fully runnable example in between the next two lines below that will reproduce the bug -->

```python

from allennlp.data.tokenizers import PretrainedTransformerTokenizer

from allennlp.common.util import START_SYMBOL, END_SYMBOL

tokenizer_kwargs = {"additional_special_tokens": [START_SYMBOL, END_SYMBOL]}

# Case 1, don't add AllenNLPs start/end symbols to vocabulary

tokenizer = PretrainedTransformerTokenizer("bert-base-uncased")

start_token, end_token = tokenizer.tokenize(START_SYMBOL + " " + END_SYMBOL)

# Case 2, set add_special_tokens=True (the default) in PretrainedTransformerTokenizer for a wordpiece based tokenizer

# this WON'T fail

tokenizer = PretrainedTransformerTokenizer("bert-base-uncased", tokenizer_kwargs=tokenizer_kwargs, add_special_tokens=False)

start_token, end_token = tokenizer.tokenize(START_SYMBOL + " " + END_SYMBOL)

# this WILL fail

tokenizer = PretrainedTransformerTokenizer("bert-base-uncased", tokenizer_kwargs=tokenizer_kwargs, add_special_tokens=True)

start_token, end_token = tokenizer.tokenize(START_SYMBOL + " " + END_SYMBOL)

# Case 3, BPE-based tokenizers fail regardless

# this WILL fail

tokenizer = PretrainedTransformerTokenizer("distilroberta-base", tokenizer_kwargs=tokenizer_kwargs, add_special_tokens=False)

start_token, end_token = tokenizer.tokenize(START_SYMBOL + " " + END_SYMBOL)

# this WILL fail

tokenizer = PretrainedTransformerTokenizer("distilroberta-base", tokenizer_kwargs=tokenizer_kwargs, add_special_tokens=True)

start_token, end_token = tokenizer.tokenize(START_SYMBOL + " " + END_SYMBOL)

```

</p>

</details>
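
The unpacking failure described above can be illustrated without AllenNLP at all. The following is a minimal sketch, assuming a toy whitespace tokenizer (`toy_tokenize` is hypothetical, not part of any library) that optionally wraps its output in `[CLS]`/`[SEP]`, the way a wordpiece tokenizer with `add_special_tokens=True` would. It is not the actual `PretrainedTransformerTokenizer` logic.

```python
START_SYMBOL = "@start@"
END_SYMBOL = "@end@"


def toy_tokenize(text, add_special_tokens):
    # Hypothetical stand-in for a transformer tokenizer: split on whitespace
    # and optionally wrap the result in model-specific special tokens.
    tokens = text.split()
    if add_special_tokens:
        tokens = ["[CLS]"] + tokens + ["[SEP]"]
    return tokens


def unpack_start_end(add_special_tokens):
    # Mirrors the two-way unpacking done by the dataset reader.
    tokens = toy_tokenize(START_SYMBOL + " " + END_SYMBOL, add_special_tokens)
    try:
        start, end = tokens
    except ValueError as exc:
        return f"ValueError: {exc}"
    return (start, end)


print(unpack_start_end(False))
print(unpack_start_end(True))
```

With special tokens enabled the list has four elements, so the two-way unpacking raises the same kind of `ValueError` that appears at the top of the traceback.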

|

closed

|

2020-11-17T01:31:42Z

|

2020-11-18T16:09:58Z

|

https://github.com/allenai/allennlp/issues/4798

|

[

"bug"

] |

JohnGiorgi

| 8

|

pywinauto/pywinauto

|

automation

| 1,321

|

The popup menu items are not identified via pywinauto

|

## Expected Behavior

The popup menu info should be available in the output of `app.dialog.print_control_identifiers()`

## Actual Behavior

The popup menu items are not listed in the app.dialog.print_control_identifiers() output, though the popup menu is visible on screen

## Steps to Reproduce the Problem

1. Right click on **TreeItem** control type

2. print **app.dialog.print_control_identifiers()**

3. check the output

4. popup menu items are not listed in the output

## Short Example of Code to Demonstrate the Problem

**Sample UI layout**

## Specifications

- Pywinauto version:0.6.8

- Python version and bitness:3.11 / x64

- Platform and OS: win 22h2

|

open

|

2023-08-11T05:41:17Z

|

2023-08-28T05:36:19Z

|

https://github.com/pywinauto/pywinauto/issues/1321

|

[] |

vikramjitSingh

| 5

|

miguelgrinberg/python-socketio

|

asyncio

| 461

|

multiple namespace, race condition in asyncio, no individual sid

|

## Problem

1. `sio.connect()` connects to namespace `/` first and only then to the other namespaces. Subsequent `emit()` calls are allowed before all namespaces are connected, which causes messages to disappear (because the namespace is not connected yet).

Adding `asyncio.sleep(1)` before the first `emit()` seems to fix the problem.

2. The second paragraph of the documentation at https://python-socketio.readthedocs.io/en/latest/server.html#namespaces suggests that

> Each namespace is handled independently from the others, with separate session IDs (sids), ...

However, in my snippet below the same `sid` seems to be used for all namespaces.

Am I missing something?

## Code

Client code:

```python

import time
import asyncio
import socketio
import logging

logging.basicConfig(level='DEBUG')

loop = asyncio.get_event_loop()
sio = socketio.AsyncClient()


@sio.event
async def message(data):
    print(data)


@sio.event(namespace='/abc')
def message(data):
    print('/abc', data)


@sio.event
async def connect():
    print('connection established', time.time())


@sio.event(namespace='/abc')
async def connect():
    print("I'm connected to the /abc namespace!", time.time())


async def start_client():
    await sio.connect('http://localhost:8080', transports=['websocket'],
                      namespaces=['/', '/abc'])
    # await asyncio.sleep(2)
    await sio.emit('echo', '12345')
    await sio.emit('echo', '12345', namespace='/abc')
    await sio.wait()


if __name__ == '__main__':
    loop.run_until_complete(start_client())

```

server code:

```python

import socketio
import time
from aiohttp import web

sio = socketio.AsyncServer(async_mode = 'aiohttp')
app = web.Application()
sio.attach(app)

redis = None


@sio.event
async def connect(sid, environ):
    print("connected", sid, time.time())


@sio.event(namespace='/abc')
async def connect(sid, environ):
    print("connected /abc", sid, time.time())


@sio.event(namespace='/abc')
async def echo(sid, msg):
    print('abc', sid, msg)
    await sio.emit('message', msg, to=sid, namespace='/abc')


@sio.event
async def echo(sid, msg):
    print(sid, msg)
    await sio.emit('message', msg, to=sid)


if __name__ == '__main__':
    web.run_app(app)

```
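
Instead of a fixed `asyncio.sleep()`, one option is to wait until every namespace has reported its connection. The sketch below shows the idea with plain `asyncio` only: `fake_connect` and `emit` are hypothetical stand-ins for the network round trip and for `sio.emit()`, and they are not python-socketio APIs. In a real client, the per-namespace `asyncio.Event` would presumably be set inside each namespace's `connect` handler.

```python
import asyncio

NAMESPACES = ["/", "/abc"]


async def main():
    # One event per namespace, set once that namespace is connected.
    connected = {ns: asyncio.Event() for ns in NAMESPACES}
    delivered = []

    async def fake_connect(ns, delay):
        # Stand-in for the server acknowledging a namespace after a delay.
        await asyncio.sleep(delay)
        connected[ns].set()

    async def emit(ns, payload):
        # Stand-in for emit(): a message to an unconnected namespace is lost.
        if connected[ns].is_set():
            delivered.append((ns, payload))

    tasks = [
        asyncio.create_task(fake_connect("/", 0.0)),
        asyncio.create_task(fake_connect("/abc", 0.05)),
    ]

    # Wait for every namespace instead of sleeping for a fixed time.
    await asyncio.gather(*(event.wait() for event in connected.values()))

    await emit("/", "12345")
    await emit("/abc", "12345")
    await asyncio.gather(*tasks)
    return delivered


delivered = asyncio.run(main())
print(delivered)
```

Waiting on events removes the guesswork about how long the sleep needs to be, at the cost of a little bookkeeping per namespace.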

|

closed

|

2020-04-14T02:00:58Z

|

2023-09-21T11:19:07Z

|

https://github.com/miguelgrinberg/python-socketio/issues/461

|

[

"documentation"

] |

simingy

| 16

|

long2ice/fastapi-cache

|

fastapi

| 417

|

Trouble with Poetry Operations for a Project Dependent on FastAPI-Cache (commit # 8f0920d , dependency redis)

|

I'm working on a project and have specified a dependency in the `pyproject.toml` file as follows:

```toml

[tool.poetry.dependencies]

fastapi-cache2 = {extras = ["redis"], git = "https://github.com/long2ice/fastapi-cache.git", rev = "8f0920d"}

```

When I run `poetry install`, it completes successfully. However, attempting to run `poetry lock`, `poetry add "fastapi-cache2[redis]@git+https://github.com/long2ice/fastapi-cache.git#8f0920d"`, or `poetry update <any-other-dependency>` results in failures. The error message indicates a `CalledProcessError`, mentioning that a subprocess command returned a non-zero exit status:

```plaintext

CalledProcessError

Command '['/path/to/.venv/bin/python', '-']' returned non-zero exit status 1.

at /path/to/python3.11/subprocess.py:569 in run

```

Further details from the error suggest an `EnvCommandError`, indicating an issue with executing a command in the environment:

```plaintext

EnvCommandError

Command ['/path/to/.venv/bin/python', '-'] errored with the following return code 1

Output:

Traceback (most recent call last):

...

raise BuildException(f'Source {srcdir} does not appear to be a Python project: no pyproject.toml or setup.py')

build.BuildException: Source /path/to/cache/pypoetry/virtualenvs/project-py3.11/src/fastapi-cache does not appear to be a Python project: no pyproject.toml or setup.py

```

I attempted to replicate this in a fresh poetry project using the `poetry add "fastapi-cache2[redis]@git+https://github.com/long2ice/fastapi-cache.git#8f0920d"` command, but encountered a different issue:

```plaintext

Failed to clone https://github.com/long2ice/fastapi-cache.git at '8f0920d', verify ref exists on remote.

```

I've confirmed that the specific commit `8f0920d` indeed contains a `pyproject.toml` file.

Cloning the project and checking out this commit works fine outside of Poetry, which suggests there may be a bug within Poetry or the way it interacts with this specific git dependency.

|

open

|

2024-03-22T12:41:35Z

|

2024-11-09T11:16:04Z

|

https://github.com/long2ice/fastapi-cache/issues/417

|

[

"needs-triage"

] |

SudeshnaBora

| 0

|

521xueweihan/HelloGitHub

|

python

| 2,347

|

Android system Easter eggs (collection)

|

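## Recommended project

<!-- This is the entry point for recommending projects to the HelloGitHub monthly. Self-recommendations and recommendations of open source projects are welcome. The only requirement: please introduce the project following the prompts below. -->

<!-- Click "Preview" above to view the submitted content right away -->

<!-- Only open source projects on GitHub are accepted; please fill in the GitHub project URL -->

- Project URL: https://github.com/hushenghao/AndroidEasterEggs

<!-- Please choose from (C, C#, C++, CSS, Go, Java, JS, Kotlin, Objective-C, PHP, Python, Ruby, Rust, Swift, Other, Books, Machine Learning) -->

- Category: Java, Kotlin

<!-- Describe what it does in about 20 characters, like an article title that is clear at a glance -->

- Project title: Android system Easter eggs (collection)

<!-- What is this project, what can it be used for, what are its features or which pain points does it solve, which scenarios does it suit, and what can beginners learn from it? Length: 32-256 characters -->

- Project description: Collects the Easter eggs from every official Android release and makes them compatible, so they can run on more devices

<!-- What makes it stand out? How does it differ from similar projects? -->

- Highlights: The project contains the complete code of the system Easter eggs. It aims to collect them and make them compatible so that most devices can experience the Easter eggs of different versions, without modifying the Easter egg code much. Some versions use new system features, so on older Android versions only part of the functionality is available.

- Screenshot:

- Planned updates: Add new Easter eggs following the Android release cycle, and fix bugs in the existing ones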

|

open

|

2022-09-01T06:30:00Z

|

2022-09-23T05:55:22Z

|

https://github.com/521xueweihan/HelloGitHub/issues/2347

|

[

"其它"

] |

hushenghao

| 0

|

plotly/dash

|

data-science

| 2,232

|

[BUG] Callbacks in `def layout()` are not triggering

|

**Describe your context**

```

dash 2.6.1

dash-bootstrap-components 1.2.1

dash-core-components 2.0.0

dash-html-components 2.0.0

dash-table 5.0.0

```

**Describe the bug**

When using the new multi-page support with the layout of one of the pages defined in a function (`def layout()`), callbacks that are defined inside this layout function are not triggered.

Minimal example

app.py

```

import dash
from dash import Dash, html

app = Dash(__name__, use_pages=True)

app.layout = html.Div(
    [
        html.H1("welcome to my multipage appp"),
        html.Ul(
            [
                html.Li(
                    html.A("HOME", href="/"),
                ),
                html.Li(
                    html.A("/cows", href="/cows?x=y"),
                ),
            ]
        ),
        dash.page_container,
    ]
)

app.run_server(
    host="0.0.0.0",
    debug=True,
)

```

pages/cows.py

```

import dash
from dash import Input, Output, State, callback, dcc, html


def cow(say):
    say = f"{say:5s}"
    return html.Pre(
        rf"""
 _______
< {say} >
 -------
        \   ^__^
         \  (oo)\_______
            (__)\       )\/\
                ||----w |
                ||     ||
"""
    )


dash.register_page(__name__)


def layout(**query_parameters):
    root = html.Div(
        [
            cow_saying := dcc.Dropdown(
                ["MOO", "BAA", "EEK"],
                "MOO",
            ),
            cow_holder := html.Div(),
        ]
    )

    @callback(
        Output(cow_holder, "children"),
        Input(cow_saying, "value"),
    )
    def update_cow(say):
        return cow(say)

    return root

```

**Expected behavior**

The callback nested in the layout function should get properly triggered.

Nested functions allow us to capture the incoming query parameters in the closure. Based on that we can load data on page load and keep it in memory for callback changes.

I tried a workaround of defining the callbacks in the global (outer) context. However, this has the following problems:

* query parameters need to be passed to the callbacks via `State`

* not clear how to implement the load-data-on-pageload pattern, since using state could lead to transferring lots of data to the client

* can't use the `Input` and `Output` bindings by passing the component instead of the ID, as given in the example

|

closed

|

2022-09-14T16:31:44Z

|

2023-03-10T21:40:22Z

|

https://github.com/plotly/dash/issues/2232

|

[] |

HennerM

| 2

|

tensorflow/tensor2tensor

|

deep-learning

| 1,191

|

Universal Transformer appears to be buggy and not converging correctly

|

Summary

---------

Universal Transformer appears to be buggy and not converging correctly:

- Universal transformer does not converge on multi_nli as of the latest tensor2tensor master (9729521bc3cd4952c42dcfda53699e14bee7b409). See below for reproduction

- UT does converge on multi_nli as of August 3 2018 commit 5fff1cad2977f063b981e5d8b839bf9d7008e232 (we didn’t run this fully out, but it was making meaningful progress, unlike below, so we terminated it and considered it successful).

- To confirm this was not simply an odd issue with multi_nli, we tried UT with a number of other problems (exact repro not shown below), including ‘lambada_rc’ and ‘stanford_nli’ (run at commit ca628e4fcb04ff42ed21549a4f73e6dfa68a5f7a from around October 16 2018). All of these failed to converge.

Environment information

-------------------------

Docker image based off nvidia/cuda:9.0-devel-ubuntu16.04

Tf version: tensorflow-gpu=1.11.0

T2t version: Tensor2tensor master at commit 9729521bc3cd4952c42dcfda53699e14bee7b409 on Oct 30 2018.

We also saw this failed behavior on tf-nightly-gpu==1.13.0.dev20181022

Reproduce

-----------

Problem: multi_nli

Model: universal_transformer

Hparams_set: universal_transformer_tiny

python3 /usr/src/t2t/tensor2tensor/bin/t2t-trainer \

--data_dir="DATA_DIR" \

--eval_early_stopping_steps="10000" \

--eval_steps="10000" \

--generate_data="True" \

--hparams="" \

--hparams_set="universal_transformer_tiny" \

--iterations_per_loop="2000" \

--keep_checkpoint_max="80" \

--local_eval_frequency="2000" \

--model="universal_transformer" \

--output_dir="OUTPUT_DIR" \

--problem="multi_nli" \

--t2t_usr_dir="T2T_USR_DIR" \

--tmp_dir="T2T_TMP_DIR"

Run was stopped after 50000 steps due to lack of convergence as loss fluctuates between 1.098 and 1.099.

INFO:tensorflow:Saving dict for global step 50000: global_step = 50000, loss = 1.0991247, metrics-multi_nli/targets/accuracy = 0.31821653, metrics-multi_nli/targets/accuracy_per_sequence = 0.31821653, metrics-multi_nli/targets/accuracy_top5 = 1.0, metrics-multi_nli/targets/approx_bleu_score = 0.7479816, metrics-multi_nli/targets/neg_log_perplexity = -1.099124, metrics-multi_nli/targets/rouge_2_fscore = 0.0, metrics-multi_nli/targets/rouge_L_fscore = 0.31869644
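
As a sanity check on the numbers above: multi_nli is a three-class problem, so a model that predicts a uniform distribution over the classes has a cross-entropy loss of ln(3) and an expected accuracy of 1/3. The short calculation below (illustrative only, not part of the original run) shows that the reported loss and accuracy are close to these chance-level values.

```python
import math

num_classes = 3  # entailment, neutral, contradiction

# Cross-entropy of a uniform prediction over the classes.
chance_loss = math.log(num_classes)
# Expected accuracy of guessing on a balanced three-class problem.
chance_accuracy = 1 / num_classes

reported_loss = 1.0991247
reported_accuracy = 0.31821653

print(round(chance_loss, 4), round(chance_accuracy, 4))
print(round(reported_loss - chance_loss, 4))
```

A loss that stays between 1.098 and 1.099 is therefore consistent with the model not having learned anything beyond uniform guessing.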

|

closed

|

2018-10-31T21:02:06Z

|

2018-11-20T23:37:07Z

|

https://github.com/tensorflow/tensor2tensor/issues/1191

|

[] |

rllin-fathom

| 9

|

ipython/ipython

|

data-science

| 14,410

|

Is there a way to map ctrl+h and backspace to different functions?

|

If I bind only `'c-h'`, `backspace` does the same thing as `ctrl+h`, as in the example below:

```

registry.add_binding('c-h', filter=(HasFocus(DEFAULT_BUFFER) & ViInsertMode()))(nc.backward_char)

```

Also, `'c-?'` is not accepted by `add_binding`.

Is there a way to tell the two apart, given that many terminals can distinguish `c-h` from `<bs>`?

If this behavior is kept for compatibility, would it be possible to support binding `c-?`?

Thanks in advance.


|

open

|

2024-04-19T07:52:39Z

|

2024-06-04T11:33:42Z

|

https://github.com/ipython/ipython/issues/14410

|

[] |

roachsinai

| 0

|

deeppavlov/DeepPavlov

|

nlp

| 1,315

|

multi-threading in NER model ?

|

Sorry for the naive question, I am new to DeepPavlov.

Is a NER model built with build_model (e.g. build_model(configs.ner.ner_ontonotes_bert_mult)) multi-threaded, or is there a parameter/argument that enables multi-threading?

Thanks for your kind support!

|

closed

|

2020-09-10T15:08:18Z

|

2020-09-10T15:30:13Z

|

https://github.com/deeppavlov/DeepPavlov/issues/1315

|

[

"enhancement"

] |

cattoni

| 1

|

google-research/bert

|

nlp

| 849

|

_

|

closed

|

2019-09-09T04:31:15Z

|

2024-01-30T07:38:36Z

|

https://github.com/google-research/bert/issues/849

|

[] |

garyshincc

| 0

|

|

pallets/flask

|

python

| 4,375

|

flask test client + pytest asyncio: RuntimeError when test and view are both async

|

Hi!

While migrating an application to asyncio, I encountered what I believe to be a bug.

Consider the following scenario:

* There is a view defined with a coroutine

* This function is called via the flask test client

* This test client is used in an async coroutine

Then there is the following exception:

```

Traceback (most recent call last):

File "/home/flo/documents/code/flask-bug-testclient-asyncio/venv/lib/python3.9/site-packages/flask/app.py", line 2073, in wsgi_app

response = self.full_dispatch_request()

File "/home/flo/documents/code/flask-bug-testclient-asyncio/venv/lib/python3.9/site-packages/flask/app.py", line 1518, in full_dispatch_request

rv = self.handle_user_exception(e)

File "/home/flo/documents/code/flask-bug-testclient-asyncio/venv/lib/python3.9/site-packages/flask/app.py", line 1516, in full_dispatch_request

rv = self.dispatch_request()

File "/home/flo/documents/code/flask-bug-testclient-asyncio/venv/lib/python3.9/site-packages/flask/app.py", line 1502, in dispatch_request

return self.ensure_sync(self.view_functions[rule.endpoint])(**req.view_args)

File "/home/flo/documents/code/flask-bug-testclient-asyncio/venv/lib/python3.9/site-packages/asgiref/sync.py", line 160, in __call__

raise RuntimeError(

RuntimeError: You cannot use AsyncToSync in the same thread as an async event loop - just await the async function directly.

```

See a reproducer in attachment.

[flask-bug-testclient-asyncio.tar.gz](https://github.com/pallets/flask/files/7687052/flask-bug-testclient-asyncio.tar.gz)

Steps to reproduce:

```

tar xvf flask-bug-testclient-asyncio.tar.gz

cd flask-bug-testclient-asyncio/

python -m venv venv

source venv/bin/activate

pip install -r requirements.txt

pytest

```

If you change either the view or the test function to not be async, the test passes. Both being non-async also works.

Both being async does not work.

There's probably a conflict due to the way this coroutine is scheduled. Could you have a look?

Thanks!

Environment:

- Python version: 3.9.9

- Flask version: 2.0.2

PS: couldn't find anything on the internet, except for [this post](https://stackoverflow.com/q/69431468).

EDIT (2021-12-10): for reference, here's the code sample

```

# App definition
from flask import Flask

app = Flask(__name__)


@app.route("/")
async def hello_world():
    return "aaa"


# Test definition
import pytest


@pytest.mark.asyncio
async def test_async_in_async_fail():
    with app.test_client() as client:
        assert client.get("/").data == b"aaa"

```
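
The underlying conflict can be reproduced with the standard library alone: a synchronous entry point that starts its own event loop cannot be called from a thread where a loop is already running, but it can be called from a worker thread. The sketch below is an illustration under that assumption, not Flask's or asgiref's actual code; `view` and `sync_call_view` are hypothetical stand-ins for the async view and for the synchronous test client call.

```python
import asyncio


async def view():
    # Stand-in for an async view function.
    return "aaa"


def sync_call_view():
    # Stand-in for a synchronous entry point that drives the coroutine
    # on an event loop of its own.
    coro = view()
    try:
        return asyncio.run(coro)
    except RuntimeError:
        coro.close()  # avoid a "never awaited" warning before re-raising
        raise


async def call_directly():
    # This thread already has a running loop, so the nested loop is refused.
    try:
        return sync_call_view()
    except RuntimeError as exc:
        return f"RuntimeError: {exc}"


async def call_in_worker_thread():
    # The worker thread has no running loop, so the nested loop can start.
    return await asyncio.to_thread(sync_call_view)


direct_result = asyncio.run(call_directly())
threaded_result = asyncio.run(call_in_worker_thread())
print(direct_result)
print(threaded_result)
```

Applied to the test above, this would mean either keeping the test function synchronous or moving the `client.get("/")` call into a worker thread; whether that is the preferred approach for Flask is not confirmed here.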

|

closed

|

2021-12-09T17:05:31Z

|

2021-12-25T00:03:43Z

|

https://github.com/pallets/flask/issues/4375

|

[] |

0xf10413

| 4

|

chainer/chainer

|

numpy

| 8,100

|

ConvolutionND output on GPU with certain batchsizes is zero

|

```py

> import chainer

> import chainer.links as L

```

CPU batchsize 64

```py

>> c = L.ConvolutionND(3, 3, 64, ksize=3, stride=1, pad=1)

>> d = c.xp.random.randn(64,3,16,64,64).astype('f')

>> c(d).array.var()

0.9107105

>> c(d).array.mean()

-4.8422127e-05

```

Move to GPU

```py

>> c.to_gpu()

<chainer.links.connection.convolution_nd.ConvolutionND object at 0x7f99c0408990>

>> d = c.xp.random.randn(64,3,16,64,64).astype('f')

>> c(d).array.mean()

array(-0.00012456, dtype=float32)

>> c(d).array.var()

array(0.9110826, dtype=float32)

```

GPU batchsize = 32

```py

>> d = c.xp.random.randn(32,3,16,64,64).astype('f')

>> c(d).array.mean()

array(0., dtype=float32)

>> c(d).array.var()

array(0., dtype=float32)

```

GPU batchsize = 64

```py

>> d = c.xp.random.randn(64,3,16,64,64).astype('f')

>> c(d).array.mean()

array(-3.2548433e-06, dtype=float32)

>> c(d).array.var()

array(0.9115594, dtype=float32)

```

GPU batchsize = 32

```py

>> d = c.xp.random.randn(32,3,16,64,64).astype('f')

>> c(d).array.mean()

array(0., dtype=float32)

>> c(d).array.var()

array(0., dtype=float32)

>> c.xp.all(c(d).array == 0)

**array(True)**

```

Runtime info:

```

> chainer.print_runtime_info()

Platform: Linux-4.15.0-47-generic-x86_64-with-debian-buster-sid

Chainer: 7.0.0b3

ChainerX: Not Available

NumPy: 1.17.2

CuPy:

CuPy Version : 7.0.0b3

CUDA Root : /xxx/x/cuda/8.0

CUDA Build Version : 8000

CUDA Driver Version : 10010

CUDA Runtime Version : 8000

cuDNN Build Version : 7102

cuDNN Version : 7102

NCCL Build Version : 2213

NCCL Runtime Version : (unknown)

iDeep: Not Available

```

Default config info

```

> chainer.config.show()

_will_recompute False

autotune False

cudnn_deterministic False

cudnn_fast_batch_normalization False

debug False

dtype float32

enable_backprop True

in_recomputing False

keep_graph_on_report False

lazy_grad_sum False

schedule_func None

train True

type_check True

use_cudnn auto

use_cudnn_tensor_core auto

use_ideep never

use_static_graph True

warn_nondeterministic False

```

|

closed

|

2019-09-10T04:13:57Z

|

2019-09-13T16:29:52Z

|

https://github.com/chainer/chainer/issues/8100

|

[

"prio:high",

"issue-checked"

] |

MannyKayy

| 5

|

tatsu-lab/stanford_alpaca

|

deep-learning

| 73

|

finetuning on 3090, is it possible?

|

Is it possible to finetune the 7B model using 8*3090?

I had set:

--per_device_train_batch_size 1 \

--per_device_eval_batch_size 1 \

but still got OOM:

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 194.00 MiB (GPU 0; 23.70 GiB total capacity; 22.21 GiB already allocated; 127.56 MiB free; 22.50 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

My script is as follows:

torchrun --nproc_per_node=4 --master_port=12345 train.py \

--model_name_or_path ../llama-7b-hf \

--data_path ./alpaca_data.json \

--bf16 True \

--output_dir ./output \

--num_train_epochs 3 \

--per_device_train_batch_size 1 \

--per_device_eval_batch_size 1 \

--gradient_accumulation_steps 1 \

--evaluation_strategy "no" \

--save_strategy "steps" \

--save_steps 2000 \

--save_total_limit 1 \

--learning_rate 2e-5 \

--weight_decay 0. \

--warmup_ratio 0.03 \

--lr_scheduler_type "cosine" \

--logging_steps 1 \

--fsdp "full_shard auto_wrap" \

--fsdp_transformer_layer_cls_to_wrap 'LLaMADecoderLayer' \

--tf32 True

|

open

|

2023-03-17T00:54:23Z

|

2023-03-21T05:59:53Z

|

https://github.com/tatsu-lab/stanford_alpaca/issues/73

|

[] |

yfliao

| 2

|

PokeAPI/pokeapi

|

graphql

| 1,166

|

Multiple issues resolved but are still open and need to be closed.

|

While going through the currently open issues looking for problems to fix, I found many that have already been resolved and need to be closed. Here are some examples.

https://github.com/PokeAPI/pokeapi/issues/786

https://github.com/PokeAPI/pokeapi/issues/882

https://github.com/PokeAPI/pokeapi/issues/865

https://github.com/PokeAPI/pokeapi/issues/901

https://github.com/PokeAPI/pokeapi/issues/1069 fixed by https://github.com/PokeAPI/sprites/pull/156

I am just pointing this out in the hope that some of the issue clutter can be reduced.

|

closed

|

2024-11-13T15:45:38Z

|

2024-11-14T03:04:08Z

|

https://github.com/PokeAPI/pokeapi/issues/1166

|

[] |

Writey0327

| 3

|

robotframework/robotframework

|

automation

| 4,960

|

Support integer conversion with strings representing whole number floats like `'1.0'` and `'2e10'`

|

Type conversions are a very convenient feature in Robot framework. To make them that extra bit convenient I propose an enhancement.

Currently, passing any string representation of a `float` number to a keyword accepting only `int` will fail. In most cases this is justified, but there are situations where floats are convertible to `int` just fine. Examples are `"1.0"`, `"2.00"` or `"1e100"`. Note that these conversions are already accepted when the value is passed as type `float` (i.e. `${1.0}` or `${1e100}`). Conversion of numbers for which the decimal part is non-zero should still fail. We are talking about conversion here, not type casting.
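
The proposed behavior can be sketched in a few lines of Python. This is only an illustration of the rule described above, not Robot Framework's implementation; `convert_to_int` is a hypothetical helper name. It uses `Decimal` rather than `float` so that large values such as `"1e100"` convert exactly.

```python
from decimal import Decimal, InvalidOperation


def convert_to_int(text):
    # Plain integer strings take the normal path.
    try:
        return int(text)
    except ValueError:
        pass
    # Otherwise accept the string only if it denotes a whole number.
    try:
        number = Decimal(text)
    except InvalidOperation:
        raise ValueError(f"{text!r} is not a number") from None
    if not number.is_finite() or number != number.to_integral_value():
        raise ValueError(f"{text!r} is not a whole number")
    return int(number)


for value in ["42", "1.0", "2.00", "2e10"]:
    print(value, "->", convert_to_int(value))
```

Strings with a non-zero decimal part, such as `"1.5"`, still raise `ValueError`, which matches the distinction between conversion and type casting made above.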

|

closed

|

2023-11-27T06:53:43Z

|

2023-12-07T00:15:56Z

|

https://github.com/robotframework/robotframework/issues/4960

|

[

"enhancement",

"priority: medium",

"beta 1",

"effort: small"

] |

JFoederer

| 2

|

pyqtgraph/pyqtgraph

|

numpy

| 2,634

|

When drawing a set of normal data, mark some of the abnormal data points or give them different colors.

|

Hello, I just used this library, and I think it's great, but I'm having some problems using it.

When I plot a set of normal data, I want to mark the abnormal data points or give them a different color.

```python
import pyqtgraph as pg
import numpy as np

w = pg.GraphicsLayoutWidget()
w.show()

x = np.arange(10)  # [0 1 2 3 4 5 6 7 8 9]
y = np.arange(10) % 3  # [0 1 2 0 1 2 0 1 2 0]

# =====================================
# [0,0],[1,1],[2,2],[3,0],[4,1],[5,2],[6,0],[7,1],[8,2],[9,0]
# Now I know that some of the data is abnormal data (e.g. [3,0],[4,1],[6,0]),
# but how do I plot it? Like giving different colors.
# =====================================

plt = w.addPlot(row=0, col=0)
plt.plot(x, y, symbol='o', pen={'color': 0.8, 'width': 2})

if __name__ == '__main__':
    pg.exec()
```
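
One possible approach, sketched here as an assumption rather than as the maintainers' recommended answer, is to draw the full series as usual and then overlay only the abnormal points as a second, line-less plot with a different symbol color. The helper `split_points` and the function `show_plot` are hypothetical names introduced for this example; the abnormal indices `[3, 4, 6]` come from the question above.

```python
def split_points(x, y, abnormal_indices):
    """Split (x, y) pairs into normal and abnormal lists by index."""
    abnormal = set(abnormal_indices)
    normal_xy, abnormal_xy = [], []
    for i, point in enumerate(zip(x, y)):
        (abnormal_xy if i in abnormal else normal_xy).append(point)
    return normal_xy, abnormal_xy


def show_plot():
    """Open a window with the abnormal points highlighted in red."""
    import pyqtgraph as pg  # imported here so the helper above needs no GUI

    x = list(range(10))
    y = [i % 3 for i in x]
    _, bad = split_points(x, y, [3, 4, 6])

    w = pg.GraphicsLayoutWidget()
    w.show()
    plt = w.addPlot(row=0, col=0)
    # Full series: line plus default symbols.
    plt.plot(x, y, symbol='o', pen={'color': 0.8, 'width': 2})
    # Overlay: abnormal points only, no connecting line, red fill.
    plt.plot([p[0] for p in bad], [p[1] for p in bad],
             pen=None, symbol='o', symbolBrush='r', symbolSize=12)
    pg.exec()
```

Calling `show_plot()` opens the window. The overlay keeps the original curve untouched, so the abnormal markers can be restyled or removed independently.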

|

closed

|

2023-03-03T07:13:12Z

|

2023-03-03T08:02:43Z

|

https://github.com/pyqtgraph/pyqtgraph/issues/2634

|

[] |

ningwana

| 1

|

modelscope/modelscope

|

nlp

| 972

|

Internal Server Error when publishing the configuration of a Studio (创空间)

|

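The first time, I had already authorized and linked the relevant account. Later, when I tried to switch to GPU resources, the error above was shown.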

|

closed

|

2024-09-03T09:08:28Z

|

2024-09-09T04:34:41Z

|

https://github.com/modelscope/modelscope/issues/972

|

[] |

Li-Lai

| 2

|

JaidedAI/EasyOCR

|

deep-learning

| 891

|

When I use DBNet, configured as described in the guide, an error pops up.

|

<img width="1051" alt="image" src="https://user-images.githubusercontent.com/25415402/203797617-dede622c-31ce-4f18-87c7-353b00e8c771.png">

reader = easyocr.Reader(['en'],detect_network = 'dbnet18')

"Input type is cpu, but 'deform_conv_cuda.*.so' is not imported successfully."

ENV: Win11;

Python ENV: 3.8.8 in Jupyter

|

open

|

2022-11-24T13:39:05Z

|

2024-10-20T08:47:53Z

|

https://github.com/JaidedAI/EasyOCR/issues/891

|

[] |

CapitaineNemo

| 5

|

mars-project/mars

|

scikit-learn

| 2,952

|

Support Slurm (or other cluster management and job scheduling systems)

|

Many schools and companies use Slurm or another cluster management and job scheduling system.

It would be great if Mars could support it.

|

open

|

2022-04-22T10:10:36Z

|

2022-04-22T16:41:40Z

|

https://github.com/mars-project/mars/issues/2952

|

[

"reso: duplicate"

] |

PeikaiLi

| 1

|

nalepae/pandarallel

|

pandas

| 13

|

Implement docstrings for all functions.

|

For example, the docstring of `DataFrame.parallel_apply` should be exactly the same as that of `DataFrame.apply`.
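
A minimal sketch of how a docstring could be copied from one method to another follows. It is not pandarallel's implementation: `inherit_docstring`, `Original` and `Parallel` are hypothetical stand-ins used so the example runs without pandas.

```python
def inherit_docstring(source):
    """Decorator that copies the docstring of `source` onto the function."""
    def decorator(func):
        func.__doc__ = source.__doc__
        return func
    return decorator


class Original:
    """Stand-in for the class whose documentation should be reused."""

    def apply(self, func):
        """Apply `func` to this object and return the result."""
        return func(self)


class Parallel:
    """Stand-in for the class that adds a parallel variant."""

    @inherit_docstring(Original.apply)
    def parallel_apply(self, func):
        return func(self)
```

After this, `help(Parallel.parallel_apply)` shows the same text as `help(Original.apply)`. Copying only `__doc__` (rather than using `functools.wraps`) keeps the new method's own name and signature intact.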

|

open

|

2019-03-18T09:49:50Z

|

2019-11-11T19:05:39Z

|

https://github.com/nalepae/pandarallel/issues/13

|

[

"enhancement"

] |

nalepae

| 0

|

mckinsey/vizro

|

data-visualization

| 222

|

Integrate a chatbox feature to enhance the capabilities of natural language applications

|

### What's the problem this feature will solve?

Currently, there is no text input feature similar to Streamlit's chatbox.

### Describe the solution you'd like

Create a custom component to receive text input.

### Alternative Solutions

-

### Additional context

This would help teams create natural language applications using Vizro.

### Code of Conduct

- [X] I agree to follow the [Code of Conduct](https://github.com/mckinsey/vizro/blob/main/CODE_OF_CONDUCT.md).

|

closed

|

2023-12-16T14:53:49Z

|

2024-07-09T15:10:35Z

|

https://github.com/mckinsey/vizro/issues/222

|

[

"Custom Components :rocket:"

] |

matheus695p

| 1

|