repo_name (string, length 9-75) | topic (string, 30 classes) | issue_number (int64, 1-203k) | title (string, length 1-976) | body (string, length 0-254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, length 38-105) | labels (list, length 0-9) | user_login (string, length 1-39) | comments_count (int64, 0-452) |

|---|---|---|---|---|---|---|---|---|---|---|---|

SciTools/cartopy

|

matplotlib

| 2,482

|

indicate_inset_zoom not working on some projections

|

### Description

On most non-standard projections (like `TransverseMercator`) the connectors to an inset axis are not drawn correctly.

#### Code to reproduce

```python

# Imports added for completeness; the original report omitted them.
import cartopy.crs as ccrs
import cartopy.feature as cfeature
import matplotlib.pyplot as plt
from cartopy.mpl.geoaxes import GeoAxes
from mpl_toolkits.axes_grid1.inset_locator import inset_axes

map_proj = ccrs.TransverseMercator()

fig, ax = plt.subplots(
    figsize=(15, 15),
    subplot_kw={
        "projection": map_proj
    },
)
ax.set_extent([-50, 15, 50, 70], crs=ccrs.PlateCarree())
ax.add_feature(cfeature.OCEAN.with_scale('50m'), facecolor="lightsteelblue", zorder=1)
ax.coastlines(linewidth=0.2, zorder=2, resolution='50m')

# Add inset
inset_ax = inset_axes(
    ax,
    width="30%",  # Width as a percentage of the parent axes
    height="30%",  # Height as a percentage of the parent axes
    loc='lower left',  # Location of the inset
    bbox_to_anchor=(0, 0, 1, 1),
    bbox_transform=ax.transAxes,
    axes_class=GeoAxes,
    axes_kwargs=dict(projection=map_proj)
)
inset_extent = [-43, -39, 63, 66]
inset_ax.set_extent(inset_extent, crs=ccrs.PlateCarree())

# Add features to inset
inset_ax.add_feature(cfeature.OCEAN, facecolor="lightsteelblue", zorder=1)
inset_ax.coastlines(linewidth=0.5, zorder=2, resolution='50m')

# Add box around location of inset map on the main map
x = [inset_extent[0], inset_extent[1], inset_extent[1], inset_extent[0], inset_extent[0]]
y = [inset_extent[2], inset_extent[2], inset_extent[3], inset_extent[3], inset_extent[2]]
ax.plot(x, y, color='k', alpha=0.5, transform=ccrs.PlateCarree())

# Draw lines between inset map and box on main map
rect, connectors = ax.indicate_inset_zoom(inset_ax, edgecolor="black", alpha=0.5, transform=ax.transAxes)

```

produces this

However, for some projections, such as `Mercator` or `PlateCarree`, it works.

<details>

<summary>Full environment definition</summary>

### Cartopy version

0.24.0

### conda list

```

# Name Version Build Channel

aemet-opendata 0.5.4 pypi_0 pypi

aenum 3.1.15 pyhd8ed1ab_0 conda-forge

affine 2.4.0 pyhd8ed1ab_0 conda-forge

aiohttp 3.9.5 py312h41838bb_0 conda-forge

aiosignal 1.3.1 pyhd8ed1ab_0 conda-forge

annotated-types 0.7.0 pyhd8ed1ab_0 conda-forge

anyio 4.6.0 pyhd8ed1ab_1 conda-forge

appdirs 1.4.4 pyh9f0ad1d_0 conda-forge

appnope 0.1.4 pyhd8ed1ab_0 conda-forge

argon2-cffi 23.1.0 pyhd8ed1ab_0 conda-forge

argon2-cffi-bindings 21.2.0 py312hb553811_5 conda-forge

arrow 1.3.0 pyhd8ed1ab_0 conda-forge

asciitree 0.3.3 py_2 conda-forge

asttokens 2.4.1 pyhd8ed1ab_0 conda-forge

async-lru 2.0.4 pyhd8ed1ab_0 conda-forge

attrs 24.2.0 pyh71513ae_0 conda-forge

aws-c-auth 0.7.30 h0e83244_0 conda-forge

aws-c-cal 0.7.4 h8128ea2_1 conda-forge

aws-c-common 0.9.28 h00291cd_0 conda-forge

aws-c-compression 0.2.19 h8128ea2_1 conda-forge

aws-c-event-stream 0.4.3 hcd1ed9e_2 conda-forge

aws-c-http 0.8.9 h2f86973_0 conda-forge

aws-c-io 0.14.18 hf9a0f1c_10 conda-forge

aws-c-mqtt 0.10.5 h3e3652f_0 conda-forge

aws-c-s3 0.6.5 hf761692_5 conda-forge

aws-c-sdkutils 0.1.19 h8128ea2_3 conda-forge

aws-checksums 0.1.20 h8128ea2_0 conda-forge

aws-crt-cpp 0.28.2 heb037f6_6 conda-forge

aws-sdk-cpp 1.11.379 hd82b0e1_10 conda-forge

azure-core-cpp 1.13.0 hf8dbe3c_0 conda-forge

azure-identity-cpp 1.8.0 h60298e3_2 conda-forge

azure-storage-blobs-cpp 12.12.0 h646f05d_0 conda-forge

azure-storage-common-cpp 12.7.0 hf91904f_1 conda-forge

azure-storage-files-datalake-cpp 12.11.0 h14965f0_1 conda-forge

babel 2.14.0 pyhd8ed1ab_0 conda-forge

backports-datetime-fromisoformat 2.0.2 py312hb401068_0 conda-forge

basemap 1.4.1 np126py312hb3450bc_0 conda-forge

basemap-data 1.3.2 pyhd8ed1ab_3 conda-forge

basemap-data-hires 1.3.2 pyhd8ed1ab_3 conda-forge

beautifulsoup4 4.12.3 pyha770c72_0 conda-forge

bleach 6.1.0 pyhd8ed1ab_0 conda-forge

blinker 1.8.2 pyhd8ed1ab_0 conda-forge

blosc 1.21.6 h7d75f6d_0 conda-forge

bokeh 3.5.2 pyhd8ed1ab_0 conda-forge

boltons 24.0.0 pyhd8ed1ab_0 conda-forge

boto3 1.35.34 pyhd8ed1ab_0 conda-forge

botocore 1.35.34 pyge310_1234567_0 conda-forge

bottleneck 1.4.0 py312h3a11e2b_2 conda-forge

branca 0.7.2 pyhd8ed1ab_0 conda-forge

brotli 1.1.0 h00291cd_2 conda-forge

brotli-bin 1.1.0 h00291cd_2 conda-forge

brotli-python 1.1.0 py312h5861a67_2 conda-forge

bzip2 1.0.8 hfdf4475_7 conda-forge

c-ares 1.33.1 h44e7173_0 conda-forge

ca-certificates 2024.8.30 h8857fd0_0 conda-forge

cached-property 1.5.2 hd8ed1ab_1 conda-forge

cached_property 1.5.2 pyha770c72_1 conda-forge

cachelib 0.9.0 pyhd8ed1ab_0 conda-forge

cachetools 5.5.0 pyhd8ed1ab_0 conda-forge

cachier 3.0.1 pyhd8ed1ab_0 conda-forge

cairo 1.18.0 h37bd5c4_3 conda-forge

cartopy 0.24.0 py312h98e817e_0 conda-forge

cctools_osx-64 973.0.1 habff3f6_15 conda-forge

cdo 2.4.1 h70e7d24_1 conda-forge

certifi 2024.8.30 pyhd8ed1ab_0 conda-forge

cf_xarray 0.9.5 pyhd8ed1ab_1 conda-forge

cffi 1.17.1 py312hf857d28_0 conda-forge

cfgrib 0.9.14.1 pyhd8ed1ab_0 conda-forge

cfitsio 4.4.1 ha105788_0 conda-forge

cftime 1.6.4 py312h3a11e2b_1 conda-forge

charset-normalizer 3.3.2 pyhd8ed1ab_0 conda-forge

clang 15.0.7 hdae98eb_5 conda-forge

clang-15 15.0.7 default_h7151d67_5 conda-forge

clang_impl_osx-64 15.0.7 h03d6864_8 conda-forge

clang_osx-64 15.0.7 hb91bd55_8 conda-forge

clangxx 15.0.7 default_h7151d67_5 conda-forge

clangxx_impl_osx-64 15.0.7 h2133e9c_8 conda-forge

clangxx_osx-64 15.0.7 hb91bd55_8 conda-forge

click 8.1.7 unix_pyh707e725_0 conda-forge

click-params 0.5.0 pyhd8ed1ab_0 conda-forge

click-plugins 1.1.1 py_0 conda-forge

cligj 0.7.2 pyhd8ed1ab_1 conda-forge

cloudpickle 3.0.0 pyhd8ed1ab_0 conda-forge

cloup 3.0.5 pyhd8ed1ab_0 conda-forge

cmdstan 2.33.1 ha749d2a_0 conda-forge

cmdstanpy 1.2.4 pyhd8ed1ab_0 conda-forge

cmweather 0.3.2 pyhd8ed1ab_0 conda-forge

colorama 0.4.6 pyhd8ed1ab_0 conda-forge

colorcet 3.1.0 pyhd8ed1ab_0 conda-forge

comm 0.2.2 pyhd8ed1ab_0 conda-forge

compiler-rt 15.0.7 ha38d28d_2 conda-forge

compiler-rt_osx-64 15.0.7 ha38d28d_2 conda-forge

configobj 5.0.9 pyhd8ed1ab_0 conda-forge

contourpy 1.3.0 py312hc5c4d5f_2 conda-forge

convertdate 2.4.0 pyhd8ed1ab_0 conda-forge

copernicusmarine 1.3.3 pyhd8ed1ab_0 conda-forge

cycler 0.12.1 pyhd8ed1ab_0 conda-forge

cyrus-sasl 2.1.27 hf9bab2b_7 conda-forge

cytoolz 1.0.0 py312hb553811_0 conda-forge

dash 2.18.1 pyhd8ed1ab_0 conda-forge

dash-bootstrap-components 1.6.0 pyhd8ed1ab_0 conda-forge

dash-iconify 0.1.2 pyhd8ed1ab_0 conda-forge

dash-leaflet 1.0.15 pyhd8ed1ab_0 conda-forge

dash-mantine-components 0.14.4 pyhd8ed1ab_0 conda-forge

dask 2024.9.1 pyhd8ed1ab_0 conda-forge

dask-core 2024.9.1 pyhd8ed1ab_0 conda-forge

dask-expr 1.1.15 pyhd8ed1ab_0 conda-forge

dataclasses 0.8 pyhc8e2a94_3 conda-forge

debugpy 1.8.6 py312h5861a67_0 conda-forge

decorator 5.1.1 pyhd8ed1ab_0 conda-forge

defusedxml 0.7.1 pyhd8ed1ab_0 conda-forge

deprecated 1.2.14 pyh1a96a4e_0 conda-forge

deprecation 2.1.0 pyh9f0ad1d_0 conda-forge

dill 0.3.9 pyhd8ed1ab_0 conda-forge

diskcache 5.6.3 pyhd8ed1ab_0 conda-forge

distributed 2024.9.1 pyhd8ed1ab_0 conda-forge

distro 1.9.0 pyhd8ed1ab_0 conda-forge

dnspython 2.7.0 pyhff2d567_0 conda-forge

docopt 0.6.2 py_1 conda-forge

docopt-ng 0.9.0 pyhd8ed1ab_0 conda-forge

donfig 0.8.1.post1 pyhd8ed1ab_0 conda-forge

eccodes 2.38.0 he0f85d2_0 conda-forge

ecmwf-opendata 0.3.10 pyhd8ed1ab_0 conda-forge

entrypoints 0.4 pyhd8ed1ab_0 conda-forge

environs 11.0.0 pyhd8ed1ab_1 conda-forge

ephem 4.1.5 py312hb553811_2 conda-forge

eumdac 2.2.3 pyhd8ed1ab_0 conda-forge

eval-type-backport 0.2.0 pyhd8ed1ab_0 conda-forge

eval_type_backport 0.2.0 pyha770c72_0 conda-forge

exceptiongroup 1.2.2 pyhd8ed1ab_0 conda-forge

executing 2.1.0 pyhd8ed1ab_0 conda-forge

expat 2.6.3 hac325c4_0 conda-forge

fasteners 0.17.3 pyhd8ed1ab_0 conda-forge

fftw 3.3.10 nompi_h292e606_110 conda-forge

filelock 3.16.1 pyhd8ed1ab_0 conda-forge

findlibs 0.0.5 pyhd8ed1ab_0 conda-forge

fiona 1.9.6 py312hfc836c0_3 conda-forge

flask 3.0.3 pyhd8ed1ab_0 conda-forge

flask-caching 2.1.0 pyhd8ed1ab_0 conda-forge

flexcache 0.3 pyhd8ed1ab_0 conda-forge

flexparser 0.3.1 pyhd8ed1ab_0 conda-forge

fmt 11.0.2 h3c5361c_0 conda-forge

folium 0.17.0 pyhd8ed1ab_0 conda-forge

font-ttf-dejavu-sans-mono 2.37 hab24e00_0 conda-forge

font-ttf-inconsolata 3.000 h77eed37_0 conda-forge

font-ttf-source-code-pro 2.038 h77eed37_0 conda-forge

font-ttf-ubuntu 0.83 h77eed37_3 conda-forge

fontconfig 2.14.2 h5bb23bf_0 conda-forge

fonts-conda-ecosystem 1 0 conda-forge

fonts-conda-forge 1 0 conda-forge

fonttools 4.54.1 py312hb553811_0 conda-forge

fqdn 1.5.1 pyhd8ed1ab_0 conda-forge

freetype 2.12.1 h60636b9_2 conda-forge

freexl 2.0.0 h3ec172f_0 conda-forge

fribidi 1.0.10 hbcb3906_0 conda-forge

frozenlist 1.4.1 py312hb553811_1 conda-forge

fsspec 2024.9.0 pyhff2d567_0 conda-forge

gdal 3.9.1 py312h9b1be66_3 conda-forge

geobuf 1.1.1 pyh9f0ad1d_0 conda-forge

geographiclib 2.0 pypi_0 pypi

geopandas 1.0.1 pyhd8ed1ab_1 conda-forge

geopandas-base 1.0.1 pyha770c72_1 conda-forge

geopy 2.4.1 pypi_0 pypi

geos 3.12.1 h93d8f39_0 conda-forge

geotiff 1.7.3 h4bbec01_2 conda-forge

gettext 0.22.5 hdfe23c8_3 conda-forge

gettext-tools 0.22.5 hdfe23c8_3 conda-forge

gflags 2.2.2 hac325c4_1005 conda-forge

giflib 5.2.2 h10d778d_0 conda-forge

glog 0.7.1 h2790a97_0 conda-forge

gmp 6.3.0 hf036a51_2 conda-forge

gmpy2 2.1.5 py312h165121d_2 conda-forge

graphite2 1.3.13 h73e2aa4_1003 conda-forge

h11 0.14.0 pyhd8ed1ab_0 conda-forge

h2 4.1.0 pyhd8ed1ab_0 conda-forge

h5netcdf 1.3.0 pyhd8ed1ab_0 conda-forge

h5py 3.11.0 nompi_py312hfc94b03_102 conda-forge

harfbuzz 9.0.0 h098a298_1 conda-forge

hdf4 4.2.15 h8138101_7 conda-forge

hdf5 1.14.3 nompi_h687a608_105 conda-forge

hdf5plugin 5.0.0 py312h54c024f_0 conda-forge

holidays 0.57 pyhd8ed1ab_0 conda-forge

holoviews 1.19.1 pyhd8ed1ab_0 conda-forge

hpack 4.0.0 pyh9f0ad1d_0 conda-forge

html5lib 1.1 pyh9f0ad1d_0 conda-forge

httpcore 1.0.6 pyhd8ed1ab_0 conda-forge

httpx 0.27.2 pyhd8ed1ab_0 conda-forge

hvplot 0.11.0 pyhd8ed1ab_0 conda-forge

hyperframe 6.0.1 pyhd8ed1ab_0 conda-forge

icu 75.1 h120a0e1_0 conda-forge

idna 3.10 pyhd8ed1ab_0 conda-forge

importlib-metadata 8.5.0 pyha770c72_0 conda-forge

importlib-resources 6.4.5 pyhd8ed1ab_0 conda-forge

importlib_metadata 8.5.0 hd8ed1ab_0 conda-forge

importlib_resources 6.4.5 pyhd8ed1ab_0 conda-forge

ipykernel 6.29.5 pyh57ce528_0 conda-forge

ipython 8.28.0 pyh707e725_0 conda-forge

ipywidgets 8.1.5 pyhd8ed1ab_0 conda-forge

isoduration 20.11.0 pyhd8ed1ab_0 conda-forge

itsdangerous 2.2.0 pyhd8ed1ab_0 conda-forge

jasper 4.2.4 hb10263b_0 conda-forge

jdcal 1.4.1 py_0 conda-forge

jedi 0.19.1 pyhd8ed1ab_0 conda-forge

jinja2 3.1.4 pyhd8ed1ab_0 conda-forge

jiter 0.5.0 py312h669792a_1 conda-forge

jmespath 1.0.1 pyhd8ed1ab_0 conda-forge

joblib 1.4.2 pyhd8ed1ab_0 conda-forge

json-c 0.17 h6253ea5_1 conda-forge

json5 0.9.25 pyhd8ed1ab_0 conda-forge

jsonpickle 3.3.0 pyhd8ed1ab_0 conda-forge

jsonpointer 3.0.0 py312hb401068_1 conda-forge

jsonschema 4.23.0 pyhd8ed1ab_0 conda-forge

jsonschema-specifications 2023.12.1 pyhd8ed1ab_0 conda-forge

jsonschema-with-format-nongpl 4.23.0 hd8ed1ab_0 conda-forge

jupyter 1.1.1 pyhd8ed1ab_0 conda-forge

jupyter-lsp 2.2.5 pyhd8ed1ab_0 conda-forge

jupyter_client 8.6.3 pyhd8ed1ab_0 conda-forge

jupyter_console 6.6.3 pyhd8ed1ab_0 conda-forge

jupyter_core 5.7.2 pyh31011fe_1 conda-forge

jupyter_events 0.10.0 pyhd8ed1ab_0 conda-forge

jupyter_server 2.14.2 pyhd8ed1ab_0 conda-forge

jupyter_server_terminals 0.5.3 pyhd8ed1ab_0 conda-forge

jupyterlab 4.2.5 pyhd8ed1ab_0 conda-forge

jupyterlab_pygments 0.3.0 pyhd8ed1ab_1 conda-forge

jupyterlab_server 2.27.3 pyhd8ed1ab_0 conda-forge

jupyterlab_widgets 3.0.13 pyhd8ed1ab_0 conda-forge

kealib 1.5.3 he475af8_2 conda-forge

kiwisolver 1.4.7 py312hc5c4d5f_0 conda-forge

krb5 1.21.3 h37d8d59_0 conda-forge

lat_lon_parser 1.3.0 pyhd8ed1ab_0 conda-forge

lcms2 2.16 ha2f27b4_0 conda-forge

ld64_osx-64 609 h0fd476b_15 conda-forge

lerc 4.0.0 hb486fe8_0 conda-forge

libabseil 20240116.2 cxx17_hf036a51_1 conda-forge

libaec 1.1.3 h73e2aa4_0 conda-forge

libarchive 3.7.4 h20e244c_0 conda-forge

libarrow 17.0.0 ha60c65e_13_cpu conda-forge

libarrow-acero 17.0.0 hac325c4_13_cpu conda-forge

libarrow-dataset 17.0.0 hac325c4_13_cpu conda-forge

libarrow-flight 17.0.0 hea76c88_13_cpu conda-forge

libarrow-flight-sql 17.0.0 h824516f_13_cpu conda-forge

libarrow-gandiva 17.0.0 h8c10372_13_cpu conda-forge

libarrow-substrait 17.0.0 hba007a9_13_cpu conda-forge

libasprintf 0.22.5 hdfe23c8_3 conda-forge

libasprintf-devel 0.22.5 hdfe23c8_3 conda-forge

libblas 3.9.0 22_osx64_openblas conda-forge

libboost-headers 1.86.0 h694c41f_2 conda-forge

libbrotlicommon 1.1.0 h00291cd_2 conda-forge

libbrotlidec 1.1.0 h00291cd_2 conda-forge

libbrotlienc 1.1.0 h00291cd_2 conda-forge

libcblas 3.9.0 22_osx64_openblas conda-forge

libclang-cpp15 15.0.7 default_h7151d67_5 conda-forge

libcrc32c 1.1.2 he49afe7_0 conda-forge

libcurl 8.10.1 h58e7537_0 conda-forge

libcxx 19.1.1 hf95d169_0 conda-forge

libdeflate 1.20 h49d49c5_0 conda-forge

libedit 3.1.20191231 h0678c8f_2 conda-forge

libev 4.33 h10d778d_2 conda-forge

libevent 2.1.12 ha90c15b_1 conda-forge

libexpat 2.6.3 hac325c4_0 conda-forge

libffi 3.4.2 h0d85af4_5 conda-forge

libgdal 3.9.1 hb1a0af8_3 conda-forge

libgettextpo 0.22.5 hdfe23c8_3 conda-forge

libgettextpo-devel 0.22.5 hdfe23c8_3 conda-forge

libgfortran 5.0.0 13_2_0_h97931a8_3 conda-forge

libgfortran5 13.2.0 h2873a65_3 conda-forge

libglib 2.82.1 h63bbcf2_0 conda-forge

libgoogle-cloud 2.28.0 h721cda5_0 conda-forge

libgoogle-cloud-storage 2.28.0 h9e84e37_0 conda-forge

libgrpc 1.62.2 h384b2fc_0 conda-forge

libhwloc 2.11.1 default_h456cccd_1000 conda-forge

libiconv 1.17 hd75f5a5_2 conda-forge

libintl 0.22.5 hdfe23c8_3 conda-forge

libintl-devel 0.22.5 hdfe23c8_3 conda-forge

libjpeg-turbo 3.0.0 h0dc2134_1 conda-forge

libkml 1.3.0 h9ee1731_1021 conda-forge

liblapack 3.9.0 22_osx64_openblas conda-forge

libllvm14 14.0.6 hc8e404f_4 conda-forge

libllvm15 15.0.7 hbedff68_4 conda-forge

libllvm17 17.0.6 hbedff68_1 conda-forge

libllvm18 18.1.8 h9ce406d_2 conda-forge

libnetcdf 4.9.2 nompi_h7334405_114 conda-forge

libnghttp2 1.58.0 h64cf6d3_1 conda-forge

libntlm 1.4 h0d85af4_1002 conda-forge

libopenblas 0.3.27 openmp_h8869122_1 conda-forge

libparquet 17.0.0 hf1b0f52_13_cpu conda-forge

libpng 1.6.44 h4b8f8c9_0 conda-forge

libpq 16.4 h75a757a_2 conda-forge

libprotobuf 4.25.3 hd4aba4c_1 conda-forge

libre2-11 2023.09.01 h81f5012_2 conda-forge

librttopo 1.1.0 hf05f67e_15 conda-forge

libsodium 1.0.20 hfdf4475_0 conda-forge

libspatialindex 2.0.0 hf036a51_0 conda-forge

libspatialite 5.1.0 h5579707_7 conda-forge

libsqlite 3.46.1 h4b8f8c9_0 conda-forge

libssh2 1.11.0 hd019ec5_0 conda-forge

libthrift 0.20.0 h75589b3_1 conda-forge

libtiff 4.6.0 h129831d_3 conda-forge

libudunits2 2.2.28 h516ac8c_3 conda-forge

libutf8proc 2.8.0 hb7f2c08_0 conda-forge

libuuid 2.38.1 hb7f2c08_0 conda-forge

libwebp-base 1.4.0 h10d778d_0 conda-forge

libxcb 1.17.0 hf1f96e2_0 conda-forge

libxml2 2.12.7 heaf3512_4 conda-forge

libxslt 1.1.39 h03b04e6_0 conda-forge

libzip 1.11.1 h3116616_0 conda-forge

libzlib 1.3.1 hd23fc13_2 conda-forge

linkify-it-py 2.0.3 pyhd8ed1ab_0 conda-forge

llvm-openmp 19.1.0 h56322cc_0 conda-forge

llvm-tools 15.0.7 hbedff68_4 conda-forge

llvmlite 0.43.0 py312hcc8fd36_1 conda-forge

locket 1.0.0 pyhd8ed1ab_0 conda-forge

lunarcalendar 0.0.9 py_0 conda-forge

lxml 5.3.0 py312h4feaf87_1 conda-forge

lz4 4.3.3 py312h83408cd_1 conda-forge

lz4-c 1.9.4 hf0c8a7f_0 conda-forge

lzo 2.10 h10d778d_1001 conda-forge

magics 4.15.4 hbffab32_1 conda-forge

magics-python 1.5.8 pyhd8ed1ab_1 conda-forge

make 4.4.1 h00291cd_2 conda-forge

mapclassify 2.8.1 pyhd8ed1ab_0 conda-forge

markdown 3.6 pyhd8ed1ab_0 conda-forge

markdown-it-py 3.0.0 pyhd8ed1ab_0 conda-forge

markupsafe 2.1.5 py312hb553811_1 conda-forge

marshmallow 3.22.0 pyhd8ed1ab_0 conda-forge

matplotlib 3.8.4 py312hb401068_2 conda-forge

matplotlib-base 3.8.4 py312hb6d62fa_2 conda-forge

matplotlib-inline 0.1.7 pyhd8ed1ab_0 conda-forge

mdit-py-plugins 0.4.2 pyhd8ed1ab_0 conda-forge

mdurl 0.1.2 pyhd8ed1ab_0 conda-forge

measurement 3.2.0 py_1 conda-forge

metpy 1.6.3 pyhd8ed1ab_0 conda-forge

minizip 4.0.7 h62b0c8d_0 conda-forge

mistune 3.0.2 pyhd8ed1ab_0 conda-forge

mpc 1.3.1 h9d8efa1_1 conda-forge

mpfr 4.2.1 haed47dc_3 conda-forge

mpmath 1.3.0 pyhd8ed1ab_0 conda-forge

msgpack-python 1.1.0 py312hc5c4d5f_0 conda-forge

multidict 6.1.0 py312h9131086_0 conda-forge

multiprocess 0.70.16 py312hb553811_1 conda-forge

multiurl 0.3.1 pyhd8ed1ab_0 conda-forge

munkres 1.1.4 pyh9f0ad1d_0 conda-forge

nbclient 0.10.0 pyhd8ed1ab_0 conda-forge

nbconvert 7.16.4 hd8ed1ab_1 conda-forge

nbconvert-core 7.16.4 pyhd8ed1ab_1 conda-forge

nbconvert-pandoc 7.16.4 hd8ed1ab_1 conda-forge

nbformat 5.10.4 pyhd8ed1ab_0 conda-forge

ncurses 6.5 hf036a51_1 conda-forge

nest-asyncio 1.6.0 pyhd8ed1ab_0 conda-forge

netcdf4 1.7.1 nompi_py312h683d7b0_102 conda-forge

networkx 3.3 pyhd8ed1ab_1 conda-forge

notebook 7.2.2 pyhd8ed1ab_0 conda-forge

notebook-shim 0.2.4 pyhd8ed1ab_0 conda-forge

nspr 4.35 hea0b92c_0 conda-forge

nss 3.105 h3135457_0 conda-forge

numba 0.60.0 py312hc3b515d_0 conda-forge

numcodecs 0.13.0 py312h1171441_0 conda-forge

numpy 1.26.4 py312he3a82b2_0 conda-forge

ollama 0.1.17 cpu_he06a1bc_0 conda-forge

openai 1.51.0 pyhd8ed1ab_0 conda-forge

openjpeg 2.5.2 h7310d3a_0 conda-forge

openldap 2.6.8 hcd2896d_0 conda-forge

openssl 3.4.0 hd471939_0 conda-forge

orc 2.0.2 h22b2039_0 conda-forge

overrides 7.7.0 pyhd8ed1ab_0 conda-forge

owslib 0.31.0 pyhd8ed1ab_0 conda-forge

packaging 24.1 pyhd8ed1ab_0 conda-forge

pandas 2.2.3 py312h98e817e_1 conda-forge

pandoc 3.5 h694c41f_0 conda-forge

pandocfilters 1.5.0 pyhd8ed1ab_0 conda-forge

panel 1.5.2 pyhd8ed1ab_0 conda-forge

pango 1.54.0 h115fe74_2 conda-forge

param 2.1.1 pyhff2d567_0 conda-forge

parso 0.8.4 pyhd8ed1ab_0 conda-forge

partd 1.4.2 pyhd8ed1ab_0 conda-forge

patsy 0.5.6 pyhd8ed1ab_0 conda-forge

pcre2 10.44 h7634a1b_2 conda-forge

pexpect 4.9.0 pyhd8ed1ab_0 conda-forge

pickleshare 0.7.5 py_1003 conda-forge

pillow 10.4.0 py312h683ea77_1 conda-forge

pint 0.24.3 pyhd8ed1ab_0 conda-forge

pip 24.2 pyh8b19718_1 conda-forge

pixman 0.43.4 h73e2aa4_0 conda-forge

pkgutil-resolve-name 1.3.10 pyhd8ed1ab_1 conda-forge

platformdirs 4.3.6 pyhd8ed1ab_0 conda-forge

plotly 5.24.1 pyhd8ed1ab_0 conda-forge

polars 1.9.0 py312h088783b_0 conda-forge

pooch 1.8.2 pyhd8ed1ab_0 conda-forge

poppler 24.04.0 h0face88_0 conda-forge

poppler-data 0.4.12 hd8ed1ab_0 conda-forge

portalocker 2.10.1 py312hb401068_0 conda-forge

portion 2.5.0 pyhd8ed1ab_0 conda-forge

postgresql 16.4 h4b98a8f_2 conda-forge

proj 9.4.1 hf92c781_1 conda-forge

prometheus_client 0.21.0 pyhd8ed1ab_0 conda-forge

prompt-toolkit 3.0.48 pyha770c72_0 conda-forge

prompt_toolkit 3.0.48 hd8ed1ab_0 conda-forge

prophet 1.1.5 py312h3264805_1 conda-forge

protobuf 4.25.3 py312hd13efa9_1 conda-forge

psutil 6.0.0 py312hb553811_1 conda-forge

pthread-stubs 0.4 h00291cd_1002 conda-forge

ptyprocess 0.7.0 pyhd3deb0d_0 conda-forge

pure_eval 0.2.3 pyhd8ed1ab_0 conda-forge

pyarrow 17.0.0 py312h0be7463_1 conda-forge

pyarrow-core 17.0.0 py312h63b501a_1_cpu conda-forge

pyarrow-hotfix 0.6 pyhd8ed1ab_0 conda-forge

pycparser 2.22 pyhd8ed1ab_0 conda-forge

pydantic 2.9.2 pyhd8ed1ab_0 conda-forge

pydantic-core 2.23.4 py312h669792a_0 conda-forge

pydap 3.5 pyhd8ed1ab_0 conda-forge

pygments 2.18.0 pyhd8ed1ab_0 conda-forge

pykdtree 1.3.13 py312h3a11e2b_1 conda-forge

pymeeus 0.5.12 pyhd8ed1ab_0 conda-forge

pymongo 4.10.1 py312h5861a67_0 conda-forge

pyobjc-core 10.3.1 py312hab44e94_1 conda-forge

pyobjc-framework-cocoa 10.3.1 py312hab44e94_1 conda-forge

pyogrio 0.9.0 py312h43b3a95_0 conda-forge

pyorbital 1.8.3 pyhd8ed1ab_0 conda-forge

pyparsing 3.1.4 pyhd8ed1ab_0 conda-forge

pypdf 5.0.1 pyha770c72_0 conda-forge

pyproj 3.6.1 py312haf32e09_9 conda-forge

pyresample 1.30.0 py312h98e817e_0 conda-forge

pyshp 2.3.1 pyhd8ed1ab_0 conda-forge

pysocks 1.7.1 pyha2e5f31_6 conda-forge

pyspectral 0.13.5 pyhd8ed1ab_0 conda-forge

pystac 1.11.0 pyhd8ed1ab_0 conda-forge

python 3.12.7 h8f8b54e_0_cpython conda-forge

python-dateutil 2.9.0 pyhd8ed1ab_0 conda-forge

python-dotenv 1.0.1 pyhd8ed1ab_0 conda-forge

python-eccodes 2.37.0 py312h3a11e2b_0 conda-forge

python-fastjsonschema 2.20.0 pyhd8ed1ab_0 conda-forge

python-geotiepoints 1.7.4 py312h5dc8b90_0 conda-forge

python-json-logger 2.0.7 pyhd8ed1ab_0 conda-forge

python-tzdata 2024.2 pyhd8ed1ab_0 conda-forge

python_abi 3.12 5_cp312 conda-forge

pytz 2024.1 pyhd8ed1ab_0 conda-forge

pyviz_comms 3.0.3 pyhd8ed1ab_0 conda-forge

pyyaml 6.0.2 py312hb553811_1 conda-forge

pyzmq 26.2.0 py312h54d5c6a_2 conda-forge

qhull 2020.2 h3c5361c_5 conda-forge

qtconsole-base 5.6.0 pyha770c72_0 conda-forge

qtpy 2.4.1 pyhd8ed1ab_0 conda-forge

rapidfuzz 3.10.0 py312h5861a67_0 conda-forge

rasterio 1.3.10 py312h1c98354_4 conda-forge

re2 2023.09.01 hb168e87_2 conda-forge

readline 8.2 h9e318b2_1 conda-forge

referencing 0.35.1 pyhd8ed1ab_0 conda-forge

requests 2.32.3 pyhd8ed1ab_0 conda-forge

retrying 1.3.3 pyhd8ed1ab_3 conda-forge

rfc3339-validator 0.1.4 pyhd8ed1ab_0 conda-forge

rfc3986-validator 0.1.1 pyh9f0ad1d_0 conda-forge

rioxarray 0.17.0 pyhd8ed1ab_0 conda-forge

rpds-py 0.20.0 py312h669792a_1 conda-forge

rtree 1.3.0 py312hb560d21_2 conda-forge

s3transfer 0.10.2 pyhd8ed1ab_0 conda-forge

satpy 0.51.0 pyhd8ed1ab_0 conda-forge

scikit-learn 1.5.2 py312h9d777eb_1 conda-forge

scipy 1.14.1 py312he82a568_0 conda-forge

seaborn 0.13.2 hd8ed1ab_2 conda-forge

seaborn-base 0.13.2 pyhd8ed1ab_2 conda-forge

seawater 3.3.5 pyhd8ed1ab_0 conda-forge

semver 3.0.2 pyhd8ed1ab_0 conda-forge

send2trash 1.8.3 pyh31c8845_0 conda-forge

setuptools 75.1.0 pyhd8ed1ab_0 conda-forge

shapely 2.0.4 py312h3daf033_1 conda-forge

sigtool 0.1.3 h88f4db0_0 conda-forge

simplejson 3.19.3 py312hb553811_1 conda-forge

six 1.16.0 pyh6c4a22f_0 conda-forge

snappy 1.2.1 he1e6707_0 conda-forge

sniffio 1.3.1 pyhd8ed1ab_0 conda-forge

snuggs 1.4.7 pyhd8ed1ab_1 conda-forge

sortedcontainers 2.4.0 pyhd8ed1ab_0 conda-forge

soupsieve 2.5 pyhd8ed1ab_1 conda-forge

spdlog 1.14.1 h325aa07_1 conda-forge

sqlite 3.46.1 he26b093_0 conda-forge

stack_data 0.6.2 pyhd8ed1ab_0 conda-forge

stamina 24.3.0 pyhd8ed1ab_0 conda-forge

stanio 0.5.1 pyhd8ed1ab_0 conda-forge

statsmodels 0.14.4 py312h3a11e2b_0 conda-forge

sympy 1.13.3 pypyh2585a3b_103 conda-forge

tabulate 0.9.0 pyhd8ed1ab_1 conda-forge

tapi 1100.0.11 h9ce4665_0 conda-forge

tbb 2021.13.0 h37c8870_0 conda-forge

tbb-devel 2021.13.0 hf74753b_0 conda-forge

tblib 3.0.0 pyhd8ed1ab_0 conda-forge

tenacity 9.0.0 pyhd8ed1ab_0 conda-forge

terminado 0.18.1 pyh31c8845_0 conda-forge

threadpoolctl 3.5.0 pyhc1e730c_0 conda-forge

tiledb 2.24.2 h313d0e2_12 conda-forge

tinycss2 1.3.0 pyhd8ed1ab_0 conda-forge

tk 8.6.13 h1abcd95_1 conda-forge

tomli 2.0.2 pyhd8ed1ab_0 conda-forge

toolz 1.0.0 pyhd8ed1ab_0 conda-forge

tornado 6.4.1 py312hb553811_1 conda-forge

tqdm 4.66.5 pyhd8ed1ab_0 conda-forge

traitlets 5.14.3 pyhd8ed1ab_0 conda-forge

trollimage 1.25.0 py312h1171441_0 conda-forge

trollsift 0.5.1 pyhd8ed1ab_0 conda-forge

types-python-dateutil 2.9.0.20241003 pyhff2d567_0 conda-forge

typing-extensions 4.12.2 hd8ed1ab_0 conda-forge

typing_extensions 4.12.2 pyha770c72_0 conda-forge

typing_utils 0.1.0 pyhd8ed1ab_0 conda-forge

tzcode 2024b h00291cd_0 conda-forge

tzdata 2024b hc8b5060_0 conda-forge

tzfpy 0.15.6 py312h669792a_1 conda-forge

uc-micro-py 1.0.3 pyhd8ed1ab_0 conda-forge

udunits2 2.2.28 h516ac8c_3 conda-forge

unidecode 1.3.8 pyhd8ed1ab_0 conda-forge

uri-template 1.3.0 pyhd8ed1ab_0 conda-forge

uriparser 0.9.8 h6aefe2f_0 conda-forge

urllib3 2.2.3 pyhd8ed1ab_0 conda-forge

validators 0.22.0 pyhd8ed1ab_0 conda-forge

watchdog 5.0.3 py312hb553811_0 conda-forge

wcwidth 0.2.13 pyhd8ed1ab_0 conda-forge

webcolors 24.8.0 pyhd8ed1ab_0 conda-forge

webencodings 0.5.1 pyhd8ed1ab_2 conda-forge

webob 1.8.8 pyhd8ed1ab_0 conda-forge

websocket-client 1.8.0 pyhd8ed1ab_0 conda-forge

werkzeug 3.0.4 pyhd8ed1ab_0 conda-forge

wheel 0.44.0 pyhd8ed1ab_0 conda-forge

widgetsnbextension 4.0.13 pyhd8ed1ab_0 conda-forge

wradlib 2.1.1 pyhd8ed1ab_0 conda-forge

wrapt 1.16.0 py312hb553811_1 conda-forge

xarray 2024.9.0 pyhd8ed1ab_0 conda-forge

xarray-datatree 0.0.14 pyhd8ed1ab_0 conda-forge

xclim 0.52.2 pyhd8ed1ab_0 conda-forge

xerces-c 3.2.5 h197e74d_2 conda-forge

xlsx2csv 0.8.3 pyhd8ed1ab_0 conda-forge

xlsxwriter 3.2.0 pyhd8ed1ab_0 conda-forge

xmltodict 0.13.0 pyhd8ed1ab_0 conda-forge

xorg-libxau 1.0.11 h00291cd_1 conda-forge

xorg-libxdmcp 1.1.5 h00291cd_0 conda-forge

xradar 0.6.5 pyhd8ed1ab_0 conda-forge

xyzservices 2024.9.0 pyhd8ed1ab_0 conda-forge

xz 5.2.6 h775f41a_0 conda-forge

yamale 5.2.1 pyhca7485f_0 conda-forge

yaml 0.2.5 h0d85af4_2 conda-forge

yarl 1.13.1 py312hb553811_0 conda-forge

zarr 2.18.3 pyhd8ed1ab_0 conda-forge

zeromq 4.3.5 hb33e954_5 conda-forge

zict 3.0.0 pyhd8ed1ab_0 conda-forge

zipp 3.20.2 pyhd8ed1ab_0 conda-forge

zlib 1.3.1 hd23fc13_2 conda-forge

zstandard 0.23.0 py312h7122b0e_1 conda-forge

zstd 1.5.6 h915ae27_0 conda-forge

```

</details>

|

open

|

2024-11-21T09:19:33Z

|

2024-11-22T21:15:22Z

|

https://github.com/SciTools/cartopy/issues/2482

|

[

"Component: Geometry transforms"

] |

guidocioni

| 4

|

jonaswinkler/paperless-ng

|

django

| 1,548

|

[BUG] Consume stops after initial run

|

**Describe the bug**

I am importing documents from my scanner to the consume directory. I've noticed that files are not picked up until I restart the container (running in Docker). After a restart, all files present are consumed correctly. After that initial run, if I continue placing files in the consume directory, no more will be picked up for consumption.

**To Reproduce**

1. Stop Docker container

2. Place files in consume directory

3. Start Docker container

4. Watch them being consumed

5. Now place some additional files in the directory

6. Watch them being **not** consumed!

**Expected behavior**

All files placed in the directory should be consumed after a short delay.
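
To check independently whether newly placed files become visible inside the container at all, the consume directory can be polled by hand. The following is a minimal diagnostic sketch using only the standard library. It is not how paperless-ng detects files, and the function name `new_files` is an assumption made for illustration.

```python
import os
from typing import Set


def new_files(directory: str, seen: Set[str]) -> Set[str]:
    """Return names of regular files in `directory` not yet in `seen`.

    Hypothetical helper for manual diagnosis; `seen` is updated in place.
    """
    with os.scandir(directory) as entries:
        current = {entry.name for entry in entries if entry.is_file()}
    added = current - seen
    seen |= added  # remember them so the next call reports only newer files
    return added
```

Calling `new_files` repeatedly on the consume directory, with a short `time.sleep` between calls, would show whether files added after startup are listed by the filesystem as seen from inside the container.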

**Webserver logs**

I've posted the logs just before the last consume started. After `12:10:23 [Q] INFO recycled worker Process-1:13` I placed additional files in the directory which are not being picked up.

```

[2022-01-14 12:10:06,952] [INFO] [paperless.consumer] Consuming IMG_20220114_0003.pdf

[2022-01-14 12:10:16,126] [INFO] [paperless.handlers] Assigning correspondent EnBW to 2021-10-19 IMG_20220114_0002

[2022-01-14 12:10:16,155] [INFO] [paperless.handlers] Detected 2 potential document types, so we've opted for Rechnung

[2022-01-14 12:10:16,161] [INFO] [paperless.handlers] Assigning document type Rechnung to 2021-10-19 EnBW IMG_20220114_0002

[2022-01-14 12:10:16,187] [INFO] [paperless.handlers] Tagging "2021-10-19 EnBW IMG_20220114_0002" with "Vertrag"

[2022-01-14 12:10:17,271] [INFO] [paperless.consumer] Document 2021-10-19 EnBW IMG_20220114_0002 consumption finished

12:10:17 [Q] INFO Process-1:12 stopped doing work

12:10:17 [Q] INFO Processed [IMG_20220114_0002.pdf]

12:10:17 [Q] INFO recycled worker Process-1:12

12:10:17 [Q] INFO Process-1:14 ready for work at 579

12:10:17 [Q] INFO Process-1:14 processing [eighteen-colorado-potato-india]

12:10:17 [Q] INFO Process-1:14 stopped doing work

12:10:17 [Q] INFO Processed [eighteen-colorado-potato-india]

12:10:18 [Q] INFO recycled worker Process-1:14

12:10:18 [Q] INFO Process-1:15 ready for work at 581

[2022-01-14 12:10:22,528] [INFO] [paperless.handlers] Assigning correspondent DHBW Karlsruhe to 2022-01-14 IMG_20220114_0003

[2022-01-14 12:10:22,939] [INFO] [paperless.consumer] Document 2022-01-14 DHBW Karlsruhe IMG_20220114_0003 consumption finished

12:10:22 [Q] INFO Process-1:13 stopped doing work

12:10:23 [Q] INFO Processed [IMG_20220114_0003.pdf]

12:10:23 [Q] INFO recycled worker Process-1:13

12:10:23 [Q] INFO Process-1:16 ready for work at 589

[2022-01-14 12:10:56 +0000] [40] [CRITICAL] WORKER TIMEOUT (pid:45)

[2022-01-14 12:10:56 +0000] [40] [WARNING] Worker with pid 45 was terminated due to signal 6

12:19:59 [Q] INFO Enqueued 1

12:19:59 [Q] INFO Process-1 created a task from schedule [Check all e-mail accounts]

12:19:59 [Q] INFO Process-1:15 processing [fifteen-four-oregon-ceiling]

12:19:59 [Q] INFO Process-1:15 stopped doing work

12:19:59 [Q] INFO Processed [fifteen-four-oregon-ceiling]

12:19:59 [Q] INFO recycled worker Process-1:15

12:19:59 [Q] INFO Process-1:17 ready for work at 597

12:30:01 [Q] INFO Enqueued 1

12:30:01 [Q] INFO Process-1 created a task from schedule [Check all e-mail accounts]

12:30:01 [Q] INFO Process-1:16 processing [batman-iowa-sweet-berlin]

12:30:01 [Q] INFO Process-1:16 stopped doing work

12:30:01 [Q] INFO Processed [batman-iowa-sweet-berlin]

12:30:02 [Q] INFO recycled worker Process-1:16

12:30:02 [Q] INFO Process-1:18 ready for work at 599

12:39:33 [Q] INFO Enqueued 1

12:39:33 [Q] INFO Process-1 created a task from schedule [Check all e-mail accounts]

12:39:33 [Q] INFO Process-1:17 processing [timing-emma-football-monkey]

12:39:34 [Q] INFO Process-1:17 stopped doing work

12:39:34 [Q] INFO Processed [timing-emma-football-monkey]

12:39:34 [Q] INFO recycled worker Process-1:17

12:39:34 [Q] INFO Process-1:19 ready for work at 601

12:49:36 [Q] INFO Enqueued 1

12:49:36 [Q] INFO Process-1 created a task from schedule [Check all e-mail accounts]

12:49:36 [Q] INFO Process-1:18 processing [leopard-kansas-pip-orange]

12:49:36 [Q] INFO Process-1:18 stopped doing work

12:49:36 [Q] INFO Processed [leopard-kansas-pip-orange]

12:49:37 [Q] INFO recycled worker Process-1:18

12:49:37 [Q] INFO Process-1:20 ready for work at 603

```

**Relevant information**

- Running in Docker (on Kubernetes)

- 1.4.5

|

closed

|

2022-01-14T13:02:36Z

|

2022-01-20T09:41:10Z

|

https://github.com/jonaswinkler/paperless-ng/issues/1548

|

[] |

PhilippCh

| 2

|

sinaptik-ai/pandas-ai

|

data-visualization

| 1,488

|

Local LLM pandasai.json

|

### System Info

"name": "pandasai-all",

"version": "1.0.0",

MacOS (15.1.1)

The code is run directly with `poetry run`, not in Docker.

### 🐛 Describe the bug

I'm trying to use a local LLM, but it keeps defaulting to BambooLLM.

Regardless of which "llm" value I use, it defaults to BambooLLM!

Here is what the pandasai.json in the root directory looks like:

```

"llm": "LLM",

"llm_options": {

"model": "Llama-3.3-70B-Instruct",

"api_url": "http://localhost:9000/v1"

}

```
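
As a quick sanity check, independent of how pandasai itself reads the file, the sketch below parses a config file with the standard `json` module and returns the `llm` entries. The function name `read_llm_settings` and the top-level-object check are assumptions for illustration. If the file contained only the fragment as shown, without enclosing braces, `json.loads` would reject it.

```python
import json
from pathlib import Path
from typing import Any, Dict, Tuple


def read_llm_settings(path: str) -> Tuple[Any, Dict[str, Any]]:
    """Parse a JSON config file and return its "llm" and "llm_options" values.

    Hypothetical helper; this is not part of pandasai.
    """
    text = Path(path).read_text(encoding="utf-8")
    config = json.loads(text)  # raises json.JSONDecodeError on malformed JSON
    if not isinstance(config, dict):
        raise ValueError("top-level JSON value must be an object")
    return config.get("llm"), config.get("llm_options", {})
```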

This is the supported list:

```

__all__ = [

"LLM",

"BambooLLM",

"AzureOpenAI",

"OpenAI",

"GooglePalm",

"GoogleVertexAI",

"GoogleGemini",

"HuggingFaceTextGen",

"LangchainLLM",

"BedrockClaude",

"IBMwatsonx",

]

```

|

closed

|

2024-12-19T10:45:00Z

|

2025-01-20T10:09:51Z

|

https://github.com/sinaptik-ai/pandas-ai/issues/1488

|

[

"bug"

] |

ahadda5

| 7

|

slackapi/python-slack-sdk

|

asyncio

| 1,261

|

blocks/attachments as str for chat.* API calls should be clearly supported

|

The use case reported at https://github.com/slackapi/python-slack-sdk/pull/1259#issuecomment-1237007209 has been supported for a long time but it was **not by design**.

```python

client = WebClient(token="....")

client.chat_postMessage(text="fallback", blocks="{ JSON string here }")

```

The `blocks` and `attachments` arguments for chat.postMessage API etc. are supposed to be `Sequence[Block | Attachment | dict]` as of today. However, passing the whole blocks/attachments as a single str should be a relatively common use case. In future versions, the use case should be clearly covered in both type hints and its implementation.
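
To make the two input shapes concrete, here is a minimal sketch of a caller-side helper that accepts either a pre-serialized JSON string or a sequence of dicts. The name `normalize_blocks` is hypothetical and is not part of `slack_sdk`; it also ignores `Block` and `Attachment` model objects, which the real client would have to handle.

```python
import json
from typing import Any, Dict, Sequence, Union


def normalize_blocks(blocks: Union[str, Sequence[Dict[str, Any]]]) -> str:
    """Return `blocks` as a JSON string (hypothetical helper, not slack_sdk API)."""
    if isinstance(blocks, str):
        # Already serialized: check that it is a JSON array, then pass it through.
        parsed = json.loads(blocks)
        if not isinstance(parsed, list):
            raise ValueError("blocks JSON must be an array")
        return blocks
    # A sequence of plain dicts: serialize it once.
    return json.dumps(list(blocks))
```

The `str` check comes first because a Python `str` is itself a `Sequence`, so a type hint of `Sequence[...]` alone does not tell the two cases apart at runtime.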

### Category (place an `x` in each of the `[ ]`)

- [x] **slack_sdk.web.WebClient (sync/async)** (Web API client)

- [ ] **slack_sdk.webhook.WebhookClient (sync/async)** (Incoming Webhook, response_url sender)

- [ ] **slack_sdk.models** (UI component builders)

- [ ] **slack_sdk.oauth** (OAuth Flow Utilities)

- [ ] **slack_sdk.socket_mode** (Socket Mode client)

- [ ] **slack_sdk.audit_logs** (Audit Logs API client)

- [ ] **slack_sdk.scim** (SCIM API client)

- [ ] **slack_sdk.rtm** (RTM client)

- [ ] **slack_sdk.signature** (Request Signature Verifier)

### Requirements

Please read the [Contributing guidelines](https://github.com/slackapi/python-slack-sdk/blob/main/.github/contributing.md) and [Code of Conduct](https://slackhq.github.io/code-of-conduct) before creating this issue or pull request. By submitting, you are agreeing to those rules.

|

closed

|

2022-09-06T04:44:42Z

|

2022-09-06T06:41:40Z

|

https://github.com/slackapi/python-slack-sdk/issues/1261

|

[

"enhancement",

"web-client",

"Version: 3x"

] |

seratch

| 0

|

lk-geimfari/mimesis

|

pandas

| 1,583

|

Replace black with ruff.

|

Ruff would be a good fit for us.

|

open

|

2024-07-19T13:24:33Z

|

2024-07-19T13:25:11Z

|

https://github.com/lk-geimfari/mimesis/issues/1583

|

[] |

lk-geimfari

| 0

|

tensorflow/tensor2tensor

|

machine-learning

| 1,449

|

ImportError: No module named 'mesh_tensorflow.transformer'

|

### Description

Importing `t2t_trainer` is not working in `1.12.0`.

I have just updated my t2t version to `1.12.0` and noticed that I can no longer import `t2t_trainer`, as I am getting

> `ImportError: No module named 'mesh_tensorflow.transformer'`

which was not the case in `1.11.0`.

...

### Reproduce

```python

from tensor2tensor.bin import t2t_trainer

if __name__ == '__main__':

t2t_trainer.main(None)

```

### Stack trace

```

Traceback (most recent call last):

File "/home/sfalk/tmp/pycharm_project_265/asr/punctuation/test.py", line 1, in <module>

from tensor2tensor.bin import t2t_trainer

File "/home/sfalk/miniconda3/envs/t2t/lib/python3.5/site-packages/tensor2tensor/bin/t2t_trainer.py", line 24, in <module>

from tensor2tensor import models # pylint: disable=unused-import

File "/home/sfalk/miniconda3/envs/t2t/lib/python3.5/site-packages/tensor2tensor/models/__init__.py", line 35, in <module>

from tensor2tensor.models import mtf_transformer2

File "/home/sfalk/miniconda3/envs/t2t/lib/python3.5/site-packages/tensor2tensor/models/mtf_transformer2.py", line 23, in <module>

from mesh_tensorflow.transformer import moe

ImportError: No module named 'mesh_tensorflow.transformer'

```

### Environment information

```

OS: Linux 4.15.0-45-generic #48~16.04.1-Ubuntu SMP Tue Jan 29 18:03:48 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

$ pip freeze | grep tensor

mesh-tensorflow==0.0.4

tensor2tensor==1.12.0

tensorboard==1.12.0

tensorflow-gpu==1.12.0

tensorflow-metadata==0.9.0

tensorflow-probability==0.5.0

$ python -V

Python 3.5.6 :: Anaconda, Inc.

```

|

closed

|

2019-02-13T08:41:16Z

|

2019-02-13T08:45:50Z

|

https://github.com/tensorflow/tensor2tensor/issues/1449

|

[] |

stefan-falk

| 1

|

microsoft/nni

|

machine-learning

| 5,431

|

Can not prune model TypeError: 'model' object is not iterable

|

**Describe the issue**:

**Environment**:

- NNI version: 2.6.1

- Training service (local|remote|pai|aml|etc):

- Client OS:

- Server OS (for remote mode only):

- Python version:

- PyTorch/TensorFlow version:

- Is conda/virtualenv/venv used?:

- Is running in Docker?:

**Configuration**:

- Experiment config (remember to remove secrets!):

- Search space:

**Log message**:

- nnimanager.log:

- dispatcher.log:

- nnictl stdout and stderr:

<!--

Where can you find the log files:

LOG: https://github.com/microsoft/nni/blob/master/docs/en_US/Tutorial/HowToDebug.md#experiment-root-director

STDOUT/STDERR: https://nni.readthedocs.io/en/stable/reference/nnictl.html#nnictl-log-stdout

-->

**How to reproduce it?**:

I have some simple model:

```

class get_model(nn.Module):

def __init__(self, args, num_channel=3, num_class=40, **kwargs):

super(get_model, self).__init__()

self.args = args

self.bn1 = nn.BatchNorm2d(64)

self.bn2 = nn.BatchNorm2d(64)

self.bn3 = nn.BatchNorm2d(128)

self.bn4 = nn.BatchNorm2d(256)

self.bn5 = nn.BatchNorm1d(args.emb_dims)

self.conv1 = nn.Sequential(nn.Conv2d(num_channel*2, 64, kernel_size=1, bias=False),

self.bn1,

nn.LeakyReLU(negative_slope=0.2))

self.conv2 = nn.Sequential(nn.Conv2d(64*2, 64, kernel_size=1, bias=False),

self.bn2,

nn.LeakyReLU(negative_slope=0.2))

self.conv3 = nn.Sequential(nn.Conv2d(64*2, 128, kernel_size=1, bias=False),

self.bn3,

nn.LeakyReLU(negative_slope=0.2))

self.conv4 = nn.Sequential(nn.Conv2d(128*2, 256, kernel_size=1, bias=False),

self.bn4,

nn.LeakyReLU(negative_slope=0.2))

self.conv5 = nn.Sequential(nn.Conv1d(512, args.emb_dims, kernel_size=1, bias=False),

self.bn5,

nn.LeakyReLU(negative_slope=0.2))

self.linear1 = nn.Linear(args.emb_dims*2, 512, bias=False)

self.bn6 = nn.BatchNorm1d(512)

self.dp1 = nn.Dropout(p=args.dropout)

self.linear2 = nn.Linear(512, 256)

self.bn7 = nn.BatchNorm1d(256)

self.dp2 = nn.Dropout(p=args.dropout)

self.linear3 = nn.Linear(256, num_class)

def forward(self, x):

batch_size = x.size()[0]

x = get_graph_feature(x, k=self.args.k)

x = self.conv1(x)

x1 = x.max(dim=-1, keepdim=False)[0]

x = get_graph_feature(x1, k=self.args.k)

x = self.conv2(x)

x2 = x.max(dim=-1, keepdim=False)[0]

x = get_graph_feature(x2, k=self.args.k)

x = self.conv3(x)

x3 = x.max(dim=-1, keepdim=False)[0]

x = get_graph_feature(x3, k=self.args.k)

x = self.conv4(x)

x4 = x.max(dim=-1, keepdim=False)[0]

x = torch.cat((x1, x2, x3, x4), dim=1)

x = self.conv5(x)

x1 = F.adaptive_max_pool1d(x, 1).view(batch_size, -1)

x2 = F.adaptive_avg_pool1d(x, 1).view(batch_size, -1)

x = torch.cat((x1, x2), 1)

x = F.leaky_relu(self.bn6(self.linear1(x)), negative_slope=0.2)

x = self.dp1(x)

x = F.leaky_relu(self.bn7(self.linear2(x)), negative_slope=0.2)

x = self.dp2(x)

x = self.linear3(x)

return x

```

And I want to prune with different pruners, for example:

```

from nni.algorithms.compression.pytorch.pruning import L2NormPruner

pruner = L2NormPruner(model, config_list)

masked_model, masks = pruner.compress()

```

But get the error:

```

Traceback (most recent call last):

File "nni_optim.py", line 73, in <module>

masked_model, masks = pruner.compress()

TypeError: 'model' object is not iterable

```

However, the docs say that the model should be a `torch.nn.Module`.

What is the issue with my model?

|

closed

|

2023-03-10T07:29:46Z

|

2023-03-13T07:47:34Z

|

https://github.com/microsoft/nni/issues/5431

|

[] |

Kracozebr

| 5

|

TheAlgorithms/Python

|

python

| 12,217

|

Add a index priority queue to Data Structures

|

### Feature description

As there is no IPQ implementation in the standard python library, I wish to add one to TheAlgorithms.
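For context, a minimal sketch of what such an indexed priority queue might look like: a binary min-heap plus a position map, so that the priority of an existing key can be changed in O(log n). The class name `IndexedMinPQ` and its method names are assumptions made for illustration, not an existing implementation in the repository.

```python
from typing import Dict, Hashable, List, Tuple


class IndexedMinPQ:
    """Binary min-heap keyed by hashable items, with O(log n) priority updates."""

    def __init__(self) -> None:
        self._heap: List[list] = []          # entries are [priority, key]
        self._pos: Dict[Hashable, int] = {}  # key -> index in self._heap

    def __len__(self) -> int:
        return len(self._heap)

    def __contains__(self, key: Hashable) -> bool:
        return key in self._pos

    def push(self, key: Hashable, priority: float) -> None:
        """Insert a new key, or update its priority if it is already queued."""
        if key in self._pos:
            self.update(key, priority)
            return
        self._heap.append([priority, key])
        self._pos[key] = len(self._heap) - 1
        self._sift_up(len(self._heap) - 1)

    def update(self, key: Hashable, priority: float) -> None:
        """Change the priority of an existing key and restore heap order."""
        i = self._pos[key]
        old = self._heap[i][0]
        self._heap[i][0] = priority
        if priority < old:
            self._sift_up(i)
        else:
            self._sift_down(i)

    def pop(self) -> Tuple[Hashable, float]:
        """Remove and return (key, priority) for the smallest priority."""
        top = self._heap[0]
        last = self._heap.pop()
        del self._pos[top[1]]
        if self._heap:
            # Move the last entry to the root and sift it down.
            self._heap[0] = last
            self._pos[last[1]] = 0
            self._sift_down(0)
        return top[1], top[0]

    def _swap(self, i: int, j: int) -> None:
        h = self._heap
        h[i], h[j] = h[j], h[i]
        self._pos[h[i][1]] = i
        self._pos[h[j][1]] = j

    def _sift_up(self, i: int) -> None:
        while i > 0:
            parent = (i - 1) // 2
            if self._heap[i][0] >= self._heap[parent][0]:
                break
            self._swap(i, parent)
            i = parent

    def _sift_down(self, i: int) -> None:
        n = len(self._heap)
        while True:
            smallest = i
            for child in (2 * i + 1, 2 * i + 2):
                if child < n and self._heap[child][0] < self._heap[smallest][0]:
                    smallest = child
            if smallest == i:
                return
            self._swap(i, smallest)
            i = smallest


pq = IndexedMinPQ()
pq.push("a", 5)
pq.push("b", 3)
pq.push("c", 4)
pq.update("a", 1)  # "a" now has the smallest priority
order = [pq.pop() for _ in range(3)]
```

The position map is what distinguishes this from `heapq`: without it, finding a key to update would take O(n).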

|

closed

|

2024-10-21T04:59:16Z

|

2024-10-21T05:03:04Z

|

https://github.com/TheAlgorithms/Python/issues/12217

|

[

"enhancement"

] |

alessadroc

| 1

|

flairNLP/flair

|

nlp

| 2,859

|

module 'conllu' has no attribute TokenList

|

This did not happen until today. I have been using this basic code for 6 months; this bug is new and never appeared before today.

Please resolve quickly

|

closed

|

2022-07-12T18:03:37Z

|

2022-11-14T15:03:52Z

|

https://github.com/flairNLP/flair/issues/2859

|

[

"bug"

] |

yash-rathore

| 10

|

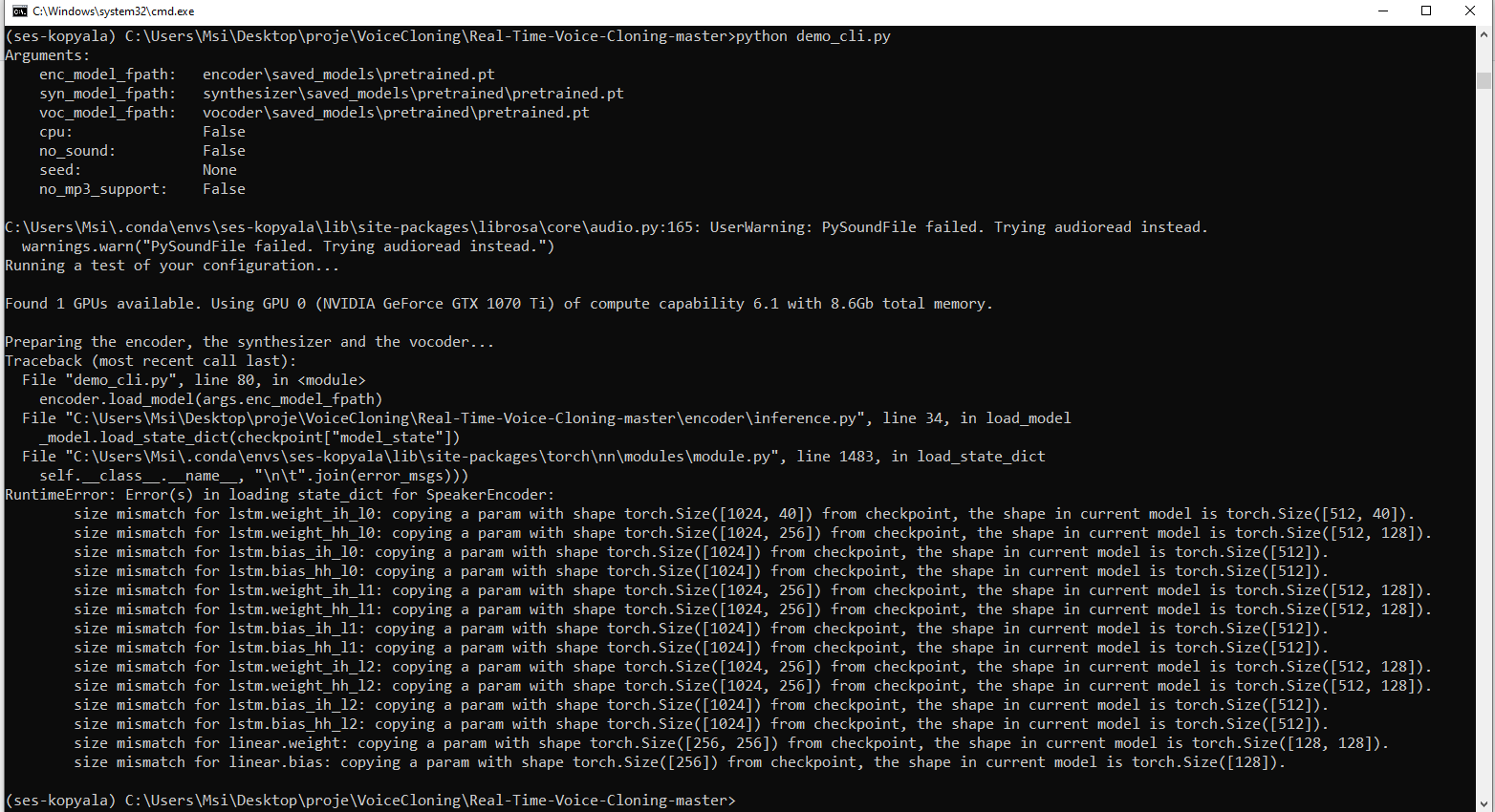

CorentinJ/Real-Time-Voice-Cloning

|

deep-learning

| 992

|

Error in loading state_dict for SpeakerEncoder: size mismatch

|

Hi! I trained a synthesizer a month ago and I could synthesize my voice, too mechanical though, but now I got this error. How can I fix this?

|

closed

|

2022-01-23T15:40:31Z

|

2022-01-28T19:41:32Z

|

https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/992

|

[] |

EkinUstundag

| 1

|

openapi-generators/openapi-python-client

|

fastapi

| 338

|

Add support for recursively defined schemas

|

**Describe the bug**

I tried to create a Python client from an OpenAPI spec with the command `openapi-python-client generate --path secret_server_openapi3.json`. I then got multiple warnings:

- invalid data in items of array settings

- Could not find reference in parsed models or enums

- Cannot parse response for status code 200, response will be ommitted from generated client

I looked at the schemas responsible for the errors, and they all have one thing in common: they either reference another schema that is recursively defined, or they reference themselves.

For example one of the problematic schemas:

```

{

"SecretDependencySetting": {

"type": "object",

"properties": {

"active": {

"type": "boolean",

"description": "Indicates the setting is active."

},

"childSettings": {

"type": "array",

"description": "The Child Settings that would be used for list of options.",

"items": {

"$ref": "#/components/schemas/SecretDependencySetting"

}

}

},

"description": "Secret Dependency Settings - Mostly used internally"

}

}

```

**To Reproduce**

Define a schema recursively and then try to create a client from it.

**Expected behavior**

The software can also parse a recursively defined schema.
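To illustrate why self-references need special handling, here is a minimal sketch of walking `$ref` links with a "seen" set so that a recursive schema terminates instead of looping forever. The function names `collect_refs` and `_walk` are hypothetical and this is not how openapi-python-client is implemented; it only assumes refs of the form `#/components/schemas/<Name>`, as in the example above.

```python
from typing import Any, Dict, Optional, Set

REF_PREFIX = "#/components/schemas/"


def collect_refs(name: str, schemas: Dict[str, Any],
                 seen: Optional[Set[str]] = None) -> Set[str]:
    """Return every schema name reachable from `name`, tolerating cycles."""
    seen = set() if seen is None else seen
    if name in seen:
        return seen  # already visited: this is what breaks the recursion
    seen.add(name)
    _walk(schemas[name], schemas, seen)
    return seen


def _walk(node: Any, schemas: Dict[str, Any], seen: Set[str]) -> None:
    """Visit nested dicts and lists, following local $ref pointers."""
    if isinstance(node, dict):
        ref = node.get("$ref")
        if isinstance(ref, str) and ref.startswith(REF_PREFIX):
            collect_refs(ref[len(REF_PREFIX):], schemas, seen)
        for value in node.values():
            _walk(value, schemas, seen)
    elif isinstance(node, list):
        for item in node:
            _walk(item, schemas, seen)


schemas = {
    "SecretDependencySetting": {
        "type": "object",
        "properties": {
            "active": {"type": "boolean"},
            "childSettings": {
                "type": "array",
                "items": {"$ref": "#/components/schemas/SecretDependencySetting"},
            },
        },
    }
}

reachable = collect_refs("SecretDependencySetting", schemas)
```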

**OpenAPI Spec File**

It's 66000 Lines, so I'm not sure if this will help you, or github will allow me to post it :smile:

Just ask if you need specific parts of the spec, aside from what I already provided above.

**Desktop (please complete the following information):**

- OS: Linux Manjaro

- Python Version: 3.9.1

- openapi-python-client version 0.7.3

|

closed

|

2021-02-17T13:27:58Z

|

2022-11-12T17:49:54Z

|

https://github.com/openapi-generators/openapi-python-client/issues/338

|

[

"✨ enhancement",

"🐲 here there be dragons"

] |

sp-schoen

| 13

|

WZMIAOMIAO/deep-learning-for-image-processing

|

deep-learning

| 730

|

FileNotFoundError: [Errno 2] No such file or directory: './save_weights/ssd300-14.pth'

|

Could anyone share the trained ssd300-14.pth?

|

open

|

2023-03-30T09:50:36Z

|

2023-03-30T09:50:36Z

|

https://github.com/WZMIAOMIAO/deep-learning-for-image-processing/issues/730

|

[] |

ANXIOUS-7

| 0

|

roboflow/supervision

|

tensorflow

| 1,044

|

[ByteTrack] add `minimum_consecutive_frames` to limit the number of falsely assigned tracker IDs

|

### Description

Expand the `ByteTrack` API by adding the `minimum_consecutive_frames` argument.

It will specify for how many consecutive frames an object must be detected before it is assigned a tracker ID. This will help prevent the creation of accidental tracker IDs in cases of false detection or double detection. Until a detection has been seen for `minimum_consecutive_frames` frames, it should be assigned `-1`.

### API

```python

class ByteTrack:

def __init__(

self,

track_activation_threshold: float = 0.25,

lost_track_buffer: int = 30,

minimum_matching_threshold: float = 0.8,

frame_rate: int = 30,

minimum_consecutive_frames: int = 1

):

pass

```
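A minimal sketch of the gating logic described above, kept separate from any real tracker. It assumes detections have already been associated with a candidate track key; `ConsecutiveFrameGate` and its `update` method are hypothetical names for illustration, not part of the `supervision` API.

```python
from typing import Dict, Hashable, Iterable, List


class ConsecutiveFrameGate:
    """Assign a tracker ID only after N consecutive frames; -1 until then."""

    def __init__(self, minimum_consecutive_frames: int = 1) -> None:
        self.minimum_consecutive_frames = minimum_consecutive_frames
        self._streak: Dict[Hashable, int] = {}    # candidate key -> consecutive hits
        self._assigned: Dict[Hashable, int] = {}  # candidate key -> tracker ID
        self._next_id = 1

    def update(self, detected: Iterable[Hashable]) -> List[int]:
        """Process one frame and return a tracker ID (or -1) per detection."""
        detected = list(detected)
        present = set(detected)
        # A missed frame resets the streak of any candidate not seen now.
        for key in list(self._streak):
            if key not in present:
                del self._streak[key]
        ids = []
        for key in detected:
            self._streak[key] = self._streak.get(key, 0) + 1
            confirmed = self._streak[key] >= self.minimum_consecutive_frames
            if key not in self._assigned and confirmed:
                self._assigned[key] = self._next_id
                self._next_id += 1
            ids.append(self._assigned.get(key, -1))
        return ids


gate = ConsecutiveFrameGate(minimum_consecutive_frames=3)
frames = [["a"], ["a"], ["a", "b"], ["a"], ["a", "b"]]
ids_per_frame = [gate.update(frame) for frame in frames]
```

In this run, `"a"` receives an ID on its third consecutive frame, while `"b"` never does because its streak is interrupted.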

### Additional

- Note: Please share a Google Colab with minimal code to test the new feature. We know it's additional work, but it will speed up the review process. The reviewer must test each change. Setting up a local environment to do this is time-consuming. Please ensure that Google Colab can be accessed without any issues (make it public). Thank you! 🙏🏻

|

closed

|

2024-03-25T15:29:06Z

|

2024-04-24T22:25:19Z

|

https://github.com/roboflow/supervision/issues/1044

|

[

"enhancement",

"api:tracker"

] |

SkalskiP

| 3

|

voila-dashboards/voila

|

jupyter

| 940

|

UI Tests: Update to the new Galata setup

|

<!--

Welcome! Thanks for thinking of a way to improve Voilà. If this solves a problem for you, then it probably solves that problem for lots of people! So the whole community will benefit from this request.

Before creating a new feature request please search the issues for relevant feature requests.

-->

### Problem

The new galata setup now lives in the core JupyterLab repo: https://github.com/jupyterlab/jupyterlab/tree/master/galata

We should update to it, as it comes with many improvements.

### Proposed Solution

Update the `ui-tests` setup here: https://github.com/voila-dashboards/voila/tree/master/ui-tests

Following the instructions here: https://github.com/jupyterlab/jupyterlab/tree/master/galata

<!-- Provide a clear and concise description of a way to accomplish what you want. For example:

* Add an option so that when [...] [...] will happen

-->

### Additional context

Galata was moved to the core lab repo in https://github.com/jupyterlab/jupyterlab/pull/10796

|

closed

|

2021-09-02T06:58:02Z

|

2021-09-03T16:02:02Z

|

https://github.com/voila-dashboards/voila/issues/940

|

[

"maintenance"

] |

jtpio

| 1

|

HumanSignal/labelImg

|

deep-learning

| 180

|

Image in portrait mode

|

I have some images that were taken in portrait mode, like this:

https://imgur.com/baVUII8

But after I load the image, it becomes landscape mode

https://imgur.com/EQUGKIW

In landscape mode, I can't label the eyes and nose.

This happens on both Win10 and Ubuntu 16.04 + Python 2 + Qt4.

How can I fix this problem?

Thanks for your help.

Best Wishes

|

closed

|

2017-10-23T06:25:10Z

|

2017-10-23T07:54:22Z

|

https://github.com/HumanSignal/labelImg/issues/180

|

[] |

nerv3890

| 1

|

mkhorasani/Streamlit-Authenticator

|

streamlit

| 10

|

Password modification

|

Great job !

Do you plan to add a "forgot password" option ?

|

closed

|

2022-05-16T13:32:30Z

|

2022-06-25T15:15:33Z

|

https://github.com/mkhorasani/Streamlit-Authenticator/issues/10

|

[] |

axel-prl-mrtg

| 2

|

neuml/txtai

|

nlp

| 41

|

Enhance API to fully support all txtai functionality

|

Currently, the API supports a subset of the functionality in the embeddings module. Fully support embeddings and add methods for QA extraction and labeling.

This will enable network-based implementations of txtai in other programming languages.

|

closed

|

2020-11-20T17:31:08Z

|

2021-05-13T15:04:12Z

|

https://github.com/neuml/txtai/issues/41

|

[] |

davidmezzetti

| 0

|

quantmind/pulsar

|

asyncio

| 195

|

Deprecate async function and replace with ensure_future

|

First step towards new syntax

|

closed

|

2016-01-30T20:38:38Z

|

2016-03-17T08:01:08Z

|

https://github.com/quantmind/pulsar/issues/195

|

[

"design decision",

"enhancement"

] |

lsbardel

| 1

|

learning-at-home/hivemind

|

asyncio

| 507

|

[BUG] Tests for compression fail on GPU servers with bitsandbytes installed

|

**Describe the bug**

While working on https://github.com/learning-at-home/hivemind/pull/490, I found that if I have bitsandbytes installed in a GPU-enabled environment, I get an error when running [test_adaptive_compression](https://github.com/learning-at-home/hivemind/blob/master/tests/test_compression.py#L152), which happens to be the only test that uses TrainingAverager under the hood.

I dug into it a bit, and the failure seems to be caused by `CUDA error: initialization error` from PyTorch, which AFAIK emerges when we're trying to initialize the CUDA context twice. More specifically, it appears when we are trying to initialize the optimizer states in TrainingAverager. My guess is that the context is created when importing bitsandbytes first and then when using something (anything?) from GPU-enabled PyTorch later. We are sunsetting the support for TrainingAverager anyway, but to me it's not obvious how to correctly migrate from this class in a given test.

**To Reproduce**

Install the environment in a GPU-enabled system, try running `CUDA_LAUNCH_BLOCKING=1 pytest -s --full-trace tests/test_compression.py`. Then uninstall bitsandbytes, comment out the parts in test_compression that rely on it (mostly `test_tensor_compression`), run the same command.

**Environment**

* Python 3.8.8

* [Commit 131f82c](https://github.com/learning-at-home/hivemind/commit/131f82c97ea67510d552bb7a68138ad27cbfa5d4)

* PyTorch 1.12.1, bitsandbytes 0.32.3

* NVIDIA RTX 2080 Ti GPU

|

open

|

2022-09-10T14:59:04Z

|

2022-09-10T14:59:04Z

|

https://github.com/learning-at-home/hivemind/issues/507

|

[

"bug",

"ci"

] |

mryab

| 0

|

dynaconf/dynaconf

|

fastapi

| 772

|

[bug] Setting auto_cast in instance options is ignored

|

**Affected version:** 3.1.9

**Describe the bug**

Setting **auto_cast** option inside `config.py` to **False** is ignored, even though the docs say otherwise.

**To Reproduce**

Add **auto_cast** to Dynaconf initialization.

```

from dynaconf import Dynaconf

settings = Dynaconf(

settings_files=["settings.toml", ".secrets.toml"],

auto_cast=False,

**more_options

)

```

Add the following to the `settings.toml`:

```

foo="@int 32"

```

Check value of **foo** in your `program.py`:

```

from config import settings

foo = settings.get('foo', False)

print(f"{foo=}", type(foo))

```

Executing `program.py` will return:

```

foo=32 <class 'int'>

```

when it should return:

```

foo='@int 32' <class 'str'>

```

Running `program.py` with **AUTO_CAST_FOR_DYNACONF=false** works as expected.

|

closed

|

2022-07-22T15:43:41Z

|

2022-09-22T12:49:36Z

|

https://github.com/dynaconf/dynaconf/issues/772

|

[

"bug"

] |

pvmm

| 1

|

Lightning-AI/pytorch-lightning

|

deep-learning

| 20,511

|

Cannot import OptimizerLRSchedulerConfig or OptimizerLRSchedulerConfigDict

|

### Bug description

Since I bumped `lightning` to `2.5.0`, `configure_optimizers` has been failing the type checker. I saw that `OptimizerLRSchedulerConfig` had been replaced with `OptimizerLRSchedulerConfigDict`, but I cannot import either of them.

### What version are you seeing the problem on?

v2.5

### How to reproduce the bug

```python

import torch

import pytorch_lightning as pl

from lightning.pytorch.utilities.types import OptimizerLRSchedulerConfigDict

from torch.optim.lr_scheduler import ReduceLROnPlateau

class Model(pl.LightningModule):

...

def configure_optimizers(self) -> OptimizerLRSchedulerConfigDict:

optimizer = torch.optim.Adam(self.parameters(), lr=1e-3)

scheduler = ReduceLROnPlateau(

optimizer, mode="min", factor=0.1, patience=20, min_lr=1e-6

)

return {

"optimizer": optimizer,

"lr_scheduler": {

"scheduler": scheduler,

"monitor": "val_loss",

"interval": "epoch",

"frequency": 1,

},

}

```

### Error messages and logs

```

In [2]: import lightning

In [3]: lightning.__version__

Out[3]: '2.5.0'

In [4]: from lightning.pytorch.utilities.types import OptimizerLRSchedulerConfigDict

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

Cell In[4], line 1

----> 1 from lightning.pytorch.utilities.types import OptimizerLRSchedulerConfigDict

ImportError: cannot import name 'OptimizerLRSchedulerConfigDict' from 'lightning.pytorch.utilities.types' (/home/test/.venv/lib/python3.11/site-packages/lightning/pytorch/utilities/types.py)

In [5]: from lightning.pytorch.utilities.types import OptimizerLRSchedulerConfig

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

Cell In[5], line 1

----> 1 from lightning.pytorch.utilities.types import OptimizerLRSchedulerConfig

ImportError: cannot import name 'OptimizerLRSchedulerConfig' from 'lightning.pytorch.utilities.types' (/home/test/.venv/lib/python3.11/site-packages/lightning/pytorch/utilities/types.py)

```

### Environment

<details>

<summary>Current environment</summary>

```

#- PyTorch Lightning Version (e.g., 2.5.0):

#- PyTorch Version (e.g., 2.5):

#- Python version (e.g., 3.12):

#- OS (e.g., Linux):

#- CUDA/cuDNN version:

#- GPU models and configuration:

#- How you installed Lightning(`conda`, `pip`, source):

```

</details>

### More info

_No response_

|

closed

|

2024-12-20T15:18:27Z

|

2024-12-21T01:42:58Z

|

https://github.com/Lightning-AI/pytorch-lightning/issues/20511

|

[

"bug",

"ver: 2.5.x"

] |

zordi-youngsun

| 4

|

iterative/dvc

|

machine-learning

| 10,452

|

Getting timeout in DVC pull

|

I have a large file, around 3 GB.

When I try to run dvc pull from inside a Docker environment, I get the error below.

ERROR: unexpected error - The difference between the request time and the current time is too large.: An error occurred (RequestTimeTooSkewed) when calling the GetObject operation: The difference between the request time and the current time is too large.

Having any troubles? Hit us up at https://dvc.org/support, we are always happy to help!

Can you help with this?

|

closed

|

2024-06-07T12:12:01Z

|

2025-01-12T15:10:47Z

|

https://github.com/iterative/dvc/issues/10452

|

[

"awaiting response",

"A: data-sync"

] |

bhaswa

| 6

|

minimaxir/textgenrnn

|

tensorflow

| 161

|

What version(s) of tensorflow are supported?

|

open

|

2019-12-14T03:32:11Z

|

2019-12-17T08:37:26Z

|

https://github.com/minimaxir/textgenrnn/issues/161

|

[] |

marcusturewicz

| 1

|

|

ymcui/Chinese-LLaMA-Alpaca

|

nlp

| 642

|

Question about why LoRA parameters do not need to be set when fine-tuning the Alpaca-7B-Plus model with LoRA

|

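### Items that must be checked before submitting

- [X] Make sure you are using the latest code from the repository (git pull); some issues have already been resolved and fixed.

- [X] Because the dependencies are updated frequently, make sure you follow the relevant steps in the [Wiki](https://github.com/ymcui/Chinese-LLaMA-Alpaca/wiki).

- [X] I have read the [FAQ section](https://github.com/ymcui/Chinese-LLaMA-Alpaca/wiki/常见问题) and searched the issues, and found no similar problem or solution.

- [X] Third-party plugin problems: for example [llama.cpp](https://github.com/ggerganov/llama.cpp), [text-generation-webui](https://github.com/oobabooga/text-generation-webui), [LlamaChat](https://github.com/alexrozanski/LlamaChat), etc.; it is also recommended to look for solutions in the corresponding project.

- [X] Model correctness check: be sure to check the model against [SHA256.md](https://github.com/ymcui/Chinese-LLaMA-Alpaca/blob/main/SHA256.md); results and normal operation cannot be guaranteed if the model is wrong.

### Issue type

Model training and fine-tuning

### Base model

Alpaca-Plus-7B

### Operating system

Linux

### Detailed description of the problem

<img width="700" alt="image" src="https://github.com/ymcui/Chinese-LLaMA-Alpaca/assets/108610753/8ddc7cb5-9ae1-4ffb-a5e3-69f6ac9d7432">

1. I trained the Chinese-Alpaca-7B-Plus model with the commands you provided. After fine-tuning, some of the longer sentences are remembered, while others are forgotten. The advice I found online is to increase cut_off_length and to increase the LoRA weights, but your guide says that fine-tuning Chinese-Alpaca-7B-Plus does not require setting LoRA weights. Why is that?

2. I trained on two A5000 GPUs (24 GB). During training, deepspeed reported an OverFlow while computing the loss, yet the results were still fairly satisfactory when I tested them. Can the OverFlow during loss computation simply be ignored?

3. Testing the trained model shows that, with LoRA, some of the longer answers are remembered selectively: for some questions the answer matches the training set exactly, while for others it is clearly truncated. How should this be addressed? I would like the answers to be as precise as possible, ideally identical to the answers in my training set.

Thank you very much for the project and the guide; they have been a great help for my work!

### Dependencies (must be provided for code-related issues)

_No response_

### Run logs or screenshots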

_No response_

|

closed

|

2023-06-20T05:28:01Z

|

2023-06-27T23:56:01Z

|

https://github.com/ymcui/Chinese-LLaMA-Alpaca/issues/642

|

[

"stale"

] |

jjyu-ustc

| 2

|

marshmallow-code/marshmallow-sqlalchemy

|

sqlalchemy

| 232

|

Issue with primary keys that are also foreign keys

|

I have a case where marshmallow-sqlalchemy causes an SQLAlchemy FlushError at session commit time.

This is the error I get:

```

sqlalchemy.orm.exc.FlushError: New instance <Parent at 0x7f790f4832b0> with identity key (<class '__main__.Parent'>, (1,), None) conflicts with persistent instance <Parent at 0x7f790f4830f0>

```

My case is that of a Parent class/table with a primary key that also is a foreign key to a Child class/table.

The code that triggers the error is the following:

```py

parent = Session.query(Parent).one()

json_data = {"child": {"id": 1, "name": "new name"}}

with Session.no_autoflush:

instance = ParentSchema().load(json_data)

Session.add(instance.data)

Session.commit() # -> FlushError

```

`Session.query(Parent).one()` loads the parent object from the database and associates it with the session. `ParentSchema().load()` doesn't load the parent object from the database. Instead it creates a new object. And that new object has the same identity as the parent object that was loaded by `Session.query(Parent).one()`.

I tend to think that the problem is in [`ModelSchema.get_instance()`](https://github.com/marshmallow-code/marshmallow-sqlalchemy/blob/c46150667b98297a034dfe08582659129f9f9926/src/marshmallow_sqlalchemy/schema.py#L170-L182), which fails to load the instance from the database and returns `None` instead.

This is the full test-case: https://gist.github.com/elemoine/dcd0475acb26cdbf827015c8fae744ba. The code that initially triggered this issue in my application is more complex than this. This test-case is the minimal code reproducing the issue that I've been able to come up with.

|

closed

|

2019-08-05T15:18:31Z

|

2025-01-12T05:37:10Z

|

https://github.com/marshmallow-code/marshmallow-sqlalchemy/issues/232

|

[] |

elemoine

| 3

|

dmlc/gluon-cv

|

computer-vision

| 865

|

Any channel pruning examples?

|

Hi there, I'm trying some channel pruning ideas in mxnet.

However, it's hard to find simple tutorials or examples to start with.

So are there any basic examples showing how to do channel pruning (freezing) during training?

An example of channel pruning is **Learning Efficient Convolutional Networks through Network Slimming** [arXiv](arxiv.org/abs/1708.06519) [PyTorch code](https://github.com/foolwood/pytorch-slimming).

And the example of channel freezing is **Soft Filter Pruning for Accelerating Deep Convolutional Neural Networks** [arXiv](arxiv.org/abs/1808.06866) [PyTorch code](https://github.com/he-y/soft-filter-pruning).

By channel pruning I mean eliminating some of the neurons (channels) during training.

By channel freezing I mean zero-masking the data (in forward) and the gradient (in backward) of part of the neurons during training.

I also raised a discussion here <https://discuss.gluon.ai/t/topic/13395>.

I just migrated to MXNet from PyTorch, so my main problem is that I'm not very familiar with the API. For example, I don't know how to access the data and gradient of model parameters.

____

Update-0:

I started to implement it myself. In the documentation of `Parameter` (<https://mxnet.incubator.apache.org/_modules/mxnet/gluon/parameter.html>), there is only the `set_data()` method. There is no `set_grad()` method, which I could use to freeze some of the parameters by zeroing their gradients.

So how can I modify the parameter gradient manually?

|

closed

|

2019-07-14T09:50:16Z

|

2021-05-24T07:52:30Z

|

https://github.com/dmlc/gluon-cv/issues/865

|

[

"Stale"

] |

zeakey

| 1

|

geopandas/geopandas

|

pandas

| 3,512

|

BUG: to_arrow conversion should write crs as PROJJSON object and not string.

|

- [x] I have checked that this issue has not already been reported.

- [x] I have confirmed this bug exists on the latest version of geopandas.

- [ ] (optional) I have confirmed this bug exists on the main branch of geopandas.

---

#### Code Sample, a copy-pastable example

```python

import geopandas

import nanoarrow as na

df = geopandas.GeoDataFrame({"geometry": geopandas.GeoSeries.from_wkt(["POINT (0 1)"], crs="OGC:CRS84")})

na.Array(df.to_arrow()).schema.field(0).metadata

#> <nanoarrow._schema.SchemaMetadata>

#> - b'ARROW:extension:name': b'geoarrow.wkb'

#> - b'ARROW:extension:metadata': b'{"crs": "{\\"$schema\\":\\"https://proj.org/sch

```

#### Problem description

The `"crs"` field here is PROJJSON as a string within JSON (it should be a JSON object!). I suspect this is because somewhere there is a `to_json()` that maybe should be a `to_json_dict()`.

cc @jorisvandenbossche @kylebarron

#### Expected Output

#> - b'ARROW:extension:metadata': b'{"crs": {"$schema":"https://proj.org/sch

(i.e., value of CRS is a JSON object and not a string)
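The difference can be reproduced with the standard library alone. In this sketch, `projjson` is an abbreviated stand-in dict (an assumption for illustration, not real PROJJSON output), and the two `json.dumps` calls mimic what happens when the CRS is serialized twice versus embedded as an object.

```python
import json

# Abbreviated stand-in for a PROJJSON document (illustrative only).
projjson = {"type": "GeographicCRS", "name": "WGS 84 (CRS84)"}

# Reported behaviour: the CRS is serialized to a string first, then the
# metadata is serialized again, so "crs" ends up as an escaped JSON string.
crs_as_string = json.dumps({"crs": json.dumps(projjson)})

# Expected behaviour: the CRS dict is embedded directly, so "crs" is an object.
crs_as_object = json.dumps({"crs": projjson})

string_type = type(json.loads(crs_as_string)["crs"])
object_type = type(json.loads(crs_as_object)["crs"])
```

A consumer reading the first form has to call `json.loads` a second time on the `"crs"` value, which is the symptom shown by the escaped quotes in the metadata above.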

#### Output of ``geopandas.show_versions()``

<details>

SYSTEM INFO

-----------

python : 3.13.1 (main, Dec 3 2024, 17:59:52) [Clang 16.0.0 (clang-1600.0.26.4)]

executable : [/Users/dewey/gh/arrow/.venv/bin/python](https://file+.vscode-resource.vscode-cdn.net/Users/dewey/gh/arrow/.venv/bin/python)

machine : macOS-15.3-arm64-arm-64bit-Mach-O

GEOS, GDAL, PROJ INFO

---------------------

GEOS : 3.11.4

GEOS lib : None

GDAL : 3.9.1

GDAL data dir: [/Users/dewey/gh/arrow/.venv/lib/python3.13/site-packages/pyogrio/gdal_data/](https://file+.vscode-resource.vscode-cdn.net/Users/dewey/gh/arrow/.venv/lib/python3.13/site-packages/pyogrio/gdal_data/)

PROJ : 9.4.1

PROJ data dir: [/Users/dewey/gh/arrow/.venv/lib/python3.13/site-packages/pyproj/proj_dir/share/proj](https://file+.vscode-resource.vscode-cdn.net/Users/dewey/gh/arrow/.venv/lib/python3.13/site-packages/pyproj/proj_dir/share/proj)

PYTHON DEPENDENCIES

-------------------

geopandas : 1.0.1

numpy : 2.2.0

pandas : 2.2.3

pyproj : 3.7.0

shapely : 2.0.7

pyogrio : 0.10.0

geoalchemy2: None

geopy : None

matplotlib : None

mapclassify: None

fiona : None

psycopg : None

psycopg2 : None

pyarrow : 20.0.0.dev158+g8cb2868569.d20250213

</details>

|

closed

|

2025-02-13T05:30:49Z

|

2025-02-18T14:27:06Z

|

https://github.com/geopandas/geopandas/issues/3512

|

[

"bug"

] |

paleolimbot

| 1

|

davidsandberg/facenet

|

tensorflow

| 383

|

face authentication using custom dataset

|

Hi All,

I have trained on a set of images and was able to create a .pkl file. Now I need to authenticate with a single image. I tried the existing code (classifier.py) but could not achieve this.

Please suggest a way forward.

Thanks

vij

|

open

|

2017-07-18T15:43:44Z

|

2019-11-10T06:06:33Z

|

https://github.com/davidsandberg/facenet/issues/383

|

[] |

myinzack

| 2

|

piccolo-orm/piccolo

|

fastapi

| 593

|

Email column type

|

We don't have an email column type at the moment. It's because Postgres doesn't have an email column type.

However, I think it would be useful to designate a column as containing an email.

## Option 1

We can define a new column type, which basically just inherits from `Varchar`:

```python

class Email(Varchar):

pass

```

## Option 2

Or, let the user annotate a column as containing an email.

One option is using [Annotated](https://docs.python.org/3/library/typing.html#typing.Annotated), but the downside is it was only added in Python 3.9:

```python

from typing import Annotated

class MyTable(Table):

email: Annotated[Varchar, 'email'] = Varchar()

```

Or something like this instead:

```python

class MyTable(Table):

email = Varchar(validators=['email'])

```

## Benefits

The main reason it would be useful to have an email column is when using [create_pydantic_model](https://piccolo-orm.readthedocs.io/en/latest/piccolo/serialization/index.html#create-pydantic-model) to auto generate a Pydantic model, we can set the type to `EmailStr` (see [docs](https://pydantic-docs.helpmanual.io/usage/types/)).

## Input

Any input is welcome - once we decide on an approach, adding it will be pretty easy.

|

closed

|

2022-08-19T20:23:16Z

|

2022-08-20T21:42:11Z

|

https://github.com/piccolo-orm/piccolo/issues/593

|

[

"enhancement",

"good first issue",

"proposal - input needed"

] |

dantownsend

| 5

|

hbldh/bleak

|

asyncio

| 815

|

Distinguish Advertisement Data and Scan Response Data

|

* bleak version: 0.14.2

### Description

Could you clarify a limitation of the library: is it possible to distinguish data received in the first advertisement packet (PDU type: `ADV_IND`) from data received in the subsequent scan response packet (PDU type: `SCAN_RSP`) for a specific device? Right now, when I run the example https://github.com/hbldh/bleak/blob/develop/examples/discover.py, I see that it returns the advertisement data and the scan response data joined together. I would like to see exactly which data arrived in the advertisement packet and which in the scan response packet. Is this possible with the Bleak library?

|

closed

|

2022-04-26T01:26:10Z

|

2022-04-26T07:42:13Z

|

https://github.com/hbldh/bleak/issues/815

|

[] |

RAlexeev

| 2

|

httpie/cli

|

python

| 518

|

h

|

o

|

closed

|

2016-09-12T07:11:16Z

|

2020-04-27T07:20:57Z

|

https://github.com/httpie/cli/issues/518

|

[] |

ghost

| 2

|

neuml/txtai

|

nlp

| 512

|

Add support for configurable text/object fields

|

Currently, the `text` and `object` fields are hardcoded throughout much of the code. This change will make the text and object fields configurable.

|

closed

|

2023-07-29T02:11:45Z

|

2023-07-29T02:17:45Z

|

https://github.com/neuml/txtai/issues/512

|

[] |

davidmezzetti

| 0

|

InstaPy/InstaPy

|

automation

| 6,147

|

Can't follow private accounts (even after enabling the option)

|

## Expected Behavior

I want to be able to follow private accounts, any help will be really appreciated!

## Current Behavior

I get this message: "is private account, by default skip"

Even after adding:

```

session.set_skip_users(skip_private=False,
                       skip_no_profile_pic=True,
                       no_profile_pic_percentage=100,
                       skip_business=True)

```

## Possible Solution (optional)

## InstaPy configuration

```

# imports
from instapy import InstaPy
from instapy import smart_run

# login credentials
insta_username = 'user'
insta_password = 'pass'

session = InstaPy(username=insta_username, password=insta_password)

with smart_run(session):
    """ Activity flow """
    # general settings
    session.set_dont_include(['accounts'])

    session.set_quota_supervisor(enabled=True,
                                 sleep_after=["likes", "comments", "follows", "unfollows", "server_calls_h"],
                                 sleepyhead=True,
                                 stochastic_flow=True,
                                 notify_me=True,
                                 peak_likes_hourly=57,
                                 peak_likes_daily=585,
                                 peak_comments_hourly=21,
                                 peak_comments_daily=182,
                                 peak_follows_hourly=48,
                                 peak_follows_daily=None,
                                 peak_unfollows_hourly=35,
                                 peak_unfollows_daily=402,
                                 peak_server_calls_hourly=None,
                                 peak_server_calls_daily=4700)

    # follow
    session.set_do_follow(enabled=True, percentage=35, times=1)
    session.unfollow_users(amount=60, InstapyFollowed=(True, "nonfollowers"), style="FIFO", unfollow_after=90*60*60, sleep_delay=501)

    # like
    session.set_do_like(True, percentage=70)
    session.set_delimit_liking(enabled=True, max_likes=100, min_likes=0)
    session.set_dont_unfollow_active_users(enabled=True, posts=5)

    session.set_relationship_bounds(enabled=True,
                                    potency_ratio=None,
                                    delimit_by_numbers=True,
                                    max_followers=3000,
                                    max_following=4490,
                                    min_followers=100,
                                    min_following=56,
                                    min_posts=10,
                                    max_posts=1000)

    session.follow_likers(['accounts'], photos_grab_amount=1, follow_likers_per_photo=5, randomize=True, sleep_delay=200, interact=True)

    session.set_skip_users(skip_private=False,
                           skip_no_profile_pic=True,
                           no_profile_pic_percentage=100,
                           skip_business=True)

    # Commenting
    session.set_do_comment(enabled=False)

```

|

closed

|

2021-04-12T17:50:17Z

|

2021-07-21T05:18:53Z

|

https://github.com/InstaPy/InstaPy/issues/6147

|

[

"wontfix"

] |

hecontreraso

| 1

|

gradio-app/gradio

|

deep-learning

| 10,252

|

Browser get Out of Memory when using Plotly for plotting.

|

### Describe the bug

I used Gradio to create a page for monitoring an image that needs to be refreshed continuously. When I used Plotly for plotting and set the refresh rate to 10 Hz, the browser showed an "**Out of Memory**" error after running for less than 10 minutes.

I found that the issue is caused by the `Plot.svelte` file generating new CSS, which is then continuously duplicated by `PlotlyPlot.svelte`.

This problem can be resolved by making the following change in the `Plot.svelte` file:

Replace:

```javascript

key += 1;

let type = value?.type;

```

With:

```javascript

let type = value?.type;

if (type !== "plotly") {

key += 1;

}

```

In other words, if the plot type is `plotly`, no new CSS will be generated.

Finally, I’m new to both Svelte and TypeScript, so some of my descriptions might not be entirely accurate, but this method does resolve the issue.

### Have you searched existing issues? 🔎

- [X] I have searched and found no existing issues

### Reproduction

```python

import gradio as gr

import numpy as np

from datetime import datetime

import plotly.express as px

def get_image(shape):
    # data = caget(pv)
    # Build a 2D Gaussian blob centred on a random pixel.
    x = np.arange(0, shape[1])
    y = np.arange(0, shape[0])
    X, Y = np.meshgrid(x, y)
    xc = np.random.randint(0, shape[1])
    yc = np.random.randint(0, shape[0])
    data = np.exp(-((X - xc) ** 2 + (Y - yc) ** 2) / (2 * 100**2)) * 1000
    data = data.reshape(shape)
    return data

# Create the figure once; later updates only swap the image data.
fig = px.imshow(
    get_image((1200, 1920)),
    color_continuous_scale="jet",
)
fig["layout"]["uirevision"] = 'constant'
# fig["config"]["plotGlPixelRatio"] = 1
# fig.update_traces(hovertemplate="x: %{x} <br> y: %{y} <br> z: %{z} <br> color: %{color}")
# fig.update_layout(coloraxis_showscale=False)
fig["layout"]['hovermode']=False
fig["layout"]["annotations"]=None

def make_plot(width, height):
    shape = (int(height), int(width))
    img = get_image(shape)
    ## image plot
    fig["data"][0].update(z=img)
    return fig

with gr.Blocks(delete_cache=(120, 180)) as demo: