repo_name (string, length 9-75) | topic (string, 30 classes) | issue_number (int64, 1-203k) | title (string, length 1-976) | body (string, length 0-254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, length 38-105) | labels (list, length 0-9) | user_login (string, length 1-39) | comments_count (int64, 0-452) |

|---|---|---|---|---|---|---|---|---|---|---|---|

jmcnamara/XlsxWriter

|

pandas

| 997

|

question: Is it possible to create a pie-of-pie chart?

|

### Question

Is it possible to create a pie-of-pie or bar-of-pie chart with XlsxWriter? If not, is there a work-around or alternative to represent the same information?

|

closed

|

2023-07-01T01:16:58Z

|

2023-07-01T15:06:05Z

|

https://github.com/jmcnamara/XlsxWriter/issues/997

|

[

"question"

] |

rmsapre

| 1

|

microsoft/unilm

|

nlp

| 1,361

|

How to load beit3 fine-tuned weight such as beit3_base_patch16_224_nlvr2.pth?

|

timm.create_model doesn't support beit3 yet, so how can I load a fine-tuned weight such as beit3_base_patch16_224_nlvr2.pth?

|

open

|

2023-11-08T13:16:14Z

|

2024-02-22T03:01:34Z

|

https://github.com/microsoft/unilm/issues/1361

|

[] |

yangzhj53

| 6

|

pyeve/eve

|

flask

| 1,077

|

In time comparisons, $gt returns the same results as $gte

|

First of all, thanks for this project!

I have a use case that queries for data later than a certain time, using "_updated": {"$gt": "2017-08-26 09:12:59"}, but the query also returns data equal to this time. The effect is equivalent to "$gte".

|

closed

|

2017-10-19T07:50:29Z

|

2018-05-18T16:19:48Z

|

https://github.com/pyeve/eve/issues/1077

|

[

"stale"

] |

fantasykai

| 1

|

pallets/flask

|

python

| 4,594

|

Recommended link update for "Make the Project Installable" docs

|

The link to the "official packaging guide" in the "Make the Project Installable" tutorial section makes little reference to `setup.py` and no reference to the `MANIFEST.in` file.

I recommend updating the link from the ["Packaging Python Projects"](https://packaging.python.org/en/latest/tutorials/packaging-projects/) tutorial to the ["Packaging and distributing projects"](https://packaging.python.org/en/latest/guides/distributing-packages-using-setuptools/) guide, which details usage of `setup.py` and the `MANIFEST.in` file.

I'd be happy to make a PR to update if this change is welcome.

|

closed

|

2022-05-12T19:18:01Z

|

2022-06-07T00:05:24Z

|

https://github.com/pallets/flask/issues/4594

|

[

"docs"

] |

nkabrown

| 1

|

piskvorky/gensim

|

machine-learning

| 2,657

|

Tweak placeholders

|

Continued from https://github.com/RaRe-Technologies/gensim/pull/2654

Point each placeholder to its corresponding tutorial.

|

open

|

2019-10-29T08:43:15Z

|

2019-10-29T08:43:22Z

|

https://github.com/piskvorky/gensim/issues/2657

|

[

"documentation"

] |

mpenkov

| 0

|

dask/dask

|

pandas

| 11,681

|

Unable to use scheduler 'external_address'

|

<!-- Please include a self-contained copy-pastable example that generates the issue if possible.

Please be concise with code posted. See guidelines below on how to provide a good bug report:

- Craft Minimal Bug Reports http://matthewrocklin.com/blog/work/2018/02/28/minimal-bug-reports

- Minimal Complete Verifiable Examples https://stackoverflow.com/help/mcve

Bug reports that follow these guidelines are easier to diagnose, and so are often handled much more quickly.

-->

**Describe the issue**:

I could not find in the docs how to specify the `external_address` for the scheduler when creating a LocalCluster. As a guess, I tried to inject the setting via `scheduler_kwargs`, but that failed with `RuntimeError(f"Cluster failed to start: {e}") from e`.

**Minimal Complete Verifiable Example**:

```python

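from dask.distributed import LocalCluster

# Reproduces the failure: the scheduler rejects the `external_address` keyword.
cluster = LocalCluster(
    host="0.0.0.0",
    scheduler_kwargs={"external_address": "localhost"},
)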

```

The error stack:

```

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

File ~/.pyenv/versions/3.12.2/envs/venv_3.12.2/lib/python3.12/site-packages/distributed/deploy/spec.py:324, in SpecCluster._start(self)

323 cls = import_term(cls)

--> 324 self.scheduler = cls(**self.scheduler_spec.get("options", {}))

325 self.scheduler = await self.scheduler

File ~/.pyenv/versions/3.12.2/envs/venv_3.12.2/lib/python3.12/site-packages/distributed/scheduler.py:4019, in Scheduler.__init__(self, loop, services, service_kwargs, allowed_failures, extensions, validate, scheduler_file, security, worker_ttl, idle_timeout, interface, host, port, protocol, dashboard_address, dashboard, http_prefix, preload, preload_argv, plugins, contact_address, transition_counter_max, jupyter, **kwargs)

4005 SchedulerState.__init__(

4006 self,

4007 aliases=aliases,

(...)

4017 transition_counter_max=transition_counter_max,

4018 )

-> 4019 ServerNode.__init__(

4020 self,

4021 handlers=self.handlers,

4022 stream_handlers=merge(worker_handlers, client_handlers),

4023 connection_limit=connection_limit,

4024 deserialize=False,

4025 connection_args=self.connection_args,

4026 **kwargs,

4027 )

4029 if self.worker_ttl:

TypeError: Server.__init__() got an unexpected keyword argument 'external_address'

The above exception was the direct cause of the following exception:

RuntimeError Traceback (most recent call last)

Cell In[2], line 1

----> 1 cluster = LocalCluster(

2 host="0.0.0.0",

3 scheduler_kwargs={"external_address": "localhost"},

4 )

File ~/.pyenv/versions/3.12.2/envs/venv_3.12.2/lib/python3.12/site-packages/distributed/deploy/local.py:256, in LocalCluster.__init__(self, name, n_workers, threads_per_worker, processes, loop, start, host, ip, scheduler_port, silence_logs, dashboard_address, worker_dashboard_address, diagnostics_port, services, worker_services, service_kwargs, asynchronous, security, protocol, blocked_handlers, interface, worker_class, scheduler_kwargs, scheduler_sync_interval, **worker_kwargs)

253 worker = {"cls": worker_class, "options": worker_kwargs}

254 workers = {i: worker for i in range(n_workers)}

--> 256 super().__init__(

257 name=name,

258 scheduler=scheduler,

259 workers=workers,

260 worker=worker,

261 loop=loop,

262 asynchronous=asynchronous,

263 silence_logs=silence_logs,

264 security=security,

265 scheduler_sync_interval=scheduler_sync_interval,

266 )

File ~/.pyenv/versions/3.12.2/envs/venv_3.12.2/lib/python3.12/site-packages/distributed/deploy/spec.py:284, in SpecCluster.__init__(self, workers, scheduler, worker, asynchronous, loop, security, silence_logs, name, shutdown_on_close, scheduler_sync_interval)

282 if not self.called_from_running_loop:

283 self._loop_runner.start()

--> 284 self.sync(self._start)

285 try:

286 self.sync(self._correct_state)

File ~/.pyenv/versions/3.12.2/envs/venv_3.12.2/lib/python3.12/site-packages/distributed/utils.py:363, in SyncMethodMixin.sync(self, func, asynchronous, callback_timeout, *args, **kwargs)

361 return future

362 else:

--> 363 return sync(

364 self.loop, func, *args, callback_timeout=callback_timeout, **kwargs

365 )

File ~/.pyenv/versions/3.12.2/envs/venv_3.12.2/lib/python3.12/site-packages/distributed/utils.py:439, in sync(loop, func, callback_timeout, *args, **kwargs)

436 wait(10)

438 if error is not None:

--> 439 raise error

440 else:

441 return result

File ~/.pyenv/versions/3.12.2/envs/venv_3.12.2/lib/python3.12/site-packages/distributed/utils.py:413, in sync.<locals>.f()

411 awaitable = wait_for(awaitable, timeout)

412 future = asyncio.ensure_future(awaitable)

--> 413 result = yield future

414 except Exception as exception:

415 error = exception

File ~/.pyenv/versions/3.12.2/envs/venv_3.12.2/lib/python3.12/site-packages/tornado/gen.py:767, in Runner.run(self)

765 try:

766 try:

--> 767 value = future.result()

768 except Exception as e:

769 # Save the exception for later. It's important that

770 # gen.throw() not be called inside this try/except block

771 # because that makes sys.exc_info behave unexpectedly.

772 exc: Optional[Exception] = e

File ~/.pyenv/versions/3.12.2/envs/venv_3.12.2/lib/python3.12/site-packages/distributed/deploy/spec.py:335, in SpecCluster._start(self)

333 self.status = Status.failed

334 await self._close()

--> 335 raise RuntimeError(f"Cluster failed to start: {e}") from e

RuntimeError: Cluster failed to start: Server.__init__() got an unexpected keyword argument 'external_address'

```

**Anything else we need to know?**:

Nope

**Environment**:

- Dask version: 2024.12.1

- Python version: 3.12.2

- Operating System: macOS / Ubuntu 22.04

- Install method (conda, pip, source): pip

|

closed

|

2025-01-20T08:02:15Z

|

2025-02-07T11:41:57Z

|

https://github.com/dask/dask/issues/11681

|

[

"needs triage"

] |

carusyte

| 3

|

miguelgrinberg/flasky

|

flask

| 470

|

Cannot run docker container on VPS

|

I want to run the docker containers on my server. The services (database and app) can be started on my MacBook without problems, but when I start the same code on my server I get the following error message:

```

ERROR: for flasky-application Cannot start service app: OCI runtime create failed: container_linux.go:345: starting container process caused "process_linux.go:430: container init caused \"process_linux.go:413: running prestart hook 0 caused \\\"error running hook: exit status 2, stdout: , stderr: runtime/cgo: pthread_create failed: Resource temporarily unavailable

ERROR: for app Cannot start service app: OCI runtime create failed: container_linux.go:345: starting container process caused "process_linux.go:430: container init caused \"process_linux.go:413: running prestart hook 0 caused \\\"error running hook: exit status 2, stdout: , stderr: runtime/cgo: pthread_create failed: Resource temporarily unavailable

ERROR: Encountered errors while bringing up the project.

```

What can I do to make this work?

|

closed

|

2020-05-29T17:18:31Z

|

2020-05-29T19:02:19Z

|

https://github.com/miguelgrinberg/flasky/issues/470

|

[] |

mobeit00

| 0

|

coleifer/sqlite-web

|

flask

| 63

|

Raspberry Pi installation

|

How to install it on RPi?

|

closed

|

2019-09-14T05:08:33Z

|

2019-09-14T15:00:50Z

|

https://github.com/coleifer/sqlite-web/issues/63

|

[] |

alanmilinovic

| 3

|

PokemonGoF/PokemonGo-Bot

|

automation

| 5,328

|

Starting bot error

|

Install By: ./setup.sh -i

Run By: ./run.sh

OS: Ubuntu 14.04.4 LTS

Branch: master

Python Version: Python 2.7.12

|

closed

|

2016-09-09T11:48:16Z

|

2016-09-22T05:58:04Z

|

https://github.com/PokemonGoF/PokemonGo-Bot/issues/5328

|

[] |

zZzWhoAmIzZz

| 1

|

gradio-app/gradio

|

python

| 10,084

|

tomlkit is out of date

|

### Describe the bug

The latest version of tomlkit is 0.13.2. This project currently requires `tomlkit==0.12.0`.

https://github.com/gradio-app/gradio/blob/main/requirements.txt#L24

I can work around it by creating a fork.

### Have you searched existing issues? 🔎

- [X] I have searched and found no existing issues

### Reproduction

requirements.txt

```requirements

gradio==5.7.1

tomlkit==0.13.2

```

```sh

pip install -r requirements.txt

```

### Screenshot

### Logs

```shell

INFO: pip is looking at multiple versions of gradio to determine which version is compatible with other requirements. This could take a while.

ERROR: Cannot install -r requirements.txt (line 13) and tomlkit==0.13.2 because these package versions have conflicting dependencies.

The conflict is caused by:

The user requested tomlkit==0.13.2

gradio 5.7.1 depends on tomlkit==0.12.0

To fix this you could try to:

1. loosen the range of package versions you've specified

2. remove package versions to allow pip to attempt to solve the dependency conflict

ERROR: ResolutionImpossible: for help visit https://pip.pypa.io/en/latest/topics/dependency-resolution/#dealing-with-dependency-conflicts

```


### System Info

```shell

➜ ✗ poetry run gradio environment

Launching in *reload mode* on: http://127.0.0.1:7860 (Press CTRL+C to quit)

Watching: '/home/brian/work/Presence-AI/presence/livekit/agent/.venv/lib/python3.12/site-packages/gradio', '/home/brian/work/Presence-AI/presence/livekit/agent'

ERROR: Error loading ASGI app. Could not import module "environment".

```

### Severity

I can work around it

|

closed

|

2024-11-29T23:45:01Z

|

2024-12-02T20:02:54Z

|

https://github.com/gradio-app/gradio/issues/10084

|

[

"bug"

] |

btakita

| 0

|

ray-project/ray

|

machine-learning

| 51,276

|

[core][gpu-objects] Support collective operations

|

### Description

Support collective operations of GPU objects such as gather / scatter / all-reduce.

### Use case

_No response_

|

open

|

2025-03-11T22:43:35Z

|

2025-03-11T22:43:51Z

|

https://github.com/ray-project/ray/issues/51276

|

[

"enhancement",

"P2",

"core",

"gpu-objects"

] |

kevin85421

| 0

|

s3rius/FastAPI-template

|

asyncio

| 65

|

Question: where to put Mangum handler?

|

How do I add the Mangum handler into this template produced code?

Intention:

https://dwisulfahnur.medium.com/fastapi-deployment-to-aws-lambda-with-serverless-framework-b637b455142c

```

from fastapi import FastAPI
from app.api.api_v1.api import router as api_router
from mangum import Mangum

app = FastAPI()


@app.get("/")
async def root():
    return {"message": "Hello World!"}


# FastAPI requires router prefixes to start with "/".
app.include_router(api_router, prefix="/api/v1")
handler = Mangum(app)

```

I'm not sure how to wrap the app with the handler in the template's code:

```

def main() -> None:
    """Entrypoint of the application."""
    uvicorn.run(
        "<project>.web.application:get_app",
        workers=settings.workers_count,
        host=settings.host,
        port=settings.port,
        reload=settings.reload,
        factory=True,
    )

```

```

def get_app() -> FastAPI:
    """
    Get FastAPI application.

    This is the main constructor of an application.

    :return: application.
    """
    app = FastAPI(
        title="<project>",
        description="<project>",
        version=metadata.version("<project>"),
        docs_url=None,
        redoc_url=None,
        openapi_url="/api/openapi.json",
        default_response_class=UJSONResponse,
    )

    app.on_event("startup")(startup(app))
    app.on_event("shutdown")(shutdown(app))

    app.include_router(router=api_router, prefix="/api")
    app.mount(
        "/static",
        StaticFiles(directory=APP_ROOT / "static"),
        name="static",
    )

    return app

```

|

closed

|

2022-01-27T20:57:17Z

|

2023-12-12T23:51:25Z

|

https://github.com/s3rius/FastAPI-template/issues/65

|

[] |

am1ru1

| 6

|

recommenders-team/recommenders

|

deep-learning

| 2,154

|

[BUG] Ranking Evaluation Metrics Exceed 1 with "by_threshold" Relevancy Method

|

### Description

Hello!

I encountered an issue while evaluating the BPR (Bayesian Personalized Ranking) model with basically the same code provided in the example on a different dataset. Specifically, when using the "by_threshold" relevancy method with ranking metrics, the computed values for precision@k, ndcg@k, and map@k exceed 1, which seems incorrect. This issue does not occur when switching the relevancy method to "top_k."

### How do we replicate the issue?

I use the following parameters for BPR (all using the default seed):

```

bpr = cornac.models.BPR(
    k=200,
    max_iter=100,
    learning_rate=0.01,
    lambda_reg=0.001,
    verbose=True
)

```

I evaluate with the following calls:

```

TOP_K = 10
threshold = 50

eval_map = map_at_k(test, all_predictions, col_prediction="prediction",
                    relevancy_method='by_threshold', threshold=threshold, k=TOP_K)
eval_ndcg = ndcg_at_k(test, all_predictions, col_prediction="prediction",
                      relevancy_method='by_threshold', threshold=threshold, k=TOP_K)
eval_precision = precision_at_k(
    test, all_predictions, col_prediction="prediction",
    relevancy_method='by_threshold', threshold=threshold, k=TOP_K)

```

Here is the dataset I test on: https://github.com/mnhqut/rec_sys-dataset/blob/main/data.csv

My result:

MAP: 1.417529

NDCG: 1.359902

Precision@K: 2.256466
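For reference, here is a minimal sketch of the conventional definition of precision@k, written in plain Python rather than with the `recommenders` API. The function name `precision_at_k_reference` and the toy data are hypothetical. Under this definition the number of hits among the top k distinct recommendations cannot exceed k, so the metric stays within [0, 1], and values above 1 cannot come from the definition itself.

```python
def precision_at_k_reference(recommended, relevant, k):
    """Fraction of the top-k recommended items that are relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    # De-duplicate while keeping rank order, then keep the first k items.
    top_k = list(dict.fromkeys(recommended))[:k]
    relevant_set = set(relevant)
    hits = sum(1 for item in top_k if item in relevant_set)
    return hits / k


# Toy data (hypothetical): ranked recommendations and relevant items for one user.
recommended_items = ["a", "b", "c", "d", "e"]
relevant_items = {"a", "c", "z"}
score = precision_at_k_reference(recommended_items, relevant_items, k=5)
print(score)
```

Comparing a per-user value computed this way against the library's output for the same user may help narrow down where the inflated numbers come from.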

### Willingness to contribute

<!--- Go over all the following points, and put an `x` in the box that apply. -->

- [ ] Yes, I can contribute for this issue independently.

- [x] Yes, I can contribute for this issue with guidance from Recommenders community.

- [ ] No, I cannot contribute at this time.

|

open

|

2024-08-23T15:01:27Z

|

2024-08-29T15:09:29Z

|

https://github.com/recommenders-team/recommenders/issues/2154

|

[

"bug"

] |

mnhqut

| 4

|

widgetti/solara

|

flask

| 156

|

No clear way to define dark theme

|

When I look at the solara output, I see that it is possible to define "theme--dark" in most widgets (see below). However, I couldn't find a programmatic way to set the theme in solara. Is it possible to set theme of widgets in a Page with a single statement from Python?

|

closed

|

2023-06-16T08:17:26Z

|

2023-06-16T14:40:46Z

|

https://github.com/widgetti/solara/issues/156

|

[

"documentation"

] |

hkayabilisim

| 3

|

CorentinJ/Real-Time-Voice-Cloning

|

pytorch

| 474

|

Should we create a Slack Channel to communicate?

|

@blue-fish should we create a Slack Channel to communicate?

|

closed

|

2020-08-06T16:03:47Z

|

2022-09-14T18:03:50Z

|

https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/474

|

[] |

mbdash

| 12

|

pytorch/vision

|

machine-learning

| 8,016

|

Scheduled workflow failed

|

Oh no, something went wrong in the scheduled workflow tests/download.

Please look into it:

https://github.com/pytorch/vision/actions/runs/6403841200

Feel free to close this if this was just a one-off error.

cc @pmeier

|

closed

|

2023-10-04T09:10:32Z

|

2023-10-12T07:19:39Z

|

https://github.com/pytorch/vision/issues/8016

|

[

"bug",

"module: datasets"

] |

github-actions[bot]

| 0

|

lucidrains/vit-pytorch

|

computer-vision

| 58

|

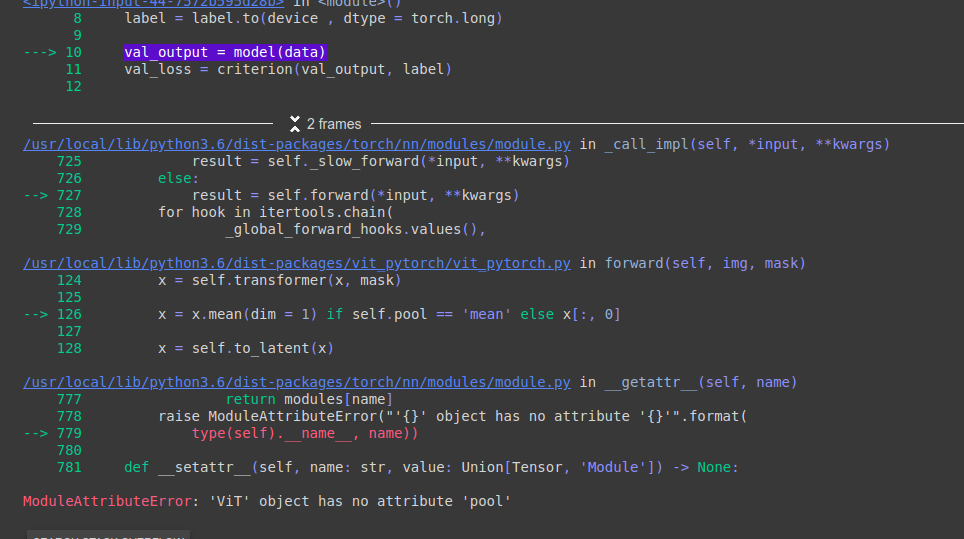

Error shows that ViT object has no attribute 'pool'

|

I am trying to load my saved model and then test it on some images.

This is my code snippet:

    device = "cuda"

    with torch.no_grad():
        epoch_val_accuracy = 0
        epoch_val_loss = 0
        for data, label in val_loader:
            data = data.to(device, dtype=torch.float)
            label = label.to(device, dtype=torch.long)
            val_output = model(data)
            val_loss = criterion(val_output, label)
            acc = (val_output.argmax(dim=1) == label).float().mean()
            epoch_val_accuracy += acc / len(val_loader)
            epoch_val_loss += val_loss / len(val_loader)

the screenshot is attached

|

closed

|

2021-01-08T14:15:24Z

|

2021-01-11T17:15:57Z

|

https://github.com/lucidrains/vit-pytorch/issues/58

|

[] |

Abhranta

| 4

|

autokey/autokey

|

automation

| 94

|

broken link in ABOUT

|

## Classification: none listed are relevant

## Summary

The ABOUT file links to the AutoKey website:

https://code.google.com/archive/p/autokey/

That link returns a 404.

It also seems that describing what the program does was sidestepped by pointing to the AutoKey website instead (which is reasonable when the link works), so now there is no description at all.

|

closed

|

2017-07-27T09:57:38Z

|

2017-07-27T14:52:10Z

|

https://github.com/autokey/autokey/issues/94

|

[] |

steanne

| 2

|

d2l-ai/d2l-en

|

data-science

| 2,107

|

Evaluation results of DenseNet TF look wrong

|

http://preview.d2l.ai.s3-website-us-west-2.amazonaws.com/d2l-en/master/chapter_convolutional-modern/densenet.html


|

closed

|

2022-04-21T00:58:48Z

|

2022-12-15T23:56:03Z

|

https://github.com/d2l-ai/d2l-en/issues/2107

|

[] |

astonzhang

| 2

|

pyeve/eve

|

flask

| 645

|

Restoring soft_delete using PATCH results in 422 unknown field “_deleted”

|

@nckpark [this](https://stackoverflow.com/questions/30632740/restoring-soft-delete-using-patch-results-in-422-unknown-field-deleted) soft-delete item was reported on Stack Overflow. Would you be willing to triage it? Might be a bug.

|

closed

|

2015-06-04T05:51:05Z

|

2015-06-09T06:55:50Z

|

https://github.com/pyeve/eve/issues/645

|

[] |

nicolaiarocci

| 2

|

custom-components/pyscript

|

jupyter

| 81

|

task.wait_until ignores state_check_now

|

The task.wait_until() function always checks state on entry, ignoring the documented state_check_now argument. In fact the state_check_now argument of wait_until() in trigger.py is declared but never used.

pyscript version: 0.32

|

closed

|

2020-11-05T08:12:52Z

|

2020-11-06T01:54:23Z

|

https://github.com/custom-components/pyscript/issues/81

|

[] |

dadler

| 2

|

exaloop/codon

|

numpy

| 432

|

Segmentation fault with -release flag

|

On the current develop branch, code works fine without the -release flag but segfaults with it, even on small examples:

--- SIGSEGV {si_signo=SIGSEGV, si_code=SEGV_MAPERR, si_addr=NULL} ---

+++ killed by SIGSEGV (core dumped) +++

Segmentation fault (core dumped)

|

open

|

2023-07-27T14:06:11Z

|

2024-11-10T06:25:39Z

|

https://github.com/exaloop/codon/issues/432

|

[] |

Miezhiko

| 4

|

mage-ai/mage-ai

|

data-science

| 5,460

|

Add Databend cloud data warehouse

|

**Is your feature request related to a problem? Please describe.**

[Databend](https://www.databend.com/) is an economical alternative to Snowflake.

**Describe the solution you'd like**

Build an integration with Databend for IO and data integration pipelines.

**Describe alternatives you've considered**

Snowflake Integration

|

open

|

2024-10-02T18:00:19Z

|

2024-10-09T18:49:26Z

|

https://github.com/mage-ai/mage-ai/issues/5460

|

[

"data integration",

"io"

] |

TalaatHasanin

| 0

|

pytest-dev/pytest-html

|

pytest

| 144

|

add custom test results to report

|

Hi,

I have a test function like this:

```

def test_score():
    # load csv of one column
    x = PrettyTable()
    x.field_names = ['old', 'new']
    for val in column:
        new_val = some_func(val)
        x.add_row([val, new_val])
        assert val == new_val
    output = x.get_string()  # I want to print this in the report when the test succeeds

```

I have many other functions using this pattern, but I can't figure out a way to retrieve the output value inside the pytest_html_results_table_html hook.

Do you have any suggestions?

Thank you

|

closed

|

2018-01-04T17:18:04Z

|

2023-06-20T08:07:12Z

|

https://github.com/pytest-dev/pytest-html/issues/144

|

[] |

ohayak

| 5

|

qubvel-org/segmentation_models.pytorch

|

computer-vision

| 75

|

How to override input 3x3 param for Encoders

|

Hi, I see that it defaults to false for your encoders in the param dict. How do I override it when passing it to the segmentation models?

|

closed

|

2019-10-02T13:50:06Z

|

2022-02-09T01:53:31Z

|

https://github.com/qubvel-org/segmentation_models.pytorch/issues/75

|

[

"Stale"

] |

jaideep11061982

| 5

|

koxudaxi/datamodel-code-generator

|

pydantic

| 1,740

|

Invalid definition of graphql requirement

|

**Describe the bug**

Invalid definition of graphql requirement.

**To Reproduce**

Given a GraphQL schema and the following command line:

```

$ datamodel-codegen --input schema.graphql --output model.py --input-file-type graphql

```

we get:

```bash

Exception: Please run `$pip install 'datamodel-code-generator[graphql]`' to generate data-model from a GraphQL schema.

```

**Expected behavior**

We get this behavior because we currently have

**pyproject.toml**

```toml

...

[tool.poetry.group.graphql.dependencies]

graphql-core = "^3.2.3"

...

```

It should be like this:

**pyproject.toml**

```toml

...

[tool.poetry.dependencies]

...

graphql-core = { version = "^3.2.3", optional = true }

...

```

**Version:**

- OS: [e.g. iOS]

- Python version: 3.7-3.11 (I tested with 3.11)

- datamodel-code-generator version: 0.25.0

I will create a fix.

|

closed

|

2023-11-26T13:32:41Z

|

2023-11-26T19:08:12Z

|

https://github.com/koxudaxi/datamodel-code-generator/issues/1740

|

[] |

denisart

| 1

|

ets-labs/python-dependency-injector

|

flask

| 873

|

Making injections into class attributes can't work with `Resource` in nested container.

|

Reproducible project: https://github.com/YogiLiu/pdi_issue

When I run `python -m pdi_issue.main`, the following error is raised:

```

Traceback (most recent call last):

File "<frozen runpy>", line 198, in _run_module_as_main

File "<frozen runpy>", line 88, in _run_code

File "/home/yogiliu/Workspace/YogiLiu/pdi_issue/src/pdi_issue/main.py", line 4, in <module>

container = SrvContainer()

^^^^^^^^^^^^^^

File "src/dependency_injector/containers.pyx", line 727, in dependency_injector.containers.DeclarativeContainer.__new__

File "src/dependency_injector/providers.pyx", line 4916, in dependency_injector.providers.deepcopy

File "src/dependency_injector/providers.pyx", line 4923, in dependency_injector.providers.deepcopy

File "/home/yogiliu/.local/share/uv/python/cpython-3.11.10-linux-x86_64-gnu/lib/python3.11/copy.py", line 146, in deepcopy

y = copier(x, memo)

^^^^^^^^^^^^^^^

File "/home/yogiliu/.local/share/uv/python/cpython-3.11.10-linux-x86_64-gnu/lib/python3.11/copy.py", line 231, in _deepcopy_dict

y[deepcopy(key, memo)] = deepcopy(value, memo)

^^^^^^^^^^^^^^^^^^^^^

File "/home/yogiliu/.local/share/uv/python/cpython-3.11.10-linux-x86_64-gnu/lib/python3.11/copy.py", line 153, in deepcopy

y = copier(memo)

^^^^^^^^^^^^

File "src/dependency_injector/providers.pyx", line 4024, in dependency_injector.providers.Container.__deepcopy__

File "src/dependency_injector/providers.pyx", line 4923, in dependency_injector.providers.deepcopy

File "/home/yogiliu/.local/share/uv/python/cpython-3.11.10-linux-x86_64-gnu/lib/python3.11/copy.py", line 153, in deepcopy

y = copier(memo)

^^^^^^^^^^^^

File "src/dependency_injector/containers.pyx", line 125, in dependency_injector.containers.DynamicContainer.__deepcopy__

File "src/dependency_injector/providers.pyx", line 4916, in dependency_injector.providers.deepcopy

File "src/dependency_injector/providers.pyx", line 4923, in dependency_injector.providers.deepcopy

File "/home/yogiliu/.local/share/uv/python/cpython-3.11.10-linux-x86_64-gnu/lib/python3.11/copy.py", line 146, in deepcopy

y = copier(x, memo)

^^^^^^^^^^^^^^^

File "/home/yogiliu/.local/share/uv/python/cpython-3.11.10-linux-x86_64-gnu/lib/python3.11/copy.py", line 231, in _deepcopy_dict

y[deepcopy(key, memo)] = deepcopy(value, memo)

^^^^^^^^^^^^^^^^^^^^^

File "/home/yogiliu/.local/share/uv/python/cpython-3.11.10-linux-x86_64-gnu/lib/python3.11/copy.py", line 153, in deepcopy

y = copier(memo)

^^^^^^^^^^^^

File "src/dependency_injector/providers.pyx", line 3669, in dependency_injector.providers.Resource.__deepcopy__

dependency_injector.errors.Error: Can not copy initialized resource

```

|

open

|

2025-03-23T12:36:43Z

|

2025-03-23T13:33:14Z

|

https://github.com/ets-labs/python-dependency-injector/issues/873

|

[] |

YogiLiu

| 2

|

huggingface/datasets

|

numpy

| 7,433

|

`Dataset.map` ignores existing caches and remaps when run with a different `num_proc`

|

### Describe the bug

If you `map` a dataset and save it to a specific `cache_file_name` with a specific `num_proc`, and then call map again with that same existing `cache_file_name` but a different `num_proc`, the dataset will be re-mapped.

### Steps to reproduce the bug

1. Download a dataset

```python

import datasets

dataset = datasets.load_dataset("ylecun/mnist")

```

```

Generating train split: 100%|██████████| 60000/60000 [00:00<00:00, 116429.85 examples/s]

Generating test split: 100%|██████████| 10000/10000 [00:00<00:00, 103310.27 examples/s]

```

2. `map` and cache it with a specific `num_proc`

```python

cache_file_name="./cache/train.map"

dataset["train"].map(lambda x: x, cache_file_name=cache_file_name, num_proc=2)

```

```

Map (num_proc=2): 100%|██████████| 60000/60000 [00:01<00:00, 53764.03 examples/s]

```

3. `map` it with a different `num_proc` and the same `cache_file_name` as before

```python

dataset["train"].map(lambda x: x, cache_file_name=cache_file_name, num_proc=3)

```

```

Map (num_proc=3): 100%|██████████| 60000/60000 [00:00<00:00, 65377.12 examples/s]

```

### Expected behavior

If I specify an existing `cache_file_name`, I don't expect using a different `num_proc` than the one that was used to generate it to cause the dataset to be re-mapped.

### Environment info

```console

$ datasets-cli env

- `datasets` version: 3.3.2

- Platform: Linux-5.15.0-131-generic-x86_64-with-glibc2.35

- Python version: 3.10.16

- `huggingface_hub` version: 0.29.1

- PyArrow version: 19.0.1

- Pandas version: 2.2.3

- `fsspec` version: 2024.12.0

```

|

open

|

2025-03-03T05:51:26Z

|

2025-03-04T05:55:08Z

|

https://github.com/huggingface/datasets/issues/7433

|

[] |

ringohoffman

| 2

|

nolar/kopf

|

asyncio

| 631

|

Defining a pydantic model in a kopf handler file seems to break kopf startup

|

## Long story short

If I define a pydantic class, kopf refuses to start

## Description

Steps to repro:

start out with a super-simple kopf handler file, such as:

```python

import kopf


@kopf.on.create('what.com', 'v1', 'stuffs')
def create_fn(body, **kwargs):
    print(body)


@kopf.on.update('what.com', 'v1', 'stuffs')
def update_fn(body, **kwargs):
    print(body)

```

I'm using pipenv so I'd run this with: `pipenv run kopf run "src/crawler_manager/handler.py"`. All good.

Now, expand the file with a simple pydantic model definition:

```python

import json  # needed by the json.dumps calls below

import kopf
from pydantic import BaseModel, Field
from typing import Dict


class TestModel(BaseModel):
    name: str = Field(...)
    size: int
    size2: int


@kopf.on.create('mohawkanalytics.com', 'v1', 'stuffs')
def create_fn(body, **kwargs):
    print(json.dumps(body, indent=4, sort_keys=True))


@kopf.on.update('mohawkanalytics.com', 'v1', 'stuffs')
def update_fn(body, **kwargs):
    print(json.dumps(body, indent=4, sort_keys=True))

```

This code now throws:

```

File "<frozen importlib._bootstrap_external>", line 790, in exec_module

File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed

File "src/funnel_crawler_manager/handler.py", line 9, in <module>

class TestModel(BaseModel):

File "pydantic/main.py", line 247, in pydantic.main.ModelMetaclass.__new__

File "pydantic/typing.py", line 207, in pydantic.typing.resolve_annotations

for p in parameters:

KeyError: 'handler'

```

I have no idea why. The idea was to use a pydantic-defined model to define the CRD and, if needed, configure it during kopf startup (pydantic can generate OpenAPI-compatible schemas, so this way we were hoping to maintain the CRD in code instead of in YAML).

Testing done using `pydantic==1.7.3`

I'm afraid I don't know more than this - I also don't know if the actual bug is in Pydantic or kopf.
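One mechanism that would produce a `KeyError` keyed by a module name is sketched below in plain Python. It assumes, without confirmation from this report, that the handler file is executed as a module that is never registered in `sys.modules`, and that a library later looks up the class's module there to resolve annotations. The helper `load_without_registering`, the module name `demo_handler`, and the temporary file are hypothetical and used only for illustration.

```python
import importlib.util
import sys
import tempfile
from pathlib import Path

SOURCE = '''
class TestModel:
    name: str
    size: int
'''


def load_without_registering(path, module_name):
    """Execute a file as a module without adding it to sys.modules."""
    spec = importlib.util.spec_from_file_location(module_name, path)
    module = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(module)  # note: sys.modules is never updated here
    return module


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "demo_handler.py"
    path.write_text(SOURCE)
    module = load_without_registering(path, "demo_handler")

# The class remembers its module name, but that name is absent from sys.modules.
module_name = module.TestModel.__module__
try:
    sys.modules[module_name]
    lookup_error = None
except KeyError as exc:
    lookup_error = exc

print(module_name, repr(lookup_error))
```

If this is what happens here, registering the module under its name before executing it would avoid the failed lookup; whether that applies to kopf or pydantic in these versions is not established by this sketch.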

## Environment

* Kopf version: 0.28.3

* Kubernetes version: 3.9

* Python version:

* OS/platform:

|

closed

|

2021-01-05T10:25:49Z

|

2021-01-22T20:04:59Z

|

https://github.com/nolar/kopf/issues/631

|

[

"bug"

] |

trondhindenes

| 7

|

deepspeedai/DeepSpeed

|

deep-learning

| 7,037

|

[REQUEST] Why is the column linear layer with all-gather not implemented in DeepSpeed Inference?

|

**Is your feature request related to a problem? Please describe.**

Without a column linear layer with all-gather, we can't handle a single standalone linear layer.

I can see the row linear layer with all-reduce, i.e. [LinearAllreduce](https://github.com/deepspeedai/DeepSpeed/blob/e637677766e0a2063adc61ddd67b58abef74753e/deepspeed/module_inject/layers.py#L300), but there is no implementation of a column linear layer with all-gather.

How can I set the linear type when running DiT models such as this one:

```python

(transformer_blocks): ModuleList(
  (0-18): 19 x FluxTransformerBlock(
    (norm1): AdaLayerNormZero(
      (silu): SiLU()
      (linear): LinearLayer(in_features=3072, out_features=9216, bias=True, dtype=torch.bfloat16)
      (norm): LayerNorm((3072,), eps=1e-06, elementwise_affine=False)
    )
    (norm1_context): AdaLayerNormZero(
      (silu): SiLU()
      (linear): LinearLayer(in_features=3072, out_features=9216, bias=True, dtype=torch.bfloat16)
      (norm): LayerNorm((3072,), eps=1e-06, elementwise_affine=False)
    )
    (attn): Attention(
      (norm_q): RMSNorm()
      (norm_k): RMSNorm()
      (to_q): LinearLayer(in_features=3072, out_features=1536, bias=True, dtype=torch.bfloat16)
      (to_k): LinearLayer(in_features=3072, out_features=1536, bias=True, dtype=torch.bfloat16)
      (to_v): LinearLayer(in_features=3072, out_features=1536, bias=True, dtype=torch.bfloat16)
      (add_k_proj): LinearLayer(in_features=3072, out_features=1536, bias=True, dtype=torch.bfloat16)
      (add_v_proj): LinearLayer(in_features=3072, out_features=1536, bias=True, dtype=torch.bfloat16)
      (add_q_proj): LinearLayer(in_features=3072, out_features=1536, bias=True, dtype=torch.bfloat16)
      (to_out): ModuleList(
        (0): LinearLayer(in_features=3072, out_features=1536, bias=True, dtype=torch.bfloat16)
        (1): Dropout(p=0.0, inplace=False)
      )
      (to_add_out): LinearLayer(in_features=3072, out_features=1536, bias=True, dtype=torch.bfloat16)
      (norm_added_q): RMSNorm()
      (norm_added_k): RMSNorm()
    )
    (norm2): LayerNorm((3072,), eps=1e-06, elementwise_affine=False)
    (ff): FeedForward(
      (net): ModuleList(
        (0): GELU(
          (proj): LinearLayer(in_features=3072, out_features=6144, bias=True, dtype=torch.bfloat16)
        )
        (1): Dropout(p=0.0, inplace=False)
        (2): LinearLayer(in_features=12288, out_features=1536, bias=True, dtype=torch.bfloat16)
      )
    )
    (norm2_context): LayerNorm((3072,), eps=1e-06, elementwise_affine=False)
    (ff_context): FeedForward(
      (net): ModuleList(
        (0): GELU(
          (proj): LinearLayer(in_features=3072, out_features=6144, bias=True, dtype=torch.bfloat16)
        )
        (1): Dropout(p=0.0, inplace=False)
        (2): LinearLayer(in_features=12288, out_features=1536, bias=True, dtype=torch.bfloat16)
      )
    )
  )
)

```

I can set `attn.to_out.0`, `attn.to_add_out`, `ff.net.2` and `ff_context.net.2` to LinearAllreduce, but how should I handle `norm1.linear` and `norm1_context.linear`? I need to all-gather the output of a single linear layer; otherwise it causes an error, because the inputs of both norm1 and norm1_context are the whole hidden_states.
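To make the request concrete, here is a minimal single-process sketch of what a column linear layer followed by an all-gather computes. It is plain Python, not DeepSpeed code: `linear`, `column_parallel_linear` and `world_size` are hypothetical names, and the "all-gather" is simulated by concatenating the per-rank outputs in rank order. "Column" follows the usual tensor-parallel convention of splitting the output features, which are the rows of a PyTorch-style `[out_features][in_features]` weight.

```python
def linear(x, weight, bias):
    """y = W x + b for one input vector; weight is [out_features][in_features]."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def column_parallel_linear(x, weight, bias, world_size):
    """Split the output features across ranks, then gather the pieces.

    Every simulated rank sees the full input x (as norm1.linear does) and
    produces out_features / world_size outputs. Concatenating the shards in
    rank order stands in for the all-gather.
    """
    out_features = len(weight)
    assert out_features % world_size == 0, "out_features must divide evenly"
    shard = out_features // world_size

    partial_outputs = []
    for rank in range(world_size):
        rows = slice(rank * shard, (rank + 1) * shard)
        partial_outputs.append(linear(x, weight[rows], bias[rows]))

    # Simulated all-gather: every rank would end up with this full vector.
    return [value for part in partial_outputs for value in part]


x = [1.0, 2.0, 3.0]
weight = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [1.0, 1.0, 1.0],
]
bias = [0.5, 0.5, 0.5, 0.5]

full = linear(x, weight, bias)
gathered = column_parallel_linear(x, weight, bias, world_size=2)
print(full == gathered)
```

The gathered result equals the unsharded output, which is why a layer whose consumers expect the whole hidden state needs the gather step rather than an all-reduce.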

|

open

|

2025-02-14T07:09:38Z

|

2025-02-14T07:09:38Z

|

https://github.com/deepspeedai/DeepSpeed/issues/7037

|

[

"enhancement"

] |

zhangvia

| 0

|

ARM-DOE/pyart

|

data-visualization

| 810

|

Very outdated gcc in conda-forge causing CyLP to fail building.

|

When I try and build @jjhelmus's branch I get the following error:

gcc -pthread -B /home/rjackson/anaconda3/envs/adi_env3/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I./cylp/cpp -I./cylp/cy -I/home/rjackson/anaconda3/envs/adi_env3/include/coin -I/home/rjackson/anaconda3/envs/adi_env3/lib/python3.6/site-packages/numpy/core/include -I. -I/home/rjackson/anaconda3/envs/adi_env3/include/python3.6m -c cylp/cpp/IClpPrimalColumnPivotBase.cpp -o build/temp.linux-x86_64-3.6/cylp/cpp/IClpPrimalColumnPivotBase.o -w

cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++ [enabled by default]

In file included from /home/rjackson/anaconda3/envs/adi_env3/gcc/include/c++/cstdint:35:0,

from /home/rjackson/anaconda3/envs/adi_env3/include/coin/CoinTypes.hpp:15,

from /home/rjackson/anaconda3/envs/adi_env3/include/coin/CoinHelperFunctions.hpp:24,

from /home/rjackson/anaconda3/envs/adi_env3/include/coin/CoinIndexedVector.hpp:20,

from cylp/cpp/IClpPrimalColumnPivotBase.h:6,

from cylp/cpp/IClpPrimalColumnPivotBase.cpp:1:

/home/rjackson/anaconda3/envs/adi_env3/gcc/include/c++/bits/c++0x_warning.h:32:2: error: #error This file requires compiler and library support for the ISO C++ 2011 standard. This support is currently experimental, and must be enabled with the -std=c++11 or -std=gnu++11 compiler options.

#error This file requires compiler and library support for the \

I tracked this down to the very, very old version of gcc that is in conda-forge (4.8.5). I got it to build with gcc 6.3.0, so I would *not* use the conda-forge gcc to try and build CyLP.

|

closed

|

2019-02-06T19:56:11Z

|

2019-10-01T21:21:07Z

|

https://github.com/ARM-DOE/pyart/issues/810

|

[] |

rcjackson

| 7

|

scrapy/scrapy

|

web-scraping

| 6,186

|

lxml parser gives back wrong parsing results, messes up html

|

<!--

Thanks for taking an interest in Scrapy!

If you have a question that starts with "How to...", please see the Scrapy Community page: https://scrapy.org/community/.

The GitHub issue tracker's purpose is to deal with bug reports and feature requests for the project itself.

Keep in mind that by filing an issue, you are expected to comply with Scrapy's Code of Conduct, including treating everyone with respect: https://github.com/scrapy/scrapy/blob/master/CODE_OF_CONDUCT.md

The following is a suggested template to structure your issue, you can find more guidelines at https://doc.scrapy.org/en/latest/contributing.html#reporting-bugs

-->

### Description

Parsing with lxml gives the wrong result.

### Steps to Reproduce

1. fetch this html code: https://pastebin.com/durYf56c

2. select section `section = response.css('main section section')[0]`

**Expected behavior:** [What you expect to happen]

section to be this:

https://pastebin.com/3wxdHFqY

**Actual behavior:** [What actually happens]

section is this:

https://pastebin.com/jzXZ0BRD

**Reproduces how often:** [What percentage of the time does it reproduce?]

100%

### Versions

Scrapy : 2.11.0

lxml : 4.9.4.0

libxml2 : 2.10.3

cssselect : 1.2.0

parsel : 1.8.1

w3lib : 2.1.2

Twisted : 22.10.0

Python : 3.10.4 (tags/v3.10.4:9d38120, Mar 23 2022, 23:13:41) [MSC v.1929 64 bit (AMD64)]

pyOpenSSL : 23.3.0 (OpenSSL 3.1.4 24 Oct 2023)

cryptography : 41.0.7

Platform : Windows-10-10.0.19045-SP0

### Additional context

The same error happens with Beautiful Soup using the `lxml` parser. With the regular `html.parser` there is no error.

So it must be lxml.

|

closed

|

2023-12-24T03:43:57Z

|

2023-12-24T15:47:30Z

|

https://github.com/scrapy/scrapy/issues/6186

|

[] |

tombohub

| 3

|

vllm-project/vllm

|

pytorch

| 15,207

|

[Bug]: msgspec.DecodeError: MessagePack data is malformed: trailing characters (byte 13)

|

### Your current environment

<details>

<summary>vllm==0.8.0, python=3.11</summary>

</details>

### 🐛 Describe the bug

I keep getting this decode error when using vllm==0.8.0 for qwen-2.5 inference. I get the same error on both the 1.5B and 7B models once I use apply-chat-template.

`msgspec.DecodeError: MessagePack data is malformed: trailing characters (byte 13)`

### Before submitting a new issue...

- [x] Make sure you already searched for relevant issues, and asked the chatbot living at the bottom right corner of the [documentation page](https://docs.vllm.ai/en/latest/), which can answer lots of frequently asked questions.

|

open

|

2025-03-20T10:48:12Z

|

2025-03-24T14:47:15Z

|

https://github.com/vllm-project/vllm/issues/15207

|

[

"bug"

] |

fangru-lin

| 1

|

KaiyangZhou/deep-person-reid

|

computer-vision

| 375

|

RuntimeError: cublas runtime error

|

I am getting this error

```

(torchreid) $ python scripts/main.py \

> --config-file configs/im_osnet_x1_0_softmax_256x128_amsgrad_cosine.yaml \

> --transforms random_flip random_erase \

> --root $PATH_TO_DATA

Show configuration

adam:

beta1: 0.9

beta2: 0.999

cuhk03:

classic_split: False

labeled_images: False

use_metric_cuhk03: False

data:

combineall: False

height: 256

k_tfm: 1

load_train_targets: False

norm_mean: [0.485, 0.456, 0.406]

norm_std: [0.229, 0.224, 0.225]

root: /home/me/code/tmp/deep-person-reid/data

save_dir: log/osnet_x1_0_market1501_softmax_cosinelr

sources: ['market1501']

split_id: 0

targets: ['market1501']

transforms: ['random_flip', 'random_erase']

type: image

width: 128

workers: 4

loss:

name: softmax

softmax:

label_smooth: True

triplet:

margin: 0.3

weight_t: 1.0

weight_x: 0.0

market1501:

use_500k_distractors: False

model:

load_weights:

name: osnet_x1_0

pretrained: True

resume:

rmsprop:

alpha: 0.99

sampler:

num_cams: 1

num_datasets: 1

num_instances: 4

train_sampler: RandomSampler

train_sampler_t: RandomSampler

sgd:

dampening: 0.0

momentum: 0.9

nesterov: False

test:

batch_size: 300

dist_metric: euclidean

eval_freq: -1

evaluate: False

normalize_feature: False

ranks: [1, 5, 10, 20]

rerank: False

start_eval: 0

visrank: False

visrank_topk: 10

train:

base_lr_mult: 0.1

batch_size: 64

fixbase_epoch: 10

gamma: 0.1

lr: 0.0015

lr_scheduler: cosine

max_epoch: 250

new_layers: ['classifier']

open_layers: ['classifier']

optim: amsgrad

print_freq: 20

seed: 1

staged_lr: False

start_epoch: 0

stepsize: [20]

weight_decay: 0.0005

use_gpu: True

video:

pooling_method: avg

sample_method: evenly

seq_len: 15

Collecting env info ...

** System info **

PyTorch version: 1.1.0

Is debug build: No

CUDA used to build PyTorch: 9.0.176

OS: Ubuntu 18.04.5 LTS

GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0

CMake version: version 3.10.2

Python version: 3.7

Is CUDA available: Yes

CUDA runtime version: Could not collect

GPU models and configuration: GPU 0: GeForce RTX 2080 Ti

Nvidia driver version: 440.33.01

cuDNN version: Could not collect

Versions of relevant libraries:

[pip3] numpy==1.19.2

[pip3] torch==1.1.0

[pip3] torchreid==1.3.3

[pip3] torchvision==0.3.0

[conda] blas 1.0 mkl

[conda] mkl 2020.2 256

[conda] mkl-service 2.3.0 py37he904b0f_0

[conda] mkl_fft 1.2.0 py37h23d657b_0

[conda] mkl_random 1.1.1 py37h0573a6f_0

[conda] pytorch 1.1.0 py3.7_cuda9.0.176_cudnn7.5.1_0 pytorch

[conda] torchreid 1.3.3 dev_0 <develop>

[conda] torchvision 0.3.0 py37_cu9.0.176_1 pytorch

Pillow (7.2.0)

Building train transforms ...

+ resize to 256x128

+ random flip

+ to torch tensor of range [0, 1]

+ normalization (mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

+ random erase

Building test transforms ...

+ resize to 256x128

+ to torch tensor of range [0, 1]

+ normalization (mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

=> Loading train (source) dataset

=> Loaded Market1501

----------------------------------------

subset | # ids | # images | # cameras

----------------------------------------

train | 751 | 12936 | 6

query | 750 | 3368 | 6

gallery | 751 | 15913 | 6

----------------------------------------

=> Loading test (target) dataset

=> Loaded Market1501

----------------------------------------

subset | # ids | # images | # cameras

----------------------------------------

train | 751 | 12936 | 6

query | 750 | 3368 | 6

gallery | 751 | 15913 | 6

----------------------------------------

**************** Summary ****************

source : ['market1501']

# source datasets : 1

# source ids : 751

# source images : 12936

# source cameras : 6

target : ['market1501']

*****************************************

Building model: osnet_x1_0

Successfully loaded imagenet pretrained weights from "/home/me/.cache/torch/checkpoints/osnet_x1_0_imagenet.pth"

** The following layers are discarded due to unmatched keys or layer size: ['classifier.weight', 'classifier.bias']

Model complexity: params=2,193,616 flops=978,878,352

Building softmax-engine for image-reid

=> Start training

* Only train ['classifier'] (epoch: 1/10)

Traceback (most recent call last):

File "scripts/main.py", line 191, in <module>

main()

File "scripts/main.py", line 187, in main

engine.run(**engine_run_kwargs(cfg))

File "/home/me/code/deep-person-reid/torchreid/engine/engine.py", line 193, in run

open_layers=open_layers

File "/home/me/code/deep-person-reid/torchreid/engine/engine.py", line 245, in train

loss_summary = self.forward_backward(data)

File "/home/me/code/deep-person-reid/torchreid/engine/image/softmax.py", line 85, in forward_backward

outputs = self.model(imgs)

File "/home/me/.conda/envs/torchreid/lib/python3.7/site-packages/torch/nn/modules/module.py", line 493, in __call__

result = self.forward(*input, **kwargs)

File "/home/me/.conda/envs/torchreid/lib/python3.7/site-packages/torch/nn/parallel/data_parallel.py", line 150, in forward

return self.module(*inputs[0], **kwargs[0])

File "/home/me/.conda/envs/torchreid/lib/python3.7/site-packages/torch/nn/modules/module.py", line 493, in __call__

result = self.forward(*input, **kwargs)

File "/home/me/code/deep-person-reid/torchreid/models/osnet.py", line 429, in forward

v = self.fc(v)

File "/home/me/.conda/envs/torchreid/lib/python3.7/site-packages/torch/nn/modules/module.py", line 493, in __call__

result = self.forward(*input, **kwargs)

File "/home/me/.conda/envs/torchreid/lib/python3.7/site-packages/torch/nn/modules/container.py", line 92, in forward

input = module(input)

File "/home/me/.conda/envs/torchreid/lib/python3.7/site-packages/torch/nn/modules/module.py", line 493, in __call__

result = self.forward(*input, **kwargs)

File "/home/me/.conda/envs/torchreid/lib/python3.7/site-packages/torch/nn/modules/linear.py", line 92, in forward

return F.linear(input, self.weight, self.bias)

File "/home/me/.conda/envs/torchreid/lib/python3.7/site-packages/torch/nn/functional.py", line 1406, in linear

ret = torch.addmm(bias, input, weight.t())

RuntimeError: cublas runtime error : the GPU program failed to execute at /opt/conda/conda-bld/pytorch_1556653215914/work/aten/src/THC/THCBlas.cu:259

```

What worries me is that I did not install conda in `/opt/conda` but in `/usr/local/miniconda3/condabin/conda`. However conda works perfectly.

To reproduce it, one just needs to install it following the README's instructions:

```

# cd to your preferred directory and clone this repo

git clone https://github.com/KaiyangZhou/deep-person-reid.git

# create environment

cd deep-person-reid/

conda create --name torchreid python=3.7

conda activate torchreid

# install dependencies

# make sure `which python` and `which pip` point to the correct path

pip install -r requirements.txt

# install torch and torchvision (select the proper cuda version to suit your machine)

conda install pytorch torchvision cudatoolkit=9.0 -c pytorch

# install torchreid (don't need to re-build it if you modify the source code)

python setup.py develop

# added by me

PATH_TO_DATA="/somewhere_accessible"

mkdir -p $PATH_TO_DATA

```

Here's my conda environment

```

(torchreid) $ conda list

# packages in environment at /home/me/.conda/envs/torchreid:

#

# Name Version Build Channel

_libgcc_mutex 0.1 main

absl-py 0.10.0 pypi_0 pypi

blas 1.0 mkl

ca-certificates 2020.7.22 0

cachetools 4.1.1 pypi_0 pypi

certifi 2020.6.20 py37_0

cffi 1.14.3 py37he30daa8_0

chardet 3.0.4 pypi_0 pypi

cudatoolkit 9.0 h13b8566_0

cycler 0.10.0 pypi_0 pypi

cython 0.29.21 pypi_0 pypi

filelock 3.0.12 pypi_0 pypi

flake8 3.8.3 pypi_0 pypi

freetype 2.10.2 h5ab3b9f_0

future 0.18.2 pypi_0 pypi

gdown 3.12.2 pypi_0 pypi

google-auth 1.21.3 pypi_0 pypi

google-auth-oauthlib 0.4.1 pypi_0 pypi

grpcio 1.32.0 pypi_0 pypi

h5py 2.10.0 pypi_0 pypi

idna 2.10 pypi_0 pypi

imageio 2.9.0 pypi_0 pypi

importlib-metadata 2.0.0 pypi_0 pypi

intel-openmp 2020.2 254

jpeg 9b h024ee3a_2

kiwisolver 1.2.0 pypi_0 pypi

lcms2 2.11 h396b838_0

ld_impl_linux-64 2.33.1 h53a641e_7

libedit 3.1.20191231 h14c3975_1

libffi 3.3 he6710b0_2

libgcc-ng 9.1.0 hdf63c60_0

libpng 1.6.37 hbc83047_0

libstdcxx-ng 9.1.0 hdf63c60_0

libtiff 4.1.0 h2733197_1

lz4-c 1.9.2 he6710b0_1

markdown 3.2.2 pypi_0 pypi

matplotlib 3.3.2 pypi_0 pypi

mkl 2020.2 256

mkl-service 2.3.0 py37he904b0f_0

mkl_fft 1.2.0 py37h23d657b_0

mkl_random 1.1.1 py37h0573a6f_0

ncurses 6.2 he6710b0_1

ninja 1.10.1 py37hfd86e86_0

numpy 1.19.2 pypi_0 pypi

numpy-base 1.19.1 py37hfa32c7d_0

oauthlib 3.1.0 pypi_0 pypi

olefile 0.46 py37_0

opencv-python 4.4.0.44 pypi_0 pypi

openssl 1.1.1h h7b6447c_0

pillow 7.2.0 py37hb39fc2d_0

pip 20.2.2 py37_0

protobuf 3.13.0 pypi_0 pypi

pyasn1 0.4.8 pypi_0 pypi

pyasn1-modules 0.2.8 pypi_0 pypi

pycparser 2.20 py_2

pyflakes 2.2.0 pypi_0 pypi

pyparsing 2.4.7 pypi_0 pypi

pysocks 1.7.1 pypi_0 pypi

python 3.7.9 h7579374_0

python-dateutil 2.8.1 pypi_0 pypi

pytorch 1.1.0 py3.7_cuda9.0.176_cudnn7.5.1_0 pytorch

pyyaml 5.3.1 pypi_0 pypi

readline 8.0 h7b6447c_0

requests 2.24.0 pypi_0 pypi

requests-oauthlib 1.3.0 pypi_0 pypi

rsa 4.6 pypi_0 pypi

scipy 1.5.2 pypi_0 pypi

setuptools 49.6.0 py37_0

six 1.15.0 py_0

sqlite 3.33.0 h62c20be_0

tb-nightly 2.4.0a20200921 pypi_0 pypi

tensorboard-plugin-wit 1.7.0 pypi_0 pypi

tk 8.6.10 hbc83047_0

torchreid 1.3.3 dev_0 <develop>

torchvision 0.3.0 py37_cu9.0.176_1 pytorch

tqdm 4.49.0 pypi_0 pypi

urllib3 1.25.10 pypi_0 pypi

werkzeug 1.0.1 pypi_0 pypi

wheel 0.35.1 pypi_0 pypi

xz 5.2.5 h7b6447c_0

yacs 0.1.8 pypi_0 pypi

yapf 0.30.0 pypi_0 pypi

zipp 3.2.0 pypi_0 pypi

zlib 1.2.11 h7b6447c_3

zstd 1.4.5 h9ceee32_0

```

|

closed

|

2020-09-24T15:40:36Z

|

2020-09-24T21:56:23Z

|

https://github.com/KaiyangZhou/deep-person-reid/issues/375

|

[] |

sopsos

| 2

|

pytorch/vision

|

machine-learning

| 8,845

|

`CocoDetection()` doesn't work using some train and validation images with some annotations

|

### 🚀 The feature

[CocoDetection()](https://pytorch.org/vision/stable/generated/torchvision.datasets.CocoDetection.html) doesn't work using `stuff_train2017_pixelmaps` with `stuff_train2017.json` and using `stuff_val2017_pixelmaps` with `stuff_val2017.json` as shown below:

```python

from torchvision.datasets import CocoDetection

pms_stf_train2017_data = CocoDetection(

root="data/coco/anns/stuff_trainval2017/stuff_train2017_pixelmaps",

annFile="data/coco/anns/stuff_trainval2017/stuff_train2017.json"

)

pms_stf_val2017_data = CocoDetection(

root="data/coco/anns/stuff_trainval2017/stuff_val2017_pixelmaps",

annFile="data/coco/anns/stuff_trainval2017/stuff_val2017.json"

)

pms_stf_train2017_data[0] # Error

pms_stf_val2017_data[0] # Error

```

> FileNotFoundError: [Errno 2] No such file or directory: '/.../data/coco/anns/stuff_trainval2017/stuff_train2017_pixelmaps/000000000009.jpg'

> FileNotFoundError: [Errno 2] No such file or directory: '/../data/coco/anns/stuff_trainval2017/stuff_val2017_pixelmaps/000000000139.jpg'

Also, `CocoDetection()` doesn't work using `panoptic_train2017` with `panoptic_train2017.json` and using `panoptic_val2017` with `panoptic_val2017.json` as shown below:

```python

from torchvision.datasets import CocoDetection

pan_train2017_data = CocoDetection(

root="data/coco/anns/panoptic_trainval2017/panoptic_train2017",

annFile="data/coco/anns/panoptic_trainval2017/panoptic_train2017.json"

) # Error

pan_val2017_data = CocoDetection(

root="data/coco/anns/panoptic_trainval2017/panoptic_val2017",

annFile="data/coco/anns/panoptic_trainval2017/panoptic_val2017.json"

) # Error

```

> KeyError: 'id'

### Motivation, pitch

So, `CocoDetection()` should support them.

### Alternatives

_No response_

### Additional context

_No response_

|

open

|

2025-01-10T15:36:41Z

|

2025-01-13T10:53:20Z

|

https://github.com/pytorch/vision/issues/8845

|

[] |

hyperkai

| 1

|

roboflow/supervision

|

computer-vision

| 1,709

|

Object detection precision-ap

|

### Search before asking

- [X] I have searched the Supervision [issues](https://github.com/roboflow/supervision/issues) and found no similar feature requests.

### Question

Suppose we have a single class object detection problem. Shouldn't the average precision and the precision metrics across the different iou thresholds be the same? This does not seem to be the case here.
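To illustrate the two quantities being compared, here is a small sketch with made-up detections for a single class at one IoU threshold. It uses a plain, non-interpolated average precision (the mean of the precision values at each true positive, divided by the number of ground-truth boxes). Evaluation libraries often use an interpolated variant, so this is not necessarily how Supervision computes it; `precision_at_cutoff`, `average_precision` and the data are hypothetical.

```python
def precision_at_cutoff(detections, cutoff):
    """Precision = TP / (TP + FP) over detections with confidence >= cutoff."""
    kept = [is_tp for confidence, is_tp in detections if confidence >= cutoff]
    return sum(kept) / len(kept) if kept else 0.0


def average_precision(detections, num_ground_truth):
    """Non-interpolated AP: area under the precision-recall curve.

    Detections are ranked by confidence; each true positive raises recall by
    1 / num_ground_truth and contributes the precision measured at its rank.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    true_positives = 0
    ap = 0.0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        if is_tp:
            true_positives += 1
            ap += (true_positives / rank) / num_ground_truth
    return ap


# (confidence, matched a ground-truth box at the chosen IoU threshold?)
detections = [(0.9, True), (0.8, False), (0.7, True), (0.6, True), (0.5, False)]
num_ground_truth = 3

print(precision_at_cutoff(detections, 0.5))   # all five detections kept
print(precision_at_cutoff(detections, 0.85))  # only the top detection kept
print(average_precision(detections, num_ground_truth))
```

Precision is one point on the precision-recall curve and depends on the confidence cutoff, while AP summarizes the whole curve, so even with a single class the two numbers generally differ.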

### Additional

_No response_

|

open

|

2024-12-03T12:55:58Z

|

2024-12-04T10:16:47Z

|

https://github.com/roboflow/supervision/issues/1709

|

[

"question"

] |

GiannisApost

| 1

|

mlfoundations/open_clip

|

computer-vision

| 549

|

build_cls_mask() in CoCa TextTransformer

|

TL;DR: the current implementation of `build_cls_mask()` produces a `cls_mask` that assumes [CLS] is the first token. But in CoCa, [CLS] is the end token.

In [PR 312](https://github.com/mlfoundations/open_clip/pull/312), `build_cls_mask()` was introduced by @gpucce in `TextTransformer` in CoCa for "preventing the CLS token at the end of the sequence from attending to padded tokens".

```python

# https://github.com/mlfoundations/open_clip/blob/main/src/open_clip/transformer.py#L587

def build_cls_mask(self, text, cast_dtype: torch.dtype):

cls_mask = (text != self.pad_id).unsqueeze(1)

cls_mask = F.pad(cls_mask, (1, 0, cls_mask.shape[2], 0), value=1.0)

additive_mask = torch.empty(cls_mask.shape, dtype=cast_dtype, device=cls_mask.device)

additive_mask.fill_(0)

additive_mask.masked_fill_(~cls_mask, float("-inf"))

additive_mask = torch.repeat_interleave(additive_mask, self.heads, 0)

return additive_mask

```

Taking `text = torch.tensor([[1,2,3,4,0,0,0]])` as an example,

```python

import torch

import torch.nn.functional as F

text = torch.tensor([[1,2,3,4,0,0,0]]) ### batch size 1, sequence 4 with 3 padding (pad_id=0)

pad_id = 0

cls_mask = (text != pad_id).unsqueeze(1)

cls_mask = F.pad(cls_mask, (1, 0, cls_mask.shape[2], 0), value=1.0)

additive_mask = torch.empty(cls_mask.shape)

additive_mask.fill_(0)

additive_mask.masked_fill_(~cls_mask, float("-inf"))

print(additive_mask)

```

This outputs

```

tensor([[[0., 0., 0., 0., 0., 0., 0., 0.],

[0., 0., 0., 0., 0., 0., 0., 0.],

[0., 0., 0., 0., 0., 0., 0., 0.],

[0., 0., 0., 0., 0., 0., 0., 0.],

[0., 0., 0., 0., 0., 0., 0., 0.],

[0., 0., 0., 0., 0., 0., 0., 0.],

[0., 0., 0., 0., 0., 0., 0., 0.],

[0., 0., 0., 0., 0., -inf, -inf, -inf]]])

```

In @lucidrains's [implementation](https://github.com/lucidrains/CoCa-pytorch/blob/main/coca_pytorch/coca_pytorch.py#L384-L385)

```python

# https://github.com/lucidrains/CoCa-pytorch/blob/main/coca_pytorch/coca_pytorch.py#L384-L385

cls_mask = rearrange(text!=self.pad_id, 'b j -> b 1 j')

attn_mask = F.pad(cls_mask, (0, 1, seq, 0), value=True)

```

taking the same `text` as the example

```python

import einops

import torch

import torch.nn.functional as F

from einops import rearrange, repeat

text = torch.tensor([[1,2,3,4,0,0,0]])

pad_id = 0

seq = text.shape[1]

cls_mask = rearrange(text!=pad_id, 'b j -> b 1 j')

attn_mask = F.pad(cls_mask, (0, 1, seq, 0), value=True)

print(attn_mask)

```

it produces (which I believe should be the desired outcome)

```

tensor([[[ True, True, True, True, True, True, True, True],

[ True, True, True, True, True, True, True, True],

[ True, True, True, True, True, True, True, True],

[ True, True, True, True, True, True, True, True],

[ True, True, True, True, True, True, True, True],

[ True, True, True, True, True, True, True, True],

[ True, True, True, True, True, True, True, True],

[ True, True, True, True, False, False, False, True]]])

```

Since [CLS] token is appended at the end of a sequence,

```python

# https://github.com/mlfoundations/open_clip/blob/main/src/open_clip/transformer.py#L607

x = torch.cat([x, self._repeat(self.cls_emb, x.shape[0])], dim=1)

```

I feel that the current implementation in `open_clip` is wrong. Am I missing anything?
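For reference, the difference between the two padding directions can be reproduced without torch. The sketch below is plain Python and only mirrors the shapes of the two outputs shown above; `cls_attention_mask` and its `cls_position` argument are hypothetical names, not part of `open_clip` or `CoCa-pytorch`.

```python
def cls_attention_mask(token_ids, pad_id, cls_position):
    """Boolean (seq + 1) x (seq + 1) mask for one sequence; True = may attend.

    Only the last row (the query for the appended [CLS] token) is restricted.
    cls_position says which column of that row belongs to [CLS] itself:
    "first" mirrors F.pad(..., (1, 0, seq, 0)), "last" mirrors (0, 1, seq, 0).
    """
    seq = len(token_ids)
    not_pad = [token != pad_id for token in token_ids]

    if cls_position == "first":
        cls_row = [True] + not_pad
    elif cls_position == "last":
        cls_row = not_pad + [True]
    else:
        raise ValueError("cls_position must be 'first' or 'last'")

    # Text-token rows are left fully open here, as in both outputs above.
    rows = [[True] * (seq + 1) for _ in range(seq)]
    rows.append(cls_row)
    return rows


text = [1, 2, 3, 4, 0, 0, 0]  # four tokens followed by three pads (pad_id=0)

print(cls_attention_mask(text, pad_id=0, cls_position="first")[-1])
print(cls_attention_mask(text, pad_id=0, cls_position="last")[-1])
```

With [CLS] appended at the end, the "first" layout shifts the pad mask by one column: the first pad position stays visible and the [CLS] column itself is masked, whereas the "last" layout masks exactly the three pad positions.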

|

open

|

2023-06-24T04:05:42Z

|

2023-09-20T17:31:30Z

|

https://github.com/mlfoundations/open_clip/issues/549

|

[] |

yiren-jian

| 2

|

CatchTheTornado/text-extract-api

|

api

| 55

|

[feat] Add `markitdown` support

|

https://github.com/microsoft/markitdown

It can be added as another OCR strategy

|

open

|

2025-01-08T10:32:31Z

|

2025-01-19T16:55:21Z

|

https://github.com/CatchTheTornado/text-extract-api/issues/55

|

[

"feature"

] |

pkarw

| 0

|

deezer/spleeter

|

deep-learning

| 775

|

[Discussion] How can I make sure separate is running on the GPU?

|

The separation worked. I used --verbose and it shows some info, but I'm not sure it ran on the GPU. How can I verify that?

```

C:\Users\Administrator\Desktop\testSound>python -m spleeter separate -p spleeter:5stems -o output --verbose audio_example.mp3

INFO:tensorflow:Using config: {'_model_dir': 'pretrained_models\\5stems', '_tf_random_seed': None, '_save_summary_steps': 100, '_save_checkpoints_steps': None, '_save_checkpoints_secs': 600, '_session_config': gpu_options {

per_process_gpu_memory_fraction: 0.7

}

, '_keep_checkpoint_max': 5, '_keep_checkpoint_every_n_hours': 10000, '_log_step_count_steps': 100, '_train_distribute': None, '_device_fn': None, '_protocol': None, '_eval_distribute': None, '_experimental_distribute': None, '_experimental_max_worker_delay_secs': None, '_session_creation_timeout_secs': 7200, '_checkpoint_save_graph_def': True, '_service': None, '_cluster_spec': ClusterSpec({}), '_task_type': 'worker', '_task_id': 0, '_global_id_in_cluster': 0, '_master': '', '_evaluation_master': '', '_is_chief': True, '_num_ps_replicas': 0, '_num_worker_replicas': 1}

WARNING:tensorflow:From C:\Users\Administrator\AppData\Local\Programs\Python\Python310\lib\site-packages\spleeter\separator.py:146: calling DatasetV2.from_generator (from tensorflow.python.data.ops.dataset_ops) with output_types is deprecated and will be removed in a future version.

Instructions for updating:

Use output_signature instead

WARNING:tensorflow:From C:\Users\Administrator\AppData\Local\Programs\Python\Python310\lib\site-packages\spleeter\separator.py:146: calling DatasetV2.from_generator (from tensorflow.python.data.ops.dataset_ops) with output_shapes is deprecated and will be removed in a future version.

Instructions for updating:

Use output_signature instead

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Apply unet for vocals_spectrogram

WARNING:tensorflow:From C:\Users\Administrator\AppData\Local\Programs\Python\Python310\lib\site-packages\keras\layers\normalization\batch_normalization.py:532: _colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version.

Instructions for updating:

Colocations handled automatically by placer.

INFO:tensorflow:Apply unet for piano_spectrogram

INFO:tensorflow:Apply unet for drums_spectrogram

INFO:tensorflow:Apply unet for bass_spectrogram

INFO:tensorflow:Apply unet for other_spectrogram

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from pretrained_models\5stems\model

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:spleeter:File output\audio_example/piano.wav written succesfully

INFO:spleeter:File output\audio_example/other.wav written succesfully

INFO:spleeter:File output\audio_example/vocals.wav written succesfully

INFO:spleeter:File output\audio_example/drums.wav written succesfully

INFO:spleeter:File output\audio_example/bass.wav written succesfully

```

I don't think it ran on the GPU, because it took 28s, which doesn't seem like the right speed. Am I right?
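One way to check, independent of spleeter itself, is to ask TensorFlow which GPUs it can see from the same Python environment. This is a minimal sketch assuming a TensorFlow 2.x install; `visible_gpus` is a hypothetical helper name, and `tf.config.list_physical_devices` is the TensorFlow call doing the work.

```python
def visible_gpus():
    """Return the GPU device names TensorFlow can see, or None without TensorFlow."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    return [device.name for device in tf.config.list_physical_devices("GPU")]


gpus = visible_gpus()
if gpus is None:
    print("TensorFlow is not installed in this environment")
elif gpus:
    print("TensorFlow can see these GPUs:", gpus)
else:
    print("TensorFlow sees no GPU, so it would fall back to the CPU")
```

An empty list usually points to a CPU-only TensorFlow build or missing CUDA/cuDNN libraries rather than to a spleeter problem.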

|

open

|

2022-06-24T04:23:56Z

|

2024-02-04T05:38:42Z

|

https://github.com/deezer/spleeter/issues/775

|

[

"question"

] |

limengqilove

| 2

|

plotly/dash-core-components

|

dash

| 637

|

soft link to LICENSE.txt makes the github license invalid

|

@rpkyle your change of soft link makes the license tab on github invalid. Can you see how to fix that?

|

closed

|

2019-09-04T21:04:48Z

|

2019-09-05T11:29:37Z

|

https://github.com/plotly/dash-core-components/issues/637

|

[] |

byronz

| 1

|

BMW-InnovationLab/BMW-YOLOv4-Training-Automation

|

rest-api

| 10

|

Inference after training the model

|

Are there any ways to do inference/predictions using the latest weight after the model is trained?

I am able to do predictions during the training process using the Custom API at port 8099. However, the port is also closed after the training is finished.

Thanks!

|

closed

|

2020-07-27T19:46:10Z

|

2021-03-12T18:38:05Z

|

https://github.com/BMW-InnovationLab/BMW-YOLOv4-Training-Automation/issues/10

|

[] |

LSQI15

| 4

|

mckinsey/vizro

|

plotly

| 460

|

Multiple Series Line Chart Updates

|

### Description

When creating a line chart with multiple series, I've noticed it's not possible to update the legend and y_axis titles (potentially more). If specifying a single series, the titles are updated and represented accordingly.

### Expected behavior

I'd expect to be able to update the y_axis title and potentially also the legend titles when passing multiple column names (as a python list) to a px.line call, as can be done with dash.

### Which package?

vizro

### Package version

0.1.16

### Python version

3.10.14

### OS

Ubuntu 20.04

### How to Reproduce

Code to reproduce the behavior I'm observing

```

from vizro import Vizro

import vizro.plotly.express as px

import vizro.models as vm

df = px.data.stocks()

page = vm.Page(

title="My first dashboard",

components=[

vm.Graph(id="scatter_chart", figure=px.line(df, x="date", y=["GOOG", 'AAPL']).update_layout(yaxis_title='New Y-axis', legend_title='new legend')),

]

)

dashboard = vm.Dashboard(pages=[page])

Vizro().build(dashboard).run()

```

### Output

Sharing here the resulting dashboard view, titles are not being updated even though updates are specified.

### Code of Conduct

- [X] I agree to follow the [Code of Conduct](https://github.com/mckinsey/vizro/blob/main/CODE_OF_CONDUCT.md).

|

closed

|

2024-05-07T01:22:16Z

|

2024-05-08T01:26:01Z

|

https://github.com/mckinsey/vizro/issues/460

|

[

"Docs :spiral_notepad:",

"General Question :question:"

] |

mkretsch327

| 2

|

jstrieb/github-stats

|

asyncio

| 15

|

Update cronjob schedule

|

Hi!

Nice work, but I believe running the GitHub Action every hour is a bit overkill (over 3000 commits just for the generated images).

Maybe every day or every month would be more reasonable.

What do you think?

|

closed

|

2021-01-14T14:30:23Z

|

2021-01-19T22:46:07Z

|

https://github.com/jstrieb/github-stats/issues/15

|

[] |

sylhare

| 1

|

zappa/Zappa

|

flask

| 842

|

[Migrated] 502 error while deploying

|

Originally from: https://github.com/Miserlou/Zappa/issues/2084 by [tomekbuszewski](https://github.com/tomekbuszewski)

I am getting 502 error while trying to deploy the app.

## Context

I have an app written with Django (3.0.4), works great locally. For dev purposes, I use sqlite only. I wanted to deploy it to AWS today, but I am getting 502 errors.

Sorry, I cannot post an app here, since it's private.

## Expected Behavior

It should be deployed without problems.

## Actual Behavior

Results in 502 error.

## Your Environment

Zappa version: 0.51.0

Django version: 3.0.4

Python: 3.7

Zappa config:

```

{

"dev": {

"aws_region": "eu-central-1",

"django_settings": "hay.hay.settings",

"profile_name": "default",

"project_name": "hundred-a-year",

"runtime": "python3.7",

"s3_bucket": "hay-dev",

"slim_handler": false

}

}

```

Error on AWS:

```

"{'message': 'An uncaught exception happened while servicing this request. You can investigate this with the `zappa tail` command.', 'traceback': ['Traceback (most recent call last):\\n', ' File \"/var/task/handler.py\", line 540, in handler\\n with Response.from_app(self.wsgi_app, environ) as response:\\n', ' File \"/var/task/werkzeug/wrappers/base_response.py\", line 287, in from_app\\n return cls(*_run_wsgi_app(app, environ, buffered))\\n', ' File \"/var/task/werkzeug/wrappers/base_response.py\", line 26, in _run_wsgi_app\\n return _run_wsgi_app(*args)\\n', ' File \"/var/task/werkzeug/test.py\", line 1119, in run_wsgi_app\\n app_rv = app(environ, start_response)\\n', \"TypeError: 'NoneType' object is not callable\\n\"]}"

```

Results of `zappa tail`:

```

[1587228323192] [DEBUG] 2020-04-18T16:45:23.192Z f41f3ef4-dfa2-46dc-95c2-99d6fd9f0852 Zappa Event: {'resource': '/', 'path': '/', 'httpMethod': 'GET', 'headers': {'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9', 'Accept-Encoding': 'gzip, deflate, br', 'Accept-Language': 'pl-PL,pl;q=0.9,en-US;q=0.8,en;q=0.7,ru;q=0.6,so;q=0.5', 'cache-control': 'max-age=0', 'CloudFront-Forwarded-Proto': 'https', 'CloudFront-Is-Desktop-Viewer': 'true', 'CloudFront-Is-Mobile-Viewer': 'false', 'CloudFront-Is-SmartTV-Viewer': 'false', 'CloudFront-Is-Tablet-Viewer': 'false', 'CloudFront-Viewer-Country': 'PL', 'Host': 'yiudwi21ux.execute-api.eu-central-1.amazonaws.com', 'Referer': 'https://eu-central-1.console.aws.amazon.com/apigateway/home?region=eu-central-1', 'sec-fetch-dest': 'document', 'sec-fetch-mode': 'navigate', 'sec-fetch-site': 'cross-site', 'sec-fetch-user': '?1', 'upgrade-insecure-requests': '1', 'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36', 'Via': '2.0 70d111e01220d4724cfea727fa9dfb91.cloudfront.net (CloudFront)', 'X-Amz-Cf-Id': 'VMUK9rPoyIcqWqOjowgpHrWsI-qW0OTnW1YAUIJ0BZHH-Vr7Z3rWCw==', 'X-Amzn-Trace-Id': 'Root=1-5e9b2ea3-5181626ee6404a71bf9a1db9', 'X-Forwarded-For': '89.64.74.188, 54.239.171.153', 'X-Forwarded-Port': '443', 'X-Forwarded-Proto': 'https'}, 'multiValueHeaders': {'Accept': ['text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9'], 'Accept-Encoding': ['gzip, deflate, br'], 'Accept-Language': ['pl-PL,pl;q=0.9,en-US;q=0.8,en;q=0.7,ru;q=0.6,so;q=0.5'], 'cache-control': ['max-age=0'], 'CloudFront-Forwarded-Proto': ['https'], 'CloudFront-Is-Desktop-Viewer': ['true'], 'CloudFront-Is-Mobile-Viewer': ['false'], 'CloudFront-Is-SmartTV-Viewer': ['false'], 'CloudFront-Is-Tablet-Viewer': ['false'], 
'CloudFront-Viewer-Country': ['PL'], 'Host': ['yiudwi21ux.execute-api.eu-central-1.amazonaws.com'], 'Referer': ['https://eu-central-1.console.aws.amazon.com/apigateway/home?region=eu-central-1'], 'sec-fetch-dest': ['document'], 'sec-fetch-mode': ['navigate'], 'sec-fetch-site': ['cross-site'], 'sec-fetch-user': ['?1'], 'upgrade-insecure-requests': ['1'], 'User-Agent': ['Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36'], 'Via': ['2.0 70d111e01220d4724cfea727fa9dfb91.cloudfront.net (CloudFront)'], 'X-Amz-Cf-Id': ['VMUK9rPoyIcqWqOjowgpHrWsI-qW0OTnW1YAUIJ0BZHH-Vr7Z3rWCw=='], 'X-Amzn-Trace-Id': ['Root=1-5e9b2ea3-5181626ee6404a71bf9a1db9'], 'X-Forwarded-For': ['89.64.74.188, 54.239.171.153'], 'X-Forwarded-Port': ['443'], 'X-Forwarded-Proto': ['https']}, 'queryStringParameters': None, 'multiValueQueryStringParameters': None, 'pathParameters': None, 'stageVariables': None, 'requestContext': {'resourceId': '3achqb2vbg', 'resourcePath': '/', 'httpMethod': 'GET', 'extendedRequestId': 'LMQ5gG_WliAFbBw=', 'requestTime': '18/Apr/2020:16:45:23 +0000', 'path': '/dev', 'accountId': '536687225340', 'protocol': 'HTTP/1.1', 'stage': 'dev', 'domainPrefix': 'yiudwi21ux', 'requestTimeEpoch': 1587228323137, 'requestId': 'd3fd55d0-bec3-4424-877b-f6cf00c7affb', 'identity': {'cognitoIdentityPoolId': None, 'accountId': None, 'cognitoIdentityId': None, 'caller': None, 'sourceIp': '89.64.74.188', 'principalOrgId': None, 'accessKey': None, 'cognitoAuthenticationType': None, 'cognitoAuthenticationProvider': None, 'userArn': None, 'userAgent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36', 'user': None}, 'domainName': 'yiudwi21ux.execute-api.eu-central-1.amazonaws.com', 'apiId': 'yiudwi21ux'}, 'body': None, 'isBase64Encoded': False}

[1587228323192] [DEBUG] 2020-04-18T16:45:23.192Z f41f3ef4-dfa2-46dc-95c2-99d6fd9f0852 host found: [yiudwi21ux.execute-api.eu-central-1.amazonaws.com]

[1587228323192] [DEBUG] 2020-04-18T16:45:23.192Z f41f3ef4-dfa2-46dc-95c2-99d6fd9f0852 amazonaws found in host

[1587228323192] 'NoneType' object is not callable

```

I've tried various solutions found on the internet: disabling `slim_handler`, removing `pyc` files, and reinstalling the venv. Nothing helped.

|

closed

|

2021-02-20T12:52:22Z

|

2022-07-16T05:45:41Z

|

https://github.com/zappa/Zappa/issues/842

|

[] |

jneves

| 3

|

autogluon/autogluon

|

computer-vision

| 4,441

|

[BUG] feature_prune_kwargs={"force_prune": True} does not work when tuning_data is set with presets="medium_quality"

|

```

Verbosity: 4 (Maximum Logging)

=================== System Info ===================

AutoGluon Version: 1.1.1

Python Version: 3.11.9

Operating System: Linux

Platform Machine: x86_64

Platform Version: #1 SMP Fri Mar 29 23:14:13 UTC 2024

CPU Count: 8

GPU Count: 1

Memory Avail: 8.36 GB / 23.47 GB (35.6%)

Disk Space Avail: 326.38 GB / 911.84 GB (35.8%)

===================================================

Presets specified: ['medium_quality']

============ fit kwarg info ============

User Specified kwargs: