| repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |

|---|---|---|---|---|---|---|---|---|---|---|---|

flaskbb/flaskbb

|

flask

| 189

|

make: *** [install] Error 1

|

When I ran `make install`, it immediately showed the following:

InsecurePlatformWarning

> /home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/requests/packages/urllib3/util/ssl_.py:120: InsecurePlatformWarning: A true SSLContext object is not available. This prevents urllib3 from configuring SSL appropriately and may cause certain SSL connections to fail. For more information, see https://urllib3.readthedocs.org/en/latest/security.html#insecureplatformwarning.

Then I addressed this by running `pip install pyopenssl ndg-httpsclient pyasn1`, but the error messages kept coming, so I pressed `Ctrl+C` to stop it.

When I ran `make install` again, it returned:

> pip install -r requirements.txt

> Requirement already satisfied (use --upgrade to upgrade): alembic==0.8.4 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 1))

> Requirement already satisfied (use --upgrade to upgrade): Babel==2.2.0 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 2))

> Requirement already satisfied (use --upgrade to upgrade): blinker==1.3 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 3))

> Requirement already satisfied (use --upgrade to upgrade): cov-core==1.15.0 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 4))

> Requirement already satisfied (use --upgrade to upgrade): coverage==4.0.3 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 5))

> Requirement already satisfied (use --upgrade to upgrade): Flask==0.10.1 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 6))

> Requirement already satisfied (use --upgrade to upgrade): flask-allows==0.1.0 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 7))

> Requirement already satisfied (use --upgrade to upgrade): Flask-BabelPlus==1.0.1 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 8))

> Requirement already satisfied (use --upgrade to upgrade): Flask-Cache==0.13.1 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 9))

> Requirement already satisfied (use --upgrade to upgrade): Flask-DebugToolbar==0.10.0 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 10))

> Requirement already satisfied (use --upgrade to upgrade): Flask-Login==0.3.2 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 11))

> Requirement already satisfied (use --upgrade to upgrade): Flask-Mail==0.9.1 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 12))

> Requirement already satisfied (use --upgrade to upgrade): Flask-Migrate==1.7.0 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 13))

> Requirement already satisfied (use --upgrade to upgrade): Flask-Plugins==1.6.1 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 14))

> Requirement already satisfied (use --upgrade to upgrade): Flask-Redis==0.1.0 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 15))

> Requirement already satisfied (use --upgrade to upgrade): Flask-Script==2.0.5 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 16))

> Requirement already satisfied (use --upgrade to upgrade): Flask-SQLAlchemy==2.1 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 17))

> Requirement already satisfied (use --upgrade to upgrade): Flask-Themes2==0.1.4 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 18))

> Requirement already satisfied (use --upgrade to upgrade): Flask-WTF==0.12 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 19))

> Requirement already satisfied (use --upgrade to upgrade): itsdangerous==0.24 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 20))

> Requirement already satisfied (use --upgrade to upgrade): Jinja2==2.8 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 21))

> Requirement already satisfied (use --upgrade to upgrade): Mako==1.0.3 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 22))

> Requirement already satisfied (use --upgrade to upgrade): MarkupSafe==0.23 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 23))

> Requirement already satisfied (use --upgrade to upgrade): mistune==0.7.1 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 24))

> Requirement already satisfied (use --upgrade to upgrade): Pygments==2.1 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 25))

> Requirement already satisfied (use --upgrade to upgrade): pytz==2015.7 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 26))

> Requirement already satisfied (use --upgrade to upgrade): redis==2.10.5 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 27))

> Requirement already satisfied (use --upgrade to upgrade): requests==2.9.1 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 28))

> Requirement already satisfied (use --upgrade to upgrade): simplejson==3.8.1 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 29))

> Requirement already satisfied (use --upgrade to upgrade): six==1.10.0 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 30))

> Requirement already satisfied (use --upgrade to upgrade): speaklater==1.3 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 31))

> Requirement already satisfied (use --upgrade to upgrade): SQLAlchemy==1.0.11 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 32))

> Requirement already satisfied (use --upgrade to upgrade): SQLAlchemy-Utils==0.31.6 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 33))

> Requirement already satisfied (use --upgrade to upgrade): Unidecode==0.04.19 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 34))

> Requirement already satisfied (use --upgrade to upgrade): Werkzeug==0.11.3 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 35))

> Requirement already satisfied (use --upgrade to upgrade): Whoosh==2.7.0 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 36))

> Requirement already satisfied (use --upgrade to upgrade): WTForms==2.1 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 37))

> Requirement already satisfied (use --upgrade to upgrade): Flask-Whooshalchemy from https://github.com/jshipley/Flask-WhooshAlchemy/archive/master.zip in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from -r requirements.txt (line 38))

> Requirement already satisfied (use --upgrade to upgrade): python-editor>=0.3 in /home/xzp/.virtualenvs/flaskbb/lib/python2.7/site-packages (from alembic==0.8.4->-r requirements.txt (line 1))

> Cleaning up...

> clear

>

> python manage.py install

> Creating default data...

> 2016-03-23 05:52:01,852 INFO sqlalchemy.engine.base.Engine SELECT CAST('test plain returns' AS VARCHAR(60)) AS anon_1

> 2016-03-23 05:52:01,852 INFO sqlalchemy.engine.base.Engine ()

> 2016-03-23 05:52:01,853 INFO sqlalchemy.engine.base.Engine SELECT CAST('test unicode returns' AS VARCHAR(60)) AS anon_1

> 2016-03-23 05:52:01,853 INFO sqlalchemy.engine.base.Engine ()

> 2016-03-23 05:52:01,853 INFO sqlalchemy.engine.base.Engine BEGIN (implicit)

> 2016-03-23 05:52:01,854 INFO sqlalchemy.engine.base.Engine INSERT INTO groups (name, description, admin, super_mod, mod, guest, banned, mod_edituser, mod_banuser, editpost, deletepost, deletetopic, posttopic, postreply) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)

> 2016-03-23 05:52:01,854 INFO sqlalchemy.engine.base.Engine ('Administrator', 'The Administrator Group', 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1)

> 2016-03-23 05:52:01,854 INFO sqlalchemy.engine.base.Engine ROLLBACK

> No database found.

> Do you want to create the database now? (y/n) [n]: y

> INFO [alembic.runtime.migration] Context impl SQLiteImpl.

> INFO [alembic.runtime.migration] Will assume non-transactional DDL.

> INFO [sqlalchemy.engine.base.Engine] BEGIN (implicit)

> INFO [sqlalchemy.engine.base.Engine] INSERT INTO groups (name, description, admin, super_mod, mod, guest, banned, mod_edituser, mod_banuser, editpost, deletepost, deletetopic, posttopic, postreply) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)

> INFO [sqlalchemy.engine.base.Engine]('Administrator', 'The Administrator Group', 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1)

> INFO [sqlalchemy.engine.base.Engine] ROLLBACK

```
Traceback (most recent call last):
  File "manage.py", line 317, in <module>
    manager.run()
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/flask_script/__init__.py", line 412, in run
    result = self.handle(sys.argv[0], sys.argv[1:])
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/flask_script/__init__.py", line 383, in handle
    res = handle(*args, **config)
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/flask_script/commands.py", line 216, in __call__
    return self.run(*args, **kwargs)
  File "manage.py", line 137, in install
    create_default_groups()
  File "/home/xzp/PycharmProjects/flaskbb/flaskbb/utils/populate.py", line 155, in create_default_groups
    group.save()
  File "/home/xzp/PycharmProjects/flaskbb/flaskbb/utils/database.py", line 21, in save
    db.session.commit()
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/scoping.py", line 150, in do
    return getattr(self.registry(), name)(*args, **kwargs)
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/session.py", line 813, in commit
    self.transaction.commit()
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/session.py", line 392, in commit
    self._prepare_impl()
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/session.py", line 372, in _prepare_impl
    self.session.flush()
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/session.py", line 2027, in flush
    self._flush(objects)
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/session.py", line 2145, in _flush
    transaction.rollback(_capture_exception=True)
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/util/langhelpers.py", line 60, in __exit__
    compat.reraise(exc_type, exc_value, exc_tb)
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/session.py", line 2109, in _flush
    flush_context.execute()
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/unitofwork.py", line 373, in execute
    rec.execute(self)
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/unitofwork.py", line 532, in execute
    uow
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/persistence.py", line 174, in save_obj
    mapper, table, insert)
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/orm/persistence.py", line 800, in _emit_insert_statements
    execute(statement, params)
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/engine/base.py", line 914, in execute
    return meth(self, multiparams, params)
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/sql/elements.py", line 323, in _execute_on_connection
    return connection._execute_clauseelement(self, multiparams, params)
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/engine/base.py", line 1010, in _execute_clauseelement
    compiled_sql, distilled_params
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/engine/base.py", line 1146, in _execute_context
    context)
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/engine/base.py", line 1341, in _handle_dbapi_exception
    exc_info
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/util/compat.py", line 200, in raise_from_cause
    reraise(type(exception), exception, tb=exc_tb)
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/engine/base.py", line 1139, in _execute_context
    context)
  File "/home/xzp/.virtualenvs/flaskbb/local/lib/python2.7/site-packages/sqlalchemy/engine/default.py", line 450, in do_execute
    cursor.execute(statement, parameters)
sqlalchemy.exc.OperationalError: (sqlite3.OperationalError) no such table: groups [SQL: u'INSERT INTO groups (name, description, admin, super_mod, mod, guest, banned, mod_edituser, mod_banuser, editpost, deletepost, deletetopic, posttopic, postreply) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)'] [parameters: ('Administrator', 'The Administrator Group', 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1)]
make: *** [install] Error 1
```

How to solve this?
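The last line is SQLite reporting that the `groups` table does not exist when `create_default_groups()` issues its INSERT, i.e. the schema was not in place before default data was written. The stdlib sketch below only reproduces that failure mode with an in-memory database and shows that creating the table first avoids it. The two-column `groups` table and the helpers `create_schema`, `insert_default_group` and `try_install` are hypothetical simplifications, not FlaskBB's real schema or code.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    # Hypothetical minimal schema; FlaskBB's real groups table has many more columns.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS groups ("
        "id INTEGER PRIMARY KEY, name TEXT UNIQUE NOT NULL, description TEXT)"
    )
    conn.commit()


def insert_default_group(conn: sqlite3.Connection) -> None:
    # Simplified stand-in for the INSERT shown in the traceback.
    conn.execute(
        "INSERT INTO groups (name, description) VALUES (?, ?)",
        ("Administrator", "The Administrator Group"),
    )
    conn.commit()


def try_install(create_first: bool) -> str:
    """Return "ok" on success, otherwise the sqlite3 error message."""
    conn = sqlite3.connect(":memory:")
    try:
        if create_first:
            create_schema(conn)
        insert_default_group(conn)
        return "ok"
    except sqlite3.OperationalError as exc:
        return str(exc)
    finally:
        conn.close()


print(try_install(create_first=False))  # no such table: groups
print(try_install(create_first=True))   # ok
```

In FlaskBB terms this suggests checking that the tables actually exist before `python manage.py install` populates them; that is an inference from the traceback, not a confirmed fix.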

|

closed

|

2016-03-23T06:01:13Z

|

2018-04-15T07:47:38Z

|

https://github.com/flaskbb/flaskbb/issues/189

|

[] |

XzAmrzs

| 3

|

deepspeedai/DeepSpeed

|

pytorch

| 6,522

|

[BUG] error: `past_key, past_value = layer_past`, how to solve this?

|

**Describe the bug**

When I run training (RLHF step 3) with the following script:

```bash
Actor_Lr=9.65e-6
Critic_Lr=5e-6
#--data_path Dahoas/rm-static \
#--offload_reference_model \
deepspeed --master_port 12346 main_step3.py \
   --data_path ${data_path}/beyond/rlhf-reward-single-round-trans_chinese_step3 \
   --data_split 2,4,4 \
   --actor_model_name_or_path $ACTOR_MODEL_PATH \
   --critic_model_name_or_path $CRITIC_MODEL_PATH \
   --data_output_path ${data_path}/train_data_file_step3 \
   --num_padding_at_beginning 1 \
   --per_device_generation_batch_size 1 \
   --per_device_training_batch_size 1 \
   --generation_batches 1 \
   --ppo_epochs 1 \
   --max_answer_seq_len 256 \
   --max_prompt_seq_len 256 \
   --actor_learning_rate ${Actor_Lr} \
   --critic_learning_rate ${Critic_Lr} \
   --actor_weight_decay 0.1 \
   --critic_weight_decay 0.1 \
   --num_train_epochs 1 \
   --lr_scheduler_type cosine \
   --gradient_accumulation_steps 1 \
   --actor_gradient_checkpointing \
   --critic_gradient_checkpointing \
   --actor_dropout 0.0 \
   --num_warmup_steps 100 \
   --deepspeed --seed 1234 \
   --enable_hybrid_engine \
   --actor_zero_stage $ACTOR_ZERO_STAGE \
   --critic_zero_stage $CRITIC_ZERO_STAGE \
   --enable_ema \
   --output_dir $output_path \
```

**Log output**

i got error:

```
[rank3]: ValueError: not enough values to unpack (expected 2, got 0)
[rank1]: Traceback (most recent call last):
[rank1]:   File "/home/deepspeed/DeepSpeedExamples/applications/DeepSpeed-Chat/main_step3.py", line 673, in <module>
[rank1]:     main()
[rank1]:   File "/home/deepspeed/DeepSpeedExamples/applications/DeepSpeed-Chat/main_step3.py", line 527, in main
[rank1]:     out = trainer.generate_experience(batch_prompt['prompt'],
[rank1]:           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/deepspeed/DeepSpeedExamples/applications/DeepSpeed-Chat/dschat/rlhf/ppo_trainer.py", line 140, in generate_experience
[rank1]:     seq = self._generate_sequence(prompts, mask, step)
[rank1]:           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/deepspeed/DeepSpeedExamples/applications/DeepSpeed-Chat/dschat/rlhf/ppo_trainer.py", line 87, in _generate_sequence
[rank1]:     seq = self.actor_model.module.generate(
[rank1]:           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/deepspeed/runtime/hybrid_engine.py", line 253, in generate
[rank1]:     generate_ret_vals = self._generate(*inputs, **kwargs)
[rank1]:                         ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/torch/utils/_contextlib.py", line 116, in decorate_context
[rank1]:     return func(*args, **kwargs)
[rank1]:            ^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/transformers/generation/utils.py", line 2024, in generate
[rank1]:     result = self._sample(
[rank1]:              ^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/transformers/generation/utils.py", line 2982, in _sample
[rank1]:     outputs = self(**model_inputs, return_dict=True)
[rank1]:               ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1553, in _wrapped_call_impl
[rank1]:     return self._call_impl(*args, **kwargs)
[rank1]:            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1609, in _call_impl
[rank1]:     result = forward_call(*args, **kwargs)
[rank1]:              ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/transformers/models/bloom/modeling_bloom.py", line 955, in forward
[rank1]:     transformer_outputs = self.transformer(
[rank1]:                           ^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1553, in _wrapped_call_impl
[rank1]:     return self._call_impl(*args, **kwargs)
[rank1]:            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1609, in _call_impl
[rank1]:     result = forward_call(*args, **kwargs)
[rank1]:              ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/transformers/models/bloom/modeling_bloom.py", line 744, in forward
[rank1]:     outputs = block(
[rank1]:               ^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1553, in _wrapped_call_impl
[rank1]:     return self._call_impl(*args, **kwargs)
[rank1]:            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1609, in _call_impl
[rank1]:     result = forward_call(*args, **kwargs)
[rank1]:              ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/deepspeed/model_implementations/transformers/ds_transformer.py", line 171, in forward
[rank1]:     self.attention(input,
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1553, in _wrapped_call_impl
[rank1]:     return self._call_impl(*args, **kwargs)
[rank1]:            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/torch/nn/modules/module.py", line 1568, in _call_impl
[rank1]:     return forward_call(*args, **kwargs)
[rank1]:            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/deepspeed/ops/transformer/inference/ds_attention.py", line 160, in forward
[rank1]:     context_layer, key_layer, value_layer = self.compute_attention(qkv_out=qkv_out,
[rank1]:                                             ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
[rank1]:   File "/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/deepspeed/ops/transformer/inference/ds_attention.py", line 239, in compute_attention
[rank1]:     past_key, past_value = layer_past
[rank1]:     ^^^^^^^^^^^^^^^^^^^^
[rank1]: ValueError: not enough values to unpack (expected 2, got 0)
huggingface/tokenizers: The current process just got forked, after parallelism has already been used. Disabling parallelism to avoid deadlocks...
To disable this warning, you can either:
	- Avoid using `tokenizers` before the fork if possible
	- Explicitly set the environment variable TOKENIZERS_PARALLELISM=(true | false)
```
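The last frame fails because `layer_past` arrives as an empty sequence, so unpacking it into two names raises exactly this `ValueError`. The sketch below only reproduces that Python-level failure and shows a defensive unpack; `naive_unpack` and `split_layer_past` are hypothetical names, not DeepSpeed functions, and the guard does not explain why the hybrid engine receives an empty `layer_past` in this setup.

```python
from typing import Any, Optional, Sequence, Tuple


def naive_unpack(layer_past: Sequence[Any]) -> Tuple[Any, Any]:
    # Same pattern as the failing line in ds_attention.py.
    past_key, past_value = layer_past
    return past_key, past_value


def split_layer_past(layer_past: Optional[Sequence[Any]]) -> Tuple[Any, Any]:
    """Hypothetical guard: treat a missing or empty cache as "no past"."""
    if layer_past is None or len(layer_past) == 0:
        return None, None
    if len(layer_past) != 2:
        raise ValueError(f"expected (key, value), got {len(layer_past)} items")
    past_key, past_value = layer_past
    return past_key, past_value


try:
    naive_unpack(())
    naive_error = ""
except ValueError as exc:
    naive_error = str(exc)

print(naive_error)                   # not enough values to unpack (expected 2, got 0)
print(split_layer_past(()))          # (None, None)
print(split_layer_past(("k", "v")))  # ('k', 'v')
```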

**To Reproduce**

Steps to reproduce the behavior:

1. Command/Script to reproduce

2. What packages are required and their versions

3. How to run the script

4. ...

**Expected behavior**

A clear and concise description of what you expected to happen.

**ds_report output**

```
# ds_report

[2024-09-11 19:27:52,618] [INFO] [real_accelerator.py:203:get_accelerator] Setting ds_accelerator to cuda (auto detect)

--------------------------------------------------

DeepSpeed C++/CUDA extension op report

--------------------------------------------------

NOTE: Ops not installed will be just-in-time (JIT) compiled at

runtime if needed. Op compatibility means that your system

meet the required dependencies to JIT install the op.

--------------------------------------------------

JIT compiled ops requires ninja

ninja .................. [OKAY]

--------------------------------------------------

op name ................ installed .. compatible

--------------------------------------------------

async_io ............... [NO] ....... [OKAY]

fused_adam ............. [NO] ....... [OKAY]

cpu_adam ............... [NO] ....... [OKAY]

cpu_adagrad ............ [NO] ....... [OKAY]

cpu_lion ............... [NO] ....... [OKAY]

[WARNING] Please specify the CUTLASS repo directory as environment variable $CUTLASS_PATH

evoformer_attn ......... [NO] ....... [NO]

fp_quantizer ........... [NO] ....... [OKAY]

fused_lamb ............. [NO] ....... [OKAY]

fused_lion ............. [NO] ....... [OKAY]

gds .................... [NO] ....... [OKAY]

inference_core_ops ..... [NO] ....... [OKAY]

cutlass_ops ............ [NO] ....... [OKAY]

transformer_inference .. [NO] ....... [OKAY]

quantizer .............. [NO] ....... [OKAY]

ragged_device_ops ...... [NO] ....... [OKAY]

ragged_ops ............. [NO] ....... [OKAY]

random_ltd ............. [NO] ....... [OKAY]

[WARNING] sparse_attn requires a torch version >= 1.5 and < 2.0 but detected 2.4

[WARNING] using untested triton version (3.0.0), only 1.0.0 is known to be compatible

sparse_attn ............ [NO] ....... [NO]

spatial_inference ...... [NO] ....... [OKAY]

transformer ............ [NO] ....... [OKAY]

stochastic_transformer . [NO] ....... [OKAY]

--------------------------------------------------

DeepSpeed general environment info:

torch install path ............... ['/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/torch']

torch version .................... 2.4.0+cu121

deepspeed install path ........... ['/home/tools/anaconda3/envs/deepspeed/lib/python3.12/site-packages/deepspeed']

deepspeed info ................... 0.15.1, unknown, unknown

torch cuda version ............... 12.1

torch hip version ................ None

nvcc version ..................... 12.1

deepspeed wheel compiled w. ...... torch 2.4, cuda 12.1

shared memory (/dev/shm) size .... 503.77 GB

```

**Screenshots**

If applicable, add screenshots to help explain your problem.

**System info (please complete the following information):**

```

- OS: Ubuntu 20.04.6 LTS

- GPU: 4x NVIDIA L20 (46 GB)

- (if applicable) what [DeepSpeed-MII](https://github.com/microsoft/deepspeed-mii) 0.15.1

- (if applicable) Hugging Face Transformers/Accelerate/etc. versions 4.44.2

- Python 3.12.0

- transformers 4.44.2

- cuda 12.1

- torch 2.4.0

- deepspeed 0.15.1

- accelerate 0.33.0

- Any other relevant info about your setup

```

**Docker context**

Are you using a specific docker image that you can share?

**Additional context**

```

home/deepspeed/DeepSpeedExamples/applications/DeepSpeed-Chat/dschat/utils/model/model_utils.py:155: FutureWarning: You are using `torch.load` with `weights_only=False` (the current default value), which uses the default pickle module implicitly. It is possible to construct malicious pickle data which will execute arbitrary code during unpickling (See https://github.com/pytorch/pytorch/blob/main/SECURITY.md#untrusted-models for more details). In a future release, the default value for `weights_only` will be flipped to `True`. This limits the functions that could be executed during unpickling. Arbitrary objects will no longer be allowed to be loaded via this mode unless they are explicitly allowlisted by the user via `torch.serialization.add_safe_globals`. We recommend you start setting `weights_only=True` for any use case where you don't have full control of the loaded file. Please open an issue on GitHub for any issues related to this experimental feature.

model_ckpt_state_dict = torch.load(model_ckpt_path, map_location='cpu')

```


|

open

|

2024-09-11T11:25:48Z

|

2024-10-08T19:47:54Z

|

https://github.com/deepspeedai/DeepSpeed/issues/6522

|

["bug", "deepspeed-chat"] |

lovychen

| 2

|

huggingface/text-generation-inference

|

nlp

| 2,819

|

Failure when starting the model using TGI 3

|

### System Info

I tried to serve Llama 3.1 8B with TGI on an A10 (24 GB) with a 4k context length.

Command:

```

docker run --gpus all -it --rm -p 8000:80 ghcr.io/huggingface/text-generation-inference:3.0.0 --model-id NousResearch/Meta-Llama-3.1-8B-Instruct --max-total-tokens 4096 --dtype bfloat16

```

The same command works with the image `ghcr.io/huggingface/text-generation-inference:2.2.0`, but with `3.0.0` I got the following error:

```

2024-12-10T21:24:12.674619Z INFO text_generation_launcher: Starting Webserver

2024-12-10T21:24:12.849356Z INFO text_generation_router_v3: backends/v3/src/lib.rs:125: Warming up model

2024-12-10T21:25:42.531534Z ERROR warmup{max_input_length=None max_prefill_tokens=8192 max_total_tokens=Some(4096) max_batch_size=None}:warmup: text_generation_router_v3::client: backends/v3/src/client/mod.rs:45: Server error: transport error

Error: Backend(Warmup(Generation("transport error")))

2024-12-10T21:25:42.679824Z ERROR text_generation_launcher: Webserver Crashed

2024-12-10T21:25:42.684321Z INFO text_generation_launcher: Shutting down shards

2024-12-10T21:25:42.698301Z ERROR shard-manager: text_generation_launcher: Shard complete standard error output:

2024-12-10 21:23:52.620 | INFO | text_generation_server.utils.import_utils:<module>:80 - Detected system cuda

/opt/conda/lib/python3.11/site-packages/text_generation_server/layers/gptq/triton.py:242: FutureWarning: `torch.cuda.amp.custom_fwd(args...)` is deprecated. Please use `torch.amp.custom_fwd(args..., device_type='cuda')` instead.

@custom_fwd(cast_inputs=torch.float16)

/opt/conda/lib/python3.11/site-packages/mamba_ssm/ops/selective_scan_interface.py:158: FutureWarning: `torch.cuda.amp.custom_fwd(args...)` is deprecated. Please use `torch.amp.custom_fwd(args..., device_type='cuda')` instead.

@custom_fwd

/opt/conda/lib/python3.11/site-packages/mamba_ssm/ops/selective_scan_interface.py:231: FutureWarning: `torch.cuda.amp.custom_bwd(args...)` is deprecated. Please use `torch.amp.custom_bwd(args..., device_type='cuda')` instead.

@custom_bwd

/opt/conda/lib/python3.11/site-packages/mamba_ssm/ops/triton/layernorm.py:507: FutureWarning: `torch.cuda.amp.custom_fwd(args...)` is deprecated. Please use `torch.amp.custom_fwd(args..., device_type='cuda')` instead.

@custom_fwd

/opt/conda/lib/python3.11/site-packages/mamba_ssm/ops/triton/layernorm.py:566: FutureWarning: `torch.cuda.amp.custom_bwd(args...)` is deprecated. Please use `torch.amp.custom_bwd(args..., device_type='cuda')` instead.

@custom_bwd

/opt/conda/lib/python3.11/site-packages/torch/distributed/c10d_logger.py:79: FutureWarning: You are using a Backend <class 'text_generation_server.utils.dist.FakeGroup'> as a ProcessGroup. This usage is deprecated since PyTorch 2.0. Please use a public API of PyTorch Distributed instead.

return func(*args, **kwargs) rank=0

2024-12-10T21:25:42.700830Z ERROR shard-manager: text_generation_launcher: Shard process was signaled to shutdown with signal 9 rank=0

```
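As a rough sanity check against the A10's 24 GB, the back-of-envelope arithmetic below estimates bf16 weight memory and KV-cache size for the `--max-total-tokens 4096` in the command and the `max_prefill_tokens=8192` shown in the warmup log. The model shape (about 8e9 parameters, 32 layers, 8 KV heads, head dimension 128) is an assumption stated in the code, and the estimate ignores activations, CUDA graphs and framework overhead. It does not establish why the shard was killed: signal 9 is SIGKILL, and the log does not show who sent it.

```python
GIB = 1024 ** 3


def weight_bytes(n_params: float, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone; bfloat16 uses 2 bytes per parameter."""
    return n_params * bytes_per_param


def kv_cache_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int,
                             bytes_per_elem: int = 2) -> int:
    """Key + value cache for one token across all layers (factor 2 = K and V)."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem


# Assumed Llama 3.1 8B shape: ~8e9 params, 32 layers, 8 KV heads, head_dim 128.
weights = weight_bytes(8e9)
per_token = kv_cache_bytes_per_token(32, 8, 128)

print(f"weights ~ {weights / GIB:.1f} GiB")                      # weights ~ 14.9 GiB
print(f"KV per token = {per_token // 1024} KiB")                 # KV per token = 128 KiB
print(f"KV for 4096 tokens = {per_token * 4096 / GIB:.2f} GiB")  # 0.50 GiB
print(f"KV for 8192 tokens = {per_token * 8192 / GIB:.2f} GiB")  # 1.00 GiB
```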

### Information

- [X] Docker

- [ ] The CLI directly

### Tasks

- [X] An officially supported command

- [ ] My own modifications

### Reproduction

```

docker run --gpus all -it --rm -p 8000:80 ghcr.io/huggingface/text-generation-inference:3.0.0 --model-id NousResearch/Meta-Llama-3.1-8B-Instruct --max-total-tokens 4096 --dtype bfloat16

```

### Expected behavior

The model should be served successfully.

|

open

|

2024-12-10T21:36:23Z

|

2024-12-11T09:05:01Z

|

https://github.com/huggingface/text-generation-inference/issues/2819

|

[] |

hahmad2008

| 0

|

PablocFonseca/streamlit-aggrid

|

streamlit

| 108

|

Customize headers and hover behavior

|

Hey @PablocFonseca , thanks for this amazing streamlit component. Is there a way to customize the following items:

1. Header rows: background color, font color, font size, etc. I tried custom CSS, but it doesn't seem to work:

```python
AgGrid(
    final_df,
    fit_columns_on_grid_load=True,
    custom_css={
        "header-background-color": "#7FB56C",
        "background-color": "#3B506C",
    },
)
```

2. Change the hover and selection behavior for rows and/or columns.

3. Change the default size of the AgGrid table.
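The snippet above passes bare property names, whereas st_aggrid's `custom_css` appears to expect CSS selectors mapped to property dicts (an assumption here, not confirmed in this issue). A hedged sketch of that shape follows; the keys are AG Grid class names (`.ag-header`, `.ag-header-cell-label`, `.ag-row-hover`, `.ag-row-selected`), which can differ between AG Grid versions and themes, and `header_and_hover_css` is a hypothetical helper.

```python
from typing import Dict

CssRules = Dict[str, Dict[str, str]]


def header_and_hover_css(header_bg: str, header_fg: str, header_font_size: str,
                         hover_bg: str, selected_bg: str) -> CssRules:
    """Build a selector -> properties mapping for custom_css.

    "!important" is added so the rules can override the grid theme's defaults.
    """
    return {
        ".ag-header": {"background-color": f"{header_bg} !important"},
        ".ag-header-cell-label": {
            "color": f"{header_fg} !important",
            "font-size": f"{header_font_size} !important",
        },
        ".ag-row-hover": {"background-color": f"{hover_bg} !important"},
        ".ag-row-selected": {"background-color": f"{selected_bg} !important"},
    }


css = header_and_hover_css("#7FB56C", "#FFFFFF", "14px", "#3B506C", "#5A7BA0")
for selector, props in css.items():
    print(selector, props)
```

It would then be passed as `AgGrid(final_df, fit_columns_on_grid_load=True, custom_css=css, height=300)`, where `height` (in pixels) addresses the table-size question; both keyword names should be checked against the installed st_aggrid version.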

|

closed

|

2022-06-23T22:04:06Z

|

2024-04-04T17:53:58Z

|

https://github.com/PablocFonseca/streamlit-aggrid/issues/108

|

[] |

hummingbird1989

| 1

|

iperov/DeepFaceLab

|

machine-learning

| 5,468

|

Can't access earlier backups

|

THIS IS NOT TECH SUPPORT FOR NEWBIE FAKERS

POST ONLY ISSUES RELATED TO BUGS OR CODE

## Expected behavior

Being able to "start over" from an earlier backup of the model.

## Actual behavior

When I delete the backup folders and start training, it just keeps on training as if I hadn't deleted anything.

So how am I supposed to go back to an earlier backup when, for example, I'm not happy with the result?
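Deleting the autobackup folders leaves the current model files in the model folder untouched, which would explain why training simply continues. Going back presumably means copying one backup's files over the current ones. The sketch below assumes the layout `<model_name>_autobackups/01`, `02`, ... with each numbered folder holding a full copy of the model files; that layout, the demo file name and `restore_backup` itself are assumptions for illustration, so stop training and keep a copy of the current files before trying anything like it.

```python
import shutil
import tempfile
from pathlib import Path
from typing import List


def restore_backup(model_dir: Path, model_name: str, backup_id: int) -> List[str]:
    """Copy every file in <model_name>_autobackups/<NN> back into model_dir.

    Assumed layout: each numbered folder holds a full copy of the model files.
    Same-named files in model_dir are overwritten.
    """
    backup_dir = model_dir / f"{model_name}_autobackups" / f"{backup_id:02d}"
    if not backup_dir.is_dir():
        raise FileNotFoundError(f"no such backup folder: {backup_dir}")
    restored = []
    for src in sorted(backup_dir.iterdir()):
        if src.is_file():
            shutil.copy2(src, model_dir / src.name)  # overwrite the current file
            restored.append(src.name)
    return restored


# Demo in a throwaway directory with a hypothetical model file name.
with tempfile.TemporaryDirectory() as tmp:
    model = Path(tmp)
    backup = model / "new_SAEHD_autobackups" / "01"
    backup.mkdir(parents=True)
    (backup / "new_SAEHD_data.dat").write_text("older weights")
    (model / "new_SAEHD_data.dat").write_text("current weights")
    restored_names = restore_backup(model, "new_SAEHD", 1)
    restored_text = (model / "new_SAEHD_data.dat").read_text()

print(restored_names, restored_text)  # ['new_SAEHD_data.dat'] older weights
```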

## Steps to reproduce

Open the model folder, go to "new_SAEHD_autobackups"

## Other relevant information

- **Command line used (if not specified in steps to reproduce)**: main.py ...

- **Operating system and version:** Windows, macOS, Linux

- **Python version:** 3.5, 3.6.4, ... (if you are not using prebuilt windows binary)

|

closed

|

2022-02-01T13:53:19Z

|

2022-03-19T07:15:56Z

|

https://github.com/iperov/DeepFaceLab/issues/5468

|

[] |

bioheater

| 0

|

ansible/ansible

|

python

| 84,636

|

Data Tagging PR Merge Blocking Tracker

|

This is an omnibus issue to track items blocking merge of #84621.

@ansibot bot_skip

|

open

|

2025-01-30T00:49:17Z

|

2025-01-30T01:04:01Z

|

https://github.com/ansible/ansible/issues/84636

|

[] |

nitzmahone

| 0

|

wagtail/wagtail

|

django

| 12,937

|

CSP style-src refactorings to avoid unsafe-inline

|

### Issue Summary

Part of [CSP compatibility issues #1288](https://github.com/wagtail/wagtail/issues/1288). There are a few places in Wagtail where styling can be refactored to avoid inline styles.

- Half seem to need only HTML changes: removing the inline styles altogether, replacing them with Tailwind, or refactoring to use an existing CSS class / component.

- The other half are similar but more likely to also require JS changes.

#### HTML & CSS refactorings

- [ ] [wagtailadmin/pages/add_subpage.html#L32](https://github.com/wagtail/wagtail/blob/main/wagtail/admin/templates/wagtailadmin/pages/add_subpage.html#L32)

- [ ] [wagtailadmin/pages/confirm_delete.html#L95](https://github.com/wagtail/wagtail/blob/main/wagtail/admin/templates/wagtailadmin/pages/confirm_delete.html#L95)

- [ ] [wagtailadmin/pages/edit_alias.html#L5](https://github.com/wagtail/wagtail/blob/main/wagtail/admin/templates/wagtailadmin/pages/edit_alias.html#L5)

- [ ] [wagtailstyleguide/base.html#L115](https://github.com/wagtail/wagtail/blob/main/wagtail/contrib/styleguide/templates/wagtailstyleguide/base.html#L115)

- [ ] [wagtailstyleguide/base.html#L124](https://github.com/wagtail/wagtail/blob/main/wagtail/contrib/styleguide/templates/wagtailstyleguide/base.html#L124)

- [ ] [wagtailstyleguide/base.html#L133](https://github.com/wagtail/wagtail/blob/main/wagtail/contrib/styleguide/templates/wagtailstyleguide/base.html#L133)

- [ ] [wagtailstyleguide/base.html#L470](https://github.com/wagtail/wagtail/blob/main/wagtail/contrib/styleguide/templates/wagtailstyleguide/base.html#L470)

- [ ] [wagtailstyleguide/base.html#L496](https://github.com/wagtail/wagtail/blob/main/wagtail/contrib/styleguide/templates/wagtailstyleguide/base.html#L496)

- [ ] [wagtaildocs/multiple/add.html#L52](https://github.com/wagtail/wagtail/blob/main/wagtail/documents/templates/wagtaildocs/multiple/add.html#L52)

- [ ] [wagtaildocs/multiple/add.html#L62](https://github.com/wagtail/wagtail/blob/main/wagtail/documents/templates/wagtaildocs/multiple/add.html#L62)

- [ ] [wagtailimages/images/url_generator.html#L5](https://github.com/wagtail/wagtail/blob/main/wagtail/images/templates/wagtailimages/images/url_generator.html#L5)

### JS changes possibly required

For those, there’s more of a need to confirm what the correct change is, and integrate with existing JS code. The `display: none` ones might be refactor-able to the `hidden` attribute or a "hidden" or `hidden!` utility class, checking specificity.

- [ ] [wagtailadmin/shared/icons.html#L2](https://github.com/wagtail/wagtail/blob/main/wagtail/admin/templates/wagtailadmin/shared/icons.html#L2)

- [ ] [wagtailsearchpromotions/includes/searchpromotion_form.html#L5](https://github.com/wagtail/wagtail/blob/main/wagtail/contrib/search_promotions/templates/wagtailsearchpromotions/includes/searchpromotion_form.html#L5)

- [ ] [wagtailstyleguide/base.html#L423](https://github.com/wagtail/wagtail/blob/main/wagtail/contrib/styleguide/templates/wagtailstyleguide/base.html#L423)

- [ ] [wagtailimages/images/edit.html#L32](https://github.com/wagtail/wagtail/blob/main/wagtail/images/templates/wagtailimages/images/edit.html#L32)

### Steps to Reproduce

Search for `style=` in the Wagtail code (ignoring email templates, tests, docs, and developer tools) or run a [CSP scanner](https://github.com/thibaudcolas/wagtail-tooling/tree/main/csp)
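A minimal sketch of the search described above, written as a standalone script. The function name `find_inline_styles` and the default exclusion list are hypothetical approximations of "ignoring email templates, tests, docs, and developer tools"; they would need adjusting to the real repository layout.

```python
from __future__ import annotations

import re
from pathlib import Path

# Matches a style attribute but not e.g. data-style="...".
STYLE_ATTR = re.compile(r"""(?<![\w-])style\s*=\s*["']""", re.IGNORECASE)


def find_inline_styles(
    root: Path, excluded_dirs: tuple[str, ...] = ("tests", "docs")
) -> list[tuple[Path, int, str]]:
    """Return (relative path, line number, line) for each inline style in HTML templates."""
    hits = []
    for path in sorted(root.rglob("*.html")):
        rel = path.relative_to(root)
        # Skip any template that sits under an excluded directory.
        if any(part in excluded_dirs for part in rel.parts):
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        for lineno, line in enumerate(text.splitlines(), start=1):
            if STYLE_ATTR.search(line):
                hits.append((rel, lineno, line.strip()))
    return hits
```

Running `find_inline_styles(Path("wagtail"))` from a checkout would list candidates such as the `display: none` cases, which may then be refactored to the `hidden` attribute as discussed above.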

### Working on this

<!--

Do you have thoughts on skills needed?

Are you keen to work on this yourself once the issue has been accepted?

Please let us know here.

-->

See [CSP compatibility issues #1288](https://github.com/wagtail/wagtail/issues/1288). View our [contributing guidelines](https://docs.wagtail.org/en/latest/contributing/index.html), and add a comment to the issue once you’re ready to start. Consider picking only some of the items on this list.

|

open

|

2025-03-04T12:23:00Z

|

2025-03-04T14:12:56Z

|

https://github.com/wagtail/wagtail/issues/12937

|

[

"type:Cleanup/Optimisation",

"component:Security"

] |

thibaudcolas

| 1

|

brightmart/text_classification

|

tensorflow

| 112

|

suggest upgrade to support python3

|

open

|

2019-03-13T12:34:27Z

|

2023-11-13T10:05:56Z

|

https://github.com/brightmart/text_classification/issues/112

|

[] |

kevinew

| 1

|

|

amidaware/tacticalrmm

|

django

| 2,095

|

[Feature Request] Advanced Detailed Logging and Features to accommodate

|

**Feature Request: Advanced Detailed Logging**

**Description:**

Requesting an option for Advanced Detailed Logging to enhance the system's logging of device connectivity and remote session events. This feature would provide deeper insight and traceability, particularly for actions that are currently logged only when specific alerts are enabled.

**Key Features:**

1. Device Connectivity Logging:
   - Log disconnection and reconnection events for all devices, regardless of whether alerts for these events are enabled.
   - Include timestamps and device identifiers to accurately track offline and online durations.
2. Remote Session Logging:
   - Disconnection events: log when a remote session ends, capturing the session duration.
   - Session type: log the type of remote session (Take Control or Remote Background).
3. Idle Timeout Auto-Disconnect:
   - Introduce an optional feature to automatically terminate remote sessions after a specified period of inactivity (e.g., 15, 30, or 60 minutes).
4. Enhanced Details:
   - Clearly distinguish user-initiated disconnections from system-triggered ones (e.g., auto-disconnect).
   - Include IP addresses or user identifiers (if available) associated with the session for audit purposes.
5. Optional Settings for Advanced Logging:
   - Allow administrators to toggle Advanced Detailed Logging on or off per site, per client, or globally.
   - Include filters to specify which event types are logged (e.g., device disconnections, session auto-disconnects, or specific session types).

**Benefits:**

- Enhanced auditability: provides comprehensive logs for compliance and troubleshooting, and tracks exact periods of device downtime and remote session usage.
- Improved security: automatically terminating idle sessions reduces the risk of unauthorized access, and detailed session logs support forensic analysis.
- Operational efficiency: enables better monitoring of device and session uptime/downtime, and offers clear insight into inactive-session trends to optimize resource usage.

This feature would significantly enhance the system's logging capabilities, making it a more powerful tool for administrators and auditors. Please consider this addition to improve traceability and operational oversight.
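To make the idle-timeout and "distinguish the disconnect reason" requests concrete, here is a minimal sketch of the requested behavior. It is not Tactical RMM code: the `RemoteSession` fields, the record keys, and `reap_idle_sessions` are hypothetical names chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RemoteSession:
    session_id: str
    device_id: str
    session_type: str      # e.g. "Take Control" or "Remote Background"
    user: str
    started_at: float      # epoch seconds
    last_activity: float   # epoch seconds of the last observed activity


def reap_idle_sessions(
    sessions: list[RemoteSession], now: float, idle_timeout_s: float
) -> tuple[list[RemoteSession], list[dict]]:
    """Split sessions into those still open and log records for idle ones.

    Each record marks the disconnect as system-triggered, so it can be told
    apart from a user-initiated disconnect in the audit log.
    """
    still_open: list[RemoteSession] = []
    records: list[dict] = []
    for s in sessions:
        if now - s.last_activity >= idle_timeout_s:
            records.append({
                "event": "remote_session_ended",
                "reason": "system:idle_timeout",
                "session_id": s.session_id,
                "device_id": s.device_id,
                "session_type": s.session_type,
                "user": s.user,
                "duration_s": now - s.started_at,
                "ended_at": now,
            })
        else:
            still_open.append(s)
    return still_open, records
```

A periodic task would call this with the configured timeout (e.g. 1800 seconds for 30 minutes), terminate the sessions behind the returned records, and write the records to the audit log.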

|

open

|

2024-12-06T00:23:42Z

|

2025-02-18T16:09:57Z

|

https://github.com/amidaware/tacticalrmm/issues/2095

|

[] |

NavCC

| 6

|

miguelgrinberg/python-socketio

|

asyncio

| 614

|

Code which was working on socketio version 4.6.0 is not working now in version 5.0.4

|

With `python-socketio` version 4.6.0 and `python-engineio` version 3.13.1, the client connects and works perfectly without any problem.

Note: `Namespace / is connected` and no rejection from server side

Version information of socketio & engineio

```
>pip show python-socketio
Name: python-socketio
Version: 4.6.0
Summary: Socket.IO server
Home-page: http://github.com/miguelgrinberg/python-socketio/
Author: Miguel Grinberg
Author-email: miguelgrinberg50@gmail.com
License: MIT
Location: c:\python38\lib\site-packages
Requires: six, python-engineio
Required-by:

>pip show python-engineio
Name: python-engineio
Version: 3.13.1
Summary: Engine.IO server
Home-page: http://github.com/miguelgrinberg/python-engineio/
Author: Miguel Grinberg
Author-email: miguelgrinberg50@gmail.com
License: MIT
Location: c:\python38\lib\site-packages
Requires: six
Required-by: python-socketio
```

Logging enabled in both libraries:

```
>python main.py
23:37:40.891.263, client, INFO, Attempting WebSocket connection to wss://ws.upstox.com/socket.io/? information removed &transport=websocket&EIO=3
23:37:41.931.590, client, INFO, WebSocket connection accepted with {'sid': 'AKfyGHnlpuv_91ZTAJFS', 'upgrades': [], 'pingInterval': 2000, 'pingTimeout': 60000}
23:37:41.931.590, client, INFO, Engine.IO connection established
23:37:41.932.587, client, INFO, Sending packet PING data None
23:37:41.942.563, client, INFO, Received packet MESSAGE data 0
23:37:41.943.559, client, INFO, Namespace / is connected
23:37:42.160.441, client, INFO, Received packet PONG data None
23:37:43.934.515, client, INFO, Sending packet PING data None
23:37:44.163.661, client, INFO, Received packet PONG data None
23:37:45.935.990, client, INFO, Sending packet PING data None
23:37:46.164.545, client, INFO, Received packet PONG data None
23:37:47.936.101, client, INFO, Sending packet PING data None
23:37:48.168.060, client, INFO, Received packet PONG data None
23:37:49.936.557, client, INFO, Sending packet PING data None
23:37:50.165.909, client, INFO, Received packet PONG data None
23:37:51.936.624, client, INFO, Sending packet PING data None
23:37:51.944.657, broker, INFO, Logging in to upstox server
23:37:51.944.657, client, INFO, Emitting event "message" [/]
23:37:51.944.657, client, INFO, Sending packet MESSAGE data 2["message",{"method":"client_login","type":"interactive","data":{"client_id":"","password":""}}]
23:37:52.170.459, client, INFO, Received packet PONG data None
23:37:52.222.219, client, INFO, Received packet MESSAGE data 2["message",{"timestamp":1610734070921,"response_type":"client_login","guid":null,"data":{"success":true,"statusCode":1,"connected_server":"ip-172-31-21-200"}}]
23:37:52.223.191, client, INFO, Received event "message" [/]
23:37:52.223.191, client, INFO, Emitting event "message" [/]
23:37:56.175.874, client, INFO, Received packet PONG data None
23:37:57.939.303, client, INFO, Sending packet PING data None
23:37:58.180.645, client, INFO, Received packet PONG data None
23:37:59.939.735, client, INFO, Sending packet PING data None
23:38:00.166.902, client, INFO, Received packet PONG data None
23:38:01.940.612, client, INFO, Sending packet PING data None
23:38:02.169.766, client, INFO, Received packet PONG data None
23:38:03.940.732, client, INFO, Sending packet PING data None
23:38:04.180.604, client, INFO, Received packet PONG data None
23:38:05.941.043, client, INFO, Sending packet PING data None
23:38:06.169.315, client, INFO, Received packet PONG data None
23:38:07.941.815, client, INFO, Sending packet PING data None
23:38:08.170.819, client, INFO, Received packet PONG data None
23:38:09.942.222, client, INFO, Sending packet PING data None
23:38:10.171.609, client, INFO, Received packet PONG data None
23:38:11.942.829, client, INFO, Sending packet PING data None
23:38:12.172.043, client, INFO, Received packet PONG data None
23:38:13.943.691, client, INFO, Sending packet PING data None
```

I uninstalled both and installed the latest version of each: `python-socketio = 5.0.4`, `python-engineio = 4.0.0`.

```
>pip uninstall python-engineio
Found existing installation: python-engineio 3.13.1
Uninstalling python-engineio-3.13.1:
  Would remove:
    c:\python38\lib\site-packages\engineio\*
    c:\python38\lib\site-packages\python_engineio-3.13.1.dist-info\*
Proceed (y/n)? y
  Successfully uninstalled python-engineio-3.13.1

>pip uninstall python-socketio
Found existing installation: python-socketio 4.6.0
Uninstalling python-socketio-4.6.0:
  Would remove:
    c:\python38\lib\site-packages\python_socketio-4.6.0.dist-info\*
    c:\python38\lib\site-packages\socketio\*
Proceed (y/n)? y
  Successfully uninstalled python-socketio-4.6.0

>pip install python-socketio
Collecting python-socketio
  Using cached python_socketio-5.0.4-py2.py3-none-any.whl (52 kB)
Requirement already satisfied: bidict>=0.21.0 in c:\python38\lib\site-packages (from python-socketio) (0.21.2)
Collecting python-engineio>=4
  Using cached python_engineio-4.0.0-py2.py3-none-any.whl (50 kB)
Installing collected packages: python-engineio, python-socketio
Successfully installed python-engineio-4.0.0 python-socketio-5.0.4
```

With the latest libraries the client connects, but the server replies with an error, the client logs `Connection to namespace / was rejected`, and this eventually leads to a `BadNamespaceError`.

`python-socketio = 5.0.4` `python-engineio = 4.0.0`

```
>python main.py
23:42:35.111.527, client, INFO, Attempting WebSocket connection to wss://ws.upstox.com/socket.io/? Information removed &transport=websocket&EIO=4
23:42:36.158.799, client, INFO, WebSocket connection accepted with {'sid': 'xzIaRZIKYz1BwKKkAJEw', 'upgrades': [], 'pingInterval': 2000, 'pingTimeout': 60000}
23:42:36.158.799, client, INFO, Engine.IO connection established
23:42:36.158.799, client, INFO, Sending packet MESSAGE data 0
23:42:36.184.729, client, INFO, Received packet MESSAGE data 0
23:42:36.185.752, client, INFO, Namespace / is connected
23:42:36.390.209, client, INFO, Received packet MESSAGE data 4"{\"timestamp\":1610734355081,\"response_type\":\"server_error\",\"error\":{\"message\":\"You have not been authorized for this action\",\"statusCode\":500}}"
23:42:36.395.195, client, INFO, Connection to namespace / was rejected
Exception in thread Thread-3:
Traceback (most recent call last):
  File "C:\Python38\lib\threading.py", line 932, in _bootstrap_inner
    self.run()
  File "C:\Python38\lib\threading.py", line 870, in run
    self._target(*self._args, **self._kwargs)
  File "C:\Python38\lib\site-packages\socketio\client.py", line 611, in _handle_eio_message
    self._handle_connect(pkt.namespace, pkt.data)
  File "C:\Python38\lib\site-packages\socketio\client.py", line 485, in _handle_connect
    self._trigger_event('connect', namespace=namespace)
  File "C:\Python38\lib\site-packages\socketio\client.py", line 547, in _trigger_event
    return self.handlers[namespace][event](*args)
  File "D:\projects\PTrade\broker_upstox_hacked\broker.py", line 205, in __login_to_upstox_server
    self.__sio.send(loginJSON)
  File "C:\Python38\lib\site-packages\socketio\client.py", line 364, in send
    self.emit('message', data=data, namespace=namespace,
  File "C:\Python38\lib\site-packages\socketio\client.py", line 328, in emit
    raise exceptions.BadNamespaceError(
socketio.exceptions.BadNamespaceError: / is not a connected namespace.
23:43:36.157.881, client, WARNING, WebSocket connection was closed, aborting
23:43:36.158.879, client, INFO, Waiting for write loop task to end
23:43:36.158.879, client, INFO, Exiting write loop task
23:43:36.160.875, client, INFO, Engine.IO connection dropped
23:43:36.161.870, client, INFO, Exiting read loop task
```

In both cases the client code and the server are identical; the only difference is the versions of the python-socketio and python-engineio libraries.

Please let me know what I'm doing wrong or how to tackle this.
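The two logs differ in the Engine.IO revision the client requests: `EIO=3` with 4.6.0 and `EIO=4` with 5.0.4. python-socketio documents a compatibility table in which 5.x clients only work with JavaScript Socket.IO 3.x/4.x servers, while 1.x/2.x servers need python-socketio 4.x (with python-engineio 3.x). If the Upstox endpoint runs an older Socket.IO server (an assumption; the issue does not say), pinning the older client is the likely fix. The helper names below are hypothetical; the sketch only reads the revision from a logged URL and maps a server major version to pip requirement specifiers.

```python
from __future__ import annotations

from urllib.parse import parse_qs, urlsplit


def eio_revision(url: str) -> int | None:
    """Return the EIO query parameter a client requested, as printed in its log."""
    values = parse_qs(urlsplit(url).query).get("EIO")
    return int(values[0]) if values else None


def client_pins_for_server(js_server_major: int) -> tuple[str, str]:
    """Requirement specifiers for a JavaScript Socket.IO server major version,
    following python-socketio's published compatibility table."""
    if js_server_major in (1, 2):
        return ("python-socketio<5", "python-engineio<4")
    if js_server_major in (3, 4):
        return ("python-socketio>=5", "python-engineio>=4")
    raise ValueError(f"unsupported Socket.IO server version: {js_server_major}")
```

For a 2.x server this yields `pip install "python-socketio<5" "python-engineio<4"`, which restores the 4.6.0 / 3.13.1 behavior shown in the first log.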

|

closed

|

2021-01-15T21:24:19Z

|

2021-06-27T19:44:59Z

|

https://github.com/miguelgrinberg/python-socketio/issues/614

|

[

"question"

] |

krishnavelu

| 10

|

katanaml/sparrow

|

computer-vision

| 52

|

When running Unstructured, { ModuleNotFoundError: No module named 'backoff._typing' }

|

```
(.env_unstructured) root@testvm:/home/testvmadmin/main/sparrow/sparrow-ml/llm# pip install backoff==1.11.1
Collecting backoff==1.11.1
  Using cached backoff-1.11.1-py2.py3-none-any.whl (13 kB)
Installing collected packages: backoff
  Attempting uninstall: backoff
    Found existing installation: backoff 2.2.1
    Uninstalling backoff-2.2.1:
      Successfully uninstalled backoff-2.2.1
Successfully installed backoff-1.11.1
WARNING: You are using pip version 22.0.4; however, version 24.0 is available.
You should consider upgrading via the '/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/bin/python -m pip install --upgrade pip' command.
(.env_unstructured) root@testvm:/home/testvmadmin/main/sparrow/sparrow-ml/llm# ./sparrow.sh "invoice_number, invoice_date, total_gross_worth" "int, str, str" --agent unstructured --file-path ./data/invoice_1.pdf
Detected Python version: Python 3.10.4
Running pipeline with unstructured
⠸ Processing file with unstructured...Traceback (most recent call last):
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/bin/unstructured-ingest", line 5, in <module>
    from unstructured.ingest.main import main
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/lib/python3.10/site-packages/unstructured/ingest/main.py", line 2, in <module>
    from unstructured.ingest.cli.cli import get_cmd
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/lib/python3.10/site-packages/unstructured/ingest/cli/__init__.py", line 5, in <module>
    from unstructured.ingest.cli.cmds import base_dest_cmd_fns, base_src_cmd_fns
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/lib/python3.10/site-packages/unstructured/ingest/cli/cmds/__init__.py", line 6, in <module>
    from unstructured.ingest.cli.base.src import BaseSrcCmd
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/lib/python3.10/site-packages/unstructured/ingest/cli/base/src.py", line 13, in <module>
    from unstructured.ingest.runner import runner_map
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/lib/python3.10/site-packages/unstructured/ingest/runner/__init__.py", line 4, in <module>
    from .airtable import AirtableRunner
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/lib/python3.10/site-packages/unstructured/ingest/runner/airtable.py", line 7, in <module>
    from unstructured.ingest.runner.base_runner import Runner
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/lib/python3.10/site-packages/unstructured/ingest/runner/base_runner.py", line 20, in <module>
    from unstructured.ingest.processor import process_documents
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/lib/python3.10/site-packages/unstructured/ingest/processor.py", line 15, in <module>
    from unstructured.ingest.pipeline import (
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/lib/python3.10/site-packages/unstructured/ingest/pipeline/__init__.py", line 1, in <module>
    from .doc_factory import DocFactory
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/lib/python3.10/site-packages/unstructured/ingest/pipeline/doc_factory.py", line 4, in <module>
    from unstructured.ingest.pipeline.interfaces import DocFactoryNode
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/lib/python3.10/site-packages/unstructured/ingest/pipeline/interfaces.py", line 15, in <module>
    from unstructured.ingest.ingest_backoff import RetryHandler
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/lib/python3.10/site-packages/unstructured/ingest/ingest_backoff/__init__.py", line 1, in <module>
    from ._wrapper import RetryHandler
  File "/home/testvmadmin/main/sparrow/sparrow-ml/llm/.env_unstructured/lib/python3.10/site-packages/unstructured/ingest/ingest_backoff/_wrapper.py", line 9, in <module>
    from backoff._typing import (
ModuleNotFoundError: No module named 'backoff._typing'
Command failed. Error:
⠴ Processing file with unstructured...
```

╭───────────────────── Traceback (most recent call last) ──────────────────────╮

│ /home/testvmadmin/main/sparrow/sparrow-ml/llm/engine.py:31 in run │

│ │

│ 28 │ │

│ 29 │ try: │

│ 30 │ │ rag = get_pipeline(user_selected_agent) │

│ ❱ 31 │ │ rag.run_pipeline(user_selected_agent, query_inputs_arr, query_t │

│ 32 │ │ │ │ │ │ debug) │

│ 33 │ except ValueError as e: │

│ 34 │ │ print(f"Caught an exception: {e}") │

│ │

│ ╭───────────────────────────────── locals ─────────────────────────────────╮ │

│ │ agent = 'unstructured' │ │

│ │ debug = False │ │

│ │ file_path = './data/invoice_1.pdf' │ │

│ │ index_name = None │ │

│ │ inputs = 'invoice_number, invoice_date, total_gross_worth' │ │

│ │ options = None │ │

│ │ query = 'retrieve invoice_number, invoice_date, │ │

│ │ total_gross_worth' │ │

│ │ query_inputs_arr = [ │ │

│ │ │ 'invoice_number', │ │

│ │ │ 'invoice_date', │ │

│ │ │ 'total_gross_worth' │ │

│ │ ] │ │

│ │ query_types = 'int, str, str' │ │

│ │ query_types_arr = ['int', 'str', 'str'] │ │

│ │ rag = <rag.agents.unstructured.unstructured.Unstructure… │ │

│ │ object at 0x7f1d83b82f80> │ │

│ │ types = 'int, str, str' │ │

│ │ user_selected_agent = 'unstructured' │ │

│ ╰──────────────────────────────────────────────────────────────────────────╯ │

│ │

│ /home/testvmadmin/main/sparrow/sparrow-ml/llm/rag/agents/unstructured/unstru │

│ ctured.py:71 in run_pipeline │

│ │

│ 68 │ │ │ │

│ 69 │ │ │ os.makedirs(temp_output_dir, exist_ok=True) │

│ 70 │ │ │ │

│ ❱ 71 │ │ │ files = self.invoke_pipeline_step( │

│ 72 │ │ │ │ lambda: self.process_files(temp_output_dir, temp_input │

│ 73 │ │ │ │ "Processing file with unstructured...", │

│ 74 │ │ │ │ local │

│ │

│ ╭───────────────────────────────── locals ─────────────────────────────────╮ │

│ │ debug = False │ │

│ │ device = 'cpu' │ │

│ │ embedding_model_name = 'all-MiniLM-L6-v2' │ │

│ │ file_path = './data/invoice_1.pdf' │ │

│ │ index_name = None │ │

│ │ input_dir = 'data/pdf' │ │

│ │ local = True │ │

│ │ options = None │ │

│ │ output_dir = 'data/json' │ │

│ │ payload = 'unstructured' │ │

│ │ query = 'retrieve invoice_number, invoice_date, │ │

│ │ total_gross_worth' │ │

│ │ query_inputs = [ │ │

│ │ │ 'invoice_number', │ │

│ │ │ 'invoice_date', │ │

│ │ │ 'total_gross_worth' │ │

│ │ ] │ │

│ │ query_types = ['int', 'str', 'str'] │ │

│ │ self = <rag.agents.unstructured.unstructured.Unstructur… │ │

│ │ object at 0x7f1d83b82f80> │ │

│ │ start = 6444.234209002 │ │

│ │ temp_dir = '/tmp/tmpf7ym66qi' │ │

│ │ temp_input_dir = '/tmp/tmpf7ym66qi/data/pdf' │ │

│ │ temp_output_dir = '/tmp/tmpf7ym66qi/data/json' │ │

│ │ weaviate_url = 'http://localhost:8080' │ │

│ ╰──────────────────────────────────────────────────────────────────────────╯ │

│ │

│ /home/testvmadmin/main/sparrow/sparrow-ml/llm/rag/agents/unstructured/unstru │

│ ctured.py:364 in invoke_pipeline_step │

│ │

│ 361 │ │ │ │ │ transient=False, │

│ 362 │ │ │ ) as progress: │

│ 363 │ │ │ │ progress.add_task(description=task_description, total= │

│ ❱ 364 │ │ │ │ ret = task_call() │

│ 365 │ │ else: │

│ 366 │ │ │ print(task_description) │

│ 367 │ │ │ ret = task_call() │

│ │

│ ╭───────────────────────────────── locals ─────────────────────────────────╮ │

│ │ local = True │ │

│ │ progress = <rich.progress.Progress object at 0x7f1ca9cb5c00> │ │

│ │ self = <rag.agents.unstructured.unstructured.UnstructuredPi… │ │

│ │ object at 0x7f1d83b82f80> │ │

│ │ task_call = <function │ │

│ │ UnstructuredPipeline.run_pipeline.<locals>.<lambda> │ │

│ │ at 0x7f1d83b8cee0> │ │

│ │ task_description = 'Processing file with unstructured...' │ │

│ ╰──────────────────────────────────────────────────────────────────────────╯ │

│ │

│ /home/testvmadmin/main/sparrow/sparrow-ml/llm/rag/agents/unstructured/unstru │

│ ctured.py:72 in <lambda> │

│ │

│ 69 │ │ │ os.makedirs(temp_output_dir, exist_ok=True) │

│ 70 │ │ │ │

│ 71 │ │ │ files = self.invoke_pipeline_step( │

│ ❱ 72 │ │ │ │ lambda: self.process_files(temp_output_dir, temp_input │

│ 73 │ │ │ │ "Processing file with unstructured...", │

│ 74 │ │ │ │ local │

│ 75 │ │ │ ) │

│ │

│ ╭───────────────────────────────── locals ─────────────────────────────────╮ │

│ │ self = <rag.agents.unstructured.unstructured.UnstructuredPip… │ │

│ │ object at 0x7f1d83b82f80> │ │

│ │ temp_input_dir = '/tmp/tmpf7ym66qi/data/pdf' │ │

│ │ temp_output_dir = '/tmp/tmpf7ym66qi/data/json' │ │

│ ╰──────────────────────────────────────────────────────────────────────────╯ │

│ │

│ /home/testvmadmin/main/sparrow/sparrow-ml/llm/rag/agents/unstructured/unstru │

│ ctured.py:123 in process_files │

│ │

│ 120 │ │ return answer │

│ 121 │ │

│ 122 │ def process_files(self, temp_output_dir, temp_input_dir): │

│ ❱ 123 │ │ self.process_local(output_dir=temp_output_dir, num_processes=2 │

│ 124 │ │ files = self.get_result_files(temp_output_dir) │

│ 125 │ │ return files │

│ 126 │

│ │

│ ╭───────────────────────────────── locals ─────────────────────────────────╮ │

│ │ self = <rag.agents.unstructured.unstructured.UnstructuredPip… │ │

│ │ object at 0x7f1d83b82f80> │ │

│ │ temp_input_dir = '/tmp/tmpf7ym66qi/data/pdf' │ │

│ │ temp_output_dir = '/tmp/tmpf7ym66qi/data/json' │ │

│ ╰──────────────────────────────────────────────────────────────────────────╯ │

│ │

│ /home/testvmadmin/main/sparrow/sparrow-ml/llm/rag/agents/unstructured/unstru │

│ ctured.py:171 in process_local │

│ │

│ 168 │ │ │ print(output.decode()) │

│ 169 │ │ else: │

│ 170 │ │ │ print('Command failed. Error:') │

│ ❱ 171 │ │ │ print(error.decode()) │

│ 172 │ │

│ 173 │ def get_result_files(self, folder_path) -> List[Dict]: │

│ 174 │ │ file_list = [] │

│ │

│ ╭───────────────────────────────── locals ─────────────────────────────────╮ │

│ │ command = [ │ │

│ │ │ 'unstructured-ingest', │ │

│ │ │ 'local', │ │

│ │ │ '--input-path', │ │

│ │ │ '/tmp/tmpf7ym66qi/data/pdf', │ │

│ │ │ '--output-dir', │ │

│ │ │ '/tmp/tmpf7ym66qi/data/json', │ │

│ │ │ '--num-processes', │ │

│ │ │ '2', │ │

│ │ │ '--recursive', │ │

│ │ │ '--verbose' │ │

│ │ ] │ │

│ │ error = None │ │

│ │ input_path = '/tmp/tmpf7ym66qi/data/pdf' │ │

│ │ num_processes = 2 │ │

│ │ output = b'' │ │

│ │ output_dir = '/tmp/tmpf7ym66qi/data/json' │ │

│ │ process = <Popen: returncode: 1 args: ['unstructured-ingest', │ │

│ │ 'local', '--input-path',...> │ │

│ │ self = <rag.agents.unstructured.unstructured.UnstructuredPipel… │ │

│ │ object at 0x7f1d83b82f80> │ │

│ ╰──────────────────────────────────────────────────────────────────────────╯ │

╰──────────────────────────────────────────────────────────────────────────────╯

AttributeError: 'NoneType' object has no attribute 'decode'
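The report contains two separate failures. First, `unstructured` imports `backoff._typing`, which the downgraded backoff 1.11.1 apparently does not provide (the error appeared right after replacing 2.2.1). Second, the locals show `error = None` with `returncode: 1`; `Popen.communicate()` returns `None` for a stream that was not piped, which is most likely why `error.decode()` raised the final `AttributeError` and hid the real message. The sketch below, with hypothetical helper names, checks for the submodule without importing it and runs a command with both stdout and stderr captured.

```python
from __future__ import annotations

import importlib.util
import subprocess


def has_submodule(package: str, submodule: str) -> bool:
    """True if `package.submodule` can be found, without importing the submodule.

    find_spec imports the parent package first, so a missing parent raises
    ModuleNotFoundError, which is treated here as "not available".
    """
    try:
        return importlib.util.find_spec(f"{package}.{submodule}") is not None
    except ModuleNotFoundError:
        return False


def run_and_capture(command: list[str]) -> tuple[int, str, str]:
    """Run a command and return (returncode, stdout, stderr).

    capture_output=True pipes BOTH streams, so stderr is a string, never None.
    """
    result = subprocess.run(command, capture_output=True, text=True)
    return result.returncode, result.stdout, result.stderr
```

In this environment, `has_submodule("backoff", "_typing")` returning `False` would point to reinstalling a backoff release that ships that module, and `run_and_capture(["unstructured-ingest", "local", ...])` would surface the ingest error text instead of failing on `None`.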

|

closed

|

2024-05-13T07:30:42Z

|

2024-07-14T12:00:49Z

|

https://github.com/katanaml/sparrow/issues/52

|

[] |

pitbuk101

| 2

|

twopirllc/pandas-ta

|

pandas

| 291

|

New indicators issue

|

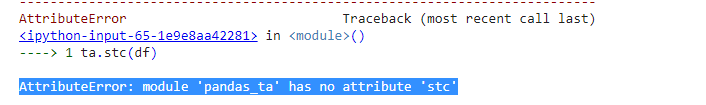

**Expected behavior**

I was keen to try the new indicators added to this beautiful library; however, the new indicators raise an attribute error:

`AttributeError: module 'pandas_ta' has no attribute 'stc'`

**Screenshots**
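One plausible cause (an assumption, not confirmed in the report) is that the installed pandas-ta release predates the indicators listed in the development branch's documentation. A small sketch, with hypothetical helper names, reports which requested attributes a module lacks and which version of a distribution is installed.

```python
from __future__ import annotations

import types
from importlib import metadata


def missing_attributes(module: types.ModuleType, names: list[str]) -> list[str]:
    """Return the names from `names` that `module` does not define."""
    return [name for name in names if not hasattr(module, name)]


def installed_version(dist_name: str) -> str | None:
    """Version string of an installed distribution, or None if it is not installed."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None
```

For example, `import pandas_ta as ta` followed by `print(installed_version("pandas-ta"), missing_attributes(ta, ["stc"]))` shows whether `stc` is simply absent from the installed release.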

|

closed

|

2021-05-20T02:41:59Z

|

2021-05-26T21:11:44Z

|

https://github.com/twopirllc/pandas-ta/issues/291

|

[

"question",

"info"

] |

satishchaudhary382

| 2

|

Lightning-AI/pytorch-lightning

|

deep-learning

| 19,813

|

Existing metric keys not moved to device after LearningRateFinder

|

### Bug description

Running `LearningRateFinder` calls `teardown()`, which moves the training epoch loop's results to "cpu" [here](https://github.com/Lightning-AI/pytorch-lightning/blob/master/src/lightning/pytorch/loops/training_epoch_loop.py#L314).

The problem is that loop results are only moved to the device when they are registered for the first time [here](https://github.com/Lightning-AI/pytorch-lightning/blob/b9680a364da4e875b237ec3c03e67a9c32ef475b/src/lightning/pytorch/trainer/connectors/logger_connector/result.py#L423). This causes a problem for the `cumulated_batch_size` reduction, which uses the device that the original `value` tensor had when it was first created. Because that tensor is still on `cpu` when training starts for real after `lr_find`, we get `RuntimeError('No backend type associated with device type cpu')`.

For example, the issue happens when using 2 GPU devices (see logs below).

I'll submit a fix for review shortly.
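The mechanism can be illustrated with a torch-free toy model. Everything below (`FakeTensor`, `ResultStore`, the `fix` flag) is hypothetical and only mimics the behavior described above: a teardown moves state to "cpu", and a registration that sets the device only on first creation leaves it there, whereas re-applying the device on every registration recovers.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FakeTensor:
    """Stand-in for a tensor that only tracks which device it lives on."""
    value: float
    device: str = "cpu"

    def to(self, device: str) -> FakeTensor:
        return FakeTensor(self.value, device)


@dataclass
class ResultStore:
    """Toy results collection (hypothetical, not Lightning's class)."""
    metrics: dict[str, FakeTensor] = field(default_factory=dict)

    def register(self, key: str, value: float, device: str, *, fix: bool) -> FakeTensor:
        if key not in self.metrics:
            # First registration: state is created on the current device.
            self.metrics[key] = FakeTensor(value, device)
        elif fix:
            # Proposed behavior: also move already-registered state.
            self.metrics[key] = self.metrics[key].to(device)
        return self.metrics[key]

    def teardown(self) -> None:
        # Mirrors the loop teardown that moves all results to "cpu".
        self.metrics = {k: t.to("cpu") for k, t in self.metrics.items()}
```

With `fix=False`, a `cumulated_batch_size` entry registered on `"cuda:0"` stays on `"cpu"` after `teardown()`, which is the state that makes the later distributed reduction fail.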

### What version are you seeing the problem on?

master

### How to reproduce the bug

_No response_

### Error messages and logs

```
train/0 [1]:-> s.trainer.fit(s.model, **kwargs)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/trainer/trainer.py(543)fit()
train/0 [1]:-> call._call_and_handle_interrupt(
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/trainer/call.py(43)_call_and_handle_interrupt()
train/0 [1]:-> return trainer.strategy.launcher.launch(trainer_fn, *args, trainer=trainer, **kwargs)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/strategies/launchers/subprocess_script.py(105)launch()
train/0 [1]:-> return function(*args, **kwargs)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/trainer/trainer.py(579)_fit_impl()
train/0 [1]:-> self._run(model, ckpt_path=ckpt_path)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/trainer/trainer.py(986)_run()
train/0 [1]:-> results = self._run_stage()
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/trainer/trainer.py(1032)_run_stage()
train/0 [1]:-> self.fit_loop.run()
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/loops/fit_loop.py(205)run()
train/0 [1]:-> self.advance()
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/loops/fit_loop.py(363)advance()
train/0 [1]:-> self.epoch_loop.run(self._data_fetcher)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/loops/training_epoch_loop.py(139)run()
train/0 [1]:-> self.on_advance_end(data_fetcher)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/loops/training_epoch_loop.py(287)on_advance_end()
train/0 [1]:-> self.val_loop.run()
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/loops/utilities.py(182)_decorator()
train/0 [1]:-> return loop_run(self, *args, **kwargs)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/loops/evaluation_loop.py(142)run()
train/0 [1]:-> return self.on_run_end()
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/loops/evaluation_loop.py(254)on_run_end()
train/0 [1]:-> self._on_evaluation_epoch_end()
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/loops/evaluation_loop.py(336)_on_evaluation_epoch_end()
train/0 [1]:-> trainer._logger_connector.on_epoch_end()
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/trainer/connectors/logger_connector/logger_connector.py(195)on_epoch_end()
train/0 [1]:-> metrics = self.metrics
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/trainer/connectors/logger_connector/logger_connector.py(234)metrics()
train/0 [1]:-> return self.trainer._results.metrics(on_step)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/trainer/connectors/logger_connector/result.py(483)metrics()
train/0 [1]:-> value = self._get_cache(result_metric, on_step)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/trainer/connectors/logger_connector/result.py(447)_get_cache()
train/0 [1]:-> result_metric.compute()
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/trainer/connectors/logger_connector/result.py(289)wrapped_func()
train/0 [1]:-> self._computed = compute(*args, **kwargs)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/trainer/connectors/logger_connector/result.py(251)compute()
train/0 [1]:-> cumulated_batch_size = self.meta.sync(self.cumulated_batch_size)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/pytorch_lightning/strategies/ddp.py(342)reduce()
train/0 [1]:-> return _sync_ddp_if_available(tensor, group, reduce_op=reduce_op)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/lightning_fabric/utilities/distributed.py(172)_sync_ddp_if_available()
train/0 [1]:-> return _sync_ddp(result, group=group, reduce_op=reduce_op)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/lightning_fabric/utilities/distributed.py(222)_sync_ddp()
train/0 [1]:-> torch.distributed.all_reduce(result, op=op, group=group, async_op=False)
train/0 [1]: /opt/conda/envs/pytorch/lib/python3.10/site-packages/torch/distributed/c10d_logger.py(72)wrapper()
train/0 [1]:-> return func(*args, **kwargs)
train/0 [1]:> /opt/conda/envs/pytorch/lib/python3.10/site-packages/torch/distributed/distributed_c10d.py(1996)all_reduce()
train/0 [0]:RuntimeError('No backend type associated with device type cpu')
```

### Environment

<details>

<summary>Current environment</summary>

```

#- Lightning Component (e.g. Trainer, LightningModule, LightningApp, LightningWork, LightningFlow):

#- PyTorch Lightning Version (e.g., 1.5.0):

#- Lightning App Version (e.g., 0.5.2):

#- PyTorch Version (e.g., 2.0):

#- Python version (e.g., 3.9):

#- OS (e.g., Linux):

#- CUDA/cuDNN version:

#- GPU models and configuration:

#- How you installed Lightning(`conda`, `pip`, source):

#- Running environment of LightningApp (e.g. local, cloud):

```

</details>

### More info

_No response_

cc @carmocca

|

closed

|

2024-04-25T14:31:17Z

|

2024-07-26T18:03:19Z

|

https://github.com/Lightning-AI/pytorch-lightning/issues/19813

|

[

"bug",

"tuner",

"logging",

"ver: 2.2.x"

] |

clumsy

| 0

|

jina-ai/serve

|

deep-learning

| 6,226

|

Add support for mamba, alternative to transformers

|

**Describe the feature**

Is it possible to add support for Mamba, a deep learning architecture focused on long-sequence modeling? For details, see https://en.wikipedia.org/wiki/Deep_learning

**Your proposal**

Just asking

|

open

|

2025-01-25T09:54:39Z

|

2025-01-27T07:42:20Z

|

https://github.com/jina-ai/serve/issues/6226

|

[] |

geoman2

| 1

|

zappa/Zappa

|

flask

| 1,291

|

About Python 3.12 support

|

Hello, can you please tell me when we can expect Zappa to support Python 3.12?

|

closed

|

2024-01-06T12:52:44Z

|

2024-01-10T17:07:23Z

|

https://github.com/zappa/Zappa/issues/1291

|

[

"enhancement",

"python"

] |

jagadeesh32

| 1

|

Gozargah/Marzban

|

api

| 719

|

environment: line 46: lsb_release: command not found

|

environment: line 46: lsb_release: command not found

|

closed

|

2023-12-28T08:34:14Z

|

2024-01-11T20:13:56Z

|

https://github.com/Gozargah/Marzban/issues/719

|

[

"Bug"

] |

saleh2323

| 2

|

scikit-learn/scikit-learn

|

python

| 30,138

|

How do I ensure IsolationForest detects only statistical outliers?

|

Hello everyone! I am starting to learn how to use IsolationForest to detect outliers/anomalies. When I input a dataset of y = x with x going from 1 to 100 and contamination='auto' as the only argument, roughly the 20 lowest values and the 20 highest values are identified as outliers. I don't want these points to appear as outliers, since they fall on a perfect straight line and none of the x-values are outliers. Am I using this correctly? What arguments should I pass so that the model reports no outliers in this case, as I expect?

import pandas as pd

import numpy as np

from sklearn.ensemble import IsolationForest

import matplotlib.pyplot as plt

import seaborn as sns

data = {

'x': range(1, 101),

'y': range(1, 101)

}

df = pd.DataFrame(data)

model = IsolationForest(contamination='auto') # 'auto' sets the threshold as in the original paper, not a fixed fraction of anomalies

df['anomaly'] = model.fit_predict(df[['x','y']])

plt.figure(figsize=(12, 6))

sns.scatterplot(x='x', y='y', hue='anomaly', palette={-1: 'red', 1: 'blue'}, data=df)

plt.title('Y=X')

plt.xlabel('X')

plt.ylabel('Y')

plt.legend(title='Anomaly', loc='upper right')

plt.show()
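IsolationForest scores a point by how few random axis-aligned splits it takes to isolate it, so on evenly spaced data the points at both ends of the range are isolated fastest and score as most anomalous, whether or not they lie on a line. With `contamination='auto'` the cut-off follows the original paper rather than a fixed percentage. If the intent is "points that deviate from the straight-line fit", a residual-based check may match better. The sketch below swaps in that different technique: an ordinary least-squares line plus a modified z-score based on the median absolute deviation (MAD), with the commonly used 3.5 cut-off. It is plain Python; `fit_line`, `residual_outliers`, the threshold, and the injected outlier are illustrative assumptions, not scikit-learn API.

```python
from statistics import median


def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept (illustrative helper)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def residual_outliers(xs, ys, threshold=3.5, eps=1e-9):
    """Flag points whose residual from the fitted line is unusually large.

    Uses the modified z-score 0.6745 * (r - median) / MAD on the residuals.
    The 3.5 cut-off is a common convention (an assumption here), not a rule.
    """
    slope, intercept = fit_line(xs, ys)
    residuals = [y - (slope * x + intercept) for x, y in zip(xs, ys)]
    med = median(residuals)
    mad = median([abs(r - med) for r in residuals])
    if mad < eps:
        # Residuals have (almost) no spread, e.g. data exactly on a line:
        # only points that are off the line at all get flagged.
        return [abs(r - med) > eps for r in residuals]
    return [abs(0.6745 * (r - med) / mad) > threshold for r in residuals]


xs = list(range(1, 101))               # same x values as the issue: 1..100
print(sum(residual_outliers(xs, xs)))  # 0: nothing is flagged on y = x

ys = list(xs)
ys[49] = 500                           # hypothetical outlier injected at x = 50
flags = residual_outliers(xs, ys)
print([i for i, f in enumerate(flags) if f])  # [49]
```

Unlike IsolationForest, this check only asks how far each point sits from one fitted line, so it suits data that is expected to follow a single linear trend; for other shapes of data it is not a drop-in replacement.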

|

closed

|

2024-10-23T15:17:36Z

|

2024-10-29T09:12:26Z

|

https://github.com/scikit-learn/scikit-learn/issues/30138

|

[

"Needs Triage"

] |

BradBroo

| 0

|

hankcs/HanLP

|

nlp

| 1,464

|

Some errors in Traditional-to-Simplified Chinese conversion

|

<!--

Thank you for reporting a possible bug in HanLP.

Please fill in the template below to bypass our spam filter.

The following fields are required; otherwise the issue will be closed.

-->

- Java Code: `String simplified=HanLP.convertToSimplifiedChinese(tradition);`

- HanLP version: 1.7.7

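For example, “陷阱” is converted to “猫腻”,

“猛烈” is converted to “勐烈”,

“顺口溜” is converted to “顺口熘”,

“脊梁” is converted to “嵴梁”,

“通道” is converted to “信道”.

None of these conversions is necessary: in each case, the original and the converted word are not a Traditional/Simplified pair.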
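The reported outputs appear to be word-level substitutions applied to text that needs no conversion. A generic workaround, independent of HanLP, is to shield a whitelist of words from the converter and convert only the text between them. The sketch below is plain Python and does not call HanLP: `convert_with_protected_words` and the whitelist are hypothetical, and `toy_convert` is a stand-in that reproduces two of the reported substitutions in place of `HanLP.convertToSimplifiedChinese`.

```python
import re
from typing import Callable, Iterable


def convert_with_protected_words(
    text: str,
    convert: Callable[[str], str],
    protected: Iterable[str],
) -> str:
    """Apply `convert` to `text`, leaving every protected word unchanged (hypothetical helper)."""
    words = sorted(set(protected), key=len, reverse=True)  # prefer the longest match
    if not words:
        return convert(text)
    pattern = re.compile("|".join(map(re.escape, words)))
    out = []
    pos = 0
    for m in pattern.finditer(text):
        out.append(convert(text[pos:m.start()]))  # convert the unprotected gap
        out.append(m.group(0))                    # copy the protected word verbatim
        pos = m.end()
    out.append(convert(text[pos:]))               # convert the trailing gap
    return "".join(out)


# Toy stand-in converter: one genuine Traditional->Simplified character (這 -> 这)
# plus two of the unwanted substitutions reported in this issue.
TOY_RULES = {"陷阱": "猫腻", "通道": "信道", "這": "这"}


def toy_convert(s: str) -> str:
    for src, dst in TOY_RULES.items():
        s = s.replace(src, dst)
    return s


print(toy_convert("這是陷阱也是通道"))                                         # 这是猫腻也是信道
print(convert_with_protected_words("這是陷阱也是通道", toy_convert, ["陷阱", "通道"]))  # 这是陷阱也是通道
```

Because each gap is converted separately, a multi-character rule that would span the boundary of a protected word no longer matches, so the whitelist is best kept to whole words.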

|

closed

|

2020-04-22T08:48:44Z

|

2020-04-24T19:28:39Z

|

https://github.com/hankcs/HanLP/issues/1464

|

[

"auto-replied"

] |

yangxudong

| 2

|

laughingman7743/PyAthena

|

sqlalchemy

| 130

|

Workgroup setting of _build_list_query_executions_request method is wrong

|

https://github.com/laughingman7743/PyAthena/commit/f91bf97e59e6d220eac6bc2400747157a9a80090

|

closed

|

2020-03-25T07:56:53Z

|

2020-03-26T15:04:46Z

|

https://github.com/laughingman7743/PyAthena/issues/130

|

[] |

laughingman7743

| 0

|

yt-dlp/yt-dlp

|

python

| 12,064

|

How to download video from Telegram?

|

### DO NOT REMOVE OR SKIP THE ISSUE TEMPLATE

- [X] I understand that I will be **blocked** if I *intentionally* remove or skip any mandatory\* field

### Checklist

- [X] I'm asking a question and **not** reporting a bug or requesting a feature

- [X] I've looked through the [README](https://github.com/yt-dlp/yt-dlp#readme)

- [X] I've verified that I have **updated yt-dlp to nightly or master** ([update instructions](https://github.com/yt-dlp/yt-dlp#update-channels))

- [X] I've searched [known issues](https://github.com/yt-dlp/yt-dlp/issues/3766) and the [bugtracker](https://github.com/yt-dlp/yt-dlp/issues?q=) for similar questions **including closed ones**. DO NOT post duplicates

- [X] I've read the [guidelines for opening an issue](https://github.com/yt-dlp/yt-dlp/blob/master/CONTRIBUTING.md#opening-an-issue)

### Please make sure the question is worded well enough to be understood

I cannot download a video from Telegram. Please help me.

yt-dlp -vU https://t.me/asiadrama99/3983

[debug] Command-line config: ['-vU', 'https://t.me/asiadrama99/3983']

[debug] Encodings: locale cp65001, fs utf-8, pref cp65001, out utf-8, error utf-8, screen utf-8

[debug] yt-dlp version stable@2024.12.23 from yt-dlp/yt-dlp [65cf46cdd] (win_exe)

[debug] Python 3.10.9 (CPython AMD64 64bit) - Windows-10-10.0.22631-SP0 (OpenSSL 1.1.1q 5 Jul 2022)

[debug] exe versions: ffmpeg 2024-12-19-git-494c961379-full_build-www.gyan.dev (setts), ffprobe 2024-12-19-git-494c961379-full_build-www.gyan.dev

[debug] Optional libraries: Cryptodome-3.21.0, brotli-1.1.0, certifi-2024.08.30, mutagen-1.47.0, requests-2.32.3, sqlite3-3.39.4, urllib3-2.2.3, websockets-13.1

[debug] Proxy map: {}

[debug] Request Handlers: urllib, requests, websockets

[debug] Loaded 1838 extractors

[debug] Fetching release info: https://api.github.com/repos/yt-dlp/yt-dlp/releases/latest

Latest version: stable@2024.12.23 from yt-dlp/yt-dlp

yt-dlp is up to date (stable@2024.12.23 from yt-dlp/yt-dlp)

[telegram:embed] Extracting URL: https://t.me/asiadrama99/3983

[telegram:embed] 3983: Downloading embed frame

WARNING: Extractor telegram:embed returned nothing; please report this issue on https://github.com/yt-dlp/yt-dlp/issues?q= , filling out the appropriate issue template. Confirm you are on the latest version using yt-dlp -U

### Provide verbose output that clearly demonstrates the problem

- [X] Run **your** yt-dlp command with **-vU** flag added (`yt-dlp -vU <your command line>`)

- [ ] If using API, add `'verbose': True` to `YoutubeDL` params instead

- [ ] Copy the WHOLE output (starting with `[debug] Command-line config`) and insert it below

### Complete Verbose Output

_No response_

|

closed

|

2025-01-12T12:19:17Z

|

2025-01-21T13:54:22Z

|

https://github.com/yt-dlp/yt-dlp/issues/12064

|

[

"question"

] |

k15fb-mmo

| 5

|

explosion/spaCy

|

deep-learning

| 13,139

|

spacy.load error decorative function

|

<!-- NOTE: For questions or install related issues, please open a Discussion instead. -->

## How to reproduce the behaviour

<!-- Include a code example or the steps that led to the problem. Please try to be as specific as possible. -->

!python3 -m spacy download en_core_web_sm

import spacy

nlp = spacy.load("en_core_web_sm")

## Your Environment

<!-- Include details of your environment. You can also type `python -m spacy info --markdown` and copy-paste the result here.-->

2023-11-20 22:52:39.399591: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

## Info about spaCy

- **spaCy version:** 3.5.0

- **Platform:** Linux-6.2.0-36-generic-x86_64-with-glibc2.35

- **Python version:** 3.10.12

- **Pipelines:** fr_core_news_sm (3.5.0), en_core_web_sm (3.5.0), fr_core_news_md (3.5.0)

* Python Version Used: 3.10.12

* spaCy Version Used: 3.5.0

TypeError Traceback (most recent call last)

Cell In[33], line 1

----> 1 nlp = spacy.load("en_core_web_sm")