| repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |

|---|---|---|---|---|---|---|---|---|---|---|---|

ymcui/Chinese-LLaMA-Alpaca-2

|

nlp

| 500

|

vLLM error when deploying the chinese-alpaca-2-7b-64k model for inference with inference_hf.py

|

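### Required checks before submitting

- [X] Make sure you are using the latest code from the repository (git pull); some issues have already been resolved and fixed.

- [X] I have read the [project documentation](https://github.com/ymcui/Chinese-LLaMA-Alpaca-2/wiki) and the [FAQ section](https://github.com/ymcui/Chinese-LLaMA-Alpaca-2/wiki/常见问题), and I have searched the issues without finding a similar problem or solution.

- [x] Third-party plugin issue: e.g. [llama.cpp](https://github.com/ggerganov/llama.cpp), [LangChain](https://github.com/hwchase17/langchain), [text-generation-webui](https://github.com/oobabooga/text-generation-webui), etc. It is also recommended to look for solutions in the corresponding projects.

### Issue type

Model quantization and deployment

### Base model

Others

### Operating system

Linux

### Detailed description of the problem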

```
# This is the command being run
python scripts/inference/inference_hf.py --base_model model/chinese-alpaca-2-7b-64k --with_prompt --interactive --use_vllm
```

### Dependencies (required for code-related issues)

```
# Paste your dependency information here (inside this code block)
bitsandbytes 0.41.1
peft 0.3.0
sentencepiece 0.1.99
torch 2.1.2
torchvision 0.16.2
transformers 4.36.2
```

### Run logs or screenshots

```
# Paste your run log here (inside this code block)
USE_XFORMERS_ATTENTION: True
STORE_KV_BEFORE_ROPE: False
Traceback (most recent call last):
  File "/hy-tmp/Aplaca2/Chinese-LLaMA-Alpaca-2-main/scripts/inference/inference_hf.py", line 129, in <module>
    model = LLM(model=args.base_model,
  File "/usr/local/miniconda3/lib/python3.10/site-packages/vllm/entrypoints/llm.py", line 105, in __init__
    self.llm_engine = LLMEngine.from_engine_args(engine_args)
  File "/usr/local/miniconda3/lib/python3.10/site-packages/vllm/engine/llm_engine.py", line 304, in from_engine_args
    engine_configs = engine_args.create_engine_configs()
  File "/usr/local/miniconda3/lib/python3.10/site-packages/vllm/engine/arg_utils.py", line 218, in create_engine_configs
    model_config = ModelConfig(self.model, self.tokenizer,
  File "/usr/local/miniconda3/lib/python3.10/site-packages/vllm/config.py", line 101, in __init__
    self.hf_config = get_config(self.model, trust_remote_code, revision)
  File "/usr/local/miniconda3/lib/python3.10/site-packages/vllm/transformers_utils/config.py", line 35, in get_config
    raise e
  File "/usr/local/miniconda3/lib/python3.10/site-packages/vllm/transformers_utils/config.py", line 23, in get_config
    config = AutoConfig.from_pretrained(
  File "/usr/local/miniconda3/lib/python3.10/site-packages/transformers/models/auto/configuration_auto.py", line 1099, in from_pretrained
    return config_class.from_dict(config_dict, **unused_kwargs)
  File "/usr/local/miniconda3/lib/python3.10/site-packages/transformers/configuration_utils.py", line 774, in from_dict
    config = cls(**config_dict)
  File "/usr/local/miniconda3/lib/python3.10/site-packages/transformers/models/llama/configuration_llama.py", line 160, in __init__
    self._rope_scaling_validation()
  File "/usr/local/miniconda3/lib/python3.10/site-packages/transformers/models/llama/configuration_llama.py", line 180, in _rope_scaling_validation
    raise ValueError(
ValueError: `rope_scaling` must be a dictionary with with two fields, `type` and `factor`, got {'factor': 16.0, 'finetuned': True, 'original_max_position_embeddings': 4096, 'type': 'yarn'}
```
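The traceback shows vLLM delegating to `AutoConfig.from_pretrained`, and the `LlamaConfig` validator in the installed transformers 4.36.2 rejecting the 64k model's YaRN settings (`'type': 'yarn'` plus two extra keys). The sketch below reproduces that check offline, so you can see which fields of a `config.json` would fail before loading the model. It assumes, based on the error message and the 4.36.x validator, that exactly the keys `type` and `factor` are required and that only `"linear"` and `"dynamic"` types are accepted; later transformers releases may behave differently. `rope_scaling_problems` is a hypothetical helper. It only diagnoses the mismatch: it does not make vLLM or transformers support YaRN, and editing the config just to pass the check would change how the model scales positions.

```python
import json
from typing import List, Optional

# Assumption: the rope types accepted by LlamaConfig in transformers 4.36.x.
SUPPORTED_ROPE_TYPES = ("linear", "dynamic")


def rope_scaling_problems(rope_scaling: Optional[dict]) -> List[str]:
    """List the reasons a `rope_scaling` value would fail the 4.36-style check."""
    if rope_scaling is None:
        return []  # no scaling configured: nothing to validate
    problems = []
    if not isinstance(rope_scaling, dict) or len(rope_scaling) != 2:
        problems.append(f"expected exactly the keys 'type' and 'factor', got {rope_scaling!r}")
        if not isinstance(rope_scaling, dict):
            return problems
    rope_type = rope_scaling.get("type")
    factor = rope_scaling.get("factor")
    if rope_type not in SUPPORTED_ROPE_TYPES:
        problems.append(f"unsupported type {rope_type!r}")
    if not isinstance(factor, float) or factor <= 1.0:
        problems.append(f"factor must be a float > 1, got {factor!r}")
    return problems


def check_model_config(config_path: str) -> List[str]:
    """Read a model's config.json and report rope_scaling problems."""
    with open(config_path, encoding="utf-8") as f:
        config = json.load(f)
    return rope_scaling_problems(config.get("rope_scaling"))
```

For example, `check_model_config("model/chinese-alpaca-2-7b-64k/config.json")` would be expected to report both the extra keys and the `yarn` type.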

|

closed

|

2024-01-12T10:59:41Z

|

2024-02-10T01:36:06Z

|

https://github.com/ymcui/Chinese-LLaMA-Alpaca-2/issues/500

|

[

"stale"

] |

hoohooer

| 6

|

pywinauto/pywinauto

|

automation

| 599

|

Custom Type Object wont Take Click

|

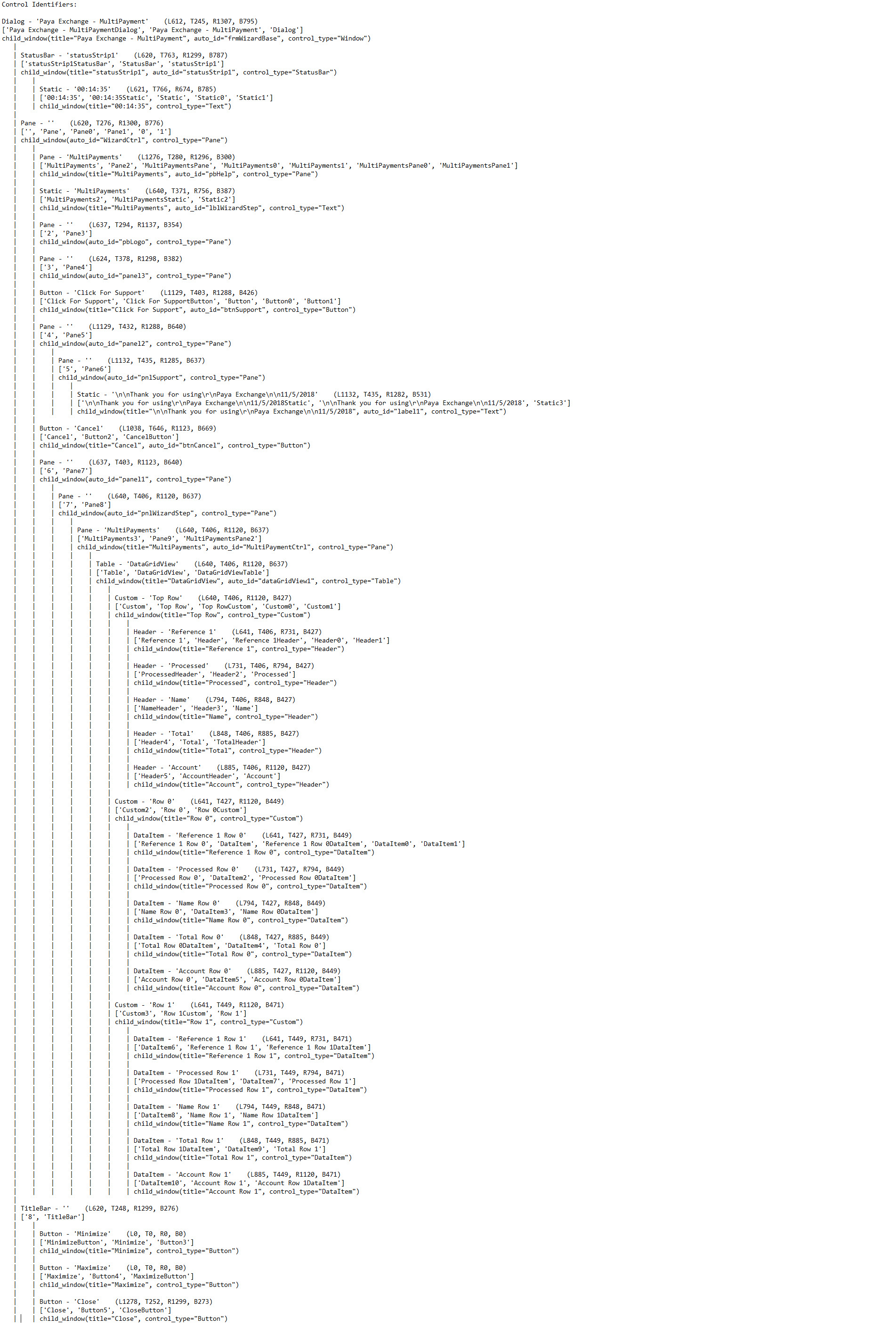

Currently I'm using pywinauto along with Behave to test a desktop application, and I have hit a roadblock. At one point in my automation I need to double-click; currently I have it working like this:

```python
from behave import step


@step("User selects {row} in Multi payment window")
def step_impl(context, row):
    """
    :param row: the row that we are going to select.
    :type context: behave.runner.Context
    """
    tries = 5
    for i in range(tries):
        try:
            context.popup[str(row)].click_input(button='left', double=True)
            break  # double-click succeeded; stop retrying
        except Exception:
            if i < tries - 1:  # i is zero-indexed
                continue
            else:
                break  # give up after the last attempt
```

It works perfectly! But if I'm not present or the machine is open, this causes issues because I'm using `click_input()`. So I tried using `click(double=True)`, but it returns this error: _AttributeError: Neither GUI element (wrapper) nor wrapper method 'click' were found (typo?)_

Is there any way for me to get around this? I need to be able to run in a VM without having a session open.

This is the result of running `print_control_identifiers()`. The items I'm trying to double-click are Row 0 and Row 1; they are custom items.
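The retry loop in the step definition above can be factored into a small reusable helper. This is a minimal sketch; `retry` is a hypothetical name, not part of pywinauto or Behave. Unlike the original loop, it stops as soon as an attempt succeeds and re-raises the last error instead of silently giving up. It only tidies the retry logic: it does not change the fact that `click_input()` drives the real mouse.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(action: Callable[[], T], tries: int = 5, delay: float = 0.5,
          exceptions: Tuple[Type[BaseException], ...] = (Exception,)) -> T:
    """Call `action` until it succeeds, at most `tries` times.

    Returns the first successful result; re-raises the last exception
    if every attempt fails.
    """
    for attempt in range(tries):
        try:
            return action()
        except exceptions:
            if attempt == tries - 1:
                raise  # out of attempts: surface the real error
            time.sleep(delay)  # give the UI a moment before retrying
    raise ValueError("tries must be >= 1")
```

Inside the step it would be used as `retry(lambda: context.popup[str(row)].click_input(button='left', double=True))`.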

|

open

|

2018-11-06T16:19:56Z

|

2018-11-10T14:47:43Z

|

https://github.com/pywinauto/pywinauto/issues/599

|

[

"question"

] |

LeoDOD

| 3

|

widgetti/solara

|

fastapi

| 402

|

Media placeholder Jupyter dashboard tutorial

|

closed

|

2023-11-27T14:55:40Z

|

2023-11-27T20:15:09Z

|

https://github.com/widgetti/solara/issues/402

|

[] |

maartenbreddels

| 1

|

|

feature-engine/feature_engine

|

scikit-learn

| 786

|

Yeo-Johnson inverse transform throws an error

|

```

InvalidIndexError Traceback (most recent call last)

File ~\Documents\Repositories\envs\fe_not\lib\site-packages\pandas\core\series.py:1289, in Series.__setitem__(self, key, value)

1288 try:

-> 1289 self._set_with_engine(key, value, warn=warn)

1290 except KeyError:

1291 # We have a scalar (or for MultiIndex or object-dtype, scalar-like)

1292 # key that is not present in self.index.

File ~\Documents\Repositories\envs\fe_not\lib\site-packages\pandas\core\series.py:1361, in Series._set_with_engine(self, key, value, warn)

1360 def _set_with_engine(self, key, value, warn: bool = True) -> None:

-> 1361 loc = self.index.get_loc(key)

1363 # this is equivalent to self._values[key] = value

File ~\Documents\Repositories\envs\fe_not\lib\site-packages\pandas\core\indexes\range.py:418, in RangeIndex.get_loc(self, key)

417 raise KeyError(key)

--> 418 self._check_indexing_error(key)

419 raise KeyError(key)

File ~\Documents\Repositories\envs\fe_not\lib\site-packages\pandas\core\indexes\base.py:6059, in Index._check_indexing_error(self, key)

6056 if not is_scalar(key):

6057 # if key is not a scalar, directly raise an error (the code below

6058 # would convert to numpy arrays and raise later any way) - GH29926

-> 6059 raise InvalidIndexError(key)

InvalidIndexError: 64 True

682 True

960 True

1384 True

1100 True

...

763 True

835 True

1216 True

559 True

684 True

Name: LotArea, Length: 1022, dtype: bool

During handling of the above exception, another exception occurred:

IndexingError Traceback (most recent call last)

Cell In [21], line 1

----> 1 train_unt = tf.inverse_transform(train_t)

2 test_unt = tf.inverse_transform(test_t)

File c:\users\sole\documents\repositories\feature_engine\feature_engine\transformation\yeojohnson.py:181, in YeoJohnsonTransformer.inverse_transform(self, X)

178 X = self._check_transform_input_and_state(X)

180 for feature in self.variables_:

--> 181 X[feature] = self._inverse_transform_series(

182 X[feature], lmbda=self.lambda_dict_[feature]

183 )

185 return X

File c:\users\sole\documents\repositories\feature_engine\feature_engine\transformation\yeojohnson.py:195, in YeoJohnsonTransformer._inverse_transform_series(self, X, lmbda)

193 x_inv[pos] = np.exp(X[pos]) - 1

194 else: # lmbda != 0

--> 195 x_inv[pos] = np.power(X[pos] * lmbda + 1, 1 / lmbda) - 1

197 # when x < 0

198 if lmbda != 2:

File ~\Documents\Repositories\envs\fe_not\lib\site-packages\pandas\core\series.py:1329, in Series.__setitem__(self, key, value)

1324 raise KeyError(

1325 "key of type tuple not found and not a MultiIndex"

1326 ) from err

1328 if com.is_bool_indexer(key):

-> 1329 key = check_bool_indexer(self.index, key)

1330 key = np.asarray(key, dtype=bool)

1332 if (

1333 is_list_like(value)

1334 and len(value) != len(self)

(...)

1339 # _where call below

1340 # GH#44265

File ~\Documents\Repositories\envs\fe_not\lib\site-packages\pandas\core\indexing.py:2662, in check_bool_indexer(index, key)

2660 indexer = result.index.get_indexer_for(index)

2661 if -1 in indexer:

-> 2662 raise IndexingError(

2663 "Unalignable boolean Series provided as "

2664 "indexer (index of the boolean Series and of "

2665 "the indexed object do not match)."

2666 )

2668 result = result.take(indexer)

2670 # fall through for boolean

IndexingError: Unalignable boolean Series provided as indexer (index of the boolean Series and of the indexed object do not match).

```

The error can be reproduced by applying `inverse_transform` to the demo code currently in the user guide.
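The traceback shows `x_inv[pos] = ...` failing inside `Series.__setitem__`: `x_inv` has a default `RangeIndex`, while the boolean mask `pos` carries the shuffled train-split labels (64, 682, 960, ...), so pandas cannot align them. One way to avoid this, sketched below as an illustration rather than feature_engine's actual fix, is to do the masking on plain NumPy arrays (purely positional) and re-attach the original index at the end. `inverse_yeo_johnson` is a hypothetical standalone function implementing the standard inverse Yeo-Johnson formulas.

```python
import numpy as np
import pandas as pd


def inverse_yeo_johnson(X: pd.Series, lmbda: float) -> pd.Series:
    """Invert the Yeo-Johnson transform of X for a fixed lambda.

    Masks are applied to NumPy arrays, so they are positional and never
    need to align with a pandas index; X's index is restored at the end.
    """
    y = X.to_numpy(dtype=float)
    x_inv = np.zeros_like(y)
    pos = y >= 0  # Yeo-Johnson maps x >= 0 to y >= 0 and x < 0 to y < 0

    # Branch for x >= 0
    if np.isclose(lmbda, 0):
        x_inv[pos] = np.exp(y[pos]) - 1
    else:
        x_inv[pos] = np.power(y[pos] * lmbda + 1, 1 / lmbda) - 1

    # Branch for x < 0
    if np.isclose(lmbda, 2):
        x_inv[~pos] = 1 - np.exp(-y[~pos])
    else:
        x_inv[~pos] = 1 - np.power(-(2 - lmbda) * y[~pos] + 1, 1 / (2 - lmbda))

    return pd.Series(x_inv, index=X.index, name=X.name)
```

Because the result reuses `X.index`, assigning it back with `X[feature] = ...` aligns correctly even after a train/test split.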

|

closed

|

2024-07-17T09:14:48Z

|

2024-08-23T17:21:04Z

|

https://github.com/feature-engine/feature_engine/issues/786

|

[] |

solegalli

| 0

|

521xueweihan/HelloGitHub

|

python

| 2,681

|

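[Open-source self-recommendation] CardCarousel - the easiest-to-use iOS carousel component

|

- Project URL: https://github.com/YuLeiFuYun/CardCarousel

- Category: Swift

- Project title: A powerful, easy-to-use carousel component that can also be configured with incantations

- Project description: CardCarousel gives you finer-grained control over the carousel: you can set the scroll direction, page size, page spacing, page alignment when scrolling stops, the scroll animation used during auto-scrolling, page transition effects, the paging threshold, the page deceleration rate during manual scrolling, and more. CardCarousel can be used in both UIKit and SwiftUI, supports method chaining, provides a rich set of initializers, and lets you set parameters with dot syntax. Better still, CardCarousel can also be configured through incantations.

- Highlights: finer-grained control, better ease of use, and incantations

- Sample code: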

```swift
CardCarousel(data: data) { (cell: CustomCell, index: Int, itemIdentifier: Item) in
    cell.imageView.kf.setImage(with: url)
    cell.indexLabel.backgroundColor = itemIdentifier.color
    cell.indexLabel.text = itemIdentifier.index
}
.cardLayoutSize(widthDimension: .fractionalWidth(0.7), heightDimension: .fractionalHeight(0.7))
.cardTransformMode(.liner(minimumAlpha: 0.3))
.cardCornerRadius(10)
.move(to: view)

// Incantation ("Advanced Animal" / 《高级动物》 style)
CardCarousel(咒语: "矛盾,自私,好色,爱喜,无聊,善良,爱喜 贪婪,真诚 善变,暗淡 无奈,埋怨", 施法材料: data, 作用域: CGRect(x: 0, y: 100, width: 393, height: 200))
    .法术目标(view)

// Equivalent to
CardCarousel(frame: CGRect(x: 0, y: 100, width: 393, height: 200), data: data)
    .cardLayoutSize(widthDimension: .fractionalWidth(0.7), heightDimension: .fractionalHeight(0.7))
    .cardTransformMode(.liner)
    .scrollDirection(.rightToLeft)
    .loopMode(.rollback)
    .move(to: view)
```

- Screenshots:

|

closed

|

2024-01-27T11:54:03Z

|

2024-04-24T12:12:53Z

|

https://github.com/521xueweihan/HelloGitHub/issues/2681

|

[] |

YuLeiFuYun

| 0

|

serengil/deepface

|

machine-learning

| 884

|

Memory usage in Windows Server is very high

|

I use the deepface package on Windows Server and it works well, but memory usage is very high.

Is this level of memory usage normal?

|

closed

|

2023-11-04T19:41:38Z

|

2023-11-05T19:20:25Z

|

https://github.com/serengil/deepface/issues/884

|

[

"question"

] |

ghost

| 1

|

jschneier/django-storages

|

django

| 603

|

S3Boto3 listdir can no longer create buckets

|

Hi,

With the recent update of `listdir` in S3Boto3Backend, an undocumented behavior has also changed; I am not sure whether this is a bug or intended.

Formerly, when performing a `listdir` on a non-existent bucket, the function would call `_get_or_create_bucket`, which would create the bucket if `AWS_AUTO_CREATE_BUCKET` was set to True, and `listdir` would then report that the bucket is empty.

After this commit, https://github.com/jschneier/django-storages/commit/b606a5129bc4d0f9189145c80382ba74b63350ef, this is no longer the case: a "NoSuchBucket" error is raised if the bucket does not already exist.

I am not sure which behavior is best. If we follow the principles of a CRUD API, `listdir` performs a GET request, and I would not expect it to modify the state of the remote resource by creating a bucket. But raising an error is also annoying, since always checking whether a bucket exists before calling `listdir` is not very practical.

A third option would be:

- When `AWS_AUTO_CREATE_BUCKET` is set to True, return that the bucket is empty `return ([], [])` even if the bucket does not exist, because the bucket will be created anyway at the first occasion and this setting is mainly used in test environments

- When `AWS_AUTO_CREATE_BUCKET` is set to False, raise the error to warn the user about creating the bucket first.
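The third option can be sketched independently of boto3. In this minimal sketch, `listdir_proposed`, the injected `list_fn` callable, and the local `NoSuchBucket` exception are all hypothetical stand-ins, not django-storages or botocore APIs; the only assumption carried over is that `listdir` returns a `(directories, files)` pair.

```python
from typing import Callable, List, Tuple

Listing = Tuple[List[str], List[str]]  # (directories, files), as listdir returns


class NoSuchBucket(Exception):
    """Stand-in for the S3 "NoSuchBucket" error (hypothetical, for illustration)."""


def listdir_proposed(list_fn: Callable[[str], Listing], path: str,
                     auto_create_bucket: bool) -> Listing:
    """Treat a missing bucket as empty only when auto-creation is enabled."""
    try:
        return list_fn(path)
    except NoSuchBucket:
        if auto_create_bucket:
            # The bucket will be created on the first write anyway.
            return ([], [])
        raise  # warn the user to create the bucket first
```

The design keeps `listdir` read-only in both cases: it never creates the bucket itself, it only decides whether a missing bucket counts as empty.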

Please let me know what you think, or close the issue if the current behavior is intended.

Thanks

|

closed

|

2018-09-20T14:18:03Z

|

2020-02-03T06:08:02Z

|

https://github.com/jschneier/django-storages/issues/603

|

[

"s3boto"

] |

baldychristophe

| 1

|

capitalone/DataProfiler

|

pandas

| 856

|

Add documentation for `sampling_ratio` option

|

Related to PR #845: add documentation for the new `sampling_ratio` option parameter.

|

closed

|

2023-06-05T17:40:23Z

|

2023-06-28T17:24:04Z

|

https://github.com/capitalone/DataProfiler/issues/856

|

[

"Documentation"

] |

taylorfturner

| 1

|

falconry/falcon

|

api

| 1,950

|

ASGI mount

|

Hi, I'm really glad to see Falcon supporting ASGI - great job!

In some other ASGI frameworks (for example FastAPI, Starlette and BlackSheep) there is the ability to mount other ASGI apps at a certain route. For example:

```python

asgi_app = falcon.asgi.App()

asgi_app.mount('/admin/', some_other_asgi_app)

```

It's nice because you can include third-party ASGI apps within your own app (in my case, [Piccolo admin](https://github.com/piccolo-orm/piccolo_admin)). It also lets you compose your app in interesting ways, by building it from smaller sub-apps.

I wonder if you'd consider this in a future version of Falcon? Or if it's currently possible, and I'm unaware.

If you feel it's out of scope, please feel free to close this issue. Thanks.
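For context on what mounting means at the ASGI level, here is a framework-agnostic sketch of a dispatcher that routes requests to sub-apps by path prefix. `PrefixMount` is hypothetical and is not a Falcon API; the `mount()` call above is the proposal under discussion. The sketch moves the matched prefix from `path` into `root_path`, which is one common convention; frameworks differ on this detail, so check what the mounted app expects.

```python
class PrefixMount:
    """Route ASGI requests to sub-apps by URL path prefix (illustrative sketch)."""

    def __init__(self, default_app):
        self.default_app = default_app
        self._mounts = []  # (prefix, app) pairs, longest prefix first

    def mount(self, prefix, app):
        self._mounts.append((prefix.rstrip('/'), app))
        self._mounts.sort(key=lambda item: len(item[0]), reverse=True)

    async def __call__(self, scope, receive, send):
        if scope['type'] in ('http', 'websocket'):
            path = scope['path']
            for prefix, app in self._mounts:
                if path == prefix or path.startswith(prefix + '/'):
                    # Give the sub-app a copy of the scope with the prefix
                    # moved from `path` into `root_path`.
                    child = dict(scope)
                    child['root_path'] = scope.get('root_path', '') + prefix
                    child['path'] = path[len(prefix):] or '/'
                    await app(child, receive, send)
                    return
        # Unmatched paths and other scopes (e.g. lifespan) go to the main app.
        await self.default_app(scope, receive, send)
```

Matching on `prefix + '/'` keeps `/administrator` from being routed to an app mounted at `/admin/`.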

|

open

|

2021-08-14T22:17:27Z

|

2023-07-24T10:34:26Z

|

https://github.com/falconry/falcon/issues/1950

|

[

"enhancement",

"proposal",

"community"

] |

dantownsend

| 1

|

ansible/ansible

|

python

| 84,680

|

Cron module fails to properly work under some cases on systems with systemd-cron

|

### Summary

Hi!

I ran into a strange situation and spent a few days debugging it properly.

It started with a strange problem: Ansible's cron module failed to install any jobs for any user (or so I thought), throwing a (not very useful) Python traceback and a

```

CronTabError: Unable to read crontab

```

error.

I also found that creating even an empty crontab (`crontab <(echo -n)`) fixes the issue.

So the problem only happens when the user has no personal crontab installed.

After endless hours of debugging, I found that the problem happens when Ansible calls [this](https://github.com/ansible/ansible/blob/cae4f90b21bc40c88a00e712d28531ab0261f759/lib/ansible/modules/cron.py#L539C35-L539C51) command.

It turns out that `systemd-cron`'s `crontab` returns exit code `2` (!!!) if the user has no crontab yet.

(I believe [here](https://github.com/systemd-cron/systemd-cron/blob/5f6f344de122476a9585d09f3f335138d231066e/src/bin/crontab.cpp#L226C61-L226C67) is the source, but I'm not sure about that.)

Ansible, however, [expects](https://github.com/ansible/ansible/blob/cae4f90b21bc40c88a00e712d28531ab0261f759/lib/ansible/modules/cron.py#L283) either `0` (success) or `1` (which, as the code comment states, it treats as a missing crontab), and bails out otherwise.

I already opened an [issue](https://github.com/systemd-cron/systemd-cron/issues/163) on the systemd-cron repo asking about making it consistent with other cron implementations, but I'm not sure the author will fix it (and even if they do, there is no way a fixed release will be shipped on all the LTS distros where I hit this issue).

Would it be possible to (also) fix this on the Ansible side, either by relying less strictly on the exit code or by also accepting `rc=2`?

(I can make a PR if needed.)
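A minimal sketch of the more tolerant read being proposed, written as standalone Python rather than as a patch to Ansible's cron module: `read_crontab`, `run_command`, and `NO_CRONTAB_RCS` are hypothetical names, and the command runner is injected so the logic can be exercised without a real `crontab`. Treating any non-zero code as "no crontab" can mask genuine errors (e.g. permission problems), so a stricter variant might also inspect stderr.

```python
import subprocess
from typing import Callable, List, Optional, Tuple

RunResult = Tuple[int, str, str]  # (returncode, stdout, stderr)

# Exit codes treated as "this user has no crontab yet":
# 1 is what Ansible's cron module already accepts (per the report above);
# 2 is what systemd-cron's crontab returns in the same situation.
NO_CRONTAB_RCS = frozenset({1, 2})


class CronTabError(Exception):
    pass


def run_command(cmd: List[str]) -> RunResult:
    """Default runner: execute cmd and capture its output as text."""
    proc = subprocess.run(cmd, capture_output=True, text=True)
    return proc.returncode, proc.stdout, proc.stderr


def read_crontab(user: Optional[str] = None,
                 run: Callable[[List[str]], RunResult] = run_command) -> List[str]:
    """Return the user's crontab lines, or [] if the user has none."""
    cmd = ['crontab'] + (['-u', user] if user else []) + ['-l']
    rc, out, err = run(cmd)
    if rc == 0:
        return out.splitlines()
    if rc in NO_CRONTAB_RCS:
        return []  # missing crontab: start from an empty one
    raise CronTabError(f"Unable to read crontab (rc={rc}): {err.strip()}")
```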

### Issue Type

Bug Report

### Component Name

cron

### Ansible Version

```console

$ ansible --version

ansible [core 2.18.2]

config file = None

configured module search path = ['/home/mva/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']

ansible python module location = /home/mva/.local/pipx/venvs/ansible/lib/python3.12/site-packages/ansible

ansible collection location = /home/mva/.ansible/collections:/usr/share/ansible/collections

executable location = /home/mva/.local/bin/ansible

python version = 3.12.8 (main, Feb 3 2025, 02:21:59) [GCC 13.3.1 20241220] (/home/mva/.local/pipx/venvs/ansible/bin/python)

jinja version = 3.1.4

libyaml = True

```

### Configuration

```console

# if using a version older than ansible-core 2.12 you should omit the '-t all'

$ ansible-config dump --only-changed -t all

ANSIBLE_FORCE_COLOR(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = True

CACHE_PLUGIN(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = jsonfile

CACHE_PLUGIN_CONNECTION(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = .ansible/fact_caching

CONFIG_FILE() = /home/mva/.vcs_repos/alpha/ansbl/ansible.cfg

DEFAULT_BECOME(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = True

DEFAULT_BECOME_METHOD(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = sudo

DEFAULT_BECOME_USER(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = root

DEFAULT_FORKS(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = 7

DEFAULT_HASH_BEHAVIOUR(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = replace

DEFAULT_HOST_LIST(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = ['/home/mva/.vcs_repos/alpha/ansbl/meta/inv']

DEFAULT_STDOUT_CALLBACK(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = yaml

DEPRECATION_WARNINGS(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = True

EDITOR(env: EDITOR) = nvim

HOST_KEY_CHECKING(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = True

PAGER(env: PAGER) = less

RETRY_FILES_ENABLED(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = False

SYSTEM_WARNINGS(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = True

GALAXY_SERVERS:

BECOME:

======

runas:

_____

become_user(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = root

su:

__

become_user(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = root

sudo:

____

become_user(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = root

CACHE:

=====

jsonfile:

________

_uri(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = /home/mva/.vcs_repos/alpha/ansbl/.ansible/fact_caching

CONNECTION:

==========

paramiko_ssh:

____________

host_key_checking(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = True

record_host_keys(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = False

ssh:

___

control_path(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = %(directory)s/%%h-%%p-%%r

host_key_checking(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = True

pipelining(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = True

ssh_args(/home/mva/.vcs_repos/alpha/ansbl/ansible.cfg) = -o ControlMaster=auto -o ControlPersist=300s

```

### OS / Environment

Gentoo (host), Ubuntu 24.04 (target)

### Steps to Reproduce


```yaml
- ansible.builtin.cron:
    name: "moo"
    special_time: "reboot"
    job: "echo"
    state: "present"
```

### Expected Results

Cronjob added

### Actual Results

```console

fatal: [atlas_db]: FAILED! => changed=false

module_stderr: |-

OpenSSH_9.8p1, OpenSSL 3.3.2 3 Sep 2024

debug1: Reading configuration data [redacted]

debug1: [redacted] line 15: Applying options for *

debug3: [redacted] line 46: Including file [redacted] depth 0 (parse only)

debug1: Reading configuration data [redacted]

debug3: kex names ok: [diffie-hellman-group1-sha1]

debug3: kex names ok: [diffie-hellman-group1-sha1]

debug3: kex names ok: [diffie-hellman-group1-sha1]

debug3: [redacted] line 93: Including file [redacted] depth 0 (parse only)

debug1: Reading configuration data [redacted]

debug3: [redacted] line 96: Including file [redacted] depth 0 (parse only)

debug1: Reading configuration data [redacted]

debug3: [redacted] line 6: Including file [redacted] depth 1 (parse only)

debug1: Reading configuration data [redacted]

debug3: [redacted] line 7: Including file [redacted] depth 1 (parse only)

debug1: Reading configuration data [redacted]

debug3: [redacted] line 99: Including file [redacted] depth 0 (parse only)

debug1: Reading configuration data [redacted]

debug3: [redacted] line 100: Including file [redacted] depth 0 (parse only)

debug1: Reading configuration data [redacted]

debug3: [redacted] line 103: Including file [redacted] depth 0 (parse only)

debug1: Reading configuration data [redacted]

debug1: [redacted] line 105: Applying options for *

debug3: [redacted] line 106: Including file [redacted] depth 0

debug1: Reading configuration data [redacted]

debug1: [redacted] line 1: Applying options for *

debug2: add_identity_file: ignoring duplicate key ~/.ssh/fp/all/ed.pub

debug1: Reading configuration data /etc/ssh/ssh_config

debug3: /etc/ssh/ssh_config line 17: Including file /etc/ssh/ssh_config.d/20-systemd-ssh-proxy.conf depth 0

debug1: Reading configuration data /etc/ssh/ssh_config.d/20-systemd-ssh-proxy.conf

debug3: /etc/ssh/ssh_config line 17: Including file /etc/ssh/ssh_config.d/9999999gentoo-security.conf depth 0

debug1: Reading configuration data /etc/ssh/ssh_config.d/9999999gentoo-security.conf

debug3: /etc/ssh/ssh_config line 17: Including file /etc/ssh/ssh_config.d/9999999gentoo.conf depth 0

debug1: Reading configuration data /etc/ssh/ssh_config.d/9999999gentoo.conf

debug2: resolve_canonicalize: hostname 100.100.100.1 is address

debug1: Setting implicit ProxyCommand from ProxyJump: ssh -p 55222 -vvv -W '[%h]:%p' [redacted]

debug3: expanded UserKnownHostsFile '~/.ssh/known_hosts' -> '[redacted]

debug3: expanded UserKnownHostsFile '~/.ssh/known_hosts2' -> '[redacted]

debug1: Authenticator provider $SSH_SK_PROVIDER did not resolve; disabling

debug1: auto-mux: Trying existing master at '[redacted]

debug2: fd 3 setting O_NONBLOCK

debug2: mux_client_hello_exchange: master version 4

debug3: mux_client_forwards: request forwardings: 0 local, 0 remote

debug3: mux_client_request_session: entering

debug3: mux_client_request_alive: entering

debug3: mux_client_request_alive: done pid = 17733

debug3: mux_client_request_session: session request sent

debug1: mux_client_request_session: master session id: 2

Traceback (most recent call last):

File "<stdin>", line 107, in <module>

File "<stdin>", line 99, in _ansiballz_main

File "<stdin>", line 47, in invoke_module

File "<frozen runpy>", line 226, in run_module

File "<frozen runpy>", line 98, in _run_module_code

File "<frozen runpy>", line 88, in _run_code

File "/tmp/ansible_ansible.builtin.cron_payload_n4yg75c0/ansible_ansible.builtin.cron_payload.zip/ansible/modules/cron.py", line 768, in <module>

File "/tmp/ansible_ansible.builtin.cron_payload_n4yg75c0/ansible_ansible.builtin.cron_payload.zip/ansible/modules/cron.py", line 630, in main

File "/tmp/ansible_ansible.builtin.cron_payload_n4yg75c0/ansible_ansible.builtin.cron_payload.zip/ansible/modules/cron.py", line 257, in __init__

File "/tmp/ansible_ansible.builtin.cron_payload_n4yg75c0/ansible_ansible.builtin.cron_payload.zip/ansible/modules/cron.py", line 279, in read

CronTabError: Unable to read crontab

debug3: mux_client_read_packet_timeout: read header failed: Broken pipe

debug2: Received exit status from master 1

module_stdout: ''

msg: |-

MODULE FAILURE: No start of json char found

See stdout/stderr for the exact error

rc: 1

```

### Code of Conduct

- [x] I agree to follow the Ansible Code of Conduct

|

closed

|

2025-02-06T15:09:38Z

|

2025-02-25T14:00:07Z

|

https://github.com/ansible/ansible/issues/84680

|

[

"module",

"bug",

"affects_2.18"

] |

msva

| 5

|

redis/redis-om-python

|

pydantic

| 59

|

List and tuple fields could contain types other than strings

|

'this Preview release, list and tuple fields can only contain strings. Problem field: . See docs: TODO'

|

closed

|

2022-01-01T08:29:00Z

|

2022-08-30T09:48:28Z

|

https://github.com/redis/redis-om-python/issues/59

|

[] |

gam-phon

| 1

|

pydata/pandas-datareader

|

pandas

| 383

|

Eurostat - mismatched tag

|

`eu_trade_since_2000 = web.DataReader("DS-043327", 'eurostat')`

gives the message

```
File "<string>", line unknown
ParseError: mismatched tag: line 28, column 8
```

That's not very informative. I have no idea what is going on at all. Part of the pip freeze output is:

```
numpy==1.13.1
pandas==0.20.3
pandas-datareader==0.5.0
```
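The `ParseError: mismatched tag` message comes from Python's XML parser (`xml.etree.ElementTree`), which raises it whenever the payload is not well-formed XML, for instance an HTML page with unclosed tags. Whether Eurostat returned something like that here is an assumption. To make such failures more informative, a hypothetical wrapper (`parse_or_explain`, not part of pandas-datareader) can report the offending line of the response using `ParseError.position`:

```python
import xml.etree.ElementTree as ET


def parse_or_explain(payload: str) -> ET.Element:
    """Parse XML, or raise a ValueError that quotes the offending line."""
    try:
        return ET.fromstring(payload)
    except ET.ParseError as err:
        line_no, _column = err.position  # position reported by the parser
        lines = payload.splitlines()
        context = lines[line_no - 1].strip() if 0 < line_no <= len(lines) else ""
        raise ValueError(
            f"response is not well-formed XML ({err}); "
            f"line {line_no} reads: {context[:200]!r}"
        ) from err
```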

|

closed

|

2017-08-24T12:47:41Z

|

2019-09-26T21:20:30Z

|

https://github.com/pydata/pandas-datareader/issues/383

|

[] |

HristoBuyukliev

| 8

|

sczhou/CodeFormer

|

pytorch

| 207

|

Great job! How amazing, I was planning on reproducing the code myself today, but then it suddenly got updated!

|

open

|

2023-04-19T15:17:09Z

|

2023-04-19T15:20:10Z

|

https://github.com/sczhou/CodeFormer/issues/207

|

[] |

Liar-zzy

| 1

|

|

Sanster/IOPaint

|

pytorch

| 376

|

[Feature Request] Increase/decrease maximum base cursor size range.

|

Would it be possible to extend the range of cursor sizes? I'd love to be able to make my cursor as small as 1-2 px so I can mask very precisely on smaller images. If this is something I can adjust myself, I'd appreciate some guidance. To be clear, I don't need help using the normal cursor-size slider; I'm asking for a change to the slider's minimum and maximum sizes, or help making that change myself. Any help is greatly appreciated! :)

|

closed

|

2023-09-21T23:05:38Z

|

2025-03-21T02:05:02Z

|

https://github.com/Sanster/IOPaint/issues/376

|

[

"stale"

] |

ArchAngelAries

| 2

|

sammchardy/python-binance

|

api

| 1,459

|

python-binance ThreadedWebsocketManager not working with Python 3.11 or 3.12?

|

**Describe the bug**

When I run the following code in PyCharm, it doesn’t print any information. However, if I run it in debug mode, the information appears. This causes the code to not function properly on Python 3.11 or 3.12.

**To Reproduce**

```python
from binance import ThreadedWebsocketManager


def handle_socket_message(msg):
    print(msg)
    print(1, flush=True)


def main():
    # socket manager using threads
    twm = ThreadedWebsocketManager()
    twm.start()
    twm.start_multiplex_socket(callback=handle_socket_message, streams=['!miniTicker@arr'])
    twm.start_futures_multiplex_socket(callback=handle_socket_message, streams=['!miniTicker@arr'])

    # join the threaded managers to the main thread
    while True:
        twm.join(3)
        print("join")


if __name__ == '__main__':
    main()
```

**Expected behavior**

I need python-binance to work properly on Python 3.11 or 3.12 and to print the information.

**Environment (please complete the following information):**

- Python version: 3.11 or 3.12

- Virtual Env: conda

- OS: Mac, Ubuntu

- python-binance version 1.0.21

**Logs or Additional context**

This is running

This is debug

|

closed

|

2024-10-29T08:17:14Z

|

2024-10-30T01:11:17Z

|

https://github.com/sammchardy/python-binance/issues/1459

|

[] |

XiaoWXHang

| 5

|

horovod/horovod

|

machine-learning

| 4,043

|

NVIDIA CUDA TOOLKIT version to run Horovod in Conda Environment

|

Hi Developers

I want to install Horovod inside a Conda environment, for which I need NCCL from the NVIDIA CUDA Toolkit installed on the system. Which version of the NVIDIA CUDA Toolkit is required to build Horovod inside a Conda environment for use with PyTorch?

Many Thanks

Pushkar

|

open

|

2024-05-10T06:56:06Z

|

2025-01-31T23:14:47Z

|

https://github.com/horovod/horovod/issues/4043

|

[

"wontfix"

] |

ppandit95

| 2

|

huggingface/datasets

|

computer-vision

| 6,791

|

`add_faiss_index` raises ValueError: not enough values to unpack (expected 2, got 1)

|

### Describe the bug

Calling `add_faiss_index` on a `Dataset` with a column argument raises a `ValueError`. Here is the traceback:

```python

214 def replacement_add(self, x):

215 """Adds vectors to the index.

216 The index must be trained before vectors can be added to it.

217 The vectors are implicitly numbered in sequence. When `n` vectors are

(...)

224 `dtype` must be float32.

225 """

--> 227 n, d = x.shape

228 assert d == self.d

229 x = np.ascontiguousarray(x, dtype='float32')

ValueError: not enough values to unpack (expected 2, got 1)

```

### Steps to reproduce the bug

1. Load any dataset, e.g. `ds = datasets.load_dataset("wikimedia/wikipedia", "20231101.en")["train"]`

2. Add a FAISS index on any column: `ds.add_faiss_index('title')`
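The traceback shows FAISS's `add()` starting with `n, d = x.shape`, so it needs a 2-D batch of `n` vectors of dimension `d`. A column of scalars or raw text such as `title` cannot provide that; `add_faiss_index` is meant for a column of fixed-length embeddings. The check below mirrors that requirement with NumPy only; `as_faiss_matrix` is a hypothetical helper, not part of `datasets` or `faiss`.

```python
import numpy as np


def as_faiss_matrix(vectors) -> np.ndarray:
    """Return vectors as a C-contiguous float32 array of shape (n, d)."""
    x = np.asarray(vectors, dtype=np.float32)
    if x.ndim != 2:
        raise ValueError(
            f"expected a 2-D (n, d) array of vectors, got shape {x.shape}; "
            "index a column of fixed-length embeddings, not scalars or raw text"
        )
    return np.ascontiguousarray(x)
```

A 1-D input reproduces the original failure mode: `n, d = np.zeros(3).shape` raises the same "not enough values to unpack (expected 2, got 1)".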

### Expected behavior

The index should be created

### Environment info

- `datasets` version: 2.18.0

- Platform: Linux-6.5.0-26-generic-x86_64-with-glibc2.35

- Python version: 3.9.19

- `huggingface_hub` version: 0.22.2

- PyArrow version: 15.0.2

- Pandas version: 2.2.1

- `fsspec` version: 2024.2.0

- `faiss-cpu` version: 1.8.0

|

closed

|

2024-04-08T01:57:03Z

|

2024-04-11T15:38:05Z

|

https://github.com/huggingface/datasets/issues/6791

|

[] |

NeuralFlux

| 3

|

benbusby/whoogle-search

|

flask

| 250

|

[BUG] Whoogle spits out garbage when going to next page of search results

|

Whenever I try to go to the next page of a search (e.g. COVID-19), it spits out a ton of garbage into the search bar.

The exact string is the following:

`gAAAAABgZPRDBmNckg-txy85CufwUIccaLrnLWvW7gm9lyPJAXd8uFW1bFln-rKIyC3QxQAkoMDGjcZDgNlEtAS5_Kluz1OpGg==`

It's the same no matter what I search for.
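The string starts with `gAAAAA`, which is how a Fernet token (the symmetric-encryption format from the Python `cryptography` package) looks after base64url-encoding its `0x80` version byte and timestamp. That suggests the next-page link carries an encrypted query rather than random garbage; whether Whoogle encrypts queries this way is an assumption here. The stdlib-only sketch below decodes just the unencrypted header, following the Fernet layout (version, 8-byte big-endian timestamp, 16-byte IV, ciphertext in 16-byte blocks, 32-byte HMAC). `inspect_fernet_token` is a hypothetical helper.

```python
import base64
import datetime

FERNET_VERSION = 0x80
# version (1) + timestamp (8) + IV (16) + HMAC (32); ciphertext comes in 16-byte blocks
_OVERHEAD = 1 + 8 + 16 + 32


def inspect_fernet_token(token: str):
    """Return (creation time, ciphertext length) from a Fernet token's header."""
    raw = base64.urlsafe_b64decode(token)
    if len(raw) < _OVERHEAD + 16 or (len(raw) - _OVERHEAD) % 16:
        raise ValueError("length does not match the Fernet layout")
    if raw[0] != FERNET_VERSION:
        raise ValueError("first byte is not the Fernet version byte 0x80")
    seconds = int.from_bytes(raw[1:9], "big")
    created = datetime.datetime.fromtimestamp(seconds, tz=datetime.timezone.utc)
    return created, len(raw) - _OVERHEAD
```

Applied to the string above, this yields a creation time of 2021-03-31 22:14:27 UTC (about five minutes before this issue was filed) and a single 16-byte ciphertext block, consistent with that reading.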

Steps to reproduce the behavior:

1. Search something

2. Go to the next page

3. See garbage in search bar

**Deployment Method**

- [ ] Heroku (one-click deploy)

- [ ] Docker

- [ ] `run` executable

- [x] pip/pipx

- [ ] Other: [describe setup]

**Version of Whoogle Search**

- [ ] Latest build from [source] (i.e. GitHub, Docker Hub, pip, etc)

- [x] Version [v0.3.1]

- [ ] Not sure

**Desktop (please complete the following information):**

- OS: Windows 7 SP1 Ultimate 64-bit

- Browser: Google Chrome

- Version: 89

**Additional context**

The issue always occurs.

|

closed

|

2021-03-31T22:19:16Z

|

2021-04-27T13:46:19Z

|

https://github.com/benbusby/whoogle-search/issues/250

|

[

"bug"

] |

Rowan-Bird

| 5

|

aiortc/aiortc

|

asyncio

| 558

|

Does the Raspberry Pi 4B not support google-crc32c?

|

```
ity -Wdate-time -D_FORTIFY_SOURCE=2 -fPIC -I/usr/include/python3.7m -c src/google_crc32c/_crc32c.c -o build/temp.linux-aarch64-3.7/src/google_crc32c/_crc32c.o
src/google_crc32c/_crc32c.c:3:10: fatal error: crc32c/crc32c.h: No such file or directory
 #include <crc32c/crc32c.h>
          ^~~~~~~~~~~~~~~~~
compilation terminated.
ERROR:root:Compiling the C Extension for the crc32c library failed. To enable building / installing a pure-Python-only version, set 'CRC32C_PURE_PYTHON=1' in the environment.
error: command 'aarch64-linux-gnu-gcc' failed with exit status 1
----------------------------------------
Failed building wheel for google-crc32c
Running setup.py clean for google-crc32c
Failed to build google-crc32c
Installing collected packages: pylibsrtp, google-crc32c, aiortc
Running setup.py install for google-crc32c ... error
Complete output from command /usr/bin/python3 -u -c "import setuptools, tokenize;__file__='/tmp/pip-install-zemk6oyq/google-crc32c/setup.py';f=getattr(tokenize, 'open', open)(__file__);code=f.read().replace('\r\n', '\n');f.close();exec(compile(code, __file__, 'exec'))" install --record /tmp/pip-record-_30_twxh/install-record.txt --single-version-externally-managed --compile:
running install
running build
running build_py
creating build
creating build/lib.linux-aarch64-3.7
creating build/lib.linux-aarch64-3.7/google_crc32c
copying src/google_crc32c/cext.py -> build/lib.linux-aarch64-3.7/google_crc32c
copying src/google_crc32c/_checksum.py -> build/lib.linux-aarch64-3.7/google_crc32c
copying src/google_crc32c/__config__.py -> build/lib.linux-aarch64-3.7/google_crc32c
copying src/google_crc32c/__init__.py -> build/lib.linux-aarch64-3.7/google_crc32c
copying src/google_crc32c/python.py -> build/lib.linux-aarch64-3.7/google_crc32c
running build_ext
building 'google_crc32c._crc32c' extension
creating build/temp.linux-aarch64-3.7
creating build/temp.linux-aarch64-3.7/src
creating build/temp.linux-aarch64-3.7/src/google_crc32c
aarch64-linux-gnu-gcc -pthread -DNDEBUG -g -fwrapv -O2 -Wall -g -fstack-protector-strong -Wformat -Werror=format-security -Wdate-time -D_FORTIFY_SOURCE=2 -fPIC -I/usr/include/python3.7m -c src/google_crc32c/_crc32c.c -o build/temp.linux-aarch64-3.7/src/google_crc32c/_crc32c.o
src/google_crc32c/_crc32c.c:3:10: fatal error: crc32c/crc32c.h: No such file or directory
 #include <crc32c/crc32c.h>
          ^~~~~~~~~~~~~~~~~
compilation terminated.
ERROR:root:Compiling the C Extension for the crc32c library failed. To enable building / installing a pure-Python-only version, set 'CRC32C_PURE_PYTHON=1' in the environment.
error: command 'aarch64-linux-gnu-gcc' failed with exit status 1
```

Raspberry pi:

Distributor ID: Debian

Description: Debian GNU/Linux 10 (buster)

Release: 10

Codename: buster

|

closed

|

2021-09-03T10:14:20Z

|

2021-09-06T01:04:11Z

|

https://github.com/aiortc/aiortc/issues/558

|

[] |

Canees

| 2

|

junyanz/pytorch-CycleGAN-and-pix2pix

|

computer-vision

| 1,409

|

About transfer learning

|

closed

|

2022-04-18T10:05:36Z

|

2022-04-18T10:05:43Z

|

https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/1409

|

[] |

ZhenyuLiu-SYSU

| 0

|

|

deepfakes/faceswap

|

deep-learning

| 722

|

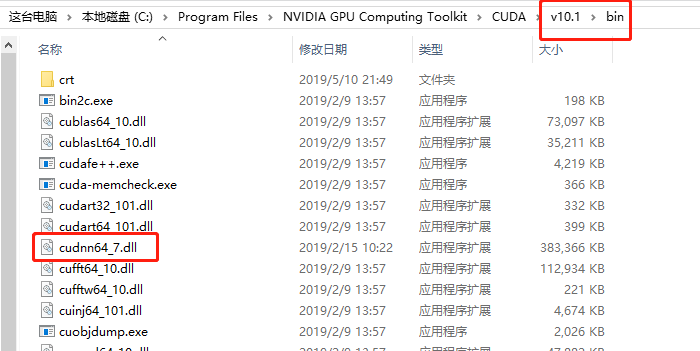

cuDNN is installed but not found

|

**Describe the bug**

cuDNN is installed but not found

**To Reproduce**

python setup.py

**Expected behavior**

WARNING Running without root/admin privileges

INFO The tool provides tips for installation

and installs required python packages

INFO Setup in Windows 10

INFO Installed Python: 3.6.8 64bit

INFO Encoding: cp936

INFO Upgrading pip...

INFO Installed pip: 19.1.1

Enable Docker? [y/N] n

INFO Docker Disabled

Enable CUDA? [Y/n] y

INFO CUDA Enabled

INFO CUDA version: 10.1

ERROR cuDNN not found. See https://github.com/deepfakes/faceswap/blob/master/INSTALL.md#cudnn for instructions

WARNING The minimum Tensorflow requirement is 1.12.

Tensorflow currently has no official prebuild for your CUDA, cuDNN combination.

Either install a combination that Tensorflow supports or build and install your own tensorflow-gpu.

CUDA Version: 10.1

cuDNN Version:

Help:

Building Tensorflow: https://www.tensorflow.org/install/install_sources

Tensorflow supported versions: https://www.tensorflow.org/install/source#tested_build_configurations

Location of custom tensorflow-gpu wheel (leave blank to manually install):

INFO Checking System Dependencies...

INFO CMake version: 3.14.3

INFO Visual Studio 2015 version: 14.0

INFO Visual Studio C++ version: v14.0.24215.01

INFO 1. Install PIP requirements

You may want to execute `chcp 65001` in cmd line

to fix Unicode issues on Windows when installing dependencies

**Screenshots**

**Desktop (please complete the following information):**

- Windows 10

|

closed

|

2019-05-10T15:00:49Z

|

2019-05-10T15:09:23Z

|

https://github.com/deepfakes/faceswap/issues/722

|

[] |

chenkarl

| 1

|

Nemo2011/bilibili-api

|

api

| 698

|

[Question] Error when uploading a video: AttributeError: 'NoneType' object has no attribute '__dict__'. Did you mean: '__dir__'?

|

**Python version:** 3.10

**Module version:** 16.2.0

**Runtime environment:** Windows

<!-- Be sure to provide the module version and make sure it is the latest release -->

---

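I followed the example in the documentation to upload a video and changed the credential, the video cover, and the video file accordingly, but I ran into this error: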

AttributeError: 'NoneType' object has no attribute '__dict__'. Did you mean: '__dir__'?

|

closed

|

2024-03-01T06:33:22Z

|

2024-03-15T14:14:36Z

|

https://github.com/Nemo2011/bilibili-api/issues/698

|

[

"bug",

"solved"

] |

RickyCui010

| 8

|

snarfed/granary

|

rest-api

| 46

|

Duplicate in-reply-to links on Tweets

|

In the last week or so, I've noticed Twitter posts have started showing up with duplicated in-reply-to links:

```

<article class="h-entry h-as-note">

<span class="u-uid">tag:twitter.com:653670712104738816</span>

<time class="dt-published" datetime="2015-10-12T20:36:57+00:00">2015-10-12T20:36:57+00:00</time>

<div class="h-card p-author">

<div class="p-name"><a class="u-url" href="https://kylewm.com">Kyle Mahan</a></div>

<img class="u-photo" src="https://twitter.com/kylewmahan/profile_image?size=original" alt="">

</div>

<a class="u-url" href="https://twitter.com/kylewmahan/status/653670712104738816"></a>

<div class="e-content p-name">

<a href="https://twitter.com/WeWantPlates">@WeWantPlates</a> <a href="https://twitter.com/BethFad91">@BethFad91</a> oh good, it looks like most of the paint has already been scraped off by other people's utensils

</div>

<a class="u-in-reply-to" href="https://twitter.com/WeWantPlates/status/653649456454365184"></a>

<a class="u-in-reply-to" href="https://twitter.com/WeWantPlates/status/653649456454365184"></a>

</article>

```

I haven't looked into what's causing this yet.

|

closed

|

2015-10-13T15:55:00Z

|

2015-10-13T18:05:29Z

|

https://github.com/snarfed/granary/issues/46

|

[] |

karadaisy

| 4

|

CanopyTax/asyncpgsa

|

sqlalchemy

| 101

|

Asyncpg connections are not returned to the pool

|

An asyncpg connection is not returned to the pool if an exception is raised in `__aenter__` after `acquire_context` has been entered.

```

async def __aenter__(self):
    self.acquire_context = self.pool.acquire(timeout=self.timeout)
    con = await self.acquire_context.__aenter__()
    self.transaction = con.transaction(**self.trans_kwargs)
    await self.transaction.__aenter__()
    return con

```

A `CancelledError` may be raised while awaiting `transaction.__aenter__()`, after `acquire_context` has already been entered. In that case the connection is never returned to the pool.
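The fix the report points toward is to hand the connection back if anything fails between acquiring it and starting the transaction. The code below is a minimal, self-contained sketch of that pattern. `FakePool`, `FakeConnection`, `FakeTransaction`, and `FakeAcquireContext` are hypothetical stand-ins that let it run without a database. `TransactionContext` is an illustrative name, not asyncpgsa's actual class.

```python
import asyncio


class FakeTransaction:
    """Hypothetical stand-in for an asyncpg transaction object."""

    def __init__(self, fail):
        self.fail = fail

    async def __aenter__(self):
        if self.fail:
            # Simulate the task being cancelled while BEGIN is in flight
            raise asyncio.CancelledError()

    async def __aexit__(self, exc_type, exc, tb):
        return False


class FakeConnection:
    def __init__(self, fail):
        self.fail = fail

    def transaction(self, **kwargs):
        return FakeTransaction(self.fail)


class FakeAcquireContext:
    """Hypothetical stand-in for the object returned by pool.acquire()."""

    def __init__(self, pool):
        self.pool = pool

    async def __aenter__(self):
        self.pool.in_use += 1
        return FakeConnection(self.pool.fail_begin)

    async def __aexit__(self, exc_type, exc, tb):
        self.pool.in_use -= 1  # connection handed back to the pool
        return False


class FakePool:
    def __init__(self, fail_begin=False):
        self.in_use = 0
        self.fail_begin = fail_begin

    def acquire(self, timeout=None):
        return FakeAcquireContext(self)


class TransactionContext:
    """Sketch of a safer __aenter__: release the connection if BEGIN fails."""

    def __init__(self, pool, timeout=None, **trans_kwargs):
        self.pool = pool
        self.timeout = timeout
        self.trans_kwargs = trans_kwargs

    async def __aenter__(self):
        self.acquire_context = self.pool.acquire(timeout=self.timeout)
        con = await self.acquire_context.__aenter__()
        try:
            self.transaction = con.transaction(**self.trans_kwargs)
            await self.transaction.__aenter__()
        except BaseException as exc:
            # CancelledError derives from BaseException (not Exception) on
            # Python 3.8+, so catch broadly, give the connection back, re-raise.
            await self.acquire_context.__aexit__(type(exc), exc, exc.__traceback__)
            raise
        return con

    async def __aexit__(self, exc_type, exc, tb):
        try:
            await self.transaction.__aexit__(exc_type, exc, tb)
        finally:
            await self.acquire_context.__aexit__(exc_type, exc, tb)
```

Wrapping only the step after the acquire keeps the normal path unchanged. On failure, the acquire context's own `__aexit__` does the release, so the pool's bookkeeping stays in one place.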

|

closed

|

2019-09-15T19:36:50Z

|

2019-09-19T19:21:19Z

|

https://github.com/CanopyTax/asyncpgsa/issues/101

|

[] |

matemax

| 0

|

numba/numba

|

numpy

| 9,650

|

Function decorated with @guvectorize allows out-of-bounds array indexing; not sure if this is intentional

|

<!--

Thanks for opening an issue! To help the Numba team handle your information

efficiently, please first ensure that there is no other issue present that

already describes the issue you have

(search at https://github.com/numba/numba/issues?&q=is%3Aissue).

-->

## Reporting a bug

<!--

Before submitting a bug report please ensure that you can check off these boxes:

-->

- [x] I have tried using the latest released version of Numba (most recent is

visible in the release notes

(https://numba.readthedocs.io/en/stable/release-notes-overview.html).

- [x] I have included a self contained code sample to reproduce the problem.

i.e. it's possible to run as 'python bug.py'.

<!--

Please include details of the bug here, including, if applicable, what you

expected to happen!

-->

*I'm not sure whether this behavior is expected or could be improved. I did not find much discussion of this issue online, so if it is expected, please ignore this.*

Weird behavior:

When a Python function decorated with @guvectorize is run, NumPy arrays inside the function can be indexed out of bounds without raising any exception.

Example code:

```

import numpy as np
from numba import vectorize, float64, guvectorize, int64

@guvectorize([(int64[:], int64, int64[:])], '(n),()->(n)')
def g(x, y, res):
    for i in range(x.shape[0]):
        res[i] = x[i + 10] + y  # Offset the index by 10 so it is out of bounds for x

x = np.array([1, 2, 3])
y = np.array([1])
myRes = g(x, y)
print(myRes)
# No error, the printed result is:
# array([[ 12884901892, 1, 4075923963910]])

```

So the function `g` above accesses the NumPy array `x` with an out-of-bounds index.

But this does not raise any error, and the returned result contains arbitrary values.

----

I'm new to this kind of universal function, so I'm just guessing at the cause.

Maybe Numba converts this Python code into another form for execution, something like C code.

In C, an array name is essentially a pointer, so an index is allowed to go out of bounds. The element address is computed as the array's base address plus the index. The code therefore reads memory outside the array, which produces this random output.

I'm not sure whether that is correct, and I would appreciate some insight into this behavior, because it caused strange results in our model testing. We tested the same function with the same input but got different outputs. Eventually we found an out-of-bounds index hidden in a loop, and the random array element values caused the random function output.

I hope to get some more insight into this behavior.

Thanks
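One cheap way to test the reporter's hypothesis is to run the same kernel body as plain Python. NumPy's own indexing is bounds-checked, so the bug surfaces as an `IndexError` instead of garbage values. The sketch below assumes only NumPy, and `g_kernel` is a hypothetical undecorated copy of `g`. Numba also documents opt-in bounds checking (`boundscheck=True` on `@jit`, or the `NUMBA_BOUNDSCHECK=1` environment variable). Whether that covers `@guvectorize` kernels in a given Numba version is worth verifying separately.

```python
import numpy as np


def g_kernel(x, y, res):
    # Same body as the @guvectorize kernel above, deliberately left undecorated
    for i in range(x.shape[0]):
        res[i] = x[i + 10] + y


x = np.array([1, 2, 3], dtype=np.int64)
res = np.empty_like(x)
try:
    g_kernel(x, 1, res)
except IndexError as exc:
    # Plain NumPy indexing checks bounds, so the hidden bug is reported here
    print("out-of-bounds access:", exc)
```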

|

closed

|

2024-07-12T04:11:44Z

|

2024-08-22T01:52:33Z

|

https://github.com/numba/numba/issues/9650

|

[

"question",

"stale"

] |

BixiongXiang

| 3

|

tensorpack/tensorpack

|

tensorflow

| 733

|

The usage of dataflow

|

Are the get_data() and reset_state() methods called only once, or at the beginning of each epoch?

I want to do some curriculum learning. If get_data() is called every epoch, I can record the epoch index in it and change the data as the epoch number increases. Currently I have a dataset of millions of samples. I set steps_per_epoch to 3000 and the batch size to 8, so each epoch uses only 24k samples. I want to use the simplest samples in the first epoch and increase the difficulty as training progresses. However, it seems that get_data() is not called again at the beginning of the second epoch.
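If the data generator is started only once, the curriculum can be driven by a sample counter inside the generator rather than by re-entry into `get_data()`. Below is a framework-agnostic sketch. It assumes the samples have already been sorted into difficulty buckets (a hypothetical layout), and `curriculum_samples` is an illustrative name, not a tensorpack API.

```python
import itertools


def curriculum_samples(buckets, samples_per_epoch):
    """Yield samples forever, moving to a harder bucket every `samples_per_epoch` samples.

    buckets: list of non-empty lists of samples, easiest first (hypothetical layout).
    """
    for count in itertools.count():
        epoch = count // samples_per_epoch
        level = min(epoch, len(buckets) - 1)  # stay on the hardest bucket at the end
        bucket = buckets[level]
        yield bucket[count % len(bucket)]  # cycle through the current bucket in order
```

With `steps_per_epoch=3000` and batch size 8, `samples_per_epoch` would be 24,000. If the pipeline prefetches or uses several workers, the counter only approximates the trainer's epoch boundary. Shuffling each bucket beforehand avoids always visiting samples in the same order.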

|

closed

|

2018-04-20T01:23:23Z

|

2018-05-30T20:59:41Z

|

https://github.com/tensorpack/tensorpack/issues/733

|

[

"usage"

] |

JesseYang

| 3

|

aminalaee/sqladmin

|

asyncio

| 415

|

Protocol, Domain & port with request.get_url over just reporting the path

|

### Checklist

- [X] The bug is reproducible against the latest release or `master`.

- [X] There are no similar issues or pull requests to fix it yet.

### Describe the bug

When using SQLAdmin behind a proxy, the URLs use `http://` instead of `https://`.

This can be fixed by configuring Uvicorn's proxy settings.

However, using full URLs leads to many unnecessary issues. Using just paths, as mentioned here, works fine in all cases: https://github.com/encode/starlette/issues/538#issuecomment-1135096753

### Steps to reproduce the bug

Run SQLAdmin behind a proxy.

### Expected behavior

All URLs should just be the subpath.

### Actual behavior

All URLs contain the protocol, the domain (optionally the port as well), and finally the path.
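The behaviour requested above (emitting only the path part of a URL) can be illustrated with the standard library's `urllib.parse`. `path_only` is a hypothetical helper and is not part of SQLAdmin or Starlette. It keeps the path, query string, and fragment, and drops the scheme, host, and port.

```python
from urllib.parse import urlsplit, urlunsplit


def path_only(url: str) -> str:
    """Strip scheme, host and port from an absolute URL, keeping path, query and fragment."""
    parts = urlsplit(url)
    # An empty path (e.g. "https://example.com") becomes the site root "/"
    return urlunsplit(("", "", parts.path or "/", parts.query, parts.fragment))


print(path_only("http://example.com:8000/admin/user/list?page=2"))
```

Relative paths like these are resolved by the browser against whatever scheme and host it actually used, which is why they sidestep the `http://` vs `https://` mismatch behind a proxy.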

### Debugging material

_No response_

### Environment

Python 3.8

SQLAdmin 0.8.0

### Additional context

_No response_

|

closed

|

2023-01-19T14:06:20Z

|

2023-03-08T20:34:18Z

|

https://github.com/aminalaee/sqladmin/issues/415

|

[

"waiting-for-feedback"

] |

Jorricks

| 5

|

davidsandberg/facenet

|

tensorflow

| 729

|

IndexError : index 1 is out of bounds for axis 0 with size 1

|

The error is raised at `class_index = class_indices[i]` (line 332 of `tripletloss.py`).

I am using the LFW dataset with people per batch = 45 and images per person = 5. When I use images per person = 40, I also get this error.

|

open

|

2018-04-30T04:07:17Z

|

2018-04-30T04:10:19Z

|

https://github.com/davidsandberg/facenet/issues/729

|

[] |

praveenkumarchandaliya

| 0

|

ultralytics/ultralytics

|

pytorch

| 18,892

|

Why are CLI results different from Python?

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/orgs/ultralytics/discussions) and found no similar questions.

### Question

vs

```

yolo predict model=yolo11m.pt source=video.avi show

```

The Python code gives no detections. Why? Aren't the weights the same (default)?

CLI results:

video 1/1 (frame 11/54025) C:\repos\restaurant\detection\video.avi: 480x640 5 persons, 19 chairs, 4 potted plants, 6 dining tables, 1 laptop, 1 vase, 11.7ms

### Additional

_No response_

|

closed

|

2025-01-25T22:50:01Z

|

2025-01-27T10:00:54Z

|

https://github.com/ultralytics/ultralytics/issues/18892

|

[

"question",

"detect"

] |

ankhafizov

| 2

|

iperov/DeepFaceLab

|

machine-learning

| 5,526

|

Train Quick96 press any key Forever

|

At step 6, after loading samples, it says "Press any key", but nothing happens after I press a key. Is there any way I can fix it? Thanks.

Running trainer.

[new] No saved models found. Enter a name of a new model : 1

1

Model first run.

Choose one or several GPU idxs (separated by comma).

[CPU] : CPU

[0] : NVIDIA GeForce GTX 1060 6GB

[0] Which GPU indexes to choose? :

0

Initializing models: 100%|###############################################################| 5/5 [00:01<00:00, 2.72it/s]

Loading samples: 100%|############################################################| 2951/2951 [00:05<00:00, 497.68it/s]

Loading samples: 100%|##########################################################| 33410/33410 [01:09<00:00, 478.53it/s]

Press any key to continue . . .

|

open

|

2022-05-29T12:27:07Z

|

2023-07-25T09:36:26Z

|

https://github.com/iperov/DeepFaceLab/issues/5526

|

[] |

huebez

| 5

|

pandas-dev/pandas

|

python

| 61,165

|

BUG: `datetime64[s]` fails round trip using `.to_parquet` and `read_parquet`

|

### Pandas version checks

- [x] I have checked that this issue has not already been reported.

- [x] I have confirmed this bug exists on the [latest version](https://pandas.pydata.org/docs/whatsnew/index.html) of pandas.

- [x] I have confirmed this bug exists on the [main branch](https://pandas.pydata.org/docs/dev/getting_started/install.html#installing-the-development-version-of-pandas) of pandas.

### Reproducible Example

```python

import pandas as pd

c = pd.Series(["2024-01-01", "2025-01-01", "2026-01-01"], dtype="datetime64[s]")

df0 = c.to_frame()

print(df0.dtypes)

df0.to_parquet("test.parquet")

df1 = pd.read_parquet("test.parquet")

print(df1.dtypes)

```

### Issue Description

The `dtype` changes from `datetime64[s]` to `datetime64[ms]`.

### Expected Behavior

I would expect the `dtype` to remain unchanged.

### Installed Versions

<details>

INSTALLED VERSIONS

------------------

commit : 0691c5cf90477d3503834d983f69350f250a6ff7

python : 3.10.16

python-bits : 64

OS : Linux

OS-release : 6.8.0-1021-azure

Version : #25-Ubuntu SMP Wed Jan 15 20:45:09 UTC 2025

machine : x86_64

processor : x86_64

byteorder : little

LC_ALL : None

LANG : C.UTF-8

LOCALE : en_US.UTF-8

pandas : 2.2.3

numpy : 2.2.2

pytz : 2025.1

dateutil : 2.9.0.post0

pip : 25.0

Cython : None

sphinx : None

IPython : 8.34.0

adbc-driver-postgresql: None

adbc-driver-sqlite : None

bs4 : 4.13.3

blosc : None

bottleneck : None

dataframe-api-compat : None

fastparquet : None

fsspec : 2025.3.0

html5lib : None

hypothesis : None

gcsfs : None

jinja2 : 3.1.6

lxml.etree : 5.3.1

matplotlib : None

numba : None

numexpr : None

odfpy : None

openpyxl : 3.1.5

pandas_gbq : None

psycopg2 : None

pymysql : None

pyarrow : 19.0.1

pyreadstat : None

pytest : None

python-calamine : None

pyxlsb : None

s3fs : None

scipy : None

sqlalchemy : 2.0.39

tables : None

tabulate : None

xarray : None

xlrd : 2.0.1

xlsxwriter : None

zstandard : None

tzdata : 2025.1

qtpy : None

pyqt5

</details>

|

closed

|

2025-03-21T23:39:25Z

|

2025-03-22T11:19:48Z

|

https://github.com/pandas-dev/pandas/issues/61165

|

[

"Bug",

"Datetime",

"IO Parquet"

] |

noahblakesmith

| 1

|

explosion/spaCy

|

nlp

| 12,611

|

support future pydantic v2

|

spaCy pins an older version of pydantic; please relax the pin to support 1.10.x and the forthcoming 2.0.0 release.

|

closed

|

2023-05-08T17:00:15Z

|

2023-09-08T00:02:11Z

|

https://github.com/explosion/spaCy/issues/12611

|

[

"enhancement",

"third-party"

] |

achapkowski

| 8

|

httpie/cli

|

rest-api

| 1,006

|

Redirected output starts response headers on same line as request body

|

When I run a POST request without redirection, I see the response headers start on a new line:

```

}

}

}

}

HTTP/1.1 201

Date: Mon, 21 Dec 2020 13:39:00 GMT

Content-Length: 0

```

But when I redirect the output, I see the `HTTP/1.1 201` on the same line as the request:

```

}

}

}

}HTTP/1.1 201

Date: Mon, 21 Dec 2020 13:36:09 GMT

Content-Length: 0

```

The options I specified in each case were `http -v --pretty format --unsorted`; the only difference was in the second case I redirected the output to a file.

|

closed

|

2020-12-21T13:43:07Z

|

2021-02-06T11:19:42Z

|

https://github.com/httpie/cli/issues/1006

|

[

"bug"

] |

hughpv

| 5

|

man-group/arctic

|

pandas

| 205

|

stock tick data storing tutorial.

|

Hi, is there any tutorial for storing tick data and how to update the data for my symbols?

|

closed

|

2016-08-30T03:15:59Z

|

2017-12-03T21:46:14Z

|

https://github.com/man-group/arctic/issues/205

|

[] |

leolle

| 18

|

serengil/deepface

|

machine-learning

| 980

|

cv:resize issue for functions.extract_faces

|

Hi, there seems to be an issue with the `functions.extract_faces` method (using ssd).

```

File C:\ProgramData\anaconda3\Lib\site-packages\deepface\commons\functions.py:211, in extract_faces(img, target_size, detector_backend, grayscale, enforce_detection, align)

205 factor = min(factor_0, factor_1)

207 dsize = (

208 int(current_img.shape[1] * factor),

209 int(current_img.shape[0] * factor),

210 )

--> 211 current_img = cv2.resize(current_img, dsize)

213 diff_0 = target_size[0] - current_img.shape[0]

214 diff_1 = target_size[1] - current_img.shape[1]

error: OpenCV(4.9.0) D:\a\opencv-python\opencv-python\opencv\modules\imgproc\src\resize.cpp:4155: error: (-215:Assertion failed) inv_scale_x > 0 in function 'cv::resize'

```
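OpenCV's `cv2.resize` most likely fails this assertion because the requested `dsize` has a zero side. In that case it falls back to the `fx`/`fy` scale factors, which default to 0. Because `int()` truncates, a very thin face crop can be scaled down to 0 pixels along one axis.

The sketch below guards against empty crops and clamps each side to at least one pixel. It assumes `factor_0`/`factor_1` are the target-to-image size ratios, since those lines are not shown in the traceback. `compute_dsize` is a hypothetical helper, not deepface's code.

```python
def compute_dsize(img_h, img_w, target_h, target_w):
    """Return an OpenCV-style (width, height) that fits the image inside the
    target box while preserving aspect ratio."""
    if img_h <= 0 or img_w <= 0:
        # An empty detection cannot be resized at all; fail with a clear message
        raise ValueError(f"empty face crop of shape {img_h}x{img_w}")
    # Assumed to mirror factor_0 / factor_1 in functions.py (not shown above)
    factor = min(target_h / img_h, target_w / img_w)
    # int() truncates, so a 1-pixel-wide crop can shrink to 0; clamp to 1
    return (max(1, int(img_w * factor)), max(1, int(img_h * factor)))


print(compute_dsize(400, 1, 100, 100))  # (1, 100)
```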

|

closed

|

2024-01-28T20:28:28Z

|

2024-01-31T09:12:05Z

|

https://github.com/serengil/deepface/issues/980

|

[

"bug"

] |

fechnologies-d

| 7

|

pywinauto/pywinauto

|

automation

| 932

|

Panel

|

## Expected Behavior

## Actual Behavior

Unable to get the Control in the Static Panel and open the child window

## Steps to Reproduce the Problem

1.

2.

3.

## Short Example of Code to Demonstrate the Problem

## Specifications

- Pywinauto version:

- Python version and bitness:

- Platform and OS:

|

open

|

2020-05-14T10:36:03Z

|

2020-06-07T13:19:28Z

|

https://github.com/pywinauto/pywinauto/issues/932

|

[

"question"

] |

uvanesh

| 3

|

explosion/spaCy

|

data-science

| 13,264

|

Regex doesn't work if less than 3 characters?

|

<!-- NOTE: For questions or install related issues, please open a Discussion instead. -->

## How to reproduce the behaviour

Taken and adjusted right from the docs:

```python

import spacy

from spacy.matcher import Matcher

nlp = spacy.blank("en")

matcher = Matcher(nlp.vocab, validate=True)

pattern = [

{

"TEXT": {

"regex": r"4K"

}

}

]

matcher.add("TV_RESOLUTION", [pattern])

doc = nlp("Sony 55 Inch 4K Ultra HD TV X90K Series:BRAVIA XR LED Smart Google TV, Dolby Vision HDR, Exclusive Features for PS 5 XR55X90K-2022 w/HT-A5000 5.1.2ch Dolby Atmos Sound Bar Surround Home Theater")

res = matcher(doc)

# res = []

```

However if I add a `D` after `4K` in both strings, a match is found. Is there a minimal length restriction?

```python

import spacy

from spacy.matcher import Matcher

nlp = spacy.blank("en")

matcher = Matcher(nlp.vocab, validate=True)

pattern = [

{

"TEXT": {

"regex": r"4KD"

}

}

]

matcher.add("TV_RESOLUTION", [pattern])

doc = nlp("Sony 55 Inch 4KD Ultra HD TV X90K Series:BRAVIA XR LED Smart Google TV, Dolby Vision HDR, Exclusive Features for PS 5 XR55X90K-2022 w/HT-A5000 5.1.2ch Dolby Atmos Sound Bar Surround Home Theater")

res = matcher(doc)

# res = [[(11960903833032025891, 3, 4)]]

```

## Your Environment

<!-- Include details of your environment. You can also type `python -m spacy info --markdown` and copy-paste the result here.-->

* Operating System: macOS

* Python Version Used: 3.11.3

* spaCy Version Used: 3.7.2

* Environment Information: Nothing special

|

closed

|

2024-01-23T16:14:48Z

|

2024-02-23T00:05:21Z

|

https://github.com/explosion/spaCy/issues/13264

|

[

"feat / matcher"

] |

SHxKM

| 3

|

joerick/pyinstrument

|

django

| 168

|

Feature request: cumulated time / total time / ncalls statistics + report

|

I used pyinstrument today to find bottlenecks in my optical simulation code, and found it overall very helpful. The HTML report is very usable and looks great!

One feature I was missing (or didn't find :-)) compared to the built-in cProfile is the ability to sort/display **cumulative time for individual functions**, i.e. the total time spent in a function regardless of the call stack above it. This is crucial for finding "hot" functions, i.e. those with a short runtime but a high call count.

In the simplest form, the HTML report could show this as an on-hover popup; a fancier version could display a sorted list grouped by module/function...

If this is already possible, I'd appreciate a pointer on how to do it...
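Here is a sketch of the requested aggregation over a call tree. It assumes a hypothetical `Node` structure (function name, total time including children, child nodes) rather than pyinstrument's real frame classes. A function's time is counted only at its outermost occurrence on each stack, so recursion is not double-counted.

pyinstrument is a sampling profiler, so the second number is the count of call paths in the tree where the function appears. It is not a true call count like cProfile's `ncalls`.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical call-tree node (not pyinstrument's real frame class)."""
    function: str
    total_time: float  # seconds spent in this call path, children included
    children: list = field(default_factory=list)


def cumulative_by_function(root):
    """Per-function total time regardless of call stack, plus tree occurrences."""
    totals = defaultdict(float)
    occurrences = defaultdict(int)

    def visit(node, on_stack):
        occurrences[node.function] += 1
        if node.function not in on_stack:
            # Outermost occurrence on this stack: count its time once, so
            # recursive calls below it are not double-counted.
            totals[node.function] += node.total_time
            on_stack = on_stack | {node.function}
        for child in node.children:
            visit(child, on_stack)

    visit(root, frozenset())
    return dict(totals), dict(occurrences)


tree = Node("main", 10.0, [
    Node("a", 4.0, [Node("helper", 1.0)]),
    Node("b", 5.0, [Node("helper", 3.0, [Node("helper", 1.0)])]),
])
totals, occurrences = cumulative_by_function(tree)
# Sort "hot" functions by cumulative time, similar to cProfile's cumulative view
for name, t in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:8} {t:5.1f}s  in {occurrences[name]} call path(s)")
```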

|

closed

|

2021-11-30T12:48:12Z

|

2022-11-06T18:22:40Z

|

https://github.com/joerick/pyinstrument/issues/168

|

[] |

loehnertj

| 4

|

dunossauro/fastapi-do-zero

|

sqlalchemy

| 234

|

Small Python version problem in lesson 10

|

I made this gist because I had a problem caused by the Python version in lesson 10.

https://gist.github.com/fabiocasadossites/7194d9c6b36eed1452547d7ea8f24bef

|

closed

|

2024-08-25T20:23:44Z

|

2024-08-27T17:32:21Z

|

https://github.com/dunossauro/fastapi-do-zero/issues/234

|

[] |

fabiocasadossites

| 2

|

dmlc/gluon-cv

|

computer-vision

| 1,038

|

Issue with "pose estimation" using GPU

|

For this tutorial: https://gluon-cv.mxnet.io/build/examples_pose/cam_demo.html.

I tried running it on the GPU, but it failed with messages like:

```

[22:57:26] c:\jenkins\workspace\mxnet-tag\mxnet\src\operator\nn\cudnn\./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)

[22:57:47] c:\jenkins\workspace\mxnet-tag\mxnet\src\operator\nn\cudnn\./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)

[22:57:47] c:\jenkins\workspace\mxnet-tag\mxnet\src\operator\nn\cudnn\./cudnn_algoreg-inl.h:97: Running performance tests to find the best convolution algorithm, this can take a while... (set the environment variable MXNET_CUDNN_AUTOTUNE_DEFAULT to 0 to disable)

[ WARN:0] global C:\projects\opencv-python\opencv\modules\videoio\src\cap_msmf.cpp (674) SourceReaderCB::~SourceReaderCB terminating async callback

```

It seems to be a problem with OpenCV, but why does it happen when the GPU is used?

I am using the latest GluonCV with MXNet 1.5 (GPU, CUDA 10) on Windows 10.

|

closed

|

2019-11-13T15:05:07Z

|

2021-06-07T07:04:29Z

|

https://github.com/dmlc/gluon-cv/issues/1038

|

[

"Stale"

] |

dbsxdbsx

| 4

|

CorentinJ/Real-Time-Voice-Cloning

|

python

| 549

|

Import Error

|

Hey, I am trying to run this code, and every time I run demo_toolbox.py I get the error "failed to load qt binding". I tried reinstalling matplotlib and also tried installing PyQt5.

Need help!

|

closed

|

2020-10-06T20:23:24Z

|

2020-10-12T09:55:04Z

|

https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/549

|

[] |

jay-1104

| 5

|

Nemo2011/bilibili-api

|

api

| 298

|

[Suggestion] Cookies should not be required when fetching a video's danmaku (bullet comments)

|

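Looking at the code, I found that fetching a video's danmaku requires cookies, but for most videos the danmaku can be fetched without cookies. Could it be changed so that danmaku can still be fetched when no credential is provided?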

|

closed

|

2023-05-22T00:03:54Z

|

2023-05-24T11:17:20Z

|

https://github.com/Nemo2011/bilibili-api/issues/298

|

[] |

jhzgjhzg

| 4

|

Avaiga/taipy

|

data-visualization

| 2,293

|

Have part or dialog centered to the element clicked

|

### Description

Here, I have clicked on an icon and I have a dropdown menu of labels next to where I clicked:

Here, I have clicked on an icon, and I see a dialog/part showing up next to where I clicked:

I want to do this generically so that anything can be put in this part. If I click somewhere else, the dialog should disappear.

### Acceptance Criteria

- [ ] If applicable, a new demo code is provided to show the new feature in action.

- [ ] Integration tests exhibiting how the functionality works are added.

- [ ] Any new code is covered by unit tests.

- [ ] Check code coverage is at least 90%.

- [ ] Related issue(s) in taipy-doc are created for documentation and Release Notes are updated.

### Code of Conduct

- [X] I have checked the [existing issues](https://github.com/Avaiga/taipy/issues?q=is%3Aissue+).

- [ ] I am willing to work on this issue (optional)

|

closed

|

2024-11-29T10:51:56Z

|

2024-12-17T18:15:45Z

|

https://github.com/Avaiga/taipy/issues/2293

|

[

"🖰 GUI",

"🟨 Priority: Medium",

"✨New feature",

"🔒 Staff only"

] |

FlorianJacta

| 15

|

inducer/pudb

|

pytest

| 84

|

IPython crashes when enabled with %pudb

|

If you use `%pudb` and then use `!` to enable IPython, it crashes (this is with IPython 1.0). The API has changed, I think. See https://github.com/inducer/pudb/pull/83.

|

open

|

2013-08-13T04:34:13Z

|

2014-01-25T20:17:55Z

|

https://github.com/inducer/pudb/issues/84

|

[] |

asmeurer

| 1

|

gradio-app/gradio

|

data-visualization

| 10,611

|

thinking=true in some models

|

- [X] I have searched to see if a similar issue already exists.

**Is your feature request related to a problem? Please describe.**

IBM's Granite model has a setting that turns reasoning on or off: you set thinking=True or False.

It's like this:

```python

input_ids = tokenizer.apply_chat_template(conv, return_tensors="pt", thinking=True, return_dict=True, add_generation_prompt=True).to(device)

```

https://huggingface.co/ibm-granite/granite-3.2-8b-instruct-preview

We have no way of setting this on the vllm worker, from what I understand.

I can modify the tokenizer to use one version or the other, but that's cumbersome, to say the least.

**Describe the solution you'd like**

A way to send additional parameters to the models.

|

closed

|

2025-02-17T20:09:40Z

|

2025-02-17T21:10:44Z

|

https://github.com/gradio-app/gradio/issues/10611

|

[] |

surak

| 2

|

3b1b/manim

|

python

| 1,824

|

Pip doesn't install a new enough numpy

|

### Describe the bug

I ran

```

$ pip install manimgl

$ manimgl

```

and got the error

```

import numpy.typing as npt

ModuleNotFoundError: No module named 'numpy.typing'

```

### Additional context

I have numpy 1.19, and numpy.typing requires numpy 1.20. I think the pip requirements files need to specify "numpy >= 1.20" rather than just "numpy" as they do now.
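The real fix is the version specifier itself (`numpy>=1.20` in the requirements). To illustrate what that specifier enforces, here is a stdlib-only sketch of a runtime guard that compares release numbers. `meets_minimum` is a hypothetical helper that looks only at the leading numeric components, so it ignores pre-release and epoch semantics. For full PEP 440 behaviour, `packaging.version.Version` is the safer choice.

```python
import re
from itertools import zip_longest


def _release(version: str) -> tuple:
    """Leading numeric components, e.g. '1.20.0rc1' -> (1, 20, 0)."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"unparseable version: {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(installed: str, minimum: str) -> bool:
    """True if `installed` >= `minimum`, padding missing components with 0."""
    for have, need in zip_longest(_release(installed), _release(minimum), fillvalue=0):
        if have != need:
            return have > need
    return True
```

In practice the installed version string could come from `importlib.metadata.version("numpy")` (Python 3.8+).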

|

closed

|

2022-06-02T21:51:49Z

|

2022-06-04T08:04:34Z

|

https://github.com/3b1b/manim/issues/1824

|

[

"bug"

] |

thomasahle

| 3

|

twopirllc/pandas-ta

|

pandas

| 385

|

Stochastic Rsi is very different from trading view values (again without proof)

|

**Which version are you running? The latest version is on GitHub. Pip is for major releases.**

```python

import pandas_ta as ta

print(ta.version)

```

**Upgrade.**

```sh

$ pip install -U git+https://github.com/twopirllc/pandas-ta

```

**Describe the bug**

I ran a simple call to stochastic RSI with the same parameters (14, 14, 3, 3). The results are very different from TradingView's values.

**To Reproduce**

dt = ta.stochrsi(df['Close'], length=14, rsi_length=14, k=3, d=3)

df['momentum_stoch_rsi_d'] = dt['STOCHRSId_14_14_3_3']

df['momentum_stoch_rsi_k'] = dt['STOCHRSIk_14_14_3_3']

The values are very different even though the parameters are the same.
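To narrow down where the numbers diverge, it can help to compute a reference series by hand. Below is a stdlib-only sketch of one common Stochastic RSI formulation:

- RSI with Wilder smoothing.
- The stochastic of that RSI over `length` bars.
- Simple moving averages for %K (`k`) and %D (`d`).

This definition is an assumption, not pandas-ta's or TradingView's actual code. Implementations can differ in the RSI smoothing and in how the first values are seeded, which is a likely source of mismatches.

```python
def rma(values, length):
    """Wilder's moving average, seeded with the simple mean of the first `length` values."""
    out = [None] * len(values)
    if len(values) < length:
        return out
    avg = sum(values[:length]) / length
    out[length - 1] = avg
    for i in range(length, len(values)):
        avg = (avg * (length - 1) + values[i]) / length
        out[i] = avg
    return out


def rsi(closes, length):
    changes = [b - a for a, b in zip(closes, closes[1:])]
    avg_gain = rma([max(c, 0.0) for c in changes], length)
    avg_loss = rma([max(-c, 0.0) for c in changes], length)
    out = [None]  # the first close has no previous bar to compare with
    for gain, loss in zip(avg_gain, avg_loss):
        if gain is None:
            out.append(None)
        elif loss == 0:
            out.append(100.0)  # convention for windows with no losses
        else:
            out.append(100.0 - 100.0 / (1.0 + gain / loss))
    return out


def rolling(values, length, fn):
    """Apply fn to each full window of `length` values that contains no None."""
    out = [None] * len(values)
    for i in range(length - 1, len(values)):
        window = values[i - length + 1 : i + 1]
        if None not in window:
            out[i] = fn(window)
    return out


def _mean(window):
    return sum(window) / len(window)


def _stoch(window):
    lo, hi = min(window), max(window)
    return 0.0 if hi == lo else 100.0 * (window[-1] - lo) / (hi - lo)


def stochrsi(closes, length=14, rsi_length=14, k=3, d=3):
    """Return (%K, %D) lists aligned with `closes`; warm-up positions are None."""
    stoch_line = rolling(rsi(closes, rsi_length), length, _stoch)
    k_line = rolling(stoch_line, k, _mean)
    d_line = rolling(k_line, d, _mean)
    return k_line, d_line
```

With the issue's parameters this corresponds to `stochrsi(closes, 14, 14, 3, 3)`. Comparing the intermediate RSI and the final %K/%D bar by bar against both tools should show whether the gap comes from the RSI step or from the smoothing.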

**Screenshots**

If applicable, add screenshots to help explain your problem.

**Additional context**

Add any other context about the problem here.

Thanks for using Pandas TA!

|

closed

|

2021-09-02T10:48:31Z

|

2021-09-02T15:19:44Z

|

https://github.com/twopirllc/pandas-ta/issues/385

|

[

"bug"

] |

hosseinghafarian

| 1

|

CorentinJ/Real-Time-Voice-Cloning

|

tensorflow

| 1,198

|

Error when training encoder

|

Hello, I'm asking anyone who can and wants to help. I have a problem when I run encoder training: at first everything works fine, and then it gives an error. Here it is:

..........

Step 110 Loss: 3.9845 EER: 0.4027 Step time: mean: 31023ms std: 39773ms

Average execution time over 10 steps:

Blocking, waiting for batch (threaded) (10/10): mean: 26881ms std: 38848ms

Data to cpu (10/10): mean: 1ms std: 0ms

Forward pass (10/10): mean: 966ms std: 37ms

Loss (10/10): mean: 32ms std: 2ms

Backward pass (10/10): mean: 2471ms std: 54ms

Parameter update (10/10): mean: 7ms std: 1ms

Extras (visualizations, saving) (10/10): mean: 0ms std: 1ms

........Traceback (most recent call last):

File "Z:\Real-Time-Voice-Cloning-master\encoder_train.py", line 44, in <module>

train(**vars(args))

File "Z:\Real-Time-Voice-Cloning-master\encoder\train.py", line 71, in train

for step, speaker_batch in enumerate(loader, init_step):

File "C:\Users\Professional\AppData\Local\Programs\Python\Python39\lib\site-packages\torch\utils\data\dataloader.py", line 634, in __next__

data = self._next_data()

File "C:\Users\Professional\AppData\Local\Programs\Python\Python39\lib\site-packages\torch\utils\data\dataloader.py", line 1326, in _next_data

return self._process_data(data)

File "C:\Users\Professional\AppData\Local\Programs\Python\Python39\lib\site-packages\torch\utils\data\dataloader.py", line 1372, in _process_data

data.reraise()

File "C:\Users\Professional\AppData\Local\Programs\Python\Python39\lib\site-packages\torch\_utils.py", line 644, in reraise

raise exception

Exception: Caught Exception in DataLoader worker process 2.

Original Traceback (most recent call last):

File "C:\Users\Professional\AppData\Local\Programs\Python\Python39\lib\site-packages\torch\utils\data\_utils\worker.py", line 308, in _worker_loop

data = fetcher.fetch(index)

File "C:\Users\Professional\AppData\Local\Programs\Python\Python39\lib\site-packages\torch\utils\data\_utils\fetch.py", line 54, in fetch

return self.collate_fn(data)

File "Z:\Real-Time-Voice-Cloning-master\encoder\data_objects\speaker_verification_dataset.py", line 55, in collate

return SpeakerBatch(speakers, self.utterances_per_speaker, partials_n_frames)

File "Z:\Real-Time-Voice-Cloning-master\encoder\data_objects\speaker_batch.py", line 9, in __init__

self.partials = {s: s.random_partial(utterances_per_speaker, n_frames) for s in speakers}

File "Z:\Real-Time-Voice-Cloning-master\encoder\data_objects\speaker_batch.py", line 9, in <dictcomp>

self.partials = {s: s.random_partial(utterances_per_speaker, n_frames) for s in speakers}

File "Z:\Real-Time-Voice-Cloning-master\encoder\data_objects\speaker.py", line 34, in random_partial

self._load_utterances()

File "Z:\Real-Time-Voice-Cloning-master\encoder\data_objects\speaker.py", line 18, in _load_utterances

self.utterance_cycler = RandomCycler(self.utterances)

File "Z:\Real-Time-Voice-Cloning-master\encoder\data_objects\random_cycler.py", line 14, in __init__

raise Exception("Can't create RandomCycler from an empty collection")

Exception: Can't create RandomCycler from an empty collection

What is wrong? How can I fix it?
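The innermost frame says a speaker ended up with no utterances, so one thing to check is whether any speaker directory in the preprocessed training data is empty. Below is a stdlib sketch. It assumes a hypothetical layout of one sub-directory per speaker holding `.npy` utterance files, so adjust `pattern` to whatever the preprocessing step actually wrote. `find_empty_speakers` is an illustrative name, not part of the repository.

```python
from pathlib import Path


def find_empty_speakers(dataset_root, pattern="*.npy"):
    """List speaker sub-directories of dataset_root with no files matching pattern."""
    root = Path(dataset_root)
    return sorted(
        d.name for d in root.iterdir()
        if d.is_dir() and not any(d.glob(pattern))
    )
```

Removing or re-preprocessing the speakers it reports should keep `RandomCycler` from being built on an empty list.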

|

open

|

2023-04-19T10:38:46Z

|

2023-04-19T10:38:46Z

|

https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/1198

|

[] |

terminatormlp

| 0

|

scikit-image/scikit-image

|

computer-vision

| 6,890

|

Update Hausdorff Distance example to show usage as a segmentation metric and clarify docstring

|

### Description:

## What is the issue?

The current version of [the Hausdorff Distance example](https://scikit-image.org/docs/stable/auto_examples/segmentation/plot_hausdorff_distance.html#hausdorff-distance) computes the distance on a set of four points. The example, however, is a bit confusing, as generally Hausdorff Distance is used as a segmentation metric, and therefore starts from segmentation masks.

Since the function takes input parameters named `image0` and `image1`, users may expect it to work *directly* on the segmentation masks instead of on "images **of contours**". This is particularly confusing because there is no direct method to compute a "contour image" from a segmentation mask.
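The following is a minimal sketch of why the distinction matters. It assumes scikit-image ≥ 0.18 (for `skimage.metrics.hausdorff_distance`) and SciPy. The masks are a made-up toy case: a filled square, and the same square with a one-pixel hole in the middle. The `contour` helper is hypothetical. It mirrors the erosion-based idea of the example proposed below but uses `scipy.ndimage.binary_erosion`.

Passing the filled masks directly yields 1 px, because the hole is 1 px from the nearest foreground pixel. Passing the contour images yields 3 px, because the hole's border is 3 px from the outer contour.

```python
import numpy as np
from scipy import ndimage as ndi
from skimage.metrics import hausdorff_distance

# Hypothetical toy masks: a filled 9x9 square, and the same square with a
# single-pixel hole at its centre (7, 7).
ground_truth = np.zeros((15, 15), dtype=bool)
ground_truth[3:12, 3:12] = True
predicted = ground_truth.copy()
predicted[7, 7] = False


def contour(mask):
    # Hypothetical helper: foreground pixels removed by a 4-connected
    # (cross-shaped, SciPy's default) binary erosion, i.e. the inner
    # boundary of the mask, including the boundary around holes.
    return mask ^ ndi.binary_erosion(mask)


# Misuse: filled masks are passed directly, so every foreground pixel
# is treated as a point of the set.
d_masks = hausdorff_distance(ground_truth, predicted)

# Intended use as a segmentation metric: compare the contour images.
d_contours = hausdorff_distance(contour(ground_truth), contour(predicted))

print(f"masks: {d_masks:.1f}px, contours: {d_contours:.1f}px")
# prints "masks: 1.0px, contours: 3.0px"
```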

We can see this confusion in action in some uses of the metric on GitHub, sometimes in code accompanying published results [e.g. 1, 2].

[1] : "Unsupervised Nuclei Segmentation using Spatial Organization Priors" -- published in MICCAI 22 -- [metrics.py](https://github.com/loic-lb/Unsupervised-Nuclei-Segmentation-using-Spatial-Organization-Priors/blob/58200221430f19c955039d7bf56c0c0f9739ef87/performance/metrics.py), called from [objmetrics.py](https://github.com/loic-lb/Unsupervised-Nuclei-Segmentation-using-Spatial-Organization-Priors/blob/58200221430f19c955039d7bf56c0c0f9739ef87/performance/objmetrics.py) with the same arguments as the Dice score.

[2] : "Head and Neck Tumour Segmentation and Prediction of Patient Survival" -- published in MICCAI 21 -- [metrics.py](https://github.com/EmmanuelleB985/Head-and-Neck-Tumour-Segmentation-and-Prediction-of-Patient-Survival/blob/bb36a0aa953367775d140abcc112342a50066759/src/Segmentation_Task/metrics.py) returns the average of the Dice score and `hausdorff_distance`, both called with the same arguments.

## Possible improvements

The easiest way to mitigate the issue would probably be to:

* Update the **example** so that it starts from segmentation masks (ground truth and prediction) and shows how to create a "contours" image *then* compute the metric.

* Update the **docstring** so that it explicitly states that it expects contour images, not segmentation masks.

In the longer term, it may be useful to provide one of two things. The first is a function that quickly generates a "contours" image from a binary mask. This is needed because `find_contours` returns a list of coordinates, which is not compatible with the input expected by `hausdorff_distance`. The second is an alternative function (e.g. `hausdorff_distance_from_masks`) that uses `find_contours` on the masks first.
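Below is a minimal sketch of the second option, assuming SciPy and scikit-image ≥ 0.18. Both `mask_contour` and `hausdorff_distance_from_masks` are hypothetical names, not part of scikit-image. The contours are computed here with a 4-connected binary erosion rather than `find_contours`. This keeps them as an image, which is the input `hausdorff_distance` expects.

```python
import numpy as np
from scipy import ndimage as ndi
from skimage.metrics import hausdorff_distance


def mask_contour(mask):
    """Hypothetical helper: boolean "contour image" of a binary mask.

    Keeps the foreground pixels that disappear under a 4-connected binary
    erosion. Pixels outside the image count as background, so objects
    touching the image edge also get a contour along that edge.
    """
    mask = np.asarray(mask, dtype=bool)
    return mask ^ ndi.binary_erosion(mask)


def hausdorff_distance_from_masks(mask0, mask1, **kwargs):
    """Hypothetical wrapper: Hausdorff distance between segmentation masks.

    Converts both masks to contour images, then delegates to
    skimage.metrics.hausdorff_distance; extra keyword arguments
    (e.g. ``method``) are forwarded unchanged.
    """
    return hausdorff_distance(mask_contour(mask0), mask_contour(mask1), **kwargs)


# Usage: a 10x10 square and the same square shifted 5 px to the right.
ground_truth = np.zeros((40, 40), dtype=bool)
ground_truth[10:20, 10:20] = True
predicted = np.zeros_like(ground_truth)
predicted[10:20, 15:25] = True
print(hausdorff_distance_from_masks(ground_truth, predicted))  # 5.0
```

Because the contours stay on the pixel grid, the result is directly comparable to calling `hausdorff_distance` on hand-made contour images.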

### Possible updated example:

This could replace: https://github.com/scikit-image/scikit-image/blob/main/doc/examples/segmentation/plot_hausdorff_distance.py

```python

"""
==================
Hausdorff Distance
==================

This example shows how to calculate the Hausdorff distance between a "ground truth" and
a "predicted" segmentation mask. The `Hausdorff distance
<https://en.wikipedia.org/wiki/Hausdorff_distance>`__ is the maximum distance
between any point on the first set and its nearest point on the second set,
and vice-versa.

To use it as a segmentation metric, the contours of the masks have to be computed first.
In this example, this is done by removing the eroded mask from the mask itself.
"""
import numpy as np
import matplotlib.pyplot as plt

from skimage import metrics
from skimage.morphology import erosion, disk

# Create a "ground truth" binary mask with a disk, and a partially overlapping "predicted" rectangle
ground_truth = np.zeros((100, 100), dtype=bool)
predicted = ground_truth.copy()
ground_truth[30:71, 30:71] = disk(20)
predicted[25:65, 40:70] = True

# Create "contour" images by XOR-ing each mask with its erosion
se = np.array([[0, 1, 0], [1, 1, 1], [0, 1, 0]])
gt_contour = ground_truth ^ erosion(ground_truth, se)
predicted_contour = predicted ^ erosion(predicted, se)

# Compute and display the distance and the corresponding pair of points
distance = metrics.hausdorff_distance(gt_contour, predicted_contour)
pair = metrics.hausdorff_pair(gt_contour, predicted_contour)

plt.figure(figsize=(15, 5))
plt.subplot(1, 3, 1)
plt.imshow(ground_truth)
plt.subplot(1, 3, 2)
plt.imshow(predicted)
plt.subplot(1, 3, 3)
plt.imshow(gt_contour)
plt.imshow(predicted_contour, alpha=0.5)
# hausdorff_pair returns (row, col) points; plot() expects (x, y) = (col, row)
plt.plot([pair[0][1], pair[1][1]], [pair[0][0], pair[1][0]], 'wo-')
plt.title(f"HD={distance:.3f}px")
plt.show()

```
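For reference, here is the definition paraphrased in the example docstring, written out. It applies to two finite point sets $A$ and $B$ (here, the coordinates of the nonzero pixels of `image0` and `image1`) under the Euclidean distance $d$:

$$
d_H(A, B) = \max\left\{ \max_{a \in A} \min_{b \in B} d(a, b),\; \max_{b \in B} \min_{a \in A} d(a, b) \right\}
$$

Each inner term is a directed Hausdorff distance. Because $A$ and $B$ consist of whatever pixels are nonzero, passing filled masks instead of contour images changes both point sets and therefore the value.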

### Possible updated docstring

```python

def hausdorff_distance(image0, image1, method='standard'):
    """Calculate the Hausdorff distance between nonzero elements of given images.

    To use it as a segmentation metric, pass images whose nonzero elements
    are the contours of the objects, not the segmentation masks themselves.

    Parameters
    ----------
    image0, image1 : ndarray
        Arrays where ``True`` represents a point that is included in a