| repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |

|---|---|---|---|---|---|---|---|---|---|---|---|

sqlalchemy/alembic

|

sqlalchemy

| 1,363

|

AttributeError: 'ConfigParser' object has no attribute '_proxies'

|

**Describe the bug**

After installing the `alembic` package, I ran `alembic init migrations`, and alembic created the migrations directory and the `alembic.ini` file without any errors. However, when I run `alembic revision --autogenerate -m "Some message"`, I get the error `AttributeError: 'ConfigParser' object has no attribute '_proxies'`, and I don't know where the problem is. I have also tested different versions of SQLAlchemy and Alembic, but the problem persists. The same error also appears when I run `alembic check`.

Below is a picture of the error that occurred:

**Versions**

- OS: Windows 10

- Python: 3.9

- Alembic: 1.12.1

- SQLAlchemy: 2.0.23

- Database: SQLite

|

closed

|

2023-11-22T04:39:48Z

|

2023-11-22T16:01:34Z

|

https://github.com/sqlalchemy/alembic/issues/1363

|

[

"third party library / application issues"

] |

MosTafa2K

| 2

|

seleniumbase/SeleniumBase

|

web-scraping

| 2,098

|

Options with undetected driver

|

Hi,

How do I add Chrome options to the undetected driver?

I don't see any examples.

Thanks

|

closed

|

2023-09-12T12:25:56Z

|

2023-09-12T15:43:01Z

|

https://github.com/seleniumbase/SeleniumBase/issues/2098

|

[

"question",

"UC Mode / CDP Mode"

] |

FranciscoPalomares

| 3

|

deepspeedai/DeepSpeed

|

deep-learning

| 6,044

|

Inference acceleration doesn't work

|

I'm trying to use DeepSpeed to accelerate a ViT model implemented by this [project](https://github.com/lucidrains/vit-pytorch). I followed this [tutorial](https://www.deepspeed.ai/tutorials/inference-tutorial/) to optimize the model:

```python
from vit_pytorch import ViT
import torch
import deepspeed
import time


def optimize_by_deepspeed(model):
    ds_engine = deepspeed.init_inference(
        model,
        tensor_parallel={"tp_size": 1},
        dtype=torch.float,
        checkpoint=None,
        replace_with_kernel_inject=True)
    model = ds_engine.module
    return model


device = "cuda:0"
model = ViT(
    image_size=32,
    patch_size=8,
    channels=64,
    num_classes=512,
    dim=512,
    depth=6,
    heads=16,
    mlp_dim=1024,
    dropout=0.1,
    emb_dropout=0.1
).to(device)
input_data = torch.randn(1, 64, 32, 32, dtype=torch.float32, device=device)
model = optimize_by_deepspeed(model)
start_time = time.time()
for i in range(1000):
    res = model(input_data)
print("time consumption: {}".format(time.time() - start_time))
```

and launched the program with the following command:

```

deepspeed --num_gpus 1 example.py

```

However, I found that the time consumption is roughly the same whether or not `model = optimize_by_deepspeed(model)` is executed. Is there any problem in my code?
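CUDA kernel launches are asynchronous with respect to the host, so a host-side timer stopped right after the loop can miss work still queued on the GPU, and the first calls also pay one-time setup costs. A minimal, framework-agnostic timing sketch that warms up first and calls a caller-supplied `sync` function around the timed loop; the `benchmark` helper is hypothetical, not part of DeepSpeed or PyTorch:

```python
import time
from typing import Callable


def benchmark(fn: Callable[[], object], n_iters: int = 1000, warmup: int = 10,
              sync: Callable[[], None] = lambda: None) -> float:
    """Return the mean wall-clock seconds per call of fn.

    sync is called before starting and before stopping the clock; on a GPU,
    pass torch.cuda.synchronize so queued kernels are included in the timing.
    """
    for _ in range(warmup):  # exclude one-time costs from the measurement
        fn()
    sync()  # make sure warm-up work has finished before timing starts
    start = time.perf_counter()
    for _ in range(n_iters):
        fn()
    sync()  # wait for all timed work to finish before stopping the clock
    return (time.perf_counter() - start) / n_iters
```

With the objects from the issue, usage might look like `benchmark(lambda: model(input_data), sync=torch.cuda.synchronize)`, run once for the plain model and once for the DeepSpeed-optimized one, so both numbers are measured the same way.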

|

closed

|

2024-08-17T09:13:52Z

|

2024-09-07T08:13:14Z

|

https://github.com/deepspeedai/DeepSpeed/issues/6044

|

[] |

broken-dream

| 2

|

Gerapy/Gerapy

|

django

| 255

|

Spider Works in Terminal Not in Gerapy

|

Before I start, I just want to say that you have all done a great job developing this project. I love Gerapy, and I will probably start contributing to it. I will try to document this as well as I can so it can be helpful to others.

**Describe the bug**

I have a scrapy project which runs perfectly fine in terminal using the following command:

`scrapy crawl examplespider`

However, when I schedule it in a task and run it on my local scrapyd client, it starts but immediately closes without doing anything, and it throws no errors. I think it's a config file issue. When I view the results of the job, it shows the following:

```

y.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2022-12-15 07:03:21 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2022-12-15 07:03:21 [scrapy.core.engine] INFO: Spider opened

2022-12-15 07:03:21 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2022-12-15 07:03:21 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023

2022-12-15 07:03:21 [scrapy.core.engine] INFO: Closing spider (finished)

2022-12-15 07:03:21 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'elapsed_time_seconds': 0.002359,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2022, 12, 15, 7, 3, 21, 314439),

'log_count/DEBUG': 1,

'log_count/INFO': 10,

'log_count/WARNING': 1,

'memusage/max': 63709184,

'memusage/startup': 63709184,

'start_time': datetime.datetime(2022, 12, 15, 7, 3, 21, 312080)}

2022-12-15 07:03:21 [scrapy.core.engine] INFO: Spider closed (finished)

```

In the logs it shows the following:

**/home/ubuntu/env/scrape/bin/logs/examplescraper/examplespider**

```

2022-12-15 07:03:21 [scrapy.utils.log] INFO: Scrapy 2.7.1 started (bot: examplescraper)

2022-12-15 07:03:21 [scrapy.utils.log] INFO: Versions: lxml 4.9.1.0, libxml2 2.9.14, cssselect 1.2.0, parsel 1.7.0, w3lib 2.1.1, Twisted 22.10.0, Python 3.8.10 (default, Nov 14 2022, 12:59:47) - [GCC 9.4.0], pyOpenSSL 22.1.0 (OpenSSL 3.0.7 1 Nov 2022), cryptography 38.0.4, Platform Linux-5.15.0-1026-aws-x86_64-with-glibc2.29

2022-12-15 07:03:21 [scrapy.crawler] INFO: Overridden settings:

{'BOT_NAME': 'examplescraper',

'DOWNLOAD_DELAY': 0.1,

'LOG_FILE': 'logs/examplescraper/examplespider/8d623d447c4611edad0641137877ddff.log',

'NEWSPIDER_MODULE': 'examplespider.spiders',

'SPIDER_MODULES': ['examplespider.spiders'],

'USER_AGENT': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '

'(KHTML, like Gecko) Chrome/101.0.4951.67 Safari/537.36'

}

2022-12-15 07:03:21 [py.warnings] WARNING: /home/ubuntu/env/scrape/lib/python3.8/site-packages/scrapy/utils/request.py:231: ScrapyDeprecationWarning: '2.6' is a deprecated value for the 'REQUEST_FINGERPRINTER_IMPLEMENTATION' setting.

It is also the default value. In other words, it is normal to get this warning if you have not defined a value for the 'REQUEST_FINGERPRINTER_IMPLEMENTATION' setting. This is so for backward compatibility reasons, but it will change in a future version of Scrapy.

See the documentation of the 'REQUEST_FINGERPRINTER_IMPLEMENTATION' setting for information on how to handle this

deprecation.

return cls(crawler)

2022-12-15 07:03:21 [scrapy.utils.log] DEBUG: Using reactor: twisted.internet.epollreactor.EPollReactor

2022-12-15 07:03:21 [scrapy.extensions.telnet] INFO: Telnet Password: b11a24faee23f82c

2022-12-15 07:03:21 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.memusage.MemoryUsage',

'scrapy.extensions.logstats.LogStats']

2022-12-15 07:03:21 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'scrapy.downloadermiddlewares.retry.RetryMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2022-12-15 07:03:21 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2022-12-15 07:03:21 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2022-12-15 07:03:21 [scrapy.core.engine] INFO: Spider opened

2022-12-15 07:03:21 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2022-12-15 07:03:21 [scrapy.extensions.telnet] INFO: Telnet console listening on 127.0.0.1:6023

2022-12-15 07:03:21 [scrapy.core.engine] INFO: Closing spider (finished)

2022-12-15 07:03:21 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'elapsed_time_seconds': 0.002359,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2022, 12, 15, 7, 3, 21, 314439),

'log_count/DEBUG': 1,

'log_count/INFO': 10,

'log_count/WARNING': 1,

'memusage/max': 63709184,

'memusage/startup': 63709184,

'start_time': datetime.datetime(2022, 12, 15, 7, 3, 21, 312080)

}

2022-12-15 07:03:21 [scrapy.core.engine] INFO: Spider closed (finished)

```

**/home/ubuntu/gerapy/logs**

```

ubuntu@ip-172-26-13-235:~/gerapy/logs$ cat 20221215065310.log

INFO - 2022-12-15 14:53:18,043 - process: 480 - scheduler.py - gerapy.server.core.scheduler - 105 - scheduler - successfully synced task with jobs with force

INFO - 2022-12-15 14:54:15,011 - process: 480 - scheduler.py - gerapy.server.core.scheduler - 34 - scheduler - execute job of client LOCAL, project examplescraper, spider examplespider

ubuntu@ip-172-26-13-235:~/gerapy/logs$

```

**To Reproduce**

Steps to reproduce the behavior:

1. AWS Ubuntu 20.04 Instance

2. Use python3 virtual environment and follow the installation instructions

3. Create a systemd service for scrapyd and gerapy by doing the following:

```

cd /lib/systemd/system

sudo nano scrapyd.service

```

paste the following:

```

[Unit]

Description=Scrapyd service

After=network.target

[Service]

User=ubuntu

Group=ubuntu

WorkingDirectory=/home/ubuntu/env/scrape/bin

ExecStart=/home/ubuntu/env/scrape/bin/scrapyd

[Install]

WantedBy=multi-user.target

```

Issue the following commands:

```

sudo systemctl enable scrapyd.service

sudo systemctl start scrapyd.service

sudo systemctl status scrapyd.service

```

It should say: **active (running)**

Create a script to run gerapy as a systemd service

```

cd ~/virtualenv/exampleproject/bin/

nano runserve-gerapy.sh

```

Paste the following:

```

#!/bin/bash
cd /home/ubuntu/virtualenv

source exampleproject/bin/activate

cd /home/ubuntu/gerapy

gerapy runserver 0.0.0.0:8000

```

Give this file execute permissions

`sudo chmod +x runserve-gerapy.sh`

Navigate back to the systemd directory and create a service to run runserve-gerapy.sh:

```

cd /lib/systemd/system

sudo nano gerapy-web.service

```

Paste the following:

```

[Unit]

Description=Gerapy Webserver Service

After=network.target

[Service]

User=ubuntu

Group=ubuntu

WorkingDirectory=/home/ubuntu/virtualenv/exampleproject/bin

ExecStart=/bin/bash /home/ubuntu/virtualenv/exampleproject/bin/runserve-gerapy.sh

[Install]

WantedBy=multi-user.target

```

Again issue the following:

```

sudo systemctl enable gerapy-web.service

sudo systemctl start gerapy-web.service

sudo systemctl status gerapy-web.service

```

Look for **active (running)** and navigate to http://your.pub.ip.add:8000 or http://localhost:8000 or http://127.0.0.1:8000 to verify that it is running. Reboot the instance to verify that the services are running on system startup.

4. Log in and create a client for the local scrapyd service. Use IP 127.0.0.1 and port 6800, with no auth. Save it as "Local" or "Scrapyd".

5. Create a project. Select Clone. For testing I used the following GitHub scrapy project: https://github.com/eneiromatos/NebulaEmailScraper (actually a pretty nice starter project). Save the project, build it, and deploy it. (If you get an error when deploying, make sure you are running in the virtual env; you might need to reboot.)

6. Create a task. Make sure the project name and spider name match what is in the scrapy.cfg and examplespider.py files, and save the task. Schedule the task, then run it.

**Traceback**

See logs above ^^^

**Expected behavior**

It should run for at least 5 minutes and output to a file called emails.json in the project root folder (the folder with scrapy.cfg file)

**Screenshots**

I can upload screenshots if requested.

**Environment (please complete the following information):**

- OS: AWS Ubuntu 20.04

- Browser: Firefox

- Python Version: 3.8

- Gerapy Version: 0.9.11 (latest)


|

open

|

2022-12-15T08:57:52Z

|

2022-12-17T19:18:58Z

|

https://github.com/Gerapy/Gerapy/issues/255

|

[

"bug"

] |

wmullaney

| 0

|

saulpw/visidata

|

pandas

| 1,768

|

[save_filetype] when save_filetype is set, you cannot gY as any other filetype on the DirSheet

|

**Small description**

I use `options.save_filetype = 'jsonl'` in my `.visidatarc` to set my default save type. However, sometimes I like to copy rows out as other file types (csv, fixed, etc.), so I press gY and answer the prompt (e.g. `copy 19 rows as filetype: fixed`), but the response I get back is `"fixed" │ copying 19 rows to system clipboard as fixed │ saving 1 sheets to .fixed as jsonl`, and the rows that are copied are in fact `jsonl`.

**Expected result**

I expect to be able to set `options.save_filetype` and have it offered as the default when copying and/or saving, while still being able to save as other filetypes on the fly.

**Actual result with screenshot**

Shown above

**Steps to reproduce with sample data and a .vd**

1. Set `options.save_filetype = 'jsonl'` in `.visidatarc`

2. Open a ~~file~~ directory (DirSheet) _< edited 2023-03-01_

3. Select some rows

4. Press `gY` (`syscopy-selected`)

5. Enter "fixed"

|

closed

|

2023-02-27T19:17:42Z

|

2023-10-18T05:56:12Z

|

https://github.com/saulpw/visidata/issues/1768

|

[

"bug",

"fixed"

] |

geekscrapy

| 17

|

keras-team/keras

|

data-science

| 20,104

|

Tensorflow model.fit fails on test_step: 'NoneType' object has no attribute 'items'

|

I am using the tf.data module to load my datasets, and the training and validation data modules are almost identical. The train_step works properly and training in the first epoch continues until the last batch, but in the test_step I get the following error:

```shell

353 val_logs = {

--> 354 "val_" + name: val for name, val in val_logs.items()

355 }

356 epoch_logs.update(val_logs)

358 callbacks.on_epoch_end(epoch, epoch_logs)

AttributeError: 'NoneType' object has no attribute 'items'

```

Here is the code for fitting the model:

```python
results = auto_encoder.fit(
    train_data,
    epochs=config['epochs'],
    steps_per_epoch=(num_train // config['batch_size']),
    validation_data=valid_data,
    validation_steps=(num_valid // config['batch_size'])-1,
    callbacks=callbacks
)
```

I should mention that I have used .repeat() on both train_data and valid_data, so the problem is not a lack of samples.
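Because `validation_steps` is computed arithmetically, one thing worth ruling out is that `(num_valid // config['batch_size']) - 1` evaluates to zero or less (for example when `num_valid` is smaller than twice the batch size), in which case no validation batches would run at all. A minimal guard; `compute_steps` is a hypothetical helper, not a Keras API:

```python
def compute_steps(num_samples: int, batch_size: int, drop: int = 0) -> int:
    """Number of full batches minus `drop`, rejecting non-positive results."""
    steps = num_samples // batch_size - drop
    if steps < 1:
        raise ValueError(
            f"{num_samples} samples with batch_size={batch_size} "
            f"give {steps} steps; need at least 1"
        )
    return steps
```

In the `fit` call above, this would be used as `validation_steps=compute_steps(num_valid, config['batch_size'], drop=1)`, so a bad step count fails loudly before training starts.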

|

closed

|

2024-08-09T15:32:39Z

|

2024-08-10T17:59:05Z

|

https://github.com/keras-team/keras/issues/20104

|

[

"stat:awaiting response from contributor",

"type:Bug"

] |

JVD9kh96

| 2

|

napari/napari

|

numpy

| 6,744

|

Remove lambdas in help menu actions `_help_actions.py`

|

We should remove lambdas used in help menu actions in `napari/_app_model/actions/_help_actions.py`.

The callbacks are all calls to `webbrowser.open`. This is a case where having `kwargs` field in actions would be useful. (ref: https://github.com/pyapp-kit/app-model/issues/52)
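Until actions support a `kwargs` field, one stdlib way to drop the lambdas is `functools.partial`, which binds the URL to `webbrowser.open` up front. A minimal sketch; the `HELP_URLS` keys and the `make_open_callback` helper are hypothetical, and whether app-model accepts a `partial` as an action callback is an assumption to verify:

```python
import webbrowser
from functools import partial

# Hypothetical URL table; the real entries live in
# napari/_app_model/actions/_help_actions.py.
HELP_URLS = {
    "homepage": "https://napari.org",
    "report_issue": "https://github.com/napari/napari/issues",
}


def make_open_callback(url: str) -> partial:
    """Return a zero-argument callable that opens url in a browser."""
    return partial(webbrowser.open, url)


# One callback per menu entry, built without any lambdas.
CALLBACKS = {name: make_open_callback(url) for name, url in HELP_URLS.items()}
```

Unlike a lambda, a `partial` exposes its target and arguments (`.func`, `.args`), which makes the callbacks easy to inspect and test without opening a browser.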

|

closed

|

2024-03-13T11:21:08Z

|

2024-05-20T09:33:08Z

|

https://github.com/napari/napari/issues/6744

|

[

"maintenance"

] |

lucyleeow

| 0

|

autogluon/autogluon

|

computer-vision

| 4,181

|

Failed to load cask: libomp.rb

|

```
$ brew install libomp.rb
Error: Failed to load cask: libomp.rb
Cask 'libomp' is unreadable: wrong constant name #<Class:0x00000001150a5b48>
Warning: Treating libomp.rb as a formula.
==> Fetching libomp
==> Downloading https://mirrors.ustc.edu.cn/homebrew-bottles/libomp-11.1.0.big_sur.bottle.tar.gz
curl: (22) The requested URL returned error: 404
Warning: Bottle missing, falling back to the default domain...
==> Downloading https://ghcr.io/v2/homebrew/core/libomp/manifests/11.1.0
Already downloaded: /Users/leo/Library/Caches/Homebrew/downloads/d07c72aee9e97441cb0e3a5ea764f8acae989318597336359b6e111c3f4c44b1--libomp-11.1.0.bottle_manifest.json
==> Downloading https://ghcr.io/v2/homebrew/core/libomp/blobs/sha256:ec279162f0062c675ea96251801a99c19c3b82f395f1598ae2f31cd4cbd9a963
Warning: libomp 18.1.5 is available and more recent than version 11.1.0.
==> Pouring libomp--11.1.0.big_sur.bottle.tar.gz
🍺 /usr/local/Cellar/libomp/11.1.0: 9 files, 1.4MB
==> Running `brew cleanup libomp`...
Disable this behaviour by setting HOMEBREW_NO_INSTALL_CLEANUP.
Hide these hints with HOMEBREW_NO_ENV_HINTS (see `man brew`).
Removing: /Users/leo/Library/Caches/Homebrew/libomp--11.1.0... (467.3KB)
```

|

closed

|

2024-05-08T02:19:54Z

|

2024-06-27T09:26:02Z

|

https://github.com/autogluon/autogluon/issues/4181

|

[

"bug: unconfirmed",

"OS: Mac",

"Needs Triage"

] |

LeonTing1010

| 3

|

christabor/flask_jsondash

|

flask

| 134

|

Using the example app

|

I'm sorry if this sounds stupid, but I'm new to Flask development. I'm confused about how exactly to use the example app to study how flask_jsondash works. I ran the app and clicked the link that said 'Visit the chart blueprints', but it led to a server timeout error. Looking at the code, I see there is no function mapped to the '/charts' URL. I guess you want people to implement that themselves, but I can't figure out what I need to do. The docs seem to be written so that experienced developers can understand them quickly, but I can't. Do you have any guidelines for newcomers? Specifically, I would appreciate a beginner tutorial. It does not have to be detailed, just steps that I can follow to understand more easily.

|

closed

|

2017-07-19T02:50:23Z

|

2017-07-20T04:43:50Z

|

https://github.com/christabor/flask_jsondash/issues/134

|

[] |

FMFluke

| 7

|

davidteather/TikTok-Api

|

api

| 629

|

[FEATURE_REQUEST] - Accessing private tik toks

|

I'm unable to access private TikToks.

I'm running v3.9.9 and have manually entered the verifyFp cookie from a logged-in account with access to the private content in question. It works fine for public TikToks, but for private ones it just runs and stops without triggering any error messages.

I know variations of this topic have been discussed in the past; is there any functionality for this as of June 2021?

I also noticed the class TikTokUser, which seems relevant. Any help appreciated.

|

closed

|

2021-06-21T23:50:16Z

|

2021-08-07T00:13:59Z

|

https://github.com/davidteather/TikTok-Api/issues/629

|

[

"feature_request"

] |

alanblue2000

| 1

|

pydata/pandas-datareader

|

pandas

| 495

|

Release 0.7.0

|

The recent re-addition of Yahoo! will trigger a new release in the next week. I will try to get as many PRs in before then.

|

closed

|

2018-02-21T18:22:00Z

|

2018-09-12T11:32:24Z

|

https://github.com/pydata/pandas-datareader/issues/495

|

[] |

bashtage

| 15

|

kaliiiiiiiiii/Selenium-Driverless

|

web-scraping

| 300

|

is there a way to start it with firefox ?

|

So my main browser is Firefox, but for selenium_driverless I use Chrome.

Is there a way to use Firefox instead? Thanks.

|

closed

|

2024-12-26T12:55:21Z

|

2024-12-26T13:05:00Z

|

https://github.com/kaliiiiiiiiii/Selenium-Driverless/issues/300

|

[] |

firaki12345-cmd

| 1

|

ets-labs/python-dependency-injector

|

flask

| 814

|

Configuration object is a dictionary and not an object with which the dot notation could be used to access its elements

|

I cannot figure out why the injected config is a dictionary, while in every example it is an object whose values are accessed with the "." (dot) operator.

Here is a simple code that I used:

```python
from dependency_injector import containers, providers
from dependency_injector.wiring import Provide, inject


class Container(containers.DeclarativeContainer):
    config = providers.Configuration()


@inject
def use_config(config: providers.Configuration = Provide[Container.config]):
    print(type(config))
    print(config)


if __name__ == "__main__":
    container = Container()
    container.config.from_yaml("config.yaml")
    container.wire(modules=[__name__])
    use_config()
```

The printed type is `dict`.
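The output above shows that `Provide[Container.config]` injects the configuration's current value, a plain `dict`. If dot notation is what you need inside the function, one stdlib workaround (not a dependency_injector feature) is to wrap the injected dict. A minimal sketch; `to_namespace` is a hypothetical helper and assumes all config keys are strings that are valid identifiers:

```python
from types import SimpleNamespace
from typing import Any


def to_namespace(value: Any) -> Any:
    """Recursively convert dicts (including dicts inside lists) to SimpleNamespace."""
    if isinstance(value, dict):
        return SimpleNamespace(**{k: to_namespace(v) for k, v in value.items()})
    if isinstance(value, list):
        return [to_namespace(v) for v in value]
    return value  # scalars are returned unchanged
```

Inside `use_config`, `cfg = to_namespace(config)` would then allow `cfg.section.key` style access on the loaded YAML.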

|

open

|

2024-09-02T12:55:35Z

|

2025-01-04T16:37:36Z

|

https://github.com/ets-labs/python-dependency-injector/issues/814

|

[] |

Zevrap-81

| 2

|

zappa/Zappa

|

django

| 520

|

[Migrated] Feature Request: Including vs. Excluding

|

Originally from: https://github.com/Miserlou/Zappa/issues/1366 by [rr326](https://github.com/rr326)

This may go against your fundamental design philosophy, but I feel it would be better to have Zappa require an explicit inclusion list rather than an exclusion list.

Background:

I've just spent a day trying to figure out why my Zappa installation stopped working. After following one cryptic Lambda error after another and reading many Zappa issues, I finally figured out that my package was > 500MB. (And it is a SIMPLE Flask app.) After more research, I figured out that it was including all sorts of things it didn't need. Then, after a lot of trial and error excluding one dir after another, my previously > 500MB .gz is now about 8 MB!

I'm not saying Zappa did anything "wrong". But it simply couldn't guess what should and shouldn't have been included in my project. (I'm happy to share a project structure and give details if you want, but I think my structure is reasonable.)

With an "include" array, I would know that it is my responsibility to maintain it. And if you made it required and started it with `"include": ["."]`, I would see that as a clue that I need to figure it out.

Alternatively, much more prominent information on the importance of "exclude" in the documentation would help. I could take a stab at that if it would be helpful, though I don't really understand what is and isn't included in the first place.
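The trial-and-error search for what to exclude can be shortened by listing which top-level entries of the project take the most space. A minimal sketch; `dir_sizes` is a hypothetical helper, and it reports uncompressed on-disk sizes, not what Zappa actually packages:

```python
from pathlib import Path


def dir_sizes(root):
    """Return (size_in_bytes, name) pairs for each top-level entry of root,
    largest first. A directory's size is the total of all files beneath it."""
    results = []
    for entry in Path(root).iterdir():
        if entry.is_file():
            size = entry.stat().st_size
        elif entry.is_dir():
            # rglob("*") walks the whole subtree; count regular files only
            size = sum(p.stat().st_size for p in entry.rglob("*") if p.is_file())
        else:
            continue  # skip broken symlinks, sockets, etc.
        results.append((size, entry.name))
    return sorted(results, reverse=True)
```

For example, `for size, name in dir_sizes(".")[:10]: print(f"{size / 1e6:8.1f} MB  {name}")` prints the ten largest entries, which are the first candidates for the "exclude" setting.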

|

closed

|

2021-02-20T09:43:51Z

|

2022-07-16T07:25:01Z

|

https://github.com/zappa/Zappa/issues/520

|

[

"enhancement",

"feature-request"

] |

jneves

| 2

|

chainer/chainer

|

numpy

| 8,623

|

can't use chainer with new cupy, can't install old cupy

|

Chainer officially only supports CuPy == 7.8.0. Unfortunately, I can't install the older CuPy, and when I install a newer CuPy, Chainer does not recognize it.

Option 1:

`pip install Cython` # works and installs Cython 0.29.31

`pip install cupy==7.8.0` # fails

> CUDA_PATH : /cvmfs/soft.computecanada.ca/easybuild/software/2020/Core/cudacore/11.0.2

> NVTOOLSEXT_PATH : (none)

> NVCC : (none)

> ROCM_HOME : (none)

>

> Modules:

> cuda : Yes (version 11000)

> cusolver : Yes

> cudnn : Yes (version 8003)

> nccl : No

> -> Include files not found: ['nccl.h']

> -> Check your CFLAGS environment variable.

> nvtx : Yes

> thrust : Yes

> cutensor : No

> -> Include files not found: ['cutensor.h']

> -> Check your CFLAGS environment variable.

> cub : Yes

>

> WARNING: Some modules could not be configured.

> CuPy will be installed without these modules.

> Please refer to the Installation Guide for details:

> https://docs.cupy.dev/en/stable/install.html

>

> ************************************************************

>

> NOTICE: Skipping cythonize as cupy/core/_dtype.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/_kernel.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/_memory_range.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/_routines_indexing.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/_routines_logic.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/_routines_manipulation.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/_routines_math.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/_routines_sorting.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/_routines_statistics.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/_scalar.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/core.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/dlpack.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/flags.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/internal.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/fusion.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/core/raw.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/cublas.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/cufft.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/curand.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/cusparse.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/device.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/driver.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/memory.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/memory_hook.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/nvrtc.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/pinned_memory.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/profiler.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/function.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/stream.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/runtime.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/texture.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/util.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/cusolver.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/cudnn.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cudnn.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/nvtx.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/thrust.pyx does not exist.

> NOTICE: Skipping cythonize as cupy/cuda/cub.pyx does not exist.

> Traceback (most recent call last):

> File "<string>", line 2, in <module>

> File "<pip-setuptools-caller>", line 34, in <module>

> File "/tmp/pip-install-3nvzj2jg/cupy_b0144fd6a6cf445885b7f609ca09f2cc/setup.py", line 156, in <module>

> setup(

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/__init__.py", line 87, in setup

> return distutils.core.setup(**attrs)

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/_distutils/core.py", line 177, in setup

> return run_commands(dist)

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/_distutils/core.py", line 193, in run_commands

> dist.run_commands()

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/_distutils/dist.py", line 968, in run_commands

> self.run_command(cmd)

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/dist.py", line 1217, in run_command

> super().run_command(command)

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/_distutils/dist.py", line 987, in run_command

> cmd_obj.run()

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/command/install.py", line 68, in run

> return orig.install.run(self)

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/_distutils/command/install.py", line 695, in run

> self.run_command('build')

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/_distutils/cmd.py", line 317, in run_command

> self.distribution.run_command(command)

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/dist.py", line 1217, in run_command

> super().run_command(command)

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/_distutils/dist.py", line 987, in run_command

> cmd_obj.run()

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/command/build.py", line 24, in run

> super().run()

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/_distutils/command/build.py", line 131, in run

> self.run_command(cmd_name)

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/_distutils/cmd.py", line 317, in run_command

> self.distribution.run_command(command)

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/dist.py", line 1217, in run_command

> super().run_command(command)

> File "/home/jolicoea/vidgen2/lib/python3.8/site-packages/setuptools/_distutils/dist.py", line 987, in run_command

> cmd_obj.run()

> File "/tmp/pip-install-3nvzj2jg/cupy_b0144fd6a6cf445885b7f609ca09f2cc/cupy_setup_build.py", line 991, in run

> check_extensions(self.extensions)

> File "/tmp/pip-install-3nvzj2jg/cupy_b0144fd6a6cf445885b7f609ca09f2cc/cupy_setup_build.py", line 728, in check_extensions

> raise RuntimeError('''\

> RuntimeError: Missing file: cupy/cuda/cub.cpp

> Please install Cython 0.28.0 or later. Please also check the version of Cython.

> See https://docs.cupy.dev/en/stable/install.html for details.

I have Cython 0.29.31; pip says that 0.28.0 is not available, so I can't downgrade.

Option 2:

`pip install cupy` or install from a git clone with `pip install -e .`

CuPy installs properly and I can use it, but Chainer does not think CuPy is properly installed, and I get `cupy.core` not found errors.

### To Reproduce

```shell

pip install Cython

pip install cupy==7.8.0 # error

pip install cupy-cuda110 # error not found

pip install cupy # works

pip install chainer

python -c 'import chainer; chainer.print_runtime_info()' # cupy not recognized by chainer

```

### Environment

```

# python -c 'import chainer; chainer.print_runtime_info()'

Chainer: 4.5.0

NumPy: 1.23.0

CuPy: Not Available

# python -c 'import cupy; cupy.show_config()'

OS : Linux-3.10.0-1160.62.1.el7.x86_64-x86_64-with-glibc2.2.5

Python Version : 3.8.10

CuPy Version : 11.0.0

CuPy Platform : NVIDIA CUDA

NumPy Version : 1.23.0

SciPy Version : 1.8.0

Cython Build Version : 0.29.31

Cython Runtime Version : 0.29.31

CUDA Root : /cvmfs/soft.computecanada.ca/easybuild/software/2020/Core/cudacore/11.0.2

nvcc PATH : /cvmfs/soft.computecanada.ca/easybuild/software/2020/Core/cudacore/11.0.2/bin/nvcc

CUDA Build Version : 11040

CUDA Driver Version : 11060

CUDA Runtime Version : 11040

cuBLAS Version : (available)

cuFFT Version : 10502

cuRAND Version : 10205

cuSOLVER Version : (11, 2, 0)

cuSPARSE Version : (available)

NVRTC Version : (11, 4)

Thrust Version : 101201

CUB Build Version : 101201

Jitify Build Version : d90e2e0

cuDNN Build Version : 8200

cuDNN Version : 8200

NCCL Build Version : 21104

NCCL Runtime Version : 21104

cuTENSOR Version : None

cuSPARSELt Build Version : None

Device 0 Name : Tesla V100-SXM2-16GB

Device 0 Compute Capability : 70

Device 0 PCI Bus ID : 0000:1C:00.0

Device 1 Name : Tesla V100-SXM2-16GB

Device 1 Compute Capability : 70

Device 1 PCI Bus ID : 0000:1D:00.0

```

|

closed

|

2022-08-01T17:54:52Z

|

2022-08-02T04:51:48Z

|

https://github.com/chainer/chainer/issues/8623

|

[] |

AlexiaJM

| 1

|

ydataai/ydata-profiling

|

pandas

| 1,288

|

Missing tags for latest v3.6.x releases

|

On PyPI, there are 3.6.4, 3.6.5 and 3.6.6 releases (https://pypi.org/project/pandas-profiling/#history), but no corresponding tags in this repo. Can you add them please?

|

closed

|

2023-03-17T10:08:19Z

|

2023-04-13T11:37:54Z

|

https://github.com/ydataai/ydata-profiling/issues/1288

|

[

"question/discussion ❓"

] |

jamesmyatt

| 1

|

tensorly/tensorly

|

numpy

| 143

|

Feature Request: Outer Product

|

Hi,

I am starting to work with tensors and found your toolbox. When trying out CPD, I first wanted to create a rank-3 tensor, then decompose it and look at the result, since CPD is unique. That is when I noticed that the toolbox does not implement an outer product of vectors. It would be nice to have one, e.g. for simulations.

I know there is `numpy.outer`, but it only works for two vectors.
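Until something like this exists in the toolbox, an N-way outer product can be sketched in plain NumPy by folding `np.multiply.outer` over the vectors, and a CP tensor is then a weighted sum of such rank-one terms. The helper names `outer` and `cp_tensor` are hypothetical and are not part of tensorly's API; the sizes and rank in the example are arbitrary.

```python
from functools import reduce

import numpy as np


def outer(*vectors):
    """Outer product of any number of 1-D arrays.

    The result has one axis per input vector, so vectors of lengths
    I, J, K give an array of shape (I, J, K).
    """
    return reduce(np.multiply.outer, vectors)


def cp_tensor(weights, factors):
    """Weighted sum of rank-one terms built from factor-matrix columns.

    factors[k] has shape (I_k, R); column r of every factor matrix forms
    one outer-product term, scaled by weights[r].
    """
    rank = factors[0].shape[1]
    shape = tuple(f.shape[0] for f in factors)
    tensor = np.zeros(shape)
    for r in range(rank):
        tensor += weights[r] * outer(*(f[:, r] for f in factors))
    return tensor


# Example: a random third-order tensor of shape (4, 5, 6) with CP rank 3.
rng = np.random.default_rng(0)
factors = [rng.standard_normal((n, 3)) for n in (4, 5, 6)]
X = cp_tensor(np.ones(3), factors)
```

The same tensor can also be written as `np.einsum("ir,jr,kr->ijk", *factors)`; the loop version just makes the sum of outer products explicit.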

Thanks in advance,

Isi

|

closed

|

2019-12-02T10:49:47Z

|

2019-12-02T12:43:58Z

|

https://github.com/tensorly/tensorly/issues/143

|

[] |

IsabellLehmann

| 2

|

alpacahq/alpaca-trade-api-python

|

rest-api

| 453

|

Paper Trade client does not support fractional trading

|

I'm not sure whether this is a client or a server issue, but when I run the code below with a paper-trading endpoint and API key:

resp = api.submit_order(

symbol='SPY',

notional=450, # notional value of 1.5 shares of SPY at $300

side='buy',

type='market',

time_in_force='day',

)

I get `alpaca_trade_api.rest.APIError: qty is required`.

|

closed

|

2021-06-19T21:32:40Z

|

2021-06-19T21:42:51Z

|

https://github.com/alpacahq/alpaca-trade-api-python/issues/453

|

[] |

arun-annamalai

| 0

|

neuml/txtai

|

nlp

| 465

|

How to add a custom pipeline into yaml config?

|

I have a `MyPipeline` class.

How can I add it as a pipeline?

Can I use this syntax?

```yaml
my_pipeline:
  __import__: app.MyPipeline
```

|

closed

|

2023-04-25T11:07:44Z

|

2023-04-26T13:56:58Z

|

https://github.com/neuml/txtai/issues/465

|

[] |

pyoner

| 2

|

microsoft/nni

|

deep-learning

| 5,225

|

customized trial issue from wechat

|

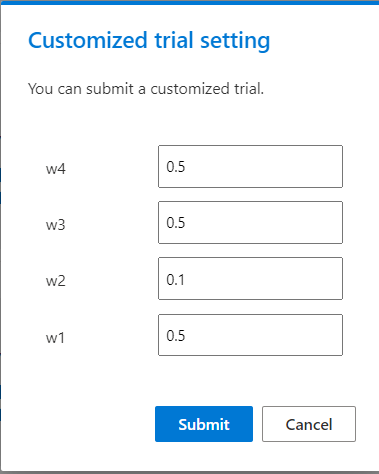

**Describe the issue**:

Hello, how can this operation be performed with a command?

Hi, could I use an nni command to do it?

**Environment**:

- NNI version: --

- Training service (local|remote|pai|aml|etc):

- Client OS:

- Server OS (for remote mode only):

- Python version:

- PyTorch/TensorFlow version:

- Is conda/virtualenv/venv used?:

- Is running in Docker?:

**Configuration**:

- Experiment config (remember to remove secrets!):

- Search space:

**Log message**:

- nnimanager.log:

- dispatcher.log:

- nnictl stdout and stderr:

<!--

Where can you find the log files:

LOG: https://github.com/microsoft/nni/blob/master/docs/en_US/Tutorial/HowToDebug.md#experiment-root-director

STDOUT/STDERR: https://nni.readthedocs.io/en/stable/reference/nnictl.html#nnictl-log-stdout

-->

**How to reproduce it?**:

|

open

|

2022-11-14T01:58:20Z

|

2022-11-16T02:41:00Z

|

https://github.com/microsoft/nni/issues/5225

|

[

"feature request"

] |

Lijiaoa

| 0

|

ARM-DOE/pyart

|

data-visualization

| 1,297

|

ENH: Add keyword add_lines in method plot_grid in gridmapdisplay

|

Occasionally you want the grid lines in the plot but not the coastline maps. Being able to toggle the two separately would be useful (see https://github.com/MeteoSwiss/pyart/blob/dev/pyart/graph/gridmapdisplay.py).

|

closed

|

2022-10-21T11:10:25Z

|

2022-11-03T15:57:17Z

|

https://github.com/ARM-DOE/pyart/issues/1297

|

[

"Enhancement",

"good first issue"

] |

jfigui

| 3

|

alpacahq/alpaca-trade-api-python

|

rest-api

| 409

|

Invalid TimeFrame in getbars for hourly

|

Hello, when I try the example, I get an error:

`api.get_bars("AAPL", TimeFrame.Hour, "2021-02-08", "2021-02-08", limit=10, adjustment='raw').df`

```
Traceback (most recent call last):
  File "/home/kyle/.virtualenv/lib/python3.8/site-packages/alpaca_trade_api/rest.py", line 160, in _one_request
    resp.raise_for_status()
  File "/home/kyle/.virtualenv/lib/python3.8/site-packages/requests/models.py", line 943, in raise_for_status
    raise HTTPError(http_error_msg, response=self)
requests.exceptions.HTTPError: 422 Client Error: Unprocessable Entity for url: https://data.alpaca.markets/v2/stocks/AAPL/bars?timeframe=1Hour&adjustment=raw&start=2021-02-08&end=2021-02-08&limit=10

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "run_hourly.py", line 231, in make_df
    tdf = api.get_bars("AAPL", TimeFrame.Hour, "2021-02-08", "2021-02-08", limit=10, adjustment='raw').df
  File "/home/kyle/.virtualenv/lib/python3.8/site-packages/alpaca_trade_api/rest.py", line 625, in get_bars
    bars = list(self.get_bars_iter(symbol,
  File "/home/kyle/.virtualenv/lib/python3.8/site-packages/alpaca_trade_api/rest.py", line 611, in get_bars_iter
    for bar in bars:
  File "/home/kyle/.virtualenv/lib/python3.8/site-packages/alpaca_trade_api/rest.py", line 535, in _data_get_v2
    resp = self.data_get('/stocks/{}/{}'.format(symbol, endpoint),
  File "/home/kyle/.virtualenv/lib/python3.8/site-packages/alpaca_trade_api/rest.py", line 192, in data_get
    return self._request(
  File "/home/kyle/.virtualenv/lib/python3.8/site-packages/alpaca_trade_api/rest.py", line 139, in _request
    return self._one_request(method, url, opts, retry)
  File "/home/kyle/.virtualenv/lib/python3.8/site-packages/alpaca_trade_api/rest.py", line 168, in _one_request
    raise APIError(error, http_error)
alpaca_trade_api.rest.APIError: invalid timeframe
```

Minute data seems to work.

|

closed

|

2021-03-30T15:22:37Z

|

2021-07-02T09:35:55Z

|

https://github.com/alpacahq/alpaca-trade-api-python/issues/409

|

[] |

hansonkd

| 7

|

flaskbb/flaskbb

|

flask

| 24

|

Add tests

|

I think tests are needed.

I myself am a big fan of [py.test](http://pytest.org/latest/) in combination with [pytest-bdd](https://github.com/olegpidsadnyi/pytest-bdd) for functional tests.

|

closed

|

2014-03-08T11:14:40Z

|

2018-04-15T07:47:30Z

|

https://github.com/flaskbb/flaskbb/issues/24

|

[] |

hvdklauw

| 15

|

LAION-AI/Open-Assistant

|

python

| 3,293

|

user streak updates in the backend takes up 100% cpu

|

Updating the user streak appears to use 100% CPU in `db.commit()`.

- Add end-of-task debug info
- Batch the commits
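"Batch the commits" could look roughly like the sketch below: rows are written in chunks and the transaction is committed once per chunk instead of once per user. It uses the standard-library `sqlite3` module purely as a stand-in for the backend's actual database layer; the `users` table, the `streak_days` column, and the helper names are hypothetical.

```python
import sqlite3
from itertools import islice


def chunks(iterable, size):
    """Yield successive lists of at most `size` items."""
    it = iter(iterable)
    while batch := list(islice(it, size)):
        yield batch


def update_streaks_batched(conn, updates, batch_size=500):
    """Apply (streak_days, user_id) updates, committing once per batch.

    Returns the number of commits issued, which is what batching reduces.
    """
    commits = 0
    for batch in chunks(updates, batch_size):
        conn.executemany("UPDATE users SET streak_days = ? WHERE id = ?", batch)
        conn.commit()
        commits += 1
    return commits
```

With 1,200 updates and `batch_size=500`, this issues 3 commits instead of 1,200.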

|

closed

|

2023-06-05T00:16:51Z

|

2023-06-07T13:03:34Z

|

https://github.com/LAION-AI/Open-Assistant/issues/3293

|

[

"bug",

"backend"

] |

melvinebenezer

| 0

|

microsoft/nni

|

pytorch

| 4,874

|

Error when running in frameworkcontroller mode

|

**config.yml**

experimentName: example_mnist_pytorch

trialConcurrency: 1

maxExecDuration: 1h

maxTrialNum: 2

debug: false

nniManagerIp: 172.16.40.155

#choice: local, remote, pai, kubeflow

trainingServicePlatform: frameworkcontroller

searchSpacePath: search_space.json

#choice: true, false

useAnnotation: false

tuner:

#choice: TPE, Random, Anneal, Evolution, BatchTuner, MetisTuner, GPTuner

builtinTunerName: TPE

classArgs:

#choice: maximize, minimize

optimize_mode: maximize

trial:

codeDir: .

taskRoles:

- name: worker

taskNum: 1

command: python3 model.py

gpuNum: 0

cpuNum: 1

memoryMB: 8192

image: frameworkcontroller/nni:v1.0

securityContext:

privileged: true

frameworkAttemptCompletionPolicy:

minFailedTaskCount: 1

minSucceededTaskCount: 1

frameworkcontrollerConfig:

storage: nfs

serviceAccountName: frameworkcontroller

nfs:

# Your NFS server IP, like 10.10.10.10

server: <my nfs server ip>

# Your NFS server export path, like /var/nfs/nni

path: /nfs/nni

readOnly: false

nfs server: /etc/exports

/nfs/nni *(rw,no_root_squash,sync,insecure,no_subtree_check)

**k8s logs**

kubectl describe pod nniexpywn5cftxenvma3hh-worker-0:

How can I solve this error?

|

open

|

2022-05-19T08:02:48Z

|

2022-06-28T09:39:36Z

|

https://github.com/microsoft/nni/issues/4874

|

[

"user raised",

"support",

"Training Service"

] |

N-Kingsley

| 1

|

ydataai/ydata-profiling

|

data-science

| 1,134

|

Introduce type-checking

|

### Missing functionality

Python does not enforce types; nevertheless, introducing type checking would reduce the number of open issues.

### Proposed feature

Introduce type checking for the user-facing methods.
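A minimal sketch of runtime type checking for user-facing methods, using only the standard library: a decorator reads the function's annotations and raises `TypeError` when an argument does not match. This is an illustration, not ydata-profiling code; `enforce_types` and `make_report` are hypothetical, and the sketch deliberately checks only bare classes (a dedicated library would also handle generics such as `Optional[int]`).

```python
import functools
import inspect
from typing import get_origin, get_type_hints


def enforce_types(func):
    """Raise TypeError when an argument does not match its plain-class annotation.

    Only bare classes such as str or int are checked; generic annotations
    such as Optional[int] or list[str] are skipped in this sketch.
    """
    sig = inspect.signature(func)
    hints = get_type_hints(func)

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        bound = sig.bind(*args, **kwargs)
        for name, value in bound.arguments.items():
            expected = hints.get(name)
            if isinstance(expected, type) and get_origin(expected) is None:
                if not isinstance(value, expected):
                    raise TypeError(
                        f"{func.__name__}() argument {name!r} must be "
                        f"{expected.__name__}, got {type(value).__name__}"
                    )
        return func(*args, **kwargs)

    return wrapper


@enforce_types
def make_report(title: str, minimal: bool = False) -> str:
    # Hypothetical user-facing method, used only to demonstrate the decorator.
    return f"{title} (minimal={minimal})"
```

Calling `make_report(42)` fails immediately with a clear message instead of failing later somewhere inside the report pipeline.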

### Alternatives considered

_No response_

### Additional context

_No response_

|

closed

|

2022-10-31T20:40:46Z

|

2022-11-10T11:08:04Z

|

https://github.com/ydataai/ydata-profiling/issues/1134

|

[

"needs-triage"

] |

fabclmnt

| 0

|

davidsandberg/facenet

|

tensorflow

| 292

|

the result question

|

closed

|

2017-05-26T07:41:04Z

|

2017-05-26T07:42:55Z

|

https://github.com/davidsandberg/facenet/issues/292

|

[] |

hanbim520

| 0

|


openapi-generators/openapi-python-client

|

rest-api

| 1,002

|

Providing package version via command properties

|

Allow setting the generated client's version by providing a `--package-version`-like option to `openapi-python-client generate`.

This would simplify automated processes in Git-like environments.
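The requested behavior can be sketched with `argparse`: the CLI value, when given, takes precedence over whatever version would otherwise be used. This is not the tool's actual CLI code; `build_parser` and `resolve_package_version` are hypothetical, and falling back to the OpenAPI document's `info.version` is an assumption made for illustration.

```python
import argparse
from typing import Optional


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser with the proposed `generate --package-version` flag."""
    parser = argparse.ArgumentParser(prog="openapi-python-client")
    sub = parser.add_subparsers(dest="command", required=True)
    generate = sub.add_parser("generate")
    generate.add_argument("--url")
    generate.add_argument(
        "--package-version",
        default=None,
        help="Version to write into the generated client's package metadata",
    )
    return parser


def resolve_package_version(spec: dict, cli_version: Optional[str]) -> str:
    """Prefer the CLI value; otherwise fall back to the document's info.version."""
    if cli_version:
        return cli_version
    return spec.get("info", {}).get("version", "0.1.0")
```

In a CI job, the version could then come straight from a Git tag, e.g. `--package-version "$(git describe --tags)"`.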

|

open

|

2024-03-14T10:09:16Z

|

2024-03-14T10:09:16Z

|

https://github.com/openapi-generators/openapi-python-client/issues/1002

|

[] |

pasolid

| 0

|

pywinauto/pywinauto

|

automation

| 983

|

No Conda Installation

|

## Expected Behavior

I am trying to install pywinauto through conda. Here is the documentation:

https://anaconda.org/conda-forge/pywinauto

## Actual Behavior

PackagesNotFoundError: The following packages are not available from current channels:

- pywinauto

## Steps to Reproduce the Problem

1. Open any conda environment.

2. Run one of:

   - `conda install -c conda-forge pywinauto`
   - `conda install -c conda-forge/label/cf201901 pywinauto`
   - `conda install -c conda-forge/label/cf202003 pywinauto`

## Specifications

- Pywinauto version:

- Python version and bitness: Python 3.7

- Platform and OS: MacOS Sierra v10.12.6

|

closed

|

2020-09-15T14:25:23Z

|

2020-09-15T19:09:25Z

|

https://github.com/pywinauto/pywinauto/issues/983

|

[

"duplicate"

] |

CibelesR

| 3

|

rougier/scientific-visualization-book

|

matplotlib

| 33

|

Requirements files for package versions

|

Having the versions of packages used for the book in a `requirements.txt` would be helpful for others reproducing things a year or so down the line.

Searching the repo for something such as `numpy` doesn't turn up anything about the version used, just that it's imported in several places.

As this book was only recently finished, perhaps there's an environment on the author's computer that has all the correct package versions installed, and a file could be generated from that.

Example search for numpy with `rg numpy`:

```

README.md:* [From Python to Numpy](https://www.labri.fr/perso/nrougier/from-python-to-numpy/) (Scientific Python Volume I)

README.md:* [100 Numpy exercices](https://github.com/rougier/numpy-100)

rst/threed.rst:specify the color of each of the triangle using a numpy array, so let's just do

rst/anatomy.rst: import numpy as np

rst/anatomy.rst: import numpy as np

rst/00-preface.rst: import numpy as np

rst/00-preface.rst: >>> import numpy; print(numpy.__version__)

code/showcases/escher-movie.py:import numpy as np

code/showcases/domain-coloring.py:import numpy as np

code/showcases/mandelbrot.py:import numpy as np

code/showcases/text-shadow.py:import numpy as np

code/showcases/windmap.py:import numpy as np

code/showcases/mosaic.py:import numpy as np

code/showcases/mosaic.py: from numpy.random.mtrand import RandomState

code/showcases/contour-dropshadow.py:import numpy as np

code/showcases/escher.py:import numpy as np

code/showcases/text-spiral.py:import numpy as np

code/showcases/recursive-voronoi.py:import numpy as np

code/showcases/recursive-voronoi.py: from numpy.random.mtrand import RandomState

code/showcases/waterfall-3d.py:import numpy as np

rst/defaults.rst: import numpy as np

code/beyond/stamp.py:import numpy as np

code/beyond/dyson-hatching.py:import numpy as np

code/beyond/tikz-dashes.py:import numpy as np

rst/coordinates.rst: import numpy as np

rst/animation.rst: import numpy as np

rst/animation.rst: import numpy as np

rst/animation.rst: import numpy as np

code/beyond/tinybot.py:import numpy as np

code/beyond/dungeon.py:import numpy as np

cover/cover-pattern.py:import numpy as np

code/beyond/interactive-loupe.py:import numpy as np

code/beyond/bluenoise.py:import numpy as np

code/beyond/bluenoise.py: from numpy.random.mtrand import RandomState

code/beyond/polygon-clipping.py:import numpy as np

code/beyond/radial-maze.py:import numpy as np

code/reference/hatch.py:import numpy as np

code/reference/colormap-sequential-1.py:import numpy as np

code/reference/colormap-uniform.py:import numpy as np

code/reference/marker.py:import numpy as np

code/reference/line.py:import numpy as np

code/ornaments/annotation-zoom.py:import numpy as np

code/scales-projections/text-polar.py:import numpy as np

code/ornaments/legend-regular.py:import numpy as np

code/ornaments/elegant-scatter.py:import numpy as np

code/reference/axes-adjustment.py:import numpy as np

code/ornaments/legend-alternatives.py:import numpy as np

code/reference/colorspec.py:import numpy as np

code/typography/typography-matters.py:import numpy as np

code/colors/color-gradients.py:import numpy as np

code/threed/bunny-6.py:import numpy as np

code/scales-projections/projection-polar-config.py:import numpy as np

code/colors/color-wheel.py:import numpy as np

code/colors/mona-lisa.py:import numpy as np

code/threed/bunny-4.py:import numpy as np

code/ornaments/title-regular.py:import numpy as np

code/typography/text-outline.py:import numpy as np

code/ornaments/bessel-functions.py:import numpy as np

code/scales-projections/projection-polar-histogram.py:import numpy as np

code/rules/rule-6.py:import numpy as np

code/colors/open-colors.py:import numpy as np

code/colors/material-colors.py:import numpy as np

code/colors/flower-polar.py:import numpy as np

code/typography/typography-legibility.py:import numpy as np

code/colors/colored-plot.py:import numpy as np

code/rules/rule-7.py:import numpy as np

code/colors/colored-hist.py:import numpy as np

code/rules/rule-3.py:import numpy as np

code/colors/alpha-vs-color.py:import numpy as np

code/colors/stacked-plots.py:import numpy as np

code/ornaments/label-alternatives.py:import numpy as np

code/typography/tick-labels-variation.py:import numpy as np

code/rules/projections.py:import numpy as np

code/typography/typography-math-stacks.py:import numpy as np

code/rules/rule-2.py:import numpy as np

code/colors/alpha-scatter.py:import numpy as np

code/typography/typography-text-path.py:import numpy as np

code/scales-projections/polar-patterns.py:import numpy as np

code/scales-projections/scales-custom.py:import numpy as np

code/rules/parameters.py:import numpy as np

code/threed/bunny-1.py:import numpy as np

code/scales-projections/geo-projections.py:import numpy as np

code/rules/rule-8.py:import numpy as np

code/threed/bunny-5.py:import numpy as np

code/ornaments/latex-text-box.py:import numpy as np

code/ornaments/annotation-direct.py:import numpy as np

code/typography/projection-3d-gaussian.py:import numpy as np

code/threed/bunny-7.py:import numpy as np

code/rules/helper.py:import numpy as np

code/rules/helper.py: """ Generate a numpy array containing a disc. """

code/typography/typography-font-stacks.py:import numpy as np

code/scales-projections/scales-log-log.py:import numpy as np

code/scales-projections/projection-3d-frame.py:import numpy as np

code/threed/bunnies.py:import numpy as np

code/threed/bunny.py:import numpy as np

code/threed/bunny-3.py:import numpy as np

code/rules/rule-9.py:import numpy as np

code/ornaments/annotate-regular.py:import numpy as np

code/threed/bunny-2.py:import numpy as np

code/reference/tick-locator.py:import numpy as np

code/threed/bunny-8.py:import numpy as np

code/reference/colormap-diverging.py:import numpy as np

code/scales-projections/scales-comparison.py:import numpy as np

code/reference/text-alignment.py:import numpy as np

code/scales-projections/scales-check.py:import numpy as np

code/reference/scale.py:import numpy as np

code/reference/colormap-qualitative.py:import numpy as np

code/reference/tick-formatter.py:import numpy as np

code/rules/graphics.py:import numpy as np

code/rules/rule-1.py:import numpy as np

code/reference/collection.py:import numpy as np

code/ornaments/annotation-side.py:import numpy as np

code/reference/colormap-sequential-2.py:import numpy as np

code/defaults/defaults-exercice-1.py:import numpy as np

code/animation/platecarree.py:import numpy as np

code/defaults/defaults-step-4.py:import numpy as np

code/layout/standard-layout-2.py:import numpy as np

code/coordinates/transforms-blend.py:import numpy as np

code/unsorted/layout-weird.py:import numpy as np

code/unsorted/advanced-linestyles.py:import numpy as np

code/coordinates/transforms-exercise-1.py:import numpy as np

code/animation/fluid-animation.py:import numpy as np

code/unsorted/alpha-gradient.py:import numpy as np

code/animation/sine-cosine.py:import numpy as np

code/optimization/line-benchmark.py:import numpy as np

code/animation/fluid.py:import numpy as np

code/optimization/transparency.py:import numpy as np

code/animation/rain.py:import numpy as np

code/optimization/scatter-benchmark.py:import numpy as np

code/optimization/self-cover.py:import numpy as np

code/animation/imgcat.py:import numpy as np

code/unsorted/hatched-bars.py:import numpy as np

code/optimization/multithread.py:import numpy as np

code/animation/earthquakes.py:import numpy as np

code/unsorted/poster-layout.py:import numpy as np

code/coordinates/transforms-letter.py:import numpy as np

code/optimization/scatters.py:import numpy as np

code/animation/sine-cosine-mp4.py:import numpy as np

code/optimization/multisample.py:import numpy as np

code/unsorted/make-hatch-linewidth.py:import numpy as np

code/animation/lissajous.py:import numpy as np

code/coordinates/transforms-polar.py:import numpy as np

code/unsorted/earthquakes.py:import numpy as np

code/unsorted/git-commits.py:import numpy as np

code/unsorted/github-activity.py:import numpy as np

code/coordinates/collage.py:import numpy as np

code/introduction/matplotlib-timeline.py:import numpy as np

code/coordinates/transforms-floating-axis.py:import numpy as np

code/coordinates/transforms-hist.py:import numpy as np

code/layout/complex-layout-bare.py:import numpy as np

code/animation/less-is-more.py:import numpy as np

code/coordinates/transforms.py:import numpy as np

code/unsorted/alpha-compositing.py:import numpy as np

code/layout/standard-layout-1.py:import numpy as np

code/layout/layout-classical.py:import numpy as np

code/unsorted/stacked-bars.py:import numpy as np

code/layout/complex-layout.py:import numpy as np

code/layout/layout-aspect.py:import numpy as np

code/unsorted/metropolis.py:import numpy as np

code/unsorted/scale-logit.py:import numpy as np

code/unsorted/dyson-hatching.py:import numpy as np

code/unsorted/dyson-hatching.py: from numpy.random.mtrand import RandomState

code/layout/layout-gridspec.py:import numpy as np

code/defaults/defaults-step-1.py:import numpy as np

code/defaults/defaults-step-2.py:import numpy as np

code/defaults/defaults-step-5.py:import numpy as np

code/defaults/defaults-step-3.py:import numpy as np

code/anatomy/bold-ticklabel.py:import numpy as np

code/unsorted/3d/contour.py:import numpy as np

code/unsorted/3d/sphere.py:import numpy as np

code/unsorted/3d/platonic-solids.py:import numpy as np

code/unsorted/3d/surf.py:import numpy as np

code/unsorted/3d/bar.py:import numpy as np

tex/cover-pattern.py:import numpy as np

code/unsorted/3d/scatter.py:import numpy as np

tex/book.bib: url = {https://www.labri.fr/perso/nrougier/from-python-to-numpy/},

code/anatomy/pixel-font.py:import numpy as np

code/anatomy/raster-vector.py:import numpy as np

code/anatomy/zorder-plots.py:import numpy as np

code/anatomy/ruler.py:import numpy as np

code/anatomy/anatomy.py:import numpy as np

code/anatomy/zorder.py:import numpy as np

code/unsorted/3d/bunny.py:import numpy as np

code/unsorted/3d/bunnies.py:import numpy as np

code/unsorted/3d/plot.py:import numpy as np

code/unsorted/3d/plot.py: camera : 4x4 numpy array

code/unsorted/3d/glm.py:import numpy as np

```

These seem to be the imports used in the text:

```

'from __future__ import absolute_import',

'from __future__ import division',

'from __future__ import print_function',

'from __future__ import unicode_literals',

'from datetime import date, datetime',

'from datetime import datetime',

'from dateutil.relativedelta import relativedelta',

'from docutils import nodes',

'from docutils.core import publish_cmdline',

'from docutils.parsers.rst import directives, Directive',

'from fluid import Fluid, inflow',

'from functools import reduce',

'from graphics import *',

'from helper import *',

'from itertools import cycle',

'from math import cos, sin, floor, sqrt, pi, ceil',

'from math import factorial',

'from math import sqrt, ceil, floor, pi, cos, sin',

'from matplotlib import colors',

'from matplotlib import ticker',

'from matplotlib.animation import FuncAnimation, writers',

'from matplotlib.artist import Artist',

'from matplotlib.backend_bases import GraphicsContextBase, RendererBase',

'from matplotlib.backends.backend_agg import FigureCanvas',

'from matplotlib.backends.backend_agg import FigureCanvasAgg',

'from matplotlib.collections import AsteriskPolygonCollection',

'from matplotlib.collections import CircleCollection',

'from matplotlib.collections import EllipseCollection',

'from matplotlib.collections import LineCollection',

'from matplotlib.collections import PatchCollection',

'from matplotlib.collections import PathCollection',

'from matplotlib.collections import PolyCollection',

'from matplotlib.collections import PolyCollection',

'from matplotlib.collections import QuadMesh',

'from matplotlib.collections import RegularPolyCollection',

'from matplotlib.collections import StarPolygonCollection',

'from matplotlib.colors import LightSource',

'from matplotlib.figure import Figure',

'from matplotlib.font_manager import FontProperties',

'from matplotlib.font_manager import findfont, FontProperties',

'from matplotlib.gridspec import GridSpec',

'from matplotlib.gridspec import GridSpec',

'from matplotlib.patches import Circle',

'from matplotlib.patches import Circle',

'from matplotlib.patches import Circle, Rectangle',

'from matplotlib.patches import ConnectionPatch',

'from matplotlib.patches import Ellipse',

'from matplotlib.patches import Ellipse',

'from matplotlib.patches import FancyBboxPatch',

'from matplotlib.patches import PathPatch',

'from matplotlib.patches import Polygon',

'from matplotlib.patches import Polygon',

'from matplotlib.patches import Polygon, Ellipse',

'from matplotlib.patches import Rectangle',

'from matplotlib.patches import Rectangle, PathPatch',

'from matplotlib.path import Path',

'from matplotlib.patheffects import Stroke, Normal',

'from matplotlib.patheffects import withStroke',

'from matplotlib.text import TextPath',

'from matplotlib.textpath import TextPath',

'from matplotlib.ticker import AutoMinorLocator, MultipleLocator, FuncFormatter',

'from matplotlib.ticker import MultipleLocator',

'from matplotlib.ticker import NullFormatter',

'from matplotlib.ticker import NullFormatter, MultipleLocator',

'from matplotlib.ticker import NullFormatter, SymmetricalLogLocator',

'from matplotlib.transforms import Affine2D',

'from matplotlib.transforms import ScaledTranslation',

'from matplotlib.transforms import blended_transform_factory, ScaledTranslation',

'from mpl_toolkits.axes_grid1 import ImageGrid',

'from mpl_toolkits.axes_grid1 import ImageGrid',

'from mpl_toolkits.axes_grid1 import make_axes_locatable',

'from mpl_toolkits.axes_grid1.inset_locator import inset_axes',

'from mpl_toolkits.axes_grid1.inset_locator import mark_inset',

'from mpl_toolkits.axes_grid1.inset_locator import mark_inset',

'from mpl_toolkits.axes_grid1.inset_locator import zoomed_inset_axes',

'from mpl_toolkits.axes_grid1.inset_locator import zoomed_inset_axes',

'from mpl_toolkits.mplot3d import Axes3D, art3d',

'from mpl_toolkits.mplot3d import Axes3D, proj3d, art3d',

'from multiprocessing import Pool',

'from numpy.random.mtrand import RandomState',

'from parameters import *',

'from pathlib import Path',

'from projections import *',

'from pylab import *',

'from scipy.ndimage import gaussian_filter',

'from scipy.ndimage import gaussian_filter1d',

'from scipy.ndimage import map_coordinates, spline_filter',

'from scipy.sparse.linalg import factorized',

'from scipy.spatial import Voronoi',

'from scipy.special import erf',

'from scipy.special import jn, jn_zeros',

'from shapely.geometry import Polygon',

'from shapely.geometry import box, Polygon',

'from skimage.color import rgb2lab, lab2rgb, rgb2xyz, xyz2rgb',

'from timeit import default_timer as timer',

'from tqdm.autonotebook import tqdm',

'import bluenoise',

'import cartopy',

'import cartopy.crs',

'import cartopy.crs as ccrs',

'import colorsys',

'import dateutil.parser',

'import git',

'import glm',

'import html.parser',

'import imageio',

'import locale',

'import math',

'import matplotlib',

'import matplotlib as mpl',

'import matplotlib.animation as animation',

'import matplotlib.animation as animation',

'import matplotlib.colors as colors',

'import matplotlib.colors as mc',

'import matplotlib.colors as mcolors',

'import matplotlib.gridspec as gridspec',

'import matplotlib.image as mpimg',

'import matplotlib.patches as mpatch',

'import matplotlib.patches as mpatches',

'import matplotlib.patches as patches',

'import matplotlib.path as mpath',

'import matplotlib.path as path',

'import matplotlib.patheffects as PathEffects',

'import matplotlib.patheffects as path_effects',

'import matplotlib.pylab as plt',

'import matplotlib.pyplot as plt',

'import matplotlib.pyplot as plt',

'import matplotlib.ticker as ticker',

'import matplotlib.transforms as transforms',

'import mpl_toolkits.axisartist.floating_axes as floating_axes',

'import mpl_toolkits.mplot3d.art3d as art3d',

'import mpmath',

'import noise',

'import numpy as np',

'import os',

'import plot',

'import re',

'import scipy',

'import scipy.sparse as sp',

'import scipy.spatial',

'import shapely.geometry',

'import sys',

'import tqdm',

'import tqdm',

'import types',

'import urllib',

'import urllib.request'

```
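Given the third-party imports listed above, a pinned file could be produced from the author's environment roughly as follows, using `importlib.metadata` from the standard library (Python 3.8+). The package list is inferred from the imports; modules such as `helper`, `graphics`, `plot`, `glm`, and `bluenoise` appear to be local files in the repo and are left out. Mapping import names to PyPI names (`skimage` to `scikit-image`, `git` to `GitPython`, `dateutil` to `python-dateutil`) is an assumption.

```python
from importlib.metadata import PackageNotFoundError, version

# Third-party distributions inferred from the import list (assumed PyPI names).
BOOK_PACKAGES = [
    "numpy", "scipy", "matplotlib", "cartopy", "shapely", "scikit-image",
    "imageio", "tqdm", "mpmath", "python-dateutil", "docutils", "GitPython",
    "noise",
]


def pin_versions(packages):
    """Return 'name==version' lines for the packages installed in this environment."""
    lines = []
    for name in packages:
        try:
            lines.append(f"{name}=={version(name)}")
        except PackageNotFoundError:
            pass  # not installed here; leave it out rather than guess a version
    return lines


if __name__ == "__main__":
    # Redirect to a file: python pin_versions.py > requirements.txt
    print("\n".join(pin_versions(BOOK_PACKAGES)))
```

Running `pip freeze` in the same environment would capture everything, including transitive dependencies; the sketch pins only the packages the book imports directly.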

|

open

|

2021-12-11T12:57:53Z

|

2021-12-13T11:26:24Z

|

https://github.com/rougier/scientific-visualization-book/issues/33

|

[] |

geo7

| 0

|

SYSTRAN/faster-whisper

|

deep-learning

| 869

|

Transcribe results being translated to different language

|

Hi all,

I just want to post an issue I encountered. As the title suggests, faster-whisper transcribed the audio file in the wrong language.

This is my code for this test:

```python
from faster_whisper import WhisperModel

model_size = "medium"
file = "audio.mp3"

model = WhisperModel(model_size, device="cpu", compute_type="int8")

segments, info = model.transcribe(
    file,
    initial_prompt="Umm, hmm, Uhh, Ahh",  # used to detect fillers
    temperature=0,
    vad_filter=True,
    without_timestamps=True,
    beam_size=1,
    # chunk_length=3
)

print("Detected language '%s' with probability %f" % (info.language, info.language_probability))
print(info)

for segment in segments:
    print("[%.2fs -> %.2fs] %s" % (segment.start, segment.end, segment.text))
```

And the result was:

> [1.01s -> 8.40s] Tidak ada, biar saya tolong awak dengan masalah pembayaran. Boleh saya tahu masalah apa yang awak hadapi?

I ran the result through Google Translate and saw that the Malay sentence is close to what was said in the English audio; I'm not sure why it was translated. I checked `all_language_probs` and saw that the highest probability was for "ms" (Malay). Given this, is there a way to prevent it? I know that setting a language helps, but the plan is to keep it flexible so that it can transcribe audio in different languages.

[audio.zip](https://github.com/user-attachments/files/15551003/audio.zip)
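One possible middle ground between a fixed `language` and fully automatic detection is to restrict detection to a set of expected languages: take the detection probabilities, keep only the allowed codes, and pass the best one back as `language=`. This is a workaround sketch, not a faster-whisper feature; `pick_language` is hypothetical, the allowed set is an example, and it assumes `info.all_language_probs` is a list of `(code, probability)` pairs as reported above.

```python
from typing import Iterable, List, Optional, Tuple


def pick_language(
    all_language_probs: List[Tuple[str, float]],
    allowed: Iterable[str],
    min_prob: float = 0.0,
) -> Optional[str]:
    """Return the most probable language code among `allowed`, or None."""
    allowed_set = set(allowed)
    candidates = [
        (lang, prob)
        for lang, prob in all_language_probs
        if lang in allowed_set and prob >= min_prob
    ]
    if not candidates:
        return None  # caller can fall back to automatic detection
    return max(candidates, key=lambda pair: pair[1])[0]


# Intended use with the script above (not executed here; assumes `segments`
# is consumed lazily, so the first call only runs language detection):
#   _, info = model.transcribe(file, vad_filter=True)
#   lang = pick_language(info.all_language_probs, allowed={"en", "es"})
#   segments, info = model.transcribe(file, language=lang, vad_filter=True)
```

With probabilities like `[("ms", 0.45), ("en", 0.40)]` and `allowed={"en", "es"}`, the function picks `"en"` even though Malay scored highest overall.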

|

closed

|

2024-06-04T12:08:45Z

|

2024-06-17T07:04:37Z

|

https://github.com/SYSTRAN/faster-whisper/issues/869

|

[] |

acn-reginald-casela

| 11

|

plotly/dash

|

dash

| 2,764

|

Dangerous link detected error after upgrading to Dash 2.15.0

|

```

dash 2.15.0

dash-bootstrap-components 1.5.0

dash-core-components 2.0.0

dash-extensions 1.0.12

dash-html-components 2.0.0

dash-iconify 0.1.2

dash-mantine-components 0.12.1

dash-table 5.0.0

```

After upgrading to Dash 2.15.0, some of my apps now break with `Dangerous link detected` errors, which are emitted when I use `Iframe` components to display embedded data, e.g. PDF files. Here is a small example:

```python
import base64

import requests
from dash import Dash, html

# Get a sample PDF file.
r = requests.get("https://www.w3.org/WAI/ER/tests/xhtml/testfiles/resources/pdf/dummy.pdf")
bts = r.content

# Encode PDF file as base64 string.
encoded_string = base64.b64encode(bts).decode("ascii")
src = f"data:application/pdf;base64,{encoded_string}"

# Make a small example app.
app = Dash()
app.layout = html.Iframe(id="embedded-pdf", src=src, width="100%", height="100%")

if __name__ == '__main__':
    app.run_server()
```

I would expect the app to keep working and display the PDF, as it did in previous versions.

I suspect the issue is related to the XSS vulnerability fixes mentioned in #2743. However, I am not sure why displaying an embedded PDF file should be considered a vulnerability.

|

closed

|

2024-02-18T13:47:23Z

|

2024-04-22T15:43:09Z

|

https://github.com/plotly/dash/issues/2764

|

[

"bug",

"sev-1"

] |

emilhe

| 11

|

chatopera/Synonyms

|

nlp

| 25

|

How is the data in the words.nearby.json.gz dictionary generated? Many thanks!

|

# description

## current

## expected

# solution

# environment

* version:

The commit hash (`git rev-parse HEAD`)

|

closed

|

2018-01-16T00:40:04Z

|

2018-01-16T06:22:40Z

|

https://github.com/chatopera/Synonyms/issues/25

|

[

"duplicate"

] |

waterzxj

| 3

|

sanic-org/sanic

|

asyncio

| 2,464

|

`Async for` can be used to iterate over a websocket's incoming messages

|

**Is your feature request related to a problem? Please describe.**

When creating a websocket server, I'd like to use `async for` to iterate over the incoming messages from a connection. This is a feature that the [websockets library](https://websockets.readthedocs.io/en/stable/) supports. Currently, if you try to use `async for`, you get the following error:

```console

TypeError: 'async for' requires an object with __aiter__ method, got WebsocketImplProtocol

```

**Describe the solution you'd like**

Ideally, I could run something like the following on a Sanic websocket route:

```python
@app.websocket("/")
async def feed(request, ws):
    async for msg in ws:
        print(f'received: {msg.data}')
        await ws.send(msg.data)
```

**Additional context**

[This was originally discussed on the sanic-support channel on the discord server](https://discord.com/channels/812221182594121728/813454547585990678/978393931903545344)
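One way such support could work is for the websocket object to implement the async-iterator protocol (`__aiter__`/`__anext__`) on top of its existing `recv()` coroutine. The sketch below is not Sanic's code: `IterableWebsocketMixin` and `FakeWebsocket` are hypothetical, treating a `None` from `recv()` as end-of-stream is an assumption, and the demo echoes raw message strings rather than `msg.data`.

```python
import asyncio
from collections import deque
from typing import List, Optional


class IterableWebsocketMixin:
    """Hypothetical mixin: adds `async for` support to any object with an async recv()."""

    async def recv(self) -> Optional[str]:
        raise NotImplementedError

    def __aiter__(self):
        return self

    async def __anext__(self) -> str:
        message = await self.recv()
        if message is None:  # assumption: None means the connection has closed
            raise StopAsyncIteration
        return message


class FakeWebsocket(IterableWebsocketMixin):
    """In-memory stand-in for a websocket connection, used only for the demo."""

    def __init__(self, incoming: List[str]):
        self._incoming = deque(incoming)
        self.sent: List[str] = []

    async def recv(self) -> Optional[str]:
        await asyncio.sleep(0)  # yield to the event loop like a real network read
        return self._incoming.popleft() if self._incoming else None

    async def send(self, data: str) -> None:
        self.sent.append(data)


async def echo(ws) -> None:
    # Same shape as the handler in the request above.
    async for msg in ws:
        await ws.send(msg)
```

`asyncio.run(echo(FakeWebsocket(["hello", "world"])))` echoes both messages and then stops when the fake connection runs out.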

|

closed

|

2022-05-23T21:05:24Z

|

2022-09-20T21:20:33Z

|

https://github.com/sanic-org/sanic/issues/2464

|

[

"help wanted",

"intermediate",

"feature request"

] |

bradlangel

| 11

|

PaddlePaddle/PaddleHub

|

nlp

| 1,410

|

hub serving starts successfully, but requests return 503

|

I started my fine-tuned model with hub serving, but accessing it returns 503.

|

open

|

2021-05-12T03:27:55Z

|

2021-05-14T02:21:05Z

|

https://github.com/PaddlePaddle/PaddleHub/issues/1410

|

[

"serving"

] |

dedex1994

| 3

|

TheKevJames/coveralls-python

|

pytest

| 4

|

Documentation could be clearer

|

The README says

> First, log in via Github and add your repo on Coveralls website.

>

> Second, install this package:

>

> $ pip install coveralls

>

> If you're using Travis CI, no further configuration is required.

Which raises a question: how can a package I pip install locally on my development laptop affect anything that happens between Travis CI and Coveralls.io?

Turns out some further configuration _is_ required after all. Specifically, I have to edit .travis.yml:

- I have to add `pip install coveralls` to the `install` section (or update my requirements.txt if I have one).

- I have to compute the coverage (using some variation of `coverage run testscript.py`),

- and then I have to push it to coveralls.io (by calling `coveralls` in the after_script section -- or should it be after_success? I don't know, I've seen both!).

It would be really helpful to have a sample .travis.yml in the README.

|

closed

|

2013-04-09T19:10:07Z

|

2013-04-10T16:57:21Z

|

https://github.com/TheKevJames/coveralls-python/issues/4

|

[] |

mgedmin

| 6

|

pallets-eco/flask-sqlalchemy

|

flask

| 1,145

|

Flask-SQLAlchemy >= 3.0.0: session.bind is None

|


```python
from flask import Flask
from flask_sqlalchemy import SQLAlchemy
import pymysql

db = SQLAlchemy()
app = Flask(__name__)
app.config['SQLALCHEMY_DATABASE_URI'] = ''
pymysql.install_as_MySQLdb()
db.init_app(app)


class Task(db.Model):
    """ task info table """
    __tablename__ = 'test_client_task'
    __table_args__ = {'extend_existing': True}

    id = db.Column(db.Integer, primary_key=True)
    name = db.Column(db.String(32), nullable=False)


with app.app_context():
    print(db.session.bind)
    # Task.__table__.drop(db.session.bind)
    Task.__table__.create(db.session.bind)
```


Expected: `db.session.bind` is an `Engine`. Actual: `db.session.bind` is `None`.

Creating the table this way raises an exception.

With version 2.5.1, the same code works as expected.

Environment:

- Python version: 3.9

- Flask-SQLAlchemy version: >= 3.0

- SQLAlchemy version: 1.4.45

|

closed

|

2022-12-13T07:23:11Z

|

2023-01-31T14:25:21Z

|

https://github.com/pallets-eco/flask-sqlalchemy/issues/1145

|

[] |

wangtao2213405054

| 1

|

TencentARC/GFPGAN

|

pytorch

| 496

|

Add atile

|

open

|

2024-01-27T07:15:33Z

|

2024-01-27T07:15:33Z

|

https://github.com/TencentARC/GFPGAN/issues/496

|

[] |

dildarhossin

| 0

|

|

mwaskom/seaborn

|

data-visualization

| 3,292

|

Wrong legend color when using histplot multiple times

|

```python
import matplotlib.pyplot as plt
import seaborn as sns

sns.histplot([1, 2, 3])
sns.histplot([4, 5, 6])
sns.histplot([7, 8, 9])
sns.histplot([10, 11, 12])
plt.legend(labels=["A", "B", "C", "D"])
```

Seaborn 0.12.2, matplotlib 3.6.2

This may be related to https://github.com/mwaskom/seaborn/issues/3115, but it is not the same issue, since histplot is called multiple times here.

|

closed

|

2023-03-10T16:04:15Z

|

2023-03-10T19:10:43Z

|

https://github.com/mwaskom/seaborn/issues/3292

|

[] |

mesvam

| 1

|

huggingface/datasets

|

numpy

| 7,371

|

500 Server error with pushing a dataset

|

### Describe the bug

Suddenly, I started getting this error message saying it was an internal error.

`Error creating/pushing dataset: 500 Server Error: Internal Server Error for url: https://huggingface.co/api/datasets/ll4ma-lab/grasp-dataset/commit/main (Request ID: Root=1-6787f0b7-66d5bd45413e481c4c2fb22d;670d04ff-65f5-4741-a353-2eacc47a3928)

Internal Error - We're working hard to fix this as soon as possible!

Traceback (most recent call last):

File "/uufs/chpc.utah.edu/common/home/hermans-group1/martin/software/pkg/miniforge3/envs/myenv2/lib/python3.10/site-packages/huggingface_hub/utils/_http.py", line 406, in hf_raise_for_status

response.raise_for_status()

File "/uufs/chpc.utah.edu/common/home/hermans-group1/martin/software/pkg/miniforge3/envs/myenv2/lib/python3.10/site-packages/requests/models.py", line 1024, in raise_for_status

raise HTTPError(http_error_msg, response=self)

requests.exceptions.HTTPError: 500 Server Error: Internal Server Error for url: https://huggingface.co/api/datasets/ll4ma-lab/grasp-dataset/commit/main

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/uufs/chpc.utah.edu/common/home/u1295595/grasp_dataset_converter/src/grasp_dataset_converter/main.py", line 142, in main

subset_train.push_to_hub(dataset_name, split='train')

File "/uufs/chpc.utah.edu/common/home/hermans-group1/martin/software/pkg/miniforge3/envs/myenv2/lib/python3.10/site-packages/datasets/arrow_dataset.py", line 5624, in push_to_hub

commit_info = api.create_commit(