| repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |
|---|---|---|---|---|---|---|---|---|---|---|---|

Nemo2011/bilibili-api

|

api

| 241

|

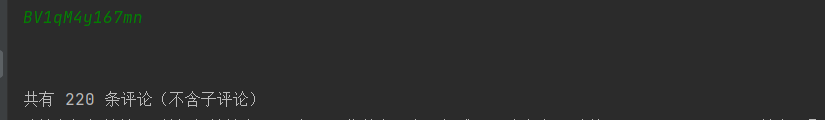

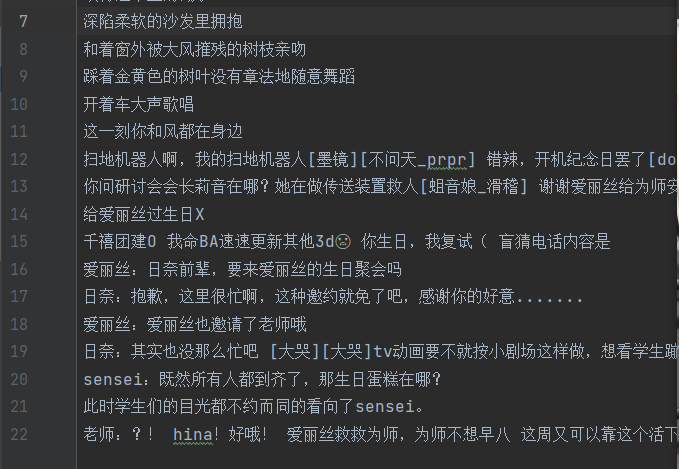

[Question] The comment module cannot fetch all comments

|

**Python version:** 3.8

**Module version:** 15.3.1

**Runtime environment:** Windows

---

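I crawled the comments directly, following the example in the documentation. For every video I tried, the reported total comment count is only around 200-something, although each video actually has more comments than that.

Also, only some of the comments are actually retrieved. Is this because the API has been updated and the approach no longer works?

My code is identical to the example; I only added code that writes the results to a text file.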
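For reference, a paging loop of the kind the documentation example uses can be sketched as follows. This is a minimal sketch, not the bilibili-api code: `fetch_page` is a hypothetical stand-in for one comment-API call, assumed to return a dict with a `replies` list and a `page` dict holding the server-reported total (`count`). Because the loop stops once the reported total is reached, a low reported total also cuts the download short.

```python
from typing import Callable, Dict, List


def fetch_all_comments(fetch_page: Callable[[int], Dict]) -> List[Dict]:
    """Page through a comment endpoint until the reported total is reached.

    `fetch_page(page)` is hypothetical; it is assumed to return
    {"replies": [...], "page": {"count": total, "size": page_size}}.
    """
    comments: List[Dict] = []
    page = 1
    fetched = 0
    while True:
        data = fetch_page(page)
        replies = data.get("replies") or []
        comments.extend(replies)
        # Count what actually arrived, so a short last page is not overcounted.
        fetched += len(replies)
        page += 1
        # Stop at the server-reported total, or when a page comes back empty.
        if not replies or fetched >= data["page"]["count"]:
            break
    return comments


# Usage with a fake fetcher: 45 comments served 20 per page.
def fake_fetch(page: int) -> Dict:
    all_items = [{"id": i} for i in range(45)]
    start = (page - 1) * 20
    return {"replies": all_items[start:start + 20], "page": {"count": 45, "size": 20}}


result = fetch_all_comments(fake_fetch)
print(len(result))  # 45
```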

|

closed

|

2023-03-20T05:29:33Z

|

2023-03-22T13:04:59Z

|

https://github.com/Nemo2011/bilibili-api/issues/241

|

[

"question"

] |

andoninari

| 4

|

brightmart/text_classification

|

nlp

| 2

|

sess.run() blocks

|

Hello! I am new to TensorFlow, and when I run your TextCNN model I run into an issue: `sess.run()` blocks.

The only output I get is what is printed before the line `curr_loss,curr_acc,_=sess.run([textCNN.loss_val,textCNN.accuracy,textCNN.train_op],feed_dict=feed_dict)`; after that, the program hangs. I have already made sure the input data exists, and I can't figure out the cause.

I hope you can help. Thanks for your patience.

|

closed

|

2017-07-16T15:11:14Z

|

2017-07-17T01:13:17Z

|

https://github.com/brightmart/text_classification/issues/2

|

[] |

scutzck033

| 2

|

kaliiiiiiiiii/Selenium-Driverless

|

web-scraping

| 160

|

[TODO] serve docs

|

GitHub Pages seems to be active, but I don't see the result at

[kaliiiiiiiiii.github.io/Selenium-Driverless](https://kaliiiiiiiiii.github.io/Selenium-Driverless/)

Where is it?

The link should be in the "About" section of

[github.com/kaliiiiiiiiii/Selenium-Driverless](https://github.com/kaliiiiiiiiii/Selenium-Driverless)

Also, the GitHub Pages action currently runs Jekyll, but [docs/](https://github.com/kaliiiiiiiiii/Selenium-Driverless/tree/master/docs) looks like static HTML files generated by [Sphinx](https://www.sphinx-doc.org/en/master/).

[pypi.org/project/selenium-driverless](https://pypi.org/project/selenium-driverless/) has the same URL for homepage, docs, and source.
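If the Jekyll build is what gets in the way, one common workaround for serving prebuilt Sphinx HTML from a branch-published GitHub Pages site is an empty `.nojekyll` file at the root of the published directory: it tells GitHub Pages to skip Jekyll, which otherwise ignores files and folders whose names start with an underscore (such as Sphinx's `_static/`). Sphinx's `sphinx.ext.githubpages` extension can also create this file during the build. A minimal sketch, assuming the published directory is `docs/`:

```python
from pathlib import Path


def disable_jekyll(docs_dir: str = "docs") -> Path:
    """Create an empty .nojekyll marker in the published directory.

    Assumes GitHub Pages publishes `docs_dir` from the branch; with the marker
    present, Pages serves the files as-is instead of running Jekyll on them.
    """
    marker = Path(docs_dir) / ".nojekyll"
    marker.parent.mkdir(parents=True, exist_ok=True)
    marker.touch(exist_ok=True)
    return marker
```

Calling `disable_jekyll("docs")` from the repository root and committing the resulting file is all that is needed under this assumption.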

|

closed

|

2024-02-01T13:37:43Z

|

2024-02-02T10:02:57Z

|

https://github.com/kaliiiiiiiiii/Selenium-Driverless/issues/160

|

[

"documentation"

] |

milahu

| 4

|

Buuntu/fastapi-react

|

sqlalchemy

| 86

|

[Feature Request] Add Storybook support

|

Any thoughts on adding [Storybook](https://storybook.js.org/) support as part of a development workflow?

|

closed

|

2020-07-15T13:33:48Z

|

2020-07-24T22:00:55Z

|

https://github.com/Buuntu/fastapi-react/issues/86

|

[] |

inactivist

| 3

|

huggingface/datasets

|

tensorflow

| 7,196

|

concatenate_datasets does not preserve shuffling state

|

### Describe the bug

After concatenating iterable datasets, the shuffling state is lost, similar to #7156.

This means concatenation can't be used to resolve uneven numbers of samples across devices when using iterable datasets in a distributed setting, as discussed in #6623.

I also noticed that the number of shards is the same after concatenation, which I found surprising, but I don't understand the internals well enough to know whether it actually is.

### Steps to reproduce the bug

```python
import datasets
import torch.utils.data


def gen(shards):
    yield {"shards": shards}


def main():
    dataset1 = datasets.IterableDataset.from_generator(
        gen, gen_kwargs={"shards": list(range(25))}  # TODO: how to understand this?
    )
    dataset2 = datasets.IterableDataset.from_generator(
        gen, gen_kwargs={"shards": list(range(25, 50))}  # TODO: how to understand this?
    )
    dataset1 = dataset1.shuffle(buffer_size=1)
    dataset2 = dataset2.shuffle(buffer_size=1)
    print(dataset1.n_shards)
    print(dataset2.n_shards)

    dataset = datasets.concatenate_datasets(
        [dataset1, dataset2]
    )
    print(dataset.n_shards)
    # dataset = dataset1

    dataloader = torch.utils.data.DataLoader(
        dataset,
        batch_size=8,
        num_workers=0,
    )
    for i, batch in enumerate(dataloader):
        print(batch)

    print("\nNew epoch")
    # set_epoch updates the dataset in place and returns None
    dataset.set_epoch(1)
    for i, batch in enumerate(dataloader):
        print(batch)


if __name__ == "__main__":
    main()
```

### Expected behavior

The shuffling state should be preserved after concatenation.
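To make the expectation concrete, here is a plain-Python sketch of the behavior described above; it does not use or reflect the `datasets` internals. Each source is shuffled with an RNG derived from a seed, the source index, and the epoch, and the shuffled sources are then chained, so the concatenated stream gets a new but reproducible order every epoch. The seed-derivation scheme is an assumption chosen for illustration.

```python
import random
from itertools import chain
from typing import List, Sequence


def shuffled(source: Sequence[int], seed: int, index: int, epoch: int) -> List[int]:
    # A string seed keeps each (seed, source, epoch) combination independent and reproducible.
    rng = random.Random(f"{seed}-{index}-{epoch}")
    items = list(source)
    rng.shuffle(items)
    return items


def concatenated_epoch(sources: Sequence[Sequence[int]], seed: int, epoch: int) -> List[int]:
    # Shuffle every source with its epoch-dependent RNG, then chain them in order.
    return list(chain.from_iterable(
        shuffled(src, seed, i, epoch) for i, src in enumerate(sources)
    ))


sources = [range(25), range(25, 50)]
epoch0 = concatenated_epoch(sources, seed=42, epoch=0)
epoch1 = concatenated_epoch(sources, seed=42, epoch=1)
```

Here the order changes between `epoch0` and `epoch1`, while recomputing the same epoch gives the same order.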

### Environment info

Latest datasets

|

open

|

2024-10-03T14:30:38Z

|

2025-03-18T10:56:47Z

|

https://github.com/huggingface/datasets/issues/7196

|

[] |

alex-hh

| 1

|

explosion/spaCy

|

nlp

| 13,252

|

Vocab Issue

|


## How to reproduce the behaviour


I am trying to find a word in the vocab by testing the example provided in the documentation. However, I see that the word "apple" is not in the vocab. Am I doing something wrong here? How can I check whether a word exists in the vocab?

## Your Environment


## Info about spaCy

- **spaCy version:** 3.7.2

- **Platform:** Linux-5.15.133+-x86_64-with-glibc2.31

- **Python version:** 3.10.12

- **Pipelines:** en_core_web_sm (3.7.1), en_core_web_lg (3.7.1)

|

closed

|

2024-01-19T13:33:16Z

|

2024-02-26T00:02:24Z

|

https://github.com/explosion/spaCy/issues/13252

|

[

"docs",

"feat / vectors"

] |

lordsoffallen

| 4

|

ray-project/ray

|

machine-learning

| 51,272

|

[core][gpu-objects] Driver tries to get the data from in-actor store

|

### Description

The driver is not allowed to be in an NCCL group in Ray GPU objects. Hence, if the driver wants to retrieve data from the in-actor store, we can move the data from the in-actor store to the object store so that the driver can access it.
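A toy sketch of the proposed flow, under loud assumptions: `InActorStore`, `ObjectStore`, and `get_for_driver` are hypothetical names, not Ray APIs, and plain Python values stand in for GPU tensors. Since the driver cannot join the NCCL group, the value is moved out of the actor's store into the shared object store on first access and read from there afterwards.

```python
from typing import Any, Dict


class InActorStore:
    """Hypothetical per-actor store (stands in for GPU-resident objects)."""

    def __init__(self) -> None:
        self._objects: Dict[str, Any] = {}

    def put(self, obj_id: str, value: Any) -> None:
        self._objects[obj_id] = value

    def pop(self, obj_id: str) -> Any:
        return self._objects.pop(obj_id)

    def __contains__(self, obj_id: str) -> bool:
        return obj_id in self._objects


class ObjectStore:
    """Hypothetical shared store that the driver can read without NCCL."""

    def __init__(self) -> None:
        self._objects: Dict[str, Any] = {}

    def put(self, obj_id: str, value: Any) -> None:
        self._objects[obj_id] = value

    def get(self, obj_id: str) -> Any:
        return self._objects[obj_id]

    def __contains__(self, obj_id: str) -> bool:
        return obj_id in self._objects


def get_for_driver(obj_id: str, actor_store: InActorStore, object_store: ObjectStore) -> Any:
    # The driver is not in the NCCL group, so instead of a collective transfer
    # the value is moved once from the in-actor store into the object store.
    if obj_id not in object_store:
        object_store.put(obj_id, actor_store.pop(obj_id))
    return object_store.get(obj_id)


actor_store, object_store = InActorStore(), ObjectStore()
actor_store.put("tensor-1", [1.0, 2.0, 3.0])
value = get_for_driver("tensor-1", actor_store, object_store)
```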

### Use case

_No response_

|

open

|

2025-03-11T22:06:53Z

|

2025-03-21T22:09:42Z

|

https://github.com/ray-project/ray/issues/51272

|

[

"enhancement",

"P1",

"core",

"gpu-objects"

] |

kevin85421

| 0

|

autokey/autokey

|

automation

| 368

|

v0.95.10 randomly consumes 100% of the CPU and also silently crashes

|

## Classification:

Bug and crash

## Reproducibility:

sometimes

## Version

AutoKey version: 0.95.10-1

Used GUI (Gtk, Qt, or both):

both

AUR repo: https://aur.archlinux.org/packages/autokey

Linux Distribution:

Manjaro Linux XFCE 64 bit, kernel 4.14.170-1-MANJARO

## What happens:

Yesterday I updated AutoKey from 0.95.9-1 to 0.95.10-1.

Since then I have encountered some issues. The first is that it randomly starts driving CPU usage up to 100% (without my using the GUI or anything else).

The second is that it also randomly stops working (I cannot use the shortcuts and scripts) because it silently crashes. I found the following in the journal logs:

```

feb 18 06:59:07 systemd-coredump[2611]: Process 1166 (autokey-gtk) of user 1000 dumped core.

Stack trace of thread 2606:

#0 0x00007fb454f33f25 raise (libc.so.6)

#1 0x00007fb454f1d897 abort (libc.so.6)

#2 0x00007fb454f1d767 __assert_fail_base.cold (libc.so.6)

#3 0x00007fb454f2c526 __assert_fail (libc.so.6)

#4 0x00007fb4530229f9 n/a (libX11.so.6)

#5 0x00007fb453022a9e n/a (libX11.so.6)

#6 0x00007fb453022f12 _XReadEvents (libX11.so.6)

#7 0x00007fb45300a356 XIfEvent (libX11.so.6)

#8 0x00007fb45288626f gdk_x11_get_server_time (libgdk-3.so.0)

#9 0x00007fb451fd38a4 n/a (libgtk-3.so.0)

#10 0x00007fb451fd3aa8 n/a (libgtk-3.so.0)

#11 0x00007fb453794d5a g_closure_invoke (libgobject-2.0.so.0)

#12 0x00007fb4537829e4 n/a (libgobject-2.0.so.0)

#13 0x00007fb45378698a g_signal_emit_valist (libgobject-2.0.so.0)

#14 0x00007fb4537877f0 g_signal_emit (libgobject-2.0.so.0)

#15 0x00007fb451fd1cdd gtk_widget_realize (libgtk-3.so.0)

#16 0x00007fb45207102e n/a (libgtk-3.so.0)

#17 0x00007fb453794d5a g_closure_invoke (libgobject-2.0.so.0)

#18 0x00007fb4537829e4 n/a (libgobject-2.0.so.0)

#19 0x00007fb45378698a g_signal_emit_valist (libgobject-2.0.so.0)

#20 0x00007fb4537877f0 g_signal_emit (libgobject-2.0.so.0)

#21 0x00007fb45208b5da gtk_widget_show (libgtk-3.so.0)

#22 0x00007fb452027c97 gtk_status_icon_set_visible (libgtk-3.so.0)

#23 0x00007fb4504c6c0e n/a (libappindicator3.so.1)

#24 0x00007fb453794d5a g_closure_invoke (libgobject-2.0.so.0)

#25 0x00007fb45378288e n/a (libgobject-2.0.so.0)

#26 0x00007fb45378698a g_signal_emit_valist (libgobject-2.0.so.0)

#27 0x00007fb4537877f0 g_signal_emit (libgobject-2.0.so.0)

#28 0x00007fb4504c555c app_indicator_set_status (libappindicator3.so.1)

#29 0x00007fb45375e69a ffi_call_unix64 (libffi.so.6)

#30 0x00007fb45375dfb6 ffi_call (libffi.so.6)

#31 0x00007fb4537fb392 n/a (_gi.cpython-38-x86_64-linux-gnu.so)

#32 0x00007fb4537fa972 n/a (_gi.cpython-38-x86_64-linux-gnu.so)

#33 0x00007fb45380049e n/a (_gi.cpython-38-x86_64-linux-gnu.so)

#34 0x00007fb454c6fad2 _PyObject_MakeTpCall (libpython3.8.so.1.0)

#35 0x00007fb454d2c7f4 _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#36 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#37 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#38 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#39 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#40 0x00007fb454d14e3b _PyEval_EvalCodeWithName (libpython3.8.so.1.0)

#41 0x00007fb454d1624b _PyFunction_Vectorcall (libpython3.8.so.1.0)

#42 0x00007fb454c6730d PyObject_Call (libpython3.8.so.1.0)

#43 0x00007fb454d29d03 _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#44 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#45 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#46 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#47 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#48 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#49 0x00007fb454d16a7b n/a (libpython3.8.so.1.0)

#50 0x00007fb454c6730d PyObject_Call (libpython3.8.so.1.0)

#51 0x00007fb454d7c4e1 n/a (libpython3.8.so.1.0)

#52 0x00007fb454d368f4 n/a (libpython3.8.so.1.0)

#53 0x00007fb454b204cf start_thread (libpthread.so.0)

#54 0x00007fb454ff72d3 __clone (libc.so.6)

Stack trace of thread 1166:

#0 0x00007fb454fe84cf write (libc.so.6)

#1 0x00007fb454ba2380 _Py_write_noraise (libpython3.8.so.1.0)

#2 0x00007fb454bb0d60 n/a (libpython3.8.so.1.0)

#3 0x00007fb454f33fb0 __restore_rt (libc.so.6)

#4 0x00007fb454f33f25 raise (libc.so.6)

#5 0x00007fb454f1d897 abort (libc.so.6)

#6 0x00007fb454f1d767 __assert_fail_base.cold (libc.so.6)

#7 0x00007fb454f2c526 __assert_fail (libc.so.6)

#8 0x00007fb453022984 n/a (libX11.so.6)

#9 0x00007fb453022add n/a (libX11.so.6)

#10 0x00007fb453022d92 _XEventsQueued (libX11.so.6)

#11 0x00007fb453014782 XPending (libX11.so.6)

#12 0x00007fb45289aa00 n/a (libgdk-3.so.0)

#13 0x00007fb453910a00 g_main_context_prepare (libglib-2.0.so.0)

#14 0x00007fb453911046 n/a (libglib-2.0.so.0)

#15 0x00007fb4539120c3 g_main_loop_run (libglib-2.0.so.0)

#16 0x00007fb4521cb9ef gtk_main (libgtk-3.so.0)

#17 0x00007fb45375e69a ffi_call_unix64 (libffi.so.6)

#18 0x00007fb45375dfb6 ffi_call (libffi.so.6)

#19 0x00007fb4537fb392 n/a (_gi.cpython-38-x86_64-linux-gnu.so)

#20 0x00007fb4537fa972 n/a (_gi.cpython-38-x86_64-linux-gnu.so)

#21 0x00007fb454c673a0 PyObject_Call (libpython3.8.so.1.0)

#22 0x00007fb454d29d03 _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#23 0x00007fb454d14e3b _PyEval_EvalCodeWithName (libpython3.8.so.1.0)

#24 0x00007fb454d1624b _PyFunction_Vectorcall (libpython3.8.so.1.0)

#25 0x00007fb454d2c3c8 _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#26 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#27 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#28 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#29 0x00007fb454d27c8c _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#30 0x00007fb454d14e3b _PyEval_EvalCodeWithName (libpython3.8.so.1.0)

#31 0x00007fb454d9e3d3 PyEval_EvalCode (libpython3.8.so.1.0)

#32 0x00007fb454d9e428 n/a (libpython3.8.so.1.0)

#33 0x00007fb454da2623 n/a (libpython3.8.so.1.0)

#34 0x00007fb454c3d3e7 PyRun_FileExFlags (libpython3.8.so.1.0)

#35 0x00007fb454c47f4a PyRun_SimpleFileExFlags (libpython3.8.so.1.0)

#36 0x00007fb454daf8be Py_RunMain (libpython3.8.so.1.0)

#37 0x00007fb454daf9a9 Py_BytesMain (libpython3.8.so.1.0)

#38 0x00007fb454f1f153 __libc_start_main (libc.so.6)

#39 0x000055ea57db605e _start (python3.8)

Stack trace of thread 1256:

#0 0x00007fb454fec9ef __poll (libc.so.6)

#1 0x00007fb453911120 n/a (libglib-2.0.so.0)

#2 0x00007fb4539111f1 g_main_context_iteration (libglib-2.0.so.0)

#3 0x00007fb453911242 n/a (libglib-2.0.so.0)

#4 0x00007fb4538edbb1 n/a (libglib-2.0.so.0)

#5 0x00007fb454b204cf start_thread (libpthread.so.0)

#6 0x00007fb454ff72d3 __clone (libc.so.6)

Stack trace of thread 1263:

#0 0x00007fb454feee7b __select (libc.so.6)

#1 0x00007fb454d7505e n/a (libpython3.8.so.1.0)

#2 0x00007fb454c75f4f n/a (libpython3.8.so.1.0)

#3 0x00007fb454d2c3c8 _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#4 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#5 0x00007fb454d16a7b n/a (libpython3.8.so.1.0)

#6 0x00007fb454c6730d PyObject_Call (libpython3.8.so.1.0)

#7 0x00007fb454d29d03 _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#8 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#9 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#10 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#11 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#12 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#13 0x00007fb454d16a7b n/a (libpython3.8.so.1.0)

#14 0x00007fb454c6730d PyObject_Call (libpython3.8.so.1.0)

#15 0x00007fb454d7c4e1 n/a (libpython3.8.so.1.0)

#16 0x00007fb454d368f4 n/a (libpython3.8.so.1.0)

#17 0x00007fb454b204cf start_thread (libpthread.so.0)

#18 0x00007fb454ff72d3 __clone (libc.so.6)

Stack trace of thread 1267:

#0 0x00007fb454fec9ef __poll (libc.so.6)

#1 0x00007fb4541206d4 n/a (select.cpython-38-x86_64-linux-gnu.so)

#2 0x00007fb454c76104 n/a (libpython3.8.so.1.0)

#3 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#4 0x00007fb454d14e3b _PyEval_EvalCodeWithName (libpython3.8.so.1.0)

#5 0x00007fb454d1624b _PyFunction_Vectorcall (libpython3.8.so.1.0)

#6 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#7 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#8 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#9 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#10 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#11 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#12 0x00007fb454d16a7b n/a (libpython3.8.so.1.0)

#13 0x00007fb454c6730d PyObject_Call (libpython3.8.so.1.0)

#14 0x00007fb454d7c4e1 n/a (libpython3.8.so.1.0)

#15 0x00007fb454d368f4 n/a (libpython3.8.so.1.0)

#16 0x00007fb454b204cf start_thread (libpthread.so.0)

#17 0x00007fb454ff72d3 __clone (libc.so.6)

Stack trace of thread 1257:

#0 0x00007fb454fec9ef __poll (libc.so.6)

#1 0x00007fb453911120 n/a (libglib-2.0.so.0)

#2 0x00007fb4539120c3 g_main_loop_run (libglib-2.0.so.0)

#3 0x00007fb4535fcbc8 n/a (libgio-2.0.so.0)

#4 0x00007fb4538edbb1 n/a (libglib-2.0.so.0)

#5 0x00007fb454b204cf start_thread (libpthread.so.0)

#6 0x00007fb454ff72d3 __clone (libc.so.6)

Stack trace of thread 1265:

#0 0x00007fb454b29704 do_futex_wait.constprop.0 (libpthread.so.0)

#1 0x00007fb454b297f8 __new_sem_wait_slow.constprop.0 (libpthread.so.0)

#2 0x00007fb454c83a0e PyThread_acquire_lock_timed (libpython3.8.so.1.0)

#3 0x00007fb454d7c9a1 n/a (libpython3.8.so.1.0)

#4 0x00007fb454d95e1b n/a (libpython3.8.so.1.0)

#5 0x00007fb454d0bac9 n/a (libpython3.8.so.1.0)

#6 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#7 0x00007fb454d14e3b _PyEval_EvalCodeWithName (libpython3.8.so.1.0)

#8 0x00007fb454d1624b _PyFunction_Vectorcall (libpython3.8.so.1.0)

#9 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#10 0x00007fb454d14e3b _PyEval_EvalCodeWithName (libpython3.8.so.1.0)

#11 0x00007fb454d1624b _PyFunction_Vectorcall (libpython3.8.so.1.0)

#12 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#13 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#14 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#15 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#16 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#17 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#18 0x00007fb454d16a7b n/a (libpython3.8.so.1.0)

#19 0x00007fb454c6730d PyObject_Call (libpython3.8.so.1.0)

#20 0x00007fb454d7c4e1 n/a (libpython3.8.so.1.0)

#21 0x00007fb454d368f4 n/a (libpython3.8.so.1.0)

#22 0x00007fb454b204cf start_thread (libpthread.so.0)

#23 0x00007fb454ff72d3 __clone (libc.so.6)

Stack trace of thread 1264:

#0 0x00007fb454feee7b __select (libc.so.6)

#1 0x00007fb45412043e n/a (select.cpython-38-x86_64-linux-gnu.so)

#2 0x00007fb454c75e37 n/a (libpython3.8.so.1.0)

#3 0x00007fb454d2c3c8 _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#4 0x00007fb454d14e3b _PyEval_EvalCodeWithName (libpython3.8.so.1.0)

#5 0x00007fb454d16892 n/a (libpython3.8.so.1.0)

#6 0x00007fb454d28a9c _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#7 0x00007fb454d167a6 n/a (libpython3.8.so.1.0)

#8 0x00007fb454d2c3c8 _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#9 0x00007fb454d14e3b _PyEval_EvalCodeWithName (libpython3.8.so.1.0)

#10 0x00007fb454d1624b _PyFunction_Vectorcall (libpython3.8.so.1.0)

#11 0x00007fb454c67418 PyObject_Call (libpython3.8.so.1.0)

#12 0x00007fb454d29d03 _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#13 0x00007fb454d14e3b _PyEval_EvalCodeWithName (libpython3.8.so.1.0)

#14 0x00007fb454d1624b _PyFunction_Vectorcall (libpython3.8.so.1.0)

#15 0x00007fb454d179ab n/a (libpython3.8.so.1.0)

#16 0x00007fb454c6f962 _PyObject_MakeTpCall (libpython3.8.so.1.0)

#17 0x00007fb454d2c9f1 _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#18 0x00007fb454d167a6 n/a (libpython3.8.so.1.0)

#19 0x00007fb454d2c3c8 _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#20 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#21 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#22 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#23 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#24 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#25 0x00007fb454d16a7b n/a (libpython3.8.so.1.0)

#26 0x00007fb454c6730d PyObject_Call (libpython3.8.so.1.0)

#27 0x00007fb454d7c4e1 n/a (libpython3.8.so.1.0)

#28 0x00007fb454d368f4 n/a (libpython3.8.so.1.0)

#29 0x00007fb454b204cf start_thread (libpthread.so.0)

#30 0x00007fb454ff72d3 __clone (libc.so.6)

Stack trace of thread 1262:

#0 0x00007fb454b29704 do_futex_wait.constprop.0 (libpthread.so.0)

#1 0x00007fb454b297f8 __new_sem_wait_slow.constprop.0 (libpthread.so.0)

#2 0x00007fb454c83a0e PyThread_acquire_lock_timed (libpython3.8.so.1.0)

#3 0x00007fb454d7c9a1 n/a (libpython3.8.so.1.0)

#4 0x00007fb454d95e1b n/a (libpython3.8.so.1.0)

#5 0x00007fb454d0bac9 n/a (libpython3.8.so.1.0)

#6 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#7 0x00007fb454d14e3b _PyEval_EvalCodeWithName (libpython3.8.so.1.0)

#8 0x00007fb454d1624b _PyFunction_Vectorcall (libpython3.8.so.1.0)

#9 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#10 0x00007fb454d14e3b _PyEval_EvalCodeWithName (libpython3.8.so.1.0)

#11 0x00007fb454d1624b _PyFunction_Vectorcall (libpython3.8.so.1.0)

#12 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#13 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#14 0x00007fb454d16a7b n/a (libpython3.8.so.1.0)

#15 0x00007fb454c6730d PyObject_Call (libpython3.8.so.1.0)

#16 0x00007fb454d29d03 _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#17 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#18 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#19 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#20 0x00007fb454d280ce _PyEval_EvalFrameDefault (libpython3.8.so.1.0)

#21 0x00007fb454d1606d _PyFunction_Vectorcall (libpython3.8.so.1.0)

#22 0x00007fb454d16a7b n/a (libpython3.8.so.1.0)

#23 0x00007fb454c6730d PyObject_Call (libpython3.8.so.1.0)

#24 0x00007fb454d7c4e1 n/a (libpython3.8.so.1.0)

#25 0x00007fb454d368f4 n/a (libpython3.8.so.1.0)

#26 0x00007fb454b204cf start_thread (libpthread.so.0)

#27 0x00007fb454ff72d3 __clone (libc.so.6)

```

When I restart it, the issues described above occur again. Furthermore, even though the scripts work properly, AutoKey sometimes displays a desktop notification such as "This "scriptname" has encountered an error" or something similar.

For example, with this very simple script:

`output = system.exec_command("gedit '/home/dave/Documents/textfile'")`

|

closed

|

2020-02-18T07:10:01Z

|

2023-05-07T19:45:09Z

|

https://github.com/autokey/autokey/issues/368

|

[] |

MR-Diamond

| 8

|

plotly/dash

|

data-science

| 2,803

|

[Feature Request] Global set_props in backend callbacks.

|

Add a global `dash.set_props` to be used in callbacks to set arbitrary props not defined in the callbacks outputs, similar to the clientside `dash_clientside.set_props`.

Example:

```
from dash import Dash, html, Input, Output, set_props

app = Dash(__name__)

app.layout = html.Div([
    html.Div(id="output"),
    html.Div(id="secondary-output"),
    html.Button("click", id="clicker"),
])


@app.callback(
    Output("output", "children"),
    Input("clicker", "n_clicks"),
    prevent_initial_call=True,
)
def on_click(n_clicks):
    # Proposed API: set a prop that is not declared as a callback Output.
    set_props("secondary-output", {"children": "secondary"})
    return f"Clicked {n_clicks} times"
```

|

closed

|

2024-03-20T16:26:14Z

|

2024-05-03T13:20:34Z

|

https://github.com/plotly/dash/issues/2803

|

[] |

T4rk1n

| 2

|

ultralytics/yolov5

|

pytorch

| 13,141

|

how to convert pt to onnx to trt

|

### Search before asking

- [X] I have searched the YOLOv5 [issues](https://github.com/ultralytics/yolov5/issues) and [discussions](https://github.com/ultralytics/yolov5/discussions) and found no similar questions.

### Question

How do I convert a .pt model to ONNX and then to TensorRT?

### Additional

I'm doing this:

`python export.py --weights best.pt --include onnx --opset 12`

then `trtexec --onnx=best.onnx --saveEngine=best.trt`

When I then try to load the model, I get this:

I used to be able to do it, but six months later I've forgotten how I did it.

Please help.

|

closed

|

2024-06-27T03:11:08Z

|

2024-12-16T10:28:50Z

|

https://github.com/ultralytics/yolov5/issues/13141

|

[

"question",

"Stale"

] |

gdfapokgdpafog

| 8

|

pyro-ppl/numpyro

|

numpy

| 1,744

|

Adding HMCECS proxy functions

|

Hi,

I'm working on a neural proxy function for HMCECS and have a Taylor expansion proxy with an approximate Hessian. However, the file is becoming somewhat unruly as the proxies are currently in `hmc_gibbs.py`. If I move (only) the proxy functions to a separate file under `contrib` and keep using the static method interface for HMCECS (i.e., `HMCECS.taylor_proxy`), there is no change to the user interface, and I think it would be easier to work with.
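The proposed layout can be sketched in a single file as below. `taylor_proxy` here is a placeholder with a made-up signature and toy body, standing in for a function that would live in a separate `contrib` module (the module path is an assumption), and `HMCECS` is a stand-in class. Binding the function on the class with `staticmethod` keeps the `HMCECS.taylor_proxy` spelling unchanged for users.

```python
# --- would live in a separate module, e.g. a hypothetical numpyro/contrib/ecs_proxies.py ---
def taylor_proxy(reference_params):
    """Placeholder proxy factory; the real signature and body are not shown here."""
    def proxy(params):
        # Toy stand-in: squared distance of params from the reference point.
        return sum((p - r) ** 2 for p, r in zip(params, reference_params))
    return proxy


# --- hmc_gibbs.py keeps the existing user-facing interface ---
class HMCECS:
    # Re-export the module-level function as a static method so that
    # `HMCECS.taylor_proxy(...)` keeps working after the move.
    taylor_proxy = staticmethod(taylor_proxy)


proxy = HMCECS.taylor_proxy([0.0, 1.0])
```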

Let me know what you think.

Edit: the change would look like [this](https://github.com/aleatory-science/numpyro/pull/3) (PR moved to aleatory).

|

closed

|

2024-02-23T11:08:06Z

|

2024-02-28T08:07:15Z

|

https://github.com/pyro-ppl/numpyro/issues/1744

|

[

"discussion"

] |

OlaRonning

| 2

|

neuml/txtai

|

nlp

| 585

|

Add support for binary indexes to Faiss ANN

|

This change will add support for [Faiss binary indexes](https://github.com/facebookresearch/faiss/wiki/Binary-indexes). Binary indexes will be used to index scalar quantized data.
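Faiss binary indexes store vectors as packed bit codes and compare them by Hamming distance. As a rough pure-Python illustration of that idea only (not txtai's or Faiss's implementation), the sketch below applies 1-bit scalar quantization, where a bit is set when a component is positive (the threshold is an assumption), and runs a brute-force Hamming search, similar in spirit to a flat binary index.

```python
from typing import List, Sequence, Tuple


def binarize(vector: Sequence[float]) -> int:
    """1-bit quantization: bit i is set when component i is positive."""
    code = 0
    for i, value in enumerate(vector):
        if value > 0:
            code |= 1 << i
    return code


def hamming(a: int, b: int) -> int:
    # Number of differing bits between two packed codes.
    return bin(a ^ b).count("1")


class BinaryIndex:
    """Brute-force index over packed bit codes."""

    def __init__(self) -> None:
        self.codes: List[int] = []

    def add(self, vectors: Sequence[Sequence[float]]) -> None:
        self.codes.extend(binarize(v) for v in vectors)

    def search(self, query: Sequence[float], k: int) -> List[Tuple[int, int]]:
        # Returns (id, distance) pairs, nearest first.
        q = binarize(query)
        scored = sorted((hamming(q, c), i) for i, c in enumerate(self.codes))
        return [(i, d) for d, i in scored[:k]]


index = BinaryIndex()
index.add([[1.0, -1.0, 1.0], [-1.0, -1.0, -1.0], [1.0, 1.0, 1.0]])
print(index.search([1.0, -1.0, 0.5], k=2))  # [(0, 0), (2, 1)]
```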

|

closed

|

2023-10-27T09:52:56Z

|

2023-10-27T19:21:38Z

|

https://github.com/neuml/txtai/issues/585

|

[] |

davidmezzetti

| 1

|

tfranzel/drf-spectacular

|

rest-api

| 1,326

|

Weird issue when generating the schema

|

**Describe the bug**

I'm having a hard time with this: when I generate the schema from the Swagger UI, I get the correct schema (including the filter fields that I defined in a FilterSet class).

The Swagger UI also gives me a snippet for making the request, but when I run that request the schema is different, and I don't really know what is happening.

**To Reproduce**

Add a filter class to a view and get the schema from the UI (check the generated schema). Then copy the snippet provided by the Swagger UI, save the curl response, and compare the two schemas: they are different.

**Expected behavior**

I expect the schema file to be the same whether I get it from the Swagger UI or through a curl request.

Thank you so much for your help, and for this amazing project.

|

closed

|

2024-11-07T07:53:41Z

|

2024-11-10T13:16:58Z

|

https://github.com/tfranzel/drf-spectacular/issues/1326

|

[] |

yoelfme

| 7

|

lepture/authlib

|

flask

| 328

|

PKCE check

|

https://github.com/lepture/authlib/blob/51261de795cddb93d5e5206d8206bfd87917c5b3/authlib/oauth2/rfc6749/grants/authorization_code.py#L207

Hi,

Here, when the request is a "PKCE token request" (the client is public and no client credentials are sent), shouldn't we skip client authentication and just check `client_id` instead? Or am I missing something in the request?

Thanks in advance.

Request parameters:

- `client_id`
- `scope`
- `redirect_uri`
- `state`
- `code`
- `code_verifier`
- `grant_type=authorization_code`

Response:

`{"error": "invalid_client", "state": "345tfdgsut7i"}`
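For context on the `code_verifier` parameter: with the `S256` method from RFC 7636, the server recomputes the challenge as BASE64URL(SHA256(code_verifier)) with padding stripped and compares it with the `code_challenge` sent in the authorization request. A minimal stdlib sketch of that check (the function names are illustrative, not Authlib's API), using the example verifier and challenge from RFC 7636 Appendix B:

```python
import base64
import hashlib
import hmac


def s256_challenge(code_verifier: str) -> str:
    # BASE64URL-ENCODE(SHA256(ASCII(code_verifier))) with '=' padding stripped.
    digest = hashlib.sha256(code_verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def verify_pkce(code_verifier: str, code_challenge: str) -> bool:
    # Constant-time comparison of the recomputed and stored challenges.
    return hmac.compare_digest(s256_challenge(code_verifier), code_challenge)


verifier = "dBjftJeZ4CVP-mB92K27uhbUJU1p1r_wW1gFWFOEjXk"
print(s256_challenge(verifier))  # E9Melhoa2OwvFrEMTJguCHaoeK1t8URWbuGJSstw-cM
```

This covers only the PKCE comparison itself, not how the token endpoint decides whether client authentication is required.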

|

closed

|

2021-03-05T21:49:14Z

|

2021-03-06T03:56:58Z

|

https://github.com/lepture/authlib/issues/328

|

[] |

shahabGh77

| 1

|

custom-components/pyscript

|

jupyter

| 492

|

Response Data

|

With Home Assistant's recent introduction of service calls that can respond with data, I am curious whether this is a planned feature for pyscript.

|

closed

|

2023-07-18T20:48:48Z

|

2023-07-30T18:02:41Z

|

https://github.com/custom-components/pyscript/issues/492

|

[] |

Sian-Lee-SA

| 1

|

gradio-app/gradio

|

python

| 10,711

|

Should not try to get_node_path() if SSR mode is disabled.

|

### Describe the bug

In Gradio code, the lines https://github.com/gradio-app/gradio/blob/54fd90703e74bd793668dda62fd87c4ef2cfff03/gradio/blocks.py#L2560 and https://github.com/gradio-app/gradio/blob/54fd90703e74bd793668dda62fd87c4ef2cfff03/gradio/routes.py#L1737 call `get_node_path()` prematurely. The call to `get_node_path()` should only happen if SSR mode is set to true.

This matters because the application fails to launch if `get_node_path()` fails. The call fails when it cannot launch a subprocess (it calls `which` to find the path of `node`) because subprocesses are not allowed. Note that SSR mode is set to false and is not required. This is a very niche use case, but it can happen, for instance, if the app is running inside a trusted platform module where forking new processes fails.

I suggest moving the following code inside the `if self.ssr_mode:` block:

```
self.node_path = os.environ.get(
    "GRADIO_NODE_PATH", "" if wasm_utils.IS_WASM else get_node_path()
)
```

I can submit a pull request if this is okay.
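One detail worth noting: `os.environ.get(key, default)` evaluates its default argument before the call, so in the snippet above `get_node_path()` runs even when `GRADIO_NODE_PATH` is set. A minimal sketch of a guarded, lazy lookup, assuming a hypothetical `resolve_node_path` helper (not Gradio's code) and using `shutil.which("node")` in place of `get_node_path()`; `shutil.which` searches `PATH` in-process instead of spawning `which`. Returning `None` when SSR is off is also an assumption.

```python
import os
import shutil
from typing import Callable, Optional


def resolve_node_path(
    ssr_mode: bool,
    is_wasm: bool = False,
    lookup: Callable[[], Optional[str]] = lambda: shutil.which("node"),
) -> Optional[str]:
    """Only look up the Node.js binary when SSR mode actually needs it."""
    if not ssr_mode:
        # SSR disabled: never touch PATH or spawn anything.
        return None
    env_path = os.environ.get("GRADIO_NODE_PATH")
    if env_path is not None:
        # Explicit override wins; the lookup below is never evaluated.
        return env_path
    return "" if is_wasm else lookup()
```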

### Have you searched existing issues? 🔎

- [x] I have searched and found no existing issues

### Reproduction

It is less to do with the code that launches Gradio and more to do with the environment where it is launched.

### Screenshot

_No response_

### Logs

```shell

```

### System Info

```shell

Gradio Environment Information:

------------------------------

Operating System: Linux

gradio version: 5.20.0

gradio_client version: 1.7.2

------------------------------------------------

gradio dependencies in your environment:

aiofiles: 23.2.1

anyio: 4.8.0

audioop-lts is not installed.

fastapi: 0.115.11

ffmpy: 0.5.0

gradio-client==1.7.2 is not installed.

groovy: 0.1.2

httpx: 0.28.1

huggingface-hub: 0.29.1

jinja2: 3.1.5

markupsafe: 2.1.5

numpy: 2.0.2

orjson: 3.10.15

packaging: 24.2

pandas: 2.2.2

pillow: 11.1.0

pydantic: 2.10.6

pydub: 0.25.1

python-multipart: 0.0.20

pyyaml: 6.0.2

ruff: 0.9.9

safehttpx: 0.1.6

semantic-version: 2.10.0

starlette: 0.46.0

tomlkit: 0.13.2

typer: 0.15.1

typing-extensions: 4.12.2

urllib3: 2.3.0

uvicorn: 0.34.0

authlib; extra == 'oauth' is not installed.

itsdangerous; extra == 'oauth' is not installed.

gradio_client dependencies in your environment:

fsspec: 2025.2.0

httpx: 0.28.1

huggingface-hub: 0.29.1

packaging: 24.2

typing-extensions: 4.12.2

websockets: 15.0

```

### Severity

Blocking usage of gradio

|

closed

|

2025-03-03T03:12:59Z

|

2025-03-04T03:11:47Z

|

https://github.com/gradio-app/gradio/issues/10711

|

[

"bug",

"good first issue"

] |

anirbanbasu

| 2

|

scikit-multilearn/scikit-multilearn

|

scikit-learn

| 194

|

Getting ValueError: Can only tuple-index with a MultiIndex

|

I am trying to stratify my multi-label data. `total` is all my data and contains 20 columns: the first column is the text (X), and the remaining 19 columns are labels (each column represents a class and is set to 1 if the class is present for an example, else 0). `total` was loaded from a CSV file, if this information is needed.

```

from skmultilearn.model_selection import iterative_train_test_split

X_train, y_train, X_test, y_test = iterative_train_test_split(total.iloc[:,0], total.iloc[:,1:], test_size = 0.5)

```

I am getting the following error:

```

ValueError: Can only tuple-index with a MultiIndex

```

Here is the traceback:

```

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-9-98829124e697> in <module>

1 from skmultilearn.model_selection import iterative_train_test_split

----> 2 X_train, y_train, X_test, y_test = iterative_train_test_split(total.iloc[:,0], total.iloc[:,1:], test_size = 0.5)

~/virtualenvs/anaconda3/envs/tf1/lib/python3.6/site-packages/skmultilearn/model_selection/iterative_stratification.py in iterative_train_test_split(X, y, test_size)

93 train_indexes, test_indexes = next(stratifier.split(X, y))

94

---> 95 X_train, y_train = X[train_indexes, :], y[train_indexes, :]

96 X_test, y_test = X[test_indexes, :], y[test_indexes, :]

97

~/virtualenvs/anaconda3/envs/tf1/lib/python3.6/site-packages/pandas/core/series.py in __getitem__(self, key)

1111 key = check_bool_indexer(self.index, key)

1112

-> 1113 return self._get_with(key)

1114

1115 def _get_with(self, key):

~/virtualenvs/anaconda3/envs/tf1/lib/python3.6/site-packages/pandas/core/series.py in _get_with(self, key)

1125 elif isinstance(key, tuple):

1126 try:

-> 1127 return self._get_values_tuple(key)

1128 except Exception:

1129 if len(key) == 1:

~/virtualenvs/anaconda3/envs/tf1/lib/python3.6/site-packages/pandas/core/series.py in _get_values_tuple(self, key)

1170

1171 if not isinstance(self.index, MultiIndex):

-> 1172 raise ValueError("Can only tuple-index with a MultiIndex")

1173

1174 # If key is contained, would have returned by now

ValueError: Can only tuple-index with a MultiIndex

```

Thanks in advance.
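The traceback shows `iterative_train_test_split` indexing its inputs as `X[train_indexes, :]`, which works on 2-D NumPy arrays but not on a pandas Series such as `total.iloc[:,0]`. A minimal sketch, assuming NumPy is available, that converts both inputs to 2-D arrays before the split (`to_2d_arrays` is a hypothetical helper):

```python
import numpy as np


def to_2d_arrays(X, y):
    """Convert feature/label containers (lists, Series, DataFrames) to 2-D arrays."""
    X_arr = np.asarray(X)
    if X_arr.ndim == 1:
        # A single text column becomes shape (n_samples, 1).
        X_arr = X_arr.reshape(-1, 1)
    y_arr = np.asarray(y)
    if y_arr.ndim == 1:
        y_arr = y_arr.reshape(-1, 1)
    return X_arr, y_arr


X_arr, y_arr = to_2d_arrays(["a", "b", "c"], [[1, 0], [0, 1], [1, 1]])
rows = [0, 2]
print(X_arr[rows, :].tolist())  # [['a'], ['c']]
```

With the data from the report, the call would become `iterative_train_test_split(*to_2d_arrays(total.iloc[:, 0], total.iloc[:, 1:]), test_size=0.5)`, unpacked in the same `X_train, y_train, X_test, y_test` order as in the original code.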

|

closed

|

2019-12-30T07:09:55Z

|

2023-03-14T17:05:30Z

|

https://github.com/scikit-multilearn/scikit-multilearn/issues/194

|

[] |

adiv5

| 6

|

tensorflow/tensor2tensor

|

machine-learning

| 1,914

|

Error: AttributeError: module 'tensorflow.compat.v2.__internal__' has no attribute 'monitoring'

|

### How to resolve this error?

I am running this code on Jupyter notebook.

I have imported the `tensor2tensor` and `tensorflow` packages, but this error still arises. Can anyone help explain the reason?

```

from tensor2tensor.data_generators.problem import Problem

...

```

```

AttributeError Traceback (most recent call last)

Input In [13], in <cell line: 11>()

9 from sklearn.metrics import mean_squared_error

10 from tensor2tensor.utils import contrib

---> 11 from tensor2tensor.data_generators.problem import Problem

12 from tensor2tensor.data_generators.text_encoder import TokenTextEncoder

13 from tqdm import tqdm

File ~/anaconda3/envs/tf-env/lib/python3.9/site-packages/tensor2tensor/data_generators/problem.py:27, in <module>

24 import random

25 import six

---> 27 from tensor2tensor.data_generators import generator_utils

28 from tensor2tensor.data_generators import text_encoder

29 from tensor2tensor.utils import contrib

File ~/anaconda3/envs/tf-env/lib/python3.9/site-packages/tensor2tensor/data_generators/generator_utils.py:1171, in <module>

1166 break

1167 return tmp_dir

1170 def tfrecord_iterator_for_problem(problem, data_dir,

-> 1171 dataset_split=tf.estimator.ModeKeys.TRAIN):

1172 """Iterate over the records on disk for the Problem."""

1173 filenames = tf.gfile.Glob(problem.filepattern(data_dir, mode=dataset_split))

File ~/anaconda3/envs/tf-env/lib/python3.9/site-packages/tensorflow/python/util/lazy_loader.py:62, in LazyLoader.__getattr__(self, item)

61 def __getattr__(self, item):

---> 62 module = self._load()

63 return getattr(module, item)

File ~/anaconda3/envs/tf-env/lib/python3.9/site-packages/tensorflow/python/util/lazy_loader.py:45, in LazyLoader._load(self)

43 """Load the module and insert it into the parent's globals."""

44 # Import the target module and insert it into the parent's namespace

---> 45 module = importlib.import_module(self.__name__)

46 self._parent_module_globals[self._local_name] = module

48 # Emit a warning if one was specified

File ~/anaconda3/envs/tf-env/lib/python3.9/importlib/__init__.py:127, in import_module(name, package)

125 break

126 level += 1

--> 127 return _bootstrap._gcd_import(name[level:], package, level)

File ~/anaconda3/envs/tf-env/lib/python3.9/site-packages/tensorflow_estimator/python/estimator/api/_v1/estimator/__init__.py:10, in <module>

6 from __future__ import print_function as _print_function

8 import sys as _sys

---> 10 from tensorflow_estimator.python.estimator.api._v1.estimator import experimental

11 from tensorflow_estimator.python.estimator.api._v1.estimator import export

12 from tensorflow_estimator.python.estimator.api._v1.estimator import inputs

File ~/anaconda3/envs/tf-env/lib/python3.9/site-packages/tensorflow_estimator/__init__.py:10, in <module>

6 from __future__ import print_function as _print_function

8 import sys as _sys

---> 10 from tensorflow_estimator._api.v1 import estimator

12 del _print_function

14 from tensorflow.python.util import module_wrapper as _module_wrapper

File ~/anaconda3/envs/tf-env/lib/python3.9/site-packages/tensorflow_estimator/_api/v1/estimator/__init__.py:13, in <module>

11 from tensorflow_estimator._api.v1.estimator import export

12 from tensorflow_estimator._api.v1.estimator import inputs

---> 13 from tensorflow_estimator._api.v1.estimator import tpu

14 from tensorflow_estimator.python.estimator.canned.baseline import BaselineClassifier

15 from tensorflow_estimator.python.estimator.canned.baseline import BaselineEstimator

File ~/anaconda3/envs/tf-env/lib/python3.9/site-packages/tensorflow_estimator/_api/v1/estimator/tpu/__init__.py:14, in <module>

12 from tensorflow_estimator.python.estimator.tpu.tpu_config import RunConfig

13 from tensorflow_estimator.python.estimator.tpu.tpu_config import TPUConfig

---> 14 from tensorflow_estimator.python.estimator.tpu.tpu_estimator import TPUEstimator

15 from tensorflow_estimator.python.estimator.tpu.tpu_estimator import TPUEstimatorSpec

17 del _print_function

File ~/anaconda3/envs/tf-env/lib/python3.9/site-packages/tensorflow_estimator/python/estimator/tpu/tpu_estimator.py:108, in <module>

105 _WRAP_INPUT_FN_INTO_WHILE_LOOP = False

107 # Track the adoption of TPUEstimator

--> 108 _tpu_estimator_gauge = tf.compat.v2.__internal__.monitoring.BoolGauge(

109 '/tensorflow/api/tpu_estimator',

110 'Whether the program uses tpu estimator or not.')

112 if ops.get_to_proto_function('{}_{}'.format(_TPU_ESTIMATOR,

113 _ITERATIONS_PER_LOOP_VAR)) is None:

114 ops.register_proto_function(

115 '{}_{}'.format(_TPU_ESTIMATOR, _ITERATIONS_PER_LOOP_VAR),

116 proto_type=variable_pb2.VariableDef,

117 to_proto=resource_variable_ops._to_proto_fn, # pylint: disable=protected-access

118 from_proto=resource_variable_ops._from_proto_fn) # pylint: disable=protected-access

AttributeError: module 'tensorflow.compat.v2.__internal__' has no attribute 'monitoring'

...

```
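The failing line is inside `tensorflow_estimator`, which calls `tf.compat.v2.__internal__.monitoring`, an attribute the installed TensorFlow does not provide. A commonly reported cause is a `tensorflow-estimator` release that does not match the installed `tensorflow` (the two are normally released in matching major.minor lines). The sketch below is an assumption-based diagnostic, not part of tensor2tensor; the helper names are hypothetical.

```python
from importlib import metadata


def installed_version(dist_name):
    """Return the installed version string of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def major_minor(version):
    """Extract (major, minor) from a version string such as '2.9.1' or '2.10.0rc0'."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def estimator_matches_tensorflow(tf_version, estimator_version):
    """True when both packages belong to the same major.minor release line."""
    return major_minor(tf_version) == major_minor(estimator_version)


if __name__ == "__main__":
    tf_v = installed_version("tensorflow")
    est_v = installed_version("tensorflow_estimator")
    print("tensorflow:", tf_v, "| tensorflow-estimator:", est_v)
    if tf_v and est_v and not estimator_matches_tensorflow(tf_v, est_v):
        print("Release lines differ; consider installing matching versions.")
```

If the versions differ, reinstalling `tensorflow-estimator` at the same major.minor as `tensorflow` is a reasonable first thing to try.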

|

open

|

2022-07-18T08:02:14Z

|

2022-07-18T08:02:14Z

|

https://github.com/tensorflow/tensor2tensor/issues/1914

|

[] |

qm-intel

| 0

|

jacobgil/pytorch-grad-cam

|

computer-vision

| 391

|

Explanation scores using Remove and Debias are None

|

While reproducing the blog post [CAM Metrics And Tuning Tutorial](https://jacobgil.github.io/pytorch-gradcam-book/CAM%20Metrics%20And%20Tuning%20Tutorial.html#road-remove-and-debias), I found that the scores computed by `cam_metric` with Remove and Debias are always None. I traced the source of the error: `NoisyLinearImputer` produces an output tensor with extremely large values, which makes the target model produce a very large posterior. Do you have any ideas on how to solve this problem? I suspect it may be caused by the following line:

`res = torch.tensor(spsolve(csc_matrix(A), b), dtype=torch.float)`

Is this equation vulnerable to numerical instability that could produce such large values?

Thanks in advance!
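One way to test the numerical-instability hypothesis is to check each solve before converting it to a tensor. The sketch below is not the library's code; `checked_spsolve`, `rtol` and `max_abs` are hypothetical names. It flags non-finite solutions, large relative residuals and, optionally, entries whose magnitude exceeds a bound such as the valid pixel range. For an ill-conditioned matrix the residual can be small even when the solution is far off, which is why the magnitude bound is included.

```python
import numpy as np
from scipy.sparse import csc_matrix
from scipy.sparse.linalg import spsolve


def checked_spsolve(A, b, rtol=1e-6, max_abs=None):
    """Solve A x = b and report whether the solution looks numerically trustworthy.

    Returns (x, ok). ok is False when x contains NaN/inf, when the relative
    residual ||A x - b|| / ||b|| exceeds rtol, or when max_abs is given and
    some |x_i| exceeds it.
    """
    A = csc_matrix(A, dtype=float)
    b = np.asarray(b, dtype=float)
    x = spsolve(A, b)

    # Exactly singular systems typically make spsolve return NaNs (with a warning)
    if not np.all(np.isfinite(x)):
        return x, False

    # Relative residual: how well x actually satisfies the equations
    b_norm = max(np.linalg.norm(b), np.finfo(float).tiny)
    residual = np.linalg.norm(A @ x - b) / b_norm
    if residual > rtol:
        return x, False

    # Ill-conditioned systems can have a small residual but huge entries
    if max_abs is not None and np.max(np.abs(x)) > max_abs:
        return x, False
    return x, True


x, ok = checked_spsolve([[2.0, 0.0], [0.0, 4.0]], [2.0, 4.0])
print(x, ok)
```

Logging `ok` (and `np.abs(x).max()`) for the failing images would show whether the large posteriors start at this solve.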

|

open

|

2023-02-19T00:36:44Z

|

2025-03-11T10:35:55Z

|

https://github.com/jacobgil/pytorch-grad-cam/issues/391

|

[] |

MasterEndless

| 1

|

Lightning-AI/pytorch-lightning

|

machine-learning

| 19,526

|

Model stuck after saving a checkpoint when using the FSDPStrategy

|

### Bug description

I'm training a GPT model using Fabric. The Fabric setup is shown below.

Training works well as long as I don't save a checkpoint. However, if I save a checkpoint, either with `torch.save` combined with `fabric.barrier()` or with `fabric.save()`, training gets stuck.

I saw that `torch.distributed.barrier()` has a [similar issue](https://github.com/pytorch/pytorch/issues/54059). I don't use similar utilities in my code, and I'm not sure whether `Fabric` uses them internally.

### What version are you seeing the problem on?

v2.1

### How to reproduce the bug

```python

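import lightning as L
from lightning.fabric.strategies import FSDPStrategy

# `Block` is the transformer block class of the GPT model; `device`, `n_devices`
# and `precision` are defined elsewhere in the training script.
strategy = FSDPStrategy(
    auto_wrap_policy={Block},
    activation_checkpointing_policy={Block},
    state_dict_type="full",
    limit_all_gathers=True,
    cpu_offload=False,
)
self.fabric = L.Fabric(accelerator=device, devices=n_devices, strategy=strategy, precision=precision)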

```

Saving model with

```python

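import os

# `model`, `fabric`, `get_path`, `base_dir` and `base_name` come from the training script.
state = {"model": model}
full_save_path = os.path.abspath(get_path(base_dir, base_name, '.pt'))
fabric.save(full_save_path, state)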

```
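With FSDP and `state_dict_type="full"`, saving gathers the sharded parameters from every process, so the save is a collective operation, and the Fabric documentation states that `fabric.save` must be called on all processes. One cause worth ruling out (not confirmed for this issue) is a save that only some ranks reach, for example behind an `if fabric.global_rank == 0:` guard. The sketch below does not use Lightning: it simulates ranks with threads and models the collective as a `threading.Barrier` with a timeout, so the "hang" becomes observable instead of blocking forever. `run_ranks` is a hypothetical name.

```python
import threading


def run_ranks(world_size, save_on_all_ranks, timeout=1.0):
    """Simulate `world_size` ranks saving a sharded checkpoint.

    The collective inside the save is modeled as a threading.Barrier that every
    rank must reach. Returns one outcome per rank: "saved", "hung" (the barrier
    timed out waiting for the other ranks) or "skipped".
    """
    barrier = threading.Barrier(world_size)
    results = [None] * world_size

    def worker(rank):
        if save_on_all_ranks or rank == 0:
            try:
                # Stands in for the all-gather of a full state dict
                barrier.wait(timeout=timeout)
                results[rank] = "saved"
            except threading.BrokenBarrierError:
                results[rank] = "hung"
        else:
            # Mirrors a save guarded by something like `if global_rank == 0:`
            results[rank] = "skipped"

    threads = [threading.Thread(target=worker, args=(r,)) for r in range(world_size)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


print(run_ranks(2, save_on_all_ranks=True))                # ['saved', 'saved']
print(run_ranks(2, save_on_all_ranks=False, timeout=0.2))  # ['hung', 'skipped']
```

In a real FSDP run there is no timeout, so rank 0 would wait indefinitely, which matches the "no errors, only stuck" symptom.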

### Error messages and logs


No errors are raised; training just gets stuck.

### Environment

<details>

<summary>Current environment</summary>

```

#- Lightning Component (e.g. Trainer, LightningModule, LightningApp, LightningWork, LightningFlow): lightning, mainly using Fabric

#- PyTorch Lightning Version (e.g., 1.5.0): 2.1.3

#- Lightning App Version (e.g., 0.5.2):

#- PyTorch Version (e.g., 2.0): 2.1.2+cu118

#- Python version (e.g., 3.9): 3.10.13

#- OS (e.g., Linux): Ubuntu

#- CUDA/cuDNN version: 11.8

#- GPU models and configuration: A100 40Gx2

#- How you installed Lightning(`conda`, `pip`, source): pip

#- Running environment of LightningApp (e.g. local, cloud): local

```

</details>

### More info

I think it is related to the communication between the processes.

cc @awaelchli @carmocca

|

closed

|

2024-02-24T20:07:41Z

|

2024-07-27T12:44:27Z

|

https://github.com/Lightning-AI/pytorch-lightning/issues/19526

|

[

"bug",

"strategy: fsdp",

"ver: 2.1.x",

"repro needed"

] |

charlesxu90

| 3

|

deezer/spleeter

|

tensorflow

| 508

|

No tag entry for v2

|

There is no release entry for v2. Also, when were the models last updated?

|

open

|

2020-10-27T18:01:11Z

|

2020-10-27T18:01:11Z

|

https://github.com/deezer/spleeter/issues/508

|

[

"bug",

"invalid"

] |

ilyapashuk

| 0

|

Asabeneh/30-Days-Of-Python

|

matplotlib

| 392

|

Day 4: result is not correct

|

https://github.com/Asabeneh/30-Days-Of-Python/blame/c8656171d69e79b5dfc743f425991f46b7d1423e/04_Day_Strings/04_strings.md#L331

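For this program, the result should be 5 for `'y'` and 0 for `'th'`:

```python
challenge = 'thirty days of python'
print(challenge.find('y'))   # the tutorial shows 16; the actual output is 5
print(challenge.find('th'))  # the tutorial shows 17; the actual output is 0
```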
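The values in the tutorial's comments (16 and 17) are what `str.rfind` returns: it gives the index of the last occurrence of the substring, while `str.find` gives the first. A quick check:

```python
challenge = 'thirty days of python'

# str.find returns the lowest index where the substring starts
first_y = challenge.find('y')
first_th = challenge.find('th')

# str.rfind returns the highest index where the substring starts
last_y = challenge.rfind('y')
last_th = challenge.rfind('th')

print(first_y, first_th)  # 5 0
print(last_y, last_th)    # 16 17
```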

|

closed

|

2023-05-09T18:09:54Z

|

2023-07-08T21:47:18Z

|

https://github.com/Asabeneh/30-Days-Of-Python/issues/392

|

[] |

Galio54

| 1

|

LAION-AI/Open-Assistant

|

python

| 3,368

|

Add Support for Language Dialect Consistency in Conversations

|

Hello,

I’m reaching out to propose a new feature that I believe would enhance the user experience.

### Issue

Currently, when selecting a language, the system does not differentiate between language variants. For instance, when Spanish is selected, dialogues mix European Spanish (Spain) and Latin American Spanish. The same happens with Catalan, which mixes dialects (Catalan, Valencian, Balearic), and with Portuguese (Brazil, Portugal). This occasionally results in sentences and phrases that, while technically correct, can be perceived as "off" or "weird" by native speakers. I presume this happens with many other languages as well.

### Proposed Solution

I would like to suggest adding more granular control over the language setting, so that a specific variant (e.g. European Spanish, Mexican Spanish) can be chosen for each language. Ideally, once a variant is chosen, the conversation thread should use that variant consistently throughout.

**Suggested Implementation:**

- Include a dropdown or a set of options under the language selection for users to choose the desired variant.

- Store the language variant selection and use it to keep the conversation thread consistent.
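The suggested implementation could be modeled roughly as follows: a thread object records the variant chosen in the dropdown (here as a BCP 47 language tag such as `es-ES` or `es-MX`) and refuses messages in any other variant. `ConversationThread` and `add_message` are illustrative, hypothetical names, not part of Open-Assistant.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationThread:
    """Hypothetical thread that pins one language variant, e.g. 'es-ES' or 'pt-BR'."""

    language_variant: str
    messages: list = field(default_factory=list)

    def add_message(self, text: str, variant: str) -> None:
        # Language tags are case-insensitive, so compare them case-folded
        if variant.lower() != self.language_variant.lower():
            raise ValueError(
                f"thread uses {self.language_variant}, got a message in {variant}"
            )
        self.messages.append(text)


thread = ConversationThread("es-ES")
thread.add_message("¿Vosotros queréis café?", "es-ES")
```

A message tagged `es-MX` would then be rejected for this thread instead of silently mixing variants.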

### Benefits

- **Enhanced Readability**: Ensuring consistency in language variants makes the conversation more readable and relatable for native speakers.

- **Greater Precision**: Some dialects have unique expressions or terminology. Maintaining consistency in language variants allows for more precise communication.

- **Cultural Sensitivity**: Respecting and utilizing the correct language variant reflects cultural awareness and sensitivity.

|

closed

|

2023-06-09T19:20:23Z

|

2023-06-10T09:10:38Z

|

https://github.com/LAION-AI/Open-Assistant/issues/3368

|

[] |

salvacarrion

| 0

|

horovod/horovod

|

deep-learning

| 3,883

|

Installation failure

|

**Environment:**

1. Framework: PyTorch

2. Framework version: 1.11.0

3. Horovod version: 0.27.0

4. MPI version: openmpi-4.1.4

5. CUDA version: 11.3

6. NCCL version: 2.9.9_1

7. Python version: 3.9

8. Spark / PySpark version:

9. Ray version:

10. OS and version: ubuntu18.04

11. GCC version: 9.3.0

12. CMake version: 3.26.3

**Checklist:**

1. Did you search issues to find if somebody asked this question before? I don't know.

2. If your question is about hang, did you read [this doc](https://github.com/horovod/horovod/blob/master/docs/running.rst)?

3. If your question is about docker, did you read [this doc](https://github.com/horovod/horovod/blob/master/docs/docker.rst)?

4. Did you check if your question is answered in the [troubleshooting guide](https://github.com/horovod/horovod/blob/master/docs/troubleshooting.rst)? I can't describe exactly what my problem is, so I don't know how to check.

**Bug report:**

Please describe erroneous behavior you're observing and steps to reproduce it.

This is my first time installing Horovod. I followed the requirements before installing, but the installation still fails and I don't know what is wrong. Can anyone help?

This is my command:

```
HOROVOD_NCCL_HOME=/usr/local/nccl/nccl_2.9.9-1+cuda11.3_x86_64 HOROVOD_GPU_ALLREDUCE=NCCL HOROVOD_WITH_PYTORCH=1 pip install --no-cache-dir horovod/dist/horovod-0.27.0.tar.gz
```
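The CMake errors later in the log (`add_subdirectory given source "third_party/gloo" which is not an existing directory`) suggest that the local archive `horovod/dist/horovod-0.27.0.tar.gz` was built from a checkout whose git submodules were not initialized, so the vendored Gloo sources are missing. Assuming that is the cause, the archive can be inspected before running `pip install`. `missing_source_dirs` and `check_sdist` are hypothetical helpers, and the only directory checked is the one named in the log.

```python
import tarfile

# Directory the CMake log reports as missing
REQUIRED_DIRS = ("third_party/gloo",)


def missing_source_dirs(member_names, required=REQUIRED_DIRS):
    """Return the required directories that contain no files in an sdist member list.

    Member names look like 'horovod-0.27.0/third_party/gloo/CMakeLists.txt';
    the top-level directory is stripped before comparing. An empty directory
    entry (an uninitialized submodule) counts as missing.
    """
    present = set()
    for name in member_names:
        parts = name.split("/", 1)
        if len(parts) == 2:
            present.add(parts[1])
    missing = []
    for d in required:
        prefix = d.rstrip("/") + "/"
        if not any(p.startswith(prefix) for p in present):
            missing.append(d)
    return missing


def check_sdist(path):
    """Open a source archive (any compression tarfile supports) and check it."""
    with tarfile.open(path, "r:*") as tar:
        return missing_source_dirs(tar.getnames())


# Usage: print(check_sdist("horovod/dist/horovod-0.27.0.tar.gz"))
```

If this reports `['third_party/gloo']`, rebuilding the archive from a clone made with `git clone --recursive` (or after running `git submodule update --init --recursive`) should include the sources; installing the release from PyPI is another option to try.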

My error output is the following:

Looking in indexes: https://repo.huaweicloud.com/repository/pypi/simple

Processing ./horovod/dist/horovod-0.27.0.tar.gz

Preparing metadata (setup.py) ... done

Requirement already satisfied: cloudpickle in ./miniconda3/envs/DL/lib/python3.9/site-packages (from horovod==0.27.0) (2.1.0)

Requirement already satisfied: psutil in ./miniconda3/envs/DL/lib/python3.9/site-packages (from horovod==0.27.0) (5.9.4)

Requirement already satisfied: pyyaml in ./miniconda3/envs/DL/lib/python3.9/site-packages (from horovod==0.27.0) (6.0)

Requirement already satisfied: packaging in ./miniconda3/envs/DL/lib/python3.9/site-packages (from horovod==0.27.0) (23.0)

Requirement already satisfied: cffi>=1.4.0 in ./miniconda3/envs/DL/lib/python3.9/site-packages (from horovod==0.27.0) (1.15.1)

Requirement already satisfied: pycparser in ./miniconda3/envs/DL/lib/python3.9/site-packages (from cffi>=1.4.0->horovod==0.27.0) (2.21)

Building wheels for collected packages: horovod

Building wheel for horovod (setup.py) ... error

error: subprocess-exited-with-error

× python setup.py bdist_wheel did not run successfully.

│ exit code: 1

╰─> [307 lines of output]

running bdist_wheel

running build

running build_py

creating build

creating build/lib.linux-x86_64-cpython-39

creating build/lib.linux-x86_64-cpython-39/horovod

copying horovod/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod

creating build/lib.linux-x86_64-cpython-39/horovod/_keras

copying horovod/_keras/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/_keras

copying horovod/_keras/callbacks.py -> build/lib.linux-x86_64-cpython-39/horovod/_keras

copying horovod/_keras/elastic.py -> build/lib.linux-x86_64-cpython-39/horovod/_keras

creating build/lib.linux-x86_64-cpython-39/horovod/common

copying horovod/common/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/common

copying horovod/common/basics.py -> build/lib.linux-x86_64-cpython-39/horovod/common

copying horovod/common/elastic.py -> build/lib.linux-x86_64-cpython-39/horovod/common

copying horovod/common/exceptions.py -> build/lib.linux-x86_64-cpython-39/horovod/common

copying horovod/common/process_sets.py -> build/lib.linux-x86_64-cpython-39/horovod/common

copying horovod/common/util.py -> build/lib.linux-x86_64-cpython-39/horovod/common

creating build/lib.linux-x86_64-cpython-39/horovod/data

copying horovod/data/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/data

copying horovod/data/data_loader_base.py -> build/lib.linux-x86_64-cpython-39/horovod/data

creating build/lib.linux-x86_64-cpython-39/horovod/keras

copying horovod/keras/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/keras

copying horovod/keras/callbacks.py -> build/lib.linux-x86_64-cpython-39/horovod/keras

copying horovod/keras/elastic.py -> build/lib.linux-x86_64-cpython-39/horovod/keras

creating build/lib.linux-x86_64-cpython-39/horovod/mxnet

copying horovod/mxnet/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/mxnet

copying horovod/mxnet/compression.py -> build/lib.linux-x86_64-cpython-39/horovod/mxnet

copying horovod/mxnet/functions.py -> build/lib.linux-x86_64-cpython-39/horovod/mxnet

copying horovod/mxnet/mpi_ops.py -> build/lib.linux-x86_64-cpython-39/horovod/mxnet

creating build/lib.linux-x86_64-cpython-39/horovod/ray

copying horovod/ray/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/ray

copying horovod/ray/adapter.py -> build/lib.linux-x86_64-cpython-39/horovod/ray

copying horovod/ray/driver_service.py -> build/lib.linux-x86_64-cpython-39/horovod/ray

copying horovod/ray/elastic.py -> build/lib.linux-x86_64-cpython-39/horovod/ray

copying horovod/ray/elastic_v2.py -> build/lib.linux-x86_64-cpython-39/horovod/ray

copying horovod/ray/ray_logger.py -> build/lib.linux-x86_64-cpython-39/horovod/ray

copying horovod/ray/runner.py -> build/lib.linux-x86_64-cpython-39/horovod/ray

copying horovod/ray/strategy.py -> build/lib.linux-x86_64-cpython-39/horovod/ray

copying horovod/ray/utils.py -> build/lib.linux-x86_64-cpython-39/horovod/ray

copying horovod/ray/worker.py -> build/lib.linux-x86_64-cpython-39/horovod/ray

creating build/lib.linux-x86_64-cpython-39/horovod/runner

copying horovod/runner/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/runner

copying horovod/runner/gloo_run.py -> build/lib.linux-x86_64-cpython-39/horovod/runner

copying horovod/runner/js_run.py -> build/lib.linux-x86_64-cpython-39/horovod/runner

copying horovod/runner/launch.py -> build/lib.linux-x86_64-cpython-39/horovod/runner

copying horovod/runner/mpi_run.py -> build/lib.linux-x86_64-cpython-39/horovod/runner

copying horovod/runner/run_task.py -> build/lib.linux-x86_64-cpython-39/horovod/runner

copying horovod/runner/task_fn.py -> build/lib.linux-x86_64-cpython-39/horovod/runner

creating build/lib.linux-x86_64-cpython-39/horovod/spark

copying horovod/spark/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/spark

copying horovod/spark/conf.py -> build/lib.linux-x86_64-cpython-39/horovod/spark

copying horovod/spark/gloo_run.py -> build/lib.linux-x86_64-cpython-39/horovod/spark

copying horovod/spark/mpi_run.py -> build/lib.linux-x86_64-cpython-39/horovod/spark

copying horovod/spark/runner.py -> build/lib.linux-x86_64-cpython-39/horovod/spark

creating build/lib.linux-x86_64-cpython-39/horovod/tensorflow

copying horovod/tensorflow/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow

copying horovod/tensorflow/compression.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow

copying horovod/tensorflow/elastic.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow

copying horovod/tensorflow/functions.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow

copying horovod/tensorflow/gradient_aggregation.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow

copying horovod/tensorflow/gradient_aggregation_eager.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow

copying horovod/tensorflow/mpi_ops.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow

copying horovod/tensorflow/sync_batch_norm.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow

copying horovod/tensorflow/util.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow

creating build/lib.linux-x86_64-cpython-39/horovod/torch

copying horovod/torch/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/torch

copying horovod/torch/compression.py -> build/lib.linux-x86_64-cpython-39/horovod/torch

copying horovod/torch/functions.py -> build/lib.linux-x86_64-cpython-39/horovod/torch

copying horovod/torch/mpi_ops.py -> build/lib.linux-x86_64-cpython-39/horovod/torch

copying horovod/torch/optimizer.py -> build/lib.linux-x86_64-cpython-39/horovod/torch

copying horovod/torch/sync_batch_norm.py -> build/lib.linux-x86_64-cpython-39/horovod/torch

creating build/lib.linux-x86_64-cpython-39/horovod/runner/common

copying horovod/runner/common/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common

creating build/lib.linux-x86_64-cpython-39/horovod/runner/driver

copying horovod/runner/driver/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/driver

copying horovod/runner/driver/driver_service.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/driver

creating build/lib.linux-x86_64-cpython-39/horovod/runner/elastic

copying horovod/runner/elastic/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/elastic

copying horovod/runner/elastic/constants.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/elastic

copying horovod/runner/elastic/discovery.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/elastic

copying horovod/runner/elastic/driver.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/elastic

copying horovod/runner/elastic/registration.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/elastic

copying horovod/runner/elastic/rendezvous.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/elastic

copying horovod/runner/elastic/settings.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/elastic

copying horovod/runner/elastic/worker.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/elastic

creating build/lib.linux-x86_64-cpython-39/horovod/runner/http

copying horovod/runner/http/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/http

copying horovod/runner/http/http_client.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/http

copying horovod/runner/http/http_server.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/http

creating build/lib.linux-x86_64-cpython-39/horovod/runner/task

copying horovod/runner/task/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/task

copying horovod/runner/task/task_service.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/task

creating build/lib.linux-x86_64-cpython-39/horovod/runner/util

copying horovod/runner/util/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/util

copying horovod/runner/util/cache.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/util

copying horovod/runner/util/lsf.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/util

copying horovod/runner/util/network.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/util

copying horovod/runner/util/remote.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/util

copying horovod/runner/util/streams.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/util

copying horovod/runner/util/threads.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/util

creating build/lib.linux-x86_64-cpython-39/horovod/runner/common/service

copying horovod/runner/common/service/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/service

copying horovod/runner/common/service/compute_service.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/service

copying horovod/runner/common/service/driver_service.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/service

copying horovod/runner/common/service/task_service.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/service

creating build/lib.linux-x86_64-cpython-39/horovod/runner/common/util

copying horovod/runner/common/util/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/util

copying horovod/runner/common/util/codec.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/util

copying horovod/runner/common/util/config_parser.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/util

copying horovod/runner/common/util/env.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/util

copying horovod/runner/common/util/host_hash.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/util

copying horovod/runner/common/util/hosts.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/util

copying horovod/runner/common/util/network.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/util

copying horovod/runner/common/util/safe_shell_exec.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/util

copying horovod/runner/common/util/secret.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/util

copying horovod/runner/common/util/settings.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/util

copying horovod/runner/common/util/timeout.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/util

copying horovod/runner/common/util/tiny_shell_exec.py -> build/lib.linux-x86_64-cpython-39/horovod/runner/common/util

creating build/lib.linux-x86_64-cpython-39/horovod/spark/common

copying horovod/spark/common/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/common

copying horovod/spark/common/_namedtuple_fix.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/common

copying horovod/spark/common/backend.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/common

copying horovod/spark/common/cache.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/common

copying horovod/spark/common/constants.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/common

copying horovod/spark/common/datamodule.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/common

copying horovod/spark/common/estimator.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/common

copying horovod/spark/common/params.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/common

copying horovod/spark/common/serialization.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/common

copying horovod/spark/common/store.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/common

copying horovod/spark/common/util.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/common

creating build/lib.linux-x86_64-cpython-39/horovod/spark/data_loaders

copying horovod/spark/data_loaders/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/data_loaders

copying horovod/spark/data_loaders/pytorch_data_loaders.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/data_loaders

creating build/lib.linux-x86_64-cpython-39/horovod/spark/driver

copying horovod/spark/driver/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/driver

copying horovod/spark/driver/driver_service.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/driver

copying horovod/spark/driver/host_discovery.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/driver

copying horovod/spark/driver/job_id.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/driver

copying horovod/spark/driver/mpirun_rsh.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/driver

copying horovod/spark/driver/rendezvous.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/driver

copying horovod/spark/driver/rsh.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/driver

creating build/lib.linux-x86_64-cpython-39/horovod/spark/keras

copying horovod/spark/keras/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/keras

copying horovod/spark/keras/bare.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/keras

copying horovod/spark/keras/datamodule.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/keras

copying horovod/spark/keras/estimator.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/keras

copying horovod/spark/keras/optimizer.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/keras

copying horovod/spark/keras/remote.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/keras

copying horovod/spark/keras/tensorflow.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/keras

copying horovod/spark/keras/util.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/keras

creating build/lib.linux-x86_64-cpython-39/horovod/spark/lightning

copying horovod/spark/lightning/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/lightning

copying horovod/spark/lightning/datamodule.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/lightning

copying horovod/spark/lightning/estimator.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/lightning

copying horovod/spark/lightning/legacy.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/lightning

copying horovod/spark/lightning/remote.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/lightning

copying horovod/spark/lightning/util.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/lightning

creating build/lib.linux-x86_64-cpython-39/horovod/spark/task

copying horovod/spark/task/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/task

copying horovod/spark/task/gloo_exec_fn.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/task

copying horovod/spark/task/mpirun_exec_fn.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/task

copying horovod/spark/task/task_info.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/task

copying horovod/spark/task/task_service.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/task

creating build/lib.linux-x86_64-cpython-39/horovod/spark/tensorflow

copying horovod/spark/tensorflow/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/tensorflow

copying horovod/spark/tensorflow/compute_worker.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/tensorflow

creating build/lib.linux-x86_64-cpython-39/horovod/spark/torch

copying horovod/spark/torch/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/torch

copying horovod/spark/torch/datamodule.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/torch

copying horovod/spark/torch/estimator.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/torch

copying horovod/spark/torch/remote.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/torch

copying horovod/spark/torch/util.py -> build/lib.linux-x86_64-cpython-39/horovod/spark/torch

creating build/lib.linux-x86_64-cpython-39/horovod/tensorflow/data

copying horovod/tensorflow/data/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow/data

copying horovod/tensorflow/data/compute_service.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow/data

copying horovod/tensorflow/data/compute_worker.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow/data

creating build/lib.linux-x86_64-cpython-39/horovod/tensorflow/keras

copying horovod/tensorflow/keras/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow/keras

copying horovod/tensorflow/keras/callbacks.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow/keras

copying horovod/tensorflow/keras/elastic.py -> build/lib.linux-x86_64-cpython-39/horovod/tensorflow/keras

creating build/lib.linux-x86_64-cpython-39/horovod/torch/elastic

copying horovod/torch/elastic/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/torch/elastic

copying horovod/torch/elastic/sampler.py -> build/lib.linux-x86_64-cpython-39/horovod/torch/elastic

copying horovod/torch/elastic/state.py -> build/lib.linux-x86_64-cpython-39/horovod/torch/elastic

running build_ext

Running CMake in build/temp.linux-x86_64-cpython-39/RelWithDebInfo:

cmake /tmp/pip-req-build-bnegxg7f -DCMAKE_BUILD_TYPE=RelWithDebInfo -DCMAKE_LIBRARY_OUTPUT_DIRECTORY_RELWITHDEBINFO=/tmp/pip-req-build-bnegxg7f/build/lib.linux-x86_64-cpython-39 -DPYTHON_EXECUTABLE:FILEPATH=/root/miniconda3/envs/DL/bin/python

cmake --build . --config RelWithDebInfo -- -j8 VERBOSE=1

-- Could not find CCache. Consider installing CCache to speed up compilation.

-- The CXX compiler identification is GNU 9.3.0

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Check for working CXX compiler: /usr/bin/c++ - skipped

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Build architecture flags: -mf16c -mavx -mfma

-- Using command /root/miniconda3/envs/DL/bin/python

-- Found MPI_CXX: /usr/local/openmpi/openmpi-4.1.4/build/lib/libmpi.so (found version "3.1")

-- Found MPI: TRUE (found version "3.1")

-- Looking for a CUDA compiler

-- Looking for a CUDA compiler - /usr/local/cuda/bin/nvcc

-- The CUDA compiler identification is NVIDIA 11.3.109

-- Detecting CUDA compiler ABI info

-- Detecting CUDA compiler ABI info - done

-- Check for working CUDA compiler: /usr/local/cuda/bin/nvcc - skipped

-- Detecting CUDA compile features

-- Detecting CUDA compile features - done

-- Found CUDAToolkit: /usr/local/cuda/include (found version "11.3.109")

-- Performing Test CMAKE_HAVE_LIBC_PTHREAD

-- Performing Test CMAKE_HAVE_LIBC_PTHREAD - Success

-- Found Threads: TRUE

-- Linking against static NCCL library

-- Found NCCL: /usr/local/nccl/nccl_2.9.9-1+cuda11.3_x86_64/include

-- Determining NCCL version from the header file: /usr/local/nccl/nccl_2.9.9-1+cuda11.3_x86_64/include/nccl.h

-- NCCL_MAJOR_VERSION: 2

-- NCCL_VERSION_CODE: 20909

-- Found NCCL (include: /usr/local/nccl/nccl_2.9.9-1+cuda11.3_x86_64/include, library: /usr/local/nccl/nccl_2.9.9-1+cuda11.3_x86_64/lib/libnccl_static.a)

-- Found NVTX: /usr/local/cuda/include

-- Found NVTX (include: /usr/local/cuda/include, library: dl)

CMake Error at CMakeLists.txt:299 (add_subdirectory):
  add_subdirectory given source "third_party/gloo" which is not an existing
  directory.

CMake Error at CMakeLists.txt:301 (target_compile_definitions):
  Cannot specify compile definitions for target "gloo" which is not built by
  this project.

Traceback (most recent call last):
  File "<string>", line 1, in <module>
ModuleNotFoundError: No module named 'tensorflow'

-- Could NOT find Tensorflow (missing: Tensorflow_LIBRARIES) (Required is at least version "1.15.0")

-- Found Pytorch: 1.11.0 (found suitable version "1.11.0", minimum required is "1.5.0")

Traceback (most recent call last):
  File "<string>", line 1, in <module>
ModuleNotFoundError: No module named 'mxnet'

-- Could NOT find Mxnet (missing: Mxnet_LIBRARIES) (Required is at least version "1.4.1")

-- HVD_NVCC_COMPILE_FLAGS = -O3 -Xcompiler -fPIC -gencode arch=compute_35,code=sm_35 -gencode arch=compute_37,code=sm_37 -gencode arch=compute_50,code=sm_50 -gencode arch=compute_52,code=sm_52 -gencode arch=compute_53,code=sm_53 -gencode arch=compute_60,code=sm_60 -gencode arch=compute_61,code=sm_61 -gencode arch=compute_62,code=sm_62 -gencode arch=compute_70,code=sm_70 -gencode arch=compute_72,code=sm_72 -gencode arch=compute_75,code=sm_75 -gencode arch=compute_80,code=sm_80 -gencode arch=compute_86,code=\"sm_86,compute_86\"

CMake Error at CMakeLists.txt:365 (file):
  file COPY cannot find "/tmp/pip-req-build-bnegxg7f/third_party/gloo": No
  such file or directory.

CMake Error at CMakeLists.txt:366 (file):
  file failed to open for reading (No such file or directory):

    /tmp/pip-req-build-bnegxg7f/third_party/compatible_gloo/gloo/CMakeLists.txt

CMake Error at CMakeLists.txt:369 (add_subdirectory):
  The source directory

    /tmp/pip-req-build-bnegxg7f/third_party/compatible_gloo

  does not contain a CMakeLists.txt file.

CMake Error at CMakeLists.txt:370 (target_compile_definitions):
  Cannot specify compile definitions for target "compatible_gloo" which is
  not built by this project.

-- Configuring incomplete, errors occurred!

Traceback (most recent call last):
  File "<string>", line 2, in <module>
  File "<pip-setuptools-caller>", line 34, in <module>
  File "/tmp/pip-req-build-bnegxg7f/setup.py", line 213, in <module>
    setup(name='horovod',
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/__init__.py", line 87, in setup
    return distutils.core.setup(**attrs)
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/_distutils/core.py", line 185, in setup
    return run_commands(dist)
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/_distutils/core.py", line 201, in run_commands
    dist.run_commands()
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/_distutils/dist.py", line 969, in run_commands
    self.run_command(cmd)
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/dist.py", line 1208, in run_command
    super().run_command(command)
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/_distutils/dist.py", line 988, in run_command
    cmd_obj.run()
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/wheel/bdist_wheel.py", line 325, in run
    self.run_command("build")
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/_distutils/cmd.py", line 318, in run_command
    self.distribution.run_command(command)
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/dist.py", line 1208, in run_command
    super().run_command(command)
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/_distutils/dist.py", line 988, in run_command
    cmd_obj.run()
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/_distutils/command/build.py", line 132, in run
    self.run_command(cmd_name)
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/_distutils/cmd.py", line 318, in run_command
    self.distribution.run_command(command)
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/dist.py", line 1208, in run_command
    super().run_command(command)
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/_distutils/dist.py", line 988, in run_command
    cmd_obj.run()
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/command/build_ext.py", line 84, in run
    _build_ext.run(self)
  File "/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/_distutils/command/build_ext.py", line 346, in run
    self.build_extensions()
  File "/tmp/pip-req-build-bnegxg7f/setup.py", line 145, in build_extensions
    subprocess.check_call(command, cwd=cmake_build_dir)
  File "/root/miniconda3/envs/DL/lib/python3.9/subprocess.py", line 373, in check_call
    raise CalledProcessError(retcode, cmd)
subprocess.CalledProcessError: Command '['cmake', '/tmp/pip-req-build-bnegxg7f', '-DCMAKE_BUILD_TYPE=RelWithDebInfo', '-DCMAKE_LIBRARY_OUTPUT_DIRECTORY_RELWITHDEBINFO=/tmp/pip-req-build-bnegxg7f/build/lib.linux-x86_64-cpython-39', '-DPYTHON_EXECUTABLE:FILEPATH=/root/miniconda3/envs/DL/bin/python']' returned non-zero exit status 1.

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

ERROR: Failed building wheel for horovod

Running setup.py clean for horovod

Failed to build horovod

Installing collected packages: horovod

Running setup.py install for horovod ... error

error: subprocess-exited-with-error

× Running setup.py install for horovod did not run successfully.
│ exit code: 1
╰─> [305 lines of output]

running install

/root/miniconda3/envs/DL/lib/python3.9/site-packages/setuptools/command/install.py:34: SetuptoolsDeprecationWarning: setup.py install is deprecated. Use build and pip and other standards-based tools.
  warnings.warn(

running build

running build_py

creating build

creating build/lib.linux-x86_64-cpython-39

creating build/lib.linux-x86_64-cpython-39/horovod

copying horovod/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod

creating build/lib.linux-x86_64-cpython-39/horovod/_keras

copying horovod/_keras/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/_keras

copying horovod/_keras/callbacks.py -> build/lib.linux-x86_64-cpython-39/horovod/_keras

copying horovod/_keras/elastic.py -> build/lib.linux-x86_64-cpython-39/horovod/_keras

creating build/lib.linux-x86_64-cpython-39/horovod/common

copying horovod/common/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/common

copying horovod/common/basics.py -> build/lib.linux-x86_64-cpython-39/horovod/common

copying horovod/common/elastic.py -> build/lib.linux-x86_64-cpython-39/horovod/common

copying horovod/common/exceptions.py -> build/lib.linux-x86_64-cpython-39/horovod/common

copying horovod/common/process_sets.py -> build/lib.linux-x86_64-cpython-39/horovod/common

copying horovod/common/util.py -> build/lib.linux-x86_64-cpython-39/horovod/common

creating build/lib.linux-x86_64-cpython-39/horovod/data

copying horovod/data/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/data

copying horovod/data/data_loader_base.py -> build/lib.linux-x86_64-cpython-39/horovod/data

creating build/lib.linux-x86_64-cpython-39/horovod/keras

copying horovod/keras/__init__.py -> build/lib.linux-x86_64-cpython-39/horovod/keras

copying horovod/keras/callbacks.py -> build/lib.linux-x86_64-cpython-39/horovod/keras