repo_name | topic | issue_number | title | body | state | created_at | updated_at | url | labels | user_login | comments_count |

|---|---|---|---|---|---|---|---|---|---|---|---|

Textualize/rich

|

python

| 3,068

|

[REQUEST]

|

Please add a way to center/align the Progress module. Currently from what I've seen it can only align to the left but I would love for it to be able to be centered.

|

closed

|

2023-07-30T09:04:38Z

|

2023-07-30T20:53:16Z

|

https://github.com/Textualize/rich/issues/3068

|

[

"Needs triage"

] |

KingKDot

| 4

|

deezer/spleeter

|

deep-learning

| 290

|

Command not found: Spleeter

|

<img width="568" alt="Screen Shot 2020-03-12 at 9 33 25 AM" src="https://user-images.githubusercontent.com/58147163/76526825-87602400-6444-11ea-9ec2-a16ea279bd02.png">

Any ideas? I followed all the instructions.

|

closed

|

2020-03-12T13:34:49Z

|

2020-05-25T19:25:21Z

|

https://github.com/deezer/spleeter/issues/290

|

[

"bug",

"invalid"

] |

chrisgauthier9

| 2

|

ultralytics/ultralytics

|

computer-vision

| 18,758

|

Cannot export model to edgetpu on linux machine

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and found no similar bug report.

### Ultralytics YOLO Component

Other, Export

### Bug

The export to edgetpu works in Google Colab; however, I cannot reproduce the model export on a personal computer.

`from ultralytics import YOLO

model = YOLO('yolo11n.pt') # load a pretrained model (recommended for training)

results = model.export(format='edgetpu') # export the model to Edge TPU format`

TensorFlow SavedModel: export failure ❌ 77.2s: No module named 'imp'

Traceback (most recent call last):

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/ultralytics/engine/exporter.py", line 1285, in _add_tflite_metadata

from tensorflow_lite_support.metadata import metadata_schema_py_generated as schema # noqa

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

ModuleNotFoundError: No module named 'tensorflow_lite_support'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/01.py", line 3, in <module>

results = model.export(format='edgetpu') # export the model to ONNX format

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/ultralytics/engine/model.py", line 738, in export

return Exporter(overrides=args, _callbacks=self.callbacks)(model=self.model)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/ultralytics/engine/exporter.py", line 403, in __call__

f[5], keras_model = self.export_saved_model()

^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/ultralytics/engine/exporter.py", line 176, in outer_func

raise e

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/ultralytics/engine/exporter.py", line 171, in outer_func

f, model = inner_func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/ultralytics/engine/exporter.py", line 1034, in export_saved_model

f.unlink() if "quant_with_int16_act.tflite" in str(f) else self._add_tflite_metadata(file)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/ultralytics/engine/exporter.py", line 1288, in _add_tflite_metadata

from tflite_support import metadata # noqa

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/tflite_support/metadata.py", line 28, in <module>

from tflite_support import flatbuffers

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/tflite_support/flatbuffers/__init__.py", line 15, in <module>

from .builder import Builder

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/tflite_support/flatbuffers/builder.py", line 15, in <module>

from . import number_types as N

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/tflite_support/flatbuffers/number_types.py", line 18, in <module>

from . import packer

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/tflite_support/flatbuffers/packer.py", line 22, in <module>

from . import compat

File "/home/andrea/venv/myenv2/lib/python3.12/site-packages/tflite_support/flatbuffers/compat.py", line 19, in <module>

import imp

ModuleNotFoundError: No module named 'imp'
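The final `ModuleNotFoundError` is consistent with the `imp` module having been removed from the standard library in Python 3.12, the interpreter version visible in the traceback paths. A minimal sketch of a pre-flight check that reports whether a module can be located before an export is attempted; `module_available` is a hypothetical helper name and is not part of Ultralytics or `tflite_support`:

```python
import importlib.util
import sys


def module_available(name):
    """Return True if a top-level module can be located without importing it."""
    return importlib.util.find_spec(name) is not None


# Hypothetical pre-flight check; the module names are taken from the traceback above.
for name in ("imp", "tflite_support"):
    status = "found" if module_available(name) else "missing"
    version = f"{sys.version_info.major}.{sys.version_info.minor}"
    print(f"{name}: {status} on Python {version}")
```

If `imp` is reported missing, any dependency that still runs `import imp` at import time will fail in the same way on that interpreter.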

### Environment

Ultralytics 8.3.63 🚀 Python-3.12.3 torch-2.5.1+cu124 CUDA:0 (NVIDIA GeForce RTX 3060, 11938MiB)

Setup complete ✅ (12 CPUs, 15.5 GB RAM, 360.8/467.9 GB disk)

os: ubuntu

python version: 3.12

package onnx2tf version: 1.26.3

### Minimal Reproducible Example

from ultralytics import YOLO

model = YOLO('yolo11n.pt') # load a pretrained model (recommended for training)

results = model.export(format='edgetpu') # export the model to Edge TPU format

### Additional

_No response_

### Are you willing to submit a PR?

- [ ] Yes I'd like to help by submitting a PR!

|

open

|

2025-01-19T11:22:22Z

|

2025-01-20T15:54:53Z

|

https://github.com/ultralytics/ultralytics/issues/18758

|

[

"bug",

"exports"

] |

Lorsu

| 3

|

graphql-python/graphene-django

|

django

| 1,515

|

Documentation Mismatch for Testing API Calls with Django

|

**What is the current behavior?**

The documentation for testing API calls with Django on the official website of [Graphene-Django](https://docs.graphene-python.org/projects/django/en/latest/testing/) shows incorrect usage of a parameter named **op_name** in the code examples for unit tests and pytest integration. However, upon inspecting the corresponding documentation in the [GitHub repository](https://github.com/graphql-python/graphene-django/blob/main/docs/testing.rst), the correct parameter operation_name is used.

**Steps to Reproduce**

Visit the [Graphene-Django](https://docs.graphene-python.org/projects/django/en/latest/testing/) documentation website's section on testing API calls with Django.

Observe the use of op_name in the example code blocks.

Compare with the content in the [testing.rst](https://github.com/graphql-python/graphene-django/blob/main/docs/testing.rst) file in the docs folder of the Graphene-Django GitHub repository, where operation_name is correctly used.

**Expected Behavior**

The online documentation should reflect the same parameter name, operation_name, as found in the GitHub repository documentation, ensuring consistency and correctness for developers relying on these docs for implementing tests.

**Motivation / Use Case for Changing the Behavior**

Ensuring the documentation is accurate and consistent across all platforms is crucial for developer experience and adoption. Incorrect documentation can lead to confusion and errors in implementation, especially for new users of Graphene-Django.

|

open

|

2024-04-06T05:32:32Z

|

2024-07-03T18:44:00Z

|

https://github.com/graphql-python/graphene-django/issues/1515

|

[

"🐛bug"

] |

hamza-m-farooqi

| 1

|

FlareSolverr/FlareSolverr

|

api

| 1,288

|

[yggtorrent] (testing) Exception (yggtorrent): FlareSolverr was unable to process the request, please check FlareSolverr logs. Message: Error: Error solving the challenge. Timeout after 55.0 seconds.: FlareSolverr was unable to process the request, please check FlareSolverr logs. Message: Error: Error solving the challenge. Timeout after 55.0 seconds.

|

### Have you checked our README?

- [X] I have checked the README

### Have you followed our Troubleshooting?

- [X] I have followed your Troubleshooting

### Is there already an issue for your problem?

- [X] I have checked older issues, open and closed

### Have you checked the discussions?

- [X] I have read the Discussions

### Environment

```markdown

- FlareSolverr version:3.3.21

- Last working FlareSolverr version:3.3.21

- Operating system: Linux-6.1.79-Unraid-x86_64-with-glibc2.31

- Are you using Docker: yes

- FlareSolverr User-Agent (see log traces or / endpoint): Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36

- Are you using a VPN: no

- Are you using a Proxy: no

- Are you using Captcha Solver: no

- If using captcha solver, which one:

- URL to test this issue:

https://www.ygg.re/

```

### Description

Hello guys, I hope you are having a nice day. I wanted to thank you all very much for your work.

Regarding the issue: I'm seeing the same problem we had a few days ago with yggtorrent.

I have already applied the patch published at https://github.com/FlareSolverr/FlareSolverr/issues/1253

It worked for a few days but stopped working today.

### Logged Error Messages

```text

2024-07-25 18:49:37 INFO ReqId 23039076726592 FlareSolverr 3.3.21

2024-07-25 18:49:37 DEBUG ReqId 23039076726592 Debug log enabled

2024-07-25 18:49:37 INFO ReqId 23039076726592 Testing web browser installation...

2024-07-25 18:49:37 INFO ReqId 23039076726592 Platform: Linux-6.1.79-Unraid-x86_64-with-glibc2.31

2024-07-25 18:49:37 INFO ReqId 23039076726592 Chrome / Chromium path: /usr/bin/chromium

2024-07-25 18:49:41 INFO ReqId 23039076726592 Chrome / Chromium major version: 120

2024-07-25 18:49:41 INFO ReqId 23039076726592 Launching web browser...

2024-07-25 18:49:41 DEBUG ReqId 23039076726592 Launching web browser...

2024-07-25 18:49:42 DEBUG ReqId 23039076726592 Started executable: `/app/chromedriver` in a child process with pid: 32

2024-07-25 18:49:43 INFO ReqId 23039076726592 FlareSolverr User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36

2024-07-25 18:49:43 INFO ReqId 23039076726592 Test successful!

2024-07-25 18:49:43 INFO ReqId 23039076726592 Serving on http://0.0.0.0:8191

2024-07-25 18:49:51 INFO ReqId 23039035483904 Incoming request => POST /v1 body: {'maxTimeout': 55000, 'cmd': 'request.get', 'url': 'https://www.ygg.re/engine/search?do=search&order=desc&sort=publish_date&category=all'}

2024-07-25 18:49:51 DEBUG ReqId 23039035483904 Launching web browser...

2024-07-25 18:49:51 DEBUG ReqId 23039035483904 Started executable: `/app/chromedriver` in a child process with pid: 177

2024-07-25 18:49:51 DEBUG ReqId 23039035483904 New instance of webdriver has been created to perform the request

2024-07-25 18:49:51 DEBUG ReqId 23039027078912 Navigating to... https://www.ygg.re/engine/search?do=search&order=desc&sort=publish_date&category=all

2024-07-25 18:49:54 INFO ReqId 23039027078912 Challenge detected. Title found: Just a moment...

2024-07-25 18:49:54 DEBUG ReqId 23039027078912 Waiting for title (attempt 1): Just a moment...

2024-07-25 18:49:55 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:49:55 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:49:55 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:49:55 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:49:55 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:49:57 DEBUG ReqId 23039027078912 Waiting for title (attempt 2): Just a moment...

2024-07-25 18:49:58 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:49:58 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:49:58 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:49:58 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:49:58 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:01 DEBUG ReqId 23039027078912 Waiting for title (attempt 3): Just a moment...

2024-07-25 18:50:02 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:02 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:02 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:02 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:02 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:04 DEBUG ReqId 23039027078912 Waiting for title (attempt 4): Just a moment...

2024-07-25 18:50:05 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:05 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:05 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:05 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:05 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:07 DEBUG ReqId 23039027078912 Waiting for title (attempt 5): Just a moment...

2024-07-25 18:50:08 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:08 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:08 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:08 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:08 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:10 DEBUG ReqId 23039027078912 Waiting for title (attempt 6): Just a moment...

2024-07-25 18:50:11 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:11 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:11 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:11 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:11 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:13 DEBUG ReqId 23039027078912 Waiting for title (attempt 7): Just a moment...

2024-07-25 18:50:14 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:14 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:14 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:14 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:14 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:16 DEBUG ReqId 23039027078912 Waiting for title (attempt 8): Just a moment...

2024-07-25 18:50:17 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:17 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:17 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:17 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:17 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:19 DEBUG ReqId 23039027078912 Waiting for title (attempt 9): Just a moment...

2024-07-25 18:50:20 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:20 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:20 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:20 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:20 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:22 DEBUG ReqId 23039027078912 Waiting for title (attempt 10): Just a moment...

2024-07-25 18:50:23 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:23 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:23 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:23 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:23 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:25 DEBUG ReqId 23039027078912 Waiting for title (attempt 11): Just a moment...

2024-07-25 18:50:26 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:26 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:26 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:26 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:26 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:28 DEBUG ReqId 23039027078912 Waiting for title (attempt 12): Just a moment...

2024-07-25 18:50:29 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:29 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:29 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:29 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:29 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:31 DEBUG ReqId 23039027078912 Waiting for title (attempt 13): Just a moment...

2024-07-25 18:50:32 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:32 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:32 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:32 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:32 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:34 DEBUG ReqId 23039027078912 Waiting for title (attempt 14): Just a moment...

2024-07-25 18:50:35 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:35 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:35 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:35 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:35 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:37 DEBUG ReqId 23039027078912 Waiting for title (attempt 15): Just a moment...

2024-07-25 18:50:38 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:38 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:38 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:38 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:38 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:40 DEBUG ReqId 23039027078912 Waiting for title (attempt 16): Just a moment...

2024-07-25 18:50:41 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:41 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:41 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:41 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:41 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:43 DEBUG ReqId 23039027078912 Waiting for title (attempt 17): Just a moment...

2024-07-25 18:50:44 DEBUG ReqId 23039027078912 Timeout waiting for selector

2024-07-25 18:50:44 DEBUG ReqId 23039027078912 Try to find the Cloudflare verify checkbox...

2024-07-25 18:50:44 DEBUG ReqId 23039027078912 Cloudflare verify checkbox not found on the page.

2024-07-25 18:50:44 DEBUG ReqId 23039027078912 Try to find the Cloudflare 'Verify you are human' button...

2024-07-25 18:50:44 DEBUG ReqId 23039027078912 The Cloudflare 'Verify you are human' button not found on the page.

2024-07-25 18:50:46 DEBUG ReqId 23039027078912 Waiting for title (attempt 18): Just a moment...

2024-07-25 18:50:46 DEBUG ReqId 23039035483904 A used instance of webdriver has been destroyed

2024-07-25 18:50:46 ERROR ReqId 23039035483904 Error: Error solving the challenge. Timeout after 55.0 seconds.

2024-07-25 18:50:46 DEBUG ReqId 23039035483904 Response => POST /v1 body: {'status': 'error', 'message': 'Error: Error solving the challenge. Timeout after 55.0 seconds.', 'startTimestamp': 1721933391173, 'endTimestamp': 1721933446914, 'version': '3.3.21'}

2024-07-25 18:50:46 INFO ReqId 23039035483904 Response in 55.741 s

2024-07-25 18:50:46 INFO ReqId 23039035483904 172.17.0.1 POST http://10.1.10.240:8191/v1 500 Internal Server Error

```
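The log shows the failing call as a `POST /v1` with a JSON body containing `cmd`, `url` and `maxTimeout`. A minimal sketch that rebuilds that request with the standard library so it can be replayed while debugging; the function name and the `localhost` endpoint are assumptions (the log only shows the service listening on port 8191), and the request is built but not sent:

```python
import json
import urllib.request


def build_flaresolverr_request(target_url, max_timeout_ms=55000,
                               endpoint="http://localhost:8191/v1"):
    """Build (but do not send) a POST request shaped like the one in the log."""
    payload = {
        "cmd": "request.get",
        "url": target_url,
        "maxTimeout": max_timeout_ms,  # milliseconds, as in the logged body
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_flaresolverr_request("https://www.ygg.re/")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
# To actually send it: urllib.request.urlopen(req, timeout=60)
```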

### Screenshots

_No response_

|

closed

|

2024-07-25T18:54:10Z

|

2024-07-25T20:19:26Z

|

https://github.com/FlareSolverr/FlareSolverr/issues/1288

|

[

"duplicate"

] |

touzenesmy

| 4

|

PaddlePaddle/PaddleHub

|

nlp

| 2,254

|

AttributeError: module 'paddle' has no attribute '__version__'

|

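You are welcome to report problems with PaddleHub. Thank you very much for your contribution to PaddleHub!

When filing your issue, please also provide the following information:

- Version and environment information

1) PaddleHub and PaddlePaddle versions: please provide your PaddleHub and PaddlePaddle version numbers, for example PaddleHub1.4.1, PaddlePaddle1.6.2

2) System environment: please describe the system type, for example Linux/Windows/MacOS, and the Python version

- Reproduction information: if this is an error report, please give the environment and the steps needed to reproduce it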

|

closed

|

2023-05-15T06:40:12Z

|

2023-09-15T08:45:13Z

|

https://github.com/PaddlePaddle/PaddleHub/issues/2254

|

[] |

HuangXinzhe

| 3

|

Kanaries/pygwalker

|

plotly

| 252

|

When deploying the Streamlit application, the graph is not visible in the browser; the API calls go to st_core, which is not in the directory

|

closed

|

2023-09-29T02:17:29Z

|

2023-10-17T11:37:29Z

|

https://github.com/Kanaries/pygwalker/issues/252

|

[

"bug",

"fixed but needs feedback"

] |

JeevankumarDharmalingam

| 13

|

|

geex-arts/django-jet

|

django

| 289

|

Page not found (404)

|

Hi, I downloaded the project and then tried to run it, and I get the following error when I try to browse to the dashboard.

Using the URLconf defined in jet.tests.urls, Django tried these URL patterns, in this order:

url: http://127.0.0.1:8000/admin/jet

^jet/

^jet/dashboard/

^admin/doc/

^admin/ ^$ [name='index']

^admin/ ^login/$ [name='login']

^admin/ ^logout/$ [name='logout']

^admin/ ^password_change/$ [name='password_change']

^admin/ ^password_change/done/$ [name='password_change_done']

^admin/ ^jsi18n/$ [name='jsi18n']

^admin/ ^r/(?P<content_type_id>\d+)/(?P<object_id>.+)/$ [name='view_on_site']

^admin/ ^auth/user/

^admin/ ^tests/testmodel/

^admin/ ^auth/group/

^admin/ ^sites/site/

^admin/ ^tests/relatedtotestmodel/

^admin/ ^(?P<app_label>auth|tests|sites)/$ [name='app_list']

The current path, admin/jet, didn't match any of these.

Can somebody help me, please?
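Django matches the request path without its leading slash (here `admin/jet`) against the patterns in order. A minimal sketch of that matching for the top-level prefixes only; the pattern list is inferred from the 404 listing above and is an assumption about the project's URLconf, and `matching_prefixes` is a hypothetical helper:

```python
import re

# Top-level prefixes inferred from the 404 listing above (assumption).
TOP_LEVEL_PATTERNS = [r"^jet/", r"^jet/dashboard/", r"^admin/doc/", r"^admin/"]


def matching_prefixes(path):
    """Return the top-level patterns whose regex matches the given path."""
    return [pattern for pattern in TOP_LEVEL_PATTERNS if re.search(pattern, path)]


for path in ("admin/jet", "jet/", "jet/dashboard/"):
    print(path, "->", matching_prefixes(path))
```

In this sketch only `^admin/` matches `admin/jet`, and the remainder `jet` then matches none of the admin sub-patterns in the listing, which would explain the 404. Paths beginning with `jet/` reach the `^jet/` include instead.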

|

open

|

2018-01-31T13:36:45Z

|

2019-01-24T20:31:13Z

|

https://github.com/geex-arts/django-jet/issues/289

|

[] |

JorgeSevilla

| 10

|

ultralytics/ultralytics

|

python

| 19,292

|

Colab default setting could not convert to tflite

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and found no similar bug report.

### Ultralytics YOLO Component

_No response_

### Bug

A few weeks ago I could convert yolo11n.pt to TFLite, but Colab's default Python version is now 3.11.11.

With this version, onnx2tf fails to convert the model to TFLite.

How can I fix it?

```

from ultralytics import YOLO

model = YOLO('yolo11n.pt')

model.export(format='tflite', imgsz=192, int8=True)

model = YOLO('yolo11n_saved_model/yolo11n_full_integer_quant.tflite')

res = model.predict(imgsz=192)

res[0].plot(show=True)

```

```

Downloading https://ultralytics.com/assets/Arial.ttf to '/root/.config/Ultralytics/Arial.ttf'...

100%|██████████| 755k/755k [00:00<00:00, 116MB/s]

Scanning /content/datasets/coco8/labels/val... 4 images, 0 backgrounds, 0 corrupt: 100%|██████████| 4/4 [00:00<00:00, 117.40it/s]New cache created: /content/datasets/coco8/labels/val.cache

TensorFlow SavedModel: WARNING ⚠️ >300 images recommended for INT8 calibration, found 4 images.

TensorFlow SavedModel: starting TFLite export with onnx2tf 1.26.3...

ERROR:root:Internal Python error in the inspect module.

Below is the traceback from this internal error.

ERROR: The trace log is below.

Traceback (most recent call last):

File "/usr/local/lib/python3.11/dist-packages/onnx2tf/utils/common_functions.py", line 312, in print_wrapper_func

result = func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/onnx2tf/utils/common_functions.py", line 385, in inverted_operation_enable_disable_wrapper_func

result = func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/onnx2tf/utils/common_functions.py", line 55, in get_replacement_parameter_wrapper_func

func(*args, **kwargs)

File "/usr/local/lib/python3.11/dist-packages/onnx2tf/ops/Mul.py", line 245, in make_node

correction_process_for_accuracy_errors(

File "/usr/local/lib/python3.11/dist-packages/onnx2tf/utils/common_functions.py", line 5894, in correction_process_for_accuracy_errors

min_abs_err_perm_1: int = [idx for idx in range(len(validation_data_1.shape))]

^^^^^^^^^^^^^^^^^^^^^^^

AttributeError: 'NoneType' object has no attribute 'shape'

ERROR: input_onnx_file_path: yolo11n.onnx

ERROR: onnx_op_name: wa/model.10/m/m.0/attn/Mul

ERROR: Read this and deal with it. https://github.com/PINTO0309/onnx2tf#parameter-replacement

ERROR: Alternatively, if the input OP has a dynamic dimension, use the -b or -ois option to rewrite it to a static shape and try again.

ERROR: If the input OP of ONNX before conversion is NHWC or an irregular channel arrangement other than NCHW, use the -kt or -kat option.

ERROR: Also, for models that include NonMaxSuppression in the post-processing, try the -onwdt option.

Traceback (most recent call last):

File "/usr/local/lib/python3.11/dist-packages/onnx2tf/utils/common_functions.py", line 312, in print_wrapper_func

result = func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/onnx2tf/utils/common_functions.py", line 385, in inverted_operation_enable_disable_wrapper_func

result = func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/onnx2tf/utils/common_functions.py", line 55, in get_replacement_parameter_wrapper_func

func(*args, **kwargs)

File "/usr/local/lib/python3.11/dist-packages/onnx2tf/ops/Mul.py", line 245, in make_node

correction_process_for_accuracy_errors(

File "/usr/local/lib/python3.11/dist-packages/onnx2tf/utils/common_functions.py", line 5894, in correction_process_for_accuracy_errors

min_abs_err_perm_1: int = [idx for idx in range(len(validation_data_1.shape))]

^^^^^^^^^^^^^^^^^^^^^^^

AttributeError: 'NoneType' object has no attribute 'shape'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/local/lib/python3.11/dist-packages/IPython/core/interactiveshell.py", line 3553, in run_code

exec(code_obj, self.user_global_ns, self.user_ns)

File "<ipython-input-6-da2eaec26985>", line 3, in <cell line: 0>

model.export(format='tflite', imgsz=192, int8=True)

File "/usr/local/lib/python3.11/dist-packages/ultralytics/engine/model.py", line 741, in export

return Exporter(overrides=args, _callbacks=self.callbacks)(model=self.model)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/ultralytics/engine/exporter.py", line 418, in __call__

f[5], keras_model = self.export_saved_model()

^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/ultralytics/engine/exporter.py", line 175, in outer_func

f, model = inner_func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/ultralytics/engine/exporter.py", line 1036, in export_saved_model

keras_model = onnx2tf.convert(

^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/onnx2tf/onnx2tf.py", line 1141, in convert

op.make_node(

File "/usr/local/lib/python3.11/dist-packages/onnx2tf/utils/common_functions.py", line 378, in print_wrapper_func

sys.exit(1)

SystemExit: 1

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/local/lib/python3.11/dist-packages/IPython/core/ultratb.py", line 1101, in get_records

return _fixed_getinnerframes(etb, number_of_lines_of_context, tb_offset)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/IPython/core/ultratb.py", line 248, in wrapped

return f(*args, **kwargs)

^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/dist-packages/IPython/core/ultratb.py", line 281, in _fixed_getinnerframes

records = fix_frame_records_filenames(inspect.getinnerframes(etb, context))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.11/inspect.py", line 1739, in getinnerframes

traceback_info = getframeinfo(tb, context)

^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.11/inspect.py", line 1671, in getframeinfo

lineno = frame.f_lineno

^^^^^^^^^^^^^^

AttributeError: 'tuple' object has no attribute 'f_lineno'

---------------------------------------------------------------------------

AttributeError Traceback (most recent call last)

[/usr/local/lib/python3.11/dist-packages/onnx2tf/utils/common_functions.py](https://localhost:8080/#) in print_wrapper_func(*args, **kwargs)

311 try:

--> 312 result = func(*args, **kwargs)

313

18 frames

AttributeError: 'NoneType' object has no attribute 'shape'

During handling of the above exception, another exception occurred:

SystemExit Traceback (most recent call last)

[... skipping hidden 1 frame]

SystemExit: 1

During handling of the above exception, another exception occurred:

TypeError Traceback (most recent call last)

[... skipping hidden 1 frame]

[/usr/local/lib/python3.11/dist-packages/IPython/core/ultratb.py](https://localhost:8080/#) in find_recursion(etype, value, records)

380 # first frame (from in to out) that looks different.

381 if not is_recursion_error(etype, value, records):

--> 382 return len(records), 0

383

384 # Select filename, lineno, func_name to track frames with

TypeError: object of type 'NoneType' has no len()

```

Thanks,

Kris

### Environment

colab default Environment

### Minimal Reproducible Example

https://colab.research.google.com/github/ultralytics/ultralytics/blob/main/examples/tutorial.ipynb

### Additional

_No response_

### Are you willing to submit a PR?

- [ ] Yes I'd like to help by submitting a PR!

|

open

|

2025-02-18T09:09:13Z

|

2025-02-21T01:35:52Z

|

https://github.com/ultralytics/ultralytics/issues/19292

|

[

"bug",

"non-reproducible",

"exports"

] |

kris-himax

| 5

|

koxudaxi/datamodel-code-generator

|

fastapi

| 2,232

|

[PydanticV2] Add parameter to use Python Regex Engine in order to support look-around

|

**Is your feature request related to a problem? Please describe.**

1. I wrote a valid JSON Schema with many properties

2. Some properties' patterns use look-ahead and look-behind, which the JSON Schema specification supports.

See: [JSON Schema supported patterns](https://json-schema.org/understanding-json-schema/reference/regular_expressions)

3. `datamodel-code-generator` generated the PydanticV2 models.

4. PydanticV2 doesn't support look-around, look-ahead and look-behind by default (see https://github.com/pydantic/pydantic/issues/7058)

```Python traceback

ImportError while loading [...]: in <module>

[...]

.venv/lib/python3.12/site-packages/pydantic/_internal/_model_construction.py:205: in __new__

complete_model_class(

.venv/lib/python3.12/site-packages/pydantic/_internal/_model_construction.py:552: in complete_model_class

cls.__pydantic_validator__ = create_schema_validator(

.venv/lib/python3.12/site-packages/pydantic/plugin/_schema_validator.py:50: in create_schema_validator

return SchemaValidator(schema, config)

E pydantic_core._pydantic_core.SchemaError: Error building "model" validator:

E SchemaError: Error building "model-fields" validator:

E SchemaError: Field "version":

E SchemaError: Error building "str" validator:

E SchemaError: regex parse error:

E ^((?!0[0-9])[0-9]+(\.(?!$)|)){2,4}$

E ^^^

E error: look-around, including look-ahead and look-behind, is not supported

```

**Describe the solution you'd like**

PydanticV2 supports look-around, look-ahead and look-behind using Python as regex engine: https://github.com/pydantic/pydantic/issues/7058#issuecomment-2156772918

I'd like to have a configuration parameter to use `python-re` as regex engine for Pydantic V2.
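
As a minimal sketch of why the Python engine helps, the snippet below compiles the pattern from the traceback with the standard-library `re` module, which accepts negative look-ahead. The helper name `is_valid_version` and the sample strings are illustrative assumptions; the sketch does not exercise Pydantic itself.

```python
import re

# Pattern copied from the traceback above; it relies on negative look-ahead.
VERSION_PATTERN = r"^((?!0[0-9])[0-9]+(\.(?!$)|)){2,4}$"

# Python's `re` engine compiles look-around constructs without complaint.
version_re = re.compile(VERSION_PATTERN)


def is_valid_version(value: str) -> bool:
    """Return True when `value` matches the schema's version pattern."""
    return version_re.match(value) is not None


# Illustrative inputs (not taken from the original schema).
for candidate in ["1.2", "1.2.3.4", "01.2", "1.2."]:
    print(candidate, is_valid_version(candidate))
```

If the models are built on a base class configured with `regex_engine='python-re'`, as in the workaround below, Pydantic is expected to delegate to this same engine.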

**Describe alternatives you've considered**

Workaround:

1. Create a custom BaseModel

```python

from pydantic import BaseModel, ConfigDict

class _BaseModel(BaseModel):

model_config = ConfigDict(regex_engine='python-re')

```

2. Use that class as BaseModel:

```sh

datamodel-codegen --base-model "module.with.basemodel._BaseModel"

```

EDIT:

Configuration used:

```ini

[tool.datamodel-codegen]

# Options

input = "<project>/data/schemas/"

input-file-type = "jsonschema"

output = "<project>/models/"

output-model-type = "pydantic_v2.BaseModel"

# Typing customization

base-class = "<project>.models._base_model._BaseModel"

enum-field-as-literal = "all"

use-annotated = true

use-standard-collections = true

use-union-operator = true

# Field customization

collapse-root-models = true

snake-case-field = true

use-field-description = true

# Model customization

disable-timestamp = true

enable-faux-immutability = true

target-python-version = "3.12"

use-schema-description = true

# OpenAPI-only options

#

# We may not want to use these options as we are not generating from OpenAPI schemas

# but this is a workaround to avoid `type | None` when we have a default value.

#

# The author of the tool doesn't know why he flagged this option as OpenAPI only.

# Reference: https://github.com/koxudaxi/datamodel-code-generator/issues/1441

strict-nullable = true

```

|

open

|

2024-12-17T15:39:44Z

|

2025-02-06T20:06:51Z

|

https://github.com/koxudaxi/datamodel-code-generator/issues/2232

|

[

"bug",

"help wanted"

] |

ilovelinux

| 4

|

deezer/spleeter

|

deep-learning

| 88

|

[Bug] Colab Runtime Disconnected

|

## Description

Was having trouble installing for local use, so I decided to try to use the Colab link.

## Steps to reproduce

1. Opened Colab environment

2. Replaced the wget command with

```

from google.colab import files

uploaded = files.upload()

```

3. Ran all steps in order

4. Uploaded a local mp3 file

5. Ran the rest of the steps

6. Got a successful message from the actual separate function, and files exist in the output directory

7. Tried to listen to the output file and got a "runtime disconnected" error

## Output

`!spleeter separate -i keenanvskel.mp3 -o output/`

Output from the split function

```bash

INFO:spleeter:Loading audio b'keenanvskel.mp3' from 0.0 to 600.0

INFO:spleeter:Audio data loaded successfully

INFO:spleeter:File output/keenanvskel/vocals.wav written

INFO:spleeter:File output/keenanvskel/accompaniment.wav written

```

```

!ls output/keenanvskel

accompaniment.wav vocals.wav

```

Then I ran this

`Audio('output/keenanvskel/vocals.wav')`

And got this response

`Runtime Disconnected`

## Environment

| | |

| ----------------- | ------------------------------- |

| OS | My laptop is running MacOS, but using Colab |

| Installation type | pip |

| RAM available | Got the green checkmark for both RAM and Disk in the Colab environment |

| Hardware spec | unsure |

## Additional context

Not sure if this is an issue with spleeter or with Colab. I love this project conceptually, so I was excited to try it out. I saw some other posts online about runtime disconnect errors in Colab, but everything I found seems to occur only when the runtime has been running for 15 minutes or more. This whole process took less than 5 minutes, and I've tried multiple times and keep getting the same error.

If this is not the correct place for this type of error, or if I am doing something wrong, feel free to close this bug and let me know what next steps I might take.

Will also attach some screenshots of the environment.

<img width="1273" alt="Screen Shot 2019-11-13 at 6 42 28 PM" src="https://user-images.githubusercontent.com/11081954/68817684-0e90bd00-0648-11ea-8f55-8228f81970d5.png">

<img width="1291" alt="Screen Shot 2019-11-13 at 6 42 41 PM" src="https://user-images.githubusercontent.com/11081954/68817685-0e90bd00-0648-11ea-8c1b-c6a94dafd747.png">

<img width="1277" alt="Screen Shot 2019-11-13 at 6 42 54 PM" src="https://user-images.githubusercontent.com/11081954/68817686-0e90bd00-0648-11ea-8738-3bec1b194081.png">

<img width="1301" alt="Screen Shot 2019-11-13 at 6 43 22 PM" src="https://user-images.githubusercontent.com/11081954/68817688-13ee0780-0648-11ea-8a8e-c468bd32bf79.png">

|

closed

|

2019-11-14T01:02:26Z

|

2024-03-19T16:51:17Z

|

https://github.com/deezer/spleeter/issues/88

|

[

"bug",

"invalid",

"wontfix"

] |

tiwonku

| 3

|

proplot-dev/proplot

|

data-visualization

| 332

|

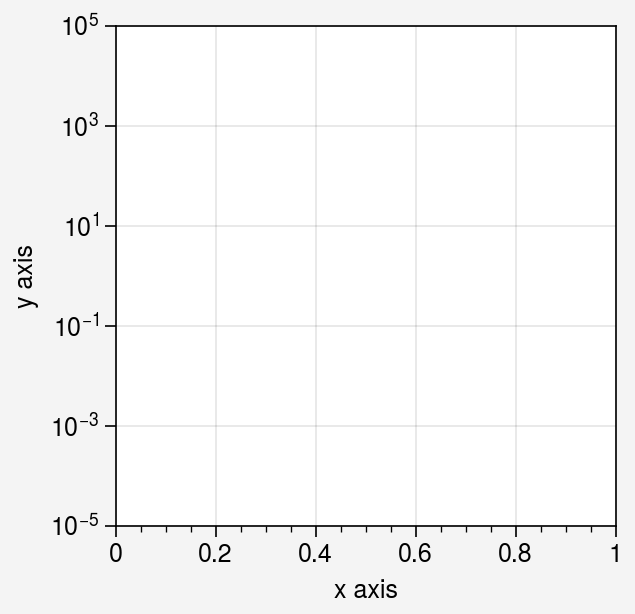

ticklabels of log scale axis should be 'log' by default?

|

I feel it might be more natural to use 'log' for ticklabels when log scale is used.

### Steps to reproduce

```python

import proplot as pplt

fig = pplt.figure()

ax = fig.subplot(xlabel='x axis', ylabel='y axis')

ax.format(yscale='log', ylim=(1e-5, 1e5))

```

### Steps for expected behavior

I can fix it by hand with `yticklabels='log'`

```python

import proplot as pplt

fig = pplt.figure()

ax = fig.subplot(xlabel='x axis', ylabel='y axis')

ax.format(yscale='log', ylim=(1e-5, 1e5), yticklabels='log')

```

### Proplot version

matplotlib: 3.4.3

proplot: 0.9.5

|

open

|

2022-01-29T16:56:23Z

|

2022-01-29T18:41:10Z

|

https://github.com/proplot-dev/proplot/issues/332

|

[

"enhancement"

] |

syrte

| 6

|

flasgger/flasgger

|

flask

| 512

|

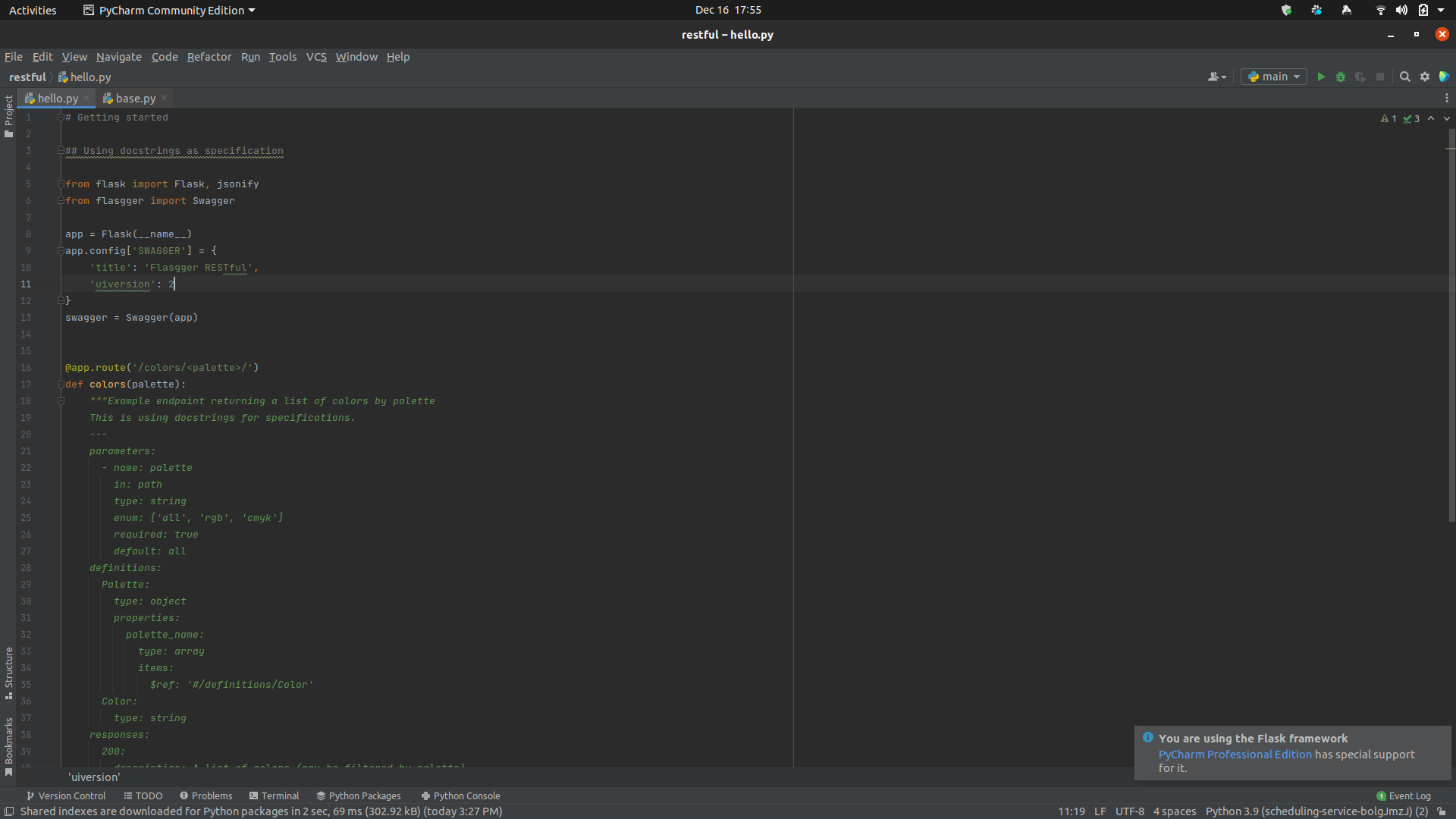

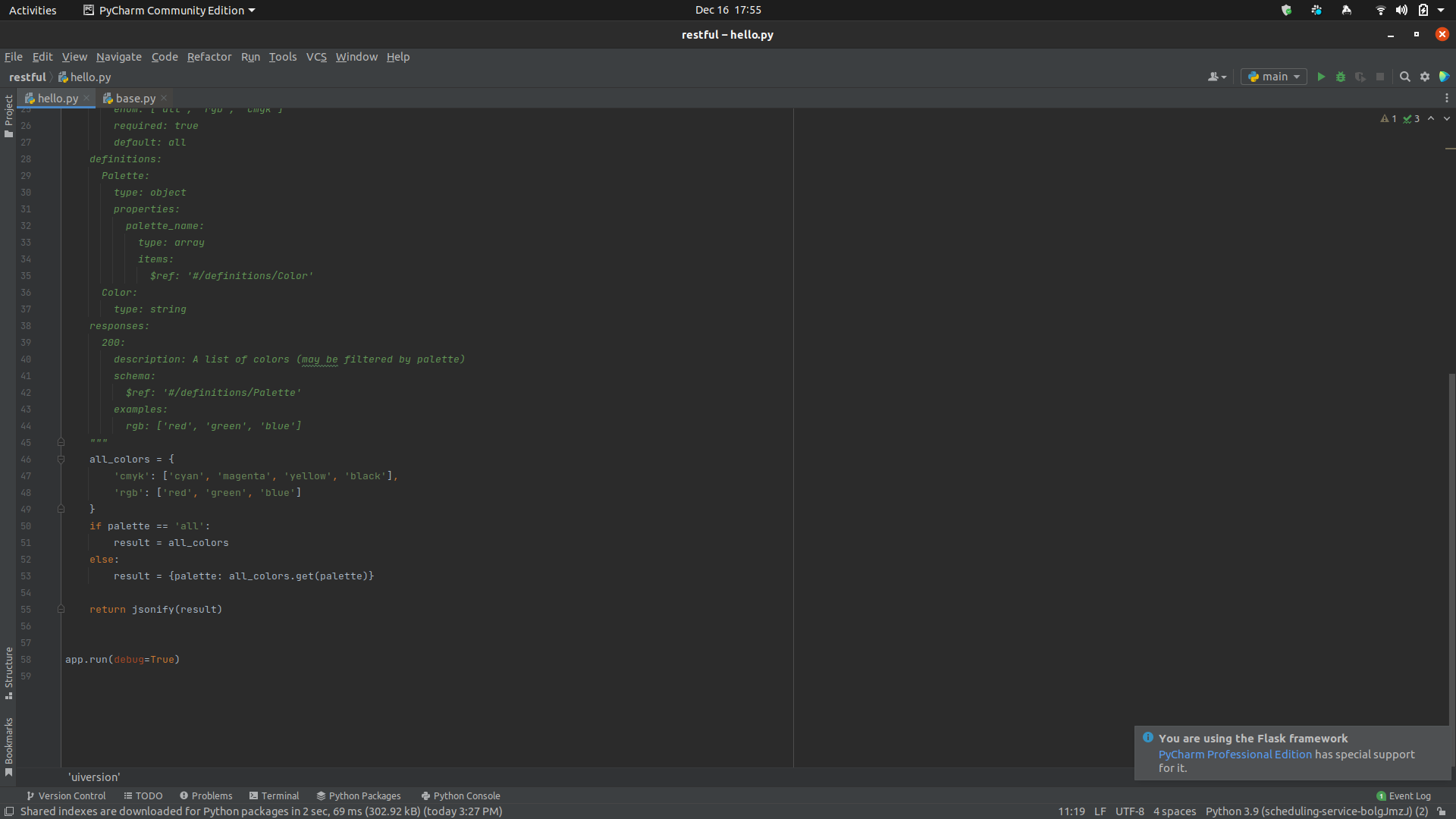

Flasgger Not Working

|

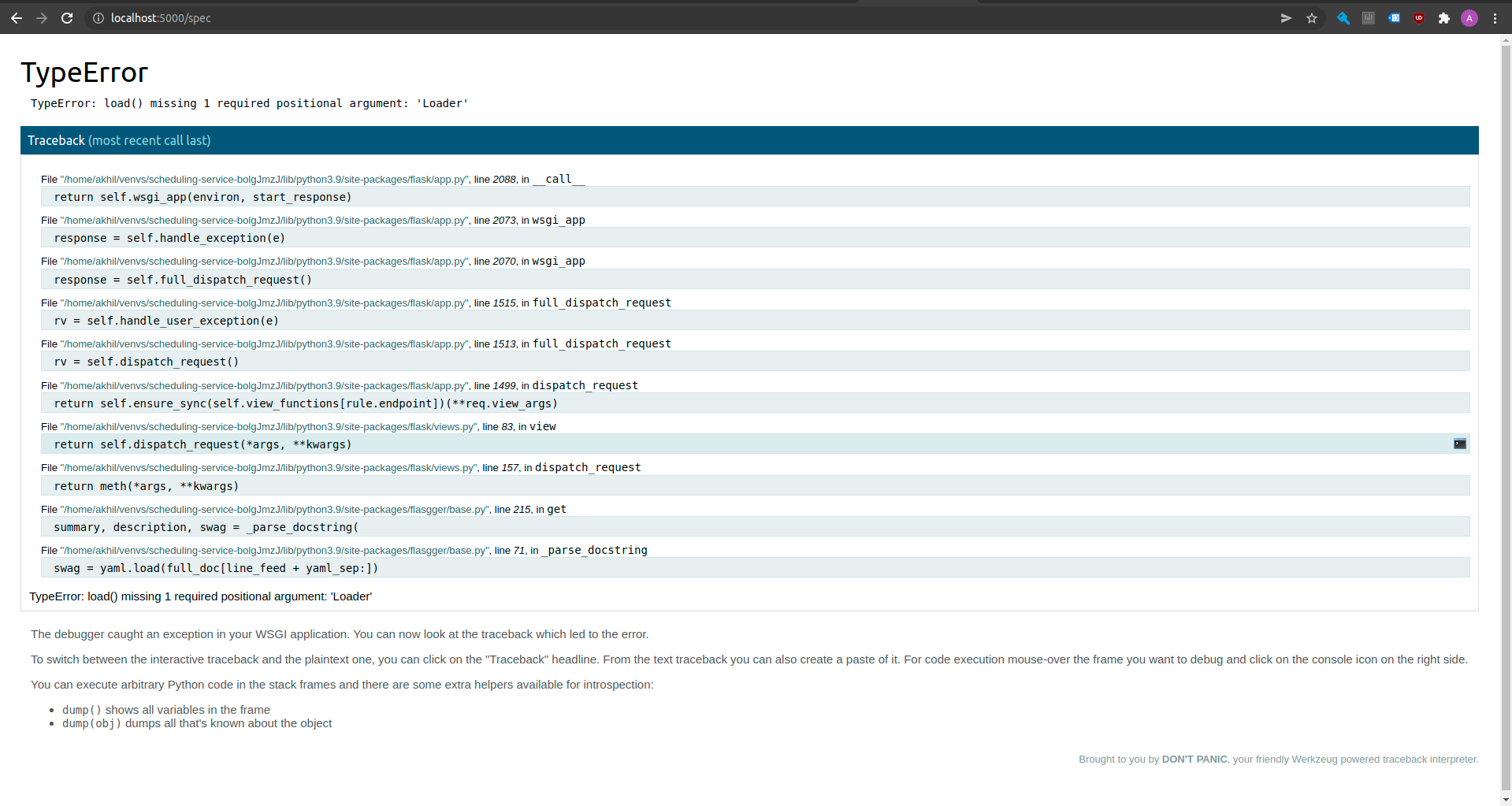

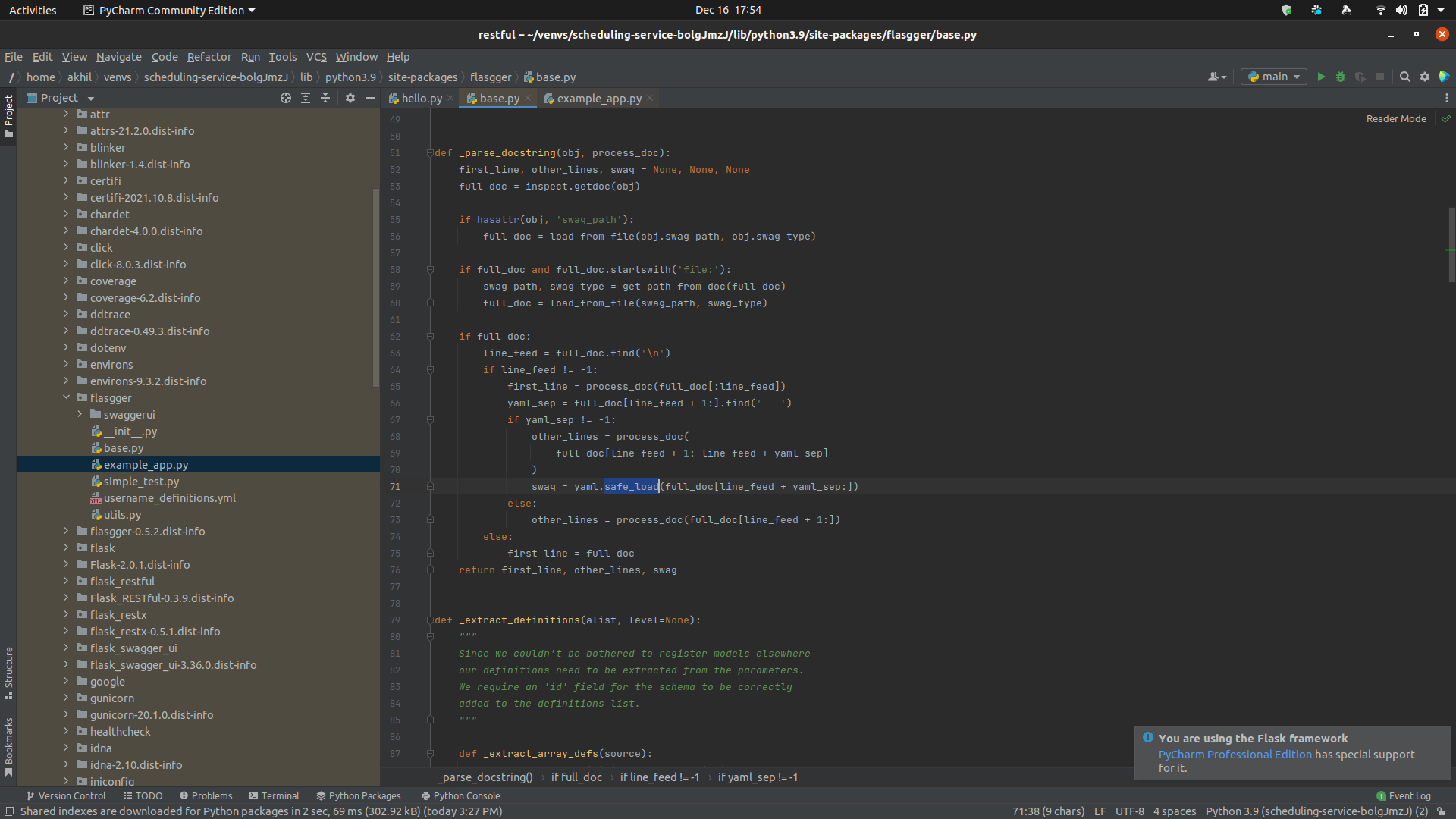

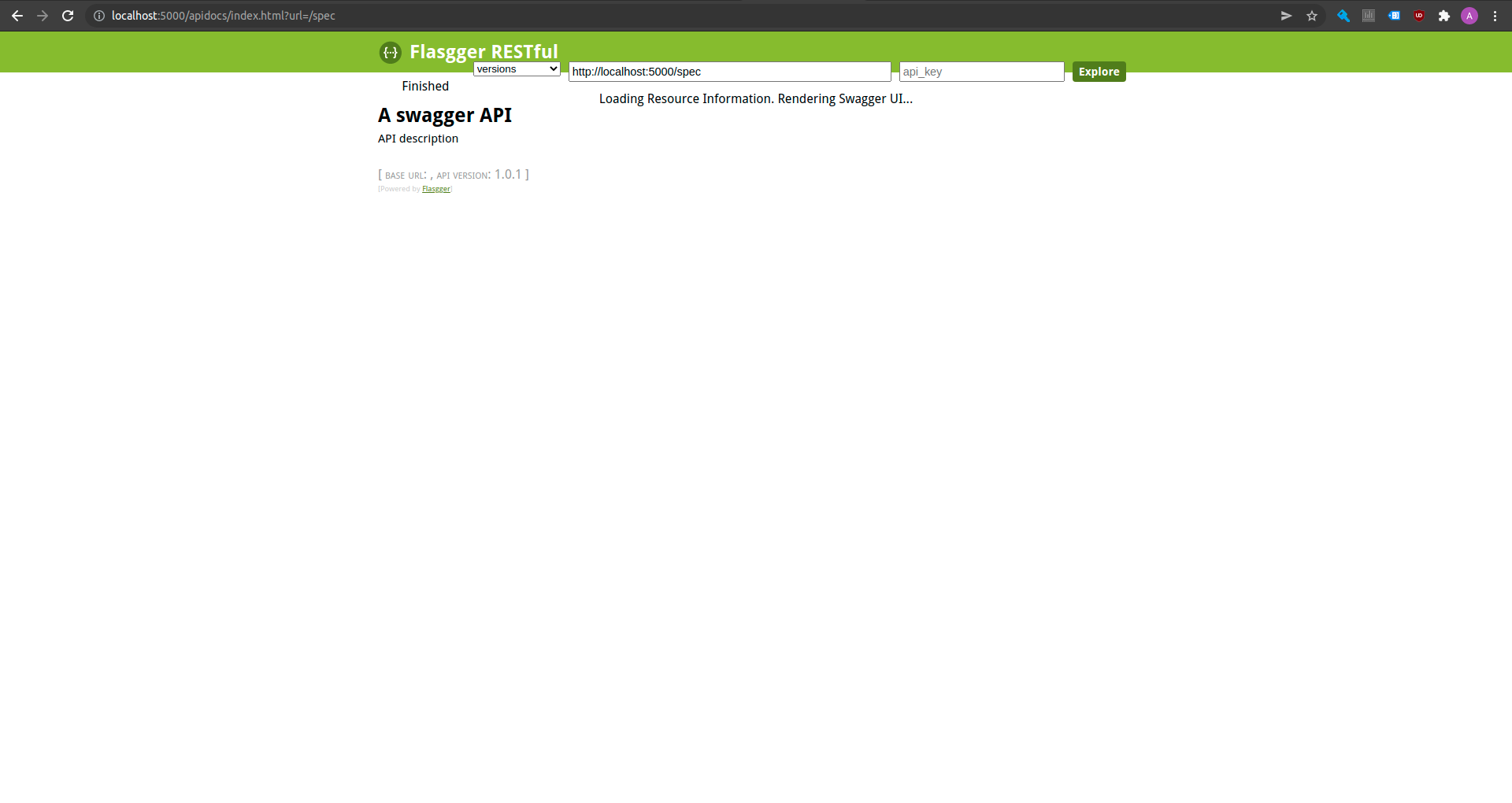

I tried the colors.py example app, but it isn't working.

It shows an infinite loader.

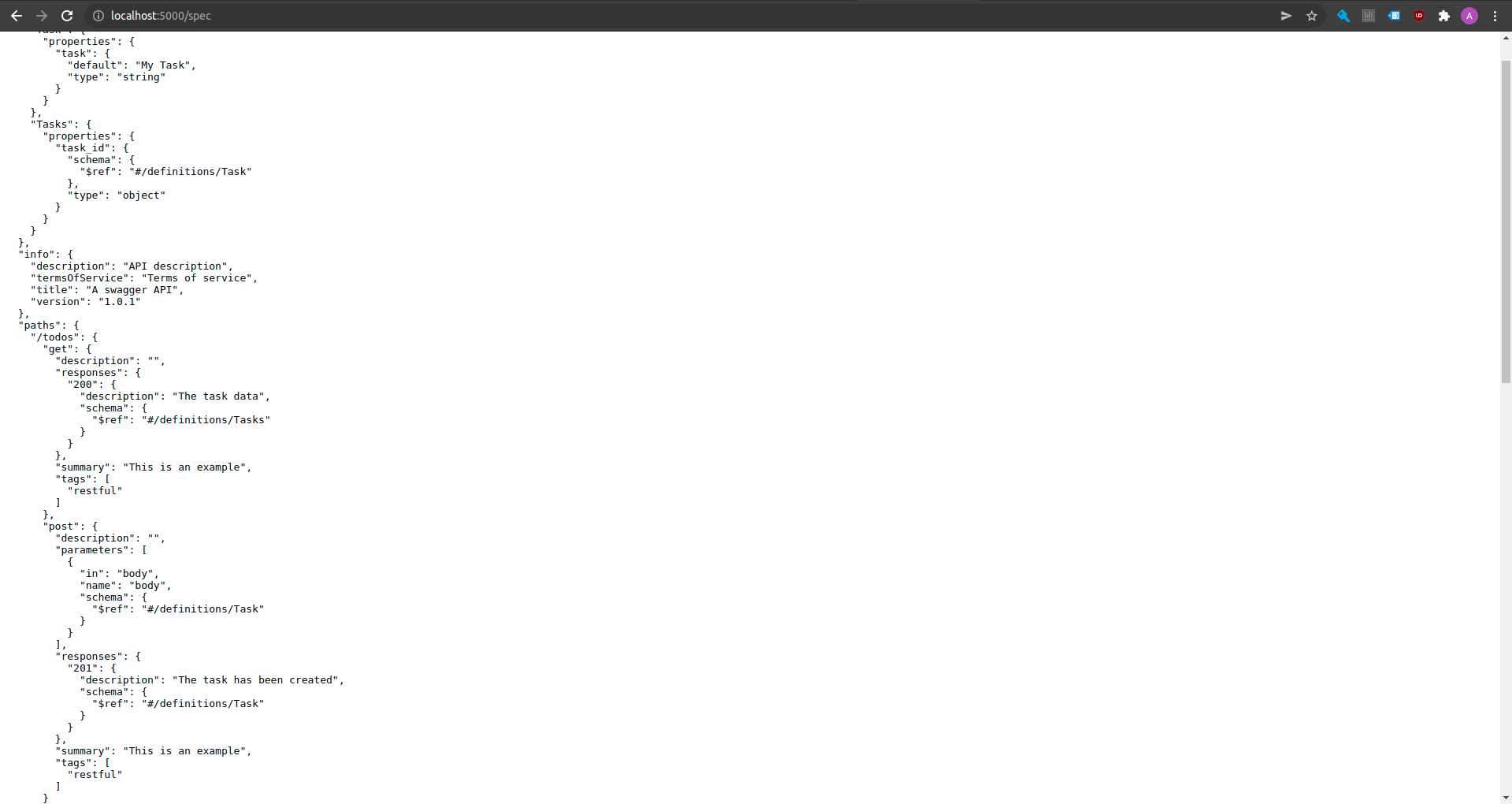

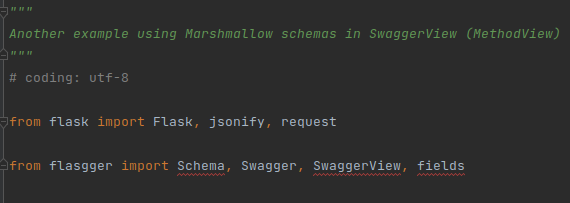

On checking http://localhost:5000/spec, I see the following error.

After googling, I fixed the error by using `yaml.safe_load`, but then I got stuck at the following screen.

I tried another example from https://github.com/flasgger/flasgger/blob/master/examples/restful.py

In this example the `swag_from` import is not available in flasgger; after removing it, I see the following empty Swagger screen.

But the spec file has all the required info.

I also tried this example https://github.com/flasgger/flasgger/blob/master/examples/marshmallow_apispec.py but its imports don't seem to be available in flasgger.

|

open

|

2021-12-16T12:24:48Z

|

2021-12-16T12:37:16Z

|

https://github.com/flasgger/flasgger/issues/512

|

[] |

akhilgargjosh

| 0

|

noirbizarre/flask-restplus

|

api

| 211

|

Unhelpful error when using validation and request has no Content-Type header

|

When no `content-type='application/json'` header is present, validation fails with `"": "None is not of type u'object'"`

Some examples:

```

~> curl http://localhost:5000/hello -X POST -d '{"name": "test"}'

{

"errors": {

"": "None is not of type u'object'"

},

"message": "Input payload validation failed"

}

~> curl http://localhost:5000/hello -X POST

{

"errors": {

"": "None is not of type u'object'"

},

"message": "Input payload validation failed"

}

```

And a successful request:

```

~> curl http://localhost:5000/hello -X POST -d '{"name": "test"}' -H "Content-Type: application/json"

{

"hello": "world"

}

```
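
For comparison, the successful `curl` call can be sketched in Python with the standard library. The helper name `build_json_post` is an assumption for illustration; the URL and payload are the ones from the examples above. The request is only constructed here, not sent.

```python
import json
import urllib.request


def build_json_post(url: str, payload: dict) -> urllib.request.Request:
    """Build a POST request whose body is JSON and whose header says so."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        # Without this header the server does not parse the body as JSON,
        # which is the situation that produces the validation error above.
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_json_post("http://localhost:5000/hello", {"name": "test"})
print(request.get_method(), request.full_url)
print(request.header_items())

# Sending it requires the Flask app from this report to be running:
# urllib.request.urlopen(request)
```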

This is the snippet I used, barely changed from the tutorials:

``` python

from flask import Flask

from flask_restplus import Resource, Api, fields

app = Flask(__name__)

api = Api(app)

resource_fields = api.model('Resource', {

'name': fields.String(required=True),

})

@api.route('/hello')

class HelloWorld(Resource):

@api.expect(resource_fields, validate=True)

def post(self):

return {'hello': 'world'}

if __name__ == '__main__':

app.run(debug=True)

```

|

open

|

2016-10-25T16:53:26Z

|

2019-08-16T13:31:35Z

|

https://github.com/noirbizarre/flask-restplus/issues/211

|

[

"bug"

] |

nfvs

| 3

|

vastsa/FileCodeBox

|

fastapi

| 198

|

Can't log in to the admin backend, not sure why

|

![Uploading Snipaste_2024-08-22_16-20-16.png…]()

|

closed

|

2024-08-22T08:20:58Z

|

2024-08-22T08:21:14Z

|

https://github.com/vastsa/FileCodeBox/issues/198

|

[] |

qiuyu2547

| 0

|

OFA-Sys/Chinese-CLIP

|

computer-vision

| 192

|

How can this tool be used for an image captioning task?

|

closed

|

2023-08-28T07:33:08Z

|

2023-08-28T13:01:42Z

|

https://github.com/OFA-Sys/Chinese-CLIP/issues/192

|

[] |

yazheng0307

| 2

|

|

sktime/sktime

|

scikit-learn

| 7,134

|

[ENH] `polars` schema checks - address performance warnings

|

The current schema checks for lazy `polars` based data types raise performance warnings, e.g.,

```

sktime/datatypes/tests/test_check.py::test_check_metadata_inference[Table-polars_lazy_table-fixture:1]

/home/runner/work/sktime/sktime/sktime/datatypes/_adapter/polars.py:234: PerformanceWarning: Determining the width of a LazyFrame requires resolving its schema, which is a potentially expensive operation. Use `LazyFrame.collect_schema().len()` to get the width without this warning.

metadata["n_features"] = obj.width - len(index_cols)

```

These should be addressed.

The tests to execute to check whether these warnings persist are those in the `datatypes` module - these are automatically executed for a change in the impacted file, on remote CI.

|

closed

|

2024-09-18T12:55:28Z

|

2024-10-06T19:35:47Z

|

https://github.com/sktime/sktime/issues/7134

|

[

"good first issue",

"module:datatypes",

"enhancement"

] |

fkiraly

| 0

|

chatanywhere/GPT_API_free

|

api

| 200

|

Paid API key returns a 429 error

|

**Describe the bug**

The paid API key returns a 429 error.

**To Reproduce**

The error appeared when I asked a follow-up question after sending one message. I had not run into this problem before.

**Screenshots**

**Tools or Programming Language**

Botgem

**Additional context**

Add any other context about the problem here.

|

closed

|

2024-03-25T05:31:15Z

|

2024-03-25T19:30:00Z

|

https://github.com/chatanywhere/GPT_API_free/issues/200

|

[] |

Sanguine-00

| 1

|

open-mmlab/mmdetection

|

pytorch

| 11,411

|

infer error about visualizer

|

mmdetection/mmdet/visualization/local_visualizer.py", line 153, in _draw_instances

label_text = classes[

IndexError: tuple index out of range

https://github.com/open-mmlab/mmdetection/blob/44ebd17b145c2372c4b700bfb9cb20dbd28ab64a/mmdet/visualization/local_visualizer.py#L153-L154

|

open

|

2024-01-19T19:15:23Z

|

2024-01-20T04:53:14Z

|

https://github.com/open-mmlab/mmdetection/issues/11411

|

[] |

dongfeicui

| 1

|

peerchemist/finta

|

pandas

| 31

|

EMA calculation not matching with Tv output

|

Hi

Can you please check the EMA calculation? The output is close to the TV output but does not match it.

It would be better to test this against TV and realign the code.

|

closed

|

2019-06-08T08:41:44Z

|

2020-01-19T12:56:15Z

|

https://github.com/peerchemist/finta/issues/31

|

[] |

mbmarx

| 2

|

lepture/authlib

|

flask

| 408

|

authorize_access_token broken POST?

|

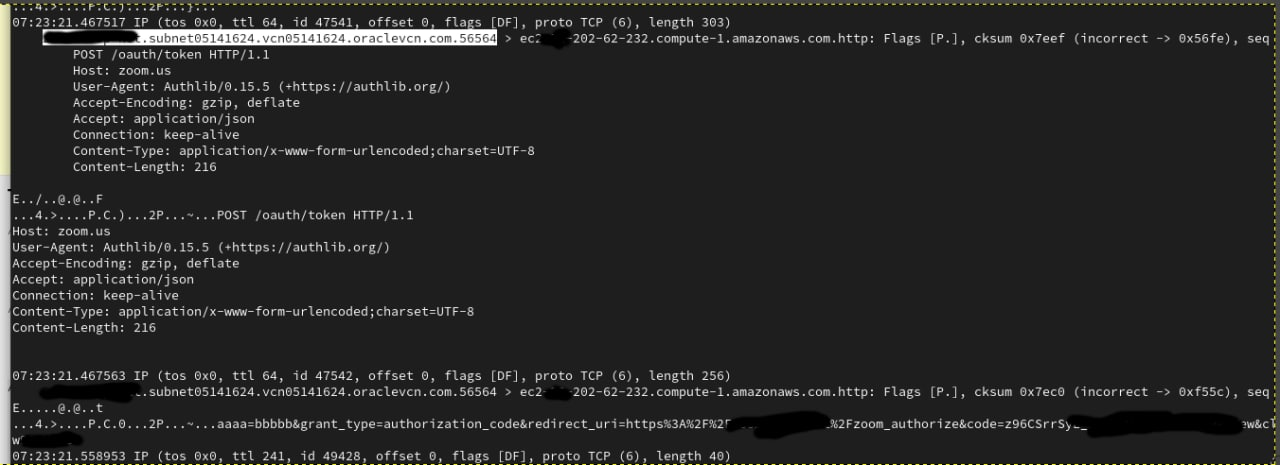

Hello, I'm not sure whether this is a bug, but when I use `authorize_access_token` there are no params in the POST body. As I understand it, they are sent in a new TCP packet (see the tcpdump picture)?

```

oauth = OAuth()

oauth.register(

'zoom',

client_id=os.environ['ZOOM_CLIENT_ID'],

client_secret=os.environ['ZOOM_CLIENT_SECRET'],

access_token_url='http://zoom.us/oauth/token',

refresh_token_url='http://zoom.us/oauth/token',

authorize_url='http://zoom.us/oauth/authorize',

client_kwargs={'token_endpoint_auth_method': 'client_secret_post'},

)

def zoom_login(request):

redirect_uri = request.build_absolute_uri('/zoom_authorize')

return oauth.zoom.authorize_redirect(request, redirect_uri)

def zoom_authorize(request):

print(request)

token = oauth.zoom.authorize_access_token(request, body='aaaa=bbbbb')

#resp = oauth.zoom.get('user', token=token)

#resp.raise_for_status()

#profile = resp.json()

# do something with the token and profile

#return profile

```

I've started tcpdump and can see this:

I think it should be something like this?

```

POST /oauth/token HTTP/1.1

Host: zoom.us

User-Agent: Authlib/0.15.5 (+https://authlib.org/)

Accept-Encoding: gzip, deflate

Accept: application/json

Connection: keep-alive

Content-Type: application/x-www-form-urlencoded;charset=UTF-8

Content-Length: 216

aaa=bbb&client_id=xaaaaa&client_secret=aaaaaaaa&redirect_uri=ffsadfasd

```
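
As a small sketch of how a form-encoded body like the expected one above is assembled, the snippet below uses `urllib.parse.urlencode` on the placeholder values from that request. It illustrates the `application/x-www-form-urlencoded` format only; it is not a trace of what Authlib does internally.

```python
from urllib.parse import urlencode

# Placeholder values copied from the expected request above.
params = {
    "aaa": "bbb",
    "client_id": "xaaaaa",
    "client_secret": "aaaaaaaa",
    "redirect_uri": "ffsadfasd",
}

# Form encoding joins key=value pairs with "&" in insertion order.
body = urlencode(params)

# Content-Length is the size of the encoded body in bytes.
content_length = len(body.encode("utf-8"))

print(body)
print(content_length)
```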

|

closed

|

2021-12-04T07:35:07Z

|

2022-03-15T10:28:31Z

|

https://github.com/lepture/authlib/issues/408

|

[

"bug"

] |

akam-it

| 2

|

flasgger/flasgger

|

flask

| 497

|

TypeError: issubclass() arg 2 must be a class or tuple of classes (when apispec not installed)

|

I defined a Schema using marshmallow, but I was not able to see the Swagger UI because apispec was not installed, and I ended up with a very vague error:

`File "C:\gryton\projects\turbo_auta_z_usa\venv\Lib\site-packages\flasgger\marshmallow_apispec.py", line 118, in convert_schemas

if inspect.isclass(v) and issubclass(v, Schema):

TypeError: issubclass() arg 2 must be a class or tuple of classes`

While debugging, I found that this case seems to have been considered already, since `marshmallow_apispec` contains:

```python

if Schema is None:

raise RuntimeError('Please install marshmallow and apispec')

```

But this check runs too late, because `issubclass(v, Schema)` is evaluated first.

I would move this check to the start of `convert_schemas`, so that it runs once per function call and verifies that the needed libraries are installed. A user who makes the same mistake as me would then receive a more meaningful error.
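
A minimal sketch of the proposed ordering, assuming the optional import failed and left `Schema` as `None`. The function `convert_schemas_sketch` is a hypothetical stand-in, not flasgger's actual `convert_schemas`; only the position of the guard is the point.

```python
import inspect

# Simulates the state after a failed optional import (assumption for the sketch).
Schema = None


def convert_schemas_sketch(definitions: dict) -> dict:
    """Hypothetical stand-in for `convert_schemas` with the guard moved first."""
    # Checked once, before any issubclass() call can see Schema=None.
    if Schema is None:
        raise RuntimeError("Please install marshmallow and apispec")

    converted = {}
    for key, value in definitions.items():
        if inspect.isclass(value) and issubclass(value, Schema):
            converted[key] = value.__name__
        else:
            converted[key] = value
    return converted


try:
    convert_schemas_sketch({"user": dict})
except RuntimeError as exc:
    # The user now sees the actionable message instead of a TypeError.
    print(exc)
```

Without the early guard, `issubclass(dict, None)` raises the `TypeError` quoted in the report.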

|

open

|

2021-09-23T14:21:16Z

|

2021-09-23T14:21:16Z

|

https://github.com/flasgger/flasgger/issues/497

|

[] |

Gryton

| 0

|

serengil/deepface

|

machine-learning

| 897

|

Got an assertion error when installing deepface

|

I got an error like this when installing deepface:

```

#13 138.8 Building wheel for fire (setup.py): finished with status 'done'

#13 138.8 Created wheel for fire: filename=fire-0.5.0-py2.py3-none-any.whl size=116951 sha256=f36f3a2c5b1987dda9e4c020d73b1617adb6c59ae81200fcf4920bd419c4a21f

#13 138.8 Stored in directory: /root/.cache/pip/wheels/90/d4/f7/9404e5db0116bd4d43e5666eaa3e70ab53723e1e3ea40c9a95

#13 138.8 Successfully built imface fire

#13 138.8 ERROR: Exception:

#13 138.8 Traceback (most recent call last):

#13 138.8 File "/usr/lib/python3/dist-packages/pip/_internal/cli/base_command.py", line 165, in exc_logging_wrapper

#13 138.8 status = run_func(*args)

#13 138.8 File "/usr/lib/python3/dist-packages/pip/_internal/cli/req_command.py", line 205, in wrapper

#13 138.8 return func(self, options, args)

#13 138.8 File "/usr/lib/python3/dist-packages/pip/_internal/commands/install.py", line 389, in run

#13 138.8 to_install = resolver.get_installation_order(requirement_set)

#13 138.8 File "/usr/lib/python3/dist-packages/pip/_internal/resolution/resolvelib/resolver.py", line 188, in get_installation_order

#13 138.8 weights = get_topological_weights(

#13 138.8 File "/usr/lib/python3/dist-packages/pip/_internal/resolution/resolvelib/resolver.py", line 276, in get_topological_weights

#13 138.8 assert len(weights) == expected_node_count

#13 138.8 AssertionError

```

|

closed

|

2023-11-29T05:12:49Z

|

2023-11-29T05:13:08Z

|

https://github.com/serengil/deepface/issues/897

|

[] |

ghost

| 0

|

tfranzel/drf-spectacular

|

rest-api

| 1,382

|

Inconsistency between [AllowAny] and [] with permission_classes in schema generation

|

**Describe the bug**

When using AllowAny or IsAuthenticatedOrReadOnly, opening the openapi doc using swagger looks like this:

**To Reproduce**

Create an APIView or an @api_view that overrides the permission_classes with [AllowAny]

**Expected behavior**

Instead, the swagger page should look like this for those endpoints, because they don't require any authentication:

**Workaround**

In Django, omitting AllowAny when it's the only permission class doesn't change any behavior, but in drf-spectacular it fixes this issue and properly displays the open lock icon in Swagger.

**Insight**

I think the issue comes from these lines:

https://github.com/tfranzel/drf-spectacular/blob/205f898ff38f3470bf877f709fcf7e582c83c14d/drf_spectacular/openapi.py#L365-L368

where adding `{}` as a scheme has the effect of requiring auth in Swagger.

|

open

|

2025-02-17T12:46:12Z

|

2025-02-17T17:50:07Z

|

https://github.com/tfranzel/drf-spectacular/issues/1382

|

[] |

ldeluigi

| 6

|

Lightning-AI/LitServe

|

rest-api

| 195

|

move `wrap_litserve_start` to utils

|

> I would rather reserve `conftest` for fixtures and this (seems to be) general functionality move to another utils module

_Originally posted by @Borda in https://github.com/Lightning-AI/LitServe/pull/190#discussion_r1705957686_

|

closed

|

2024-08-06T18:39:12Z

|

2024-08-12T10:49:09Z

|

https://github.com/Lightning-AI/LitServe/issues/195

|

[

"good first issue",

"help wanted"

] |

aniketmaurya

| 5

|

proplot-dev/proplot

|

data-visualization

| 301

|

Colormaps Docs Error

|

### Description

The latest docs have an error in the Colormaps section: https://proplot.readthedocs.io/en/latest/colormaps.html#

### Steps to reproduce

```python

import proplot as pplt

fig, axs = pplt.show_cmaps(rasterize=True)

```

**Expected behavior**: A list of the included colormaps.

**Actual behavior**:

```TypeError: '>=' not supported between instances of 'list' and 'float'```

|

closed

|

2021-11-06T19:09:17Z

|

2021-11-09T18:19:22Z

|

https://github.com/proplot-dev/proplot/issues/301

|

[

"bug"

] |

pratiman-91

| 2

|

getsentry/sentry

|

django

| 87,159

|

Solve bolding of starred projects

|

closed

|

2025-03-17T10:12:00Z

|

2025-03-17T12:52:06Z

|

https://github.com/getsentry/sentry/issues/87159

|

[] |

matejminar

| 0

|

|

Avaiga/taipy

|

data-visualization

| 2,388

|

Add Taipy-specific and hidden parameters to navigate()

|

### Description

We had a request to allow a Taipy application to query another Taipy application page, potentially served by another Taipy GUI application.

This can be done using the *params* parameter to `navigate()`, but then the URL is extended with a query string that the requester wants to hide, for security reasons.

### Solution Proposed

`navigate()` will gain a *hidden_params* parameter, with the same semantics as *params*: a dictionary that holds string keys and serializable values.

The `on_navigate` callback will receive an additional *hidden_params* parameter, set to a dictionary that reflects what was provided in the call to `navigate()`.

### Impact of Solution

Those parameters are encoded in the GET query header, so no POST is mandatory.

To avoid unintentionally overwriting the original header configuration, each hidden parameter is propagated with an internal (and undocumented) prefix that identifies it before it is encoded in the query header.

Taipy GUI will decipher all that before `on_navigate` is triggered.
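
The prefixing idea can be sketched generically as follows. Everything here is hypothetical: the prefix `x-hidden-param-`, the helper names, and the JSON serialization are assumptions made for illustration and are not Taipy's internal prefix or API.

```python
import json

# Hypothetical prefix; the real one is internal and undocumented.
HIDDEN_PREFIX = "x-hidden-param-"


def encode_hidden_params(hidden_params: dict) -> dict:
    """Turn hidden parameters into prefixed header entries."""
    return {
        HIDDEN_PREFIX + key: json.dumps(value)
        for key, value in hidden_params.items()
    }


def split_headers(headers: dict) -> tuple:
    """Separate prefixed hidden parameters from ordinary headers."""
    hidden, regular = {}, {}
    for name, value in headers.items():
        if name.lower().startswith(HIDDEN_PREFIX):
            hidden[name[len(HIDDEN_PREFIX):]] = json.loads(value)
        else:
            regular[name] = value
    return hidden, regular


# Ordinary headers stay untouched; hidden ones never appear in the URL.
headers = {"Accept": "text/html", **encode_hidden_params({"token": "abc", "page": 3})}
hidden, regular = split_headers(headers)
print(hidden)
print(regular)
```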

### Acceptance Criteria

- [ ] If applicable, a new demo code is provided to show the new feature in action.

- [ ] Integration tests exhibiting how the functionality works are added.

- [ ] Any new code is covered by unit tests.

- [ ] Check code coverage is at least 90%.

- [ ] Related issue(s) in taipy-doc are created for documentation and Release Notes are updated.

### Code of Conduct

- [X] I have checked the [existing issues](https://github.com/Avaiga/taipy/issues?q=is%3Aissue+).

- [ ] I am willing to work on this issue (optional)

|

open

|

2025-01-09T13:55:18Z

|

2025-01-31T13:27:58Z

|

https://github.com/Avaiga/taipy/issues/2388

|

[

"🖰 GUI",

"🟧 Priority: High",

"✨New feature",

"📝Release Notes"

] |

FabienLelaquais

| 1

|

marimo-team/marimo

|

data-visualization

| 3,869

|

Code Is Repeated after Minimizing Marimo Interface

|

### Describe the bug

Whenever I multitask with marimo and other tools, once I go back to marimo after minimizing it, the code in all cells is repeated multiple times, which makes marimo hard to use.

I tried upgrading to a newer version. The same issue persists.

### Environment

<details>

```

{

"marimo": "0.11.7",

"OS": "Windows",

"OS Version": "11",

"Processor": "Intel64 Family 6 Model 186 Stepping 2, GenuineIntel",

"Python Version": "3.12.4",

"Binaries": {

"Browser": "132.0.6834.196",

"Node": "--"

},

"Dependencies": {

"click": "8.1.7",

"docutils": "0.18.1",

"itsdangerous": "2.2.0",

"jedi": "0.18.1",

"markdown": "3.7",

"narwhals": "1.16.0",

"packaging": "23.2",

"psutil": "5.9.0",

"pygments": "2.15.1",

"pymdown-extensions": "10.12",

"pyyaml": "6.0.1",

"ruff": "0.8.2",

"starlette": "0.41.3",

"tomlkit": "0.13.2",

"typing-extensions": "4.11.0",

"uvicorn": "0.32.1",

"websockets": "14.1"

},

"Optional Dependencies": {

"altair": "5.0.1",

"anywidget": "0.9.13",

"duckdb": "1.1.3",

"pandas": "2.2.2",

"polars": "1.22.0",

"pyarrow": "14.0.2"

},

"Experimental Flags": {

"multi_column": true,

"tracing": true,

"rtc": true

}

}

```

</details>

### Code to reproduce

import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\...Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\....Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\.. Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\....Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\....Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\....Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\... Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\..Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\..Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\..Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\...Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\.....Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\....Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\.... Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\...Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")import pandas as pd

import warnings

warnings.simplefilter(action='ignore', category=FutureWarning)

df = pd.read_excel(r"C:\Users\ASUS\Desktop\...Pigging Aug\TARC Frac Job Analysis\Lone Star All.xlsx")

|

closed

|

2025-02-21T10:04:25Z

|

2025-02-25T02:00:27Z

|

https://github.com/marimo-team/marimo/issues/3869

|

[

"bug"

] |

Nashat90

| 1

|

huggingface/transformers

|

python

| 36,769

|

Add Audio inputs available in apply_chat_template

|

### Feature request

Hello, I would like to request support for audio processing in the apply_chat_template function.

### Motivation

With the rapid advancement of multimodal models, audio processing has become increasingly crucial alongside image and text inputs. Models like Qwen2-Audio, Phi-4-multimodal, and various others now support audio understanding, making this feature essential for modern AI applications.

Supporting audio inputs would enable:

```python

messages = [

{

"role": "system",

"content": [{"type": "text", "text": "You are a helpful assistant."}]

},

{

"role": "user",

"content": [

{"type": "image", "image": "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/bee.jpg"},

{"type": "audio", "audio": "https://huggingface.co/microsoft/Phi-4-multimodal-instruct/resolve/main/examples/what_is_shown_in_this_image.wav"},

{"type": "text", "text": "Follow the instruction in the audio with this image."}

]

}

]

```

This enhancement would significantly expand the capabilities of the library to handle the full spectrum of multimodal inputs that state-of-the-art models now support, keeping the transformers library at the forefront of multimodal AI development.

### Your contribution

I've tested this implementation with several multimodal models and it works well for processing audio inputs alongside images and text. I'd be happy to contribute this code to the repository if there's interest.

|

open

|

2025-03-17T17:05:46Z

|

2025-03-17T20:41:41Z

|

https://github.com/huggingface/transformers/issues/36769

|

[

"Feature request"

] |

junnei

| 1

|

mlfoundations/open_clip

|

computer-vision

| 761

|

Gradient accumulation may require scaling before backward

|

In the function `train_one_epoch`, in the file [src/training/train.py](https://github.com/mlfoundations/open_clip/blob/main/src/training/train.py#L159), lines 156 to 162 are as shown below:

```python

losses = loss(**inputs, **inputs_no_accum, output_dict=True)

del inputs

del inputs_no_accum

total_loss = sum(losses.values())

losses["loss"] = total_loss

backward(total_loss, scaler)

```

Shouldn't we take the average of loss for gradient accumulation before calling backward()?
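
The arithmetic behind the question can be sketched in plain Python for the generic case where each micro-batch loss is a mean over that micro-batch and gradients are summed across steps. The toy loss, the data, and the names are assumptions. Whether this applies to `train_one_epoch` depends on how the loss is computed over the accumulated features, which the sketch does not model.

```python
def grad_mse(w, xs, ys):
    """Gradient w.r.t. w of mean((w*x - y)**2) over one batch."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


w = 0.5
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

# Reference: gradient of the mean loss over the full batch.
full_batch_grad = grad_mse(w, xs, ys)

# Two equally sized micro-batches, accumulated by summing gradients.
micro_batches = [(xs[:2], ys[:2]), (xs[2:], ys[2:])]
accum_steps = len(micro_batches)

# Summing unscaled per-micro-batch gradients overshoots by accum_steps.
unscaled = sum(grad_mse(w, bx, by) for bx, by in micro_batches)

# Dividing each micro-batch loss (hence gradient) by accum_steps matches.
scaled = sum(grad_mse(w, bx, by) / accum_steps for bx, by in micro_batches)

print(full_batch_grad, unscaled, scaled)
```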

|

open

|

2023-12-12T17:46:49Z

|

2023-12-22T01:25:45Z

|

https://github.com/mlfoundations/open_clip/issues/761

|

[] |

yonghanyu

| 1

|

widgetti/solara

|

jupyter

| 206

|

Add release notes

|

As the Solara package gains wider adoption, it becomes increasingly important to provide users with a convenient way to track changes and make informed decisions when selecting versions. Adding release notes would greatly benefit users.

|

open

|

2023-07-11T12:39:34Z

|

2023-10-02T17:01:31Z

|

https://github.com/widgetti/solara/issues/206

|

[] |

lp9052

| 3

|

3b1b/manim

|

python

| 2,125

|

No video is generated

|

Showing the following error

Manim Extension XTerm

Serves as a terminal for logging purpose.

Extension Version 0.2.13

MSV d:\All document\VS Code\Python>"manim" "d:\All document\VS Code\Python\new2.py" CreateCircle

Manim Community v0.18.0

Animation 0: Create(Circle): 0%| | 0/60 [00:00<?, ?it/s][rawvideo @ 0194dfa0]Estimating duration from bitrate, this may be inaccurate

Input #0, rawvideo, from 'pipe:':

Duration: N/A, start: 0.000000, bitrate: N/A

Stream #0.0: Video: rawvideo, rgba, 1920x1080, 60 tbr, 60 tbn, 60 tbc

Unknown encoder 'libx264'

+--------------------- Traceback (most recent call last) ---------------------+

| C:\tools\Manim\Lib\site-packages\manim\cli\render\commands.py:115 in render |

| |

| 112 try: |

| 113 with tempconfig({}): |

| 114 scene = SceneClass() |

| > 115 scene.render() |

| 116 except Exception: |

| 117 error_console.print_exception() |

| 118 sys.exit(1) |

| |

| C:\tools\Manim\Lib\site-packages\manim\scene\scene.py:223 in render |

| |

| 220 """ |

| 221 self.setup() |

| 222 try: |

| > 223 self.construct() |

| 224 except EndSceneEarlyException: |

| 225 pass |

| 226 except RerunSceneException as e: |

| |

| d:\All document\VS Code\Python\new2.py:6 in construct |

| |

| 3 def construct(self): |

| 4 circle = Circle() # create a circle |

| 5 circle.set_fill(PINK, opacity=0.5) # set the color and transpa |

| > 6 self.play(Create(circle)) # show the circle on screen |

| 7 self.wait(1) |

| 8 |

| |

| C:\tools\Manim\Lib\site-packages\manim\scene\scene.py:1080 in play |

| |

| 1077 return |

| 1078 |

| 1079 start_time = self.renderer.time |

| > 1080 self.renderer.play(self, *args, **kwargs) |

| 1081 run_time = self.renderer.time - start_time |

| 1082 if subcaption: |

| 1083 if subcaption_duration is None: |

| |

| C:\tools\Manim\Lib\site-packages\manim\renderer\cairo_renderer.py:104 in |

| play |

| |

| 101 # In this case, as there is only a wait, it will be the l |

| 102 self.freeze_current_frame(scene.duration) |

| 103 else: |

| > 104 scene.play_internal() |

| 105 self.file_writer.end_animation(not self.skip_animations) |

| 106 |

| 107 self.num_plays += 1 |

| |

| C:\tools\Manim\Lib\site-packages\manim\scene\scene.py:1245 in play_internal |

| |

| 1242 for t in self.time_progression: |

| 1243 self.update_to_time(t) |

| 1244 if not skip_rendering and not self.skip_animation_previe |

| > 1245 self.renderer.render(self, t, self.moving_mobjects) |

| 1246 if self.stop_condition is not None and self.stop_conditi |

| 1247 self.time_progression.close() |

| 1248 break |

| |

| C:\tools\Manim\Lib\site-packages\manim\renderer\cairo_renderer.py:150 in |

| render |

| |

| 147 |

| 148 def render(self, scene, time, moving_mobjects): |

| 149 self.update_frame(scene, moving_mobjects) |

| > 150 self.add_frame(self.get_frame()) |

| 151 |

| 152 def get_frame(self): |

| 153 """ |

| |

| C:\tools\Manim\Lib\site-packages\manim\renderer\cairo_renderer.py:180 in |

| add_frame |

| |

| 177 return |

| 178 self.time += num_frames * dt |

| 179 for _ in range(num_frames): |

| > 180 self.file_writer.write_frame(frame) |

| 181 |

| 182 def freeze_current_frame(self, duration: float): |

| 183 """Adds a static frame to the movie for a given duration. The |

| |

| C:\tools\Manim\Lib\site-packages\manim\scene\scene_file_writer.py:391 in |

| write_frame |

| |

| 388 elif config.renderer == RendererType.CAIRO: |

| 389 frame = frame_or_renderer |

| 390 if write_to_movie(): |

| > 391 self.writing_process.stdin.write(frame.tobytes()) |

| 392 if is_png_format() and not config["dry_run"]: |

| 393 self.output_image_from_array(frame) |

| 394 |

+-----------------------------------------------------------------------------+

OSError: [Errno 22] Invalid argument

[19512] Execution returned code=1 in 2.156 seconds returned signal null
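
The `Unknown encoder 'libx264'` line in the log above suggests that the ffmpeg build found on `PATH` was compiled without the libx264 encoder, which would explain why the pipe closes and the later `stdin.write` fails. A minimal sketch for checking this, assuming an `ffmpeg` executable is on `PATH`; the helper names `has_encoder` and `ffmpeg_encoders` are hypothetical and not part of Manim:

```python
import shutil
import subprocess


def has_encoder(encoders_output: str, name: str = "libx264") -> bool:
    """Return True if `name` is listed as an encoder in `ffmpeg -encoders` output."""
    for line in encoders_output.splitlines():
        fields = line.split()
        # Encoder rows have the shape: <flags> <encoder name> <description...>
        if len(fields) >= 2 and fields[1] == name:
            return True
    return False


def ffmpeg_encoders() -> str:
    """Return the text printed by `ffmpeg -encoders`, or "" if ffmpeg is not found."""
    ffmpeg = shutil.which("ffmpeg")
    if ffmpeg is None:
        return ""
    result = subprocess.run(
        [ffmpeg, "-encoders"], capture_output=True, text=True, check=False
    )
    return result.stdout
```

Calling `has_encoder(ffmpeg_encoders())` returns `False` when the encoder is missing; in that case, installing an ffmpeg build that includes libx264 is the likely fix.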

|

closed

|

2024-04-26T19:50:07Z

|

2024-04-26T20:16:04Z

|

https://github.com/3b1b/manim/issues/2125

|

[] |

ghost

| 0

|

ultralytics/ultralytics

|

deep-learning

| 19,632

|

about precision --Yolov11--fromdocker

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/orgs/ultralytics/discussions) and found no similar questions.

### Question

# About precision

This is my first time using Ultralytics through Docker. I am confused about the default yolov11.pt weights that ship with the Docker image: the validation results seem quite low:

root@71643adeada8:/ultralytics# yolo val detect data=coco.yaml device=0 batch=8

Ultralytics 8.3.86 🚀 Python-3.11.10 torch-2.5.1+cu124 CUDA:0 (NVIDIA GeForce RTX 4070 Ti SUPER, 16376MiB)

YOLO11n summary (fused): 100 layers, 2,616,248 parameters, 0 gradients, 6.5 GFLOPs

val: Scanning /datasets/coco/labels/val2017.cache... 4952 images, 48 backgrounds, 0 corrupt: 100%|██████████| 5000/5000 [00:00<?, ?it/s]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 625/625 [00:50<00:00, 12.41it/s]

all 5000 36335 0.652 0.505 0.549 0.393

person 2693 10777 0.759 0.676 0.753 0.524

bicycle 149 314 0.682 0.404 0.478 0.276

car 535 1918 0.661 0.533 0.585 0.376

motorcycle 159 367 0.768 0.605 0.677 0.445

airplane 97 143 0.803 0.797 0.867 0.69

bus 189 283 0.772 0.707 0.774 0.651

train 157 190 0.832 0.805 0.862 0.681

truck 250 414 0.586 0.415 0.477 0.323

boat 121 424 0.579 0.351 0.413 0.22

traffic light 191 634 0.646 0.347 0.416 0.218

fire hydrant 86 101 0.867 0.743 0.806 0.637

stop sign 69 75 0.712 0.613 0.662 0.601

parking meter 37 60 0.698 0.55 0.613 0.467

bench 235 411 0.575 0.28 0.325 0.217

### Additional

_No response_

|

open

|

2025-03-11T04:57:45Z

|

2025-03-11T05:15:52Z

|

https://github.com/ultralytics/ultralytics/issues/19632

|

[

"question",

"detect"

] |

deadwoodeatmoon

| 2

|

sigmavirus24/github3.py

|

rest-api

| 641

|

Strict option for branch protection

|

The [protected branches API](https://developer.github.com/v3/repos/branches/) now contains a new option called _strict_, which defines whether a branch must be rebased onto the protected branch before it can be merged into it. Since github3.py doesn't implement this option yet, it is automatically set to _true_, which is quite limiting.

It would be very practical to implement this feature. This should be easy; I'll try to take a look into it and provide a pull request shortly.
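
For illustration, a minimal sketch of a request body that exposes _strict_ as a parameter instead of hard-coding it. The helper `build_protection_payload` is hypothetical and is not github3.py's API; the field names follow the current GitHub REST "update branch protection" endpoint as I understand it, and the preview API available when this issue was filed may have used a different shape:

```python
import json


def build_protection_payload(contexts, strict=False, enforce_admins=False):
    """Build a branch-protection request body with a configurable `strict` flag.

    Hypothetical helper for illustration only; it does not send a request.
    """
    return {
        "required_status_checks": {
            # strict=True: the branch must be up to date with the protected
            # branch before merging.
            "strict": strict,
            "contexts": list(contexts),
        },
        "enforce_admins": enforce_admins,
        # Assumption: these two keys are sent as null to leave them disabled.
        "required_pull_request_reviews": None,
        "restrictions": None,
    }


body = json.dumps(build_protection_payload(["ci/test"], strict=False))
```

Defaulting `strict` to `False` here is a design choice for the sketch; a real implementation might prefer to keep the current behaviour (`True`) as the default for backward compatibility.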


|

closed

|

2016-10-20T14:37:30Z

|

2021-11-01T01:08:43Z

|

https://github.com/sigmavirus24/github3.py/issues/641

|

[] |

kristian-lesko

| 7

|

d2l-ai/d2l-en

|

tensorflow

| 2,308

|

Add chapter on diffusion models

|

GANs are the most advanced topic currently discussed in d2l.ai. However, diffusion models have taken the image generation mantle from GANs. Holding up GANs as the final chapter of the book and not discussing diffusion models at all feels like a clear indication of dated content. Additionally, fast.ai just announced that they will be adding diffusion models to their intro curriculum, as I imagine will many other intro courses. Want to make sure there's at least a discussion about this here if the content isn't already in progress or roadmapped.

|

open

|

2022-09-16T21:41:12Z

|

2023-05-15T13:48:32Z

|

https://github.com/d2l-ai/d2l-en/issues/2308

|

[

"feature request"

] |

dmarx

| 4

|

litestar-org/litestar

|

pydantic

| 3,601

|

Bug: Custom plugin with lifespan breaks channel startup hook

|

### Description