| repo_name (string, 9-75 chars) | topic (string, 30 classes) | issue_number (int64, 1-203k) | title (string, 1-976 chars) | body (string, 0-254k chars) | state (string, 2 classes) | created_at (string, 20 chars) | updated_at (string, 20 chars) | url (string, 38-105 chars) | labels (list, 0-9 items) | user_login (string, 1-39 chars) | comments_count (int64, 0-452) |

|---|---|---|---|---|---|---|---|---|---|---|---|

huggingface/transformers

|

tensorflow

| 36,352

|

Implement Titans Architecture with GRPO Fine-Tuning

|

### Model description

It would be highly valuable to extend the Transformers library with an implementation of the Titans model, together with fine-tuning via Group Relative Policy Optimization (GRPO). Titans is a hybrid architecture that combines attention-based processing with a dedicated long-term memory module for test-time memorization. This combination could help LLMs handle extremely long contexts and improve chain-of-thought reasoning. The memory adapts dynamically during inference, and reinforcement learning shapes the model's outputs during fine-tuning.

Motivation and Rationale:

Enhanced Long-Context Modeling:

The Titans architecture integrates a neural long-term memory module that learns to store, update, and selectively forget information based on a “surprise” metric (e.g., the magnitude of the gradient of the memory's loss on the current input). The design is inspired by human long-term memory. Because the memory summarizes past context instead of attending over all of it, it avoids the quadratic cost of full attention on long sequences.

Adaptive Test-Time Learning:

By learning to memorize at test time, the model can update its context representation on the fly, allowing for more robust reasoning in tasks with millions of tokens.

Reinforcement Learning Fine-Tuning via GRPO:

GRPO is a variant of PPO. It estimates advantages by normalizing rewards within a group of completions sampled for the same prompt, which removes the need for a separate value model, and it uses ratio clipping to stabilize policy updates. Incorporating it into Transformers would make fine-tuning more efficient, reducing reliance on large supervised datasets and improving chain-of-thought outputs.

Proposed Implementation:

Titans Architecture:

Introduce a new model class (e.g., TitansForCausalLM) that wraps a standard Transformer with an additional long-term memory module.

The module should accept token embeddings and update a memory vector using an MLP with momentum-based updates and an adaptive forgetting gate.

Incorporate a set of persistent memory tokens (learnable parameters) that are concatenated with the Transformer’s output before the final prediction layer.
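A minimal sketch of the momentum-plus-forgetting memory update described above. It makes two simplifying assumptions: the memory is linear (a single matrix `M` instead of a deep MLP), and the coefficients `eta`, `theta`, and `alpha` are fixed scalars, whereas the Titans paper makes them data-dependent. All function names are hypothetical and are not part of any Transformers API.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Matrix-vector product M @ x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def memory_loss(memory: Matrix, key: Vector, value: Vector) -> float:
    """Associative-recall loss ||M k - v||^2; its gradient is the 'surprise' signal."""
    return sum((p - v) ** 2 for p, v in zip(matvec(memory, key), value))


def memory_step(memory: Matrix, momentum: Matrix, key: Vector, value: Vector,
                eta: float = 0.9, theta: float = 0.1,
                alpha: float = 0.01) -> Tuple[Matrix, Matrix]:
    """One test-time update of a linear associative memory.

    grad = d/dM ||M k - v||^2 = 2 (M k - v) k^T   (momentary surprise)
    S_t  = eta * S_{t-1} - theta * grad           (momentum over past surprise)
    M_t  = (1 - alpha) * M_{t-1} + S_t            (alpha acts as a forgetting gate)
    """
    error = [p - v for p, v in zip(matvec(memory, key), value)]
    grad = [[2.0 * e * k for k in key] for e in error]
    new_momentum = [[eta * s - theta * g for s, g in zip(s_row, g_row)]
                    for s_row, g_row in zip(momentum, grad)]
    new_memory = [[(1.0 - alpha) * m + s for m, s in zip(m_row, s_row)]
                  for m_row, s_row in zip(memory, new_momentum)]
    return new_memory, new_momentum
```

Repeating `memory_step` on the same key/value pair drives `memory_loss` close to zero. A larger `alpha` makes the memory decay faster, trading recall for the ability to forget stale context.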

GRPO Fine-Tuning:

Create a custom trainer (e.g., subclassing TRL’s PPOTrainer) that overrides the loss computation to implement GRPO.

The loss should compute token-level log probabilities under both the old (sampling) policy and the updated policy, compute their probability ratio, and then apply clipping based on a configurable epsilon value.

Add a KL penalty term against a frozen reference policy (a placeholder at first, or a real one) to limit how far the updated policy drifts.
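A framework-free sketch of the loss described above, following the GRPO formulation from DeepSeek's work. It has three parts:

- group-normalized rewards serve as advantages;
- PPO-style ratio clipping is applied against the old policy;
- a KL penalty against a reference policy uses the non-negative estimator π_ref/π_θ − log(π_ref/π_θ) − 1.

The function names and default values (`clip_eps=0.2`, `beta=0.04`) are illustrative assumptions. A real trainer would operate on batched tensors and mask padding tokens.

```python
import math
from statistics import mean, pstdev
from typing import List, Sequence


def group_advantages(rewards: Sequence[float], eps: float = 1e-8) -> List[float]:
    """Group-relative advantages: each completion's reward normalized within its group."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def grpo_loss(new_logps: Sequence[Sequence[float]],
              old_logps: Sequence[Sequence[float]],
              ref_logps: Sequence[Sequence[float]],
              rewards: Sequence[float],
              clip_eps: float = 0.2,
              beta: float = 0.04) -> float:
    """Clipped GRPO objective (negated, so it can be minimized) for one prompt's group.

    Each *_logps entry holds the per-token log-probabilities of one sampled completion
    under the updated policy, the old (sampling) policy, and the frozen reference policy.
    """
    advantages = group_advantages(rewards)
    per_completion = []
    for new, old, ref, adv in zip(new_logps, old_logps, ref_logps, advantages):
        token_terms = []
        for lp_new, lp_old, lp_ref in zip(new, old, ref):
            ratio = math.exp(lp_new - lp_old)                  # pi_theta / pi_old
            clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
            surrogate = min(ratio * adv, clipped * adv)        # pessimistic (clipped) bound
            log_r = lp_ref - lp_new                            # log(pi_ref / pi_theta)
            kl = math.exp(log_r) - log_r - 1.0                 # non-negative KL estimate
            token_terms.append(surrogate - beta * kl)
        per_completion.append(mean(token_terms))               # average over tokens
    return -mean(per_completion)                               # average over the group
```

When the updated policy equals both the old and the reference policy, every ratio is 1 and every KL term is 0. The loss then reduces to minus the mean advantage, which is zero by construction.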

Integration with TRL:

Provide example scripts demonstrating the fine-tuning loop using TRL’s APIs with the custom GRPO loss function.

Update documentation and examples to guide users on how to apply this technique to long-context reasoning tasks.

Environment:

Transformers version: (latest)

Python version: 3.8+

Additional libraries: TRL (for PPOTrainer extension), PyTorch

Implementing Titans with GRPO fine-tuning could substantially change how we approach long-context learning and chain-of-thought reasoning. Several recent research efforts (e.g., the Titans paper [arXiv:2501.00663] and DeepSeek's work) have demonstrated promising results with these techniques. An open implementation in Transformers would help the community experiment with these ideas and possibly drive further research in scalable and adaptive LLMs.

### Open source status

- [ ] The model implementation is available

- [ ] The model weights are available

### Provide useful links for the implementation

https://arxiv.org/html/2501.00663v1

https://github.com/rajveer43/titan_transformer

|

open

|

2025-02-23T09:32:17Z

|

2025-02-24T14:42:43Z

|

https://github.com/huggingface/transformers/issues/36352

|

[

"New model"

] |

rajveer43

| 2

|

paulbrodersen/netgraph

|

matplotlib

| 88

|

Add multiple edges simultaneously

|

Hi guys, I hope you're well.

I work on a project called [GraphFilter](https://github.com/GraphFilter), where we use your library (we've even opened some issues here). Recently, a user asked us about the possibility of adding [multiple edges simultaneously](https://github.com/GraphFilter/GraphFilter/issues/453). I'd like to know if it's possible to implement this functionality.

We even tried to implement something similar by overriding the `_on_key_press` method. The idea was to select all the desired vertices by dragging with the left mouse button and then press a key to add a new node, but we got the following traceback:

```

Traceback (most recent call last):
  File "C:\Users\Fernando Pimenta\Documents\Github\GraphFilter\venv\lib\site-packages\matplotlib\cbook\__init__.py", line 307, in process
    func(*args, **kwargs)
  File "C:\Users\Fernando Pimenta\Documents\Github\GraphFilter\source\view\project\docks\visualize_graph_dock.py", line 116, in _on_key_press
    self._add_node(event)
  File "C:\Users\Fernando Pimenta\Documents\Github\GraphFilter\venv\lib\site-packages\netgraph\_interactive_variants.py", line 227, in _add_node
    node_properties = self._extract_node_properties(self._selected_artists[-1])
  File "C:\Users\Fernando Pimenta\Documents\Github\GraphFilter\venv\lib\site-packages\netgraph\_interactive_variants.py", line 178, in _extract_node_properties
    radius = node_artist.radius,
AttributeError: 'EdgeArtist' object has no attribute 'radius'.

```
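The traceback shows an `EdgeArtist` ending up where `_extract_node_properties` expected a node artist. So a multi-edge feature would first need to keep only the selected nodes, then decide which edges to create among them. The sketch below covers only that second step, in plain Python. `edges_to_add` and its `mode` options are hypothetical, not netgraph API. Filtering the selection and registering the edges with the interactive graph are left as assumptions.

```python
from itertools import combinations
from typing import Hashable, Iterable, List, Sequence, Tuple

Edge = Tuple[Hashable, Hashable]


def edges_to_add(selected_nodes: Sequence[Hashable],
                 existing_edges: Iterable[Edge],
                 mode: str = "clique") -> List[Edge]:
    """Compute the edges to create among the selected nodes in one step.

    mode="clique": connect every pair of selected nodes.
    mode="path":   connect consecutive nodes in selection order.
    Edges that already exist (in either direction) are skipped.
    """
    if mode == "clique":
        candidates = list(combinations(selected_nodes, 2))
    elif mode == "path":
        candidates = list(zip(selected_nodes, selected_nodes[1:]))
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    present = {frozenset(edge) for edge in existing_edges}
    return [edge for edge in candidates if frozenset(edge) not in present]
```

Using `frozenset` treats edges as undirected. A directed graph would compare the tuples directly instead.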

We are willing to contribute if you find it interesting.

Thank you in advance for all your support!

|

open

|

2024-04-09T21:09:23Z

|

2024-06-19T10:16:59Z

|

https://github.com/paulbrodersen/netgraph/issues/88

|

[

"enhancement"

] |

fsoupimenta

| 14

|

Guovin/iptv-api

|

api

| 329

|

The m3u contains broken addresses

|

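The following address appears under the CCTV-5+ channel. The address itself responds, but the returned content contains a 404 error:

Name: CCTV-5+, URL: http://220.179.68.222:9901/tsfile/live/0016_1.m3u8?key=txiptv&playlive=1&authid=0, Date: None, Resolution: None, Response Time: 34 ms

`{"timestamp":"2024-09-20T17:08:33.979+0800","status":404,"error":"Not Found","message":"Not Found","path":"/tsfile/live/0016_1.m3u8"}`

Is there a way to filter out channels like this? I ask because the generated m3u appears to use the address with the shortest Response Time by default. Is that correct?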
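One way to filter such entries, sketched under the assumption that the tool can read the body of each candidate URL:

1. Treat a response as usable only if its body is an HLS playlist. RFC 8216 requires the `#EXTM3U` tag as the first line, and the JSON error above fails this test.
2. Only then pick the fastest remaining address.

`is_valid_playlist` and `pick_fastest` are hypothetical names, not part of iptv-api.

```python
from typing import List, Optional, Tuple

Candidate = Tuple[str, float, str]  # (url, response_time_ms, response_body)


def is_valid_playlist(body: str) -> bool:
    """An HLS playlist must start with the #EXTM3U tag (RFC 8216); JSON error pages do not."""
    return body.lstrip("\ufeff \t\r\n").startswith("#EXTM3U")


def pick_fastest(candidates: List[Candidate]) -> Optional[str]:
    """Return the fastest URL whose body is a real playlist, or None if none qualify."""
    valid = [(elapsed, url) for url, elapsed, body in candidates if is_valid_playlist(body)]
    return min(valid)[1] if valid else None
```

Validating before sorting means a fast address that answers with an error page can never win on response time alone.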

|

closed

|

2024-09-20T09:12:18Z

|

2024-09-23T01:43:24Z

|

https://github.com/Guovin/iptv-api/issues/329

|

[

"enhancement"

] |

zid99825

| 1

|

schemathesis/schemathesis

|

graphql

| 2,713

|

[BUG] "ignored_auth" check causes SSLError exception when verify is set to False in the case

|

### Checklist

- [x] I checked the [FAQ section](https://schemathesis.readthedocs.io/en/stable/faq.html#frequently-asked-questions) of the documentation

- [x] I looked for similar issues in the [issue tracker](https://github.com/schemathesis/schemathesis/issues)

- [x] I am using the latest version of Schemathesis

### Describe the bug

When testing an OpenAPI spec with stateful testing, disabling TLS verification causes an SSLError exception to be raised, even though the API responds with 200. Here, disabling verification means defining `get_call_kwargs` to return `{"verify": False}`.

The exception is raised at line 436 of `schemathesis/specs/openapi/checks.py`. However, I think the issue starts at line 174 of `schemathesis/stateful/state_machine.py`, where the `**kwargs` are not passed to `validate_response`. By contrast, line 171 passes them to `self.call()`, and they carry the `verify=False` setting.

### To Reproduce

🚨 **Mandatory** 🚨: Steps to reproduce the behavior:

Start a stateful test in any way you prefer, but make sure to enforce `verify=False` for TLS verification, along these lines:

```python

import pytest
import schemathesis


# `my_endpoint` and `current_client_token` are fixtures defined elsewhere
@pytest.fixture(scope="class")
def generated_schema_local(my_endpoint):
    return schemathesis.from_path(
        path="my-spec.yaml",
        base_url=f"https://{my_endpoint}/",
    )


@pytest.fixture
def state_machine(generated_schema_local, current_client_token):
    class APIWorkflow(generated_schema_local.as_state_machine()):
        headers: dict

        def setup(self):
            self.headers = {"Authorization": f"Bearer {current_client_token}", "Content-Type": "application/json"}

        # these kwargs are passed to requests.request()
        def get_call_kwargs(self, case):
            return {"verify": False, "headers": self.headers}

    return APIWorkflow


def test_stateful_api(state_machine):
    state_machine.run()

```

Please include a minimal API schema causing this issue:

Any schema that triggers the check should reproduce this. In practice, that means the schema has to declare authentication as a requirement.

### Expected behavior

If TLS verification is set to False, all requests that are made should honor this setting, or checks that enforce verification should be skipped.

### Environment

```

- OS: MacOS 15.2
- Python version: 3.11
- Schemathesis version: 3.39.8
- Spec version: 3.0.3

```

### Additional context

Excluding the `ignored_auth` check, e.g. with:

```python
# defined on the APIWorkflow class above
def validate_response(self, response, case, additional_checks):
    case.validate_response(response, excluded_checks=(schemathesis.checks.ignored_auth,), additional_checks=additional_checks)
```

allows the test to progress further.

|

closed

|

2025-01-30T13:31:42Z

|

2025-02-03T10:07:30Z

|

https://github.com/schemathesis/schemathesis/issues/2713

|

[

"Type: Bug",

"Status: Needs Triage"

] |

lugi0

| 5

|

CorentinJ/Real-Time-Voice-Cloning

|

deep-learning

| 719

|

Replacing synthesizer from Tacotron to Non Attentive Tacotron

|

Working on it!

|

closed

|

2021-04-02T07:59:16Z

|

2021-04-20T03:01:11Z

|

https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/719

|

[] |

Garvit-32

| 2

|

coqui-ai/TTS

|

pytorch

| 3,264

|

[Bug] XTTS v2 keeps downloading model

|

### Describe the bug

`model = 'tts_models/multilingual/multi-dataset/xtts_v2'`

`tts = TTS(model).to(device)`

Every time I call this, the model is downloaded again.

### To Reproduce

Running TTS generation

### Expected behavior

_No response_

### Logs

_No response_

### Environment

```shell

{
    "CUDA": {
        "GPU": [
            "NVIDIA GeForce GTX 1650"
        ],
        "available": true,
        "version": "12.1"
    },
    "Packages": {
        "PyTorch_debug": false,
        "PyTorch_version": "2.1.0+cu121",
        "TTS": "0.20.4",
        "numpy": "1.22.0"
    },
    "System": {
        "OS": "Windows",
        "architecture": [
            "64bit",
            "WindowsPE"
        ],
        "processor": "Intel64 Family 6 Model 165 Stepping 5, GenuineIntel",
        "python": "3.9.16",
        "version": "10.0.19045"
    }
}

```

### Additional context

_No response_

|

closed

|

2023-11-18T20:57:06Z

|

2023-11-20T08:41:36Z

|

https://github.com/coqui-ai/TTS/issues/3264

|

[

"bug"

] |

darkzbaron

| 2

|

dgtlmoon/changedetection.io

|

web-scraping

| 1,672

|

(changed) and (into) string

|

How can I get rid of the "(changed)" and "(into)" strings so that only the changed text remains?
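If the notification text can be post-processed, the labels can be stripped with a small filter. This sketch assumes each diff line starts with a label such as `(changed) ` or `(into) `; it is not a changedetection.io setting, and `strip_change_labels` is a hypothetical helper.

```python
import re
from typing import List

# Assumes each diff line is prefixed with a label such as "(changed) " or "(into) ".
LABEL = re.compile(r"^\((changed|into)\)\s?")


def strip_change_labels(diff_text: str, keep_old: bool = False) -> str:
    """Remove the "(changed)"/"(into)" labels from a text diff.

    By default only the new values ("(into)" lines) are kept; set keep_old=True
    to keep both sides without their labels. Unlabelled lines pass through.
    """
    kept: List[str] = []
    for line in diff_text.splitlines():
        match = LABEL.match(line)
        if match is None:
            kept.append(line)
        elif match.group(1) == "into" or keep_old:
            kept.append(line[match.end():])
    return "\n".join(kept)
```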

|

closed

|

2023-07-04T17:36:05Z

|

2023-07-04T19:34:36Z

|

https://github.com/dgtlmoon/changedetection.io/issues/1672

|

[] |

lukaskrol7

| 0

|

Morizeyao/GPT2-Chinese

|

nlp

| 35

|

Fail to run train_single

|

Great repo. However, the train_single script seems to be broken.

```
Traceback (most recent call last):
  File "train_single.py", line 223, in <module>
    main()
  File "train_single.py", line 74, in main
    full_tokenizer = tokenization_bert.BertTokenizer(vocab_file=args.tokenizer_path)
UnboundLocalError: local variable 'tokenization_bert' referenced before assignment
```
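A common cause of this exact error, sketched with the standard `json` module so it runs anywhere. If a function contains `import X` (or `from ... import Y as X`) on any branch, Python treats `X` as local to the whole function. Using `X` on a branch where that import did not run then raises `UnboundLocalError`, even if `X` was imported at module level. Whether `train_single.py` imports a tokenizer module as `tokenization_bert` inside `main()` in this way is an assumption; the module and function names below are stand-ins.

```python
import json  # module-level import, like `from tokenizations import tokenization_bert`


def load_config(use_alt: bool) -> str:
    # Because `json` is imported somewhere inside this function, Python treats
    # `json` as a local name throughout the function body, shadowing the global.
    if use_alt:
        import json  # stands in for `from ... import other_module as json`
    return json.dumps({"ok": True})  # UnboundLocalError when use_alt is False


def load_config_fixed(use_alt: bool) -> str:
    # Fix: bind the alternative module to a *different* local name.
    encoder = json
    if use_alt:
        import json as alt_json
        encoder = alt_json
    return encoder.dumps({"ok": True})
```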

|

closed

|

2019-08-24T12:30:35Z

|

2019-08-25T14:58:49Z

|

https://github.com/Morizeyao/GPT2-Chinese/issues/35

|

[] |

diansheng

| 1

|

quokkaproject/quokka

|

flask

| 270

|

Filter problem at the post list admin page

|

The following filter options do not work correctly: Title, Summary, Created At, and Available At. The first time the page is loaded and a filter is added, the filter does not take effect.

|

closed

|

2015-07-20T02:51:21Z

|

2016-03-02T15:18:06Z

|

https://github.com/quokkaproject/quokka/issues/270

|

[

"bug",

"EASY"

] |

felipevolpone

| 2

|

noirbizarre/flask-restplus

|

api

| 233

|

Swagger doesn't support converters with optional arguments

|

Suppose one defines a route whose converter takes arguments (see http://werkzeug.pocoo.org/docs/0.11/routing/#rule-format), e.g. `@api.route('/my-resource/<string(length=2):id>')`. Swagger then raises a `ValueError` (swagger.py#L82) because it treats the converter as unsupported, e.g.:

```python

from flask import Flask
from flask_restplus import Api, Resource

app = Flask(__name__)
api = Api(app)


@api.route('/my-resource/<string(length=2):id>')
class MyResource(Resource):
    def get(self, id):
        return id

    @api.response(403, 'Not Authorized')
    def post(self, id):
        api.abort(403)


if __name__ == '__main__':
    app.run(debug=True)

```

The workaround seems to be to explicitly register the converter via:

```python
from werkzeug.routing import UnicodeConverter

app.url_map.converters['string(length=2)'] = UnicodeConverter
```

which seems somewhat kludgy, especially when one has multiple signatures which use arguments.

|

closed

|

2017-02-03T23:43:52Z

|

2017-03-04T20:49:48Z

|

https://github.com/noirbizarre/flask-restplus/issues/233

|

[] |

john-bodley

| 0

|

piskvorky/gensim

|

data-science

| 2,608

|

AttributeError in Doc2vec when compute_loss=True

|


#### Description

Gensim's Doc2Vec includes an initialization parameter `compute_loss`. If it is True, the model keeps a running total of the loss during training, which can then be requested via `get_training_loss()`. But I get `'Doc2Vec' object has no attribute 'get_training_loss'`, and a quick look at doc2vec.py confirms that this method isn't implemented there.


I am trying to get the loss so that I can figure out how many epochs to train my model for.

#### Steps/code/corpus to reproduce


```

AttributeError Traceback (most recent call last)
<ipython-input-9-8c794e318315> in <module>
4 else:
5 print('Model does not exists, creating new one. This will take some time...')
----> 6 create_doc2vec_model(data['content'])
7 model_doc = Doc2Vec.load("/home/ubuntu/Jupyter_Notebook/Akash_testing/d2v_testing.model")
8 print("Model Loaded")

<ipython-input-5-f3af8a0c71ca> in create_doc2vec_model(X)
20 model_doc.train(tagged_data,
21 total_examples=model_doc.corpus_count,
---> 22 epochs=model_doc.iter)
23 model_doc.alpha -= 0.0002
24 model_doc.min_alpha = model_doc.alpha

~/anaconda3/lib/python3.7/site-packages/gensim/models/doc2vec.py in train(self, documents, corpus_file, total_examples, total_words, epochs, start_alpha, end_alpha, word_count, queue_factor, report_delay, callbacks)
811 sentences=documents, corpus_file=corpus_file, total_examples=total_examples, total_words=total_words,
812 epochs=epochs, start_alpha=start_alpha, end_alpha=end_alpha, word_count=word_count,
--> 813 queue_factor=queue_factor, report_delay=report_delay, callbacks=callbacks, **kwargs)
814
815 @classmethod

~/anaconda3/lib/python3.7/site-packages/gensim/models/base_any2vec.py in train(self, sentences, corpus_file, total_examples, total_words, epochs, start_alpha, end_alpha, word_count, queue_factor, report_delay, compute_loss, callbacks, **kwargs)
1079 total_words=total_words, epochs=epochs, start_alpha=start_alpha, end_alpha=end_alpha, word_count=word_count,
1080 queue_factor=queue_factor, report_delay=report_delay, compute_loss=compute_loss, callbacks=callbacks,
-> 1081 **kwargs)
1082
1083 def _get_job_params(self, cur_epoch):

~/anaconda3/lib/python3.7/site-packages/gensim/models/base_any2vec.py in train(self, data_iterable, corpus_file, epochs, total_examples, total_words, queue_factor, report_delay, callbacks, **kwargs)
537
538 for callback in self.callbacks:
--> 539 callback.on_train_begin(self)
540
541 trained_word_count = 0

~/anaconda3/lib/python3.7/site-packages/keras/callbacks.py in on_train_begin(self, logs)
293
294 def on_train_begin(self, logs=None):
--> 295 self.verbose = self.params['verbose']
296 self.epochs = self.params['epochs']
297

AttributeError: 'ProgbarLogger' object has no attribute 'params'

```
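The traceback ends inside Keras's `ProgbarLogger.on_train_begin`, which suggests that a Keras callback was passed to gensim's `train(callbacks=...)`. The gensim loop calls `callback.on_train_begin(model)` directly (line 539 above). Keras callbacks, however, expect Keras's own fit loop to have set `self.params` via `set_params()` first.

The sketch below is a minimal illustration with hypothetical stand-in classes, not the real Keras or gensim classes. In gensim itself, per-epoch loss reporting would go through a subclass of `gensim.models.callbacks.CallbackAny2Vec`.

```python
class KerasStyleCallback:
    """Stand-in for a Keras callback: expects a Keras fit loop to have set `self.params`."""

    def on_train_begin(self, logs=None):
        # Keras calls set_params() before training; the gensim loop in the traceback does not.
        return self.params["verbose"]


class Any2VecStyleCallback:
    """Stand-in for a gensim-style callback: every hook receives the model itself."""

    def __init__(self):
        self.epochs_seen = 0

    def on_train_begin(self, model):
        self.epochs_seen = 0

    def on_epoch_end(self, model):
        self.epochs_seen += 1


def train(model, epochs, callbacks):
    """Stand-in training loop that invokes hooks the way the traceback shows."""
    for cb in callbacks:
        cb.on_train_begin(model)
    for _ in range(epochs):
        for cb in callbacks:
            cb.on_epoch_end(model)
```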


#### Versions

Please provide the output of:

```python

import platform; print(platform.platform())
import sys; print("Python", sys.version)
import numpy; print("NumPy", numpy.__version__)
import scipy; print("SciPy", scipy.__version__)
import gensim; print("gensim", gensim.__version__)
from gensim.models import word2vec;print("FAST_VERSION", word2vec.FAST_VERSION)

Linux-4.4.0-1094-aws-x86_64-with-debian-stretch-sid
Python 3.7.3 (default, Mar 27 2019, 22:11:17)
[GCC 7.3.0]
NumPy 1.16.4
SciPy 1.3.0
gensim 3.8.0
FAST_VERSION 1

```

|

closed

|

2019-09-25T11:03:29Z

|

2019-09-28T13:37:40Z

|

https://github.com/piskvorky/gensim/issues/2608

|

[] |

Infinity1008

| 2

|

deezer/spleeter

|

tensorflow

| 626

|

[Bug] Spleeter Separate on custom trained model tries to download another model

|

## Description

I have trained a custom model, but the separate command does not work as intended: it tries to download the model from a non-existent URL.

## Step to reproduce

1. Created custom training data, with the model specs defined in `custom_model_config.json`.

2. Trained the model (successfully, apparently) using `!spleeter train -p "custom_model_config.json" -d "PathToCustomDataset"`. I checked that the trained model folder was created.

3. Tried to separate a file using `!spleeter separate -o sep_out -p "custom_model_config.json" "test.mp3"`.

The command then tries to download the model from a URL that does not exist. Why is this happening?

## Output

```

tcmalloc: large alloc 1694474240 bytes == 0x556df36f0000 @ 0x7f9e527bf1e7 0x7f9e4ef5f631 0x7f9e4efc3cc8 0x7f9e4efc3de3 0x7f9e4f061ed8 0x7f9e4f062734 0x7f9e4f062882 0x556d45843f68 0x7f9e4efaf53d 0x556d45841c47 0x556d45841a50 0x556d458b5453 0x556d458b04ae 0x556d458433ea 0x556d458b232a 0x556d458b04ae 0x556d458433ea 0x556d458b160e 0x556d4584330a 0x556d458b160e 0x556d458b04ae 0x556d458433ea 0x556d458b160e 0x556d458b04ae 0x556d458433ea 0x556d458b232a 0x556d458b04ae 0x556d45782e2c 0x556d458b2bb5 0x556d458b07ad 0x556d45782e2c
INFO:spleeter:Downloading model archive https://github.com/deezer/spleeter/releases/download/v1.4.0/bach10_model_2.tar.gz
Traceback (most recent call last):
  File "/usr/local/bin/spleeter", line 8, in <module>
    sys.exit(entrypoint())
  File "/usr/local/lib/python3.7/dist-packages/spleeter/__main__.py", line 256, in entrypoint
    spleeter()
  File "/usr/local/lib/python3.7/dist-packages/typer/main.py", line 214, in __call__
    return get_command(self)(*args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/click/core.py", line 829, in __call__
    return self.main(*args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/click/core.py", line 782, in main
    rv = self.invoke(ctx)
  File "/usr/local/lib/python3.7/dist-packages/click/core.py", line 1259, in invoke
    return _process_result(sub_ctx.command.invoke(sub_ctx))
  File "/usr/local/lib/python3.7/dist-packages/click/core.py", line 1066, in invoke
    return ctx.invoke(self.callback, **ctx.params)
  File "/usr/local/lib/python3.7/dist-packages/click/core.py", line 610, in invoke
    return callback(*args, **kwargs)
  File "/usr/local/lib/python3.7/dist-packages/typer/main.py", line 497, in wrapper
    return callback(**use_params) # type: ignore
  File "/usr/local/lib/python3.7/dist-packages/spleeter/__main__.py", line 137, in separate
    synchronous=False,
  File "/usr/local/lib/python3.7/dist-packages/spleeter/separator.py", line 382, in separate_to_file
    sources = self.separate(waveform, audio_descriptor)
  File "/usr/local/lib/python3.7/dist-packages/spleeter/separator.py", line 325, in separate
    return self._separate_librosa(waveform, audio_descriptor)
  File "/usr/local/lib/python3.7/dist-packages/spleeter/separator.py", line 269, in _separate_librosa
    sess = self._get_session()
  File "/usr/local/lib/python3.7/dist-packages/spleeter/separator.py", line 241, in _get_session
    model_directory: str = provider.get(self._params["model_dir"])
  File "/usr/local/lib/python3.7/dist-packages/spleeter/model/provider/__init__.py", line 80, in get
    self.download(model_directory.split(sep)[-1], model_directory)
  File "/usr/local/lib/python3.7/dist-packages/spleeter/model/provider/github.py", line 141, in download
    response.raise_for_status()
  File "/usr/local/lib/python3.7/dist-packages/httpx/_models.py", line 1103, in raise_for_status
    raise HTTPStatusError(message, request=request, response=self)
httpx.HTTPStatusError: 404 Client Error: Not Found for url: https://github.com/deezer/spleeter/releases/download/v1.4.0/bach10_model_2.tar.gz
For more information check: https://httpstatuses.com/404

```

## Environment


| | |
| ----------------- | ------------------------------- |
| OS | Linux (Colab environment) |
| Installation type | pip |
| RAM available | 16GB |

|

closed

|

2021-05-29T16:33:08Z

|

2021-05-31T09:36:24Z

|

https://github.com/deezer/spleeter/issues/626

|

[

"bug",

"invalid"

] |

andresC98

| 4

|

litestar-org/litestar

|

pydantic

| 3,396

|

Enhancement: SQLAdmin Support

|

### Summary

SQLAdmin has a fork for Litestar, but it isn't properly supported, and there are no issues or discussions about its limitations. Is there interest in the Litestar community in integrating it better?

### Basic Example

When using both litestar sqlalchemy plugin and the sqladmin fork https://github.com/cemrehancavdar/sqladmin-litestar, I get errors about pickling a psycopg2 module or `AttributeError: Can't pickle local object 'create_engine.<locals>.connect'`

This seems to happen because Litestar pickles each router mounted on the app, while sqladmin passes the SQL engine or session maker around, so they end up being pickled. I'd like to implement a fix for this, but I don't know the right place to look. The examples in the fork only use SQLite, and I don't see any similar discussions, so I suppose this behavior is not fully tested.
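The error message itself is standard Python behavior: `pickle` cannot serialize functions defined inside another function, i.e. closures such as `create_engine.<locals>.connect`. A common workaround is to pickle only the configuration (e.g., the database URL) and rebuild the engine lazily after unpickling.

This is sketched here with a hypothetical stand-in `make_engine` rather than SQLAlchemy. It does not assume anything about where Litestar pickles objects; that part is the reporter's hypothesis.

```python
import pickle


def make_engine(url: str):
    """Stand-in for an engine factory that returns a closure (unpicklable)."""
    def connect():  # a local function, like create_engine.<locals>.connect
        return f"connection to {url}"
    return connect


class LazyEngine:
    """Picklable holder: stores only the URL and rebuilds the engine on demand."""

    def __init__(self, url: str):
        self.url = url
        self._engine = None

    def __getstate__(self):
        return {"url": self.url}  # drop the unpicklable engine from the pickled state

    def __setstate__(self, state):
        self.url = state["url"]
        self._engine = None

    @property
    def engine(self):
        if self._engine is None:
            self._engine = make_engine(self.url)
        return self._engine
```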

### Drawbacks and Impact

Unfortunately sqladmin itself does not seem to be open to being framework agnostic, so any fork suffers the problems of forking and future updates.

### Unresolved questions

_No response_

|

closed

|

2024-04-16T16:34:28Z

|

2025-03-20T15:54:36Z

|

https://github.com/litestar-org/litestar/issues/3396

|

[

"Enhancement"

] |

colebaileygit

| 5

|

AutoGPTQ/AutoGPTQ

|

nlp

| 521

|

[BUG] Loading Saved Marlin Quantized Models Fails

|

**Describe the bug**

After saving a Marlin model to disk with `save_pretrained`, reloading the model fails because the quantization config still says gptq.

**Hardware details**

A100

**Software version**

Current main

**To Reproduce**

1. Load a model in marlin format and save to disk

```python

from auto_gptq import AutoGPTQForCausalLM

model = AutoGPTQForCausalLM.from_quantized("TheBloke/Llama-2-7B-Chat-GPTQ", use_marlin=True)

model.save_pretrained("/network/rshaw/llama_marlin")

```

2. Restart python and try to reload the saved model:

```python

from auto_gptq import AutoGPTQForCausalLM

model = AutoGPTQForCausalLM.from_quantized("/network/rshaw/llama_marlin", use_marlin=True)

```

Fails with:

```bash

{
  "name": "ValueError",
  "message": "QuantLinear() does not have a parameter or a buffer named B.",
  "stack": "---------------------------------------------------------------------------
ValueError Traceback (most recent call last)
Cell In[2], line 3
1 from auto_gptq import AutoGPTQForCausalLM
----> 3 model = AutoGPTQForCausalLM.from_quantized(\"/network/rshaw/llama_marlin\", use_marlin=True)

File /network/rshaw/gptq-benchmarking/AutoGPTQ/auto_gptq/modeling/auto.py:129, in AutoGPTQForCausalLM.from_quantized(cls, model_name_or_path, device_map, max_memory, device, low_cpu_mem_usage, use_triton, inject_fused_attention, inject_fused_mlp, use_cuda_fp16, quantize_config, model_basename, use_safetensors, trust_remote_code, warmup_triton, trainable, disable_exllama, disable_exllamav2, **kwargs)
123 # TODO: do we need this filtering of kwargs? @PanQiWei is there a reason we can't just pass all kwargs?
124 keywords = {
125 key: kwargs[key]
126 for key in list(signature(quant_func).parameters.keys()) + huggingface_kwargs
127 if key in kwargs
128 }
--> 129 return quant_func(
130 model_name_or_path=model_name_or_path,
131 device_map=device_map,
132 max_memory=max_memory,
133 device=device,
134 low_cpu_mem_usage=low_cpu_mem_usage,
135 use_triton=use_triton,
136 inject_fused_attention=inject_fused_attention,
137 inject_fused_mlp=inject_fused_mlp,
138 use_cuda_fp16=use_cuda_fp16,
139 quantize_config=quantize_config,
140 model_basename=model_basename,
141 use_safetensors=use_safetensors,
142 trust_remote_code=trust_remote_code,
143 warmup_triton=warmup_triton,
144 trainable=trainable,
145 disable_exllama=disable_exllama,
146 disable_exllamav2=disable_exllamav2,
147 **keywords
148 )

File /network/rshaw/gptq-benchmarking/AutoGPTQ/auto_gptq/modeling/_base.py:1109, in BaseGPTQForCausalLM.from_quantized(cls, model_name_or_path, device_map, max_memory, device, low_cpu_mem_usage, use_triton, use_qigen, use_marlin, torch_dtype, inject_fused_attention, inject_fused_mlp, use_cuda_fp16, quantize_config, model_basename, use_safetensors, trust_remote_code, warmup_triton, trainable, disable_exllama, disable_exllamav2, **kwargs)
1103 model = convert_to_marlin(model, quant_linear_class, quantize_config, repack=False)
1104 else:
1105 # Loading the GPTQ checkpoint to do the conversion.
1106 # TODO: Avoid loading the model with wrong QuantLinear, and directly use
1107 # Marlin ones. The repacking can be done directly on the safetensors, just
1108 # as for AWQ checkpoints.
-> 1109 accelerate.utils.modeling.load_checkpoint_in_model(
1110 model,
1111 dtype=torch_dtype, # This is very hacky but works due to https://github.com/huggingface/accelerate/blob/bd72a5f1a80d5146554458823f8aeda0a9db5297/src/accelerate/utils/modeling.py#L292
1112 checkpoint=model_save_name,
1113 device_map=device_map,
1114 offload_state_dict=True,
1115 offload_buffers=True
1116 )
1118 model = convert_to_marlin(model, quant_linear_class, quantize_config, repack=True)
1120 # Cache the converted model.

File ~/.conda/envs/autogptq-env/lib/python3.10/site-packages/accelerate/utils/modeling.py:1550, in load_checkpoint_in_model(model, checkpoint, device_map, offload_folder, dtype, offload_state_dict, offload_buffers, keep_in_fp32_modules, offload_8bit_bnb)
1548 offload_weight(param, param_name, state_dict_folder, index=state_dict_index)
1549 else:
-> 1550 set_module_tensor_to_device(
1551 model,
1552 param_name,
1553 param_device,
1554 value=param,
1555 dtype=new_dtype,
1556 fp16_statistics=fp16_statistics,
1557 )
1559 # Force Python to clean up.
1560 del checkpoint

File ~/.conda/envs/autogptq-env/lib/python3.10/site-packages/accelerate/utils/modeling.py:301, in set_module_tensor_to_device(module, tensor_name, device, value, dtype, fp16_statistics)
298 tensor_name = splits[-1]
300 if tensor_name not in module._parameters and tensor_name not in module._buffers:
--> 301 raise ValueError(f\"{module} does not have a parameter or a buffer named {tensor_name}.\")
302 is_buffer = tensor_name in module._buffers
303 old_value = getattr(module, tensor_name)

ValueError: QuantLinear() does not have a parameter or a buffer named B."
}

```

I believe this occurs because the safetensors file has the marlin formatted model, but the quantization config is still gptq.

**Expected behavior**

It would be nice if there were a way to serialize marlin models cleanly, such that they can be reloaded.

I am targeting the vLLM case right now. For the integration with vLLM to work nicely, I need a serialized format that I can reload cleanly by iterating over the safetensors file. If possible, I would rather rely on HF/AutoGPTQ's serialization format than create my own.

Is there any way we can create a quantization config that will allow for reloading these models?


|

closed

|

2024-01-24T18:51:57Z

|

2024-02-12T13:51:07Z

|

https://github.com/AutoGPTQ/AutoGPTQ/issues/521

|

[

"bug"

] |

robertgshaw2-redhat

| 4

|

ivy-llc/ivy

|

tensorflow

| 27,868

|

Fix Frontend Failing Test: paddle - tensor.torch.Tensor.__gt__

|

closed

|

2024-01-07T23:37:49Z

|

2024-01-07T23:48:41Z

|

https://github.com/ivy-llc/ivy/issues/27868

|

[

"Sub Task"

] |

NripeshN

| 0

|

|

python-restx/flask-restx

|

flask

| 377

|

Basic Auth for Swagger UI

|

Any suggestions on how to password-protect the Swagger UI with basic auth? I was going to use something like flask-httpauth or write my own wrapper, but the Swagger UI route isn't exposed in an obvious way. In case you're wondering why: I may have to make my API publicly available. I don't want anyone who isn't supposed to know about the API to get more information about it, although the docs could be useful for some users. I may restrict access by IP, but I'm not sure about this yet.
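The credential check itself can be kept independent of flask-restx. Below is a minimal sketch of validating an HTTP Basic `Authorization` header (RFC 7617) using only the standard library. `check_basic_auth` is a hypothetical helper, not part of flask-restx or flask-httpauth.

```python
import base64
import hmac
from typing import Optional


def check_basic_auth(header: Optional[str], username: str, password: str) -> bool:
    """Validate an HTTP Basic `Authorization` header value (RFC 7617)."""
    scheme, _, token = (header or "").partition(" ")
    if scheme.lower() != "basic" or not token:
        return False
    try:
        decoded = base64.b64decode(token.strip(), validate=True).decode("utf-8")
    except ValueError:  # binascii.Error and UnicodeDecodeError both subclass ValueError
        return False
    user, sep, pwd = decoded.partition(":")
    if not sep:
        return False
    # compare_digest avoids leaking information through timing differences
    user_ok = hmac.compare_digest(user.encode("utf-8"), username.encode("utf-8"))
    pwd_ok = hmac.compare_digest(pwd.encode("utf-8"), password.encode("utf-8"))
    return user_ok and pwd_ok
```

In Flask, this could be called from a `before_request` handler, passing `request.headers.get("Authorization")`. The handler would only check the paths that serve the Swagger UI and `swagger.json` in your app. When the check fails, it would return a 401 response with a `WWW-Authenticate: Basic` header so the browser prompts for credentials.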

|

open

|

2021-09-22T14:56:42Z

|

2023-06-26T14:33:10Z

|

https://github.com/python-restx/flask-restx/issues/377

|

[

"question"

] |

Bxmnx

| 2

|

paperless-ngx/paperless-ngx

|

machine-learning

| 8,289

|

[BUG] File permissions are not set correctly after e.g. deleting a page from a PDF

|

### Description

When a page is deleted from a scanned PDF, the newly created PDF does not inherit the permissions from the folder. Afterwards, other processes cannot work with the new file because of access issues.

### Steps to reproduce

1. Create a job / cron job that regularly syncs the files from the output directory to a different location (this is the job that seems to fail because of the incorrect permissions).

2. Take a PDF with multiple pages.

3. Delete a page via the Paperless UI.

The permissions for normal files

The permission of the file where a page has been removed
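Until the underlying behavior changes, the sync job could normalize permissions itself. It could copy the permission bits (and ownership, when permitted) from a known-good file onto the rewritten one before syncing.

This sketch assumes a POSIX host and a job with sufficient rights. `match_permissions` is a hypothetical helper, not part of paperless-ngx.

```python
import os
import shutil
import stat
from pathlib import Path


def match_permissions(reference: Path, target: Path) -> int:
    """Copy permission bits (and, when allowed, owner/group) from `reference` to `target`.

    Returns the resulting permission bits of `target`.
    """
    shutil.copymode(reference, target)  # permission bits only
    ref_st = reference.stat()
    tgt_st = target.stat()
    same_owner = (ref_st.st_uid, ref_st.st_gid) == (tgt_st.st_uid, tgt_st.st_gid)
    if hasattr(os, "chown") and not same_owner:
        try:
            os.chown(target, ref_st.st_uid, ref_st.st_gid)
        except PermissionError:
            pass  # changing ownership normally requires root; mode bits are still fixed
    return stat.S_IMODE(target.stat().st_mode)
```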

### Webserver logs

```bash

[2024-11-15 08:05:03,698] [DEBUG] [paperless.tasks] Training data unchanged.

[2024-11-15 08:25:52,316] [DEBUG] [paperless.handlers] Deleted file /usr/src/paperless/media/documents/originals/2024/2024-10-18 - none - Dexcom - 20241103_113340_BRN3C2AF4DFAC1D_002264.pdf.

[2024-11-15 08:25:52,317] [DEBUG] [paperless.handlers] Deleted file /usr/src/paperless/media/documents/archive/2024/2024-10-18 - none - Dexcom - 20241103_113340_BRN3C2AF4DFAC1D_002264.pdf.

[2024-11-15 08:25:52,320] [DEBUG] [paperless.handlers] Deleted file /usr/src/paperless/media/documents/thumbnails/0003781.webp.

[2024-11-15 08:42:55,345] [DEBUG] [paperless.matching] Correspondent Deutsche Bank matched on document 2018-11-15 Deutsche Bank photoTAN-Aktivierungsgrafik because it contains all of these words: Deutsche Bank

[2024-11-15 08:43:10,770] [DEBUG] [paperless.matching] Correspondent Deutsche Bank matched on document 2018-11-15 Deutsche Bank photoTAN-Aktivierungsbrief because it contains all of these words: Deutsche Bank

[2024-11-15 08:54:59,693] [INFO] [paperless.bulk_edit] Attempting to delete pages [2] from 1 documents

[2024-11-15 08:54:59,956] [DEBUG] [paperless.filehandling] Document has storage_path 1 ({created_year}/{created} - {asn} - {correspondent} - {title}) set

[2024-11-15 08:55:00,353] [INFO] [paperless.bulk_edit] Deleted pages [2] from document 1033

[2024-11-15 08:55:00,957] [INFO] [paperless.parsing.tesseract] pdftotext exited 0

[2024-11-15 08:55:01,326] [DEBUG] [paperless.parsing.tesseract] Calling OCRmyPDF with args: {'input_file': PosixPath('/usr/src/paperless/media/documents/originals/2018/2018-11-15 - 379 - Deutsche Bank - photoTAN-Aktivierungsbrief.pdf'), 'output_file': PosixPath('/tmp/paperless/paperless-fkh6aute/archive.pdf'), 'use_threads': True, 'jobs': 4, 'language': 'deu+eng', 'output_type': 'pdfa', 'progress_bar': False, 'color_conversion_strategy': 'RGB', 'skip_text': True, 'clean': True, 'deskew': True, 'rotate_pages': True, 'rotate_pages_threshold': 12.0, 'sidecar': PosixPath('/tmp/paperless/paperless-fkh6aute/sidecar.txt'), 'invalidate_digital_signatures': True, 'continue_on_soft_render_error': True}

[2024-11-15 08:55:01,936] [INFO] [ocrmypdf._pipeline] skipping all processing on this page

[2024-11-15 08:55:01,941] [INFO] [ocrmypdf._pipelines.ocr] Postprocessing...

[2024-11-15 08:55:02,329] [ERROR] [ocrmypdf._exec.ghostscript] GPL Ghostscript 10.03.1 (2024-05-02)

Copyright (C) 2024 Artifex Software, Inc. All rights reserved.

This software is supplied under the GNU AGPLv3 and comes with NO WARRANTY:

see the file COPYING for details.

Processing pages 1 through 1.

Page 1

Loading font Helvetica (or substitute) from /usr/share/ghostscript/10.03.1/Resource/Font/NimbusSans-Regular

Loading font Times-Roman (or substitute) from /usr/share/ghostscript/10.03.1/Resource/Font/NimbusRoman-Regular

The following warnings were encountered at least once while processing this file:

A problem was encountered trying to preserve the Outlines

[2024-11-15 08:55:02,329] [ERROR] [ocrmypdf._exec.ghostscript] This file had errors that were repaired or ignored.

[2024-11-15 08:55:02,329] [ERROR] [ocrmypdf._exec.ghostscript] The file was produced by:

[2024-11-15 08:55:02,329] [ERROR] [ocrmypdf._exec.ghostscript] >>>> Adobe Acrobat Pro 11.0.23 Paper Capture Plug-in <<<<

[2024-11-15 08:55:02,330] [ERROR] [ocrmypdf._exec.ghostscript] Please notify the author of the software that produced this

[2024-11-15 08:55:02,330] [ERROR] [ocrmypdf._exec.ghostscript] file that it does not conform to Adobe's published PDF

[2024-11-15 08:55:02,330] [ERROR] [ocrmypdf._exec.ghostscript] specification.

[2024-11-15 08:55:02,353] [WARNING] [ocrmypdf._metadata] Some input metadata could not be copied because it is not permitted in PDF/A. You may wish to examine the output PDF's XMP metadata.

[2024-11-15 08:55:03,141] [INFO] [ocrmypdf._pipeline] Image optimization ratio: 1.27 savings: 21.1%

[2024-11-15 08:55:03,141] [INFO] [ocrmypdf._pipeline] Total file size ratio: 2.07 savings: 51.6%

[2024-11-15 08:55:03,144] [INFO] [ocrmypdf._pipelines._common] Output file is a PDF/A-2B (as expected)

[2024-11-15 08:55:03,570] [DEBUG] [paperless.parsing.tesseract] Incomplete sidecar file: discarding.

[2024-11-15 08:55:03,598] [INFO] [paperless.parsing.tesseract] pdftotext exited 0

[2024-11-15 08:55:03,600] [DEBUG] [paperless.parsing] Execute: convert -density 300 -scale 500x5000> -alpha remove -strip -auto-orient -define pdf:use-cropbox=true /tmp/paperless/paperless-fkh6aute/archive.pdf[0] /tmp/paperless/paperless-fkh6aute/convert.webp

[2024-11-15 08:55:04,983] [INFO] [paperless.parsing] convert exited 0

[2024-11-15 08:55:05,109] [INFO] [paperless.tasks] Updating index for document 1033 (77a22606b7bf3ca81a1586ae4745bf81)

[2024-11-15 08:55:05,230] [DEBUG] [paperless.parsing.tesseract] Deleting directory /tmp/paperless/paperless-fkh6aute

[2024-11-15 08:55:13,799] [DEBUG] [paperless.matching] Correspondent Deutsche Bank matched on document 2018-11-15 Deutsche Bank photoTAN-Aktivierungsbrief because it contains all of these words: Deutsche Bank

[2024-11-15 08:55:26,600] [DEBUG] [paperless.matching] Correspondent Deutsche Bank matched on document 2018-11-15 Deutsche Bank photoTAN-Aktivierungsgrafik because it contains all of these words: Deutsche Bank

[2024-11-15 08:55:31,959] [DEBUG] [paperless.matching] Correspondent Deutsche Bank matched on document 2018-12-31 Deutsche Bank Anlagen zum Kontoauszug because it contains all of these words: Deutsche Bank

[2024-11-15 08:55:31,971] [DEBUG] [paperless.matching] DocumentType Kontoauszug matched on document 2018-12-31 Deutsche Bank Anlagen zum Kontoauszug because it contains this string: "Kontoauszug"

[2024-11-15 08:55:33,325] [DEBUG] [paperless.matching] Correspondent Deutsche Bank matched on document 2018-11-15 Deutsche Bank photoTAN-Aktivierungsgrafik because it contains all of these words: Deutsche Bank

[2024-11-15 08:55:34,579] [DEBUG] [paperless.matching] Correspondent Deutsche Bank matched on document 2018-11-15 Deutsche Bank photoTAN-Aktivierungsbrief because it contains all of these words: Deutsche Bank

[2024-11-15 08:56:51,487] [DEBUG] [paperless.matching] Correspondent Deutsche Bank matched on document 2018-06-29 Deutsche Bank Kontoauszug because it contains all of these words: Deutsche Bank

[2024-11-15 08:56:51,556] [DEBUG] [paperless.matching] DocumentType Kontoauszug matched on document 2018-06-29 Deutsche Bank Kontoauszug because it contains this string: "Kontoauszug"

[2024-11-15 08:56:52,098] [DEBUG] [paperless.matching] Correspondent Deutsche Bank matched on document 2018-11-15 Deutsche Bank photoTAN-Aktivierungsbrief because it contains all of these words: Deutsche Bank

[2024-11-15 08:56:53,020] [DEBUG] [paperless.matching] Correspondent Deutsche Bank matched on document 2018-11-15 Deutsche Bank photoTAN-Aktivierungsgrafik because it contains all of these words: Deutsche Bank

[2024-11-15 08:57:31,656] [DEBUG] [paperless.filehandling] Document has storage_path 1 ({created_year}/{created} - {asn} - {correspondent} - {title}) set

[2024-11-15 08:57:48,464] [DEBUG] [paperless.filehandling] Document has storage_path 1 ({created_year}/{created} - {asn} - {correspondent} - {title}) set

[2024-11-15 08:57:51,950] [DEBUG] [paperless.filehandling] Document has storage_path 1 ({created_year}/{created} - {asn} - {correspondent} - {title}) set

[2024-11-15 09:00:02,462] [INFO] [paperless.management.consumer] Adding /usr/src/paperless/consume/20241114_SerienanschreibenDepot-Kontoinformationallgemein_18872298_436725975.pdf to the task queue.

[2024-11-15 09:00:02,587] [INFO] [paperless.management.consumer] Adding /usr/src/paperless/consume/Abrechnung_240753339100EUR_2024-11-01_KK_240753339100KD401H06110200342210879.pdf to the task queue.

[2024-11-15 09:00:02,825] [DEBUG] [paperless.tasks] Skipping plugin CollatePlugin

[2024-11-15 09:00:02,825] [DEBUG] [paperless.tasks] Executing plugin BarcodePlugin

[2024-11-15 09:00:02,825] [DEBUG] [paperless.barcodes] Scanning for barcodes using PYZBAR

[2024-11-15 09:00:02,828] [DEBUG] [paperless.barcodes] PDF has 2 pages

[2024-11-15 09:00:02,828] [DEBUG] [paperless.barcodes] Processing page 0

```

### Browser logs

```bash

Log that the scheduler sends afterwards:

The Task Scheduler has completed a scheduled task.

Task: rsync Dokumente

Start: Fri, 15 Nov 2024 15:00:01 +0100

End: Fri, 15 Nov 2024 15:00:01 +0100

Current status: 23 (Interrupted)

Standard output/error:

rsync: send_files failed to open "/volume1/Dokumente/documents/archive/2018/2018-11-15 - 379 - Deutsche Bank - photoTAN-Aktivierungsbrief.pdf": Permission denied (13)

rsync error: some files/attrs were not transferred (see previous errors) (code 23) at main.c(1464) [sender=3.1.2]

```

### Paperless-ngx version

2.12.0

### Host OS

Linux-4.4.302+-x86_64-with-glibc2.36

### Installation method

Docker - official image

### System status

```json

{

"pngx_version": "2.12.0",

"server_os": "Linux-4.4.302+-x86_64-with-glibc2.36",

"install_type": "docker",

"storage": {

"total": 2848078716928,

"available": 2106884804608

},

"database": {

"type": "sqlite",

"url": "/usr/src/paperless/data/db.sqlite3",

"status": "OK",

"error": null,

"migration_status": {

"latest_migration": "documents.1052_document_transaction_id",

"unapplied_migrations": []

}

},

"tasks": {

"redis_url": "redis://broker:6379",

"redis_status": "OK",

"redis_error": null,

"celery_status": "OK",

"index_status": "OK",

"index_last_modified": "2024-11-15T14:00:05.589408Z",

"index_error": null,

"classifier_status": "OK",

"classifier_last_trained": "2024-11-15T13:05:03.671148Z",

"classifier_error": null

}

}

```

### Browser

Chrome

### Configuration changes

I used a YouTube video to create the container. The only thing I remember is that I changed some volumes:

/volume1/docker/paperless-ngx/consume >> /usr/src/paperless/consume

/volume1/docker/paperless-ngx/data >> /usr/src/paperless/data

/volume1/docker/paperless-ngx/export >> /usr/src/paperless/export

/volume1/Dokumente >> /usr/src/paperless/media

### Please confirm the following

- [X] I believe this issue is a bug that affects all users of Paperless-ngx, not something specific to my installation.

- [X] This issue is not about the OCR or archive creation of a specific file(s). Otherwise, please see above regarding OCR tools.

- [X] I have already searched for relevant existing issues and discussions before opening this report.

- [X] I have updated the title field above with a concise description.

|

closed

|

2024-11-15T14:16:19Z

|

2024-12-16T03:19:27Z

|

https://github.com/paperless-ngx/paperless-ngx/issues/8289

|

[

"not a bug"

] |

Kopierwichtel

| 3

|

xinntao/Real-ESRGAN

|

pytorch

| 87

|

Sample images

|

input

output

The quality of this picture is admittedly very low, but the three circled areas should still leave room for improvement.

|

open

|

2021-09-21T10:56:50Z

|

2021-09-26T02:52:17Z

|

https://github.com/xinntao/Real-ESRGAN/issues/87

|

[] |

tumuyan

| 4

|

FactoryBoy/factory_boy

|

sqlalchemy

| 1,044

|

Unusable password generator for Django

|

#### The problem

The recently added `Password` generator for Django is helpful, but it's not clear how to use it to create an unusable password (similar to calling `set_unusable_password` on the generated user).

#### Proposed solution

Django's `set_unusable_password` is a call to `make_password` with `None` as the password argument:

https://github.com/django/django/blob/0b506bfe1ab9f1c38e439c77b3c3f81c8ac663ea/django/contrib/auth/base_user.py#L118-L120

Using `password = factory.django.Password(None)` will actually work (and will allow factory users to override the password if desired). However, currently the password argument to this factory is documented as a string and this option is not mentioned.

#### Extra notes

The default value of the `password` argument to `factory.django.Password` could also be set to `None`. This would make that factory generate unusable passwords by default, which may or may not be desired.

|

closed

|

2023-09-20T07:47:12Z

|

2023-09-26T06:50:38Z

|

https://github.com/FactoryBoy/factory_boy/issues/1044

|

[

"Doc",

"BeginnerFriendly"

] |

jaap3

| 1

|

sktime/sktime

|

data-science

| 7,224

|

[BUG] DartsLinearRegression fails instead of giving warning message

|

**Describe the bug**

`DartsLinearRegressionModel` fails when a warning should be raised

**To Reproduce**

```python

from sktime.datasets import load_airline

from sktime.forecasting.darts import DartsLinearRegressionModel

y = load_airline()

forecaster = DartsLinearRegressionModel(output_chunk_length=6,likelihood="quantile",quantiles=[0.33,0.5,0.67])

forecaster.fit(y=y)

```

Output:

```

TypeError: warn() got an unexpected keyword argument 'message'

```

**Expected behavior**

A python warning message saying:

```

"Setting multi_models=True with quantile regression may cause issues. Consider using multi_models=False."

```

**Additional context**

It looks like the wrong keyword argument was passed to `warn` (`message` instead of `msg`) at [line 595](https://github.com/sktime/sktime/blob/0f75b7ad0dce8b722c81fe49bb9624de20cc4923/sktime/forecasting/darts.py#L595) and [line 369](https://github.com/sktime/sktime/blob/0f75b7ad0dce8b722c81fe49bb9624de20cc4923/sktime/forecasting/darts.py#L369).
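The failure can be reproduced with a stand-in helper whose first parameter is named `msg`, matching the issue's description of sktime's helper. The wrapper below is hypothetical and illustrative, not sktime's actual code. It shows why `message=` raises `TypeError` before any warning is emitted, and how passing the text under the right name fixes it.

```python
import warnings


def warn(msg, category=None, stacklevel=2):
    """Hypothetical stand-in for sktime's warning helper; only the `msg` parameter name comes from the issue."""
    warnings.warn(msg, category=category or UserWarning, stacklevel=stacklevel)


MULTI_MODELS_MSG = (
    "Setting multi_models=True with quantile regression may cause issues. "
    "Consider using multi_models=False."
)


def buggy_call():
    # Reported bug: the keyword is `message`, but the helper's parameter is `msg`,
    # so Python raises TypeError before any warning is emitted.
    warn(message=MULTI_MODELS_MSG)


def fixed_call():
    # Fix: pass the text under the helper's actual parameter name (or positionally).
    warn(msg=MULTI_MODELS_MSG)
```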

If you're okay with it, I could open a PR for this.

**Versions**

Python dependencies:

pip: 23.2.1

sktime: 0.33.1

sklearn: 1.5.2

skbase: 0.8.3

numpy: 1.26.4

scipy: 1.14.1

pandas: 2.1.2

matplotlib: 3.8.1

joblib: 1.4.2

numba: 0.60.0

statsmodels: 0.14.4

pmdarima: 2.0.4

statsforecast: 1.7.8

tsfresh: 0.20.3

tslearn: None

torch: None

tensorflow: 2.16.2

|

closed

|

2024-10-04T16:56:02Z

|

2024-10-11T09:21:09Z

|

https://github.com/sktime/sktime/issues/7224

|

[

"bug",

"module:forecasting"

] |

wilsonnater

| 2

|

davidsandberg/facenet

|

computer-vision

| 1,001

|

classifier problem

|

I trained the model on my own images (10k images, 1k classes) using train_softmax.py.

My settings:

```
--max_nrof_epochs 100 \
--epoch_size 100 \
--batch_size 30 \
```

All other settings are at their defaults (embedding size is 128).

I got an accuracy of ~0.98 at epoch 80, with a loss of ~1.7.

However, when I use this trained model to compute the 128-dimensional embeddings of the same images and train an SVC on these embeddings, I get 0.00149 accuracy. I've tried other classifier models and got ~0.65 accuracy on the training images, but ~0.0003 on the public test set (17k images, 1k classes).

Another problem is that I can't use my 8 GB RTX 2070 to train this model. I think my GPU doesn't have enough memory, but I'm not sure. So I trained the model on my CPU, and it took ~4 hours to run 80 epochs.
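One way to sanity-check the exported embeddings independently of SVC is a nearest-centroid classifier on L2-normalized vectors. This is a swapped-in baseline, not what facenet's own scripts do. The helpers below are hypothetical and written in pure Python for clarity. If this baseline also scores near zero on the training images, the problem more likely lies in how the embeddings or labels are produced than in the classifier.

```python
import math
from collections import defaultdict


def l2_normalize(vec):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def fit_centroids(embeddings, labels):
    """Mean unit-length embedding per class, re-normalized to unit length."""
    sums = {}
    counts = defaultdict(int)
    for emb, label in zip(embeddings, labels):
        unit = l2_normalize(emb)
        if label not in sums:
            sums[label] = [0.0] * len(unit)
        sums[label] = [s + x for s, x in zip(sums[label], unit)]
        counts[label] += 1
    return {label: l2_normalize([s / counts[label] for s in total]) for label, total in sums.items()}


def predict(centroids, embedding):
    """Class whose centroid has the highest cosine similarity to the embedding."""
    unit = l2_normalize(embedding)
    return max(centroids, key=lambda label: sum(a * b for a, b in zip(unit, centroids[label])))


def accuracy(centroids, embeddings, labels):
    correct = sum(predict(centroids, e) == y for e, y in zip(embeddings, labels))
    return correct / len(labels)
```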

|

open

|

2019-03-30T08:02:17Z

|

2019-03-30T08:02:17Z

|

https://github.com/davidsandberg/facenet/issues/1001

|

[] |

ducnguyen96

| 0

|

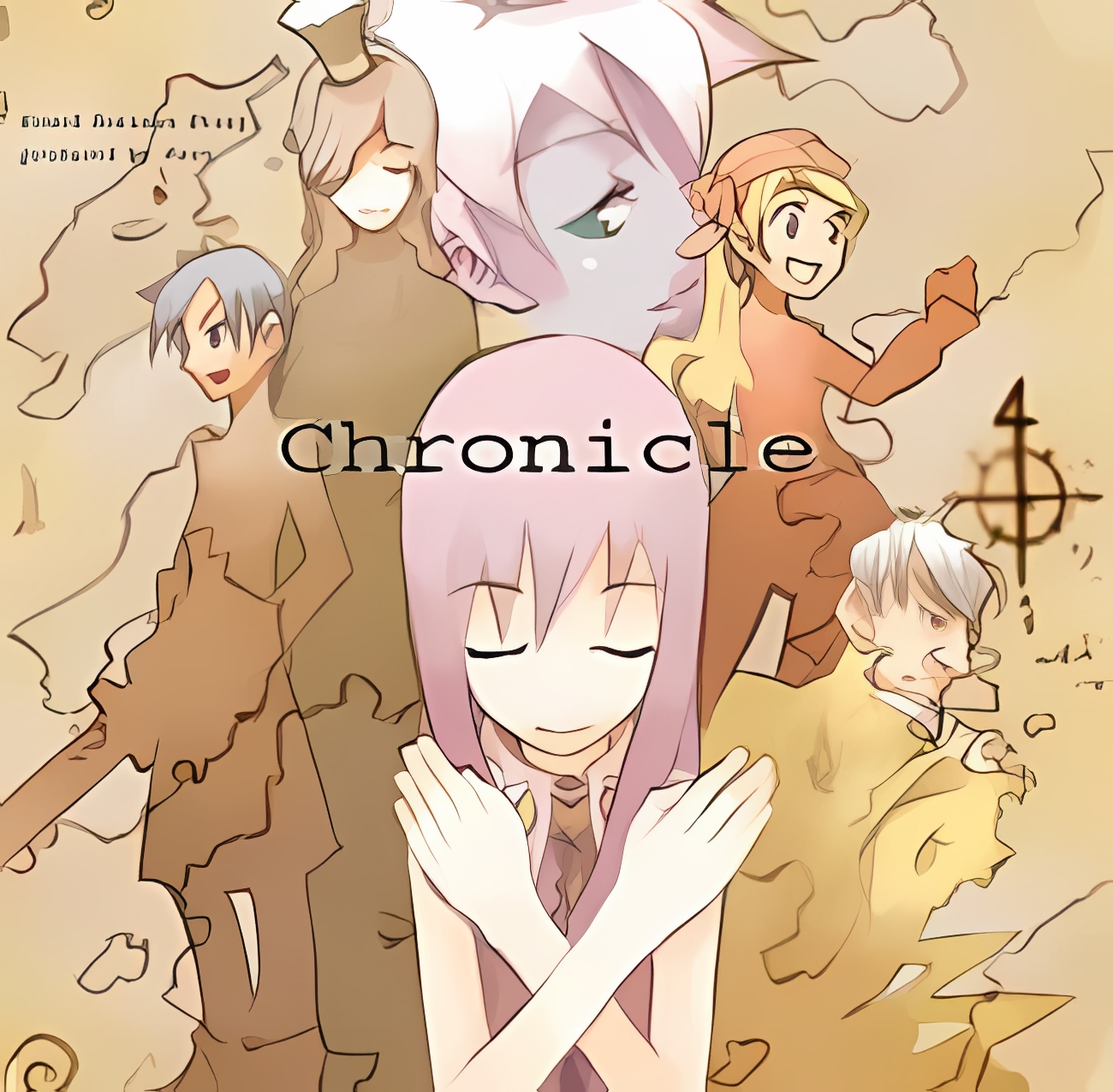

rthalley/dnspython

|

asyncio

| 277

|

On macOS, dnspython cannot resolve some TLD domains.

|

On macOS Sierra 10.12.4:

pip list |grep dns

dnspython (1.15.0)

On Kali Linux:

pip list |grep dns

dnspython (1.15.0)

|

closed

|

2017-09-07T18:58:38Z

|

2017-09-08T08:38:12Z

|

https://github.com/rthalley/dnspython/issues/277

|

[] |

eldraco

| 2

|

google-research/bert

|

nlp

| 1,195

|

How can we fine-tune BERT using multiple GPUs?

|

open

|

2021-01-20T15:14:49Z

|

2021-01-20T15:14:49Z

|

https://github.com/google-research/bert/issues/1195

|

[] |

FatmaSayedAhmed

| 0

|

|

flasgger/flasgger

|

flask

| 141

|

Validation of the API payload does not pass if optional fields are of a type other than string

|

I wrote this in a YAML file. Validation passes only if there is no optional field (a field not listed as required in the definitions) of type object or array, such as `approval_list` in the code below.

```
Create new Deployment Unit
---
tags:
  - Deployment Unit
parameters:
  - name: Token
    in: header
    description: API key
    required: true
    type: string
    format: string
  - name: input
    in: body
    description: Deployment Unit data
    required: true
    type: string
    format: string
    schema:
      $ref: "#/definitions/DeploymentUnit" # <---------
responses:
  200:
    description: New Deployment Unit has been added successfully
    schema:
      $ref: "#/definitions/Data" # <---------
    examples:
      result: "success"
      message: "DeploymentUnit and deployment fields created successfully"
  404:
    description: exception in adding Deployment Unit
    examples:
      result: "failed"
      message: "Throws an exception"
definitions:
  DeploymentUnit: # <----------
    type: object
    required:
      - name
      - type
    properties:
      name:
        type: string
      type:
        type: string
      release_notes:
        type: string
      branch:
        type: string
      approval_status:
        type: string
      approval_list:
        type: array
        items:
          type: object
          properties:
            approval_status:
              type: string
            approved_by:
              type: string
            approved_date:
              type: string
  Data:
    type: object
    properties:
      data:
        type: string
      result:
        type: string
      message:
        type: string
```
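The behavior the reporter expects matches standard JSON Schema semantics. A property listed under `properties` but not under `required` is only checked when it is present, and an array or object value is then checked against its own subschema. The pure-Python sketch below illustrates that rule for the few keywords this definition uses (`type`, `required`, `properties`, `items`). It is a hypothetical illustration, not flasgger's validation code.

```python
from typing import Any, List

# Subset of JSON Schema types used by the DeploymentUnit definition.
_TYPE_CHECKS = {
    "string": lambda v: isinstance(v, str),
    "object": lambda v: isinstance(v, dict),
    "array": lambda v: isinstance(v, list),
}


def validate(instance: Any, schema: dict, path: str = "$") -> List[str]:
    """Return a list of error messages (empty if `instance` matches `schema`)."""
    expected = schema.get("type")
    if expected and not _TYPE_CHECKS[expected](instance):
        return [f"{path}: expected {expected}"]
    errors = []
    if expected == "object":
        for name in schema.get("required", []):
            if name not in instance:
                errors.append(f"{path}: missing required field {name!r}")
        for name, subschema in schema.get("properties", {}).items():
            if name in instance:  # optional fields are only validated when present
                errors.extend(validate(instance[name], subschema, f"{path}.{name}"))
    elif expected == "array" and "items" in schema:
        for i, item in enumerate(instance):
            errors.extend(validate(item, schema["items"], f"{path}[{i}]"))
    return errors


DEPLOYMENT_UNIT = {
    "type": "object",
    "required": ["name", "type"],
    "properties": {
        "name": {"type": "string"},
        "type": {"type": "string"},
        "release_notes": {"type": "string"},
        "branch": {"type": "string"},
        "approval_status": {"type": "string"},
        "approval_list": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "approval_status": {"type": "string"},
                    "approved_by": {"type": "string"},
                    "approved_date": {"type": "string"},
                },
            },
        },
    },
}
```

Under these rules, a payload with a well-formed `approval_list` and a payload without it should both validate.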

|

open

|

2017-08-03T10:12:24Z

|

2018-10-01T17:31:30Z

|

https://github.com/flasgger/flasgger/issues/141

|

[

"hacktoberfest"

] |

VjSng

| 2

|

xinntao/Real-ESRGAN

|

pytorch

| 905

|

After executing the `python inference_realesrgan.py -n RealESRGAN_x4plus -i inputs` command, it gets stuck with no further output

|

**The environment is as follows:**

(ml-env) PS C:\workspace\ml\Real-ESRGAN\Real-ESRGAN> pip list

Package Version Editable project location

----------------------- --------------- ---------------------------------------

absl-py 2.1.0

addict 2.4.0

autocommand 2.2.2

backports.tarfile 1.2.0

basicsr 1.4.2

certifi 2025.1.31

charset-normalizer 3.4.1

colorama 0.4.6

contourpy 1.3.1

cycler 0.12.1

facexlib 0.3.0

filelock 3.18.0

filterpy 1.4.5

fonttools 4.56.0

fsspec 2025.3.0

future 1.0.0

gfpgan 1.3.8

grpcio 1.71.0

idna 3.10

imageio 2.37.0

importlib_metadata 8.0.0

inflect 7.3.1

jaraco.collections 5.1.0

jaraco.context 5.3.0

jaraco.functools 4.0.1

jaraco.text 3.12.1

Jinja2 3.1.6

kiwisolver 1.4.8

lazy_loader 0.4

llvmlite 0.44.0

lmdb 1.6.2

Markdown 3.7

MarkupSafe 3.0.2

matplotlib 3.10.1

more-itertools 10.3.0

mpmath 1.3.0

networkx 3.4.2

numba 0.61.0

numpy 2.1.3

opencv-python 4.11.0.86

packaging 24.2

pillow 11.1.0

pip 25.0.1

platformdirs 4.3.6

protobuf 6.30.1

pyparsing 3.2.1

python-dateutil 2.9.0.post0

PyYAML 6.0.2

realesrgan 0.3.0 C:\workspace\ml\Real-ESRGAN\Real-ESRGAN

requests 2.32.3

scikit-image 0.25.2

scipy 1.15.2

setuptools 76.1.0

six 1.17.0

sympy 1.13.1

tb-nightly 2.20.0a20250318

tensorboard-data-server 0.7.2

tifffile 2025.3.13

tomli 2.0.1

torch 2.6.0

torchvision 0.21.0

tqdm 4.67.1

typeguard 4.3.0

typing_extensions 4.12.2

urllib3 2.3.0

Werkzeug 3.1.3

wheel 0.43.0

yapf 0.43.0

zipp 3.19.2

**Execute the following command:**

python inference_realesrgan.py -n RealESRGAN_x4plus -i inputs

Testing 0 00017_gray

**Output after manual cancellation via ctrl+c:**

Traceback (most recent call last):

File "C:\workspace\ml\Real-ESRGAN\Real-ESRGAN\inference_realesrgan.py", line 166, in <module>

main()

File "C:\workspace\ml\Real-ESRGAN\Real-ESRGAN\inference_realesrgan.py", line 147, in main

output, _ = upsampler.enhance(img, outscale=args.outscale)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\utils\_contextlib.py", line 116, in decorate_context

return func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\Real-ESRGAN\Real-ESRGAN\realesrgan\utils.py", line 223, in enhance

self.process()

File "C:\workspace\ml\Real-ESRGAN\Real-ESRGAN\realesrgan\utils.py", line 115, in process

self.output = self.model(self.img)

^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\nn\modules\module.py", line 1739, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\nn\modules\module.py", line 1750, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\basicsr\archs\rrdbnet_arch.py", line 113, in forward

body_feat = self.conv_body(self.body(feat))

^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\nn\modules\module.py", line 1739, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\nn\modules\module.py", line 1750, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\nn\modules\container.py", line 250, in forward

input = module(input)

^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\nn\modules\module.py", line 1739, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\nn\modules\module.py", line 1750, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\basicsr\archs\rrdbnet_arch.py", line 60, in forward

out = self.rdb2(out)

^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\nn\modules\module.py", line 1739, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\nn\modules\module.py", line 1750, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\basicsr\archs\rrdbnet_arch.py", line 35, in forward

x3 = self.lrelu(self.conv3(torch.cat((x, x1, x2), 1)))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\nn\modules\module.py", line 1739, in _wrapped_call_impl

return self._call_impl(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\nn\modules\module.py", line 1750, in _call_impl

return forward_call(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\nn\modules\conv.py", line 554, in forward

return self._conv_forward(input, self.weight, self.bias)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\workspace\ml\ml-env\Lib\site-packages\torch\nn\modules\conv.py", line 549, in _conv_forward

return F.conv2d(

^^^^^^^^^

KeyboardInterrupt

|

open

|

2025-03-19T06:08:30Z

|

2025-03-19T08:29:04Z

|

https://github.com/xinntao/Real-ESRGAN/issues/905

|

[] |

Le1q

| 1

|

encode/databases

|

sqlalchemy

| 161

|

Getting row count from an update

|

I'm aware of e.g. #108, but what is the best way, for now, to get the row count for an UPDATE or DELETE? I'm on Postgres, if that makes a difference.
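One common workaround is to add `RETURNING` to the statement and count the returned rows. It can be sketched with the standard-library `sqlite3` module, which needs SQLite 3.35 or newer for `RETURNING`. Postgres also supports `RETURNING`, so the same SQL should work there. With `databases`, the analogous step would presumably be `fetch_all` on that query followed by `len()`, but that is an untested assumption. The `tasks` table and its columns are hypothetical.

```python
import sqlite3


def update_returning_count(conn: sqlite3.Connection, new_status: str, old_status: str) -> int:
    """Update matching rows and return how many were affected, via UPDATE ... RETURNING.

    The `tasks` table and its columns are hypothetical; RETURNING needs SQLite >= 3.35.
    """
    rows = conn.execute(
        "UPDATE tasks SET status = ? WHERE status = ? RETURNING id",
        (new_status, old_status),
    ).fetchall()  # fetch every returned row so the statement runs to completion
    return len(rows)
```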

|

open

|

2019-11-15T15:00:25Z

|

2019-12-11T11:11:26Z

|

https://github.com/encode/databases/issues/161

|

[] |

knyghty

| 6

|

Lightning-AI/LitServe

|

fastapi

| 121

|

during manual local testing, the processes are not killed if the test fails

|

We need to terminate the processes if a test fails for whatever reason:

## Current

```python
import subprocess
import time

import requests


def test_e2e_default_batching(killall):
    process = subprocess.Popen(
        ["python", "tests/e2e/default_batching.py"],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
        stdin=subprocess.DEVNULL,
    )
    time.sleep(5)
    resp = requests.post("http://127.0.0.1:8000/predict", json={"input": 4.0}, headers=None)
    assert resp.status_code == 200, f"Expected response to be 200 but got {resp.status_code}"
    assert resp.json() == {"output": 16.0}, "tests/simple_server.py didn't return expected output"
    # Only reached if every assertion passes; a failure leaves the server process running.
    killall(process)
```

## Proposed

```py
import subprocess
import time

import requests


def test_e2e_default_batching(killall):
    process = subprocess.Popen(
        ["python", "tests/e2e/default_batching.py"],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
        stdin=subprocess.DEVNULL,
    )
    time.sleep(5)
    try:
        resp = requests.post("http://127.0.0.1:8000/predict", json={"input": 4.0}, headers=None)
        assert resp.status_code == 200, f"Expected response to be 200 but got {resp.status_code}"
        assert resp.json() == {"output": 16.0}, "tests/simple_server.py didn't return expected output"
    finally:  # kill the process before any exception propagates
        killall(process)
```
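The same guarantee can also be packaged as a reusable context manager, so that each test does not repeat the `try`/`finally`. The sketch below uses a hypothetical helper named `running_process`, which is not part of LitServe's test suite. It terminates the child on exit and falls back to `kill()` if the child does not stop within the timeout.

```python
import contextlib
import subprocess
from typing import Iterator, Sequence


@contextlib.contextmanager
def running_process(cmd: Sequence[str], timeout: float = 5.0) -> Iterator[subprocess.Popen]:
    """Start `cmd` and always stop it on exit, even if the body raises."""
    process = subprocess.Popen(
        list(cmd),
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
        stdin=subprocess.DEVNULL,
    )
    try:
        yield process
    finally:
        process.terminate()
        try:
            process.wait(timeout=timeout)
        except subprocess.TimeoutExpired:
            process.kill()  # escalate if terminate() was ignored
            process.wait()
```

Inside a test, `with running_process(["python", "tests/e2e/default_batching.py"]) as process:` would replace the `Popen(...)`/`killall(process)` pair. A pytest fixture that `yield`s the process gives the same cleanup.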

---

_Originally posted by @bhimrazy in https://github.com/Lightning-AI/LitServe/issues/119#issuecomment-2141734005_

|

closed

|

2024-05-31T12:02:02Z

|

2024-06-03T18:46:25Z

|

https://github.com/Lightning-AI/LitServe/issues/121

|

[

"bug",

"good first issue",

"ci / tests"

] |

aniketmaurya

| 0

|

donnemartin/system-design-primer

|

python

| 691

|

Can you make it into a book?

|

open

|

2022-07-25T03:27:19Z

|

2023-10-02T12:12:55Z

|

https://github.com/donnemartin/system-design-primer/issues/691

|

[

"needs-review"

] |

aexftf

| 2

|

|

albumentations-team/albumentations

|

deep-learning

| 1,973

|

Supported mask formats with Albumentations

|

## Your Question

From the documentation (both the API reference and the [user guide](https://albumentations.ai/docs/getting_started/mask_augmentation/) sections), it's not straightforward to understand which mask formats are supported. More importantly, it's unclear whether different mask formats can lead to different transformation outputs because of internal implementation details.

Take for example a semantic segmentation task with 3 classes: A, B, and C, each class has an associated mask Ma, Mb, Mc stored as a different file. Besides RLE encoding and similar sparse formats, the most basic ways to encode a dense mask, and augment a sample are:

* Read Ma, Mb, and Mc as NumPy arrays and store them in a Python list, e.g. `masks`. The transform API allows calling `transformed = transform(image=image, masks=masks)` to get the augmented image and masks.

* Read Ma, Mb, and Mc as NumPy arrays and stack them into a `mask` array of shape (H, W, C), where C=3 and each element is True or False. Let's refer to this as _one-hot boolean encoding_. The transform API allows calling `transformed = transform(image=image, mask=mask)` to get the augmented image and mask pair.

* Read Ma, Mb, and Mc as NumPy arrays and encode them in a `mask` array of shape (H, W), where each element is the class index (0, 1, 2). Let's refer to this as _integer tensor encoding_. Then I can call `transformed = transform(image=image, mask=mask)` to get the augmented image and mask pair.

Now, my questions are:

* Does Albumentations support all of the 3 types of encodings for every transform?

* Does the encoding type affect the output of a given transformation?

* Is one approach better than another in terms of performance?
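When every pixel belongs to exactly one class, the three encodings carry the same information and can be converted into one another with NumPy. The helpers below are hypothetical and only illustrate those conversions. They do not answer how individual Albumentations transforms treat each format. If the per-class masks can overlap, the integer index encoding loses information.

```python
import numpy as np


def masks_to_one_hot(masks):
    """List of C binary (H, W) masks -> boolean array of shape (H, W, C)."""
    return np.stack([np.asarray(m, dtype=bool) for m in masks], axis=-1)


def one_hot_to_index(one_hot):
    """(H, W, C) one-hot array -> (H, W) integer class-index mask.

    Assumes exactly one True channel per pixel; argmax returns the first True channel.
    """
    return np.argmax(one_hot, axis=-1).astype(np.int64)


def index_to_masks(index_mask, num_classes):
    """(H, W) class-index mask -> list of `num_classes` boolean (H, W) masks."""
    return [index_mask == c for c in range(num_classes)]
```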

|

open

|

2024-10-07T16:07:00Z

|

2024-10-08T20:13:27Z

|

https://github.com/albumentations-team/albumentations/issues/1973

|

[

"question"

] |

PRFina

| 1

|

FlareSolverr/FlareSolverr

|

api

| 1,085

|

Error solving the challenge. Timeout after 60.0 seconds

|

### Have you checked our README?

- [X] I have checked the README

### Have you followed our Troubleshooting?

- [X] I have followed your Troubleshooting

### Is there already an issue for your problem?

- [X] I have checked older issues, open and closed

### Have you checked the discussions?

- [X] I have read the Discussions

### Environment

```markdown

- FlareSolverr version: 3.3.14

- Last working FlareSolverr version: 3.3.13

- Operating system: Unraid

- Are you using Docker: [yes/no] yes

- FlareSolverr User-Agent (see log traces or / endpoint): Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36

- Are you using a VPN: [yes/no] no

- Are you using a Proxy: [yes/no] no

- Are you using Captcha Solver: [yes/no] no

- If using captcha solver, which one:

- URL to test this issue: https://1337x.to/cat/Movies/time/desc/1/

```

### Description

Since updating to 3.3.14 I have been noticing FlareSolverr is not working for ANY of the indexers I use it with (1337x, ExtraTorrent.st, iDope).

Communication test between Prowlarr and FlareSolverr is successful, but tests from an indexer through FlareSolverr fail.

I have also tried ensuring Prowlarr is up to date, and tried rebooting containers / host to no avail.

### Logged Error Messages

```text

2024-02-20 19:43:34 INFO Incoming request => POST /v1 body: {'maxTimeout': 60000, 'cmd': 'request.get', 'url': 'https://1337x.to/cat/Movies/time/desc/1/', 'proxy': {}}

version_main cannot be converted to an integer

2024-02-20 19:43:35 INFO Challenge detected. Title found: Just a moment...

2024-02-20 19:44:35 ERROR Error: Error solving the challenge. Timeout after 60.0 seconds.

2024-02-20 19:44:35 INFO Response in 60.708 s

2024-02-20 19:44:35 INFO 192.168.1.61 POST http://192.168.1.59:8191/v1 500 Internal Server Error

```
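To reproduce the failing call outside Prowlarr, the request shown in the log can be sent with the standard library. It posts the fields `cmd`, `url`, and `maxTimeout` to `/v1`. This is a minimal sketch, and the helper names are hypothetical.

```python
import json
import urllib.request


def build_flaresolverr_request(base_url: str, target_url: str, max_timeout_ms: int = 60000) -> urllib.request.Request:
    """Build the same POST /v1 body seen in the log: cmd, url, maxTimeout."""
    payload = {"cmd": "request.get", "url": target_url, "maxTimeout": max_timeout_ms}
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout_s: float = 90.0) -> dict:
    """Send the request and decode the JSON reply.

    A 500 response (as in the log) raises urllib.error.HTTPError; its body can be read from the exception.
    """
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `send(build_flaresolverr_request("http://192.168.1.59:8191", "https://1337x.to/cat/Movies/time/desc/1/"))` would repeat the logged request. The 90-second client timeout leaves room for FlareSolverr's own 60-second limit.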

### Screenshots

_No response_

|

closed

|

2024-02-20T19:48:46Z

|

2024-02-20T19:50:46Z

|

https://github.com/FlareSolverr/FlareSolverr/issues/1085

|

[] |

nickydd9

| 1

|

deepset-ai/haystack

|

nlp

| 8,366

|

Remove deprecated `Pipeline` init argument `debug_path`

|

The argument `debug_path` was deprecated in PR #8364; the deprecation will be released with Haystack `2.6.0`.

We need to remove it before releasing version `2.7.0`.

|

closed

|

2024-09-16T07:52:13Z

|

2024-09-30T15:11:50Z

|

https://github.com/deepset-ai/haystack/issues/8366

|

[

"breaking change",

"P3"

] |

silvanocerza

| 0

|

fugue-project/fugue

|

pandas

| 377

|

[FEATURE] Create bag

|

Fugue has been built on top of the DataFrame concept. A collection of arbitrary objects can be converted to a DataFrame to be distributed in Fugue, but doing so is not always efficient or intuitive. Spark (RDD), Dask (Bag), and even Ray all have separate ways to handle a distributed collection of arbitrary objects, so Fugue should have a corresponding concept. One immediate benefit: when distributing a collection of tasks, we no longer need to think of it as a DataFrame.

Regarding the name, `bag` is a really nice name and a well-defined mathematical term (see https://en.wikipedia.org/wiki/Multiset). A bag is unordered and platform/scale agnostic, which matches Fugue's design philosophy. This is also why Dask uses the name.
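The multiset semantics behind the name can be illustrated with a local-only `Bag` built on `collections.Counter`: duplicates are kept and order does not matter. This class is hypothetical, not Fugue's API, and has no distribution. For simplicity it only accepts hashable items, whereas the proposal targets arbitrary objects.

```python
from collections import Counter
from typing import Hashable, Iterable, Iterator


class Bag:
    """Hypothetical local bag (multiset): duplicates are kept, order is ignored."""

    def __init__(self, items: Iterable[Hashable] = ()):
        self._counts = Counter(items)

    def __iter__(self) -> Iterator[Hashable]:
        return self._counts.elements()  # iteration order carries no meaning

    def __len__(self) -> int:
        return sum(self._counts.values())

    def __eq__(self, other: object) -> bool:
        if not isinstance(other, Bag):
            return NotImplemented
        return self._counts == other._counts  # same elements with same multiplicities

    def count(self, item: Hashable) -> int:
        return self._counts[item]

    def union(self, other: "Bag") -> "Bag":
        """Additive union: multiplicities are summed."""
        merged = Bag()
        merged._counts = self._counts + other._counts
        return merged
```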

For the initial version, we don't plan to add as many features as RDD has. One major feature NOT planned for v1 is partitioning and shuffling, because these require a DataFrame.

|

closed

|

2022-10-22T05:14:59Z

|

2022-11-17T05:32:59Z

|

https://github.com/fugue-project/fugue/issues/377

|

[

"enhancement",

"high priority",

"programming interface",

"core feature",

"bag"

] |

goodwanghan

| 0

|

zappa/Zappa

|

flask

| 1,111

|

“python_requires” should be set with “>=3.6, <3.10”, as zappa 0.54.1 is not compatible with all Python versions.

|

Currently, the keyword argument **python_requires** of **setup()** is not set, and thus it is assumed that this distribution is compatible with all Python versions.

However, I found the following code checking Python compatibility locally in **zappa/\_\_init\_\_.py**

```python
SUPPORTED_VERSIONS = [(3, 6), (3, 7), (3, 8), (3, 9)]

if sys.version_info[:2] not in SUPPORTED_VERSIONS:
    ……
    raise RuntimeError(err_msg)
```

I think declaring Python compatibility with the keyword argument **python_requires** is better than checking compatibility locally, for several reasons:

* Descriptions in **python_requires** will be reflected in the metadata

* “pip install” can check such metadata on the fly during distribution selection and avoid downloading and installing incompatible package versions.

* If the user does not specify any version constraint, pip can automatically choose the latest compatible package version for users.

Way to improve:

Modify **setup()** in **setup.py** to add the **python_requires** keyword argument:

```python
setup(
    …
    python_requires=">=3.6, <3.10",
    …
)
```
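The proposed specifier admits the same interpreters as the runtime check. The small sketch below compares the two on version tuples. It is a simplified tuple comparison for illustration, not pip's specifier matching.

```python
SUPPORTED_VERSIONS = [(3, 6), (3, 7), (3, 8), (3, 9)]


def passes_local_check(version) -> bool:
    """Mirror of the runtime check in zappa/__init__.py (compares major.minor only)."""
    return tuple(version[:2]) in SUPPORTED_VERSIONS


def satisfies_python_requires(version, lower=(3, 6), upper=(3, 10)) -> bool:
    """Simplified reading of python_requires=">=3.6, <3.10" on (major, minor, ...) tuples."""
    return lower <= tuple(version[:2]) < upper
```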

Thanks for your attention.

Best regards,

PyVCEchecker

|

closed

|

2022-02-22T03:46:22Z

|

2022-08-05T10:36:23Z

|

https://github.com/zappa/Zappa/issues/1111

|

[] |

PyVCEchecker

| 2

|

junyanz/pytorch-CycleGAN-and-pix2pix

|

deep-learning

| 789

|

conda install dependencies: downgrade python to 2.x

|

I am a conda user.

When I run `./scripts/conda_deps.sh` to install dependencies, it tries to downgrade my Python from 3.x to 2.x:

`The following packages will be DOWNGRADED:

python: 3.6.5-hc3d631a_2 --> 2.7.16-h9bab390_7 `

|

open

|

2019-10-11T09:03:11Z

|

2019-10-12T08:54:54Z

|

https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/789

|

[] |

H0icky

| 2

|

Evil0ctal/Douyin_TikTok_Download_API

|

api

| 284

|

[BUG] /tiktok_profile_videos: the get_tiktok_user_profile_videos method is not defined

|

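***Platform where the error occurred?***

TikTok: /tiktok_profile_videos/ does not define the get_tiktok_user_profile_videos method

***Endpoint where the error occurred?***

e.g.: API-V1/API-V2/Web APP

***Submitted input value?***

Short video link

***Did you try again?***

e.g.: Yes, the error still persisted X time after it first occurred.

***Have you read this project's README or API documentation?***

e.g.: Yes, and I am quite sure the problem is caused by the program.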

|

closed

|

2023-09-28T07:14:00Z

|

2023-09-29T10:46:02Z

|

https://github.com/Evil0ctal/Douyin_TikTok_Download_API/issues/284

|

[

"BUG"

] |

wahahababaozhou

| 1

|

littlecodersh/ItChat

|

api

| 22

|

Is there a way to forward a personal contact card, or the card of an official account I follow?

|

This issue collects discussion about forwarding personal contact cards, official accounts, and articles.

|

closed

|

2016-06-18T13:48:56Z

|

2016-11-13T12:13:35Z

|

https://github.com/littlecodersh/ItChat/issues/22

|

[

"enhancement",

"help wanted"

] |

jireh-he

| 12

|

AntonOsika/gpt-engineer

|

python

| 134

|

[windows] File system "permission denied"

|

I downloaded the new repo today, and when running a prompt I receive the following error.

It worked fine last night with the previous day's version of the repo.

I have full administrative rights.

```
Traceback (most recent call last):
  File "<frozen runpy>", line 198, in _run_module_as_main
  File "<frozen runpy>", line 88, in _run_code
  File "C:\Users\Admin\Github\gpt-engineer\gpt_engineer\main.py", line 49, in <module>
    app()
  File "C:\Users\Admin\Github\gpt-engineer\gpt_engineer\main.py", line 45, in chat
    messages = step(ai, dbs)
               ^^^^^^^^^^^^^
  File "C:\Users\Admin\Github\gpt-engineer\gpt_engineer\steps.py", line 129, in gen_code
    to_files(messages[-1]["content"], dbs.workspace)
  File "C:\Users\Admin\Github\gpt-engineer\gpt_engineer\chat_to_files.py", line 29, in to_files
    workspace[file_name] = file_content
    ~~~~~~~~~^^^^^^^^^^^
  File "C:\Users\Admin\Github\gpt-engineer\gpt_engineer\db.py", line 20, in __setitem__
    with open(self.path / key, 'w', encoding='utf-8') as f:
         ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
PermissionError: [Errno 13] Permission denied: 'C:\\Users\\Admin\\Github\\gpt-engineer\\my-new-project\\workspace'
```
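The denied path is the `workspace` directory itself, not a file inside it. One possible cause, which is an assumption and not confirmed in this report: if the file name parsed from the model's reply is empty, `self.path / key` collapses to the directory because pathlib drops empty segments, and opening a directory for writing fails (PermissionError on Windows, IsADirectoryError on POSIX). The sketch below shows a hypothetical guard; `GuardedDB` is an illustrative stand-in, not gpt-engineer's actual `DB` class.

```python
from pathlib import Path


class GuardedDB:
    """Hypothetical stand-in for a file-backed key/value store with a key check."""

    def __init__(self, path):
        self.path = Path(path).absolute()
        self.path.mkdir(parents=True, exist_ok=True)

    def __setitem__(self, key: str, val: str) -> None:
        target = self.path / key
        # An empty or "." key makes target equal to self.path (a directory);
        # open(directory, "w") then fails with PermissionError on Windows.
        if not key.strip() or target.is_dir():
            raise ValueError(f"refusing to write to directory {target}; empty file name?")
        target.parent.mkdir(parents=True, exist_ok=True)
        with open(target, "w", encoding="utf-8") as f:
            f.write(val)
```

Raising a `ValueError` that names the offending key makes a bad parse visible, instead of surfacing as an OS permission error.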

|

closed

|

2023-06-18T01:23:25Z

|

2023-07-12T12:00:32Z

|

https://github.com/AntonOsika/gpt-engineer/issues/134

|

[] |

DoLife

| 4

|

biolab/orange3

|

scikit-learn

| 6,769

|

Unable to run when there is a logging.py in the directory Orange is launched in

|

Similar issue to https://github.com/jupyter/notebook/issues/4892
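The underlying mechanism is module shadowing: when Python is started with `-c` or `-m`, the current working directory goes to the front of `sys.path`, so a local `logging.py` is imported instead of the standard library module. That Orange is affected because it is launched this way (for example `python -m Orange.canvas`) is an assumption. A minimal sketch that reproduces the effect in a temporary directory (`logging_module_origin` is a hypothetical helper):

```python
import os
import subprocess
import sys
import tempfile
from pathlib import Path


def logging_module_origin(workdir: str) -> str:
    """Return the file that `import logging` resolves to when Python runs in workdir."""
    env = dict(os.environ)
    env.pop("PYTHONSAFEPATH", None)  # 3.11+: would stop cwd being prepended to sys.path
    result = subprocess.run(
        [sys.executable, "-c", "import logging; print(logging.__file__)"],
        cwd=workdir, env=env, capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        print("clean dir:   ", logging_module_origin(d))
        (Path(d) / "logging.py").write_text("# shadows the stdlib module\n")
        print("shadowed dir:", logging_module_origin(d))
```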

|

closed

|

2024-03-21T09:09:14Z

|

2024-04-12T07:20:43Z

|

https://github.com/biolab/orange3/issues/6769

|

[

"bug report"

] |

zactionn

| 3

|

coqui-ai/TTS

|

python

| 3,360

|

[Bug] Cannot restore from checkpoint

|

### Describe the bug

I'm training a VITS model. When I continue a training run using

````

python TTS/bin/train_tts.py --continue_path path/to/training/model/output/checkpoint/

````

the code in `_restore_best_loss` (trainer.py, line 1720) does not check the type of `ch["model_loss"]`.

When restoring from best_model_xxx.pth or best_model.pth, `ch["model_loss"]` is a float, but when restoring from checkpoint_xxx.pth it is a dict.

At the end of an epoch the trainer compares this value with a loss value, raises an error saying that a 'dict' cannot be compared with a real number, and the training process exits.

For now, I have modified the code as follows to avoid this problem.

````
def _restore_best_loss(self):
    """Restore the best loss from the args.best_path if provided else
    from the model (`args.continue_path`) used for resuming the training"""
    if self.args.continue_path and (self.restore_step != 0 or self.args.best_path):
        logger.info(" > Restoring best loss from %s ...", os.path.basename(self.args.best_path))
        ch = load_fsspec(self.args.restore_path, map_location="cpu")
        if "model_loss" in ch:
            the_loss = ch["model_loss"]
            # checkpoint_xxx.pth stores a dict of losses; best_model*.pth stores a number
            if isinstance(the_loss, dict):
                self.best_loss = the_loss["train_loss"]
            else:
                self.best_loss = the_loss
        logger.info(" > Starting with loaded last best loss %f", self.best_loss)
````
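The same normalization can be pulled out into a pure function, which makes it testable without a trainer. This is a sketch: `normalize_best_loss` is a hypothetical name, and it assumes, as the workaround above does, that the dict form stores the relevant value under `"train_loss"`.

```python
from numbers import Real
from typing import Mapping, Union


def normalize_best_loss(model_loss: Union[Real, Mapping[str, Real]]) -> float:
    """Return a plain number from a checkpoint's "model_loss" entry.

    Per the report, best_model*.pth stores a number while checkpoint_*.pth
    stores a dict; the workaround reads its "train_loss" entry.
    """
    if isinstance(model_loss, Mapping):
        return float(model_loss["train_loss"])
    return float(model_loss)
```

Without this step, the end-of-epoch comparison fails the way the report describes: `{"train_loss": 0.25} < 0.5` raises a `TypeError`.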

### To Reproduce

Resume the training process from a checkpoint, e.g. one written on Ctrl-C or because of 'save_best_after'.

### Expected behavior

Training continues without the process exiting.

### Logs

_No response_

### Environment

```shell

Windows 10 with RTX3060

Colab With T4

Git Branch : Dev (11ec9f7471620ebaa57db7ff5705254829ffe516)

I encounter the issue in both environments.

```

### Additional context

_No response_

|

closed

|

2023-12-04T02:53:38Z

|

2023-12-07T13:21:33Z

|

https://github.com/coqui-ai/TTS/issues/3360

|

[

"bug"

] |

YuboLong

| 2

|

JoeanAmier/XHS-Downloader

|

api

| 9

|

Can I enter an author's profile page link and download all of that author's posts?

|

Could author profile page links be entered in xhs.txt to enable batch downloading?

|

open

|

2023-11-04T08:12:59Z

|

2023-11-05T06:24:16Z

|

https://github.com/JoeanAmier/XHS-Downloader/issues/9

|

[] |

wwkk2580

| 3

|

cvat-ai/cvat

|

computer-vision

| 8,899

|

Problem with exporting annotations to Datumaro

|

### Actions before raising this issue

- [X] I searched the existing issues and did not find anything similar.

- [X] I read/searched [the docs](https://docs.cvat.ai/docs/)

### Steps to Reproduce

When exporting my annotations to Datumaro format, I get the following error: `ValueError: could not broadcast input array from shape (13,11) into shape (0,11)`.

Exporting the annotations in CVAT format works, but exporting to COCO or Datumaro does not. I also tried converting them with the Datumaro CLI, without success; I get the same error message.

### Expected Behavior

The annotations are exported correctly in Datumaro format.

### Possible Solution

I looked through the downloaded CVAT-format annotations for empty annotations, but I am not sure whether this is the right approach or what the best way to search for them is.
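Searching the CVAT-format export for empty annotations can be scripted with the standard library. A minimal sketch, assuming the "CVAT for images 1.1" XML layout, where `<polygon>`, `<polyline>` and `<points>` shapes inside each `<image>` carry their vertices in a `points` attribute such as `"x1,y1;x2,y2"`. `find_empty_shapes` and `POINT_SHAPES` are hypothetical names, and whether an empty shape is what triggers the broadcasting error is not established here; the script only lists candidates.

```python
import xml.etree.ElementTree as ET

# Assumed CVAT-for-images shape tags whose geometry lives in a "points" attribute.
POINT_SHAPES = ("polygon", "polyline", "points")


def find_empty_shapes(xml_text: str):
    """Return (image name, shape tag, label) for point-based shapes with no vertices."""
    root = ET.fromstring(xml_text)
    empty = []
    for image in root.iter("image"):
        for tag in POINT_SHAPES:
            for shape in image.iter(tag):
                if not shape.get("points", "").strip():
                    empty.append((image.get("name"), tag, shape.get("label")))
    return empty
```

Usage: `find_empty_shapes(Path("annotations.xml").read_text())` on the extracted export, then inspect or delete the listed shapes in CVAT before retrying the Datumaro export.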

### Context