| repo_name (string, 9 to 75 chars) | topic (string, 30 classes) | issue_number (int64, 1 to 203k) | title (string, 1 to 976 chars) | body (string, 0 to 254k chars) | state (string, 2 classes) | created_at (string, 20 chars) | updated_at (string, 20 chars) | url (string, 38 to 105 chars) | labels (list, 0 to 9 items) | user_login (string, 1 to 39 chars) | comments_count (int64, 0 to 452) |

|---|---|---|---|---|---|---|---|---|---|---|---|

serengil/deepface

|

machine-learning

| 924

|

Unable to find good face match with deepface and Annoy

|

Hi

I am using the following code from Serengil's YouTube tutorial to find the best face match. The embeddings come from deepface, and Annoy's approximate nearest-neighbour search (ANNS) is used to find the best-matching face.

This code does not return a good match, unlike the results shown in Serengil's YouTube video.

I'm looking for help understanding why this code does not return the best-matching face.

Thanks

    import os
    import random
    import time

    import matplotlib.pyplot as plt
    from annoy import AnnoyIndex
    from deepface.basemodels import Facenet
    from deepface.commons import functions
    from keras_facenet import FaceNet

    # Collect the paths of all .jpg images under the dataset directory
    facial_images = []
    for root, directories, files in os.walk("../../deepface/deepface/tests/dataset"):
        for file in files:
            if file.endswith(".jpg"):
                exact_path = os.path.join(root, file)
                facial_images.append(exact_path)

    embedder = FaceNet()  # alternative embedder (keras_facenet), unused below
    model = Facenet.loadModel()

    representations = []
    min_val = float("inf")   # smallest embedding component seen so far
    max_val = float("-inf")  # largest embedding component seen so far

    for face in facial_images:
        img = functions.preprocess_face(img=face, target_size=(160, 160))
        # embedding = embedder.embeddings(img)
        embedding = model.predict(img)[0, :]

        min_val = min(min_val, embedding.min())
        max_val = max(max_val, embedding.max())

        # Store the image path (like the dummy items below) so results can be identified
        representations.append([face, embedding])

    # Synthetic data to enlarge the data set: random vectors within the observed range
    for i in range(len(representations), 100000):
        filename = "dummy_%d.jpg" % i
        vector = [random.uniform(min_val, max_val) for _ in range(128)]
        representations.append([filename, vector])

    # Build an Annoy index over 128-dimensional vectors with Euclidean distance
    t = AnnoyIndex(128, "euclidean")
    for i, (_, vector) in enumerate(representations):
        t.add_item(i, vector)
    t.build(3)  # number of trees: more trees give better accuracy but a larger index

    idx = 0  # query with the first real face
    k = 2    # the query item itself is usually returned as the first neighbour

    start = time.time()
    neighbors = t.get_nns_by_item(idx, k)
    end = time.time()

    print("get_nns_by_item took", end - start, "seconds")
    print(neighbors)
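
Annoy returns *approximate* neighbours, and with only `t.build(3)` (three trees) over 100,000 vectors its recall may be low. One way to tell whether the index or the embeddings are at fault is to compare Annoy's answer with an exact brute-force search over the same vectors. The sketch below uses only the standard library; `euclidean`, `exact_knn`, and `synthetic_vectors` are hypothetical helper names, and the last one also illustrates that `random.gauss` takes a mean and a standard deviation, not a minimum and a maximum.

```python
import math
import random


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def exact_knn(vectors, query_idx, k):
    """Indices of the k vectors closest to vectors[query_idx], by exhaustive search.

    The query item itself (distance 0) normally comes first, mirroring what
    Annoy's get_nns_by_item usually returns.
    """
    query = vectors[query_idx]
    order = sorted(range(len(vectors)), key=lambda i: euclidean(query, vectors[i]))
    return order[:k]


def synthetic_vectors(n, dim, mu, sigma, seed=0):
    """n random vectors; random.gauss(mu, sigma) draws from a normal distribution."""
    rng = random.Random(seed)
    return [[rng.gauss(mu, sigma) for _ in range(dim)] for _ in range(n)]
```

For instance, comparing `exact_knn([r[1] for r in representations], 0, 2)` with `t.get_nns_by_item(0, 2)` shows whether the approximation is the issue; if both agree and the match still looks wrong, the embeddings or preprocessing are the more likely cause. A pure-Python scan of 100,000 vectors is slow, so a subset may be enough.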

|

closed

|

2023-12-20T08:22:34Z

|

2023-12-20T08:46:59Z

|

https://github.com/serengil/deepface/issues/924

|

[

"question"

] |

dumbogeorge

| 2

|

mwaskom/seaborn

|

matplotlib

| 2,821

|

Calling `sns.heatmap()` changes matplotlib rcParams

|

See the following example:

```python
import matplotlib as mpl
import matplotlib.pyplot as plt
import seaborn as sns

mpl.rcParams["figure.dpi"] = 120
mpl.rcParams["figure.facecolor"] = "white"
mpl.rcParams["figure.figsize"] = (9, 6)

data = sns.load_dataset("iris")

print(mpl.rcParams["figure.dpi"])
print(mpl.rcParams["figure.facecolor"])
print(mpl.rcParams["figure.figsize"])
# 120.0
# white
# [9.0, 6.0]

fig, ax = plt.subplots()
sns.heatmap(data.corr(), vmin=-1, vmax=1, center=0, annot=True, linewidths=4, ax=ax);

print(mpl.rcParams["figure.dpi"])
print(mpl.rcParams["figure.facecolor"])
print(mpl.rcParams["figure.figsize"])
# 72.0
# (1, 1, 1, 0)
# [6.0, 4.0]
```

If I set them again:

```python
mpl.rcParams["figure.dpi"] = 120
mpl.rcParams["figure.facecolor"] = "white"
mpl.rcParams["figure.figsize"] = (9, 6)
```

then everything works as expected, but I don't understand why `sns.heatmap()` changes the rcParams in the first place.

**Edit:** These are the versions I'm using:

```
Last updated: Wed May 25 2022

Python implementation: CPython
Python version       : 3.9.12
IPython version      : 8.3.0

matplotlib: 3.5.2
seaborn   : 0.11.2
sys       : 3.9.12 | packaged by conda-forge | (main, Mar 24 2022, 23:25:59) [GCC 10.3.0]

Watermark: 2.3.0
```

|

closed

|

2022-05-25T19:16:45Z

|

2022-05-27T11:13:29Z

|

https://github.com/mwaskom/seaborn/issues/2821

|

[] |

tomicapretto

| 2

|

strawberry-graphql/strawberry

|

fastapi

| 3,790

|

Incorrect typing for the `type` decorator

|

## Describe the Bug

The `type` function is decorated with

```python
@dataclass_transform(
    order_default=True, kw_only_default=True, field_specifiers=(field, StrawberryField)
)
```

Therefore, mypy treats classes decorated with `type` as dataclasses that have ordering methods.

In particular, mypy reports an error when `__gt__` is defined on such a class.

However, `type` (that is, the underlying `_wrap_dataclass` function) does nothing to ensure that the dataclass actually has ordering methods defined.

I see multiple solutions:

- Removing the `order_default=True` part of the `dataclass_transform` decorating `type`

- Enforcing the `order=True` in `_wrap_dataclass`

- Allowing the caller to pass dataclass kwargs (as per [my previous issue](https://github.com/strawberry-graphql/strawberry/issues/2688))

## System Information

- Operating system: Ubuntu 24.04

- Strawberry version (if applicable): 0.256.1

## Additional Context

Code samples illustrating the issue:

```python
import strawberry


@strawberry.type
class MyClass:
    attr: str


k = MyClass(attr="abc")
j = MyClass(attr="def")
j > k  # TypeError: '>' not supported between instances of 'MyClass' and 'MyClass'
```

```python
import strawberry


@strawberry.type
class MyClass:
    attr: str

    def __gt__(self, other):
        return self.attr > other.attr


k = MyClass(attr="abc")
j = MyClass(attr="def")
j > k  # True

# When running mypy:
# error: You may not have a custom "__gt__" method when "order" is True  [misc]
```
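
The mismatch can be reproduced with the standard-library `dataclasses` module alone, without Strawberry or mypy. A dataclass created without `order=True` has no ordering methods, so `>` raises `TypeError` at runtime; one created with `order=True` has them, and it even refuses a user-defined `__gt__` when the class is created. Mypy's check assumes the second behaviour, while, per the runtime example above, `strawberry.type` produces the first. The class names are illustrative only, and `kw_only` is omitted for brevity.

```python
from dataclasses import dataclass


@dataclass  # no order=True: no __lt__/__gt__/... are generated
class Unordered:
    attr: str


@dataclass(order=True)  # order=True: __lt__, __le__, __gt__, __ge__ are generated
class Ordered:
    attr: str


def define_custom_gt_with_order():
    """Return the TypeError raised when a class with order=True also defines __gt__."""
    try:
        @dataclass(order=True)
        class Conflicting:
            attr: str

            def __gt__(self, other):
                return self.attr > other.attr
    except TypeError as exc:
        return exc
    return None
```

This suggests that either removing `order_default=True` from the transform or actually passing `order=True` (and rejecting custom comparison methods) would bring the type checker and the runtime back in line.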

|

open

|

2025-02-21T11:26:35Z

|

2025-02-21T11:28:42Z

|

https://github.com/strawberry-graphql/strawberry/issues/3790

|

[

"bug"

] |

Corentin-Bravo

| 0

|

521xueweihan/HelloGitHub

|

python

| 2,078

|

[Self-recommended open-source project] trzsz (trz / tsz): a file transfer tool similar to rz / sz that supports tmux

|

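## Project recommendation

- Project URL: https://github.com/trzsz/trzsz

- Category: Python

- Planned future updates:

* Support platforms other than macOS (e.g. Windows). This requires SecureCRT, Xshell, etc. to support [coprocesses](https://iterm2.com/documentation-coprocesses.html) the way iTerm2 does.

* Turn the progress bar into a modal dialog, so that an accidental key press during a transfer cannot make it fail. This requires iTerm2 to support displaying a [macOS progress bar](https://developer.apple.com/library/archive/documentation/LanguagesUtilities/Conceptual/MacAutomationScriptingGuide/DisplayProgress.html).

* Support binary upload and download in tmux control mode. This requires tmux and iTerm2 to support some additional features.

* In normal tmux mode, binary download is already supported. Supporting binary upload requires either that tmux allow its input to be set to the latin1 charset, or a stable, reliable way to convert data that tmux has converted to UTF-8 back into the original binary data.

- Project description:

[trzsz](https://trzsz.github.io/) is a simple file transfer tool. It is similar to lrzsz (rz / sz) but supports tmux, works together with iTerm2, and has a nice progress bar.

- Why I recommend it:

* When logging in to a remote machine, I use tmux to keep the session alive, but tmux does not support uploading and downloading files with rz / sz, which is very inconvenient.

* Since tmux is unwilling to support rz / sz, we designed a new pair, trz / tsz ([trzsz](https://github.com/trzsz/trzsz)), that supports tmux.

* No matter how many hops it takes to reach the remote server, you can upload and download files easily, without the hassle of relaying files through intermediate hosts as with scp.

* The original rz / sz implementation is rather bare-bones: it doesn't even have a progress bar, so you can't tell how long an upload or download will take, or whether it has stalled.

* trzsz (trz / tsz) has a nice progress bar. It is not a modal dialog yet, but it clearly shows upload and download progress.

- Screenshots:

* Upload example

* Download example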

|

closed

|

2022-01-16T08:50:39Z

|

2022-01-28T01:21:25Z

|

https://github.com/521xueweihan/HelloGitHub/issues/2078

|

[

"已发布",

"Python 项目"

] |

lonnywong

| 1

|

DistrictDataLabs/yellowbrick

|

scikit-learn

| 1,014

|

Using your own models with yellow brick

|

**Describe the issue**

If I create my own clustering algorithm that follows the scikit-learn API pattern, is there anything I need to know so that users can apply this package's clustering visualizers to my own scikit-learn-like models?

|

closed

|

2020-01-29T22:48:21Z

|

2020-02-26T14:28:46Z

|

https://github.com/DistrictDataLabs/yellowbrick/issues/1014

|

[

"type: question"

] |

achapkowski

| 3

|

fa0311/TwitterInternalAPIDocument

|

graphql

| 660

|

Any idea how long each guest token is valid for?

|

I am using endpoints that can be viewed in incognito mode. I see that each IP has a limit of 95 requests per 13-minute window. Does anyone know how long a guest token remains valid before requests start returning 403?

I am caching the guest token to minimize requests, but in production I started getting 403 errors after some time.

Any idea, @fa0311?
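
Since the guest-token lifetime is not documented here, a defensive client can cache the token for a guessed maximum age and also discard it as soon as a request returns 403. A minimal sketch under those assumptions: `fetch_token`, `send_request`, and the `max_age` value are hypothetical placeholders, not part of any Twitter/X API.

```python
import time
from typing import Callable, Optional


class GuestTokenCache:
    """Cache a token; refresh it after max_age seconds or after a 403."""

    def __init__(self, fetch_token: Callable[[], str], max_age: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch_token    # hypothetical: obtains a fresh guest token
        self._max_age = max_age      # a guess, since the real lifetime is unknown
        self._clock = clock
        self._token: Optional[str] = None
        self._fetched_at = 0.0

    def get(self) -> str:
        now = self._clock()
        if self._token is None or now - self._fetched_at >= self._max_age:
            self._token = self._fetch()
            self._fetched_at = now
        return self._token

    def invalidate(self) -> None:
        """Drop the cached token so the next get() fetches a new one."""
        self._token = None


def call_with_token(cache: GuestTokenCache, send_request: Callable[[str], int],
                    retries: int = 1) -> int:
    """send_request(token) returns an HTTP status; retry with a fresh token on 403."""
    for _ in range(retries + 1):
        status = send_request(cache.get())
        if status != 403:
            return status
        cache.invalidate()
    return status
```

Logging the time between fetching a token and its first 403 would also give an empirical estimate of its lifetime.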

|

open

|

2024-10-14T04:49:27Z

|

2024-10-14T07:53:56Z

|

https://github.com/fa0311/TwitterInternalAPIDocument/issues/660

|

[] |

abhranil26

| 3

|

httpie/cli

|

python

| 1,402

|

can httpie support JSON5 (JSON for Humans) input?

|

## Checklist

- [x] I've searched for similar feature requests.

---

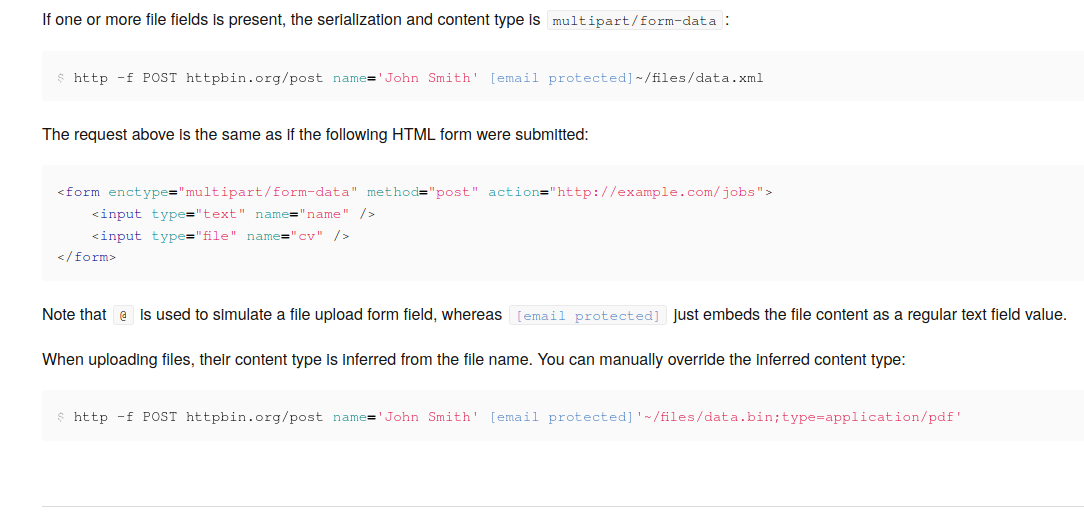

## Enhancement request

I tried calling httpie with a POST body that contains a raw JavaScript object literal:

`http <url> metrics:='[{name: "activeUsers"}]'`

and got this error:

`'metrics:=[{name: "activeUsers"}]': Expecting property name enclosed in double quotes: line 1 column 3 (char 2)`

That is expected, since the value is not valid JSON.

However, I've always thought tools like `httpie` could (or should) accept more formats for flexibility. There is a project for exactly that: JSON5 (https://json5.org/), "JSON for Humans". It adds many features, and some would be especially useful for `httpie`:

1. Object keys may be single-quoted, or unquoted if they are a valid ECMAScript 5.1 IdentifierName, as in my example `metrics:='[{name: "activeUsers"}]'`. I hope `httpie` can recognize this and automatically translate it to valid JSON.

2. Strings may be single-quoted.

3. Numbers may be hexadecimal (e.g. `0x1F`). Exponent notation such as `1e3` or `28e6` is already valid in standard JSON.

## Additional information, screenshots, or code examples

JSON5 Python libraries:

1. https://pypi.org/project/json5/

2. https://pyjson5.readthedocs.io/
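
A CLI could keep strict JSON as the default and fall back to a JSON5 parser only when strict parsing fails. The sketch below assumes the optional third-party `json5` package from PyPI (the first library listed above) and its `json5.loads` function; `parse_raw_json` is a hypothetical helper, not part of httpie. Without `json5` installed, it reproduces the standard-library error quoted above.

```python
import json

try:
    import json5  # optional third-party package: https://pypi.org/project/json5/
except ImportError:
    json5 = None


def parse_raw_json(value: str):
    """Parse a `:=` value as strict JSON, falling back to JSON5 if it is installed."""
    try:
        return json.loads(value)
    except json.JSONDecodeError as strict_error:
        if json5 is None:
            raise
        try:
            return json5.loads(value)
        except ValueError:
            # Report the strict-JSON error, which users are more likely to recognize
            raise strict_error from None
```

Trying strict JSON first keeps today's behaviour for valid input; only values that would currently fail are reinterpreted.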

|

open

|

2022-05-16T07:35:20Z

|

2022-05-16T07:52:38Z

|

https://github.com/httpie/cli/issues/1402

|

[

"enhancement",

"needs product design"

] |

tx0c

| 1

|

trevorstephens/gplearn

|

scikit-learn

| 32

|

Include logic regression

|

New estimator, needs much more research to see how/if it fits into `gplearn`'s API. No milestone yet. [Citation](http://kooperberg.fhcrc.org/logic/documents/logic-regression.pdf)

Add boolean/logical functions, conditional functions, and the ability to accept a binary input dataset.

|

closed

|

2017-04-27T10:29:19Z

|

2020-02-13T11:32:51Z

|

https://github.com/trevorstephens/gplearn/issues/32

|

[

"enhancement"

] |

trevorstephens

| 3

|

django-oscar/django-oscar

|

django

| 3,921

|

Unable to access oscar on https://example.com:8443/oscar

|

### Issue Summary

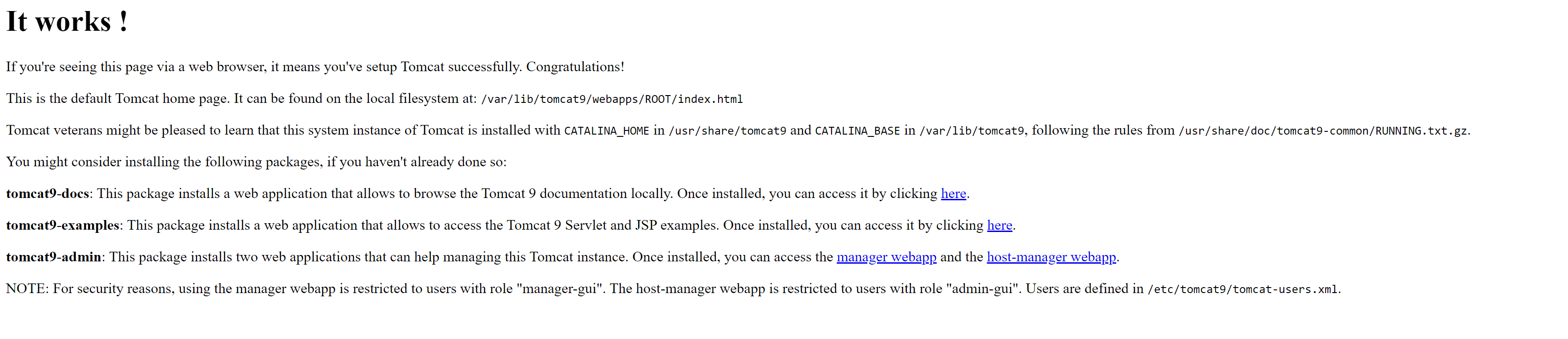

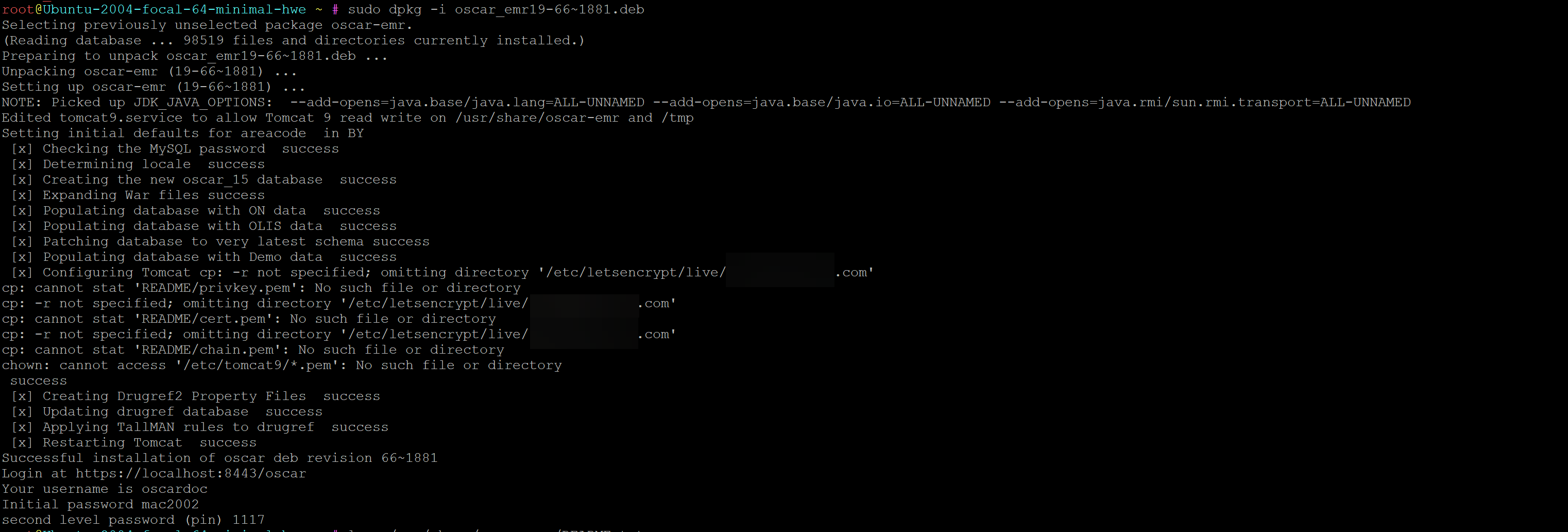

I followed [this guide](https://worldoscar.org/knowledge-base/oscar-19-installation/?epkb_post_type_1=oscar-19-installation) and I managed to install the most recent version [oscar_emr19-66~1881.deb](https://sourceforge.net/projects/oscarmcmaster/files/Oscar%20Debian%2BUbuntu%20deb%20Package/oscar_emr19-66~1881.deb/download) on Ubuntu 22.04. After a successful installation, I was able to arrive at the below stages:

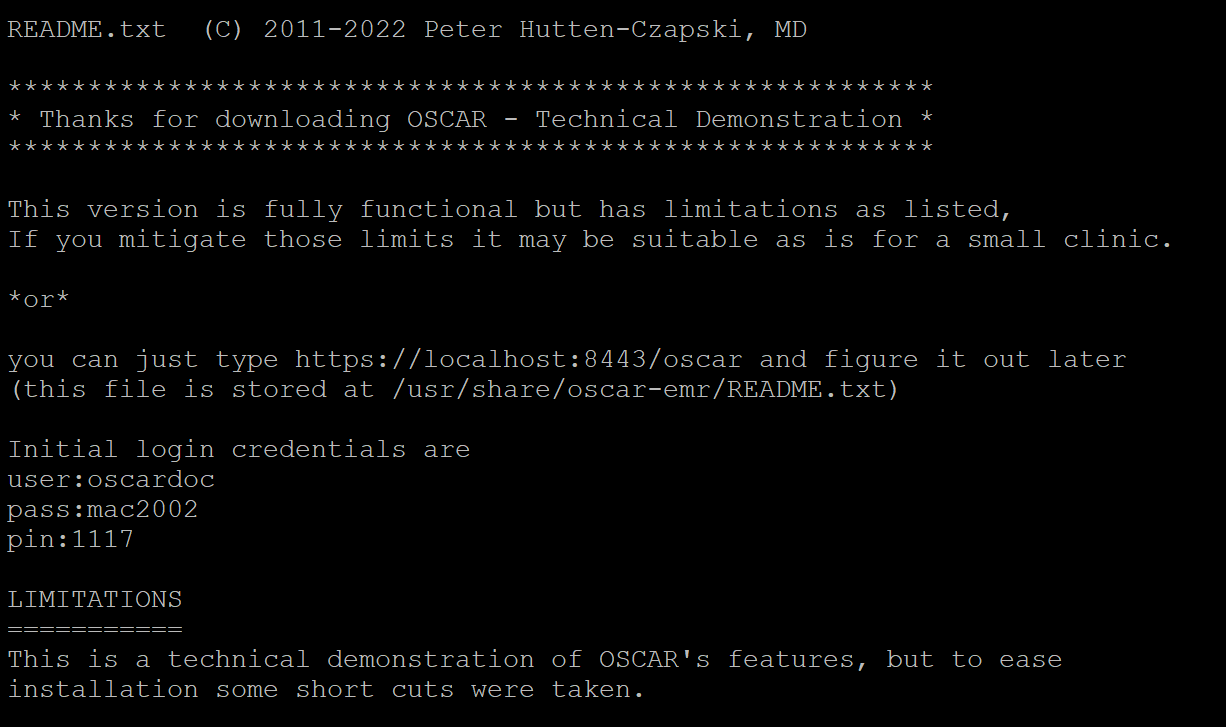

When I `run less /usr/share/oscar-emr/README.txt` , I got:

### The problem

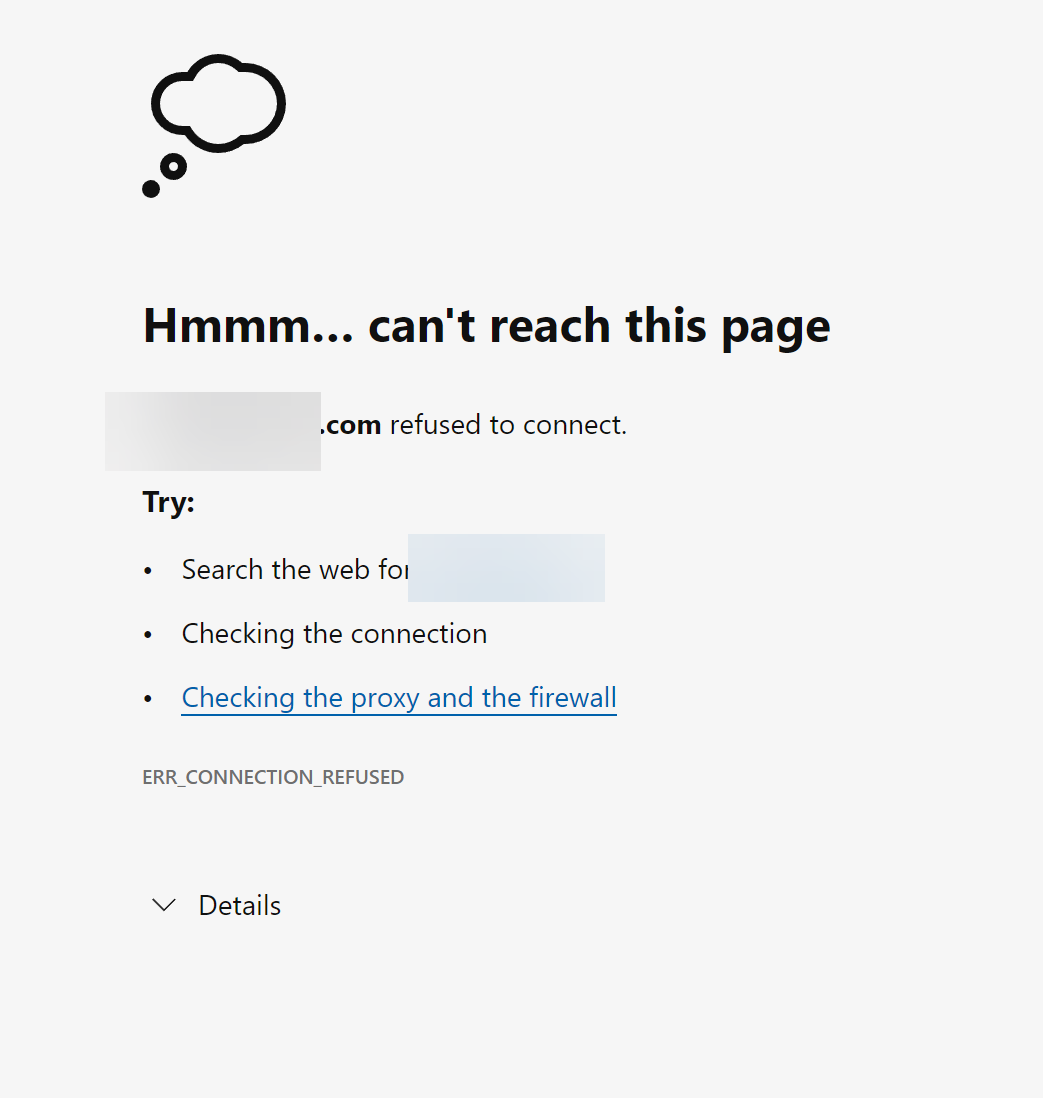

When I visited https://[example.com](https://example.com:8443/oscar):8443/oscar I got:

### Technical details

```
java -version
openjdk version "11.0.15" 2022-04-19
OpenJDK Runtime Environment (build 11.0.15+10-Ubuntu-0ubuntu0.20.04.1)
OpenJDK 64-Bit Server VM (build 11.0.15+10-Ubuntu-0ubuntu0.20.04.1, mixed mode, sharing)
```

* Python version: When I run `python --version`, I got: `-bash: python: command not found`. But `pip3 --version` returned `pip 20.0.2 from /usr/lib/python3/dist-packages/pip (python 3.8)`

* Django version: When I run `pip show django | grep Version`, I got `WARNING: Package(s) not found: django`

* Oscar version: When I run `pip show django-oscar | grep Version`, I got `WARNING: Package(s) not found: django-oscar`

### Help needed

How can I fix my installation to get this up and running?

|

closed

|

2022-05-01T16:35:58Z

|

2022-05-02T04:03:02Z

|

https://github.com/django-oscar/django-oscar/issues/3921

|

[] |

jessicana

| 1

|

dgtlmoon/changedetection.io

|

web-scraping

| 2,174

|

[feature] Sort tags / groups by alphabet

|

**Version and OS**

0.45.14 on Linux/Docker

**Is your feature request related to a problem? Please describe.**

To keep track of my watch jobs, I added tags / groups to them. I now use 14 tags, and they are sorted by creation date.

**Describe the solution you'd like**

It would be helpful if the tags were sorted alphabetically automatically, or if we at least had the ability to sort them manually.
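
Alphabetical ordering could be applied wherever the tag list is rendered. A minimal sketch, assuming a hypothetical `{uuid: {"title": ...}}` mapping rather than changedetection.io's actual datastore layout; `casefold()` makes the order case-insensitive, and the uuid breaks ties between identical titles.

```python
from typing import Dict, List, Tuple


def sort_tags_alphabetically(tags: Dict[str, dict]) -> List[Tuple[str, dict]]:
    """Return (uuid, tag) pairs ordered by tag title, ignoring case.

    The {uuid: {"title": ...}} shape is an assumption for illustration.
    """
    return sorted(
        tags.items(),
        key=lambda item: (item[1].get("title", "").casefold(), item[0]),
    )
```

Manual ordering would instead need a stored position per tag, which is a larger change than sorting at display time.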

**Describe the use-case and give concrete real-world examples**

<img width="714" alt="Bildschirmfoto 2024-02-10 um 09 20 11" src="https://github.com/dgtlmoon/changedetection.io/assets/3201804/ccf5be77-34a3-4385-9519-a5e1bd8b5b91">

<img width="694" alt="Bildschirmfoto 2024-02-10 um 09 20 42" src="https://github.com/dgtlmoon/changedetection.io/assets/3201804/c84d57d9-23c5-4e21-b6a2-1dd7aaa395a1">

**Additional context**

BTW, isn't "tags" a better term here than "groups"? In my experience, an item can have multiple tags attached but can only be in one group at a time.

|

closed

|

2024-02-10T08:21:00Z

|

2024-03-10T10:10:57Z

|

https://github.com/dgtlmoon/changedetection.io/issues/2174

|

[

"enhancement"

] |

plangin

| 3

|

deezer/spleeter

|

deep-learning

| 186

|

Please help, I'm getting this

|

|

closed

|

2019-12-16T22:48:17Z

|

2019-12-18T14:18:10Z

|

https://github.com/deezer/spleeter/issues/186

|

[

"bug",

"invalid"

] |

excel77

| 1

|

predict-idlab/plotly-resampler

|

data-visualization

| 60

|

`FigureResampler` replace not working as it should when using a `go.Figure`

|

|

closed

|

2022-05-16T07:44:20Z

|

2022-05-16T15:34:17Z

|

https://github.com/predict-idlab/plotly-resampler/issues/60

|

[

"bug"

] |

jonasvdd

| 1

|

robotframework/robotframework

|

automation

| 4,803

|

Async support to dynamic and hybrid library APIs

|

For our library we use a framework that is async, so we have to await results.

Our library works similarly to the Remote library in how it gets keywords and arguments, but communication is done using asyncio.

With RF 6.1, `run_keyword` can be made async, but the functions for getting keywords, arguments, etc. cannot.

As a workaround, I'm getting the context with `EXECUTION_CONTEXTS.current` and running async operations with `context.asynchronous.run_until_complete()`.

This works, but if the implementation of `EXECUTION_CONTEXTS` changes, the library will break.

Would it be possible to add async support to the `get_*` functions in the dynamic and hybrid library interfaces?
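
Until the dynamic API accepts async `get_*` methods, one workaround that avoids `EXECUTION_CONTEXTS` is to keep those methods synchronous and run the coroutine to completion on a private event loop in a helper thread. The class below is a hypothetical sketch, with `_fetch_keywords` standing in for the real asyncio communication. The trade-off: the coroutine runs on a different loop from the one Robot Framework uses for async keywords, so asyncio objects bound to that loop (connections, locks, futures) cannot be shared with it.

```python
import asyncio
from concurrent.futures import ThreadPoolExecutor


class AsyncBackedLibrary:
    """Hypothetical dynamic library whose keyword metadata comes from an async backend."""

    def __init__(self):
        # One helper thread that runs short-lived event loops for the get_* calls
        self._executor = ThreadPoolExecutor(max_workers=1)

    async def _fetch_keywords(self):
        # Stand-in for the real asyncio communication with the backend
        await asyncio.sleep(0)
        return {"Say Hello": ["name"], "Add": ["a", "b"]}

    def _run(self, coro):
        # asyncio.run() creates and closes a fresh event loop in the helper thread,
        # so this works even when the calling thread already has a running loop
        return self._executor.submit(asyncio.run, coro).result()

    def get_keyword_names(self):
        return list(self._run(self._fetch_keywords()))

    def get_keyword_arguments(self, name):
        return self._run(self._fetch_keywords())[name]
```

The calling thread blocks while the helper thread works, which is acceptable for metadata lookups that happen once at library import.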

|

closed

|

2023-06-22T09:26:53Z

|

2023-11-27T12:28:17Z

|

https://github.com/robotframework/robotframework/issues/4803

|

[

"enhancement",

"priority: high",

"alpha 2",

"acknowledge"

] |

WisniewskiP

| 9

|

autogluon/autogluon

|

scikit-learn

| 4,900

|

[tabular] Add `num_cpus`, `num_gpus` to `predictor.predict`

|

Related: #4871

We should add ways for users to control `num_cpus` and `num_gpus` during model inference.

This also ties into adding parallel inference support.

|

open

|

2025-02-17T21:05:37Z

|

2025-02-17T21:05:37Z

|

https://github.com/autogluon/autogluon/issues/4900

|

[

"enhancement",

"module: tabular"

] |

Innixma

| 0

|

plotly/dash

|

data-visualization

| 2,765

|

When moving the cursor, it will sometimes get stuck

|

**Describe your context**

Please provide us your environment, so we can easily reproduce the issue.

16-core / 32-thread dual-CPU server, with the view set to show all cores

Running in Docker on Ubuntu Server

- if frontend related, tell us your Browser, Version and OS

- OS: Windows

- Browser: Chrome

- Version: 123.0.6308.0

**Describe the bug**

When moving the cursor around on the preview, especially on machines with many cores/threads, the cursor sometimes gets stuck.

Video attached.

**Expected behavior**

The graph cursor should follow the mouse and not stay at a previous position.

**Screenshots**

If applicable, add screenshots or screen recording to help explain your problem.

https://github.com/plotly/dash/assets/46653946/3576cae4-67ba-4d46-9b21-7af5febac7b3

|

closed

|

2024-02-18T19:45:58Z

|

2024-05-31T20:09:58Z

|

https://github.com/plotly/dash/issues/2765

|

[

"bug",

"sev-2"

] |

Spillebulle

| 1

|

deeppavlov/DeepPavlov

|

nlp

| 1,008

|

Readme for /examples

|

Please add a README with a short description of the examples provided in

https://github.com/deepmipt/DeepPavlov/tree/master/examples

Please also add links to other resources for learning DeepPavlov to the README:

https://github.com/deepmipt/dp_tutorials

https://github.com/deepmipt/dp_notebooks

|

closed

|

2019-09-21T10:24:24Z

|

2019-09-26T13:54:55Z

|

https://github.com/deeppavlov/DeepPavlov/issues/1008

|

[] |

DeepPavlovAdmin

| 1

|

vitalik/django-ninja

|

rest-api

| 818

|

How to create Generic Schema for openapi?

|

### Discussed in https://github.com/vitalik/django-ninja/discussions/817

<div type='discussions-op-text'>

<sup>Originally posted by **suuperhu** August 8, 2023</sup>

**I have the following piece of code, but there is a problem with openapi page, how should I solve it?**

_Code environment:_

- ubuntu 20.04
- python 3.10.11
- django 3.2.15
- django-ninja 0.22.2
- pydantic 1.10.12

```python
from typing import TypeVar, Generic, List

from django.http import HttpRequest
from ninja import Router, Query, Schema
from pydantic import Field

T1 = TypeVar('T1')
T2 = TypeVar('T2')

router = Router()


class Params(Schema):
    name: str
    age: int = Field(0, ge=0, le=120)


class Response(Schema, Generic[T1, T2]):
    code: int
    data: T1
    message: T2


class Data(Schema):
    a: int
    b: List[str]


@router.get('demo/', response=Response[Data, str])
def list_level(request: HttpRequest, params: Params = Query(...)):
    return {
        'code': 200,
        'data': {'a': 1, 'b': ['a', 'b', 'c']},
        'message': 'good'
    }
```

The openapi page:

So, how can I make the OpenAPI page's 200 response look like this:

```json
{
  "code": 0,
  "data": {
    "a": 0,
    "b": [
      "string",
      "string",
      "..."
    ]
  },
  "message": "string"
}
```

</div>

|

closed

|

2023-08-08T05:04:16Z

|

2023-08-08T06:46:52Z

|

https://github.com/vitalik/django-ninja/issues/818

|

[] |

hushoujier

| 1

|

huggingface/datasets

|

nlp

| 7,041

|

`sort` after `filter` unreasonably slow

|

### Describe the bug

As the title says: calling `sort` after `filter` is unreasonably slow.

### Steps to reproduce the bug

On its own, `sort` runs at normal speed:

```python
from datasets import Dataset
import random

nums = [{"k": random.choice(range(0, 1000))} for _ in range(100000)]
ds = Dataset.from_list(nums)

print("start sort")
ds = ds.sort("k")
print("finish sort")
```

But `sort` after `filter` is extremely slow:

```python
from datasets import Dataset
import random

nums = [{"k": random.choice(range(0, 1000))} for _ in range(100000)]
ds = Dataset.from_list(nums)
ds = ds.filter(lambda x: x > 100, input_columns="k")

print("start sort")
ds = ds.sort("k")
print("finish sort")
```

### Expected behavior

Is this a bug, or is it a misuse of the `sort` function?

### Environment info

- `datasets` version: 2.20.0

- Platform: Linux-3.10.0-1127.19.1.el7.x86_64-x86_64-with-glibc2.17

- Python version: 3.10.13

- `huggingface_hub` version: 0.23.4

- PyArrow version: 16.1.0

- Pandas version: 2.2.2

- `fsspec` version: 2023.10.0

|

open

|

2024-07-12T03:29:27Z

|

2024-07-22T13:55:17Z

|

https://github.com/huggingface/datasets/issues/7041

|

[] |

Tobin-rgb

| 1

|

piskvorky/gensim

|

machine-learning

| 2,873

|

Further focus/slim keyedvectors.py module

|

Pre-#2698, `keyedvectors.py` was 2500+ lines, including functionality over-specific to other models, & redundant classes. Post-#2698, with some added generic functionality, it's still over 1800 lines.

It should shed some other grab-bag utility functions that have accumulated, & don't logically fit inside the `KeyedVectors` class.

In particular, the evaluation (analogies, word_ranks) helpers could move to their own module that takes a KV instance as an argument. (If other more-sophisticated evaluations can be contributed, as would be welcome, they should also live alongside those, rather than bloating `KeyedVectors`.)

The `get_keras_embedding` method could go elsewhere too, since its utility is narrow and it depends on a package that is not necessarily installed: either to a Keras-focused utilities module, or even just to documentation/example code showing how to convert between `KeyedVectors` and Keras.

Some of the more advanced word-vector-**using** calculations, like 'Word Mover's Distance' or 'Soft Cosine Similarity', could move to method-specific modules that are then better documented/self-contained/optimized, without bloating the generic 'set of vectors' module. (They might also be more discoverable there.)

And finally, some of the existing calculations could be unified/streamlined (especially the two variants of `most_similar()`, and some of the steps shared by multiple operations). My hope would be the module is eventually <1000 lines.

|

open

|

2020-07-06T20:00:39Z

|

2021-03-09T07:59:52Z

|

https://github.com/piskvorky/gensim/issues/2873

|

[] |

gojomo

| 8

|

3b1b/manim

|

python

| 1,442

|

Error installing manim and running a test program

|

### Describe the error

<!-- A clear and concise description of what you want to make. -->

I installed ffmpeg from the APT package manager and PyOpenGL from pip3 in a virtual environment. Then, when I tried to install manim in the same virtual environment, it failed with the error shown under **Error** below.

However, at the end it reported that it had successfully installed manim and its dependencies.

Then I tried to run a simple program to see if it worked:

But it just keeps running without any errors, as if it were frozen.

### Code and Error

**Code**:

<!-- The code you run -->

```python
from manimlib import *


class SquareImage(Scene):
    def construct(self):
        square = Square()
        self.add(square)
```

```
manimgl manim_test.py SquareImage -s
```

**Error**:

<!-- The error traceback you get when run your code -->

```
ERROR: Command errored out with exit status 1:
 command: /home/karan/manim/venv/bin/python /home/karan/manim/venv/lib/python3.8/site-packages/pip/_vendor/pep517/_in_process.py get_requires_for_build_wheel /tmp/tmp8troxa62
     cwd: /tmp/pip-install-vtkpor5m/manimpango_d759c30457a744afbb343d792b24d300
Complete output (33 lines):
Package pangocairo was not found in the pkg-config search path.
Perhaps you should add the directory containing `pangocairo.pc'
to the PKG_CONFIG_PATH environment variable
No package 'pangocairo' found
Traceback (most recent call last):
  File "setup.py", line 124, in check_min_version
    check_call(command, stdout=subprocess.DEVNULL)
  File "/usr/lib/python3.8/subprocess.py", line 364, in check_call
    raise CalledProcessError(retcode, cmd)
subprocess.CalledProcessError: Command '['pkg-config', '--print-errors', '--atleast-version', '1.30.0', 'pangocairo']' returned non-zero exit status 1.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/home/karan/manim/venv/lib/python3.8/site-packages/pip/_vendor/pep517/_in_process.py", line 280, in <module>
    main()
  File "/home/karan/manim/venv/lib/python3.8/site-packages/pip/_vendor/pep517/_in_process.py", line 263, in main
    json_out['return_val'] = hook(**hook_input['kwargs'])
  File "/home/karan/manim/venv/lib/python3.8/site-packages/pip/_vendor/pep517/_in_process.py", line 114, in get_requires_for_build_wheel
    return hook(config_settings)
  File "/tmp/pip-build-env-vytjtzxg/overlay/lib/python3.8/site-packages/setuptools/build_meta.py", line 149, in get_requires_for_build_wheel
    return self._get_build_requires(
  File "/tmp/pip-build-env-vytjtzxg/overlay/lib/python3.8/site-packages/setuptools/build_meta.py", line 130, in _get_build_requires
    self.run_setup()
  File "/tmp/pip-build-env-vytjtzxg/overlay/lib/python3.8/site-packages/setuptools/build_meta.py", line 253, in run_setup
    super(_BuildMetaLegacyBackend,
  File "/tmp/pip-build-env-vytjtzxg/overlay/lib/python3.8/site-packages/setuptools/build_meta.py", line 145, in run_setup
    exec(compile(code, __file__, 'exec'), locals())
  File "setup.py", line 190, in <module>
    _pkg_config.check_min_version(MINIMUM_PANGO_VERSION)
  File "setup.py", line 127, in check_min_version
    raise RequiredDependencyException(f"{self.name} >= {version} is required")
__main__.RequiredDependencyException: pangocairo >= 1.30.0 is required
----------------------------------------
```
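
The traceback shows manimpango's `setup.py` running `pkg-config --print-errors --atleast-version 1.30.0 pangocairo` and failing because no `pangocairo.pc` file is found. The same check can be run before retrying the install. On Debian-based systems such as Linux Mint, that file is typically provided by the Pango development package (for example `libpango1.0-dev`), but treat the exact package name as an assumption for your release. `has_pangocairo` is a hypothetical helper name.

```python
import shutil
import subprocess


def has_pangocairo(min_version: str = "1.30.0") -> bool:
    """Return True if pkg-config reports pangocairo >= min_version.

    Mirrors the check from the traceback; returns False when pkg-config
    itself is not installed.
    """
    pkg_config = shutil.which("pkg-config")
    if pkg_config is None:
        return False
    result = subprocess.run(
        [pkg_config, "--atleast-version", min_version, "pangocairo"],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


if __name__ == "__main__":
    if has_pangocairo():
        print("pangocairo found: the manimpango version check should pass")
    else:
        print("pangocairo >= 1.30.0 not found via pkg-config")
```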

### Environment

**OS System**: Linux Mint

**manim version**: master <!-- make sure you are using the latest version of master branch -->

**python version**: 3.8.5

|

open

|

2021-03-21T09:19:49Z

|

2022-06-13T16:52:39Z

|

https://github.com/3b1b/manim/issues/1442

|

[] |

kkin1995

| 6

|

tensorlayer/TensorLayer

|

tensorflow

| 680

|

Failed: TensorLayer (bfffb588)

|

*Sent by Read the Docs (readthedocs@readthedocs.org). Created by [fire](https://fire.fundersclub.com/).*

**TensorLayer build #7291120**

Build failed for TensorLayer (latest).

You can find out more about this failure here:

[TensorLayer build #7291120](https://readthedocs.org/projects/tensorlayer/builds/7291120/) - failed

If you have questions, a good place to start is the FAQ:

<https://docs.readthedocs.io/en/latest/faq.html>

You can unsubscribe from these emails in your [Notification Settings](https://readthedocs.org/dashboard/tensorlayer/notifications/)

Keep documenting,

Read the Docs

<https://readthedocs.org>

|

closed

|

2018-06-04T14:24:24Z

|

2018-06-04T14:56:34Z

|

https://github.com/tensorlayer/TensorLayer/issues/680

|

[] |

fire-bot

| 0

|

RayVentura/ShortGPT

|

automation

| 70

|

🐛 [Bug]:

|

### What happened?

Step 9 _prepareBackgroundAssets

{'voiceover_audio_url': '.editing_assets/reddit_shorts_assets/b32f7d0a87944aa99dd1b826/audio_voice.wav', 'video_duration': None, 'background_video_url': 'https://rr3---sn-npoe7nez.googlevideo.com/videoplayback?expire=1690975098&ei=GufJZN7mJaei9fwPveCisA8&ip=194.156.163.133&id=o-AC0PipqKARrGGjkNooYfuCxs-noXKlpCEhSXMUGnVmtJ&itag=335&source=youtube&requiressl=yes&mh=ww&mm=31%2C26&mn=sn-npoe7nez%2Csn-ntqe6n76&ms=au%2Conr&mv=m&mvi=3&pl=24&initcwndbps=70318750&vprv=1&svpuc=1&mime=video%2Fwebm&gir=yes&clen=463772648&dur=545.611&lmt=1629834060752995&mt=1690953169&fvip=5&keepalive=yes&fexp=24007246%2C51000011%2C51000023&c=IOS&txp=5511222&sparams=expire%2Cei%2Cip%2Cid%2Citag%2Csource%2Crequiressl%2Cvprv%2Csvpuc%2Cmime%2Cgir%2Cclen%2Cdur%2Clmt&sig=AOq0QJ8wRgIhANUzWaBjEcKzgNA2yiHX_42W4mkpVTLPv_64hiw9laDRAiEA2VYtKYeThTpaZIHsqSbBU8hsPz9Rkpwyb5_VXocQfDE%3D&lsparams=mh%2Cmm%2Cmn%2Cms%2Cmv%2Cmvi%2Cpl%2Cinitcwndbps&lsig=AG3C_xAwRgIhAKQyMfTyaa5QifrExwyXHxaomTdA5wP4q5aICytBfRuFAiEAn7pYfppbDvlnRTNZQfRstO3gD894BpmVRo1fPKp8am0%3D', 'music_url': 'public/Music dj quads.wav'}

    Error File "C:\Users\EDY\Desktop\c\shortgpt\gui\short_automation_ui.py", line 107, in create_short
        for step_num, step_info in shortEngine.makeContent():
      File "C:\Users\EDY\Desktop\c\shortgpt\shortGPT\engine\abstract_content_engine.py", line 70, in makeContent
        self.stepDict[currentStep]()
      File "C:\Users\EDY\Desktop\c\shortgpt\shortGPT\engine\content_short_engine.py", line 97, in _prepareBackgroundAssets
        self.verifyParameters(
      File "C:\Users\EDY\Desktop\c\shortgpt\shortGPT\engine\abstract_content_engine.py", line 55, in verifyParameters
        raise Exception(f"Parameter :{key} is null")

### What type of browser are you seeing the problem on?

Chrome

### What type of Operating System are you seeing the problem on?

Windows

### Python Version

3.10

### Application Version

v0.0.1

### Expected Behavior


### Error Message

_No response_

### Code to produce this issue.

_No response_

### Screenshots/Assets/Relevant links

_No response_

|

open

|

2023-08-02T05:37:02Z

|

2023-08-02T05:37:02Z

|

https://github.com/RayVentura/ShortGPT/issues/70

|

[

"bug"

] |

rpp-Little-pig

| 0

|

raphaelvallat/pingouin

|

pandas

| 209

|

pairwise_nonparametric()

|

Hi, I was looking for non-parametric pairwise tests, and only after some time, via the flowcharts, did I find the `parametric=False` parameter of `pairwise_ttests()`. This seems confusing to me. I'd suggest adding a `pairwise_nonparametric()` function for this purpose, mainly for discoverability and clarity. Also, please note that the docstring of `pairwise_ttests()` just says "Pairwise T-tests." I'd be happy to prepare a PR if that helps.

|

closed

|

2021-11-10T10:45:33Z

|

2022-03-12T23:51:51Z

|

https://github.com/raphaelvallat/pingouin/issues/209

|

[

"docs/testing :book:",

"IMPORTANT❗"

] |

michalkahle

| 5

|

ymcui/Chinese-BERT-wwm

|

nlp

| 146

|

Could you add the MLM weights for RoBERTa-wwm-ext-large?

|

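Many studies now show that the MLM head is actually a quite useful language model in its own right, not something whose role is limited to pre-training. So could you please add the MLM weights?

What I understand least is this: dropping the MLM weights would be one thing, but why put randomly initialized weights there instead? Isn't that misleading?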

|

closed

|

2020-09-21T06:22:18Z

|

2020-09-21T07:01:24Z

|

https://github.com/ymcui/Chinese-BERT-wwm/issues/146

|

[

"wontfix"

] |

bojone

| 1

|

newpanjing/simpleui

|

django

| 43

|

No full-screen/shrink toggle after collapsing the left sidebar

|

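**Bug description**

Briefly describe the bug you encountered:

**Steps to reproduce**

1.

2.

3.

**Environment**

1. Operating system:

2. Python version:

3. Django version:

4. simpleui version:

**Additional description**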

|

closed

|

2019-05-21T01:38:45Z

|

2019-05-21T02:30:57Z

|

https://github.com/newpanjing/simpleui/issues/43

|

[

"bug"

] |

Qianzujin

| 1

|

tqdm/tqdm

|

pandas

| 749

|

Logging from separate threads pushes tqdm bar up

|

Versions I'm using:

```
tqdm = 4.31.1
python = 3.7.3
OS: Ubuntu 19.04 (Linux: 5.0)
```

If we process a list of elements with multiprocessing (say, applying `f` to the list with `pool.imap_unordered`) and log a message from within `f`, the progress bar is pushed up for each message logged.

An example script that produces this behavior:

```python
import logging
import multiprocessing
import time

import tqdm


# Set up a logging handler that writes through tqdm so the bar is redrawn
class TqdmLoggingHandler(logging.StreamHandler):
    def __init__(self, stream=None):
        super().__init__(stream)

    def emit(self, record):
        try:
            msg = self.format(record)
            tqdm.tqdm.write(msg)
            self.flush()
        except (KeyboardInterrupt, SystemExit):
            raise
        except Exception:
            self.handleError(record)


logger = logging.getLogger()
logger.setLevel(logging.INFO)
logger.handlers = []
logger.addHandler(TqdmLoggingHandler())


def func_mult(x):
    logger.info('processing %s', x)  # <- log from a pool worker
    time.sleep(1)
    return x


if __name__ == "__main__":
    array = list(range(6))
    with multiprocessing.Pool(2) as pool:
        for xx in tqdm.tqdm(
                pool.imap_unordered(func_mult, array),
                total=len(array)):
            logger.info('getting result: %s', xx)  # <- log from current thread
```

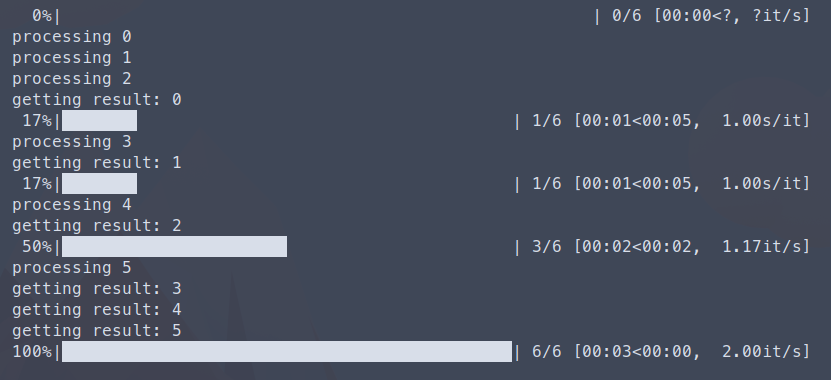

This is the output I get:

Note that the `getting result` messages are printed correctly (above the bar), but the `processing` messages issued from other threads don't respect the bar and are added to the bottom of the screen instead.

Is this behavior expected, or should log messages issued from other threads respect tqdm?
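`multiprocessing.Pool` runs `func_mult` in separate worker processes, not threads. Each worker therefore calls `tqdm.write` on its own copy of tqdm, which knows nothing about the bar the parent draws. One common workaround, not confirmed in this thread, is to send worker log records back to the parent through a queue with the stdlib `QueueHandler`/`QueueListener`, so that only the parent writes to the terminal. A minimal sketch follows. `worker_init`, `work` and `run` are hypothetical names, and in the script above `main_handler` would be the `TqdmLoggingHandler` instance.

```python
import logging
import logging.handlers
import multiprocessing


def worker_init(queue):
    """Pool initializer: replace this worker's root handlers with a QueueHandler."""
    root = logging.getLogger()
    root.handlers = [logging.handlers.QueueHandler(queue)]
    root.setLevel(logging.INFO)


def work(x):
    # Logged in the worker, but emitted by the parent's handler via the queue.
    logging.getLogger(__name__).info("processing %s", x)
    return x


def run(items, main_handler, processes=2):
    """Map `work` over `items` while the parent process emits all worker logs."""
    queue = multiprocessing.Queue()
    listener = logging.handlers.QueueListener(queue, main_handler)
    with multiprocessing.Pool(processes, initializer=worker_init, initargs=(queue,)) as pool:
        listener.start()  # started after the workers are created
        try:
            results = list(pool.imap_unordered(work, items))
            # Let workers exit cleanly so their queued records are flushed first.
            pool.close()
            pool.join()
        finally:
            listener.stop()  # handles records still in the queue, then stops
    return results


if __name__ == "__main__":
    handler = logging.StreamHandler()  # use TqdmLoggingHandler() in the real script
    print(sorted(run(range(6), handler)))
```

Because the listener thread runs in the parent, `tqdm.write` is always called by the process that owns the bar.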

|

open

|

2019-05-23T12:51:57Z

|

2019-06-17T16:43:02Z

|

https://github.com/tqdm/tqdm/issues/749

|

[

"p3-enhancement 🔥",

"help wanted 🙏",

"need-feedback 📢",

"p2-bug-warning ⚠",

"synchronisation ⇶"

] |

tupini07

| 1

|

huggingface/transformers

|

nlp

| 36,217

|

Albert does not use SDPA's Flash Attention since attention mask is always created

|

### System Info

NA

### Who can help?

@ArthurZucker

### Information

- [ ] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

https://github.com/huggingface/transformers/blob/dd16acb8a3e93b643aa374c9fb80749f5235c1a6/src/transformers/models/albert/modeling_albert.py#L772-L773

Since `AlbertModel` always creates an attention mask, SDPA dispatches the memory-efficient kernel instead of flash attention. The inner classes (`AlbertTransformer`, `AlbertLayerGroup`, ...) seem to handle `attention_mask=None` correctly, so only the outermost class is problematic.

### Expected behavior

Flash attention should be used when attention_mask=None

|

closed

|

2025-02-15T15:40:58Z

|

2025-02-16T02:36:30Z

|

https://github.com/huggingface/transformers/issues/36217

|

[

"bug"

] |

gau-nernst

| 1

|

plotly/dash-component-boilerplate

|

dash

| 62

|

capitalize component name

|

Hi, when creating a new project, the template asks for `component_name`. If I give a name that starts with a lowercase letter, it creates a React component with a lowercase name. That goes against React conventions, because React component names must start with an uppercase letter.
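One way to handle this is to normalize the answer to PascalCase before it is used, for example in a pre-generation hook. The helper below is hypothetical, not the boilerplate's actual hook code. It splits on any non-alphanumeric separator and capitalizes the first letter of each part, leaving the rest of each part unchanged.

```python
import re


def to_component_name(raw: str) -> str:
    """Normalize user input such as 'my_component' to a PascalCase React name."""
    parts = re.split(r"[^0-9A-Za-z]+", raw.strip())
    name = "".join(part[:1].upper() + part[1:] for part in parts if part)
    if not name or not name[0].isalpha():
        raise ValueError(f"invalid component name: {raw!r}")
    return name


print(to_component_name("my_component"))  # MyComponent
print(to_component_name("myComponent"))   # MyComponent
```

Names that would still be invalid after normalization, such as ones starting with a digit, are rejected instead of being silently changed.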

|

open

|

2019-03-13T01:14:51Z

|

2019-03-13T01:14:51Z

|

https://github.com/plotly/dash-component-boilerplate/issues/62

|

[] |

rajeevmaster

| 0

|

igorbenav/FastAPI-boilerplate

|

sqlalchemy

| 138

|

DB session from a worker function?

|

Hey, I've been trying for two days now to make this work.

How do I pass the session from an endpoint to the background worker function?

My idea is to insert a record in the DB from my endpoint and then process it in the background function.

*Edit*

I made it work by creating another function with `asynccontextmanager`:

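```python
from collections.abc import AsyncIterator
from contextlib import asynccontextmanager

from sqlalchemy.ext.asyncio import AsyncSession

# local_session: the project's async session factory (its import is not shown here)


@asynccontextmanager
async def async_get_context() -> AsyncIterator[AsyncSession]:
    async_session = local_session
    async with async_session() as db:
        yield db
```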
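A minimal sketch of the same pattern, assuming the worker receives only the new record's id and opens its own session. The endpoint's request-scoped session may already be closed by the time the worker runs, and it cannot be sent to a separate worker process at all. `FakeSession`, `local_session` and `process_record` are hypothetical stand-ins so the example runs without a database.

```python
import asyncio
from contextlib import asynccontextmanager


class FakeSession:
    """Hypothetical stand-in for an SQLAlchemy AsyncSession."""

    def __init__(self):
        self.processed = []
        self.closed = False

    async def __aenter__(self):
        return self

    async def __aexit__(self, exc_type, exc, tb):
        self.closed = True
        return False


def local_session():
    """Hypothetical session factory, playing the role of the project's local_session."""
    return FakeSession()


@asynccontextmanager
async def async_get_context():
    async with local_session() as db:
        yield db


async def process_record(record_id):
    """Background job: look up and process the record inside its own session."""
    async with async_get_context() as db:
        db.processed.append(record_id)
    return db


session = asyncio.run(process_record(42))
print(session.processed, session.closed)  # [42] True
```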

|

closed

|

2024-05-21T13:16:39Z

|

2024-06-02T14:05:30Z

|

https://github.com/igorbenav/FastAPI-boilerplate/issues/138

|

[

"documentation",

"enhancement"

] |

kaStoyanov

| 4

|

ultralytics/yolov5

|

machine-learning

| 13,519

|

Detection with Torch Hub Failing

|

### Search before asking

- [x] I have searched the YOLOv5 [issues](https://github.com/ultralytics/yolov5/issues) and [discussions](https://github.com/ultralytics/yolov5/discussions) and found no similar questions.

### Question

I have an ML project on Python 3.7.8. It was working fine, but recently I have been getting an error when loading the model with `torch.hub.load`:

```
  File "C:\Users\Strange/.cache\torch\hub\ultralytics_yolov5_master\models\common.py", line 39, in <module>
    from utils.dataloaders import exif_transpose, letterbox
  File "C:\Users\Strange/.cache\torch\hub\ultralytics_yolov5_master\utils\dataloaders.py", line 776
    if mosaic := self.mosaic and random.random() < hyp["mosaic"]:
```

I know YOLOv5 requires Python >= 3.8, but all the current dependencies in my project are for 3.7.8. Please suggest a workaround that lets me keep using it.
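The failing line uses an assignment expression (`:=`). That syntax only exists in Python 3.8+, so the hub code cannot even be compiled on 3.7. Patching the cached copy is fragile, because other 3.8-only syntax may follow and the cache is replaced on re-download. Sturdier options are upgrading Python or pinning `torch.hub.load` to an older tagged release via the `owner/repo:ref` form, although no specific 3.7-compatible tag is confirmed here.

Because `:=` binds more loosely than `and`, the line is equivalent to assigning first and then testing. The hypothetical helper below shows the 3.7-compatible form:

```python
import random


def use_mosaic(mosaic_enabled, hyp, rng=random):
    """Python 3.7-compatible form of:
    if mosaic := self.mosaic and random.random() < hyp["mosaic"]:
    """
    # `:=` has the lowest precedence, so the whole `and` expression is assigned.
    mosaic = mosaic_enabled and rng.random() < hyp["mosaic"]
    return mosaic


print(use_mosaic(True, {"mosaic": 1.0}))   # True: random() is always < 1.0
print(use_mosaic(False, {"mosaic": 1.0}))  # False
```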

### Additional

_No response_

|

closed

|

2025-02-25T04:56:37Z

|

2025-02-27T23:58:53Z

|

https://github.com/ultralytics/yolov5/issues/13519

|

[

"question",

"dependencies",

"detect"

] |

llavkush

| 4

|

WeblateOrg/weblate

|

django

| 14,232

|

Expose 'add comment' API endpoint

|

### Describe the problem

We're migrating from another platform to Weblate and would like to bring our comments along. It's currently not possible to do this easily or programmatically, so we're losing valuable contributor feedback on our strings.

### Describe the solution you would like

We'd like an API endpoint for posting a comment on a given string. Matching would work as follows:

* If we provide the email address of the poster, and it exists as user on Weblate, the comment should be associated with that user.

* We'd like to provide the string key to identify the relevant string on Weblate.

### Describe alternatives you have considered

I wondered whether to request the following, but concluded that the benefits don't outweigh the (technical) challenges: If we provide a timestamp (in the past), it is maintained so that when viewing the comment in context there is additional metadata to consider whether the comment is still relevant.

### Screenshots

_No response_

### Additional context

_No response_

|

open

|

2025-03-16T11:51:08Z

|

2025-03-17T18:52:56Z

|

https://github.com/WeblateOrg/weblate/issues/14232

|

[

"enhancement",

"undecided",

"Area: API"

] |

keunes

| 2

|

graphql-python/graphene-django

|

graphql

| 1,116

|

graphene-neo4j

|

I would be glad if you could update graphene-neo4j to support Django 3.

|

closed

|

2021-02-15T19:17:27Z

|

2021-02-16T06:12:03Z

|

https://github.com/graphql-python/graphene-django/issues/1116

|

[

"🐛bug"

] |

MajidHeydari

| 1

|

3b1b/manim

|

python

| 2,097

|

Example Gallery Bug when Rendering High Quality

|

### Describe the bug

For the Gallery Example with https://docs.manim.community/en/stable/examples.html#pointwithtrace

**Code**:

```py
from manim import *


class PointWithTrace(Scene):
    def construct(self):
        path = VMobject()
        dot = Dot()
        path.set_points_as_corners([dot.get_center(), dot.get_center()])

        def update_path(path):
            previous_path = path.copy()
            previous_path.add_points_as_corners([dot.get_center()])
            path.become(previous_path)

        path.add_updater(update_path)
        self.add(path, dot)
        self.play(Rotating(dot, radians=PI, about_point=RIGHT, run_time=2))
        self.wait()
        self.play(dot.animate.shift(UP))
        self.play(dot.animate.shift(LEFT))
        self.wait()
```

**Wrong display or Error traceback**:

### Additional context

The circular arc is drawn correctly, but once it is done, the whole arc is deleted and the drawing continues as if the path had started from the origin. This doesn't happen at low quality, only at high quality.

|

open

|

2024-01-29T04:51:34Z

|

2024-01-29T04:53:52Z

|

https://github.com/3b1b/manim/issues/2097

|

[

"bug"

] |

wmstack

| 1

|

ivy-llc/ivy

|

pytorch

| 28,339

|

Fix Frontend Failing Test: paddle - math.tensorflow.math.argmin

|

To-do List: https://github.com/unifyai/ivy/issues/27500

|

closed

|

2024-02-20T08:12:51Z

|

2024-02-20T10:21:20Z

|

https://github.com/ivy-llc/ivy/issues/28339

|

[

"Sub Task"

] |

Sai-Suraj-27

| 0

|

gevent/gevent

|

asyncio

| 1,962

|

ImportError: cannot import name 'match_hostname' from 'ssl' (/usr/lib/python3.12/ssl.py)

|

* gevent version: 22.10.2 - fedora package

* Python version: 3.12.0b3

* Operating System: Fedora rawhide

### Description:

While trying to build the sphinx documentation for the x2go python module:

```

+ make -C docs SPHINXBUILD=/usr/bin/sphinx-build-3 html

make: Entering directory '/builddir/build/BUILD/python-x2go-0.6.1.3/docs'

/usr/bin/sphinx-build-3 -b html -d build/doctrees source build/html

Running Sphinx v6.1.3

Configuration error:

There is a programmable error in your configuration file:

Traceback (most recent call last):

File "/usr/lib/python3.12/site-packages/sphinx/config.py", line 351, in eval_config_file

exec(code, namespace) # NoQA: S102

^^^^^^^^^^^^^^^^^^^^^

File "/builddir/build/BUILD/python-x2go-0.6.1.3/docs/source/conf.py", line 22, in <module>

import x2go

File "/builddir/build/BUILD/python-x2go-0.6.1.3/x2go/__init__.py", line 42, in <module>

monkey.patch_all()

File "/usr/lib/python3.12/site-packages/gevent/monkey.py", line 1279, in patch_all

patch_ssl(_warnings=_warnings, _first_time=first_time)

File "/usr/lib/python3.12/site-packages/gevent/monkey.py", line 200, in ignores

return func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.12/site-packages/gevent/monkey.py", line 1044, in patch_ssl

gevent_mod, _ = _patch_module('ssl', _warnings=_warnings)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.12/site-packages/gevent/monkey.py", line 462, in _patch_module

gevent_module, target_module, target_module_name = _check_availability(name)

^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.12/site-packages/gevent/monkey.py", line 448, in _check_availability

gevent_module = getattr(__import__('gevent.' + name), name)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.12/site-packages/gevent/ssl.py", line 32, in <module>

from gevent import _ssl3 as _source # pragma: no cover

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3.12/site-packages/gevent/_ssl3.py", line 53, in <module>

from ssl import match_hostname

ImportError: cannot import name 'match_hostname' from 'ssl' (/usr/lib/python3.12/ssl.py)

```
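For context, `ssl.match_hostname()` was deprecated in Python 3.7 and removed in 3.12, where hostname verification is handled by `SSLContext.check_hostname`. The traceback shows that gevent 22.10.2 still imports it, so the import fails before `x2go` finishes loading. The proper fix is a gevent release that supports Python 3.12. Code that must run on both sides of the removal can guard the import, as in this sketch; `make_client_context` is a hypothetical name.

```python
import ssl
import sys

try:
    from ssl import match_hostname  # available up to Python 3.11 only
except ImportError:  # Python 3.12+
    match_hostname = None


def make_client_context():
    """Client context that verifies certificates and hostnames without match_hostname."""
    # create_default_context() enables CERT_REQUIRED and check_hostname by default.
    return ssl.create_default_context()


ctx = make_client_context()
print(sys.version_info[:2], match_hostname is None, ctx.check_hostname)
```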

|

closed

|

2023-06-26T23:33:53Z

|

2023-07-10T15:30:56Z

|

https://github.com/gevent/gevent/issues/1962

|

[] |

opoplawski

| 1

|

sinaptik-ai/pandas-ai

|

data-science

| 686

|

TypeError: 'NoneType' object is not callable . Retrying Unfortunately, I was not able to answer your question, because of the following error: No code found in the response

|

#### Error:

```
/usr/local/lib/python3.10/dist-packages/pandasai/llm/starcoder.py:28: UserWarning: Starcoder is deprecated and will be removed in a future release.
Please use langchain.llms.HuggingFaceHub instead, although please be
aware that it may perform poorly.
  warnings.warn(
/usr/local/lib/python3.10/dist-packages/pandasai/llm/falcon.py:29: UserWarning: Falcon is deprecated and will be removed in a future release.
Please use langchain.llms.HuggingFaceHub instead, although please be
aware that it may perform poorly.
  warnings.warn(
WARNING:pandasai.helpers.logger:Error of executing code
WARNING:pandasai.helpers.logger:Failed to execute code with a correction framework [retry number: 1]
ERROR:pandasai.helpers.logger:Failed with error: Traceback (most recent call last):
  File "/usr/local/lib/python3.10/dist-packages/pandasai/smart_datalake/__init__.py", line 394, in chat
    result = self._code_manager.execute_code(
  File "/usr/local/lib/python3.10/dist-packages/pandasai/helpers/code_manager.py", line 276, in execute_code
    return analyze_data(self._get_originals(dfs))
TypeError: 'NoneType' object is not callable
. Retrying
Unfortunately, I was not able to answer your question, because of the following error:

No code found in the response

Unfortunately, I was not able to answer your question, because of the following error:
```

#### Code:

```python
import pandas as pd

df = pd.DataFrame({
    "country": [
        "United States",
        "United Kingdom",
        "France",
        "Germany",
        "Italy",
        "Spain",
        "Canada",
        "Australia",
        "Japan",
        "China",
    ],
    "gdp": [
        19294482071552,
        2891615567872,
        2411255037952,
        3435817336832,
        1745433788416,
        1181205135360,
        1607402389504,
        1490967855104,
        4380756541440,
        14631844184064,
    ],
    "happiness_index": [6.94, 7.16, 6.66, 7.07, 6.38, 6.4, 7.23, 7.22, 5.87, 5.12],
})

from pandasai import SmartDataframe
from pandasai.llm import Starcoder, Falcon

# token: the Hugging Face API token (its definition is not shown in the report)
starcoder_llm = Starcoder(api_token=token)
falcon_llm = Falcon(api_token=token)

df1 = SmartDataframe(df, config={"llm": starcoder_llm})
df2 = SmartDataframe(df, config={"llm": falcon_llm})

print(df1.chat("Which country has the highest GDP?"))
print(df2.chat("Which one is the unhappiest country?"))
```

|

closed

|

2023-10-25T12:07:05Z

|

2024-06-01T00:20:12Z

|

https://github.com/sinaptik-ai/pandas-ai/issues/686

|

[] |

jaysinhpadhiyar

| 1

|

matplotlib/matplotlib

|

data-science

| 29,489

|

[Bug]: Systematic test failures with ubuntu-22.04-arm pipeline

|

### Bug summary

```
__________________________ test_errorbar_limits[svg] ___________________________
[gw2] linux -- Python 3.12.8 /opt/hostedtoolcache/Python/3.12.8/arm64/bin/python

args = ()
kwds = {'extension': 'svg', 'request': <FixtureRequest for <Function test_errorbar_limits[svg]>>}

    @wraps(func)
    def inner(*args, **kwds):
        with self._recreate_cm():
>           return func(*args, **kwds)
E           matplotlib.testing.exceptions.ImageComparisonFailure: images not close (RMS 0.002):
E               result_images/test_axes/errorbar_limits_svg.png
E               result_images/test_axes/errorbar_limits-expected_svg.png
E               result_images/test_axes/errorbar_limits_svg-failed-diff.png

/opt/hostedtoolcache/Python/3.12.8/arm64/lib/python3.12/contextlib.py:81: ImageComparisonFailure
```

and

```
_____________________________ test_get_font_names ______________________________
[gw3] linux -- Python 3.12.8 /opt/hostedtoolcache/Python/3.12.8/arm64/bin/python

    @pytest.mark.skipif(sys.platform == 'win32', reason='Linux or OS only')
    def test_get_font_names():
        paths_mpl = [cbook._get_data_path('fonts', subdir) for subdir in ['ttf']]
        fonts_mpl = findSystemFonts(paths_mpl, fontext='ttf')
        fonts_system = findSystemFonts(fontext='ttf')
        ttf_fonts = []
        for path in fonts_mpl + fonts_system:
            try:
                font = ft2font.FT2Font(path)
                prop = ttfFontProperty(font)
                ttf_fonts.append(prop.name)
            except Exception:
                pass
        available_fonts = sorted(list(set(ttf_fonts)))
        mpl_font_names = sorted(fontManager.get_font_names())
>       assert set(available_fonts) == set(mpl_font_names)
E       AssertionError: assert {'C059', 'D05...ns Mono', ...} == {'C059', 'D05...ns Mono', ...}
E
E         Extra items in the right set:
E         'Liberation Serif'
E         'Liberation Sans Narrow'
E         'Liberation Sans'
E         'Liberation Mono'
[...]
```

|

closed

|

2025-01-20T11:12:23Z

|

2025-01-21T23:05:49Z

|

https://github.com/matplotlib/matplotlib/issues/29489

|

[

"CI: testing"

] |

timhoffm

| 3

|

Gerapy/Gerapy

|

django

| 74

|

TypeError when adding or editing users and groups in the admin

|

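Frontend shows:

Backend error:

The main cause is that `/server/core/TransformMiddleware` converts the body of every request, so Django's built-in handlers run into a type error. My current workaround is to add a check that skips requests coming from the admin, and initial testing shows it works.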

```python
from django.utils.deprecation import MiddlewareMixin


class TransformMiddleware(MiddlewareMixin):
    def __call__(self, request):
        """
        Change request body to str type
        :param request:
        :return:
        """
        if isinstance(request.body, bytes):
            if not 'admin' in request.path:
                data = getattr(request, '_body', request.body)
                request._body = data.decode('utf-8')
        response = self.get_response(request)
        return response
```

However, this workaround only covers the admin, and I don't know whether there are other similar problems.
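One possible refinement, offered as a hypothetical sketch rather than Gerapy's code: the substring test `'admin' in request.path` also skips unrelated paths that merely contain "admin", so matching the admin URL prefix is narrower. The `/admin/` prefix is an assumption and should match wherever the project mounts `admin.site.urls`.

```python
def should_decode_body(path, body, admin_prefix="/admin/"):
    """Decide whether TransformMiddleware should convert the body to str.

    Only bytes bodies are converted, and only outside the admin URL prefix
    (assumed to be "/admin/" here).
    """
    return isinstance(body, bytes) and not path.startswith(admin_prefix)


print(should_decode_body("/api/project/index", b"{}"))      # True
print(should_decode_body("/admin/auth/user/add/", b"a=1"))  # False
print(should_decode_body("/api/administrators", b"{}"))     # True
```

Inside the middleware, the two nested checks would then become `if should_decode_body(request.path, request.body):`.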

|

open

|

2018-07-26T04:37:27Z

|

2018-07-26T04:37:27Z

|

https://github.com/Gerapy/Gerapy/issues/74

|

[] |

Fesbruk

| 0

|

MilesCranmer/PySR

|

scikit-learn

| 2

|

[Question] Pure Julia package

|

Hi, is there a plan to provide a pure Julia API and expose it as a Julia package?

|

closed

|

2020-09-24T22:42:20Z

|

2021-01-18T09:24:06Z

|

https://github.com/MilesCranmer/PySR/issues/2

|

[

"implemented"

] |

sheevy

| 13

|

hbldh/bleak

|

asyncio

| 1,419

|

Qt timer can't stop bleak's notify.

|

* bleak version: 0.20.0

* Python version: 3.11.4

* Operating System: Windows 11

### Description

I integrated Bleak into a Qt application. When I use a QTimer to stop the notifications, something goes wrong and the notifications cannot be stopped.

### What I Did

My code

```python
# Imports added for context; the report does not show them. PySide6 is assumed
# from the Signal/Slot names. Ui_VTCSensorEvaluation, myclass and target_char
# are defined elsewhere in the reporter's project.
import bleak
from PySide6.QtCore import QObject, QTimer, Signal, Slot
from PySide6.QtWidgets import QMainWindow


class MainWindow(QMainWindow):
    # device discovery signal
    devices_discovery_signal = Signal()
    devices_discovery_end_signal = Signal()
    connect_signal = Signal()
    disconnect_signal = Signal()
    start_notify_signal = Signal()
    start_notify_end_signal = Signal()
    stop_notify_signal = Signal()
    stop_notify_end_signal = Signal()

    def __init__(self):
        super().__init__()
        self.devices = []
        self.devices_addr = []
        self.ui = Ui_VTCSensorEvaluation()
        self.ui.setupUi(self)
        self.ui.pushButton_3.clicked.connect(self.async_start_device_discovery)
        self.ui.pushButton.clicked.connect(self.connect_device_click)
        self.ui.pushButton_2.clicked.connect(self.start_notify_click)
        self.ui.pushButton_2.setEnabled(False)
        self.myclass = myclass()
        self.update_timer = QTimer(self)
        self.ui.pushButton_5.clicked.connect(self.calculate_data_bias)
        self.bias_num = 0
        self.call_num = 0
        self.Ble = Ble_inst(self.update_device_list ,self.connect_status_update,self.receive_data_cb)
        self.ui.ini_ui()

    def update_data_timer_func(self):
        datas = self.myclass.ret_cali_data()
        if(datas == None):
            return
        self.ui.sensor_message_update("acc = {},{},{}".format(round(datas[0],2),round(datas[1],2),round(datas[2],2)))
        self.ui.sensor_message_update("mag = {},{},{}".format(round(datas[3],2),round(datas[4],2),round(datas[5],2)))
        self.ui.sensor_message_update("gyro = {},{},{}".format(round(datas[6],2),round(datas[7],2),round(datas[8],2)))
        self.ui.sensor_message_update("")
        return

    def close_notify(self):
        self.start_notify_end_signal.emit()
        self.stop_notify_signal.emit()
        self.stop_notify_end_signal.emit()
        return

    def update_device_list(self,dev_list):
        self.ui.common_message_update("search the device")
        self.ui.comboBox.clear()
        self.ui.comboBox.addItems(dev_list)
        self.devices_discovery_end_signal.emit()
        return

    @Slot()
    def async_start_device_discovery(self):
        self.devices_discovery_signal.emit()
        return

    def connect_status_update(self,flag):
        self.ui.pushButton.setEnabled(flag)
        self.ui.pushButton_2.setEnabled(not(flag))
        self.ui.pushButton_2.setText("Run Compass")
        if(flag == False):
            self.ui.common_message_update("Connect Successfully....")
        else :
            self.ui.common_message_update("Connect Failed.....")
        return

    def receive_data_cb(self,ax,ay,az,mx,my,mz,gx,gy,gz):
        self.myclass.do_cali_data(ax,ay,az,mx,my,mz,gx,gy,gz)
        # print("acc data:",round(ax,2),round(ay,2),round(az,2))
        # print("mag data:",round(mx,2),round(my,2),round(mz,2))
        # print("gyro data:",round(gx,2),round(gy,2),round(gz,2))
        return

    def connect_device_click(self):
        self.ui.pushButton.setEnabled(False)
        ind = self.ui.comboBox.currentIndex()
        if(ind == 0):
            return
        self.Ble.set_con_index(ind)
        self.ui.common_message_update("connect the device({})".format(self.ui.comboBox.currentText()))
        self.connect_signal.emit()
        return

    def start_notify_click(self):
        print("click Run")
        if(self.ui.pushButton_2.text() == "Run Compass"):
            print("Run select A")
            self.ui.common_message_update("start the notification....")
            self.stop_notify_end_signal.emit()
            self.start_notify_signal.emit()
            self.ui.pushButton_2.setText("Stop Compass")
            self.update_timer.timeout.connect(self.update_data_timer_func)
            self.update_timer.start(10)
            # self.start_notify_end_signal.emit()
        elif(self.ui.pushButton_2.text() == "Stop Compass"):
            print("Run select B")
            self.ui.common_message_update("stop the notification....")
            self.close_notify()
            self.ui.pushButton_2.setText("Run Compass")
            self.update_timer.stop()
        return

    def calculate_data_bias(self):
        tmp_txt = self.ui.plainTextEdit_30.toPlainText()
        try:
            self.bias_num = int(tmp_txt)
            if(self.bias_num > 1024):
                self.ui.common_message_update("The number is bigger than 1024.")
                return
            if(self.bias_num <= 0):
                self.ui.common_message_update("The number is less than 1.")
                return
        except:
            self.ui.common_message_update("Text cannot be converted to a number")
            return
        self.call_num = 0
        self.myclass.init_bias_container()
        self.start_notify_signal.emit()
        self.update_timer.timeout.connect(self.close_notify)
        self.update_timer.start(1000)
        return


class Ble_inst(QObject):
    def __init__(self, update_func ,connect_status_func,rec_func):
        super().__init__()
        self.devices_addr = []
        self.devices = []
        self.client = bleak.BleakClient("FF:FF:FF:FF:FF:FF")
        self.list_upadte = update_func
        self.con_status_func = connect_status_func
        self.con_index = -1
        self.recieve_data = rec_func
        return

    async def add_device(self):
        devices = await bleak.BleakScanner.discover()
        self.devices = [""]
        self.devices_addr = [""]
        for d in devices:
            if(d.name != None):
                self.devices.append(d.name)
                self.devices_addr.append(d.address)
                print(d.name)
        self.list_upadte(self.devices)

    def disconnect_cb(self,client):
        self.con_status_func(True)
        print("connect loss....")
        return

    def set_con_index(self,index):
        self.con_index = index
        return

    async def connect_device_func(self):
        self.client = bleak.BleakClient(self.devices_addr[self.con_index],disconnected_callback=self.disconnect_cb)
        await self.client.connect()
        if(self.client.is_connected):
            self.con_status_func(False)
        return

    def receive_notify_cb(self,uuid,barray):
        ...  # body elided in the original report
        return

    async def stop_notify(self):
        print("stop notify....")
        await self.client.stop_notify(target_char)
        return

    async def start_notify(self):
        print("start notify.....")
        await self.client.start_notify(target_char,self.receive_notify_cb)
        return
```

### Logs

Error Log

```console

Task exception was never retrieved

future: <Task finished name='Task-38' coro=<Ble_inst.stop_notify() done, defined at d:\workstation\VTC_display\Ble_instance.py:71> exception=KeyError(15)>

Traceback (most recent call last):

File "d:\workstation\VTC_display\Ble_instance.py", line 73, in stop_notify

await self.client.stop_notify(target_char)

File "C:\Users\isly8\anaconda3\Lib\site-packages\bleak\__init__.py", line 852, in stop_notify

await self._backend.stop_notify(char_specifier)

File "C:\Users\isly8\anaconda3\Lib\site-packages\bleak\backends\winrt\client.py", line 1020, in stop_notify

event_handler_token = self._notification_callbacks.pop(characteristic.handle)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

KeyError: 15

Task exception was never retrieved

future: <Task finished name='Task-39' coro=<Ble_inst.stop_notify() done, defined at d:\workstation\VTC_display\Ble_instance.py:71> exception=KeyError(15)>

Traceback (most recent call last):

File "d:\workstation\VTC_display\Ble_instance.py", line 73, in stop_notify

await self.client.stop_notify(target_char)

File "C:\Users\isly8\anaconda3\Lib\site-packages\bleak\__init__.py", line 852, in stop_notify

await self._backend.stop_notify(char_specifier)

File "C:\Users\isly8\anaconda3\Lib\site-packages\bleak\backends\winrt\client.py", line 1020, in stop_notify

event_handler_token = self._notification_callbacks.pop(characteristic.handle)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

KeyError: 15

```
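One likely cause, reading the code above (an interpretation, not confirmed in the thread): `calculate_data_bias` connects `close_notify` to the repeating `update_timer` and never disconnects it. As a result, `close_notify` runs again on every later timeout. The first call stops the notification, and later calls find no registered handle in the WinRT backend, which raises `KeyError: 15`.

A single-shot timer (for example `QTimer.singleShot`) or disconnecting the slot would avoid the repeated calls. Making the stop idempotent is a second safeguard, sketched below with `asyncio` only. `NotifySession` and `FakeClient` are hypothetical, and `FakeClient` mimics the backend's `pop()`, which raises on a second stop.

```python
import asyncio


class NotifySession:
    """Wraps start_notify/stop_notify so a repeated stop is a no-op."""

    def __init__(self, client, char_specifier):
        self._client = client
        self._char = char_specifier
        self._active = False
        self._lock = asyncio.Lock()  # serializes overlapping start/stop requests

    async def start(self, callback):
        async with self._lock:
            if not self._active:
                await self._client.start_notify(self._char, callback)
                self._active = True

    async def stop(self):
        async with self._lock:
            if self._active:
                await self._client.stop_notify(self._char)
                self._active = False


class FakeClient:
    """Hypothetical stand-in for BleakClient that fails on a second stop, like the log."""

    def __init__(self):
        self.callbacks = {}

    async def start_notify(self, char, callback):
        self.callbacks[char] = callback

    async def stop_notify(self, char):
        self.callbacks.pop(char)  # KeyError if notifications were already stopped


async def demo():
    client = FakeClient()
    session = NotifySession(client, "target-char")
    await session.start(lambda sender, data: None)
    for _ in range(3):  # e.g. a repeating timer firing several times
        await session.stop()
    return client.callbacks


print(asyncio.run(demo()))  # {}
```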

|

closed

|

2023-09-15T01:26:02Z

|

2023-09-15T01:48:48Z

|

https://github.com/hbldh/bleak/issues/1419

|

[] |

ChienHao-Hung

| 0

|

microsoft/nni

|

pytorch

| 5,019

|

where is scripts.compression_mnist_model

|

**Describe the issue**:

When I tried to run the demo from the docs at https://nni.readthedocs.io/en/stable/tutorials/quantization_speedup.html, I could not find `scripts.compression_mnist_model` in

`from scripts.compression_mnist_model import TorchModel, trainer, evaluator, device, test_trt`

**Environment**:

- NNI version: 2.8

- Training service (local|remote|pai|aml|etc):

- Client OS: linux

- Server OS (for remote mode only):

- Python version: 3.8

- PyTorch/TensorFlow version:

- Is conda/virtualenv/venv used?:

- Is running in Docker?:

**Configuration**:

- Experiment config (remember to remove secrets!):

- Search space:

**Log message**:

- nnimanager.log:

- dispatcher.log:

- nnictl stdout and stderr:

<!--

Where can you find the log files:

LOG: https://github.com/microsoft/nni/blob/master/docs/en_US/Tutorial/HowToDebug.md#experiment-root-director

STDOUT/STDERR: https://nni.readthedocs.io/en/stable/reference/nnictl.html#nnictl-log-stdout

-->

**How to reproduce it?**:

|

closed

|

2022-07-25T23:37:38Z

|

2022-11-23T03:09:31Z

|

https://github.com/microsoft/nni/issues/5019

|

[

"user raised",

"documentation",

"support",

"ModelSpeedup",

"v2.9.1"

] |

james20141606

| 4

|

neuml/txtai

|

nlp

| 544

|

Add support for custom scoring instances

|

Add support for custom scoring instances.

See implementations in ANNFactory, DatabaseFactory and GraphFactory.
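Such support might look like the sketch below; the names and the alias table are hypothetical, not txtai's implementation. The factory first checks its built-in backends. Otherwise, it treats the configured method as a fully qualified class path, imports that class, and instantiates it with the config.

```python
import importlib


def resolve(path):
    """Import a class from a dotted path such as 'package.module.ClassName'."""
    module_name, _, class_name = path.rpartition(".")
    if not module_name:
        raise ValueError(f"not a dotted class path: {path!r}")
    return getattr(importlib.import_module(module_name), class_name)


def create_scoring(config, builtins):
    """Build a scoring instance from a built-in alias or a custom class path."""
    method = config.get("method", "bm25")
    cls = builtins[method] if method in builtins else resolve(method)
    return cls(config)


class BM25:  # hypothetical built-in backend
    def __init__(self, config):
        self.config = config


builtin_backends = {"bm25": BM25}
print(type(create_scoring({"method": "bm25"}, builtin_backends)).__name__)  # BM25
# Any importable class accepting the config works; OrderedDict stands in for a custom scorer.
print(type(create_scoring({"method": "collections.OrderedDict"}, builtin_backends)).__name__)  # OrderedDict
```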

|

closed

|

2023-09-06T21:21:06Z

|

2023-09-06T21:25:06Z

|

https://github.com/neuml/txtai/issues/544

|

[] |

davidmezzetti

| 0

|

LAION-AI/Open-Assistant

|

python

| 3,630

|

Add Persian QA Dataset

|

After fine-tuning on [Farsi data](https://github.com/LAION-AI/Open-Assistant/pull/3629), I think adding QA Datasets like [this one](https://github.com/sajjjadayobi/PersianQA) can be helpful.

If the team wants to add support for Farsi, I will be glad to contribute and add this dataset in the standard format.

|

closed

|

2023-08-02T10:30:53Z

|

2023-08-03T19:24:31Z

|

https://github.com/LAION-AI/Open-Assistant/issues/3630

|

[] |

pourmand1376

| 0

|

adbar/trafilatura

|

web-scraping

| 712

|

setup: use `pyproject.toml` file

|

This is now standard for Python 3.8+ versions.

|

closed

|

2024-10-07T10:33:18Z

|

2024-10-10T11:12:05Z

|

https://github.com/adbar/trafilatura/issues/712

|

[

"maintenance"

] |

adbar

| 0

|

Ehco1996/django-sspanel

|

django

| 925

|

An unrecognized request returns 404 Not Found

|

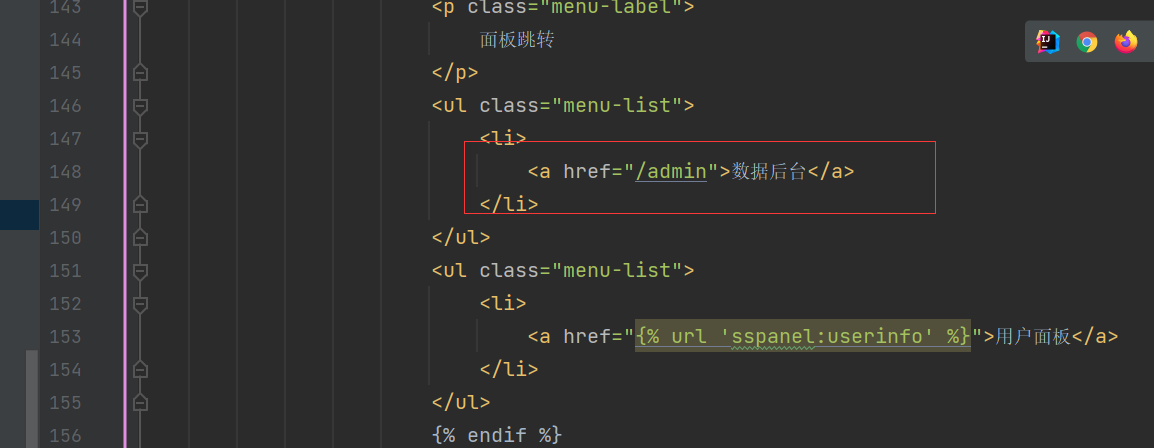

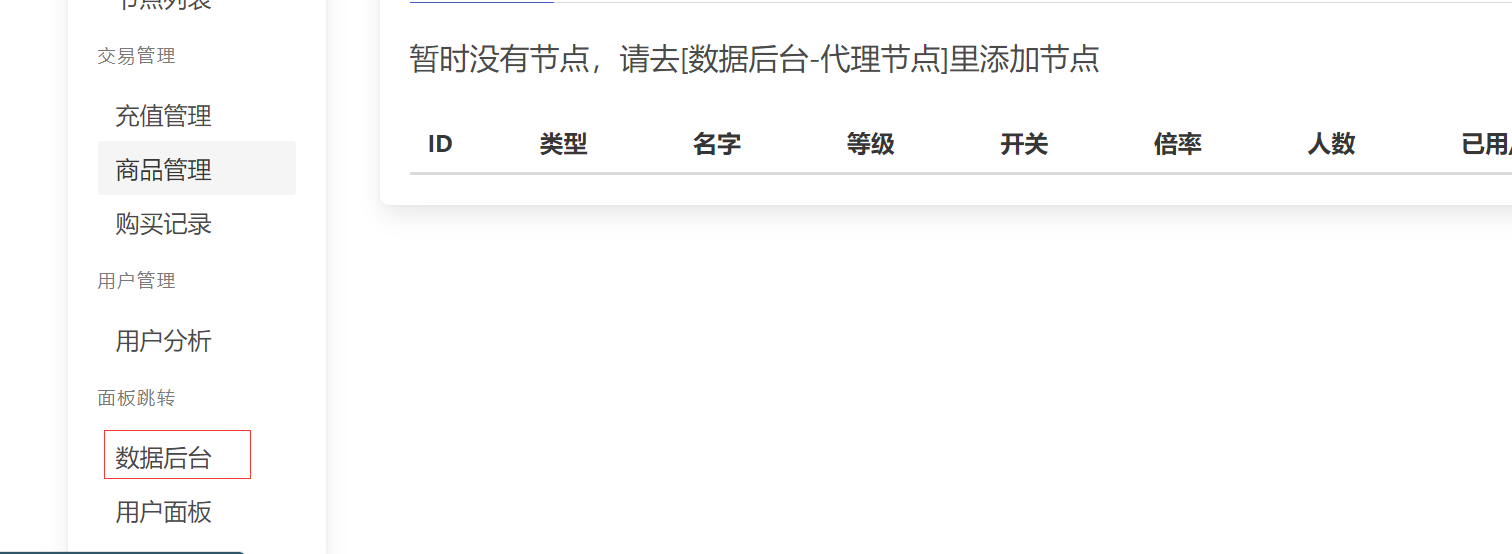

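**Problem description**

There is a request I don't recognize that returns 404 (Not Found).

**Project configuration file**

**How to reproduce**

**Related screenshots/logs**

**Other information**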

|

closed

|

2024-03-06T08:39:24Z

|

2024-03-10T00:20:20Z

|

https://github.com/Ehco1996/django-sspanel/issues/925

|

[] |

wangxingsheng

| 1

|

tableau/server-client-python

|

rest-api

| 861

|

Extracting "Who has seen the view"?

|

Hi,

I looked through the documentation; maybe it's there, but I couldn't find it.

Is there a way to extract the breakdown of "Who has seen this view" for each view?

Thanks in advance!

|

open

|

2021-07-12T08:23:19Z

|

2021-11-08T06:13:15Z

|

https://github.com/tableau/server-client-python/issues/861

|

[

"enhancement",

"Server-Side Enhancement"

] |

Zestsx

| 2

|

jumpserver/jumpserver

|

django

| 14,217

|

[Bug] LDAP configuration is automatically reverted

|

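### Product version

v4.1.0

### Edition

- [X] Community Edition

- [ ] Enterprise Edition

- [ ] Enterprise Trial

### Installation method

- [ ] Online installation (one-command install)

- [ ] Offline package installation

- [ ] All-in-One

- [ ] 1Panel

- [X] Kubernetes

- [ ] Source installation

### Environment

Installed via Helm chart

### 🐛 Bug description

After logging in as admin, I configured LDAP login: I filled in the settings, enabled LDAP, and clicked Submit. The connection test succeeds and returns the user count, but testing an LDAP user login reports `Authentication failed (before login check failed): not enabled auth ldap`. After refreshing the page, the LDAP switch is off again and the settings have been reverted.

### Steps to reproduce

After logging in as admin, I configured LDAP login: I filled in the settings, enabled LDAP, and clicked Submit. The connection test succeeds and returns the user count, but testing an LDAP user login reports `Authentication failed (before login check failed): not enabled auth ldap`. After refreshing the page, the LDAP switch is off again and the settings have been reverted.

### Expected result

The LDAP settings should be saved and not reverted.

### Additional information

_No response_

### Attempted solutions

_No response_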

|

closed

|

2024-09-23T06:45:24Z

|

2024-09-26T10:01:03Z

|

https://github.com/jumpserver/jumpserver/issues/14217

|

[

"🐛 Bug"

] |

yulinor

| 6

|

strawberry-graphql/strawberry

|

asyncio

| 3,430

|

Schema basics docs

|

https://strawberry.rocks/docs/general/schema-basics returns 500 error

|

closed

|

2024-04-02T08:58:46Z

|

2025-03-20T15:56:39Z

|

https://github.com/strawberry-graphql/strawberry/issues/3430

|

[

"bug"

] |

lorddaedra

| 2

|

dmlc/gluon-cv

|

computer-vision

| 1,582

|

Top-1 accuracy on UCF101 dataset is bad?

|

I trained `i3d_nl5_resnet50_v1` on `UCF101` datasets with `Kinetics400` pretrained, the acc: `top-1=85.2%, top-5=95.4%`.

Params: `clip_len=32, input=224x224, lr=0.01, batch_size=8`.

That doesn't seem very good. What could be the reason?

|

closed

|

2021-01-07T03:19:58Z

|

2021-01-08T02:41:31Z

|

https://github.com/dmlc/gluon-cv/issues/1582

|

[] |

Tramac

| 3

|

HIT-SCIR/ltp

|

nlp

| 261

|

After compiling, segmentor_jni.so is not in the lib directory?

|

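After building ltp, there is a lib directory with many .so files, but segmentor_jni.so and the other .so files used by ltp4j are missing.

Why is that? Is it because ltp4j hasn't been maintained for a long time?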

|

closed

|

2017-11-11T16:07:55Z

|

2018-01-23T12:56:06Z

|

https://github.com/HIT-SCIR/ltp/issues/261

|

[] |

ambjlon

| 1

|

PeterL1n/RobustVideoMatting

|

computer-vision

| 127

|

CUA

|

closed

|

2022-01-12T11:35:10Z

|

2022-01-14T06:39:23Z

|

https://github.com/PeterL1n/RobustVideoMatting/issues/127

|

[] |

HarrytheOrange

| 0

|

|

plotly/dash-core-components

|

dash

| 475

|

box plot issue with dcc.Graph?

|

Getting a few reports in the community forum that look valid, but I haven't attempted reproducing yet: https://community.plot.ly/t/boxplot-in-dash/19623/4

|

open

|

2019-03-05T15:00:28Z

|

2019-03-05T15:00:28Z

|

https://github.com/plotly/dash-core-components/issues/475

|

[] |

chriddyp

| 0

|

gradio-app/gradio

|

python

| 10,255

|

Bug of gr.ImageMask save image

|

### Describe the bug

Hi,

I ran into a bug with `gr.ImageMask` and hope you can fix it.

I set the width and height of the ImageMask. When I save the image from `gr.ImageMask` on the page, the saved image is downscaled instead of keeping the width and height of the originally uploaded image.

### Have you searched existing issues? 🔎

- [X] I have searched and found no existing issues

### Reproduction

```python
import gradio as gr

with gr.Blocks() as demo:
    with gr.Row():
        im = gr.ImageMask(
            type="numpy",
            interactive=True,
            height=150,
            width=500
        )

demo.launch()
```

### Screenshot

_No response_

### Logs

_No response_

### System Info

```shell

gradio:5.9.1

```

### Severity

I can work around it

|

closed

|

2024-12-26T09:42:37Z

|

2025-01-22T18:35:03Z

|

https://github.com/gradio-app/gradio/issues/10255

|

[

"bug",

"🖼️ ImageEditor"

] |

yaosheng216

| 1

|

satwikkansal/wtfpython

|

python

| 137

|

Is copy or reference in self recursion?

|

Hello, I couldn't find anything about this behavior in this repository, so I'm opening this issue.

My CPython version is 3.7.1

- list recursion (reference)

```python
class C:
    def f1(self, a):
        if a[0][0] == 0:
            return a
        else:
            a[0][0] -= 1
            self.f1(a)
        return a


print(C().f1([[5, 2], [3, 4]]))
```

result:

```bash

>>> [[0, 2], [3, 4]]

```

- variable recursion (copy)

```python
class C:
    def f1(self, a):
        if a == 0:
            return a
        else:
            a -= 1
            self.f1(a)
        return a


print(C().f1(3))
```

result

```bash

>>> 2

```

The same happens with plain functions defined outside a class.

Thank you.
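In both cases, Python passes a reference to the same object, and no copy is made. The difference is mutability. `a[0][0] -= 1` changes the shared list in place, so the caller sees the change. `a -= 1` on an `int` instead rebinds the local name `a` to a new object, and the value returned by the recursive call `self.f1(a)` is discarded. The plain-function sketch below (hypothetical names) shows both effects and the usual fix of returning the recursive result.

```python
def mutate(lst):
    lst[0] -= 1  # modifies the list object the caller also holds
    return lst


def rebind(n):
    n -= 1  # ints are immutable: the local name now points to a new int
    return n


def countdown(a):
    if a == 0:
        return a
    return countdown(a - 1)  # propagate the recursive result instead of dropping it


data = [5]
same = mutate(data)
print(data, same is data)      # [4] True
number = 3
print(rebind(number), number)  # 2 3
print(countdown(3))            # 0
```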

|

closed

|

2019-09-09T04:14:31Z

|

2019-12-21T17:08:04Z

|

https://github.com/satwikkansal/wtfpython/issues/137

|

[

"new snippets"

] |

daidai21

| 1

|

plotly/dash-cytoscape

|

plotly

| 175

|

Graph nodes flocking to single point

|

#### Description

Nodes in graphs are shown correctly for a second before flocking to a single point. This usually happens when inserting Cyto components via callbacks, regardless of the layout, and it happens often.

Looks like this:

<img src="https://i.ibb.co/6WV2BF3/Screenshot-2022-05-17-at-16-15-32.png" alt="Screenshot-2022-05-17-at-16-15-32" border="0">

#### Steps/Code to Reproduce

Not deterministic. Happens when having multiple graphs, shown at different times in the Dash app.

#### Expected Results

A graph with properly placed nodes.

#### Actual Results

This.

<img src="https://i.ibb.co/6WV2BF3/Screenshot-2022-05-17-at-16-15-32.png" alt="Screenshot-2022-05-17-at-16-15-32" border="0">

#### Versions

```

Dash 2.3.1

/Users/niels/Documents/GitHub/test/test.py:3: UserWarning:

The dash_html_components package is deprecated. Please replace

`import dash_html_components as html` with `from dash import html`

import dash_html_components; print("Dash Core Components", dash_html_components.__version__)

Dash Core Components 2.0.2

/Users/niels/Documents/GitHub/test/test.py:4: UserWarning:

The dash_core_components package is deprecated. Please replace

`import dash_core_components as dcc` with `from dash import dcc`

import dash_core_components; print("Dash HTML Components", dash_core_components.__version__)

Dash HTML Components 2.3.0

Dash Renderer 1.9.1

Dash HTML Components 0.2.0

```

|

open

|

2022-05-17T14:21:44Z

|

2022-05-24T15:48:35Z

|

https://github.com/plotly/dash-cytoscape/issues/175

|

[] |

nilq

| 3

|

aio-libs/aiomysql

|

asyncio

| 979

|

aiomysql raises InvalidRequestError: Cannot release a connection with not finished transaction

|

### Describe the bug

When the pool runs out of connections and a new request is sent, an error occurs and the whole application goes down!

In `aiomysql/utils.py`, line 137:

```python3

async def __aexit__(self, exc_type, exc, tb):
    try:
        await self._pool.release(self._conn)
    finally:
        self._pool = None
        self._conn = None

```

Why release the whole connection pool?
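For reference, the `try`/`finally` above hands a single connection back to the pool and then clears the context manager's own `_pool`/`_conn` references; it does not close the pool. The sketch below reproduces that shape with hypothetical stand-ins (`ToyPool`, `ToyConn`, `AcquireContext` are not aiomysql classes). It assumes, as the error message in the title suggests, that the engine-level `release` refuses a connection whose transaction is still open, for example when a handler is cancelled between `begin()` and `commit()`, as the `CancelledError` in the traceback hints.

```python
import asyncio


class InvalidRequestError(Exception):
    """Stand-in for the engine-level error quoted in the issue title."""


class ToyConn:
    """Hypothetical connection that only tracks whether a transaction is open."""

    def __init__(self):
        self.in_transaction = False

    def begin(self):
        self.in_transaction = True

    def commit(self):
        self.in_transaction = False


class ToyPool:
    """Hypothetical pool holding a single connection (like maxsize=1)."""

    def __init__(self):
        self.free = asyncio.Queue()
        self.free.put_nowait(ToyConn())

    async def acquire(self):
        # Waits while the pool is exhausted.
        return await self.free.get()

    async def release(self, conn):
        # Refuse to put back a connection whose transaction is still open.
        if conn.in_transaction:
            raise InvalidRequestError(
                "Cannot release a connection with not finished transaction")
        self.free.put_nowait(conn)


class AcquireContext:
    """Same try/finally shape as the quoted __aexit__."""

    def __init__(self, pool):
        self._pool = pool
        self._conn = None

    async def __aenter__(self):
        self._conn = await self._pool.acquire()
        return self._conn

    async def __aexit__(self, exc_type, exc, tb):
        try:
            # Returns ONE connection to the pool; the pool itself stays open.
            await self._pool.release(self._conn)
        finally:
            # Only this context manager's own references are dropped.
            self._pool = None
            self._conn = None


async def demo():
    pool = ToyPool()

    # Transaction finished: the connection goes back to the pool.
    async with AcquireContext(pool) as conn:
        conn.begin()
        conn.commit()
    free_after_commit = pool.free.qsize()

    # Handler interrupted mid-transaction: release() raises instead.
    try:
        async with AcquireContext(pool) as conn:
            conn.begin()
            raise asyncio.CancelledError()
    except InvalidRequestError as err:
        error = str(err)
    return free_after_commit, error


print(asyncio.run(demo()))
```

In this toy version the second `async with` ends with the connection still marked as in a transaction, so it never returns to the pool.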

### To Reproduce

```python3

import asyncio
import base64

import aiohttp.web_routedef
from aiohttp import web
from aiomysql.sa import create_engine

route = aiohttp.web.RouteTableDef()


@route.get('/')
async def test(request):
    async with request.app.db.acquire() as conn:
        trans = await conn.begin()
        await asyncio.sleep(60)
        await trans.commit()
    return web.Response(text="ok")


@route.get('/test')
async def test(request):
    async with request.app.db.acquire() as conn:
        trans = await conn.begin()
        await trans.close()
    return web.Response(text="not ok")


async def mysql_context(app):
    engine = await create_engine(
        db='fmp_new',
        user='root',
        password="lyp82nlf",
        host="localhost",
        maxsize=1,
        echo=True,
        pool_recycle=1
    )
    app.db = engine
    yield
    app.db.close()
    await app.db.wait_closed()


async def init_app():
    app = web.Application()
    app.add_routes(route)
    app.cleanup_ctx.append(mysql_context)
    return app


def main():
    app = init_app()
    web.run_app(app, port=9999)


if __name__ == '__main__':
    main()

```

### Expected behavior

When a new request arrives and all connections are in use, it should wait until a connection is released.
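The expected behavior is the ordinary bounded-pool pattern: a second request blocks while acquiring until the first one hands its connection back. The sketch below uses a plain `asyncio.Queue` holding one hypothetical connection (`handler` and `main` are illustrative names). It shows only the waiting semantics and is not aiomysql's pool implementation.

```python
import asyncio


async def handler(name, pool, hold, log):
    conn = await pool.get()          # waits while the pool is exhausted
    log.append(f"{name} acquired {conn}")
    await asyncio.sleep(hold)        # simulate work on the connection
    pool.put_nowait(conn)            # return it; wakes the next waiter
    log.append(f"{name} released {conn}")


async def main():
    pool = asyncio.Queue()
    pool.put_nowait("conn-1")        # a single connection, as with maxsize=1
    log = []
    await asyncio.gather(
        handler("A", pool, 0.05, log),
        handler("B", pool, 0, log),
    )
    return log


for line in asyncio.run(main()):
    print(line)
```

Request B does not fail while A holds the only connection; it resumes as soon as A puts the connection back.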

### Logs/tracebacks

```python-traceback

Error handling request

Traceback (most recent call last):

File "test_aiomysql.py", line 20, in test

await asyncio.sleep(60)

File "/usr/lib/python3.7/asyncio/tasks.py", line 595, in sleep

return await future

concurrent.futures._base.CancelledError

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/yangshen/Envs/fmp_37/lib/python3.7/site-packages/aiohttp/web_protocol.py", line 422, in _handle_request

resp = await self._request_handler(request)

File "/home/yangshen/Envs/fmp_37/lib/python3.7/site-packages/aiohttp/web_app.py", line 499, in _handle

resp = await handler(request)

File "test_aiomysql.py", line 21, in test

await trans.commit()

File "/home/yangshen/Envs/fmp_37/lib/python3.7/site-packages/aiomysql/utils.py", line 139, in __aexit__

await self._pool.release(self._conn)

File "/home/yangshen/Envs/fmp_37/lib/python3.7/site-packages/aiomysql/sa/engine.py", line 163, in release