| repo_name (string, length 9 to 75) | topic (string, 30 classes) | issue_number (int64, 1 to 203k) | title (string, length 1 to 976) | body (string, length 0 to 254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, length 38 to 105) | labels (list, length 0 to 9) | user_login (string, length 1 to 39) | comments_count (int64, 0 to 452) |

|---|---|---|---|---|---|---|---|---|---|---|---|

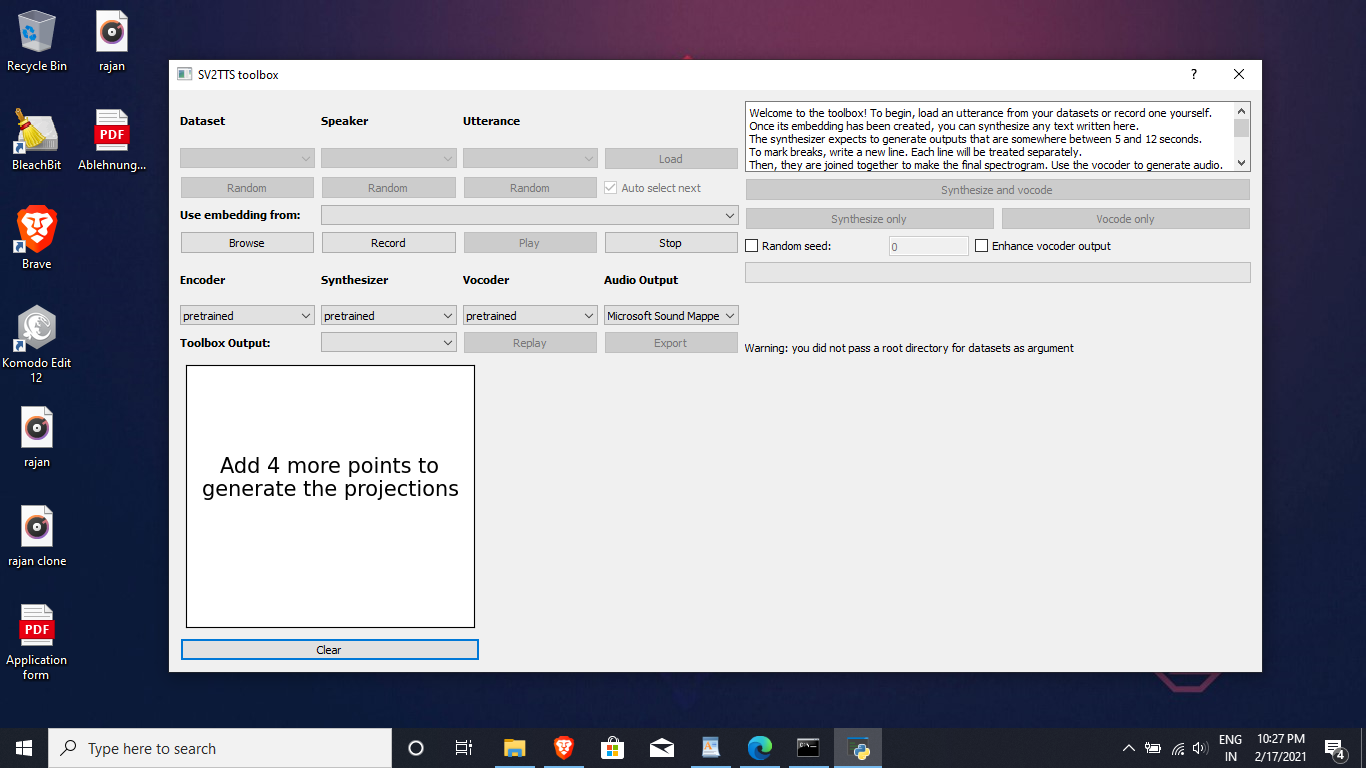

babysor/MockingBird

|

pytorch

| 764

|

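How to fix the painful problem of re-uploading and re-preprocessing the dataset for every Colab training session (which also fills up the disk)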

|

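Many of you get poor results training on your home hardware and have to turn to Colab, the world's biggest hangout for broke model trainers. But for those of you who can't expand Google Drive or upgrade Colab, uploading the dataset is hell: the upload is slow, there isn't enough space, you have to upload again after every reset, and preprocessing is a headache. It took me 9 days, but I finally solved this problem, and I'm sharing my solution here.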

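First, register an account on Kaggle and get an API token.

I have already uploaded the preprocessed dataset (built from aidatatang_200zh) there, but downloading it requires a token, and getting a token requires an account. Please look up how to obtain the token yourself; I won't go into detail here.

Then open Colab.

Go to Edit -> Notebook settings and change the runtime's hardware accelerator from None to GPU.

Enter the following code: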

```

!pip install kaggle
import json
token = {"username": "your_username", "key": "your_token"}
with open('/content/kaggle.json', 'w') as file:
    json.dump(token, file)
!mkdir -p ~/.kaggle
!cp /content/kaggle.json ~/.kaggle/
!chmod 600 ~/.kaggle/kaggle.json
!kaggle config set -n path -v /content

```

Fill in line 3 with your username and the token you obtained earlier.

This step sets up the Kaggle command-line tool.
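If you would rather not mix shell commands into the cell, the same credential setup can be done in plain Python. This is a minimal sketch, assuming the Kaggle CLI reads `~/.kaggle/kaggle.json` (the location the commands above copy the file to); `write_kaggle_credentials` is a hypothetical helper, not part of the Kaggle package.

```python
import json
import os
from pathlib import Path


def write_kaggle_credentials(username: str, key: str, config_dir: Path) -> Path:
    """Write kaggle.json with owner-only permissions (hypothetical helper).

    Mirrors the `mkdir -p`, `cp` and `chmod 600` steps above.
    """
    config_dir.mkdir(parents=True, exist_ok=True)  # like `mkdir -p ~/.kaggle`
    path = config_dir / "kaggle.json"
    path.write_text(json.dumps({"username": username, "key": key}))
    os.chmod(path, 0o600)  # the Kaggle CLI warns when the key is readable by other users
    return path
```

In Colab you would call it as `write_kaggle_credentials("your_username", "your_token", Path.home() / ".kaggle")` and then run `!kaggle config set -n path -v /content` as before.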

Next, download and extract the dataset:

```

!kaggle datasets download -d bjorndido/sv2ttspart1

!unzip "/content/datasets/bjorndido/sv2ttspart1/sv2ttspart1.zip" -d "/content/aidatatang_200zh"

!rm -rf /content/datasets

!kaggle datasets download -d bjorndido/sv2ttspart2

!unzip "/content/datasets/bjorndido/sv2ttspart2/sv2ttspart2.zip" -d "/content/aidatatang_200zh"

!rm -rf /content/datasets

```

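Since some of you may be on the free tier like me, starting from the raw, unprocessed dataset would fill up the disk, so I uploaded the already-preprocessed dataset to Kaggle.

The commands also delete the downloaded zip files after extracting them, which is very considerate.

In my tests the download speed reached 200 MB/s, and even on a slower connection it was around 50 MB/s. Very fast.

This step takes less than 10 minutes.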
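The unzip-then-delete pattern is what keeps peak disk usage low: only one compressed part sits on disk at a time. A minimal Python sketch of the same idea using the standard library; `extract_and_cleanup` is a hypothetical name, and the paths in the usage note are simply the ones from the commands above.

```python
from __future__ import annotations

import shutil
import zipfile
from pathlib import Path


def extract_and_cleanup(zip_path: Path, dest_dir: Path, download_root: Path) -> list[str]:
    """Extract one downloaded archive into dest_dir, then delete download_root.

    Equivalent in spirit to `unzip ... -d ...` followed by `rm -rf /content/datasets`.
    """
    dest_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        names = archive.namelist()
        archive.extractall(dest_dir)
    # Delete the download folder (including the zip) right away to free disk space
    # before the next part is downloaded.
    shutil.rmtree(download_root, ignore_errors=True)
    return names
```

Called once per part, e.g. with `/content/datasets/bjorndido/sv2ttspart1/sv2ttspart1.zip` as the archive, `/content/aidatatang_200zh` as the destination and `/content/datasets` as the folder to remove, before running the next `kaggle datasets download`.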

```
!git clone https://github.com/babysor/MockingBird.git
!pip install -r /content/MockingBird/requirements.txt
```

Clone the Git repository and install the dependencies; nothing more to say about this step.

Then modify the hparams by overwriting `synthesizer/hparams.py`:

```

%%writefile /content/MockingBird/synthesizer/hparams.py
import ast
import pprint
import json

class HParams(object):
    def __init__(self, **kwargs): self.__dict__.update(kwargs)
    def __setitem__(self, key, value): setattr(self, key, value)
    def __getitem__(self, key): return getattr(self, key)
    def __repr__(self): return pprint.pformat(self.__dict__)

    def parse(self, string):
        # Overrides hparams from a comma-separated string of name=value pairs
        if len(string) > 0:
            overrides = [s.split("=") for s in string.split(",")]
            keys, values = zip(*overrides)
            keys = list(map(str.strip, keys))
            values = list(map(str.strip, values))
            for k in keys:
                self.__dict__[k] = ast.literal_eval(values[keys.index(k)])
        return self

    def loadJson(self, dict):
        print("\nLoading the json with %s\n" % dict)
        for k in dict.keys():
            if k not in ["tts_schedule", "tts_finetune_layers"]:
                self.__dict__[k] = dict[k]
        return self

    def dumpJson(self, fp):
        print("\nSaving the json with %s\n" % fp)
        with fp.open("w", encoding="utf-8") as f:
            json.dump(self.__dict__, f)
        return self

hparams = HParams(
    ### Signal Processing (used in both synthesizer and vocoder)
    sample_rate = 16000,
    n_fft = 800,
    num_mels = 80,
    hop_size = 200,                 # Tacotron uses 12.5 ms frame shift (set to sample_rate * 0.0125)
    win_size = 800,                 # Tacotron uses 50 ms frame length (set to sample_rate * 0.050)
    fmin = 55,
    min_level_db = -100,
    ref_level_db = 20,
    max_abs_value = 4.,             # Gradient explodes if too big, premature convergence if too small.
    preemphasis = 0.97,             # Filter coefficient to use if preemphasize is True
    preemphasize = True,

    ### Tacotron Text-to-Speech (TTS)
    tts_embed_dims = 512,           # Embedding dimension for the graphemes/phoneme inputs
    tts_encoder_dims = 256,
    tts_decoder_dims = 128,
    tts_postnet_dims = 512,
    tts_encoder_K = 5,
    tts_lstm_dims = 1024,
    tts_postnet_K = 5,
    tts_num_highways = 4,
    tts_dropout = 0.5,
    tts_cleaner_names = ["basic_cleaners"],
    tts_stop_threshold = -3.4,      # Value below which audio generation ends.
                                    # For example, for a range of [-4, 4], this
                                    # will terminate the sequence at the first
                                    # frame that has all values < -3.4

    ### Tacotron Training
    tts_schedule = [(2,  1e-3,  10_000,  32),   # Progressive training schedule
                    (2,  5e-4,  15_000,  32),   # (r, lr, step, batch_size)
                    (2,  2e-4,  20_000,  32),   # (r, lr, step, batch_size)
                    (2,  1e-4,  30_000,  32),   #
                    (2,  5e-5,  40_000,  32),   #
                    (2,  1e-5,  60_000,  32),   #
                    (2,  5e-6, 160_000,  32),   # r = reduction factor (# of mel frames
                    (2,  3e-6, 320_000,  32),   #     synthesized for each decoder iteration)
                    (2,  1e-6, 640_000,  32)],  # lr = learning rate

    tts_clip_grad_norm = 1.0,       # clips the gradient norm to prevent explosion - set to None if not needed
    tts_eval_interval = 500,        # Number of steps between model evaluation (sample generation)
                                    # Set to -1 to generate after completing epoch, or 0 to disable
    tts_eval_num_samples = 1,       # Makes this number of samples

    ## For finetune usage, if set, only selected layers will be trained, available: encoder,encoder_proj,gst,decoder,postnet,post_proj
    tts_finetune_layers = [],

    ### Data Preprocessing
    max_mel_frames = 900,
    rescale = True,
    rescaling_max = 0.9,
    synthesis_batch_size = 16,      # For vocoder preprocessing and inference.

    ### Mel Visualization and Griffin-Lim
    signal_normalization = True,
    power = 1.5,
    griffin_lim_iters = 60,

    ### Audio processing options
    fmax = 7600,                    # Should not exceed (sample_rate // 2)
    allow_clipping_in_normalization = True,  # Used when signal_normalization = True
    clip_mels_length = True,        # If true, discards samples exceeding max_mel_frames
    use_lws = False,                # "Fast spectrogram phase recovery using local weighted sums"
    symmetric_mels = True,          # Sets mel range to [-max_abs_value, max_abs_value] if True,
                                    #               and [0, max_abs_value] if False
    trim_silence = True,            # Use with sample_rate of 16000 for best results

    ### SV2TTS
    speaker_embedding_size = 256,   # Dimension for the speaker embedding
    silence_min_duration_split = 0.4,  # Duration in seconds of a silence for an utterance to be split
    utterance_min_duration = 1.6,   # Duration in seconds below which utterances are discarded
    use_gst = True,                 # Whether to use global style token
    use_ser_for_gst = True,         # Whether to use speaker embedding referenced for global style token
    )

```

I used a batch size of 32; adjust it to suit your setup.
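The batch size is the fourth field of every `tts_schedule` tuple `(r, lr, step, batch_size)`, so changing it means editing all nine entries. A minimal sketch of doing that programmatically, plus a lookup of which entry covers a given step. Both helper names are hypothetical, and the lookup assumes each entry stays active until its `step` value is reached, which matches the comments in the file but has not been checked against MockingBird's training loop.

```python
from typing import List, Tuple

# (r, lr, step, batch_size), copied from the hparams above
Stage = Tuple[int, float, int, int]

TTS_SCHEDULE: List[Stage] = [
    (2, 1e-3, 10_000, 32),
    (2, 5e-4, 15_000, 32),
    (2, 2e-4, 20_000, 32),
    (2, 1e-4, 30_000, 32),
    (2, 5e-5, 40_000, 32),
    (2, 1e-5, 60_000, 32),
    (2, 5e-6, 160_000, 32),
    (2, 3e-6, 320_000, 32),
    (2, 1e-6, 640_000, 32),
]


def with_batch_size(schedule: List[Stage], batch_size: int) -> List[Stage]:
    """Return a copy of the schedule with every stage using `batch_size`."""
    return [(r, lr, step, batch_size) for r, lr, step, _ in schedule]


def stage_for_step(schedule: List[Stage], current_step: int) -> Stage:
    """Return the first stage whose step limit has not been reached yet.

    Assumption: a stage is active while current_step < its step value.
    """
    for stage in schedule:
        if current_step < stage[2]:
            return stage
    raise ValueError("training schedule already completed")
```

For example, `with_batch_size(TTS_SCHEDULE, 16)` gives a schedule you could paste back into `hparams.py` if 32 does not fit in GPU memory.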

Start training:

```

%cd "/content/MockingBird/"

!python synthesizer_train.py train "/content/aidatatang_200zh" -m /content/drive/MyDrive/

```

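Note: mount Google Drive before starting this step. If you don't want to mount it, change the path after `-m`.

I chose Drive so that next time I can pick up the saved training progress and continue training.

Then it's time to happily freeload.

If you pay for Colab, you can run `!nvidia-smi` to check your GPU; the free tier always gets a Tesla T4 with 16 GB of VRAM.

In my run, the attention curve started to appear at around 9k steps, with a loss of 0.45.

Note: on the free tier, Colab disconnects automatically if you leave the computer idle for a long time.

When you reopen it, the environment is reset to its initial state.

This is where saving to Drive pays off: you don't have to worry about the model being deleted when the runtime resets.

This is my first time writing a tutorial, so please forgive any shortcomings.

I hope this tutorial helps you.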

|

open

|

2022-10-10T08:52:38Z

|

2023-03-31T12:02:28Z

|

https://github.com/babysor/MockingBird/issues/764

|

[] |

HexBanana

| 10

|

pyppeteer/pyppeteer

|

automation

| 254

|

Installation in docker fails

|

I am using an Ubuntu Bionic (18.04) container.

```

Step 19/29 : RUN python3 -m venv .

---> Using cache

---> 921d12b1ff09

Step 20/29 : RUN python3 -m pip install setuptools

---> Using cache

---> 112749c3d9f4

Step 21/29 : RUN python3 -m pip install -U git+https://github.com/pyppeteer/pyppeteer@dev

---> Running in d9ff781c3217

Collecting git+https://github.com/pyppeteer/pyppeteer@dev

Cloning https://github.com/pyppeteer/pyppeteer (to dev) to /tmp/pip-d7qe1_0r-build

Complete output from command python setup.py egg_info:

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "/usr/lib/python3.6/tokenize.py", line 452, in open

buffer = _builtin_open(filename, 'rb')

FileNotFoundError: [Errno 2] No such file or directory: '/tmp/pip-d7qe1_0r-build/setup.py'

```

|

closed

|

2021-05-11T06:49:12Z

|

2021-05-11T08:03:34Z

|

https://github.com/pyppeteer/pyppeteer/issues/254

|

[] |

larytet

| 1

|

polarsource/polar

|

fastapi

| 5,156

|

API Reference docs: validate/activate/deactivate license keys don't need a token

|

### Discussed in https://github.com/orgs/polarsource/discussions/5155

The overlay matches every endpoint: https://github.com/polarsource/polar/blob/37646b3cab3219369bd48b3f105300893515ad9c/sdk/overlays/security.yml#L6-L10

We should exclude those endpoints.
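In OpenAPI 3, an operation-level `security: []` overrides the document's global security requirement and marks that operation as needing no credentials. A minimal Python sketch of excluding the three license-key endpoints after a blanket security overlay has been applied. The path suffixes below are assumptions about Polar's schema, not taken from the linked overlay file, and `mark_public` is a hypothetical helper.

```python
from typing import Any, Dict, Iterable

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}

# Assumed path endings for the public license-key endpoints.
PUBLIC_SUFFIXES = (
    "/license-keys/validate",
    "/license-keys/activate",
    "/license-keys/deactivate",
)


def mark_public(spec: Dict[str, Any], suffixes: Iterable[str] = PUBLIC_SUFFIXES) -> int:
    """Set `security: []` on every operation whose path ends with one of `suffixes`.

    Returns the number of operations changed.
    """
    suffixes = tuple(suffixes)
    changed = 0
    for path, item in spec.get("paths", {}).items():
        if not path.rstrip("/").endswith(suffixes):
            continue
        for method, operation in item.items():
            if method in HTTP_METHODS:  # skip path-level keys such as "parameters"
                operation["security"] = []  # empty list = no authentication required
                changed += 1
    return changed
```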

|

closed

|

2025-03-04T16:01:26Z

|

2025-03-04T16:42:42Z

|

https://github.com/polarsource/polar/issues/5156

|

[

"docs"

] |

frankie567

| 0

|

tox-dev/tox

|

automation

| 3,249

|

tox seems to ignore part of tox.ini file

|

## Issue

This PR of mine is failing: https://github.com/open-telemetry/opentelemetry-python/pull/3746

The issue seems to be that `tox` ignores part of the `tox.ini` file, from [this](https://github.com/open-telemetry/opentelemetry-python/pull/3746/files#diff-ef2cef9f88b4fe09ca3082140e67f5ad34fb65fb6e228f119d3812261ae51449R128) line onwards (including that line). When I run `tox -rvvve py38-proto4-opentelemetry-exporter-otlp-proto-grpc` tests fail because certain test requirements that would have been installed if that line was executed are not installed (of course, because that line was not executed). Other tests fail (the PR has many jobs failing besides `py38-proto4-opentelemetry-exporter-otlp-proto-grpc`) as well because they need lines in the `tox.ini` file that are below the line mentioned before. When I run `tox -rvvve py38-proto3-opentelemetry-exporter-otlp-proto-grpc` everything seems to work fine.

Here is the output of both commands:

[`tox -rvvve py38-proto3-opentelemetry-exporter-otlp-proto-grpc`](https://gist.github.com/ocelotl/2690254b7fc2aa0b04af054e04248949#file-tox-rvvve-py38-proto3-opentelemetry-exporter-otlp-proto-grpc-txt)

[`tox -rvvve py38-proto4-opentelemetry-exporter-otlp-proto-grpc`](https://gist.github.com/ocelotl/2690254b7fc2aa0b04af054e04248949#file-tox-rvvve-py38-proto4-opentelemetry-exporter-otlp-proto-grpc-txt)

The issue seems to be that `tox` is not executing [this](https://github.com/open-telemetry/opentelemetry-python/pull/3746/files#diff-ef2cef9f88b4fe09ca3082140e67f5ad34fb65fb6e228f119d3812261ae51449R128) line nor anything below that line.

For example, when running `tox -rvvve py38-proto3-opentelemetry-exporter-otlp-proto-grpc`, `pip install -r ... test-requirements-0.txt` gets called (line [226](https://gist.github.com/ocelotl/2690254b7fc2aa0b04af054e04248949#file-tox-rvvve-py38-proto3-opentelemetry-exporter-otlp-proto-grpc-txt-L226)):

```

py38-proto3-opentelemetry-exporter-otlp-proto-grpc: 15305 I exit 0 (9.53 seconds) /home/tigre/github/ocelotl/opentelemetry-python> pip install /home/tigre/github/ocelotl/opentelemetry-python/opentelemetry-api /home/tigre/github/ocelotl/opentelemetry-python/opentelemetry-semantic-conventions /home/tigre/github/ocelotl/opentelemetry-python/opentelemetry-sdk /home/tigre/github/ocelotl/opentelemetry-python/tests/opentelemetry-test-utils pid=433928 [tox/execute/api.py:280]

py38-proto3-opentelemetry-exporter-otlp-proto-grpc: 15306 W commands_pre[2]> pip install -r /home/tigre/github/ocelotl/opentelemetry-python/exporter/opentelemetry-exporter-otlp-proto-grpc/test-requirements-0.txt [tox/tox_env/api.py:425]

```

But when running `tox -rvvve py38-proto4-opentelemetry-exporter-otlp-proto-grpc` it doesn't, it jumps straight to calling `pytest` (line [226](https://gist.github.com/ocelotl/2690254b7fc2aa0b04af054e04248949#file-tox-rvvve-py38-proto4-opentelemetry-exporter-otlp-proto-grpc-txt-L226)):

```

py38-proto4-opentelemetry-exporter-otlp-proto-grpc: 16148 I exit 0 (10.33 seconds) /home/tigre/github/ocelotl/opentelemetry-python> pip install /home/tigre/github/ocelotl/opentelemetry-python/opentelemetry-api /home/tigre/github/ocelotl/opentelemetry-python/opentelemetry-semantic-conventions /home/tigre/github/ocelotl/opentelemetry-python/opentelemetry-sdk /home/tigre/github/ocelotl/opentelemetry-python/tests/opentelemetry-test-utils pid=436384 [tox/execute/api.py:280]

py38-proto4-opentelemetry-exporter-otlp-proto-grpc: 16149 W commands[0]> pytest /home/tigre/github/ocelotl/opentelemetry-python/exporter/opentelemetry-exporter-otlp-proto-grpc/tests [tox/tox_env/api.py:425]

```

## Environment

Provide at least:

- OS:

`lsb_release -a`

```

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 22.04.3 LTS

Release: 22.04

Codename: jammy

```

`tox --version`

```

4.14.1 from /home/tigre/.pyenv/versions/3.11.2/lib/python3.11/site-packages/tox/__init__.py

```

`pip list`

```

Package Version Editable project location

---------------------------------- ---------- -----------------------------------------------------------------

argcomplete 3.0.8

asttokens 2.2.1

attrs 23.1.0

autopep8 2.0.3

backcall 0.2.0

backoff 2.2.1

beartype 0.16.4

behave 1.2.6

black 23.3.0

bleach 6.0.0

boolean.py 4.0

build 0.10.0

cachetools 5.3.2

certifi 2022.12.7

chardet 5.2.0

charset-normalizer 3.1.0

click 8.1.3

cmake 3.26.3

colorama 0.4.6

colorlog 6.7.0

commonmark 0.9.1

cyclonedx-bom 3.11.7

cyclonedx-python-lib 3.1.5

decorator 5.1.1

distlib 0.3.8

docutils 0.20.1

elementpath 4.1.5

executing 1.2.0

fancycompleter 0.9.1

filelock 3.13.1

flake8 6.0.0

fsspec 2023.4.0

gensim 4.3.1

grpcio 1.59.0

grpcio-tools 1.59.0

huggingface-hub 0.14.1

idna 3.4

importlib-metadata 6.0.1

iniconfig 2.0.0

ipdb 0.13.11

ipython 8.10.0

isodate 0.6.1

isort 5.12.0

jedi 0.18.2

Jinja2 3.1.2

joblib 1.2.0

jsonschema 4.20.0

jsonschema-specifications 2023.11.1

license-expression 30.1.1

lit 16.0.2

Markdown 3.5.1

marko 1.3.0

MarkupSafe 2.1.2

matplotlib-inline 0.1.6

mccabe 0.7.0

mistletoe 1.3.0

mpmath 1.3.0

mypy-extensions 1.0.0

networkx 3.1

nltk 3.8.1

nox 2023.4.22

numpy 1.24.3

nvidia-cublas-cu11 11.10.3.66

nvidia-cuda-cupti-cu11 11.7.101

nvidia-cuda-nvrtc-cu11 11.7.99

nvidia-cuda-runtime-cu11 11.7.99

nvidia-cudnn-cu11 8.5.0.96

nvidia-cufft-cu11 10.9.0.58

nvidia-curand-cu11 10.2.10.91

nvidia-cusolver-cu11 11.4.0.1

nvidia-cusparse-cu11 11.7.4.91

nvidia-nccl-cu11 2.14.3

nvidia-nvtx-cu11 11.7.91

openspecification 0.0.1

opentelemetry-api 0.0.0 /home/tigre/github/ocelotl/opentelemetry-python/opentelemetry-api

opentelemetry-sdk 0.0.0

opentelemetry-semantic-conventions 0.0.0

packageurl-python 0.11.2

packaging 23.2

pandas 2.0.1

parse 1.19.1

parse-type 0.6.2

parso 0.8.3

pathspec 0.11.1

pdbpp 0.10.3

pexpect 4.8.0

pickleshare 0.7.5

Pillow 9.5.0

pip 24.0

pip-requirements-parser 32.0.1

platformdirs 4.2.0

pluggy 1.4.0

ply 3.11

prompt-toolkit 3.0.36

protobuf 4.24.3

psycopg2-binary 2.9.9

ptyprocess 0.7.0

pure-eval 0.2.2

py 1.11.0

pycodestyle 2.10.0

pyflakes 3.0.1

Pygments 2.14.0

pyparsing 3.1.1

pyproject-api 1.6.1

pyproject_hooks 1.0.0

pyrepl 0.9.0

pytest 7.3.1

python-dateutil 2.8.2

python-frontmatter 1.1.0

pytz 2023.3

PyYAML 5.1

rdflib 7.0.0

readme-renderer 36.0

referencing 0.31.0

regex 2023.5.4

requests 2.29.0

restview 3.0.1

rpds-py 0.13.1

sacremoses 0.0.53

sbom 2023.10.7

scikit-learn 1.3.0

scipy 1.10.1

semantic-version 2.10.0

sentence-transformers 2.2.2

sentencepiece 0.1.99

setuptools 65.5.0

six 1.16.0

smart-open 6.3.0

sortedcontainers 2.4.0

spdx-tools 0.8.2

specification_parser 0.0.1

stack-data 0.6.2

sympy 1.11.1

threadpoolctl 3.1.0

tokenizers 0.13.3

toml 0.10.2

torch 2.0.0

torchvision 0.15.1

tox 4.14.1

tqdm 4.65.0

traitlets 5.9.0

transformers 4.28.1

triton 2.0.0

typer 0.9.0

typing_extensions 4.5.0

tzdata 2023.3

uritools 4.0.2

urllib3 1.26.15

virtualenv 20.25.0

wcwidth 0.2.6

webencodings 0.5.1

Werkzeug 0.16.1

wheel 0.40.0

wmctrl 0.5

wrapt 1.15.0

xmlschema 2.5.0

xmltodict 0.13.0

zipp 3.15.0

```

## Minimal example

Check out https://github.com/open-telemetry/opentelemetry-python/pull/3746.

Run `tox -rvvve py38-proto3-opentelemetry-exporter-otlp-proto-grpc` (it should work)

Run `tox -rvvve py38-proto4-opentelemetry-exporter-otlp-proto-grpc` (it should fail)

Sorry if this ends up being a dumb mistake on my part, but I just can't figure out why this is happening.

To make things even more confusing, I have a very similar PR, everything worked perfectly there: https://github.com/open-telemetry/opentelemetry-python/pull/3742

|

closed

|

2024-03-21T01:12:26Z

|

2024-04-12T20:43:57Z

|

https://github.com/tox-dev/tox/issues/3249

|

[] |

ocelotl

| 2

|

strawberry-graphql/strawberry

|

asyncio

| 3,613

|

Hook for new results in subscription (and also on defer/stream)

|

From #3554

|

open

|

2024-09-02T17:57:36Z

|

2025-03-20T15:56:51Z

|

https://github.com/strawberry-graphql/strawberry/issues/3613

|

[

"feature-request"

] |

patrick91

| 0

|

qwj/python-proxy

|

asyncio

| 112

|

It works from command line but not from within a python script

|

Hello, thanks for pproxy, it's very useful. One problem I am facing is that the following command works well (I can browse the internet normally)...

```

C:\tools>pproxy -l http://127.0.0.1:12345 -r socks5://127.0.0.1:9050

Serving on 127.0.0.1:12345 by http

```

...but the following script, with the same schemes and settings, doesn't work (I get the error at the end of this issue):

```

import asyncio
import pproxy

server = pproxy.Server('http://127.0.0.1:12345')
remote = pproxy.Connection('socks5://127.0.0.1:9050')
args = dict(rserver=[remote],
            verbose=print)

loop = asyncio.get_event_loop()
handler = loop.run_until_complete(server.start_server(args))
try:
    loop.run_forever()
except KeyboardInterrupt:
    print('exit!')

handler.close()
loop.run_until_complete(handler.wait_closed())
loop.run_until_complete(loop.shutdown_asyncgens())
loop.close()

```

The error I got:

`http_accept() missing 1 required positional argument: 'httpget' from 127.0.0.1`

|

closed

|

2021-02-22T20:05:16Z

|

2021-02-23T14:42:44Z

|

https://github.com/qwj/python-proxy/issues/112

|

[] |

analyserdmz

| 2

|

aleju/imgaug

|

deep-learning

| 186

|

Using matplotlib to show images is inconvenient on some Linux platforms

|

When running pip install, errors such as the following appear:

Collecting matplotlib>=2.0.0 (from scikit-image>=0.11.0->imgaug==0.2.6)

Downloading http://10.123.98.50/pypi/web/packages/ec/06/def4fb2620cbe671ba0cb6462cbd8653fbffa4acd87d6d572659e7c71c13/matplotlib-3.0.0.tar.gz (36.3MB)

100% |################################| 36.3MB 101.1MB/s

Complete output from command python setup.py egg_info:

Matplotlib 3.0+ does not support Python 2.x, 3.0, 3.1, 3.2, 3.3, or 3.4.

Beginning with Matplotlib 3.0, Python 3.5 and above is required.

This may be due to an out of date pip.

Make sure you have pip >= 9.0.1.
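The message states the constraint directly: Matplotlib 3.0+ needs Python 3.5 or newer. A minimal sketch of picking a compatible pin for a given interpreter version, based only on that stated constraint and the `matplotlib>=2.0.0` requirement shown in the log; the helper name is hypothetical and this is not imgaug's own setup logic.

```python
import sys
from typing import Tuple


def matplotlib_requirement(version: Tuple[int, int]) -> str:
    """Return a pip requirement string compatible with the given Python version."""
    # Per the error message: Matplotlib 3.0+ drops Python 2.x and 3.0 to 3.4.
    if version < (3, 5):
        return "matplotlib>=2.0.0,<3.0"
    return "matplotlib>=2.0.0"


print(matplotlib_requirement(sys.version_info[:2]))
```

The same rule can be written declaratively with a PEP 508 environment marker, e.g. `matplotlib<3.0; python_version < "3.5"`, so that older interpreters never try to build Matplotlib 3.x.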

|

open

|

2018-09-26T09:19:44Z

|

2018-10-02T19:27:56Z

|

https://github.com/aleju/imgaug/issues/186

|

[] |

sanren99999

| 1

|

scikit-optimize/scikit-optimize

|

scikit-learn

| 615

|

TypeError: %d format: a number is required

|

Here is my example:

```python

from skopt import Optimizer
from skopt.utils import dimensions_aslist
from skopt.space import Integer, Categorical, Real

NN = {
    'activation': Categorical(['identity', 'logistic', 'tanh', 'relu']),
    'solver': Categorical(['adam', 'sgd', 'lbfgs']),
    'learning_rate': Categorical(['constant', 'invscaling', 'adaptive']),
    'hidden_layer_sizes': Categorical([(100,100)])
}
listified_space = dimensions_aslist(NN)
acq_optimizer_kwargs = {'n_points': 20, 'n_restarts_optimizer': 5, 'n_jobs': 3}
acq_func_kwargs = {'xi': 0.01, 'kappa': 1.96}
optimizer = Optimizer(listified_space, base_estimator='gp', n_initial_points=10,
                      acq_func='EI', acq_optimizer='auto', random_state=None,
                      acq_optimizer_kwargs=acq_optimizer_kwargs, acq_func_kwargs=acq_func_kwargs)
rand_xs = []
for n in range(10):
    rand_xs.append(optimizer.ask())
rand_ys = [1,2,3,4,5,6,7,8,9,10]
print(rand_xs)
print(rand_ys)
optimizer.tell(rand_xs, rand_ys)

```

Running with `acq_optimizer='lbfgs'` I was seeing `ValueError: The regressor <class 'skopt.learning.gaussian_process.gpr.GaussianProcessRegressor'> should run with acq_optimizer='sampling'.` But by tracing the Optimizer's `_check_arguments()` code I was able to figure out that my base_estimator must simply not have gradients in this Categoricals-only case. Changing to `acq_optimizer='auto'` solves that problem.

But now I see a new `TypeError: %d format: a number is required` thrown deep inside skopt/learning/gaussian_process/kernels.py. *If I print the transformed space before it gets passed to the Gaussian process, I see that it isn't really transformed at all: It still has strings in it!*

Adding a numerical dimension like `alpha: Real(0.0001, 0.001, prior='log-uniform')` causes the construction and the `.tell()` to succeed because the transformed space is then purely numerical.

*So the way purely-categorical spaces are transformed should be updated.*
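For reference, one common way to turn a purely categorical space into the numeric vectors a Gaussian process expects is one-hot encoding. This is a standalone sketch using the same `NN` choices (ordered here by sorted key name), not skopt's internal transformer; `one_hot_encode` is a hypothetical name.

```python
from typing import Any, List, Sequence


def one_hot_encode(point: Sequence[Any], categories: Sequence[Sequence[Any]]) -> List[float]:
    """Encode one point of a categorical-only space as a flat 0/1 vector."""
    encoded: List[float] = []
    for value, choices in zip(point, categories):
        vector = [0.0] * len(choices)
        vector[list(choices).index(value)] = 1.0  # raises ValueError for an unknown value
        encoded.extend(vector)
    return encoded


SPACE = [
    ['identity', 'logistic', 'tanh', 'relu'],   # activation
    [(100, 100)],                               # hidden_layer_sizes
    ['constant', 'invscaling', 'adaptive'],     # learning_rate
    ['adam', 'sgd', 'lbfgs'],                   # solver
]
```

With this encoding every point becomes an 11-dimensional vector of 0s and 1s, so a kernel never sees strings.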

Or there is another possibility: Does it even make sense to try to do Bayesian optimization on a purely categorical space like this? Say I try setting (A,B), setting (A,C), setting (X,Z), and setting (Y,Z). For the sake of argument say (A,B) does better than (A,C) and (X,Z) does better than (Y,Z). Can we then suppose (X,B) will do better than (Y,C)? Who is to say (Y,C) isn't a super-combination or that the gains we seem to see from varying the first parameter to X or the second to B are unrelated? It seems potentially dangerous to reason this way, so perhaps the intent is that no Bayesian optimization should be possible in purely Categorical spaces. If this is the case, an error should be thrown early to say this. Furthermore, if this is correct, then how are point-values in Categorical dimensions decided during optimization? Are the numerical parameters optimized while Categoricals are selected at random?

On reflection, the previous paragraph seems wrong: If I try many examples with some parameter set to some value and observe a pattern of poor performance, I can update my beliefs to say "This is a bad setting". It shouldn't matter whether a parameter is Categorical or not; Bayesian optimization should be equally powerful in all cases.

|

closed

|

2018-01-25T14:28:05Z

|

2018-04-19T12:09:11Z

|

https://github.com/scikit-optimize/scikit-optimize/issues/615

|

[] |

pavelkomarov

| 1

|

ufoym/deepo

|

tensorflow

| 120

|

Building wheel for torchvision (setup.py): finished with status 'error'

|

`$ docker build -f Dockerfile.pytorch-py36-cpu .`

Collecting git+https://github.com/pytorch/vision.git

Cloning https://github.com/pytorch/vision.git to /tmp/pip-req-build-8_0m83s6

Running command git clone -q https://github.com/pytorch/vision.git /tmp/pip-req-build-8_0m83s6

Requirement already satisfied, skipping upgrade: numpy in /usr/local/lib/python3.6/dist-packages (from torchvision==0.5.0a0+7c9bbf5) (1.17.1)

Requirement already satisfied, skipping upgrade: six in /usr/local/lib/python3.6/dist-packages (from torchvision==0.5.0a0+7c9bbf5) (1.12.0)

Requirement already satisfied, skipping upgrade: torch in /usr/local/lib/python3.6/dist-packages (from torchvision==0.5.0a0+7c9bbf5) (1.2.0)

Collecting pillow>=4.1.1 (from torchvision==0.5.0a0+7c9bbf5)

Downloading https://files.pythonhosted.org/packages/14/41/db6dec65ddbc176a59b89485e8cc136a433ed9c6397b6bfe2cd38412051e/Pillow-6.1.0-cp36-cp36m-manylinux1_x86_64.whl (2.1MB)

Building wheels for collected packages: torchvision

Building wheel for torchvision (setup.py): started

Building wheel for torchvision (setup.py): finished with status 'error'

ERROR: Command errored out with exit status 1:

command: /usr/local/bin/python -u -c 'import sys, setuptools, tokenize; sys.argv[0] = '"'"'/tmp/pip-req-build-8_0m83s6/setup.py'"'"'; __file__='"'"'/tmp/pip-req-build-8_0m83s6/setup.py'"'"';f=getattr(tokenize, '"'"'open'"'"', open)(__file__);code=f.read().replace('"'"'\r\n'"'"', '"'"'\n'"'"');f.close();exec(compile(code, __file__, '"'"'exec'"'"'))' bdist_wheel -d /tmp/pip-wheel-yob68akv --python-tag cp36

cwd: /tmp/pip-req-build-8_0m83s6/

Complete output (513 lines):

Building wheel torchvision-0.5.0a0+7c9bbf5

running bdist_wheel

running build

running build_py

creating build

creating build/lib.linux-x86_64-3.6

creating build/lib.linux-x86_64-3.6/torchvision

copying torchvision/utils.py -> build/lib.linux-x86_64-3.6/torchvision

copying torchvision/extension.py -> build/lib.linux-x86_64-3.6/torchvision

...

Thank you.

|

closed

|

2019-09-02T08:47:29Z

|

2019-11-19T11:24:47Z

|

https://github.com/ufoym/deepo/issues/120

|

[] |

oiotoxt

| 1

|

PokeAPI/pokeapi

|

graphql

| 536

|

Normalized entities in JSON data?

|

I asked about this in the Slack channel but didn't get any responses. I looked through the documentation but couldn't find any mention of this; is it possible to ask an endpoint for normalized entity data? Currently the entity relationships are eagerly fetched, so, for example, a berry has a list of flavors, and the flavor entities are included in the json payload for the berry entity.

```jsonc

{

"id": 1,

"name": "cheri",

/*... snip ... */

"flavors": [

{

"potency": 10,

"flavor": {

"name": "spicy",

"url": "https://pokeapi.co/api/v2/berry-flavor/1/"

}

}

],

}

```

I would like to be able to send a query parameter or header that asks the endpoint to return references to relations instead of nesting them:

```jsonc

{

"id": 1,

"name": "cheri",

/*... snip ... */

"flavors": [

"https://pokeapi.co/api/v2/berry-flavor/1/",

],

}

```

Of course this requires more API requests to fetch the data the client needs, and I know PokeAPI relies heavily on caching. However, it's easier to parse if you are using a stateful client UI (since the client state should already be normalized), and it uses less bandwidth. If the service uses HTTP/2, the latency cost of multiple requests is mostly mitigated. Is this something that is supported, or that could possibly be supported in the future? I'd like to use PokeAPI in examples when I'm coaching, but normalizing JSON payloads adds complexity that I'd rather not focus on (payload normalization is its own topic of study).
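Until such an option exists, the flattening can be done on the client. A minimal sketch that turns the nested `flavors` entries into the URL-only form requested above; `normalize_berry` is a hypothetical name, and dropping `potency` simply mirrors the requested output.

```python
import copy
from typing import Any, Dict


def normalize_berry(berry: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of a berry payload with each flavor replaced by its URL."""
    result = copy.deepcopy(berry)  # leave the caller's payload untouched
    result["flavors"] = [entry["flavor"]["url"] for entry in berry.get("flavors", [])]
    return result
```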

|

closed

|

2020-10-25T16:08:23Z

|

2020-12-27T18:27:14Z

|

https://github.com/PokeAPI/pokeapi/issues/536

|

[] |

parkerault

| 2

|

matterport/Mask_RCNN

|

tensorflow

| 2,392

|

Create environment

|

Could anyone help me create the environment in Anaconda? I've tried it in different ways and it shows an error. I'm trying to create an environment using Python 3.4, TensorFlow 1.15.3 and Keras 2.2.4.

|

open

|

2020-10-16T11:10:13Z

|

2022-03-08T14:59:50Z

|

https://github.com/matterport/Mask_RCNN/issues/2392

|

[] |

teixeirafabiano

| 3

|

cupy/cupy

|

numpy

| 8,269

|

CuPy v14 Release Plan

|

## Roadmap

```[tasklist]

## Planned Features

- [ ] #8306

- [ ] `complex32` support for limited APIs (restart [#4454](https://github.com/cupy/cupy/pull/4454))

- [ ] Support bfloat16

- [ ] Structured Data Type

- [ ] #8013

- [ ] #6986

- [ ] Drop support for Python 3.9 following [SPEC 0](https://scientific-python.org/specs/spec-0000/)

- [ ] https://github.com/cupy/cupy/issues/8215

```

```[tasklist]

## Timeline & Release Manager

- [ ] **v14.0.0a1** (Mar 2025)

- [ ] v14.0.0a2 (TBD)

- [ ] **v14.0.0b1** (TBD)

- [ ] **v14.0.0rc1** (TBD)

- [ ] **v14.0.0** (TBD)

```

## Notes

* Starting in the CuPy v13 development cycle, we have adjusted our release frequency to once every two months. Mid-term or hot-fix releases may be provided depending on necessity, such as for new CUDA/Python version support or critical bug fixes.

* The schedule and planned features may be subject to change depending on the progress of the development.

* `v13.x` releases will only contain backported pull-requests (mainly bug-fixes) from the v14 (`main`) branch.

## Past Release Plans

* v9: https://github.com/cupy/cupy/issues/3891

* v10: https://github.com/cupy/cupy/issues/5049

* v11: https://github.com/cupy/cupy/issues/6246

* v12: https://github.com/cupy/cupy/issues/6866

* v13: https://github.com/cupy/cupy/issues/7555

|

open

|

2024-04-03T02:51:19Z

|

2025-02-13T08:02:10Z

|

https://github.com/cupy/cupy/issues/8269

|

[

"issue-checked"

] |

asi1024

| 1

|

matplotlib/matplotlib

|

data-visualization

| 28,931

|

[Bug]: plt.savefig incorrectly discarded z-axis label when saving Line3D/Bar3D/Surf3D images using bbox_inches='tight'

|

### Bug summary

I drew a 3D bar graph in Python 3 using ax.bar3d and then saved the image to PDF with plt.savefig(..., bbox_inches='tight'), but the z-axis label was missing from the PDF. Note that everything worked fine after switching matplotlib to version 3.5.0, without changing my Python code.

### Code for reproduction

```Python

import matplotlib.pyplot as plt
import numpy as np
from matplotlib.ticker import MultipleLocator


def plot_3D_bar(x_data, data, save_path, x_label, y_label, z_label,
                var_orient='horizon'):
    if var_orient != 'vertical' and var_orient != 'horizon':
        print('plot_figure var_orient error!')
        exit(1)
    if var_orient == 'vertical':
        data = list(map(list, zip(*data)))
    x_number = len(data[0])
    y_number = len(data)
    if len(x_data) != x_number:
        exit(1)
    x_data_label = ['A', 'B', 'C', 'D', 'E', 'F', 'G']
    if len(x_data_label) % x_number != 0:
        exit(1)
    if y_number == 1:
        label = ['label1']
    elif y_number == 2:
        label = ['label1', 'label2']
    elif y_number == 3:
        label = ['l1', 'l2', 'l3']
    else:
        label = []
    if y_number == 1:
        color = ['green']
    elif y_number == 2:
        color = ['green', 'red']
    elif y_number == 3:
        color = ['green', 'red', 'blue']
    else:
        color = []
    if y_number == 1:
        hatch = ['x']
    elif y_number == 2:
        hatch = ['x', '.']
    elif y_number == 3:
        hatch = ['x', '.', '|']
    else:
        hatch = []
    if y_number != len(label) or y_number != len(color) or \
            y_number != len(hatch):
        exit(1)
    # plt.title('title')
    plt.rc('text', usetex=False)
    plt.rc('font', family='Times New Roman', size=15)
    font1 = {'family': 'Times New Roman', 'weight': 'normal', 'size': 15}
    bar_width_x = 0.5
    dx = [bar_width_x for _ in range(x_number)]
    bar_width_y = 0.2
    dy = [bar_width_y for _ in range(x_number)]
    ax = plt.subplot(projection='3d')
    ax.xaxis.set_major_locator(MultipleLocator(1))
    ax.yaxis.set_major_locator(MultipleLocator(1))
    ax.yaxis.set_minor_locator(MultipleLocator(0.5))
    ax.zaxis.set_major_locator(MultipleLocator(5))
    ax.tick_params(labelrotation=0)
    ax.set_xticks(x_data)
    ax.set_xticklabels(x_data_label, rotation=0)
    ax.set_yticks(np.arange(y_number)+1)
    ax.set_yticklabels(label, rotation=0)
    ax.set_xlabel(x_label, fontweight='bold', size=15)
    # ax.set_xlim([0, 8])
    ax.set_ylabel(y_label, fontweight='bold', size=15)
    # ax.set_ylim([0, 4])
    ax.set_zlabel(z_label, fontweight='bold', size=15)
    ax.set_zlim([0, 25])
    min_x = [0 for _ in range(y_number)]
    min_z = [0 for _ in range(y_number)]
    for _ in range(y_number):
        min_z[_] = np.min(data[_])
        for __ in range(x_number):
            if data[_][__] == min_z[_]:
                min_x[_] = x_data[__]
        min_z[_] = round(min_z[_], 2)
        y_list = [_+1-1/2*bar_width_y for __ in range(x_number)]
        z_list = [0 for __ in range(x_number)]
        ax.bar3d(x_data, y_list, z_list,
                 dx=dx, dy=dy, dz=data[_], label=label[_],
                 color=color[_], edgecolor='k', hatch=hatch[_])
        ax.text(x=min_x[_], y=_+1, z=min_z[_]+3, s='%.2f' % min_z[_],
                horizontalalignment='center', verticalalignment='top',
                backgroundcolor='white', zorder=5,
                fontsize=10, color='k', fontweight='bold')
    plt.grid(axis='both', color='gray', linestyle='-')
    fig = plt.gcf()
    fig.set_size_inches(4, 4)
    if save_path is not None:
        plt.savefig(save_path, bbox_inches='tight')
    plt.show()


x_data = [1, 2, 3, 4, 5, 6, 7]
y_data = [[3, 13, 1, 7, 9, 10, 2],
          [6, 3, 9, 4, 9, 20, 9],
          [7, 9, 5, 8, 5, 3, 9]]
plot_3D_bar(x_data, y_data, 'figure.pdf', '\nxlabel',
            '\nylabel', '\nzlabel', 'horizon')

```

### Actual outcome

There is no Z-axis label in the saved figure.pdf

[figure.pdf](https://github.com/user-attachments/files/17243046/figure.pdf)

### Expected outcome

Saved figure.pdf should contain the z-axis label

[figure.pdf](https://github.com/user-attachments/files/17243055/figure.pdf)

### Additional information

_No response_

### Operating system

Ubuntu 20.04

### Matplotlib Version

3.9.2

### Matplotlib Backend

_No response_

### Python version

_No response_

### Jupyter version

_No response_

### Installation

pip

|

closed

|

2024-10-03T11:05:30Z

|

2024-10-03T14:17:50Z

|

https://github.com/matplotlib/matplotlib/issues/28931

|

[

"status: duplicate"

] |

NJU-ZAD

| 5

|

JoeanAmier/TikTokDownloader

|

api

| 383

|

Unhandled exception: with the packaged exe, running 6, 1, 1 retrieves the video count, but downloading fails

|

The error is below. I'm using the packaged version. Is this a settings configuration problem? Could you please take a look? Thanks!

TikTokDownloader V5.5

Traceback (most recent call last):

File "main.py", line 19, in <module>

File "asyncio\runners.py", line 194, in run

File "asyncio\runners.py", line 118, in run

File "asyncio\base_events.py", line 686, in run_until_complete

File "main.py", line 10, in main

File "src\application\TikTokDownloader.py", line 355, in run

File "src\application\TikTokDownloader.py", line 244, in main_menu

File "src\application\TikTokDownloader.py", line 324, in compatible

File "src\application\TikTokDownloader.py", line 251, in complete

File "src\application\main_complete.py", line 1709, in run

File "src\application\main_complete.py", line 232, in account_acquisition_interactive

File "src\application\main_complete.py", line 260, in __secondary_menu

File "src\application\main_complete.py", line 263, in account_detail_batch

File "src\application\main_complete.py", line 299, in __account_detail_batch

File "src\application\main_complete.py", line 445, in deal_account_detail

File "src\application\main_complete.py", line 571, in _batch_process_detail

TypeError: cannot unpack non-iterable NoneType object

[PYI-6840:ERROR] Failed to execute script 'main' due to unhandled exception!
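The last frame, `TypeError: cannot unpack non-iterable NoneType object`, means a tuple unpacking such as `a, b = f()` received `None`. A minimal sketch of that failure mode and a guard, assuming (hypothetically) that a detail-fetching helper returns `None` on a failed request instead of a `(items, meta)` tuple; `fetch_detail` and `process_detail` are illustrative names, not TikTokDownloader's actual code:

```python
from typing import Optional, Tuple


def fetch_detail(ok: bool) -> Optional[Tuple[list, dict]]:
    """Hypothetical fetcher: returns (items, meta) on success, None on failure."""
    if not ok:
        return None  # e.g. request blocked or cookie expired
    return ["video-1", "video-2"], {"count": 2}


def process_detail(ok: bool) -> list:
    """Guarded caller: checks for None before unpacking."""
    result = fetch_detail(ok)
    if result is None:
        return []  # report the failure instead of crashing
    items, _meta = result
    return items


def demo_unguarded() -> str:
    """Reproduces the error by unpacking None directly."""
    try:
        items, meta = fetch_detail(False)
    except TypeError as exc:
        return str(exc)
    return "no error"
```

In the real program, the useful question is therefore why the function at `main_complete.py` line 571 got `None` (for example an empty API response), rather than the unpacking itself.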

|

closed

|

2025-01-22T03:25:34Z

|

2025-01-22T07:18:57Z

|

https://github.com/JoeanAmier/TikTokDownloader/issues/383

|

[] |

WaymonHe

| 2

|

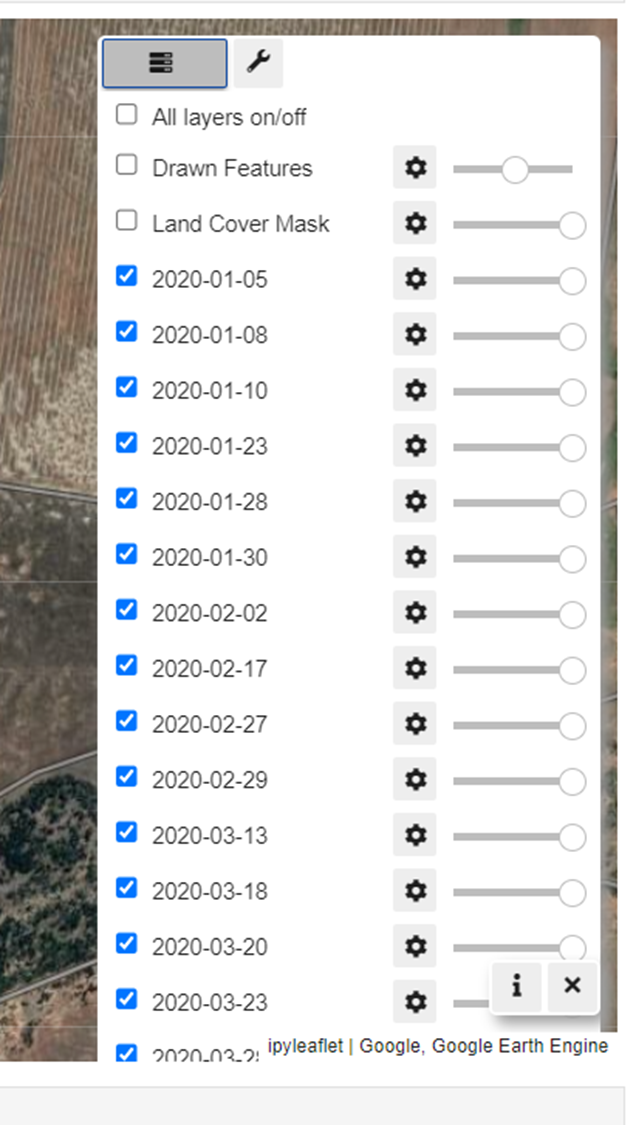

gee-community/geemap

|

streamlit

| 1,180

|

Scrolling for layer panel

|

Hi,

Is there a way to scroll down the layer panel when we have a lot of layers?

Thanks,

Daniel

|

closed

|

2022-08-07T04:54:23Z

|

2022-08-08T01:37:02Z

|

https://github.com/gee-community/geemap/issues/1180

|

[

"Feature Request"

] |

Daniel-Trung-Nguyen

| 1

|

coqui-ai/TTS

|

python

| 4,162

|

[Bug] Python 3.12.0

|

### Describe the bug

Hi,

TTS does not support my Python version: 3.12.0 on Cygwin and 3.12 on Windows 10.

Also, pip does not find TTS when I try to install it with `pip install TTS`.
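As far as I know, the last Coqui `TTS` release on PyPI (0.22.0) declares `Requires-Python: >=3.9.0, <3.12`, so on Python 3.12 pip finds no installable release and reports that no matching distribution exists. A small sketch that checks an interpreter version against such a range; the bounds below are an assumption and should be checked against the package's PyPI metadata:

```python
import sys
from typing import Tuple

# Assumed supported range for the TTS package (verify on PyPI).
MIN_VERSION = (3, 9)
MAX_EXCLUSIVE = (3, 12)


def is_supported(version: Tuple[int, ...] = tuple(sys.version_info[:3])) -> bool:
    """Return True if major.minor of `version` lies in [MIN_VERSION, MAX_EXCLUSIVE)."""
    return MIN_VERSION <= tuple(version[:2]) < MAX_EXCLUSIVE


if not is_supported():
    print(f"Python {sys.version.split()[0]} is outside the assumed range; "
          "try a 3.9 to 3.11 interpreter, e.g. in a virtual environment.")
```

If the check fails, installing TTS into a separate environment created with an older interpreter avoids the resolver error.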

### To Reproduce

I tried installing both from pip and manually.

### Expected behavior

_No response_

### Logs

```shell

```

### Environment

```shell

python 3.12 & 3.12.0

```

### Additional context

_No response_

|

open

|

2025-02-27T18:45:18Z

|

2025-03-06T16:03:13Z

|

https://github.com/coqui-ai/TTS/issues/4162

|

[

"bug"

] |

TDClarke

| 4

|

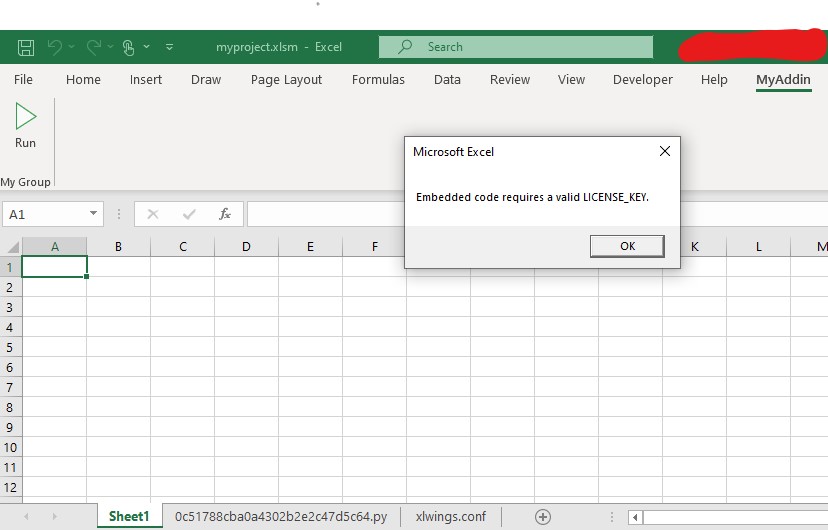

xlwings/xlwings

|

automation

| 2,150

|

Unable to embed code using version 0.28.9

|

#### OS Windows 10

#### Versions of xlwings 0.28.9, Excel LTSC Professional Plus 2021 version 2108 and Python 3.8.6

#### I was able to embed my py code using the same setup with xlwings version 0.28.8. But it's not working with the latest version. I used noncommercial license of xlwings Pro with both versions.

### How can I downgrade to version 0.28.8?

#### The files and screenshot are attached.

### Only the following commands were used. The embedded code was working with xlwings version 0.28.8, but not working anymore with version 0.28.9

```python

# pip install xlwings

# xlwings license update -k noncommercial

# xlwings quickstart myproject --addin --ribbon

# xlwings code embed

```

[myproject.zip](https://github.com/xlwings/xlwings/files/10485543/myproject.zip)
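On the downgrade question: pip installs an exact release when given a `==` pin, so `pip install xlwings==0.28.8` requests that version. If you prefer to do it from Python, a small sketch that builds the same command for the interpreter currently running (the helper names are hypothetical):

```python
import subprocess
import sys
from typing import List


def pin_command(package: str, version: str) -> List[str]:
    """Build a pip command that installs exactly `package==version`
    into the interpreter running this script."""
    return [sys.executable, "-m", "pip", "install", f"{package}=={version}"]


def pin(package: str, version: str) -> int:
    """Run the pip command and return pip's exit code."""
    return subprocess.call(pin_command(package, version))

# Usage (not executed here): pin("xlwings", "0.28.8")
```

Using `sys.executable -m pip` ensures the package lands in the same environment Excel's add-in is configured to use, rather than whichever `pip` happens to be first on PATH.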

|

closed

|

2023-01-22T19:36:29Z

|

2023-01-25T00:54:53Z

|

https://github.com/xlwings/xlwings/issues/2150

|

[] |

MansuraKhanom

| 4

|

encode/uvicorn

|

asyncio

| 1,228

|

Support Custom HTTP implementations protocols

|

### Checklist

<!-- Please make sure you check all these items before submitting your feature request. -->

- [x] There are no similar issues or pull requests for this yet.

- [ ] I discussed this idea on the [community chat](https://gitter.im/encode/community) and feedback is positive.

### Is your feature related to a problem? Please describe.

<!-- A clear and concise description of what you are trying to achieve.

Eg "I want to be able to [...] but I can't because [...]". -->

I want to be able to use a custom HTTP protocol implementation by providing its module path, e.g. `mypackage.http.MyHttpProtocol`.

## Describe the solution you would like.

<!-- A clear and concise description of what you would want to happen.

For API changes, try to provide a code snippet of what you would like the API to look like.

-->

Uvicorn would import that path and use it as the HTTP implementation. Specifically, I want to be able to use a fully Cython-based HTTP implementation built on httptools, for efficiency and speed.
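Resolving a dotted path like `mypackage.http.MyHttpProtocol` to a class only needs `importlib`. A minimal sketch of that step; `import_by_path` is a hypothetical helper (uvicorn has its own `uvicorn.importer.import_from_string`, which expects a `module:attribute` string instead of a purely dotted one):

```python
import importlib
from typing import Any


def import_by_path(dotted_path: str) -> Any:
    """Import `pkg.module.Attr` and return `Attr`.

    Everything before the last dot is treated as the module path;
    the final component is looked up as an attribute of that module.
    """
    module_path, _, attr_name = dotted_path.rpartition(".")
    if not module_path:
        raise ImportError(f"{dotted_path!r} is not a dotted path")
    module = importlib.import_module(module_path)
    try:
        return getattr(module, attr_name)
    except AttributeError as exc:
        raise ImportError(
            f"module {module_path!r} has no attribute {attr_name!r}"
        ) from exc
```

Splitting on the last dot keeps the rule simple, at the cost of not supporting nested attributes such as `module.Class.Inner`.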

|

closed

|

2021-10-24T06:18:35Z

|

2021-10-28T01:24:38Z

|

https://github.com/encode/uvicorn/issues/1228

|

[] |

kumaraditya303

| 1

|

ranaroussi/yfinance

|

pandas

| 2,200

|

yfinance - Unable to retrieve Ticker earnings_dates

|

### Describe bug

Unable to retrieve Ticker earnings_dates

For example, for NVDA, yfinance returns the message: $NVDA: possibly delisted; no earnings dates found

The same happens for AMD and apparently all other valid ticker symbols: $AMD: possibly delisted; no earnings dates found

The methods available on a yf Ticker include both earnings_dates and an apparently newer entry called get_earnings_dates, but neither currently returns 1) a history of quarterly earnings report dates or 2) a list of future quarterly earnings report dates.

In the past, earnings_dates worked very well at retrieving both the earnings dates and the approximate release times, such as 8 AM or after 4 PM.

### Simple code that reproduces your problem

yf_tkr_obj = yf.Ticker("NVDA")

df_earnings_dates=yf_tkr_obj.earnings_dates

It appears that None is returned.
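Until the upstream behavior is fixed, calling code can at least treat a missing result explicitly instead of failing later on `None`. A minimal sketch, assuming only that `earnings_dates` may be `None` or an empty DataFrame-like object; `earnings_dates_or_none` is a hypothetical helper and `FakeTicker` is an offline stand-in for `yf.Ticker` so the sketch runs without network access:

```python
from typing import Any, Optional


class FakeTicker:
    """Offline stand-in for yf.Ticker, used only to exercise the helper."""

    def __init__(self, earnings_dates: Any) -> None:
        self.earnings_dates = earnings_dates


def earnings_dates_or_none(ticker: Any) -> Optional[Any]:
    """Return ticker.earnings_dates, or None when it is missing or empty."""
    dates = getattr(ticker, "earnings_dates", None)
    if dates is None:
        return None
    # pandas DataFrames expose `.empty`; plain containers fall back to len().
    is_empty = dates.empty if hasattr(dates, "empty") else len(dates) == 0
    return None if is_empty else dates

# Usage with the real library (needs network access):
#   import yfinance as yf
#   df = earnings_dates_or_none(yf.Ticker("NVDA"))
#   if df is None:
#       print("no earnings dates returned")
```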

### Debug log

Unable to supply this (I was on a different PC).

### Bad data proof

_No response_

### `yfinance` version

yfinance 0.2.51

### Python version

3.7

### Operating system

Debian Buster

|

closed

|

2025-01-02T19:58:24Z

|

2025-01-02T23:03:06Z

|

https://github.com/ranaroussi/yfinance/issues/2200

|

[] |

SymbReprUnlim

| 1

|

python-restx/flask-restx

|

flask

| 272

|

Remove default HTTP 200 response code in doc

|

**Ask a question**

Hi, I was wondering if it's possible to remove the default "200 - Success" response in the swagger.json?

My model:

```

user_model = api.model(
    "User",
    {
        "user_id": fields.String,
        "project_id": fields.Integer(default=1),
        "reward_points": fields.Integer(default=0),
        "rank": fields.String,
    },
)

```

The route:

```

@api.route("/")
class UserRouteList(Resource):
    @api.doc(model=user_model, body=user_model)
    @api.response(201, "User created", user_model)
    @api.marshal_with(user_model, code=201)
    def post(self):
        """Add user"""
        data = api.payload
        return (
            userDB.add(data),
            201
        )

```

**Additional context**

flask-restx version: 0.2.0

|

closed

|

2021-01-04T16:26:19Z

|

2021-02-01T12:29:09Z

|

https://github.com/python-restx/flask-restx/issues/272

|

[

"question"

] |

mateusz-chrzastek

| 2

|

flasgger/flasgger

|

api

| 585

|

a little miss

|

When I forget to install the `apispec` package, the page just returns an error: Internal Server Error.

There are no other hints, so I searched the source code: in `marshmallow_apispec.py`, some imports are wrapped so that if they fail, `Schema` is silently set to `None`.
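Falling back to `Schema = None` defers the failure to a later, opaque error. A minimal sketch of an alternative that remembers the import error and re-raises it with an install hint when the feature is actually used; the helper names are illustrative and this is not flasgger's code:

```python
import importlib
from types import ModuleType
from typing import Optional, Tuple


def optional_import(name: str) -> Tuple[Optional[ModuleType], Optional[ImportError]]:
    """Try to import `name`; return (module, None) or (None, error)."""
    try:
        return importlib.import_module(name), None
    except ImportError as exc:
        return None, exc


def require(module: Optional[ModuleType], error: Optional[ImportError],
            pip_name: str) -> ModuleType:
    """Return the module, or raise a clear error naming the missing package."""
    if module is None:
        raise ImportError(
            f"This feature needs the '{pip_name}' package: pip install {pip_name}"
        ) from error
    return module


# At import time: never fails, just records whether apispec is available.
apispec, _apispec_error = optional_import("apispec")
```

A view that needs apispec would call `require(apispec, _apispec_error, "apispec")` first, so the server log (and, in debug mode, the page) names the missing package instead of showing a bare 500.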

|

open

|

2023-06-27T07:43:36Z

|

2023-06-27T07:45:55Z

|

https://github.com/flasgger/flasgger/issues/585

|

[] |

Spectator133

| 2

|

benlubas/molten-nvim

|

jupyter

| 237

|

[Feature Request] Option to show images only in output buffer

|

Option to show images only in the output buffer and not in the virtual text while still having virtual text enabled. This is because images can be very buggy in the virtual text compared to the buffer and it is nice to have the virtual text enabled for other types of output. This could be its own option where you specify to show images in: "virtual_text", "output_buffer", "virtual_text_and_output_buffer", "native_application". There are many ways this option could be implemented and this is just a suggestion for how the API could look.

|

closed

|

2024-09-12T11:11:37Z

|

2024-10-05T13:59:42Z

|

https://github.com/benlubas/molten-nvim/issues/237

|

[

"enhancement"

] |

michaelbrusegard

| 0

|

keras-team/keras

|

machine-learning

| 21,004

|

Ensured torch import is properly handled

|

Before :

try:

import torch # noqa: F401

except ImportError:

pass

After :

try:

import torch # noqa: F401

except ImportError:

torch = None # Explicitly set torch to None if not installed

|

open

|

2025-03-07T19:58:17Z

|

2025-03-13T07:09:01Z

|

https://github.com/keras-team/keras/issues/21004

|

[

"type:Bug"

] |

FNICKE

| 1

|

huggingface/pytorch-image-models

|

pytorch

| 1,477

|

[FEATURE] Huge discrepancy between HuggingFace and timm in terms of the initialization of ViT

|

I see a huge discrepancy between HuggingFace and timm in the initialization of ViT. timm's implementation uses trunc_normal, whereas HuggingFace uses `module.weight.data.normal_(mean=0.0, std=self.config.initializer_range)`. I noticed this causes a large drop in performance when training ViT models on ImageNet with the HuggingFace implementation, although I'm not sure whether the initialization is the only cause. Could one of you check, and try to make the HuggingFace implementation consistent with timm's version? Thanks!
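For reference, the two schemes differ only in whether samples beyond a cutoff are redrawn. A stdlib sketch of both, with the cutoff expressed in multiples of `std` (an assumption chosen for illustration). Note that PyTorch's `torch.nn.init.trunc_normal_(tensor, mean, std, a, b)` treats `a` and `b` as absolute cutoff values, so with `std=0.02` and the default `a=-2, b=2` the truncation sits about 100 standard deviations out and essentially never triggers:

```python
import random
from typing import List


def normal_init(n: int, std: float, seed: int = 0) -> List[float]:
    """Plain normal init, analogous to weight.data.normal_(0.0, std)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, std) for _ in range(n)]


def trunc_normal_init(n: int, std: float, k: float = 2.0, seed: int = 0) -> List[float]:
    """Truncated normal by rejection: redraw any sample with |x| > k * std."""
    rng = random.Random(seed)
    out: List[float] = []
    while len(out) < n:
        x = rng.gauss(0.0, std)
        if abs(x) <= k * std:
            out.append(x)
    return out
```

Because of how PyTorch interprets `a` and `b`, comparing the effective cutoffs actually used by each library is worth doing before attributing the accuracy gap to truncation alone.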

|

closed

|

2022-09-28T18:57:06Z

|

2023-02-02T04:45:09Z

|

https://github.com/huggingface/pytorch-image-models/issues/1477

|

[

"enhancement"

] |

Phuoc-Hoan-Le

| 7

|

desec-io/desec-stack

|

rest-api

| 223

|

Send emails asynchronously

|

Currently, requests that trigger emails have to wait until the email has been sent. This is "not nice", and also opens a timing side channel for email enumeration at account registration or password reset.

Let's move to an asynchronous solution, like:

- https://pypi.org/project/django-celery-email/ (full-fledged)

- https://github.com/pinax/django-mailer/ (much simpler)

- (Simple threading does not seem like the best solution: https://code.djangoproject.com/ticket/19214#comment:5)
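The django-mailer approach boils down to this split: the request handler only records the message, and a separate worker sends queued mail later, so request latency no longer depends on the SMTP server. A framework-free sketch; `Outbox`, `enqueue`, and `flush` are hypothetical names, not django-mailer's API:

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Email:
    to: str
    subject: str
    body: str


@dataclass
class Outbox:
    """In-memory stand-in for a persistent queue (e.g. a database table)."""
    pending: List[Email] = field(default_factory=list)

    def enqueue(self, email: Email) -> None:
        # Called inside the request: constant time, no network I/O.
        self.pending.append(email)

    def flush(self, send: Callable[[Email], None]) -> int:
        """Run by a background worker or cron job; sends and clears the queue.

        A production version would keep failed messages for retry
        instead of dropping them when `send` raises.
        """
        sent = 0
        while self.pending:
            email = self.pending.pop(0)
            send(email)
            sent += 1
        return sent
```

Because the request does only a cheap enqueue, the timing difference between "email sent" and "no email sent" paths shrinks considerably, though it is not eliminated by this alone.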

|

closed

|

2019-07-02T22:27:43Z

|

2019-07-12T01:01:43Z

|

https://github.com/desec-io/desec-stack/issues/223

|

[

"bug",

"api"

] |

peterthomassen

| 1

|

marcomusy/vedo

|

numpy

| 1,035

|

Adding text to screenshot

|

Hi there,

May I know how to adjust the size and font of the text in a screenshot? Thank you so much!

This is my code:

vp = Plotter(axes=0, offscreen=True)

text = str(lab)

vp.add(text)

vp.show(item, interactive=False, at=0)

screenshot(os.path.join(save_path,file_name))

|

closed

|

2024-01-25T07:43:40Z

|

2024-01-25T09:16:36Z

|

https://github.com/marcomusy/vedo/issues/1035

|

[] |

priyabiswas12

| 2

|

AirtestProject/Airtest

|

automation

| 1,150

|

A problem that only appeared after about 70 runs; very hard to reproduce

|

Traceback (most recent call last):

File "/Users/administrator/jenkinsauto/workspace/autotest-IOS/initmain.py", line 35, in <module>

raise e

File "/Users/administrator/jenkinsauto/workspace/autotest-IOS/initmain.py", line 27, in <module>

t.pushtag_ios()

File "/Users/administrator/jenkinsauto/workspace/autotest-IOS/pubscirpt/tag.py", line 25, in pushtag_ios

touch(Template(r'tag输入.png'))

File "/usr/local/lib/python3.11/site-packages/airtest/utils/logwraper.py", line 124, in wrapper

res = f(*args, **kwargs)

^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/airtest/core/api.py", line 367, in touch

pos = loop_find(v, timeout=ST.FIND_TIMEOUT)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/airtest/utils/logwraper.py", line 124, in wrapper

res = f(*args, **kwargs)

^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/airtest/core/cv.py", line 62, in loop_find

screen = G.DEVICE.snapshot(filename=None, quality=ST.SNAPSHOT_QUALITY)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/airtest/core/ios/ios.py", line 47, in wrapper

return func(self, *args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/airtest/core/ios/ios.py", line 615, in snapshot

data = self._neo_wda_screenshot()

^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/airtest/core/ios/ios.py", line 601, in _neo_wda_screenshot

raw_value = base64.b64decode(value)

^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/Cellar/python@3.11/3.11.4_1/Frameworks/Python.framework/Versions/3.11/lib/python3.11/base64.py", line 88, in b64decode

return binascii.a2b_base64(s, strict_mode=validate)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

binascii.Error: Invalid base64-encoded string: number of data characters (2433109) cannot be 1 more than a multiple of 4
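The error message itself is informative: base64 encodes every 3 bytes as 4 characters, so the number of data characters modulo 4 can be 0, 2 or 3, but never 1. A count of 2433109 (which is 1 mod 4) therefore suggests the screenshot payload from WDA arrived truncated or with extra characters, which fits the intermittent failure. A hedged sketch of validating the payload before decoding so the caller can retry the snapshot; `decode_screenshot` is a hypothetical helper, not Airtest's API:

```python
import base64
import binascii
from typing import Optional


def data_char_count(value: str) -> int:
    """Count base64 data characters, ignoring whitespace and '=' padding."""
    return sum(1 for c in value if not c.isspace() and c != "=")


def decode_screenshot(value: str) -> Optional[bytes]:
    """Return decoded bytes, or None if the payload is malformed.

    A remainder of 1 (mod 4) can never come from a correct encoder,
    so it signals a corrupted transfer that is worth retrying.
    """
    if data_char_count(value) % 4 == 1:
        return None
    try:
        return base64.b64decode(value)
    except binascii.Error:
        return None
```

In the caller, a `None` result could trigger another `snapshot()` attempt instead of aborting the whole test run.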

|

open

|

2023-07-28T09:15:06Z

|

2023-07-28T09:15:06Z

|

https://github.com/AirtestProject/Airtest/issues/1150

|

[] |

z929151231

| 0

|

Evil0ctal/Douyin_TikTok_Download_API

|

api

| 322

|

[Feature request] Brief and clear description of the problem

|

**Is your feature request related to a problem? Please describe.**

A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

**Describe the solution you'd like**

A clear and concise description of what you want to happen.

**Describe alternatives you've considered**

A clear and concise description of any alternative solutions or features you've considered.

**Additional context**

Add any other context or screenshots about the feature request here.

|

closed

|

2024-02-05T21:10:46Z

|

2024-02-07T03:40:58Z

|

https://github.com/Evil0ctal/Douyin_TikTok_Download_API/issues/322

|

[

"enhancement"

] |

s6k6s6

| 0

|

ipyflow/ipyflow

|

jupyter

| 40

|

separate modes for prod / dev

|

closed

|

2020-08-06T22:17:30Z

|

2021-03-08T05:45:32Z

|

https://github.com/ipyflow/ipyflow/issues/40

|

[] |

smacke

| 0

|

|

nvbn/thefuck

|

python

| 889

|

How can you get the history of the current terminal session?

|

The current session's commands have not been written to the history file yet, so how does thefuck get them? I'm very curious.
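A separate program cannot read a shell's in-memory history directly, but the shell function that launches it can. As far as I recall, thefuck's generated bash alias exports the last commands into an environment variable (`TF_HISTORY`) using the bash builtin `fc -ln -10` before invoking the Python program, which then reads them from the environment. A sketch of the Python side, assuming that convention:

```python
import os
from typing import List, Mapping, Optional


def session_history(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return recent commands handed over by the shell alias.

    Assumes the alias ran something like:
        export TF_HISTORY=$(fc -ln -10)
    so the variable holds one command per line. `fc -n` omits the
    numbers, but lines may keep leading whitespace, hence strip().
    """
    env = os.environ if env is None else env
    raw = env.get("TF_HISTORY", "")
    return [line.strip() for line in raw.splitlines() if line.strip()]
```

Because `fc` runs inside the interactive shell itself, it sees commands that have not yet been flushed to `~/.bash_history`.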

|

open

|

2019-03-03T10:49:49Z

|

2023-10-30T20:00:47Z

|

https://github.com/nvbn/thefuck/issues/889

|

[] |

imroc

| 1

|

donnemartin/system-design-primer

|

python

| 235

|

Anki Error on Launching using Ubuntu 18.10

|

I'm using Ubuntu 18.10 on Lenovo Thinkpad:

I get this error right after installing Anki through Ubuntu Software: once it is installed, pressing the Launch button shows:

_____________________________________________________

Error during startup:

Traceback (most recent call last):

File "/usr/share/anki/aqt/main.py", line 50, in __init__

self.setupUI()

File "/usr/share/anki/aqt/main.py", line 75, in setupUI

self.setupMainWindow()

File "/usr/share/anki/aqt/main.py", line 585, in setupMainWindow

tweb = self.toolbarWeb = aqt.webview.AnkiWebView()

File "/usr/share/anki/aqt/webview.py", line 114, in __init__

self.focusProxy().installEventFilter(self)

AttributeError: 'NoneType' object has no attribute 'installEventFilter'

_____________________________________________________

Anki version: 2.1.0+dfsg-1

Updated: 11/17/2018

The error message won't reappear unless you uninstall Anki and reboot the OS. Any help is appreciated. Thanks!

DJ

|

open

|

2018-11-17T16:17:20Z

|

2019-04-10T00:51:09Z

|

https://github.com/donnemartin/system-design-primer/issues/235

|

[

"help wanted",

"needs-review"

] |

FunMyWay

| 6

|

piskvorky/gensim

|

machine-learning

| 3,154

|

Yes, thanks. If it's really a bug with the FB model, not much we can do about it.

|

Yes, thanks. If it's really a bug with the FB model, not much we can do about it.

_Originally posted by @piskvorky in https://github.com/RaRe-Technologies/gensim/issues/2969#issuecomment-799811459_

|

closed

|

2021-05-19T22:08:58Z

|

2021-05-19T23:05:28Z

|

https://github.com/piskvorky/gensim/issues/3154

|

[] |

Dino1981

| 0

|

streamlit/streamlit

|

data-visualization

| 10,029

|

st.radio label alignment and label_visibility issues in the latest versions

|

### Checklist

- [X] I have searched the [existing issues](https://github.com/streamlit/streamlit/issues) for similar issues.

- [X] I added a very descriptive title to this issue.

- [X] I have provided sufficient information below to help reproduce this issue.

### Summary

The st.radio widget has two unexpected behaviors in the latest versions of Streamlit:

The label is centered instead of left-aligned, which is inconsistent with previous versions.

The label_visibility option 'collapsed' does not work as expected, and the label remains visible.

### Reproducible Code Example

```Python

import streamlit as st

# Store the initial value of widgets in session state
if "visibility" not in st.session_state:
    st.session_state.visibility = "visible"
    st.session_state.disabled = False
    st.session_state.horizontal = False

col1, col2 = st.columns(2)

with col1:
    st.checkbox("Disable radio widget", key="disabled")
    st.checkbox("Orient radio options horizontally", key="horizontal")

with col2:
    st.radio(
        "Set label visibility 👇",
        ["visible", "hidden", "collapsed"],
        key="visibility",
        label_visibility=st.session_state.visibility,
        disabled=st.session_state.disabled,
        horizontal=st.session_state.horizontal,
    )

```

### Steps To Reproduce

Play with the options in the snippet above and inspect the label.

### Expected Behavior

The label of the st.radio widget should be left-aligned, consistent with previous versions.

When label_visibility='collapsed' is set, the label should not be visible.

### Current Behavior

The label is centered.

Setting label_visibility='collapsed' does not hide the label; it remains visible.

### Is this a regression?

- [ ] Yes, this used to work in a previous version.

### Debug info

- Streamlit version: 1.41.1

- Python version:

- Operating System:

- Browser:

### Additional Information

_No response_

|

closed

|

2024-12-16T12:28:53Z

|

2024-12-16T16:06:20Z

|

https://github.com/streamlit/streamlit/issues/10029

|

[

"type:bug",

"feature:st.radio",

"status:awaiting-team-response"

] |

Panosero

| 4

|

deeppavlov/DeepPavlov

|

tensorflow

| 1,372

|

Go-Bot: migrate to PyTorch (policy)

|

Moved to internal Trello

|

closed

|

2021-01-12T11:19:57Z

|

2021-11-30T10:16:57Z

|

https://github.com/deeppavlov/DeepPavlov/issues/1372

|

[] |

danielkornev

| 0

|

jina-ai/clip-as-service

|

pytorch

| 235

|

Support for FP16 (mixed precision) trained models

|

I trained a small German BERT model with FP16 in TensorFlow, following NVIDIA's suggestion in https://github.com/google-research/bert/pull/255; the suggested changes are hosted in this repository:

https://github.com/thorjohnsen/bert/tree/gpu_optimizations

Training works fine, but bert-as-service doesn't work with the resulting model because of TensorFlow's type checks when loading the graph. The same problem occurs with the fp16 option enabled.

Is there a simple way to modify bert-as-service, or to convert the model, so I can use the embeddings with bert-as-service?

Thank you!

|

closed

|

2019-02-13T17:20:31Z

|

2019-03-14T03:44:38Z

|

https://github.com/jina-ai/clip-as-service/issues/235

|

[] |

miweru

| 1

|

ultralytics/ultralytics

|

computer-vision

| 18,799

|

YOLO export to TensorRT format may not support JetPack 6.2

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/orgs/ultralytics/discussions) and found no similar questions.

### Question

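Hi! I've found that converting a model from PyTorch format to TensorRT format fails with an error:

The error below suggests that TensorRT is not supported. The Jetson Orin Nano Super currently has TensorRT 10.3, and it cannot be downgraded.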

requirements: Ultralytics requirement ['tensorrt>7.0.0,!=10.1.0'] not found, attempting AutoUpdate...

error: subprocess-exited-with-error

× python setup.py egg_info did not run successfully.

│ exit code: 1

╰─> [6 lines of output]

Traceback (most recent call last):

File "<string>", line 2, in <module>

File "<pip-setuptools-caller>", line 34, in <module>

File "/tmp/pip-install-zhfqxl_t/tensorrt-cu12_7e93d472a67644448c0bd7e73bd68a66/setup.py", line 71, in <module>

raise RuntimeError("TensorRT does not currently build wheels for Tegra systems")

RuntimeError: TensorRT does not currently build wheels for Tegra systems

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

error: metadata-generation-failed

× Encountered error while generating package metadata.

╰─> See above for output.

note: This is an issue with the package mentioned above, not pip.

hint: See above for details.

Retry 1/2 failed: Command 'pip install --no-cache-dir "tensorrt>7.0.0,!=10.1.0" ' returned non-zero exit status 1.

error: subprocess-exited-with-error

× python setup.py egg_info did not run successfully.

│ exit code: 1

╰─> [6 lines of output]

Traceback (most recent call last):

File "<string>", line 2, in <module>

File "<pip-setuptools-caller>", line 34, in <module>

File "/tmp/pip-install-cnl6plvw/tensorrt-cu12_af3eec6e67824417ac3fee8b4d1a66b0/setup.py", line 71, in <module>

raise RuntimeError("TensorRT does not currently build wheels for Tegra systems")

RuntimeError: TensorRT does not currently build wheels for Tegra systems

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

error: metadata-generation-failed

× Encountered error while generating package metadata.

╰─> See above for output.

note: This is an issue with the package mentioned above, not pip.

hint: See above for details.

Retry 2/2 failed: Command 'pip install --no-cache-dir "tensorrt>7.0.0,!=10.1.0" ' returned non-zero exit status 1.

requirements: ❌ Command 'pip install --no-cache-dir "tensorrt>7.0.0,!=10.1.0" ' returned non-zero exit status 1.

TensorRT: export failure ❌ 14.1s: No module named 'tensorrt'

Traceback (most recent call last):

File "/home/nvidia/YOLO11/YOLO/lib/python3.10/site-packages/ultralytics/engine/exporter.py", line 810, in export_engine

import tensorrt as trt # noqa

ModuleNotFoundError: No module named 'tensorrt'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/nvidia/YOLO11/v3(m-model)/convert.py", line 7, in <module>

model.export(format="engine") # creates 'best.engine'

File "/home/nvidia/YOLO11/YOLO/lib/python3.10/site-packages/ultralytics/engine/model.py", line 738, in export

return Exporter(overrides=args, _callbacks=self.callbacks)(model=self.model)

File "/home/nvidia/YOLO11/YOLO/lib/python3.10/site-packages/ultralytics/engine/exporter.py", line 396, in __call__

f[1], _ = self.export_engine(dla=dla)

File "/home/nvidia/YOLO11/YOLO/lib/python3.10/site-packages/ultralytics/engine/exporter.py", line 180, in outer_func

raise e

File "/home/nvidia/YOLO11/YOLO/lib/python3.10/site-packages/ultralytics/engine/exporter.py", line 175, in outer_func

f, model = inner_func(*args, **kwargs)

File "/home/nvidia/YOLO11/YOLO/lib/python3.10/site-packages/ultralytics/engine/exporter.py", line 814, in export_engine

import tensorrt as trt # noqa

ModuleNotFoundError: No module named 'tensorrt'
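On Jetson devices, TensorRT's Python bindings are normally provided by JetPack as system packages rather than as pip wheels, which matches the `TensorRT does not currently build wheels for Tegra systems` message. The traceback shows the export running inside a virtual environment (`/home/nvidia/YOLO11/YOLO/...`); if that environment was created without `--system-site-packages`, it cannot see the system `tensorrt` module. This diagnosis is an assumption, not confirmed in the issue; a stdlib sketch to check both conditions:

```python
import importlib.util
import os
import sys
from typing import Dict


def in_virtualenv() -> bool:
    """True when running inside a venv (prefix differs from base prefix)."""
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


def parse_pyvenv_cfg(text: str) -> Dict[str, str]:
    """Parse the `key = value` lines of a pyvenv.cfg file."""
    cfg: Dict[str, str] = {}
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep:
            cfg[key.strip()] = value.strip()
    return cfg


def venv_includes_system_packages(prefix: str = sys.prefix) -> bool:
    path = os.path.join(prefix, "pyvenv.cfg")
    if not os.path.exists(path):
        return True  # not a venv, so system site-packages are visible
    with open(path, encoding="utf-8") as fh:
        cfg = parse_pyvenv_cfg(fh.read())
    return cfg.get("include-system-site-packages") == "true"


def diagnose() -> str:
    if importlib.util.find_spec("tensorrt") is not None:
        return "tensorrt is importable"
    if in_virtualenv() and not venv_includes_system_packages():
        return ("tensorrt not found and this venv hides system site-packages; "
                "recreate it with: python3 -m venv --system-site-packages <dir>")
    return "tensorrt not found; check that JetPack's TensorRT Python bindings are installed"


print(diagnose())
```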

### Additional

_No response_

|

open

|

2025-01-21T12:45:56Z

|

2025-01-23T14:31:26Z

|

https://github.com/ultralytics/ultralytics/issues/18799

|

[

"question",

"embedded",

"exports"

] |

Vectorvin

| 6

|

hpcaitech/ColossalAI

|

deep-learning

| 5,601

|

[FEATURE]: support multiple (partial) backward passes for zero

|

### Describe the feature

In some VAE training setups, users may use a weight-adaptive loss, which may compute the gradients of some parameters twice, for example:

This triggers the backward hook twice.

According to PyTorch's documentation, we may be able to use a post-accumulate-grad hook (`Tensor.register_post_accumulate_grad_hook`) to solve this problem.

|

closed

|

2024-04-16T05:02:06Z

|

2024-04-16T09:49:22Z

|

https://github.com/hpcaitech/ColossalAI/issues/5601

|

[

"enhancement"

] |

ver217

| 0

|

exaloop/codon

|

numpy

| 595

|

Is there a way to use python Enum?

|

I cannot find an example : ( .

|

closed

|

2024-09-30T07:21:46Z

|

2024-09-30T07:28:37Z

|

https://github.com/exaloop/codon/issues/595

|

[] |

BeneficialCode

| 0

|

mwaskom/seaborn

|

data-visualization

| 3,584

|

UserWarning: The figure layout has changed to tight self._figure.tight_layout(*args, **kwargs)

|

I have encountered a persistent `UserWarning` when using Seaborn's `pairplot` function, indicating a change in figure layout to tight.

`\anaconda3\Lib\site-packages\seaborn\axisgrid.py:118: UserWarning: The figure layout has changed to tight

self._figure.tight_layout(*args, **kwargs)`

`\AppData\Local\Temp\ipykernel_17504\146091522.py:3: UserWarning: The figure layout has changed to tight

plt.tight_layout()`

The warning appears even when attempting to adjust the layout using `plt.tight_layout()` or other methods. The warning does not seem to affect the appearance or functionality of the plot, but it's causing some concern. Seeking guidance on resolving or understanding the implications of this warning. Any insights or recommendations would be greatly appreciated. Thank you!
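The message appears to come from Matplotlib's `Figure.tight_layout` rather than from seaborn itself, and as noted above the plot is unaffected. If the output is noisy, a sketch of silencing only this specific warning with the standard `warnings` module; matching on the message text assumes its wording stays stable across versions:

```python
import warnings

# `message` is a regex matched (case-insensitively) against the start
# of the warning text, so only this warning is affected.
TIGHT_LAYOUT_MSG = r"The figure layout has changed to tight"


def silence_tight_layout_warning() -> None:
    """Ignore the tight-layout UserWarning; all other warnings still show."""
    warnings.filterwarnings("ignore", message=TIGHT_LAYOUT_MSG, category=UserWarning)
```

Call it once near the top of the notebook, before `sns.pairplot(...)`. Filtering by message rather than ignoring all `UserWarning`s keeps unrelated warnings visible.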

|

closed

|

2023-12-05T19:21:49Z

|

2023-12-05T22:23:49Z

|

https://github.com/mwaskom/seaborn/issues/3584

|

[] |

rahulsaran21

| 1

|

AirtestProject/Airtest

|

automation

| 595

|

An error is raised when taking screenshots: AttributeError: module 'cv2.cv2' has no attribute 'xfeatures2d'

|

AttributeError: module 'cv2.cv2' has no attribute 'xfeatures2d'

It doesn't seem to have any real impact, but could you please take a look?

|

open

|

2019-11-07T04:47:01Z

|

2019-11-14T07:13:36Z

|

https://github.com/AirtestProject/Airtest/issues/595

|

[] |

abcdd12

| 5

|

gevent/gevent

|

asyncio

| 1,679

|

Python 3.8.6 and perhaps other recent Python 3.8 builds are incompatible

|

* gevent version: 1.4.0

* Python version: Please be as specific as possible: the `python:3.8` docker image as of 2020-09-24 6:30PM PST

* Operating System: Linux - ubuntu or Docker on Windows or Docker on Mac or Google container optimized linux

### Description:

When booting a gunicorn worker with `--worker-class=gevent`, it logs the following and then loops infinitely:

```
<frozen importlib._bootstrap>:219: RuntimeWarning: greenlet.greenlet size changed, may indicate binary incompatibility. Expected 144 from C header, got 152 from PyObject

<frozen importlib._bootstrap>:219: RuntimeWarning: greenlet.greenlet size changed, may indicate binary incompatibility. Expected 144 from C header, got 152 from PyObject

<frozen importlib._bootstrap>:219: RuntimeWarning: greenlet.greenlet size changed, may indicate binary incompatibility. Expected 144 from C header, got 152 from PyObject

<frozen importlib._bootstrap>:219: RuntimeWarning: greenlet.greenlet size changed, may indicate binary incompatibility. Expected 144 from C header, got 152 from PyObject

<frozen importlib._bootstrap>:219: RuntimeWarning: greenlet.greenlet size changed, may indicate binary incompatibility. Expected 144 from C header, got 152 from PyObject

<frozen importlib._bootstrap>:219: RuntimeWarning: greenlet.greenlet size changed, may indicate binary incompatibility. Expected 144 from C header, got 152 from PyObject

<frozen importlib._bootstrap>:219: RuntimeWarning: greenlet.greenlet size changed, may indicate binary incompatibility. Expected 144 from C header, got 152 from PyObject

[2020-09-25 01:13:02 +0000] [1] [INFO] Starting gunicorn 19.9.0

[2020-09-25 01:13:02 +0000] [1] [INFO] Listening at: http://0.0.0.0:8000 (1)

[2020-09-25 01:13:02 +0000] [1] [INFO] Using worker: gevent

/usr/local/lib/python3.8/os.py:1023: RuntimeWarning: line buffering (buffering=1) isn't supported in binary mode, the default buffer size will be used

return io.open(fd, *args, **kwargs)

[2020-09-25 01:13:02 +0000] [7] [INFO] Booting worker with pid: 7

[2020-09-25 01:13:02 +0000] [7] [INFO] Made Psycopg2 Green

/usr/local/lib/python3.8/os.py:1023: RuntimeWarning: line buffering (buffering=1) isn't supported in binary mode, the default buffer size will be used

return io.open(fd, *args, **kwargs)

[2020-09-25 01:13:02 +0000] [8] [INFO] Booting worker with pid: 8

[2020-09-25 01:13:02 +0000] [8] [INFO] Made Psycopg2 Green

/usr/local/lib/python3.8/os.py:1023: RuntimeWarning: line buffering (buffering=1) isn't supported in binary mode, the default buffer size will be used

return io.open(fd, *args, **kwargs)

[2020-09-25 01:13:02 +0000] [9] [INFO] Booting worker with pid: 9

[2020-09-25 01:13:02 +0000] [9] [INFO] Made Psycopg2 Green

# infinite loop

```

|

closed

|

2020-09-25T01:34:56Z

|

2020-09-25T10:25:02Z

|

https://github.com/gevent/gevent/issues/1679

|

[] |

AaronFriel

| 2

|

microsoft/nni

|

deep-learning

| 5,651

|

Can not download Tar when Build from Source

|

The tar package cannot be downloaded when the source code is used for building.

nni version: dev

python: 3.7.9

os: openeuler-20.03

How to reproduce it?

```

git clone https://github.com/microsoft/nni.git

cd nni

export NNI_RELEASE=2.0

python setup.py build_ts

```

The archive that fails to download: https://nodejs.org/dist/v18.15.0/node-v18.15.0-linux-aarch64.tar.xz

|

open

|

2023-07-28T08:28:37Z

|

2023-08-11T07:09:50Z

|

https://github.com/microsoft/nni/issues/5651

|

[] |

zhuofeng6

| 3

|

KevinMusgrave/pytorch-metric-learning

|

computer-vision

| 331

|

problem with implem of mAP@R ?

|

Hi,

Thanks for open-sourcing this repo!

I want to report a problem I noticed in the [XBM repo](https://github.com/msight-tech/research-xbm), which uses code similar to yours for the mAP@R calculation (I am not sure which is based on which, so I am opening this issue on both).

I suspect there is a mistake in the implementation of mAP@R:

On [line 99](https://github.com/KevinMusgrave/pytorch-metric-learning/blob/9559b21559ca6fbcb46d2d51d7953166e18f9de6/src/pytorch_metric_learning/utils/accuracy_calculator.py#L99), you divide by max_possible_matches_per_row for this query (which, for mAP@R, equals R for each query).

But what should be done is to divide by the __actual__ number of relevant items among the R best-ranked ones for this query (as is done for [mAP](https://github.com/KevinMusgrave/pytorch-metric-learning/blob/9559b21559ca6fbcb46d2d51d7953166e18f9de6/src/pytorch_metric_learning/utils/accuracy_calculator.py#L97) two lines later).

Wikipedia page on AP:

(By the way, for mAP, as you do on line 98, one has to decide what to do with queries that have no relevant items, for which AP is therefore not defined. You use max_possible_values_per_row=0 for these rows, but I am not sure this is the most standard choice; maybe it would be good to give the option to set AP=0 or AP=1, or to exclude these rows...)
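To make the two normalizations concrete: in the paper that introduced MAP@R (Musgrave et al., "A Metric Learning Reality Check"), it is defined as MAP@R = (1/R) · Σ_{i=1..R} P(i), where P(i) is the precision at rank i if the i-th retrieval is relevant and 0 otherwise, i.e. the divide-by-R form; the AP-style alternative divides by the number of relevant items actually found in the top R. A stdlib sketch computing both for one query from a 0/1 relevance list of its top R retrievals (function names are hypothetical):

```python
from typing import List, Sequence


def precisions_at_hits(relevant: Sequence[int]) -> List[float]:
    """P(i) for every rank i (1-based) whose retrieval is relevant."""
    hits, out = 0, []
    for i, rel in enumerate(relevant, start=1):
        if rel:
            hits += 1
            out.append(hits / i)
    return out


def map_at_r(relevant: Sequence[int]) -> float:
    """Divide by R = len(relevant): missing matches in the top R are penalized."""
    r = len(relevant)
    return sum(precisions_at_hits(relevant)) / r if r else 0.0


def ap_over_hits(relevant: Sequence[int]) -> float:
    """Divide by the number of relevant items actually retrieved in the top R."""
    p = precisions_at_hits(relevant)
    return sum(p) / len(p) if p else 0.0
```

With `[1, 0, 0, 0]` (R = 4, one correct retrieval at rank 1), dividing by hits gives 1.0 even though only one of the four expected matches was found, while the divide-by-R form gives 0.25; that difference is the crux of which normalization is appropriate.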

Regards,

A

|

closed

|

2021-05-25T15:24:50Z

|

2021-05-26T06:58:39Z

|

https://github.com/KevinMusgrave/pytorch-metric-learning/issues/331

|

[

"question"

] |

drasros

| 3

|

liangliangyy/DjangoBlog

|

django

| 525

|

Are articles only on-demand videos?

|

<!--

If you don't fill in the checklist below carefully, I may close your issue directly.

Before asking, it's recommended to read https://github.com/ruby-china/How-To-Ask-Questions-The-Smart-Way

-->

**I confirm that I have read** (change `[ ]` to `[x]`)

- [x] [DjangoBlog README](https://github.com/liangliangyy/DjangoBlog/blob/master/README.md)

- [x] [Configuration guide](https://github.com/liangliangyy/DjangoBlog/blob/master/bin/config.md)

- [x] [Other issues](https://github.com/liangliangyy/DjangoBlog/issues)

----

**I am requesting** (change `[ ]` to `[x]`)

- [ ] Bug report

- [ ] A new feature or functionality

- [x] Technical support

|

closed

|

2021-11-22T07:10:34Z

|

2022-03-02T11:22:56Z

|

https://github.com/liangliangyy/DjangoBlog/issues/525

|

[] |

glinxx

| 2

|

httpie/cli

|

rest-api

| 1,247

|

Test new httpie command on various actions

|

We have two actions that get triggered when specific files are edited (to test that the snap/brew packages work). They test the `http` command but not `httpie`, so let's test that too.

|

closed

|

2021-12-21T17:32:58Z

|

2021-12-23T18:55:40Z

|

https://github.com/httpie/cli/issues/1247

|

[

"bug",

"new"

] |

isidentical

| 1

|

scrapy/scrapy

|

python

| 6,378

|

Edit Contributing.rst document to specify how to propose documentation suggestions

|

There are multiple types of contributions that the community can suggest including bug reports, feature requests, code improvements, security vulnerability reports, and documentation changes.

For the Scrapy project, it was difficult to discern which process to follow to suggest a documentation improvement.

I want to suggest an additional section in the documentation that clearly explains how to propose a non-code change.

This section would follow the guidelines outlined in the Contributing.rst file of another open-source project, beets: https://github.com/beetbox/beets

|

closed

|

2024-05-26T15:43:40Z

|

2024-07-10T07:37:32Z

|

https://github.com/scrapy/scrapy/issues/6378

|

[] |

jtoallen

| 11

|

pytorch/pytorch

|

machine-learning

| 149,186

|

torch.fx.symbolic_trace failed on deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B

|

### 🐛 Describe the bug

I am trying to compile deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B to MLIR with the following script.

```python
# Import necessary libraries
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer
from torch.export import export
import onnx
from torch_mlir import fx

# Load the DeepSeek model and tokenizer
model_name = "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)


class Qwen2(torch.nn.Module):
    def __init__(self) -> None:
        super().__init__()
        self.qwen = model

    def forward(self, x):
        result = self.qwen(x)
        result.past_key_values = ()
        return result


qwen2 = Qwen2()

# Define a prompt for the model
prompt = "What are the benefits of using AI in healthcare?"

# Encode the prompt
input_ids = tokenizer.encode(prompt, return_tensors="pt")

exported_program: torch.export.ExportedProgram = export(
    qwen2, (input_ids,)
)

traced_model = torch.fx.symbolic_trace(qwen2)

m = fx.export_and_import(traced_model, (input_ids,), enable_ir_printing=True,
                         enable_graph_printing=True)

with open("qwen1.5b_s.mlir", "w") as f:
    f.write(str(m))
```

But it failed with the following traceback.

```shell

/home/hmsjwzb/work/models/QWEN/qwen/lib/python3.11/site-packages/torch/backends/mkldnn/__init__.py:78: UserWarning: TF32 acceleration on top of oneDNN is available for Intel GPUs. The current Torch version does not have Intel GPU Support. (Triggered internally at /pytorch/aten/src/ATen/Context.cpp:148.)
  torch._C._set_onednn_allow_tf32(_allow_tf32)
/home/hmsjwzb/work/models/QWEN/qwen/lib/python3.11/site-packages/torch/backends/mkldnn/__init__.py:78: UserWarning: TF32 acceleration on top of oneDNN is available for Intel GPUs. The current Torch version does not have Intel GPU Support. (Triggered internally at /pytorch/aten/src/ATen/Context.cpp:148.)
  torch._C._set_onednn_allow_tf32(_allow_tf32)
Traceback (most recent call last):
  File "/home/hmsjwzb/work/models/QWEN/qwen5.py", line 55, in <module>
    traced_model = torch.fx.symbolic_trace(qwen2)
                   ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/hmsjwzb/work/models/QWEN/qwen/lib/python3.11/site-packages/torch/fx/_symbolic_trace.py", line 1314, in symbolic_trace
    graph = tracer.trace(root, concrete_args)
            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/hmsjwzb/work/models/QWEN/qwen/lib/python3.11/site-packages/torch/_dynamo/eval_frame.py", line 838, in _fn
    return fn(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^
  File "/home/hmsjwzb/work/models/QWEN/qwen/lib/python3.11/site-packages/torch/fx/_symbolic_trace.py", line 838, in trace
    (self.create_arg(fn(*args)),),
                     ^^^^^^^^^
  File "/home/hmsjwzb/work/models/QWEN/qwen5.py", line 18, in forward
    result = self.qwen(x)
             ^^^^^^^^^^^^
  File "/home/hmsjwzb/work/models/QWEN/qwen/lib/python3.11/site-packages/torch/fx/_symbolic_trace.py", line 813, in module_call_wrapper
    return self.call_module(mod, forward, args, kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/home/hmsjwzb/work/models/QWEN/qwen/lib/python3.11/site-packages/torch/fx/_symbolic_trace.py", line 531, in call_module
    ret_val = forward(*args, **kwargs)
              ^^^^^^^^^^^^^^^^^^^^^^^^