repo_name

stringlengths 9

75

| topic

stringclasses 30

values | issue_number

int64 1

203k

| title

stringlengths 1

976

| body

stringlengths 0

254k

| state

stringclasses 2

values | created_at

stringlengths 20

20

| updated_at

stringlengths 20

20

| url

stringlengths 38

105

| labels

listlengths 0

9

| user_login

stringlengths 1

39

| comments_count

int64 0

452

|

|---|---|---|---|---|---|---|---|---|---|---|---|

pandas-dev/pandas

|

pandas

| 60,429

|

DOC: Missing 'pickleshare' package when running 'sphinx-build' command

|

### Pandas version checks

- [X] I have checked that the issue still exists on the latest versions of the docs on `main` [here](https://pandas.pydata.org/docs/dev/)

### Location of the documentation

https://github.com/pandas-dev/pandas/blob/106f33cfce16f4e08f6ca5bd0e6e440ec9a94867/requirements-dev.txt#L26

### Documentation problem

After installing `requirements-dev.txt` and `pandas` from sources, I tried to run `sphinx-build` command to build pandas docs. However, I noticed that there are lots of such warnings:

```bash

UserWarning: This is now an optional IPython functionality, using bookmarks requires you to install the `pickleshare` library.

bkms = self.shell.db.get('bookmarks',{})

UsageError: %bookmark -d: Can't delete bookmark 'ipy_savedir'

```

The following are the commands I used:

```bash

git clone --branch=main https://github.com/pandas-dev/pandas.git pandas-main

cd pandas-main

git log -1 --pretty=format:"%H%n%s"

conda create --prefix ./.venv --channel conda-forge --yes

conda activate ./.venv

conda install python=3.10 --yes

export PYTHONNOUSERSITE=1

python -m pip install --requirement=requirements-dev.txt --progress-bar=off --verbose

python -m pip install . --no-build-isolation --no-deps -Csetup-args=--werror --progress-bar=off --verbose

export LANG=en_US.UTF-8

sphinx-build -b html -v -j 4 -c doc/source doc/source doc/build/html

```

Log file of the above commands:

[log-sphinx-build-pandas-docs.txt](https://github.com/user-attachments/files/17928982/log-sphinx-build-pandas-docs.txt)

### Suggested fix for documentation

It seems that this issue is caused by https://github.com/ipython/ipython/issues/14237. Therefore, my suggested fix is to add `pickleshare` requirement in `environment.yml` and `requirements-dev.txt`.

Just like NumPy demonstrated here: [requirements/doc_requirements.txt#L12-L14](https://github.com/numpy/numpy/blob/4e8f724fbc136b1bac1c43e24d189ebc45e056eb/requirements/doc_requirements.txt#L12-L14)
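Until the requirement is added, a quick pre-flight check can confirm whether the optional module is importable before starting a long docs build. This is only a sketch: the helper name `missing_modules` is hypothetical and is not part of pandas, Sphinx, or IPython.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found on the import path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # `pickleshare` is the optional dependency named in the IPython warning above.
    missing = missing_modules(["pickleshare"])
    if missing:
        print("Missing optional modules:", ", ".join(missing))
    else:
        print("All optional modules are importable.")
```

Running this inside the activated environment before `sphinx-build` shows whether the bookmark warnings are to be expected.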

### Versions and Platforms

- OS version: Kubuntu 24.04

- Current Branch: [`main`](https://github.com/pandas-dev/pandas/tree/main)

- Latest Commit: 106f33cfce16f4e08f6ca5bd0e6e440ec9a94867

- Conda version: `24.9.2`

- Python version: `3.10.15`

- IPython version: `8.29.0`

|

closed

|

2024-11-27T04:36:42Z

|

2024-12-03T21:21:21Z

|

https://github.com/pandas-dev/pandas/issues/60429

|

[

"Build",

"Docs"

] |

hwhsu1231

| 4

|

mirumee/ariadne

|

graphql

| 80

|

Make Resolver type definition more accurate

|

Our current type for `Resolver` is `Callable[..., Any]`, accepting any arguments and returning anything.

This is because it's currently impossible to type a `Callable` that accepts `*args` or `**kwargs`.

This issue is [known to MyPy authors](https://github.com/python/typing/issues/264), but at the time of writing no solution is available.

This is related to #79
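For illustration, callback protocols (`typing.Protocol` with a `__call__` method, PEP 544) are one way such a signature can be expressed in later Python versions. The sketch below is not Ariadne's actual definition: the parameter names `obj` and `info` and the example resolver `resolve_hello` are assumptions made for this example.

```python
from typing import Any, Protocol


class Resolver(Protocol):
    """Hypothetical callback protocol: two positional arguments plus arbitrary keywords."""

    def __call__(self, obj: Any, info: Any, **kwargs: Any) -> Any: ...


def resolve_hello(obj: Any, info: Any, **kwargs: Any) -> str:
    # Field arguments arrive as keyword arguments; `name` is an illustrative one.
    name = kwargs.get("name", "world")
    return f"Hello, {name}!"


# A type checker verifies this assignment against the protocol's __call__ signature.
resolver: Resolver = resolve_hello
```

A resolver that declares explicit keyword parameters instead of `**kwargs` would likely need a looser protocol, so this only narrows the type relative to `Callable[..., Any]`.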

|

open

|

2018-12-13T16:32:26Z

|

2022-02-21T18:53:56Z

|

https://github.com/mirumee/ariadne/issues/80

|

[

"enhancement"

] |

rafalp

| 2

|

ResidentMario/missingno

|

pandas

| 62

|

Suggest adding test coverage and code quality trackers

|

[landscape.io](https://landscape.io) is helpful for linting and tracking the code quality of your package.

[coveralls.io](https://coveralls.io) is helpful for tracking test coverage for your package.

Both are free services for public repos and can be paired with the continuous integration services mentioned in #61.

|

closed

|

2018-01-31T03:23:50Z

|

2020-04-09T21:12:43Z

|

https://github.com/ResidentMario/missingno/issues/62

|

[] |

rhiever

| 0

|

ivy-llc/ivy

|

numpy

| 28,340

|

Fix Frontend Failing Test: jax - math.tensorflow.math.argmin

|

To-do List: https://github.com/unifyai/ivy/issues/27496

|

closed

|

2024-02-20T08:14:20Z

|

2024-02-20T10:21:20Z

|

https://github.com/ivy-llc/ivy/issues/28340

|

[

"Sub Task"

] |

Sai-Suraj-27

| 0

|

scanapi/scanapi

|

rest-api

| 474

|

Move Wiki to Read The Docs

|

## Feature request

### Description of the feature

<!-- A clear and concise description of what the new feature is. -->

Move Wiki to Read The Docs. To have everything in a single place. It should be easier for contributors.

We need to wait for #469 to be done first.

|

open

|

2021-08-01T14:54:58Z

|

2021-08-01T14:55:20Z

|

https://github.com/scanapi/scanapi/issues/474

|

[

"Documentation",

"Multi Contributors",

"Status: Blocked",

"Wiki"

] |

camilamaia

| 0

|

agronholm/anyio

|

asyncio

| 671

|

`ClosedResourceError` vs. `BrokenResourceError` is sometimes backend-dependent, and is sometimes not raised at all (in favor of a non-AnyIO exception type)

|

### Things to check first

- [X] I have searched the existing issues and didn't find my bug already reported there

- [X] I have checked that my bug is still present in the latest release

### AnyIO version

main

### Python version

CPython 3.12.1

### What happened?

see the title. here are some reproducers for some issues that i noticed while working on a fix for #669: https://github.com/agronholm/anyio/compare/c6f0334e67818b90540dac20815cad9e0b2c7eee...b6576ae16a9b055e8109c8d2ca81e00bf439cb3f

the main question that these tests raise is: if a send stream is in a `Broken` state (i.e. our side of the connection learned that it was closed by the peer, or otherwise broken due to something external) and then is explicitly `aclose()`d by our side, should subsequent calls raise `BrokenResourceError` or should they raise `ClosedResourceError`? i.e. if you `aclose()` a `Broken` send stream, does that "clear" its `Broken`ness and convert it to just being `Closed`?

the documentation does not seem to discuss what should happen if the conditions for `ClosedResourceError` and `BrokenResourceError` are _both_ met. i am not sure what the behavior here is intended to be (or if it's intended to be undefined behavior?), as currently different streams do different things in this situation, with the behavior sometimes also varying between backends.

my initial intuition was that the intended behavior was to give precedence to `raise BrokenResourceError` over `raise ClosedResourceError` (this choice prevents the stream from changing from `Broken` to `Closed`). I thought this because this behavior looks like it was rather explicitly chosen when implementing the "important" asyncio-backend streams (TCP and UDP): they explicitly do _not_ set `self._closed` if they are already closing due to an external cause:

* `SocketStream` https://github.com/agronholm/anyio/blob/c6efbe352705529123d55f87d6dbb366a3e0612f/src/anyio/_backends/_asyncio.py#L1173-L1184

* `UDPStream` https://github.com/agronholm/anyio/blob/c6efbe352705529123d55f87d6dbb366a3e0612f/src/anyio/_backends/_asyncio.py#L1459-L1463

so I started to implement it: here is most of an implementation of that behavior: https://github.com/agronholm/anyio/compare/c6f0334e67818b90540dac20815cad9e0b2c7eee...1be403d20f94a8a6522f27f24a1830f2351aab3b [^1]

[^1]: note: github shows these commits out of order as it's sorting based on time rather than doing a correct topological sort, so it may be easier to look at these locally, in order.

however, `MemoryObjectSendStream` is also an "important" stream and it has the opposite behavior, even on the asyncio backend.
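to make the two candidate behaviors concrete, here is a toy model. it does not use AnyIO at all: `ToySendStream`, `ClosedError` and `BrokenError` are hypothetical stand-ins, and the `broken_takes_precedence` flag exists only to contrast the two policies discussed above.

```python
class ClosedError(Exception):
    """Stand-in for a 'closed by our side' error (not AnyIO's class)."""


class BrokenError(Exception):
    """Stand-in for a 'broken by the peer' error (not AnyIO's class)."""


class ToySendStream:
    def __init__(self, broken_takes_precedence: bool) -> None:
        self._broken_takes_precedence = broken_takes_precedence
        self._broken = False
        self._closed = False

    def peer_disconnected(self) -> None:
        # Something external broke the stream.
        self._broken = True

    def close(self) -> None:
        # Our side explicitly closed the stream.
        self._closed = True

    def send(self, item: object) -> None:
        if self._broken and self._closed:
            # Both conditions hold: this is the case the documentation leaves open.
            if self._broken_takes_precedence:
                raise BrokenError
            raise ClosedError
        if self._broken:
            raise BrokenError
        if self._closed:
            raise ClosedError
        # A real stream would transmit `item` here.


def error_after_break_then_close(broken_takes_precedence: bool) -> str:
    """Break the stream, close it, then report which error a send raises."""
    stream = ToySendStream(broken_takes_precedence)
    stream.peer_disconnected()
    stream.close()
    try:
        stream.send(b"data")
    except (BrokenError, ClosedError) as exc:
        return type(exc).__name__
    return "no error"
```

with `broken_takes_precedence=True` the stream stays `Broken` after being closed; with `False`, closing "clears" the brokenness.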

### How can we reproduce the bug?

see above

|

open

|

2024-01-17T08:26:06Z

|

2025-03-24T11:18:49Z

|

https://github.com/agronholm/anyio/issues/671

|

[

"bug"

] |

gschaffner

| 0

|

JaidedAI/EasyOCR

|

pytorch

| 404

|

Tajik Language

|

Here's the needed data. Can you tell me when your OCR will be able to support it, so I can use it? Thank you!

[easyocr.zip](https://github.com/JaidedAI/EasyOCR/files/6220472/easyocr.zip)

|

closed

|

2021-03-29T08:59:30Z

|

2021-03-31T01:36:32Z

|

https://github.com/JaidedAI/EasyOCR/issues/404

|

[] |

KhayrulloevDD

| 1

|

deepinsight/insightface

|

pytorch

| 2,461

|

Is the Wild Anti-Spoofing dataset no longer being provided?

|

Hello,

I'm interested in the Anti-Spoofing dataset.

A month ago, I submitted an application for permission to access the dataset.

But I haven't received any response.

Do you still provide the dataset?

Thanks.

|

closed

|

2023-10-25T08:12:30Z

|

2023-11-17T01:53:53Z

|

https://github.com/deepinsight/insightface/issues/2461

|

[] |

ralpyna

| 7

|

HumanSignal/labelImg

|

deep-learning

| 515

|

Draw Squares Resets

|

OS: Windows 7 (x64)

PyQt version: Installed labelImg from binary; otherwise PyQt5

labelImg binary version: v1.8.1

Issue: I set the "Draw Squares" setting in 'Edit'. However, when zooming in (CTRL* + scroll wheel), the setting is disabled even though it is still checked in 'Edit'. Upon further inspection, I discovered that simply pressing CTRL disables the setting.

If I recheck the setting in 'Edit', the setting is re-enabled until I press CTRL again.

Steps to reproduce: Enable Draw Squares, press CTRL

*CTRL refers to the left CTRL button

|

open

|

2019-10-25T14:27:42Z

|

2019-10-25T14:27:42Z

|

https://github.com/HumanSignal/labelImg/issues/515

|

[] |

JulianOrteil

| 0

|

jupyter/docker-stacks

|

jupyter

| 1,520

|

Failed write to /etc/passwd

|

From @bilke:

> @maresb Did this part get lost?

>

> I am asking because the following does not work anymore:

>

```bash

$ docker run --rm -p 8888:8888 -v $PWD:/home/jovyan/work --user `id -u $USER` \

--group-add users my_image

Running: start.sh jupyter lab

id: cannot find name for user ID 40841

WARNING: container must be started as root to change the desired user's name with NB_USER!

WARNING: container must be started as root to change the desired user's id with NB_UID!

WARNING: container must be started as root to change the desired user's group id with NB_GID!

There is no entry in /etc/passwd for our UID. Attempting to fix...

Renaming old jovyan user to nayvoj (1000:100)

sed: couldn't open temporary file /etc/sedAELey6: Permission denied

```

> Before removal of this section it printed:

```

Adding passwd file entry for 40841

```

_Originally posted by @bilke in https://github.com/jupyter/docker-stacks/pull/1512#discussion_r746462652_

|

closed

|

2021-11-10T11:14:43Z

|

2021-12-15T10:23:30Z

|

https://github.com/jupyter/docker-stacks/issues/1520

|

[] |

maresb

| 4

|

numpy/numpy

|

numpy

| 28,394

|

ENH: Compiled einsum path support

|

### Proposed new feature or change:

`einsum_path` pre-analyzes the best contraction strategy for an Einstein sum; fine. `einsum` can accept that contraction strategy; also fine. I imagine that this works well in the case that there are very few, very large calls to `einsum`.

Where this breaks down is the case where the input is smaller or the calls are more frequent, making the overhead of the Python side of `einsum` dominant when `optimize` is not `False`. This effect can get really bad and easily overwhelm any benefit of the planned `einsum_path` contraction.

Another issue is that the planned contraction implies a specific subscript string, but that string needs to be passed again to `einsum`; this API allows for a mismatch between the subscript and optimize arguments.
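For reference, the existing two-step usage looks roughly like the sketch below. The array shapes and the subscript string are arbitrary choices for illustration; note that `subscripts` has to be passed to both calls, which is where the mismatch mentioned above can creep in.

```python
import numpy as np

rng = np.random.default_rng(0)
a = rng.random((4, 5))
b = rng.random((5, 6))
c = rng.random((6, 3))

subscripts = "ij,jk,kl->il"

# Step 1: plan the contraction order once.
path, description = np.einsum_path(subscripts, a, b, c, optimize="greedy")

# Step 2: every evaluation must repeat the same subscripts alongside the plan.
result = np.einsum(subscripts, a, b, c, optimize=path)
```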

I can imagine a few solutions, but the one that's currently my favourite is a simple loop evaluation -> AST -> compiled set of calls to `tensordot` and `c_einsum`. In my testing this solves all of the problems I described above.

For a vile patch-hack that demonstrates the concept and does function correctly but is probably not the best way to implement this (and also does not have `out` support):

```python

import ast
import logging
import typing

import numpy as np

logger = logging.getLogger('contractions')

if typing.TYPE_CHECKING:
    class Contract(typing.Protocol):
        def __call__(self, *operands: np.ndarray) -> np.ndarray: ...


def build_contraction(
    name: str, subscripts: str, *shapes: tuple[int, ...],
    optimize: typing.Literal['greedy', 'optimal'] = 'greedy',
) -> 'Contract':
    """
    This is a wrapper for the numpy.einsum and numpy.einsum_path pair of functions to de-duplicate
    some parameters. It represents an Einstein tensor contraction that has been pre-planned to
    perform as well as possible.

    If we simply call einsum() with no optimization, the performance is "good" because it skips
    straight to a naive but efficient c_einsum.

    If we call einsum_path and then pass the calculated contraction to einsum(), the performance is
    awful because there's a lot of overhead in the contraction stage loops. The only way to
    alleviate this is to have einsum() run the contraction once to sort out its stage logic and
    essentially cache that procedure so that actual runs simply make calls to c_einsum() or
    tensordot().
    """

    def get_index(a: np.ndarray | object) -> int:
        # simple .index() does not work when `a` is an ndarray
        return next(i for i, x in enumerate(operands) if x is a)

    def c_einsum(intermediate_subscripts: str, *intermediate_operands: np.ndarray, **kwargs) -> object:
        """
        This is a patched-in c_einsum that accepts intermediate arguments from the real einsum()
        but, rather than calling the actual sum, constructs a planned call for our compiled
        contraction.
        """
        if kwargs:
            raise NotImplementedError()

        # xi = c_einsum(intermediate_subscripts, *operands)
        body.append(ast.Assign(
            targets=[ast.Name(id=f'x{len(operands)}', ctx=ast.Store())],
            value=ast.Call(
                func=ast.Name(id='c_einsum', ctx=ast.Load()),
                args=[ast.Constant(value=intermediate_subscripts)]
                + [
                    ast.Name(id=f'x{get_index(o)}', ctx=ast.Load())
                    for o in intermediate_operands
                ],
            ),
        ))

        # This is a placeholder sentinel only. It's used to identify inputs to subsequent einsum or tensordot calls.
        out = object()
        operands.append(out)
        return out

    def tensordot(a: np.ndarray, b: np.ndarray, axes: tuple[tuple[int, ...], ...]) -> object:
        """
        This is a patched-in tensordot that accepts intermediate arguments from the real einsum()
        but, rather than calling the actual dot, constructs a planned call for our compiled
        contraction.
        """
        # xi = tensordot(a, b, axes=axes)
        body.append(ast.Assign(
            targets=[ast.Name(id=f'x{len(operands)}', ctx=ast.Store())],
            value=ast.Call(
                func=ast.Name(id='tensordot', ctx=ast.Load()),
                args=[
                    ast.Name(id=f'x{get_index(a)}', ctx=ast.Load()),
                    ast.Name(id=f'x{get_index(b)}', ctx=ast.Load()),
                ],
                keywords=[ast.keyword(arg='axes', value=ast.Constant(value=axes))],
            ),
        ))

        # This is a placeholder sentinel only. It's used to identify inputs to subsequent einsum or tensordot calls.
        out = object()
        operands.append(out)
        return out

    # These are needed for einsum_path() and einsum() to perform planning, but the actual content
    # doesn't matter, only the shape.
    fake_arrays = [np.empty(shape) for shape in shapes]

    # Build and describe the optimized contraction path plan.
    contraction, desc = np.einsum_path(subscripts, *fake_arrays, optimize=optimize)
    logger.debug('%s contraction: \n%s', name, desc)

    # This will be mutated by our monkeypatched functions and assumes that each element is
    # accessible by an indexed-like variable, i.e. x0, x1... in this list's order.
    operands: list[np.ndarray | object] = list(fake_arrays)

    # AST statements in the function body; will be mutated by the monkeypatched functions
    body = []

    # Preserve the old numerical backend functions
    old_c_einsum = np._core.einsumfunc.c_einsum
    old_tensordot = np._core.einsumfunc.tensordot

    try:
        # Monkeypatch in our substitute functions
        np._core.einsumfunc.c_einsum = c_einsum
        np._core.einsumfunc.tensordot = tensordot

        # Run the real einsum() with fake data and fake numerical backend.
        np.einsum(subscripts, *fake_arrays, optimize=contraction)
    finally:
        # Restore the old numerical backend functions
        np._core.einsumfunc.c_einsum = old_c_einsum
        np._core.einsumfunc.tensordot = old_tensordot

    # The AST function representation; will always have the same name
    func = ast.FunctionDef(
        name='contraction',
        args=ast.arguments(
            args=[ast.arg(arg=f'x{i}') for i in range(len(shapes))],
        ),
        body=body + [ast.Return(
            value=ast.Name(id=f'x{len(operands) - 1}', ctx=ast.Load()),
        )],
    )
    ast.fix_missing_locations(func)  # Add line numbers

    code = compile(  # Compile to an in-memory anonymous module
        source=ast.Module(body=[func]), filename='<compiled tensor contraction>',
        mode='exec', flags=0, dont_inherit=1, optimize=2,
    )
    globs = {  # Globals accessible to the module
        '__builtins__': {},  # no built-ins
        'c_einsum': old_c_einsum,  # real numerical backends
        'tensordot': old_tensordot,
    }
    exec(code, globs)  # Evaluate the code, putting the function in globs
    return globs['contraction']  # Return the function reference

```

|

open

|

2025-02-26T20:23:41Z

|

2025-03-16T19:31:39Z

|

https://github.com/numpy/numpy/issues/28394

|

[

"01 - Enhancement"

] |

gtoombs-avidrone

| 11

|

Guovin/iptv-api

|

api

| 438

|

Docker container crashes immediately after starting

|

I am running tv-driver on iKuai Docker, with a command similar to the following.

```

docker run -v /etc/docker/config:/tv-driver/config -v /etc/docker/output:/tv-driver/output -d -p 8000:8000 guovern/tv-driver

```

The container crashes immediately; the log is as follows:

```

cron: unrecognized service

/tv-driver/updates/fofa/request.py:73: TqdmMonitorWarning: tqdm:disabling monitor support (monitor_interval = 0) due to:

can't start new thread

pbar = tqdm_asyncio(

Processing fofa for hotel: 0% 0/45 [00:00<?, ?it/s]Traceback (most recent call last):

File "/tv-driver/main.py", line 273, in <module>

scheduled_task()

File "/tv-driver/main.py", line 259, in scheduled_task

loop.run_until_complete(update_source.start())

File "/usr/local/lib/python3.8/asyncio/base_events.py", line 616, in run_until_complete

return future.result()

File "/tv-driver/main.py", line 244, in start

await self.main()

File "/tv-driver/main.py", line 148, in main

await self.visit_page(channel_names)

File "/tv-driver/main.py", line 117, in visit_page

setattr(self, result_attr, await task)

File "/tv-driver/updates/fofa/request.py", line 155, in get_channels_by_fofa

futures = [

File "/tv-driver/updates/fofa/request.py", line 156, in <listcomp>

executor.submit(process_fofa_channels, fofa_url) for fofa_url in fofa_urls

File "/usr/local/lib/python3.8/concurrent/futures/thread.py", line 188, in submit

self._adjust_thread_count()

File "/usr/local/lib/python3.8/concurrent/futures/thread.py", line 213, in _adjust_thread_count

t.start()

File "/usr/local/lib/python3.8/threading.py", line 852, in start

_start_new_thread(self._bootstrap, ())

RuntimeError: can't start new thread

Error in atexit._run_exitfuncs:

Traceback (most recent call last):

File "/root/.local/share/virtualenvs/tv-driver-D9SmWF1i/lib/python3.8/site-packages/tqdm/_monitor.py", line 44, in exit

self.join()

File "/usr/local/lib/python3.8/threading.py", line 1006, in join

raise RuntimeError("cannot join thread before it is started")

RuntimeError: cannot join thread before it is started

Processing fofa for hotel: 0% 0/45 [00:00<?, ?it/s]

/tv_entrypoint.sh: line 15: gunicorn: command not found

```
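The traceback shows `RuntimeError: can't start new thread` raised while submitting work to a thread pool, which suggests the container was not allowed to create more threads; the report does not establish the root cause. As a hedged illustration only, the sketch below caps the pool size and falls back to sequential processing when thread creation fails. The helper `run_bounded` and its `max_workers` default are hypothetical and are not taken from the project's code.

```python
from concurrent.futures import ThreadPoolExecutor


def run_bounded(func, items, max_workers=4):
    """Apply func to each item using a small thread pool.

    If the environment refuses to start threads (RuntimeError), fall back to
    running everything sequentially in the current thread. Note that a
    RuntimeError raised by func itself also triggers the fallback, so items
    may be processed twice; this is acceptable only for idempotent work.
    """
    items = list(items)
    try:
        with ThreadPoolExecutor(max_workers=max_workers) as executor:
            return list(executor.map(func, items))
    except RuntimeError:
        return [func(item) for item in items]
```

A call such as `run_bounded(len, ["a", "bb"])` returns the results in input order regardless of which path was taken.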

|

closed

|

2024-10-22T12:33:22Z

|

2024-11-05T08:32:53Z

|

https://github.com/Guovin/iptv-api/issues/438

|

[

"question"

] |

snowdream

| 4

|

ultralytics/yolov5

|

machine-learning

| 12,533

|

!yolo task=detect mode=predict

|

2023-12-21 09:13:37.146006: E external/local_xla/xla/stream_executor/cuda/cuda_dnn.cc:9261] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered

2023-12-21 09:13:37.146066: E external/local_xla/xla/stream_executor/cuda/cuda_fft.cc:607] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered

2023-12-21 09:13:37.147291: E external/local_xla/xla/stream_executor/cuda/cuda_blas.cc:1515] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

2023-12-21 09:13:38.201310: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

Ultralytics YOLOv8.0.20 🚀 Python-3.10.12 torch-2.1.0+cu121 CUDA:0 (Tesla T4, 15102MiB)

Model summary (fused): 218 layers, 25841497 parameters, 0 gradients, 78.7 GFLOPs

WARNING ⚠️ NMS time limit 0.550s exceeded

image 1/1 /content/DJI0009.JPG: 480x640 58 IMs, 54 IPs, 23 ITs, 74.2ms

Speed: 0.9ms pre-process, 74.2ms inference, 840.7ms postprocess per image at shape (1, 3, 640, 640)

Results saved to runs/detect/predict3

Can I increase the NMS time limit in predict mode in Google Colab with YOLOv8? Thank you.

|

closed

|

2023-12-21T09:16:19Z

|

2024-10-20T19:35:02Z

|

https://github.com/ultralytics/yolov5/issues/12533

|

[

"Stale"

] |

SkripsiFighter

| 7

|

nolar/kopf

|

asyncio

| 166

|

Invalid attribute apiVersion in build_object_reference()

|

> <a href="https://github.com/olivier-mauras"><img align="left" height="50" src="https://avatars3.githubusercontent.com/u/1299371?v=4"></a> An issue by [olivier-mauras](https://github.com/olivier-mauras) at _2019-08-06 05:54:25+00:00_

> Original URL: https://github.com/zalando-incubator/kopf/issues/166

>

## Actual Behavior

``` text

[2019-08-06 05:50:32,627] kopf.objects [ERROR ] [xxx-dev/tiller] Handler 'sa_delete' failed with an exception. Will retry.

Traceback (most recent call last):

File "/usr/local/lib/python3.7/site-packages/kopf/reactor/handling.py", line 387, in _execute

lifecycle=lifecycle, # just a default for the sub-handlers, not used directly.

File "/usr/local/lib/python3.7/site-packages/kopf/reactor/handling.py", line 478, in _call_handler

**kwargs,

File "/usr/local/lib/python3.7/site-packages/kopf/reactor/invocation.py", line 66, in invoke

result = await fn(*args, **kwargs)

File "./ns.py", line 235, in sa_delete

kopf.info(ns.to_dict(), reason='SA_DELETED', message='Managed service account got deleted')

File "/usr/local/lib/python3.7/site-packages/kopf/engines/posting.py", line 79, in info

event(obj, type='Normal', reason=reason, message=message)

File "/usr/local/lib/python3.7/site-packages/kopf/engines/posting.py", line 72, in event

ref = hierarchies.build_object_reference(obj)

File "/usr/local/lib/python3.7/site-packages/kopf/structs/hierarchies.py", line 12, in build_object_reference

apiVersion=body['apiVersion'],

KeyError: 'apiVersion'

```

## Steps to Reproduce the Problem

``` python

@kopf.on.delete('', 'v1', 'serviceaccounts', annotations={'custom/created_by': 'namespace-manager'})
async def sa_delete(body, namespace, **kwargs):
    try:
        api = kubernetes.client.CoreV1Api()
        ns = api.read_namespace(name=namespace)
    except ApiException as err:
        sprint('ERROR', 'Exception when calling CoreV1Api->read_namespace: {}'.format(err))

    kopf.info(ns.to_dict(), reason='SA_DELETED', message='Managed service account got deleted')
    return

```

#######

Culprit lies here in `hierarchies.py`

``` python

def build_object_reference(body):
    """
    Construct an object reference for the events.
    """
    return dict(
        apiVersion=body['apiVersion'],
        kind=body['kind'],
        name=body['metadata']['name'],
        uid=body['metadata']['uid'],
        namespace=body['metadata']['namespace'],
    )

```

As described in https://github.com/kubernetes-client/python/blob/master/kubernetes/docs/V1Namespace.md, the `apiVersion` attribute should be `api_version`.

Replacing line 12 with `api_version` does indeed make the above code work, but I'm not sure whether there are other implications, so I'm asking before sending you a PR.

---

> <a href="https://github.com/olivier-mauras"><img align="left" height="30" src="https://avatars3.githubusercontent.com/u/1299371?v=4"></a> Commented by [olivier-mauras](https://github.com/olivier-mauras) at _2019-08-07 14:37:17+00:00_

>

Oh apparently it's not the API that is inconsistent, but the body returned by on.xxx() handlers of kopf.

Here's an example:

body returned by on.resume/create

``` text

{'apiVersion': 'v1',

'kind': 'Namespace',

'metadata': {'annotations': {'cattle.io/status': '{"Conditions":[{"Type":"ResourceQuotaInit","Status":"True","Message":"","LastUpdateTime":"2019-04-29T08:26:31Z"},{"Type":"InitialRolesPopulated","Status":"True","Message":"","LastUpdateTime":"2019-04-29T08:26:32Z"}]}',

'kopf.zalando.org/last-handled-configuration': '{"spec": '

'{"finalizers": '

'["kubernetes"]}, '

'"annotations": '

'{"cattle.io/status": '

'"{\\"Conditions\\":[{\\"Type\\":\\"ResourceQuotaInit\\",\\"Status\\":\\"True\\",\\"Message\\":\\"\\",\\"LastUpdateTime\\":\\"2019-04-29T08:26:31Z\\"},{\\"Type\\":\\"InitialRolesPopulated\\",\\"Status\\":\\"True\\",\\"Message\\":\\"\\",\\"LastUpdateTime\\":\\"2019-04-29T08:26:32Z\\"}]}", '

'"lifecycle.cattle.io/create.namespace-auth": '

'"true"}}}',

'lifecycle.cattle.io/create.namespace-auth': 'true'},

'creationTimestamp': '2019-02-04T12:18:54Z',

'finalizers': ['controller.cattle.io/namespace-auth'],

'name': 'trident',

'resourceVersion': '27520896',

'selfLink': '/api/v1/namespaces/trident',

'uid': '0a305ee9-2877-11e9-a525-005056abd413'},

'spec': {'finalizers': ['kubernetes']},

'status': {'phase': 'Active'}}

```

body returned directly by the API with a read_namespace call:

``` text

{'api_version': 'v1',

'kind': 'Namespace',

'metadata': {'annotations': {'cattle.io/status': '{"Conditions":[{"Type":"ResourceQuotaInit","Status":"True","Message":"","LastUpdateTime":"2019-04-29T08:26:31Z"},{"Type":"InitialRolesPopulated","Status":"True","Message":"","LastUpdateTime":"2019-04-29T08:26:32Z"}]}',

'kopf.zalando.org/last-handled-configuration': '{"spec": '

'{"finalizers": '

'["kubernetes"]}, '

'"annotations": '

'{"cattle.io/status": '

'"{\\"Conditions\\":[{\\"Type\\":\\"ResourceQuotaInit\\",\\"Status\\":\\"True\\",\\"Message\\":\\"\\",\\"LastUpdateTime\\":\\"2019-04-29T08:26:31Z\\"},{\\"Type\\":\\"InitialRolesPopulated\\",\\"Status\\":\\"True\\",\\"Message\\":\\"\\",\\"LastUpdateTime\\":\\"2019-04-29T08:26:32Z\\"}]}", '

'"lifecycle.cattle.io/create.namespace-auth": '

'"true"}}}',

'lifecycle.cattle.io/create.namespace-auth': 'true'},

'cluster_name': None,

'creation_timestamp': datetime.datetime(2019, 2, 4, 12, 18, 54, tzinfo=tzlocal()),

'deletion_grace_period_seconds': None,

'deletion_timestamp': None,

'finalizers': ['controller.cattle.io/namespace-auth'],

'generate_name': None,

'generation': None,

'initializers': None,

'name': 'trident',

'namespace': None,

'owner_references': None,

'resource_version': '27520896',

'self_link': '/api/v1/namespaces/trident',

'uid': '0a305ee9-2877-11e9-a525-005056abd413'},

'spec': {'finalizers': ['kubernetes']},

'status': {'phase': 'Active'}}

```

I had taken example from https://kopf.readthedocs.io/en/latest/events/#other-objects

https://github.com/kubernetes-client/python/blob/master/kubernetes/docs/V1Pod.md is clear that it should return `api_version`

---

> <a href="https://github.com/psycho-ir"><img align="left" height="30" src="https://avatars0.githubusercontent.com/u/726875?v=4"></a> Commented by [psycho-ir](https://github.com/psycho-ir) at _2019-08-07 15:40:18+00:00_

>

I think that's because of the naming difference between `pykube` (camelCase) and `kubernetes-client` (snake_case).

So if you use `pykube-ng` instead of `kubernetes-client`, this issue should probably go away, at least as an interim solution.

We probably need to find a proper way to handle these naming mismatches, [nolar](https://github.com/nolar).

---

> <a href="https://github.com/nolar"><img align="left" height="30" src="https://avatars0.githubusercontent.com/u/544296?v=4"></a> Commented by [nolar](https://github.com/nolar) at _2019-08-07 16:26:45+00:00_

>

Kopf is agnostic of the clients used by the developers (kind of). The only "canonical" reference is the Kubernetes API. The API doc [says the field name is `apiVersion`](https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.15/#objectreference-v1-core).

This is how Kopf gets it from the API, and passes it to the handlers. And this is how Kopf expects it from the handlers to be passed to the API.

In the base case, there are no Kubernetes clients at all, just the raw dicts, the json parser-serializer, and an HTTP library.

`api_version` is a naming convention used in the Python Kubernetes client only (in an attempt to follow Python's snake_case convention). I.e., it is a client's issue that it produces and consumes such strange non-API-compatible dicts.

---

Kopf also tries to be friendly to all clients — but to an extent. I'm not sure if bringing the workarounds for literally _all_ clients with their nuances would be a good idea.

Even for the official client alone (just for the [principle of least astonishment](https://en.wikipedia.org/wiki/Principle_of_least_astonishment)), it would mean one of the following:

* Adding the duplicating dict keys (both `apiVersion` & `api_version`), and filtering out the non-canonical forms on the internal use, the canonical forms on the external use — thus increasing the complexity.

* Making special classes for all these structs, with properties as aliases. And so we turn Kopf into a client library with its own K8s object-manipulation DSL — which goes against some principles of API-client neutrality. (Now it runs on plain dicts.)

* Allowing the design and conventions of the official client (sometimes questionable) to dictate the design and conventions of Kopf, making Kopf incompatible with other clients that _do_ follow the canonical API naming.

I see none of these as a good solution to the problem.

At the moment, and in my opinion, the best solution is to do nothing, and to let this issue exist (to be solved by the operator developers on the app-level).
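A minimal sketch of such an app-level fix, assuming the operator holds snake_case dicts (as the official client produces) and needs the camelCase field names of the Kubernetes API. The helper names `to_camel` and `camelize_keys` are hypothetical, and the `skip` set, which leaves user-defined keys under `labels` and `annotations` untouched, is an assumption rather than something Kopf or the client provides:

```python
def to_camel(name: str) -> str:
    """Convert a snake_case field name to camelCase (api_version -> apiVersion)."""
    head, *rest = name.split("_")
    return head + "".join(part.capitalize() for part in rest)


def camelize_keys(obj, skip=("labels", "annotations")):
    """Recursively rename dict keys, leaving user-defined maps untouched."""
    if isinstance(obj, dict):
        return {
            # Values under skipped keys hold user data, so they are copied as-is.
            to_camel(key): value if key in skip else camelize_keys(value, skip)
            for key, value in obj.items()
        }
    if isinstance(obj, list):
        return [camelize_keys(item, skip) for item in obj]
    return obj
```

A dict such as `{"api_version": "v1"}` would then be passed through `camelize_keys` before being handed to Kopf.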

---

> <a href="https://github.com/olivier-mauras"><img align="left" height="30" src="https://avatars3.githubusercontent.com/u/1299371?v=4"></a> Commented by [olivier-mauras](https://github.com/olivier-mauras) at _2019-08-07 17:09:16+00:00_

>

[nolar](https://github.com/nolar) Thanks for the reply.

I'm actually super fine to fix that in my code directly. I guess we can close the PR

|

closed

|

2020-08-18T19:59:31Z

|

2020-08-23T20:48:38Z

|

https://github.com/nolar/kopf/issues/166

|

[

"wontfix",

"archive"

] |

kopf-archiver[bot]

| 0

|

schemathesis/schemathesis

|

graphql

| 1,762

|

Documentation += report format

|

I am trying to find out how to view the generated report file. There is neither a file extension nor any hint in the documentation.

Can it be added to the documentation near the option? Is it gzip compressed data? (did not manage to unzip yet...) Or what tool to use to read it? I only found:

`--report FILENAME Upload test report to Schemathesis.io, or store in a file`

Thank you

|

closed

|

2023-08-18T10:27:40Z

|

2023-08-21T15:28:02Z

|

https://github.com/schemathesis/schemathesis/issues/1762

|

[] |

tsyg

| 2

|

jupyterlab/jupyter-ai

|

jupyter

| 671

|

Change the list of provided Anthropic and Bedrock models

|

<!-- Welcome! Thank you for contributing. These HTML comments will not render in the issue, but you can delete them once you've read them if you prefer! -->

<!--

Thanks for thinking of a way to improve JupyterLab. If this solves a problem for you, then it probably solves that problem for lots of people! So the whole community will benefit from this request.

Before creating a new feature request please search the issues for relevant feature requests.

-->

### Problem

Anthropic's Claude v1 (`anthropic.claude-v1`) is no longer supported and the Claude-v3-Sonnet model needs to be added to the `%ai list` command. The drop down list in the left panel also needs to be updated for the same model changes.

Update for three providers: `bedrock-chat`, `anthropic`, `anthropic-chat`

<!-- Provide a clear and concise description of what problem this feature will solve. For example:

* I'm always frustrated when [...] because [...]

* I would like it if [...] happened when I [...] because [...]

-->

|

closed

|

2024-03-04T22:41:39Z

|

2024-03-07T19:38:37Z

|

https://github.com/jupyterlab/jupyter-ai/issues/671

|

[

"enhancement"

] |

srdas

| 2

|

aleju/imgaug

|

deep-learning

| 3

|

Cant load augmenters

|

As said in the README, I copied the required files into my directory and ran the

`from imgaug import augmenters as iaa`

but I get an error

"ImportError: cannot import name 'augmenters'"

|

closed

|

2016-12-10T16:34:31Z

|

2016-12-10T17:52:29Z

|

https://github.com/aleju/imgaug/issues/3

|

[] |

SarthakYadav

| 9

|

deepspeedai/DeepSpeed

|

pytorch

| 7,041

|

ambiguity in Deepspeed Ulysses

|

Hi,

There is a discrepancy between Figure 2 and Section 3.1 in the DeepSpeed-Ulysses paper (https://arxiv.org/abs/2309.14509). My understanding from the text is that the entire method simply partitions the sequence length N across P available devices, and that’s it. However, Figure 3 seems to suggest that there is an additional partitioning happening across attention heads and devices. Is that correct? If so, could you provide an equation to better explain this method?

In addition, if the partitioning happens only in the queries (Q), keys (K), and values (V) embeddings, while attention is still calculated on the full NxN sequence (as the text states that QKV embeddings are gathered into global QKV before computing attention...), then how does this method help with longer sequences? The main challenge in handling long sequences is the quadratic computational complexity of attention, so it is unclear how this method addresses that issue.

I believe writing down the equations to complement the figure would greatly help clarify the ambiguity in this method.

|

closed

|

2025-02-16T10:56:21Z

|

2025-02-24T07:45:24Z

|

https://github.com/deepspeedai/DeepSpeed/issues/7041

|

[] |

rasoolfa

| 0

|

graphql-python/graphql-core

|

graphql

| 226

|

How to pass additional information to GraphQLResolveInfo

|

Hi there 😊

I was looking at this PR on Strawberry: https://github.com/strawberry-graphql/strawberry/pull/3461

and I was wondering if there's a nicer way to pass the input extensions data around, so I stumbled on the ExecutionContext class, which can be useful for us to customise the GraphQLResolveInfo data[1], but I haven't figured out a nice way to pass some data to ExecutionContext.build without customising the execute function here: https://github.com/graphql-python/graphql-core/blob/9dcf25e66f6ed36b77de788621cf50bab600d1d3/src/graphql/execution/execute.py#L1844-L1856

Is there a better alternative for something like this?

[1] We also have a custom version of info, so customising the ExecutionContext class will help with that too :D

|

open

|

2024-08-27T10:24:57Z

|

2025-02-02T09:09:50Z

|

https://github.com/graphql-python/graphql-core/issues/226

|

[] |

patrick91

| 2

|

Ehco1996/django-sspanel

|

django

| 412

|

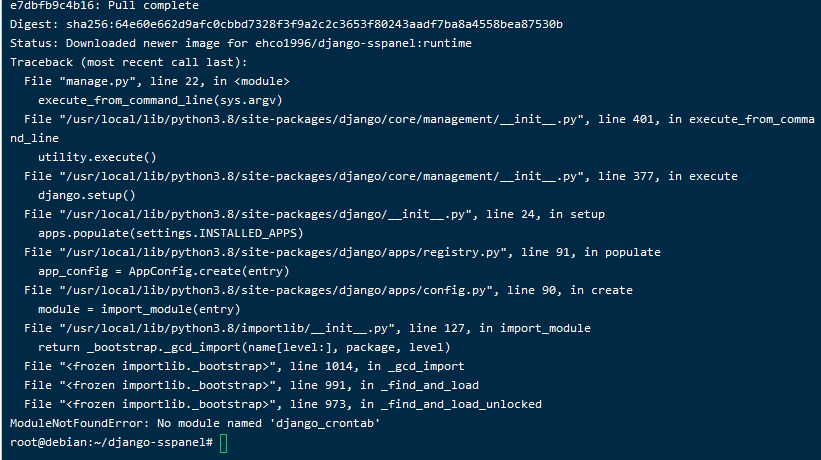

Startup fails with error: No module named 'django_crontab'

|

**Problem description**

Using the 2.4 release: https://github.com/Ehco1996/django-sspanel/releases/tag/2.4

Running the command `docker-compose run --rm web python manage.py collectstatic --noinput` fails with: No module named 'django_crontab'

**Project configuration file**

**How to reproduce**

wget https://codeload.github.com/Ehco1996/django-sspanel/zip/2.4

unzip django-sspanel-2.4

cd django-sspanel-2.4

docker-compose run --rm web python manage.py collectstatic --noinput

**Related screenshots/logs**

**Other information**

Running docker-compose up produces a whole pile of errors >.>

|

closed

|

2020-10-04T03:30:30Z

|

2020-10-04T10:12:52Z

|

https://github.com/Ehco1996/django-sspanel/issues/412

|

[

"bug"

] |

jackjieYYY

| 2

|

gradio-app/gradio

|

python

| 9,977

|

Queuing related guides contain outdated information about `concurrency_count`

|

### Describe the bug

These guides related to queuing still refer to `concurrency_count`:

- [Queuing](https://www.gradio.app/guides/queuing)

- [Setting Up a Demo for Maximum Performance](https://www.gradio.app/guides/setting-up-a-demo-for-maximum-performance)

However, as confirmed in #9463:

> The `concurrency_count` parameter has been removed from `.queue()`. In Gradio 4, this parameter was already deprecated and had no effect. In Gradio 5, this parameter has been removed altogether.

Running the code from [Queuing](https://www.gradio.app/guides/queuing) guide results in the error below:

```

Exception has occurred: TypeError

EventListener._setup.<locals>.event_trigger() got an unexpected keyword argument 'concurrency_count'

File "./test_gradio.py", line 23, in <module>

greet_btn.click(fn=greet, inputs=[tag, output], outputs=[

TypeError: EventListener._setup.<locals>.event_trigger() got an unexpected keyword argument 'concurrency_count'

```

### Have you searched existing issues? 🔎

- [X] I have searched and found no existing issues

### Reproduction

```python
# Sample code from https://www.gradio.app/guides/queuing
import gradio as gr

with gr.Blocks() as demo:
    prompt = gr.Textbox()
    image = gr.Image()
    generate_btn = gr.Button("Generate Image")
    generate_btn.click(image_gen, prompt, image, concurrency_count=5)
```

### Screenshot

_No response_

### Logs

_No response_

### System Info

```shell

Gradio Environment Information:

------------------------------

Operating System: Darwin

gradio version: 5.6.0

gradio_client version: 1.4.3

```

### Severity

I can work around it

|

closed

|

2024-11-17T23:13:20Z

|

2024-11-24T05:47:38Z

|

https://github.com/gradio-app/gradio/issues/9977

|

[

"good first issue",

"docs/website"

] |

the-eddie

| 3

|

sqlalchemy/alembic

|

sqlalchemy

| 595

|

Adding a unique constraint doesn't work when more than one columns are given.

|

So I tried adding a unique constraint for two columns of a table at the same time and migrating to it. This works if I drop the database and create the new one using these changes but I cannot migrate to the new database version using alembic.

Here's the class I want to add the constraint to:

```
class UserItems(BASE):
    __tablename__ = 'user_items'

    id = Column(Integer, primary_key=True)
    user_id = Column(Integer, ForeignKey('users.id'))
    user = relationship('Users', back_populates='items')
    item_id = Column(Integer, ForeignKey('items.id'), nullable=False)
    item = relationship('Items', back_populates='users')

    # The combination of a user and an item should be unique.
    __table_args__ = (UniqueConstraint('user_id', 'item_id',
                                       name='unique_association'), )
```

And here's the code that is supposed to upgrade the database:

```
def upgrade():
    with op.batch_alter_table("user_items") as batch_op:
        batch_op.create_unique_constraint("unique_association", "user_items", ["user_id", "item_id"])
```

When I run the `python3 migrate.py db upgrade` command, I get this error:

```

INFO [alembic.runtime.migration] Context impl PostgresqlImpl.

INFO [alembic.runtime.migration] Will assume transactional DDL.

INFO [alembic.runtime.migration] Running upgrade -> 740399c84e0f, empty message

Traceback (most recent call last):

File "migrate.py", line 13, in <module>

manager.run()

File "/usr/local/lib/python3.5/dist-packages/flask_script/__init__.py", line 417, in run

result = self.handle(argv[0], argv[1:])

File "/usr/local/lib/python3.5/dist-packages/flask_script/__init__.py", line 386, in handle

res = handle(*args, **config)

File "/usr/local/lib/python3.5/dist-packages/flask_script/commands.py", line 216, in __call__

return self.run(*args, **kwargs)

File "/usr/local/lib/python3.5/dist-packages/flask_migrate/__init__.py", line 95, in wrapped

f(*args, **kwargs)

File "/usr/local/lib/python3.5/dist-packages/flask_migrate/__init__.py", line 280, in upgrade

command.upgrade(config, revision, sql=sql, tag=tag)

File "/usr/local/lib/python3.5/dist-packages/alembic/command.py", line 276, in upgrade

script.run_env()

File "/usr/local/lib/python3.5/dist-packages/alembic/script/base.py", line 475, in run_env

util.load_python_file(self.dir, "env.py")

File "/usr/local/lib/python3.5/dist-packages/alembic/util/pyfiles.py", line 90, in load_python_file

module = load_module_py(module_id, path)

File "/usr/local/lib/python3.5/dist-packages/alembic/util/compat.py", line 156, in load_module_py

spec.loader.exec_module(module)

File "<frozen importlib._bootstrap_external>", line 665, in exec_module

File "<frozen importlib._bootstrap>", line 222, in _call_with_frames_removed

File "migrations/env.py", line 96, in <module>

run_migrations_online()

File "migrations/env.py", line 90, in run_migrations_online

context.run_migrations()

File "<string>", line 8, in run_migrations

File "/usr/local/lib/python3.5/dist-packages/alembic/runtime/environment.py", line 839, in run_migrations

self.get_context().run_migrations(**kw)

File "/usr/local/lib/python3.5/dist-packages/alembic/runtime/migration.py", line 361, in run_migrations

step.migration_fn(**kw)

File "/vagrant/Project/migrations/versions/740399c84e0f_.py", line 26, in upgrade

["user_id", "item_id"])

TypeError: <flask_script.commands.Command object at 0x7fe108a46fd0>: create_unique_constraint() takes 3 positional arguments but 4 were given

```
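The last line of the traceback hints at the cause: inside `batch_alter_table` the table is already known, so the batch form of `create_unique_constraint` appears to accept only the constraint name and the column list. The stand-in class below is hypothetical (it is not Alembic code); it only mirrors the arity implied by the error message to show why also passing the table name fails:

```python
class FakeBatchOp:
    """Hypothetical stand-in mirroring the arity from the error message."""

    def __init__(self):
        self.constraints = []

    def create_unique_constraint(self, constraint_name, columns, **kw):
        # self + constraint_name + columns = the "3 positional arguments"
        self.constraints.append((constraint_name, tuple(columns)))


batch_op = FakeBatchOp()

# Passing the table name as well reproduces the reported TypeError.
try:
    batch_op.create_unique_constraint(
        "unique_association", "user_items", ["user_id", "item_id"]
    )
except TypeError as exc:
    error = str(exc)

# Dropping the table name leaves just the constraint name and the columns.
batch_op.create_unique_constraint("unique_association", ["user_id", "item_id"])
```

If the real batch API matches this arity, removing `"user_items"` from the call in `upgrade()` should be enough.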

|

closed

|

2019-08-25T08:44:50Z

|

2019-09-17T16:55:26Z

|

https://github.com/sqlalchemy/alembic/issues/595

|

[

"question"

] |

Nikitas-io

| 4

|

TracecatHQ/tracecat

|

automation

| 7

|

CrowdStrike Integration

|

You can start with my CS_BADGER; it's an API for CS's Splunk that uses MFA / persistent tokens:

I know it's gross ... I want to rewrite it with PING auth but I suck with SAML in python

https://github.com/freeload101/SCRIPTS/blob/master/Bash/CS_BADGER/CS_BADGER.sh

|

closed

|

2024-03-20T19:17:05Z

|

2024-06-16T19:14:51Z

|

https://github.com/TracecatHQ/tracecat/issues/7

|

[

"enhancement",

"integrations"

] |

freeload101

| 5

|

deepset-ai/haystack

|

nlp

| 8,912

|

`tools_strict` option in `OpenAIChatGenerator` broken with `ComponentTool`

|

**Describe the bug**

When using `ComponentTool` and setting `tools_strict=True` OpenAI API is complaining that `additionalProperties` of the schema is not `false`.

**Error message**

```

---------------------------------------------------------------------------

BadRequestError Traceback (most recent call last)

Cell In[19], line 1

----> 1 result = generator.run(messages=chat_messages["prompt"], tools=tool_invoker.tools)

2 result

File ~/.local/lib/python3.12/site-packages/haystack/components/generators/chat/openai.py:246, in OpenAIChatGenerator.run(self, messages, streaming_callback, generation_kwargs, tools, tools_strict)

237 streaming_callback = streaming_callback or self.streaming_callback

239 api_args = self._prepare_api_call(

240 messages=messages,

241 streaming_callback=streaming_callback,

(...)

244 tools_strict=tools_strict,

245 )

--> 246 chat_completion: Union[Stream[ChatCompletionChunk], ChatCompletion] = self.client.chat.completions.create(

247 **api_args

248 )

250 is_streaming = isinstance(chat_completion, Stream)

251 assert is_streaming or streaming_callback is None

File ~/.local/lib/python3.12/site-packages/ddtrace/contrib/trace_utils.py:336, in with_traced_module.<locals>.with_mod.<locals>.wrapper(wrapped, instance, args, kwargs)

334 log.debug("Pin not found for traced method %r", wrapped)

335 return wrapped(*args, **kwargs)

--> 336 return func(mod, pin, wrapped, instance, args, kwargs)

File ~/.local/lib/python3.12/site-packages/ddtrace/contrib/internal/openai/patch.py:282, in _patched_endpoint.<locals>.patched_endpoint(openai, pin, func, instance, args, kwargs)

280 resp, err = None, None

281 try:

--> 282 resp = func(*args, **kwargs)

283 return resp

284 except Exception as e:

File ~/.local/lib/python3.12/site-packages/openai/_utils/_utils.py:279, in required_args.<locals>.inner.<locals>.wrapper(*args, **kwargs)

277 msg = f"Missing required argument: {quote(missing[0])}"

278 raise TypeError(msg)

--> 279 return func(*args, **kwargs)

File ~/.local/lib/python3.12/site-packages/openai/resources/chat/completions/completions.py:879, in Completions.create(self, messages, model, audio, frequency_penalty, function_call, functions, logit_bias, logprobs, max_completion_tokens, max_tokens, metadata, modalities, n, parallel_tool_calls, prediction, presence_penalty, reasoning_effort, response_format, seed, service_tier, stop, store, stream, stream_options, temperature, tool_choice, tools, top_logprobs, top_p, user, extra_headers, extra_query, extra_body, timeout)

837 @required_args(["messages", "model"], ["messages", "model", "stream"])

838 def create(

839 self,

(...)

876 timeout: float | httpx.Timeout | None | NotGiven = NOT_GIVEN,

877 ) -> ChatCompletion | Stream[ChatCompletionChunk]:

878 validate_response_format(response_format)

--> 879 return self._post(

880 "/chat/completions",

881 body=maybe_transform(

882 {

883 "messages": messages,

884 "model": model,

885 "audio": audio,

886 "frequency_penalty": frequency_penalty,

887 "function_call": function_call,

888 "functions": functions,

889 "logit_bias": logit_bias,

890 "logprobs": logprobs,

891 "max_completion_tokens": max_completion_tokens,

892 "max_tokens": max_tokens,

893 "metadata": metadata,

894 "modalities": modalities,

895 "n": n,

896 "parallel_tool_calls": parallel_tool_calls,

897 "prediction": prediction,

898 "presence_penalty": presence_penalty,

899 "reasoning_effort": reasoning_effort,

900 "response_format": response_format,

901 "seed": seed,

902 "service_tier": service_tier,

903 "stop": stop,

904 "store": store,

905 "stream": stream,

906 "stream_options": stream_options,

907 "temperature": temperature,

908 "tool_choice": tool_choice,

909 "tools": tools,

910 "top_logprobs": top_logprobs,

911 "top_p": top_p,

912 "user": user,

913 },

914 completion_create_params.CompletionCreateParams,

915 ),

916 options=make_request_options(

917 extra_headers=extra_headers, extra_query=extra_query, extra_body=extra_body, timeout=timeout

918 ),

919 cast_to=ChatCompletion,

920 stream=stream or False,

921 stream_cls=Stream[ChatCompletionChunk],

922 )

File ~/.local/lib/python3.12/site-packages/openai/_base_client.py:1290, in SyncAPIClient.post(self, path, cast_to, body, options, files, stream, stream_cls)

1276 def post(

1277 self,

1278 path: str,

(...)

1285 stream_cls: type[_StreamT] | None = None,

1286 ) -> ResponseT | _StreamT:

1287 opts = FinalRequestOptions.construct(

1288 method="post", url=path, json_data=body, files=to_httpx_files(files), **options

1289 )

-> 1290 return cast(ResponseT, self.request(cast_to, opts, stream=stream, stream_cls=stream_cls))

File ~/.local/lib/python3.12/site-packages/openai/_base_client.py:967, in SyncAPIClient.request(self, cast_to, options, remaining_retries, stream, stream_cls)

964 else:

965 retries_taken = 0

--> 967 return self._request(

968 cast_to=cast_to,

969 options=options,

970 stream=stream,

971 stream_cls=stream_cls,

972 retries_taken=retries_taken,

973 )

File ~/.local/lib/python3.12/site-packages/openai/_base_client.py:1071, in SyncAPIClient._request(self, cast_to, options, retries_taken, stream, stream_cls)

1068 err.response.read()

1070 log.debug("Re-raising status error")

-> 1071 raise self._make_status_error_from_response(err.response) from None

1073 return self._process_response(

1074 cast_to=cast_to,

1075 options=options,

(...)

1079 retries_taken=retries_taken,

1080 )

BadRequestError: Error code: 400 - {'error': {'message': "Invalid schema for function 'web_search': In context=(), 'additionalProperties' is required to be supplied and to be false.", 'type': 'invalid_request_error', 'param': 'tools[0].function.parameters', 'code': 'invalid_function_parameters'}}

```

**Expected behavior**

`tools_strict=True` works with `ComponentTool`

**Additional context**

Add any other context about the problem here, like document types / preprocessing steps / settings of reader etc.

**To Reproduce**

```python

from haystack.components.generators.chat.openai import OpenAIChatGenerator

from haystack.dataclasses.chat_message import ChatMessage

gen = OpenAIChatGenerator.from_dict({'type': 'haystack.components.generators.chat.openai.OpenAIChatGenerator',

'init_parameters': {'model': 'gpt-4o',

'streaming_callback': None,

'api_base_url': None,

'organization': None,

'generation_kwargs': {},

'api_key': {'type': 'env_var',

'env_vars': ['OPENAI_API_KEY'],

'strict': False},

'timeout': None,

'max_retries': None,

'tools': [{'type': 'haystack.tools.component_tool.ComponentTool',

'data': {'name': 'web_search',

'description': 'Search the web for current information on any topic',

'parameters': {'type': 'object',

'properties': {'query': {'type': 'string',

'description': 'Search query.'}},

'required': ['query']},

'component': {'type': 'haystack.components.websearch.serper_dev.SerperDevWebSearch',

'init_parameters': {'top_k': 10,

'allowed_domains': None,

'search_params': {},

'api_key': {'type': 'env_var',

'env_vars': ['SERPERDEV_API_KEY'],

'strict': False}}}}}],

'tools_strict': True}})

gen.run([ChatMessage.from_user("How is the weather today in Berlin?")])

```

**FAQ Check**

- [ ] Have you had a look at [our new FAQ page](https://docs.haystack.deepset.ai/docs/faq)?

**System:**

- OS:

- GPU/CPU:

- Haystack version (commit or version number): 2.10.2

- DocumentStore:

- Reader:

- Retriever:

|

closed

|

2025-02-24T13:42:08Z

|

2025-03-03T15:23:26Z

|

https://github.com/deepset-ai/haystack/issues/8912

|

[

"P1"

] |

tstadel

| 0

|

sgl-project/sglang

|

pytorch

| 4,013

|

[Bug] Qwen2.5-32B-Instruct-GPTQ-Int answer end abnormally,Qwen2.5-32B-Instruct answer is ok,use vllm is ok

|

### Checklist

- [x] 1. I have searched related issues but cannot get the expected help.

- [x] 2. The bug has not been fixed in the latest version.

- [ ] 3. Please note that if the bug-related issue you submitted lacks corresponding environment info and a minimal reproducible demo, it will be challenging for us to reproduce and resolve the issue, reducing the likelihood of receiving feedback.

- [ ] 4. If the issue you raised is not a bug but a question, please raise a discussion at https://github.com/sgl-project/sglang/discussions/new/choose Otherwise, it will be closed.

- [x] 5. Please use English, otherwise it will be closed.

### Describe the bug

sglang 0.4.3.post2

start command: python3 -m sglang.launch_server --model-path /Qwen2.5-32B-Instruct-GPTQ-Int4 --host 0.0.0.0 --port 8088 --tensor-parallel-size 2

Qwen2.5-32B-Instruct is ok

start command: python3 -m sglang.launch_server --model-path /Qwen2.5-32B-Instruct --host 0.0.0.0 --port 8088 --tensor-parallel-size 2

use vllm 0.7.3 is ok

start command: vllm serve /Qwen2.5-32B-Instruct-GPTQ-Int4 --host 0.0.0.0 --port 8088 --served-model-name qwen -tp 2

### Reproduction

-

### Environment

-

|

closed

|

2025-03-03T06:32:39Z

|

2025-03-05T07:41:11Z

|

https://github.com/sgl-project/sglang/issues/4013

|

[] |

Flynn-Zh

| 5

|

piskvorky/gensim

|

nlp

| 3,234

|

Update release instructions

|

https://github.com/RaRe-Technologies/gensim/wiki/Maintainer-page is out of date

- We don't use gensim-wheels repo anymore - everything happens in the main gensim repo

- Wheel building is less of a pain now - GHA takes care of most things

- Some release scripts appear out of date, e.g. prepare.sh

- A general description of what we're doing and why with respect to the wheel builds (interplay between manylinux, multibuild, etc) would be helpful

|

open

|

2021-09-14T13:45:07Z

|

2021-09-14T13:45:17Z

|

https://github.com/piskvorky/gensim/issues/3234

|

[

"documentation",

"housekeeping"

] |

mpenkov

| 0

|

plotly/dash

|

plotly

| 2,761

|

[BUG] extending a trace in callback using extendData property doesn't work for a figure with multi-level axis

|

Hello, plotly community,

I'm seeking your help in resolving a trace extension issue for a multi-level axis figure.

**Describe your context**

Please provide us your environment, so we can easily reproduce the issue.

- replace the result of `pip list | grep dash` below

-

```

dash 2.14.1

dash-bootstrap-components 1.5.0

dash-core-components 2.0.0

dash-extendable-graph 1.3.0

dash-html-components 2.0.0

dash-table 5.0.0

dash-treeview-antd 0.0.1

```

- if frontend related, tell us your Browser, Version and OS

- OS: Windows 10

- Browser: Microsoft Edge

**Describe the bug**

Extending a trace in callback using extendData property doesn't work for a figure with multi-level axis

```
import dash
from dash.dependencies import Input, Output, State
import dash_html_components as html
import dash_core_components as dcc
import random

app = dash.Dash(__name__)

app.layout = html.Div([
    html.Div([
        dcc.Graph(
            id='graph-extendable',
            figure=dict(
                data=[{'x': [0, 1, 2, 3, 4],
                       'y': [[0,0,0,0], [0,0,0,0]],
                       'mode':'lines+markers'
                       }],
            )
        ),
    ]),
    dcc.Interval(
        id='interval-graph-update',
        interval=1000,
        n_intervals=0),
])

@app.callback(Output('graph-extendable', 'extendData'),
              [Input('interval-graph-update', 'n_intervals')],
              [State('graph-extendable', 'figure')])
def update_extend_traces_traceselect(n_intervals, existing):
    print("")
    print(existing['data'][0])
    x_new = existing['data'][0]['x'][-1] + 1
    d = dict(x=[[x_new]], y=[[[0],[0]]])
    print(d)
    return d, [0]

if __name__ == '__main__':
    app.run_server(debug=True)
```

produces the following sequence of the trace data updates:

`{'x': [0, 1, 2, 3, 4], 'y': [[0, 0, 0, 0], [0, 0, 0, 0]], 'mode': 'lines+markers'}

{'x': [[5]], 'y': [[[0], [0]]]}

{'x': [0, 1, 2, 3, 4, **5**], 'y': [[0, 0, 0, 0], [0, 0, 0, 0]**, [0], [0]**], 'mode': 'lines+markers'}

{'x': [[6]], 'y': [[[0], [0]]]}

{'x': [0, 1, 2, 3, 4, **5, 6**], 'y': [[0, 0, 0, 0], [0, 0, 0, 0], **[0], [0], [0], [0]**], 'mode': 'lines+markers'}

{'x': [[7]], 'y': [[[0], [0]]]}

{'x': [0, 1, 2, 3, 4, **5, 6, 7**], 'y': [[0, 0, 0, 0], [0, 0, 0, 0], **[0], [0], [0], [0], [0], [0]**], 'mode': 'lines+markers'}

{'x': [[8]], 'y': [[[0], [0]]]}`

**Expected behavior**

it's expected that both existing levels of y axis are extended 'y': [[0, 0, 0, 0, **0**], [0, 0, 0, 0, **0**]]

instead of adding new levels 'y': [[0, 0, 0, 0], [0, 0, 0, 0], **[0], [0]**]
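The difference between the observed and the expected behavior can be sketched with plain lists. This is an illustration only, not Dash or Plotly code, and `extend_top_level` and `extend_each_level` are hypothetical names. The first mimics what the printed trace data shows (new items appended to the outer `y` list); the second does what the report expects (each existing level extended):

```python
def extend_top_level(trace_y, new_y):
    """Observed: the new sub-lists are appended as extra levels."""
    return trace_y + new_y


def extend_each_level(trace_y, new_y):
    """Expected: each existing level grows by its matching new points."""
    return [level + extra for level, extra in zip(trace_y, new_y)]


y = [[0, 0, 0, 0], [0, 0, 0, 0]]
update = [[0], [0]]  # the inner value of y=[[[0], [0]]] for trace 0

observed = extend_top_level(y, update)
expected = extend_each_level(y, update)
```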

|

closed

|

2024-02-16T00:06:01Z

|

2024-07-25T13:13:30Z

|

https://github.com/plotly/dash/issues/2761

|

[] |

dmitrii-erkin

| 2

|

ploomber/ploomber

|

jupyter

| 922

|

lint failing when using `ploomber nb --remove`

|

From Slack:

> Currently, in ploomber, after running ploomber nb remove, the parameters cell is empty. This causes checkers to complain as product variable doesn’t exist.

It would be great if, instead of being empty, it was a TypedDict

|

open

|

2022-07-19T15:01:57Z

|

2022-07-19T15:02:05Z

|

https://github.com/ploomber/ploomber/issues/922

|

[] |

edublancas

| 0

|

ydataai/ydata-profiling

|

data-science

| 1,514

|

Bug Report

|

### Current Behaviour

IndexError: list index out of range

### Expected Behaviour

The data is getting summarized but during generating report structure it gives IndexError: list index out of range

### Data Description

Dataset link - https://www.kaggle.com/datasets/himanshupoddar/zomato-bangalore-restaurants

### Code that reproduces the bug

_No response_

### pandas-profiling version

3.2.0

### Dependencies

```Text

pandas

numpy

```

### OS

windows

### Checklist

- [X] There is not yet another bug report for this issue in the [issue tracker](https://github.com/ydataai/pandas-profiling/issues)

- [X] The problem is reproducible from this bug report. [This guide](http://matthewrocklin.com/blog/work/2018/02/28/minimal-bug-reports) can help to craft a minimal bug report.

- [X] The issue has not been resolved by the entries listed under [Common Issues](https://pandas-profiling.ydata.ai/docs/master/pages/support_contrib/common_issues.html).

|

open

|

2023-12-05T14:15:17Z

|

2024-01-18T07:24:14Z

|

https://github.com/ydataai/ydata-profiling/issues/1514

|

[

"information requested ❔"

] |

Shashankb1910

| 2

|

tortoise/tortoise-orm

|

asyncio

| 1,774

|

Enums not quoted (bug of pypika-tortoise)

|

**Describe the bug**

Reported here: https://github.com/tortoise/pypika-tortoise/issues/7

**To Reproduce**

```py
from tortoise import Model, fields, run_async
from tortoise.contrib.test import init_memory_sqlite
from tortoise.fields.base import StrEnum


class MyModel(Model):
    id = fields.IntField(pk=True)
    name = fields.TextField()


class MyEnum(StrEnum):
    A = "a"


@init_memory_sqlite
async def do():
    await MyModel.create(name="a")
    qs = MyModel.filter(name=MyEnum.A)
    qs._make_query()
    print(qs.query)
    # expected SELECT "id","name" FROM "mymodel" WHERE "name"='a'
    # actual SELECT "id","name" FROM "mymodel" WHERE "name"=a
    print(await MyModel.filter(name=MyEnum.A).exists())
    # expected True
    # actual raises tortoise.exceptions.OperationalError: no such column: a


run_async(do())
```

**Expected behavior**

Support filter by enum

**Additional context**

Will be fixed after this PR (https://github.com/tortoise/pypika-tortoise/pull/10) is merged.
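The underlying idea can be sketched as follows: unwrap an enum member to its plain value before it is rendered as a SQL literal. `sql_literal` is a hypothetical helper for illustration, not part of tortoise-orm or pypika-tortoise, and its quoting is deliberately simplistic:

```python
from enum import Enum


class MyEnum(str, Enum):
    A = "a"


def sql_literal(value):
    """Render a value as a SQL literal, unwrapping enum members first."""
    if isinstance(value, Enum):
        value = value.value
    if isinstance(value, str):
        # Double any single quotes, then wrap the text in single quotes.
        return "'" + value.replace("'", "''") + "'"
    return str(value)
```

In application code, passing `MyEnum.A.value` to `filter()` should sidestep the problem in the same way.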

|

closed

|

2024-11-17T19:10:02Z

|

2024-11-19T08:55:17Z

|

https://github.com/tortoise/tortoise-orm/issues/1774

|

[] |

waketzheng

| 0

|

mwaskom/seaborn

|

data-visualization

| 3,318

|

Add read-only permissions to ci.yaml GitHub workflow

|

Seaborn's ci.yaml workflow currently runs with write-all permissions. This is dangerous, since it opens the project up to supply-chain attacks. [GitHub itself](https://docs.github.com/en/actions/security-guides/security-hardening-for-github-actions#using-secrets) recommends ensuring all workflows run with minimal permissions.

I've taken a look at the workflow, and it doesn't seem to require any permissions other than `contents: read`.

This issue can be solved in two ways:

- add top-level read-only permissions to ci.yaml; and/or

- set the default token permissions to read-only in the repo settings.

I'll be sending a PR along with this issue that sets the top-level permissions. If you instead (or also) wish to modify the default token permissions:

1. Open the repo settings

2. Go to [Actions > General](https://github.com/mwaskom/seaborn/settings/actions)

3. Under "Workflow permissions", set them to "Read repository contents and packages permissions"

---

**Disclosure:** My name is Pedro and I work with Google and the [Open Source Security Foundation (OpenSSF)](https://www.openssf.org/) to improve the supply-chain security of the open-source ecosystem.

|

closed

|

2023-04-12T14:08:36Z

|

2023-04-12T23:19:14Z

|

https://github.com/mwaskom/seaborn/issues/3318

|

[] |

pnacht

| 0

|

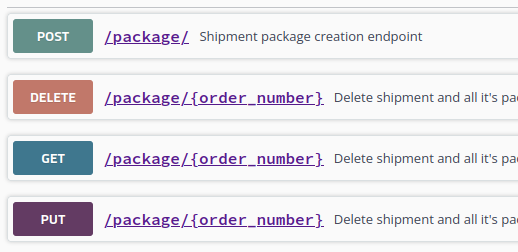

flasgger/flasgger

|

rest-api

| 544

|

swag_from with specs arguments not working properly

|

Using swag_from with the specs argument multiple times for one endpoint with different methods results in the text in the doc being overwritten.

```
@bp.route("/<string:order_number>", methods=["GET", "PUT", "DELETE"])
@swag_from(specs=doc_shipment_delete, methods=["DELETE"])
@swag_from(specs=doc_shipment_put, methods=["PUT"])
@swag_from(specs=doc_shipment_get, methods=["GET"])
@api_basic_authentication
def shipment(order_number: str) -> bp.route:
    (....)
```

the dicts doc_shipment_delete, doc_shipment_put and doc_shipment_get are all different, but on generated webpage there is just the content of the "delete" dict.

|

open

|

2022-08-04T11:59:54Z

|

2022-08-08T06:38:08Z

|

https://github.com/flasgger/flasgger/issues/544

|

[] |

Zbysekz

| 3

|

reloadware/reloadium

|

django

| 64

|

Won't work with remote developing.

|

**Describe the bug**

**To Reproduce**

Steps to reproduce the behavior:

1. Menu -> Tools -> Deployment -> Configuration: add a configuration to connect to your server, and map the project folder on the local disk to the server.

2. Menu -> File -> Settings -> Project: Name -> Python Interpreter -> Add Interpreter: add a Python interpreter on the server.

3. Menu -> Tools -> Deployment -> Download from default server: download the code from the server to the local disk.

4. Open a *.py file, add a breakpoint, and click 'Debug with Reloadium'.

5. The thread stops at the breakpoint; modify the code and press Ctrl+S to upload it to the server, but nothing changes.

**Expected behavior**

When I modify the code and press Ctrl+S to upload it to the server, the value of the affected variable should change.

**Screenshots**

[Imgur](https://i.imgur.com/xjGn0LA.gifv)

**Desktop (please complete the following information):**

- OS: PyCharm on Windows 10 and python 3.10 on Ubuntu 20.0.4

- OS version: 10

- Reloadium package version: 0.9.4

- PyCharm plugin version: 0.8.8

- Editor: PyCharm 2022.2.3

- Run mode: Debug

|

closed

|

2022-11-09T14:52:37Z

|

2022-11-23T08:01:08Z

|

https://github.com/reloadware/reloadium/issues/64

|

[] |

00INDEX

| 3

|

modelscope/modelscope

|

nlp

| 867

|

cpu memory leak

|

After I call the skin retouch pipeline, CPU memory increases by about 100 MB per call, and later calls keep increasing it. Is this a CPU memory leak?
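
A minimal sketch of how the growth per call could be measured, using only the standard-library `tracemalloc` module. The helper name `python_memory_growth` and the `leaky_stand_in` function are hypothetical; the real pipeline call would be passed in its place. Note the assumption: `tracemalloc` only sees allocations made through Python's allocator, so memory held by native libraries would not show up here.

```python
import tracemalloc


def python_memory_growth(call, repeats=5):
    """Run `call` repeatedly and return traced Python memory (bytes) after each run."""
    tracemalloc.start()
    try:
        readings = []
        for _ in range(repeats):
            call()
            current, _peak = tracemalloc.get_traced_memory()
            readings.append(current)
        return readings
    finally:
        tracemalloc.stop()


# Hypothetical stand-in for the pipeline call: keeps a reference on every call.
_retained = []


def leaky_stand_in():
    _retained.append(bytearray(100_000))


readings = python_memory_growth(leaky_stand_in, repeats=3)
```

Readings that keep rising across calls point to objects being retained; a flat curve here with rising process memory would suggest the growth is outside Python's allocator.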

|

closed

|

2024-05-25T09:59:23Z

|

2024-07-21T01:56:15Z

|

https://github.com/modelscope/modelscope/issues/867

|

[

"Stale"

] |

garychan22

| 4

|

anselal/antminer-monitor

|

dash

| 47

|

Support for PORT

|

Hi there,

I use the same network IP for all my miners, and only the port differs for each miner. If I enter, for example, 192.168.1.200:4030, it doesn't work. I know this isn't a bug, but would it be possible to support ports as well to solve this fairly simple issue?

Thank you

PS. Perhaps there's no need for a separate input field: either auto-detect all ports on a given IP, or allow people to add :<port> in the IP field. If no port is given, change nothing (same as the current behavior). If a port is given, use only that one :)
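
The `:<port>` suggestion could be sketched roughly as follows. This is an illustration only, not the project's code: `split_host_port` is a hypothetical helper, and the default port 4028 is an assumption standing in for whatever port the tool uses today.

```python
def split_host_port(address, default_port=4028):
    """Split 'ip' or 'ip:port' into (host, port).

    default_port is an assumed placeholder for the tool's current port.
    """
    host, separator, port_text = address.strip().partition(":")
    if not separator:
        # No port given: behave exactly as before.
        return host, default_port
    if not port_text.isdigit() or not 0 < int(port_text) < 65536:
        raise ValueError(f"invalid port in address: {address!r}")
    return host, int(port_text)
```

For example, `split_host_port("192.168.1.200:4030")` yields the host with port 4030, while `split_host_port("192.168.1.200")` falls back to the default.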

|

closed

|

2018-01-08T15:48:32Z

|

2018-01-09T21:44:14Z

|

https://github.com/anselal/antminer-monitor/issues/47

|

[

":dancing_men: duplicate"

] |

webhunter69

| 9

|

horovod/horovod

|

tensorflow

| 3,303

|

Is there an example of distributed training using only CPU

|

I want to try distributed training across multiple machines using only CPUs. What command should I use to start it? Is there an example for reference?

|

closed

|

2021-12-08T10:08:25Z

|

2021-12-08T10:33:15Z

|

https://github.com/horovod/horovod/issues/3303

|

[] |

liiitleboy

| 0

|

flasgger/flasgger

|

rest-api

| 239

|

instructions for apispec example are underspecified, causing TypeError

|

New to flasgger and was interested in the apispec example, but unfortunately the instructions seem out of date?

Quoting https://github.com/rochacbruno/flasgger#readme

> Flasgger also supports Marshmallow APISpec as base template for specification, if you are using APISPec from Marshmallow take a look at [apispec example](https://github.com/rochacbruno/flasgger/blob/master/examples/apispec_example.py).

> ...

> NOTE: If you want to use Marshmallow Schemas you also need to run `pip install marshmallow apispec`

Is some non-latest version of one of these required? Following the instructions as written results in apispec-0.39.0 and marshmallow-2.15.4, which results in TypeError when running the apispec example:

```

jab@pro ~> python3 -m virtualenv tmpvenv

Using base prefix '/usr/local/Cellar/python/3.7.0/Frameworks/Python.framework/Versions/3.7'

/usr/local/lib/python3.7/site-packages/virtualenv.py:1041: DeprecationWarning: the imp module is deprecated in favour of importlib; see the module's documentation for alternative uses

import imp

New python executable in /Users/jab/tmpvenv/bin/python3.7

Also creating executable in /Users/jab/tmpvenv/bin/python

Installing setuptools, pip, wheel...done.

jab@pro ~> cd tmpvenv

jab@pro ~/tmpvenv> . bin/activate.fish

(tmpvenv) jab@pro ~/tmpvenv> pip install flasgger

Collecting flasgger

Using cached https://files.pythonhosted.org/packages/59/25/d25af3ebe1f04f47530028647e3476b829b1950deab14237948fe3aea552/flasgger-0.9.0-py2.py3-none-any.whl

Collecting PyYAML>=3.0 (from flasgger)

Collecting six>=1.10.0 (from flasgger)

Using cached https://files.pythonhosted.org/packages/67/4b/141a581104b1f6397bfa78ac9d43d8ad29a7ca43ea90a2d863fe3056e86a/six-1.11.0-py2.py3-none-any.whl

Collecting mistune (from flasgger)

Using cached https://files.pythonhosted.org/packages/c8/8c/87f4d359438ba0321a2ae91936030110bfcc62fef752656321a72b8c1af9/mistune-0.8.3-py2.py3-none-any.whl

Collecting jsonschema>=2.5.1 (from flasgger)

Using cached https://files.pythonhosted.org/packages/77/de/47e35a97b2b05c2fadbec67d44cfcdcd09b8086951b331d82de90d2912da/jsonschema-2.6.0-py2.py3-none-any.whl

Collecting Flask>=0.10 (from flasgger)

Using cached https://files.pythonhosted.org/packages/7f/e7/08578774ed4536d3242b14dacb4696386634607af824ea997202cd0edb4b/Flask-1.0.2-py2.py3-none-any.whl

Collecting Werkzeug>=0.14 (from Flask>=0.10->flasgger)

Using cached https://files.pythonhosted.org/packages/20/c4/12e3e56473e52375aa29c4764e70d1b8f3efa6682bef8d0aae04fe335243/Werkzeug-0.14.1-py2.py3-none-any.whl

Collecting itsdangerous>=0.24 (from Flask>=0.10->flasgger)

Collecting click>=5.1 (from Flask>=0.10->flasgger)

Using cached https://files.pythonhosted.org/packages/34/c1/8806f99713ddb993c5366c362b2f908f18269f8d792aff1abfd700775a77/click-6.7-py2.py3-none-any.whl

Collecting Jinja2>=2.10 (from Flask>=0.10->flasgger)

Using cached https://files.pythonhosted.org/packages/7f/ff/ae64bacdfc95f27a016a7bed8e8686763ba4d277a78ca76f32659220a731/Jinja2-2.10-py2.py3-none-any.whl

Collecting MarkupSafe>=0.23 (from Jinja2>=2.10->Flask>=0.10->flasgger)

Installing collected packages: PyYAML, six, mistune, jsonschema, Werkzeug, itsdangerous, click, MarkupSafe, Jinja2, Flask, flasgger

Successfully installed Flask-1.0.2 Jinja2-2.10 MarkupSafe-1.0 PyYAML-3.13 Werkzeug-0.14.1 click-6.7 flasgger-0.9.0 itsdangerous-0.24 jsonschema-2.6.0 mistune-0.8.3 six-1.11.0