| code | path | quality_prob | learning_prob | filename | kind |
|---|---|---|---|---|---|

defmodule Remedy.Schema.Component do

@moduledoc """

Components are a framework for adding interactive elements to the messages your app or bot sends. They're accessible, customizable, and easy to use. There are several different types of components; this documentation outlines the basics of this new framework and each component type.

> Components have been broken out into individual modules for easy distinction between them and to separate helper functions and individual type checking between component types - especially as more components are added by Discord.

Each of the components is provided with all of the valid types through this module, to avoid repetition and to allow new components to be added more quickly and easily.

## Action Row

An Action Row is a non-interactive container component for other types of components. It has a `type: 1` and a sub-array of `components` of other types.

- You can have up to 5 Action Rows per message

- An Action Row cannot contain another Action Row

- An Action Row containing buttons cannot also contain a select menu

## Buttons

Buttons are interactive components that render on messages. They have `type: 2` and can be clicked by users. Buttons are further separated into two types, detailed below. Only [Interaction Buttons](#module-interaction-buttons-non-link-buttons) fire a `Nostrum.Struct.Interaction` when pressed.

- Buttons must exist inside an Action Row

- An Action Row can contain up to 5 buttons

- An Action Row containing buttons cannot also contain a select menu

For more information, check out the [Discord API Button Styles](https://discord.com/developers/docs/interactions/message-components#button-object-button-styles).

## Link Buttons

- Link buttons **do not** send an `interaction` to your app when clicked

- Link buttons **must** have a `url`, and **cannot** have a `custom_id`

- Link buttons will **always** use `style: 5`

#### Link `style: 5`

## Interaction Buttons ( Non-link Buttons )

> Discord calls these buttons "Non-link Buttons" because they do not contain a URL. However, it would be more accurate to call them "Interaction Buttons", as they **do** fire an interaction when clicked, which is far more useful for your application's interactivity. As such, they are referred to as "Interaction Buttons" throughout the rest of this module.

- Interaction buttons **must** have a `custom_id`, and **cannot** have a `url`

- Can have one of the `style` values below applied.

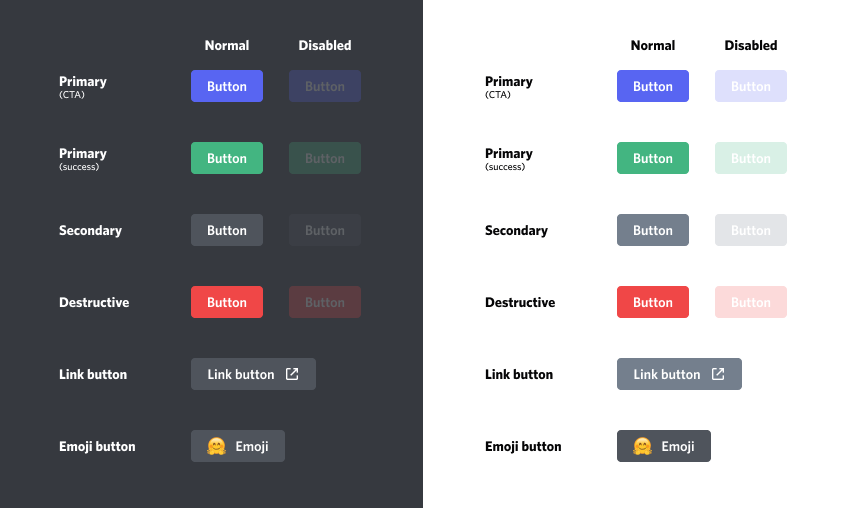

#### Primary `style: 1`

#### Secondary `style: 2`

#### Success `style: 3`

#### Danger `style: 4`
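
The field rules for the two button kinds can be checked mechanically. The following is a minimal sketch, not Remedy's own validation: plain maps stand in for the component structs, and `ButtonRulesSketch` is a hypothetical module name.

```elixir
defmodule ButtonRulesSketch do
  # Plain maps stand in for Remedy's button structs (assumption for illustration).
  # Style 5 is a link button; styles 1..4 are interaction buttons.
  def validate(%{type: 2, style: 5} = button) do
    cond do
      is_nil(Map.get(button, :url)) -> {:error, :link_button_requires_url}
      not is_nil(Map.get(button, :custom_id)) -> {:error, :link_button_cannot_have_custom_id}
      true -> :ok
    end
  end

  def validate(%{type: 2, style: style} = button) when style in 1..4 do
    cond do
      is_nil(Map.get(button, :custom_id)) -> {:error, :interaction_button_requires_custom_id}
      not is_nil(Map.get(button, :url)) -> {:error, :interaction_button_cannot_have_url}
      true -> :ok
    end
  end

  # Anything else (wrong component type or unknown style) is rejected.
  def validate(_other), do: {:error, :unsupported_component}
end
```

For example, `ButtonRulesSketch.validate(%{type: 2, style: 1, custom_id: "confirm"})` returns `:ok`, while adding a `url` to that map returns `{:error, :interaction_button_cannot_have_url}`.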

## 🐼 ~~Emoji Buttons~~

> Note: The Discord documentation and marketing material for buttons indicate that there are three kinds of buttons: 🐼 **Emoji Buttons**, **Link Buttons** & **Non-Link Buttons**. In fact, all buttons can contain an emoji. For this reason, 🐼 **Emoji Buttons** are not included as a separate type; emojis are instead handled by the two included (superior) button types.

> The field requirements are already becoming convoluted, especially considering that everything so far is still a "Component". Using the subtypes and helper functions ensures that all of the rules are followed when creating components.

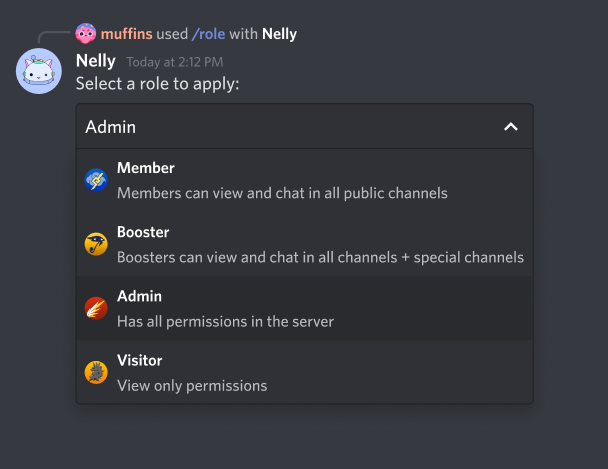

## Select Menu

Select menus are another interactive component that renders on messages. On desktop, clicking on a select menu opens a dropdown-style UI; on mobile, tapping a select menu opens up a half-sheet with the options.

Select menus support single-select and multi-select behavior, meaning you can prompt a user to choose just one item from a list, or multiple. When a user finishes making their choice by clicking out of the dropdown or closing the half-sheet, your app will receive an interaction.

- Select menus **must** be sent inside an Action Row

- An Action Row can contain **only one** select menu

- An Action Row containing a select menu **cannot** also contain buttons
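
Taken together, the Action Row, button, and select menu limits above can be sketched as a single check. This is illustrative only: it assumes plain maps carrying the component type codes used in this module (1 = Action Row, 2 = Button, 3 = Select Menu), and `ActionRowRulesSketch` is a hypothetical name.

```elixir
defmodule ActionRowRulesSketch do
  # Plain maps stand in for Remedy's component structs (assumption for illustration).
  # Type codes: 1 = Action Row, 2 = Button, 3 = Select Menu.
  def validate_row(%{type: 1, components: children}) when is_list(children) do
    types = Enum.map(children, & &1.type)

    cond do
      1 in types -> {:error, :nested_action_row}
      2 in types and 3 in types -> {:error, :mixed_buttons_and_select_menu}
      Enum.count(types, &(&1 == 2)) > 5 -> {:error, :too_many_buttons}
      Enum.count(types, &(&1 == 3)) > 1 -> {:error, :too_many_select_menus}
      true -> :ok
    end
  end

  def validate_row(_other), do: {:error, :not_an_action_row}

  # A message may carry at most 5 Action Rows; returns the first row error, if any.
  def validate_message(rows) when is_list(rows) do
    if length(rows) > 5 do
      {:error, :too_many_action_rows}
    else
      Enum.find_value(rows, :ok, fn row ->
        case validate_row(row) do
          :ok -> nil
          error -> error
        end
      end)
    end
  end
end
```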

"""

defmacro __using__(_opts) do

quote do

alias Remedy.Schema.Component.{ActionRow, Button, Option, SelectMenu}

alias Remedy.Schema.{Component, Emoji}

@before_compile Component

end

end

defmacro __before_compile__(_env) do

quote do

alias Remedy.Schema.Component

defp new(opts \\ []) do

@defaults

|> to_component(opts)

end

defp update(%Component{} = component, opts \\ []) do

component

|> Map.from_struct()

|> to_component(opts)

end

defp to_component(component_map, opts) do

opts

|> Enum.reject(fn {_, v} -> v == nil end)

|> Enum.into(component_map)

|> Enum.filter(fn {k, _} -> k in allowed_keys() end)

|> Enum.into(%{})

|> flatten()

|> Component.new()

end

defp allowed_keys, do: Map.keys(@defaults)

## Destroy all structs and ensure nested map

def flatten(map), do: :maps.map(&do_flatten/2, map)

defp do_flatten(_key, value), do: enm(value)

defp enm(list) when is_list(list), do: Enum.map(list, &enm/1)

defp enm(%{__struct__: _} = strct), do: :maps.map(&do_flatten/2, Map.from_struct(strct))

defp enm(data), do: data

end

end

@doc """

Create a component from the given keyword list of options

> Note: When using this function directly, you are not guaranteed to produce a valid component; it is your responsibility to pass a valid combination of component attributes. For example, if you pass a button component both a `custom_id` and a `url`, the component is invalid, as only one of these fields is allowed.

"""

@callback new(opts :: keyword()) :: t()

@doc """

Updates a component with the parameters provided.

> Note: When using this function directly, you are not guaranteed to produce a valid component; it is your responsibility to pass a valid combination of component attributes. For example, if you pass a button component both a `custom_id` and a `url`, the component is invalid, as only one of these fields is allowed.

"""

@callback update(t(), opts :: keyword()) :: t()

alias Remedy.Schema.Component.{ActionRow, Button, SelectMenu, ComponentOption}

use Remedy.Schema

embedded_schema do

field :type, ComponentType

field :custom_id, :string

field :disabled, :boolean

field :style, :integer

field :label, :string

field :url, :string

field :placeholder, :string

field :min_values, :integer

field :max_values, :integer

embeds_one :emoji, Emoji

embeds_many :options, ComponentOption

embeds_many :components, Component

end

@typedoc """

The currently valid component types.

"""

@type t :: ActionRow.t() | Button.t() | SelectMenu.t()

@typedoc """

The type of component.

Valid for All Types.

| Value | Component Type |
|-------|----------------|
| `1` | Action Row |
| `2` | Button |
| `3` | Select Menu |

Check out the [Discord API Message Component Types](https://discord.com/developers/docs/interactions/message-components#component-object-component-types) for more information.

"""

@type type :: integer()

@typedoc """

Used to identify the command when the interaction is sent to you by the user.

Valid for [Interaction Buttons](#module-interaction-buttons-non-link-buttons) & [Select Menus](#module-select-menu).

"""

@type custom_id :: String.t() | nil

@typedoc """

Indicates if the component is disabled or not.

Valid for [Buttons](#module-buttons) & [Select Menus](#module-select-menu).

"""

@type disabled :: boolean() | nil

@typedoc """

Indicates the style.

Valid for [Interaction Buttons](#module-interaction-buttons-non-link-buttons) only.

"""

@type style :: integer() | nil

@typedoc """

A string that appears on the button, max 80 characters.

Valid for [Buttons](#module-buttons)

"""

@type label :: String.t() | nil

@typedoc """

A partial emoji to display on the object.

Valid for [Buttons](#module-buttons)

"""

@type emoji :: Emoji.t() | nil

@typedoc """

A URL for link buttons.

Valid for [Link Buttons](#module-link-buttons).

"""

@type url :: String.t() | nil

@typedoc """

A list of options for select menus, max 25.

Valid for [Select Menus](#module-select-menu).

"""

@type options :: [ComponentOption.t()] | nil

@typedoc """

Placeholder text if nothing is selected, max 100 characters

Valid for [Select Menus](#module-select-menu).

"""

@type placeholder :: String.t() | nil

@typedoc """

The minimum number of permitted selections. Minimum value 0, max 25.

Valid for [Select Menus](#module-select-menu).

"""

@type min_values :: integer() | nil

@typedoc """

The maximum number of permitted selections. Minimum value 0, max 25.

Valid for [Select Menus](#module-select-menu).

"""

@type max_values :: integer() | nil

@typedoc """

A list of components to place inside an action row.

Due to constraints of action rows, this can either be a list of up to five buttons, or a single select menu.

Valid for [Action Row](#module-action-row).

"""

@type components :: [SelectMenu.t() | Button.t() | nil]

def changeset(model \\ %__MODULE__{}, params) do

fields = __MODULE__.__schema__(:fields)

embeds = __MODULE__.__schema__(:embeds)

cast_model = cast(model, params, fields -- embeds)

Enum.reduce(embeds, cast_model, fn embed, cast_model ->

cast_embed(cast_model, embed)

end)

end

end

|

components/component.ex

| 0.873512

| 0.734715

|

component.ex

|

starcoder

|

defmodule Rolodex.Headers do

@moduledoc """

Exposes functions and macros for defining reusable headers in route doc annotations or responses.

It exposes the following macros, which, when used together, set up the headers:

- `headers/2` - for declaring the headers

- `field/3` - for declaring a single header for the set

It also exposes the following functions:

- `is_headers_module?/1` - determines if the provided item is a module that has defined a reusable headers set

- `to_map/1` - serializes the headers module into a map

"""

alias Rolodex.{DSL, Field}

defmacro __using__(_) do

quote do

use Rolodex.DSL

import Rolodex.Headers, only: :macros

end

end

@doc """

Opens up the headers definition for the current module. Names the headers set and generates a map of header fields based on the `field` macro calls within.

**Accepts**

- `name` - the headers name

- `block` - headers shape definition

## Example

    defmodule SimpleHeaders do
      use Rolodex.Headers

      headers "SimpleHeaders" do
        field "X-Rate-Limited", :boolean
        field "X-Per-Page", :integer, desc: "Number of items in the response"
      end
    end

"""

defmacro headers(name, do: block) do

quote do

unquote(block)

def __headers__(:name), do: unquote(name)

def __headers__(:headers), do: Map.new(@headers, fn {id, opts} -> {id, Field.new(opts)} end)

end

end

@doc """

Sets a header field.

**Accepts**

- `identifier` - the header name

- `type` - the header field type

- `opts` (optional) - additional metadata. See `Field.new/1` for a list of valid options.

"""

defmacro field(identifier, type, opts \\ []) do

DSL.set_field(:headers, identifier, type, opts)

end

@doc """

Determines if an arbitrary item is a module that has defined a reusable headers set via `Rolodex.Headers` macros.

## Example

    iex> defmodule SimpleHeaders do
    ...>   use Rolodex.Headers
    ...>   headers "SimpleHeaders" do
    ...>     field "X-Rate-Limited", :boolean
    ...>   end
    ...> end
    iex>
    iex> # Validating a headers module
    iex> Rolodex.Headers.is_headers_module?(SimpleHeaders)
    true
    iex> # Validating some other module
    iex> Rolodex.Headers.is_headers_module?(OtherModule)
    false
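
`is_headers_module?/1` delegates to `Rolodex.DSL.is_module_of_type?/2`, whose implementation is not shown here. A plausible minimal sketch, assuming the check only asks whether the module is loaded and exports the `__headers__/1` function that `headers/2` generates, looks like this (`HeadersCheckSketch` and `FakeHeaders` are hypothetical):

```elixir
defmodule HeadersCheckSketch do
  # Assumed approach: a module counts as a headers module if it exports __headers__/1.
  def headers_module?(mod) when is_atom(mod) do
    Code.ensure_loaded?(mod) and function_exported?(mod, :__headers__, 1)
  end

  # Non-atoms (strings, maps, ...) can never be modules.
  def headers_module?(_other), do: false
end

defmodule FakeHeaders do
  # Stand-in for the functions the headers/2 macro defines (hypothetical module).
  def __headers__(:name), do: "FakeHeaders"
  def __headers__(:headers), do: %{}
end
```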

"""

@spec is_headers_module?(any()) :: boolean()

def is_headers_module?(mod), do: DSL.is_module_of_type?(mod, :__headers__)

@doc """

Serializes the `Rolodex.Headers` metadata into a formatted map

## Example

    iex> defmodule SimpleHeaders do
    ...>   use Rolodex.Headers
    ...>
    ...>   headers "SimpleHeaders" do
    ...>     field "X-Rate-Limited", :boolean
    ...>     field "X-Per-Page", :integer, desc: "Number of items in the response"
    ...>   end
    ...> end
    iex>
    iex> Rolodex.Headers.to_map(SimpleHeaders)
    %{
      "X-Per-Page" => %{desc: "Number of items in the response", type: :integer},
      "X-Rate-Limited" => %{type: :boolean}
    }

"""

@spec to_map(module()) :: map()

def to_map(mod), do: mod.__headers__(:headers)

end

|

lib/rolodex/headers.ex

| 0.873674

| 0.534612

|

headers.ex

|

starcoder

|

defmodule Exchange do

@moduledoc """

The best Elixir exchange, supporting limit and market orders. RESTful API and fancy dashboard coming soon!

"""

@doc """

Places an order on the Exchange

## Parameters

- order_params: Map that represents the parameters of the order to be placed

- ticker: Atom that represents on which market the order should be placed

"""

@spec place_order(order_params :: map(), ticker :: atom()) :: atom() | {atom(), String.t()}

def place_order(order_params, ticker) do

order_params = Map.put(order_params, :ticker, ticker)

case Exchange.Validations.cast_order(order_params) do

{:ok, limit_order} ->

Exchange.MatchingEngine.place_order(ticker, limit_order)

{:error, errors} ->

{:error, errors}

end

end

@doc """

Cancels an order on the Exchange

## Parameters

- order_id: String that represents the id of the order to cancel

- ticker: Atom that represents on which market the order should be canceled

"""

@spec cancel_order(order_id :: String.t(), ticker :: atom) :: atom

def cancel_order(order_id, ticker) do

Exchange.MatchingEngine.cancel_order(ticker, order_id)

end

# Level 1 Market Data

@doc """

Returns the difference between the lowest sell order and the highest buy order

## Parameters

- ticker: Atom that represents on which market the query should be placed
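
Conceptually, the spread is the lowest ask price minus the highest bid price. A minimal sketch over a hypothetical list of orders, with prices as integer cents instead of `Money` values and `SpreadSketch` as an illustrative name, could look like this; the real computation lives in `Exchange.MatchingEngine`:

```elixir
defmodule SpreadSketch do
  # Hypothetical order shape: %{side: :buy | :sell, price: integer_cents}.
  def spread(orders) do
    bids = for %{side: :buy, price: price} <- orders, do: price
    asks = for %{side: :sell, price: price} <- orders, do: price

    case {bids, asks} do
      {[], _} -> {:error, :no_bids}
      {_, []} -> {:error, :no_asks}
      # Lowest ask minus highest bid.
      _ -> {:ok, Enum.min(asks) - Enum.max(bids)}
    end
  end
end
```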

"""

@spec spread(ticker :: atom) :: {atom, Money.t()}

def spread(ticker) do

Exchange.MatchingEngine.spread(ticker)

end

@doc """

Returns the highest price of all buy orders

## Parameters

- ticker: Atom that represents on which market the query should be placed

"""

@spec highest_bid_price(ticker :: atom) :: {atom, Money.t()}

def highest_bid_price(ticker) do

Exchange.MatchingEngine.bid_max(ticker)

end

@doc """

Returns the sum of the sizes of all active buy orders

## Parameters

- ticker: Atom that represents on which market the query should be placed
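
The volume is a plain sum over one side of the book. A minimal sketch, assuming a hypothetical order shape with `:side` and `:size` keys (`SideVolumeSketch` is illustrative, not part of `Exchange`):

```elixir
defmodule SideVolumeSketch do
  # Hypothetical order shape: %{side: :buy | :sell, size: non_neg_integer()}.
  # Sums the sizes of the orders on the requested side, ignoring the other side.
  def volume(orders, side) do
    Enum.reduce(orders, 0, fn
      %{side: ^side, size: size}, acc -> acc + size
      _other, acc -> acc
    end)
  end
end
```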

"""

@spec highest_bid_volume(ticker :: atom) :: {atom, number}

def highest_bid_volume(ticker) do

Exchange.MatchingEngine.bid_volume(ticker)

end

@doc """

Returns the lowest price of all sell orders

## Parameters

- ticker: Atom that represents on which market the query should be placed

"""

@spec lowest_ask_price(ticker :: atom) :: {atom, Money.t()}

def lowest_ask_price(ticker) do

Exchange.MatchingEngine.ask_min(ticker)

end

@doc """

Returns the sum of the sizes of all active sell orders

## Parameters

- ticker: Atom that represents on which market the query should be placed

"""

@spec highest_ask_volume(ticker :: atom) :: {atom, number}

def highest_ask_volume(ticker) do

Exchange.MatchingEngine.ask_volume(ticker)

end

@doc """

Returns a list of all active orders

## Parameters

- ticker: Atom that represents on which market the query should be placed

"""

@spec open_orders(ticker :: atom) :: {atom, list}

def open_orders(ticker) do

Exchange.MatchingEngine.open_orders(ticker)

end

@doc """

Returns an order by id.

## Parameters

- ticker: Atom that represents on which market the query should be placed

- order_id: String that represents the id of the order to retrieve

"""

@spec open_orders_by_id(ticker :: atom, order_id :: String.t()) ::

{atom, Exchange.Order.order()}

def open_orders_by_id(ticker, order_id) do

Exchange.MatchingEngine.open_order_by_id(ticker, order_id)

end

@doc """

Returns a list of active orders placed by the trader

## Parameters

- ticker: Atom that represents on which market the query should be made

- trader_id: String that represents the id of the trader

"""

@spec open_orders_by_trader(ticker :: atom, trader_id :: String.t()) :: {atom, list}

def open_orders_by_trader(ticker, trader_id) do

Exchange.MatchingEngine.open_orders_by_trader(ticker, trader_id)

end

@doc """

Returns the latest price from a side of an Exchange

## Parameters

- ticker: Exchange identifier

- side: Atom to decide which side of the book is used

"""

@spec last_price(ticker :: atom, side :: atom) :: {atom, number}

def last_price(ticker, side) do

Exchange.MatchingEngine.last_price(ticker, side)

end

@doc """

Returns the latest size from a side of an Exchange

## Parameters

- ticker: Exchange identifier

- side: Atom to decide which side of the book is used

"""

@spec last_size(ticker :: atom, side :: atom) :: {atom, number}

def last_size(ticker, side) do

Exchange.MatchingEngine.last_size(ticker, side)

end

@doc """

Returns a list of completed trades where the trader is one of the participants

## Parameters

- ticker: Atom that represents on which market the query should be made

- trader_id: String that represents the id of the trader

"""

@spec completed_trades_by_id(ticker :: atom, trader_id :: String.t() | atom()) :: [Exchange.Trade.t()]

def completed_trades_by_id(ticker, trader_id) when is_atom(trader_id) do

completed_trades_by_id(ticker, Atom.to_string(trader_id))

end

def completed_trades_by_id(ticker, trader_id) do

Exchange.Utils.fetch_completed_trades(ticker, trader_id)

end

@doc """

Returns the number of active buy orders

## Parameters

- ticker: Atom that represents on which market the query should be made

"""

@spec total_buy_orders(ticker :: atom) :: {atom, number}

def total_buy_orders(ticker) do

Exchange.MatchingEngine.total_bid_orders(ticker)

end

@doc """

Returns the number of active sell orders

## Parameters

- ticker: Atom that represents on which market the query should be made

"""

@spec total_sell_orders(ticker :: atom) :: {atom, number}

def total_sell_orders(ticker) do

Exchange.MatchingEngine.total_ask_orders(ticker)

end

@doc """

Returns all the completed trades

## Parameters

- ticker: Atom that represents on which market the query should be made

"""

@spec completed_trades(ticker :: atom) :: list

def completed_trades(ticker) do

Exchange.Utils.fetch_all_completed_trades(ticker)

end

@doc """

Returns the trade with trade_id

## Parameters

- ticker: Atom that represents on which market the query should be made

- trade_id: Id of the requested trade

"""

@spec completed_trade_by_trade_id(ticker :: atom, trade_id :: String.t()) :: Exchange.Trade.t()

def completed_trade_by_trade_id(ticker, trade_id) do

Exchange.Utils.fetch_completed_trade_by_trade_id(ticker, trade_id)

end

end

|

lib/exchange.ex

| 0.898819

| 0.732879

|

exchange.ex

|

starcoder

|

defmodule Janus do

@moduledoc """

Core public API for `Janus`.

There are two foundational components this graph query language is built upon: fully namespaced property names, and resolving functions with specified inputs and outputs (i.e. resolvers).

## Fully Namespaced Property Names

Let's look at an example to get a glimpse of the significance:

    %{
      id: 123,
      name: "<NAME>",
      address: "456 Lambda Ln"
    }

From this map, it *could* be difficult to tell which entity (or logical group) the properties `:id`, `:name`, and `:address` belong to. Because of the specific values, we can intuit that it's likely a person, but is it a customer or an employee? Something else? Hard to tell...

    customers: [
      %{
        id: 123,
        name: "<NAME>",
        address: "456 Lambda Ln"
      }
    ]

Much more obvious now, but what if we're talking about multiple organizations, regions, offerings, etc.? There is still ambiguity...

And this is where fully namespaced properties come in:

    %{
      {Company.Sales.Customer, :id} => 123,
      {Company.Sales.Customer, :name} => "<NAME>",
      {Company.Sales.Customer, :address} => "456 Lambda Ln"
    }

Now, even without knowing the values or the associated grouped properties, the meaning and context of `{Company.Sales.Customer, :id}` are understood purely from its name.

In Elixir, we use `{module, atom}` tuples, as that seems more idiomatic (similar to MFA tuples).

See [Clojure's Keywords](https://clojuredocs.org/clojure.core/keyword) for an environment that supports fully namespaced keywords/atoms natively.
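
Namespacing an existing plain map is a mechanical key rewrite. A minimal sketch (the `NamespaceSketch.namespace/2` helper is hypothetical, not part of Janus' API):

```elixir
defmodule NamespaceSketch do
  # Hypothetical helper: qualifies every plain key with the given module,
  # turning %{id: 1} into %{{module, :id} => 1}.
  def namespace(map, module) when is_map(map) and is_atom(module) do
    Map.new(map, fn {key, value} -> {{module, key}, value} end)
  end
end
```

For example, `NamespaceSketch.namespace(%{id: 123}, Company.Sales.Customer)` yields `%{{Company.Sales.Customer, :id} => 123}`.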

## Resolvers

Resolvers are functions bundled with a list of fully namespaced input properties and a list of fully namespaced output properties. The function is expected to return the outputs when given the named inputs, similar to the following:

    %{
      name: {Foo, :get_by_id},
      input: [{Foo, :id}],
      output: [
        {Foo, :bar},
        {Foo, :baz}
      ],
      function: fn _env, %{{Foo, :id} => id} ->
        {bar, baz} = get_foo_by_id(id)

        %{
          {Foo, :bar} => bar,
          {Foo, :baz} => baz
        }
      end
    }
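
To make the contract concrete, here is a minimal sketch of running a single resolver, assuming the function receives only its declared, namespaced inputs and that undeclared outputs are dropped. `ResolverSketch.run/3` is hypothetical and is not how Janus itself plans or executes queries.

```elixir
defmodule ResolverSketch do
  # Hypothetical runner: verifies every declared input is present, calls the
  # resolver function with just those inputs, and merges back only the declared outputs.
  def run(%{input: inputs, output: outputs, function: fun}, env, entity) do
    case Enum.reject(inputs, &Map.has_key?(entity, &1)) do
      [] ->
        result = fun.(env, Map.take(entity, inputs))
        {:ok, Map.merge(entity, Map.take(result, outputs))}

      missing ->
        {:error, {:missing_inputs, missing}}
    end
  end
end
```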

Resolvers connect properties/attributes. If properties are the nodes, then resolvers are the edges of a directed graph, drawing the edges from the input nodes to point at their output nodes.
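
The graph view can be sketched with Erlang's built-in `:digraph` module: every namespaced attribute becomes a vertex and every resolver contributes an edge from each of its inputs to each of its outputs. `ResolverGraphSketch` is a hypothetical name, and Janus' own graph handling (it depends on a `Digraph` boundary) may differ.

```elixir
defmodule ResolverGraphSketch do
  # Builds a directed graph whose vertices are namespaced attributes and whose
  # edges run from each resolver input to each of that resolver's outputs.
  def build(resolvers) do
    graph = :digraph.new()

    for %{name: name, input: inputs, output: outputs} <- resolvers,
        input <- inputs,
        output <- outputs do
      :digraph.add_vertex(graph, input)
      :digraph.add_vertex(graph, output)
      # The edge label records which resolver provides this connection.
      :digraph.add_edge(graph, input, output, name)
    end

    graph
  end

  # Attributes reachable from `attr` through a single resolver.
  def direct_outputs(graph, attr), do: Enum.sort(:digraph.out_neighbours(graph, attr))
end
```

Once built, `:digraph.get_path/3` finds a chain of resolvers that leads from known attributes to a requested one.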

Resolvers and namespaced properties are all you need to construct a graph-based query system similar to [GraphQL](https://graphql.org/), but without the overly restrictive nature of a type system.

"""

use Boundary, deps: [Digraph, EQL, Interceptor, Rails], exports: []

@type env :: map

@type attr :: EQL.AST.Prop.expr()

@type shape_descriptor(x) :: %{optional(x) => shape_descriptor(x)}

@type shape_descriptor :: shape_descriptor(attr)

@type response_form(x) :: %{optional(x) => [response_form(x)] | any}

@type response_form :: response_form(attr)

end

|

lib/janus.ex

| 0.871591

| 0.739446

|

janus.ex

|

starcoder

|

defmodule Animu.Media.Anime.Video do

@moduledoc """

Stores video metadata from ffprobe plus the location of the file.

Should be immutable after initial generation.

"""

use Animu.Ecto.Schema

alias __MODULE__

@derive Jason.Encoder

embedded_schema do

field :filename, :string

field :dir, :string, default: "videos"

field :extension, :string

field :path, :string

field :format, :string

field :format_name, :string

field :duration, :decimal

field :start_time, :decimal

field :size, :integer

field :bit_rate, :integer

field :probe_score, :integer

field :original, :string

field :thumbnail, {:map, :string}

embeds_one :video_track, VideoTrack, on_replace: :delete do

field :index, :integer

field :codec_name, :string

field :coded_width, :integer

field :coded_height, :integer

field :width, :integer

field :height, :integer

field :pix_fmt, :string

field :bit_rate, :integer

field :profile, :string

field :nb_frames, :integer

field :avg_frame_rate, :string

field :start_time, :decimal

field :duration, :decimal

field :bits_per_raw_sample, :integer

field :display_aspect_ratio, :string

end

embeds_one :audio_track, AudioTrack, on_replace: :delete do

field :index, :integer

field :codec_name, :string

field :language, :string

field :bit_rate, :integer

field :bits_per_sample, :integer

field :max_bit_rate, :integer

field :sample_rate, :integer

field :sample_fmt, :string

field :profile, :string

field :nb_frames, :integer

field :channel_layout, :string

field :channels, :integer

field :start_time, :decimal

field :duration, :decimal

end

embeds_one :subtitles, Subtitles, on_replace: :delete do

field :type, :string, default: "ass"

field :filename, :string

field :dir, :string

field :fonts, {:array, :string}

field :font_dir, :string

end

end

require Protocol

Protocol.derive(Jason.Encoder, Video.VideoTrack)

Protocol.derive(Jason.Encoder, Video.AudioTrack)

Protocol.derive(Jason.Encoder, Video.Subtitles)

def changeset(%Video{} = video, attrs) do

video

|> cast(attrs, all_fields(Video, except: [:video_track, :audio_track, :subtitles]))

|> validate_required([:filename])

|> cast_embed(:video_track, with: &video_track_changeset/2)

|> cast_embed(:audio_track, with: &audio_track_changeset/2)

|> cast_embed(:subtitles, with: &subtitles_changeset/2)

|> update_path

end

def change(%Video{} = video) do

changeset(video, %{})

end

def video_track_changeset(%Video.VideoTrack{} = video_codec, attrs) do

video_codec

|> cast(attrs, all_fields(Video.VideoTrack))

end

def audio_track_changeset(%Video.AudioTrack{} = audio_codec, attrs) do

audio_codec

|> cast(attrs, all_fields(Video.AudioTrack))

end

def subtitles_changeset(%Video.Subtitles{} = subtitles, attrs) do

subtitles

|> cast(attrs, all_fields(Video.Subtitles))

end

def update_path(ch) do

case ch.valid? do

true ->

dir = get_field(ch, :dir)

name = get_field(ch, :filename)

path = Path.join(dir, name)

# put_change/3 sets a value directly; update_change/3 would expect a function here

put_change(ch, :path, path)

_ -> ch

end

end

defdelegate new(golem, video_path, anime_dir), to: Video.Invoke

end

|

lib/animu/media/anime/video.ex

| 0.754599

| 0.415017

|

video.ex

|

starcoder

|

defmodule StarWars.GraphQL.DB do

@moduledoc """

DB is an in-memory database implemented with an Elixir `Agent` to support the [Relay Star Wars example](https://github.com/relayjs/relay-examples/blob/master/star-wars).

NOTICE: in the original example, the data is stored as `id => name`, where `name` is a string; here each entry is a map.

"""

@initial_state %{

ship: %{

"1" => %{id: "1", name: "X-Wing", type: :star_wars_ship},

"2" => %{id: "2", name: "Y-Wing", type: :star_wars_ship},

"3" => %{id: "3", name: "A-Wing", type: :star_wars_ship},

"4" => %{id: "4", name: "Millenium Falcon", type: :star_wars_ship},

"5" => %{id: "5", name: "Home One", type: :star_wars_ship},

"6" => %{id: "6", name: "TIE Fighter", type: :star_wars_ship},

"7" => %{id: "7", name: "TIE Interceptor", type: :star_wars_ship},

"8" => %{id: "8", name: "Executor", type: :star_wars_ship}

},

faction: %{

"1" => %{

id: "1",

name: "Alliance to Restore the Republic",

ships: ["1", "2", "3", "4", "5"]

},

"2" => %{

id: "2",

name: "Galactic Empire",

ships: ["6", "7", "8"]

}

}

}

@doc """

Initialize a DB process (Agent)

"""

def start_link do

Agent.start_link(fn -> @initial_state end, name: __MODULE__)

end

def stop do

Agent.stop(__MODULE__)

end

def get(type, id) do

case Agent.get(__MODULE__, &get_in(&1, [type, id])) do

nil ->

{:error, "No #{type} with ID #{id}"}

result ->

{:ok, result}

end

end

@doc """

Create a new Ship and assign it to Faction identified by faction_id

NOTICE: this function is not concurrency-safe because there is no lock on `next_ship_id`, and it also doesn't enforce referential integrity.
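
One way to close that race is to compute the next id and write the ship inside a single `Agent.get_and_update/3` call, since the Agent runs each callback to completion before handling the next request. This is a sketch of that alternative, not the module's current behavior: `ShipStoreSketch` is hypothetical, it takes an explicit Agent pid, and it also rejects unknown factions.

```elixir
defmodule ShipStoreSketch do
  # Hypothetical, trimmed-down state: %{ship: %{id => ship}, faction: %{id => faction}}.
  def start_link(initial_state), do: Agent.start_link(fn -> initial_state end)

  # Allocates the next id and inserts the ship in one Agent callback, so two
  # concurrent callers can never be handed the same id.
  def create_ship(agent, ship_name, faction_id) do
    Agent.get_and_update(agent, fn state ->
      case Map.fetch(state.faction, faction_id) do
        :error ->
          {{:error, "No faction with ID " <> faction_id}, state}

        {:ok, _faction} ->
          next_id =
            state.ship
            |> Map.keys()
            |> Enum.map(&String.to_integer/1)
            |> Enum.max(fn -> 0 end)
            |> Kernel.+(1)
            |> Integer.to_string()

          ship = %{id: next_id, name: ship_name, type: :star_wars_ship}

          new_state =
            state
            |> put_in([:ship, next_id], ship)
            |> update_in([:faction, faction_id, :ships], &(&1 ++ [next_id]))

          {{:ok, ship}, new_state}
      end
    end)
  end
end
```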

"""

def create_ship(ship_name, faction_id) do

next_ship_id = Agent.get(__MODULE__, fn data ->

Map.keys(data[:ship])

|> Enum.map(fn id -> String.to_integer(id) end)

|> Enum.sort

|> List.last

|> Kernel.+(1) # there's a deprecation msg when trying to pipe to +

|> Integer.to_string

end)

ship_data = %{id: next_ship_id, name: ship_name, type: :star_wars_ship}

case Agent.update(__MODULE__, &put_in(&1, [:ship, next_ship_id], ship_data)) do

nil ->

{:error, "Could not create ship"}

:ok ->

faction_ships = Agent.get(__MODULE__, &Map.get(&1, :faction))[faction_id][:ships]

faction_ships = faction_ships ++ [next_ship_id]

Agent.update(__MODULE__, &put_in(&1, [:faction, faction_id, :ships], faction_ships))

{:ok, ship_data}

end

end

def dump_db do

Agent.get(__MODULE__, fn state -> state end)

end

def get_factions(names) do

factions = Agent.get(__MODULE__, &Map.get(&1, :faction)) |> Map.values

Enum.map(names, fn name ->

factions

|> Enum.find(&(&1.name == name))

end)

end

def get_faction(id) do

Agent.get(__MODULE__, &get_in(&1, [:faction, id]))

end

end

|

apps/star_wars/graphql/db.ex

| 0.778986

| 0.404625

|

db.ex

|

starcoder

|

defmodule Flawless.Spec do

@moduledoc """

A structure for defining the spec of a schema element.

The `for` attribute allows defining type-specific specs.

"""

defstruct checks: [],

late_checks: [],

type: :any,

cast_from: [],

nil: :default,

on_error: nil,

for: nil

@type t() :: %__MODULE__{

checks: list(Flawless.Rule.t()),

late_checks: list(Flawless.Rule.t()),

type: atom(),

cast_from: list(atom()) | atom(),

nil: :default | true | false,

on_error: binary() | nil,

for:

Flawless.Spec.Value.t()

| Flawless.Spec.Struct.t()

| Flawless.Spec.List.t()

| Flawless.Spec.Tuple.t()

| Flawless.Spec.Literal.t()

}

defmodule Value do

@moduledoc """

Represents a simple value or a map.

The `schema` field is used when the value is a map, and is `nil` otherwise.

"""

defstruct schema: nil

@type t() :: %__MODULE__{

schema: map() | nil

}

end

defmodule Struct do

@moduledoc """

Represents a struct.

"""

defstruct schema: nil,

module: nil

@type t() :: %__MODULE__{

schema: map() | nil,

module: atom()

}

end

defmodule List do

@moduledoc """

Represents a list of elements.

Each element must conform to the `item_type` definition.

"""

defstruct item_type: nil

@type t() :: %__MODULE__{

item_type: Flawless.spec_type()

}

end

defmodule Tuple do

@moduledoc """

Represents a tuple.

Matching values are expected to be a tuple of the same size as `elem_types`, with each element matching the corresponding rule.
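
A minimal sketch of that matching rule, assuming each element type is simplified to a one-argument predicate rather than a full Flawless spec (`TupleMatchSketch` is hypothetical):

```elixir
defmodule TupleMatchSketch do
  # Simplification: each entry of elem_types is a 1-arity predicate, not a Flawless spec.
  def matches?(value, elem_types) when is_tuple(value) and is_tuple(elem_types) do
    values = Tuple.to_list(value)
    checks = Tuple.to_list(elem_types)

    # Sizes must agree, then every element must satisfy the check at its position.
    length(values) == length(checks) and
      Enum.all?(Enum.zip(values, checks), fn {elem, check} -> check.(elem) end)
  end

  def matches?(_value, _elem_types), do: false
end
```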

"""

defstruct elem_types: nil

@type t() :: %__MODULE__{

# a tuple of Flawless.spec_type() values, one per element

elem_types: tuple()

}

end

defmodule Literal do

@moduledoc """

Represents a literal constant.

Matching values are expected to be strictly equal to the value.

"""

defstruct value: nil

@type t() :: %__MODULE__{

value: any()

}

end

end

|

lib/flawless/spec.ex

| 0.899296

| 0.670072

|

spec.ex

|

starcoder

|

defmodule Timex.DateFormat do

@moduledoc """

Date formatting and parsing.

This module provides an interface and core implementation for converting date values into strings (formatting) or the other way around (parsing) according to the specified template.

Multiple template formats are supported, each one provided by a separate module. One can also implement custom formatters for use with this module.

"""

alias Timex.DateTime

alias Timex.Format.DateTime.Formatter

alias Timex.Format.DateTime.Formatters.Strftime

alias Timex.Parse.DateTime.Parser

alias Timex.Parse.DateTime.Tokenizers.Strftime, as: StrftimeTokenizer

@doc """

Converts date values to strings according to the given template (aka format string).

"""

@spec format(%DateTime{}, String.t) :: {:ok, String.t} | {:error, String.t}

defdelegate format(date, format_string), to: Formatter

@doc """

Same as `format/2`, but takes a custom formatter.

"""

@spec format(%DateTime{}, String.t, :default | :strftime | atom()) :: {:ok, String.t} | {:error, String.t}

def format(%DateTime{} = date, format_string, formatter) when is_binary(format_string) do

case formatter do

:default -> Formatter.format(date, format_string)

:strftime -> Formatter.format(date, format_string, Strftime)

_ -> Formatter.format(date, format_string, formatter)

end

end

@doc """

Raising version of `format/2`. Returns a string with formatted date or raises a `FormatError`.

"""

@spec format!(%DateTime{}, String.t) :: String.t | no_return

defdelegate format!(date, format_string), to: Formatter

@doc """

Raising version of `format/3`. Returns a string with formatted date or raises a `FormatError`.

"""

@spec format!(%DateTime{}, String.t, atom) :: String.t | no_return

def format!(%DateTime{} = date, format_string, :default),

do: Formatter.format!(date, format_string)

def format!(%DateTime{} = date, format_string, :strftime),

do: Formatter.format!(date, format_string, Strftime)

defdelegate format!(date, format_string, formatter), to: Formatter

@doc """

Parses the date encoded in `date_string` according to the template (format string).

"""

@spec parse(String.t, String.t) :: {:ok, %DateTime{}} | {:error, term}

defdelegate parse(date_string, format_string), to: Parser

@doc """

Parses the date encoded in `date_string` according to the template, using the provided parser (`:default`, `:strftime`, or a custom tokenizer module).

"""

@spec parse(String.t, String.t, atom) :: {:ok, %DateTime{}} | {:error, term}

def parse(date_string, format_string, :default), do: Parser.parse(date_string, format_string)

def parse(date_string, format_string, :strftime), do: Parser.parse(date_string, format_string, StrftimeTokenizer)

defdelegate parse(date_string, format_string, parser), to: Parser

@doc """

Raising version of `parse/2`. Returns a DateTime struct, or raises a `ParseError`.

"""

@spec parse!(String.t, String.t) :: %DateTime{} | no_return

defdelegate parse!(date_string, format_string), to: Parser

@doc """

Raising version of `parse/3`. Returns a DateTime struct, or raises a `ParseError`.

"""

@spec parse!(String.t, String.t, atom) :: %DateTime{} | no_return

def parse!(date_string, format_string, :default), do: Parser.parse!(date_string, format_string)

def parse!(date_string, format_string, :strftime), do: Parser.parse!(date_string, format_string, StrftimeTokenizer)

defdelegate parse!(date_string, format_string, parser), to: Parser

@doc """

Verifies the validity of the given format string according to the provided

formatter, or the default formatter if none is provided.

Returns `:ok` if the format string is valid, `{:error, reason}` otherwise.

"""

@spec validate(String.t) :: :ok | {:error, term}

@spec validate(String.t, atom) :: :ok | {:error, term}

def validate(format_string, formatter \\ nil) do

case formatter do

f when f in [:default, nil] ->

Formatter.validate(format_string)

:strftime -> Formatter.validate(format_string, StrftimeTokenizer)

_ -> Formatter.validate(format_string, formatter)

end

end

end

|

lib/date/date_format.ex

| 0.919706

| 0.608943

|

date_format.ex

|

starcoder

|

defmodule OAuth2TokenManager.Store.Local do

@default_cleanup_interval 15

@moduledoc """

Simple token store using ETS and DETS

Access tokens are stored in an ETS, since they can easily be renewed with an access

token. Refresh tokens and claims are stored in DETS.

This implementation is probably not suited for production, not least because it is not

distributed.

Since the ETS table must be owned by a process and a cleanup process must be

implemented to delete expired tokens, this implementation must be started under a

supervision tree. It implements the `child_spec/1` and `start_link/1` functions (from

`GenServer`).

The following DETS tables are read from and written to:

- `"Elixir.OAuth2TokenManager.Store.Local.RefreshToken"` for refresh tokens

- `"Elixir.OAuth2TokenManager.Store.Local.Claims"` for claims and ID tokens

## Options

- `:cleanup_interval`: the interval between cleanups of the underlying ETS and DETS tables, in

seconds. Defaults to #{@default_cleanup_interval}.

## Starting this implementation

In your `MyApp.Application` module, add:

children = [

OAuth2TokenManager.Store.Local

]

or

children = [

{OAuth2TokenManager.Store.Local, cleanup_interval: 30}

]
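
A cleanup pass can remove expired entries with a single `:ets.select_delete/2` call. The sketch below is illustrative only: it uses a throwaway table (the `:at_demo` name is hypothetical) whose entries mirror the access-token tuples `{at, iss, token_type, at_metadata, updated_at}` with an `"exp"` key in the metadata. Two details matter: the match head must have the same arity as the stored tuples, and `select_delete/2` only deletes objects for which the match body returns `true`.

```elixir
# Throwaway table standing in for the access-token table (name is hypothetical)
tab = :ets.new(:at_demo, [:set, :public])
now = System.system_time(:second)

# Entries mirror {at, iss, token_type, at_metadata, updated_at}
:ets.insert(tab, {"expired-at", "https://issuer", "Bearer", %{"exp" => now - 10}, now})
:ets.insert(tab, {"live-at", "https://issuer", "Bearer", %{"exp" => now + 3600}, now})

# 5-element head to match the 5-tuples; the body must be `true` for deletion
match_spec = [{{:_, :_, :_, %{"exp" => :"$1"}, :_}, [{:<, :"$1", now}], [true]}]

deleted = :ets.select_delete(tab, match_spec)
# deleted is 1 and only "live-at" remains in the table
```

The same shape applies to the refresh-token DETS table with a 4-element head, since `:dets.select_delete/2` follows the same `true`-body rule.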

"""

@behaviour OAuth2TokenManager.Store

use GenServer

alias OAuth2TokenManager.Store

defmodule InsertError do

defexception [:reason]

@impl true

def message(%{reason: reason}), do: "insert failed with reason: #{inspect(reason)}"

end

defmodule MultipleResultsError do

defexception message: "illegal return of multiples entries"

end

def start_link(opts) do

GenServer.start_link(__MODULE__, opts, name: __MODULE__)

end

@impl GenServer

def init(opts) do

:dets.open_file(rt_tab(), [])

:ets.new(at_tab(), [:public, :named_table, {:read_concurrency, true}])

:dets.open_file(claim_tab(), [])

schedule_cleanup(opts)

{:ok, opts}

end

@impl GenServer

def handle_info(:cleanup, state) do

cleanup_access_tokens()

cleanup_refresh_tokens()

schedule_cleanup(state)

{:noreply, state}

end

defp cleanup_access_tokens() do

match_spec = [

{

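# Head must match the 5-tuple {at, iss, token_type, at_metadata, updated_at}
{:_, :_, :_, %{"exp" => :"$1"}, :_},

[{:<, :"$1", now()}],

# select_delete/2 only deletes objects for which the body returns true
[true]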

}

]

:ets.select_delete(at_tab(), match_spec)

end

defp cleanup_refresh_tokens() do

match_spec = [

{

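# Head must match the 4-tuple {rt, iss, rt_metadata, updated_at}
{:_, :_, %{"exp" => :"$1"}, :_},

[{:<, :"$1", now()}],

# select_delete/2 only deletes objects for which the body returns true
[true]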

}

]

:dets.select_delete(rt_tab(), match_spec)

end

@impl Store

def get_access_token(at) do

case :ets.lookup(at_tab(), at) do

[{at, _issuer, token_type, at_metadata, updated_at}] ->

if OAuth2TokenManager.token_valid?(at_metadata) do

{:ok, {at, token_type, at_metadata, updated_at}}

else

delete_access_token(at)

{:ok, nil}

end

[] ->

{:ok, nil}

[_ | _] ->

{:error, %MultipleResultsError{}}

end

end

@impl Store

def get_access_tokens_for_subject(iss, sub) do

match_spec = [

{

{:"$1", :"$2", :_, %{"sub" => :"$3"}, :_},

[{:==, :"$2", iss}, {:==, :"$3", sub}],

[:"$1"]

}

]

result =

:ets.select(at_tab(), match_spec)

|> Enum.reduce(

[],

fn at, acc ->

case get_access_token(at) do

{:ok, {^at, token_type, at_metadata, updated_at}} ->

[{at, token_type, at_metadata, updated_at} | acc]

_ ->

acc

end

end

)

|> Enum.filter(&OAuth2TokenManager.token_valid?/1)

{:ok, result}

rescue

e ->

{:error, e}

end

@impl Store

def get_access_tokens_client_credentials(iss, client_id) do

match_spec = [

{

{:"$1", :"$2", :_, %{"client_id" => :"$3"}, :_},

[{:==, :"$2", iss}, {:==, :"$3", client_id}],

[:"$1"]

}

]

result =

:ets.select(at_tab(), match_spec)

|> Enum.reduce(

[],

fn at, acc ->

case get_access_token(at) do

{:ok, {^at, _token_type, %{"sub" => _}, _updated_at}} ->

acc

{:ok, {^at, token_type, at_metadata, updated_at}} ->

[{at, token_type, at_metadata, updated_at} | acc]

_ ->

acc

end

end

)

|> Enum.filter(&OAuth2TokenManager.token_valid?/1)

{:ok, result}

rescue

e ->

{:error, e}

end

@impl Store

def put_access_token(at, token_type, at_metadata, iss) do

:ets.insert(at_tab(), {at, iss, token_type, at_metadata, now()})

{:ok, at_metadata}

rescue

e ->

{:error, e}

end

@impl Store

def delete_access_token(at) do

:ets.delete(at_tab(), at)

:ok

rescue

e ->

{:error, e}

end

@impl Store

def get_refresh_token(rt) do

case :dets.lookup(rt_tab(), rt) do

[{rt, _issuer, rt_metadata, updated_at}] ->

{:ok, {rt, rt_metadata, updated_at}}

[] ->

{:ok, nil}

[_ | _] ->

{:error, %MultipleResultsError{}}

end

end

@impl Store

def get_refresh_tokens_for_subject(iss, sub) do

match_spec = [

{

{:"$1", :"$2", %{"sub" => :"$3"}, :_},

[{:==, :"$2", iss}, {:==, :"$3", sub}],

[:"$1"]

}

]

result =

:dets.select(rt_tab(), match_spec)

|> Enum.reduce(

[],

fn rt, acc ->

case get_refresh_token(rt) do

{:ok, {^rt, rt_metadata, updated_at}} ->

[{rt, rt_metadata, updated_at} | acc]

_ ->

acc

end

end

)

|> Enum.filter(&OAuth2TokenManager.token_valid?/1)

{:ok, result}

rescue

e ->

{:error, e}

end

@impl Store

def get_refresh_tokens_client_credentials(iss, client_id) do

match_spec = [

{

{:"$1", :"$2", %{"client_id" => :"$3"}, :_},

[{:==, :"$2", iss}, {:==, :"$3", client_id}],

[:"$1"]

}

]

result =

:dets.select(rt_tab(), match_spec)

|> Enum.reduce(

[],

fn rt, acc ->

case get_refresh_token(rt) do

{:ok, {^rt, %{"sub" => _}, _updated_at}} ->

acc

{:ok, {^rt, rt_metadata, updated_at}} ->

[{rt, rt_metadata, updated_at} | acc]

_ ->

acc

end

end

)

|> Enum.filter(&OAuth2TokenManager.token_valid?/1)

{:ok, result}

rescue

e ->

{:error, e}

end

@impl Store

def put_refresh_token(rt, rt_metadata, iss) do

:dets.insert(rt_tab(), {rt, iss, rt_metadata, now()})

{:ok, rt_metadata}

rescue

e ->

{:error, e}

end

@impl Store

def delete_refresh_token(rt) do

:dets.delete(rt_tab(), rt)

:ok

rescue

e ->

{:error, e}

end

@impl Store

def get_claims(iss, sub) do

case :dets.lookup(claim_tab(), {iss, sub}) do

[{{_iss, _sub}, _id_token, claims_or_nil, updated_at_or_nil}] ->

{:ok, {claims_or_nil, updated_at_or_nil}}

[] ->

{:ok, nil}

[_ | _] ->

{:error, %MultipleResultsError{}}

end

end

@impl Store

def put_claims(iss, sub, claims) do

entry =

case get_id_token(iss, sub) do

{:ok, <<_::binary>> = id_token} ->

{{iss, sub}, id_token, claims, now()}

{:ok, nil} ->

{{iss, sub}, nil, claims, now()}

end

case :dets.insert(claim_tab(), entry) do

:ok ->

:ok

{:error, reason} ->

{:error, %InsertError{reason: reason}}

end

end

@impl Store

def get_id_token(iss, sub) do

case :dets.lookup(claim_tab(), {iss, sub}) do

[{{_iss, _sub}, id_token_or_nil, _claims_or_nil, _updated_at_or_nil}] ->

{:ok, id_token_or_nil}

[] ->

{:ok, nil}

[_ | _] ->

{:error, %MultipleResultsError{}}

end

end

@impl Store

def put_id_token(iss, sub, id_token) do

entry =

case get_claims(iss, sub) do

{:ok, {claims_or_nil, updated_at_or_nil}} ->

{{iss, sub}, id_token, claims_or_nil, updated_at_or_nil}

{:ok, nil} ->

{{iss, sub}, id_token, nil, nil}

end

case :dets.insert(claim_tab(), entry) do

:ok ->

:ok

{:error, reason} ->

{:error, %InsertError{reason: reason}}

end

end

defp at_tab(), do: Module.concat(__MODULE__, AccessToken)

defp rt_tab(), do: Module.concat(__MODULE__, RefreshToken) |> :erlang.atom_to_list()

defp claim_tab(), do: Module.concat(__MODULE__, Claims) |> :erlang.atom_to_list()

defp now, do: System.system_time(:second)

defp schedule_cleanup(state) do

interval = (state[:cleanup_interval] || @default_cleanup_interval) * 1000

Process.send_after(self(), :cleanup, interval)

end

end

|

lib/oauth2_token_manager/store/local.ex

| 0.777638

| 0.54468

|

local.ex

|

starcoder

|

defmodule Day16 do
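@moduledoc """
Part 2 assigns each field name to a ticket column by elimination: each rule keeps
only the columns whose values it accepts on every valid ticket, the rules are sorted
by how many candidate columns they have, and each rule then takes the single column
not already claimed. The sketch below runs that elimination step on hypothetical
candidate sets; like `part2/1`, it assumes every step leaves exactly one free
column and raises a `MatchError` otherwise.

```elixir
# Hypothetical candidate sets: field name => columns that satisfy its rules
candidates = %{
  "class" => MapSet.new([1, 2]),
  "row" => MapSet.new([0, 1, 2]),
  "seat" => MapSet.new([2])
}

{assignment, _taken} =
  candidates
  |> Enum.sort_by(fn {_name, set} -> MapSet.size(set) end)
  |> Enum.map_reduce(MapSet.new(), fn {name, set}, taken ->
    # Exactly one column should remain once the smaller sets are resolved
    [index] = set |> MapSet.difference(taken) |> MapSet.to_list()
    {{name, index}, MapSet.put(taken, index)}
  end)

Map.new(assignment)
# => %{"class" => 1, "row" => 0, "seat" => 2}
```
"""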

def part1(input) do

{rules, _, nearby} = parse(input)

rules = flatten_rules(rules)

nearby

|> List.flatten()

|> Enum.filter(fn field ->

not valid_field?(field, rules)

end)

|> Enum.sum

end

def part2(input) do

{rules, yours, nearby} = parse(input)

nearby = discard_invalid_tickets(rules, nearby)

all_tickets = [yours | nearby]

# Figure out the zero-based index for each field.

{indices, _} = rules

|> Enum.map(fn {name, rules} ->

{name, find_index_candidates(rules, all_tickets)}

end)

|> Enum.sort_by(fn {_name, set} -> MapSet.size(set) end)

|> Enum.map_reduce(MapSet.new(), fn {name, candidates}, seen ->

[index] = MapSet.difference(candidates, seen)

|> MapSet.to_list()

{{name, index}, MapSet.put(seen, index)}

end)

# Retrieve the values for the departure fields and multiply them.

indices

|> Enum.filter(fn {name, _index} ->

String.starts_with?(name, "departure")

end)

|> Enum.map(fn {_, index} ->

Enum.at(yours, index)

end)

|> Enum.reduce(1, &*/2)

end

defp find_index_candidates(rules, tickets) do

candidates = MapSet.new(0..length(hd(tickets))-1)

Enum.reduce(tickets, candidates, fn ticket, acc ->

Enum.with_index(ticket)

|> Enum.reduce(acc, fn {field, index}, acc ->

case valid_field?(field, rules) do

true -> acc

false -> MapSet.delete(acc, index)

end

end)

end)

end

defp discard_invalid_tickets(rules, nearby) do

rules = flatten_rules(rules)

Enum.filter(nearby, fn ticket -> valid?(ticket, rules) end)

end

defp valid?(ticket, rules) do

Enum.all?(ticket, fn field ->

valid_field?(field, rules)

end)

end

defp valid_field?(field, rules) do

Enum.any?(rules, fn range ->

field in range

end)

end

defp flatten_rules(rules) do

Enum.flat_map(rules, &(elem(&1, 1)))

end

defp parse(input) do

{first, next} = Enum.split_while(input, fn line ->

line != "your ticket:"

end)

{yours, nearby} = Enum.split_while(next, fn line ->

line != "nearby tickets:"

end)

rules = TicketParser.parse_rules(first)

yours = parse_ticket(hd(tl(yours)))

nearby = Enum.map(tl(nearby), &parse_ticket/1)

{rules, yours, nearby}

end

defp parse_ticket(fields) do

String.split(fields, ",") |> Enum.map(&String.to_integer/1)

end

end

defmodule TicketParser do

import NimbleParsec

defp reduce_range([min, max]) do

min..max

end

defp reduce_rule([description, range1, range2]) do

{description, [range1, range2]}

end

range = integer(min: 1)

|> ignore(string("-"))

|> integer(min: 1)

|> reduce({:reduce_range, []})

rule_def = ascii_string([?a..?z,?\s], min: 1)

|> ignore(string(": "))

|> concat(range)

|> ignore(string(" or "))

|> concat(range)

|> eos()

|> reduce({:reduce_rule, []})

defparsecp :rule, rule_def

def parse_rules(input) do

Enum.map(input, fn(line) ->

{:ok, [res], _, _, _, _} = rule(line)

res

end)

end

end

|

day16/lib/day16.ex

| 0.624408

| 0.532243

|

day16.ex

|

starcoder

|

defmodule Similarity.Cosine do

@moduledoc """

A struct that can be used to accumulate ids & attributes and calculate the similarity between them.

"""

alias Similarity.Cosine

defstruct attributes_counter: 0, attributes_map: %{}, map: %{}

@doc """

Returns a new `%Cosine{}` struct to be first used with `add/3` function

"""

def new, do: %Cosine{}

@doc """

Puts a new id with attributes into `%Cosine{}.map` and returns `%Cosine{}` struct.

## Example:

s = Similarity.Cosine.new

s = s |> Similarity.Cosine.add("barna", [{"n_of_bacons", 3}, {"hair_color_r", 124}, {"hair_color_g", 188}, {"hair_color_b", 11}])

"""

def add(struct = %Cosine{map: map}, id, attributes) do

struct = %Cosine{attributes_map: attributes_map} = add_attributes(struct, attributes)

transformed_attributes =

attributes |> Enum.map(fn {key, value} -> {Map.get(attributes_map, key), value} end)

new_map = map |> Map.put(id, transformed_attributes)

%Cosine{struct | map: new_map}

end

@doc """

Returns the `Similarity.cosine_srol/2` similarity between the two ids `id_a` and `id_b` in `%Cosine{}`

## Example:

s = Similarity.Cosine.new

s = s |> Similarity.Cosine.add("barna", [{"n_of_bacons", 3}, {"hair_color_r", 124}, {"hair_color_g", 188}, {"hair_color_b", 11}])

s = s |> Similarity.Cosine.add("somebody", [{"n_of_bacons", 0}, {"hair_color_r", 222}, {"hair_color_g", 62}, {"hair_color_b", 11}])

s |> Similarity.Cosine.between("barna", "somebody")

"""

def between(%Cosine{map: map}, id_a, id_b) do

do_between(map, id_a, id_b)

end

defp do_between(map, id_a, id_b) do

attributes_a = map |> Map.get(id_a)

attributes_b = map |> Map.get(id_b)

keys_a = attributes_a |> Enum.map(fn {k, _v} -> k end) |> MapSet.new()

keys_b = attributes_b |> Enum.map(fn {k, _v} -> k end) |> MapSet.new()

common_attributes_keys = MapSet.intersection(keys_a, keys_b)

common_attributes_a =

common_attributes_keys

|> Enum.map(fn common_key ->

Enum.find(attributes_a, fn {k, _v} -> k == common_key end) |> elem(1)

end)

common_attributes_b =

common_attributes_keys

|> Enum.map(fn common_key ->

Enum.find(attributes_b, fn {k, _v} -> k == common_key end) |> elem(1)

end)

Similarity.cosine_srol(common_attributes_a, common_attributes_b)

end

@doc """

Returns a stream of all unique pairs of similarities in `%Cosine{}.map`

## Example:

s = Similarity.Cosine.new

s = s |> Similarity.Cosine.add("barna", [{"n_of_bacons", 3}, {"hair_color_r", 124}, {"hair_color_g", 188}, {"hair_color_b", 11}])

s = s |> Similarity.Cosine.add("somebody", [{"n_of_bacons", 0}, {"hair_color_r", 222}, {"hair_color_g", 62}, {"hair_color_b", 11}])

Similarity.Cosine.stream(s)
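
`stream_next/1` pairs the head id with every id after it and then continues with the tail, so each unordered pair is produced exactly once. The sketch below reproduces only that pairing order with plain lists and no similarity computation; `pairs` is a hypothetical name.

```elixir
# Pair each id with every id after it, mirroring the head/tail walk of stream_next/1
pairs = fn ids ->
  Stream.unfold(ids, fn
    [] -> nil
    [_last] -> nil
    [h | tl] -> {Enum.map(tl, &{h, &1}), tl}
  end)
  |> Enum.concat()
end

pairs.(["a", "b", "c"])
# => [{"a", "b"}, {"a", "c"}, {"b", "c"}]
```

Note that `Map.keys/1` returns keys in no guaranteed order, so the pair order in the real stream may differ.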

"""

def stream(%Cosine{map: map}) do

Stream.resource(

fn -> {_all_ids = Map.keys(map), map} end,

&stream_next/1,

fn _ -> nil end

)

end

@doc false

# Halt when at most one id remains (this also covers an empty map)
def stream_next({ids, _map}) when length(ids) <= 1 do

{:halt, nil}

end

@doc false

def stream_next({[h_id | tl_ids], map}) do

{

tl_ids |> Enum.map(fn id -> {h_id, id, do_between(map, h_id, id)} end),

{tl_ids, map}

}

end

@doc false

def add_attributes(

struct = %Cosine{attributes_counter: attributes_counter, attributes_map: attributes_map},

attributes

) do

{new_attributes_counter, new_attributes_map} =

do_add_attributes(attributes, attributes_counter, attributes_map)

%Cosine{

struct

| attributes_counter: new_attributes_counter,

attributes_map: new_attributes_map

}

end

@doc false

def do_add_attributes([], attributes_counter, attributes_map) do

{attributes_counter, attributes_map}

end

@doc false

def do_add_attributes([{key, _value} | tl], attributes_counter, attributes_map) do

if Map.has_key?(attributes_map, key) do

do_add_attributes(tl, attributes_counter, attributes_map)

else

new_attributes_map = Map.put(attributes_map, key, attributes_counter)

new_attributes_counter = attributes_counter + 1

do_add_attributes(tl, new_attributes_counter, new_attributes_map)

end

end

end

|

lib/similarity/cosine.ex

| 0.90445

| 0.50592

|

cosine.ex

|

starcoder

|

defmodule Ello.V3.Schema.DiscoveryTypes do

use Absinthe.Schema.Notation

alias Ello.V3.Resolvers

object :category do

field :id, :id

field :name, :string

field :slug, :string

field :level, :string

field :order, :integer

field :description, :string

field :tile_image, :tshirt_image_versions, resolve: fn(_args, %{source: category}) ->

{:ok, category.tile_image_struct}

end

field :allow_in_onboarding, :boolean

field :is_creator_type, :boolean

field :created_at, :datetime

field :category_users, list_of(:category_user) do

arg :roles, list_of(:category_user_role)

resolve &Resolvers.CategoryUsers.call/3

end

field :current_user_state, :category_user

field :brand_account, :user

end

object :category_search_result do

field :categories, list_of(:category)

field :is_last_page, :boolean, resolve: fn(_, _) -> {:ok, true} end

end

object :category_post do

field :id, :id

field :status, :string

field :submitted_at, :datetime

field :submitted_by, :user

field :featured_at, :datetime

field :featured_by, :user

field :unfeatured_at, :datetime

field :removed_at, :datetime

field :category, :category

field :post, :post

field :actions, :category_post_actions, resolve: &actions/2

end

object :category_post_actions do

field :feature, :category_post_action

field :unfeature, :category_post_action

end

object :category_post_action do

field :href, :string

field :label, :string

field :method, :string

end

object :category_user do

field :id, :id

field :role, :category_user_role

field :created_at, :datetime

field :updated_at, :datetime

field :category, :category

field :user, :user

end

enum :category_user_role do

value :moderator, as: "moderator"

value :curator, as: "curator"

value :featured, as: "featured"

end

object :page_header do

field :id, :id

field :user, :user

field :post_token, :string

field :slug, :string, resolve: &page_header_slug/2

field :kind, :page_header_kind, resolve: &page_header_kind/2

field :header, :string, resolve: &page_header_header/2

field :subheader, :string, resolve: &page_header_sub_header/2

field :cta_link, :page_header_cta_link, resolve: &page_header_cta_link/2

field :image, :responsive_image_versions, resolve: &page_header_image/2

field :category, :category

end

enum :page_header_kind do

value :category

value :artist_invite

value :editorial

value :authentication

value :generic

end

object :page_header_cta_link do

field :text, :string

field :url, :string

end

object :editorial_stream do

field :next, :string

field :per_page, :integer

field :is_last_page, :boolean

field :editorials, list_of(:editorial)

end

object :editorial do

field :id, :id

field :kind, :editorial_kind, resolve: &editorial_kind/2

field :title, :string, resolve: &editorial_content/2

field :subtitle, :string, resolve: &editorial_content(&1, &2, "rendered_subtitle")

field :path, :string, resolve: &editorial_content(&1, &2)

field :url, :string, resolve: &editorial_content(&1, &2)

field :post, :post

field :stream, :editorial_post_stream, resolve: &editorial_stream/2

field :one_by_one_image, :responsive_image_versions, resolve: &editorial_image/2

field :one_by_two_image, :responsive_image_versions, resolve: &editorial_image/2

field :two_by_one_image, :responsive_image_versions, resolve: &editorial_image/2

field :two_by_two_image, :responsive_image_versions, resolve: &editorial_image/2

end

enum :editorial_kind do

value :post

value :post_stream

value :internal

value :external

value :sponsored

end

object :editorial_post_stream do

field :query, :string

field :tokens, list_of(:string)

end

defp page_header_kind(_, %{source: %{category_id: _}}), do: {:ok, :category}

defp page_header_kind(_, %{source: %{is_editorial: true}}), do: {:ok, :editorial}

defp page_header_kind(_, %{source: %{is_artist_invite: true}}), do: {:ok, :artist_invite}

defp page_header_kind(_, %{source: %{is_authentication: true}}), do: {:ok, :authentication}

defp page_header_kind(_, %{source: _}), do: {:ok, :generic}

defp page_header_slug(_, %{source: %{category: %{slug: slug}}}), do: {:ok, slug}

defp page_header_slug(_, %{source: _}), do: {:ok, nil}

defp page_header_header(_, %{source: %{category: %{header: nil, name: copy}}}), do: {:ok, copy}

defp page_header_header(_, %{source: %{category: %{header: copy}}}), do: {:ok, copy}

defp page_header_header(_, %{source: %{header: copy}}), do: {:ok, copy}

defp page_header_sub_header(_, %{source: %{category: %{description: copy}}}), do: {:ok, copy}

defp page_header_sub_header(_, %{source: %{subheader: copy}}), do: {:ok, copy}

defp page_header_cta_link(_, %{source: %{category: %{cta_caption: text, cta_href: url}}}),

do: {:ok, %{text: text, url: url}}

defp page_header_cta_link(_, %{source: %{cta_caption: text, cta_href: url}}),

do: {:ok, %{text: text, url: url}}

defp page_header_image(_, %{source: %{image_struct: image}}), do: {:ok, image}

defp actions(args, %{context: %{current_user: nil}} = resolution) do

actions(args, resolution, nil)

end

defp actions(args, %{source: category_post, context: %{current_user: current_user}} = resolution) do

cat_user = Enum.find(current_user.category_users, &(&1.category_id == category_post.category.id))

actions(args, resolution, cat_user)

end

defp actions(_, %{

source: category_post,

context: %{current_user: %{is_staff: true}},

}, _) do

{:ok, %{

feature: feature_action(category_post),

unfeature: unfeature_action(category_post),

}}

end

defp actions(_, %{source: category_post}, %{role: "curator"}) do

{:ok, %{

feature: feature_action(category_post),

unfeature: unfeature_action(category_post),

}}

end

defp actions(_, _, _), do: {:ok, nil}

defp feature_action(%{id: id, status: "submitted"}), do: %{

href: "/api/v2/category_posts/#{id}/feature",

method: "put",

}

defp feature_action(_), do: nil

defp unfeature_action(%{id: id, status: "featured"}), do: %{

href: "/api/v2/category_posts/#{id}/unfeature",

method: "put",

}

defp unfeature_action(_), do: nil

@editorial_kinds %{

"post" => :post,

"curated_posts" => :post_stream,

"internal" => :internal,

"external" => :external,

"sponsored" => :sponsored,

}

defp editorial_kind(_, %{source: %{kind: kind}}), do: {:ok, @editorial_kinds[kind]}

defp editorial_content(a, %{definition: %{schema_node: %{identifier: name}}} = b),

do: editorial_content(a, b, "#{name}")

defp editorial_content(_, %{source: editorial}, key),

do: {:ok, Map.get(editorial.content, key)}

defp editorial_stream(_, %{source: %{kind: "curated_posts"} = editorial}) do

{:ok, %{

query: "findPosts",

tokens: editorial.content["post_tokens"],

}}

end

defp editorial_stream(_, _), do: {:ok, nil}

defp editorial_image(_, %{

definition: %{schema_node: %{identifier: :one_by_one_image}},

source: editorial,

}), do: one_by_one_image(editorial)

defp editorial_image(_, %{

definition: %{schema_node: %{identifier: :one_by_two_image}},

source: %{one_by_two_image_struct: nil} = editorial,

}), do: one_by_one_image(editorial)

defp editorial_image(_, %{

definition: %{schema_node: %{identifier: :one_by_two_image}},

source: %{one_by_two_image_struct: image},

}), do: {:ok, image}

defp editorial_image(_, %{

definition: %{schema_node: %{identifier: :two_by_one_image}},

source: %{two_by_one_image_struct: nil} = editorial,

}), do: one_by_one_image(editorial)

defp editorial_image(_, %{

definition: %{schema_node: %{identifier: :two_by_one_image}},

source: %{two_by_one_image_struct: image},

}), do: {:ok, image}

defp editorial_image(_, %{

definition: %{schema_node: %{identifier: :two_by_two_image}},

source: %{two_by_two_image_struct: nil} = editorial,

}), do: one_by_one_image(editorial)

defp editorial_image(_, %{

definition: %{schema_node: %{identifier: :two_by_two_image}},

source: %{two_by_two_image_struct: image},

}), do: {:ok, image}

defp one_by_one_image(%{one_by_one_image_struct: nil}), do: {:ok, nil}

defp one_by_one_image(%{one_by_one_image_struct: image}), do: {:ok, image}

end

|

apps/ello_v3/lib/ello_v3/schema/discovery_types.ex

| 0.574872

| 0.537223

|

discovery_types.ex

|

starcoder

|

defmodule Central.Helpers.StructureHelper do

@moduledoc """

A module to make import/export of JSON objects easier. Currently only tested with a single parent object and multiple sets of child objects.

IDs are exported but dropped on import, since they are liable to change depending on the database the data is imported into.
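
On import, each child map has its `"id"` removed and its foreign key pointed at the newly inserted parent, as `import_assoc/3` does before calling the changeset. A minimal sketch of that parameter preparation with plain maps; the `"post_id"` key and the sample values are hypothetical.

```elixir
# Mirrors the parameter preparation in import_assoc/3 (keys and values are hypothetical)
skip_import_fields = ["id"]
parent_id = 42
exported_child = %{"id" => 7, "post_id" => 1, "body" => "hello"}

params =
  exported_child
  # Point the foreign key at the freshly inserted parent
  |> Map.put("post_id", parent_id)
  # Drop the old primary key so the target database assigns a new one
  |> Enum.reject(fn {k, _} -> k in skip_import_fields end)
  |> Map.new()

# params is %{"body" => "hello", "post_id" => 42}
```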

"""

alias Central.Repo

import Ecto.Query, warn: false

@skip_export_fields [:__meta__, :inserted_at, :updated_at]

@skip_import_fields ~w(id)

defp query_obj(module, id) do

query =

from objects in module,

where: objects.id == ^id

Repo.one!(query)

end

defp cast_many(object, field, parent_module) do

association = parent_module.__schema__(:association, field)

object_module = association.queryable

case association.relationship do

:parent ->

:skip

:child ->

Repo.preload(object, field)

|> Map.get(field)

|> Enum.map(fn item -> cast_one(item, object_module) end)

end

end

defp cast_one(object, module) do

skip_fields =

if Kernel.function_exported?(module, :structure_export_skips, 0) do

module.structure_export_skips()

else

[]

end

object

|> Map.from_struct()

|> Enum.filter(fn {k, _} ->

not Enum.member?(@skip_export_fields, k) and not Enum.member?(skip_fields, k)

end)

|> Enum.map(fn {k, v} ->

cond do

module.__schema__(:field_source, k) -> {k, v}

module.__schema__(:association, k) -> {k, cast_many(object, k, module)}

# Neither a persisted field nor an association (e.g. a virtual field): drop it
true -> {k, :skip}

end

end)

|> Enum.filter(fn {_, v} -> v != :skip end)

|> Map.new()

end

def export(module, id) do

query_obj(module, id)

|> cast_one(module)

end

defp import_assoc(parent_module, field, data, parent_id) when is_list(data) do

field = String.to_existing_atom(field)

assoc = parent_module.__schema__(:association, field)

data

|> Enum.map(fn item_params ->

import_assoc(assoc, item_params, parent_id)

end)

end

defp import_assoc(assoc, params, parent_id) when is_map(params) do

key = assoc.related_key |> to_string

params =

Map.put(params, key, parent_id)

|> Enum.filter(fn {k, _} -> not Enum.member?(@skip_import_fields, k) end)

|> Map.new()

module = assoc.queryable

{:ok, _new_object} =

module.changeset(module.__struct__, params)

|> Repo.insert()

end

# Given the root module and the data, this should create everything you need

def import(module, data) do

assocs =

module.__schema__(:associations)

|> Enum.map(&to_string/1)

# First, create and insert the core object

core_params =

data

|> Enum.filter(fn {k, _} ->

not Enum.member?(assocs, k) and not Enum.member?(@skip_import_fields, k)

end)

|> Map.new()

{:ok, core_object} =

module.changeset(module.__struct__, core_params)

|> Repo.insert()

# Now, insert the associated records

data

|> Enum.filter(fn {k, _} -> Enum.member?(assocs, k) end)

|> Enum.each(fn {k, v} -> import_assoc(module, k, v, core_object.id) end)

core_object

end

end

|

lib/central/helpers/structure_helper.ex

| 0.663124

| 0.514827

|

structure_helper.ex

|

starcoder

|

defmodule Relay.Marathon.App do

@moduledoc """

Turns Marathon API JSON into consistent App objects.
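
`port_indices/3` keeps the indices of the ports whose marathon-lb group label matches the requested group. The sketch below assumes a label scheme with a per-port `HAPROXY_<n>_GROUP` label that falls back to an app-wide `HAPROXY_GROUP` label; the real lookup lives in `Labels.marathon_lb_group/2` and may differ. `group_for` is a hypothetical helper.

```elixir
# Hypothetical group lookup: per-port label first, then the app-wide label
group_for = fn labels, index ->
  Map.get(labels, "HAPROXY_" <> Integer.to_string(index) <> "_GROUP") ||
    Map.get(labels, "HAPROXY_GROUP")
end

labels = %{"HAPROXY_GROUP" => "external", "HAPROXY_1_GROUP" => "internal"}
ports_list = [8080, 9000, 9090]

# Only safe for a non-empty ports list: 0..-1 would be a decreasing range
indices =
  0..(length(ports_list) - 1)
  |> Enum.filter(fn i -> group_for.(labels, i) == "external" end)

# indices is [0, 2]
```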

"""

alias Relay.Marathon.{Labels, Networking}

@enforce_keys [:id, :networking_mode, :ports_list, :port_indices, :labels, :version]

defstruct [:id, :networking_mode, :ports_list, :port_indices, :labels, :version]

@type t :: %__MODULE__{

id: String.t(),

networking_mode: Networking.networking_mode(),

ports_list: [:inet.port_number()],

port_indices: [non_neg_integer],

labels: Labels.labels(),

version: String.t()

}

@spec from_definition(map, String.t()) :: t

def from_definition(%{"id" => id, "labels" => labels} = app, group) do

ports_list = Networking.ports_list(app)

%__MODULE__{

id: id,

networking_mode: Networking.networking_mode(app),

ports_list: ports_list,

port_indices: port_indices(ports_list, labels, group),

labels: labels,

version: version(app)

}

end

@spec from_event(map, String.t()) :: t

def from_event(

%{"eventType" => "api_post_event", "appDefinition" => definition} = _event,

group

),

do: from_definition(definition, group)

@spec port_indices([:inet.port_number()], Labels.labels(), String.t()) :: [non_neg_integer]

# An app without ports has no indices; this also avoids iterating the range 0..-1
defp port_indices([], _labels, _group), do: []

defp port_indices(ports_list, labels, group) do

0..(length(ports_list) - 1)

|> Enum.filter(fn port_index -> Labels.marathon_lb_group(labels, port_index) == group end)

end

@spec version(map) :: String.t()

defp version(app) do

case app do

# In most cases the `lastConfigChangeAt` value should be available...

%{"versionInfo" => %{"lastConfigChangeAt" => version}} ->

version

# ...but if this is an app that hasn't been changed yet then use `version`

%{"version" => version} ->

version

end

end

@spec marathon_lb_vhost(t, non_neg_integer) :: [String.t()]

def marathon_lb_vhost(%__MODULE__{labels: labels}, port_index),

do: Labels.marathon_lb_vhost(labels, port_index)

@spec marathon_lb_redirect_to_https?(t, non_neg_integer) :: boolean

def marathon_lb_redirect_to_https?(%__MODULE__{labels: labels}, port_index),

do: Labels.marathon_lb_redirect_to_https?(labels, port_index)

@spec marathon_acme_domain(t, non_neg_integer) :: [String.t()]

def marathon_acme_domain(%__MODULE__{labels: labels}, port_index),

do: Labels.marathon_acme_domain(labels, port_index)

end

|

lib/relay/marathon/app.ex

| 0.797833

| 0.415847

|

app.ex

|

starcoder

|

defmodule Membrane.Element.Action do

@moduledoc """

This module contains type specifications of actions that can be returned

from element callbacks.

Returning actions is how an element interacts with other elements and other parts

of the framework. Each action may be returned by any

callback (except for `c:Membrane.Element.Base.Mixin.CommonBehaviour.handle_init`

and `c:Membrane.Element.Base.Mixin.CommonBehaviour.handle_terminate`, as they

do not return any actions) unless explicitly stated otherwise.

"""

alias Membrane.{Buffer, Caps, Event, Message}

alias Membrane.Element.Pad

@typedoc """

Sends a message to the pipeline.

"""

@type message_t :: {:message, Message.t()}

@typedoc """

Sends an event through a pad (sink or source).

Forbidden when playback state is stopped.

"""

@type event_t :: {:event, {Pad.name_t(), Event.t()}}

@typedoc """

Allows splitting callback execution into multiple applications of another callback

(hereafter called the sub-callback).

Executions are synchronous in the element process, and each of them passes

subsequent arguments from the args_list, along with the element state (passed

as the last argument each time).

The return value of each sub-callback execution can be any valid return value

of the original callback (this also means the sub-callback can return any action

valid for the original callback, unless explicitly stated otherwise). Returned actions

are executed immediately (they are NOT accumulated and executed after all

sub-callback executions are finished).

Useful when a long action is to be undertaken, and partial results need to

be returned before the entire process finishes (e.g. the default implementation of

`c:Membrane.Element.Base.Filter.handle_process/4` uses the split action to invoke

`c:Membrane.Element.Base.Filter.handle_process1/4` with each buffer).
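
The semantics can be pictured as a fold over `args_list` in which the element state is appended as the last argument and each sub-callback's actions are executed before the next call. A plain-Elixir sketch, assuming a simplified `{actions, state}` return shape rather than Membrane's real callback return type; `run_split`, `handle_one` and `execute` are hypothetical names.

```elixir
# Hypothetical model of the split action, not Membrane's implementation
run_split = fn sub_callback, args_list, state, execute ->
  Enum.reduce(args_list, state, fn args, acc_state ->
    # The current state is appended as the last argument of every call
    {actions, new_state} = apply(sub_callback, args ++ [acc_state])
    # Actions are executed right away, before the next sub-callback call
    Enum.each(actions, execute)
    new_state
  end)
end

# Sub-callback that emits one buffer action and counts processed buffers
handle_one = fn buffer, count -> {[{:buffer, {:source, buffer}}], count + 1} end

{:ok, log} = Agent.start_link(fn -> [] end)
execute = fn action -> Agent.update(log, &[action | &1]) end

final_state = run_split.(handle_one, [[:b1], [:b2]], 0, execute)
executed = Agent.get(log, &Enum.reverse/1)
# final_state is 2; executed lists both buffer actions in call order
```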

"""

@type split_t :: {:split, {callback_name :: atom, args_list :: [[any]]}}

@typedoc """

Sends caps through a pad (it must be a source pad). The sent caps must satisfy

the constraints on the pad.

Forbidden when playback state is stopped.

"""

@type caps_t :: {:caps, {Pad.name_t(), Caps.t()}}

@typedoc """

Sends buffers through a pad (it must be a source pad).

Allowed only when playback state is playing.

"""

@type buffer_t :: {:buffer, {Pad.name_t(), Buffer.t() | [Buffer.t()]}}

@typedoc """

Makes a demand on a pad (it must be a sink pad in pull mode). It does NOT

entail _sending_ demand through the pad, but just _requesting_ some amount

of data from `Membrane.Core.PullBuffer`, which _sends_ demands automatically when it

runs out of data.

If there is any data available at the pad, the data is passed to

`c:Membrane.Element.Base.Filter.handle_process/4`

or `c:Membrane.Element.Base.Sink.handle_write/4` callback. The invoked callback is

guaranteed not to receive more data than demanded.

Depending on the element type and callback, it may contain different payloads or

behave differently:

In sinks:

- Payload `{pad, size}` increases the demand on the given pad by the given size.

- Payload `{pad, {:set_to, size}}` erases the current demand and sets it to the given size.

In filters:

- Payload `{pad, size}` is only allowed from the

`c:Membrane.Element.Base.Mixin.SourceBehaviour.handle_demand/5` callback. It overrides

the current demand.

- Payload `{pad, {:source, demanding_source_pad}, size}` can be returned from

any callback. `demanding_source_pad` is the pad that is to receive the demanded

buffers after they are processed.

- Payload `{pad, :self, size}` makes the demand act as if the element were a sink,

that is, it extends the demand on the given pad. Buffers received as a result of the

demand should be consumed by the element itself or sent through a pad in `push` mode.

Allowed only when playback state is playing.
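
A minimal sketch of the two sink payloads. It assumes the `{{:ok, actions}, state}` return convention; `MySink` and the `paused?` state key are hypothetical, and `use Membrane.Element.Base.Sink` is left out so the module compiles on its own:

```elixir
# Hypothetical module; a real element would also `use Membrane.Element.Base.Sink`.
# Assumes the `{{:ok, actions}, state}` callback return convention.
defmodule MySink do
  # While the hypothetical `paused?` flag is set, erase any pending demand.
  def handle_write(pad, _data, _ctx, %{paused?: true} = state) do
    {{:ok, [demand: {pad, {:set_to, 0}}]}, state}
  end

  # Otherwise increase the demand on the same pad by 1.
  def handle_write(pad, _data, _ctx, state) do
    {{:ok, [demand: {pad, 1}]}, state}
  end
end
```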

"""

@type demand_t ::

{:demand, demand_common_payload_t | demand_filter_payload_t | demand_sink_payload_t}

@type demand_filter_payload_t ::

{Pad.name_t(), {:source, Pad.name_t()} | :self, size :: non_neg_integer}

@type demand_sink_payload_t :: {Pad.name_t(), {:set_to, size :: non_neg_integer}}

@type demand_common_payload_t :: Pad.name_t() | {Pad.name_t(), size :: non_neg_integer}

@typedoc """

Executes the `c:Membrane.Element.Base.Mixin.SourceBehaviour.handle_demand/5` callback with

the given pad (which must be a source pad in pull mode) if the demand on that pad is greater

than 0.

Useful when demand could not be supplied during the previous call to

`c:Membrane.Element.Base.Mixin.SourceBehaviour.handle_demand/5`, but some

element-specific circumstances have changed and it might now be possible to supply

it (at least partially).

Allowed only when playback state is playing.

"""

@type redemand_t :: {:redemand, Pad.name_t()}

@typedoc """

Sends buffers/caps/events to all source pads of the element (or to its sink pads when

the event occurs on a source pad). Used by the default implementations of

`c:Membrane.Element.Base.Mixin.SinkBehaviour.handle_caps/4` and

`c:Membrane.Element.Base.Mixin.CommonBehaviour.handle_event/4` callbacks in filters.

Allowed only when _all_ of the following conditions are met:

- the element is a filter,

- the callback is `c:Membrane.Element.Base.Filter.handle_process/4`,

`c:Membrane.Element.Base.Mixin.SinkBehaviour.handle_caps/4`

or `c:Membrane.Element.Base.Mixin.CommonBehaviour.handle_event/4`,

- the playback state is valid for the buffer, caps or event action,

respectively.

Keep in mind that `c:Membrane.Element.Base.Filter.handle_process/4` can only

forward buffers, `c:Membrane.Element.Base.Mixin.SinkBehaviour.handle_caps/4` only caps,

and `c:Membrane.Element.Base.Mixin.CommonBehaviour.handle_event/4` only events.
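
A minimal sketch of a pass-through filter that forwards its input unchanged. It assumes the `{{:ok, actions}, state}` return convention; `MyPassThrough` is hypothetical, and `use Membrane.Element.Base.Filter` is left out so the module compiles on its own:

```elixir
# Hypothetical module; a real element would also `use Membrane.Element.Base.Filter`.
# Assumes the `{{:ok, actions}, state}` callback return convention.
defmodule MyPassThrough do
  # Forwards the received buffers, unmodified, to all source pads.
  def handle_process(_pad, buffers, _ctx, state) do
    {{:ok, [forward: buffers]}, state}
  end
end
```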

"""

@type forward_t :: {:forward, Buffer.t() | [Buffer.t()] | Caps.t() | Event.t()}

@typedoc """

Suspends/resumes change of playback state.

- `playback_change: :suspend` may be returned only from the

`c:Membrane.Element.Base.Mixin.CommonBehaviour.handle_prepare/3`,

`c:Membrane.Element.Base.Mixin.CommonBehaviour.handle_play/2` and

`c:Membrane.Element.Base.Mixin.CommonBehaviour.handle_stop/2` callbacks,

and defers the playback state change until `playback_change: :resume` is returned.

- `playback_change: :resume` may be returned from any callback, but only while a

playback state change is suspended, and causes that change to finish.

There is no hard limit on how long a playback change can take, but keep in mind

that it may affect application quality if it is not done quickly enough.

"""

@type playback_change_t :: {:playback_change, :suspend | :resume}

@typedoc """

Type that defines a single action that may be returned from element callbacks.

Depending on the element type, callback, current playback state and other

circumstances, different actions may be available.

"""

@type t ::

event_t

| message_t

| split_t

| caps_t

| buffer_t

| demand_t

| redemand_t

| forward_t

| playback_change_t

end

|

lib/membrane/element/action.ex

| 0.968036

| 0.573141

|

action.ex

|

starcoder

|

defmodule AWS.CodeBuild do

@moduledoc """

CodeBuild is a fully managed build service in the cloud.

CodeBuild compiles your source code, runs unit tests, and produces artifacts

that are ready to deploy. CodeBuild eliminates the need to provision, manage,

and scale your own build servers. It provides prepackaged build environments for

the most popular programming languages and build tools, such as Apache Maven,

Gradle, and more. You can also fully customize build environments in CodeBuild

to use your own build tools. CodeBuild scales automatically to meet peak build

requests. You pay only for the build time you consume. For more information