| repo_name (string, length 9–75) | topic (string, 30 classes) | issue_number (int64, 1–203k) | title (string, length 1–976) | body (string, length 0–254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, length 38–105) | labels (list, length 0–9) | user_login (string, length 1–39) | comments_count (int64, 0–452) |
|---|---|---|---|---|---|---|---|---|---|---|---|

ryfeus/lambda-packs

|

numpy

| 20

|

Magpie package : Multi label text classification

|

Hi, great work! You inspired me to build my own package for a project that you can find in my [repo](https://github.com/Sach97/serverless-multilabel-text-classification). Unfortunately, I'm facing an issue.

I get this [error](https://github.com/Sach97/serverless-multilabel-text-classification/issues/1). Have you seen this type of error before?

|

closed

|

2018-04-30T20:50:46Z

|

2018-05-02T18:15:10Z

|

https://github.com/ryfeus/lambda-packs/issues/20

|

[] |

sachaarbonel

| 1

|

aiortc/aiortc

|

asyncio

| 332

|

Receiving parallel to sending frames

|

I am trying to modify the server example so that receiving and sending frames happen in parallel.

The problem appeared after I modified the frame processing and it became too slow, so I need a way to deal with that. I see two options:

1. Find out the length of the input frame queue, so I can read more frames when the queue grows too long.

2. Read frames as fast as they arrive, in a parallel process.

For the first option, I don't understand how to get the queue length. Is that possible with WebRTC at all? Maybe this approach is wrong.

For the second option, I tried running the receiver in a parallel process, but receiving frames in parallel did not work in my implementation. I believe it is more feasible than the first option, but I can't get it working for now.
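
One way to approach the second option, sketched under assumptions: keep reading frames in a background asyncio task and hand only the newest frame to the slow processing step. The sketch assumes only that the track exposes an awaitable `recv()` (as aiortc's `MediaStreamTrack` does); `FakeTrack`, `LatestFrameReader`, and the timings are hypothetical stand-ins, not part of aiortc.

```python
import asyncio


class FakeTrack:
    """Hypothetical stand-in for a media track: recv() yields numbered frames."""

    def __init__(self, interval=0.01):
        self._interval = interval
        self._count = 0

    async def recv(self):
        await asyncio.sleep(self._interval)  # simulate the frame arrival rate
        self._count += 1
        return self._count


class LatestFrameReader:
    """Reads frames as fast as they arrive and keeps only the newest one."""

    def __init__(self, track):
        self._track = track
        self._queue = asyncio.Queue(maxsize=1)
        self._task = None

    def start(self):
        self._task = asyncio.create_task(self._pump())

    async def _pump(self):
        while True:
            frame = await self._track.recv()
            if self._queue.full():
                self._queue.get_nowait()  # drop the stale frame
            self._queue.put_nowait(frame)

    async def latest(self):
        return await self._queue.get()

    async def stop(self):
        self._task.cancel()
        try:
            await self._task
        except asyncio.CancelledError:
            pass


async def demo():
    reader = LatestFrameReader(FakeTrack())
    reader.start()
    seen = []
    for _ in range(3):
        frame = await reader.latest()
        await asyncio.sleep(0.05)  # slow processing; several frames arrive meanwhile
        seen.append(frame)
    await reader.stop()
    return seen
```

Running `asyncio.run(demo())` returns the frame numbers that were actually processed; frames that arrived while processing was busy are skipped instead of piling up. Whether dropping frames is acceptable depends on the application.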

|

closed

|

2020-04-09T10:22:19Z

|

2021-03-07T14:52:33Z

|

https://github.com/aiortc/aiortc/issues/332

|

[] |

Alick09

| 3

|

feder-cr/Jobs_Applier_AI_Agent_AIHawk

|

automation

| 293

|

Non-descriptive errors, any advice?

|

```

Traceback (most recent call last):

File "App/HawkAI/src/linkedIn_job_manager.py", line 138, in apply_jobs

self.easy_applier_component.job_apply(job)

File "App/HawkAI/src/linkedIn_easy_applier.py", line 67, in job_apply

raise Exception(f"Failed to apply to job! Original exception: \nTraceback:\n{tb_str}")

Exception: Failed to apply to job! Original exception:

Traceback:

Traceback (most recent call last):

File "App/HawkAI/src/linkedIn_easy_applier.py", line 63, in job_apply

self._fill_application_form(job)

File "App/HawkAI/src/linkedIn_easy_applier.py", line 130, in _fill_application_form

if self._next_or_submit():

^^^^^^^^^^^^^^^^^^^^^^

File "App/HawkAI/src/linkedIn_easy_applier.py", line 145, in _next_or_submit

self._check_for_errors()

File "App/HawkAI/src/linkedIn_easy_applier.py", line 158, in _check_for_errors

raise Exception(f"Failed answering or file upload. {str([e.text for e in error_elements])}")

Exception: Failed answering or file upload. ['Please enter a valid answer', 'Please enter a valid answer']

```

Any idea why it keeps failing to answer? The lines in the traceback are mostly about filling in the application form, going to the next step, submitting, or uploading.

|

closed

|

2024-09-05T19:39:07Z

|

2024-09-11T02:01:47Z

|

https://github.com/feder-cr/Jobs_Applier_AI_Agent_AIHawk/issues/293

|

[] |

elonbot

| 3

|

deepfakes/faceswap

|

deep-learning

| 595

|

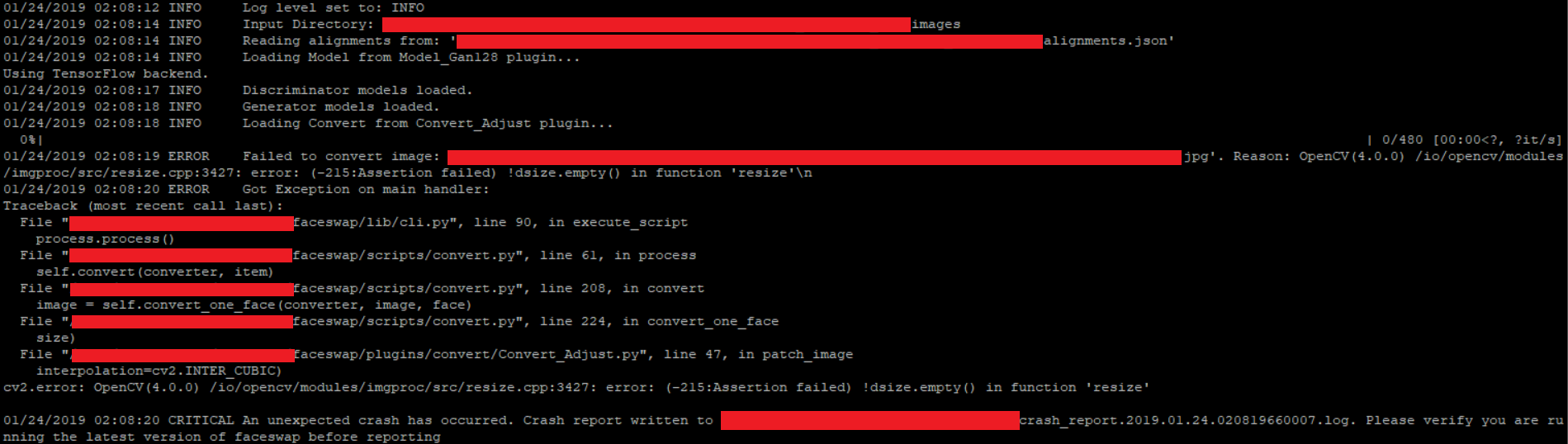

Adjust convert does not work for GAN and GAN128 model

|

The Adjust convert plugin fails with the GAN and GAN128 models.

## Expected behavior

Adjust convert completes without errors.

## Actual behavior

GAN and GAN128 fail with errors (please see the attached picture).

## Steps to reproduce

1) Extract faces from images and train GAN or GAN128 model

2) Perform python faceswap convert with Adjust plugin

## Other relevant information

- **Command line used (GAN128)**: faceswap.py convert -i "path/to/images" -o "output/path" -m "path/to/GAN128/model" -t GAN128 -c Adjust

- **Operating system and version:** Ubuntu 16.04.5 LTS

- **Python version:** 3.5.2

- **Faceswap version:** 4376bbf4f85f9771b0e3752ccf9504efb4e43d21

- **Faceswap method:** GPU

|

closed

|

2019-01-23T23:19:49Z

|

2019-01-23T23:37:18Z

|

https://github.com/deepfakes/faceswap/issues/595

|

[] |

temp-42

| 2

|

supabase/supabase-py

|

fastapi

| 280

|

Error when running this code

|

When I run this code, I get an error:

```

import os

from supabase import create_client, Client

url: str = os.environ.get("SUPABASE_URL")

key: str = os.environ.get("SUPABASE_KEY")

supabase: Client = create_client(url, key)

# Create a random user login email and password.

random_email: str = "cam@example.com"

random_password: str = "9696"

user = supabase.auth.sign_up(email=random_email, password=random_password)

```

Here's the error message:

```

Traceback (most recent call last):

File "C:\Users\Camer\AppData\Local\Programs\Python\Python310\lib\site-packages\gotrue\helpers.py", line 16, in check_response

response.raise_for_status()

File "C:\Users\Camer\AppData\Local\Programs\Python\Python310\lib\site-packages\httpx\_models.py", line 1508, in raise_for_status

raise HTTPStatusError(message, request=request, response=self)

httpx.HTTPStatusError: Client error '422 Unprocessable Entity' for url 'https://gcaycwdskjdsfqvrtdqh.supabase.co/auth/v1/signup'

For more information check: https://httpstatuses.com/422

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\Users\Camer\Cams-Stuff\coding\pyTesting\main.py", line 11, in <module>

user = supabase.auth.sign_up(email=random_email, password=random_password)

File "C:\Users\Camer\AppData\Local\Programs\Python\Python310\lib\site-packages\gotrue\_sync\client.py", line 129, in sign_up

response = self.api.sign_up_with_email(

File "C:\Users\Camer\AppData\Local\Programs\Python\Python310\lib\site-packages\gotrue\_sync\api.py", line 140, in sign_up_with_email

return SessionOrUserModel.parse_response(response)

File "C:\Users\Camer\AppData\Local\Programs\Python\Python310\lib\site-packages\gotrue\types.py", line 26, in parse_response

check_response(response)

File "C:\Users\Camer\AppData\Local\Programs\Python\Python310\lib\site-packages\gotrue\helpers.py", line 18, in check_response

raise APIError.from_dict(response.json())

gotrue.exceptions.APIError

```

|

closed

|

2022-09-27T23:50:54Z

|

2022-10-07T08:35:21Z

|

https://github.com/supabase/supabase-py/issues/280

|

[] |

camnoalive

| 1

|

ipyflow/ipyflow

|

jupyter

| 22

|

add binder launcher

|

See e.g. [here](https://github.com/jtpio/jupyterlab-cell-flash)

|

closed

|

2020-05-11T01:12:44Z

|

2020-05-12T22:54:34Z

|

https://github.com/ipyflow/ipyflow/issues/22

|

[] |

smacke

| 0

|

BayesWitnesses/m2cgen

|

scikit-learn

| 525

|

The converted C model compiles very slowly; how can I fix it?

|

open

|

2022-06-17T07:29:37Z

|

2022-06-17T07:29:37Z

|

https://github.com/BayesWitnesses/m2cgen/issues/525

|

[] |

miszuoer

| 0

|

|

python-visualization/folium

|

data-visualization

| 1,701

|

On click circle radius changes not available

|

**Is your feature request related to a problem? Please describe.**

After adding `folium.Circle` features to the map, I just get circles defined by their radius. When several of them are close together they overlap, and there seems to be no option to switch a circle on or off by clicking the marker it is attached to.

The issue in detail has been raised here:

https://stackoverflow.com/questions/74520790/python-folium-circle-not-working-along-with-popup

and here

https://stackoverflow.com/questions/75096366/python-folium-clickformarker-2-functions-dont-collaborate-with-each-other

**Describe the solution you'd like**

I would like an option to control the appearance of a `folium.Circle` through click events, keeping the circle invisible when the cursor is outside its range.

**Describe alternatives you've considered**

I tried to implement this with a class `Circle(folium.ClickForMarker)`.

It doesn't work correctly: despite the if statement, the circle appears for all cases at once. Moreover, it conflicts with another class, `ClickForOneMarker(folium.ClickForMarker)`, which makes it completely unusable.

**Additional context**

Provided in the links above.

**Implementation**

The implementation should add a new option that enables on-click behavior for the `folium.Circle` feature.

|

closed

|

2023-01-13T09:31:00Z

|

2023-02-17T10:47:52Z

|

https://github.com/python-visualization/folium/issues/1701

|

[] |

Krukarius

| 1

|

CPJKU/madmom

|

numpy

| 175

|

madmom.features.notes module incomplete

|

There should be something like a `NoteOnsetProcessor` to pick the note onsets from a 2-d note activation function. The respective functionality could then be removed from `madmom.features.onsets.PeakPickingProcessor`. Be aware that this processor returns MIDI note numbers right now which is quite counter-intuitive.

Furthermore a `NoteTrackingProcessor` should be created which not only detects the note onsets but also the length (and velocity) of the notes.

|

closed

|

2016-07-20T09:56:45Z

|

2017-03-02T07:42:43Z

|

https://github.com/CPJKU/madmom/issues/175

|

[] |

superbock

| 0

|

vitalik/django-ninja

|

django

| 430

|

[BUG] Schema defaults when using Pagination

|

**The bug**

It seems that when I'm setting default values with Field() Pagination does not work as expected.

In this case: it returns the default values of the schema (Bob, age 21)

**Versions**

- Python version: [3.10]

- Django version: [4.0.4]

- Django-Ninja version: [0.17.0]

**How to reproduce:**

Use the following code snippets or [clone my repo here.](https://github.com/florisgravendeel/DjangoNinjaPaginationBug)

models.py

```python

from django.db import models


class Person(models.Model):
    id = models.AutoField(primary_key=True)
    name = models.CharField(null=False, blank=False, max_length=30)
    age = models.PositiveSmallIntegerField(null=False, blank=False)

    def __str__(self):
        return self.name + ", age: " + str(self.age) + " id: " + str(self.id)

```

api.py

```python

from typing import List
from ninja import Schema, Field, Router
from ninja.pagination import PageNumberPagination, paginate
from DjangoNinjaPaginationBug.models import Person

router = Router()


class PersonOutBugged(Schema):
    id: int = Field(1, alias="Short summary of the variable.")
    name: str = Field("Bob", alias="Second short summary of the variable.")
    age: str = Field(21, alias="Third summary of the variable.")


class PersonOutWorks(Schema):
    id: int
    name: str
    age: str


@router.get("/person-bugged", response=List[PersonOutBugged], tags=["Person"])
@paginate(PageNumberPagination)
def list_persons(request):
    """Retrieves all persons from the database. Bugged. Returns the default values of the schema. """
    return Person.objects.all()


@router.get("/person-works", response=List[PersonOutWorks], tags=["Person"])
@paginate(PageNumberPagination)
def list_persons2(request):
    """Retrieves all persons from the database. This works. """
    return Person.objects.all()

```

Don't forget to add persons to the database (if you're using the code snippets)!

|

closed

|

2022-04-25T14:40:20Z

|

2022-04-25T16:15:07Z

|

https://github.com/vitalik/django-ninja/issues/430

|

[] |

florisgravendeel

| 2

|

ploomber/ploomber

|

jupyter

| 461

|

Document command tester

|

closed

|

2022-01-03T19:02:56Z

|

2022-01-04T04:13:18Z

|

https://github.com/ploomber/ploomber/issues/461

|

[] |

edublancas

| 0

|

|

tqdm/tqdm

|

jupyter

| 855

|

Merge to progressbar2

|

- [x] I have marked all applicable categories:

+ [x] new feature request

- [x] I have visited the [source website], and in particular read the [known issues]

- [x] I have searched through the [issue tracker] for duplicates

[progressbar2](https://github.com/WoLpH/python-progressbar) has a concept of widgets. It allows library user to set up what is to be shown.

I guess it may make sense to merge the 2 projects.

@WoLpH

|

open

|

2019-11-30T07:40:11Z

|

2019-12-02T23:03:56Z

|

https://github.com/tqdm/tqdm/issues/855

|

[

"question/docs ‽",

"p4-enhancement-future 🧨",

"submodule ⊂"

] |

KOLANICH

| 11

|

streamlit/streamlit

|

python

| 10,603

|

Rerun fragment from anywhere, not just from within itself

|

### Checklist

- [x] I have searched the [existing issues](https://github.com/streamlit/streamlit/issues) for similar feature requests.

- [x] I added a descriptive title and summary to this issue.

### Summary

Related to #8511 and #10045.

Currently the only way to rerun a fragment is calling `st.rerun(scope="fragment")` from within itself. Allowing a fragment rerun to be triggered from anywhere (another fragment or the main app) would unlock new powerful use-cases.

### Why?

When a fragment is dependent on another fragment or on a change on the main app, the only current way to reflect that dependency is to rerun the full application whenever a dependency changes.

### How?

I think adding a key to each fragment would allow the user to build his custom logic to manage the dependency chain and take full control on when to run a given fragment:

```python

@st.fragment(key="depends_on_b")
def a():
    st.write(st.session_state.input_for_a)


@st.fragment(key="depends_on_main")
def b():
    st.session_state.input_for_a = ...
    if st.button("Should rerun a"):
        st.rerun(scope="fragment", key="depends_on_b")


if other_condition:
    st.rerun(scope="fragment", key="depends_on_main")

```

This implementation doesn't go the full mile to describe the dependency chain in the fragment's definition and let streamlit handle the rerun logic as suggested in #10045, but provides more flexibility for the user to rerun a fragment from anywhere and under any conditions that fits his use-case

### Additional Context

_No response_

|

open

|

2025-03-03T14:55:41Z

|

2025-03-13T04:44:33Z

|

https://github.com/streamlit/streamlit/issues/10603

|

[

"type:enhancement",

"feature:st.fragment"

] |

Abdelgha-4

| 1

|

ultrafunkamsterdam/undetected-chromedriver

|

automation

| 1,758

|

InvalidArgumentException

|

This is my code:

import undetected_chromedriver as uc

options = uc.ChromeOptions()

driver = uc.Chrome()

if __name__ == '__main__':

    page = driver.get(url='www.google.com')

    print(page.title)

And this is the error message:

python app.py

Traceback (most recent call last):

File "C:\Users\user\Desktop\zalando\farfetch\app.py", line 8, in <module>

page = driver.get(url='www.google.com')

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "C:\Users\user\Desktop\zalando\farfetch\venv\Lib\site-packages\undetected_chromedriver\__init__.py", line 665, in get

return super().get(url)

^^^^^^^^^^^^^^^^

File "C:\Users\user\Desktop\zalando\farfetch\venv\Lib\site-packages\selenium\webdriver\remote\webdriver.py", line 356, in get

self.execute(Command.GET, {"url": url})

File "C:\Users\user\Desktop\zalando\farfetch\venv\Lib\site-packages\selenium\webdriver\remote\webdriver.py", line 347, in execute

self.error_handler.check_response(response)

File "C:\Users\user\Desktop\zalando\farfetch\venv\Lib\site-packages\selenium\webdriver\remote\errorhandler.py", line 229, in check_response

raise exception_class(message, screen, stacktrace)

selenium.common.exceptions.InvalidArgumentException: Message: invalid argument

(Session info: chrome=122.0.6261.58)

Stacktrace:

GetHandleVerifier [0x00BE8CD3+51395]

(No symbol) [0x00B55F31]

(No symbol) [0x00A0E004]

(No symbol) [0x009FF161]

(No symbol) [0x009FD9DE]

(No symbol) [0x009FE0AB]

(No symbol) [0x00A10258]

(No symbol) [0x00A7AC91]

(No symbol) [0x00A63E8C]

(No symbol) [0x00A7A570]

(No symbol) [0x00A63C26]

(No symbol) [0x00A3C629]

(No symbol) [0x00A3D40D]

GetHandleVerifier [0x00F66493+3711107]

GetHandleVerifier [0x00FA587A+3970154]

GetHandleVerifier [0x00FA0B68+3950424]

GetHandleVerifier [0x00C99CD9+776393]

(No symbol) [0x00B61704]

(No symbol) [0x00B5C5E8]

(No symbol) [0x00B5C799]

(No symbol) [0x00B4DDC0]

BaseThreadInitThunk [0x76CC00F9+25]

RtlGetAppContainerNamedObjectPath [0x77B47BBE+286]

RtlGetAppContainerNamedObjectPath [0x77B47B8E+238]

Exception ignored in: <function Chrome.__del__ at 0x0000027EF89C8540>

Traceback (most recent call last):

File "C:\Users\user\Desktop\zalando\farfetch\venv\Lib\site-packages\undetected_chromedriver\__init__.py", line 843, in __del__

File "C:\Users\user\Desktop\zalando\farfetch\venv\Lib\site-packages\undetected_chromedriver\__init__.py", line 798, in quit

OSError: [WinError 6] The handle is invalid

How can I solve this?
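
One likely cause, offered as an assumption rather than a confirmed diagnosis: WebDriver navigation expects an absolute URL including the scheme, and `'www.google.com'` has none, which is consistent with the `invalid argument` message. A small helper that adds a scheme when it is missing could look like this (`ensure_scheme` and the `https` default are hypothetical choices, not part of undetected-chromedriver or Selenium):

```python
from urllib.parse import urlsplit


def ensure_scheme(url, default_scheme="https"):
    """Return url unchanged if it already has an http(s) scheme, else prefix one."""
    if urlsplit(url).scheme in ("http", "https"):
        return url
    return f"{default_scheme}://{url.lstrip('/')}"
```

With this helper the call would become `driver.get(ensure_scheme('www.google.com'))`. Note also that `driver.get()` returns `None`, so the title would be read from `driver.title` rather than from the return value.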

|

open

|

2024-02-23T08:04:44Z

|

2024-02-23T08:05:20Z

|

https://github.com/ultrafunkamsterdam/undetected-chromedriver/issues/1758

|

[] |

idyweb

| 0

|

mirumee/ariadne-codegen

|

graphql

| 30

|

Handle fragments in provided queries

|

Given example schema:

```gql

schema {
  query: Query
}

type Query {
  testQuery: ResultType
}

type ResultType {
  field1: Int!
  field2: String!
  field3: Boolean!
}

```

and query with fragment:

```gql

query QueryName {
  testQuery {
    ...TestFragment
  }
}

fragment TestFragment on ResultType {
  field1
  field2
}

```

`graphql-sdk-gen` for now raises `KeyError: 'TestFragment'`, but should generate `query_name.py` file with model definition:

```python

class QueryNameResultType(BaseModel):
    field1: int
    field2: str

```

|

closed

|

2022-11-15T10:22:17Z

|

2022-11-17T07:35:52Z

|

https://github.com/mirumee/ariadne-codegen/issues/30

|

[] |

mat-sop

| 0

|

dnouri/nolearn

|

scikit-learn

| 191

|

Update Version of Lasagne Required in requirements.txt

|

closed

|

2016-01-13T18:19:13Z

|

2016-01-14T21:03:43Z

|

https://github.com/dnouri/nolearn/issues/191

|

[] |

cancan101

| 0

|

|

python-visualization/folium

|

data-visualization

| 1,384

|

search plugin display multiple places

|

**Describe the issue**

I display places where certain historic persons appear at different places on a stamen map. They are coded in geojson. Search works fine: it shows found places in search combo box and I after selecting one value it zooms to that place on the map.

My question:

Is it possible after search to display not only one found place, but all places where the name was found?

cheers

Uli

|

closed

|

2020-08-30T10:49:38Z

|

2022-11-28T12:36:10Z

|

https://github.com/python-visualization/folium/issues/1384

|

[] |

uli22

| 1

|

ContextLab/hypertools

|

data-visualization

| 51

|

demo scripts: naming and paths

|

all of the demo scripts have names that start with `hypertools_demo-`, which is redundant. i propose removing `hypertools_demo-` from each script name.

also, some of the paths are relative rather than absolute. for example, the `sample_data` folder is only visible within the `examples` folder, but it is referenced using relative paths. this causes some of the demo functions (e.g. `hypertools_demo-align.py`) to fail. references to `sample_data` could be changed as follows (from within any scripts that reference data in `sample_data`):

`import os`

`datadir = os.path.join(os.path.dirname(os.path.realpath(__file__)), 'sample_data')`
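
A minimal sketch of the path handling, assuming `sample_data` sits in the same folder as the demo script. The path has to be built from the script's directory, not from the script file itself; the helper name `sample_data_dir` is hypothetical.

```python
import os


def sample_data_dir(script_path):
    """Return the sample_data folder that sits next to the given script."""
    script_dir = os.path.dirname(os.path.realpath(script_path))
    return os.path.join(script_dir, "sample_data")
```

Inside a demo script this would be called as `datadir = sample_data_dir(__file__)`, which gives the same result regardless of the current working directory.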

|

closed

|

2017-01-02T23:27:43Z

|

2017-01-03T02:55:08Z

|

https://github.com/ContextLab/hypertools/issues/51

|

[

"bug"

] |

jeremymanning

| 2

|

aiortc/aiortc

|

asyncio

| 1,102

|

Connection(0) ICE failed when using mobile Internet. Connection(0) ICE completed in local network.

|

I use the latest (1.8.0) version of aiortc. The "server" example (aiortc/tree/main/examples/server) works well on a local network and with mobile internet. My project uses django-channels for "offer/answer" message exchange, and a connection establishes perfectly when I use a local network (both computers on the same Wi-Fi network). However, when I try to connect to the server from my smartphone (Android 12, Chrome browser) using a mobile network, the output waits for several seconds and then shows "Connection(0) ICE failed".

I examined the source code of "venv311/Lib/site-packages/aioice/ice.py" and found that the initial list of Pairs (self._check_list) is extended by this call: self.check_incoming(message, addr, protocol) - line 1062 in the request_received() method of the Connection class.

It's a crucial part because it allows the server to know the dynamic IP address of the remote client.

For the "server" example and my local setup, it works well, and the remote peer sends the needed request, triggering request_received() but for mobile internet request_received() is not called.

Could you suggest any ideas about what I might have done wrong? Or maybe you can suggest things which impact this situation.

Thanks in advance

|

closed

|

2024-05-23T12:25:46Z

|

2024-05-29T22:20:35Z

|

https://github.com/aiortc/aiortc/issues/1102

|

[] |

Aroxed

| 0

|

CTFd/CTFd

|

flask

| 2,103

|

backport core theme for compatibility with new plugins

|

While updating our plugins for compatibility with the theme currently in development, we found it would be beneficial to move certain functions from the plugins to ctfd.js. This works fine with the new theme; however, the changes need to be backported to the current core theme for compatibility.

|

closed

|

2022-04-28T11:36:34Z

|

2022-04-29T04:17:12Z

|

https://github.com/CTFd/CTFd/issues/2103

|

[] |

MilyMilo

| 0

|

AirtestProject/Airtest

|

automation

| 706

|

Redmi 8A: JAVACAP screenshot is incorrect

|

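**(Important! Issue category)**

* Image recognition and device control issues

**Describe the bug**

In every landscape app, the image returned by JAVACAP has the correct orientation but the wrong dimensions, as if a sponge lying on its side had been stuffed into an upright box.

The image returned by JAVACAP is compressed and encoded, and the encoded image itself is already wrong.

**Screenshots**

**Steps to reproduce**

The image is already displayed incorrectly in the IDE. Simplified, the reproduction is the following code: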

```python

from airtest.core.android.android import Android

from airtest.core.android.constant import CAP_METHOD,ORI_METHOD

a=Android(cap_method=CAP_METHOD.JAVACAP,ori_method=ORI_METHOD.ADB)

import cv2

cv2.imshow('',a.snapshot())

```

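**Expected behavior**

Using MINICAP raises an error; using ADB produces a correct screenshot.

**Python version:**

Python 3.7

**Airtest version:**

1.1.3

**Device:**

- Model: Redmi 8A

- OS: MIUI (11.0.7) (Android 9)

**Other relevant environment information**

Windows 10 1909, Python 3.7, Airtest 1.1.3, adb 1.0.40, Android.sdk_version=28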

|

closed

|

2020-03-16T06:50:22Z

|

2020-07-07T08:11:09Z

|

https://github.com/AirtestProject/Airtest/issues/706

|

[

"to be released"

] |

hgjazhgj

| 8

|

mwaskom/seaborn

|

pandas

| 3,591

|

Heatmap does not display all entries

|

Hi all,

I have an issue with my heatmap.

I generated a dataframe with 30k columns and set about 2k of the values to non-NaN values (some may be duplicate hits, but that is beside the point). The values I fill the dataframe with lie between 0 and 1 and tell the function how to color each sample.

When I plot this, only a small number of hits are displayed, and I wonder why.

In my real case even less is shown (more rows, fewer non-NaN values) than in this example.

Am I doing something wrong here?

python=3.12; seaborn=0.12; matplotlib=3.8.2; pandas=2.1.4 (Ubuntu=22.04)

```

import seaborn as sns
import matplotlib.pyplot as plt
import matplotlib as mpl
import numpy as np
import os
import pandas as pd

new_dict = {}
for k in ["a", "b", "c", "d"]:
    v = {str(idx): None for idx in range(30000)}
    rand_ints = [np.random.randint(low=0, high=30000) for i in range(2000)]
    for v_hits in rand_ints:
        v[str(v_hits)] = v_hits/30000
    new_dict[k] = v

df_heatmap_hits = pd.DataFrame(new_dict).transpose()
sns.heatmap(df_heatmap_hits)
plt.show()

```
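
A likely explanation, stated as an assumption rather than a confirmed diagnosis: 30,000 columns are drawn into a figure that is only some hundreds of pixels wide, so most single-cell hits are narrower than one pixel and can vanish during rendering. One workaround is to aggregate neighbouring columns into bins before plotting. A dependency-free sketch of that aggregation (`bin_max` and the bin count of 300 are hypothetical choices):

```python
def bin_max(row, n_bins):
    """Collapse a row into at most n_bins cells, keeping the largest non-missing value per bin."""
    size = -(-len(row) // n_bins)  # ceiling division: columns per bin
    binned = []
    for start in range(0, len(row), size):
        hits = [x for x in row[start:start + size] if x is not None]
        binned.append(max(hits) if hits else None)
    return binned


# One row with two neighbouring hits among 30,000 mostly empty columns.
row = [None] * 30000
row[12345] = 0.4
row[12346] = 0.9
binned = bin_max(row, 300)
```

Each bin now covers 100 original columns, so a single hit occupies a cell wide enough to be drawn. The price is that hits falling into the same bin are merged into one value.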

|

closed

|

2023-12-11T11:45:03Z

|

2023-12-11T16:34:24Z

|

https://github.com/mwaskom/seaborn/issues/3591

|

[] |

dansteiert

| 5

|

sqlalchemy/sqlalchemy

|

sqlalchemy

| 12,441

|

Remove features deprecated in 1.3 and earlier

|

Companion to #12437 that covers the other deprecated features

|

closed

|

2025-03-17T20:01:01Z

|

2025-03-18T16:12:31Z

|

https://github.com/sqlalchemy/sqlalchemy/issues/12441

|

[

"deprecations"

] |

CaselIT

| 1

|

3b1b/manim

|

python

| 1,167

|

[Hiring] Need help creating animation

|

Hello,

I have a simple project which requires a simple animation, and I thought manim would be perfect. Since there is a time constraint, I won't be able to create the animation myself. Would anyone be willing to do it for hire?

|

closed

|

2020-07-14T17:59:48Z

|

2020-08-18T03:44:19Z

|

https://github.com/3b1b/manim/issues/1167

|

[] |

OGALI

| 3

|

ResidentMario/geoplot

|

matplotlib

| 69

|

Failing voronoi example with the new 0.2.2 release

|

The geoplot release seems to have broken the geopandas examples (the voronoi one). I am getting the following error on our readthedocs build:

```

Unexpected failing examples:

/home/docs/checkouts/readthedocs.org/user_builds/geopandas/checkouts/latest/examples/plotting_with_geoplot.py failed leaving traceback:

Traceback (most recent call last):

File "/home/docs/checkouts/readthedocs.org/user_builds/geopandas/checkouts/latest/examples/plotting_with_geoplot.py", line 80, in <module>

linewidth=0)

File "/home/docs/checkouts/readthedocs.org/user_builds/geopandas/conda/latest/lib/python3.6/site-packages/geoplot/geoplot.py", line 2133, in voronoi

geoms = _build_voronoi_polygons(df)

File "/home/docs/checkouts/readthedocs.org/user_builds/geopandas/conda/latest/lib/python3.6/site-packages/geoplot/geoplot.py", line 2687, in _build_voronoi_polygons

ls = np.vstack([np.asarray(infinite_segments), np.asarray(finite_segments)])

File "/home/docs/checkouts/readthedocs.org/user_builds/geopandas/conda/latest/lib/python3.6/site-packages/numpy/core/shape_base.py", line 234, in vstack

return _nx.concatenate([atleast_2d(_m) for _m in tup], 0)

ValueError: all the input arrays must have same number of dimensions

```

|

closed

|

2019-01-07T14:10:45Z

|

2019-03-17T04:24:29Z

|

https://github.com/ResidentMario/geoplot/issues/69

|

[] |

jorisvandenbossche

| 12

|

CorentinJ/Real-Time-Voice-Cloning

|

python

| 394

|

Is there any way to change the language?

|

Hey, I have successfully installed it, and I just wanted to ask if there is any way to change the pronunciation language to German?

|

closed

|

2020-07-02T10:16:08Z

|

2020-07-04T15:03:11Z

|

https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/394

|

[] |

ozanaaslan

| 2

|

WZMIAOMIAO/deep-learning-for-image-processing

|

deep-learning

| 572

|

Question about training MobileNetV3

|

Hello, when I train on a custom dataset with MobileNetV3, calling

`net = MobileNetV3(num_classes=16)`

raises the following error:

> TypeError: __init__() missing 2 required positional arguments: 'inverted_residual_setting' and 'last_channel'

How can I solve this? Thanks.
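
The error message says the constructor expects two further positional arguments, which suggests the class is meant to be built through a helper that supplies them. A minimal, framework-free sketch of that pattern follows; the class body, `build_small`, and the configuration values are hypothetical and do not reproduce the repository's code.

```python
class MobileNetV3:
    """Toy stand-in with the same required constructor arguments as in the error."""

    def __init__(self, inverted_residual_setting, last_channel, num_classes=1000):
        self.inverted_residual_setting = inverted_residual_setting
        self.last_channel = last_channel
        self.num_classes = num_classes


def build_small(num_classes=1000):
    """Hypothetical factory that fills in the architecture-specific arguments."""
    setting = [(16, 3, 16), (16, 3, 24)]  # placeholder block configuration
    return MobileNetV3(setting, last_channel=1024, num_classes=num_classes)


try:
    MobileNetV3(num_classes=16)  # same call as in the report
except TypeError as exc:
    error = str(exc)  # names the two missing arguments

net = build_small(num_classes=16)
```

If the repository's model file provides helper functions of this kind, calling one of them with `num_classes=16` would be the analogous fix; the file itself is the place to check for their actual names.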

|

closed

|

2022-06-13T06:52:35Z

|

2022-06-15T02:38:15Z

|

https://github.com/WZMIAOMIAO/deep-learning-for-image-processing/issues/572

|

[] |

myfeet2cold

| 1

|

dmlc/gluon-cv

|

computer-vision

| 1,032

|

NMS code question

|

I am new to gluon-cv and want to know how NMS is implemented in the SSD model.

Can anyone tell me where the code is? I see there is a TVM conversion tutorial for gluon-cv SSD models that includes NMS and post-processing operations, which can be converted to a TVM model directly. I want to know how it works so that I can use it to convert a PyTorch detection model to TVM.

Thank you very much.

Best,

Edward
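
For orientation, greedy non-maximum suppression can be sketched in a few lines of plain Python. This is a generic illustration of the algorithm, not gluon-cv's implementation; the box format `(x1, y1, x2, y2)`, the function names, and the 0.5 threshold are assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

Here the second box overlaps the first with an IoU of about 0.68 and is suppressed, while the third box does not overlap and is kept. Production implementations usually run per class and in vectorized form on the GPU.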

|

closed

|

2019-11-07T09:03:23Z

|

2019-12-19T06:49:49Z

|

https://github.com/dmlc/gluon-cv/issues/1032

|

[] |

Edwardmark

| 3

|

InstaPy/InstaPy

|

automation

| 6,452

|

Ubuntu server // Hide Selenium Extension: error

|

I use a Droplet on DigitalOcean _(Ubuntu 20.04 (LTS) x64)_.

When I start my quickstart.py, it fails with "Hide Selenium Extension: error". I haven't found a solution so far. Can somebody help me?

> InstaPy Version: 0.6.15

._. ._. ._. ._. ._. ._. ._.

Workspace in use: "/root/InstaPy"

OOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO

INFO [2022-01-03 23:29:27] [xxxxx] Session started!

oooooooooooooooooooooooooooooooooooooooooooooooooooooo

INFO [2022-01-03 23:29:27] [xxxxx] -- Connection Checklist [1/2] (Internet Connection Status)

INFO [2022-01-03 23:29:28] [xxxxx] - Internet Connection Status: ok

INFO [2022-01-03 23:29:28] [xxxxx] - Current IP is "64.227.120.175" and it's from "Germany/DE"

INFO [2022-01-03 23:29:28] [xxxxx] -- Connection Checklist [2/2] (Hide Selenium Extension)

INFO [2022-01-03 23:29:28] [xxxxx] - window.navigator.webdriver response: True

WARNING [2022-01-03 23:29:28] [xxxxx] - Hide Selenium Extension: error

INFO [2022-01-03 23:29:31] [xxxxx] - Cookie file not found, creating cookie...

WARNING [2022-01-03 23:29:45] [xxxxx] Login A/B test detected! Trying another string...

WARNING [2022-01-03 23:29:50] [xxxxx] Could not pass the login A/B test. Trying last string...

......................................................................................................................

CRITICAL [2022-01-03 23:30:24] [xxxxx] Unable to login to Instagram! You will find more information in the logs above.

''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''''

INFO [2022-01-03 23:30:25] [xxxxx] Sessional Live Report:

|> No any statistics to show

[Session lasted 1.04 minutes]

OOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO

INFO [2022-01-03 23:30:25] [xxxxx] Session ended!

ooooooooooooooooooooooooooooooooooooooooooooooooooooo

|

closed

|

2022-01-03T23:37:58Z

|

2022-02-01T14:54:18Z

|

https://github.com/InstaPy/InstaPy/issues/6452

|

[] |

celestinsoum

| 8

|

modin-project/modin

|

data-science

| 7,409

|

BUG: can't use list of tuples to select multiple columns when columns are multiindex

|

### Modin version checks

- [X] I have checked that this issue has not already been reported.

- [X] I have confirmed this bug exists on the latest released version of Modin.

- [X] I have confirmed this bug exists on the main branch of Modin. (In order to do this you can follow [this guide](https://modin.readthedocs.io/en/stable/getting_started/installation.html#installing-from-the-github-main-branch).)

### Reproducible Example

```python

import modin.pandas as mpd  # assumed alias; the report uses `mpd` without showing the import

data = {
    "ix": [1, 2, 1, 1, 2, 2],
    "iy": [1, 2, 2, 1, 2, 1],
    "col": ["b", "b", "a", "a", "a", "a"],
    "col_b": ["x", "y", "x", "y", "x", "y"],
    "foo": [7, 1, 0, 1, 2, 2],
    "bar": [9, 4, 0, 2, 0, 0],
}

pivot_modin = mpd.DataFrame(data).pivot_table(
    values=['foo'],
    index=['ix'],
    columns=['col'],
    aggfunc='min',
    margins=False,
    observed=True,
)

pivot_modin.loc[:, [('foo', 'b')]]

```

### Issue Description

this raises

```

KeyError Traceback (most recent call last)

File /opt/conda/lib/python3.10/site-packages/pandas/core/indexes/base.py:3805, in Index.get_loc(self, key)

3804 try:

-> 3805 return self._engine.get_loc(casted_key)

3806 except KeyError as err:

File index.pyx:167, in pandas._libs.index.IndexEngine.get_loc()

File index.pyx:196, in pandas._libs.index.IndexEngine.get_loc()

File pandas/_libs/hashtable_class_helper.pxi:7081, in pandas._libs.hashtable.PyObjectHashTable.get_item()

File pandas/_libs/hashtable_class_helper.pxi:7089, in pandas._libs.hashtable.PyObjectHashTable.get_item()

KeyError: ('foo', 'b')

The above exception was the direct cause of the following exception:

KeyError Traceback (most recent call last)

File /opt/conda/lib/python3.10/site-packages/pandas/core/indexes/multi.py:3499, in MultiIndex.get_locs(self, seq)

3498 try:

-> 3499 lvl_indexer = self._get_level_indexer(k, level=i, indexer=indexer)

3500 except (InvalidIndexError, TypeError, KeyError) as err:

3501 # InvalidIndexError e.g. non-hashable, fall back to treating

3502 # this as a sequence of labels

3503 # KeyError it can be ambiguous if this is a label or sequence

3504 # of labels

3505 # github.com/pandas-dev/pandas/issues/39424#issuecomment-871626708

File /opt/conda/lib/python3.10/site-packages/pandas/core/indexes/multi.py:3391, in MultiIndex._get_level_indexer(self, key, level, indexer)

3390 else:

-> 3391 idx = self._get_loc_single_level_index(level_index, key)

3393 if level > 0 or self._lexsort_depth == 0:

3394 # Desired level is not sorted

File /opt/conda/lib/python3.10/site-packages/pandas/core/indexes/multi.py:2980, in MultiIndex._get_loc_single_level_index(self, level_index, key)

2979 else:

-> 2980 return level_index.get_loc(key)

File /opt/conda/lib/python3.10/site-packages/pandas/core/indexes/base.py:3812, in Index.get_loc(self, key)

3811 raise InvalidIndexError(key)

-> 3812 raise KeyError(key) from err

3813 except TypeError:

3814 # If we have a listlike key, _check_indexing_error will raise

3815 # InvalidIndexError. Otherwise we fall through and re-raise

3816 # the TypeError.

KeyError: ('foo', 'b')

During handling of the above exception, another exception occurred:

KeyError Traceback (most recent call last)

File /opt/conda/lib/python3.10/site-packages/pandas/core/indexes/base.py:3805, in Index.get_loc(self, key)

3804 try:

-> 3805 return self._engine.get_loc(casted_key)

3806 except KeyError as err:

File index.pyx:167, in pandas._libs.index.IndexEngine.get_loc()

File index.pyx:196, in pandas._libs.index.IndexEngine.get_loc()

File pandas/_libs/hashtable_class_helper.pxi:7081, in pandas._libs.hashtable.PyObjectHashTable.get_item()

File pandas/_libs/hashtable_class_helper.pxi:7089, in pandas._libs.hashtable.PyObjectHashTable.get_item()

KeyError: 'b'

The above exception was the direct cause of the following exception:

KeyError Traceback (most recent call last)

Cell In[17], line 1

----> 1 pivot_modin.loc[:, [('foo', 'b')]]

File /opt/conda/lib/python3.10/site-packages/modin/logging/logger_decorator.py:144, in enable_logging.<locals>.decorator.<locals>.run_and_log(*args, **kwargs)

129 """

130 Compute function with logging if Modin logging is enabled.

131

(...)

141 Any

142 """

143 if LogMode.get() == "disable":

--> 144 return obj(*args, **kwargs)

146 logger = get_logger()

147 logger.log(log_level, start_line)

File /opt/conda/lib/python3.10/site-packages/modin/pandas/indexing.py:666, in _LocIndexer.__getitem__(self, key)

664 except KeyError:

665 pass

--> 666 return self._helper_for__getitem__(

667 key, *self._parse_row_and_column_locators(key)

668 )

File /opt/conda/lib/python3.10/site-packages/modin/logging/logger_decorator.py:144, in enable_logging.<locals>.decorator.<locals>.run_and_log(*args, **kwargs)

129 """

130 Compute function with logging if Modin logging is enabled.

131

(...)

141 Any

142 """

143 if LogMode.get() == "disable":

--> 144 return obj(*args, **kwargs)

146 logger = get_logger()

147 logger.log(log_level, start_line)

File /opt/conda/lib/python3.10/site-packages/modin/pandas/indexing.py:712, in _LocIndexer._helper_for__getitem__(self, key, row_loc, col_loc, ndim)

709 if isinstance(row_loc, Series) and is_boolean_array(row_loc):

710 return self._handle_boolean_masking(row_loc, col_loc)

--> 712 qc_view = self.qc.take_2d_labels(row_loc, col_loc)

713 result = self._get_pandas_object_from_qc_view(

714 qc_view,

715 row_multiindex_full_lookup,

(...)

719 ndim,

720 )

722 if isinstance(result, Series):

File /opt/conda/lib/python3.10/site-packages/modin/core/storage_formats/pandas/query_compiler_caster.py:157, in apply_argument_cast.<locals>.cast_args(*args, **kwargs)

155 kwargs = cast_nested_args_to_current_qc_type(kwargs, current_qc)

156 args = cast_nested_args_to_current_qc_type(args, current_qc)

--> 157 return obj(*args, **kwargs)

File /opt/conda/lib/python3.10/site-packages/modin/logging/logger_decorator.py:144, in enable_logging.<locals>.decorator.<locals>.run_and_log(*args, **kwargs)

129 """

130 Compute function with logging if Modin logging is enabled.

131

(...)

141 Any

142 """

143 if LogMode.get() == "disable":

--> 144 return obj(*args, **kwargs)

146 logger = get_logger()

147 logger.log(log_level, start_line)

File /opt/conda/lib/python3.10/site-packages/modin/core/storage_formats/base/query_compiler.py:4217, in BaseQueryCompiler.take_2d_labels(self, index, columns)

4197 def take_2d_labels(

4198 self,

4199 index,

4200 columns,

4201 ):

4202 """

4203 Take the given labels.

4204

(...)

4215 Subset of this QueryCompiler.

4216 """

-> 4217 row_lookup, col_lookup = self.get_positions_from_labels(index, columns)

4218 if isinstance(row_lookup, slice):

4219 ErrorMessage.catch_bugs_and_request_email(

4220 failure_condition=row_lookup != slice(None),

4221 extra_log=f"Only None-slices are acceptable as a slice argument in masking, got: {row_lookup}",

4222 )

File /opt/conda/lib/python3.10/site-packages/modin/core/storage_formats/pandas/query_compiler_caster.py:157, in apply_argument_cast.<locals>.cast_args(*args, **kwargs)

155 kwargs = cast_nested_args_to_current_qc_type(kwargs, current_qc)

156 args = cast_nested_args_to_current_qc_type(args, current_qc)

--> 157 return obj(*args, **kwargs)

File /opt/conda/lib/python3.10/site-packages/modin/logging/logger_decorator.py:144, in enable_logging.<locals>.decorator.<locals>.run_and_log(*args, **kwargs)

129 """

130 Compute function with logging if Modin logging is enabled.

131

(...)

141 Any

142 """

143 if LogMode.get() == "disable":

--> 144 return obj(*args, **kwargs)

146 logger = get_logger()

147 logger.log(log_level, start_line)

File /opt/conda/lib/python3.10/site-packages/modin/core/storage_formats/base/query_compiler.py:4314, in BaseQueryCompiler.get_positions_from_labels(self, row_loc, col_loc)

4312 axis_lookup = self.get_axis(axis).get_indexer_for(axis_loc)

4313 else:

-> 4314 axis_lookup = self.get_axis(axis).get_locs(axis_loc)

4315 elif is_boolean_array(axis_loc):

4316 axis_lookup = boolean_mask_to_numeric(axis_loc)

File /opt/conda/lib/python3.10/site-packages/pandas/core/indexes/multi.py:3513, in MultiIndex.get_locs(self, seq)

3509 raise err

3510 # GH 39424: Ignore not founds

3511 # GH 42351: No longer ignore not founds & enforced in 2.0

3512 # TODO: how to handle IntervalIndex level? (no test cases)

-> 3513 item_indexer = self._get_level_indexer(

3514 x, level=i, indexer=indexer

3515 )

3516 if lvl_indexer is None:

3517 lvl_indexer = _to_bool_indexer(item_indexer)

File /opt/conda/lib/python3.10/site-packages/pandas/core/indexes/multi.py:3391, in MultiIndex._get_level_indexer(self, key, level, indexer)

3388 return slice(i, j, step)

3390 else:

-> 3391 idx = self._get_loc_single_level_index(level_index, key)

3393 if level > 0 or self._lexsort_depth == 0:

3394 # Desired level is not sorted

3395 if isinstance(idx, slice):

3396 # test_get_loc_partial_timestamp_multiindex

File /opt/conda/lib/python3.10/site-packages/pandas/core/indexes/multi.py:2980, in MultiIndex._get_loc_single_level_index(self, level_index, key)

2978 return -1

2979 else:

-> 2980 return level_index.get_loc(key)

File /opt/conda/lib/python3.10/site-packages/pandas/core/indexes/base.py:3812, in Index.get_loc(self, key)

3807 if isinstance(casted_key, slice) or (

3808 isinstance(casted_key, abc.Iterable)

3809 and any(isinstance(x, slice) for x in casted_key)

3810 ):

3811 raise InvalidIndexError(key)

-> 3812 raise KeyError(key) from err

3813 except TypeError:

3814 # If we have a listlike key, _check_indexing_error will raise

3815 # InvalidIndexError. Otherwise we fall through and re-raise

3816 # the TypeError.

3817 self._check_indexing_error(key)

KeyError: 'b'

```

### Expected Behavior

What pandas does:

```

ix

1 7

2 1

Name: (foo, b), dtype: int64

```


### Installed Versions

<details>

INSTALLED VERSIONS

------------------

commit : 3e951a63084a9cbfd5e73f6f36653ee12d2a2bfa

python : 3.10.14

python-bits : 64

OS : Linux

OS-release : 5.15.154+

Version : #1 SMP Thu Jun 27 20:43:36 UTC 2024

machine : x86_64

processor : x86_64

byteorder : little

LC_ALL : POSIX

LANG : C.UTF-8

LOCALE : None.None

Modin dependencies

------------------

modin : 0.32.0

ray : 2.24.0

dask : 2024.9.1

distributed : None

pandas dependencies

-------------------

pandas : 2.2.3

numpy : 1.26.4

pytz : 2024.1

dateutil : 2.9.0.post0

pip : 24.0

Cython : 3.0.10

sphinx : None

IPython : 8.21.0

adbc-driver-postgresql: None

adbc-driver-sqlite : None

bs4 : 4.12.3

blosc : None

bottleneck : None

dataframe-api-compat : None

fastparquet : None

fsspec : 2024.6.1

html5lib : 1.1

hypothesis : None

gcsfs : 2024.6.1

jinja2 : 3.1.4

lxml.etree : 5.3.0

matplotlib : 3.7.5

numba : 0.60.0

numexpr : 2.10.1

odfpy : None

openpyxl : 3.1.5

pandas_gbq : None

psycopg2 : None

pymysql : None

pyarrow : 17.0.0

pyreadstat : None

pytest : 8.3.3

python-calamine : None

pyxlsb : None

s3fs : 2024.6.1

scipy : 1.14.1

sqlalchemy : 2.0.30

tables : 3.10.1

tabulate : 0.9.0

xarray : 2024.9.0

xlrd : None

xlsxwriter : None

zstandard : 0.23.0

tzdata : 2024.1

qtpy : None

pyqt5 : None

</details>

|

open

|

2024-11-13T08:15:15Z

|

2024-11-13T11:17:32Z

|

https://github.com/modin-project/modin/issues/7409

|

[

"bug 🦗",

"Triage 🩹"

] |

MarcoGorelli

| 1

|

recommenders-team/recommenders

|

deep-learning

| 1,813

|

[ASK] Recommendation algorithms leveraging user attributes as input

|

Hi Recommenders,

Thank you for the interesting repository. I came across the `recommenders` repository very recently and am still exploring its algorithms. I am particularly interested in recommendation algorithms that take user attributes (e.g., gender, age, occupation) as input.

### Description


In my experiments, I am exploring the impact of user attributes as side information for recommender system algorithms, so I am looking for the algorithms in `recommenders` that support this. Could you please point me to all algorithms that take user attributes as input?

Best,

_Manel._

### Comments

Please note that I have already tried factorization machines.

|

closed

|

2022-08-18T14:36:28Z

|

2022-10-19T07:58:51Z

|

https://github.com/recommenders-team/recommenders/issues/1813

|

[

"help wanted"

] |

SlokomManel

| 1

|

wandb/wandb

|

data-science

| 9,046

|

[Feature]: Better grouping of runs

|

### Description

Hi,

I would like to suggest an option for grouping runs more flexibly.

Suppose we have 3 methods that share some common (hyper)parameters, such as LR and WD, but also have method-specific parameters, such as attr_A (values B, C, ...), attr_0 (values 1, 2, 3, ...), and attr_i (values ii, iii, iv, ...).

The problem is to compare runs not only within the group for a specific method, but also globally.

### Suggested Solution

One idea for comparing runs both within a selected method and holistically is to group by method, LR, WD, attr_A, attr_0, and attr_i; where a method does not accept (or is independent of) some attribute, that attribute is simply null.

This currently does not work in wandb.

So this feature would be related to https://github.com/wandb/wandb/issues/6460
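To make the "null" grouping idea concrete, here is a minimal sketch in plain Python. It does not use the wandb API at all; the run dictionaries, the `GROUP_KEYS` tuple, and the `group_runs` helper are made up for illustration, with key names borrowed from the description above.

```python
from collections import defaultdict

# Hypothetical run configs; keys follow the names used in this issue.
runs = [
    {"name": "run-1", "method": "A", "LR": 0.1, "WD": 0.0, "attr_A": "B"},
    {"name": "run-2", "method": "A", "LR": 0.1, "WD": 0.0, "attr_A": "C"},
    {"name": "run-3", "method": "0", "LR": 0.1, "WD": 0.0, "attr_0": 1},
    {"name": "run-4", "method": "0", "LR": 0.1, "WD": 0.0, "attr_0": 1},
]

# Every attribute that any method may define.
GROUP_KEYS = ("method", "LR", "WD", "attr_A", "attr_0", "attr_i")


def group_runs(runs, keys=GROUP_KEYS):
    """Group run names by `keys`, using None for attributes a run lacks."""
    groups = defaultdict(list)
    for run in runs:
        # dict.get returns None for a missing key: the "null" placeholder.
        group_key = tuple(run.get(key) for key in keys)
        groups[group_key].append(run["name"])
    return dict(groups)


grouped = group_runs(runs)
for group_key, names in grouped.items():
    print(group_key, names)
```

Runs from different methods end up in one flat grouping, and attributes that a method does not define simply show up as `None` in the group key.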

Another, more general idea would be to allow grouping in a tree-like manner.

| LR

| WD

| method

|->attr_A

| |-> attr_AA

|->attr_B

|-> attr_C

etc.

Another way to handle this situation (a project containing runs from different methods) is to allow multiple grouping configurations to be saved, just as with the filter field. This would at least allow quickly comparing runs within the same family of approaches, without regrouping every time we switch between a method with attr_A and a method with attr_0.

However, many users would be happy with just the "null" solution. Many thanks.

|

closed

|

2024-12-09T00:31:19Z

|

2024-12-14T00:22:10Z

|

https://github.com/wandb/wandb/issues/9046

|

[

"ty:feature",

"a:app"

] |

matekrk

| 3

|

0xTheProDev/fastapi-clean-example

|

pydantic

| 6

|

question on service-to-service

|

Is it an issue that we expose models directly? For example, in BookService we expose a model in the get method, and we also use models from the Author repository.

This implies that we are allowed to use those interfaces: CRUD, reading all properties, calling other relations, etc.

Would exposing schema objects between domains be a better solution?

|

closed

|

2023-02-13T10:09:41Z

|

2023-04-25T07:10:49Z

|

https://github.com/0xTheProDev/fastapi-clean-example/issues/6

|

[] |

Murtagy

| 1

|

kensho-technologies/graphql-compiler

|

graphql

| 698

|

register_macro_edge builds a schema for macro definitions every time it's called

|

`register_macro_edge` calls `make_macro_edge_descriptor` which calls `get_and_validate_macro_edge_info` which calls `_validate_ast_with_builtin_graphql_validation` which calls `get_schema_for_macro_edge_definitions` which builds the macro edge definition schema. Building the macro edge definition schema involves copying the entire original schema so we probably want to avoid doing this every time we register a macro edge.

We should probably make MacroRegistry a dataclass with a `__post_init__` method that creates the schema for macro edge definitions once.
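A rough sketch of that idea, using simplified stand-ins rather than the real graphql-compiler code: the schema is modelled as a plain dict, and `build_macro_definition_schema`, `SCHEMA_BUILDS`, and the field names below are hypothetical. It only shows that building the derived schema in the dataclass initialization hook makes the expensive copy happen once per registry, not once per registration.

```python
from dataclasses import dataclass, field
from typing import Any, Dict

# Counter used only to show how often the expensive build runs.
SCHEMA_BUILDS = {"count": 0}


def build_macro_definition_schema(base_schema: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical stand-in for the costly copy of the original schema."""
    SCHEMA_BUILDS["count"] += 1
    return dict(base_schema)


@dataclass
class MacroRegistry:
    base_schema: Dict[str, Any]
    # Derived once in __post_init__, so it is not an __init__ argument.
    macro_definition_schema: Dict[str, Any] = field(init=False)
    macro_edges: Dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        self.macro_definition_schema = build_macro_definition_schema(self.base_schema)


def register_macro_edge(registry: MacroRegistry, name: str, definition: str) -> None:
    """Register a macro edge, reusing the cached definition schema."""
    schema = registry.macro_definition_schema  # no rebuild here
    assert schema is not None  # real validation against `schema` would go here
    registry.macro_edges[name] = definition


registry = MacroRegistry(base_schema={"Animal": ["name"]})
for edge_name in ("edge_a", "edge_b", "edge_c"):
    register_macro_edge(registry, edge_name, "<macro definition>")

print(SCHEMA_BUILDS["count"])
```

One caveat with this design: if the base schema can change after the registry is created, the cached definition schema would need to be rebuilt or the registry treated as immutable.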

|

open

|

2019-12-10T03:42:49Z

|

2019-12-10T03:43:30Z

|

https://github.com/kensho-technologies/graphql-compiler/issues/698

|

[

"enhancement"

] |

pmantica1

| 0

|

explosion/spacy-course

|

jupyter

| 31

|

Chapter 4.4 evaluates correct with no entities

|

<img width="859" alt="Screen Shot 2019-05-30 at 1 12 58 am" src="https://user-images.githubusercontent.com/21038129/58568874-6729f480-8278-11e9-8802-730ec6ea13d2.png">

I put `doc.ents` instead of `entities` but it was still marked correct

|

closed

|

2019-05-29T15:16:23Z

|

2020-04-17T01:32:50Z

|

https://github.com/explosion/spacy-course/issues/31

|

[] |

natashawatkins

| 3

|

kubeflow/katib

|

scikit-learn

| 1,581

|

[chore] Upgrade CRDs to apiextensions.k8s.io/v1

|

/kind feature

**Describe the solution you'd like**


> The api group apiextensions.k8s.io/v1beta1 is no longer served in k8s 1.22 https://kubernetes.io/docs/reference/using-api/deprecation-guide/#customresourcedefinition-v122

>

> kubeflow APIs need to be upgraded

/cc @alculquicondor

There may be a related problem: https://github.com/kubernetes/apiextensions-apiserver/issues/50


|

closed

|

2021-07-19T03:27:14Z

|

2021-08-10T23:31:25Z

|

https://github.com/kubeflow/katib/issues/1581

|

[

"help wanted",

"area/operator",

"kind/feature"

] |

gaocegege

| 2

|

python-visualization/folium

|

data-visualization

| 1,942

|

Add example pattern for pulling JsCode snippets from external javascript files

|

**Is your feature request related to a problem? Please describe.**

I have found myself embedding a lot of javascript code via `JsCode` when using the `Realtime` plugin. This gets messy for a few reasons:

- unit testing the javascript code is not possible

- adding leaflet plugins can result in a huge amount of javascript code embedded inside python

- and the code is just not as modular as it could be

I'm wondering if there's a recommended way to solve this.

**Describe the solution you'd like**

A solution would allow one to reference specific functions from external javascript files within a `JsCode` object.

It's not clear to me what would be better: whether to do this from within `folium` (using the API) or to use some external library to populate the string of javascript code that's passed to `JsCode` based on some javascript file and function. In either case, having an example in the `examples` folder would be great.

**Describe alternatives you've considered**

If nothing is changed about the `folium` API, this could just be done external to `folium`. As in, another library interprets a javascript file, and given a function name, the function's definition is returned as a string. Is this preferable to building the functionality into `folium`? If so, does anybody know of an existing library that can already do this?
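As a rough illustration of the external approach described above, the following sketch pulls one named function out of a JavaScript file as a string using only the Python standard library. The helper name `extract_js_function`, the file name, and the sample JavaScript are assumptions made for this example. The brace matching is deliberately naive: it ignores braces inside strings, comments, and destructured parameters, so it is not a real JavaScript parser.

```python
import re
import tempfile
from pathlib import Path


def extract_js_function(source: str, name: str) -> str:
    """Return the text of `function <name>(...) {...}` found in `source`."""
    match = re.search(r"function\s+" + re.escape(name) + r"\s*\(", source)
    if match is None:
        raise KeyError(f"function {name!r} not found")
    body_start = source.index("{", match.end())
    depth = 0
    # Walk forward, counting braces until the function body closes.
    for pos in range(body_start, len(source)):
        if source[pos] == "{":
            depth += 1
        elif source[pos] == "}":
            depth -= 1
            if depth == 0:
                return source[match.start():pos + 1]
    raise ValueError(f"unbalanced braces in function {name!r}")


# Hypothetical JavaScript file that could be unit tested on its own.
JS_SOURCE = """
function pointToLayer(feature, latlng) {
    if (feature.properties.big) {
        return L.circleMarker(latlng, {radius: 10});
    }
    return L.marker(latlng);
}

function unused() { return 1; }
"""

with tempfile.TemporaryDirectory() as tmp_dir:
    js_path = Path(tmp_dir) / "realtime_helpers.js"
    js_path.write_text(JS_SOURCE, encoding="utf-8")
    snippet = extract_js_function(js_path.read_text(encoding="utf-8"), "pointToLayer")

print(snippet)
```

The resulting string could then be handed to `JsCode`. Whether a named function declaration is accepted in every place where `folium` expects a function expression has not been checked here.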

**Additional context**

n/a

**Implementation**

n/a

|

closed

|

2024-04-30T17:03:24Z

|

2024-05-19T08:46:33Z

|

https://github.com/python-visualization/folium/issues/1942

|

[] |

thomasegriffith

| 9

|

learning-at-home/hivemind

|

asyncio

| 158

|

Optimize the tests

|

Right now, our tests can take upwards of 10 minutes both in CircleCI and locally, which slows down the development workflow and leads to unnecessary context switches. We should find a way to reduce the time requirements and make sure it stays that way.

* Identify and speed up the slow tests. Main culprits: multiple iterations in tests using random samples, large model sizes for DMoE tests.

* All the tests in our CI pipelines run sequentially, which increases the runtime with the number of tests. It is possible to use [pytest-xdist](https://pypi.org/project/pytest-xdist/), since the default executor has 2 cores.

* Add a global timeout to ensure that future tests don't introduce any regressions
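The three points above could map onto pytest command-line options roughly as follows. This is a sketch under assumptions: `-n` comes from pytest-xdist (named above), `--durations` is built into pytest, and `--timeout` assumes the pytest-timeout plugin, which this issue does not mention and which limits each test individually instead of the whole session. The helper name and default values are made up for illustration.

```python
def build_pytest_args(workers=2, per_test_timeout_s=120, slowest=10):
    """Assemble pytest options for parallel, time-limited test runs."""
    return [
        "-n", str(workers),                  # pytest-xdist: number of worker processes
        f"--timeout={per_test_timeout_s}",   # pytest-timeout (assumed): per-test limit in seconds
        f"--durations={slowest}",            # built-in: report the N slowest tests
    ]


args = build_pytest_args()
print(" ".join(["pytest", *args]))
```

The returned list can be passed to `subprocess.run([sys.executable, "-m", "pytest", *args])` or mirrored in the CI configuration; the `--durations` report is a cheap way to find the slow tests mentioned in the first point.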

|

closed

|

2021-02-28T15:35:48Z

|

2021-08-03T17:43:27Z

|

https://github.com/learning-at-home/hivemind/issues/158

|

[

"ci"

] |

mryab

| 2

|

mwaskom/seaborn

|

data-science

| 3,608

|

0.13.1: test suite needs `husl` module

|

<details>

<summary>Looks like test suite needs husl module</summary>

```console

+ PYTHONPATH=/home/tkloczko/rpmbuild/BUILDROOT/python-seaborn-0.13.1-2.fc35.x86_64/usr/lib64/python3.8/site-packages:/home/tkloczko/rpmbuild/BUILDROOT/python-seaborn-0.13.1-2.fc35.x86_64/usr/lib/python3.8/site-packages

+ /usr/bin/pytest -ra -m 'not network' -p no:cacheprovider

============================= test session starts ==============================

platform linux -- Python 3.8.18, pytest-7.4.4, pluggy-1.3.0

rootdir: /home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1

configfile: pyproject.toml

collected 0 items / 34 errors

==================================== ERRORS ====================================

__________________ ERROR collecting tests/test_algorithms.py ___________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_algorithms.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_algorithms.py:6: in <module>

from seaborn import algorithms as algo

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

___________________ ERROR collecting tests/test_axisgrid.py ____________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_axisgrid.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_axisgrid.py:11: in <module>

from seaborn._base import categorical_order

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

_____________________ ERROR collecting tests/test_base.py ______________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_base.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_base.py:11: in <module>

from seaborn.axisgrid import FacetGrid

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

__________________ ERROR collecting tests/test_categorical.py __________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_categorical.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_categorical.py:19: in <module>

from seaborn import categorical as cat

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

_________________ ERROR collecting tests/test_distributions.py _________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_distributions.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_distributions.py:12: in <module>

from seaborn import distributions as dist

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

__________________ ERROR collecting tests/test_docstrings.py ___________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_docstrings.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_docstrings.py:1: in <module>

from seaborn._docstrings import DocstringComponents

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

____________________ ERROR collecting tests/test_matrix.py _____________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_matrix.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_matrix.py:27: in <module>

from seaborn import matrix as mat

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

___________________ ERROR collecting tests/test_miscplot.py ____________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_miscplot.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_miscplot.py:3: in <module>

from seaborn import miscplot as misc

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

____________________ ERROR collecting tests/test_objects.py ____________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_objects.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_objects.py:1: in <module>

import seaborn.objects

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

___________________ ERROR collecting tests/test_palettes.py ____________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_palettes.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_palettes.py:8: in <module>

from seaborn import palettes, utils, rcmod

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

_____________________ ERROR collecting tests/test_rcmod.py _____________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_rcmod.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_rcmod.py:7: in <module>

from seaborn import rcmod, palettes, utils

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

__________________ ERROR collecting tests/test_regression.py ___________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_regression.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_regression.py:18: in <module>

from seaborn import regression as lm

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

__________________ ERROR collecting tests/test_relational.py ___________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_relational.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_relational.py:12: in <module>

from seaborn.palettes import color_palette

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

__________________ ERROR collecting tests/test_statistics.py ___________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_statistics.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_statistics.py:12: in <module>

from seaborn._statistics import (

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

_____________________ ERROR collecting tests/test_utils.py _____________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/test_utils.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/test_utils.py:23: in <module>

from seaborn import utils, rcmod, scatterplot

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

__________________ ERROR collecting tests/_core/test_data.py ___________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/_core/test_data.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/_core/test_data.py:9: in <module>

from seaborn._core.data import PlotData

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

_________________ ERROR collecting tests/_core/test_groupby.py _________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/_core/test_groupby.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/_core/test_groupby.py:8: in <module>

from seaborn._core.groupby import GroupBy

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

__________________ ERROR collecting tests/_core/test_moves.py __________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/_core/test_moves.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/_core/test_moves.py:9: in <module>

from seaborn._core.moves import Dodge, Jitter, Shift, Stack, Norm

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

__________________ ERROR collecting tests/_core/test_plot.py ___________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/_core/test_plot.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/_core/test_plot.py:17: in <module>

from seaborn._core.plot import Plot, PlotConfig, Default

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

_______________ ERROR collecting tests/_core/test_properties.py ________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/_core/test_properties.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/_core/test_properties.py:11: in <module>

from seaborn._core.rules import categorical_order

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

__________________ ERROR collecting tests/_core/test_rules.py __________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/_core/test_rules.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/_core/test_rules.py:7: in <module>

from seaborn._core.rules import (

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

_________________ ERROR collecting tests/_core/test_scales.py __________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/_core/test_scales.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/_core/test_scales.py:11: in <module>

from seaborn._core.plot import Plot

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

________________ ERROR collecting tests/_core/test_subplots.py _________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/_core/test_subplots.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/_core/test_subplots.py:6: in <module>

from seaborn._core.subplots import Subplots

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

__________________ ERROR collecting tests/_marks/test_area.py __________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/_marks/test_area.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/_marks/test_area.py:7: in <module>

from seaborn._core.plot import Plot

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

__________________ ERROR collecting tests/_marks/test_bar.py ___________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/_marks/test_bar.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/_marks/test_bar.py:9: in <module>

from seaborn._core.plot import Plot

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

__________________ ERROR collecting tests/_marks/test_base.py __________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/_marks/test_base.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/_marks/test_base.py:10: in <module>

from seaborn._marks.base import Mark, Mappable, resolve_color

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes

seaborn/palettes.py:7: in <module>

import husl

E ModuleNotFoundError: No module named 'husl'

__________________ ERROR collecting tests/_marks/test_dot.py ___________________

ImportError while importing test module '/home/tkloczko/rpmbuild/BUILD/seaborn-0.13.1/tests/_marks/test_dot.py'.

Hint: make sure your test modules/packages have valid Python names.

Traceback:

/usr/lib64/python3.8/importlib/__init__.py:127: in import_module

return _bootstrap._gcd_import(name[level:], package, level)

tests/_marks/test_dot.py:6: in <module>

from seaborn.palettes import color_palette

seaborn/__init__.py:2: in <module>

from .rcmod import * # noqa: F401,F403

seaborn/rcmod.py:5: in <module>

from . import palettes