| repo_name (string, length 9 to 75) | topic (string, 30 classes) | issue_number (int64, 1 to 203k) | title (string, length 1 to 976) | body (string, length 0 to 254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, length 38 to 105) | labels (list, length 0 to 9) | user_login (string, length 1 to 39) | comments_count (int64, 0 to 452) |
|---|---|---|---|---|---|---|---|---|---|---|---|

jowilf/starlette-admin

|

sqlalchemy

| 552

|

Bug: UUID pk gives JSON serialization error when excluded from the list

|

**Describe the bug**

When I have a model whose pk (`id`) field is of type UUID and I include it in the list, it works fine. But if I exclude it from the list while leaving it among the model's fields, I get an error:

```

File "/Users/alg/p/template-fastapi/.venv/lib/python3.11/site-packages/starlette/responses.py", line 187, in render

return json.dumps(

^^^^^^^^^^^

File "/opt/homebrew/Cellar/python@3.11/3.11.9/Frameworks/Python.framework/Versions/3.11/lib/python3.11/json/__init__.py", line 238, in dumps

**kw).encode(obj)

^^^^^^^^^^^

File "/opt/homebrew/Cellar/python@3.11/3.11.9/Frameworks/Python.framework/Versions/3.11/lib/python3.11/json/encoder.py", line 200, in encode

chunks = self.iterencode(o, _one_shot=True)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/opt/homebrew/Cellar/python@3.11/3.11.9/Frameworks/Python.framework/Versions/3.11/lib/python3.11/json/encoder.py", line 258, in iterencode

return _iterencode(o, 0)

^^^^^^^^^^^^^^^^^

File "/opt/homebrew/Cellar/python@3.11/3.11.9/Frameworks/Python.framework/Versions/3.11/lib/python3.11/json/encoder.py", line 180, in default

raise TypeError(f'Object of type {o.__class__.__name__} '

TypeError: Object of type UUID is not JSON serializable

```

**To Reproduce**

- Create a model with the id field of type UUID.

- List it among fields in the model.

- Exclude it from the list.

**Environment (please complete the following information):**

- Starlette-Admin version: 0.14.0

- ORM/ODMs: SQLAlchemy
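
The traceback ends in the standard library's `json` encoder, which has no built-in handling for `uuid.UUID`. The minimal sketch below reproduces that failure and shows one generic workaround, passing `default=str` to `json.dumps`. It illustrates the Python behaviour only and is not the fix applied in starlette-admin; the `row` dict is a made-up stand-in for a serialized model row.

```python
import json
import uuid

# Hypothetical row, standing in for a serialized model instance.
row = {"id": uuid.UUID("12345678-1234-5678-1234-567812345678"), "name": "demo"}

try:
    json.dumps(row)
    error_message = None
except TypeError as exc:
    error_message = str(exc)

# `default` is called for every object the encoder cannot serialize itself.
encoded = json.dumps(row, default=str)
print(error_message)
print(encoded)
```

Converting the UUID to a string before the response is rendered avoids the `TypeError`, whichever layer ends up doing the conversion.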

|

closed

|

2024-06-25T08:29:24Z

|

2024-07-12T19:22:21Z

|

https://github.com/jowilf/starlette-admin/issues/552

|

[

"bug"

] |

alg

| 5

|

zappa/Zappa

|

django

| 514

|

[Migrated] Using Wildcard Subdomains with API Gateway

|

Originally from: https://github.com/Miserlou/Zappa/issues/1355 by [atkallie](https://github.com/atkallie)

## Context

I have developed a Django project that makes use of the [django-hosts](https://django-hosts.readthedocs.io/en/latest/) library and now I am trying to deploy it. After working through the Zappa tutorials, I became convinced that Zappa was the way to go. However, I later realized that API Gateway does not support wildcard subdomains. While this is not strictly a Zappa issue, I was wondering if there was anyone who had any success in circumventing this issue since [it doesn't look like the API Gateway developers have any intention of enabling this in the near future](https://forums.aws.amazon.com/message.jspa?messageID=798965).

## Expected Behavior

Allow *.example.com to host a Django project and let Django map to a view based on the subdomain.

## Actual Behavior

Wildcard subdomains are not permitted by API Gateway; only subdomains that are explicitly defined are passed.

## Possible Fix

I tried to manually create a CloudFront distribution that allows "*.example.com" as a CNAME and set the raw API gateway URL as the origin. However, due to CloudFront's default caching behavior, the problem then becomes that any subdomain (e.g. api.example.com) returns a 301 redirect to the raw API Gateway URL. Not sure how the hidden CloudFront distribution created via the Custom Domains tab in API Gateway handles this.

## Steps to Reproduce (for possible fix)

* Create a CloudFront distribution and select 'Web' Distribution

* Use the Zappa distribution domain name from API Gateway as the Origin Domain Name (e.g. ajnfioanpsda.execute-api.us-east-1.amazonaws.com)

* For alternate domain names, enter in "*.example.com, example.com"

* Use the Zappa deployment name (e.g. dev) as the Origin Path

* For Object Caching select 'Use Origin Cache Headers'

* Turn on "compress objects automatically"

* Associate the CloudFront distribution with your ACM SSL/TLS certificate

|

closed

|

2021-02-20T09:43:45Z

|

2024-04-13T16:36:52Z

|

https://github.com/zappa/Zappa/issues/514

|

[

"enhancement",

"aws",

"feature-request",

"good-idea",

"no-activity",

"auto-closed"

] |

jneves

| 2

|

aio-libs/aiomysql

|

asyncio

| 11

|

rename Connection.wait_closed()

|

`asyncio.AbstractServer` uses the following idiom:

- `close()` starts closing the connection asynchronously; it is a regular function.

- `wait_closed()` is a coroutine that waits until the connection is actually closed.

`Connection.wait_closed()` behaves differently: it sends a disconnection signal to the server and then closes the connection.

I suggest renaming it to `.ensure_closed()`.
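
To make the idiom concrete, here is a minimal sketch of the `close()` / `wait_closed()` split using a toy class. `ToyConnection` is hypothetical and is not aiomysql's `Connection`; it only mirrors the contract described above, where `close()` is a plain function that initiates shutdown and `wait_closed()` is a coroutine that waits for it to finish.

```python
import asyncio


class ToyConnection:
    """Hypothetical stand-in that follows the asyncio close/wait_closed idiom."""

    def __init__(self):
        self._closed = asyncio.Event()
        self._closing = False

    def close(self):
        # Regular function: only schedules the shutdown, does not wait for it.
        if not self._closing:
            self._closing = True
            asyncio.get_running_loop().call_soon(self._closed.set)

    async def wait_closed(self):
        # Coroutine: returns once the shutdown has actually completed.
        await self._closed.wait()

    @property
    def closed(self):
        return self._closed.is_set()


async def demo():
    conn = ToyConnection()
    conn.close()
    before = conn.closed  # shutdown scheduled but not yet finished
    await conn.wait_closed()
    return before, conn.closed


result = asyncio.run(demo())
print(result)
```

A method that both initiates the disconnect and waits for it combines the two steps, which is why a distinct name such as `ensure_closed()` avoids confusion with the asyncio convention.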

|

closed

|

2015-04-20T17:19:55Z

|

2015-04-26T18:33:02Z

|

https://github.com/aio-libs/aiomysql/issues/11

|

[] |

asvetlov

| 1

|

horovod/horovod

|

pytorch

| 3,011

|

System env variables are not captured when using Spark as backend.

|

**Environment:**

1. Framework: (TensorFlow, Keras, PyTorch, MXNet) TensorFlow

2. Framework version: 2.5.0

3. Horovod version: 0.22.1

4. MPI version: 4.0.2

5. CUDA version: 11.2

6. NCCL version: 2.9.9

7. Python version: 3.8

8. Spark / PySpark version: 3.1.2

9. Ray version:

10. OS and version: Ubuntu 20.04

11. GCC version: 9.3

12. CMake version: 3.19

**Checklist:**

1. Did you search issues to find if somebody asked this question before?

Y

2. If your question is about hang, did you read [this doc](https://github.com/horovod/horovod/blob/master/docs/running.rst)?

3. If your question is about docker, did you read [this doc](https://github.com/horovod/horovod/blob/master/docs/docker.rst)?

4. Did you check if your question is answered in the [troubleshooting guide](https://github.com/horovod/horovod/blob/master/docs/troubleshooting.rst)?

Y

**Bug report:**

When using the `horovod.spark.run` API without setting the `env` parameter, the following error appears:

```

Was unable to run mpirun --version:

/bin/sh: 1: mpirun: not found

```

my environment:

```

(base) allxu@allxu-home:~/github/e2e-train$ which mpirun

/home/allxu/miniconda3/bin/mpirun

```

A full demo that reproduces this error can be found here: https://github.com/wjxiz1992/e2e-train

I've seen a similar issue, https://github.com/horovod/horovod/issues/2002, but it does not cover the Spark case.
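
A possible workaround, sketched under assumptions: build the environment explicitly on the driver and pass it through the `env` parameter mentioned above. The helper `build_worker_env` and the placeholder `train_fn` are hypothetical names, and the Horovod call is left commented out because it needs Horovod and a Spark session.

```python
import os
import shutil


def build_worker_env(extra_path_dirs=()):
    """Copy the current environment and prepend extra directories to PATH."""
    env = os.environ.copy()
    parts = [d for d in extra_path_dirs if d]
    if env.get("PATH"):
        parts.append(env["PATH"])
    env["PATH"] = os.pathsep.join(parts)
    return env


# Directory that holds `mpirun` on the driver, if it is on PATH at all.
mpirun = shutil.which("mpirun")
mpi_dir = os.path.dirname(mpirun) if mpirun else None

env = build_worker_env([mpi_dir])

# Assumed usage (not executed here; requires Horovod and a Spark session):
# import horovod.spark
# horovod.spark.run(train_fn, env=env)  # `train_fn` is a placeholder
```

This only helps if the directory that contains `mpirun` is also valid on the machines where the command is launched.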

|

open

|

2021-06-30T07:36:11Z

|

2021-07-09T16:59:22Z

|

https://github.com/horovod/horovod/issues/3011

|

[

"bug"

] |

wjxiz1992

| 1

|

huggingface/transformers

|

pytorch

| 36,623

|

Why are there so many variables named layrnorm in the codebase?

|

Running

`grep -R -n --color=auto "layrnorm" .`

gives these results when run in src/transformers

```

./models/idefics/vision.py:441: self.pre_layrnorm = nn.LayerNorm(embed_dim, eps=config.layer_norm_eps)

./models/idefics/vision.py:468: hidden_states = self.pre_layrnorm(hidden_states)

./models/idefics/vision_tf.py:506: self.pre_layrnorm = tf.keras.layers.LayerNormalization(epsilon=config.layer_norm_eps, name="pre_layrnorm")

./models/idefics/vision_tf.py:534: hidden_states = self.pre_layrnorm(hidden_states)

./models/idefics/vision_tf.py:564: if getattr(self, "pre_layrnorm", None) is not None:

./models/idefics/vision_tf.py:565: with tf.name_scope(self.pre_layrnorm.name):

./models/idefics/vision_tf.py:566: self.pre_layrnorm.build([None, None, self.embed_dim])

./models/altclip/modeling_altclip.py:1140: self.pre_layrnorm = nn.LayerNorm(embed_dim, eps=config.layer_norm_eps)

./models/altclip/modeling_altclip.py:1168: hidden_states = self.pre_layrnorm(hidden_states)

./models/git/convert_git_to_pytorch.py:88: rename_keys.append((f"{prefix}image_encoder.ln_pre.weight", "git.image_encoder.vision_model.pre_layrnorm.weight"))

./models/git/convert_git_to_pytorch.py:89: rename_keys.append((f"{prefix}image_encoder.ln_pre.bias", "git.image_encoder.vision_model.pre_layrnorm.bias"))

./models/git/modeling_git.py:997: self.pre_layrnorm = nn.LayerNorm(embed_dim, eps=config.layer_norm_eps)

./models/git/modeling_git.py:1025: hidden_states = self.pre_layrnorm(hidden_states)

./models/clipseg/modeling_clipseg.py:849: self.pre_layrnorm = nn.LayerNorm(embed_dim, eps=config.layer_norm_eps)

./models/clipseg/modeling_clipseg.py:874: hidden_states = self.pre_layrnorm(hidden_states)

./models/clipseg/convert_clipseg_original_pytorch_to_hf.py:87: name = name.replace("visual.ln_pre", "vision_model.pre_layrnorm")

./models/chinese_clip/convert_chinese_clip_original_pytorch_to_hf.py:84: copy_linear(hf_model.vision_model.pre_layrnorm, pt_weights, "visual.ln_pre")

./models/chinese_clip/modeling_chinese_clip.py:1097: self.pre_layrnorm = nn.LayerNorm(embed_dim, eps=config.layer_norm_eps)

./models/chinese_clip/modeling_chinese_clip.py:1124: hidden_states = self.pre_layrnorm(hidden_states)

./models/clip/modeling_tf_clip.py:719: self.pre_layernorm = keras.layers.LayerNormalization(epsilon=config.layer_norm_eps, name="pre_layrnorm")

./models/clip/modeling_clip.py:1073: self.pre_layrnorm = nn.LayerNorm(embed_dim, eps=config.layer_norm_eps)

./models/clip/modeling_clip.py:1101: hidden_states = self.pre_layrnorm(hidden_states)

./models/clip/convert_clip_original_pytorch_to_hf.py:96: copy_linear(hf_model.vision_model.pre_layrnorm, pt_model.visual.ln_pre)

./models/clip/modeling_flax_clip.py:584: self.pre_layrnorm = nn.LayerNorm(epsilon=self.config.layer_norm_eps, dtype=self.dtype)

./models/clip/modeling_flax_clip.py:603: hidden_states = self.pre_layrnorm(hidden_states)

./models/kosmos2/modeling_kosmos2.py:748: self.pre_layrnorm = nn.LayerNorm(embed_dim, eps=config.layer_norm_eps)

./models/kosmos2/modeling_kosmos2.py:770: hidden_states = self.pre_layrnorm(hidden_states)

./models/kosmos2/modeling_kosmos2.py:1440: module.pre_layrnorm.bias.data.zero_()

./models/kosmos2/modeling_kosmos2.py:1441: module.pre_layrnorm.weight.data.fill_(1.0)

./models/kosmos2/convert_kosmos2_original_pytorch_checkpoint_to_pytorch.py:16: "ln_pre": "pre_layrnorm",

```

Why are there so many layernorm variables named layrnorm? Is it a typo or is this intended?

|

closed

|

2025-03-10T01:10:24Z

|

2025-03-10T14:26:12Z

|

https://github.com/huggingface/transformers/issues/36623

|

[] |

jere357

| 1

|

kynan/nbstripout

|

jupyter

| 100

|

documentation: .gitattributes and .git/config - install still required after cloning

|

I thought that writing to `.gitattributes` would give anyone cloning the repo the nbstripout functionality out of the box, assuming `nbstripout` was installed and a `.gitattributes` file was provided in the repo. But after thorough inspection I realized that the `.gitattributes` file only references names (`nbstripout` / `ipynb`) that need to be defined somewhere else.

__`.gitattributes` (in repo) or `.git/info/attributes` (local)__

```

*.ipynb filter=nbstripout

*.ipynb diff=ipynb

```

The git configuration with the actual definitions of the referenced functionality (`nbstripout` / `ipynb`) can live either in the local `.git/config`, in the global `~/.gitconfig`, or system-wide in `/etc/gitconfig`.

__`.git/config` (local), `~/.gitconfig` (global), or `/etc/gitconfig` (system)__

```

[filter "nbstripout"]

clean = \"/opt/conda/bin/python3.6\" \"/opt/conda/lib/python3.6/site-packages/nbstripout\"

smudge = cat

required = true

[diff "ipynb"]

textconv = \"/opt/conda/bin/python3.6\" \"/opt/conda/lib/python3.6/site-packages/nbstripout\" -t

```

Learning this, I realized that someone cloning the repo would also need to run `nbstripout --install` unless they were provided with a system wide git configuration with these definitions.
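
The relationship between the two files can be sketched in a few lines of Python: collect the driver names that `.gitattributes` references and compare them with the names a git config actually defines. The function `referenced_drivers` is a hypothetical helper written for this illustration, and the empty `defined` set models a fresh clone where `nbstripout --install` has not been run.

```python
def referenced_drivers(gitattributes_text):
    """Return {(kind, name)} for every filter=/diff= attribute found."""
    found = set()
    for line in gitattributes_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # The first token is the path pattern; the rest are attributes.
        for attr in line.split()[1:]:
            key, sep, value = attr.partition("=")
            if sep and key in ("filter", "diff"):
                found.add((key, value))
    return found


attributes = "*.ipynb filter=nbstripout\n*.ipynb diff=ipynb\n"

# Drivers defined in some git config; empty here to model a fresh clone.
defined = set()

missing = sorted(referenced_drivers(attributes) - defined)
print(missing)
```

Every entry in `missing` is a name that `.gitattributes` points at but that no configuration defines yet.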

---

It would be useful to have this understanding communicated in the repo! Thanks for providing that excellent video earlier btw, that was great!

|

closed

|

2019-06-19T12:29:31Z

|

2020-05-09T10:17:17Z

|

https://github.com/kynan/nbstripout/issues/100

|

[

"type:documentation",

"resolution:fixed"

] |

consideRatio

| 4

|

ultralytics/ultralytics

|

python

| 19,518

|

Metrics all 0 after TensorRT INT8 export for mode val, only INT8 ONNX performs well

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/orgs/ultralytics/discussions) and found no similar questions.

### Question

I successfully exported my FP32 YOLOv8 OBB (s) model to FP16 and INT8. With FP16 I get nearly the same metric values as with FP32, but the INT8 model performs very badly. My calibration set has 3699 images; I also tried calibrating with the training set (18536 images), but all metrics stay at 0. Different export `batch_sizes=1,8,16` didn't help.

Update: The problem must lie in the conversion from `ONNX` to `engine` format (see below). There must be a bug in the conversion process that leads to 0 in all metrics when using the `engine` model.

Exporter Code:

```python
from ultralytics import YOLO
import argparse


def export_model(model, export_args):
    model.export(**export_args)


def main():
    parser = argparse.ArgumentParser(description='Export YOLOv8 OBB model to TensorRT with user-configurable parameters.')
    parser.add_argument('--model_path', type=str, required=True, help='Path to the trained YOLOv8 model (.pt file).')
    parser.add_argument('--export_fp16', type=bool, default=False, help='Export to FP16 TensorRT model.')
    parser.add_argument('--export_int8', type=bool, default=False, help='Export to INT8 TensorRT model.')
    parser.add_argument('--format', type=str, default='engine', help="Format to export to (e.g., 'engine', 'onnx').")
    parser.add_argument('--imgsz', type=int, default=640, help='Desired image size for the model input. Can be an integer for square images or a tuple (height, width) for specific dimensions.')
    parser.add_argument('--keras', type=bool, default=False, help='Enables export to Keras format for TensorFlow SavedModel, providing compatibility with TensorFlow serving and APIs.')
    parser.add_argument('--optimize', type=bool, default=False, help='Applies optimization for mobile devices when exporting to TorchScript, potentially reducing model size and improving performance.')
    parser.add_argument('--half', type=bool, default=False, help='Enables FP16 (half-precision) quantization, reducing model size and potentially speeding up inference on supported hardware.')
    parser.add_argument('--int8', type=bool, default=False, help='Activates INT8 quantization, further compressing the model and speeding up inference with minimal accuracy loss, primarily for edge devices.')
    parser.add_argument('--dynamic', type=bool, default=False, help='Allows dynamic input sizes for ONNX, TensorRT and OpenVINO exports, enhancing flexibility in handling varying image dimensions (enforced).')
    parser.add_argument('--simplify', type=bool, default=False, help='Simplifies the model graph for ONNX exports with onnxslim, potentially improving performance and compatibility.')
    parser.add_argument('--opset', type=int, default=None, help='Specifies the ONNX opset version for compatibility with different ONNX parsers and runtimes. If not set, uses the latest supported version.')
    parser.add_argument('--workspace', type=int, default=None, help='Sets the maximum workspace size in GiB for TensorRT optimizations, balancing memory usage and performance; use None for auto-allocation by TensorRT up to device maximum.')
    parser.add_argument('--nms', type=bool, default=False, help='Adds Non-Maximum Suppression (NMS) to the exported model when supported (see Export Formats), improving detection post-processing efficiency.')
    parser.add_argument('--batch', type=int, default=1, help="Batch size for export. For INT8 it's recommended using a larger batch like batch=8 (calibrated as batch=16))")
    parser.add_argument('--device', type=str, default='0', help="Device to use for export (e.g., '0' for GPU 0).")
    parser.add_argument('--data', type=str, default=None, help="Path to the dataset configuration file for INT8 calibration.")
    args = parser.parse_args()

    # Load the final trained YOLOv8 model
    model = YOLO(args.model_path, task='obb')

    export_args = {
        'format': args.format,
        'imgsz': args.imgsz,
        'keras': args.keras,
        'optimize': args.optimize,
        'half': args.half,
        'int8': args.int8,
        'dynamic': args.dynamic,
        'simplify': args.simplify,
        'opset': args.opset,
        'workspace': args.workspace,
        'nms': args.nms,
        'batch': args.batch,
        'device': args.device,
        'data': args.data,
    }

    if args.export_fp16:  # data argument isn't needed for FP16 exports since no calibration is required
        print('Exporting to FP16 TensorRT model...')
        fp16_args = export_args.copy()
        fp16_args['half'] = True
        fp16_args['int8'] = False
        export_model(model, fp16_args)
        print('FP16 export completed.')

    if args.export_int8:  # NOTE: https://docs.nvidia.com/deeplearning/tensorrt/developer-guide/index.html#enable_int8_c, for INT8 calibration, the kitti_bev.yaml val split with 3769 images is used.
        print('Exporting to INT8 TensorRT model...')
        int8_args = export_args.copy()
        int8_args['half'] = False
        int8_args['int8'] = True
        export_model(model, int8_args)
        print('INT8 export completed.\nThe calibration .cache which can be reused to speed up export of future model weights using the same data, but this may result in poor calibration when the data is vastly different or if the batch value is changed drastically. In these circumstances, the existing .cache should be renamed and moved to a different directory or deleted entirely.')

    if not args.export_fp16 and not args.export_int8:
        print('No export option selected. Please specify --export_fp16 and/or --export_int8.')


if __name__ == '__main__':
    main()
```
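
A side note on the exporter script: it declares its boolean options with `type=bool`, which makes `argparse` call `bool()` on the raw string, so any non-empty value, including `"False"`, parses as `True`. This does not change the command used in this report (it passes `True`), and nothing here suggests it is related to the zero metrics. The sketch below shows the behaviour and one common alternative; `str2bool` is a hypothetical helper, not part of `argparse` or Ultralytics.

```python
import argparse


def str2bool(value):
    """Hypothetical helper: parse common true/false spellings."""
    text = value.strip().lower()
    if text in ("true", "1", "yes", "y"):
        return True
    if text in ("false", "0", "no", "n"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


# Parser that mirrors the script's style: bool() is applied to the raw string.
naive = argparse.ArgumentParser()
naive.add_argument("--int8", type=bool, default=False)

# Parser that interprets the text of the argument instead.
strict = argparse.ArgumentParser()
strict.add_argument("--int8", type=str2bool, default=False)

naive_value = naive.parse_args(["--int8", "False"]).int8
strict_value = strict.parse_args(["--int8", "False"]).int8
print(naive_value, strict_value)
```

With the naive parser, passing `--int8 False` still enables INT8, so explicit parsing (or `action='store_true'`) is the safer choice for flags like these.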

Used export command:

```txt

python export_kitti_obb.py --model_path /home/heizung1/ultralytics_yolov8-obb_ob_kitti/ultralytics/kitti_bev_yolo/run_94_Adam_88.8_87.2/weights/best.pt --export_int8 True --int8 True --dynamic=True --batch 1 --data /home/heizung1/ultralytics_yolov8-obb_ob_kitti/ultralytics/cfg/datasets/kitti_bev.yaml

```

Validation script:

```python

from ultralytics import YOLO

model = YOLO('/home/heizung1/ultralytics_yolov8-obb_ob_kitti/ultralytics/kitti_bev_yolo/run_94_Adam_88.8_87.2/weights/best_1.engine', task='obb', verbose=False)

metrics = model.val(data='/home/heizung1/ultralytics_yolov8-obb_ob_kitti/ultralytics/cfg/datasets/kitti_bev.yaml', imgsz=640,

batch=16, save_json=False, save_hybrid=False, conf=0.001, iou=0.5, max_det=300, half=False,

device='0', dnn=False, plots=False, rect=False, split='val', project=None, name=None)

```

Validation output with INT8 TensorRT:

Validation output with INT8 ONNX:

Thank you very much!

### Additional

_No response_

|

open

|

2025-03-04T17:11:26Z

|

2025-03-14T01:33:53Z

|

https://github.com/ultralytics/ultralytics/issues/19518

|

[

"question",

"OBB",

"exports"

] |

Petros626

| 19

|

TheAlgorithms/Python

|

python

| 12,531

|

Football questions

|

### What would you like to share?

Questions about football

### Additional information

_No response_

|

closed

|

2025-01-18T18:19:16Z

|

2025-01-19T00:45:37Z

|

https://github.com/TheAlgorithms/Python/issues/12531

|

[

"awaiting triage"

] |

ninostudio

| 0

|

Guovin/iptv-api

|

api

| 636

|

Never manages to run: lite version, and the standard version doesn't seem to work either

|

[2024_12_9 13_52_58_log.txt](https://github.com/user-attachments/files/18056923/2024_12_9.13_52_58_log.txt)

|

closed

|

2024-12-09T05:54:42Z

|

2024-12-13T08:43:10Z

|

https://github.com/Guovin/iptv-api/issues/636

|

[

"duplicate",

"incomplete"

] |

Andy-Home

| 4

|

Lightning-AI/pytorch-lightning

|

deep-learning

| 20,116

|

Error when disabling an optimizer with native AMP turned on

|

### Bug description

I'm using 2 optimizers and trying to train with AMP (FP16). I can take steps with my first optimizer. When I take my first step with the second optimizer I get the following error:

```

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/torch/amp/grad_scaler.py", line 450, in step

len(optimizer_state["found_inf_per_device"]) > 0

AssertionError: No inf checks were recorded for this optimizer.

```

I can train this correctly in FP32 -- so it seems to be an issue with AMP.

### What version are you seeing the problem on?

version 2.3.3

### How to reproduce the bug

```python
def training_step(self, batch: Dict, batch_idx: int):
    """
    We have 2 sets of optimizers.
    Every N batches (self.n_batches_per_optimizer), we make an optimizer update and
    switch the optimizer to update.
    If self.n_batches_per_optimizer = 1, then we make updates every batch and alternate optimizers
    every batch.
    If self.n_batches_per_optimizer > 1, then we're doing gradient accumulation, where we are making
    updates every n_batches_per_optimizer batches and alternating optimizers every n_batches_per_optimizer
    batches.
    """
    opts = self.optimizers()
    current_cycle = (batch_idx // self.n_batches_per_optimizer) % len(opts)
    opt = opts[current_cycle]
    opt.zero_grad()
    if current_cycle == 0:
        compute_model_1_loss = True
    elif current_cycle == 1:
        compute_model_1_loss = False
    else:
        raise NotImplementedError(f"Unknown optimizer {current_cycle}")

    with opt.toggle_model():
        loss = self.inner_training_step(batch=batch, compute_model_1_loss=compute_model_1_loss)
        self.manual_backward(loss=loss)

    # Perform the optimization step every accumulate_grad_batches steps
    if (batch_idx + 1) % self.n_batches_per_optimizer == 0:
        if not compute_model_1_loss:
            print("About to take compute model 2 loss ...")
        opt.step()
        opt.zero_grad()
```
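
To see which optimizer the index arithmetic in `training_step` selects on each batch, the two expressions can be evaluated on their own. The sketch below is plain Python with no Lightning dependency; `optimizer_schedule` is a hypothetical helper, and the values 8, 2 and 2 are example inputs rather than the reporter's settings.

```python
def optimizer_schedule(num_batches, n_batches_per_optimizer, num_optimizers):
    """Return (batch_idx, optimizer_index, takes_step) for each batch."""
    rows = []
    for batch_idx in range(num_batches):
        # Same expressions as in the training_step shown in this report.
        cycle = (batch_idx // n_batches_per_optimizer) % num_optimizers
        takes_step = (batch_idx + 1) % n_batches_per_optimizer == 0
        rows.append((batch_idx, cycle, takes_step))
    return rows


schedule = optimizer_schedule(num_batches=8, n_batches_per_optimizer=2, num_optimizers=2)
for row in schedule:
    print(row)
```

With these example inputs the second optimizer (index 1) is first selected at batch 2 and first stepped at batch 3, which is the point in the cycle where the reported assertion would be hit.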

### Error messages and logs

```

Traceback (most recent call last):

File "/home/sahil/train.py", line 82, in <module>

main(config)

File "/home/sahil/train.py", line 62, in main

trainer.fit(model, datamodule=data_module, ckpt_path=ckpt)

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/trainer/trainer.py", line 543, in fit

call._call_and_handle_interrupt(

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/trainer/call.py", line 44, in _call_and_handle_interrupt

return trainer_fn(*args, **kwargs)

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/trainer/trainer.py", line 579, in _fit_impl

self._run(model, ckpt_path=ckpt_path)

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/trainer/trainer.py", line 986, in _run

results = self._run_stage()

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/trainer/trainer.py", line 1030, in _run_stage

self.fit_loop.run()

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/loops/fit_loop.py", line 205, in run

self.advance()

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/loops/fit_loop.py", line 363, in advance

self.epoch_loop.run(self._data_fetcher)

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/loops/training_epoch_loop.py", line 140, in run

self.advance(data_fetcher)

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/loops/training_epoch_loop.py", line 252, in advance

batch_output = self.manual_optimization.run(kwargs)

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/loops/optimization/manual.py", line 94, in run

self.advance(kwargs)

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/loops/optimization/manual.py", line 114, in advance

training_step_output = call._call_strategy_hook(trainer, "training_step", *kwargs.values())

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/trainer/call.py", line 311, in _call_strategy_hook

output = fn(*args, **kwargs)

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/strategies/strategy.py", line 390, in training_step

return self.lightning_module.training_step(*args, **kwargs)

File "/home/sahil/model/model.py", line 169, in training_step

opt.step()

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/core/optimizer.py", line 153, in step

step_output = self._strategy.optimizer_step(self._optimizer, closure, **kwargs)

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/strategies/strategy.py", line 238, in optimizer_step

return self.precision_plugin.optimizer_step(optimizer, model=model, closure=closure, **kwargs)

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/pytorch_lightning/plugins/precision/amp.py", line 93, in optimizer_step

step_output = self.scaler.step(optimizer, **kwargs) # type: ignore[arg-type]

File "/home/sahil/.cache/pypoetry/virtualenvs/env-auw7Hy33-py3.10/lib/python3.10/site-packages/torch/amp/grad_scaler.py", line 450, in step

len(optimizer_state["found_inf_per_device"]) > 0

AssertionError: No inf checks were recorded for this optimizer.

```

### Environment

<details>

<summary>Current environment</summary>

```

* CUDA:

- GPU:

- NVIDIA A100-SXM4-80GB

- available: True

- version: 12.1

* Lightning:

- lightning-utilities: 0.11.5

- pytorch-lightning: 2.3.3

- torch: 2.3.1

- torchmetrics: 1.4.0.post0

- torchvision: 0.18.1

* Packages:

- aiohttp: 3.9.5

- aiosignal: 1.3.1

- annotated-types: 0.7.0

- antlr4-python3-runtime: 4.9.3

- anyio: 4.4.0

- argon2-cffi: 23.1.0

- argon2-cffi-bindings: 21.2.0

- arrow: 1.3.0

- asttokens: 2.4.1

- async-lru: 2.0.4

- async-timeout: 4.0.3

- attrs: 23.2.0

- autocommand: 2.2.2

- babel: 2.15.0

- backports.tarfile: 1.2.0

- beautifulsoup4: 4.12.3

- bitsandbytes: 0.43.1

- bleach: 6.1.0

- boto3: 1.34.144

- botocore: 1.34.144

- braceexpand: 0.1.7

- certifi: 2024.7.4

- nvidia-curand-cu12: 10.3.2.106

- nvidia-cusolver-cu12: 11.4.5.107

- nvidia-cusparse-cu12: 12.1.0.106

- nvidia-nccl-cu12: 2.20.5

- nvidia-nvjitlink-cu12: 12.5.82

- nvidia-nvtx-cu12: 12.1.105

- omegaconf: 2.3.0

- opencv-python: 4.10.0.84

- ordered-set: 4.1.0

- overrides: 7.7.0

- packaging: 24.1

- pandocfilters: 1.5.1

- parso: 0.8.4

- pexpect: 4.9.0

- pillow: 10.4.0

- pip: 24.1

- platformdirs: 4.2.2

- pre-commit: 3.7.1

- proglog: 0.1.10

- prometheus-client: 0.20.0

- prompt-toolkit: 3.0.47

- protobuf: 5.27.2

- psutil: 6.0.0

- ptyprocess: 0.7.0

- pure-eval: 0.2.2

- pycparser: 2.22

- pydantic: 2.8.2

- pydantic-core: 2.20.1

- pydantic-settings: 2.3.4

- pygments: 2.18.0

- python-dateutil: 2.9.0.post0

- python-dotenv: 1.0.1

- python-json-logger: 2.0.7

- pytorch-lightning: 2.3.3

- pyyaml: 6.0.1

- pyzmq: 26.0.3

- referencing: 0.35.1

- requests: 2.32.3

- rfc3339-validator: 0.1.4

- rfc3986-validator: 0.1.1

- rpds-py: 0.19.0

- s3transfer: 0.10.2

- send2trash: 1.8.3

- sentry-sdk: 2.10.0

- setproctitle: 1.3.3

- setuptools: 71.0.2

- six: 1.16.0

- smmap: 5.0.1

- sniffio: 1.3.1

- soupsieve: 2.5

- stack-data: 0.6.3

- sympy: 1.13.0

- terminado: 0.18.1

- tinycss2: 1.3.0

- tomli: 2.0.1

- torch: 2.3.1

- torchmetrics: 1.4.0.post0

- torchvision: 0.18.1

- tornado: 6.4.1

- tqdm: 4.66.4

- traitlets: 5.14.3

- triton: 2.3.1

- typeguard: 4.3.0

- types-python-dateutil: 2.9.0.20240316

- typing-extensions: 4.12.2

- uri-template: 1.3.0

- urllib3: 2.2.2

- virtualenv: 20.26.3

- wandb: 0.17.4

- wcwidth: 0.2.13

- webcolors: 24.6.0

- webdataset: 0.2.86

- webencodings: 0.5.1

- websocket-client: 1.8.0

- wheel: 0.43.0

- yarl: 1.9.4

- zipp: 3.19.2

* System:

- OS: Linux

- architecture:

- 64bit

- ELF

- processor:

- python: 3.10.14

- release: 5.10.0-31-cloud-amd64

- version: #1 SMP Debian 5.10.221-1 (2024-07-14)

```

</details>

### More info

_No response_

|

open

|

2024-07-22T18:15:07Z

|

2024-07-22T18:17:32Z

|

https://github.com/Lightning-AI/pytorch-lightning/issues/20116

|

[

"bug",

"needs triage",

"ver: 2.2.x"

] |

schopra8

| 1

|

encode/httpx

|

asyncio

| 2,677

|

[Bug] Logged issue with Pygments #2418

|

The starting point for issues should usually be a discussion... - What if not: I have a syntax update issue with a dependency of yours - compatibility 3.11. Easy fix

https://github.com/encode/httpx/discussions

Possible bugs may be raised as a "Potential Issue" discussion, feature requests may be raised as an "Ideas" discussion. We can then determine if the discussion needs to be escalated into an "Issue" or not.

This will help us ensure that the "Issues" list properly reflects ongoing or needed work on the project.

---

- [ ] Initially raised as discussion #... https://github.com/pygments/pygments/issues/2418 for `import pygments.lexers`

Issue with your transitive dependency on `Pygments.lexers` import. I logged it with the pygments. Details are as per that issue

https://github.com/pygments/pygments/issues/2418

|

closed

|

2023-04-24T15:07:28Z

|

2023-04-26T10:21:40Z

|

https://github.com/encode/httpx/issues/2677

|

[] |

iPoetDev

| 0

|

sinaptik-ai/pandas-ai

|

data-visualization

| 993

|

File exists error when creating a `SmartDataframe` object

|

### System Info

OS version: Ubuntu 20.04.6 LTS

Python version: 3.11.8

The current version of `pandasai` being used: 2.0.3

### 🐛 Describe the bug

Here is the code (simple flask API) that I'm using right now:

```python
# Route to get all books
@app.route('/run', methods=['POST'])
def run_pandasai():
    data = request.get_json()
    engine = create_engine(SQLALCHEMY_BASE_DATABASE_URI)
    df = None
    with engine.connect() as conn:
        df = pd.read_sql(text(f'SELECT * FROM some_table;'), conn)
    llm = OpenAI(api_token='<my_api_key>')
    df = SmartDataframe(df, config={"llm": llm})
    response = df.chat('some prompt?')
    return jsonify({'response': response})
```

I get the following error while running this:

```

Traceback (most recent call last):

File "/usr/local/lib/python3.11/site-packages/flask/app.py", line 1463, in wsgi_app

response = self.full_dispatch_request()

^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/flask/app.py", line 872, in full_dispatch_request

rv = self.handle_user_exception(e)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/flask/app.py", line 870, in full_dispatch_request

rv = self.dispatch_request()

^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/flask/app.py", line 855, in dispatch_request

return self.ensure_sync(self.view_functions[rule.endpoint])(**view_args) # type: ignore[no-any-return]

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/app/app.py", line 20, in run_pandasai

df = SmartDataframe(df, config={"llm": llm})

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/pandasai/smart_dataframe/__init__.py", line 64, in __init__

self._agent = Agent([df], config=config)

^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/pandasai/agent/base.py", line 75, in __init__

self.context = PipelineContext(

^^^^^^^^^^^^^^^^

File "/usr/local/lib/python3.11/site-packages/pandasai/pipelines/pipeline_context.py", line 35, in __init__

self.cache = cache if cache is not None else Cache()

^^^^^^^

File "/usr/local/lib/python3.11/site-packages/pandasai/helpers/cache.py", line 29, in __init__

os.makedirs(cache_dir, mode=DEFAULT_FILE_PERMISSIONS, exist_ok=True)

File "<frozen os>", line 225, in makedirs

FileExistsError: [Errno 17] File exists: '/app/cache'

```

I understand the error is raised because it is trying to create a directory that already exists. Even though `exist_ok` is `True`, it seems that `DEFAULT_FILE_PERMISSIONS` prevents the directory from being created, so the call fails. Is this a bug?
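
One way to narrow this down: on Python 3, `os.makedirs(..., exist_ok=True)` still raises `FileExistsError` when the path exists but is not a directory (for example, a regular file named `cache`). The sketch below reproduces both cases in a temporary directory. The helper name `makedirs_outcome` is made up for illustration, and it is only an assumption that this is what happens with `/app/cache` in the container.

```python
import os
import tempfile


def makedirs_outcome(path: str) -> str:
    """Call os.makedirs(path, exist_ok=True) and report what happened."""
    try:
        os.makedirs(path, exist_ok=True)
    except FileExistsError:
        return "FileExistsError"
    return "ok"


with tempfile.TemporaryDirectory() as tmp:
    # Case 1: the target already exists as a directory.
    existing_dir = os.path.join(tmp, "cache_dir")
    os.mkdir(existing_dir)

    # Case 2: the target already exists as a regular file.
    existing_file = os.path.join(tmp, "cache_file")
    with open(existing_file, "w") as handle:
        handle.write("not a directory")

    dir_result = makedirs_outcome(existing_dir)
    file_result = makedirs_outcome(existing_file)

print(dir_result, file_result)
```

If `os.path.isdir('/app/cache')` returns `False` inside the container (a plain file or a dangling symlink, for instance), that would be consistent with the traceback above.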

|

closed

|

2024-03-04T15:06:35Z

|

2024-03-07T18:54:46Z

|

https://github.com/sinaptik-ai/pandas-ai/issues/993

|

[

"wontfix"

] |

araghuvanshi-systango

| 2

|

graphistry/pygraphistry

|

pandas

| 269

|

[FEA] validator

|

**Is your feature request related to a problem? Please describe.**

A recent viz had some null titles; it would help to validate this somehow!

**Describe the solution you'd like**

Ex: https://gist.github.com/lmeyerov/423df6b3b5bd85d12fd74b85eca4a17a

- nodes not in edges

- edges referencing non-existent nodes

- na nodes/edges

- if colors/sizes/icons/titles, NA vals

- if no title and defaulting to guess title, NAs there
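
The checks listed above could be sketched roughly as follows. This is plain Python over lists of dicts, not pygraphistry's API; the function name `validate_graph` and the column names `id`, `src`, `dst`, and `title` are assumptions for illustration. With DataFrames the same checks would map onto `isin` and `isna`. The guessed-title case is not covered here.

```python
import math


def is_na(value) -> bool:
    """Treat None and float NaN as missing."""
    return value is None or (isinstance(value, float) and math.isnan(value))


def validate_graph(nodes, edges, node_col="id", src_col="src", dst_col="dst",
                   attr_cols=()):
    """Return a dict of problems found in a node list and an edge list."""
    node_ids = {n.get(node_col) for n in nodes if not is_na(n.get(node_col))}
    edge_ids = set()
    for edge in edges:
        for col in (src_col, dst_col):
            if not is_na(edge.get(col)):
                edge_ids.add(edge.get(col))
    return {
        # Nodes that no edge refers to.
        "nodes_not_in_edges": sorted(node_ids - edge_ids),
        # Endpoint ids used by edges but absent from the node list.
        "unknown_nodes_in_edges": sorted(edge_ids - node_ids),
        # Missing node ids and missing edge endpoints.
        "na_node_ids": sum(is_na(n.get(node_col)) for n in nodes),
        "na_edge_endpoints": sum(
            is_na(e.get(src_col)) or is_na(e.get(dst_col)) for e in edges
        ),
        # Missing values per bound attribute (colors, sizes, icons, titles).
        "na_attributes": {
            col: sum(is_na(n.get(col)) for n in nodes) for col in attr_cols
        },
    }


nodes = [
    {"id": "a", "title": "Alice"},
    {"id": "b", "title": None},
    {"id": "c", "title": "Carol"},
]
edges = [{"src": "a", "dst": "b"}, {"src": "b", "dst": "z"}]
report = validate_graph(nodes, edges, attr_cols=("title",))
print(report)
```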

|

open

|

2021-10-15T05:45:44Z

|

2024-12-16T17:25:38Z

|

https://github.com/graphistry/pygraphistry/issues/269

|

[

"enhancement",

"good-first-issue"

] |

lmeyerov

| 0

|

TracecatHQ/tracecat

|

pydantic

| 342

|

[FEATURE IDEA] Add UI to show action if `run_if` is specified

|

## Why

- It's hard to tell if a node has a conditional attached unless you select the node or gave it a meaningful title

## Suggested solution

- Add a greenish `#C1DEAF` border that shows up around the node

- Prompt the user to give the "condition" a human-readable name (why? because the expression will probably be too long)

- If no human-readable name is given, take the last `.attribute` and `operator value` part of the expression as the condition name.
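
A rough sketch of the fallback naming rule, in Python for illustration only (the actual UI code would live in the frontend). The expression shape, a dotted path followed by a comparison operator and a value and optionally wrapped in `${{ ... }}`, is an assumption and not Tracecat's actual grammar. `default_condition_name` is a hypothetical helper.

```python
import re
from typing import Optional

# Assumed shape: "<path>.<attribute> <operator> <value>" at the end of the
# expression. The value may not itself contain comparison characters, so the
# match lands on the last comparison in the expression.
_CONDITION = re.compile(r"\.(\w+)\s*(==|!=|<=|>=|<|>)\s*([^=!<>]+?)\s*$")


def default_condition_name(expression: str) -> Optional[str]:
    """Derive a short label from the tail of a run_if expression."""
    inner = expression.strip()
    if inner.startswith("${{") and inner.endswith("}}"):
        inner = inner[3:-2].strip()
    match = _CONDITION.search(inner)
    if match is None:
        return None  # caller would fall back to asking for a name
    attribute, operator, value = match.groups()
    return f"{attribute} {operator} {value}"


label = default_condition_name("${{ ACTIONS.check_ip.result.score >= 80 }}")
print(label)
```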

|

open

|

2024-08-22T17:08:12Z

|

2024-08-22T17:17:01Z

|

https://github.com/TracecatHQ/tracecat/issues/342

|

[

"enhancement",

"frontend"

] |

topher-lo

| 2

|

CorentinJ/Real-Time-Voice-Cloning

|

pytorch

| 385

|

What versions of everything does one need to avoid errors during setup?

|

Hi all,

I'm on Ubuntu 20.04 with Python 3.7 in a conda env. I have a Nvidia GTX660 GPU installed.

I'm currently rockin' torch 1.2, cuda-10-0, tensorflow 1.14.0, tensorflow-gpu 1.14.0, and torchvision 0.4.0, along with everything else in requirements.txt. I am using python 3.7. For the life of me, I can't figure out how to get demo_cli.py to not give the error a bunch of people get:

```Your PyTorch installation is not configured to use CUDA. If you have a GPU ready for deep learning, ensure that the drivers are properly installed, and that your CUDA version matches your PyTorch installation. CPU-only inference is currently not supported.```

Could someone give me the lowdown on precisely what packages and version numbers I need to make this thing fire up?
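
Before comparing version numbers, it may help to print what PyTorch itself reports. The sketch below uses only `torch.__version__`, `torch.version.cuda`, and the `torch.cuda` query functions; the helper name `cuda_report` is made up, and the import is guarded so the script still runs where PyTorch is missing.

```python
import importlib.util


def cuda_report() -> dict:
    """Collect the facts PyTorch's CUDA check depends on."""
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}

    import torch  # imported lazily so the script runs without PyTorch

    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "built_with_cuda": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }
    if report["cuda_available"]:
        report["device_name"] = torch.cuda.get_device_name(0)
        report["compute_capability"] = torch.cuda.get_device_capability(0)
    return report


report = cuda_report()
for key, value in report.items():
    print(f"{key}: {value}")
```

If `built_with_cuda` is `None`, the installed wheel is a CPU-only build. If it is set but `cuda_available` is `False`, the driver or the card itself is the more likely suspect; an older GPU may fall below what a given prebuilt binary supports.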

|

closed

|

2020-06-27T04:21:48Z

|

2020-06-29T21:58:11Z

|

https://github.com/CorentinJ/Real-Time-Voice-Cloning/issues/385

|

[] |

deltabravozulu

| 4

|

JaidedAI/EasyOCR

|

pytorch

| 584

|

AttributeError: 'Reader' object has no attribute 'detector'

|

I'm following the api documentation (https://www.jaided.ai/easyocr/documentation/), which points to the detector as a parameter, but I can't use it! :/

The traceback follows...

```bash

Traceback (most recent call last):

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/uvicorn/protocols/http/h11_impl.py", line 396, in run_asgi

result = await app(self.scope, self.receive, self.send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/uvicorn/middleware/proxy_headers.py", line 45, in __call__

return await self.app(scope, receive, send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/fastapi/applications.py", line 199, in __call__

await super().__call__(scope, receive, send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/applications.py", line 111, in __call__

await self.middleware_stack(scope, receive, send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/middleware/errors.py", line 181, in __call__

raise exc from None

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/middleware/errors.py", line 159, in __call__

await self.app(scope, receive, _send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/middleware/cors.py", line 78, in __call__

await self.app(scope, receive, send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/exceptions.py", line 82, in __call__

raise exc from None

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/exceptions.py", line 71, in __call__

await self.app(scope, receive, sender)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/routing.py", line 566, in __call__

await route.handle(scope, receive, send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/routing.py", line 227, in handle

await self.app(scope, receive, send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/routing.py", line 41, in app

response = await func(request)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/fastapi/routing.py", line 201, in app

raw_response = await run_endpoint_function(

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/fastapi/routing.py", line 148, in run_endpoint_function

return await dependant.call(**values)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/./web_ocr/routers/v2/ocr.py", line 71, in ocr_extract

result = await runner.run_pipeline(postprocesses=postprocesses,

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/./web_ocr/repository/ocr.py", line 259, in run_pipeline

text, conf = await self.apply_ocr(*args, **kwargs)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/./web_ocr/repository/ocr.py", line 246, in apply_ocr

text, conf = await self.framework.predict(self.im, *args, **kwargs)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/./web_ocr/repository/ocr.py", line 141, in predict

results = reader.readtext(image, **config)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/easyocr/easyocr.py", line 376, in readtext

horizontal_list, free_list = self.detect(img, min_size, text_threshold,\

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/easyocr/easyocr.py", line 274, in detect

text_box = get_textbox(self.detector, img, canvas_size, mag_ratio,\

AttributeError: 'Reader' object has no attribute 'detector'

ERROR:uvicorn.error:Exception in ASGI application

Traceback (most recent call last):

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/uvicorn/protocols/http/h11_impl.py", line 396, in run_asgi

result = await app(self.scope, self.receive, self.send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/uvicorn/middleware/proxy_headers.py", line 45, in __call__

return await self.app(scope, receive, send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/fastapi/applications.py", line 199, in __call__

await super().__call__(scope, receive, send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/applications.py", line 111, in __call__

await self.middleware_stack(scope, receive, send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/middleware/errors.py", line 181, in __call__

raise exc from None

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/middleware/errors.py", line 159, in __call__

await self.app(scope, receive, _send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/middleware/cors.py", line 78, in __call__

await self.app(scope, receive, send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/exceptions.py", line 82, in __call__

raise exc from None

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/exceptions.py", line 71, in __call__

await self.app(scope, receive, sender)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/routing.py", line 566, in __call__

await route.handle(scope, receive, send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/routing.py", line 227, in handle

await self.app(scope, receive, send)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/starlette/routing.py", line 41, in app

response = await func(request)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/fastapi/routing.py", line 201, in app

raw_response = await run_endpoint_function(

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/fastapi/routing.py", line 148, in run_endpoint_function

return await dependant.call(**values)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/./web_ocr/routers/v2/ocr.py", line 71, in ocr_extract

result = await runner.run_pipeline(postprocesses=postprocesses,

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/./web_ocr/repository/ocr.py", line 259, in run_pipeline

text, conf = await self.apply_ocr(*args, **kwargs)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/./web_ocr/repository/ocr.py", line 246, in apply_ocr

text, conf = await self.framework.predict(self.im, *args, **kwargs)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/./web_ocr/repository/ocr.py", line 141, in predict

results = reader.readtext(image, **config)

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/easyocr/easyocr.py", line 376, in readtext

horizontal_list, free_list = self.detect(img, min_size, text_threshold,\

File "/home/lead/Documents/wal-project/ocr/icaro-ocr/.venv/lib/python3.8/site-packages/easyocr/easyocr.py", line 274, in detect

text_box = get_textbox(self.detector, img, canvas_size, mag_ratio,\

AttributeError: 'Reader' object has no attribute 'detector'

```

|

closed

|

2021-11-03T18:39:29Z

|

2022-08-07T05:00:33Z

|

https://github.com/JaidedAI/EasyOCR/issues/584

|

[] |

igormcsouza

| 0

|

SYSTRAN/faster-whisper

|

deep-learning

| 1,215

|

Service Execution Failure: when Running FasterWhisper as a Service on Ubuntu

|

# Issue: Service Execution Failure - `status=6/ABRT`

The following code executes successfully when run directly on an Ubuntu-based system (for example, `python3 make_transcript.py`). However, when executed as a systemd service, it fails and exits with the error:

```

code=dumped, status=6/ABRT

```

## Code Snippet

```python

segments, _ = model.transcribe(audio_file, task='translate', vad_filter=True)
print("[Transcribing COMPLETE]")

cleaned_segments = []
stX = time.time()

for idx, segment in enumerate(tqdm(segments)):
    print(f"current running {idx} xxxxxxxxxxxxx")
    text = segment.text
    cleaned_segments.append({
        'start': segment.start,
        'end': segment.end,
        'text': text.strip()
    })

```

## Observed Behavior

1. The code runs without any issues when executed directly in a terminal or Python environment.

2. When the code is run as part of a systemd service, it crashes with the error below and does not finish executing.

3. It runs properly until it enters the `for` loop, where it crashes:

```

code=dumped, status=6/ABRT

```

4. Observe the failure in logs.

I would be very grateful if anyone can identify the cause of the failure and provide a resolution or guidance on debugging this issue further.
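
One detail that may help with debugging: in faster-whisper, `segments` is a generator, so the transcription work only runs once the loop starts iterating. A crash "on entering the for loop" is therefore consistent with the model inference itself aborting, not the loop body. The sketch below illustrates the lazy behavior with a hypothetical stand-in, `fake_transcribe`, which is not part of faster-whisper.

```python
from typing import Iterator, List


def fake_transcribe(chunks: List[str], log: List[str]) -> Iterator[str]:
    """Hypothetical stand-in for a lazy transcription call."""
    for chunk in chunks:
        log.append(f"decoding {chunk}")  # the expensive step runs here
        yield chunk.upper()


log: List[str] = []
segments = fake_transcribe(["a", "b"], log)
log.append("transcribe() returned")  # nothing has been decoded yet

results = list(segments)  # iterating is what triggers the work
print(log)
```

In the snippet from the report, this means `[Transcribing COMPLETE]` is printed before any audio has actually been decoded.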

|

open

|

2024-12-24T11:39:49Z

|

2024-12-24T11:39:49Z

|

https://github.com/SYSTRAN/faster-whisper/issues/1215

|

[] |

manashb96

| 0

|

hankcs/HanLP

|

nlp

| 1,319

|

Reporting problems encountered during installation, and the version numbers that finally installed successfully

|

<!--

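The notes and version number are required; otherwise there will be no reply. If you want a prompt reply, please fill in the template carefully. Thank you for your cooperation.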

-->

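## Notes

Please confirm the following:

* I have carefully read the following documents and found no answer:

- [Home page documentation](https://github.com/hankcs/HanLP)

- [wiki](https://github.com/hankcs/HanLP/wiki)

- [FAQ](https://github.com/hankcs/HanLP/wiki/FAQ)

* I have searched for my problem via [Google](https://www.google.com/#newwindow=1&q=HanLP) and the [issue search](https://github.com/hankcs/HanLP/issues) and found no answer.

* I understand that the open-source community is a free community brought together by shared interest and bears no responsibility or obligation. I will be polite and thank everyone who helps me.

* [x] I enter an x in these brackets to confirm the items above.

## Version

<!-- For a release, give the jar file name without the extension; for the GitHub repository version, state whether it is the master or portable branch -->

The current latest version is: pyhanlp 0.1.50

The version I am using is: pyhanlp 0.1.47

<!-- Everything above is required; below is free-form -->

## My problem

<!-- Please describe the problem in detail; the more detail, the more likely it gets solved -->

Environment:

* win10

* jdk 1.8

* python 3.7

Failed installation attempts:

* First attempt: ran `pip install pyhanlp` directly (version 0.1.50), which automatically installed the dependency jpype1 (version 0.7.0). After downloading the data files, running `hanlp` failed with: `startJVM() got an unexpected keyword argument 'convertStrings'`

* Second attempt: `pip install pyhanlp==0.1.47`, `pip install jpype1==0.6.2`; running it failed with: `AttributeError: module '_jpype' has no attribute 'setResource'`

Successful installation:

* `pip install pyhanlp==0.1.47`

* `pip install jpype1==0.6.3`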
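
To confirm which versions actually ended up installed, a small check such as the following can help. It uses the standard-library `importlib.metadata`, which requires Python 3.8 or newer (the report above uses 3.7, where the `importlib_metadata` backport would be needed). The helper name `installed_versions` is made up for illustration.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None."""
    found: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None  # not installed in this environment
    return found


versions = installed_versions(["pyhanlp", "JPype1"])
print(versions)
```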

|

closed

|

2019-11-07T01:48:48Z

|

2019-11-07T02:03:00Z

|

https://github.com/hankcs/HanLP/issues/1319

|

[

"discussion"

] |

chenwenhang

| 3

|

PaddlePaddle/ERNIE

|

nlp

| 134

|

Training job killed

|

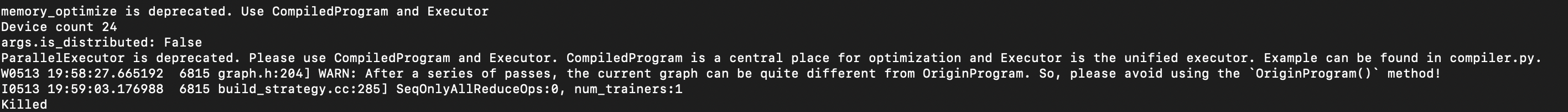

I downloaded the BERT demo and ran train.sh locally on my development machine; the job was killed immediately.

|

closed

|

2019-05-13T12:11:04Z

|

2019-10-12T09:15:09Z

|

https://github.com/PaddlePaddle/ERNIE/issues/134

|

[] |

MaxLingwei

| 5

|

deepfakes/faceswap

|

deep-learning

| 1,282

|

No Module Decorator

|

I tried to run training.py and it crashed with this in the console:

```

Traceback (most recent call last):

File "C:\Users\Admin\faceswap\lib\cli\launcher.py", line 217, in execute_script

process.process()

File "C:\Users\Admin\faceswap\scripts\train.py", line 218, in process

self._end_thread(thread, err)

File "C:\Users\Admin\faceswap\scripts\train.py", line 258, in _end_thread

thread.join()

File "C:\Users\Admin\faceswap\lib\multithreading.py", line 217, in join

raise thread.err[1].with_traceback(thread.err[2])

File "C:\Users\Admin\faceswap\lib\multithreading.py", line 96, in run

self._target(*self._args, **self._kwargs)

File "C:\Users\Admin\faceswap\scripts\train.py", line 280, in _training

raise err

File "C:\Users\Admin\faceswap\scripts\train.py", line 268, in _training

model = self._load_model()

File "C:\Users\Admin\faceswap\scripts\train.py", line 292, in _load_model

model: "ModelBase" = PluginLoader.get_model(self._args.trainer)(

File "C:\Users\Admin\faceswap\plugins\plugin_loader.py", line 131, in get_model

return PluginLoader._import("train.model", name, disable_logging)

File "C:\Users\Admin\faceswap\plugins\plugin_loader.py", line 197, in _import

module = import_module(mod)

File "C:\Users\Admin\anaconda3\envs\faceswap\lib\importlib\__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "<frozen importlib._bootstrap>", line 1030, in _gcd_import

File "<frozen importlib._bootstrap>", line 1007, in _find_and_load

File "<frozen importlib._bootstrap>", line 986, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 680, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 850, in exec_module

File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed

File "C:\Users\Admin\faceswap\plugins\train\model\original.py", line 11, in <module>

from ._base import KerasModel, ModelBase

File "C:\Users\Admin\faceswap\plugins\train\model\_base\__init__.py", line 4, in <module>

from .model import get_all_sub_models, KerasModel, ModelBase # noqa

File "C:\Users\Admin\faceswap\plugins\train\model\_base\model.py", line 23, in <module>

from .settings import Loss, Optimizer, Settings

File "C:\Users\Admin\faceswap\plugins\train\model\_base\settings.py", line 37, in <module>

from lib.model.autoclip import AutoClipper # pylint:disable=ungrouped-imports

File "C:\Users\Admin\faceswap\lib\model\autoclip.py", line 8, in <module>

import tensorflow_probability as tfp

File "C:\Users\Admin\anaconda3\envs\faceswap\lib\site-packages\tensorflow_probability\__init__.py", line 75, in <module>

from tensorflow_probability.python import * # pylint: disable=wildcard-import

File "C:\Users\Admin\anaconda3\envs\faceswap\lib\site-packages\tensorflow_probability\python\__init__.py", line 21, in <module>

from tensorflow_probability.python import bijectors

File "C:\Users\Admin\anaconda3\envs\faceswap\lib\site-packages\tensorflow_probability\python\bijectors\__init__.py", line 23, in <module>

from tensorflow_probability.python.bijectors.absolute_value import AbsoluteValue

File "C:\Users\Admin\anaconda3\envs\faceswap\lib\site-packages\tensorflow_probability\python\bijectors\absolute_value.py", line 23, in <module>

from tensorflow_probability.python.bijectors import bijector

File "C:\Users\Admin\anaconda3\envs\faceswap\lib\site-packages\tensorflow_probability\python\bijectors\bijector.py", line 33, in <module>

from tensorflow_probability.python.internal import distribution_util

File "C:\Users\Admin\anaconda3\envs\faceswap\lib\site-packages\tensorflow_probability\python\internal\distribution_util.py", line 29, in <module>

from tensorflow_probability.python.internal import prefer_static

File "C:\Users\Admin\anaconda3\envs\faceswap\lib\site-packages\tensorflow_probability\python\internal\prefer_static.py", line 22, in <module>

import decorator

ModuleNotFoundError: No module named 'decorator'

11/15/2022 21:24:55 CRITICAL An unexpected crash has occurred. Crash report written to 'C:\Users\Admin\faceswap\crash_report.2022.11.15.212446667521.log'. You MUST provide this file if seeking assistance. Please verify you are running the latest version of faceswap before reporting

`

`11/15/2022 21:24:44 MainProcess MainThread __init__ wrapper DEBUG CONFIGDIR=C:\Users\Admin\.matplotlib

11/15/2022 21:24:44 MainProcess MainThread __init__ <module> DEBUG interactive is False

11/15/2022 21:24:44 MainProcess MainThread __init__ <module> DEBUG platform is win32

11/15/2022 21:24:44 MainProcess MainThread __init__ wrapper DEBUG CACHEDIR=C:\Users\Admin\.matplotlib

11/15/2022 21:24:44 MainProcess MainThread font_manager _load_fontmanager DEBUG Using fontManager instance from C:\Users\Admin\.matplotlib\fontlist-v330.json

11/15/2022 21:24:44 MainProcess MainThread queue_manager __init__ DEBUG Initializing _QueueManager

11/15/2022 21:24:44 MainProcess MainThread queue_manager __init__ DEBUG Initialized _QueueManager

11/15/2022 21:24:45 MainProcess MainThread stats __init__ DEBUG Initializing GlobalSession

11/15/2022 21:24:45 MainProcess MainThread stats __init__ DEBUG Initialized GlobalSession

11/15/2022 21:24:45 MainProcess MainThread train __init__ DEBUG Initializing Train: (args: Namespace(func=<bound method ScriptExecutor.execute_script of <lib.cli.launcher.ScriptExecutor object at 0x00000268DF228E80>>, exclude_gpus=None, configfile=None, loglevel='INFO', logfile=None, redirect_gui=True, colab=False, input_a='C:\\Users\\Admin\\Desktop\\faces\\A', input_b='C:\\Users\\Admin\\Desktop\\faces\\B', model_dir='C:\\Users\\Admin\\Desktop\\faces', load_weights=None, trainer='original', summary=False, freeze_weights=False, batch_size=16, iterations=1180000, distributed=False, distribution_strategy='default', save_interval=250, snapshot_interval=25000, timelapse_input_a=None, timelapse_input_b=None, timelapse_output=None, preview=True, write_image=False, no_logs=False, warp_to_landmarks=False, no_flip=False, no_augment_color=False, no_warp=False)

11/15/2022 21:24:45 MainProcess MainThread train _get_images DEBUG Getting image paths

11/15/2022 21:24:45 MainProcess MainThread utils get_image_paths DEBUG Scanned Folder contains 33 files

11/15/2022 21:24:45 MainProcess MainThread utils get_image_paths DEBUG Returning 33 images

11/15/2022 21:24:45 MainProcess MainThread train _get_images DEBUG Test file: (filename: C:\Users\Admin\Desktop\faces\A\2020-04-17 22.55.41 2289591023441328516_291158439_0.png, metadata: {'width': 512, 'height': 512, 'itxt': {'alignments': {'x': 202, 'w': 124, 'y': 179, 'h': 175, 'landmarks_xy': [[186.1354217529297, 263.46875], [193.3229217529297, 282.63543701171875], [200.5104217529297, 299.40625], [207.6979217529297, 313.78125], [219.67709350585938, 330.55206298828125], [236.44790649414062, 342.53125], [255.6145782470703, 352.11456298828125], [279.57293701171875, 361.69793701171875], [301.13543701171875, 364.09375], [315.51043701171875, 356.90625], [317.90625, 349.71875], [317.90625, 337.73956298828125], [320.30206298828125, 318.57293701171875], [327.48956298828125, 297.01043701171875], [329.88543701171875, 280.23956298828125], [327.48956298828125, 265.86456298828125], [322.69793701171875, 249.09375], [231.65625, 241.90625], [243.6354217529297, 234.71875], [258.01043701171875, 229.9270782470703], [267.59375, 229.9270782470703], [277.17706298828125, 234.71875], [303.53125, 229.9270782470703], [308.32293701171875, 227.53125], [313.11456298828125, 227.53125], [317.90625, 229.9270782470703], [317.90625, 234.71875], [296.34375, 249.09375], [303.53125, 261.07293701171875], [313.11456298828125, 270.65625], [315.51043701171875, 280.23956298828125], [289.15625, 289.82293701171875], [296.34375, 289.82293701171875], [303.53125, 289.82293701171875], [308.32293701171875, 287.42706298828125], [310.71875, 287.42706298828125], [250.8229217529297, 256.28125], [260.40625, 253.8854217529297], [267.59375, 253.8854217529297], [272.38543701171875, 256.28125], [267.59375, 258.67706298828125], [258.01043701171875, 258.67706298828125], [298.73956298828125, 251.4895782470703], [305.92706298828125, 246.6979217529297], [313.11456298828125, 246.6979217529297], [313.11456298828125, 249.09375], [313.11456298828125, 251.4895782470703], [305.92706298828125, 251.4895782470703], 
[265.19793701171875, 311.38543701171875], [281.96875, 306.59375], [298.73956298828125, 301.80206298828125], [305.92706298828125, 301.80206298828125], [310.71875, 299.40625], [315.51043701171875, 301.80206298828125], [313.11456298828125, 306.59375], [313.11456298828125, 318.57293701171875], [310.71875, 323.36456298828125], [305.92706298828125, 325.7604064941406], [296.34375, 325.7604064941406], [284.36456298828125, 320.96875], [267.59375, 311.38543701171875], [293.94793701171875, 306.59375], [303.53125, 306.59375], [308.32293701171875, 306.59375], [313.11456298828125, 306.59375], [308.32293701171875, 313.78125], [303.53125, 316.17706298828125], [293.94793701171875, 316.17706298828125]], 'mask': {'components': {'mask': b"x\x9c\xed\x9a[\x92\xc2@\x08E\xdd\x19Kci,-\xa3\xd1\xa91>:\x81{i\xa6*\x9c\x0f\x7f\x0f\rD#\xcd\xe5\xd24M\xd34M\xd34M\xd34M\xd34M\xf3?P\xd5eY\xae\x9f:\xdb,\xaby\xcb5\x0c\x99cW{\x93\xffa\xc9\xe9\x90\x91|\x9b\x0e~\x1c\xf2\x9e\xf6\xbd0h5\x91a\xda\x07\x110\xe4\x1f\xfa\xed0\x06\x1f<\xee^\x81\xdc\x06\xca\x81\xf3\x13\xdc7B\r\x80\x14\x1c\xf53\x92\xfe\x8cO\xceu\xbb\xfdJ\xd7;\xcf\xcf\xf7\xfb\x1a\xe0\xec~\xe3\x07\xe0\xf2\xeb\xc9\xfdR\xecOh@\xdf;\x08\xdf_\xfd\x00\xf8\xfczr?\xbf\x01\x9co@t\xbf\xf3\x01\xd4\x93\xfb\xa5\xd8\xcfo\x00\xa7\xdf\x8a\xfdZ\xec\x97b?\xbd\x01\xbc~+\xf6k\xb1\x9f]\x00\xb7\xdf\xa8z\xff?`\xa5\xfa\xfd3 \xa1\xfa\xddzn\x03D\x06\x10Z\xec\x17\xa2?\xa0g\x16 6\x7fR\x9a?8\x80\xa4\xf9czZ\x02\xc2\xe3?\x92?<\x80V\x8e?\xaa'%\x00\x18\x7f+\xc3\x8f\xcc\xff\tzh\xf8\xae\xb8\x1f\xbb}\xc0\xfd\x90\x1e\xff\x15\x80\xef>@?|\xfb\x04\x06\x80\xea\xc1\x00\x18w_\n\xf8)\x97\x7f\x16\xd6\xa3\xdd\x87\x06\xc0\xba\x80\x8d\x06@\xd2G\x03 \xa5?\x1c\x00s\x1d@\x03~\xa2>\xf2=\xc0\xbe\xfe7\xa7\x9f\xbe\x8d\xa1.=\xb1\xfb~\x11\x8f?e\x0b\xc4\x8e\xfb3\xf4\x8e\x1a$\xa4\x7f\xe5h\r\xf2v\x81\x8e\xa5 
M\x7f\xec\x8a>w%k\xbf\x08\xd9\xabX;E\xc8\xea\xbe'\x86E\x98\xb1\x896h\x83\t\xc7\xbf\xf1\xb5\x08\x93\x16\xf1\xbeE0s\x1f\xf1\xc3v\xd6\xecu\xc8\xcd\xbe\xd0\xac%\xc8\x17\xee!\x14\xc9\x1f!T\xca\x19\xfc\x00=Z\x92s", 'affine_matrix': [[0.5971773365351224, -0.10676463488366382, -77.57558414093813], [0.10676463488366382, 0.5971773365351223, -123.80531080504358]], 'interpolator': 3, 'stored_size': 128, 'stored_centering': 'face'}, 'extended': {'mask': b"x\x9c\xed\xda\x81m\xc30\x0c\x04@o\xc6\xd1~4\x8d\xe6\xb6i\x02\x18\xa8\xea\x9a\xfc\xa7\xd8\xc0\xfc\x05\x8e!\x95\xb4\x90\xb8m\x9dN\xa7\xd3\xe9t:\x9dN\xa7\xd3\xe9t:\xff.\x06X\x11\r`\x7ff\x00\x8b\xed\xb1\xff\x0cVUa\x13\xfc\xd0\x8a\xec\x81\xe0\x84\xcf\x1f\x88\xcdZ?O\xc6@\xcez?o\x85r\x1c\x8e\x0f\x7f\xa8\xa0T\x97\xb5`\xfa\x9d\xbb\x18\x81\x1e\xc7\xf9\tD\x1b\xff\n\xf75`\xf5}g\xe6\x0f\x16\xdf\x99\xf1+\xf4\xb0\xcf7\x9e\xf1ez\xc8\x87\x0c\x0f\xf8&\xd5\xbd\xbe\xb0\xf1\xcfx\xbe\x7fz\xdd\xf7\xfb\x03=\xef\xf2\xad\xd8\xdf\xee\xee\x8fb\x1f7\xf7\xad\xd8O8\x80\xef\xe5\x0f\xb9\xef\xfb\xff\x13r\xdf\xf7\x07\xb0\xdaO8\x00\xef\xe5\xe3\xe6\xbe\x15\xfb\xfa\x03\xe0\xf4G\xb1\x8fb\xdf\x8a}\xf9\x01\xf0\xfa\xa3\xd8G\xb1\xaf\x1e\x80\xdb\x1fR\xde\x7f\xff\x03\xa9\xef\xbf\xff1\xa9\xef\xe6\xb5\x07 r\xfd\x86b\xdf\x84~\x80W\x0e v\xfb\n\x99\x1f\xbc}\x95\xf91^\xd6\x80\xf0\xe5\xb7\xc8\x0f_>C\xe3GyQ\x03\x88\xbb\x7f(|\xe6\xee_\xc0SO/\xe0}\xee\xe9\x85\xf7)\x9e\xff+@\xbf|\x91>\xfd\xf2H\x16\xc0\xf2d\x01\x8a\xf7o\x10\xbe\xe4\xe1w\x84y\xd1\xcbw\xb8\x00\xd5\xfaA\xb4\x00\x11\x1f-@\xb6x\x10,@\xb9y\x81\x80/\xe4#\xbf\x03\xea\xe5\x97\xe1\xf4\xe5{Hp\xf1\xc2\xd3\xf7\x8ay\xfc\x94\r\xa8q\xdd\xcf\xe0\x1d3Hh\xff#Wg\x90\xb7\x05w\xad\x05i\xfc\xb5'\xfa\xdcU\xc4\xbf\x87P\xbc\x84\x98u\xfa\x0e9\x1d\xc2\x8a\xa5\xd8\x93c\xb0\xe0\xe3\x7f\xe5\xd7!,\xdb\t\x9eW\xb0r\x1bx\xb2+\xb4x\x19\xf9\xb3\x84\xc3I(\xda\xc7\xfe.\xa1l\x19\xfcQB%\xae\xc8\x07D\xd6_\xbc", 'affine_matrix': [[0.5971773365351224, -0.10676463488366382, -77.57558414093813], [0.10676463488366382, 0.5971773365351223, -123.80531080504358]], 
'interpolator': 3, 'stored_size': 128, 'stored_centering': 'face'}}, 'identity': {}}, 'source': {'alignments_version': 2.3, 'original_filename': '2020-04-17 22.55.41 2289591023441328516_291158439_0.png', 'face_index': 0, 'source_filename': '2020-04-17 22.55.41 2289591023441328516_291158439.jpg', 'source_is_video': False, 'source_frame_dims': (1349, 1080)}}})

11/15/2022 21:24:45 MainProcess MainThread train _get_images INFO Model A Directory: 'C:\Users\Admin\Desktop\faces\A' (33 images)

11/15/2022 21:24:45 MainProcess MainThread utils get_image_paths DEBUG Scanned Folder contains 180 files

11/15/2022 21:24:45 MainProcess MainThread utils get_image_paths DEBUG Returning 180 images

11/15/2022 21:24:45 MainProcess MainThread train _get_images DEBUG Test file: (filename: C:\Users\Admin\Desktop\faces\B\2019-07-14 10.37.45 2087731961133796176_380280568_0.png, metadata: {'width': 512, 'height': 512, 'itxt': {'alignments': {'x': 308, 'w': 631, 'y': 78, 'h': 937, 'landmarks_xy': [[328.24359130859375, 427.7779541015625], [315.67950439453125, 528.290771484375], [315.67950439453125, 603.6753540039062], [328.24359130859375, 679.0599975585938], [340.80767822265625, 767.0087280273438], [365.9359130859375, 842.3933715820312], [403.62823486328125, 892.6497192382812], [441.32049560546875, 942.9061889648438], [529.2692260742188, 1005.7266235351562], [629.7820434570312, 993.1625366210938], [692.6025390625, 955.4702758789062], [742.8589477539062, 917.7778930664062], [805.6795043945312, 854.9574584960938], [843.3717651367188, 779.5728149414062], [881.0640869140625, 704.1881713867188], [906.1922607421875, 628.8035888671875], [931.320556640625, 540.8548583984375], [365.9359130859375, 339.8292236328125], [416.19232177734375, 302.13690185546875], [466.44873046875, 314.7010498046875], [504.14105224609375, 327.26513671875], [541.8333129882812, 352.393310546875], [730.2948608398438, 390.08563232421875], [767.9871826171875, 377.52154541015625], [830.8076782226562, 377.52154541015625], [881.0640869140625, 390.08563232421875], [906.1922607421875, 440.342041015625], [604.6538696289062, 490.59844970703125], [604.6538696289062, 553.4189453125], [592.0897216796875, 603.6753540039062], [579.525634765625, 653.9317626953125], [516.7051391601562, 679.0599975585938], [541.8333129882812, 691.6240844726562], [566.9615478515625, 704.1881713867188], [604.6538696289062, 704.1881713867188], [629.7820434570312, 704.1881713867188], [416.19232177734375, 427.7779541015625], [441.32049560546875, 427.7779541015625], [491.576904296875, 427.7779541015625], [529.2692260742188, 465.47027587890625], [479.0128173828125, 465.47027587890625], [441.32049560546875, 452.9061279296875], 
[705.1666870117188, 490.59844970703125], [755.423095703125, 490.59844970703125], [793.1153564453125, 490.59844970703125], [830.8076782226562, 515.7266845703125], [793.1153564453125, 528.290771484375], [742.8589477539062, 515.7266845703125], [453.8846435546875, 792.1369018554688], [479.0128173828125, 767.0087280273438], [541.8333129882812, 754.4446411132812], [566.9615478515625, 754.4446411132812], [592.0897216796875, 754.4446411132812], [629.7820434570312, 792.1369018554688], [667.474365234375, 829.8291625976562], [629.7820434570312, 867.5215454101562], [579.525634765625, 880.0856323242188], [541.8333129882812, 880.0856323242188], [504.14105224609375, 867.5215454101562], [479.0128173828125, 842.3933715820312], [453.8846435546875, 792.1369018554688], [529.2692260742188, 779.5728149414062], [554.3974609375, 792.1369018554688], [592.0897216796875, 804.7009887695312], [654.9102783203125, 829.8291625976562], [579.525634765625, 829.8291625976562], [554.3974609375, 829.8291625976562], [516.7051391601562, 817.2650756835938]], 'mask': {'components': {'mask': b'x\x9c\xed\x99Q\xae\xc20\x0c\x04{\xb3\x1c-G\xf3\xd1\xca\xa3| D\x81\xc4\xb3I@o\xe7\x02\xb3\xb5\xe3Tr\xb6\xcd\x18c\x8c1\xc6\x18c\x8c1\xc6\x18c\xcc7R\xea)bK\xec\xfd\x94\xb5za\x80\x9c~\xdfE\xfa\x92\xd4\xef\xb1V/\n\x90\xd7K\x8e\x00\xf8|I\x01*\xf1\x0b\n\xc0\xfc\xbc\x00H/\x98A\xe8\xa77q\x81~Z\x80J\xfd\xb0\x00\xd8\x0f\x0b\x80\xf5\xb0\x00\xf6S\xd8\x15\xc8\xfdH\xbf\xdc\x1f\x8b\xfd\xf5\xd7\xfd\xf0\x0f\x88\xfd\xab\xef_\xfb\x19\xd4\x0f\xf5\xff\xde_\x7f\xdb\x8f7\x01e\xb1\x1f\x1e\x00\xee\x0f\xe4\xc7zx\x00\xb8\x1f5@\xb1\x88\n\xe0W\xec\x80\n\xf0\x0b\xf4\xa4\x01\x9a\rPM\xfb5{\xc8\x92\xf6K\xf4\xa0\x01"\x7fM\xeaUk\xe0\x92\xf4\xcb6\xb0I\xbfJ\x9fl\x80f\xfa\x0eR~\xe1+@d\xfc:}\xae\x01B\x7f\xa6\x01\xd2G\x98D\x01\x94\xfaD\x01\x84\xef?WJ\xa7^8|7\xa2\xcf\xaf\xd6w\x16@\\\xfd+\xb5C\xaf~\x80<h\xd7\xcb\x9b\x7f\xd0\xde\x81\x01\xd5\xef\t0H\xff\x17 
\x96\xea\xb7\xa6)\x1c\xd3\xfc\xe6\x00c\xf5\x1f\x03\x8c\xd6\x7f\x080^\xffv\x0c\x86\xdc;\xed\x01F\x9e\xfc\x87\x00\xe7=\x98\xa5?O0\xa3\xf5\xaf\x13\xd4\x89\x1f\xff\x94 \xe6\xcb\xef\tb\xce\xa1\x7f\x91`\xa5\xdc\x18cL\x9a\x0bJ\xab\xda&', 'affine_matrix': [[0.10563867057061942, 0.02008655320207599, -11.293521284931124], [-0.020086553202075995, 0.10563867057061942, 20.28065738741556]], 'interpolator': 2, 'stored_size': 128, 'stored_centering': 'face'}, 'extended': {'mask': b"x\x9c\xed\x99\x8b\x8d\x03!\x0cD\xb73\x97\xe6\xd2\\\x1a\x97\x8f\xa2\xe8\x94\x90\x80g\xc0Ze^\x01y\x13\xc6 \xc4\x1e\x87\x10B\x08!\x84\x10B\x08!\x84\x10\xf3\xb8;\xed\xb7\xac]\xf0\x0e\xad\x0b-@\xf4\x1d\x1f\xb1Z}k\x14\xbd\xa5\xf5-j\xf5\x94\x06\x02\xf1\xe3\x010=\xdc\x80azx\x04\x1d\xf5\x83\x87\x80\xfc(\xf2\xd7\xfa\xc1\x01\x90\x1fE\xfeZ?6\x00\xf2\x9f\xdd\x8f]\xc1p?\xa4/\xf7G\xb1\xdf\xcf\xee\x07o\xe0\xb0\xbf\xfa\x02(?\x06\xea\x07\xf5?\xef\xf7s\xfb\xe1G0+\xf6\x83\x03\x80\xfb\x03\xf2\xc3zp\x00p?T\x00\xe3\r6\x00?\xe3\t\xd6\x00?A\x8f\x14\xc0x\x80E&\x90\xf3\x04oi?E\x0f\x14@\xf2{R\xcf\xfa\x02bI?\xe9\x03H\xba\x00\x96>Y\x00g\xf7\xddH\xf9y_\xe0rg0O\x9f+\x80\xe8\xcf\x14@\\\xfe\xd4\x020\xf5\x89\x05\xa0m\xfe;6\xa9'n\xbe;1\xe7g\xeb'\x17\x80\xbc\xfaW|BO\x9d\xfd\x07\xe3zz\xf97\xc6\x1bX\xb0\xfa3\x01\x16\xe9/\x01\xa2T\x7f\x0c\xed\xc25\xe5\x0f\x07X\xab\xff\x1a`\xb5\xfeK\x80\xf5\xfa\x8f\xdb`\xc9\xb93\x1e`\xe5\xe4\xff\x0b\xf0\xbe\x83]\xfa\xf7\tvT\xdfO\xe0\x1b\xff\xfcK\x82\xd8/\x7f&\x88=C\xdfIP)\x17B\x08\x91\xe6\x0f\xfc\xf7\xa9.", 'affine_matrix': [[0.10563867057061942, 0.02008655320207599, -11.293521284931124], [-0.020086553202075995, 0.10563867057061942, 20.28065738741556]], 'interpolator': 2, 'stored_size': 128, 'stored_centering': 'face'}}, 'identity': {}}, 'source': {'alignments_version': 2.3, 'original_filename': '2019-07-14 10.37.45 2087731961133796176_380280568_0.png', 'face_index': 0, 'source_filename': '2019-07-14 10.37.45 2087731961133796176_380280568.jpg', 'source_is_video': False, 'source_frame_dims': (1350, 1080)}}})

11/15/2022 21:24:45 MainProcess MainThread train _get_images INFO Model B Directory: 'C:\Users\Admin\Desktop\faces\B' (180 images)

11/15/2022 21:24:45 MainProcess MainThread train _get_images DEBUG Got image paths: [('a', '33 images'), ('b', '180 images')]

11/15/2022 21:24:45 MainProcess MainThread train _validate_image_counts WARNING At least one of your input folders contains fewer than 250 images. Results are likely to be poor.

11/15/2022 21:24:45 MainProcess MainThread train _validate_image_counts WARNING You need to provide a significant number of images to successfully train a Neural Network. Aim for between 500 - 5000 images per side.

11/15/2022 21:24:45 MainProcess MainThread preview_cv __init__ DEBUG Initializing: PreviewBuffer

11/15/2022 21:24:45 MainProcess MainThread preview_cv __init__ DEBUG Initialized: PreviewBuffer

11/15/2022 21:24:45 MainProcess preview preview_tk __init__ DEBUG Initializing PreviewTk (parent: 'None')

11/15/2022 21:24:45 MainProcess preview preview_cv __init__ DEBUG Initializing PreviewTk parent (triggers: {'toggle_mask': <threading.Event object at 0x00000268DF24EC10>, 'refresh': <threading.Event object at 0x00000268EA541A90>, 'save': <threading.Event object at 0x00000268EA549700>, 'quit': <threading.Event object at 0x00000268EA5A8700>, 'shutdown': <threading.Event object at 0x00000268EA5A8A60>})

11/15/2022 21:24:45 MainProcess preview preview_cv __init__ DEBUG Initialized PreviewTk parent

11/15/2022 21:24:45 MainProcess MainThread train __init__ DEBUG Initialized Train

11/15/2022 21:24:45 MainProcess MainThread train process DEBUG Starting Training Process

11/15/2022 21:24:45 MainProcess MainThread train process INFO Training data directory: C:\Users\Admin\Desktop\faces

11/15/2022 21:24:45 MainProcess MainThread train _start_thread DEBUG Launching Trainer thread

11/15/2022 21:24:45 MainProcess MainThread multithreading __init__ DEBUG Initializing MultiThread: (target: '_training', thread_count: 1)

11/15/2022 21:24:45 MainProcess MainThread multithreading __init__ DEBUG Initialized MultiThread: '_training'

11/15/2022 21:24:45 MainProcess MainThread multithreading start DEBUG Starting thread(s): '_training'

11/15/2022 21:24:45 MainProcess MainThread multithreading start DEBUG Starting thread 1 of 1: '_training'

11/15/2022 21:24:45 MainProcess preview preview_tk __init__ DEBUG Initializing _Taskbar (parent: '.!frame', taskbar: None)

11/15/2022 21:24:45 MainProcess preview preview_tk _add_scale_combo DEBUG Adding scale combo

11/15/2022 21:24:45 MainProcess MainThread multithreading start DEBUG Started all threads '_training': 1

11/15/2022 21:24:45 MainProcess MainThread train _start_thread DEBUG Launched Trainer thread

11/15/2022 21:24:45 MainProcess MainThread train _output_startup_info DEBUG Launching Monitor

11/15/2022 21:24:45 MainProcess MainThread train _output_startup_info INFO ===================================================

11/15/2022 21:24:45 MainProcess MainThread train _output_startup_info INFO Starting

11/15/2022 21:24:45 MainProcess MainThread train _output_startup_info INFO Using live preview

11/15/2022 21:24:45 MainProcess MainThread train _output_startup_info INFO ===================================================

11/15/2022 21:24:45 MainProcess preview preview_tk _add_scale_combo DEBUG Added scale combo: '.!frame.!frame.!combobox'

11/15/2022 21:24:45 MainProcess preview preview_tk _add_scale_slider DEBUG Adding scale slider

11/15/2022 21:24:45 MainProcess preview preview_tk _add_scale_slider DEBUG Added scale slider: '.!frame.!frame.!scale'

11/15/2022 21:24:45 MainProcess preview preview_tk _add_interpolator_radio DEBUG Adding nearest_neighbour radio button

11/15/2022 21:24:45 MainProcess preview preview_tk _add_interpolator_radio DEBUG Added .!frame.!frame.!frame.!radiobutton radio button

11/15/2022 21:24:45 MainProcess preview preview_tk _add_interpolator_radio DEBUG Adding bicubic radio button

11/15/2022 21:24:45 MainProcess preview preview_tk _add_interpolator_radio DEBUG Added .!frame.!frame.!frame.!radiobutton2 radio button

11/15/2022 21:24:45 MainProcess preview preview_tk _add_save_button DEBUG Adding save button

11/15/2022 21:24:45 MainProcess preview preview_tk _add_save_button DEBUG Added save burron: '.!frame.!frame.!button'

11/15/2022 21:24:45 MainProcess preview preview_tk __init__ DEBUG Initialized _Taskbar ('<lib.training.preview_tk._Taskbar object at 0x00000268EA5A8BE0>')

11/15/2022 21:24:45 MainProcess preview preview_tk _get_geometry DEBUG Obtaining screen geometry

11/15/2022 21:24:45 MainProcess preview preview_tk _get_geometry DEBUG Obtained screen geometry: (1920, 1080)

11/15/2022 21:24:45 MainProcess preview preview_tk __init__ DEBUG Initializing _PreviewCanvas (parent: '.!frame', scale_var: PY_VAR1, screen_dimensions: (1920, 1080))

11/15/2022 21:24:45 MainProcess preview preview_tk _configure_scrollbars DEBUG Configuring scrollbars

11/15/2022 21:24:45 MainProcess preview preview_tk _configure_scrollbars DEBUG Configured scrollbars. x: '.!frame.!frame2.!scrollbar', y: '.!frame.!frame2.!scrollbar2'

11/15/2022 21:24:45 MainProcess preview preview_tk __init__ DEBUG Initialized _PreviewCanvas ('.!frame.!frame2.!_previewcanvas')

11/15/2022 21:24:45 MainProcess preview preview_tk __init__ DEBUG Initializing _Image: (save_variable: PY_VAR0, is_standalone: True)

11/15/2022 21:24:45 MainProcess preview preview_tk __init__ DEBUG Initialized _Image

11/15/2022 21:24:45 MainProcess preview preview_tk __init__ DEBUG Initializing _Bindings (canvas: '.!frame.!frame2.!_previewcanvas', taskbar: '<lib.training.preview_tk._Taskbar object at 0x00000268EA5A8BE0>', image: '<lib.training.preview_tk._Image object at 0x00000268EA5D78E0>')

11/15/2022 21:24:45 MainProcess preview preview_tk _set_mouse_bindings DEBUG Binding mouse events

11/15/2022 21:24:45 MainProcess preview preview_tk _set_mouse_bindings DEBUG Bound mouse events

11/15/2022 21:24:45 MainProcess preview preview_tk _set_key_bindings DEBUG Binding key events

11/15/2022 21:24:45 MainProcess preview preview_tk _set_key_bindings DEBUG Bound key events

11/15/2022 21:24:45 MainProcess preview preview_tk __init__ DEBUG Initialized _Bindings

11/15/2022 21:24:45 MainProcess preview preview_tk _process_triggers DEBUG Processing triggers

11/15/2022 21:24:45 MainProcess preview preview_tk _process_triggers DEBUG Adding trigger for key: 'm'

11/15/2022 21:24:45 MainProcess preview preview_tk _process_triggers DEBUG Adding trigger for key: 'r'

11/15/2022 21:24:45 MainProcess preview preview_tk _process_triggers DEBUG Adding trigger for key: 's'

11/15/2022 21:24:45 MainProcess preview preview_tk _process_triggers DEBUG Adding trigger for key: 'Return'

11/15/2022 21:24:45 MainProcess preview preview_tk _process_triggers DEBUG Processed triggers

11/15/2022 21:24:45 MainProcess preview preview_tk pack DEBUG Packing master frame: (args: (), kwargs: {'fill': 'both', 'expand': True})

11/15/2022 21:24:45 MainProcess preview preview_tk _output_helptext INFO ---------------------------------------------------

11/15/2022 21:24:45 MainProcess preview preview_tk _output_helptext INFO Preview key bindings:

11/15/2022 21:24:45 MainProcess preview preview_tk _output_helptext INFO Zoom: +/-

11/15/2022 21:24:45 MainProcess preview preview_tk _output_helptext INFO Toggle Zoom Mode: i

11/15/2022 21:24:45 MainProcess preview preview_tk _output_helptext INFO Move: arrow keys

11/15/2022 21:24:45 MainProcess preview preview_tk _output_helptext INFO Save Preview: Ctrl+s

11/15/2022 21:24:45 MainProcess preview preview_tk _output_helptext INFO ---------------------------------------------------

11/15/2022 21:24:45 MainProcess preview preview_tk __init__ DEBUG Initialized PreviewTk

11/15/2022 21:24:45 MainProcess preview preview_cv _launch DEBUG Launching PreviewTk

11/15/2022 21:24:45 MainProcess preview preview_cv _launch DEBUG Waiting for preview image

11/15/2022 21:24:46 MainProcess _training train _training DEBUG Commencing Training

11/15/2022 21:24:46 MainProcess _training train _training INFO Loading data, this may take a while...

11/15/2022 21:24:46 MainProcess _training train _load_model DEBUG Loading Model

11/15/2022 21:24:46 MainProcess _training utils get_folder DEBUG Requested path: 'C:\Users\Admin\Desktop\faces'

11/15/2022 21:24:46 MainProcess _training utils get_folder DEBUG Returning: 'C:\Users\Admin\Desktop\faces'

11/15/2022 21:24:46 MainProcess _training plugin_loader _import INFO Loading Model from Original plugin...

11/15/2022 21:24:46 MainProcess _training multithreading run DEBUG Error in thread (_training): No module named 'decorator'

11/15/2022 21:24:46 MainProcess MainThread train _monitor DEBUG Thread error detected

11/15/2022 21:24:46 MainProcess MainThread train shutdown DEBUG Sending shutdown to preview viewer