| repo_name (string, lengths 9–75) | topic (string, 30 classes) | issue_number (int64, 1–203k) | title (string, lengths 1–976) | body (string, lengths 0–254k) | state (string, 2 classes) | created_at (string, length 20) | updated_at (string, length 20) | url (string, lengths 38–105) | labels (list, lengths 0–9) | user_login (string, lengths 1–39) | comments_count (int64, 0–452) |

|---|---|---|---|---|---|---|---|---|---|---|---|

sherlock-project/sherlock

|

python

| 1,938

|

Would modern versions of Sherlock still(?) work for iSH installations?

|

<!--

######################################################################

WARNING!

IGNORING THE FOLLOWING TEMPLATE WILL RESULT IN ISSUE CLOSED AS INCOMPLETE.

######################################################################

-->

## Checklist

<!--

Put x into all boxes (like this [x]) once you have completed what they say.

Make sure to complete everything in the checklist.

-->

- [X] I'm asking a question regarding Sherlock

- [X] My question is not a tech support question.

**We are not your tech support**.

If you have questions related to `pip`, `git`, or something that is not related to Sherlock, please ask them on [Stack Overflow](https://stackoverflow.com/) or [r/learnpython](https://www.reddit.com/r/learnpython/)

## Question

As someone who loves having this tool as part of my loadout, I've been wanting to install this on iSH, but I'm wondering if that's possible to get up and running on modern versions of iSH. I think I might have been able to do that in the past, but it's also possible that I may be misremembering something, hence why I flagged this as a question. Thanks for any answer in advance.

|

open

|

2023-11-09T19:41:20Z

|

2023-11-09T19:41:33Z

|

https://github.com/sherlock-project/sherlock/issues/1938

|

[

"question"

] |

GenowJ24

| 0

|

encode/databases

|

sqlalchemy

| 222

|

I have a question: when I test the code below, the "/v1/test/" service is very slow and cannot support highly concurrent access.

|

#!/usr/bin/env python
# -*- coding: utf-8 -*-
import asyncio
import uvicorn
from fastapi import FastAPI
import databases
from databases import Database
from asyncio import gather
from pydantic import BaseModel
from databases.core import Connection

app = FastAPI()

mysql_url = 'mysql://username:password@ip:port/db'
database = Database(mysql_url)


@app.on_event('startup')
async def init_scheduler():
    await database.connect()


@app.on_event("shutdown")
async def down():
    await database.disconnect()


class TestResult(BaseModel):
    code: str
    value_1: str
    value_2: str


class TestItem(BaseModel):
    code: str


@app.post("/v1/test/", response_model=TestResult)
async def test(item: TestItem):
    code = item.code
    # v1, v2 = 0, 0
    sql = f"select `Close`,ChangePercActual from search_realtime where SecuCode = {code}"
    res = await gather(database.fetch_one(query=sql))
    # print(res)
    v1, v2 = float(res[0][0]), float(res[0][1])
    res = {"code": str(code), "value_1": str(v1), "value_2": str(v2)}
    return res


if __name__ == "__main__":
    uvicorn.run("server:app", host="127.0.0.1", port=8083, workers=2)

|

open

|

2020-06-19T09:07:36Z

|

2020-06-19T09:07:36Z

|

https://github.com/encode/databases/issues/222

|

[] |

dtMndas

| 0

|

PeterL1n/BackgroundMattingV2

|

computer-vision

| 27

|

[mov,mp4,m4a,3gp,3g2,mj2 @ 0xc5867600] moov atom not found

|

I uploaded a video file and background image and tried using BackgroundMattingV2-VideoMatting.ipynb, but it gives me the following error:

[mov,mp4,m4a,3gp,3g2,mj2 @ 0xc5867600] moov atom not found

VIDIOC_REQBUFS: Inappropriate ioctl for device

0% 0/1 [00:00<?, ?it/s]Traceback (most recent call last):

File "inference_video.py", line 178, in <module>

for src, bgr in tqdm(DataLoader(dataset, batch_size=1, pin_memory=True)):

File "/usr/local/lib/python3.6/dist-packages/tqdm/std.py", line 1104, in __iter__

for obj in iterable:

File "/usr/local/lib/python3.6/dist-packages/torch/utils/data/dataloader.py", line 435, in __next__

data = self._next_data()

File "/usr/local/lib/python3.6/dist-packages/torch/utils/data/dataloader.py", line 475, in _next_data

data = self._dataset_fetcher.fetch(index) # may raise StopIteration

File "/usr/local/lib/python3.6/dist-packages/torch/utils/data/_utils/fetch.py", line 44, in fetch

data = [self.dataset[idx] for idx in possibly_batched_index]

File "/usr/local/lib/python3.6/dist-packages/torch/utils/data/_utils/fetch.py", line 44, in <listcomp>

data = [self.dataset[idx] for idx in possibly_batched_index]

File "/content/BackgroundMattingV2/dataset/zip.py", line 17, in __getitem__

x = tuple(d[idx % len(d)] for d in self.datasets)

File "/content/BackgroundMattingV2/dataset/zip.py", line 17, in <genexpr>

x = tuple(d[idx % len(d)] for d in self.datasets)

ZeroDivisionError: integer division or modulo by zero

0% 0/1 [00:00<?, ?it/s]

How can I resolve it?

Thanks!

|

closed

|

2021-01-01T16:38:36Z

|

2022-06-21T16:32:39Z

|

https://github.com/PeterL1n/BackgroundMattingV2/issues/27

|

[] |

KinjalParikh

| 3

|

fastapi-admin/fastapi-admin

|

fastapi

| 9

|

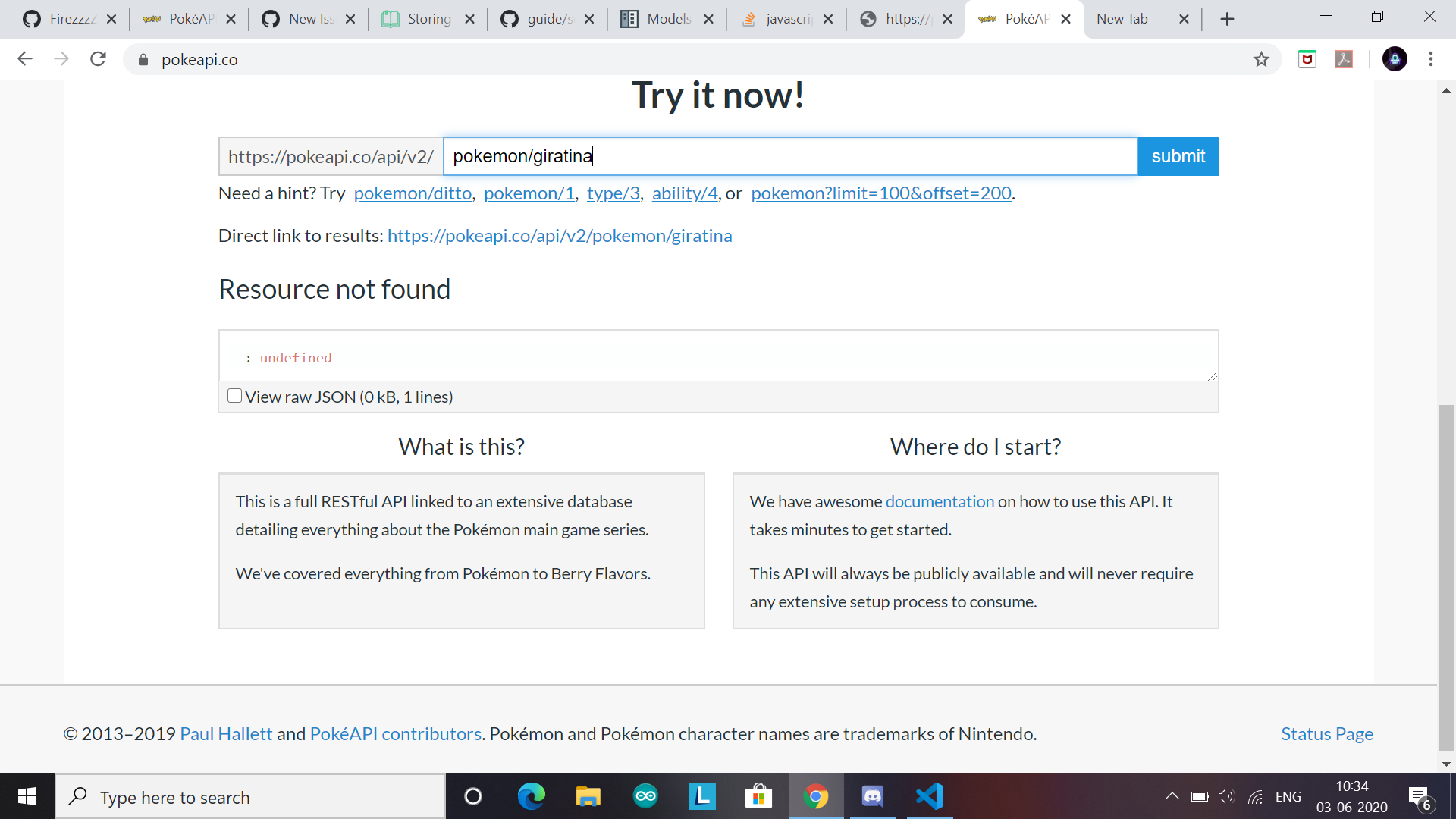

404 not found

|

```
app = FastAPI()

register_tortoise(
    app,
    config={
        'connections': {
            'default': 'sqlite://database.sqlite',
        },
        'apps': {
            'models': {
                'models': ['fastapi_admin.models', 'models'],
                'default_connection': 'default',
            }
        }
    }, generate_schemas=True, add_exception_handlers=True,
)


@app.get("/")
def hello():
    return {"Hello": "World"}


app.mount('/admin', admin_app, 'admin_panel')


@app.on_event('startup')
async def startup():
    admin_app.init(
        admin_secret="test",
        permission=True,
        site=Site(
            name="FastAPI-Admin DEMO",
            login_footer="FASTAPI ADMIN - FastAPI Admin Dashboard",
            login_description="FastAPI Admin Dashboard",
            locale="en-US",
            locale_switcher=True,
            theme_switcher=True,
        ),
    )
```

I got 404 not found on host:port/admin

Thank you for help

|

closed

|

2020-08-06T18:49:28Z

|

2020-08-10T08:35:19Z

|

https://github.com/fastapi-admin/fastapi-admin/issues/9

|

[

"question"

] |

BezBartek

| 2

|

hpcaitech/ColossalAI

|

deep-learning

| 5,597

|

[BUG]: pretraining llama2 using "gemini" plugin, cannot resume from saved checkpoints

|

### 🐛 Describe the bug

Pretraining llama2-7b can resume from a checkpoint when using the "zero2" plugin, but not when using the "gemini" plugin. With "gemini", the resume process gets stuck and the CUDA memory usage shown in the "nvtop" monitor does not change.

### Environment

16 * 8 * H100

torch 2.0.0

|

open

|

2024-04-15T07:13:05Z

|

2024-05-07T23:47:17Z

|

https://github.com/hpcaitech/ColossalAI/issues/5597

|

[

"bug"

] |

jiejie1993

| 1

|

sqlalchemy/alembic

|

sqlalchemy

| 620

|

Does alembic plan to add data migrations?

|

These ones: https://docs.djangoproject.com/en/2.2/topics/migrations/#data-migrations

I understand that it is not an easy thing to add, but probably out-of-the-box way to run data migrations in ["database reflection"](https://docs.sqlalchemy.org/en/13/core/reflection.html) mode?

Also, the docs say nothing about data migrations, as if they didn't exist)

Sorry if it sounds pushy, but I really admire sqlalchemy in so many aspects

|

closed

|

2019-11-11T10:27:45Z

|

2020-02-12T15:03:49Z

|

https://github.com/sqlalchemy/alembic/issues/620

|

[

"question"

] |

abetkin

| 6

|

alteryx/featuretools

|

scikit-learn

| 2,066

|

Add IsLeapYear primitive

|

- This primitive determines the `is_leap_year` attribute of a datetime column
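As a rough illustration of the per-value logic such a primitive would compute, here is a minimal standard-library sketch. The function name `is_leap_year` and the handling of missing values as `None` are assumptions for illustration; this is not the featuretools implementation.

```python
import calendar
from datetime import datetime


def is_leap_year(values):
    """Return True/False for each datetime, or None for a missing value."""
    return [None if v is None else calendar.isleap(v.year) for v in values]


dates = [datetime(2019, 3, 1), datetime(2020, 2, 29), datetime(1900, 1, 1), None]
flags = is_leap_year(dates)
print(flags)
```

Note that 1900 is not a leap year under the Gregorian rules (divisible by 100 but not by 400), which makes it a useful edge case for tests.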

|

closed

|

2022-05-11T20:07:11Z

|

2022-06-22T13:50:14Z

|

https://github.com/alteryx/featuretools/issues/2066

|

[

"good first issue"

] |

gsheni

| 0

|

serengil/deepface

|

machine-learning

| 1,403

|

[BUG]: Multipart-form data not being accepted

|

### Before You Report a Bug, Please Confirm You Have Done The Following...

- [X] I have updated to the latest version of the packages.

- [X] I have searched for both [existing issues](https://github.com/serengil/deepface/issues) and [closed issues](https://github.com/serengil/deepface/issues?q=is%3Aissue+is%3Aclosed) and found none that matched my issue.

### DeepFace's version

0.0.94

### Python version

3.8.12

### Operating System

Fedora 41

### Dependencies

absl-py==2.1.0

astunparse==1.6.3

beautifulsoup4==4.12.3

blinker==1.8.2

cachetools==5.5.0

certifi==2024.12.14

charset-normalizer==3.4.0

click==8.1.7

# Editable install with no version control (deepface==0.0.94)

-e /app

filelock==3.16.1

fire==0.7.0

Flask==3.0.3

Flask-Cors==5.0.0

flatbuffers==24.3.25

gast==0.4.0

gdown==5.2.0

google-auth==2.37.0

google-auth-oauthlib==1.0.0

google-pasta==0.2.0

grpcio==1.68.1

gunicorn==23.0.0

h5py==3.11.0

idna==3.10

importlib_metadata==8.5.0

itsdangerous==2.2.0

Jinja2==3.1.4

keras==2.13.1

libclang==18.1.1

Markdown==3.7

MarkupSafe==2.1.5

mtcnn==0.1.1

numpy==1.22.3

oauthlib==3.2.2

opencv-python==4.9.0.80

opt_einsum==3.4.0

packaging==24.2

pandas==2.0.3

Pillow==9.0.0

protobuf==4.25.5

pyasn1==0.6.1

pyasn1_modules==0.4.1

PySocks==1.7.1

python-dateutil==2.9.0.post0

pytz==2024.2

requests==2.32.3

requests-oauthlib==2.0.0

retina-face==0.0.17

rsa==4.9

six==1.17.0

soupsieve==2.6

tensorboard==2.13.0

tensorboard-data-server==0.7.2

tensorflow==2.13.1

tensorflow-estimator==2.13.0

tensorflow-io-gcs-filesystem==0.34.0

termcolor==2.4.0

tqdm==4.67.1

typing_extensions==4.5.0

tzdata==2024.2

urllib3==2.2.3

Werkzeug==3.0.6

wrapt==1.17.0

zipp==3.20.2

### Reproducible example

```Python

Run the docker container, then attempt to execute this curl command outside the container: `curl -X POST http://0.0.0.0:5005/represent -H "Content-Type: multipart/form-data" -F "model_name=ArcFace" -F "img=@Cruelty_of_life.jpg"`

```

### Relevant Log Output

```html

<!doctype html>

<html lang=en>

<title>415 Unsupported Media Type</title>

<h1>Unsupported Media Type</h1>

<p>Did not attempt to load JSON data because the request Content-Type was not 'application/json'.</p>

```

### Expected Result

I expected to obtain the embeddings from the given image

### What happened instead?

The application expected a JSON format

### Additional Info

This was requested in #1382

|

closed

|

2024-12-19T04:58:09Z

|

2024-12-19T11:20:44Z

|

https://github.com/serengil/deepface/issues/1403

|

[

"bug",

"dependencies"

] |

mr-staun

| 3

|

sqlalchemy/alembic

|

sqlalchemy

| 666

|

Numeric fields with scale but no precision do not work as expected.

|

When defining a field in a model as `sa.Numeric(scale=2, asdecimal=True)` I expect the database to do the rounding for me, in this case with two decimal digits in the fractional part.

However if `scale` is given but no `precision` is given then `alembic` generates SQL resulting in ` price NUMERIC,`, **without the scale! This is not intuitive**, as a developer I set a parameter (`scale` in this case) which has **no** effect at all so that's probably either a bug or a mistake on my part.

I think either there should be a warning or error when `scale` is set but no `precision` is given (as this doesn't work.) or it should have a default value for `precision` in that case.

Note that when the database doesn't support NUMERIC (for example sqlite) then the behavior of this example works as expected since `sqlalchemy` uses python's `Decimals` to do the correct (intuitive) rounding.

The workaround is to set a `precision` manually; then it works. But it is not clear that the database isn't doing what the developer expects unless they manually inspect the SQL or the database.
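To make the expected behaviour concrete, the following standard-library sketch shows what "rounding to a scale of 2" means for a decimal value. The helper `round_to_scale` is hypothetical and the rounding mode is an assumption; it does not reproduce what SQLAlchemy, alembic, or any particular database does internally.

```python
from decimal import Decimal, ROUND_HALF_UP


def round_to_scale(value, scale):
    """Round a decimal value to `scale` digits after the decimal point."""
    quantum = Decimal(1).scaleb(-scale)  # scale=2 gives a quantum of 0.01
    # The rounding mode is an assumption; databases may round differently.
    return Decimal(value).quantize(quantum, rounding=ROUND_HALF_UP)


price = round_to_scale("19.999", 2)
print(price)
```

A column declared without an effective scale stores `19.999` unchanged, whereas a column with scale 2 would be expected to hold the rounded value.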

|

closed

|

2020-02-26T17:55:54Z

|

2020-02-26T18:21:16Z

|

https://github.com/sqlalchemy/alembic/issues/666

|

[

"question"

] |

wapiflapi

| 2

|

lorien/grab

|

web-scraping

| 119

|

Queue backend tests fail some times

|

Example: https://travis-ci.org/lorien/grab/jobs/61755664

|

closed

|

2015-05-08T13:41:14Z

|

2016-12-31T14:19:52Z

|

https://github.com/lorien/grab/issues/119

|

[] |

lorien

| 1

|

facebookresearch/fairseq

|

pytorch

| 5,154

|

10ms shift VS 320 upsample of hubert

|

https://github.com/facebookresearch/fairseq/blob/3f6ba43f07a6e9e2acf957fc24e57251a7a3f55c/examples/hubert/simple_kmeans/dump_mfcc_feature.py#L42

How can they align?

|

open

|

2023-05-25T09:33:48Z

|

2023-05-25T09:33:48Z

|

https://github.com/facebookresearch/fairseq/issues/5154

|

[] |

hdmjdp

| 0

|

WZMIAOMIAO/deep-learning-for-image-processing

|

pytorch

| 693

|

Add new transformation class to save memory in training MaskRCNN

|

Hi. Thank you for the informative notebook :) In my environment (RTX 2080 and RTX 2080 Super), I could not run it even with the small COCO dataset available from https://github.com/giddyyupp/coco-minitrain

So I cropped images with new `FixedSizeCrop` class added in `transforms.py`.

Then I could train the model with no memory issues.

The snippet comes from the original PyTorch repository https://github.com/pytorch/vision/blob/main/references/detection/transforms.py, which is under the BSD 3-Clause License.

If this feature is convenient, I will make a PR.

- https://github.com/WZMIAOMIAO/deep-learning-for-image-processing/compare/master...r-matsuzaka:deep-learning-for-image-processing:master

|

closed

|

2022-11-20T00:54:05Z

|

2022-11-20T04:42:36Z

|

https://github.com/WZMIAOMIAO/deep-learning-for-image-processing/issues/693

|

[] |

r-matsuzaka

| 2

|

explosion/spaCy

|

deep-learning

| 13,223

|

Example from https://spacy.io/universe/project/neuralcoref doesn't work for Polish

|

## How to reproduce the behaviour

Example from https://spacy.io/universe/project/neuralcoref works with English models:

```python

import spacy

import neuralcoref

nlp = spacy.load('en')

neuralcoref.add_to_pipe(nlp)

doc1 = nlp('My sister has a dog. She loves him.')

print(doc1._.coref_clusters)

doc2 = nlp('Angela lives in Boston. She is quite happy in that city.')

for ent in doc2.ents:
    print(ent._.coref_cluster)

```

Which outputs:

```

>> python .\spacy_alt.py

[My sister: [My sister, She], a dog: [a dog, him]]

Boston: [Boston, that city]

```

However, if I use either `pl_core_news_lg` or `pl_core_news_sm` like this:

```python

import spacy

import neuralcoref

import pl_core_news_lg

#nlp = spacy.load('en_core_web_sm')

nlp = pl_core_news_lg.load()

neuralcoref.add_to_pipe(nlp)

doc1 = nlp('Moja siostra ma psa. Ona go kocha.')

#doc1 = nlp('My sister has a dog. She loves him.')

print(doc1._.coref_clusters)

doc2 = nlp(u'Anna żyje w Krakowie. Jest szczęśliwa w tym mieście.')

#doc2 = nlp('Angela lives in Boston. She is quite happy in that city.')

for ent in doc2.ents:
    print(ent._.coref_cluster)

```

I get the following output:

```

>> python .\spacy_alt.py

[]

None

None

```

I guessed it might be connected to the fact that the English model is `_web_` and the Polish one is `_news_`, however:

```

>> python -m spacy download pl_core_web_sm

✘ No compatible model found for 'pl_core_web_sm' (spaCy v2.3.7).

```

## Your Environment

<!-- Include details of your environment. You can also type `python -m spacy info --markdown` and copy-paste the result here.-->

* Operating System: Windows 10 x64

* Python Version Used: Python 3.9.6

* spaCy Version Used: v2.3.7

* Environment Information: most likely irrelevant

|

closed

|

2024-01-08T10:42:48Z

|

2024-01-08T15:10:48Z

|

https://github.com/explosion/spaCy/issues/13223

|

[

"feat / coref"

] |

Zydnar

| 1

|

floodsung/Deep-Learning-Papers-Reading-Roadmap

|

deep-learning

| 129

|

Is this repository maintained? How can we help you?

|

Hi, I am a freshman in the Deep Learning field. I love reading Deep Learning papers, and your work, which serves as a guide, has helped me so much. But it seems a long time has passed since the last update.

Is anyone maintaining this project? If not, how can we help you?

|

open

|

2020-12-02T03:43:32Z

|

2020-12-03T15:27:42Z

|

https://github.com/floodsung/Deep-Learning-Papers-Reading-Roadmap/issues/129

|

[] |

damminhtien

| 0

|

aio-libs/aiomysql

|

sqlalchemy

| 504

|

ENH: Remove show warnings when "forced"

|

Hi folks, I'm executing +/- 10k qps in a single process.

I found that I have a bottleneck here:

https://github.com/aio-libs/aiomysql/blob/2eb8533d18b3a231231561a3ac881ce334f01312/aiomysql/cursors.py#L478

Would it be possible to add a parameter that forces "SHOW WARNINGS" not to be executed?

This change took my app from 7k to +/- 10k qps.

|

open

|

2020-06-17T01:56:48Z

|

2022-01-13T00:38:03Z

|

https://github.com/aio-libs/aiomysql/issues/504

|

[

"enhancement"

] |

rspadim

| 1

|

ultralytics/ultralytics

|

computer-vision

| 19,303

|

How should I modify the YAML file of the YOLOv8 default architecture

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/orgs/ultralytics/discussions) and found no similar questions.

### Question

Hi, I’d like to ask how I should modify the layers and parameters in the YOLOv8 YAML file below. I’m using it for plastic detection, specifically for two classes: PET and HDPE. However, when I try to change the architecture, the detection accuracy decreases.

Can anyone explain each line of the YAML file, what it does, and how I can improve the architecture for better detection accuracy?

# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'

# [depth, width, max_channels]

n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs

s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs

m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs

l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs

x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# YOLOv8.0n backbone

backbone:

# [from, repeats, module, args]

- [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

- [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

- [-1, 3, C2f, [128, True]]

- [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

- [-1, 6, C2f, [256, True]]

- [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

- [-1, 6, C2f, [512, True]]

- [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

- [-1, 3, C2f, [1024, True]]

- [-1, 1, SPPF, [1024, 5]] # 9

# YOLOv8.0n head

head:

- [-1, 1, nn.Upsample, [None, 2, "nearest"]]

- [[-1, 6], 1, Concat, [1]] # cat backbone P4

- [-1, 3, C2f, [512]] # 12

- [-1, 1, nn.Upsample, [None, 2, "nearest"]]

- [[-1, 4], 1, Concat, [1]] # cat backbone P3

- [-1, 3, C2f, [256]] # 15 (P3/8-small)

- [-1, 1, Conv, [256, 3, 2]]

- [[-1, 12], 1, Concat, [1]] # cat head P4

- [-1, 3, C2f, [512]] # 18 (P4/16-medium)

- [-1, 1, Conv, [512, 3, 2]]

- [[-1, 9], 1, Concat, [1]] # cat head P5

- [-1, 3, C2f, [1024]] # 21 (P5/32-large)

- [[15, 18, 21], 1, Detect, [nc]] # Detect(P3, P4, P5)

### Additional

Modify Architecture

# Parameters

nc: 2 # Only PET and HDPE plastic

scales: # model compound scaling constants

n: [0.33, 0.25, 1024] # Small model

s: [0.33, 0.50, 1024]

m: [0.67, 0.75, 768]

l: [1.00, 1.00, 512]

x: [1.00, 1.25, 512]

# Backbone (Feature extraction)

backbone:

- [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

- [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

- [-1, 4, C2f, [128, True]] # More depth for feature extraction

- [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

- [-1, 8, C2f, [256, True]] # Increased depth for better plastic recognition

- [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

- [-1, 6, C2f, [512, True]] # Deeper feature extraction

- [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

- [-1, 4, C2f, [1024, True]] # More layers for refined detection

- [-1, 1, SPPF, [1024, 5]] # Better multi-scale feature aggregation

# YOLOv8 detection head (Final processing)

head:

- [-1, 1, nn.Upsample, [None, 2, "nearest"]]

- [[-1, 6], 1, Concat, [1]] # Concatenate with P4

- [-1, 4, C2f, [512]] # More feature fusion for plastic detection

- [-1, 1, nn.Upsample, [None, 2, "nearest"]]

- [[-1, 4], 1, Concat, [1]] # Concatenate with P3

- [-1, 4, C2f, [256]] # More feature learning at smaller scale

- [-1, 1, Conv, [256, 3, 2]]

- [[-1, 12], 1, Concat, [1]] # Concatenate with P4

- [-1, 4, C2f, [512]] # Further refining features

- [-1, 1, Conv, [512, 3, 2]]

- [[-1, 9], 1, Concat, [1]] # Concatenate with P5

- [-1, 4, C2f, [1024]] # Final detection layer

- [[15, 18, 21], 1, Detect, [nc]] # Detect PET & HDPE plastic

My Dataset:

https://universe.roboflow.com/fong-xkhmg/plastic-recyclable-detection-quvyc/dataset/1

|

closed

|

2025-02-19T02:30:03Z

|

2025-03-18T01:28:38Z

|

https://github.com/ultralytics/ultralytics/issues/19303

|

[

"enhancement",

"question",

"detect"

] |

karfong

| 2

|

deepfakes/faceswap

|

machine-learning

| 1,155

|

Swap Model to convert From A->B to B->A

|

Hi,

I am trying to swap the model by passing -s argument, but the output gives the original mp4 file in return.

In short, the swap model does not swap.

The commands I am running:

This works ( A -> B)

python faceswap.py convert -i output/00099.mp4 -o output/ -m output/mo -al output/AA/alignments.fsa -w ffmpeg

This does not work ( B -> A)

python faceswap.py convert -i output/00094.mp4 -o output/ -m output/mo -al output/BB/alignments.fsa -w ffmpeg -s

|

closed

|

2021-06-04T05:33:13Z

|

2021-06-04T14:41:35Z

|

https://github.com/deepfakes/faceswap/issues/1155

|

[] |

hasamkhalid

| 1

|

google-research/bert

|

nlp

| 933

|

Too much Prediction Time

|

After training the BERT classification model, it takes 8 seconds to predict.

I have the following questions:

1) Is it because of loading time? If yes, can we load the model once and use it for multiple inferences?

2) Or is it because of computation time? If yes, what are possible ways to reduce it?

3) If I use quantization, will the inference time be reduced? If yes, by what factor?

Thanks All

|

open

|

2019-11-22T17:01:16Z

|

2019-11-22T17:01:16Z

|

https://github.com/google-research/bert/issues/933

|

[] |

hegebharat

| 0

|

JaidedAI/EasyOCR

|

deep-learning

| 1,121

|

Training a custom model : how to improve accuracy?

|

Hello All,

I am trying to train a new model dedicated to french characters set and domain specific fonts set.

After a bunch of difficulties ;-) I managed to make the training and testing working!

I looked at the train.py code and as far as I understand:

- each training loop consumes 32 images (1 torch data batch).

- A round of 10000 batches is necessary to get the first model checkpoint.

To ensure this training runs without oversampling, I created a set of 320,000 (10000*32) images/labels.

Is this way of thinking correct ?

Training works, I get the iter_10000.pth model. Test shows accuracy around 90 %.

Running to the second checkpoint, trainer delivers the iter_20000.pth model, but accuracy is not better, even worse.

Moreover, when third turn starts I get a CUDA memory overflow (RTX 3060 8GB in my box).

Questions:

How many images were used to train the latin_g2.pth model?

What was the size of the images?

How many words were present in each image?

What kind of GPU was used?

How long did this training last?

Any advice is greatly appreciated.

Thanks a lot

AV

|

open

|

2023-08-23T11:14:05Z

|

2023-10-01T17:38:22Z

|

https://github.com/JaidedAI/EasyOCR/issues/1121

|

[] |

averatio

| 1

|

apify/crawlee-python

|

automation

| 1,111

|

Integrate web automation tool `DrissionPage`

|

Would you plan to integrate DrissionPage (https://github.com/g1879/DrissionPage) as an alternative to the browser dependent flow?

|

open

|

2025-03-20T17:31:55Z

|

2025-03-24T10:50:37Z

|

https://github.com/apify/crawlee-python/issues/1111

|

[

"t-tooling"

] |

meanguins

| 2

|

pytorch/pytorch

|

numpy

| 149,462

|

Avoid recompilation caused by is_mm_compute_bound

|

From @Elias Ellison

is_mm_compute_bound is just to avoid benchmarking cases where it is reliably unprofitable.

so in the case of dynamic we probably should just return keep it on and not guard.

Here is my proposal to address this

The benchmarking is on by default, we disable it iff some conditions are statically known true.

internal post

https://fb.workplace.com/groups/8940092306109185/permalink/9211657442286002/

cc @chauhang @penguinwu @ezyang @bobrenjc93

|

open

|

2025-03-18T23:30:28Z

|

2025-03-24T10:37:49Z

|

https://github.com/pytorch/pytorch/issues/149462

|

[

"triaged",

"oncall: pt2",

"module: dynamic shapes"

] |

laithsakka

| 0

|

peerchemist/finta

|

pandas

| 48

|

Should return type in signature be DataFrame when returning pd.concat()?

|

Great library, and I like your coding style.

In functions that return using the Pandas concat() function, the return type is currently specified as Pandas Series. Shouldn't it be DataFrame?

Example:

https://github.com/peerchemist/finta/blob/22460ba4a73272895ee162b0b8125988bbefe88c/finta/finta.py#L408

So shouldn't the signature:

https://github.com/peerchemist/finta/blob/22460ba4a73272895ee162b0b8125988bbefe88c/finta/finta.py#L376

instead be:

`... ) -> DataFrame:`?

|

closed

|

2019-12-30T22:56:47Z

|

2020-01-11T11:59:46Z

|

https://github.com/peerchemist/finta/issues/48

|

[] |

BillEndow

| 2

|

dgtlmoon/changedetection.io

|

web-scraping

| 2,594

|

Telegram notification

|

Docker Qnap nas.

When configuring the Telegram bot it always gives the same error, I have other dockers that notify by telegram bot and I don't have any problem.

|

closed

|

2024-08-26T15:09:18Z

|

2024-08-26T16:32:35Z

|

https://github.com/dgtlmoon/changedetection.io/issues/2594

|

[

"triage"

] |

yeraycito

| 2

|

holoviz/panel

|

plotly

| 7,441

|

Support dark theme for JSONEditor

|

The `JSONEditor` does not look nice in dark theme:

I can see that the js library can be dark styled:

- https://github.com/josdejong/jsoneditor/blob/develop/examples/06_custom_styling.html

- https://github.com/josdejong/jsoneditor/blob/develop/examples/css/darktheme.css

|

open

|

2024-10-24T18:46:42Z

|

2025-01-21T13:44:00Z

|

https://github.com/holoviz/panel/issues/7441

|

[

"type: enhancement"

] |

MarcSkovMadsen

| 0

|

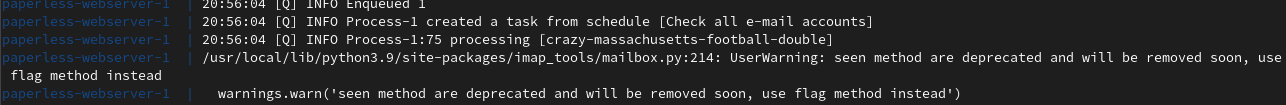

paperless-ngx/paperless-ngx

|

django

| 8,664

|

[BUG] PAPERLESS_IGNORE_DATES is ignored

|

### Description

Paperless ignores the date set in PAPERLESS_IGNORE_DATES.

The standard for dates is DMY (this only affects the order, it does not set the specific format like DDMMYYYY as far as I understand), as stated in the documentation. I have set my ignore date to "03-03-2005" but it still uses it as the creation date after processing documents.

How do I have to set it?

Just 03032005 or 03.03.2005? Is it correct to put PAPERLESS_IGNORE_DATES="xxx" into my environment variables or do I need to format it differently?

### Steps to reproduce

1. Set PAPERLESS_IGNORE_DATES

2. Consume document

3. ignored date is still used

### Webserver logs

```bash

when i try to reprocess a document after changing the environment variable, everything seems to work:

[2025-01-10 02:15:25,886] [INFO] [celery.worker.strategy] Task documents.tasks.update_document_archive_file[66cd2d28-cdcd-4743-bb59-c7d177b6eaef] received

[2025-01-10 02:15:26,017] [INFO] [paperless.parsing.tesseract] pdftotext exited 0

[2025-01-10 02:15:27,976] [INFO] [ocrmypdf._pipeline] page is facing ⇧, confidence 12.76 - rotation appears correct

[2025-01-10 02:15:39,895] [INFO] [ocrmypdf._pipelines.ocr] Postprocessing...

[2025-01-10 02:15:40,713] [INFO] [ocrmypdf._pipeline] Image optimization ratio: 1.24 savings: 19.6%

[2025-01-10 02:15:40,724] [INFO] [ocrmypdf._pipeline] Total file size ratio: 1.21 savings: 17.6%

[2025-01-10 02:15:40,736] [INFO] [ocrmypdf._pipelines._common] Output file is a PDF/A-2B (as expected)

[2025-01-10 02:15:42,322] [INFO] [paperless.parsing] convert exited 0

[2025-01-10 02:15:42,398] [INFO] [paperless.tasks] Updating index for document 793 (b9040f17d7011a5d80767006f2d8e5e8)

[2025-01-10 02:15:43,349] [INFO] [celery.app.trace] Task documents.tasks.update_document_archive_file[66cd2d28-cdcd-4743-bb59-c7d177b6eaef] succeeded in 17.44106532796286s: None

```

### Browser logs

_No response_

### Paperless-ngx version

2.13.5

### Host OS

Ubuntu Server with Docker

### Installation method

Docker - official image

### System status

_No response_

### Browser

Chrome

### Configuration changes

see above

### Please confirm the following

- [X] I believe this issue is a bug that affects all users of Paperless-ngx, not something specific to my installation.

- [X] This issue is not about the OCR or archive creation of a specific file(s). Otherwise, please see above regarding OCR tools.

- [X] I have already searched for relevant existing issues and discussions before opening this report.

- [X] I have updated the title field above with a concise description.

|

closed

|

2025-01-10T01:20:06Z

|

2025-01-10T03:16:23Z

|

https://github.com/paperless-ngx/paperless-ngx/issues/8664

|

[

"not a bug"

] |

lx05

| 1

|

15r10nk/inline-snapshot

|

pytest

| 116

|

`Error: one snapshot has incorrect values (--inline-snapshot=fix)` is confusing when all tests pass

|

Again, thank you for inline-snapshot, we use it all the time and it's great.

I think use of the word "Error" is pretty confusing here:

<img width="772" alt="image" src="https://github.com/user-attachments/assets/73408bbb-aa3f-4eec-9508-6e47b72751cd">

If I understand this correctly, inline-snapshots is really just suggesting it would format one of the tests differently? If so it should say that, not "Error".

... or am I misunderstanding?

|

closed

|

2024-09-21T15:01:20Z

|

2024-09-24T09:20:52Z

|

https://github.com/15r10nk/inline-snapshot/issues/116

|

[] |

samuelcolvin

| 3

|

jschneier/django-storages

|

django

| 1,431

|

[azure] Broken listdir

|

Since #1403, the logic of listdir was changed and it is no longer compatible with the standard library's os.listdir. Do you plan to leave it as is or fix this behavior?

The issue occurs since v1.14.4.

Thanks

|

open

|

2024-07-11T08:24:51Z

|

2025-01-21T07:59:56Z

|

https://github.com/jschneier/django-storages/issues/1431

|

[] |

marcinfair

| 9

|

aiortc/aiortc

|

asyncio

| 903

|

RuntimeWarning: coroutine 'RTCSctpTransport._data_channel_flush' was never awaited

|

Trying to run `RTCDataChannel.send` in parallel and facing the error in the title.

What I want to do as a minimal reproducible example:

```python

import asyncio


def say_hi(num: int):
    print(f"Hello {num}")


async def main():
    loop = asyncio.get_running_loop()
    tasks = []
    for i in range(5):
        tasks.append(loop.run_in_executor(None, say_hi, i))
    await asyncio.wait(tasks)


if __name__ == "__main__":
    asyncio.run(main())

```

This code works as expected, it runs the sync `say_hi` in parallel using the loop from an `async` context. Since `RTCDataChannel.send` is sync (cannot be awaited), I wanted to do the same. My code:

```python

async def send(self, data: bytes | str, chunk_size_kb: int = 15):
    chunk_size = chunk_size_kb * 1024
    num_chunks = ceil(len(data) / chunk_size)
    tasks = []
    loop = asyncio.get_running_loop()
    for i in range(num_chunks):
        chunk = data[i * chunk_size : min((i + 1) * chunk_size, len(data))]
        chunk = Chunk(self.id, i, num_chunks, chunk)
        tasks.append(
            loop.run_in_executor(
                None, self.__peer_chan.send, encode_message(chunk)
            )
        )
    await asyncio.wait(tasks)

```

This method wraps `channel.send` in an async context and sends the data in chunks of roughly 15 KB.

Since the logic is the same as in the example above, I expected it to work. However, I am running into an issue, and here is the stack trace:

>ERROR:asyncio:Future exception was never retrieved

future: <Future finished exception=RuntimeError("There is no current event loop in thread 'asyncio_0'.")>

Traceback (most recent call last):

File "/home/sb/.pyenv/versions/3.10.0/lib/python3.10/concurrent/futures/thread.py", line 52, in run

result = self.fn(*self.args, **self.kwargs)

File "/home/<proj>/.venv/lib/python3.10/site-packages/aiortc/rtcdatachannel.py", line 186, in send

self.transport._data_channel_send(self, data)

File "/home/<proj>/.venv/lib/python3.10/site-packages/aiortc/rtcsctptransport.py", line 1805, in _data_channel_send

asyncio.ensure_future(self._data_channel_flush())

File "/home/sb/.pyenv/versions/3.10.0/lib/python3.10/asyncio/tasks.py", line 619, in ensure_future

return _ensure_future(coro_or_future, loop=loop)

File "/home/sb/.pyenv/versions/3.10.0/lib/python3.10/asyncio/tasks.py", line 637, in _ensure_future

loop = events._get_event_loop(stacklevel=4)

File "/home/sb/.pyenv/versions/3.10.0/lib/python3.10/asyncio/events.py", line 656, in get_event_loop

raise RuntimeError('There is no current event loop in thread %r.'

RuntimeError: There is no current event loop in thread 'asyncio_0'.

/home/<proj>/src/webrtc/web_rtc_worker.py:127: RuntimeWarning: coroutine 'RTCSctpTransport._data_channel_flush' was never awaited

Versions:

- python: 3.10

- aiortc: 1.5.0

- nest-asyncio: 1.5.6

P.S.: the operation is running on the main thread which has a loop due to `asyncio.run`
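For context, the traceback above shows `send` reaching `asyncio.ensure_future(...)` from the executor thread `asyncio_0`, which has no event loop of its own. The sketch below is not aiortc code: `FakeChannel`, `_flush`, `send_chunks`, and `run_demo` are hypothetical names that only imitate that pattern, a sync `send()` that schedules a coroutine. Under that assumption, calling `send()` directly on the loop thread, without `run_in_executor`, avoids the error.

```python
import asyncio


class FakeChannel:
    """Hypothetical stand-in for a channel whose sync send() schedules a coroutine."""

    def __init__(self):
        self.sent = []

    async def _flush(self, data):
        # Pretend to transmit the data.
        self.sent.append(data)

    def send(self, data):
        # Like the call in the traceback, this needs an event loop
        # in the thread that calls send().
        asyncio.ensure_future(self._flush(data))


async def send_chunks(channel, chunks):
    # Call send() on the loop thread; it only schedules work and returns at once.
    for chunk in chunks:
        channel.send(chunk)
    # Yield once so the scheduled flush tasks get a chance to run.
    await asyncio.sleep(0)


def run_demo():
    channel = FakeChannel()
    asyncio.run(send_chunks(channel, [b"a", b"b", b"c"]))
    return channel.sent
```

If the call really has to originate in a worker thread, `loop.call_soon_threadsafe(channel.send, chunk)` is the standard asyncio way to hand a callback back to the loop thread.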

|

closed

|

2023-07-06T22:10:23Z

|

2023-11-18T02:03:54Z

|

https://github.com/aiortc/aiortc/issues/903

|

[

"stale"

] |

vivere-dally

| 1

|

roboflow/supervision

|

deep-learning

| 1,114

|

[DetectionDataset] - extend `from_coco` and `as_coco` with support for masks in RLE format

|

### Description

The COCO dataset format allows for the storage of segmentation masks in two ways:

- Polygon Masks: These masks use a series of vertices on an x-y plane to represent segmented object areas. The vertices are connected by straight lines to form polygons that approximate the shapes of objects.

- Run-Length Encoding (RLE): RLE compresses segments of pixels into counts of consecutive pixels (runs). This method efficiently sequences pixels by reporting the number of pixels that are either foreground or background. For instance, starting from the top left of an image, the encoding might record '5 white pixels, 3 black pixels, 6 white pixels', and so on.

Supervision currently only supports Polygon Masks, but we want to expand support for masks in RLE format. To do this, you will need to make changes in [`coco_annotations_to_detections`](https://github.com/roboflow/supervision/blob/9d9acd7e587d117a2faa395580664aeb83be5efb/supervision/dataset/formats/coco.py#L72) and [`detections_to_coco_annotations`](https://github.com/roboflow/supervision/blob/9d9acd7e587d117a2faa395580664aeb83be5efb/supervision/dataset/formats/coco.py#L100).
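To make the RLE idea concrete, here is a minimal pure-Python sketch of uncompressed run-length encoding for a binary mask. It assumes the COCO convention of flattening the mask column by column, with the first count referring to background pixels. The function names `mask_to_rle` and `rle_to_mask` are hypothetical and are not part of the supervision API.

```python
def mask_to_rle(mask):
    """Encode a 2D binary mask (list of rows of 0/1) as uncompressed RLE."""
    height, width = len(mask), len(mask[0])
    # Flatten column by column (assumed COCO convention).
    flat = [mask[r][c] for c in range(width) for r in range(height)]
    counts = []
    current, run = 0, 0  # runs alternate, starting with background (0)
    for value in flat:
        if value == current:
            run += 1
        else:
            counts.append(run)
            current, run = value, 1
    counts.append(run)
    return {"size": [height, width], "counts": counts}


def rle_to_mask(rle):
    """Decode the dictionary produced by mask_to_rle back into a 2D mask."""
    height, width = rle["size"]
    flat = []
    value = 0
    for run in rle["counts"]:
        flat.extend([value] * run)
        value = 1 - value  # switch between background and foreground
    # Undo the column-major flattening.
    return [[flat[c * height + r] for c in range(width)] for r in range(height)]
```

A mask whose first pixel is foreground gets a leading count of 0, so the alternation always starts with background.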

### Links

- an official [explanation](https://github.com/cocodataset/cocoapi/issues/184) from the COCO dataset repository

- old supervision [issue](https://github.com/roboflow/supervision/issues/373) providing more context

### Additional

- Note: Please share a Google Colab with minimal code to test the new feature. We know it's additional work, but it will speed up the review process. The reviewer must test each change. Setting up a local environment to do this is time-consuming. Please ensure that Google Colab can be accessed without any issues (make it public). Thank you! 🙏🏻

|

closed

|

2024-04-12T15:06:27Z

|

2024-05-21T11:30:15Z

|

https://github.com/roboflow/supervision/issues/1114

|

[

"enhancement",

"help wanted",

"api:datasets",

"Q2.2024"

] |

LinasKo

| 11

|

chiphuyen/stanford-tensorflow-tutorials

|

nlp

| 84

|

ValueError: Sample larger than population

|

I used Python 3.5 and TensorFlow 1.3 and got the error below while running data.py.

I tried to edit random.py, but it is read-only.

File "/usr/lib/python3.5/random.py", line 324, in sample

raise ValueError("Sample larger than population")

ValueError: Sample larger than population

How can I fix it?
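For reference, `random.sample` raises this `ValueError` whenever the requested sample size exceeds the population size, so the fix belongs in the calling code rather than in random.py. A minimal sketch, assuming it is acceptable to shrink the sample when the data is smaller than expected (`safe_sample` is a hypothetical helper, not part of data.py):

```python
import random


def safe_sample(population, k, seed=None):
    """Sample at most k items, never more than the population holds."""
    rng = random.Random(seed)
    # Clamp k so random.sample cannot raise "Sample larger than population".
    k = min(k, len(population))
    return rng.sample(population, k)
```

If the population is unexpectedly small, it is also worth checking that the input data files were found and loaded completely.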

|

open

|

2017-12-30T11:14:25Z

|

2018-01-30T23:02:51Z

|

https://github.com/chiphuyen/stanford-tensorflow-tutorials/issues/84

|

[] |

bandarikanth

| 1

|

skypilot-org/skypilot

|

data-science

| 4,853

|

[Test] support run specific cases in sandbox in smoke test

|

The API server is shared globally in the smoke tests, but we also want to run some cases in an isolated sandbox with its own dedicated API server, e.g.:

> It is a bit complicated to run smoke test for --foreground since we need an isolated sandbox to avoid intervening other cases, for this PR, the test are done manually.

https://github.com/skypilot-org/skypilot/pull/4852

|

open

|

2025-02-28T06:04:23Z

|

2025-03-05T02:22:07Z

|

https://github.com/skypilot-org/skypilot/issues/4853

|

[

"api server"

] |

aylei

| 0

|

horovod/horovod

|

tensorflow

| 3,119

|

Spark Lightning MNIST fails on GPU

|

From buildkite.

```

/horovod/examples/spark/pytorch/pytorch_lightning_spark_mnist.py --num-proc 2 --work-dir /work --data-dir /data --epochs 3"' in service test-gpu-gloo-py3_8-tf2_4_3-keras2_3_1-torch1_7_1-mxnet1_6_0_p0-pyspark3_1_2 | 2m 46s

-- | --

| $ docker-compose -f docker-compose.test.yml -p buildkite27b20b242900470484e62a9743c390b7 -f docker-compose.buildkite-6387-override.yml run --name buildkite27b20b242900470484e62a9743c390b7_test-gpu-gloo-py3_8-tf2_4_3-keras2_3_1-torch1_7_1-mxnet1_6_0_p0-pyspark3_1_2_build_6387 -v /var/lib/buildkite-agent/builds/buildkite-2x-gpu-v510-i-0c5beeec60df27818-2/horovod/horovod/artifacts:/artifacts --rm test-gpu-gloo-py3_8-tf2_4_3-keras2_3_1-torch1_7_1-mxnet1_6_0_p0-pyspark3_1_2 /bin/sh -e -c 'bash -c "OMP_NUM_THREADS=1 /spark_env.sh python /horovod/examples/spark/pytorch/pytorch_lightning_spark_mnist.py --num-proc 2 --work-dir /work --data-dir /data --epochs 3"'

| Creating buildkite27b20b242900470484e62a9743c390b7_test-gpu-gloo-py3_8-tf2_4_3-keras2_3_1-torch1_7_1-mxnet1_6_0_p0-pyspark3_1_2_run ... done

| 21/08/18 15:13:48 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

| Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

| Setting default log level to "WARN".

| To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

| num_partitions=20

| writing dataframes

| train_data_path=file:///work/intermediate_train_data.0

| val_data_path=file:///work/intermediate_val_data.0

| train_partitions=18

| val_partitions=2

| /usr/local/lib/python3.8/dist-packages/horovod/spark/common/util.py:509: FutureWarning: The 'field_by_name' method is deprecated, use 'field' instead

| metadata, avg_row_size = make_metadata_dictionary(train_data_schema)

| train_rows=48721

| val_rows=5384

| 2021-08-18 15:14:26.091848: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /usr/local/nvidia/lib:/usr/local/nvidia/lib64

| 2021-08-18 15:14:26.091875: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

| 2021-08-18 15:14:27.292047: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /usr/local/nvidia/lib:/usr/local/nvidia/lib64

| 2021-08-18 15:14:27.292079: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

| 2021-08-18 15:14:28.372275: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /usr/local/nvidia/lib:/usr/local/nvidia/lib64

| 2021-08-18 15:14:28.372304: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

| Wed Aug 18 15:14:31 2021[1]<stdout>:Training data of rank[1]: train_rows:48721, batch_size:64, _train_steps_per_epoch:380.

| Wed Aug 18 15:14:34 2021[1]<stdout>:Creating trainer with:

| Wed Aug 18 15:14:34 2021[1]<stdout>: {'accelerator': 'horovod', 'gpus': 1, 'callbacks': [<__main__.MyDummyCallback object at 0x7f62c12d1fd0>, <pytorch_lightning.callbacks.model_checkpoint.ModelCheckpoint object at 0x7f6261f33490>, <pytorch_lightning.callbacks.early_stopping.EarlyStopping object at 0x7f6261b9a1f0>], 'max_epochs': 3, 'logger': <pytorch_lightning.loggers.tensorboard.TensorBoardLogger object at 0x7f62c12d1790>, 'log_every_n_steps': 50, 'resume_from_checkpoint': None, 'checkpoint_callback': True, 'num_sanity_val_steps': 0, 'reload_dataloaders_every_epoch': False, 'progress_bar_refresh_rate': 38, 'terminate_on_nan': False}

| Wed Aug 18 15:14:34 2021[1]<stdout>:Starting to init trainer!

| Wed Aug 18 15:14:34 2021[1]<stdout>:Trainer is initialized.

| Wed Aug 18 15:14:34 2021[1]<stdout>:pytorch_lightning version=1.3.8

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO Bootstrap : Using [0]lo:127.0.0.1<0> [1]eth0:192.168.48.2<0>

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO NET/Plugin : No plugin found (libnccl-net.so), using internal implementation

| Wed Aug 18 15:14:34 2021[1]<stdout>:

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] misc/ibvwrap.cc:63 NCCL WARN Failed to open libibverbs.so[.1]

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO NET/Socket : Using [0]lo:127.0.0.1<0> [1]eth0:192.168.48.2<0>

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO Using network Socket

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO threadThresholds 8/8/64 \| 16/8/64 \| 8/8/64

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO Trees [0] -1/-1/-1->1->0\|0->1->-1/-1/-1 [1] -1/-1/-1->1->0\|0->1->-1/-1/-1

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO Could not enable P2P between dev 1(=1e0) and dev 0(=1d0)

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO Could not enable P2P between dev 1(=1e0) and dev 0(=1d0)

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO Channel 00 : 1[1e0] -> 0[1d0] via direct shared memory

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO Could not enable P2P between dev 1(=1e0) and dev 0(=1d0)

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO Could not enable P2P between dev 1(=1e0) and dev 0(=1d0)

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO Channel 01 : 1[1e0] -> 0[1d0] via direct shared memory

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO 2 coll channels, 2 p2p channels, 2 p2p channels per peer

| Wed Aug 18 15:14:34 2021[1]<stdout>:b436722175a2:337:369 [1] NCCL INFO comm 0x7f62ac01c6c0 rank 1 nranks 2 cudaDev 1 busId 1e0 - Init COMPLETE

| Wed Aug 18 15:14:35 2021[1]<stdout>:Setup train dataloader

| Wed Aug 18 15:14:35 2021[1]<stdout>:[train dataloader]: Initializing petastorm dataloader with batch_size=64shuffling_queue_capacity=24360, limit_step_per_epoch=380

| Wed Aug 18 15:14:35 2021[1]<stdout>:Apply the AsyncDataLoaderMixin on top of the data loader, async_loader_queue_size=64.

| Wed Aug 18 15:14:35 2021[1]<stdout>:setup val dataloader

| Wed Aug 18 15:14:35 2021[1]<stdout>:[val dataloader]: Initializing petastorm dataloader with batch_size=64shuffling_queue_capacity=0, limit_step_per_epoch=42

| Wed Aug 18 15:14:35 2021[1]<stdout>:Apply the AsyncDataLoaderMixin on top of the data loader, async_loader_queue_size=64.

| Wed Aug 18 15:14:35 2021[1]<stdout>:Start generating batches from async data loader.

| Wed Aug 18 15:14:35 2021[1]<stdout>:[train dataloader]: Start to generate batch data. limit_step_per_epoch=380

| Wed Aug 18 15:14:36 2021[1]<stdout>:training data batch size: torch.Size([64])

| Wed Aug 18 15:14:38 2021[1]<stdout>:[train dataloader]: Reach limit_step_per_epoch. Stop at step 380.

| Wed Aug 18 15:14:38 2021[1]<stdout>:[train dataloader]: Start to generate batch data. limit_step_per_epoch=380

| Wed Aug 18 15:14:38 2021[1]<stdout>:A train epoch ended.

| Wed Aug 18 15:14:38 2021[1]<stdout>:A train or eval epoch ended.

| Wed Aug 18 15:14:38 2021[1]<stdout>:Start generating batches from async data loader.

| Wed Aug 18 15:14:38 2021[1]<stdout>:[val dataloader]: Start to generate batch data. limit_step_per_epoch=42

| Wed Aug 18 15:14:39 2021[1]<stdout>:validation data batch size: torch.Size([64])

| Wed Aug 18 15:14:39 2021[1]<stdout>:[val dataloader]: Reach limit_step_per_epoch. Stop at step 42.

| Wed Aug 18 15:14:39 2021[1]<stdout>:[val dataloader]: Start to generate batch data. limit_step_per_epoch=42

| Wed Aug 18 15:14:39 2021[1]<stdout>:A val epoch ended.

| Wed Aug 18 15:14:39 2021[1]<stdout>:A train or eval epoch ended.

| Wed Aug 18 15:14:40 2021[1]<stdout>:Start generating batches from async data loader.

| Wed Aug 18 15:14:40 2021[1]<stdout>:training data batch size: torch.Size([64])

| Wed Aug 18 15:14:40 2021[1]<stdout>:[val dataloader]: Reach limit_step_per_epoch. Stop at step 42.

| Wed Aug 18 15:14:40 2021[1]<stdout>:[val dataloader]: Start to generate batch data. limit_step_per_epoch=42

| Wed Aug 18 15:14:42 2021[1]<stdout>:[train dataloader]: Reach limit_step_per_epoch. Stop at step 380.

| Wed Aug 18 15:14:42 2021[1]<stdout>:[train dataloader]: Start to generate batch data. limit_step_per_epoch=380

| Wed Aug 18 15:14:43 2021[1]<stdout>:A train epoch ended.

| Wed Aug 18 15:14:43 2021[1]<stdout>:A train or eval epoch ended.

| Wed Aug 18 15:14:43 2021[1]<stdout>:Start generating batches from async data loader.

| Wed Aug 18 15:14:43 2021[1]<stdout>:validation data batch size: torch.Size([64])

| Wed Aug 18 15:14:43 2021[1]<stdout>:A val epoch ended.

| Wed Aug 18 15:14:43 2021[1]<stdout>:A train or eval epoch ended.

| Wed Aug 18 15:14:43 2021[1]<stdout>:Start generating batches from async data loader.

| Wed Aug 18 15:14:43 2021[1]<stdout>:training data batch size: torch.Size([64])

| Wed Aug 18 15:14:43 2021[1]<stdout>:[val dataloader]: Reach limit_step_per_epoch. Stop at step 42.

| Wed Aug 18 15:14:43 2021[1]<stdout>:[val dataloader]: Start to generate batch data. limit_step_per_epoch=42

| Wed Aug 18 15:14:45 2021[1]<stdout>:[train dataloader]: Reach limit_step_per_epoch. Stop at step 380.

| Wed Aug 18 15:14:45 2021[1]<stdout>:[train dataloader]: Start to generate batch data. limit_step_per_epoch=380

| Wed Aug 18 15:14:46 2021[1]<stdout>:A train epoch ended.

| Wed Aug 18 15:14:46 2021[1]<stdout>:A train or eval epoch ended.

| Wed Aug 18 15:14:46 2021[1]<stdout>:Start generating batches from async data loader.

| Wed Aug 18 15:14:46 2021[1]<stdout>:validation data batch size: torch.Size([64])

| Wed Aug 18 15:14:46 2021[1]<stdout>:[val dataloader]: Reach limit_step_per_epoch. Stop at step 42.

| Wed Aug 18 15:14:46 2021[1]<stdout>:[val dataloader]: Start to generate batch data. limit_step_per_epoch=42

| Wed Aug 18 15:14:46 2021[1]<stdout>:A val epoch ended.

| Wed Aug 18 15:14:46 2021[1]<stdout>:A train or eval epoch ended.

| Wed Aug 18 15:14:46 2021[1]<stdout>:Training ends:epcoh_end_counter=6, train_epcoh_end_counter=3, validation_epoch_end_counter=3

| Wed Aug 18 15:14:46 2021[1]<stdout>:

| Wed Aug 18 15:14:46 2021[1]<stdout>:Tear down petastorm readers

| Wed Aug 18 15:14:34 2021[1]<stderr>:GPU available: True, used: True

| Wed Aug 18 15:14:34 2021[1]<stderr>:TPU available: False, using: 0 TPU cores

| Wed Aug 18 15:14:34 2021[1]<stderr>:/usr/local/lib/python3.8/dist-packages/petastorm/fs_utils.py:88: FutureWarning: pyarrow.localfs is deprecated as of 2.0.0, please use pyarrow.fs.LocalFileSystem instead.

| Wed Aug 18 15:14:34 2021[1]<stderr>: self._filesystem = pyarrow.localfs

| Wed Aug 18 15:14:34 2021[1]<stderr>:LOCAL_RANK: 1 - CUDA_VISIBLE_DEVICES: [2,3]

| Wed Aug 18 15:14:34 2021[1]<stderr>:Missing logger folder: /tmp/tmpwmgicp1r/logs/default

| Wed Aug 18 15:14:36 2021[1]<stderr>:/usr/local/lib/python3.8/dist-packages/petastorm/pytorch.py:339: UserWarning: The given NumPy array is not writeable, and PyTorch does not support non-writeable tensors. This means you can write to the underlying (supposedly non-writeable) NumPy array using the tensor. You may want to copy the array to protect its data or make it writeable before converting it to a tensor. This type of warning will be suppressed for the rest of this program. (Triggered internally at /pytorch/torch/csrc/utils/tensor_numpy.cpp:141.)

| Wed Aug 18 15:14:36 2021[1]<stderr>: row_as_dict[k] = self.transform_fn(v)

| Wed Aug 18 15:14:40 2021[1]<stderr>:[rank: 1] Metric val_loss improved. New best score: 0.500

| Wed Aug 18 15:14:46 2021[1]<stderr>:terminate called without an active exception

| Wed Aug 18 15:14:50 2021[1]<stderr>:Aborted (core dumped)

| Wed Aug 18 15:14:31 2021[0]<stdout>:Training data of rank[0]: train_rows:48721, batch_size:64, _train_steps_per_epoch:380.

| Wed Aug 18 15:14:34 2021[0]<stdout>:Creating trainer with:

| Wed Aug 18 15:14:34 2021[0]<stdout>: {'accelerator': 'horovod', 'gpus': 1, 'callbacks': [<__main__.MyDummyCallback object at 0x7f00ac791fd0>, <pytorch_lightning.callbacks.model_checkpoint.ModelCheckpoint object at 0x7f004d418490>, <pytorch_lightning.callbacks.early_stopping.EarlyStopping object at 0x7f004d07f1f0>], 'max_epochs': 3, 'logger': <pytorch_lightning.loggers.tensorboard.TensorBoardLogger object at 0x7f00ac791790>, 'log_every_n_steps': 50, 'resume_from_checkpoint': None, 'checkpoint_callback': True, 'num_sanity_val_steps': 0, 'reload_dataloaders_every_epoch': False, 'progress_bar_refresh_rate': 38, 'terminate_on_nan': False}

| Wed Aug 18 15:14:34 2021[0]<stdout>:Starting to init trainer!

| Wed Aug 18 15:14:34 2021[0]<stdout>:Trainer is initialized.

| Wed Aug 18 15:14:34 2021[0]<stdout>:pytorch_lightning version=1.3.8

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO Bootstrap : Using [0]lo:127.0.0.1<0> [1]eth0:192.168.48.2<0>

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO NET/Plugin : No plugin found (libnccl-net.so), using internal implementation

| Wed Aug 18 15:14:34 2021[0]<stdout>:

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] misc/ibvwrap.cc:63 NCCL WARN Failed to open libibverbs.so[.1]

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO NET/Socket : Using [0]lo:127.0.0.1<0> [1]eth0:192.168.48.2<0>

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO Using network Socket

| Wed Aug 18 15:14:34 2021[0]<stdout>:NCCL version 2.7.8+cuda10.1

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO Channel 00/02 : 0 1

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO Channel 01/02 : 0 1

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO threadThresholds 8/8/64 \| 16/8/64 \| 8/8/64

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO Trees [0] 1/-1/-1->0->-1\|-1->0->1/-1/-1 [1] 1/-1/-1->0->-1\|-1->0->1/-1/-1

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO Could not enable P2P between dev 0(=1d0) and dev 1(=1e0)

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO Could not enable P2P between dev 0(=1d0) and dev 1(=1e0)

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO Channel 00 : 0[1d0] -> 1[1e0] via direct shared memory

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO Could not enable P2P between dev 0(=1d0) and dev 1(=1e0)

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO Could not enable P2P between dev 0(=1d0) and dev 1(=1e0)

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO Channel 01 : 0[1d0] -> 1[1e0] via direct shared memory

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO 2 coll channels, 2 p2p channels, 2 p2p channels per peer

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO comm 0x7f009801d090 rank 0 nranks 2 cudaDev 0 busId 1d0 - Init COMPLETE

| Wed Aug 18 15:14:34 2021[0]<stdout>:b436722175a2:333:379 [0] NCCL INFO Launch mode Parallel

| Wed Aug 18 15:14:35 2021[0]<stdout>:Setup train dataloader

| Wed Aug 18 15:14:35 2021[0]<stdout>:[train dataloader]: Initializing petastorm dataloader with batch_size=64shuffling_queue_capacity=24360, limit_step_per_epoch=380

| Wed Aug 18 15:14:35 2021[0]<stdout>:Apply the AsyncDataLoaderMixin on top of the data loader, async_loader_queue_size=64.

| Wed Aug 18 15:14:35 2021[0]<stdout>:setup val dataloader

| Wed Aug 18 15:14:35 2021[0]<stdout>:[val dataloader]: Initializing petastorm dataloader with batch_size=64shuffling_queue_capacity=0, limit_step_per_epoch=42

| Wed Aug 18 15:14:35 2021[0]<stdout>:Apply the AsyncDataLoaderMixin on top of the data loader, async_loader_queue_size=64.

| Wed Aug 18 15:14:35 2021[0]<stdout>:Epoch 0: 0%\| \| 0/422 [00:00<?, ?it/s] Start generating batches from async data loader.

| Wed Aug 18 15:14:35 2021[0]<stdout>:[train dataloader]: Start to generate batch data. limit_step_per_epoch=380

| Wed Aug 18 15:14:36 2021[0]<stdout>:training data batch size: torch.Size([64])

| Wed Aug 18 15:14:38 2021[0]<stdout>:Epoch 0: 72%\|███████▏ \| 304/422 [00:02<00:00, 118.47it/s, loss=1.2, v_num=0] [train dataloader]: Reach limit_step_per_epoch. Stop at step 380.

| Wed Aug 18 15:14:38 2021[0]<stdout>:[train dataloader]: Start to generate batch data. limit_step_per_epoch=380

| Wed Aug 18 15:14:38 2021[0]<stdout>:Epoch 0: 90%\|█████████ \| 380/422 [00:03<00:00, 125.42it/s, loss=1.05, v_num=0]A train epoch ended.

| Wed Aug 18 15:14:38 2021[0]<stdout>:A train or eval epoch ended.

| Wed Aug 18 15:14:38 2021[0]<stdout>: Start generating batches from async data loader.

| Wed Aug 18 15:14:38 2021[0]<stdout>:[val dataloader]: Start to generate batch data. limit_step_per_epoch=42

| Wed Aug 18 15:14:40 2021[0]<stdout>:[val dataloader]: Reach limit_step_per_epoch. Stop at step 42.

| Wed Aug 18 15:14:40 2021[0]<stdout>:[val dataloader]: Start to generate batch data. limit_step_per_epoch=42

| Wed Aug 18 15:14:40 2021[0]<stdout>:validation data batch size: torch.Size([64])

| Wed Aug 18 15:14:40 2021[0]<stdout>:Epoch 0: 100%\|██████████\| 422/422 [00:04<00:00, 91.34it/s, loss=1.05, v_num=0] A val epoch ended.

| Wed Aug 18 15:14:40 2021[0]<stdout>:A train or eval epoch ended. 38/42 [00:01<00:00, 24.30it/s]

| Wed Aug 18 15:14:40 2021[0]<stdout>:Epoch 1: 0%\| \| 0/422 [00:00<?, ?it/s, loss=1.05, v_num=0]Start generating batches from async data loader.

| Wed Aug 18 15:14:40 2021[0]<stdout>:training data batch size: torch.Size([64])

| Wed Aug 18 15:14:40 2021[0]<stdout>:[val dataloader]: Reach limit_step_per_epoch. Stop at step 42.

| Wed Aug 18 15:14:40 2021[0]<stdout>:[val dataloader]: Start to generate batch data. limit_step_per_epoch=42

| Wed Aug 18 15:14:42 2021[0]<stdout>:Epoch 1: 72%\|███████▏ \| 304/422 [00:02<00:00, 138.95it/s, loss=0.939, v_num=0][train dataloader]: Reach limit_step_per_epoch. Stop at step 380.

| Wed Aug 18 15:14:42 2021[0]<stdout>:[train dataloader]: Start to generate batch data. limit_step_per_epoch=380

| Wed Aug 18 15:14:43 2021[0]<stdout>:Epoch 1: 90%\|█████████ \| 380/422 [00:02<00:00, 138.97it/s, loss=0.774, v_num=0]A train epoch ended.

| Wed Aug 18 15:14:43 2021[0]<stdout>:A train or eval epoch ended.

| Wed Aug 18 15:14:43 2021[0]<stdout>: Start generating batches from async data loader.

| Wed Aug 18 15:14:43 2021[0]<stdout>:validation data batch size: torch.Size([64])?it/s]

| Wed Aug 18 15:14:43 2021[0]<stdout>:A val epoch ended.

| Wed Aug 18 15:14:43 2021[0]<stdout>:A train or eval epoch ended.

| Wed Aug 18 15:14:43 2021[0]<stdout>:Epoch 2: 0%\| \| 0/422 [00:00<?, ?it/s, loss=0.774, v_num=0]Start generating batches from async data loader.

| Wed Aug 18 15:14:43 2021[0]<stdout>:training data batch size: torch.Size([64])

| Wed Aug 18 15:14:43 2021[0]<stdout>:[val dataloader]: Reach limit_step_per_epoch. Stop at step 42.

| Wed Aug 18 15:14:43 2021[0]<stdout>:[val dataloader]: Start to generate batch data. limit_step_per_epoch=42

| Wed Aug 18 15:14:45 2021[0]<stdout>:Epoch 2: 72%\|███████▏ \| 304/422 [00:02<00:00, 135.23it/s, loss=0.639, v_num=0][train dataloader]: Reach limit_step_per_epoch. Stop at step 380.

| Wed Aug 18 15:14:45 2021[0]<stdout>:[train dataloader]: Start to generate batch data. limit_step_per_epoch=380

| Wed Aug 18 15:14:46 2021[0]<stdout>:Epoch 2: 90%\|█████████ \| 380/422 [00:02<00:00, 134.50it/s, loss=0.656, v_num=0]A train epoch ended.

| Wed Aug 18 15:14:46 2021[0]<stdout>:A train or eval epoch ended.

| Wed Aug 18 15:14:46 2021[0]<stdout>: Start generating batches from async data loader.

| Wed Aug 18 15:14:46 2021[0]<stdout>:validation data batch size: torch.Size([64])?it/s]

| Wed Aug 18 15:14:46 2021[0]<stdout>:A val epoch ended.

| Wed Aug 18 15:14:46 2021[0]<stdout>:A train or eval epoch ended.

| Wed Aug 18 15:14:46 2021[0]<stdout>:Epoch 2: 100%\|██████████\| 422/422 [00:02<00:00, 14Training ends:epcoh_end_counter=6, train_epcoh_end_counter=3, validation_epoch_end_counter=3

| Wed Aug 18 15:14:46 2021[0]<stdout>:

| Wed Aug 18 15:14:46 2021[0]<stdout>:Epoch 2: 100%\|██████████\| 422/422 [00:02<00:00, 143.65it/s, loss=0.656, v_num=0]

| Wed Aug 18 15:14:46 2021[0]<stdout>:Tear down petastorm readers

| Wed Aug 18 15:14:34 2021[0]<stderr>:GPU available: True, used: True

| Wed Aug 18 15:14:34 2021[0]<stderr>:TPU available: False, using: 0 TPU cores

| Wed Aug 18 15:14:34 2021[0]<stderr>:/usr/local/lib/python3.8/dist-packages/petastorm/fs_utils.py:88: FutureWarning: pyarrow.localfs is deprecated as of 2.0.0, please use pyarrow.fs.LocalFileSystem instead.

| Wed Aug 18 15:14:34 2021[0]<stderr>: self._filesystem = pyarrow.localfs

| Wed Aug 18 15:14:34 2021[0]<stderr>:LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [2,3]

| Wed Aug 18 15:14:34 2021[0]<stderr>:2021-08-18 15:14:34.868268: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /usr/local/nvidia/lib:/usr/local/nvidia/lib64

| Wed Aug 18 15:14:34 2021[0]<stderr>:2021-08-18 15:14:34.868287: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

| Wed Aug 18 15:14:35 2021[0]<stderr>:

| Wed Aug 18 15:14:35 2021[0]<stderr>: \| Name \| Type \| Params

| Wed Aug 18 15:14:35 2021[0]<stderr>:-----------------------------------------

| Wed Aug 18 15:14:35 2021[0]<stderr>:0 \| conv1 \| Conv2d \| 260

| Wed Aug 18 15:14:35 2021[0]<stderr>:1 \| conv2 \| Conv2d \| 5.0 K

| Wed Aug 18 15:14:35 2021[0]<stderr>:2 \| conv2_drop \| Dropout2d \| 0

| Wed Aug 18 15:14:35 2021[0]<stderr>:3 \| fc1 \| Linear \| 16.1 K

| Wed Aug 18 15:14:35 2021[0]<stderr>:4 \| fc2 \| Linear \| 510

| Wed Aug 18 15:14:35 2021[0]<stderr>:-----------------------------------------

| Wed Aug 18 15:14:35 2021[0]<stderr>:21.8 K Trainable params

| Wed Aug 18 15:14:35 2021[0]<stderr>:0 Non-trainable params

| Wed Aug 18 15:14:35 2021[0]<stderr>:21.8 K Total params

| Wed Aug 18 15:14:35 2021[0]<stderr>:0.087 Total estimated model params size (MB)

| Wed Aug 18 15:14:35 2021[0]<stderr>:/usr/local/lib/python3.8/dist-packages/petastorm/pytorch.py:339: UserWarning: The given NumPy array is not writeable, and PyTorch does not support non-writeable tensors. This means you can write to the underlying (supposedly non-writeable) NumPy array using the tensor. You may want to copy the array to protect its data or make it writeable before converting it to a tensor. This type of warning will be suppressed for the rest of this program. (Triggered internally at /pytorch/torch/csrc/utils/tensor_numpy.cpp:141.)

| Wed Aug 18 15:14:35 2021[0]<stderr>: row_as_dict[k] = self.transform_fn(v)

| Wed Aug 18 15:14:40 2021[0]<stderr>:[rank: 0] Metric val_loss improved. New best score: 0.479

| Wed Aug 18 15:14:40 2021[0]<stderr>:/usr/local/lib/python3.8/dist-packages/pytorch_lightning/callbacks/model_checkpoint.py:610: LightningDeprecationWarning: Relying on `self.log('val_loss', ...)` to set the ModelCheckpoint monitor is deprecated in v1.2 and will be removed in v1.4. Please, create your own `mc = ModelCheckpoint(monitor='your_monitor')` and use it as `Trainer(callbacks=[mc])`.

| Wed Aug 18 15:14:40 2021[0]<stderr>: warning_cache.deprecation(

| Wed Aug 18 15:14:46 2021[0]<stderr>:terminate called without an active exception

| Wed Aug 18 15:14:51 2021[0]<stderr>:Aborted (core dumped)

| Exception in thread Thread-3:

| Traceback (most recent call last):

| File "/usr/lib/python3.8/threading.py", line 932, in _bootstrap_inner

| self.run()

| File "/usr/lib/python3.8/threading.py", line 870, in run

| self._target(*self._args, **self._kwargs)

| File "/usr/local/lib/python3.8/dist-packages/horovod/spark/runner.py", line 141, in run_spark

| result = procs.mapPartitionsWithIndex(mapper).collect()

| File "/usr/local/lib/python3.8/dist-packages/pyspark/rdd.py", line 949, in collect

| sock_info = self.ctx._jvm.PythonRDD.collectAndServe(self._jrdd.rdd())

| File "/usr/local/lib/python3.8/dist-packages/py4j/java_gateway.py", line 1304, in __call__

| return_value = get_return_value(

| File "/usr/local/lib/python3.8/dist-packages/pyspark/sql/utils.py", line 111, in deco

| return f(*a, **kw)

| File "/usr/local/lib/python3.8/dist-packages/py4j/protocol.py", line 326, in get_return_value

| raise Py4JJavaError(

| py4j.protocol.Py4JJavaError: An error occurred while calling z:org.apache.spark.api.python.PythonRDD.collectAndServe.

| : org.apache.spark.SparkException: Job 4 cancelled part of cancelled job group horovod.spark.run.0

| at org.apache.spark.scheduler.DAGScheduler.failJobAndIndependentStages(DAGScheduler.scala:2258)

| at org.apache.spark.scheduler.DAGScheduler.handleJobCancellation(DAGScheduler.scala:2154)

| at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleJobGroupCancelled$4(DAGScheduler.scala:1048)

| at scala.runtime.java8.JFunction1$mcVI$sp.apply(JFunction1$mcVI$sp.java:23)

| at scala.collection.mutable.HashSet.foreach(HashSet.scala:79)

| at org.apache.spark.scheduler.DAGScheduler.handleJobGroupCancelled(DAGScheduler.scala:1047)

| at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2407)

| at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2387)

| at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2376)

| at org.apache.spark.util.EventLoop$anon$1.run(EventLoop.scala:49)

| at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:868)

| at org.apache.spark.SparkContext.runJob(SparkContext.scala:2196)

| at org.apache.spark.SparkContext.runJob(SparkContext.scala:2217)

| at org.apache.spark.SparkContext.runJob(SparkContext.scala:2236)

| at org.apache.spark.SparkContext.runJob(SparkContext.scala:2261)

| at org.apache.spark.rdd.RDD.$anonfun$collect$1(RDD.scala:1030)

| at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

| at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

| at org.apache.spark.rdd.RDD.withScope(RDD.scala:414)

| at org.apache.spark.rdd.RDD.collect(RDD.scala:1029)

| at org.apache.spark.api.python.PythonRDD$.collectAndServe(PythonRDD.scala:180)

| at org.apache.spark.api.python.PythonRDD.collectAndServe(PythonRDD.scala)

| at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

| at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

| at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

| at java.lang.reflect.Method.invoke(Method.java:498)

| at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

| at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

| at py4j.Gateway.invoke(Gateway.java:282)

| at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

| at py4j.commands.CallCommand.execute(CallCommand.java:79)

| at py4j.GatewayConnection.run(GatewayConnection.java:238)

| at java.lang.Thread.run(Thread.java:748)

|

| Traceback (most recent call last):

| File "/horovod/examples/spark/pytorch/pytorch_lightning_spark_mnist.py", line 214, in <module>

| train_model(args)

| File "/horovod/examples/spark/pytorch/pytorch_lightning_spark_mnist.py", line 199, in train_model

| torch_model = torch_estimator.fit(train_df).setOutputCols(['label_prob'])

| File "/usr/local/lib/python3.8/dist-packages/horovod/spark/common/estimator.py", line 35, in fit

| return super(HorovodEstimator, self).fit(df, params)

| File "/usr/local/lib/python3.8/dist-packages/pyspark/ml/base.py", line 161, in fit

| return self._fit(dataset)

| File "/usr/local/lib/python3.8/dist-packages/horovod/spark/common/estimator.py", line 80, in _fit

| return self._fit_on_prepared_data(

| File "/usr/local/lib/python3.8/dist-packages/horovod/spark/lightning/estimator.py", line 406, in _fit_on_prepared_data

| handle = backend.run(trainer, args=(serialized_model,), env={})

| File "/usr/local/lib/python3.8/dist-packages/horovod/spark/common/backend.py", line 83, in run

| return horovod.spark.run(fn, args=args, kwargs=kwargs,

| File "/usr/local/lib/python3.8/dist-packages/horovod/spark/runner.py", line 287, in run

| _launch_job(use_mpi, use_gloo, settings, driver, env, stdout, stderr, executable)

| File "/usr/local/lib/python3.8/dist-packages/horovod/spark/runner.py", line 154, in _launch_job

| run_controller(use_gloo, lambda: gloo_run(executable, settings, nics, driver, env, stdout, stderr),

| File "/usr/local/lib/python3.8/dist-packages/horovod/runner/launch.py", line 706, in run_controller

| gloo_run()

| File "/usr/local/lib/python3.8/dist-packages/horovod/spark/runner.py", line 154, in <lambda>

| run_controller(use_gloo, lambda: gloo_run(executable, settings, nics, driver, env, stdout, stderr),

| File "/usr/local/lib/python3.8/dist-packages/horovod/spark/gloo_run.py", line 68, in gloo_run

| launch_gloo(command, exec_command, settings, nics, {}, server_ip)

| File "/usr/local/lib/python3.8/dist-packages/horovod/runner/gloo_run.py", line 282, in launch_gloo

| raise RuntimeError('Horovod detected that one or more processes exited with non-zero '

| RuntimeError: Horovod detected that one or more processes exited with non-zero status, thus causing the job to be terminated. The first process to do so was:

| Process name: 1

| Exit code: 134

```
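
A note for triaging the trace above: exit code 134 usually follows the shell convention of 128 plus a signal number. That would make it signal 6 (SIGABRT), meaning the worker process probably aborted in native code rather than raising a Python exception. A minimal sketch for decoding such codes, assuming a Linux host; `describe_exit_code` is a hypothetical helper and not part of Horovod:

```python
import signal


def describe_exit_code(code: int) -> str:
    """Hypothetical helper: interpret a shell-style process exit code."""
    if code > 128:
        # Shell convention: 128 + N means the process was killed by signal N.
        signum = code - 128
        try:
            name = signal.Signals(signum).name
        except ValueError:
            # The number does not map to a signal known on this platform.
            return f"killed by unknown signal {signum}"
        return f"killed by signal {signum} ({name})"
    return f"exited with status {code}"


print(describe_exit_code(134))
```

If the code decodes to an abort signal, the stderr of the failing worker (here, process 1) is likely more informative than the driver-side Spark trace.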

|

closed

|

2021-08-18T18:00:13Z

|

2021-08-19T17:10:12Z

|

https://github.com/horovod/horovod/issues/3119

|

[

"bug"

] |

chongxiaoc

| 2

|

google/seq2seq

|

tensorflow

| 297

|

How to initialize tables in my own model?

|

Hi, I created a new model based on the base model, and my model contains hash tables that require table initialization. Where should I insert the tables_initializer command? Without table initialization, I always get the following error message:

FailedPreconditionError (see above for traceback): Table not initialized.

Thanks in advance!

|

open

|

2017-09-07T14:58:10Z

|

2018-03-18T10:34:45Z

|

https://github.com/google/seq2seq/issues/297

|

[] |

anglil

| 3

|

mwaskom/seaborn

|

matplotlib

| 3,412

|

seaborn.objects so.Plot() accept drawstyle argument

|

Currently there seems to be no way to do something like:

```

import pandas as pd
import seaborn.objects as so

dataset = pd.DataFrame(dict(
    x=[1, 2, 3, 4],
    y=[1, 2, 3, 4],
    group=['g1', 'g1', 'g2', 'g2'],
))

p = (
    so.Plot(dataset,
            x='x',
            y='y',
            drawstyle='group',
            )
    .add(so.Line())
    .scale(drawstyle=so.Nominal({'g1': 'default', 'g2': 'steps'}))
)

p.show()

```

We get:

TypeError: Plot() got unexpected keyword argument(s): drawstyle

|

closed

|

2023-06-29T17:19:14Z

|

2023-08-28T11:41:23Z

|

https://github.com/mwaskom/seaborn/issues/3412

|

[] |

subsurfaceiodev

| 3

|

ultralytics/ultralytics

|

computer-vision

| 19,553

|

Build a dataloader without training

|

### Search before asking

- [x] I have searched the Ultralytics YOLO [issues](https://github.com/ultralytics/ultralytics/issues) and [discussions](https://github.com/orgs/ultralytics/discussions) and found no similar questions.

### Question

Hi. I would like to examine data in a train dataloader. How can I build one without starting training? I plan to train a detection model. Here is my attempt:

``` python

from ultralytics.models.yolo.detect import DetectionTrainer
import os

# Define paths
DATA_YAML = f"{os.environ['DATASETS']}/drone_tiny/data.yaml"  # Path to dataset YAML file
WEIGHTS_PATH = f"{os.environ['WEIGHTS']}/yolo11n.pt"  # Path to local weights file
SAVE_IMAGES_DIR = f"{os.environ['PROJECT_ROOT']}/saved_images"

# Ensure save directory exists
os.makedirs(SAVE_IMAGES_DIR, exist_ok=True)

# Load the model
trainer = DetectionTrainer(
    overrides = dict(
        data = DATA_YAML
    )
)

train_data, test_data = trainer.get_dataset()

dataloader = trainer.get_dataloader(train_data)

```

But this fails with the following error:

```bash

Ultralytics 8.3.77 🚀 Python-3.10.12 torch-2.3.1+cu121 CUDA:0 (NVIDIA GeForce GTX 1080 Ti, 11169MiB)