text

stringlengths 8

6.05M

|

|---|

import numpy as np

from keras.layers import Input,Dense, Activation

from keras.models import Model

from keras.utils.generic_utils import get_custom_objects

# this function is just for example of usage of keras functional API

def XOR_train_fully_keras():

#Since Q1 (XOR) only asks for drawing, in the code, I used Keras/TF codes.

x = np.array([[0,0],

[0,1],

[1,0],

[1,1]])

y = np.array([0,1,1,0])

input = Input(shape=(2,))

hidden1 = Dense(10, activation='relu')(input)

hidden2 = Dense(10, activation='relu')(hidden1)

out = Dense(1, activation='linear')(hidden2)

model = Model(inputs=[input], outputs=[out])

model.compile(optimizer='adam', loss='mse', loss_weights=[1])

model.fit(x=[x], y=[y], batch_size=4, epochs=800)

y_p = model.predict(x)

print(y_p)

y_p = np.round(y_p, 2)

print(y_p)

def XOR_check_drawing():

# first columns are for the bias

x = np.array([[1, 0,0],

[1, 0,1],

[1, 1,0],

[1, 1,1]])

# label for xor

y = np.array([0,1,1,0])

# define weight vectors: example w1 = np.array([number, number, number])

w1 = None

w2 = None

w3 = None

# select an input: ex) input = x[index,:]

input = None

# calculate layer1:

# ex) logit = np.dot(x,w)

percept1_logit = None

# ex) out = 1 if logit >=0, 0 otherwise

percept1_out = None

percept2_logit = None

percept2_out = None

layer1_out = np.array([1, percept1_out, percept2_out]) # 1 for the bias

# calculate layer2

percept3_logit = None

percept3_out = None

out = percept3_out

# print output of the custom neural net

print(out)

if __name__ =='__main__':

XOR_check_drawing()

|

"""LoaderPool class.

ChunkLoader has one or more of these. They load data in worker pools.

"""

from __future__ import annotations

import logging

from concurrent.futures import (

CancelledError,

Future,

ProcessPoolExecutor,

ThreadPoolExecutor,

)

from typing import TYPE_CHECKING, Callable, Dict, List, Optional, Union

# Executor for either a thread pool or a process pool.

PoolExecutor = Union[ThreadPoolExecutor, ProcessPoolExecutor]

LOGGER = logging.getLogger("napari.loader")

DoneCallback = Optional[Callable[[Future], None]]

if TYPE_CHECKING:

from napari.components.experimental.chunk._request import ChunkRequest

class LoaderPool:

"""Loads chunks asynchronously in worker threads or processes.

We cannot call np.asarray() in the GUI thread because it might block on

IO or a computation. So we call np.asarray() in _chunk_loader_worker()

instead.

Parameters

----------

config : dict

Our configuration, see napari.utils._octree.py for format.

on_done_loader : Callable[[Future], None]

Called when a future finishes.

Attributes

----------

force_synchronous : bool

If True all requests are loaded synchronously.

num_workers : int

The number of workers.

use_processes | bool

Use processess as workers, otherwise use threads.

_executor : PoolExecutor

The thread or process pool executor.

_futures : Dict[ChunkRequest, Future]

In progress futures for each layer (data_id).

_delay_queue : DelayQueue

Requests sit in here for a bit before submission.

"""

def __init__(

self, config: dict, on_done_loader: DoneCallback = None

) -> None:

from napari.components.experimental.chunk._delay_queue import (

DelayQueue,

)

self.config = config

self._on_done_loader = on_done_loader

self.num_workers: int = int(config['num_workers'])

self.use_processes: bool = bool(config.get('use_processes', False))

self._executor: PoolExecutor = _create_executor(

self.use_processes, self.num_workers

)

self._futures: Dict[ChunkRequest, Future] = {}

self._delay_queue = DelayQueue(config['delay_queue_ms'], self._submit)

def load_async(self, request: ChunkRequest) -> None:

"""Load this request asynchronously.

Parameters

----------

request : ChunkRequest

The request to load.

"""

# Add to the DelayQueue which will call our self._submit() method

# right away, if zero delay, or after the configured delay.

self._delay_queue.add(request)

def cancel_requests(

self, should_cancel: Callable[[ChunkRequest], bool]

) -> List[ChunkRequest]:

"""Cancel pending requests based on the given filter.

Parameters

----------

should_cancel : Callable[[ChunkRequest], bool]

Cancel the request if this returns True.

Returns

-------

List[ChunkRequests]

The requests that were cancelled, if any.

"""

# Cancelling requests in the delay queue is fast and easy.

cancelled = self._delay_queue.cancel_requests(should_cancel)

num_before = len(self._futures)

# Cancelling futures may or may not work. Future.cancel() will

# return False if the worker is already loading the request and it

# cannot be cancelled.

for request in list(self._futures.keys()):

if self._futures[request].cancel():

del self._futures[request]

cancelled.append(request)

num_after = len(self._futures)

num_cancelled = num_before - num_after

LOGGER.debug(

"cancel_requests: %d -> %d futures (cancelled %d)",

num_before,

num_after,

num_cancelled,

)

return cancelled

def _submit(self, request: ChunkRequest) -> Optional[Future]:

"""Initiate an asynchronous load of the given request.

Parameters

----------

request : ChunkRequest

Contains the arrays to load.

"""

# Submit the future. Have it call self._done when finished.

future = self._executor.submit(_chunk_loader_worker, request)

future.add_done_callback(self._on_done)

self._futures[request] = future

LOGGER.debug(

"_submit_async: %s elapsed=%.3fms num_futures=%d",

request.location,

request.elapsed_ms,

len(self._futures),

)

return future

def _on_done(self, future: Future) -> None:

"""Called when a future finishes.

future : Future

This is the future that finished.

"""

try:

request = self._get_request(future)

except ValueError:

return # Pool not running? App exit in progress?

if request is None:

return # Future was cancelled, nothing to do.

# Tell the loader this request finished.

if self._on_done_loader is not None:

self._on_done_loader(request)

def shutdown(self) -> None:

"""Shutdown the pool."""

# Avoid crashes or hangs on exit.

self._delay_queue.shutdown()

self._executor.shutdown(wait=True)

@staticmethod

def _get_request(future: Future) -> Optional[ChunkRequest]:

"""Return the ChunkRequest for this future.

Parameters

----------

future : Future

Get the request from this future.

Returns

-------

Optional[ChunkRequest]

The ChunkRequest or None if the future was cancelled.

"""

try:

# Our future has already finished since this is being

# called from Chunk_Request._done(), so result() will

# never block. But we can see if it finished or was

# cancelled. Although we don't care right now.

return future.result()

except CancelledError:

return None

def _create_executor(use_processes: bool, num_workers: int) -> PoolExecutor:

"""Return the thread or process pool executor.

Parameters

----------

use_processes : bool

If True use processes, otherwise threads.

num_workers : int

The number of worker threads or processes.

"""

if use_processes:

LOGGER.debug("Process pool num_workers=%d", num_workers)

return ProcessPoolExecutor(max_workers=num_workers)

LOGGER.debug("Thread pool num_workers=%d", num_workers)

return ThreadPoolExecutor(max_workers=num_workers)

def _chunk_loader_worker(request: ChunkRequest) -> ChunkRequest:

"""This is the worker thread or process that loads the array.

We call np.asarray() in a worker because it might lead to IO or

computation which would block the GUI thread.

Parameters

----------

request : ChunkRequest

The request to load.

"""

request.load_chunks() # loads all chunks in the request

return request

|

import sys

import argparse

from misc.Logger import logger

from core.awschecks import awschecks

from core.awsrequests import awsrequests

from core.base import base

from misc import Misc

class securitygroup(base):

def __init__(self, global_options=None):

self.global_options = global_options

logger.info('Securitygroup module entry endpoint')

self.cli = Misc.cli_argument_parse()

def start(self):

logger.info('Invoked starting point for securitygroup')

parser = argparse.ArgumentParser(description='ec2 tool for devops', usage='''kerrigan.py securitygroup <command> [<args>]

Second level options are:

compare

''' + self.global_options)

parser.add_argument('command', help='Command to run')

args = parser.parse_args(sys.argv[2:3])

if not hasattr(self, args.command):

logger.error('Unrecognized command')

parser.print_help()

exit(1)

getattr(self, args.command)()

def compare(self):

logger.info("Going to compare security groups")

a = awsrequests()

parser = argparse.ArgumentParser(description='ec2 tool for devops', usage='''kerrigan.py instance compare_securitygroups [<args>]]

''' + self.global_options)

parser.add_argument('--env', action='store', help="Environment to check")

parser.add_argument('--dryrun', action='store_true', default=False, help="No actions should be taken")

args = parser.parse_args(sys.argv[3:])

a = awschecks()

a.compare_securitygroups(env=args.env, dryrun=args.dryrun)

|

# Definition for singly-linked list.

# class ListNode(object):

# def __init__(self, x):

# self.val = x

# self.next = None

class Solution(object):

def reverseList(self, head):

"""

:type head: ListNode

:rtype: ListNode

"""

prev = None

curr = head

next_node = None

while curr is not None:

# store the next node

next_node = curr.next

# reverse the pointer(actual reversing here)

curr.next = prev

# now move the prev and curr one forward

prev = curr

curr = next_node

# set the head to the node at the end originally

head = prev

return head

|

# settings.py

import os

import djcelery

djcelery.setup_loader() # 加载 djcelery

BROKER_URL = 'pyamqp://guest@localhost//'

BROKER_POOL_LIMIT = 0

CELERY_RESULT_BACKEND = 'djcelery.backends.database:DatabaseBackend'

CELERY_RESULT_BACKEND = 'django-db'

CELERY_CACHE_BACKEND = 'django-cache'

ALLOWED_HOSTS = os.environ.get('ALLOWED_HOSTS', 'localhost').split(',')

BASE_DIR = os.path.dirname(__file__)

DEBUG = True

SECRET_KEY = 'thisisthesecretkey'

ROOT_URLCONF = 'urls' # addcalculate/urls.py

# before 1.9: MIDDLEWARE_CLASSES; after 1.9: MIDDLEWARE

MIDDLEWARE = (

'django.middleware.security.SecurityMiddleware',

'django.contrib.sessions.middleware.SessionMiddleware',

'django.middleware.common.CommonMiddleware',

'django.middleware.csrf.CsrfViewMiddleware',

'django.contrib.auth.middleware.AuthenticationMiddleware',

'django.contrib.messages.middleware.MessageMiddleware',

'django.middleware.clickjacking.XFrameOptionsMiddleware',

)

INSTALLED_APPS = (

'django.contrib.admin',

'django.contrib.auth',

'django.contrib.contenttypes',

'django.contrib.sessions',

'django.contrib.messages',

'django.contrib.staticfiles',

# celery plug

'djcelery', # pip install django-celery

'django_celery_results', # pip install django-celery-results

# develop application

'tools.apps.ToolsConfig',

)

TEMPLATES = (

{

'BACKEND': 'django.template.backends.django.DjangoTemplates',

'DIRS': (os.path.join(BASE_DIR, 'templates'), ),

'APP_DIRS': True,

'OPTIONS': {

'context_processors': [

'django.template.context_processors.debug',

'django.template.context_processors.request',

'django.contrib.auth.context_processors.auth',

'django.contrib.messages.context_processors.messages',

],

},

},

)

# Password validation

# https://docs.djangoproject.com/en/2.1/ref/settings/#auth-password-validators

AUTH_PASSWORD_VALIDATORS = [

{

'NAME': 'django.contrib.auth.password_validation.UserAttributeSimilarityValidator',

},

{

'NAME': 'django.contrib.auth.password_validation.MinimumLengthValidator',

},

{

'NAME': 'django.contrib.auth.password_validation.CommonPasswordValidator',

},

{

'NAME': 'django.contrib.auth.password_validation.NumericPasswordValidator',

},

]

WSGI_APPLICATION = 'wsgi.application'

STATICFILES_DIRS = (

os.path.join(BASE_DIR, 'static'),

)

STATIC_URL = '/static/'

DATABASES = {

'default': {

'ENGINE': 'django.db.backends.sqlite3',

'NAME': os.path.join(BASE_DIR, 'db.sqlite3'),

}

}

LANGUAGE_CODE = 'en-us'

TIME_ZONE = 'Asia/Shanghai'

USE_I18N = True

USE_L10N = True

USE_TZ = True

|

#!/usr/bin/python3.4

# -*-coding:Utf-8

adress = """{nom}, {prenom}, {ville} ({pays})""".format(prenom="Anthony", nom = "TASTET", ville = "Bayonne", pays = "FRANCE")

print(adress)

|

from Classes import Property, Note

from General import Functions

class Person:

def __init__(self, data_dict):

self.data_dict = data_dict

self.property_list = self.get_property_list()

self.note_list = self.get_note_list()

def get_property_list(self):

return [

Property.Property(data_dict) for data_dict in self.data_dict.get("property_list", [])

]

def get_note_list(self):

return [

Note.Note(data_dict) for data_dict in self.data_dict.get("note_list", [])

]

def __str__(self):

ret_str = "Person Instance"

for prop in self.property_list:

ret_str += "\n" + Functions.tab_str(prop)

return ret_str

|

"""

Created on Sat Nov 18 23:12:08 2017

@author: Utku Ozbulak - github.com/utkuozbulak

"""

import os

import numpy as np

import torch

from torch.optim import Adam

from torchvision import models

from misc_functions import preprocess_image, recreate_image, save_image

class CNNLayerVisualization():

"""

Produces an image that minimizes the loss of a convolution

operation for a specific layer and filter

"""

def __init__(self, model, selected_layer, selected_filter):

self.model = model

self.model.eval()

self.selected_layer = selected_layer

self.selected_filter = selected_filter

self.conv_output = 0

# Create the folder to export images if not exists

if not os.path.exists('./generated'):

os.makedirs('./generated')

def hook_layer(self):

def hook_function(module, grad_in, grad_out):

# Gets the conv output of the selected filter (from selected layer)

self.conv_output = grad_out[0, self.selected_filter]

# Hook the selected layer

self.model[self.selected_layer].register_forward_hook(hook_function)

def visualise_layer_with_hooks(self):

# Hook the selected layer

self.hook_layer()

# Generate a random image

random_image = np.uint8(np.random.uniform(150, 180, (224, 224, 3)))

# Process image and return variable

processed_image = preprocess_image(random_image, False)

# Define optimizer for the image

optimizer = Adam([processed_image], lr=0.1, weight_decay=1e-6)

for i in range(1, 31):

optimizer.zero_grad()

# Assign create image to a variable to move forward in the model

x = processed_image

for index, layer in enumerate(self.model):

# Forward pass layer by layer

# x is not used after this point because it is only needed to trigger

# the forward hook function

x = layer(x)

# Only need to forward until the selected layer is reached

if index == self.selected_layer:

# (forward hook function triggered)

break

# Loss function is the mean of the output of the selected layer/filter

# We try to minimize the mean of the output of that specific filter

loss = -torch.mean(self.conv_output)

print('Iteration:', str(i), 'Loss:', "{0:.2f}".format(loss.data.numpy()))

# Backward

loss.backward()

# Update image

optimizer.step()

# Recreate image

self.created_image = recreate_image(processed_image)

# Save image

if i % 30 == 0:

im_path = './generated/layer_vis_l' + str(self.selected_layer) + \

'_f' + str(self.selected_filter) + '_iter' + str(i) + '.jpg'

save_image(self.created_image, im_path)

def visualise_layer_without_hooks(self, images):

# Process image and return variable

# Generate a random image

# random_image = np.uint8(np.random.uniform(150, 180, (224, 224, 3)))

from PIL import Image

from torch.autograd import Variable

# img_path = '/home/hzq/tmp/waibao/data/cat_dog.png'

processed_image = images

processed_image = Variable(processed_image, requires_grad=True)

# Define optimizer for the image

optimizer = Adam([processed_image], lr=0.1, weight_decay=1e-6)

for i in range(1, 31):

optimizer.zero_grad()

# Assign create image to a variable to move forward in the model

x = processed_image

# for index, layer in enumerate(self.model):

# # Forward pass layer by layer

# x = layer(x)

# if index == self.selected_layer:

# # Only need to forward until the selected layer is reached

# # Now, x is the output of the selected layer

# break

for name, module in self.model._modules.items():

# Forward pass layer by layer

x = module(x)

print(name)

if name == self.selected_layer:

# Only need to forward until the selected layer is reached

# Now, x is the output of the selected layer

break

# Here, we get the specific filter from the output of the convolution operation

# x is a tensor of shape 1x512x28x28.(For layer 17)

# So there are 512 unique filter outputs

# Following line selects a filter from 512 filters so self.conv_output will become

# a tensor of shape 28x28

self.conv_output = x[0, self.selected_filter]

# feature map

# Loss function is the mean of the output of the selected layer/filter

# We try to minimize the mean of the output of that specific filter

loss = -torch.mean(self.conv_output)

print('Iteration:', str(i), 'Loss:', "{0:.2f}".format(loss.data.numpy()))

# Backward

loss.backward()

# Update image

optimizer.step()

# Recreate image

self.created_image = recreate_image(processed_image)

# Save image

if i % 30 == 0:

im_path = './generated/layer_vis_l' + str(self.selected_layer) + \

'_f' + str(self.selected_filter) + '_iter' + str(i) + '.jpg'

save_image(self.created_image, im_path)

from matplotlib import pyplot as plt

def layer_output_visualization(model, selected_layer, selected_filter, pic, png_dir, iter):

pic = pic[None,:,:,:]

# pic = pic.cuda()

x = pic

x = x.squeeze(0)

for name, module in model._modules.items():

x = module(x)

if name == 'layer1':

import torch.nn.functional as F

x = F.max_pool2d(x, 3, stride=2, padding=1)

if name == selected_layer:

break

conv_output = x[0, selected_filter]

x = conv_output.cpu().detach().numpy()

# print(x.shape)

if not os.path.exists('./output/'+ png_dir):

os.makedirs('./output/'+ png_dir)

im_path = './output/'+ png_dir+ '/layer_vis_' + str(selected_layer) + \

'_f' + str(selected_filter) + '_iter' + str(iter) + '.jpg'

plt.imshow(x, cmap = plt.cm.jet)

plt.axis('off')

plt.savefig(im_path)

def normalization(data):

_range = np.max(data) - np.min(data)

return (data - np.min(data)) * 255 / _range

def filter_visualization(model, selected_layer, selected_filter, png_dir):

for name, param in model.named_parameters():

print(name)

if name == selected_layer + '.weight':

x = param

x = x[selected_filter,:,:,:]

x = x.cpu().detach().numpy()

x = x.transpose(1,2,0)

x = normalization(x)

x = preprocess_image(x, resize_im=False)

x = recreate_image(x)

if not os.path.exists('./filter/'+ png_dir):

os.makedirs('./filter/'+ png_dir)

im_path = './filter/'+ png_dir+ '/layer_f' + str(selected_filter) + '_iter' + '.jpg'

# save_image(x, im_path)

plt.imshow(x, cmap = plt.cm.jet)

plt.axis('off')

plt.savefig(im_path)

if __name__ == '__main__':

cnn_layer = 'conv1'

# cnn_layer = 'layer4'

filter_pos = 5

# Fully connected layer is not needed

# use resnet insted

from cifar import ResNet18, ResidualBlock

pretrained_model = ResNet18(ResidualBlock)

pretrained_model.load_state_dict(torch.load("models/net_044.pth"))

print(

pretrained_model

)

# pretrained_model = models.vgg16(pretrained=True).features

import torchvision

def transform(x):

x = x.resize((224, 224), 2) # resize the image from 32*32 to 224*224

x = np.array(x, dtype='float32') / 255

x = (x - 0.5) / 0.5 # Normalize

x = x.transpose((2, 0, 1)) # reshape, put the channel to 1-d; input = {channel, size, size}

x = torch.from_numpy(x)

return x

trainset = torchvision.datasets.CIFAR10(root='../data', train=True,

download=False, transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=64,

shuffle=True, num_workers=2)

device = torch.device("cuda:2" if torch.cuda.is_available() else "cpu")

images, labels = iter(trainloader).next()

images, labels = iter(trainloader).next()

images, labels = iter(trainloader).next()

images, labels = iter(trainloader).next()

images, labels = iter(trainloader).next()

# images, labels = iter(trainloader).next()

# images, labels = iter(trainloader).next()

x = images.cpu()[0, :, :, :]

x = x[None,:,:,:]

x = recreate_image(x)

save_image(x, 'orginal.jpg')

# images, labels = images.to(device), labels.to(device) # 有

# for filter_pos in range(64):

#

# layer_vis = CNNLayerVisualization(pretrained_model, cnn_layer, filter_pos)

# layer_vis.visualise_layer_without_hooks(images)

# Layer visualization with pytorch hooks

# layer_vis.visualise_layer_with_hooks()

# Layer visualization without pytorch hooks

for filter_pos in range(64):

# # get the filter

filter_visualization(model = pretrained_model, selected_layer = 'conv1.0', selected_filter = filter_pos, png_dir = 'conv1.0')

# get the feature map : conv1

layer_output_visualization(model = pretrained_model, selected_layer = 'conv1', selected_filter = filter_pos, pic = images, png_dir = 'conv1', iter = filter_pos)

# get the feature map: layer4

layer_output_visualization(model = pretrained_model, selected_layer = 'layer4', selected_filter = filter_pos, pic = images, png_dir = 'layer4', iter = filter_pos)

# get the reconstruct

layer_vis = CNNLayerVisualization(pretrained_model, cnn_layer, filter_pos)

layer_vis.visualise_layer_without_hooks(images)

for filter_pos in range(512):

layer_output_visualization(model = pretrained_model, selected_layer = 'layer4', selected_filter = filter_pos, pic = images, png_dir = 'layer4', iter = filter_pos)

layer_vis = CNNLayerVisualization(pretrained_model, cnn_layer, filter_pos)

layer_vis.visualise_layer_without_hooks(images)

|

import gzip

import string

import numpy as np

from collections import defaultdict

def get_sentences(corpus_file):

"""

Returns all the (content) sentences in a corpus file

:param corpus_file: the corpus file

:return: the next sentence (yield)

"""

# Read all the sentences in the file

with open(corpus_file, 'r', errors='ignore') as f_in:

s = []

for line in f_in:

line = line

# Ignore start and end of doc

if '<text' in line or '</text' in line or '<s>' in line:

continue

# End of sentence

elif '</s>' in line:

yield s

s = []

else:

try:

word, lemma, pos, index, parent, dep = line.split()

s.append((word, lemma, pos, int(index), parent, dep))

# One of the items is a space - ignore this token

except Exception as e:

print (str(e))

continue

def save_file_as_Matrix12(cooc_mat, frequent_contexts, output_Dir, MatrixFileName, MatrixSamplesFileName):

print(len(frequent_contexts))

cnts = ["Terms/contexts"]

list_of_lists = []

for context in frequent_contexts:

cnts.append(context)

list_of_lists.append(cnts)

for target, contexts in cooc_mat.items():

targets = []

targets.append(target)

for context in frequent_contexts:

if context in contexts.keys():

targets.append(str(contexts[context]))

else:

targets.append(str(0))

list_of_lists.append(targets)

res = np.array(list_of_lists)

np.savetxt(output_Dir + MatrixFileName, res, delimiter=",", fmt='%s')

return

def save_file_as_Matrix1(cooc_mat, frequent_contexts, output_Dir, MatrixFileName, MatrixSamplesFileName):

f = open(output_Dir + MatrixSamplesFileName, "w")

list_of_lists = []

for target, contexts in cooc_mat.items():

targets = []

f.write(target+"\n")

for context in frequent_contexts:

if context in contexts.keys():

targets.append(contexts[context])

else:

targets.append(0)

list_of_lists.append(targets)

res = np.array(list_of_lists)

np.savetxt(output_Dir + MatrixFileName, res, delimiter=",")

return

def save_file_as_Matrix2(cooc_mat, frequent_contexts, output_Dir, MatrixFileName, frequent_couples):

list_of_lists = []

cnts = ["NP_couples/contexts"]

for context in frequent_contexts:

cnts.append(context)

list_of_lists.append(cnts)

for target, contexts in cooc_mat.items():

if target in frequent_couples:

targets = []

targets.append(str(target).replace("\t", " ## "))

for context in frequent_contexts:

if context in contexts.keys():

targets.append(str(contexts[context]))

else:

targets.append(str(0))

list_of_lists.append(targets)

res = np.array(list_of_lists)

np.savetxt(output_Dir + MatrixFileName, res, delimiter=",", fmt='%s')

return

def save_file_as_Matrix(cooc_mat, frequent_contexts, output_Dir, MatrixFileName, MatrixSamplesFileName, frequent_couples):

f = open(output_Dir + MatrixSamplesFileName, "w")

list_of_lists = []

for target, contexts in cooc_mat.items():

if target in frequent_couples:

targets = []

f.write(target+"\n")

for context in frequent_contexts:

if context in contexts.keys():

targets.append(contexts[context])

else:

targets.append(0)

list_of_lists.append(targets)

res = np.array(list_of_lists)

np.savetxt(output_Dir + MatrixFileName, res, delimiter=",")

return

def filter_couples(cooc_mat, min_occurrences):

"""

Returns the couples that occurred at least min_occurrences times

:param cooc_mat: the co-occurrence matrix

:param min_occurrences: the minimum number of occurrences

:return: the frequent couples

"""

couple_freq = []

for target, contexts in cooc_mat.items():

occur = 0

for context, freq in contexts.items():

occur += freq

if occur >= min_occurrences:

couple_freq.append(target)

return couple_freq

def filter_contexts(cooc_mat, min_occurrences):

"""

Returns the contexts that occurred at least min_occurrences times

:param cooc_mat: the co-occurrence matrix

:param min_occurrences: the minimum number of occurrences

:return: the frequent contexts

"""

context_freq = defaultdict(int)

for target, contexts in cooc_mat.items():

for context, freq in contexts.items():

try:

str(context)

context_freq[context] = context_freq[context] + freq

except:

continue

frequent_contexts = set([context for context, frequency in context_freq.items() if frequency >= min_occurrences and context not in string.punctuation])

return frequent_contexts

def getSentence(strip_sentence):

"""

Returns sentence (space seperated tokens)

:param strip_sentence: the list of tokens with other information for each token

:return: the sentence as string

"""

sent = ""

for i, (t_word, t_lemma, t_pos, t_index, t_parent, t_dep) in enumerate(strip_sentence):

sent += t_word.lower() + " "

return sent

|

import os

class BaseConfig(object):

"""Base config class"""

BASE_DIR = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))

SECRET_KEY = 'AasHy7I8484K8I32seu7nni8YHHu6786gi'

TIMEZONE = "Africa/Kampala"

SQLALCHEMY_TRACK_MODIFICATIONS = True

UPLOADED_LOGOS_DEST = "app/models/media/"

UPLOADED_LOGOS_URL = "app/models/media/"

# HOST_ADDRESS = "http://192.168.1.117:8000"

# HOST_ADDRESS = "http://127.0.0.1:8000"

# HOST_ADDRESS = "https://traveler-ug.herokuapp.com"

DATETIME_FORMAT = "%B %d %Y, %I:%M %p %z"

DATE_FORMAT = "%B %d %Y %z"

DEFAULT_CURRENCY = "UGX"

class ProductionConfig(BaseConfig):

"""Production specific config"""

DEBUG = False

TESTING = False

# SQLALCHEMY_DATABASE_URI = 'mysql+pymysql://samuelitwaru:password@localhost/traveler' # TODO => MYSQL

SQLALCHEMY_DATABASE_URI = 'sqlite:///models/database.db'

class DevelopmentConfig(BaseConfig):

"""Development environment specific config"""

DEBUG = True

MAIL_DEBUG = True

# SQLALCHEMY_DATABASE_URI = 'mysql+pymysql://root:bratz123@localhost/traveler' # TODO => MYSQL

SQLALCHEMY_DATABASE_URI = 'sqlite:///models/database.db'

|

from nipype.pipeline.engine import Node, Workflow

import nipype.interfaces.utility as util

import nipype.interfaces.fsl as fsl

import nipype.interfaces.c3 as c3

import nipype.interfaces.freesurfer as fs

import nipype.interfaces.ants as ants

import nipype.interfaces.io as nio

import os

def create_coreg_pipeline(name='coreg'):

# fsl output type

fsl.FSLCommand.set_default_output_type('NIFTI_GZ')

# initiate workflow

coreg = Workflow(name='coreg')

#inputnode

inputnode=Node(util.IdentityInterface(fields=['epi_median',

'fs_subjects_dir',

'fs_subject_id',

'uni_highres',

'uni_lowres'

]),

name='inputnode')

# outputnode

outputnode=Node(util.IdentityInterface(fields=['uni_lowres',

'epi2lowres',

'epi2lowres_mat',

'epi2lowres_dat',

'epi2lowres_itk',

'highres2lowres',

'highres2lowres_mat',

'highres2lowres_dat',

'highres2lowres_itk',

'epi2highres',

'epi2highres_itk'

]),

name='outputnode')

# convert mgz head file for reference

fs_import = Node(interface=nio.FreeSurferSource(),

name = 'fs_import')

brain_convert=Node(fs.MRIConvert(out_type='niigz'),

name='brain_convert')

coreg.connect([(inputnode, fs_import, [('fs_subjects_dir','subjects_dir'),

('fs_subject_id', 'subject_id')]),

(fs_import, brain_convert, [('brain', 'in_file')]),

(brain_convert, outputnode, [('out_file', 'uni_lowres')])

])

# biasfield correctio of median epi

biasfield = Node(interface = ants.segmentation.N4BiasFieldCorrection(save_bias=True),

name='biasfield')

coreg.connect([(inputnode, biasfield, [('epi_median', 'input_image')])])

# linear registration epi median to lowres mp2rage with bbregister

bbregister_epi = Node(fs.BBRegister(contrast_type='t2',

out_fsl_file='epi2lowres.mat',

out_reg_file='epi2lowres.dat',

#registered_file='epi2lowres.nii.gz',

init='fsl',

epi_mask=True

),

name='bbregister_epi')

coreg.connect([(inputnode, bbregister_epi, [('fs_subjects_dir', 'subjects_dir'),

('fs_subject_id', 'subject_id')]),

(biasfield, bbregister_epi, [('output_image', 'source_file')]),

(bbregister_epi, outputnode, [('out_fsl_file', 'epi2lowres_mat'),

('out_reg_file', 'epi2lowres_dat'),

('registered_file', 'epi2lowres')

])

])

# convert transform to itk

itk_epi = Node(interface=c3.C3dAffineTool(fsl2ras=True,

itk_transform='epi2lowres.txt'),

name='itk')

coreg.connect([(brain_convert, itk_epi, [('out_file', 'reference_file')]),

(biasfield, itk_epi, [('output_image', 'source_file')]),

(bbregister_epi, itk_epi, [('out_fsl_file', 'transform_file')]),

(itk_epi, outputnode, [('itk_transform', 'epi2lowres_itk')])

])

# linear register highres highres mp2rage to lowres mp2rage

bbregister_anat = Node(fs.BBRegister(contrast_type='t1',

out_fsl_file='highres2lowres.mat',

out_reg_file='highres2lowres.dat',

#registered_file='uni_highres2lowres.nii.gz',

init='fsl'

),

name='bbregister_anat')

coreg.connect([(inputnode, bbregister_epi, [('fs_subjects_dir', 'subjects_dir'),

('fs_subject_id', 'subject_id'),

('uni_highres', 'source_file')]),

(bbregister_epi, outputnode, [('out_fsl_file', 'highres2lowres_mat'),

('out_reg_file', 'highres2lowres_dat'),

('registered_file', 'highres2lowres')

])

])

# convert transform to itk

itk_anat = Node(interface=c3.C3dAffineTool(fsl2ras=True,

itk_transform='highres2lowres.txt'),

name='itk_anat')

coreg.connect([(inputnode, itk_anat, [('uni_highres', 'source_file')]),

(brain_convert, itk_anat, [('out_file', 'reference_file')]),

(bbregister_anat, itk_epi, [('out_fsl_file', 'transform_file')]),

(itk_anat, outputnode, [('itk_transform', 'highres2lowres_itk')])

])

# merge transforms into list

translist = Node(util.Merge(2),

name='translist')

coreg.connect([(itk_anat, translist, [('highres2lowres_itk', 'in1')]),

(itk_epi, translist, [('itk_transform', 'in2')]),

(translist, outputnode, [('out', 'epi2highres_itk')])])

# transform epi to highres

epi2highres = Node(ants.ApplyTransforms(dimension=3,

#output_image='epi2highres.nii.gz',

interpolation = 'BSpline',

invert_transform_flags=[True, False]),

name='epi2highres')

coreg.connect([(inputnode, epi2highres, [('uni_highres', 'reference_image')]),

(biasfield, epi2highres, [('output_image', 'input_image')]),

(translist, epi2highres, [('out', 'transforms')]),

(epi2highres, outputnode, [('output_image', 'epi2highres')])])

return coreg

|

#! /usr/bin/env python3

import pandas as pd, pandas

import numpy as np

import sys, os, time, fastatools, json, re

from kontools import collect_files

from Bio import Entrez

# opens a `log` file path to read, searches for the `ome` code followed by a whitespace character, and edits the line with `edit`

def log_editor( log, ome, edit ):

with open( log, 'r' ) as raw:

data = raw.read()

if re.search( ome + r'\s', data ):

new_data = re.sub( ome + r'\s.*', edit, data )

else:

data = data.rstrip()

data += '\n'

new_data = data + edit

with open( log, 'w' ) as towrite:

towrite.write(new_data)

# imports database, converts into df

# returns database dataframe

def db2df(db_path):

while True:

try:

db_df = pd.read_csv(db_path,sep='\t')

break

except FileNotFoundError:

print('\n\nDatabase does not exist or directory input was incorrect.\nExit code 2')

sys.exit(2)

return db_df

# database standard format to organize columns

def df2std( df ):

#slowly integrating gtf with a check here. can remove once completely integrated

try:

dummy = df['gtf']

trans_df = df[[ 'internal_ome', 'proteome', 'assembly', 'gtf', 'gff', 'gff3', 'genus', 'species', 'strain', 'taxonomy', 'ecology', 'eco_conf', 'blastdb', 'genome_code', 'source', 'published']]

except KeyError:

trans_df = df[[ 'internal_ome', 'proteome', 'assembly', 'gff', 'gff3', 'genus', 'species', 'strain', 'taxonomy', 'ecology', 'eco_conf', 'blastdb', 'genome_code', 'source', 'published']]

return trans_df

# imports dataframe and organizes it in accord with the `std df` format

# exports as database file into `db_path`. If rescue is set to 1, save in home folder if output doesn't exist

# if rescue is set to 0, do not output database if output dir does not exit

def df2db(df, db_path, overwrite = 1, std_col = 1, rescue = 1):

df = df.reset_index()

if overwrite == 0:

number = 0

while os.path.exists(db_path):

number += 1

db_path = os.path.normpath(db_path) + '_' + str(number)

if std_col == 1:

df = df2std( df )

while True:

try:

df.to_csv(db_path, sep = '\t', index = None)

break

except FileNotFoundError:

if rescue == 1:

print('\n\nOutput directory does not exist. Attempting to save in home folder.\nExit code 17')

db_path = '~/' + os.path.basename(os.path.normpath(db_path))

df.to_csv(db_path, sep = '\t', index = None)

sys.exit( 17 )

else:

print('\n\nOutput directory does not exist. Rescue not enabled.\nExit code 18')

sys.exit( 18 )

# collect fastas from a database dataframe. NEEDS to be deprecated

def collect_fastas(db_df,assembly=0,ome_index='internal_ome'):

if assembly == 0:

print('\n\nCompiling fasta files from database, using proteome if available ...')

elif assembly == 1:

print('\n\nCompiling assembly fasta files from database ...')

ome_fasta_dict = {}

for index,row in db_df.iterrows():

ome = row[ome_index]

print(ome)

ome_fasta_dict[ome] = {}

if assembly == 1:

if type(row['assembly']) != float:

ome_fasta_dict[ome]['fasta'] = os.environ['ASSEMBLY'] + '/' + row['assembly']

ome_fasta_dict[ome]['type'] = 'nt'

else:

print('Assembly file does not exist for',ome,'\nExit code 3')

sys.exit(3)

elif assembly == 0:

if type(row['proteome']) != float:

print('\tproteome')

ome_fasta_dict[ome]['fasta'] = os.environ['PROTEOME'] + '/' + row['proteome']

ome_fasta_dict[ome]['type'] = 'prot'

elif type(row['assembly']) != float:

print('\tassembly')

ome_fasta_dict[ome]['fasta'] = os.environ['ASSEMBLY'] + '/' + row['assembly']

ome_fasta_dict[ome]['type'] = 'nt'

else:

print('Assembly file does not exist for',ome,'\nExit code 3')

sys.exit(3)

return ome_fasta_dict

# gather taxonomy by querying NCBI

# if `api_key` is set to `1`, it assumes the `Entrez.api` method has been called already

def gather_taxonomy(df, api_key=0, king='fungi', ome_index = 'internal_ome'):

print('\n\nGathering taxonomy information ...')

tax_dicts = []

count = 0

# print each ome code

for i,row in df.iterrows():

ome = row[ome_index]

print('\t' + ome)

# if there is no api key, sleep for a second after 3 queries

# if there is an api key, sleep for a second after 10 queries

if api_key == 0 and count == 3:

time.sleep(1)

count = 0

elif api_key == 1 and count == 10:

time.sleep(1)

count = 0

# gather taxonomy from information present in the genus column and query NCBI using Entrez

genus = row['genus']

search_term = str(genus) + ' [GENUS]'

handle = Entrez.esearch(db='Taxonomy', term=search_term)

ids = Entrez.read(handle)['IdList']

count += 1

if len(ids) == 0:

print('\t\tNo taxonomy information')

continue

# for each taxID acquired, fetch the actual taxonomy information

taxid = 0

for tax in ids:

if api_key == 0 and count == 3:

time.sleep(1)

count = 0

elif api_key == 1 and count == 10:

time.sleep(1)

count = 0

count += 1

handle = Entrez.efetch(db="Taxonomy", id=tax, remode="xml")

records = Entrez.read(handle)

lineages = records[0]['LineageEx']

# for each lineage, if it is a part of the kingdom, `king`, use that TaxID

# if there are multiple TaxIDs, use the first one found

for lineage in lineages:

if lineage['Rank'] == 'kingdom':

if lineage['ScientificName'].lower() == king:

taxid = tax

if len(ids) > 1:

print('\t\tTax ID(s): ' + str(ids))

print('\t\t\tUsing first "' + king + '" ID: ' + str(taxid))

break

if taxid != 0:

break

if taxid == 0:

print('\t\tNo taxonomy information')

continue

# for each taxonomic classification, add it to the taxonomy dictionary string

# append each taxonomy dictionary string to a list of dicts

tax_dicts.append({})

tax_dicts[-1][ome_index] = ome

for tax in lineages:

tax_dicts[-1][tax['Rank']] = tax['ScientificName']

count += 1

if count == 3 or count == 10:

if api_key == 0:

time.sleep( 1 )

count = 0

elif api_key == 1:

if count == 10:

time.sleep( 1 )

count = 0

# to read, use `read_tax` below

return tax_dicts

# read taxonomy by conterting the string into a dictionary using `json.loads`

def read_tax(taxonomy_string):

dict_string = taxonomy_string.replace("'",'"')

tax_dict = json.loads(dict_string)

return tax_dict

# converts db data from row or full db into a dictionary of dictionaries

# the first dictionary is indexed via `ome_index` and the second via db columns

# specific columns, like `taxonomy`, `gff`, etc. have specific functions to acquire

# their path. Can also report values from a list `empty_list` as a `None` type

def acquire( db_or_row, ome_index = 'internal_ome', empty_list = [''], datatype = 'row' ):

if ome_index != 'internal_ome':

alt_ome = 'internal_ome'

else:

alt_ome = 'genome_code'

info_dict = {}

if datatype == 'row':

row = db_or_row

ome = row[ome_index]

info_dict[ome] = {}

for key in row.keys():

if not pd.isnull(row[key]) and not row[key] in empty_list:

if key == alt_ome:

info_dict[ome]['alt_ome'] = row[alt_ome]

elif key == 'taxonomy':

info_dict[ome][key] = read_tax( row[key] )

elif key == 'gff' or key == 'gff3' or key == 'proteome' or key == 'assembly':

info_dict[ome][key] = os.environ[key.upper()] + '/' + str(row[key])

else:

info_dict[ome][key] = row[key]

else:

info_dict[ome][key] = None

else:

for i,row in db_or_row.iterrows():

info_dict[ome] = {}

for key in row.keys():

if not pd.isnull( row[key] ) or not row[key] in empty_list:

if key == alt_ome:

info_dict[ome]['alt_ome'] == row[alt_ome]

elif key == 'taxonomy':

info_dict[ome][key] == read_tax( row[key] )

elif key == 'gff' or key == 'gff3' or key == 'proteome' or key == 'assembly':

info_dict[ome][key] == os.environ[key.upper()] + '/' + row[key]

else:

info_dict[ome][key] == row[key]

else:

info_dict[ome][key] == None

return info_dict

# assimilate taxonomy dictionary strings and append the resulting taxonomy string dicts to an inputted database

# forbid a list of taxonomic classifications you are not interested in and return a new database

def assimilate_tax(db, tax_dicts, ome_index = 'internal_ome', forbid=['no rank', 'superkingdom', 'subkingdom', 'genus', 'species', 'species group', 'varietas', 'forma']):

if 'taxonomy' not in db.keys():

db['taxonomy'] = ''

tax_df = pd.DataFrame(tax_dicts)

for forbidden in forbid:

if forbidden in tax_df.keys():

del tax_df[forbidden]

output = {}

keys = tax_df.keys()

for i,row in tax_df.iterrows():

output[row[ome_index]] = {}

for key in keys:

if key != ome_index:

if pd.isnull(row[key]):

output[row[ome_index]][key] = ''

else:

output[row[ome_index]][key] = row[key]

for index in range(len(db)):

if db[ome_index][index] in output.keys():

db.at[index, 'taxonomy'] = str(output[db[ome_index][index]])

return db

# abstract taxonomy and return a database with just the taxonomy you are interested in

# NEED TO SIMPLIFY THE PUBLISHED STATEMENT TO SIMPLY DELETE ALL VALUES THAT ARENT 1 WHEN PUBLISHED = 1

def abstract_tax(db, taxonomy, classification, inverse = 0 ):

if classification == 0 or taxonomy == 0:

print('\n\nERROR: Abstracting via taxonomy requires both taxonomy name and classification.')

new_db = pd.DataFrame()

# `genus` is a special case because it has its own column, so if it is not a genus, we need to read the taxonomy

# once it is read, we can parse the taxonomy dictionary via the inputted `classification` (taxonomic classification)

if classification != 'genus':

for i,row in db.iterrows():

row_taxonomy = read_tax(row['taxonomy'])

if row_taxonomy[classification].lower() == taxonomy.lower() and inverse == 0:

new_db = new_db.append(row)

elif row_taxonomy[classification].lower() != taxonomy.lower() and inverse == 1:

new_db = new_db.append(row)

# if `classification` is `genus`, simply check if that fits the criteria in `taxonomy`

elif classification == 'genus':

for i,row in db.iterrows():

if row['genus'].lower() == taxonomy.lower() and inverse == 0:

new_db = new_db.append(row)

elif row['genus'].lower() != taxonomy.lower() and inverse == 1:

new_db = new_db.append(row)

return new_db

# abstracts all omes from an `ome_list` or the inverse and returns the abstracted database

def abstract_omes(db, ome_list, index = 'internal_ome', inverse = 0):

new_db = pd.DataFrame()

ref_set = set()

ome_set = set(ome_list)

if inverse is 0:

for ome in ome_set:

ref_set.add(ome.lower())

elif inverse is 1:

for i, row in db.iterrows():

if row[index] not in ome_set:

ref_set.add( row[index].lower() )

db = db.set_index(index, drop = False)

for i,row in db.iterrows():

if row[index].lower() in ref_set:

new_db = new_db.append(row)

return new_db

# creates a fastadict for blast2fasta.py - NEED to remove and restructure that script

def ome_fastas2dict(ome_fasta_dict):

ome_fasta_seq_dict = {}

for ome in ome_fasta_dict:

temp_fasta = fasta2dict(ome['fasta'])

ome_fasta_seq_dict['ome'] = temp_fasta

return ome_fasta_seq_dict

# this can lead to duplicates, ie Lenrap1_155 and Lenrap1

def column_pop(db_df,column,directory,ome_index='genome_code',file_type='fasta'):

db_df = db_df.set_index(ome_index)

files = collect_files(directory,file_type)

for ome,row in db_df.iterrows():

for file_ in files:

file_ = os.path.basename(file_)

if ome + '_' in temp_list:

index = temp_list.index(ome + '_')

db_df.at[ome, column] = files[index]

db_df.reset_index(level = 0, inplace = True)

return db_df

# imports a database reference (if provided) and a `df` and adds a `new_col` with new ome codes that do not overlap current ones

def gen_omes(df, reference = 0, new_col = 'internal_ome', tag = None):

print('\n\nGenerating omes ...')

newdf = df

newdf[new_col] = ''

tax_set = set()

access = '-'

if type(reference) == pd.core.frame.DataFrame:

for i,row in reference.iterrows():

tax_set.add(row['internal_ome'])

print('\t' + str(len(tax_set)) + ' omes from reference dataset')

for index in range(len(df)):

genus = df['genus'][index]

if pd.isnull(df['species'][index]):

df.at[index, 'species'] = 'sp.'

species = df['species'][index]

name = genus[:3].lower() + species[:3].lower()

name = re.sub(r'\(|\)|\[|\]|\$|\#|\@| |\||\+|\=|\%|\^|\&|\*|\'|\"|\!|\~|\`|\,|\<|\>|\?|\;|\:|\\|\{|\}', '', name)

name.replace('(', '')

name.replace(')', '')

number = 1

ome = name + str(number)

if tag != None:

access = df[tag][index]

ome = name + str(number) + '.' + access

while name + str(number) in tax_set:

number += 1

if access != '-':

ome = name + str(number) + '.' + access

else:

ome = name + str(number)

tax_set.add(ome)

newdf.at[index, new_col] = ome

print('\t' + ome)

return newdf

# NEED TO REMOVE AFTER CHECKING IN OTHER SCRIPTS

#def dup_check(db_df, column, ome_index='genome_code'):

#

# print('\n\nChecking for duplicates ...')

#

# for index,row in db_df.iterrows():

# iter1 = db_df[column][index]

# for index2,row2 in db_df.iterrows():

# if str(db_df[ome_index][index]) != str(db_df[ome_index][index2]):

# iter2 = db_df[column][index2]

# if str(iter1) == str(iter2):

# print('\t' + db_df[ome_index][index], db_df[ome_index][index2])

# queries database either by ome or column or ome and column. print the query, and return an exit_code (0 if fine, 1 if query1 empty)

def query(db, query_type, query1, query2 = None, ome_index='internal_ome'):

exit_code = 0

if query_type in ['column', 'c', 'ome', 'o']:

db = db.set_index(ome_index)

if query_type in ['ome', 'o']:

if query1 in db.index.values:

if query2 != None and query2 in db.columns.values:

print(query1 + '\t' + str(query2) + '\n' + str(db[query2][query1]))

elif query2 != None:

print('Query ' + str(query2) + ' does not exist.')

else:

print(query1 + '\n' + str(db.loc[query1]))

else:

print('Query ' + str(query1) + ' does not exist.')

elif query_type in ['column', 'c']:

if query1 in db.columns.values:

if query2 != None and query2 in db.index.values:

print(query2 + '\t' + str(query1) + '\n' + str(db[query1][query2]))

elif query2 != None:

print('Query ' + str(query2) + ' does not exist.')

else:

print(str(db[query1]))

else:

print('Query ' + str(query1) + ' does not exist.')

if query1 == '':

exit_code == 1

return exit_code

# edits an entry by querying the column and ome code and editing the entry with `edit`

# returns the database and an exit code

def db_edit(query1, query2, edit, db_df, query_type = '', ome_index='internal_ome',new_col=0):

exit_code = 0

if query_type in ['column', 'c']:

ome = query2

column = query1

else:

ome = query1

column = query2

if column in db_df or new_col==1:

db_df = db_df.set_index(ome_index)

db_df.at[ome, column] = edit

db_df.reset_index(level = 0, inplace = True)

if ome == '' and column == '':

exit_code = 1

return db_df, exit_code

def add_jgi_data(db_df,jgi_df,db_column,jgi_column,binary='0'):

jgi_df_ome = jgi_df.set_index('genome_code')

db_df.set_index('genome_code',inplace=True)

jgi_col_dict = {}

for ome in jgi_df['genome_code']:

jgi_col_dict[ome] = jgi_df_ome[jgi_column][ome]

if binary == 1:

for ome in jgi_col_dict:

if ome in db_df.index:

if pd.isnull(jgi_col_dict[ome]):

db_df.at[ome, db_column] = 0

else:

db_df[ome, db_column] = 1

else:

print(ome + '\tNot in database')

else:

for ome in jgi_col_dict:

if ome in db_df.index:

db_df[ome, db_column] = jgi_col_dict[ome]

else:

print(ome + '\tNot in database')

db_df.reset_index(inplace=True)

return db_df

def report_omes( db_df, ome_index = 'internal_ome' ):

ome_list = db_df[ome_index].tolist()

print('\n\n' + str(ome_list))

# converts a `pre_db` file to a `new_db` and returns

def predb2db( pre_db ):

data_dict_list = []

for i, row in pre_db.iterrows():

species_strain = str(row['species_and_strain']).replace( ' ', '_' )

spc_str = re.search(r'(.*?)_(.*)', species_strain)

if spc_str:

species = spc_str[1]

strain = spc_str[2]

else:

species = species_strain

strain = ''

data_dict_list.append( {

'genome_code': row['genome_code'],

'genus': row['genus'],

'strain': strain,

'ecology': '',

'eco_conf': '',

'species': species,

'proteome': row['proteome_full_path'],

'gff': row['gff_full_path'],

'gff3': row['gff3_full_path'],

'assembly': row['assembly_full_path'],

'blastdb': 0,

'source': row['source'],

'published': row['published_(0/1)']

} )

new_db = pd.DataFrame( data_dict_list )

return new_db

# converts a `db_df` into an `ogdb` and returns

def back_compat(db_df, ome_index='genome_code'):

old_df_data = []

for i,row in db_df.iterrows():

ome = row[ome_index]

taxonomy_prep = row['taxonomy']

tax_dict = read_tax(taxonomy_prep)

taxonomy_str = tax_dict['kingdom'] + ';' + tax_dict['phylum'] + ';' + tax_dict['subphylum'] + ';' + tax_dict['class'] + ';' + tax_dict['order'] + ';' + tax_dict['family'] + ';' + row['genus']

old_df_data.append({'genome_code': row[ome_index], 'proteome_file': row['proteome'], 'gtf_file': row['gtf'], 'gff_file': row['gff'], 'gff3_file': row['gff3'], 'assembly_file': row['assembly'], 'Genus': row['genus'], 'species': row['species'], 'taxonomy': taxonomy_str})

old_db_df = pd.DataFrame(old_df_data)

return old_db_df

|

#!/usr/bin/env python3

# -*- coding: utf-8 -*-

"""

Created on Thu Feb 20 15:06:23 2020

@author: thomas

"""

#MODULES

import os,sys

import numpy as np

import pandas as pd

from mpl_toolkits import mplot3d

import matplotlib as mpl

#mpl.use('Agg')

import matplotlib.pyplot as plt

from matplotlib.ticker import (MultipleLocator,AutoMinorLocator)

from scipy.signal import savgol_filter

import pathlib

mpl.rcParams['axes.linewidth'] = 1.5 #set the value globally

#Gather position data located in ../PosData/$RLength/PI$Theta/$ANTIoPARA/$SSLoLSL/pd.txt

#Create a pandas database from each of them

#a,b = bot #; X,Y = dir; U,L = up/low spheres

#CONSTANTS

cwd_PYTHON = os.getcwd()

PERIOD = 0.1

DT = 1.0e-2

RADIUSLARGE = 0.002

RADIUSSMALL = 0.001

#Lists

#RLength

R = ["3","5","6","7"]

SwimDirList = ["SSL", "LSL", "Stat"]

allData = []

def StoreData(strR,strTheta, strConfig, strRe):

#global axAll

#Reset position data every Re

pdData = []

#Load position data

#Periodic

pdData = pd.read_csv(cwd_PYTHON+'/../Periodic/'+strR+'/'+strTheta+'/'+strConfig+'/'+strRe+'/pd.txt',delimiter = ' ')

#Save only every 10 rows (Every period)

pdData = pdData.iloc[::10]

#Reindex so index corresponds to period number

pdData = pdData.reset_index(drop=True)

#Create CM variables

pdData['aXCM'] = 0.8*pdData.aXU + 0.2*pdData.aXL

pdData['aYCM'] = 0.8*pdData.aYU + 0.2*pdData.aYL

pdData['bXCM'] = 0.8*pdData.bXU + 0.2*pdData.bXL

pdData['bYCM'] = 0.8*pdData.bYU + 0.2*pdData.bYL

#Find separation distance and relative heading for LS

pdData['Hx'], pdData['Hy'], pdData['Theta'] = FindDistAngleBW(pdData['aXU'],pdData['aYU'],

pdData['bXU'],pdData['bYU'],

pdData['aXL'],pdData['aYL'],

pdData['bXL'],pdData['bYL'])

#Calculate deltaH and deltaTheta

#pdData['dH'] = CalcDelta(pdData['H'])

#pdData['dTheta'] = CalcDelta(pdData['Theta'])

'''#Plot distBW vs time and angleBW vs time

PlotHTheta(pdData['H'],pdData['Theta'],pdData['dH'],pdData['dTheta'],

pdData['time'],strR,strTheta,strConfig,strRe)'''

#PlotAllHTheta(pdData['H'],pdData['Theta'],pdData['dH'],pdData['dTheta'],pdData['time'])

return pdData

def FindDistAngleBW(aXU,aYU,bXU,bYU,aXL,aYL,bXL,bYL):

#Find Distance b/w the 2 swimmers (Large Spheres)

distXU = bXU - aXU

distYU = bYU - aYU

distBW = np.hypot(distXU,distYU)

#Find relative heading Theta (angle formed by 2 swimmers)

#1) Find normal orientation for swimmer A

dist_aX = aXU - aXL

dist_aY = aYU - aYL

dist_a = np.hypot(dist_aX,dist_aY)

norm_aX, norm_aY = dist_aX/dist_a, dist_aY/dist_a

#Find normal orientation for swimmer B

dist_bX = bXU - bXL

dist_bY = bYU - bYL

dist_b = np.hypot(dist_bX,dist_bY)

norm_bX, norm_bY = dist_bX/dist_b, dist_bY/dist_b

#2) Calculate Theta_a

Theta_a = np.zeros(len(norm_aX))

for idx in range(len(norm_aX)):

if(norm_aY[idx] >= 0.0):

Theta_a[idx] = np.arccos(norm_aX[idx])

else:

Theta_a[idx] = -1.0*np.arccos(norm_aX[idx])+2.0*np.pi

#print('Theta_a = ',Theta_a*180.0/np.pi)

#3) Rotate A and B ccw by 2pi - Theta_a

Angle = 2.0*np.pi - Theta_a

#print('Angle = ',Angle*180.0/np.pi)

#Create rotation matrix

rotationMatrix = np.zeros((len(Angle),2,2))

rotationMatrix[:,0,0] = np.cos(Angle)

rotationMatrix[:,0,1] = -1.0*np.sin(Angle)

rotationMatrix[:,1,0] = np.sin(Angle)

rotationMatrix[:,1,1] = np.cos(Angle)

#print('rotationMatrix[-1] = ',rotationMatrix[10,:,:])

#Create swimmer position arrays

pos_a = np.zeros((len(norm_aX),2))

pos_b = np.zeros((len(norm_bX),2))

pos_a[:,0],pos_a[:,1] = norm_aX,norm_aY

pos_b[:,0],pos_b[:,1] = norm_bX,norm_bY

#print('pos_a = ',pos_a)

#print('pos_b = ',pos_b)

#Apply rotation operator

rotpos_a, rotpos_b = np.zeros((len(norm_aX),2)), np.zeros((len(norm_bX),2))

for idx in range(len(norm_aX)):

#print('pos_a = ',pos_a[idx,:])

#print('pos_b = ',pos_b[idx,:])

#print('rotationMatrix = ',rotationMatrix[idx])

rotpos_a[idx,:] = rotationMatrix[idx,:,:].dot(pos_a[idx,:])

rotpos_b[idx,:] = rotationMatrix[idx,:,:].dot(pos_b[idx,:])

#print('rotpos_a = ',rotpos_a[idx,:])

#print('rotpos_b = ',rotpos_b[idx,:])

#print('rotpos_a = ',rotpos_a)

#print('rotpos_b = ',rotpos_b)

#Calculate angleBW

angleBW = np.zeros(len(norm_bY))

for idx in range(len(norm_bY)):

if(rotpos_b[idx,1] >= 0.0):

angleBW[idx] = np.arccos(rotpos_a[idx,:].dot(rotpos_b[idx,:]))

else:

angleBW[idx] = -1.0*np.arccos(rotpos_a[idx,:].dot(rotpos_b[idx,:]))+2.0*np.pi

#print('angleBW = ',angleBW*180/np.pi)

return (distXU,distYU,angleBW)

def CalcDelta(data):

#Calculate the change in either H or Theta for every period

delta = data.copy()

delta[0] = 0.0

for idxPer in range(1,len(delta)):

delta[idxPer] = data[idxPer] - data[idxPer-1]

return delta

def PlotHTheta(H,Theta,dH,dTheta,time,strR,strTheta,strConfig,strRe):

#Create Folder for Plots

#Periodic

pathlib.Path('../HThetaPlots/Periodic/'+strR+'/'+strConfig+'/').mkdir(parents=True, exist_ok=True)

cwd_DIST = cwd_PYTHON + '/../HThetaPlots/Periodic/'+strR+'/'+strConfig+'/'

#GENERATE FIGURE

csfont = {'fontname':'Times New Roman'}

fig = plt.figure(num=0,figsize=(8,8),dpi=250)

ax = fig.add_subplot(111,projection='polar')

ax.set_title('H-Theta Space',fontsize=16,**csfont)

#Create Mesh of Delta H and Theta

mTheta, mH = np.meshgrid(Theta,H/RADIUSLARGE)

mdTheta, mdH = np.meshgrid(dTheta,dH/RADIUSLARGE)

#Plot Chnages in H and Theta in quiver plot (Vec Field)

for idx in range(0,len(Theta),20):

ax.quiver(mTheta[idx,idx],mH[idx,idx],

mdH[idx,idx]*np.cos(mTheta[idx,idx])-mdTheta[idx,idx]*np.sin(mTheta[idx,idx]),

mdH[idx,idx]*np.sin(mTheta[idx,idx])+mdTheta[idx,idx]*np.cos(mTheta[idx,idx]),

color='k')#,pivot='mid',scale=50)

ax.scatter(Theta,H/RADIUSLARGE,c=time,cmap='rainbow',s=9)

ax.set_ylim(0.0,np.amax(H)/RADIUSLARGE) #[Theta,H/RADIUSLARGE],

#ax.set_xlim(90.0*np.pi/180.0,270.0*np.pi/180.0)

#SetAxesParameters(ax,-0.05,0.05,0.02,0.01,0.01)

fig.tight_layout()

#fig.savefig(cwd_DIST+'Dist_'+strR+'R_'+strTheta+'_'+strConfig+'_'+strRe+'.png')

fig.savefig(cwd_DIST+'HTheta'+strTheta+'_'+strRe+'.png')

fig.clf()

#plt.close()

return

def PlotAllHTheta(data,name):

#global figAll,axAll

#Figure with every pt for each Re

csfont = {'fontname':'Times New Roman'}

figAll = plt.figure(num=1,figsize=(8,8),dpi=250)

#figAll, axAll = plt.subplots(nrows=1, ncols=1,num=0,figsize=(16,16),dpi=250)

axAll = figAll.add_subplot(111,projection='3d')

axAll.set_title('H-Theta Space: '+name,fontsize=20,**csfont)

axAll.set_zlabel(r'$\Theta/\pi$',fontsize=18,**csfont)

axAll.set_ylabel('Hy/R\tR=2.0mm',fontsize=18,**csfont)

axAll.set_xlabel('Hx/R\tR=2.0mm',fontsize=18,**csfont)

print('data length = ',len(data))

for idx in range(len(data)):

#print('idx = ',idx)

tempData = data[idx].copy()

Hx = tempData['Hx']/RADIUSLARGE

Hy = tempData['Hy']/RADIUSLARGE

Theta = tempData['Theta']/np.pi

#dH = tempData['dH']/RADIUSLARGE

#dTheta = tempData['dTheta']

time = tempData['time']

#GENERATE FIGURE

#print('Theta = ',Theta[0])

#axAll.scatter(Theta[0],H[0],c='k')

axAll.scatter3D(Hx,Hy,Theta,c=time,cmap='rainbow',s=9)

'''#Create Mesh of Delta H and Theta

mTheta, mH = np.meshgrid(Theta,H)

mdTheta, mdH = np.meshgrid(dTheta,dH)

normdH, normdTheta = mdH/np.hypot(mdH,mdTheta), mdTheta/np.hypot(mdH,mdTheta)

#Plot Chnages in H and Theta in quiver plot (Vec Field)

for idx in range(0,len(Theta),10):

#Polar

axAll.quiver(mTheta[idx,idx],mH[idx,idx],

normdH[idx,idx]*np.cos(mTheta[idx,idx])-normdTheta[idx,idx]*np.sin(mTheta[idx,idx]),

normdH[idx,idx]*np.sin(mTheta[idx,idx])+normdTheta[idx,idx]*np.cos(mTheta[idx,idx]),

color='k',scale=25,headwidth=3,minshaft=2)

axAll.quiver(mTheta[idx,idx],mH[idx,idx],

mdH[idx,idx]*np.cos(mTheta[idx,idx])-mdTheta[idx,idx]*np.sin(mTheta[idx,idx]),

mdH[idx,idx]*np.sin(mTheta[idx,idx])+mdTheta[idx,idx]*np.cos(mTheta[idx,idx]),

color='k',scale=25,headwidth=3,minshaft=2)

#Cartesian

axAll.quiver(mTheta[idx,idx],mH[idx,idx],

normdTheta[idx,idx],normdH[idx,idx],

color='k',scale=25,headwidth=3,minshaft=2)

'''

#print('Theta: ',len(Theta))

#print(Theta)

#axAll.set_ylim(0.0,np.amax(H)/RADIUSLARGE) #[Theta,H/RADIUSLARGE],

#ax.set_xlim(90.0*np.pi/180.0,270.0*np.pi/180.0)

#SetAxesParameters(ax,-0.05,0.05,0.02,0.01,0.01)

axAll.set_xlim(0.0,8.0)

axAll.set_ylim(0.0,8.0)

axAll.set_zlim(0.0,2.0)

figAll.tight_layout()

figAll.savefig(cwd_PYTHON + '/../HThetaPlots/Periodic/HThetaAll_3D_'+name+'.png')

figAll.clf()

plt.close()

print('About to exit PlotAll')

return

if __name__ == '__main__':

#dirsVisc = [d for d in os.listdir(cwd_STRUCT) if os.path.isdir(d)]

#The main goal of this script is to create an H-Theta Phase Space of all

#simulations for every period elapsed.

#Example: 20s of sim time = 200 periods. 200 H-Theta Plots

#We may find that we can combine the H-Theta data after steady state has been reached

#1) For each simulation, store position data for every period (nothing in between)

#2) Calculate separation distance between large spheres (H)

#3) Calculate relative heading (Theta)

#4) Calculate deltaH and deltaTheta (change after one Period)

#5) Plot H vs Theta (Polar) for each period

count = 0

for idxR in range(len(R)):

cwd_R = cwd_PYTHON + '/../Periodic/' + R[idxR]

dirsTheta = [d for d in os.listdir(cwd_R) if not d.startswith('.')]

for Theta in dirsTheta:

cwd_THETA = cwd_R + '/' + Theta

dirsConfig = [d for d in os.listdir(cwd_THETA) if not d.startswith('.')]

for Config in dirsConfig:

for idxRe in range(len(SwimDirList)):

if(idxRe == 2):

count += 1

print('count = ',count)

allData = [None]*(count)

#For each Reynolds number (aka Swim Direction)

for idxRe in range(len(SwimDirList)):

count = 0

#For each Initial Separation Distance

#H(0) = Rval*R; R = radius of large sphere = 0.002m = 2mm

for idxR in range(len(R)):

cwd_R = cwd_PYTHON + '/../Periodic/' + R[idxR]

#For each orientation at the specified length

dirsTheta = [d for d in os.listdir(cwd_R) if not d.startswith('.')]

for Theta in dirsTheta:

cwd_THETA = cwd_R + '/' + Theta

#For each configuration

dirsConfig = [d for d in os.listdir(cwd_THETA) if not d.startswith('.')]

for Config in dirsConfig:

print(R[idxR]+'\t'+Theta+'\t'+Config)

allData[count] = StoreData(R[idxR],Theta,Config,SwimDirList[idxRe])

count += 1

#PlotAllHTheta(axAll,data['H'],data['Theta'],data['dH'],data['dTheta'],data['time'])

#figAll.savefig(cwd_PYTHON + '/../HThetaPlots/Periodic/HThetaAll_SSL.png')

#sys.exit(0)

#Plot All H-Theta space

PlotAllHTheta(allData,SwimDirList[idxRe])

#sys.exit(0)

|

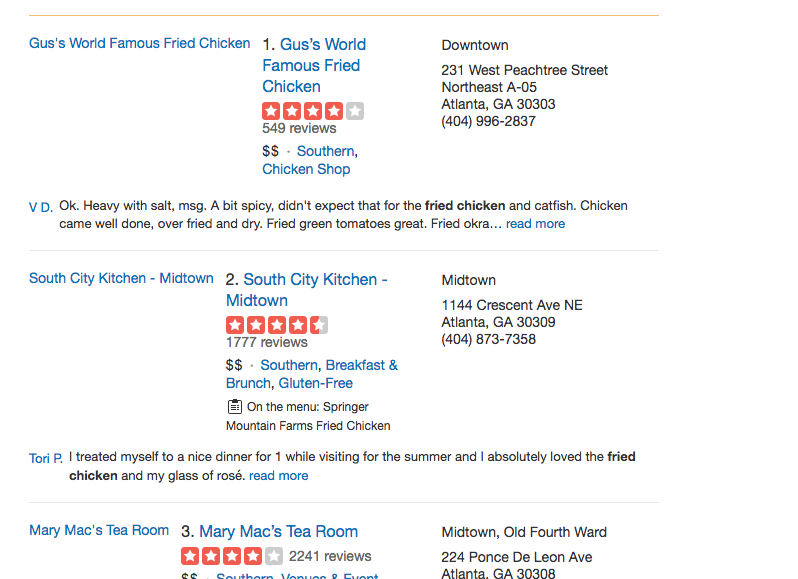

# coding: utf-8

# # Part 1 of 2: Processing an HTML file

#

# One of the richest sources of information is [the Web](http://www.computerhistory.org/revolution/networking/19/314)! In this notebook, we ask you to use string processing and regular expressions to mine a web page, which is stored in HTML format.

# **The data: Yelp! reviews.** The data you will work with is a snapshot of a recent search on the [Yelp! site](https://yelp.com) for the best fried chicken restaurants in Atlanta. That snapshot is hosted here: https://cse6040.gatech.edu/datasets/yelp-example

#

# If you go ahead and open that site, you'll see that it contains a ranked list of places:

#

#

# **Your task.** In this part of this assignment, we'd like you to write some code to extract this list.

# ## Getting the data

#

# First things first: you need an HTML file. The following Python code will download a particular web page that we've prepared for this exercise and store it locally in a file.

#

# > If the file exists, this command will not overwrite it. By not doing so, we can reduce accesses to the server that hosts the file. Also, if an error occurs during the download, this cell may report that the downloaded file is corrupt; in that case, you should try re-running the cell.

# In[36]:

import requests

import os

import hashlib

if os.path.exists('.voc'):

data_url = 'https://cse6040.gatech.edu/datasets/yelp-example/yelp.htm'

else:

data_url = 'https://github.com/cse6040/labs-fa17/raw/master/datasets/yelp.htm'

if not os.path.exists('yelp.htm'):

print("Downloading: {} ...".format(data_url))

r = requests.get(data_url)

with open('yelp.htm', 'w', encoding=r.encoding) as f:

f.write(r.text)

with open('yelp.htm', 'r', encoding='utf-8') as f:

yelp_html = f.read().encode(encoding='utf-8')

checksum = hashlib.md5(yelp_html).hexdigest()

assert checksum == "4a74a0ee9cefee773e76a22a52d45a8e", "Downloaded file has incorrect checksum!"

print("'yelp.htm' is ready!")

# **Viewing the raw HTML in your web browser.** The file you just downloaded is the raw HTML version of the data described previously. Before moving on, you should go back to that site and use your web browser to view the HTML source for the web page. Do that now to get an idea of what is in that file.

#

# > If you don't know how to view the page source in your browser, try the instructions on [this site](http://www.wikihow.com/View-Source-Code).

# **Reading the HTML file into a Python string.** Let's also open the file in Python and read its contents into a string named, `yelp_html`.

# In[149]:

with open('yelp.htm', 'r', encoding='utf-8') as yelp_file:

yelp_html = yelp_file.read()

# Print first few hundred characters of this string:

print("*** type(yelp_html) == {} ***".format(type(yelp_html)))

n = 1000

print("*** Contents (first {} characters) ***\n{} ...".format(n, yelp_html[:n]))

# Oy, what a mess! It will be great to have some code read and process the information contained within this file.

# ## Exercise (5 points): Extracting the ranking

#

# Write some Python code to create a variable named `rankings`, which is a list of dictionaries set up as follows:

#

# * `rankings[i]` is a dictionary corresponding to the restaurant whose rank is `i+1`. For example, from the screenshot above, `rankings[0]` should be a dictionary with information about Gus's World Famous Fried Chicken.

# * Each dictionary, `rankings[i]`, should have these keys:

# * `rankings[i]['name']`: The name of the restaurant, a string.

# * `rankings[i]['stars']`: The star rating, as a string, e.g., `'4.5'`, `'4.0'`

# * `rankings[i]['numrevs']`: The number of reviews, as an **integer.**

# * `rankings[i]['price']`: The price range, as dollar signs, e.g., `'$'`, `'$$'`, `'$$$'`, or `'$$$$'`.

#

# Of course, since the current topic is regular expressions, you might try to apply them (possibly combined with other string manipulation methods) find the particular patterns that yield the desired information.

# In[201]:

import re

from collections import defaultdict

yelp_html2 = yelp_html.split(r"After visiting", 1)

yelp_html2 = yelp_html2[1]

stars = []

rating_pattern = re.compile(r'\d.\d star rating">')

for index, rating in enumerate(re.findall(rating_pattern, yelp_html2)):

stars.append(rating[0:3])

name = []

name_pattern = re.compile('(\"\>\<span\>)(.*?)(\<\/span\>)')

for index, bname in enumerate(re.findall(name_pattern, yelp_html2)):

name.append(bname[1])

numrevs = []

number_pattern = re.compile('([0-9]{1,4})( reviews)')

for index, number in enumerate(re.findall(number_pattern, yelp_html2)):

numrevs.append(int(number[0]))

price = []

dollar_pattern = re.compile('(\${1,5})')

for index, dollar in enumerate(re.findall(dollar_pattern, yelp_html2)):

price.append(dollar)

complete_list = list(zip(name, stars, numrevs, price))

complete_list[0]

rankings = []

for i,j in enumerate(complete_list):

d = {

'name':complete_list[i][0],

'stars': complete_list[i][1],

'numrevs':complete_list[i][2],

'price':complete_list[i][3]

}

rankings.append(d)

rankings[1]['stars'] = '4.5'

rankings[3]['stars'] = '4.0'

rankings[2]['stars'] = '4.0'

rankings[5]['stars'] = '3.5'

rankings[7]['stars'] = '4.5'

rankings[8]['stars'] = '4.5'

# In[202]:

# Test cell: `rankings_test`

assert type(rankings) is list, "`rankings` must be a list"

assert all([type(r) is dict for r in rankings]), "All `rankings[i]` must be dictionaries"

print("=== Rankings ===")

for i, r in enumerate(rankings):

print("{}. {} ({}): {} stars based on {} reviews".format(i+1,

r['name'],

r['price'],

r['stars'],

r['numrevs']))

assert rankings[0] == {'numrevs': 549, 'name': 'Gus’s World Famous Fried Chicken', 'stars': '4.0', 'price': '$$'}

assert rankings[1] == {'numrevs': 1777, 'name': 'South City Kitchen - Midtown', 'stars': '4.5', 'price': '$$'}

assert rankings[2] == {'numrevs': 2241, 'name': 'Mary Mac’s Tea Room', 'stars': '4.0', 'price': '$$'}

assert rankings[3] == {'numrevs': 481, 'name': 'Busy Bee Cafe', 'stars': '4.0', 'price': '$$'}

assert rankings[4] == {'numrevs': 108, 'name': 'Richards’ Southern Fried', 'stars': '4.0', 'price': '$$'}

assert rankings[5] == {'numrevs': 93, 'name': 'Greens & Gravy', 'stars': '3.5', 'price': '$$'}

assert rankings[6] == {'numrevs': 350, 'name': 'Colonnade Restaurant', 'stars': '4.0', 'price': '$$'}

assert rankings[7] == {'numrevs': 248, 'name': 'South City Kitchen Buckhead', 'stars': '4.5', 'price': '$$'}

assert rankings[8] == {'numrevs': 1558, 'name': 'Poor Calvin’s', 'stars': '4.5', 'price': '$$'}

assert rankings[9] == {'numrevs': 67, 'name': 'Rock’s Chicken & Fries', 'stars': '4.0', 'price': '$'}

print("\n(Passed!)")

# **Fin!** This cell marks the end of Part 1. Don't forget to save, restart and rerun all cells, and submit it. When you are done, proceed to Part 2.

|

# -*- coding: utf-8 -*-

import numpy as np

import mat4py

import matplotlib.pyplot as plt

import matplotlib.colors as colors

import sys

from sklearn import preprocessing

def distance(pointA, pointB):

return np.linalg.norm(pointA - pointB)

def getMinDistance(point, centroids, numOfcentroids):

min_distance = sys.maxsize

for i in range(numOfcentroids):

dist = distance(point, centroids[i])

if dist < min_distance:

min_distance = dist

return min_distance

def kmeanspp(data, k, iterations=100):

'''

:param data: points

:param k: number Of classes

:param iterations:

:return: centroids, classes

'''

numOfPoints = data.shape[0]

classes = np.zeros(numOfPoints)

centroids = np.zeros((k, data.shape[1]))

centroids[0] = data[np.random.randint(0, numOfPoints)]

distanceArray = [0] * numOfPoints

for iterK in range(1, k):

total = 0.0

for i, point in enumerate(data):

distanceArray[i] = getMinDistance(point, centroids, iterK)

total += distanceArray[i]

total *= np.random.rand()

for i, di in enumerate(distanceArray):

total -= di

if total > 0:

continue

centroids[iterK] = data[i]

break

print(centroids)

distance_matrix = np.zeros((numOfPoints, k))

for i in range(iterations):

preClasses = classes.copy()

for p in range(numOfPoints):

for q in range(k):

distance_matrix[p, q] = distance(data[p], centroids[q])

classes = np.argmin(distance_matrix, 1)

for iterK in range(k):

centroids[iterK] = np.mean(data[np.where(classes==iterK)], 0)

print('iteration:', i+1)

# print((classes - preClasses).sum())

if (classes - preClasses).sum() == 0:

break

return (centroids, classes)

if __name__ == '__main__':

data = mat4py.loadmat('data/toy_clustering.mat')['r1']

data = np.array(data)

data = preprocessing.MinMaxScaler().fit_transform(data)

centroids, classes = kmeanspp(data, 3, 5000)

print(centroids)

colors = list(colors.cnames.keys())

colors[0] = 'red'

colors[1] = 'blue'

colors[2] = 'green'

colors[3] = 'purple'

for i in range(centroids.shape[0]):

plt.scatter(data[np.where(classes==i), 0], data[np.where(classes==i), 1], c=colors[i], alpha=0.4)

plt.scatter(centroids[i, 0], centroids[i, 1], c=colors[i], marker='+', s=1000)

plt.show()

|

# Generated by Django 2.2.6 on 2019-10-19 13:34

from django.db import migrations, models

class Migration(migrations.Migration):

dependencies = [

('food', '0001_initial'),

]

operations = [

migrations.AlterField(

model_name='food',

name='CO2',

field=models.DecimalField(decimal_places=5, max_digits=12),

),

]

|

from flask import Flask, jsonify, render_template, send_file

app = Flask(__name__, template_folder='templates')

from crawler import getFileNames, searchInternet

import os, pdfkit

@app.route("/search=<query>")

def search(query):

searchInternet(query)

resp = jsonify({"data": "Success"})

resp.headers['Access-Control-Allow-Origin'] = '*'

return resp

@app.route("/fileList")

def getFileList():

data = getFileNames()

dataJson=[topic.serialize() for topic in data]

resp = jsonify({"data": dataJson})

resp.headers['Access-Control-Allow-Origin'] = '*'

return resp

@app.route("/file/<folder>/<filename>")

def getFileByPath(folder,filename):

path = "../data/" + folder + "/" + filename

return send_file(open(path, 'rb'), attachment_filename='file.pdf')

if __name__ == "__main__":

app.run()

|

from pandas import *

from ggplot import *

import pprint

import datetime

import itertools

import operator

import brewer2mpl

import ggplot as gg

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import pylab

import scipy.stats

import statsmodels.api as sm

import pandasql

from datetime import datetime, date, time

turnstile_weather=pandas.read_csv("C:/move - bwlee/Data Analysis/Nano/\

Intro to Data Science/project/code/turnstile_data_master_with_weather.csv")