| url | text | date | metadata |

|---|---|---|---|

https://unusedcycles.wordpress.com/category/physics/

|

# Unused Cycles

## May 30, 2008

### Physics of GPS relativistic time delay

So I realized today I’ve been posting quite a bit about GNU/Linux and mathematics, but I haven’t really done much with physics. So here’s my first physics post!

This problem was assigned in the undergraduate general relativity course I took in spring 2008. It's from James B. Hartle's book Gravity: An Introduction to Einstein's General Relativity, chapter 6, problem 9.

A GPS satellite emits signals at a constant rate as measured by an onboard clock. Calculate the fractional difference in the rate at which these are received by an identical clock on the surface of Earth. Take both the effects of special relativity and gravitation into account to leading order in $1/c^2$. For simplicity, assume the satellite is in a circular equatorial orbit, the ground-based clock is on the equator, and that the angle between the propagation of the signal and the velocity of the satellite is 90° in the instantaneous rest frame of the receiver.

The problem is simplified so that the calculations are doable at the undergraduate level. It therefore uses geometric Newtonian gravity, that is, the line element

$\displaystyle ds^2=-\left(1+\frac{2\Phi}{c^2}\right)(c dt)^2+\left(1-\frac{2\Phi}{c^2}\right)(dx^2+dy^2+dz^2).$

Here $\Phi=-GM/r$ is the Newtonian gravitational potential of Earth. One could, of course, use Schwarzschild coordinates (and this is, in effect, what I did for the general relativistic part). The solution is broken into three parts: orbital information, special relativistic effects, and general relativistic effects.

# Orbital Information

The key fact here (not given in the problem) is that GPS satellites take $t = 12$ hours to orbit Earth (more precisely, half a sidereal day, about 11 h 58 min). Recalling that the speed of a circular orbit in Newtonian gravity is $v_{\text{orbit}}=\sqrt{\frac{GM}{R}}$, where $R$ is the radius of the orbit and $M$ is the mass of the body being orbited, we get

$\begin{array}{rcl}\displaystyle \frac{2\pi R}{t}&\displaystyle=&\displaystyle \sqrt{\frac{GM}{R}}\vspace{0.3 cm}\\\displaystyle R&\displaystyle=&\displaystyle \sqrt[3]{\frac{GMt^2}{4\pi^2}}\vspace{0.3 cm}\end{array}$

Plugging in the appropriate values gives $R$=26,605 km and $v_\text{orbit}$=3871.0 m/s.

# Special Relativistic Effects

Let $A$ denote the ground observer and $B$ denote the satellite. Then we can use time dilation to get that

$\begin{array}{rcl}\displaystyle\frac{\Delta \tau_{B,S}}{\Delta\tau_{A,S}}&\displaystyle=&\displaystyle\frac{1}{\gamma}\vspace{0.3 cm}\\&\displaystyle=&\displaystyle \sqrt{1-v_\text{orbit}^2/c^2}\vspace{0.3 cm}\\&\displaystyle=&\displaystyle 0.999999999917.\end{array}$

# General Relativistic Effects

For general relativistic effects, note that the frequencies are related by

$\displaystyle \frac{\Delta\tau_{B,G}}{\Delta\tau_{A,G}}=\frac{\omega_A}{\omega_B}=\sqrt{\frac{g_{00}\left[\vec{x}(B)\right]}{g_{00}\left[\vec{x}(A)\right]}}=\sqrt{\frac{1-\frac{2GM}{Rc^2}}{1-\frac{2GM}{R_\oplus c^2}}}.$

The derivation of this formula is beyond the scope of chapter 6 and uses Killing vectors and photon geodesics, which are introduced in a later chapter. However, an approximation to this result is given in equation 6.12 of Hartle. Plugging in the appropriate values gives the ratio $1+5.2873\times 10^{-10}$, very nearly 1.
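As a sketch of where these numbers come from, assume $\Phi=-GM/r$ in the line element above, so that $g_{00}=-\left(1-\frac{2GM}{rc^2}\right)$, and keep only terms of order $1/c^2$, using $\sqrt{1+x}\approx 1+x/2$ and $(1-y)^{-1}\approx 1+y$. The special relativistic factor expands the same way once $v_\text{orbit}^2=GM/R$ is substituted:

$$
\begin{aligned}
\frac{\Delta\tau_{B,G}}{\Delta\tau_{A,G}}
  &= \sqrt{\frac{1-\frac{2GM}{Rc^2}}{1-\frac{2GM}{R_\oplus c^2}}}
   \approx 1+\frac{GM}{c^2}\left(\frac{1}{R_\oplus}-\frac{1}{R}\right),\\
\frac{\Delta\tau_{B,S}}{\Delta\tau_{A,S}}
  &= \sqrt{1-\frac{v_\text{orbit}^2}{c^2}}
   \approx 1-\frac{GM}{2Rc^2},\\
\frac{\Delta\tau_B}{\Delta\tau_A}
  &\approx 1+\frac{GM}{c^2}\left(\frac{1}{R_\oplus}-\frac{3}{2R}\right).
\end{aligned}
$$

With $R\approx 26{,}605$ km and $R_\oplus\approx 6378$ km, the last line gives roughly $4.45\times 10^{-10}$, in line with the combined result the post obtains.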

Putting things together, the whole shift is

$\begin{array}{rcl}\displaystyle\frac{\omega_A}{\omega_B}=\frac{\Delta\tau_B}{\Delta\tau_A}&\displaystyle=&\displaystyle(0.999999999917)(1+5.2873\times 10^{-10})\vspace{0.3 cm}\\\displaystyle&=&\displaystyle 1+4.4573\times 10^{-10}.\end{array}$

As a check, suppose that a day passes on Earth (in other words, $\Delta\tau_A$ = 24 hours = 86,400 s). Then the satellite clock gains $(86{,}400\text{ s})(4.4573\times 10^{-10}) = 38.511\ \mu\text{s}$ per day. According to Wikipedia, this number is 38 μs.

Also according to Wikipedia, the desired frequency on Earth is $\omega_A$ = 10.23 MHz. This means $\omega_B$ must be 0.0045598 Hz lower (compare this to Wikipedia's figure of 0.0045700 Hz lower).
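The whole chain of numbers above can be reproduced with a short script. This is a sketch, not the author's own calculation: the constants (Earth's $GM$ and equatorial radius) are assumed standard values, so the results differ from the post's in the last digits, and it uses the exact square-root forms rather than first-order expansions.

```python
import math

# Assumed constants (standard values, not taken from the post)
GM_EARTH = 3.986004418e14  # Earth's gravitational parameter GM, m^3/s^2
R_EARTH = 6.378137e6       # Earth's equatorial radius, m
C = 299_792_458.0          # speed of light, m/s
PERIOD = 12 * 3600.0       # orbital period used in the post, s
F_GROUND = 10.23e6         # desired frequency on the ground, Hz


def gps_clock_shift(period=PERIOD, gm=GM_EARTH, r_earth=R_EARTH, c=C):
    """Return (R, v, sr_factor, gr_factor, total_shift) for a circular equatorial orbit."""
    # Orbital radius from 2*pi*R/t = sqrt(GM/R)
    r = (gm * period**2 / (4 * math.pi**2)) ** (1 / 3)
    v = math.sqrt(gm / r)
    # Special relativity: dtau_B/dtau_A = 1/gamma
    sr = math.sqrt(1 - v**2 / c**2)
    # Gravitation: sqrt(g00(B)/g00(A)) with Phi = -GM/r
    gr = math.sqrt((1 - 2 * gm / (r * c**2)) / (1 - 2 * gm / (r_earth * c**2)))
    return r, v, sr, gr, sr * gr - 1


r, v, sr, gr, shift = gps_clock_shift()
# Time gained by the satellite clock per Earth day, in microseconds
drift_us_per_day = shift * 86_400 * 1e6
# omega_B = omega_A / (1 + shift), so omega_A - omega_B = omega_A * shift / (1 + shift)
offset_hz = F_GROUND * shift / (1 + shift)

print(f"R = {r / 1e3:.0f} km, v = {v:.1f} m/s")
print(f"shift = {shift:.4e}, drift = {drift_us_per_day:.2f} us/day, offset = {offset_hz:.5f} Hz")
```

With these constants the total shift comes out at roughly $4.45\times 10^{-10}$, or about 38.5 μs per day, close to the post's figures.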

The real problem, of course, is not as simple as it was made here. Difficulties arise when one takes into account that Earth is rotating (so the metric is more complicated), that the receiver is not necessarily in the satellite's orbital plane, and that the orbit is not perfectly circular. However, these corrections are small and don't change the result much.

Hope this was an interesting read!

|

2017-08-20 09:42:24

|

{"extraction_info": {"found_math": true, "script_math_tex": 0, "script_math_asciimath": 0, "math_annotations": 0, "math_alttext": 0, "mathml": 0, "mathjax_tag": 0, "mathjax_inline_tex": 0, "mathjax_display_tex": 0, "mathjax_asciimath": 0, "img_math": 17, "codecogs_latex": 0, "wp_latex": 0, "mimetex.cgi": 0, "/images/math/codecogs": 0, "mathtex.cgi": 0, "katex": 0, "math-container": 0, "wp-katex-eq": 0, "align": 0, "equation": 0, "x-ck12": 0, "texerror": 0, "math_score": 0.8948217630386353, "perplexity": 419.904216315749}, "config": {"markdown_headings": true, "markdown_code": true, "boilerplate_config": {"ratio_threshold": 0.18, "absolute_threshold": 10, "end_threshold": 15, "enable": true}, "remove_buttons": true, "remove_image_figures": true, "remove_link_clusters": true, "table_config": {"min_rows": 2, "min_cols": 3, "format": "plain"}, "remove_chinese": true, "remove_edit_buttons": true, "extract_latex": true}, "warc_path": "s3://commoncrawl/crawl-data/CC-MAIN-2017-34/segments/1502886106367.1/warc/CC-MAIN-20170820092918-20170820112918-00523.warc.gz"}

|

https://monadical.com/posts/candy-machine-mint-and-reveal.html

|

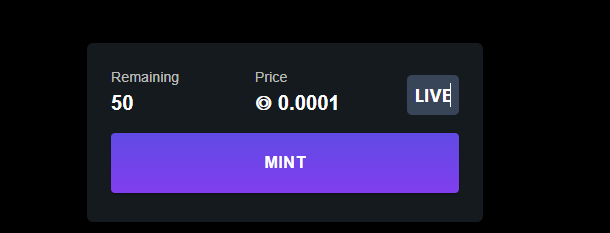

How to mint and reveal NFTs with Candy Machine V2 - HedgeDoc

3085 views

owned this note

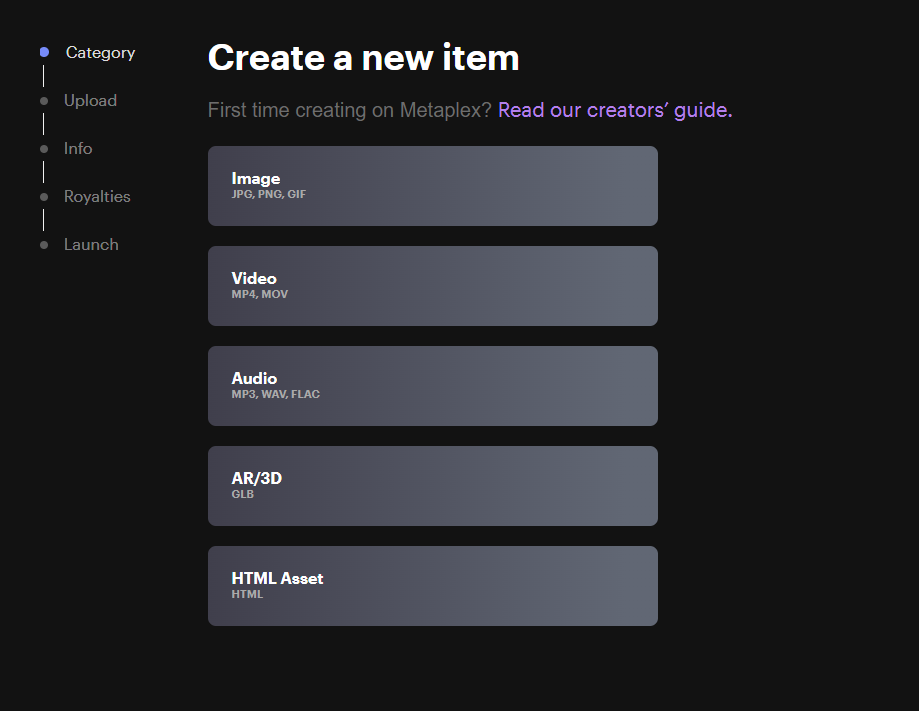

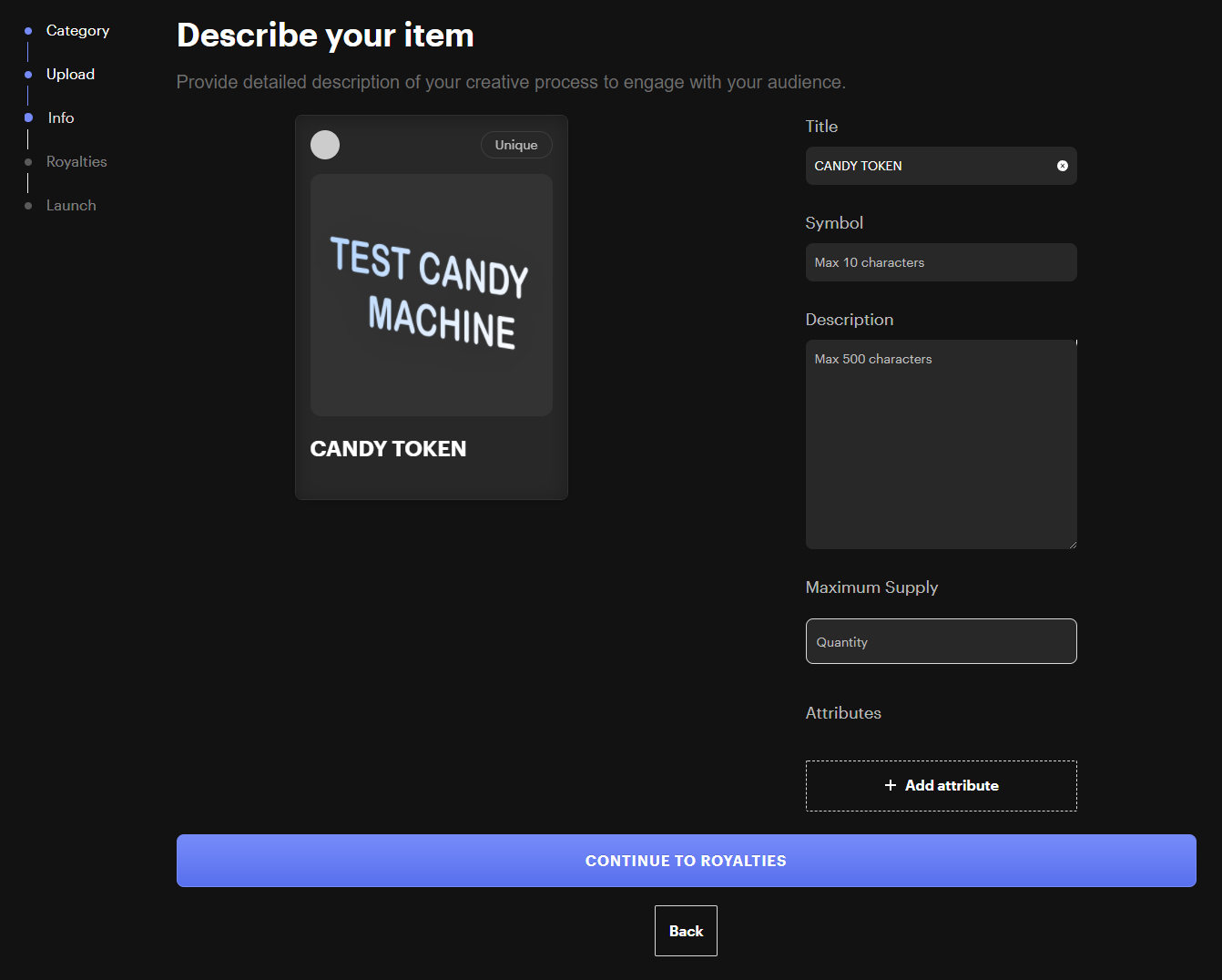

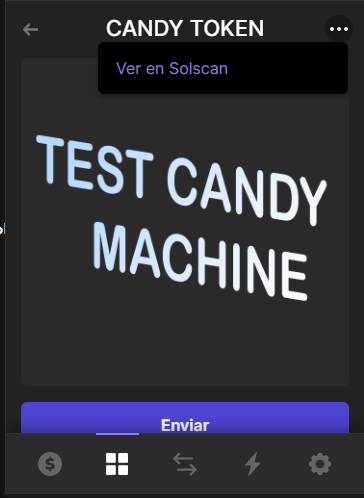

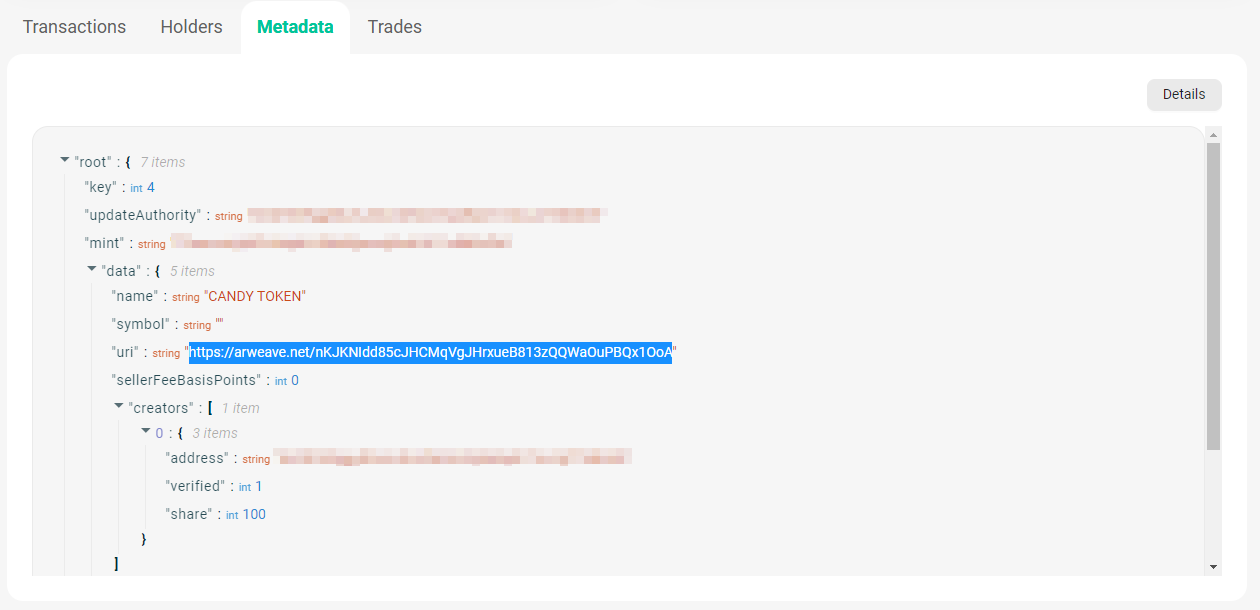

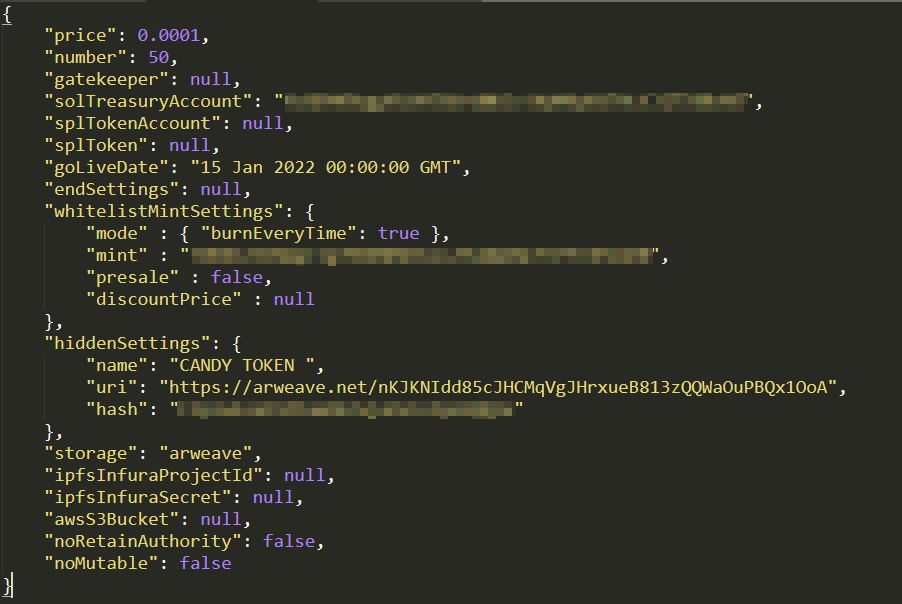

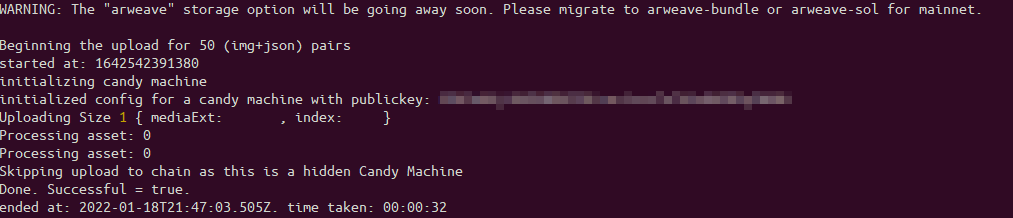

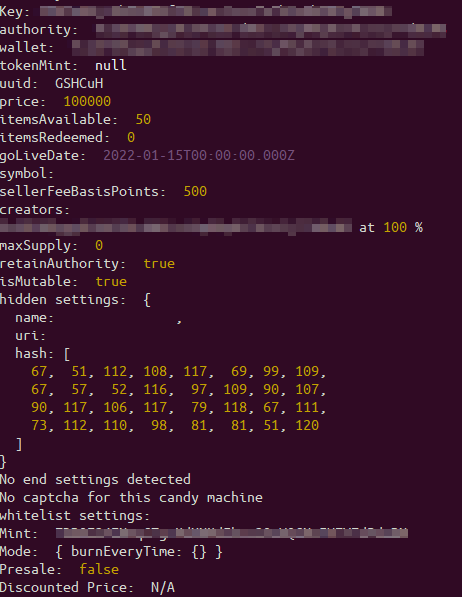

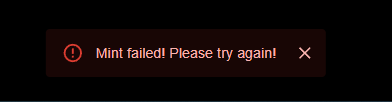

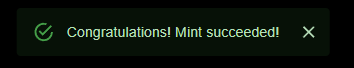

<center>

# How to mint and reveal NFTs with Candy Machine V2

*Written by Kevin Guevara and Juan Diego García. Originally published 2022-02-09 on the [Monadical blog](https://monadical.com/blog.html).*

</center>

Metaplex's first version of Candy Machine had a few too many problems, such as restricting users and reusing the same NFT images. To help users solve these issues, a new version of the program has recently been released: Candy Machine v2. It comes with a new tool suite that addresses limitations users ran into with the first version. This is great news, but it also means that we have to learn how to use v2's new tools. So, we might as well start right now!

In this tutorial, I'm going to explain how to create a "mint and wait for reveal" Candy Machine. This allows users to mint "closed" NFTs and wait for them to be "revealed" at a later date. Delaying the reveal adds an element of luck and excitement to the minting experience, which makes it a great way to gamify your Candy Machine.

To do this, you'll need to drop a Candy Machine v2 with two of the new configuration settings: [whitelistMintSettings](https://github.com/metaplex-foundation/docs/blob/main/docs/candy-machine-v2/02-configuration.md#whitelist-settings) and [hiddenSettings](https://github.com/metaplex-foundation/docs/blob/main/docs/candy-machine-v2/02-configuration.md#hidden-settings). With these settings, you can deploy a Candy Machine that can only be minted by specific users, uses a single asset, and can be minted as many times as needed. Once deployed, these features let you mint blank NFTs and simulate a "wait for reveal" feature.

## Create your Candy Machine.

### 1. Create your development environment.

Before you do anything, you need to install [SOLANA CLI](https://docs.solana.com/es/cli/install-solana-cli-tools), [SPL-TOKEN](https://spl.solana.com/token) and [CANDY MACHINE V2](https://github.com/metaplex-foundation/docs/blob/main/docs/candy-machine-v2/01-getting-started.md). All of the linked pages contain installation instructions.

### 2. Prepare your folder structure.

Next, you need to create some folders and files. For now, just create an "assets" folder next to a **config.json** file.

The assets folder is where you will save the metadata used in the Candy Machine. Usually, you would put all the images you are going to mint here. Today, you'll take a different approach.

**config.json** is the file you'll use to define your Candy Machine configuration. For now, populate it with this blank configuration:

```json
{
  "price": 1.0,
  "number": 10,
  "gatekeeper": null,
  "solTreasuryAccount": "<YOUR WALLET ADDRESS>",
  "splTokenAccount": null,
  "splToken": null,
  "goLiveDate": "25 Dec 2021 00:00:00 GMT",
  "endSettings": null,
  "whitelistMintSettings": null,
  "hiddenSettings": null,
  "storage": "arweave-sol",
  "ipfsInfuraProjectId": null,
  "ipfsInfuraSecret": null,
  "awsS3Bucket": null,
  "noRetainAuthority": false,
  "noMutable": false
}
```

### 3. Define your custom token.

*Note: If you are using an existing SPL token, you can use it instead and skip this step.*

*Note 2: This token is only used to whitelist the wallets that hold it. Users will still need to pay the minting price in SOL.*

If you are going to create a whitelist, you need a way to differentiate allowed users from blocked users. In this version of Candy Machine, you can do this with an [SPL-TOKEN](https://spl.solana.com/token). The token behaves like a whitelist ticket: token owners are allowed to mint from the Candy Machine, while those who don't own the token are denied.
```bash
# CREATE THE TOKEN
spl-token create-token --decimals 0 > token-output.txt

# CREATE THE ACCOUNT THAT IS GOING TO HOLD THAT TOKEN
spl-token create-account <TOKEN> > account-treasury.txt

# MINT AN AMOUNT OF TOKENS TO THAT ACCOUNT
spl-token mint <TOKEN> 1000 <ACCOUNT>
```

spl-token uses the solana-cli default configuration file. If you want to use mainnet, pass **-u mainnet-beta** or update your config file. Save these output files. You are going to need them later!

### 4. Create your master edition.

Before you do anything, make sure that you are running Metaplex on the correct network (mainnet, testnet or devnet).

Since you only need one asset, upload it to Arweave by creating a master edition NFT and extracting its Arweave URI. To do this, I am going to use the Metaplex interface, with a single image as the master token.

> For security and standards purposes, you must use the same wallet that you will use to create your Candy Machine. If you need a tutorial on how to export your Phantom wallet to your Solana CLI, take a look at [this one](https://monadical.com/posts/export-phantom-wallet.html).

1. Create your asset as an NFT.
2. With your master token in your wallet, go to Phantom and open your NFT in Solscan.
3. In the metadata, copy the Arweave URI.

### 5. Prepare your assets.

As the documentation says,

> Your assets consist of a collection of images (e.g., .png) and metadata (.json) files organized in a 1:1 mapping - i.e., each image has a corresponding metadata file.

In this example I'm using only one asset, so my assets folder contains just `0.png` and `0.json`.

In `0.json` I need to specify my NFT metadata, pointing to my `0.png` file:
```json
{
  "name": "CANDY TOKEN",
  "symbol": "",
  "description": "",
  "seller_fee_basis_points": 500,
  "image": "0.png",
  "attributes": [
    {"trait_type": "Layer-1", "value": "0"},
    {"trait_type": "Layer-2", "value": "0"},
    {"trait_type": "Layer-3", "value": "0"},
    {"trait_type": "Layer-4", "value": "1"}
  ],
  "properties": {
    "creators": [{"address": "<YOUR WALLET ADDRESS>", "share": 100}],
    "files": [{"uri": "0.png", "type": "image/png"}]
  },
  "collection": {"name": "numbers", "family": "numbers"}
}
```

Be sure that the creator address is the same address you used to create your master edition NFT, because we will be using that data for the Candy Machine.

### 6. Edit your config.json file.

I'm now going to create a Candy Machine with the following features:

- Contains 50 NFTs that all use the same file, at a price of 0.0001 SOL.
- Uses a whitelist that burns the token every time it is used.
- Has a "go live" date of Jan 15, 2022.

If you want to work on more complex scenarios, check out the [official documentation](https://github.com/metaplex-foundation/docs/blob/main/docs/candy-machine-v2/02-configuration.md).
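The `hiddenSettings` block in the configuration for this step needs a `hash` value, and the placeholder only asks for a 32-character string. One way to produce such a string is sketched below with Python's standard `secrets` module; it assumes that any alphanumeric characters are acceptable, and `random_hash` is a hypothetical helper name, not part of Metaplex.

```python
import secrets
import string

# Alphanumeric alphabet; assumed to be acceptable for the hiddenSettings "hash" field
ALPHABET = string.ascii_letters + string.digits


def random_hash(length: int = 32) -> str:
    """Return a cryptographically random alphanumeric string of the given length."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    # Paste the printed value into "hash" under "hiddenSettings" in config.json
    print(random_hash())
```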
At this point, your config.json file should look like this:

```json
{
  "price": 0.0001,
  "number": 50,
  "gatekeeper": null,
  "solTreasuryAccount": "<YOUR WALLET ADDRESS>",
  "splTokenAccount": null,
  "splToken": null,
  "goLiveDate": "15 Jan 2022 00:00:00 GMT",
  "endSettings": null,
  "whitelistMintSettings": {
    "mode": { "burnEveryTime": true },
    "mint": "<YOUR TOKEN ADDRESS>",
    "presale": false,
    "discountPrice": null
  },
  "hiddenSettings": {
    "name": "<YOUR NFT NAME> ",
    "uri": "<THE ARWEAVE URI>",
    "hash": "<RANDOM 32-CHARACTER STRING>"
  },
  "storage": "arweave",
  "ipfsInfuraProjectId": null,
  "ipfsInfuraSecret": null,
  "awsS3Bucket": null,
  "noRetainAuthority": false,
  "noMutable": false
}
```

As you can see, I'm using [whitelistMintSettings](https://github.com/metaplex-foundation/docs/blob/main/docs/candy-machine-v2/02-configuration.md#whitelist-settings) and [hiddenSettings](https://github.com/metaplex-foundation/docs/blob/main/docs/candy-machine-v2/02-configuration.md#hidden-settings). Refer to the official documentation if you wish to change the configuration.

With this configuration, each user who mints is going to receive an NFT named `<YOUR NFT NAME> #<EDITION NUMBER>`. You can add a space at the end of your NFT name to make it prettier.

### 7. Upload your Candy Machine.

> Remember, all of these commands should be executed using the correct RPC (mainnet, devnet or your custom RPC). You can verify this by running `solana config get`. This will also use the wallet you imported into the CLI.

Open a console in your Candy Machine folder and run this command:

```bash
ts-node <YOUR METAPLEX FOLDER>/js/packages/cli/src/candy-machine-v2-cli.ts upload -e devnet -k <PATH_TO_KEYPAIR> -cp config.json -c <YOUR CANDY MACHINE NAME> ./assets
```

If the upload reports success, congrats, your Candy Machine should be live!
You can get the Candy Machine address using:

```bash
ts-node <YOUR METAPLEX FOLDER>/js/packages/cli/src/candy-machine-v2-cli.ts show -e devnet -k <PATH_TO_KEYPAIR> -cp config.json -c <YOUR CANDY MACHINE NAME>
```

You should see output describing your Candy Machine. If you don't, take a look at the error. For example, your console might show this error:

```
(node:107) UnhandledPromiseRejectionWarning:Error: Invalid public key input
```

If you run into an error like this, review the public keys in your config.json. In your Candy Machine folder you will also find a `.cache` folder, created when you tried to upload your Candy Machine, that contains all the Candy Machine info. Delete this folder and retry the upload.

### 8. Test your Candy Machine.

If you are working on devnet, use Metaplex to test your Candy Machine. Metaplex provides a basic UI for this, and it works well for testing purposes.

```bash
# GO TO THIS FOLDER
cd <YOUR METAPLEX FOLDER>/js/packages/candy-machine-ui

# UPDATE THE .ENV FILE
nano .env
```

The `.env` file should contain:

```
REACT_APP_CANDY_MACHINE_ID=<YOUR CANDY MACHINE ID>
REACT_APP_SOLANA_NETWORK=<NETWORK devnet OR mainnet>
REACT_APP_SOLANA_RPC_HOST=<YOUR SOLANA RPC>
```

```bash
# INSTALL AND RUN THE PROJECT
yarn install
yarn start
```

Go to `localhost:3000` and connect your wallet. You should then see the Candy Machine minting UI.

You should now be able to mint NFTs. Remember, users can only mint an NFT if they hold the whitelist token.

## Reveal your NFTs.

The Candy Machine I created has two problems. First, the creator needs to verify every NFT created with the Candy Machine, which is a laborious and time-consuming task. Second, I need a way to "reveal" every NFT and show its real content.

You can solve both issues with this open-source tool: [Metaboss](https://github.com/samuelvanderwaal/metaboss). For installation, follow the instructions in the [official docs](https://metaboss.rs/overview.html).

### 1. Identify your Candy Machine NFTs.
If you want to update the metadata of a specific NFT or sign it, you'll need its mint. Mints are unique keys that identify an NFT. To get the mints, run this Metaboss command with your Candy Machine ID and your network:

```bash
metaboss -r <SOLANA_RPC> snapshot mints --candy-machine-id <CANDY_MACHINE_ID> --v2 --output <OUTPUT_DIR>
```

Open the output file. You should see an array of public keys: those are the mint keys of your Candy Machine. If the results are empty, check the command parameters and try again.

### 2. Generate new metadata.

You need to generate the metadata for the revealed NFTs. To do that, create a JSON file with the configuration for each NFT that you wish to update:

```json
{
  "mint_account": "MINT_ADDRESS",
  "nft_data": {
    "name": "NFT_NAME",
    "symbol": "",
    "uri": "ARWEAVE_REVEALED_METADATA_LINK",
    "seller_fee_basis_points": SELLER_FEE_BASIS_POINTS,
    "creators": [
      {"address": "<YOUR_CANDY_MACHINE_CREATOR_ADDRESS>", "verified": true, "share": 0},
      {"address": "<CREATOR_ADDRESS>", "verified": true, "share": 100}
    ]
  }
}
```

To get ARWEAVE_REVEALED_METADATA_LINK, you can repeat [step 4](https://docs.monadical.com/uu8SYKPyTMOsN_xxDKQrrA?both#4-Create-your-master-edition). MINT_ADDRESS is the mint key found in the [previous step](https://docs.monadical.com/uu8SYKPyTMOsN_xxDKQrrA?both#4-Create-your-master-edition).

### 2.1 Generate new metadata using different assets.

If you'd like to reveal different NFTs in different stages, say 20% one day and 80% the next day, you will need one metadata link for each image used in your NFTs. You can use the repo presented below to achieve that.

Clone [this repo](https://github.com/Monadical-SAS/CandyMachineCommonScripts) and take a look at *config.json*. In this file, specify how many divisions you want and the parameters for each one:

| Attribute | Description |
| ------ | ------ |
| Name | The name of the token. |
| Seller_fee_basis_points | The value for the seller fee. |
| Quantity | The number of tokens to select for this division. |
| Uri | An Arweave link to the metadata that will be applied in the update. |
| Creators | A list of values for each creator: address, verified and share. |

Remember that your first creator should always be the Candy Machine creator address, and the second your wallet address.

Create a *data.json* file with all the mints you want to update, and run the script. The data.json file is the one generated in the previous step. Note that the sum of the **quantity** values cannot be greater than the Candy Machine's number of items.

Open a console in the repository folder and run this command:

```bash
python generate_files.py
```

This will generate one folder per reveal, each with the corresponding JSON files. You can also use this script to generate one big folder with all the NFT metadata to reveal.

### 3. Update the NFT assets.

This is the reveal part: you need to update the metadata of the minted NFTs. To update the metadata of several NFTs, run the following command:

```bash
metaboss update data-all --keypair <PATH_TO_KEYPAIR> --data-dir <PATH_TO_DATA_DIR>
```

You can find more information about this command [here](https://metaboss.rs/update.html). The files must use the same format as in step 2: each file contains the data for one NFT and should be saved in the PATH_TO_DATA_DIR directory.

### 4. Sign all the NFTs.

To sign the NFTs generated by the Candy Machine, use in Metaboss the same creator wallet that you used to create the NFTs. Then run this command:

```bash
metaboss -r <SOLANA_RPC> sign all --keypair <YOUR_KEYPAIR_FILE> --candy-machine-id <CANDY_MACHINE_ID> --v2
```

After running the command, you should see a success message. Check on-chain that the assets were signed (you can look them up by mint address).
If it is not working, make sure you are using the correct RPC and the correct Candy Machine ID.

That's it! Remember, you can always take a look at the official docs or visit community channels like the [Metaplex Discord](https://discord.com/invite/metaplex) or their [Twitter](https://twitter.com/metaplex) if you have any questions.

Wanting more? Check out [Monadical's blog](https://monadical.com/blog.html) for other programming tutorials, and [Metaplex's blog](https://www.metaplex.com/blog) for Metaplex updates and tutorials.
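For a reveal, the per-mint files described in "Generate new metadata" can also be produced with a small script instead of by hand. The sketch below is not part of Metaboss or the Monadical scripts repo: it assumes the snapshot output from "Identify your Candy Machine NFTs" is a JSON array of mint addresses, reuses the JSON layout shown for the metadata update, and `build_reveal_entry`, `split_mints` and `write_reveal_dir` are hypothetical names.

```python
import json
from pathlib import Path
from typing import Dict, List


def build_reveal_entry(mint: str, name: str, uri: str, seller_fee_basis_points: int,
                       candy_machine_creator: str, creator: str) -> Dict:
    """Build one metadata update entry in the layout used for metaboss update data-all."""
    return {
        "mint_account": mint,
        "nft_data": {
            "name": name,
            "symbol": "",
            "uri": uri,
            "seller_fee_basis_points": seller_fee_basis_points,
            "creators": [
                # First creator: the Candy Machine creator address (share 0)
                {"address": candy_machine_creator, "verified": True, "share": 0},
                # Second creator: your wallet address (share 100)
                {"address": creator, "verified": True, "share": 100},
            ],
        },
    }


def split_mints(mints: List[str], quantities: List[int]) -> List[List[str]]:
    """Split the mint list into consecutive reveal groups (e.g. 20% one day, 80% the next)."""
    if sum(quantities) > len(mints):
        raise ValueError("the sum of quantities exceeds the number of mints")
    groups, start = [], 0
    for q in quantities:
        groups.append(mints[start:start + q])
        start += q
    return groups


def write_reveal_dir(entries: List[Dict], out_dir: str) -> None:
    """Write one JSON file per mint into out_dir, to be passed as --data-dir."""
    path = Path(out_dir)
    path.mkdir(parents=True, exist_ok=True)
    for entry in entries:
        (path / f"{entry['mint_account']}.json").write_text(json.dumps(entry, indent=2))
```

A possible use is to load the snapshot with `json.loads(Path(snapshot_file).read_text())`, split it with `split_mints`, build one entry per mint for each group, and write each group to its own directory. Any numbering you put into `name` is an assumption: snapshot order does not necessarily match the edition numbers assigned at mint time.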


|

2022-10-04 10:00:54

|

{"extraction_info": {"found_math": true, "script_math_tex": 0, "script_math_asciimath": 0, "math_annotations": 0, "math_alttext": 0, "mathml": 0, "mathjax_tag": 0, "mathjax_inline_tex": 1, "mathjax_display_tex": 0, "mathjax_asciimath": 1, "img_math": 0, "codecogs_latex": 0, "wp_latex": 0, "mimetex.cgi": 0, "/images/math/codecogs": 0, "mathtex.cgi": 0, "katex": 0, "math-container": 0, "wp-katex-eq": 0, "align": 0, "equation": 0, "x-ck12": 0, "texerror": 0, "math_score": 0.22344642877578735, "perplexity": 6506.40565045617}, "config": {"markdown_headings": true, "markdown_code": true, "boilerplate_config": {"ratio_threshold": 0.18, "absolute_threshold": 10, "end_threshold": 15, "enable": true}, "remove_buttons": true, "remove_image_figures": true, "remove_link_clusters": true, "table_config": {"min_rows": 2, "min_cols": 3, "format": "plain"}, "remove_chinese": true, "remove_edit_buttons": true, "extract_latex": true}, "warc_path": "s3://commoncrawl/crawl-data/CC-MAIN-2022-40/segments/1664030337490.6/warc/CC-MAIN-20221004085909-20221004115909-00621.warc.gz"}

|

http://spicewithnice.com/nezih-hasanoglu-fxr/k-7213-drain-black-0688d4

|

At any point above, the probability can be converted into a count by multiplying it by the number of subsets.

Given an array arr[] of length N and an integer X, the task is to find the number of subsets with sum equal to X. In the output we have to print the number of subsets whose elements sum to X. Basically, this problem is the same as the Subset Sum Problem; the only difference is that instead of returning whether at least one subset with the desired sum exists, here we count all such subsets. Please make sure you understand the Subset Sum Problem well before going through the solution for this problem.

A related variant: print the size of the smallest subset whose sum is greater than or equal to S, or -1 if no such subset exists.

How do I count the subsets of a set whose number of elements is divisible by 3?

This algorithm is polynomial in the values of A and B, which are exponential in their numbers of bits.

Input: 4 3 -1 2 4 2

Looked into the following but couldn't use it for the problem: given a non-empty array nums containing only positive integers, find whether the array can be partitioned into two subsets such that the sums of the elements in both subsets are equal. If the total sum is odd, we cannot possibly have two equal subsets.

We begin with some notation that gives a name to the answer to this question.

The recurrence is simple, because for each element there are only two choices: include it in the subset or leave it out. If $dp[i][C]$ denotes the number of subsets of $arr[i..N-1]$ with sum $C$ (with $dp[N][0]=1$ and $dp[N][C]=0$ for $C\neq 0$), then

$$dp[i][C] = dp[i+1][C - arr[i]] + dp[i+1][C].$$
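The recurrence above can be sketched in Python with memoization. This is an illustrative implementation, not one taken from the quoted sources, and `count_subsets_with_sum` is a hypothetical name. Because the memo is keyed on `(i, C)` instead of indexing an array, it also copes with negative elements: for instance, X = 3 with the elements -1 2 4 2 gives 2. Equal values at different positions count as different subsets, and for X = 0 the empty subset is counted.

```python
from functools import lru_cache
from typing import Sequence


def count_subsets_with_sum(arr: Sequence[int], x: int) -> int:
    """Count subsets (chosen by position) whose elements sum to x."""
    n = len(arr)

    @lru_cache(maxsize=None)
    def dp(i: int, c: int) -> int:
        # dp(i, c): number of subsets of arr[i:] whose elements sum to c
        if i == n:
            return 1 if c == 0 else 0  # only the empty subset remains
        # Either include arr[i] (remaining target c - arr[i]) or leave it out
        return dp(i + 1, c - arr[i]) + dp(i + 1, c)

    # Recursion depth grows with len(arr), so this suits small and medium inputs
    return dp(0, x)


print(count_subsets_with_sum([1, 2, 3, 3], 6))   # 3
print(count_subsets_with_sum([1, 1, 1, 1], 1))   # 4
print(count_subsets_with_sum([-1, 2, 4, 2], 3))  # 2
```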
Complete the body of the printTargetSumSubsets function, without changing its signature, to calculate and print all subsets of the given elements whose contents sum to "tar".

Instead of generating all the possible sub-arrays, I am looking for a way to compute the subset count from the number of appearances of each element, e.g., the number of 0's, 1's and 2's.

I take the liberty of tackling this question from a different (and, in my opinion, more useful) viewpoint.

Subset sum problem statement: given a set of positive integers and an integer s, is there any non-empty subset whose elements sum to s? Subset sum can also be thought of as a special case of the 0-1 knapsack problem.

Consider a set of n numbers; we want to calculate the number of subsets whose elements sum to x. One way to find the subsets that sum to K is to consider all possible subsets.

The function subset_GCD(int arr[], int size_arr, int GCD[], int size_GCD) takes both arrays and their lengths and returns the number of subsets of the set whose GCD equals a given number.
Output: 2

Output: 4

You are given a number n, representing the count of elements. These elements can appear any number of times in the array.

@AlonYariv (1) Finding an exact solution to this variant of the subset sum problem (or even to the original) is non-trivial for large sets of boxes. But feeding a suitable set of boxes (i.e., at most 200 boxes in total) into any dynamic programming solution to the subset sum problem (see online) will show that the empirical probability approaches 1/3 as well.

All the possible subsets are {1, 2, 3}; we return true, else false.

Let's understand the states of the DP now: for the ith element, either it is included in the subset or it is not.

Each copied subset has the same total count of coins as its original subset. Therefore, the probability that a copied subset has a coin count divisible by 3 equals the analogous probability for its original subset. And as in Case 2, the probability can be converted into a count very easily.

Calculate count = count * i, and return it at the end of the loop as the factorial.
I've updated the question for more clarity; would you please have a look and update the answer if possible? Thanks.

How many $p$-element subsets of $\{1,2,3,\ldots,p\}$ are there whose elements sum to a multiple of $p$?

Both conditions required for applying dynamic programming (optimal substructure and overlapping subproblems) are present in the above problem. Subset sums are a classic example of this.

We define a number $m = 2^{\lfloor\log_2(\max(arr))\rfloor+1}-1$.

(1) If all the boxes have exactly one coin, then an exact answer surely exists. Copy each of the original subsets from Case 1. My answer is: approximately 1/3 of the total count of coins in the boxes.

It is assumed that the input set is unique (no duplicates are present).

Exhaustive search and backtracking algorithms for subset sum. Approach: a simple approach is to generate all the possible subsets and then check whether each subset has the required sum. The size of such a power set is $2^N$.
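The exhaustive search just described can be sketched with `itertools.combinations`. It enumerates all $2^N$ subsets, so it is only practical for small N, but it is handy for checking faster solutions; `all_subsets_with_sum` is a hypothetical name.

```python
from itertools import combinations
from typing import List, Sequence, Tuple


def all_subsets_with_sum(nums: Sequence[int], target: int) -> List[Tuple[int, ...]]:
    """Return every subset (as a tuple of values, chosen by position) whose sum is target."""
    found = []
    for size in range(len(nums) + 1):           # subsets of every size, including empty
        for combo in combinations(nums, size):  # combinations keep the input order
            if sum(combo) == target:
                found.append(combo)
    return found


print(all_subsets_with_sum([7, 3, 2, 5, 8], 14))  # [(7, 2, 5)]
```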
By induction, it is quite easy to see that $S_n\sim\text{Uniform}(\{0,1,2\})$ (can you prove it?).

Output: 3

Problem constraints: 1 <= N <= 100, 1 <= A[i] <= 100, 1 <= B <= $10^5$. Input format: the first argument is an integer array A.

In computer science, the subset sum problem is an important decision problem in complexity theory and cryptography. There are several equivalent formulations of the problem.

A naive algorithm would cycle through all subsets of the N numbers and, for every one of them, check whether the subset sums to the right number. This approach has exponential time complexity. Example: for set = {7, 3, 2, 5, 8} and sum = 14, the output is Yes, because the subset {7, 2, 5} sums to 14.

Take the initial count as 0.

We create a 2D array dp[n+1][m+1] such that dp[i][j] equals the number of subsets of arr[0…i-1] whose XOR value is j.

We first find the total sum of all the array elements; the sum of any subset will be less than or equal to that value. This changes the problem into finding whether a subset of the input array has a sum of sum/2.
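The reduction to sum/2 can be sketched with the standard one-dimensional boolean table, which uses O(target) space. This is an illustrative version assuming non-negative integers; `subset_sum_exists` and `can_partition` are hypothetical names.

```python
from typing import Sequence


def subset_sum_exists(nums: Sequence[int], target: int) -> bool:
    """Decide whether some subset of non-negative nums sums to target, in O(target) space."""
    if target < 0:
        return False
    reachable = [False] * (target + 1)
    reachable[0] = True  # the empty subset sums to 0
    for x in nums:
        # Walk downwards so each element is used at most once
        for s in range(target, x - 1, -1):
            if reachable[s - x]:
                reachable[s] = True
    return reachable[target]


def can_partition(nums: Sequence[int]) -> bool:
    """Equal-sum partition: odd totals fail, otherwise look for a subset summing to total/2."""
    total = sum(nums)
    if total % 2:
        return False
    return subset_sum_exists(nums, total // 2)


print(subset_sum_exists([7, 3, 2, 5, 8], 14))  # True
print(can_partition([1, 5, 11, 5]))           # True
print(can_partition([1, 2, 3, 5]))            # False
```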
And as in Case 2, the probability can be converted into a count very easily. We get this number by counting the bits in the largest number.

The subset sum problem (German: Teilsummenproblem, also Untermengensummenproblem) is a famous problem in computer science and operations research. It is a special case of the knapsack problem.

Now define $$S_n=\sum_{i=1}^n\epsilon_i \bmod 3.$$ Hence $\mathbb{P}(S_n=0)=\mathbb{P}\left(3\text{ divides }\sum_{i=1}^n\epsilon_i\right)=1/3$. Thus the answer is $3^N\cdot 1/3=3^{N-1}$.

(2) If all the boxes have at most one coin, then there likely exists an exact answer: count only the boxes with exactly one coin, then proceed as in Case 1 above.

The first line of input contains n and x, and the next line contains the n numbers of the set. The “Subset sum in O(sum) space” problem states that you are given an array of non-negative integers and a specific value; the number of appearances of each element is also given. If there exists a subset with that sum, return 1; otherwise return 0.
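The closed form $3^{N-1}$ can be sanity-checked by brute force. This sketch assumes the thing being counted is length-N sequences over {0, 1, 2} whose sum is divisible by 3, which is our reading of the setup where each $\epsilon_i$ is uniform on {0,1,2}. The helper name is hypothetical, and because it enumerates all $3^N$ sequences it only works for small N.

```python
from itertools import product

def count_sum_divisible_by_3(n: int) -> int:
    """Count sequences in {0,1,2}^n whose sum is a multiple of 3 (brute force)."""
    return sum(1 for seq in product(range(3), repeat=n) if sum(seq) % 3 == 0)

for n in range(1, 7):
    # Compare with the closed form 3^N * 1/3 = 3^(N-1).
    assert count_sum_divisible_by_3(n) == 3 ** (n - 1)
print("closed form 3^(N-1) confirmed for N = 1..6")
```

A direct argument gives the same result. The first N-1 entries can be anything, and for each such choice exactly one value of the last entry makes the total divisible by 3.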
The basis of a handful of DP algorithms is “take an old count, add some to it, and carry it forward again”. Calculate count = count*i, and return it at the end of the loop as the factorial. As noted above, the basic question is this: how many subsets can be made by choosing k elements from an n-element set?

The subset sum problem is to find a subset of elements, selected from a given set, whose sum adds up to a given number K. We assume the set contains only non-negative values. Now find out if there is a subset whose sum is … The second line contains N space-separated integers, representing the elements of list A.

Let $\epsilon_i$ be independent, identically distributed random variables with $\epsilon_i\sim\text{Uniform}(\{0,1,2\})$.

Example: … {1, 2, 3} and {3, 3}. Input: arr[] = {1, 1, 1, 1}, X = 1.

This number is actually the maximum value any XOR subset can take.

For example: $$[0,1,2]$$
Number of 0's = 1
Number of 1's = 1
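The “take an old count, add some to it, and carry it forward” idea can be sketched for the question of how many subsets of an array have a sum divisible by 3. This is our own illustration, not the source's code; the function name and the choice of one counter per remainder modulo k are assumptions. Each element updates the counters exactly once.

```python
from typing import List

def count_subsets_sum_divisible(arr: List[int], k: int = 3) -> int:
    """Count subsets (including the empty one) whose sum is divisible by k."""
    counts = [0] * k   # counts[r] = number of subsets seen so far with sum % k == r
    counts[0] = 1      # the empty subset
    for x in arr:
        new_counts = counts[:]                     # subsets that skip x carry forward
        for r in range(k):
            new_counts[(r + x) % k] += counts[r]   # subsets that include x
        counts = new_counts
    return counts[0]

print(count_subsets_sum_divisible([0, 1, 2]))  # 4: [], [0], [1,2], [0,1,2]
```

This runs in O(N·k) time, and it only ever needs the current remainder counts, never the subsets themselves.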
Number of 2's = 1

The answer is $4$, as the valid sub-arrays are $$[], [0], [1,2], [0,1,2]$$

The function check(int temp) takes an integer and returns its factorial, using a for loop from i=2 to i<=temp.

At the same time, we solve the same subproblems again and again, so there are overlapping subproblems. How can we use dynamic programming here, then? We make an array DP[sum+2][length+2]: in the 0th row we fill in the possible sum values, in the 0th column we fill in the array values, and we initialize the rest with the value 0.

The function median_subset(arr, size) takes arr and returns the number of subsets whose median is also present in the same subset.

Note: if we find a subset that sums to sum/2, the remaining numbers must also sum to sum/2, so both parts are equal. Example 1: Input: nums = [1,5,11,5]. Output: true. Explanation: the array can be partitioned as [1, 5, 5] and [11].

Given an integer bound $W$ and a collection of $n$ items, each with a positive integer weight $w_i$, find a subset $S$ of items that maximizes $\sum_{i\in S} w_i$ while keeping $\sum_{i\in S} w_i \le W$. Motivation: you have a CPU with $W$ free cycles and want to choose the set of jobs (each taking $w_i$ time) that minimizes the number of idle cycles.

But inputting a suitable set of boxes (i.e., a total number of boxes <= 200) into any dynamic programming solution to the subset sum problem (see online) will show that the empirical probability approaches 1/3 as well.
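The sum/2 reduction for the equal-partition example can be sketched as follows. This is a hedged illustration; the name `can_partition` is hypothetical. It keeps the set of subset sums reachable up to half of the total.

```python
from typing import List

def can_partition(nums: List[int]) -> bool:
    """True if nums (non-negative ints) splits into two subsets with equal sums."""
    total = sum(nums)
    if total % 2 == 1:
        return False            # an odd total can never be halved
    half = total // 2
    reachable = {0}             # subset sums (<= half) reachable so far
    for x in nums:
        reachable |= {s + x for s in reachable if s + x <= half}
    # If one subset sums to half, the remaining elements sum to half as well.
    return half in reachable

print(can_partition([1, 5, 11, 5]))  # True: [1, 5, 5] and [11]
```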
@AlonYariv (1) Finding an exact solution to this variant, or even to the original subset sum problem, is non-trivial for large sets of boxes. In the limit of an infinitely large set of boxes, the answer is approximately 1/3. You are given a number "tar". math.stackexchange.com/questions/1721926/…

However, for smaller values of X and of the array elements, this problem can be solved using dynamic programming. A power set contains all the subsets that can be generated from a given set.

First, let’s rephrase the task as: “Given N, calculate the total that each part of a partition must sum to, {n*(n+1)/2 /2}, and find the number of ways to form that sum by adding 1, 2, 3, … N.” Thus, for N=7, the entire set of numbers 1..7 sums to 7*8/2, which is 56/2 = 28, so each part must sum to 14.

For each item there are two possibilities: we include the current item in the subset, or we don't.
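The include-or-exclude recurrence, applied to the rephrased task for the numbers 1..N, can be sketched as shown below. The function name is hypothetical. `ways[s]` counts subsets that sum to s, and the final division by 2 assumes we want unordered splits {A, B}, because every split is found twice, once from each side.

```python
def count_equal_partitions(n: int) -> int:
    """Number of ways to split {1, ..., n} into two subsets with equal sums."""
    total = n * (n + 1) // 2
    if total % 2 == 1:
        return 0
    target = total // 2
    ways = [0] * (target + 1)   # ways[s] = number of subsets summing to s
    ways[0] = 1
    for x in range(1, n + 1):
        for s in range(target, x - 1, -1):   # downwards: each number used once
            ways[s] += ways[s - x]           # include x, or keep the old count
    # Each split {A, B} is counted twice (once via A, once via B).
    return ways[target] // 2

print(count_equal_partitions(7))  # 4 (eight subsets of 1..7 sum to 14)
```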
I take the liberty of tackling this question from a different (and, in my opinion, more useful) viewpoint. Elements can appear any number of times in the array.
An optimal solution to the subproblems actually leads to an optimal solution for the original problem. The probability mentioned above is 1/3, so the count is obtained by multiplying that probability by the total number of possibilities.
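The XOR-count table described earlier (dp[i][j] = number of subsets of arr[0…i-1] with XOR j) can be sketched with a single rolling row in place of the full (n+1)×(m+1) table. The table width comes from the bit count of the largest element: XOR never sets a bit above the highest bit of its inputs, so every reachable value is below 2^bits. The function name is hypothetical and non-negative inputs are assumed.

```python
from typing import List

def count_subsets_with_xor(arr: List[int], k: int) -> int:
    """Count subsets (including the empty one) of non-negative ints whose XOR equals k."""
    # All XOR values fit in the bit width of the largest element, so they are below m.
    m = 1 << max(arr, default=0).bit_length()
    if k >= m:
        return 0
    dp = [0] * m        # dp[j] = subsets of the prefix processed so far with XOR j
    dp[0] = 1           # the empty subset
    for x in arr:
        new_dp = dp[:]  # subsets that exclude x
        for j in range(m):
            new_dp[j ^ x] += dp[j]   # subsets that include x
        dp = new_dp
    return dp[k]

print(count_subsets_with_xor([6, 9, 4, 2], 6))  # 2: {6} and {4, 2}
```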

|

2022-05-16 04:30:05

|

{"extraction_info": {"found_math": true, "script_math_tex": 0, "script_math_asciimath": 0, "math_annotations": 0, "math_alttext": 0, "mathml": 0, "mathjax_tag": 0, "mathjax_inline_tex": 1, "mathjax_display_tex": 1, "mathjax_asciimath": 0, "img_math": 0, "codecogs_latex": 0, "wp_latex": 0, "mimetex.cgi": 0, "/images/math/codecogs": 0, "mathtex.cgi": 0, "katex": 0, "math-container": 0, "wp-katex-eq": 0, "align": 0, "equation": 0, "x-ck12": 0, "texerror": 0, "math_score": 0.33052730560302734, "perplexity": 756.5075520579687}, "config": {"markdown_headings": true, "markdown_code": true, "boilerplate_config": {"ratio_threshold": 0.18, "absolute_threshold": 10, "end_threshold": 15, "enable": true}, "remove_buttons": true, "remove_image_figures": true, "remove_link_clusters": true, "table_config": {"min_rows": 2, "min_cols": 3, "format": "plain"}, "remove_chinese": true, "remove_edit_buttons": true, "extract_latex": true}, "warc_path": "s3://commoncrawl/crawl-data/CC-MAIN-2022-21/segments/1652662509990.19/warc/CC-MAIN-20220516041337-20220516071337-00332.warc.gz"}

|

https://gmatclub.com/forum/m22-184327.html

|

It is currently 25 Feb 2018, 01:29


# M22-36


Math Expert

Joined: 02 Sep 2009

Posts: 43898


16 Sep 2014, 00:17


Difficulty:

15% (low)

Question Stats:

85% (00:56) correct 15% (01:44) wrong based on 66 sessions


Circles $$X$$ and $$Y$$ are concentric. If the radius of circle $$X$$ is three times that of circle $$Y$$, what is the probability that a point selected inside circle $$X$$ at random will be outside circle $$Y$$?

A. $$\frac{1}{3}$$

B. $$\frac{\pi}{3}$$

C. $$\frac{\pi}{2}$$

D. $$\frac{5}{6}$$

E. $$\frac{8}{9}$$


Math Expert

Joined: 02 Sep 2009

Posts: 43898


16 Sep 2014, 00:17

Official Solution:


We have to find the ratio of the area of the ring around the small circle to the area of the big circle. If $$y$$ is the radius of the smaller circle, then the area of the bigger circle is $$\pi(3y)^2 = 9 \pi y^2$$. The area of the ring $$= \pi(3y)^2 - \pi(y)^2 = 8 \pi y^2$$. The probability is the ratio $$\frac{8 \pi y^2}{9 \pi y^2} = \frac{8}{9}$$, which is answer E.
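Because the point is uniform over circle X, the probability is an area ratio, and both π and the radius cancel. The small Python check below computes the ratio exactly and then estimates it by sampling. It assumes "at random" means uniform over the area of X, and the function names are ours.

```python
import random
from fractions import Fraction

def ring_probability(k: int) -> Fraction:
    """P(point uniform in circle X lands outside circle Y), with radius(X) = k * radius(Y)."""
    # (pi (k y)^2 - pi y^2) / (pi (k y)^2): pi and y cancel.
    return Fraction(k * k - 1, k * k)

def estimate_by_sampling(k: float = 3.0, samples: int = 200_000, seed: int = 1) -> float:
    """Monte Carlo check with radius(Y) = 1 and radius(X) = k."""
    rng = random.Random(seed)
    inside_x = outside_y = 0
    while inside_x < samples:
        # Rejection sampling: uniform point in the bounding square, kept if it lies in X.
        px, py = rng.uniform(-k, k), rng.uniform(-k, k)
        d2 = px * px + py * py
        if d2 <= k * k:
            inside_x += 1
            if d2 > 1.0:
                outside_y += 1
    return outside_y / inside_x

print(ring_probability(3))      # 8/9
print(estimate_by_sampling())   # close to 0.889
```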


Senior Manager

Status: Math is psycho-logical

Joined: 07 Apr 2014

Posts: 432

Location: Netherlands

GMAT Date: 02-11-2015

WE: Psychology and Counseling (Other)


21 Jan 2015, 05:49


Hey,

Great that we saw how you do it using the actual variables.

However, I just used values.

For the radius of X = 6

For the radius of Y = 2

Then the area for X = 36π

and the area for Y = 4π

The area outside Y is 36π − 4π = 32π, so the probability is 32π/36π = 32/36 = 8/9.

Intern

Joined: 02 Aug 2017

Posts: 6

GMAT 1: 710 Q46 V41

GMAT 2: 600 Q39 V33


26 Oct 2017, 14:20

I think the easiest way for me was:

$$\frac{\pi r^2}{\pi (3r)^2}$$

Use values Y=1, X=3Y=3

$$1^2 = 1, 3^2=9$$, so there is a 1/9 chance the point is inside circle Y, or an 8/9 chance it is outside.


Moderators: chetan2u, Bunuel


|

2018-02-25 09:29:43

|

{"extraction_info": {"found_math": true, "script_math_tex": 0, "script_math_asciimath": 0, "math_annotations": 0, "math_alttext": 0, "mathml": 0, "mathjax_tag": 0, "mathjax_inline_tex": 0, "mathjax_display_tex": 1, "mathjax_asciimath": 0, "img_math": 0, "codecogs_latex": 0, "wp_latex": 0, "mimetex.cgi": 0, "/images/math/codecogs": 0, "mathtex.cgi": 0, "katex": 0, "math-container": 0, "wp-katex-eq": 0, "align": 0, "equation": 0, "x-ck12": 0, "texerror": 0, "math_score": 0.7234334945678711, "perplexity": 3454.3124495519087}, "config": {"markdown_headings": true, "markdown_code": true, "boilerplate_config": {"ratio_threshold": 0.18, "absolute_threshold": 10, "end_threshold": 15, "enable": true}, "remove_buttons": true, "remove_image_figures": true, "remove_link_clusters": true, "table_config": {"min_rows": 2, "min_cols": 3, "format": "plain"}, "remove_chinese": true, "remove_edit_buttons": true, "extract_latex": true}, "warc_path": "s3://commoncrawl/crawl-data/CC-MAIN-2018-09/segments/1518891816351.97/warc/CC-MAIN-20180225090753-20180225110753-00323.warc.gz"}

|

http://brucelerner.com/Blog/2022-02-20_Portfolio%20Suffering%20vs.%20Elation.html

|


2022-02-20: Portfolio Suffering vs. Elation

I just started reading Transparent Investing: How to Play the Stock Market without Getting Played by Patrick Geddes, and found a reference to the “losses hurt worse than gains feel good” argument, along with the implied suggestion that this is irrational. I believe it is true, but also mathematically correct. A 20% loss ($100 => $80) takes a 25% ($20/$80) return ($80 => $100) to recover, or a 40% ($40/$100) gain, measured against the original amount (that is 50% of the remaining $80), to get to where you’d be with a 20% gain on the original amount. An initial 20% gain is just $20, but it would take $40 to get to the same place after the initial loss – OUCH.
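The arithmetic behind this generalizes. After a fractional loss L, getting back to the starting value takes a gain of L/(1 − L) on what is left. The short Python check below uses exact fractions so the percentages come out clean; the helper name is ours and not from the book.

```python
from fractions import Fraction

def gain_to_recover(loss: Fraction) -> Fraction:
    """Fractional gain needed to return to the starting value after a fractional loss."""
    return loss / (1 - loss)

start = Fraction(100)
after_loss = start * (1 - Fraction(1, 5))           # $100 -> $80
print(gain_to_recover(Fraction(1, 5)))              # 1/4, i.e. 25% ($20 on $80)

target = start * (1 + Fraction(1, 5))               # where a 20% gain would have led: $120
needed = target - after_loss                        # dollars still required: $40
print(needed, needed / after_loss, needed / start)  # 40 1/2 2/5
```

The gap widens quickly. A 50% loss, for example, needs a 100% gain just to break even.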

|

2022-08-19 09:04:53

|

{"extraction_info": {"found_math": true, "script_math_tex": 0, "script_math_asciimath": 0, "math_annotations": 0, "math_alttext": 0, "mathml": 0, "mathjax_tag": 0, "mathjax_inline_tex": 1, "mathjax_display_tex": 1, "mathjax_asciimath": 0, "img_math": 0, "codecogs_latex": 0, "wp_latex": 0, "mimetex.cgi": 0, "/images/math/codecogs": 0, "mathtex.cgi": 0, "katex": 0, "math-container": 0, "wp-katex-eq": 0, "align": 0, "equation": 0, "x-ck12": 0, "texerror": 0, "math_score": 0.3241090774536133, "perplexity": 2201.964435641131}, "config": {"markdown_headings": true, "markdown_code": true, "boilerplate_config": {"ratio_threshold": 0.18, "absolute_threshold": 10, "end_threshold": 15, "enable": true}, "remove_buttons": true, "remove_image_figures": true, "remove_link_clusters": true, "table_config": {"min_rows": 2, "min_cols": 3, "format": "plain"}, "remove_chinese": true, "remove_edit_buttons": true, "extract_latex": true}, "warc_path": "s3://commoncrawl/crawl-data/CC-MAIN-2022-33/segments/1659882573630.12/warc/CC-MAIN-20220819070211-20220819100211-00772.warc.gz"}

|

https://www.physicsforums.com/threads/average-speed-question.184233/

|

# Average speed question

1. Sep 12, 2007

### anglum

1. The problem statement, all variables and given/known data

A car is moving at a constant speed of 13 m/s when the driver presses down on the gas pedal and accelerates for 11 s with an acceleration of 1:4 m/s². What is the average speed of the car during the period? Answer in units of m/s.

3. The attempt at a solution

Using the formula distance = Vi t + 1/2 A t squared,

I solve for distance and then simply divide that by the 11 seconds?

Last edited: Sep 12, 2007

2. Sep 12, 2007

### Feldoh

Not sure what you mean by 1:4 m/s^2 -- but your solution looks right

3. Sep 12, 2007

### D H

Staff Emeritus

No. Average speed is defined as $\Delta d/\Delta t$, where $\Delta t$ is the duration of the time span and $\Delta d$ is the distance traveled during this span. If you average the speeds at arbitrary time points over the time interval you will get a different (and incorrect) answer.
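Here is a quick numerical check of the distance-over-time method in Python. It assumes "1:4 m/s2" is a garbled 1.4 m/s², though the thread itself does not settle what was meant. With constant acceleration and no reversal of direction, the result also equals the mean of the initial and final speeds.

```python
def average_speed(v0: float, a: float, t: float) -> float:
    """Average speed over [0, t] for constant acceleration a from initial speed v0 (no reversal)."""
    distance = v0 * t + 0.5 * a * t ** 2   # d = v_i t + (1/2) a t^2
    return distance / t                    # average speed = distance traveled / elapsed time

v0, a, t = 13.0, 1.4, 11.0   # assumption: "1:4 m/s2" means 1.4 m/s^2
avg = average_speed(v0, a, t)
v_final = v0 + a * t
print(round(avg, 2), round((v0 + v_final) / 2, 2))   # 20.7 20.7
```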

|

2017-05-29 10:08:52

|

{"extraction_info": {"found_math": true, "script_math_tex": 0, "script_math_asciimath": 0, "math_annotations": 0, "math_alttext": 0, "mathml": 0, "mathjax_tag": 0, "mathjax_inline_tex": 1, "mathjax_display_tex": 0, "mathjax_asciimath": 0, "img_math": 0, "codecogs_latex": 0, "wp_latex": 0, "mimetex.cgi": 0, "/images/math/codecogs": 0, "mathtex.cgi": 0, "katex": 0, "math-container": 0, "wp-katex-eq": 0, "align": 0, "equation": 0, "x-ck12": 0, "texerror": 0, "math_score": 0.8516079783439636, "perplexity": 895.437844257137}, "config": {"markdown_headings": true, "markdown_code": true, "boilerplate_config": {"ratio_threshold": 0.18, "absolute_threshold": 10, "end_threshold": 15, "enable": true}, "remove_buttons": true, "remove_image_figures": true, "remove_link_clusters": true, "table_config": {"min_rows": 2, "min_cols": 3, "format": "plain"}, "remove_chinese": true, "remove_edit_buttons": true, "extract_latex": true}, "warc_path": "s3://commoncrawl/crawl-data/CC-MAIN-2017-22/segments/1495463612069.19/warc/CC-MAIN-20170529091944-20170529111944-00392.warc.gz"}

|

https://www.embeddedrelated.com/blogs-11/mp/all/all.php

|

## Troubleshooting notes from days past, TTL, Linear

June 19, 2018 (1 comment)

General Troubleshooting

• Always think “what if”.

• Analytical procedures

• Precautions when probing equipment

• Insulate all but the last 1/8” of the probe tip

• Learn from mistakes

• If you get stuck, sleep on it.

• Many problems have simple solutions.

• Whenever possible, try to substitute a working unit.

• Don’t blindly trust test instruments.

• Coincidences do happen, but are relatively...

## Linear Feedback Shift Registers for the Uninitiated, Part XV: Error Detection and Correction

June 12, 2018

Last time, we talked about Gold codes, a specially-constructed set of pseudorandom bit sequences (PRBS) with low mutual cross-correlation, which are used in many spread-spectrum communications systems, including the Global Positioning System.

This time we are wading into the field of error detection and correction, in particular CRCs and Hamming codes.

Ernie, You Have a Banana in Your Ear

## Tenderfoot: How to Write a Great Bug Report

I am an odd sort of person. Why? Because I love a well written and descriptive bug report. I love a case that includes clear and easy to follow reproduction steps. I love a written bug report that includes all the necessary information on versions, configurations, connections and other system details. Why? Because I believe in efficiency. I believe that as an engineer I have a duty to generate value to my employer or customer. Great bug reports are one part of our collective never-ending...

## Who else is going to Sensors Expo in San Jose? Looking for roommate(s)!

This will be my first time attending this show and I must say that I am excited. I am bringing with me my cameras and other video equipment with the intention to capture as much footage as possible and produce a (hopefully) fun to watch 'highlights' video. I will also try to film as many demos as possible and share them with you.

I enjoy going to shows like this one as it gives me the opportunity to get out of my home-office (from where I manage and run the *Related sites) and actually...

## Voltage - A Close Look

My first boss liked to pose the following problem when interviewing a new engineer. “Imagine two boxes on a table one with a battery the other with a light. Assume there is no detectable voltage drop in the connecting leads and the leads cannot be broken. How would you determine which box has the light? Drilling a hole is not allowed.”

The answer is simple. You need a voltmeter to tell the electric field direction and a small compass to tell the magnetic field...

## What is Electronics

Introduction

One answer to the question posed by the title might be: "The understanding that allows a designer to interconnect electrical components to perform electrical tasks." These tasks can involve measurement, amplification, moving and storing digital data, dissipating energy, operating motors, etc. Circuit theory uses the sinusoidal relations between components, voltages, current and time to describe how a circuit functions. The parameters we can measure directly are...

## Linear Regression with Evenly-Spaced Abscissae

May 1, 2018 (1 comment)

What a boring title. I wish I could come up with something snazzier. One word I learned today is studentization, which is just the normalization of errors in a curve-fitting exercise by the sample standard deviation (e.g. point $x_i$ is $0.3\hat{\sigma}$ from the best-fit linear curve, so $\frac{x_i - \hat{x}_i}{\hat{\sigma}} = 0.3$) — Studentize me! would have been nice, but I couldn’t work it into the topic for today. Oh well.

I needed a little break from...
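The studentization described in this excerpt can be sketched as follows. We fit a straight line to evenly spaced abscissae, assumed here to be t = 0, 1, …, n−1, and divide each residual by the residual standard deviation σ̂, estimated with n − 2 degrees of freedom. This follows the excerpt's simple definition. The textbook internally studentized residual additionally divides by √(1 − h_ii), which this sketch omits. The function name is hypothetical, and the code raises an error if the data are perfectly linear, because σ̂ is then zero.

```python
import math
from typing import List

def scaled_residuals(y: List[float]) -> List[float]:
    """Fit y = b0 + b1*t on t = 0, 1, ..., n-1 and divide residuals by sigma-hat."""
    n = len(y)
    if n < 3:
        raise ValueError("need at least 3 points to estimate sigma-hat")
    t_mean = (n - 1) / 2                     # mean of 0..n-1
    y_mean = sum(y) / n
    s_tt = sum((i - t_mean) ** 2 for i in range(n))
    slope = sum((i - t_mean) * (yi - y_mean) for i, yi in enumerate(y)) / s_tt
    intercept = y_mean - slope * t_mean
    residuals = [yi - (intercept + slope * i) for i, yi in enumerate(y)]
    # Residual standard deviation with n - 2 degrees of freedom (two fitted parameters).
    sigma_hat = math.sqrt(sum(r * r for r in residuals) / (n - 2))
    if sigma_hat == 0.0:
        raise ZeroDivisionError("data are exactly linear; sigma-hat is zero")
    return [r / sigma_hat for r in residuals]

print([round(z, 3) for z in scaled_residuals([1.0, 3.0, 2.0, 5.0, 4.0])])
```

With evenly spaced abscissae, the mean of t and the sum of squares s_tt depend only on n. That is part of what makes this special case convenient.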

## Linear Feedback Shift Registers for the Uninitiated, Part XIV: Gold Codes

April 18, 2018

Last time we looked at some techniques using LFSR output for system identification, making use of the peculiar autocorrelation properties of pseudorandom bit sequences (PRBS) derived from an LFSR.

This time we’re going to jump back to the field of communications, to look at an invention called Gold codes and why a single maximum-length PRBS isn’t enough to save the world using spread-spectrum technology. We have to cover two little side discussions before we can get into Gold...

## Crowdfunding Articles?

Many of you have the knowledge and talent to write technical articles that would benefit the EE community. What is missing for most of you though, and very understandably so, is the time and motivation to do it.

But what if you could make some money to compensate for your time spent on writing the article(s)? Would some of you find the motivation and make the time?

I am thinking of implementing a system/mechanism that would allow the EE community to...

## How precise is my measurement?

Some might argue that measurement is a blend of skepticism and faith. While time constraints might make you lean toward faith, some healthy engineering skepticism should bring you back to statistics. This article reviews some practical statistics that can help you satisfy one common question posed by skeptical engineers: “How precise is my measurement?” As we’ll see, by understanding how to answer it, you gain a degree of control over your measurement time.

An accurate, precise...

## First-Order Systems: The Happy Family

May 3, 2014 (1 comment)

Все счастли́вые се́мьи похо́жи друг на дру́га, ка́ждая несчастли́вая семья́ несчастли́ва по-сво́ему.

— Лев Николаевич Толстой, Анна Каренина

Happy families are all alike; every unhappy family is unhappy in its own way.

— Lev Nicholaevich Tolstoy, Anna Karenina

I was going to write an article about second-order systems, but then realized that it would be...

## Best Firmware Architecture Attributes

The architecture of a firmware (FW) in a way defines the life cycle of your product. Companies often start with a simple version of a product in response to the time-to-market pressure of the business, and make some cash out of the product with a simple feature set. It takes less than 2-3 years to reach a point where the company needs to develop multiple products derived from the same code base and multiple teams need to develop...

## The CRC Wild Goose Chase: PPP Does What?!?!?!

I got a bad feeling yesterday when I had to include reference information about a 16-bit CRC in a serial protocol document I was writing. And I knew it wasn’t going to end well.

The last time I looked into CRC algorithms was about five years ago. And the time before that… sometime back in 2004 or 2005? It seems like it comes up periodically, like the seventeen-year locust or sunspots or El Niño,...

## VHDL tutorial - combining clocked and sequential logic

March 3, 2008

In an earlier article on VHDL programming ("VHDL tutorial" and "VHDL tutorial - part 2 - Testbench"), I described a design for providing a programmable clock divider for an ADC sequencer. In this example, I showed how to generate a clock signal (ADCClk) that was to be programmable over a series of fixed rates (20MHz, 10MHz, 4MHz, 2MHz, 1MHz and 400kHz), given a master clock rate of 40MHz. A reader of that article had written to ask if it was possible to extend the design to...

## OOKLONE: a cheap RF 433.92MHz OOK frame cloner

Introduction

A few weeks ago, I bought a set of cheap wireless outlets and reimplemented the protocol for further inclusion in a domotics platform. I wrote a post about it here:

The device documentation mentions that it operates on the same frequency as the previous...

## Coding - Step 0: Setting Up a Development Environment

Articles in this series:

You can easily find a million articles out there discussing compiler nuances, weighing the pros and cons of various data structures or discussing the optimization of databases. Those sorts of articles are fascinating reads for advanced programmers but...

## Cortex-M Exception Handling (Part 2)

The first part of this article described the conditions for an exception request to be accepted by a Cortex-M processor, mainly concerning the relationship of its priority with respect to the current execution priority. This part will describe instead what happens after an exception request is accepted and becomes active.

PROCESSOR OPERATION AND PRIVILEGE MODE

Before discussing in detail the sequence of actions that occurs within the processor after an exception request...

## Signal Processing Contest in Python (PREVIEW): The Worst Encoder in the World

When I posted an article on estimating velocity from a position encoder, I got a number of responses. A few of them were of the form "Well, it's an interesting article, but at slow speeds why can't you just take the time between the encoder edges, and then...." My point was that there are lots of people out there who take this approach, and don't take into account that the time between encoder edges varies due to manufacturing errors in the encoder. For some reason this is a hard concept...

|

2023-03-27 01:25:41

|

{"extraction_info": {"found_math": true, "script_math_tex": 0, "script_math_asciimath": 0, "math_annotations": 0, "math_alttext": 0, "mathml": 0, "mathjax_tag": 0, "mathjax_inline_tex": 1, "mathjax_display_tex": 0, "mathjax_asciimath": 0, "img_math": 0, "codecogs_latex": 0, "wp_latex": 0, "mimetex.cgi": 0, "/images/math/codecogs": 0, "mathtex.cgi": 0, "katex": 0, "math-container": 0, "wp-katex-eq": 0, "align": 0, "equation": 0, "x-ck12": 0, "texerror": 0, "math_score": 0.22622765600681305, "perplexity": 2266.7899821882315}, "config": {"markdown_headings": true, "markdown_code": true, "boilerplate_config": {"ratio_threshold": 0.18, "absolute_threshold": 10, "end_threshold": 15, "enable": true}, "remove_buttons": true, "remove_image_figures": true, "remove_link_clusters": true, "table_config": {"min_rows": 2, "min_cols": 3, "format": "plain"}, "remove_chinese": true, "remove_edit_buttons": true, "extract_latex": true}, "warc_path": "s3://commoncrawl/crawl-data/CC-MAIN-2023-14/segments/1679296946584.94/warc/CC-MAIN-20230326235016-20230327025016-00482.warc.gz"}

|

http://glennjlea.ca/latex/6-0-creating-chapters/

|

I'm a Canadian based in Berlin, Germany. My day job is a Technical Writer in the API space. I write about topics such as technology, usability, creative writing and Canadian history. All views mine. I tweet at @glennjlea. Read more about me here or at LinkedIn.

Site version: 3.0

Formatting chapter sections

## Chapters

Each chapter begins with the following elements:

• The first line requires you to define the title of the chapter. This is used in the headers and in the Table of Contents.

• The second line is used for cross-referencing to this chapter. It serves as a marker or anchor.

• The third line is used by the index.
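The post's own command listing did not survive in this copy. As a hedged sketch in standard LaTeX, the three opening lines of a chapter could look like the block below. The title, label key and index term are hypothetical placeholders, and `\index` assumes `\usepackage{makeidx}` and `\makeindex` in the preamble.

```latex
\chapter{Getting Started}     % line 1: title, used in running headers and the Table of Contents
\label{ch:getting-started}    % line 2: anchor for cross-references, e.g. \ref{ch:getting-started}
\index{getting started}       % line 3: index entry (needs makeidx and \makeindex)
```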

Creating section headings is quite easy, and second- and third-level headings are just as easy. Use the following commands to create these sections.

Three levels of headings are best. If you need more, consider rewriting sections so they are at most three levels deep. If you must go deeper, just use a bolded paragraph as a fourth-level heading.
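The sketch below shows the three recommended heading levels in standard LaTeX, plus `\paragraph` for an occasional fourth level. In the standard `report` and `book` classes, `\paragraph` produces a bold run-in heading. The heading texts are placeholders.

```latex
\section{Installation}            % first-level heading within the chapter
\subsection{System requirements}  % second level
\subsubsection{Disk space}        % third level: the recommended maximum depth
\paragraph{Temporary files}       % bold run-in heading, usable as a fourth level
The paragraph text continues on the same line as the heading.
```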

## Paragraphs

Paragraphs are entered without markup tags. Adding a new paragraph requires a blank line between paragraphs.

Then use myindentpar in the document flow to indent a paragraph based on the settings.
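The definition of `myindentpar` is not preserved in this copy of the post. One common way to define an environment by that name is a one-item list whose left margin is passed as an argument. This is an assumption and not necessarily the author's settings.

```latex
% Preamble: an indented-paragraph environment; #1 is the indentation width.
\newenvironment{myindentpar}[1]%
  {\begin{list}{}%
     {\setlength{\leftmargin}{#1}}%
   \item[]%
  }%
  {\end{list}}

% Document body: indent this paragraph by 1cm.
\begin{myindentpar}{1cm}
This paragraph is indented 1cm from the left margin.
\end{myindentpar}
```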

|

2020-10-25 16:32:02

|

{"extraction_info": {"found_math": true, "script_math_tex": 0, "script_math_asciimath": 0, "math_annotations": 0, "math_alttext": 0, "mathml": 0, "mathjax_tag": 0, "mathjax_inline_tex": 0, "mathjax_display_tex": 0, "mathjax_asciimath": 1, "img_math": 0, "codecogs_latex": 0, "wp_latex": 0, "mimetex.cgi": 0, "/images/math/codecogs": 0, "mathtex.cgi": 0, "katex": 0, "math-container": 0, "wp-katex-eq": 0, "align": 0, "equation": 0, "x-ck12": 0, "texerror": 0, "math_score": 0.7438787817955017, "perplexity": 1793.7643963394225}, "config": {"markdown_headings": true, "markdown_code": true, "boilerplate_config": {"ratio_threshold": 0.18, "absolute_threshold": 10, "end_threshold": 15, "enable": true}, "remove_buttons": true, "remove_image_figures": true, "remove_link_clusters": true, "table_config": {"min_rows": 2, "min_cols": 3, "format": "plain"}, "remove_chinese": true, "remove_edit_buttons": true, "extract_latex": true}, "warc_path": "s3://commoncrawl/crawl-data/CC-MAIN-2020-45/segments/1603107889574.66/warc/CC-MAIN-20201025154704-20201025184704-00630.warc.gz"}

|

https://www.physicsforums.com/threads/countable-but-not-second-countable-topological-space.187934/

|

# Countable But Not Second Countable Topological Space

I'm wondering if someone can furnish me with either an example of a topological space that is countable (cardinality) but not second countable or a proof that countable implies second countable. Thanks.

morphism

Homework Helper

Take the countable set $\mathbb{N}\times\mathbb{N}$. Topologize it by making any set that doesn't contain (0,0) open, and if a set does contain (0,0), it's open iff it contains all but a finite number of points in all but a finite number of columns. (Draw a picture. If an open set contains (0,0), then it can only miss infinitely many points in a finite number of columns, while it misses finitely many points in all the other columns.)

Now this topology doesn't have a countable base at (0,0), so it's not first countable, let alone second countable.

Source: Steen & Seebach, Counterexamples in Topology, page 54. They call it the Arens-Fort Space.
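The claim that (0,0) has no countable neighbourhood base can be checked with a diagonal argument. What follows is a sketch using the definition above, with $C_m = \{m\}\times\mathbb{N}$ denoting the $m$-th column (notation introduced here for convenience):

```latex
Suppose $U_1, U_2, \dots$ were a countable base at $(0,0)$.
Each $U_n$ is open and contains $(0,0)$, so for all but finitely many $m$
it contains all but finitely many points of $C_m$; in particular
$U_n \cap C_m \neq \emptyset$ for infinitely many $m$.
Choose columns $1 \le m_1 < m_2 < \cdots$ inductively and points
\[
  p_n \in U_n \cap C_{m_n} .
\]
Let $V = (\mathbb{N}\times\mathbb{N}) \setminus \{p_1, p_2, \dots\}$.
Column $0$ is never used, so $(0,0) \in V$, and $V$ misses at most one
point of each column, so $V$ is open. But $p_n \in U_n \setminus V$ for
every $n$, so no $U_n$ is contained in $V$. This contradicts the
assumption that the $U_n$ form a base at $(0,0)$.
```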

Thanks! I own that book so I'll be having a look pretty soon.

morphism

Homework Helper

I've been thinking about this a little bit more, and I believe I have another example, although it's a bit more 'sophisticated' in that it requires a bit of advanced set theory.

This time our countable set is $X = \mathbb{N} \cup \{\mathbb{N}\}$. Take any free ultrafilter F on $\mathbb{N}$, and define a topology on X by letting each subset {n} of $\mathbb{N}$ be open, and defining nbhds of $\{\mathbb{N}\}$ to be those of the form $\{\mathbb{N}\} \cup U$, where U is in F. As in the previous example, this topology fails to have a countable base at $\{\mathbb{N}\}$ (because we cannot have a countable base for any free ultrafilter on the naturals), so again it fails to be first countable.

Edit:

Hmm... Now I'm wondering if there's a countable space that's first countable but not second countable!

Edit2:

Maybe that was silly. If X is countable and has a first countable topology, then the union of the bases at each of its points is a countable union of countable sets, hence countable (and it is a basis for the topology). So I'm led to conclude that a countable, first countable space is necessarily second countable.
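The Edit2 argument can be written out in a few lines. This sketch assumes a countable base of open sets has been fixed at each point. Making all these choices at once, and concluding that a countable union of countable families is countable, both rely on the axiom of countable choice:

```latex
Let $X$ be countable and first countable, and for each $x \in X$ fix a
countable neighbourhood base $\mathcal{B}_x$ at $x$ consisting of open sets.
Put
\[
  \mathcal{B} = \bigcup_{x \in X} \mathcal{B}_x ,
\]
a countable union of countable families, hence countable.
To see that $\mathcal{B}$ is a base, let $U$ be open and $x \in U$.
Since $\mathcal{B}_x$ is a base at $x$, there is
$B \in \mathcal{B}_x \subseteq \mathcal{B}$ with $x \in B \subseteq U$.
So every open set is a union of members of $\mathcal{B}$, and $X$ is
second countable.
```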


|

2021-12-08 18:51:36

|

{"extraction_info": {"found_math": true, "script_math_tex": 0, "script_math_asciimath": 0, "math_annotations": 0, "math_alttext": 0, "mathml": 0, "mathjax_tag": 0, "mathjax_inline_tex": 1, "mathjax_display_tex": 0, "mathjax_asciimath": 0, "img_math": 0, "codecogs_latex": 0, "wp_latex": 0, "mimetex.cgi": 0, "/images/math/codecogs": 0, "mathtex.cgi": 0, "katex": 0, "math-container": 0, "wp-katex-eq": 0, "align": 0, "equation": 0, "x-ck12": 0, "texerror": 0, "math_score": 0.9069373607635498, "perplexity": 254.27773823549285}, "config": {"markdown_headings": true, "markdown_code": true, "boilerplate_config": {"ratio_threshold": 0.18, "absolute_threshold": 10, "end_threshold": 15, "enable": true}, "remove_buttons": true, "remove_image_figures": true, "remove_link_clusters": true, "table_config": {"min_rows": 2, "min_cols": 3, "format": "plain"}, "remove_chinese": true, "remove_edit_buttons": true, "extract_latex": true}, "warc_path": "s3://commoncrawl/crawl-data/CC-MAIN-2021-49/segments/1637964363520.30/warc/CC-MAIN-20211208175210-20211208205210-00476.warc.gz"}

|

https://tex.stackexchange.com/questions/493138/arrow-with-vertical-bar-in-the-middle-tikz-cd

|

# Arrow with vertical bar in the middle (Tikz-cd)

I need to have an arrow that has a vertical bar in the middle, something like this:

----|---->

in commutative diagrams. As far as I can tell, tikz-cd does not support such an arrow directly.

Any ideas on how to do this? Ideally I would like to still be able to have labels.

I found a way to do this in math mode using \mathclap and +, as shown in code for arrow with a short vertical line in the middle of the shaft (not perfect, but good enough), but this does not work well within a commutative diagram.

tikz-cd supports markings.

```latex
\documentclass{article}
\usepackage{tikz-cd}
\begin{document}
\begin{tikzcd}[]
a \arrow[r,"|" marking] & b
\end{tikzcd}
\end{document}
```

If you want to have full control over all aspects of the bar, you can use a TikZy decoration.

```latex
\documentclass{article}
\usepackage{tikz-cd}
\usetikzlibrary{decorations.markings}
\tikzset{mid vert/.style={/utils/exec=\tikzset{every node/.append style={outer sep=0.8ex}},
    postaction=decorate,decoration={markings,
    mark=at position 0.5 with {\draw[-] (0,#1) -- (0,-#1);}}},
  mid vert/.default=0.75ex}
\begin{document}
\begin{tikzcd}[]
a \arrow[r,mid vert,"pft"] & b \arrow[r,"pht"] & c
\end{tikzcd}
\end{document}
```

In this version, the parameter is half the length of the bar (the bar is drawn from (0,#1) to (0,-#1)), so with the default the bar is 2*0.75ex long. You can adjust the other parameters as well.

• Great this works! Is it possible to add to the definition of mid vert the fact that all the labels should have outer sep of, say, 0.8ex? I am not familiar with decorations – geguze May 29 at 4:29

• @geguze Yes, it is. (I actually like the idea!) I modified the code accordingly. (And this change is only local, as shown in the example, i.e. the other arrows will have business as usual.) – user121799 May 29 at 4:40

• Wonderful! One last question. The definition of the arrow with a bar in running math, suggested at the link included in my question, does not look very good, especially when compared to a tikz-cd diagram. Any suggestion on how to make that better? One could put a tikz-cd inline based on the mid vert definition you provided, not sure that's the best way though. – geguze May 29 at 4:51

• @geguze How about $a\mathrel{\tikz[baseline=-0.5ex]{\draw[->,mid vert](0,0) --(1.2em,0);}}b\longrightarrow c$ with the above preamble? Or \newcommand{\tobar}{\mathrel{\tikz[baseline=-0.5ex]{\draw[->,mid vert](0,0) --(1.2em,0);}}} $a\tobar b\longrightarrow c$? There is a long discussion on how to make this symbol adjust to the font size; see this thread. (For a simple vertical bar you could also use \rule ...) – user121799 May 29 at 4:57

|

2019-10-15 06:25:53

|

{"extraction_info": {"found_math": true, "script_math_tex": 0, "script_math_asciimath": 0, "math_annotations": 0, "math_alttext": 0, "mathml": 0, "mathjax_tag": 0, "mathjax_inline_tex": 1, "mathjax_display_tex": 0, "mathjax_asciimath": 1, "img_math": 0, "codecogs_latex": 0, "wp_latex": 0, "mimetex.cgi": 0, "/images/math/codecogs": 0, "mathtex.cgi": 0, "katex": 0, "math-container": 0, "wp-katex-eq": 0, "align": 0, "equation": 0, "x-ck12": 0, "texerror": 0, "math_score": 0.7806340456008911, "perplexity": 1081.3031201621097}, "config": {"markdown_headings": true, "markdown_code": true, "boilerplate_config": {"ratio_threshold": 0.18, "absolute_threshold": 20, "end_threshold": 15, "enable": true}, "remove_buttons": true, "remove_image_figures": true, "remove_link_clusters": true, "table_config": {"min_rows": 2, "min_cols": 3, "format": "plain"}, "remove_chinese": true, "remove_edit_buttons": true, "extract_latex": true}, "warc_path": "s3://commoncrawl/crawl-data/CC-MAIN-2019-43/segments/1570986657586.16/warc/CC-MAIN-20191015055525-20191015083025-00539.warc.gz"}

|

https://notes.mikejarrett.ca/index-1.html

|

# Mobi station activity

I finally got around to learning how to plot data on maps with Cartopy, so here are some quick maps of Mobi bikeshare station activity.

First, an animation of station activity during a random summer day. The red-blue spectrum represents whether more bikes were taken or returned at a given station, and the brightness represents total station activity during each hour. I could use a finer time resolution than an hour, but I doubt the data is very meaningful at that level.
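The post doesn't say exactly how the colours were computed. Below is a minimal sketch of one plausible scheme. It assumes hourly counts of bikes taken and returned per station. The red/blue mix is the share of activity that was bikes taken, and brightness is total activity relative to the busiest station-hour. The function name, and the choice of red for "taken", are assumptions.

```python
def station_colour(taken: int, returned: int, max_total: int) -> tuple[float, float, float]:
    """Return an (r, g, b) tuple in [0, 1] for one station in one hour.

    Hypothetical scheme: red is the fraction of movements that were bikes
    taken, blue the fraction returned; both are scaled by brightness, i.e.
    total activity relative to max_total (the busiest station-hour).
    """
    total = taken + returned
    if total == 0 or max_total == 0:
        return (0.0, 0.0, 0.0)  # no activity: draw the station black
    frac_taken = taken / total               # 1.0 -> all taken, 0.0 -> all returned
    brightness = min(total / max_total, 1.0)
    return (frac_taken * brightness, 0.0, (1.0 - frac_taken) * brightness)


# Example: the busiest station this hour, with most bikes taken.
print(station_colour(taken=9, returned=3, max_total=12))  # (0.75, 0.0, 0.25)
```

The resulting tuples can be passed as point colours to a matplotlib/Cartopy scatter call, one frame per hour.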

There's actually less pattern to this than I expected. I thought that in the morning you'd see more bikes being taken from the west end and south False Creek and returned downtown, and vice versa in the afternoon. But I can't really make out that pattern visually.

I've also pulled out total station activity over the period I've been collecting this data, June through October 2017, separated into total bikes taken and total bikes returned. A couple of things to note about these images: many of these stations were not active for the whole period, and some stations have been moved. I've made no effort to account for this; this is simply the raw usage at each location, so the downtown

The similarity in these maps is striking. Checking the raw data, I'm seeing incredibly similar numbers of bikes taken and returned at each station. This means either that, in aggregate, people use Mobis for two-way trips much more often than I expected; that one-way trips are cancelling each other out; or that Mobi is rebalancing the stations to such a degree that any unevenness is masked*. I hope to look into whether I can spot artificial station balancing in my data soon, but we may have to wait for official data from Mobi to get around this.

*There's also the possibility that my data is bad, but let's ignore that for now.
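One way to put a number on "incredibly similar" is to compute each station's net flow as a fraction of its total activity. This is a small sketch that assumes per-station totals of bikes taken and returned. The station names and numbers in the example are made up.

```python
def imbalance(taken: int, returned: int) -> float:
    """Net flow as a fraction of total activity, in [-1, 1].

    +1 means every recorded movement was a bike taken, -1 means every one
    was a return, and values near 0 mean the station is balanced.
    """
    total = taken + returned
    return 0.0 if total == 0 else (taken - returned) / total


def most_unbalanced(totals: dict[str, tuple[int, int]], n: int = 5) -> list[tuple[str, float]]:
    """Return the n stations with the largest absolute imbalance."""
    scored = [(name, imbalance(t, r)) for name, (t, r) in totals.items()]
    return sorted(scored, key=lambda item: abs(item[1]), reverse=True)[:n]


# Hypothetical totals: (bikes taken, bikes returned) per station.
totals = {"Station A": (1200, 1180), "Station B": (800, 600), "Station C": (50, 50)}
print(most_unbalanced(totals, n=2))
```

A station with an imbalance near zero is consistent with all three explanations above. Telling them apart would need trip-level or rebalancing data.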

Instead of just looking at activity, I tried to quantify whether there are different activity patterns at different stations. Like last week, I performed a principal component analysis (PCA), but with bike activity for each hour in the columns and each station as a row. I then plotted the top two components, which explain the most variance in the data.
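Here is a minimal sketch of the PCA step. It assumes a NumPy array with one row per station and one column per hour. PCA is computed directly from an SVD of the column-centred matrix, with no scaling of the columns. The post doesn't show which library or preprocessing was actually used, and the random data is purely illustrative.

```python
import numpy as np


def top_two_components(activity: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """PCA via SVD on a stations x hours activity matrix.

    Returns (scores, explained_ratio): scores has one row per station and
    one column per component (the X and Y of the scatter plot), and
    explained_ratio is the fraction of total variance each component explains.
    """
    centred = activity - activity.mean(axis=0)          # centre each hour column
    u, s, _vt = np.linalg.svd(centred, full_matrices=False)
    scores = u[:, :2] * s[:2]                            # stations projected onto PC1, PC2
    variance = s**2
    return scores, variance[:2] / variance.sum()


# Hypothetical data: 6 stations x 24 hourly trip counts.
rng = np.random.default_rng(0)
activity = rng.poisson(lam=5, size=(6, 24)).astype(float)
scores, ratio = top_two_components(activity)
print(scores.shape, ratio)
```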

Like last week, much of the difference in station activity is explained by the total number of trips at each station, here represented on the X axis. There is a single main group of stations with a negative slope, but some outliers that are worth looking at. There are a few stations with higher Y values than expected.
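Stations with "higher Y values than expected" can also be picked out programmatically. Fit a straight line through the (PC1, PC2) scatter and flag stations whose residual is unusually large and positive. This sketch uses ordinary least squares over all stations and a threshold of k = 2 residual standard deviations. Both are arbitrary choices, not anything described in the post.

```python
from statistics import mean, pstdev


def flag_high_outliers(points: dict[str, tuple[float, float]], k: float = 2.0) -> list[str]:
    """Flag stations lying well above the straight-line trend of the main group.

    Fits y = intercept + slope * x by ordinary least squares to all (PC1, PC2)
    points and returns the names whose residual exceeds k times the residual
    standard deviation. Only positive residuals count (higher Y than expected).
    """
    xs = [x for x, _ in points.values()]
    ys = [y for _, y in points.values()]
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    residuals = {name: y - (intercept + slope * x) for name, (x, y) in points.items()}
    spread = pstdev(list(residuals.values()))
    if spread == 0:
        return []  # every point lies exactly on the line
    return [name for name, r in residuals.items() if r > k * spread]


# Hypothetical scores: ten stations on a falling line plus one station above it.
pts = {f"s{i}": (float(i), -float(i)) for i in range(10)}
pts["outlier"] = (5.0, 5.0)
print(flag_high_outliers(pts))  # ['outlier']
```

The outliers pull an ordinary least-squares fit toward themselves. If many stations were unusual, a robust fit (for example, on the median) would be a safer choice.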

These 5 stations are all Stanley Park stations. There are another four stations that might be slight outliers.

These are Anderson & 2nd (Granville Island); Aquatic Centre; Coal Harbour Community Centre; and Davie & Beach. All seawall stations at major destinations. So all the outlier stations are stations that we wouldn't expect to show regular commuter patterns, but more tourist-style activity.

I was hoping to see different clusters representing residential-area stations vs employment-area stations, but these don't show up. That's not terribly surprising, since the Mobi stations cover an area of the city where there is fairly dense residential development almost everywhere. This fits with our maps of station activity, where we saw no major differences between bikes taken and bikes returned at each station.

All the source code used for data acquisition and analysis in this post is available on my github page.

# Machine learning with Vancouver bike share data

Six months ago I came across Jake VanderPlas' blog post examining Seattle bike commuting habits through bike trip data. I wanted to recreate it for Vancouver, but the city doesn't publish detailed bike trip data, just monthly numbers. For plan B, I looked into Mobi bike share data. But still no published data! Luckily, Mobi does publish an API with the number of bikes at each station. It doesn't give trip information, but it's a start.
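Since the API only reports how many bikes are docked at each station, activity has to be inferred from changes between polls. This is a minimal sketch of that idea, assuming a list of successive bike counts for one station. The function name and the example counts are hypothetical. Bikes taken and returned between the same two polls cancel out, so both totals are lower bounds. Bulk moves by rebalancing crews would also show up as takes or returns.

```python
def taken_and_returned(snapshots: list[int]) -> tuple[int, int]:
    """Estimate bikes taken and returned at one station from successive
    counts of available bikes.

    A drop between two polls is counted as bikes taken, a rise as bikes
    returned. Movements in both directions between the same pair of polls
    cancel, so both totals are lower bounds on true activity.
    """
    taken = returned = 0
    for before, after in zip(snapshots, snapshots[1:]):
        delta = after - before
        if delta < 0:
            taken += -delta
        else:
            returned += delta
    return taken, returned


# Example: hypothetical counts from consecutive polls of one station.
print(taken_and_returned([10, 8, 8, 11, 9]))  # (4, 3)
```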

All the code needed to recreate this post is available on my github page.

### Data Acquisition